VestAid: A Tablet-Based Technology for Objective Exercise Monitoring in Vestibular Rehabilitation

Abstract

1. Introduction

2. Materials and Methods

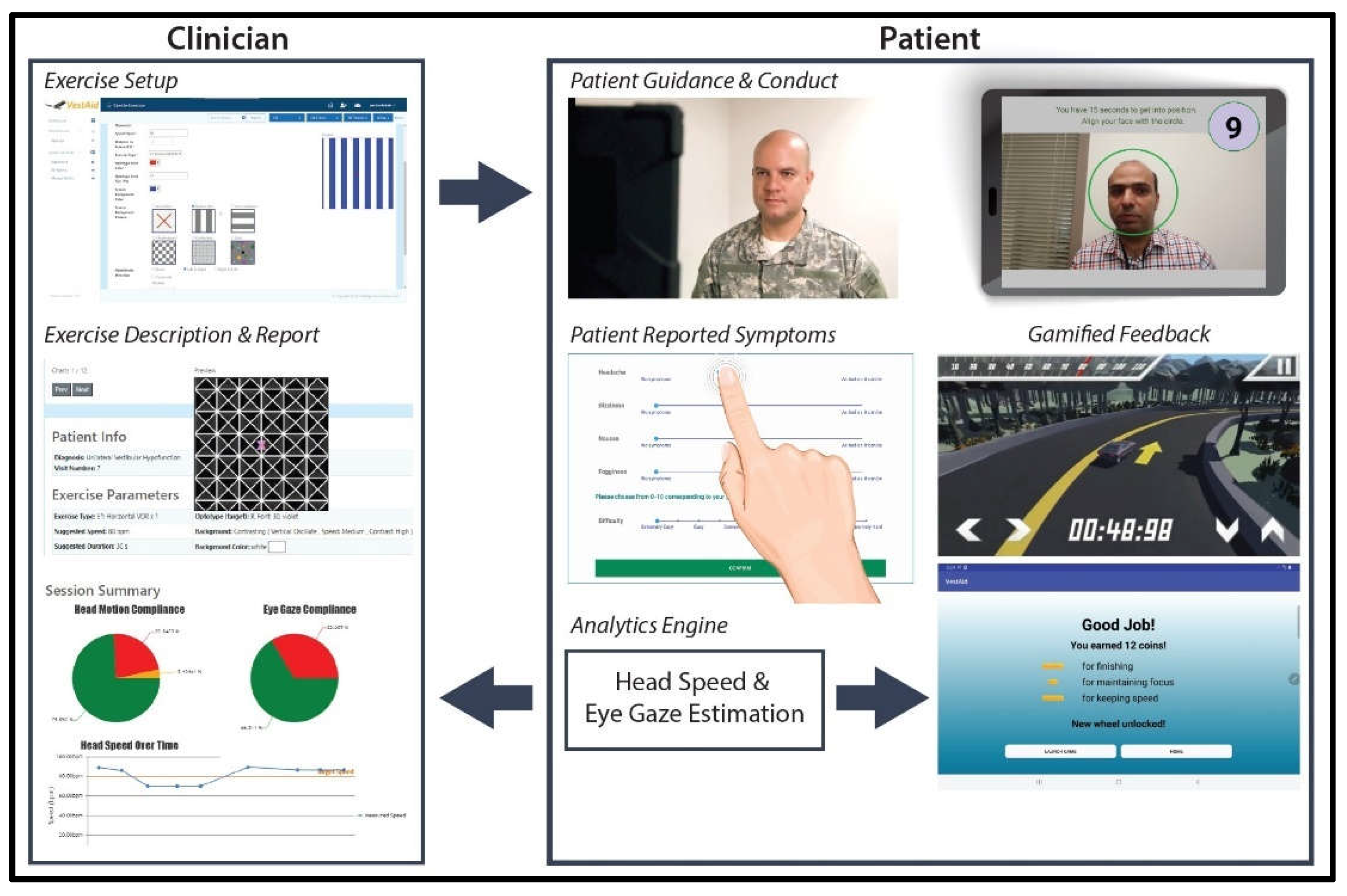

2.1. Description of the System

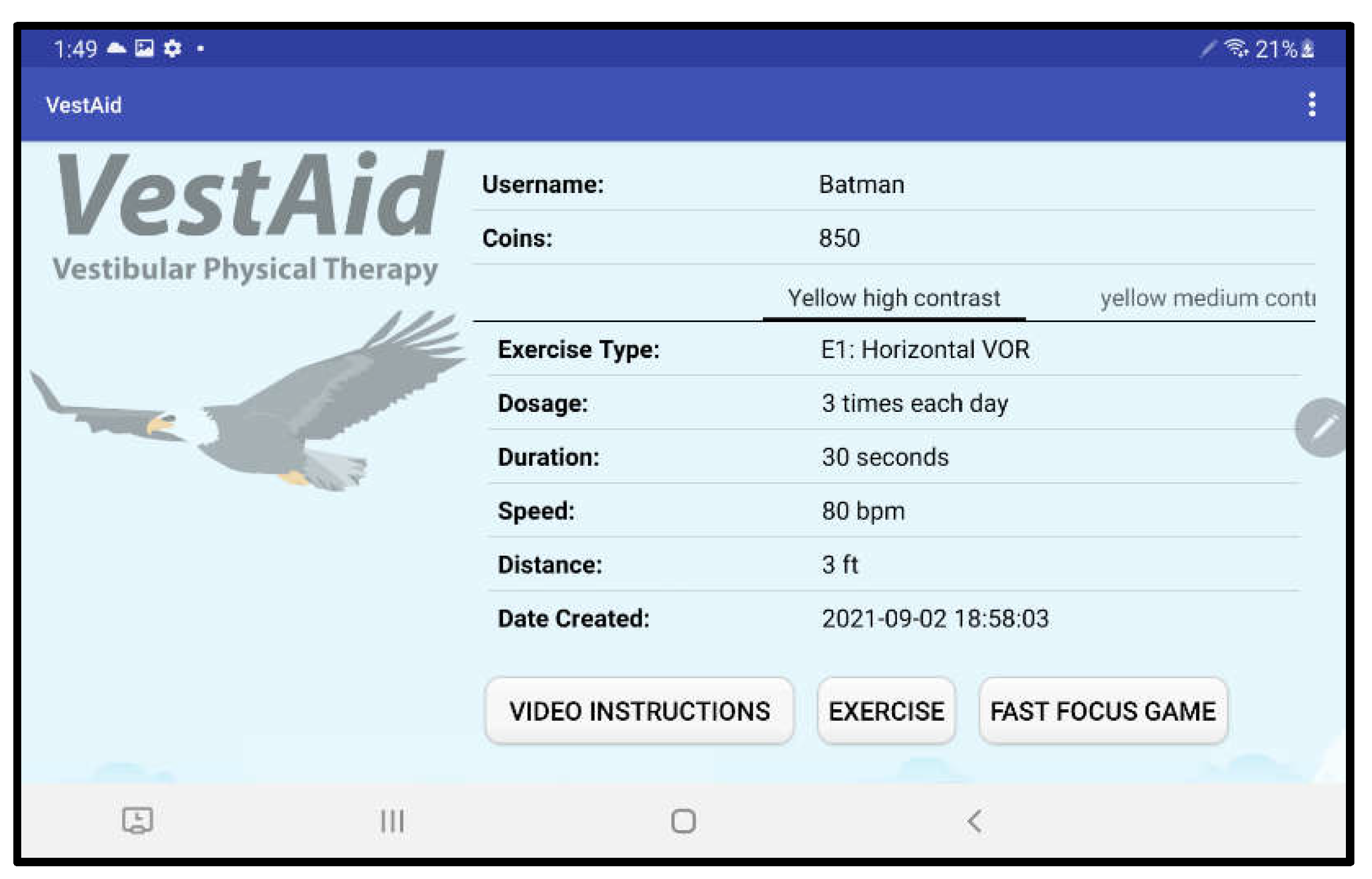

2.2. Description of Patient Interaction with the App

2.3. Gamification

2.4. Description of the Algorithms

2.4.1. Detection of the Patient’s Face in the Frames of the Captured Video

2.4.2. Detection of Facial Landmarks and Estimation of Head Angles

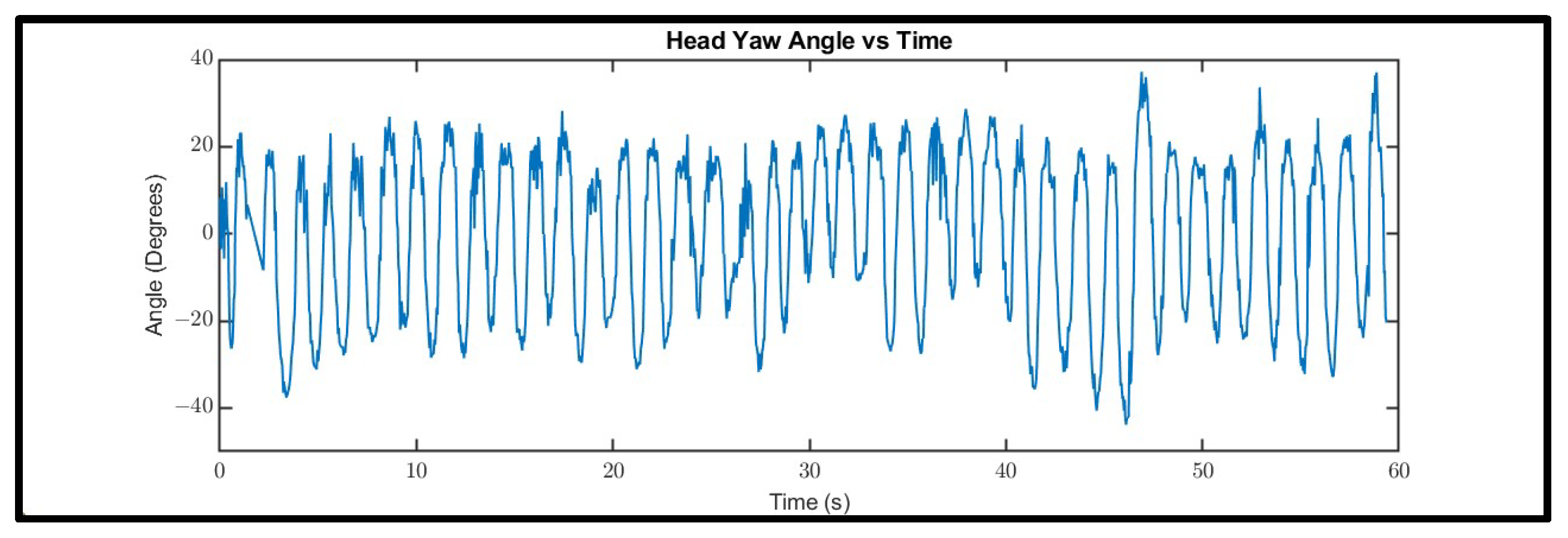

2.4.3. Determination of Head-Motion Compliance Based on the Estimated Head Angles

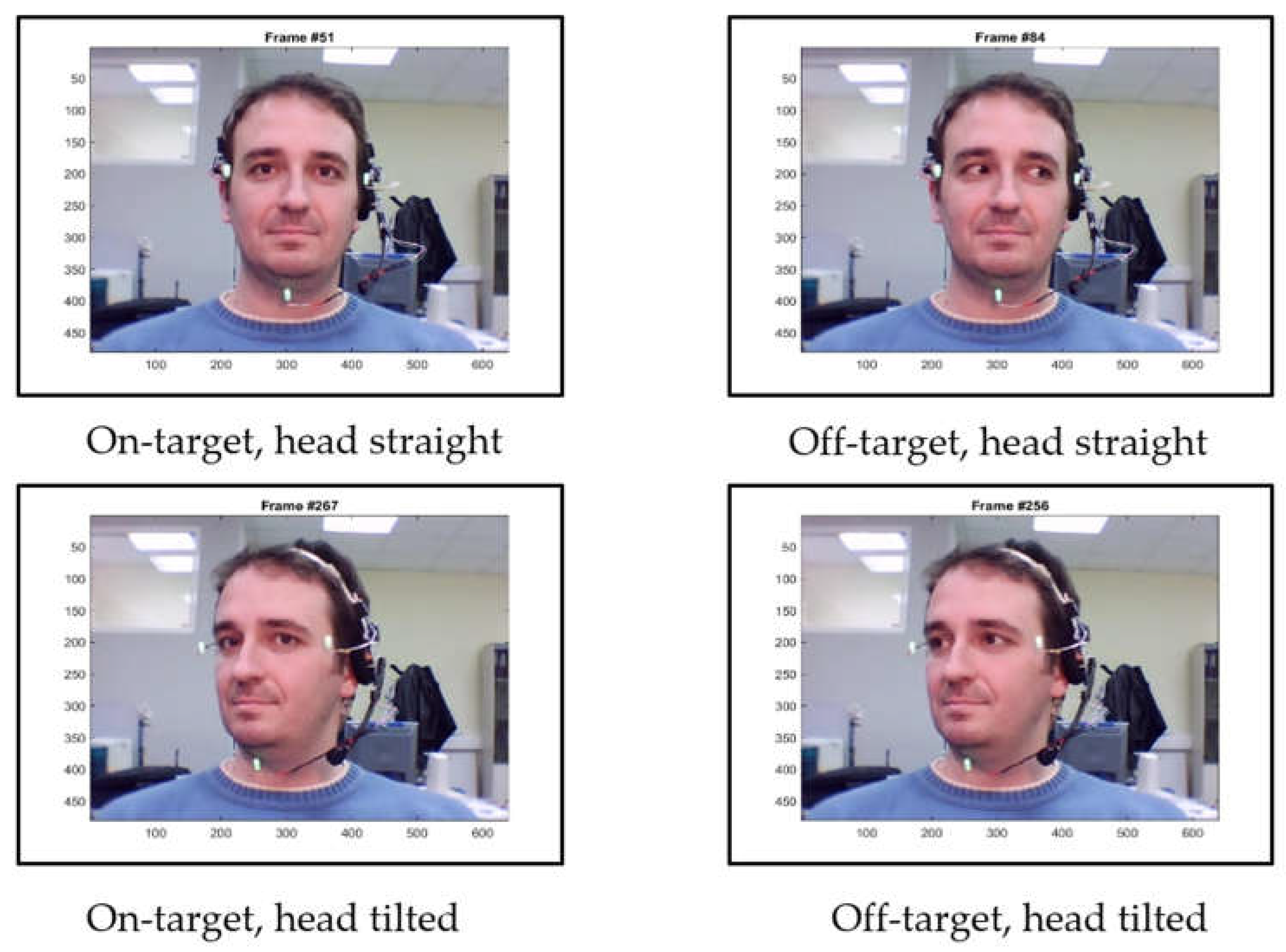

2.4.4. Determination of Eye-Gaze Compliance Based on Classification of Eye-Gaze Detection

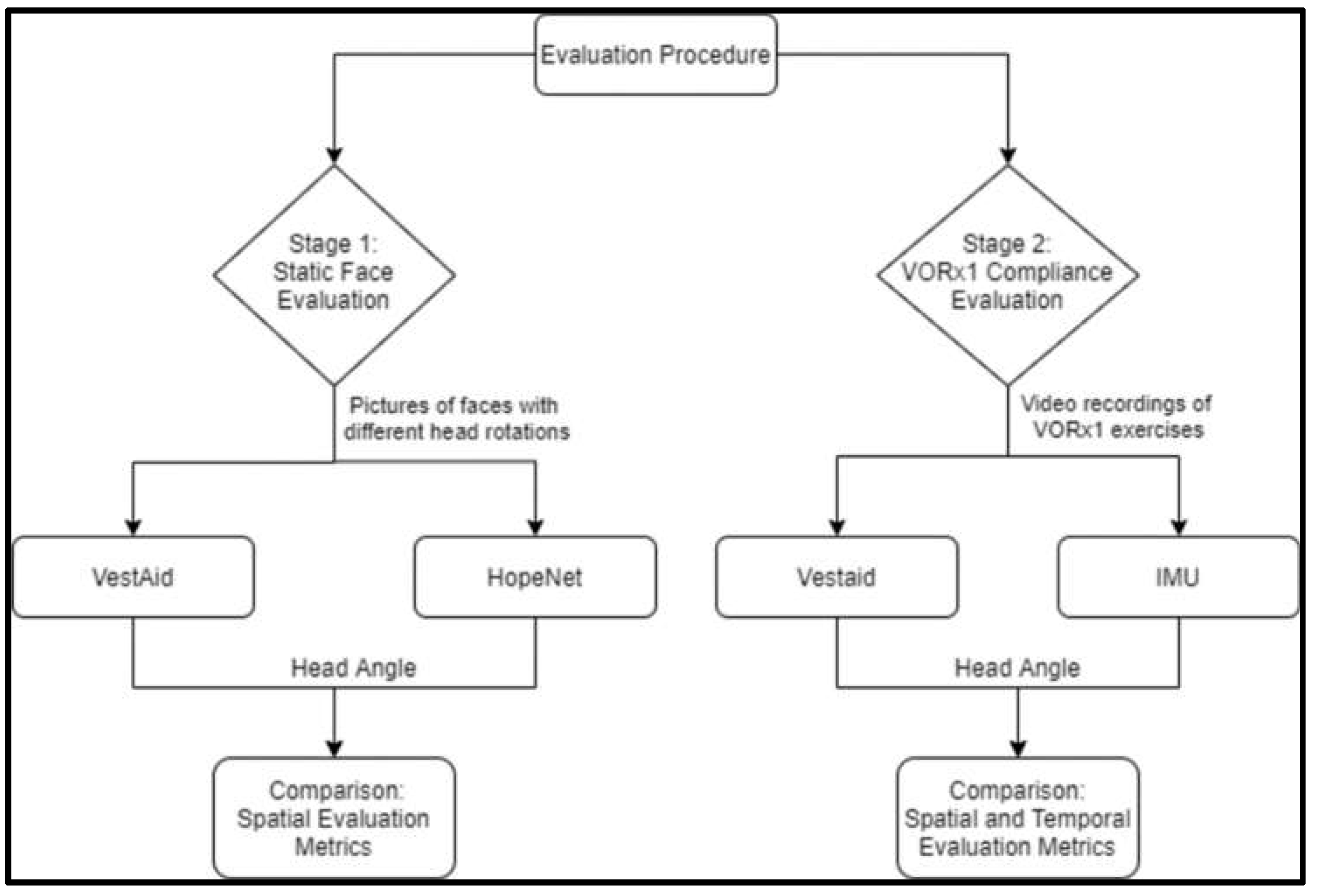

2.5. Evaluating the Accuracy of Head-Angle Estimation

2.5.1. Evaluation of Head-Angle Estimation on Static Faces from a Public Dataset

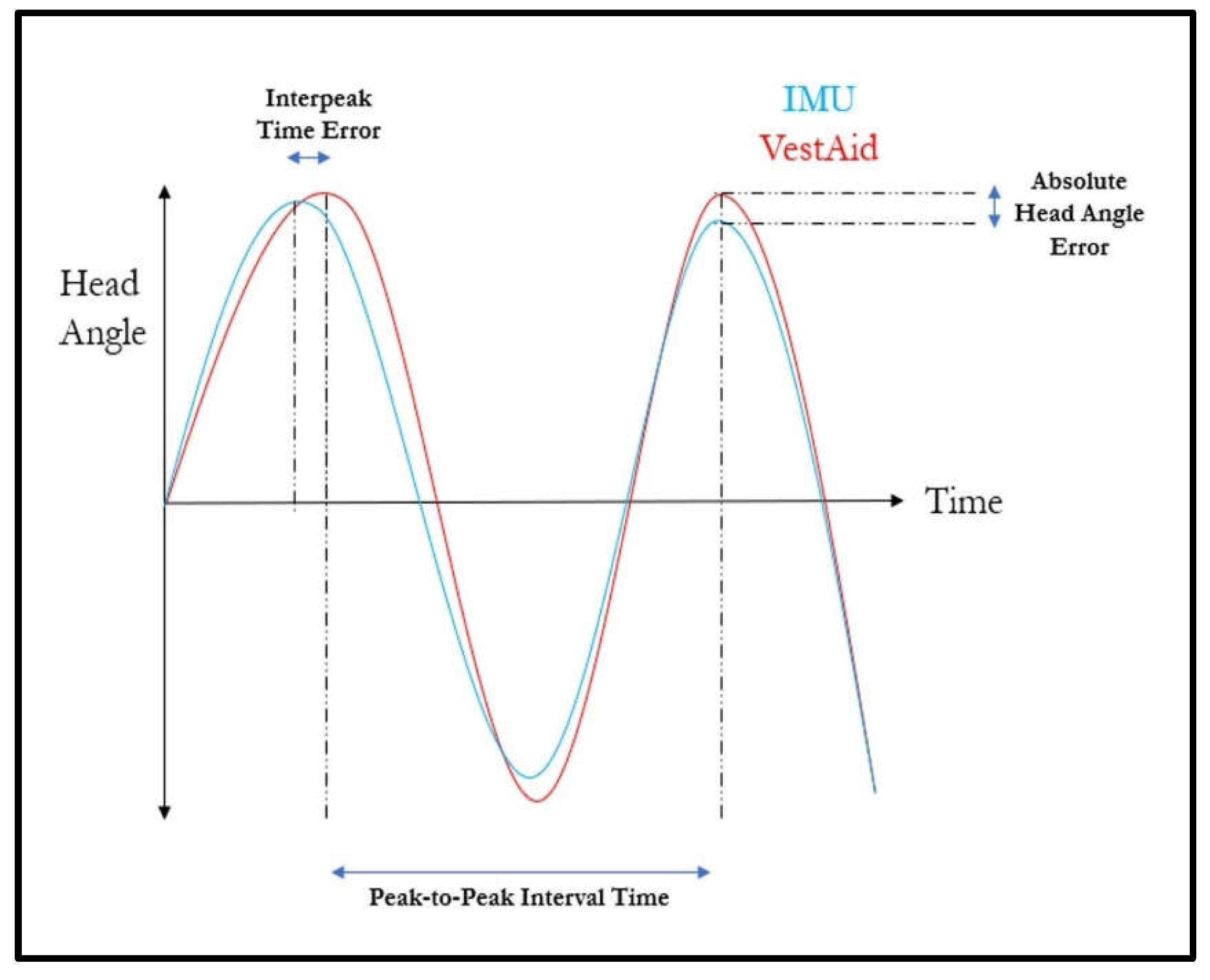

2.5.2. Evaluation of Head Angles and Speed Compliance in Action

3. Results/Discussion

3.1. Evaluation of Head-Angle Estimation on Static Faces from a Public Dataset

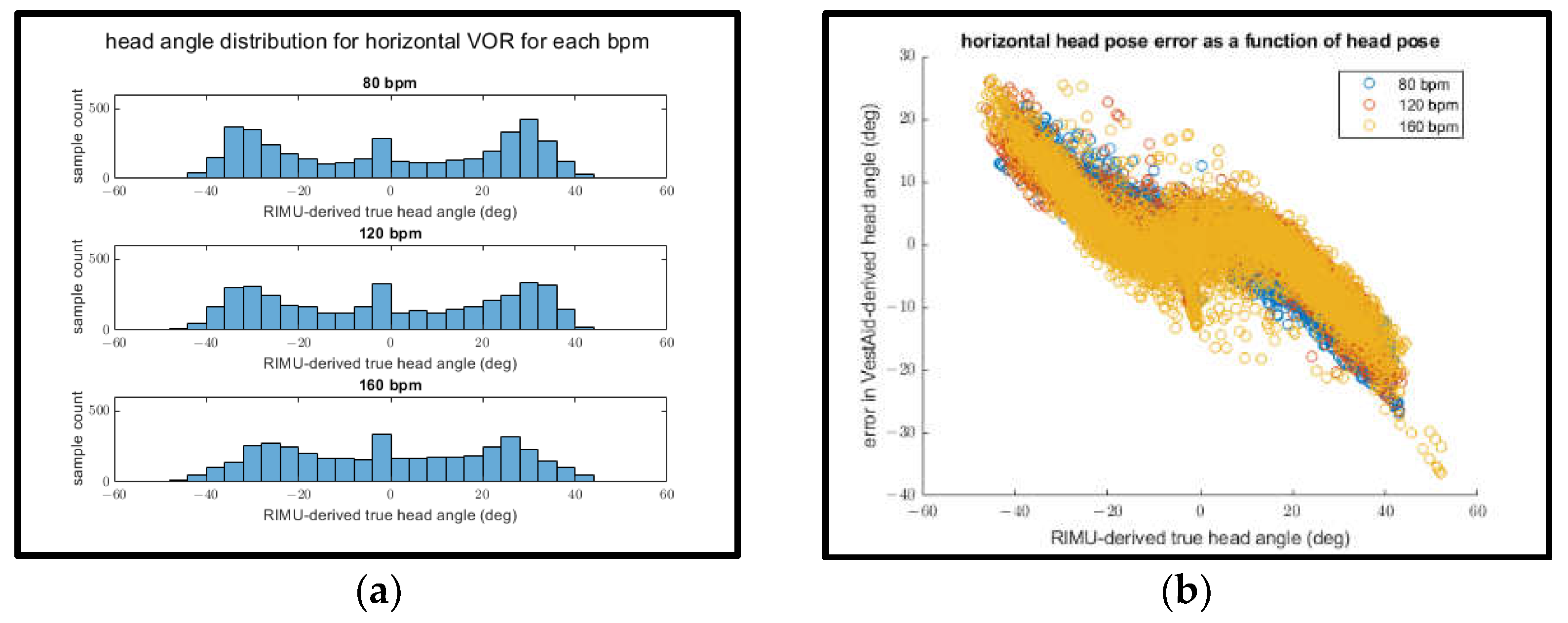

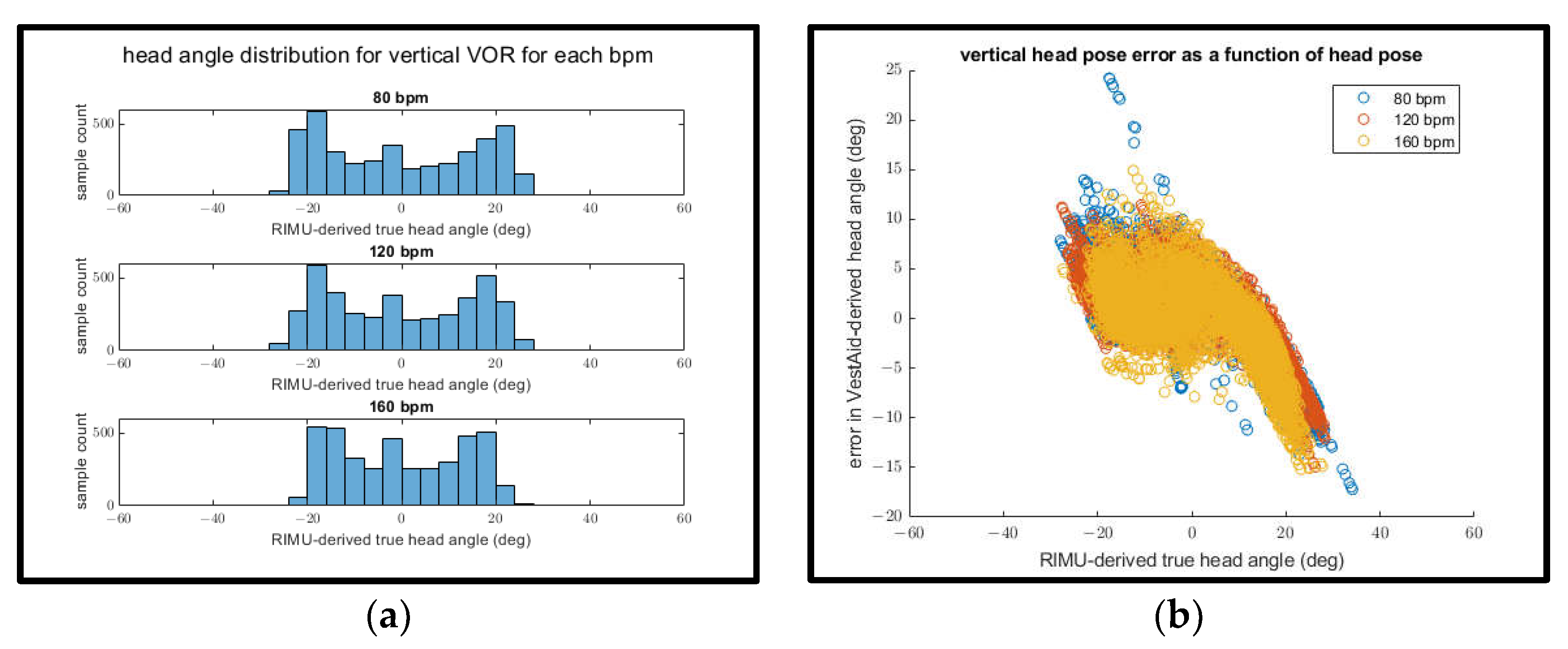

3.2. Evaluation of Head Speed Compliance

4. Summary

5. Patents

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Hall, C.; Herdman, S.; Whitney, S.; Anson, E.; Carender, W.; Hoppes, C.; Cass, S.; Christy, J.; Cohen, H.; Fife, T.; et al. Vestibular rehabilitation for peripheral vestibular hypofunction: An updated clinical practice guideline from the academy of neurologic physical therapy of the American physical therapy association. J. Neurol. Phys. Ther. 2021. Online ahead of print.
2. Agrawal, Y.; Carey, J.; della Santina, C.; Schubert, M.; Minor, L. Disorders of balance and vestibular function in US adults: Data from the National Health and Nutrition Examination Survey, 2001–2004. Arch. Int. Med. 2009, 169, 938–944.
3. Scherer, M.R.; Schubert, M.C. Traumatic brain injury and vestibular pathology as a comorbidity after blast exposure. Phys. Ther. 2009, 89, 980–992.
4. Whitney, S.; Sparto, P. Physical therapy principles of vestibular rehabilitation. Neurorehabilit. J. 2011, 29, 157–166.
5. Alsalaheen, B.A.; Whitney, S.L.; Mucha, A.; Morris, L.O.; Furman, J.M.; Sparto, P.J. Exercise prescription patterns in patients treated with vestibular rehabilitation after concussion. Physiother. Res. Int. 2012, 18, 100–108.
6. Shepard, N.T.; Telian, S.A. Programmatic vestibular rehabilitation. Otolaryngol. Head Neck Surg. 1995, 112, 173–182.
7. Gottshall, K. Vestibular rehabilitation after mild traumatic brain injury with vestibular pathology. NeuroRehabilitation 2011, 29, 167–171.
8. Hall, C.D.; Herdman, S.J. Reliability of clinical measures used to assess patients with peripheral vestibular disorders. J. Neurol. Phys. Ther. 2006, 30, 74–81.
9. Huang, K.; Sparto, P.; Kiesler, S.; Siewiorek, D.; Smailagic, A. iPod-based in-home system for monitoring gaze-stabilization exercise compliance of individuals with vestibular hypofunction. J. Neuroeng. Rehabil. 2014, 11, 1–12.
10. Todd, C.; Hübner, P.; Hübner, P.; Schubert, M.; Migliaccio, A. StableEyes—A portable vestibular rehabilitation device. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 1223–1232.
11. Ruiz, N.; Chong, E.; Rehg, J.M. Fine-Grained Head Pose Estimation Without Keypoints. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018.
12. Mucha, A.; Collins, M.; Elbin, R.; Furman, J.; Troutman-Enseki, C.; DeWolf, R.; Marchetti, G.; Kontos, A. A brief vestibular/ocular motor screening (VOMS) assessment to evaluate concussions: Preliminary findings. Am. J. Sports Med. 2014, 42, 2479–2486.
13. Robertson, R.J.; Goss, F.L.; Rutkowski, J.; Lenz, B.; Dixon, C.; Timmer, J.; Andreacci, J. Concurrent validation of the OMNI perceived exertion scale for resistance exercise. Med. Sci. Sports Exerc. 2003, 35, 333–341.
14. Robertson, R.J.; Goss, F.L.; Dube, J.; Rutkowski, J.; Dupain, M.; Brennan, C.; Andreacci, J. Validation of the adult OMNI scale of perceived exertion for cycle ergometer exercise. Med. Sci. Sports Exerc. 2004, 36, 102–108.
15. Deterding, S.; Dixon, D.; Khaled, R.; Nacke, L. From Game Design Elements to Gamefulness: Defining “Gamification”. In Proceedings of the 15th International Academic MindTrek Conference, Tampere, Finland, 28–30 September 2011.
16. Sailer, M.; Hense, J.U.; Mayr, S.K.; Heinz, M. How gamification motivates: An experimental study of the effects of specific game design elements on psychological need satisfaction. Comput. Hum. Behav. 2017, 69, 371–380.
17. Hamari, J.; Koivisto, J.; Sarsa, H. Does gamification work? A literature review of empirical studies on gamification. In Proceedings of the 47th International Conference on System Sciences, Waikoloa, HI, USA, 6–9 January 2014.
18. Liao, S.; Jain, A.; Li, S. A fast and accurate unconstrained face detector. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 211–223.
19. Ren, S.; Cao, X.; Wei, Y.; Sun, J. Face alignment at 3000 fps via regressing local binary features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014.
20. Xiong, X.; de la Torre, F. Supervised descent method and its applications to face alignment. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013.
21. Wu, Y.; Gou, C.; Ji, Q. Simultaneous facial landmark detection, pose and deformation estimation under facial occlusion. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3471–3480.
22. O’Haver, T. A Pragmatic Introduction to Signal Processing. 2018. Available online: https://terpconnect.umd.edu/~toh/spectrum/IntroToSignalProcessing.pdf (accessed on 10 December 2021).
23. George, A.; Routray, A. Real-time eye gaze direction classification using convolutional neural network. In Proceedings of the IEEE International Conference on Signal Processing and Communications (SPCOM), Bangalore, India, 12–15 June 2016.
24. Asteriadis, S.; Soufleros, D.; Karpouzis, K.; Kollias, S. A natural head pose and eye gaze dataset. In Proceedings of the International Workshop on Affective-Aware Virtual Agents and Social Robots, Boston, MA, USA, 6 November 2009.
25. Tharwat, A. Classification assessment methods. Appl. Comput. Inform. 2020, 17, 168–192.
26. Fanelli, G.; Dantone, M.; Gall, J.; Fossati, A.; van Gool, L. Random Forests for Real Time 3D Face Analysis. Int. J. Comput. Vis. 2013, 101, 437–458.
27. Rhudy, M. Time Alignment Techniques for Experimental Sensor Data. Int. J. Comput. Sci. Eng. Surv. (IJCSES) 2014, 5, 1–14.
28. Kermen, A.; Aydin, T.; Ercan, A.O.; Erdem, T. A multi-sensor integrated head-mounted display setup for augmented reality applications. In Proceedings of the 2015 3DTV-Conference: The True Vision—Capture, Transmission and Display of 3D Video (3DTV-CON), Lisbon, Portugal, 8–10 July 2015.

| Functionality | Implementation |

|---|---|

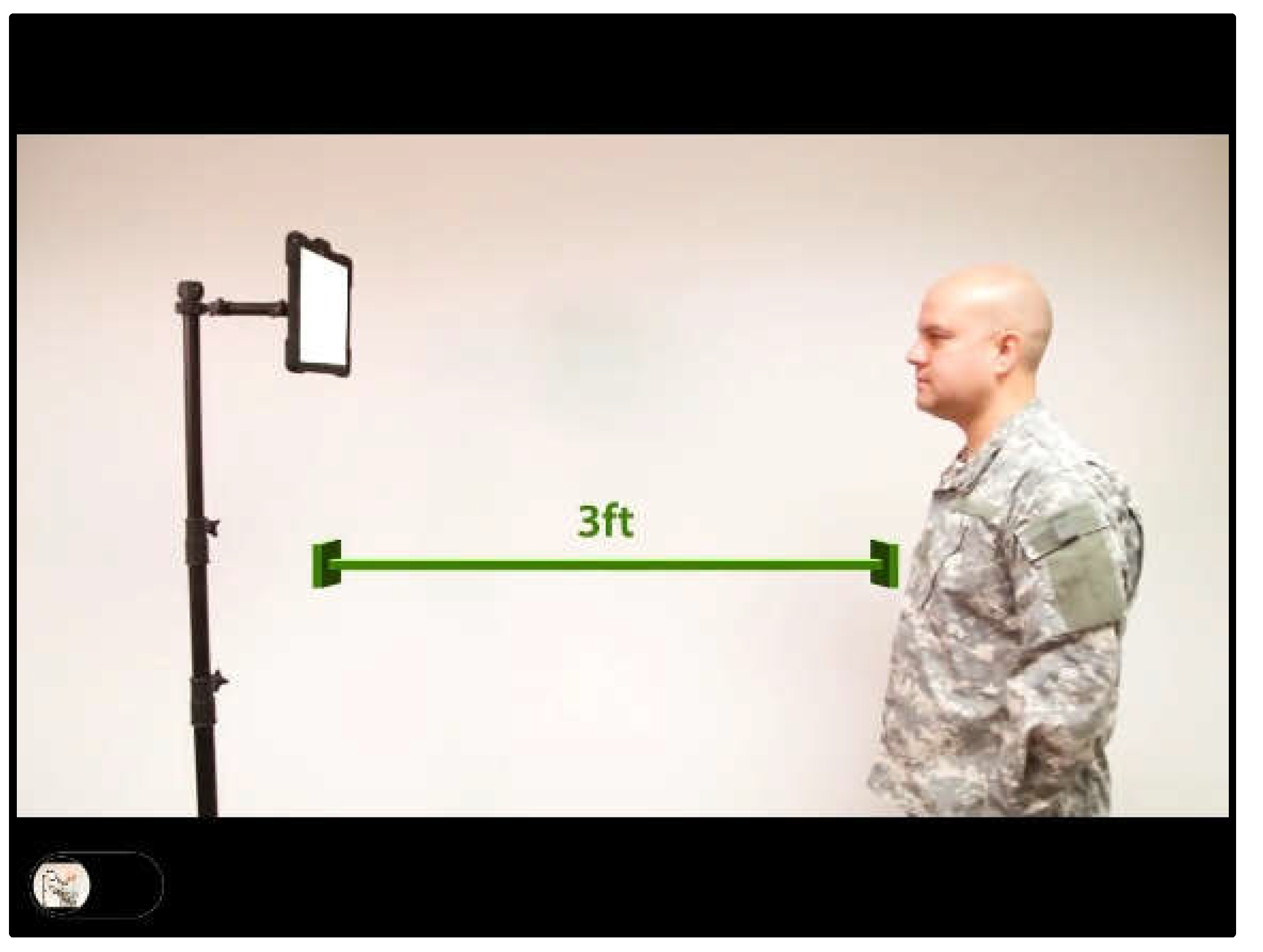

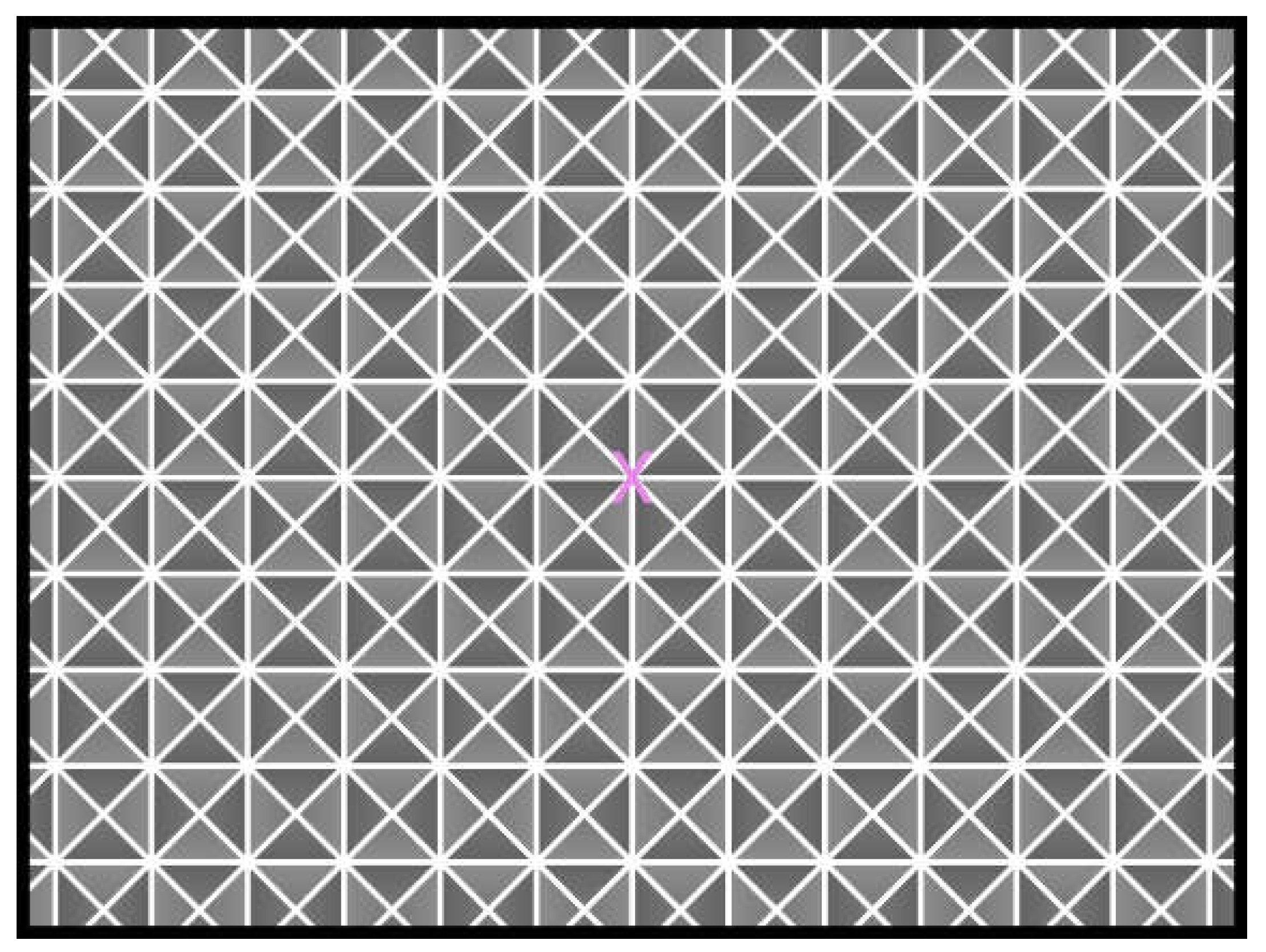

| Exercise setup | The therapist can easily set individualized exercise parameters for VORx1 exercises in the VestAid web portal: Exercise duration and dosing (no. of times/day); distance from the screen; screen background; size, color, and attributes of optotypes; and frequency of head movement. |

| Exercise guidance | The app includes instructional videos to help patients understand how to perform the exercises. The app guides the patients during the exercises by providing audio metronome beeps with the prescribed frequency. Audio beeps are played in the app similar to a metronome with an adjustable beat per minute (bpm) rate. The PT sets the bpm rate according to the required frequency of head movement with a default value of 1–2 Hz as supported by research [1,12]. |

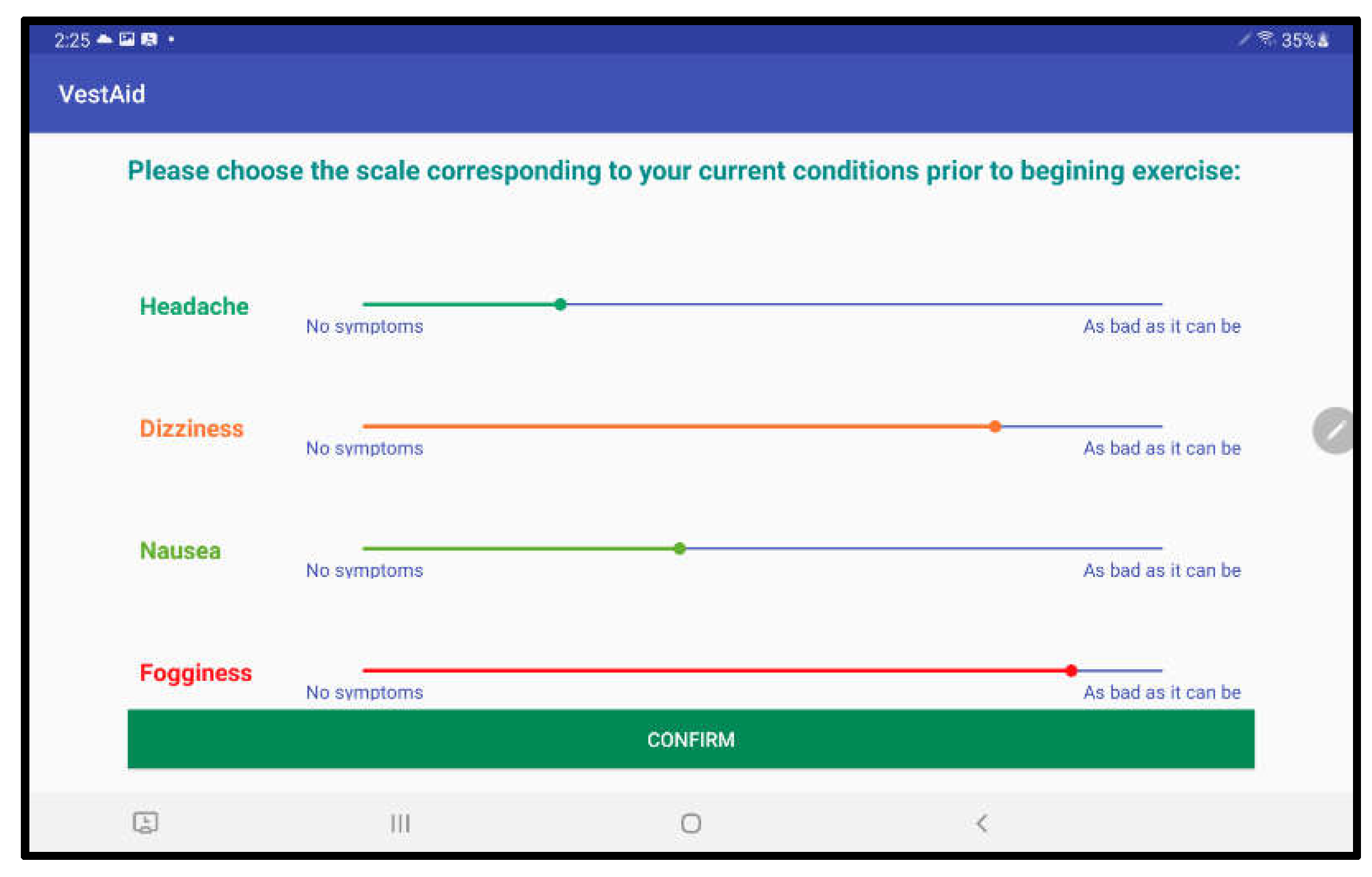

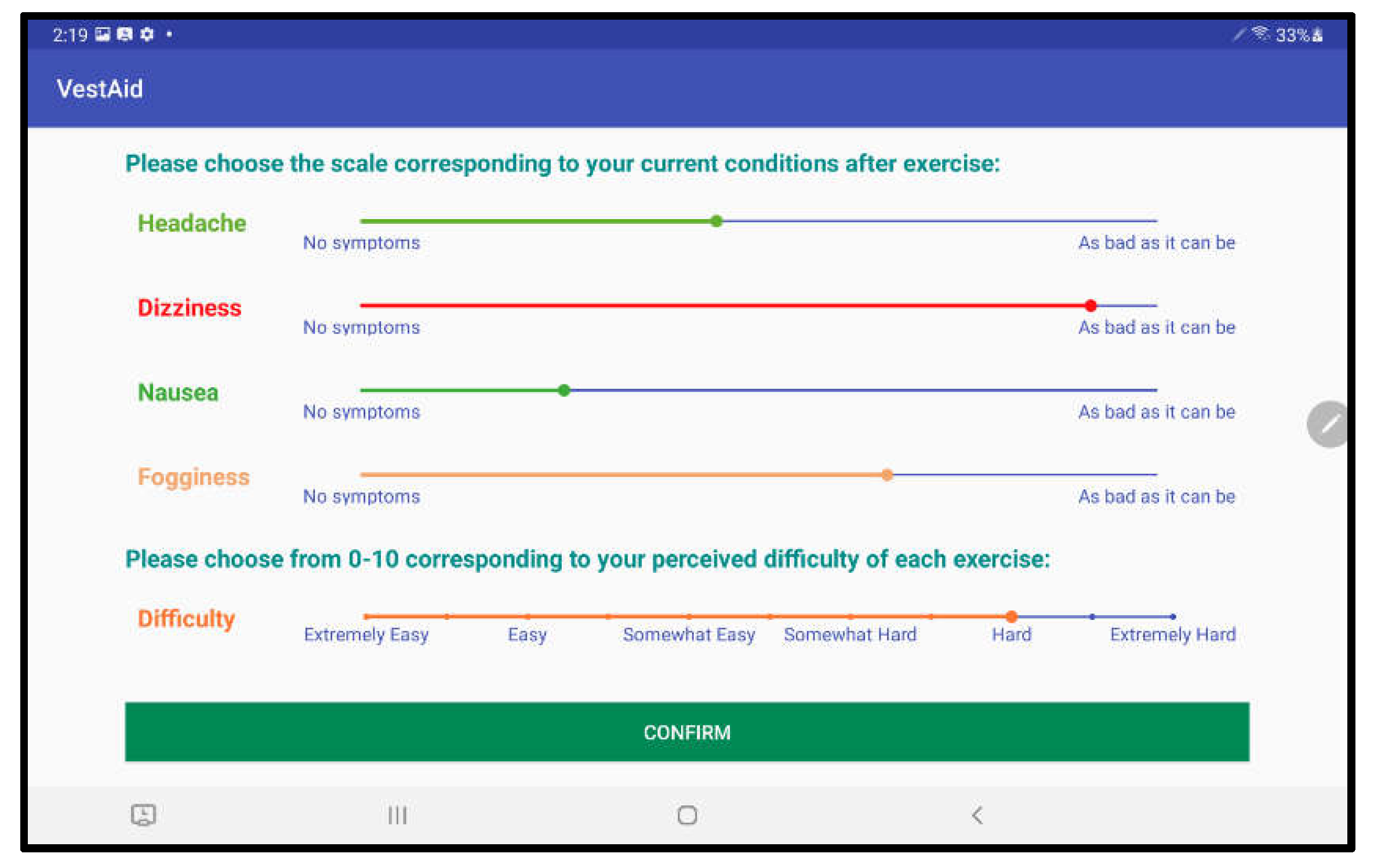

| Objective and subjective data collection | VestAid computes objective measures of the patients’ head motion and eye-gaze compliance (from video captured by the tablet camera during the exercise). VestAid collects pre- and post-exercise subjective symptom ratings (headache, dizziness, nausea, and fogginess) based on vestibular/ocular-motor screening (VOMS) for concussion [12]. At the end of each exercise, VestAid collects patients’ ratings of the perceived difficulty. |

| Compliance determination | Machine learning algorithms determine patients’ facial features and head angles. Based on these features, compliance of head motion (percentage of time conducted with prescribed speed vs. fast or slow; change of the head speed as a function of time) and eye-gaze (percentage of time focusing on the optotype target) are determined. |

| Patient feedback | Feedback on exercise compliance is provided to patients using an encouraging game-based rewards system. If enabled by the PT, patients can spend their exercise rewards in a computerized racing game. |

| PT reports | Easy-to-understand, time-stamped reports with graphical summaries are generated for the therapist. The PT can access reports through the web portal. |
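
The head-motion compliance measure described in the table (share of time at the prescribed speed versus too fast or too slow) can be illustrated with a minimal sketch. This is not the VestAid implementation: the function name `speed_compliance`, the 15% tolerance band, and the assumption that the head-turn rate is sampled at uniform time intervals (so that the share of samples equals the share of time) are all hypothetical choices made for illustration.

```python
def speed_compliance(rate_samples_bpm, prescribed_bpm, tolerance=0.15):
    """Return the percentage of samples classed as slow, correct, or fast.

    rate_samples_bpm: head-turn rate estimates (bpm), assumed to be sampled
        at uniform time intervals (hypothetical input format).
    tolerance: hypothetical fractional band around the prescribed rate.
    """
    if not rate_samples_bpm:
        raise ValueError("at least one rate sample is required")
    low = prescribed_bpm * (1 - tolerance)
    high = prescribed_bpm * (1 + tolerance)
    counts = {"slow": 0, "correct": 0, "fast": 0}
    for bpm in rate_samples_bpm:
        if bpm < low:
            counts["slow"] += 1
        elif bpm > high:
            counts["fast"] += 1
        else:
            counts["correct"] += 1
    n = len(rate_samples_bpm)
    return {label: 100.0 * count / n for label, count in counts.items()}


# Made-up samples for an exercise prescribed at 80 bpm
result = speed_compliance([70, 80, 82, 95, 60], prescribed_bpm=80)
print(result)  # {'slow': 20.0, 'correct': 60.0, 'fast': 20.0}
```

A real system would need a clinically justified tolerance and a way to derive the rate samples from the estimated head angles; neither is specified here.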

| Network | Ground truth | Predicted off-target | Predicted on-target | Accuracy | Precision | Recall | F1 score |
|---|---|---|---|---|---|---|---|
| Two layers of CNN for each eye, three fully connected layers | Off-target | 2002 | 72 | 0.9428 | 0.9296 | 0.8989 | 0.9140 |
| | On-target | 107 | 951 | | | | |
| One layer of CNN for each eye, three fully connected layers | Off-target | 1972 | 102 | 0.9176 | 0.8984 | 0.8526 | 0.8749 |
| | On-target | 156 | 902 | | | | |
| Three layers of CNN for each eye, three fully connected layers | Off-target | 1951 | 123 | 0.9256 | 0.8852 | 0.8960 | 0.8906 |
| | On-target | 110 | 948 | | | | |
| Two layers of CNN for each eye, two fully connected layers | Off-target | 1977 | 97 | 0.9412 | 0.9092 | 0.9178 | 0.9135 |
| | On-target | 87 | 971 | | | | |
| Two layers of CNN for each eye, four fully connected layers | Off-target | 1977 | 97 | 0.9345 | 0.9074 | 0.8979 | 0.9026 |
| | On-target | 108 | 950 | | | | |
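
The accuracy, precision, recall, and F1 values in the table follow from the confusion-matrix counts when on-target is treated as the positive class. The sketch below recomputes them for the first network (two CNN layers per eye, three fully connected layers); the function name `binary_metrics` is illustrative and not taken from the paper.

```python
def binary_metrics(tn, fp, fn, tp):
    """Accuracy, precision, recall and F1, with on-target as the positive class."""
    total = tn + fp + fn + tp
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return accuracy, precision, recall, f1


# Counts for the first network in the table:
#   ground-truth off-target: 2002 predicted off-target (TN), 72 predicted on-target (FP)
#   ground-truth on-target:  107 predicted off-target (FN), 951 predicted on-target (TP)
acc, prec, rec, f1 = binary_metrics(tn=2002, fp=72, fn=107, tp=951)
print(f"{acc:.4f} {prec:.4f} {rec:.4f} {f1:.4f}")  # 0.9428 0.9296 0.8989 0.9140
```

The same function reproduces the reported values for the other four architectures when given their counts.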

| Task | Direction | Speed (bpm) |
|---|---|---|
| 1 | Horizontal | 80 |
| 2 | Horizontal | 120 |
| 3 | Horizontal | 160 |
| 4 | Vertical | 80 |
| 5 | Vertical | 120 |
| 6 | Vertical | 160 |

| Model | Avg abs Pitch Error (deg.) | Avg abs Yaw Error (deg.) | Avg abs Roll Error (deg.) | Avg Geodesic (deg.) |
|---|---|---|---|---|
| HopeNet | 4.89 | 8.47 | 4.00 | 10.27 |
| VestAid | 7.61 | 5.98 | 4.91 | 9.65 |
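
The geodesic column summarizes the pitch, yaw, and roll errors as a single angle. A common definition, assumed here because the exact formula is not shown in this extract, is the rotation angle of the relative rotation between the estimated and reference orientations, arccos((trace(R_est^T R_ref) − 1) / 2). The sketch below uses only the standard library; the Euler-angle axis order in `rotation_from_euler` is an assumption, and all function names are illustrative.

```python
import math


def matmul(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rotation_from_euler(pitch_deg, yaw_deg, roll_deg):
    """Build R = Rz(roll) Ry(yaw) Rx(pitch); this axis order is an assumption."""
    p, y, r = (math.radians(a) for a in (pitch_deg, yaw_deg, roll_deg))
    rx = [[1, 0, 0], [0, math.cos(p), -math.sin(p)], [0, math.sin(p), math.cos(p)]]
    ry = [[math.cos(y), 0, math.sin(y)], [0, 1, 0], [-math.sin(y), 0, math.cos(y)]]
    rz = [[math.cos(r), -math.sin(r), 0], [math.sin(r), math.cos(r), 0], [0, 0, 1]]
    return matmul(rz, matmul(ry, rx))


def geodesic_deg(r_est, r_ref):
    """Angle (degrees) of the relative rotation between two rotation matrices."""
    # trace(A^T B) equals the sum of element-wise products of A and B
    trace = sum(r_est[i][j] * r_ref[i][j] for i in range(3) for j in range(3))
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))  # clamp rounding error
    return math.degrees(math.acos(cos_angle))


# A pure 10-degree yaw error gives a geodesic error of about 10 degrees
estimate = rotation_from_euler(0.0, 10.0, 0.0)
reference = rotation_from_euler(0.0, 0.0, 0.0)
print(round(geodesic_deg(estimate, reference), 3))  # 10.0
```

Because the geodesic angle combines all three axes, it can rank two models differently than any single per-axis error does.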

| Metric | Horizontal, 80 bpm | Horizontal, 120 bpm | Horizontal, 160 bpm | Vertical, 80 bpm | Vertical, 120 bpm | Vertical, 160 bpm |
|---|---|---|---|---|---|---|
| No. of trials in category | 5 | 5 | 5 | 5 | 5 | 5 |
| Mean abs head angle error (deg.) | 9.12 | 7.29 | 6.55 | 4.09 | 3.66 | 3.36 |
| Mean head angle RMSE (deg.) | 10.46 | 8.92 | 8.05 | 5.08 | 4.49 | 4.15 |
| Mean no. of identified IMU peaks | 17.00 | 26.00 | 32.40 | 17.00 | 25.60 | 33.40 |
| Mean no. of identified VestAid peaks | 17.00 | 26.00 | 31.80 | 16.80 | 25.20 | 33.20 |
| Mean matched interpeak time error (s) | 0.06 | 0.08 | 0.05 | 0.09 | 0.07 | 0.04 |
| Mean matched interpeak time RMSE (s) | 0.07 | 0.14 | 0.07 | 0.15 | 0.12 | 0.07 |
| Mean abs head turn frequency error (bpm) | 3.08 | 9.02 | 10.77 | 4.42 | 8.17 | 8.51 |
| Mean head turn frequency RMSE (bpm) | 3.79 | 12.44 | 13.62 | 6.21 | 11.37 | 13.00 |
| Mean correct percent IMU (%) | 99.52 | 98.67 | 97.47 | 98.76 | 98.76 | 91.13 |
| Mean correct percent VestAid (%) | 99.56 | 86.98 | 85.20 | 98.82 | 90.67 | 80.67 |
| Mean correct percent difference (%) | 0.04 | −11.69 | −12.27 | 0.06 | −8.08 | −10.46 |
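
The table reports both a mean absolute error and a root-mean-square error (RMSE) for several quantities. The sketch below shows how the two statistics are computed from paired samples. It assumes the camera-based and IMU series have already been time-aligned sample by sample; the values and function names are made up for illustration and are not data from the study.

```python
import math


def mean_abs_error(estimates, references):
    """Mean of |estimate - reference| over paired samples."""
    if len(estimates) != len(references) or not estimates:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(e - r) for e, r in zip(estimates, references)) / len(estimates)


def rmse(estimates, references):
    """Square root of the mean squared difference over paired samples."""
    if len(estimates) != len(references) or not estimates:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = sum((e - r) ** 2 for e, r in zip(estimates, references))
    return math.sqrt(squared / len(estimates))


# Hypothetical, already time-aligned head angles in degrees
camera_deg = [10.0, -5.0, 3.0]
imu_deg = [8.0, -9.0, 3.0]
print(mean_abs_error(camera_deg, imu_deg))  # 2.0
print(round(rmse(camera_deg, imu_deg), 3))  # 2.582
```

RMSE weights large deviations more heavily than the mean absolute error, so it is never smaller than the mean absolute error for the same data, which matches the pattern in every column of the table.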

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hovareshti, P.; Roeder, S.; Holt, L.S.; Gao, P.; Xiao, L.; Zalkin, C.; Ou, V.; Tolani, D.; Klatt, B.N.; Whitney, S.L. VestAid: A Tablet-Based Technology for Objective Exercise Monitoring in Vestibular Rehabilitation. Sensors 2021, 21, 8388. https://doi.org/10.3390/s21248388