Abstract

A robot’s ability to grasp moving objects depends on the availability of real-time sensor data in both the far-field and near-field of the gripper. This research investigates the potential contribution of tactile sensing to the task of grasping an object in motion. It was hypothesised that combining tactile sensor data with a reactive grasping strategy could improve robustness to prediction errors, leading to better, more adaptive performance. Using a two-finger gripper, we evaluated the performance of two algorithms for grasping a ball rolling on a horizontal plane at a range of speeds and gripper contact points. The first approach involved an adaptive grasping strategy initiated by tactile sensors in the fingers. The second strategy initiated the grasp based on a prediction of the position of the object relative to the gripper, and served as a proxy for a vision-based object tracking system. It was found that the integration of tactile sensor feedback resulted in a higher observed grasp robustness, especially when the gripper–ball contact point was displaced from the centre of the gripper. These findings demonstrate the performance gains that can be attained by incorporating near-field sensor data into the grasp strategy and motivate further research on how this strategy might be expanded for use in different manipulator designs and in more complex grasp scenarios.

1. Introduction

Robotic manipulators are widely deployed to grasp and manipulate objects in controlled environments, such as manufacturing plants and factory assembly lines, where the structured nature of the environment can be leveraged to reduce the complexity of the manipulation task. However, for them to be effective in a wider range of settings, including in collaborative and service robot use-cases, new methods must be developed that enable them to operate in dynamic, less structured conditions.

The ability to grasp moving objects is a challenging problem that is closely coupled with the sensing paradigm that is used. Previous work has relied heavily on far-field visual sensing, where the gripper–object intercept position and time were computed based on a real-time prediction of the object trajectory. In these control systems, estimates of the gripper–object interception point and time have been the sole inputs informing grasp initiation (i.e., when the fingers start to close), with most strategies using a predefined grasping motion. This ‘object tracking’ approach has shown some noteworthy results [1,2]; however, there are several issues that limit the applicability of these methods to real-world use-cases. Firstly, results have been achieved in a laboratory setting, where lighting and other environmental conditions were tightly controlled. Secondly, the performance has relied almost exclusively on the use of external motion capture sensors placed in the surrounding environment, which are costly and rarely available outside of research laboratories. Thirdly, due to high bandwidth requirements, accurately tracking the 3D motion of objects relative to the robot using vision sensing has required high computational effort [3,4,5].

In addition to these practical issues, there are errors associated with the use of object tracking methods to inform gripper initiation. Even with the most advanced motion tracking systems available, estimates of the exact trajectory of the moving object are subject to some degree of sensor noise and latency. Another source of error is the potential for the robot’s embodiment to become a source of object occlusion, limiting the ability of the vision sensors to track the object in the near-field of the robot [2,6]. Even assuming perfect sensing of the object, controlling the precise location of the end effector can be affected by the propagation of small positional errors through the kinematic chain of the robot’s arm, introducing uncertainty in the exact pose of the end effector. The aggregation of these errors leads to a misalignment between the actual and optimum values of both the position at which the gripper intercepts the moving object and the time at which it attempts to grasp it.

It was hypothesised that the implementation of a reactive sensing approach that uses tactile sensor data could enable a more adaptive grasp that is more robust to spatial errors at the point of the gripper–object contact.

This article is structured as follows. The following section will outline relevant, prior research. Section 3 describes the experimental setup, discusses the implementation of each grasping strategy in more detail and finally describes the experimental procedure. Section 4 presents the results of testing and a subsequent statistical analysis. Section 5 examines the results, outlines findings and suggests future work. Finally, Section 6 draws conclusions from the research presented.

2. Prior Work

Tactile sensing is a widely used sensing modality in robotic grasping. It can help infer information about an object’s properties, such as its size, shape, weight, compliance, texture and temperature [7,8,9,10,11,12]. Furthermore, tactile sensing is essential for detecting slip between gripper and object [13], and can also be used to inform and control robotic motion, referred to as tactile servoing [14,15]. It is useful, therefore, not only in grasping tasks [16], but also in object exploration [15,17,18], object classification [8,11,19], object localisation [9], assessing grasp stability [20,21] and in-hand manipulation [22]. Despite its contribution to a large number of grasping applications and its effectiveness in unstructured environments, the contribution of tactile sensing to grasping moving objects remains unexplored.

Research on the robotic grasping of moving objects dates back to the 1990s [23], and there are numerous examples of instances where robots have been required to manipulate moving objects. Examples include grasping [24], catching [2,6,25,26], batting [27,28], juggling [29] and picking up objects on a conveyor belt [30]. Sensing for this problem can generally be broken down into two stages. In the first stage, the robot must track the far-field motion of the grasp object in order to determine the point of interception. In the second stage, the robot must sense the near-field area in proximity to the gripper to initiate and adapt the grasp.

The requirement to track the far-field trajectory of the moving object is common to all grasping approaches of this type. To our knowledge, only one study has accomplished this task using exclusively egocentric sensing, where the only sensors used to track the moving object were located on board the robot [1]. With few exceptions, object tracking has been achieved primarily by using an arrangement of cameras in the area surrounding the robot. The simplest of these exocentric sensor arrangements have involved using combinations of monocular cameras to produce stereo images, allowing for the 3D motion of the object to be inferred [3,24,31]. Researchers have also tracked the motion of moving objects using one or more structured light cameras [6,30]. In a study by Cuevas-Velasquez et al., the researchers used four structured light cameras to track the far-field motion of the object and a robot-mounted stereo camera to track the near-field motion [32]. The most sophisticated object tracking setups have used specialised motion capture hardware, designed specifically to track the motion of objects moving in a 3D space [2,25,33,34]. While these systems boast the greatest accuracy and reliability, they are expensive and require a complex environment setup.

Sensing the near-field position of the object relative to the gripper has received little attention in the literature, with most controllers initiating the grasp using only estimates of the object tracking data from surrounding cameras [2,3,6,25,31]. Some exceptions include a paper by Escaida Navarro et al., where tactile sensing in the fingers was incorporated into the strategy for grasping objects moving on a conveyor belt [30]. In another relevant study, Koyama et al. used near-field proximity sensors in a pincer gripper to dynamically adjust the gripping forces in order to catch falling objects of variable sizes and textures [34]. While both of these papers incorporated dedicated near-field sensing in the grasping strategy, the benefits of this hybrid approach over grasping strategies based only on object tracking data were not explored. A recent study by Lynch and McGinn demonstrated that a reactive control strategy using tactile sensing to initiate grasping led to significant improvements in the grasp performance, especially when the object made initial contact with the gripper towards the extremity of the finger [35]. However, since their experiments were conducted in a simulation, the transferability of these findings to the real world is questionable, given limitations in the fidelity of robotic simulation [5,22,36,37,38]. Furthermore, it is not clear how the same tests might be replicated using a physical testing setup.

Upon examination of the prior art, the problem of grasping moving objects remains a relatively poorly understood topic and there is a need for fundamental research to better understand how factors, such as tactile sensing, could enhance the grasping performance. Building on previous work conducted in a simulation, this research proposes a systematic way to experimentally evaluate the performance of robotic grasping algorithms tasked with grasping moving objects, and investigates how control systems that use tactile feedback can lead to an improved robustness to spatial and temporal errors in the estimated interception point when grasping an object in motion.

3. Materials and Methods

An experiment was formulated that involved measuring the success of a two-finger robotic gripper grasping a ball moving toward it along a horizontal plane. This provided a systematic way to test each of the parameters that were hypothesised to affect grasp success: the speed of the moving object, the position of the object–gripper contact point along the finger and the timing of the grasp (i.e., when the grasp was initiated). This experiment extends an approach previously implemented in a simulation [35] that had not previously been adapted for use in a real-world robotic system.

To investigate the effect tactile sensing has on the ability of a robot to grasp an object in motion, a bespoke experimental apparatus was developed. In the subsections below, key components of this apparatus are described, including: the mechanism for regulating the speed of the ball, the implementation of the 2-finger gripper and a description of the different control strategies.

3.1. Motion of the Ball

The moving object that was chosen for the experiment was a smooth ball (diameter = 57 mm, mass = 166 g). This object was chosen as the orientation of the ball would not affect grasp performance, its mass provided a good test of grasp effectiveness, it could be easily replicated by other researchers and its velocity could be accurately controlled using a simple inclined track.

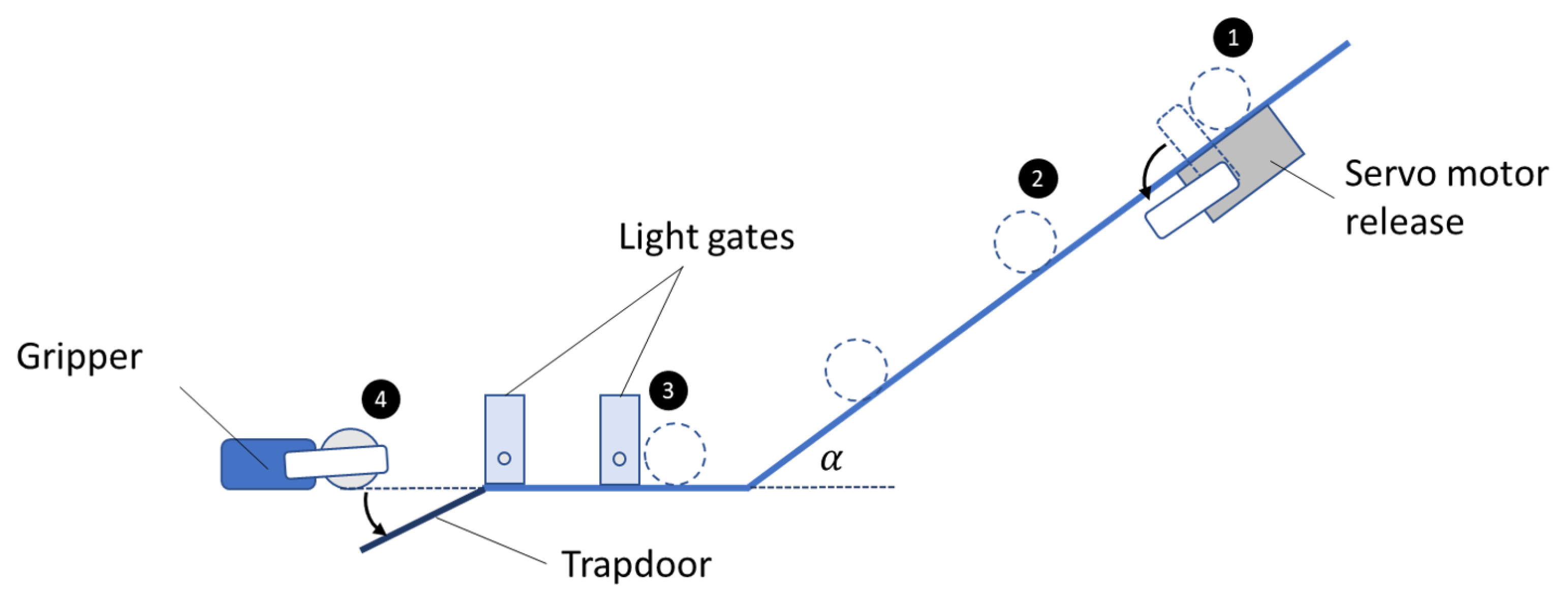

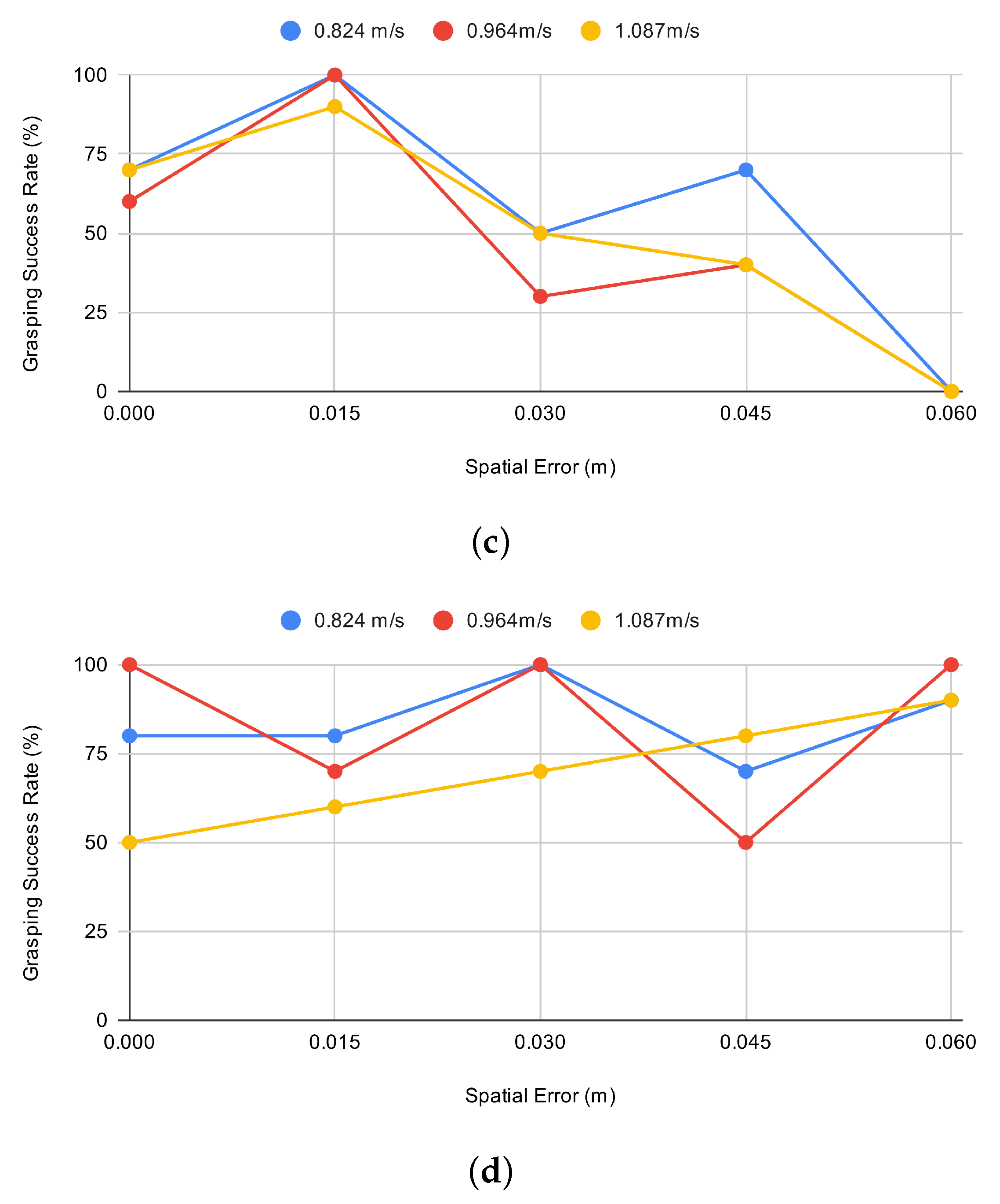

The ball was set in motion by allowing it to roll down an inclined plane, which was implemented using a track with a U-shaped cross-section that constrained the motion of the ball to 1 degree of freedom. At the base of the incline, the ball transitioned to a horizontal planar surface, where its speed was measured using a set of light gates. The velocity of the ball at the light gates was dependent on the release height on the inclined plane, and servo motors were used to provide a highly repeatable release mechanism to set the ball in motion. A simple illustration of the setup is given in Figure 1. The repeatability of this approach was validated (see Table 1): two hundred tests conducted at each of the three test speeds showed relative standard deviations of just 1.6%, 1.3% and 2.0%, respectively.

Figure 1.

Illustration of the experimental setup. The ball is set in motion by the release of a servo arm (1). The ball rolls down the incline (2) and through the light gates, which record its velocity and notify the gripper’s controller (3). The gripper grasps (or attempts to grasp) the ball and the trapdoor is released to ensure that the full mass of the ball is supported by the gripper (4).

Table 1.

The mean speed, standard deviation and standard error of the moving ball measured by the light gates for three different elevations of the incline. 200 tests were performed for each incline setting.

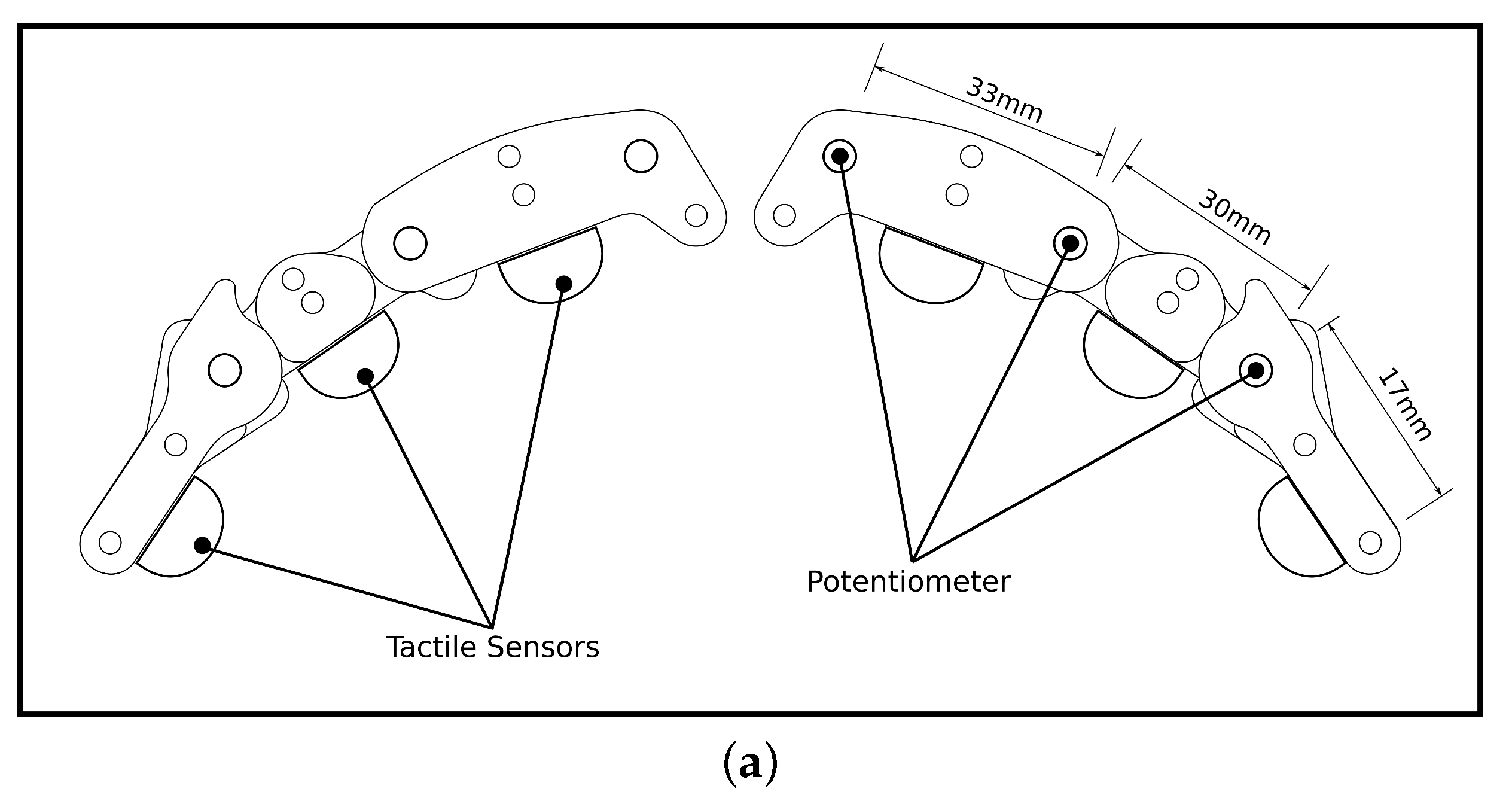

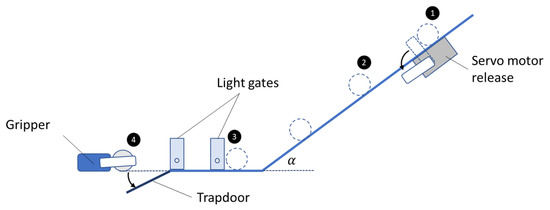

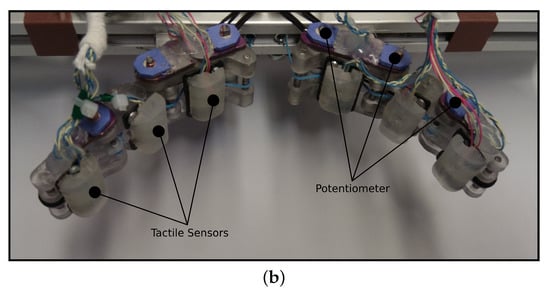
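The summary statistics reported for the light-gate measurements (mean, standard deviation, standard error and relative standard deviation) can be reproduced with a few lines of code. The sketch below is illustrative only: the function name `summarise_speeds` and the sample readings are hypothetical, and it assumes the sample (n - 1) standard deviation, which the text does not specify.

```python
import math
import statistics


def summarise_speeds(speeds_m_s):
    """Return mean, sample SD, standard error and relative SD (%) of speed samples."""
    n = len(speeds_m_s)
    mean = statistics.fmean(speeds_m_s)
    sd = statistics.stdev(speeds_m_s)      # sample standard deviation (n - 1); an assumption
    se = sd / math.sqrt(n)                 # standard error of the mean
    rsd_percent = 100.0 * sd / mean        # relative standard deviation
    return {"n": n, "mean": mean, "sd": sd, "se": se, "rsd_percent": rsd_percent}


# Hypothetical light-gate readings in m/s; NOT data from the study.
example_speeds = [0.81, 0.82, 0.83, 0.82, 0.82]
summary = summarise_speeds(example_speeds)
print(summary)
```

A relative standard deviation is simply the standard deviation expressed as a percentage of the mean, which makes the repeatability at the three incline settings directly comparable.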

3.2. Implementation of 2-Finger Gripper

Grasping was achieved using a two-finger gripper positioned along the horizontal plane and located adjacent to the light gates. Each finger had 3 degrees of freedom and was controlled by an under-actuated cable-driven mechanism. A servo actuator was used to control closing of each finger, while the restoring action was provided by a spring connected in an antagonistic configuration. Tactile sensing was implemented using magnets embedded in the silicone fingertips (Polycraft T-15 RTV) and placed over a Hall effect sensor (Adafruit MLX90393), similar to the approaches used in [39,40]. An air gap between the sensor and silicone was used to increase sensitivity, as described previously in [41]. The gripper was mounted to a servo-actuated carriage on a linear rail that ran perpendicular to the direction of motion of the ball, thus enabling the gripper to move laterally during the grasp. The layout of the gripper and a photo of the embodiment used in this experiment are given in Figure 2.

Figure 2.

Under-actuated two-finger pincer gripper with distributed tactile sensing, shown as (a) diagram, (b) real-world gripper.
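One plausible way to turn a magnet-over-Hall-sensor signal into a contact event is to compare each reading against a resting baseline. The text does not describe how contact was detected, so the sketch below is an assumption throughout: the function names, the threshold and the readings (in arbitrary units) are hypothetical, and no sensor driver API is used.

```python
from statistics import fmean


def calibrate_baseline(no_contact_samples):
    """Average a few no-contact readings to estimate the resting field value."""
    return fmean(no_contact_samples)


def detect_contact(readings, baseline, threshold):
    """Return the index of the first reading that deviates from the baseline
    by more than the threshold, or None if no reading does."""
    for index, value in enumerate(readings):
        if abs(value - baseline) > threshold:
            return index
    return None


# Hypothetical field readings in arbitrary units; NOT data from the study.
resting = [100.0, 100.4, 99.8, 100.2, 99.6]
baseline = calibrate_baseline(resting)
stream = [100.1, 99.9, 100.3, 103.5, 110.2]
first_contact = detect_contact(stream, baseline, threshold=2.0)
print(first_contact)
```

In a scheme like this, the threshold trades sensitivity against false triggers from sensor noise, so it would need to be tuned for the specific fingertip.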

3.3. Grasping Strategies

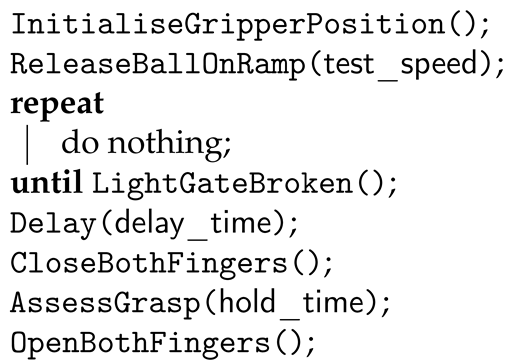

Two different grasping strategies were evaluated. The first strategy involved initiating the grasp based on a prediction of when the object would contact the gripper; this approach served as a proxy for current approaches that rely solely on object tracking data to determine the grasp initiation time. The light gate was used to determine the proximity of the ball approaching the gripper; this allowed for a high-resolution estimate of the position of the ball as it approached the gripper, while maintaining a lower experimental complexity compared to a vision-based object tracking system. This, in turn, allowed for different grasp timings to be tested in an accurate, repeatable way. Determination of the optimum time to initiate the grasp is complex, and subject to change with each set of experimental parameters. To contend with this, preliminary testing was conducted to approximate the optimum range of timings across the range of experimental conditions. Based on this, three grasp initiation timings were chosen, corresponding to 0 ms, 5 ms and 10 ms after passing the light gate. This procedure enabled results to be compared with the reactive strategy, with the understanding that the time offset closest to the optimum grasp initiation time will change depending on the set of experimental conditions (ball speed and location of gripper–object contact points). In this way, the optimum grasp timing, the sensitivity to deviation from this timing and comparison with the reactive strategy can be determined. Details of the implementation of the predictive strategy are given in the form of pseudocode in Algorithm 1.

Algorithm 1: Pseudocode describing the implementation of the predictive strategy.
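The timing logic of the predictive strategy, as described in the text, can be sketched as a small state machine: record when the ball passes the light gate, then trigger the grasp once a fixed delay has elapsed. This is a minimal illustration rather than the authors' implementation; the class name `PredictiveGraspTimer`, its methods and the millisecond timestamps are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PredictiveGraspTimer:
    """Triggers the grasp a fixed delay after the ball passes the light gate."""
    delay_ms: float                        # 0, 5 or 10 ms in the experiment
    gate_time_ms: Optional[float] = None   # set when the light gate fires
    fired: bool = False

    def on_light_gate(self, now_ms: float) -> None:
        """Record the time at which the ball passed the light gate (first event only)."""
        if self.gate_time_ms is None:
            self.gate_time_ms = now_ms

    def should_close(self, now_ms: float) -> bool:
        """Return True exactly once, as soon as the delay has elapsed."""
        if self.fired or self.gate_time_ms is None:
            return False
        if now_ms - self.gate_time_ms >= self.delay_ms:
            self.fired = True
            return True
        return False


# Simulated control loop with hypothetical timestamps in milliseconds.
timer = PredictiveGraspTimer(delay_ms=5.0)
timer.on_light_gate(now_ms=100.0)
events = [timer.should_close(t) for t in (102.0, 104.0, 105.0, 106.0)]
print(events)
```

Note that this strategy uses no information from the fingers: both fingers would close with a predefined motion once `should_close` returns True.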

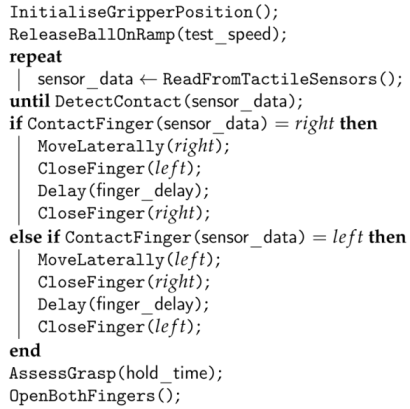

The second grasping strategy involved a reactive control system that used feedback from tactile sensing to initiate and adapt the grasping motion. This algorithm was achieved through the implementation of three basic heuristics:

- The grasp was triggered when the object first made contact with any of the tactile sensors in the gripper;

- Upon detection of contact with an object, the gripper moved laterally in an attempt to centre the object in the gripper;

- To minimise forces exerted on the ball at contact with the gripper, the closing motion of the finger that first comes into contact with the ball was delayed by 3 ms relative to the other finger. This duration was empirically determined through a process of trial and error.

Details of the implementation of the reactive strategy are given in the form of pseudocode in Algorithm 2.

Algorithm 2: Pseudocode describing the implementation of the reactive grasping strategy.
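The three heuristics of the reactive strategy can be combined into a single planning step that runs when contact is first detected. The sketch below is an interpretation under stated assumptions, not the authors' code: the names `ReactivePlan` and `plan_reactive_grasp`, the left/right labels, the sign convention for the lateral shift and the idea that the contact offset is known at trigger time are all hypothetical. Only the 3 ms delay comes from the text.

```python
from dataclasses import dataclass

FIRST_CONTACT_DELAY_MS = 3.0  # heuristic 3: delay for the finger touched first


@dataclass(frozen=True)
class ReactivePlan:
    lateral_shift_mm: float   # carriage move intended to centre the ball
    close_left_at_ms: float   # time at which the left finger starts closing
    close_right_at_ms: float  # time at which the right finger starts closing


def plan_reactive_grasp(contact_finger: str,
                        contact_offset_mm: float,
                        contact_time_ms: float) -> ReactivePlan:
    """Build a grasp plan at the moment of first tactile contact (heuristic 1)."""
    if contact_finger not in ("left", "right"):
        raise ValueError("contact_finger must be 'left' or 'right'")
    # Heuristic 2: shift the gripper toward the contact side to centre the ball.
    # Assumed sign convention: negative = toward the left finger.
    sign = -1.0 if contact_finger == "left" else 1.0
    lateral_shift = sign * contact_offset_mm
    # Heuristic 3: the finger touched first closes later than the other finger.
    delayed = contact_time_ms + FIRST_CONTACT_DELAY_MS
    if contact_finger == "left":
        return ReactivePlan(lateral_shift, delayed, contact_time_ms)
    return ReactivePlan(lateral_shift, contact_time_ms, delayed)


# Hypothetical event: ball touches the left finger 30 mm from centre at t = 200 ms.
plan = plan_reactive_grasp("left", 30.0, 200.0)
print(plan)
```

Separating the plan from its execution keeps the decision logic easy to test without hardware; on a real gripper the plan would be handed to the servo and carriage controllers.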

3.4. Experimental Procedure

For each test, the ball was placed on the incline and held in place by one of the release servos. On command, the servo released the ball, allowing it to accelerate down the incline. The ball then transitioned to the flat plane, where it passed through the light gates before the gripper would attempt to grasp it using one of the two control approaches outlined in Section 3.3. After the attempt, the platform on which the ball was rolling was lowered to remove any ground support force. The grasp was deemed a success if the gripper was able to support the full weight of the ball for 5 s.

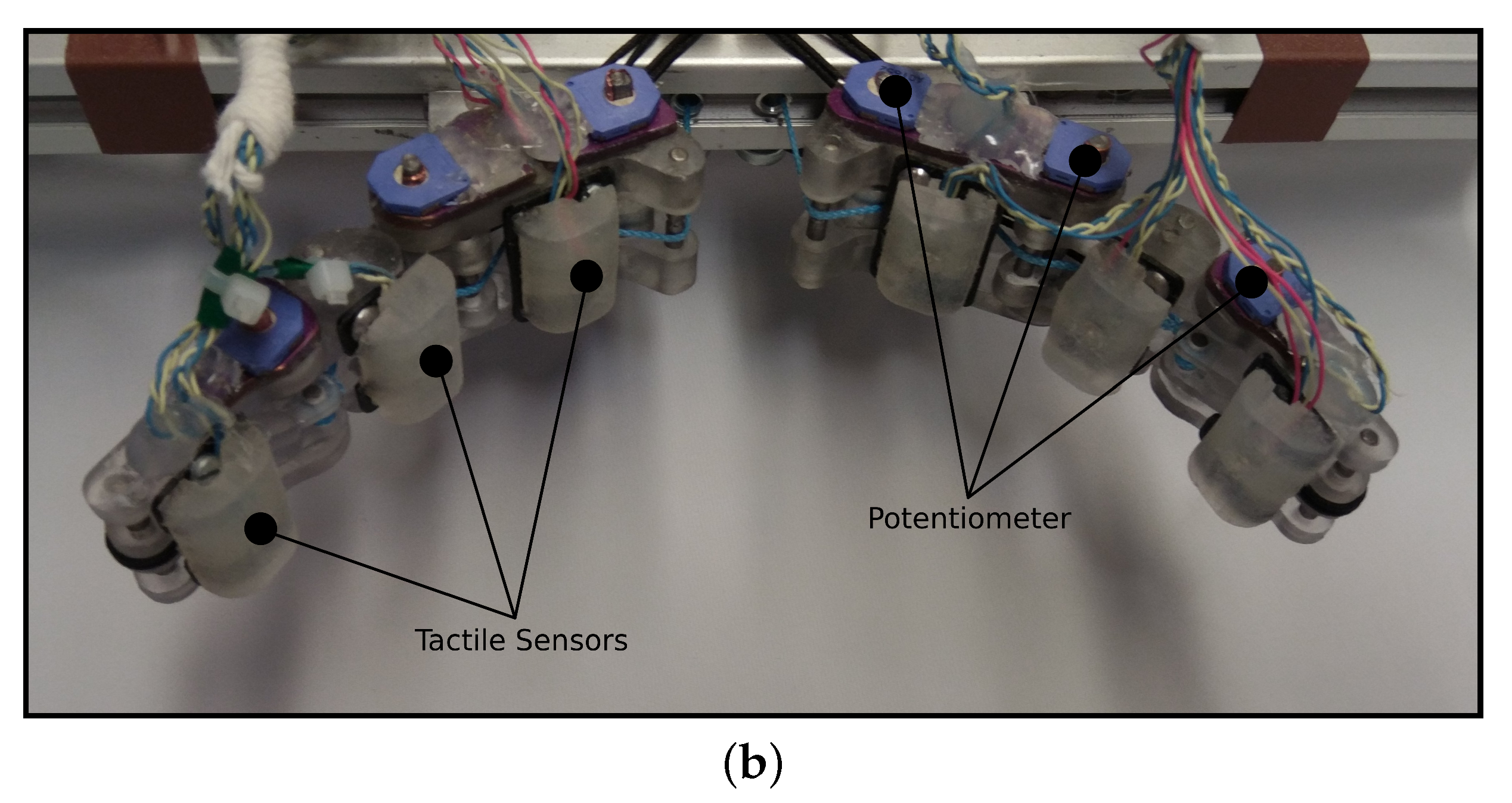

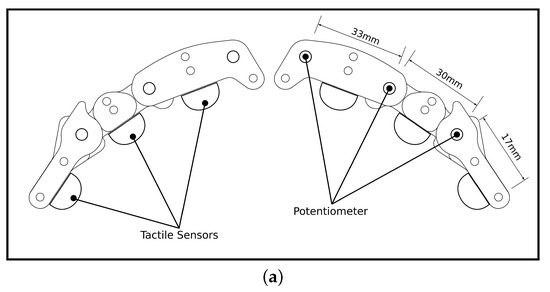

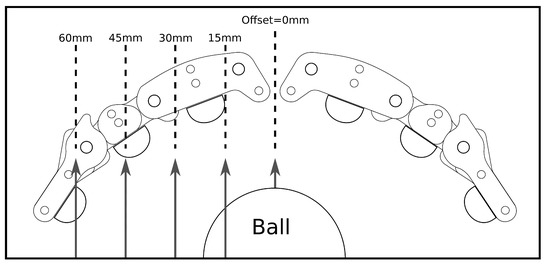

The robustness of the grasp to the location on the finger that the moving object made contact was tested systematically at 5 equal intervals of 15 mm (Figure 3). This was intended to replicate small positional errors in the pose of the gripper at the interception point of the object, and allowed for an examination of each strategy’s robustness to those errors. A video illustrating the testing procedure is presented in the Supplementary Materials.

Figure 3.

Illustration of the different gripper–object contact points that we tested in the experiment.

4. Results

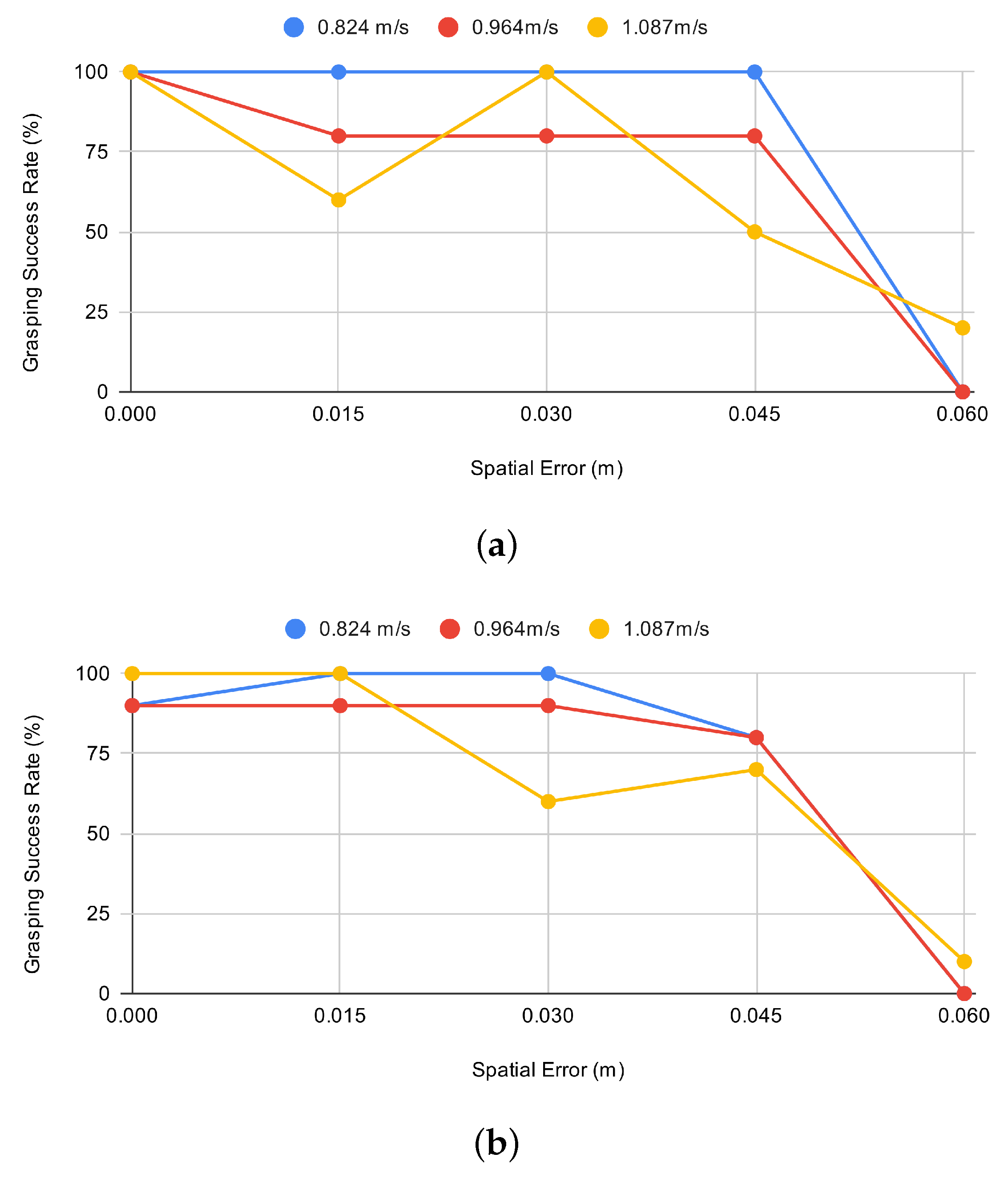

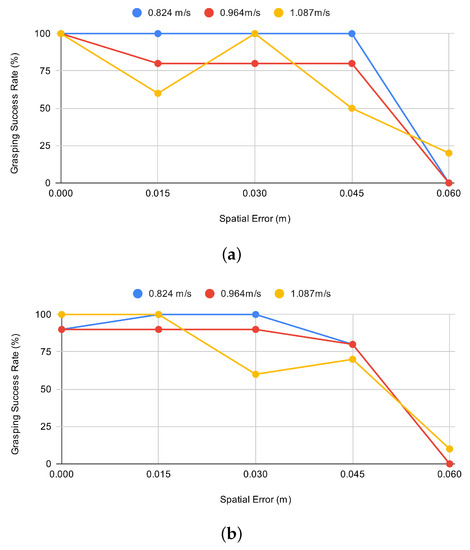

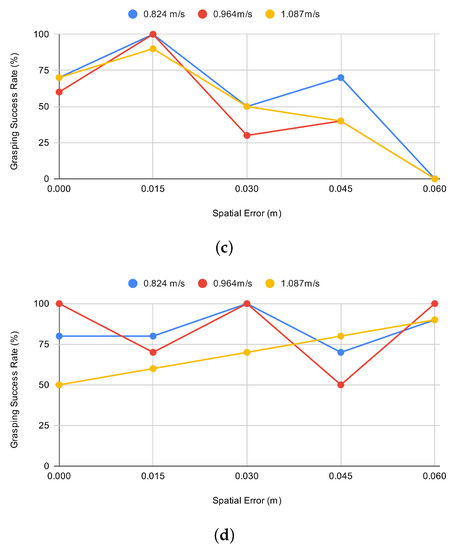

A series of experiments was conducted to compare the performance of the reactive control strategy that used tactile sensor data to initiate the grasp with three variants of the predictive strategy that attempted the grasp based solely on anticipation of when the object would contact the gripper. The performance of each strategy was measured for different object speeds (0.82 m/s, 0.96 m/s and 1.09 m/s) and at five finger–object contact points (0 mm, 15 mm, 30 mm, 45 mm and 60 mm). Ten tests were performed for each set of conditions, with the dependent variable being whether the grasp was successful or not. The grasping performance of each control strategy for each of the test conditions is illustrated in Figure 4.

Figure 4.

Grasping success rates at different gripper–object contact locations (spatial error) for each testing condition at three different ball speeds: (a) object tracking strategy with grasp initiated 0 ms after passing through timing gate; (b) object tracking strategy with grasp initiated 5 ms after passing through timing gate; (c) object tracking strategy with grasp initiated 10 ms after passing through timing gate; (d) reactive grasping strategy using tactile sensing.

To determine which controller performed best across all test conditions, data from all experiments (i.e., different ball speeds and gripper–object contact points) were aggregated for each control strategy (Table 2). From 150 grasping trials, the reactive controller that used tactile sensing achieved the best performance (79.3% success rate), followed by the predictive controller that initiated the grasp immediately after passing the light gate (71.3% success rate), 5 ms after passing the light gate (70.6% success rate) and 10 ms after passing the light gate (51.3% success rate).
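The figure of 150 trials per strategy follows from the experimental design: three ball speeds, five contact points and ten repeats per condition. The short sketch below reproduces that arithmetic and shows how a success count maps to a percentage. The function names are hypothetical, and the count of 119 successes is inferred here from the reported 79.3% rate; it is not a figure stated in the text.

```python
SPEEDS_M_S = (0.82, 0.96, 1.09)
CONTACT_OFFSETS_MM = (0, 15, 30, 45, 60)
REPEATS_PER_CONDITION = 10


def trials_per_strategy(speeds=SPEEDS_M_S,
                        offsets=CONTACT_OFFSETS_MM,
                        repeats=REPEATS_PER_CONDITION):
    """Number of grasp attempts made with one control strategy."""
    return len(speeds) * len(offsets) * repeats


def success_rate_percent(successes, trials):
    """Success rate as a percentage of all trials."""
    return 100.0 * successes / trials


total_trials = trials_per_strategy()
# Inferred, not reported: the whole number of successes closest to 79.3% of the trials.
implied_successes = round(0.793 * total_trials)
rate = success_rate_percent(implied_successes, total_trials)
print(total_trials, implied_successes, round(rate, 1))
```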

Table 2.

Summary of grasp performance across all test conditions for each control strategy, where ‘Delay’ is the delay after passing the light gate before the grasp is initiated for the ‘Predictive’ condition.

For a more granular analysis, a chi-square test of independence was performed to examine the relationship between the grasping method and grasp success for each test condition. These results are presented in Table 3.
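For readers unfamiliar with the test, the sketch below computes a Pearson chi-square statistic and p-value for a 2×2 table of strategy against grasp outcome, using only the standard library. It is an illustration under assumptions: the counts are hypothetical rather than taken from the study, no continuity correction is applied, and the text does not say whether the authors used one. With one degree of freedom, the p-value equals erfc(sqrt(χ²/2)).

```python
import math


def chi_square_2x2(a_success, a_fail, b_success, b_fail):
    """Pearson chi-square test of independence for a 2x2 table (no continuity correction).

    Returns (chi2, p_value) with one degree of freedom.
    """
    observed = [[a_success, a_fail], [b_success, b_fail]]
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    n = sum(row_totals)
    if 0 in row_totals or 0 in col_totals:
        raise ValueError("test is undefined when a row or column total is zero")
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed[i][j] - expected) ** 2 / expected
    # Survival function of the chi-square distribution with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p_value


# Hypothetical counts for one test condition (10 trials per strategy);
# NOT data from the study.
chi2, p_value = chi_square_2x2(a_success=9, a_fail=1, b_success=3, b_fail=7)
print(chi2, p_value)
```

With only ten trials per cell, expected counts can fall below five, a situation in which an exact test such as Fisher's is often preferred; the sketch does not address that choice.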

Table 3.

Summary of results from real-world grasping experiments, where ‘Gripper Offset’ is the distance from the centre of the gripper to the point on the finger where the object makes first contact, and ‘Delay’ is the delay after passing the light gate before the grasp is initiated for the ‘Predictive’ condition. An asterisk indicates a statistically significant difference in performance.

5. Discussion

Results from the experiment show that the reactive strategy that initiated and adapted its grip using tactile sensing achieved the highest number of successful grasps, with a success rate of nearly 80%. The three predictive strategies, with grasp initiation delays of 0 ms, 5 ms and 10 ms, achieved success rates of 71.3%, 70.6% and 51.3%, respectively. The reactive controller was found to offer the greatest advantage when the gripper–object contact point was further from the centre of the gripper, where it significantly outperformed the other gripping strategies. These findings support earlier results obtained from a series of equivalent tests in a simulation [35] and verify our original hypothesis that the implementation of a reactive sensing approach that uses tactile sensor data can enable a more adaptive grasp that is more robust to the exact point of the gripper–object contact.

The results in Figure 4 show that the object tracking strategies performed best when the gripper–object contact took place near the centre of the gripper. The performance deteriorated as the gripper–object contact moved towards the extremity of the finger. These findings are consistent with results of simulation-based tests in [35] and confirm our hypothesis that grasping strategies that do not include near-field data are likely to perform poorly when the gripper is not closely aligned with the moving object.

Findings from the predictive controller suggest that the best grasp performance was achieved when the grasp was initiated as soon as the object entered its grasp envelope. This was especially true at higher object speeds, where a delayed grasp response often led to the ball bouncing off the finger on contact and subsequently escaping from the grasp. Since the reactive controller was only initiated on contact with the tactile sensor, it is likely that performance improvements in this control strategy were not due to the optimisation of the grasp initiation, but were attributable to other factors of the grasp behaviour, namely the tendency for the gripper to move laterally to centre the object in the gripper (heuristic 2) and the delay induced in the closing motion of the finger that made contact with the object (heuristic 3). This hypothesis is supported by the data because the reactive grasping strategy was found to perform best when the ball made contact with the fingertips, where the effect of these two behaviours was large, and was found to perform worse when closer to the centre of the gripper, where the behaviour of the system approximated a predictive controller with a longer grasp initiation delay. Sub-optimal grasp initiation may not have been the only factor that affected the performance of the reactive controller. With only three tactile sensors per finger, the response was not consistent for all object–finger contact locations; this issue could be addressed in future work by increasing the number of sensors in the finger, perhaps by using higher density tactile sensing technology, such as that presented in [7].
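To give a sense of scale for the effect of a delayed grasp, the distance the ball travels during each initiation delay can be estimated from the test speeds. The sketch below assumes the ball moves at constant speed after the light gate, which is a simplification; the function name `travel_mm` is hypothetical. Conveniently, a speed in m/s multiplied by a time in ms gives a distance in mm.

```python
SPEEDS_M_S = (0.82, 0.96, 1.09)
DELAYS_MS = (0, 5, 10)
BALL_DIAMETER_MM = 57.0


def travel_mm(speed_m_s, delay_ms):
    """Distance covered at constant speed: (m/s) * (ms) = mm."""
    return speed_m_s * delay_ms


# Distance travelled for every combination of test speed and initiation delay.
travel_table = {
    (speed, delay): round(travel_mm(speed, delay), 2)
    for speed in SPEEDS_M_S
    for delay in DELAYS_MS
}

for (speed, delay), distance in travel_table.items():
    fraction = distance / BALL_DIAMETER_MM
    print(f"{speed:.2f} m/s, {delay:2d} ms delay: {distance:5.2f} mm "
          f"({fraction:.0%} of ball diameter)")
```

At the highest test speed, a 10 ms delay corresponds to roughly 10.9 mm of travel, about a fifth of the ball's 57 mm diameter, which is consistent with the observation that late grasps at higher speeds often failed.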

In this study, the benefits of incorporating tactile feedback in the grasping strategy were evident. However, this research also serves to highlight several avenues for future research. The testing apparatus presented enabled systematic testing of a spherical object, where the speed and trajectory of the object could be controlled to a high degree of accuracy. Future work aims to expand upon this to enable the testing of a range of non-spherical objects, such that the orientation can also be controlled as an independent variable. Besides the shape and orientation of the target object, there are many other object parameters that could be considered in future research; these include, but are not limited to, the size, mass, texture and spin.

The tactile sensors used in this research were inspired by examples found in prior literature [41] that exhibit a high sensitivity with relatively little noise. However, future research should examine how different types and configurations of tactile sensing affect the performance. This includes, but is not limited to, different sensing mechanisms, geometry, sensing density, sensitivity and three-axis tactile sensing. There is a need to explore how the grasp performance could be further improved using near-field proximity sensing to trigger an earlier grasp initiation than is possible using tactile sensing alone. A strategy that considers time-series data (as opposed to data about the initial point of contact) could also enable further performance improvements. Machine learning techniques could improve the performance and should be investigated. Finally, this research focused on the problem of grasping a sphere rolling on a horizontal plane; however, future research could apply the same techniques to more complex grasp objects.

6. Conclusions

In this paper, it was hypothesised that the incorporation of a reactive control strategy that used near-field sensing to initiate and adapt a robot’s grasp could lead to a better and more robust performance for applications involving grasping moving objects. We presented the design of a testing apparatus and procedure to systematically test this hypothesis using a two-finger gripper. In our experiments, we compared the performance of a reactive grasping strategy that used tactile sensing to initiate the grasp with a traditional predictive strategy that used an estimate of the object–gripper contact point to initiate the grasp. Analysis of the data indicates that, in order to achieve the best performance, optimum grasp initiation should take place as soon as the moving object enters the gripper’s grasp envelope. Although the reactive gripping strategy initiated the grasp later than the other grasps, the effect of adaptive behaviours, such as differentially regulating the finger closure and moving the gripper laterally to centre the object within the gripper, led to it achieving the best overall grasp success rate of any of the strategies tested, with a significantly better grasping performance when the object–gripper contact point was towards the extremity of the finger. This demonstrates the importance of near-field sensing in enabling a more robust and adaptive grasping of moving objects.

Supplementary Materials

The following are available online at https://www.mdpi.com/article/10.3390/s21248339/s1, Video S1: Video Illustrating the Testing Procedure.

Author Contributions

Conceptualisation, P.L.; methodology, P.L.; software, P.L.; validation, P.L.; formal analysis, P.L., M.F.C. and C.M.; investigation, P.L.; resources, P.L.; data curation, P.L.; writing—original draft preparation, P.L.; writing—review and editing, P.L., M.F.C. and C.M.; visualisation, P.L.; supervision, C.M.; project administration, C.M.; funding acquisition, P.L. and C.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Irish Research Council and Ubotica under their Enterprise Partnership scheme.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data collected during this research is presented in full in this manuscript.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

- Bäuml, B.; Birbach, O.; Wimböck, T.; Frese, U.; Dietrich, A.; Hirzinger, G. Catching flying balls with a mobile humanoid: System overview and design considerations. In Proceedings of the 2011 11th IEEE-RAS International Conference on Humanoid Robots, Bled, Slovenia, 26–28 October 2011; pp. 513–520. [Google Scholar] [CrossRef]

- Kim, S.; Shukla, A.; Billard, A. Catching objects in flight. IEEE Trans. Robot. 2014, 30, 1049–1065. [Google Scholar] [CrossRef]

- Bäuml, B.; Wimböck, T.; Hirzinger, G. Kinematically optimal catching a flying ball with a hand-arm-system. In Proceedings of the IEEE/RSJ 2010 International Conference on Intelligent Robots and Systems, IROS 2010—Conference Proceedings, Taipei, Taiwan, 18 October 2010; pp. 2592–2599. [Google Scholar] [CrossRef]

- Wei, H.; Wang, L. A Visual Cortex-Inspired Imaging-Sensor Architecture and Its Application in Real-Time Processing. Sensors 2018, 18, 2116. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Billard, A.; Kragic, D. Trends and challenges in robot manipulation. Science 2019, 364, 1149. [Google Scholar] [CrossRef]

- Kober, J.; Glisson, M.; Mistry, M. Playing catch and juggling with a humanoid robot. In Proceedings of the IEEE-RAS International Conference on Humanoid Robots, Osaka, Japan, 29 November 2012; pp. 875–881. [Google Scholar] [CrossRef]

- Tomo, T.P.; Schmitz, A.; Wong, W.K.; Kristanto, H.; Somlor, S.; Hwang, J.; Jamone, L.; Sugano, S. Covering a Robot Fingertip With uSkin: A Soft Electronic Skin With Distributed 3-Axis Force Sensitive Elements for Robot Hands. IEEE Robot. Autom. Lett. 2018, 3, 124–131. [Google Scholar] [CrossRef]

- Xu, D.; Loeb, G.E.; Fishel, J.A. Tactile identification of objects using Bayesian exploration. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 3056–3061. [Google Scholar] [CrossRef]

- Bauza, M.; Canal, O.; Rodriguez, A. Tactile Mapping and Localization from High-Resolution Tactile Imprints. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 3811–3817. [Google Scholar]

- Dallaire, P.; Giguère, P.; Émond, D.; Chaib-draa, B. Autonomous tactile perception: A combined improved sensing and Bayesian nonparametric approach. Robot. Auton. Syst. 2014, 62, 422–435. [Google Scholar] [CrossRef]

- Gao, Y.; Hendricks, L.A.; Kuchenbecker, K.J.; Darrell, T. Deep learning for tactile understanding from visual and haptic data. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 536–543. [Google Scholar] [CrossRef] [Green Version]

- Blanes, C.; Cortés, V.; Ortiz, C.; Mellado, M.; Talens, P. Non-Destructive Assessment of Mango Firmness and Ripeness Using a Robotic Gripper. Food Bioprocess Technol. 2015, 8, 1914–1924. [Google Scholar] [CrossRef] [Green Version]

- Khamis, H.; Xia, B.; Redmond, S.J. Real-time Friction Estimation for Grip Force Control. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 1608–1614. [Google Scholar] [CrossRef]

- Sutanto, G.; Ratliff, N.; Sundaralingam, B.; Chebotar, Y.; Su, Z.; Handa, A.; Fox, D. Learning Latent Space Dynamics for Tactile Servoing. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 3622–3628. [Google Scholar]

- Lepora, N.F.; Aquilina, K.; Cramphorn, L. Exploratory Tactile Servoing With Active Touch. IEEE Robot. Autom. Lett. 2017, 2, 1156–1163. [Google Scholar] [CrossRef] [Green Version]

- Kumar, V.; Hermans, T.; Fox, D.; Birchfield, S.; Tremblay, J. Contextual Reinforcement Learning of Visuo-tactile Multi-fingered Grasping Policies. arXiv 2019, arXiv:1911.09233. [Google Scholar]

- Lepora, N.F.; Church, A.; de Kerckhove, C.; Hadsell, R.; Lloyd, J. From Pixels to Percepts: Highly Robust Edge Perception and Contour Following Using Deep Learning and an Optical Biomimetic Tactile Sensor. IEEE Robot. Autom. Lett. 2019, 4, 2101–2107.

- Hauser, K. Bayesian Tactile Exploration for Compliant Docking With Uncertain Shapes. IEEE Trans. Robot. 2019, 35, 1084–1096.

- Funabashi, S.; Gang, Y.; Geier, A.; Schmitz, A.; Sugano, S. Morphology-Specific Convolutional Neural Networks for Tactile Object Recognition with a Multi-Fingered Hand. In Proceedings of the International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 57–63.

- Cockburn, D.; Roberge, J.P.; Le, T.H.L.; Maslyczyk, A.; Duchaine, V. Grasp stability assessment through unsupervised feature learning of tactile images. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 2238–2244.

- Bekiroglu, Y.; Damianou, A.; Detry, R.; Stork, J.A.; Kragic, D.; Ek, C.H. Probabilistic consolidation of grasp experience. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 193–200.

- Delgado, A.; Jara, C.A.; Torres, F. In-hand recognition and manipulation of elastic objects using a servo-tactile control strategy. Robot. Comput.-Integr. Manuf. 2017, 48, 102–112.

- Bühler, M.; Koditschek, D.E.; Kindlmann, P.J. Planning and Control of Robotic Juggling Tasks. In The Fifth International Symposium on Robotics Research; MIT Press: Cambridge, MA, USA, 1990; pp. 321–332.

- Luo, R.C.; Liao, C. Robotic conveyor tracking with dynamic object fetching for industrial automation. In Proceedings of the 2017 IEEE 15th International Conference on Industrial Informatics (INDIN), Emden, Germany, 24–26 July 2017; pp. 369–374.

- Salehian, S.S.M.; Khoramshahi, M.; Billard, A. A Dynamical System Approach for Softly Catching a Flying Object: Theory and Experiment. IEEE Trans. Robot. 2016, 32, 462–471.

- Carneiro, D.; Silva, F.; Georgieva, P. The role of early anticipations for human–robot ball catching. In Proceedings of the 2018 IEEE International Conference on Autonomous Robot Systems and Competitions (ICARSC), Torres Vedras, Portugal, 25–27 April 2018; pp. 10–16.

- Kober, J.; Mülling, K.; Krömer, O.; Lampert, C.H.; Schölkopf, B.; Peters, J. Movement templates for learning of hitting and batting. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–8 May 2010; pp. 853–858.

- Senoo, T.; Namiki, A.; Ishikawa, M. Ball control in high-speed batting motion using hybrid trajectory generator. In Proceedings of the 2006 IEEE International Conference on Robotics and Automation (ICRA 2006), Orlando, FL, USA, 15–19 May 2006; pp. 1762–1767.

- Riley, M.; Atkeson, C.G. Robot catching: Towards engaging human-humanoid interaction. Auton. Robot. 2002, 12, 119–128.

- Escaida Navarro, S.; Weiss, D.; Stogl, D.; Milev, D.; Hein, B. Tracking and Grasping of Known and Unknown Objects from a Conveyor Belt. In Proceedings of the ISR/Robotik 2014; 41st International Symposium on Robotics, Munich, Germany, 2–3 June 2014; pp. 1–8.

- Smith, C.; Christensen, H.I. Using COTS to Construct a High Performance Robot Arm. In Proceedings of the 2007 IEEE International Conference on Robotics and Automation, Roma, Italy, 10 April 2007; pp. 4056–4063.

- Cuevas-Velasquez, H.; Li, N.; Tylecek, R.; Saval-Calvo, M.; Fisher, R.B. Hybrid Multi-camera Visual Servoing to Moving Target. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1132–1137.

- Imai, Y.; Namiki, A.; Hashimoto, K.; Ishikawa, M. Dynamic active catching using a high-speed multifingered hand and a high-speed vision system. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA’04), New Orleans, LA, USA, 26 April–1 May 2004; Volume 2, pp. 1849–1854.

- Koyama, K.; Murakami, K.; Senoo, T.; Shimojo, M.; Ishikawa, M. High-Speed, Small-Deformation Catching of Soft Objects Based on Active Vision and Proximity Sensing. IEEE Robot. Autom. Lett. 2019, 4, 578–585.

- Lynch, P.; McGinn, C. Using Tactile Sensing to Improve Performance when Grasping Moving Objects. In Proceedings of the 2021 SMC Conference, IEEE Systems, Man and Cybernetics, Melbourne, Australia, 17–20 October 2021.

- Joergensen, J.A.; Ellekilde, L.P.; Petersen, H.G. RobWorkSim—An Open Simulator for Sensor based Grasping. In Proceedings of the ISR 2010 (41st International Symposium on Robotics) and ROBOTIK 2010 (6th German Conference on Robotics), Munich, Germany, 7–9 June 2010; pp. 1–8.

- Agboh, W.C.; Dogar, M.R. Real-Time Online Re-Planning for Grasping Under Clutter and Uncertainty. In Proceedings of the 2018 IEEE-RAS 18th International Conference on Humanoid Robots (Humanoids), Beijing, China, 6–9 November 2018; pp. 1–8.

- Tremblay, J.; To, T.; Sundaralingam, B.; Xiang, Y.; Fox, D.; Birchfield, S. Deep Object Pose Estimation for Semantic Robotic Grasping of Household Objects. arXiv 2018, arXiv:1809.10790.

- Ledermann, C.; Wirges, S.; Oertel, D.; Mende, M.; Woern, H. Tactile sensor on a magnetic basis using novel 3D Hall sensor - First prototypes and results. In Proceedings of the INES 2013—IEEE 17th International Conference on Intelligent Engineering Systems, San Jose, Costa Rica, 19–21 June 2013; pp. 55–60.

- Jamone, L.; Metta, G.; Nori, F.; Sandini, G. James: A humanoid robot acting over an unstructured world. In Proceedings of the 2006 6th IEEE-RAS International Conference on Humanoid Robots, HUMANOIDS, Genova, Italy, 4–6 December 2006; pp. 143–150.

- Jamone, L.; Natale, L.; Metta, G.; Sandini, G. Highly sensitive soft tactile sensors for an anthropomorphic robotic hand. IEEE Sens. J. 2015, 15, 4226–4233.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).