1. Introduction

Emotions are an integral part of everyday life and represent conscious and/or unconscious mental reactions to events, objects, and situations. Emotions combine feelings, thoughts, and behavior and reflect people’s psychophysiological reactions. Emotions affect the way people think, comply, and feel about people, things, and events. The most widely used emotion classification models are the Ekman and the pleasure, arousal, dominance (PAD) models [1]. The PAD emotional state model was developed by Albert Mehrabian and James A. Russell to define and measure emotional states. The PAD model describes continuous emotion in three dimensions: pleasure, arousal, and dominance [2,3,4]. Initially, it was used in an environmental psychology theory with the central assumption that environments influence individuals through their emotional effect [2]. PAD is a psychological model for estimating all the emotional states of humans with respect to pleasure, arousal, and dominance. Paul Ekman and his colleagues concluded that there are six basic emotions: disgust, fear, anger, happiness, sadness, and surprise [5], where each emotion has particular characteristics that allow it to be expressed to varying degrees [6]. Since emotions are basically genetically determined, distinct emotions are perceived similarly throughout most cultures or nations [7]. Many works employ dimensional representations to analyze human emotions along several emotional dimensions. Emotions are composed of five coordinated activities: mental situation assessment, clinical findings (central and autonomic nervous system response), actions, facial expressions, and thoughts [8]. Emotion recognition plays an indispensable role in a multitude of domains. For example, emotion detection plays an essential role in medicine, specifically for patients with psycho-neural disorders, learning disabilities, or autism. Children with autism spectrum disorder (ASD) typically find it difficult to understand, communicate, and regulate feelings [9]. For advanced human-computer interface (HCI) designs, the interaction between robots and humans can be more realistic and dynamic if the emotional state of humans can be determined accurately. In this way, emotional information can strengthen human-machine engagement during conversation.

The importance and diverse use of emotions in artificial intelligence, psychology, cognitive neuroscience, advanced robotics, etc., has made emotion recognition extremely significant. Consequently, several methods have been developed for emotion recognition over the past years. Predominantly, such methods are based on individual physical signs that include different expressions, speech, body movement, gestures, etc. [10]. So, facial expressions, speech, behavior, and physiological signals can be used for emotion recognition [10,11,12]. Depending upon the nature of the data used for emotion recognition, these methods fall under subjective and objective categories. The first three methods are subjective because people can easily hide their genuine emotions by deliberately changing their voice, manipulating their facial expression, and altering their behavior [13]. Physiological signal-based methods are objective and more reliable; they use signals generated by the central nervous system, such as the electroencephalogram (EEG), for emotion recognition. Objective methods are less susceptible to manipulation and show better performance [14]. Thus, mapping emotions to physiological signals intuitively seems more reasonable. Several methods record physiological signals such as galvanic skin response (GSR), EEG, electrocardiogram (ECG), and electromyography (EMG) for the electrical activity of the skin, brain, heart, and muscles, respectively. Understanding emotional responses using physiological signals is promising because they reflect unconscious reactions and are not consciously manipulated by humans [15].

Emotion recognition methods using physiological signals involve invasive technologies or on-body sensors for signal measurement, which makes them prone to error. For example, the electroencephalogram cap used in EEG comprises electrodes, amplifiers, and analog-to-digital converters for recording human brain activity and must be placed on the human head for this purpose [16]. EEG signal analysis is challenging because the signals are non-stationary and influenced by complex environmental factors. EEG signals, for example, are noisy and highly susceptible to environmental interference due to their low amplitude (i.e., 50 μV to 100 μV) [14]. Similarly, GSR records skin conductance that occurs due to sweat gland activity and involves skin electrodes mounted on hand and foot regions. Research shows that the data and the accuracy of GSR-based methods are sensitive to several factors such as inappropriately worn devices, unrestricted movements of participants, gender, etc. [17,18]. Additionally, many people do not feel comfortable with on-body sensors, and their movements introduce noise and error into the collected data, which affects the performance of emotion recognition [19]. In view of the challenges and limitations associated with invasive emotion recognition approaches, a non-invasive emotion recognition method is a compelling necessity. This study utilizes impulse radio ultra-wideband (IR-UWB) radar to propose a non-invasive emotion recognition method and makes the following contributions:

A non-invasive emotion recognition method is proposed using IR-UWB radar. Emotion recognition is carried out by measuring the chest movement of the subjects without involving on-body sensors or invasive technology.

A dataset of IR-UWB data is maintained involving 35 participants in total, including both males and females.

An approach is presented to measure respiration per minute (RPM) from the measured chest-to-radar distance data. Results of the proposed approach are verified against a commercial pulse oximeter.

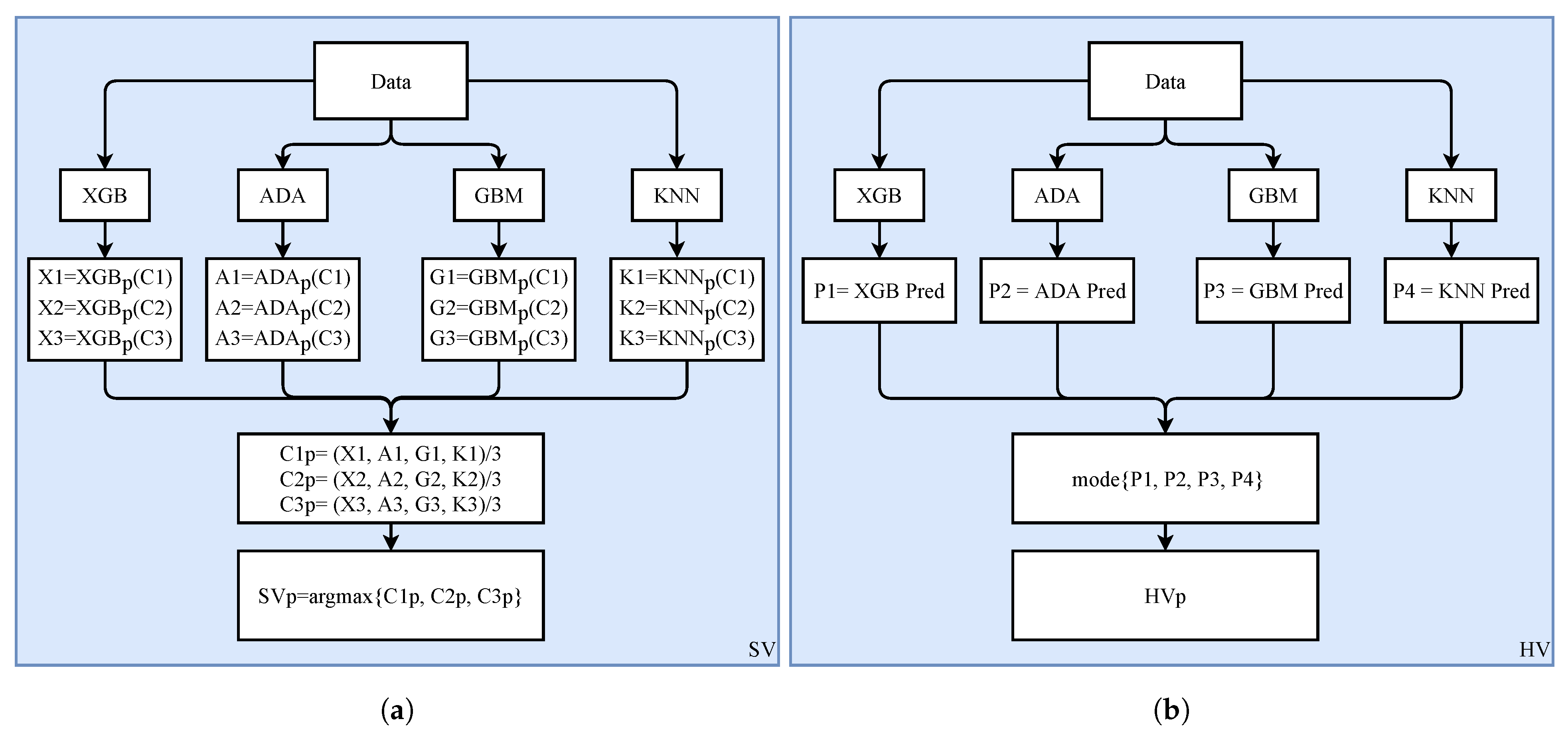

To identify emotions, the IR-UWB data are complemented with a machine learning approach that uses ensemble voting, including both hard voting and soft voting.

Analysis has been carried out for male and female participants separately to present the differences with respect to gender. The emotion recognition performance of the ensemble models is compared with other machine learning models.
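The difference between the hard and soft voting named in the contributions above can be illustrated with a minimal sketch. It is not the study's implementation: the function names `hard_vote` and `soft_vote`, the three base models, and their probability outputs are all hypothetical, and only the Python standard library is used.

```python
from collections import Counter


def hard_vote(predicted_labels):
    """Majority voting: return the label chosen by the most models."""
    # most_common(1) returns the top (label, count) pair; on a tie,
    # the label that appeared first in the input wins.
    return Counter(predicted_labels).most_common(1)[0][0]


def soft_vote(model_probabilities):
    """Average class probabilities over models and pick the highest.

    model_probabilities: one dict per model, mapping label -> probability.
    Returns (winning_label, averaged_probabilities).
    """
    n_models = len(model_probabilities)
    labels = model_probabilities[0].keys()
    averaged = {
        label: sum(probs[label] for probs in model_probabilities) / n_models
        for label in labels
    }
    return max(averaged, key=averaged.get), averaged


# Hypothetical outputs of three base models for one sample (not from the study).
example_probabilities = [
    {"happiness": 0.90, "fear": 0.05, "disgust": 0.05},
    {"happiness": 0.40, "fear": 0.45, "disgust": 0.15},
    {"happiness": 0.35, "fear": 0.45, "disgust": 0.20},
]
# Each model's hard prediction is its most probable label.
example_labels = [max(p, key=p.get) for p in example_probabilities]

hard_result = hard_vote(example_labels)
soft_result, soft_average = soft_vote(example_probabilities)
print("hard voting:", hard_result)
print("soft voting:", soft_result)
```

In this made-up example the two rules disagree: two of the three models narrowly favor fear, so hard voting returns fear, while the one confident model pulls the averaged probability toward happiness, so soft voting returns happiness.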

The rest of the paper is structured as follows.

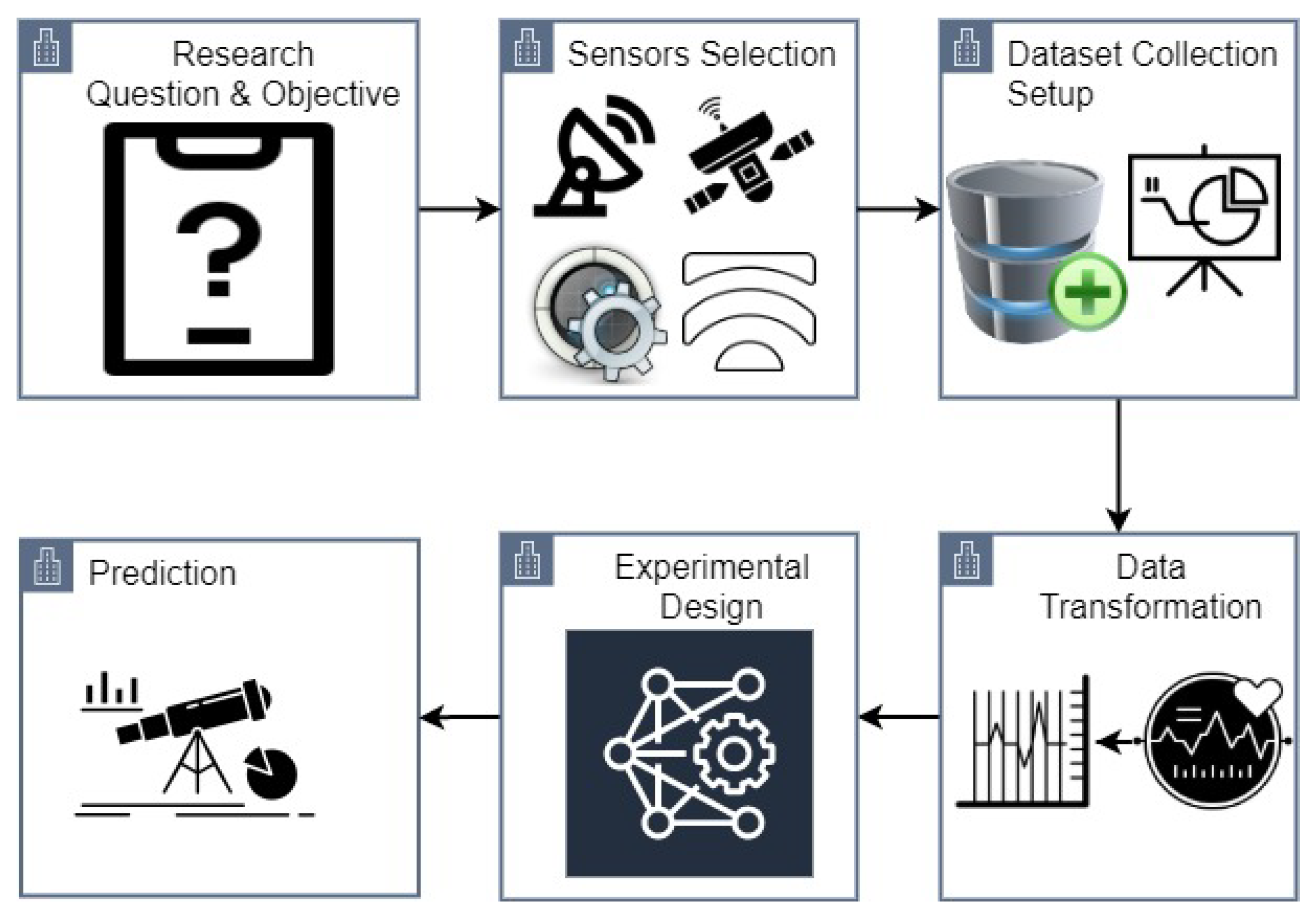

Section 3 discusses important research works related to the current study. The process of data collection using IR-UWB, the proposed research methodology, and its related contents are described in Section 4. Section 5 provides the analysis and discussion of results. In the end, the conclusion is given in Section 6.

3. Related Work

On account of large interest in emotion recognition during the past few years and the importance of respiration rate association with emotions, several studies have presented methods and systems to recognize emotions based on respiration rate.

Augsburg’s dataset of physiological signals has been used for the classification of emotions by [32]. The Augsburg dataset consists of twenty-five records for four emotions, joy, sadness, pleasure, and anger, which were triggered by a musical induction process. Four forms of physiological signals were obtained during this process: ECG, EMG, respiration, and skin conductivity (SC). The ensemble empirical mode decomposition (EEMD) approach is used to extract time-domain non-linear, time-frequency, and intrinsic mode function (IMF) features. The C4.5 decision tree (DT) is used to reduce the feature set to the five optimal features that contribute most to classification. The correct classification rate (CRR) is used to measure performance, and the results show a CRR of 88% using the selected features.

The study [33] uses the dataset for emotion analysis using physiological signals (DEAP), which contains ECG, GSR, blood pressure, breathing, skin temperature (ST), EMG, and electrooculogram signals of thirty-two participants (16 of each gender) aged 19 to 37 years. Data are recorded while the subjects watch forty one-minute music video clips and rate each on a scale of 1-9, from negative/low to positive/high, for arousal, valence, and liking. The study uses only two signals, ECG and respiration, for emotion recognition. Thirteen features are obtained at a sampling rate of 512 Hz. Respiration rate (RR) interval, low frequency (LF), heart rate (HR), high frequency (HF), respiratory sinus arrhythmia (RSA) power, RSA frequency, and RSA amplitude are among the thirteen cardiac features. In addition, breathing frequency and amplitude, the ratio of RSA amplitude to respiratory oscillation, the difference between respiratory and RSA frequency, the phase difference of respiration and RSA, the slope of the phase difference, and the standard deviation are calculated. For emotion classification, a support vector machine (SVM) classifier with a multilayer perceptron kernel is used. Binary classification is performed on the ECG and respiration signals for low/high liking, positive/negative valence, and low/high arousal. Using the ECG signals, accuracy scores of 74%, 71%, and 72% are obtained for liking, arousal, and valence, respectively. Respiration rate shows an accuracy of 73% for liking, 72% for arousal, and 70% for valence. With a combination of HR and RR, the classification accuracy increases to 76%, 74%, and 74% for liking, arousal, and valence, respectively.

Along the same lines, ref. [34] uses various physiological signals such as ECG, ST, GSR, EMG, HR, respiration rate, blood oxygen level, systolic blood pressure (SBP), diastolic blood pressure (DBP), and blood volume pulse (BVP) to distinguish anger, pleasure, and neutral emotions. A total of three stable male subjects aged 18 to 19 years participated in the data collection process. A Zephyr BioPatch chest brace is used for the collection of ECG and respiration signals, while an E4 wristband is used to capture GSR, ST, and BVP. The SBP and DBP are measured using CONTEC, an off-the-shelf Bluetooth-enabled blood pressure device. Blood oxygen level is measured by a pulse oximeter. The Zephyr chest strap is worn under the pectoral muscle for data collection. Blood glucose readings are taken twice, at the beginning and the end of the trial. Baseline signals are captured while the participant is sitting comfortably, followed by inducing the joy emotion using video clips. For inducing anger, cognitive techniques are applied. The physiological signals of each subject are recorded twice in the state of the induced emotion. Four physiological signals, SBP, DBP, EMG, and blood oxygen level, are omitted because they do not contribute much to the classification of joy and anger emotions. Bandpass filtering is applied to the ECG, respiration, GSR, ST, HR, and BVP signals. Because the dataset is limited (two instances for each emotion class), predictive and statistical analysis of the data is carried out. The emotion is classified as happiness when the signal has high GSR, high HR, and moderate respiration values, while anger has low GSR, low ST, high respiration, and moderate HR values.

ECG, RR, blood pressure, and respiration inhalation and exhalation temperature are used for emotion classification in [35]. Several sensors are used to capture four physiological signals from a single subject at the time of emotion induction through a three-minute video clip. The movie clip is played on a laptop at a distance of one meter, and the signals are recorded for one minute. Noise is reduced by applying a low-pass filter to the raw ECG and respiratory signals. Afterward, nineteen statistical, temporal, and spectral features are extracted for emotion recognition. An artificial neural network (ANN) with two hundred hidden layers is used for classification. A total of six emotions, happy, sad, fear, disgust, anger, and surprise, are classified with an average accuracy of 80%.

In [36], the MAHNOB-HCI physiological signal dataset is used for the classification of emotions in the arousal-valence model. ECG, respiration, skin temperature, and galvanic skin response are used for emotion classification. The MAHNOB-HCI multimodal dataset contains data for twenty-four subjects using twenty video samples. The first and last thirty seconds of each signal are omitted because they correspond to neutral emotion. A Butterworth filter is applied to the GSR, ECG, and respiration signals with cutoff frequencies of 0.3 Hz, 0.7 Hz, and 1 Hz, respectively. Heart rate variability (HRV) is calculated from the ECG signal and respiration rate from the respiration amplitude. A total of one hundred sixty-nine features are extracted from these signals. An SVM with different kernels is used for classifying the samples into high and low arousal and into negative and positive valence. The SVM with an RBF kernel shows better accuracy than the other kernels, with 68.5% for arousal and 68.75% for valence.

A physiological signal interpretation framework, Emo-CSI, is presented for emotion classification in [37]; it uses heart rate, respiration pattern, skin humidity, and strength to recognize pleasure, displeasure, calm, neutral, and excited emotions. Twenty-three subjects aged 20 to 27, comprising ten males and thirteen females, are included in the data collection process. Emotions are induced using pictures and matching sounds. A total of thirty-two features, including average, maximum, minimum, standard deviation, etc., are extracted from the physiological signals to support prediction using SVM, DT, and artificial neural network (ANN). SVM outperforms the other two classification models with an accuracy of 55.45% and 59% for valence and arousal classes, respectively.

Respiration data are used in [38], where the emotions are classified using the Fast Fourier Transform (FFT) and machine learning models separately. Twenty-five males and females aged 18 to 25 participated in the experiments, where seven movie clips are shown to subjects to induce six emotions: happiness, sadness, surprise, anger, anxiety, and disgust. The study uses the BIOPAC instrument, an airflow sensor, and a mouthpiece for collecting respiration and breathing patterns. FFT-based classification achieves an 80% accuracy while LR obtains an 80% recall.

A system using HRV signal based on respiration rate for emotion classification is proposed in [39]. Twenty-five subjects (12 males and 13 females) aged 18 to 35 are involved in experiments for anger, fear, joy, and sadness emotions. ECG and respiration signals are recorded using a BIOPAC device. The largest peak of the bandpass-filtered respiratory signal is used to calculate respiratory frequency. The area under the receiver operating characteristic curve (AUC) is calculated to assess the capability of features in emotion classification. Features with an AUC greater than or equal to 0.70 are considered for further processing. The accuracy of two-class classification (relax vs. joy, joy vs. sad, and joy vs. anger) is 79.2%, 77.8%, and 77.3%, respectively.
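The AUC-based feature screening described for [39] can be sketched as follows. This is an illustrative sketch only, not the code of [39]: the AUC is computed with the pairwise (Mann-Whitney) formulation, the feature names and values are hypothetical, and treating an AUC below 0.5 as equally informative (by taking the larger of the AUC and one minus the AUC) is an assumption not stated in the text. Only the 0.70 threshold comes from the description above.

```python
def auc(positive_values, negative_values):
    """AUC as the chance that a positive sample exceeds a negative one.

    Ties count as half a win (Mann-Whitney formulation of the ROC AUC).
    """
    wins = 0.0
    for p in positive_values:
        for n in negative_values:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positive_values) * len(negative_values))


def select_features(features, threshold=0.70):
    """Keep features whose single-feature AUC reaches the threshold.

    features: dict mapping name -> (class_a_values, class_b_values).
    """
    selected = {}
    for name, (class_a, class_b) in features.items():
        score = auc(class_a, class_b)
        # Assumption: a feature that separates the classes in the
        # opposite direction is treated as equally discriminative.
        score = max(score, 1.0 - score)
        if score >= threshold:
            selected[name] = score
    return selected


# Hypothetical feature values for a two-class problem (joy vs. sad).
example_features = {
    "resp_rate": ([18, 20, 21, 19], [14, 15, 16, 19]),
    "resp_depth": ([1.0, 1.2, 0.9, 1.1], [1.1, 0.9, 1.2, 1.0]),
}
print(select_features(example_features))
```

With these made-up numbers, `resp_rate` separates the two classes well and is kept, while `resp_depth` has identical distributions in both classes (AUC of 0.5) and is discarded.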

The literature study has several important findings. First, despite their higher classification accuracy, the methods used predominantly involve invasive or on-body sensors. Invasive sensors can be used in virtual or controlled environments, but their practicability in real-life situations is limited. Because such sensors often attract attention or cause inconvenience, the data collection process becomes error-prone. Second, respiration rate has been widely employed in recent studies and is a potential candidate for accurate emotion detection. Third, UWB radar has not been studied extensively and requires further research efforts to explore its full potential for emotion recognition. With these points in view, this study presents a non-invasive emotion recognition approach by employing IR-UWB for recording physiological signals.

6. Conclusions and Future Work

Emotions are conscious and/or unconscious mental reactions from humans to various events, objects, and situations and are part and parcel of human life. Emotion recognition is important for humans to respond appropriately, and the past few years have been marked by increased research in emotion recognition for human therapy, the latest human-computer interfaces, and advanced humanoids. Several kinds of physiological signals can be utilized for the task at hand, with EEG, ECG, GSR, and respiration-based methods being the most widely used. Predominantly, such methods involve placing devices on the head and chest or attaching sensors to various limbs. Consequently, such methods cause inconvenience for humans, which leads to noisy and erroneous data and wrong emotion recognition.

This study presents a novel non-invasive emotion recognition approach based on human respiration patterns. The respiration data are obtained through the novel use of IR-UWB radar for three emotions: happiness, fear, and disgust. These emotions are induced using movie clips while the chest movements of thirty-five participants, including both males and females, are recorded. The RPM calculated by the proposed method is validated against a commercial pulse oximeter, showing 100% accuracy. RPM and other features are fed into machine learning and deep learning models for training and testing. Additionally, hard and soft voting-based ensemble models are proposed for emotion recognition. Extensive experiments have been carried out involving different train-test splits. Results indicate that, using the proposed non-invasive approach, a 76% F1 score can be obtained from the proposed soft voting (SV) model with an 80:20 train-test split. Besides basic emotion recognition, separate experiments are performed by gender, which provide insight into gender-based behavior during different emotions. Male participants show high arousal in happiness, while the fear emotion is more prevalent and intense in female participants. The emotion intensity for disgust is similar for both males and females. This study lays the foundation for IR-UWB-based non-invasive emotion recognition; however, only three emotions are studied at the moment. In the future, the dataset will be extended to add further emotions, and the accuracy of the machine learning and deep learning models will be improved by incorporating additional features.
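To make the idea of deriving RPM from chest-to-radar distance data concrete, the following is a minimal sketch under stated assumptions. The RPM algorithm of this study is not described in this part of the paper, so the zero-crossing counting used here is a stand-in technique, and the function name `estimate_rpm`, the sampling rate, and the synthetic signal are all hypothetical.

```python
import math


def estimate_rpm(distances_m, sample_rate_hz):
    """Estimate respirations per minute from chest-to-radar distance samples."""
    mean = sum(distances_m) / len(distances_m)
    # Remove the static radar-to-chest offset so the signal oscillates around zero.
    centered = [d - mean for d in distances_m]
    # Each upward zero crossing marks the start of one breathing cycle.
    cycles = sum(
        1 for prev, cur in zip(centered, centered[1:]) if prev < 0.0 <= cur
    )
    duration_min = len(distances_m) / sample_rate_hz / 60.0
    return cycles / duration_min


# Synthetic, noise-free example (all values hypothetical): a chest 1 m from the
# radar moving +/-5 mm at 0.25 Hz, i.e. 15 breaths per minute, sampled at 20 Hz
# for 60 s.
SAMPLE_RATE_HZ = 20
signal = [
    1.0 + 0.005 * math.sin(2 * math.pi * 0.25 * (i / SAMPLE_RATE_HZ) + 0.5)
    for i in range(60 * SAMPLE_RATE_HZ)
]
print(estimate_rpm(signal, SAMPLE_RATE_HZ))
```

On this clean synthetic signal the estimate recovers the simulated 15 breaths per minute. Real radar data would contain noise and body motion, so some filtering before counting cycles would be needed; that step is omitted here.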