Abstract

Planetary soft landing has been studied extensively because of its promising applications. In this paper, a soft landing control algorithm based on deep reinforcement learning (DRL) with good convergence properties is proposed. First, the soft landing problem in the powered descent phase is formulated, and the theoretical basis of the reinforcement learning (RL) methods used in this paper is introduced. Second, to ease convergence and overcome the sparse-reward problem, a reward function is designed that includes process rewards such as a velocity tracking reward. Third, by adding a fuel consumption penalty and a constraint violation penalty, the lander learns to track the reference velocity while saving fuel and keeping its attitude angles within safe ranges. Training simulations are then carried out under three classical RL frameworks, Deep Deterministic Policy Gradient (DDPG), Twin Delayed DDPG (TD3), and Soft Actor-Critic (SAC), and all of them converge. Finally, the trained policies are deployed in velocity tracking and soft landing experiments, the results of which demonstrate the validity of the proposed algorithm.

1. Introduction

With the development of space technology, the scope of space exploration is constantly expanding. To further explore and study planetary bodies such as the Moon and Mars, a large number of surface exploration missions have been carried out and many more are being planned [1,2]. In these missions, the lander faces many challenges. First, to avoid damaging onboard equipment, the landing velocity relative to the planetary surface must be kept below a threshold. Second, the maneuvering ability of the lander is limited, so it needs high landing accuracy to reach a specific area of interest. Therefore, precise soft landing guidance is one of the key technologies of planetary exploration and has been widely studied with many successful results [3,4,5].

At present, soft landing algorithms can be roughly divided into five categories: the lunar vertical line method, gravity turn guidance, nominal trajectory guidance, explicit guidance, and learning-based methods.

The lunar vertical line method is an open-loop guidance method that requires highly accurate orbit insertion and midcourse correction [6]. Gravity turn guidance is partly open-loop and partly closed-loop. In the main braking phase, the main goal is to reduce the speed of the lander. Once the lander has descended to within a certain distance of the lunar surface, closed-loop guidance based on sensor feedback is used to improve landing accuracy and stability [7]. These two methods were generally used only in early lunar landings [8]. Nominal trajectory guidance consists of open-loop offline trajectory planning and closed-loop online trajectory tracking. Before landing, an optimized landing trajectory is planned. During landing, the deviations of the lander's position and velocity from the planned trajectory are continuously measured and eliminated, so that the lander is guided to the desired landing site [9,10,11,12]. Explicit guidance solves the closed-loop guidance problem using an explicit analytical expression for the control. To obtain such an analytical solution of the soft landing optimal control problem, the soft landing model must be simplified [13]. The work in [14] proposes a guidance method for the powered soft landing of a launcher with non-cluster configured engines, for which it is difficult to maintain a low thrust-to-weight ratio. The work in [15] designs a soft landing trajectory from a lunar parking orbit to the lunar surface and solves the resulting optimization problem with Differential Evolution (DE), which is noted for its fast convergence.

In recent years, machine learning has made major breakthroughs, and some researchers have studied soft landing with learning algorithms such as deep learning. In [16], the ability of deep architectures to drive the onboard decision-making system is investigated in detail. Under the assumption of perfect state information, deep networks are trained to approximate the optimal control action in pinpoint landing experiments and can cope with a large set of possible initial states. The work in [17] proposes an autonomous lunar landing method based on deep learning that takes raw images from onboard optical cameras as input and directly outputs fuel-optimal control actions, so that explicit filters for state estimation are not needed. The deep networks are trained by supervised learning, with training datasets generated by nonlinear programming (NLP) solver packages. Further, in [18], a recurrent neural network architecture is proposed to predict the fuel-optimal thrust from a sequence of states in the powered descent phase of planetary soft landing.

Future exploration missions will require landers with greater autonomy that can adjust the landing trajectory in real time according to the landing conditions, which is difficult for offline planning methods.

As an important branch of machine learning, DRL combines the perception ability of deep learning with the decision-making ability of RL. It is an end-to-end approach that takes environmental information directly as input and outputs control commands. It is well suited to decision-making and planning problems in complex systems and has been widely studied and applied in games [19], autonomous driving [20], and manipulator control [21].

In this paper, the planetary soft landing problem in the powered descent phase is studied. The problem is formulated, and the theoretical basis of the RL methods used in this paper is introduced. To make the training process converge more easily, a reward function that includes process rewards is designed to solve the sparse-reward problem. In addition, a fuel consumption penalty and a constraint violation penalty are included to save fuel and keep the attitude angles within their constraints. The main contributions of this work are (i) a velocity tracking reward function with process rewards, which makes it easier for the lander to learn soft landing and gives it better generalization capability, and (ii) the inclusion of fuel consumption and constraint violation penalties in the reward function, which keeps the attitude within its constraints and reduces fuel consumption.

The remainder of this paper is organized as follows. Section 2 gives the preliminaries about RL and formulates the soft landing as an RL problem. Section 3 describes the details about soft landing control method based on DRL. Simulation results and necessary discussions are given in Section 4. Finally, conclusions are presented in Section 5.

2. Preliminaries and Problem Formulation

2.1. Soft Landing Problem Formulation

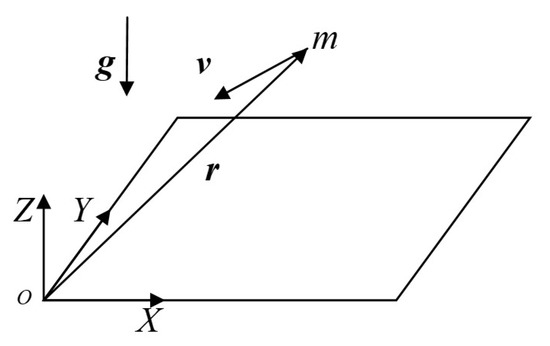

The planetary surface-fixed reference frame is defined as shown in Figure 1. The forces acting on the lander during powered descent include gravity, aerodynamic force, and engine thrust [22].

Figure 1.

Navigation coordinates of planetary surface.

Because powered descent begins at an altitude that is small compared with the planet's radius, and the distance between the lander and the target landing site changes only slightly during this phase, it is reasonable to assume that the planet's gravity is a constant vector $\mathbf{g}$.

When the powered descent phase begins, the lander has already released its parachute and its speed is on the order of 100 m/s [22]. Compared with planetary gravity, the acceleration caused by aerodynamic forces is very small. Therefore, the external force on the lander is dominated by gravity, and the aerodynamic force caused by the wind field is added to the model as an environmental disturbance.

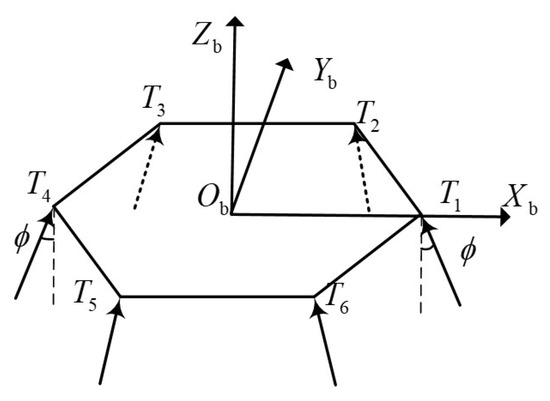

The lander is equipped with six thrusters mounted in the body frame as shown in Figure 2.

Figure 2.

Lander body frame and thruster layout. The thrusters are arranged in a regular hexagon.

The thrust of each engine is $T_i$ ($i = 1, \dots, 6$) and satisfies the constraint

$$T_{\min} \le T_i \le T_{\max}$$

Let $\mathbf{T} = [T_1, \dots, T_6]^{\top}$ be the vector of the six thruster thrusts. The thrust vector $\mathbf{F}_b$ in the body frame can then be obtained from the geometric relation

Similarly, the torque vector $\mathbf{M}_b$ in the body frame is obtained from the same geometry.
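The exact geometric relation depends on where each thruster is mounted and on its cant angle. As a minimal sketch under assumed geometry (thruster $i$ at azimuth $60^\circ i$ on a circle of radius `radius`, mounted `height` below the centre of mass, with its thrust direction tilted radially from $+z_b$ by the cant angle `cant`; these names and the sign convention are hypothetical), the body-frame force and torque are the sums over the six thrusters of $T_i \mathbf{d}_i$ and $\mathbf{p}_i \times T_i \mathbf{d}_i$:

```python
import math


def thruster_wrench(thrusts, radius, height, cant):
    """Body-frame force and torque from six thrusters (hypothetical geometry).

    Thruster i sits at azimuth 60*i degrees on a circle of `radius`, `height`
    below the centre of mass; its thrust direction is tilted from +z_b toward
    the radial direction by the cant angle `cant` (radians).
    """
    if len(thrusts) != 6:
        raise ValueError("expected six thrust values")
    force = [0.0, 0.0, 0.0]
    torque = [0.0, 0.0, 0.0]
    for i, t in enumerate(thrusts):
        theta = math.radians(60.0 * i)
        # Mounting point p_i relative to the centre of mass.
        p = (radius * math.cos(theta), radius * math.sin(theta), -height)
        # Unit thrust direction d_i.
        d = (math.sin(cant) * math.cos(theta),
             math.sin(cant) * math.sin(theta),
             math.cos(cant))
        f = [t * c for c in d]
        for k in range(3):
            force[k] += f[k]
        # Torque contribution p_i x (T_i d_i).
        torque[0] += p[1] * f[2] - p[2] * f[1]
        torque[1] += p[2] * f[0] - p[0] * f[2]
        torque[2] += p[0] * f[1] - p[1] * f[0]
    return force, torque


force, torque = thruster_wrench([100.0] * 6, radius=1.0, height=0.5, cant=0.1)
```

With equal thrusts, the lateral forces and all torque components cancel. In this assumed, purely radial tilt, every $\mathbf{p}_i \times \mathbf{d}_i$ has a zero $z_b$ component, so this particular layout produces no yaw torque; the actual layout in Figure 2 may differ.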

The translational dynamics are expressed as

where $\mathbf{r}$ is the position vector of the lander, $\mathbf{v}$ is the velocity vector, $\mathbf{v}_b$ and $\boldsymbol{\omega}$ are the velocity and angular velocity in the body frame, respectively, $\mathbf{q}$ is the attitude quaternion, $\mathbf{I}$ and $m$ are the inertia matrix and mass of the lander, respectively, and $\mathbf{C}_b^s$ is the direction cosine matrix from the body frame to the surface-fixed reference frame.

The attitude dynamics are expressed as
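To see how the translational and attitude equations fit together, consider one explicit-Euler step of simplified 6DOF rigid-body dynamics. The sketch below assumes a scalar-first unit quaternion, a diagonal inertia matrix, thrust and torque given in the body frame, and no aerodynamic disturbance. The function names are hypothetical, and a real simulator would normally use a higher-order integrator:

```python
import math


def quat_mul(p, q):
    """Hamilton product of scalar-first quaternions (w, x, y, z)."""
    w1, x1, y1, z1 = p
    w2, x2, y2, z2 = q
    return (w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
            w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
            w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
            w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2)


def dcm_body_to_surface(q):
    """Rotation matrix C with v_surface = C v_body for a unit quaternion q."""
    w, x, y, z = q
    return [[1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
            [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
            [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)]]


def euler_step(r, v, q, w, mass, inertia, force_b, torque_b, g, dt):
    """One explicit-Euler step of simplified 6DOF dynamics.

    r, v: position and velocity in the surface frame; q: attitude quaternion;
    w: body angular rate; inertia: principal moments (diagonal inertia);
    force_b, torque_b: thrust force and torque in the body frame; g: gravity.
    """
    c = dcm_body_to_surface(q)
    # Translational: v_dot = C F_b / m + g
    acc = [sum(c[i][j] * force_b[j] for j in range(3)) / mass + g[i]
           for i in range(3)]
    # Euler's equation: I w_dot = M_b - w x (I w)
    iw = [inertia[k] * w[k] for k in range(3)]
    gyro = [w[1] * iw[2] - w[2] * iw[1],
            w[2] * iw[0] - w[0] * iw[2],
            w[0] * iw[1] - w[1] * iw[0]]
    w_dot = [(torque_b[k] - gyro[k]) / inertia[k] for k in range(3)]
    # Quaternion kinematics: q_dot = 0.5 * q (x) (0, w)
    q_dot = [0.5 * e for e in quat_mul(q, (0.0, w[0], w[1], w[2]))]
    r_new = [r[i] + v[i] * dt for i in range(3)]
    v_new = [v[i] + acc[i] * dt for i in range(3)]
    w_new = [w[k] + w_dot[k] * dt for k in range(3)]
    q_raw = [q[k] + q_dot[k] * dt for k in range(4)]
    n = math.sqrt(sum(e * e for e in q_raw))
    q_new = tuple(e / n for e in q_raw)  # renormalize to unit length
    return r_new, v_new, q_new, w_new
```

Renormalizing the quaternion after each step keeps it on the unit sphere despite integration error.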

During soft landing, the mass of the lander gradually decreases as fuel is consumed, i.e.,

where $I_{sp}$ is the specific impulse of the engine. The inertia matrix also decreases as the mass decreases: the lander is modeled as a cuboid with uniform mass distribution, so its inertia matrix is proportional to its mass
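Assuming all six thrusters share the same specific impulse $I_{sp}$, the mass flow is $\dot m = -\sum_i T_i / (I_{sp} g_0)$ with $g_0 = 9.80665\ \mathrm{m/s^2}$. A uniform cuboid with side lengths $a, b, c$ has principal moments $\frac{m}{12}(b^2+c^2)$, $\frac{m}{12}(a^2+c^2)$, and $\frac{m}{12}(a^2+b^2)$. Here is a minimal sketch of both updates; the side-length names are hypothetical:

```python
G0 = 9.80665  # standard gravity used in the definition of specific impulse, m/s^2


def mass_after_step(mass, thrusts, isp, dt):
    """Explicit-Euler update of m_dot = -sum(T_i) / (Isp * g0).

    Assumes all thrusters share the same specific impulse `isp` (seconds).
    """
    m_dot = -sum(thrusts) / (isp * G0)
    return mass + m_dot * dt


def cuboid_inertia(mass, a, b, c):
    """Principal moments of inertia of a uniform cuboid.

    a, b, c are the (hypothetical) side lengths along the body x, y, z axes.
    Every moment is proportional to the mass, so it shrinks as fuel burns.
    """
    return (mass * (b * b + c * c) / 12.0,
            mass * (a * a + c * c) / 12.0,
            mass * (a * a + b * b) / 12.0)
```

Because each moment is linear in $m$, halving the mass halves the inertia matrix while the lander's geometry stays fixed.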

The soft landing problem is then formulated as a fuel optimization problem:

2.2. RL Basis

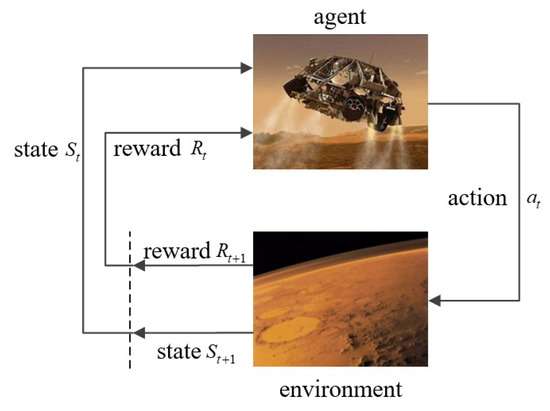

RL is a data-driven approach. Unlike supervised learning, RL obtains its training data (experience) through interaction with the environment, as shown in Figure 3.

Figure 3.

Interaction between agent and environment.

At time step $t$, the agent receives an observation $o_t$ from the environment and takes an action $a_t$ according to the policy $\pi$. The environment then transitions to the next state $s_{t+1}$ based on its model and returns a reward $r_t$. The accumulated return of an episode is defined as

$$R = \sum_{t \ge 0} \gamma^{t} r_t$$

where $\gamma \in [0, 1]$ is the discount factor. When $\gamma = 0$, the agent cares only about the immediate reward. As $\gamma$ approaches 1, the agent has a longer horizon and cares more about future rewards. The goal is to update the policy to maximize the expected accumulated return.
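As a small worked illustration, the return can be accumulated backwards as $R \leftarrow r_t + \gamma R$. For rewards $(1, 1, 1)$ and $\gamma = 0.5$, this gives $1 + 0.5 + 0.25 = 1.75$:

```python
def discounted_return(rewards, gamma):
    """R = sum_t gamma**t * r_t, accumulated backwards (Horner's scheme)."""
    ret = 0.0
    for r in reversed(rewards):
        ret = r + gamma * ret
    return ret


print(discounted_return([1.0, 1.0, 1.0], 0.5))  # 1.75
```

With `gamma=0` only the first reward counts, and with `gamma=1` the return is the plain sum, matching the two limits described above.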

DDPG, TD3, and SAC are three of the most successful and widely used RL algorithms for continuous control, so we adopt them as the frameworks in this paper.

(1) DDPG

DDPG is a deterministic-policy RL framework: its policy outputs a deterministic action. It extends the deep Q-network (DQN) to continuous action spaces and has been extensively researched and applied in continuous control. DDPG learns both a value function and a policy. First, it fits the value function to the Bellman equation by gradient descent, using off-policy experience. Then, the policy is updated to maximize the approximated value function.

Like DQN, DDPG trains a deep neural network to approximate the Q function. It reuses past experience through a replay buffer: at each update, a minibatch is sampled at random from the buffer. To stabilize training, the size of the replay buffer must be chosen carefully. If it is too small, the buffer holds only recent experience, which makes the policy brittle. If it is too large, the probability of sampling useful experience decreases, and training takes more episodes to converge.
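A replay buffer of this kind can be sketched with a bounded deque. Once `capacity` is reached, the oldest transitions are discarded, and minibatches are drawn uniformly. The class name and the `(s, a, r, s_next, done)` layout below are illustrative assumptions:

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity FIFO buffer of (s, a, r, s_next, done) transitions."""

    def __init__(self, capacity, seed=None):
        self.buffer = deque(maxlen=capacity)  # oldest items drop out first
        self.rng = random.Random(seed)

    def store(self, s, a, r, s_next, done):
        self.buffer.append((s, a, r, s_next, done))

    def sample(self, batch_size):
        """Uniformly sample a minibatch without replacement."""
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)
```

The `capacity` argument is exactly the trade-off discussed above: a small value keeps only recent experience, while a large value dilutes each minibatch with transitions from much older policies.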

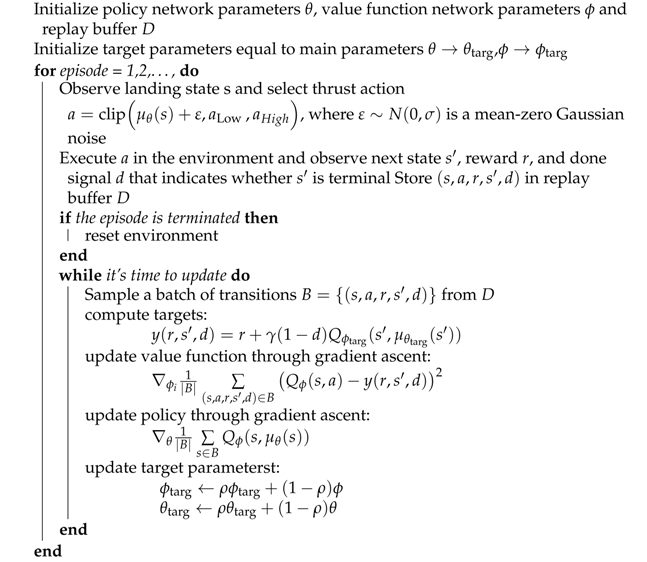

As shown in Algorithm 1, the whole learning process consists of two parts: Q-learning and policy learning.

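According to the Bellman equation, the optimal Q function satisfies

$$Q^{*}(s, a) = \mathbb{E}_{s' \sim P}\left[ r(s, a) + \gamma \max_{a'} Q^{*}(s', a') \right]$$

where $P$ is the environment model. Given experience tuples $(s, a, r, s', d)$, where $d$ indicates whether $s'$ is terminal, the value function under the policy is approximated by a neural network $Q_{\phi}(s, a)$ with parameters $\phi$.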

Algorithm 1.

DDPG-based soft landing.

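The mean-squared Bellman error (MSBE) of the approximator $Q_{\phi}$ over experience tuples $(s, a, r, s', d)$ is set up as

$$L(\phi) = \mathbb{E}_{(s, a, r, s', d)}\left[ \left( Q_{\phi}(s, a) - y \right)^{2} \right]$$

where $y$ is the value function target. In a continuous action space, it is difficult to compute the action that maximizes $Q_{\phi}(s', a')$. Therefore, DDPG uses target networks to form the target

$$y = r + \gamma (1 - d)\, Q_{\phi_{\text{targ}}}(s', a')$$

where $a' = \mu_{\theta_{\text{targ}}}(s')$ is obtained from the target policy $\mu_{\theta_{\text{targ}}}$. As training proceeds, the policy and value function are expected to approach the optimal policy and optimal value function, respectively.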
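The target and loss above can be sketched on plain Python numbers, with the target networks passed in as callables. The soft (Polyak) target-network update at the end is standard DDPG practice but is an assumption here, as is the coefficient name `tau`:

```python
def ddpg_targets(rewards, next_states, dones, gamma, target_q, target_policy):
    """y = r + gamma * (1 - d) * Q_targ(s', mu_targ(s')) for each transition."""
    return [r + gamma * (1.0 - float(d)) * target_q(s2, target_policy(s2))
            for r, s2, d in zip(rewards, next_states, dones)]


def msbe(q_values, targets):
    """Mean-squared Bellman error over a minibatch."""
    return sum((q - y) ** 2 for q, y in zip(q_values, targets)) / len(targets)


def polyak_update(target_params, params, tau):
    """Soft target update: theta_targ <- tau * theta + (1 - tau) * theta_targ."""
    return [tau * p + (1.0 - tau) * pt for pt, p in zip(target_params, params)]


# Toy usage with scalar "networks": Q_targ(s, a) = s + a, mu_targ(s) = 2s.
y = ddpg_targets([1.0, 2.0], [1.0, 3.0], [False, True], 0.5,
                 lambda s, a: s + a, lambda s: 2.0 * s)
loss = msbe([2.0, 2.0], y)
```

The `(1 - d)` factor drops the bootstrap term at terminal states, such as touchdown or a crash, so the target there is just the final reward.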

(2) TD3

TD3 is a modification of DDPG. DDPG can perform well, but its performance depends strongly on the choice of hyperparameters. TD3 adopts three critical tricks to make training more stable.

- Clipped Double-Q Learning. TD3 learns two value function networks simultaneously. When computing the target, $s'$ and the target action $a'$ are fed into both target value function networks, and the smaller of the two outputs is used to form the Bellman target that both value networks are trained toward. Taking the minimum counteracts the overestimation bias of a single Q network.

- Target Policy Smoothing. TD3 learns its value function in the same way as DDPG, except that when the value function network is updated, clipped noise is added to the action output by the target policy network to avoid overexploiting errors in the value function: $a'(s') = \mathrm{clip}\big(\mu_{\theta_{\text{targ}}}(s') + \mathrm{clip}(\epsilon, -c, c),\ a_{\text{low}},\ a_{\text{high}}\big)$, where $\epsilon \sim \mathcal{N}(0, \sigma)$ is mean-zero Gaussian noise, $c$ is the noise clipping bound, and $\theta_{\text{targ}}$ denotes the parameters of the target policy network. Adding noise to the output action of the policy network acts as a regularizer, which avoids overexploitation of the value function and stabilizes training.

- Delayed Policy Updates. Because the output of the target policy network is used to compute the value function target, frequent policy updates can make the agent brittle. TD3 therefore updates the policy network less frequently than the value function networks, for example once every two value updates. This suppresses training fluctuations and makes learning more stable.
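The three tricks can be sketched for a single transition with a scalar action; for vector actions, the clipping is applied per dimension. The defaults `sigma=0.2`, `noise_clip=0.5`, and `policy_delay=2` are values commonly used with TD3, not necessarily those in Table 3, and the function names are hypothetical:

```python
import random


def td3_target(r, s_next, done, gamma, q1_targ, q2_targ, mu_targ,
               sigma=0.2, noise_clip=0.5, a_low=-1.0, a_high=1.0, rng=random):
    """TD3 Bellman target for one transition with a scalar action.

    Target policy smoothing: a' = clip(mu_targ(s') + clip(eps, -c, c), a_low, a_high).
    Clipped double-Q: the target uses min(Q1_targ, Q2_targ) evaluated at (s', a').
    """
    eps = rng.gauss(0.0, sigma)
    eps = max(-noise_clip, min(noise_clip, eps))
    a_next = max(a_low, min(a_high, mu_targ(s_next) + eps))
    q_min = min(q1_targ(s_next, a_next), q2_targ(s_next, a_next))
    return r + gamma * (1.0 - float(done)) * q_min


def should_update_policy(step, policy_delay=2):
    """Delayed policy updates: update the actor every `policy_delay` critic steps."""
    return step % policy_delay == 0
```

Both critics are regressed toward the same `td3_target` value. The actor and the target networks are updated only on the steps where `should_update_policy` returns `True`.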

(3) SAC

SAC is a maximum-entropy RL framework. It applies the learning techniques of DDPG to stochastic policies and optimizes them off-policy.

The agent starts from an initial state $s_0 \sim \rho_0$, samples an action $a_t \sim \pi(\cdot \mid s_t)$ from the policy distribution, and applies it to the environment. The environment then returns a reward $r_t$ and transitions to a new state $s_{t+1} \sim P(\cdot \mid s_t, a_t)$ according to the environment model. Repeating this interaction yields the trajectory $\tau = (s_0, a_0, s_1, a_1, \dots)$, whose probability under the policy $\pi$ is

$$p_{\pi}(\tau) = \rho_0(s_0) \prod_{t \ge 0} \pi(a_t \mid s_t)\, P(s_{t+1} \mid s_t, a_t)$$

Maximum-entropy RL optimizes both the cumulative return and the entropy of the policy. For Markov decision processes (MDPs) with infinite-horizon discounted rewards, the optimization objective can be expressed as

$$J(\pi) = \mathbb{E}_{\tau \sim p_{\pi}}\left[ \sum_{t \ge 0} \gamma^{t} \left( r_t + \alpha\, \mathcal{H}\big(\pi(\cdot \mid s_t)\big) \right) \right]$$

and the optimal policy is

$$\pi^{*} = \arg\max_{\pi} J(\pi)$$

where $\mathcal{H}(\pi(\cdot \mid s)) = \mathbb{E}_{a \sim \pi(\cdot \mid s)}[-\log \pi(a \mid s)]$ is the entropy of the policy distribution. Adding it to the optimization objective encourages the agent to explore the environment during training and improves the robustness of the training results. $\alpha \ge 0$ is the temperature coefficient, which adjusts the importance of the entropy term and thus regulates the randomness of the optimal policy. A large $\alpha$ encourages the agent to explore, so the larger $\alpha$ is, the more stochastic the policy becomes. The smaller $\alpha$ is, the more likely the policy is to fall into a local optimum. When $\alpha = 0$, maximum-entropy RL reduces to conventional RL, which maximizes only the cumulative reward.

Based on this optimization objective, the soft state value function is defined as

Similarly, the soft action value function is defined as

Then, according to the Bellman equation,

SAC also uses Clipped Double-Q Learning, and its Q-learning is similar to that of TD3 except for how the target value is computed:

According to Equation (24), then

The policy network is updated using the reparameterization trick:

$$\tilde{a}_{\theta}(s, \xi) = \tanh\big( \mu_{\theta}(s) + \sigma_{\theta}(s) \odot \xi \big), \quad \xi \sim \mathcal{N}(0, 1)$$

where the action distribution is Gaussian, $\mu_{\theta}(s)$ and $\sigma_{\theta}(s)$ are its mean and standard deviation, respectively, and $\xi$ is drawn independently for each action dimension from the standard Gaussian distribution. After sampling, the Tanh activation function restricts the action to the constrained range.
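The reparameterized sample and its log-probability, which the policy objective needs, can be sketched per action dimension. The log-density includes the change-of-variables term $-\log(1 - \tanh^2 u)$ for the Tanh squashing. The small constant `1e-6` guarding the logarithm is a common numerical safeguard and an assumption here, as is the function name:

```python
import math
import random


def sample_squashed_gaussian(mean, std, rng=random):
    """Reparameterized sample a = tanh(mu + sigma * xi), xi ~ N(0, 1) per dimension.

    Returns the action and log pi(a|s), including the Tanh change-of-variables
    correction -log(1 - tanh(u)^2) for each dimension.
    """
    action, log_prob = [], 0.0
    for mu, sigma in zip(mean, std):
        xi = rng.gauss(0.0, 1.0)
        u = mu + sigma * xi                      # pre-squash Gaussian sample
        a = math.tanh(u)
        # log N(u; mu, sigma) written through xi = (u - mu) / sigma
        log_prob += -0.5 * xi * xi - math.log(sigma) - 0.5 * math.log(2.0 * math.pi)
        log_prob -= math.log(1.0 - a * a + 1e-6)  # Tanh Jacobian correction
        action.append(a)
    return action, log_prob
```

Because the randomness enters only through `xi`, the action is a differentiable function of `mean` and `std`. In an autodiff framework, this is what lets the policy gradient flow through the sample.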

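SAC also uses the clipped double-Q trick when updating its policy, so the final policy optimization objective is

$$\max_{\theta}\ \mathbb{E}_{s \sim \mathcal{D},\ \xi \sim \mathcal{N}(0, 1)}\left[ \min_{j = 1, 2} Q_{\phi_j}\big(s, \tilde{a}_{\theta}(s, \xi)\big) - \alpha \log \pi_{\theta}\big(\tilde{a}_{\theta}(s, \xi) \mid s\big) \right]$$

where $\mathcal{D}$ is the replay buffer and $\tilde{a}_{\theta}(s, \xi)$ is the reparameterized action.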

Because its policy is inherently stochastic, SAC is less prone to overexploiting errors in the learned value function.
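The SAC pieces can be put together in a per-sample sketch. The soft clipped double-Q target is $y = r + \gamma(1 - d)\big(\min_j Q_{\phi_{\text{targ},j}}(s', \tilde a') - \alpha \log \pi_\theta(\tilde a' \mid s')\big)$ with $\tilde a' \sim \pi_\theta(\cdot \mid s')$, and the policy loss is the negated objective. The function names below are hypothetical:

```python
def sac_critic_target(r, done, gamma, alpha, q1_next, q2_next, logp_next):
    """y = r + gamma * (1 - d) * (min(Q1_targ, Q2_targ) - alpha * log pi(a'|s')),
    where a' is freshly sampled from the current policy at s'."""
    soft_value = min(q1_next, q2_next) - alpha * logp_next
    return r + gamma * (1.0 - float(done)) * soft_value


def sac_policy_loss(q1, q2, logp, alpha):
    """Per-sample loss to minimize: alpha * log pi(a~|s) - min(Q1, Q2)(s, a~)."""
    return alpha * logp - min(q1, q2)
```

Unlike TD3, the next action comes from the current stochastic policy rather than a smoothed target policy, and the entropy bonus $-\alpha \log \pi$ appears in both the critic target and the actor loss.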

3. Soft Landing with DRL

Based on the dynamic model established above, this section designs an RL-based algorithm tailored to the characteristics of the soft landing problem. This covers the choice of observations, the design of the reward function, and other settings that govern how the agent interacts with the environment.

3.1. Reward Setting

The reward function evaluates the behavior of the agent and directly determines the training result.

- Goal achieving reward: The lander is considered to have achieved a soft landing when four conditions hold: its altitude drops below zero, its velocity is downward with a magnitude below the soft landing speed limit, its attitude angles are within their limited ranges, and its angular rates are within theirs. It then receives a large positive constant reward as a soft landing bonus. The attitude limits are upper bounds on the Euler angles of the lander, and the rate limit is an upper bound on its angular rate.

- Velocity tracking reward: At the beginning of powered descent, the lander is several kilometers from the landing zone and its initial speed is around 100 m/s. If the agent were rewarded only for achieving a soft landing in the target area, the reward would be so sparse that training would be nearly impossible to converge. We therefore transform the soft landing problem into a velocity tracking problem by introducing a process reward during landing. A reference velocity is computed from the real-time relative position between the lander and the target landing area, using two constant coefficients that determine the mapping from position to reference velocity; larger coefficients yield a smaller reference velocity during powered descent. The reward is then given according to the deviation between the actual velocity of the lander and the reference velocity, scaled by the reward coefficient of the velocity tracking error.

- Crash penalty: To discourage crashes, a penalty is included in the reward. When the attitude angle or the velocity deviation exceeds its threshold, the episode terminates and the environment returns a large negative reward, the attitude crash penalty.

- Fuel consumption penalty: In planetary exploration missions, the fuel carried by the lander is limited, so fuel consumption should be minimized. A reward term that penalizes fuel consumption is therefore defined, weighted by a fuel consumption coefficient. The fuel consumption coefficient and the velocity tracking error coefficient explicitly control the trade-off between fuel consumption and velocity tracking. With a higher fuel consumption coefficient and a lower velocity tracking coefficient, fuel consumption weighs more in the reward, and the lander trades some velocity tracking performance for lower fuel consumption.

- Constant reward: Note that the velocity tracking reward, the crash penalty, and the fuel consumption penalty are all negative. To encourage the agent to explore more, a positive constant reward is added.

The overall reward is therefore the sum of the five terms above.
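Purely as a hypothetical sketch (the mapping and all coefficient values below are assumptions, not the authors' settings), take $\mathbf{v}_{\text{ref}} = -\mathbf{r}_{\text{rel}} / (k_1 + k_2\|\mathbf{r}_{\text{rel}}\|)$. This reference points toward the target, shrinks as $k_1$ and $k_2$ grow, and saturates at speed $1/k_2$ far from the target. The fuel term uses $\sum_i T_i\,\Delta t$ as a proxy for the fuel burned in a step:

```python
import math


def vec_norm(x):
    return math.sqrt(sum(c * c for c in x))


def reference_velocity(rel_pos, k1, k2):
    """Hypothetical mapping v_ref = -rel_pos / (k1 + k2 * |rel_pos|).

    rel_pos is the lander position relative to the target site; the result
    points toward the target and its speed never exceeds 1 / k2.
    """
    denom = k1 + k2 * vec_norm(rel_pos)
    return [-p / denom for p in rel_pos]


def step_reward(rel_pos, vel, thrusts, dt, landed_ok, crashed,
                k1=50.0, k2=0.01, c_v=0.1, c_f=1e-4,
                bonus=500.0, crash_penalty=-500.0, const=0.5):
    """Sum of the five reward terms; every default value is a placeholder."""
    v_ref = reference_velocity(rel_pos, k1, k2)
    r_track = -c_v * vec_norm([v - vr for v, vr in zip(vel, v_ref)])
    r_fuel = -c_f * sum(thrusts) * dt        # proxy for fuel used this step
    r_goal = bonus if landed_ok else 0.0
    r_crash = crash_penalty if crashed else 0.0
    return r_goal + r_track + r_crash + r_fuel + const
```

Because the tracking term is dense, the agent gets a learning signal at every step, long before it ever reaches the ground. This is the point of the process reward.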

3.2. Observation Space

To improve landing performance, the observation vector $\mathbf{o}$ must include the position, velocity, attitude, and angular rate. Following the reward design above, the absolute velocity in $\mathbf{o}$ is replaced by the deviation from the reference velocity, expressed in the lander body frame.

Tracking the velocity deviation rather than steering directly on position can improve the generalization ability of the trained agent.

Each attitude angle enters the observation vector as its sine and cosine, which avoids the discontinuity of the raw angle at ±180°, and the quaternion is observed as well.
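One possible layout of such an observation vector is sketched below. It assumes a scalar-first quaternion and (roll, pitch, yaw) Euler angles, and the 19-element ordering is hypothetical. The surface-frame velocity deviation is rotated into the body frame with the transpose of the body-to-surface direction cosine matrix:

```python
import math


def quat_to_dcm(q):
    """Body-to-surface DCM for a scalar-first unit quaternion (w, x, y, z)."""
    w, x, y, z = q
    return [[1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
            [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
            [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)]]


def build_observation(pos, vel, v_ref, euler, q, omega):
    """Hypothetical 19-element observation: position (3), body-frame velocity
    deviation (3), sin/cos of roll, pitch, yaw (6), quaternion (4), rate (3)."""
    c = quat_to_dcm(q)
    dv = [v - r for v, r in zip(vel, v_ref)]
    # Surface-to-body rotation is the transpose of the body-to-surface DCM.
    dv_body = [sum(c[j][i] * dv[j] for j in range(3)) for i in range(3)]
    trig = []
    for ang in euler:
        trig += [math.sin(ang), math.cos(ang)]
    return list(pos) + dv_body + trig + list(q) + list(omega)
```

Using sine and cosine means that yaw angles of $+179^\circ$ and $-179^\circ$ map to nearby inputs, which a raw angle would not.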

3.3. Action Space

The lander's action consists of the engine thrusts, which are bounded within a specific range. After the Tanh activation function, the output of the policy network lies in $[-1, 1]$. The actual thrust is then obtained through the linear mapping

$$T_i = T_{\min} + \frac{a_i + 1}{2}\left( T_{\max} - T_{\min} \right)$$

where $a_i$ is the $i$-th component of the network output, and $T_{\min}$ and $T_{\max}$ are the lower and upper bounds of the thrust, respectively.
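The linear thrust mapping can be checked directly: $a_i = -1$ gives $T_{\min}$, $a_i = 0$ gives the midpoint, and $a_i = 1$ gives $T_{\max}$. Here is a minimal sketch; the clamp is a defensive assumption:

```python
def action_to_thrust(action, t_min, t_max):
    """Map Tanh outputs in [-1, 1] linearly onto [t_min, t_max]."""
    out = []
    for a in action:
        a = max(-1.0, min(1.0, a))  # guard against round-off outside [-1, 1]
        out.append(t_min + 0.5 * (a + 1.0) * (t_max - t_min))
    return out


print(action_to_thrust([-1.0, 0.0, 1.0], 100.0, 500.0))  # [100.0, 300.0, 500.0]
```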

3.4. Network Architecture

We use the deep learning framework PyTorch to build the neural networks. Hyperparameters such as the learning rates and noise variance are given in Section 4.

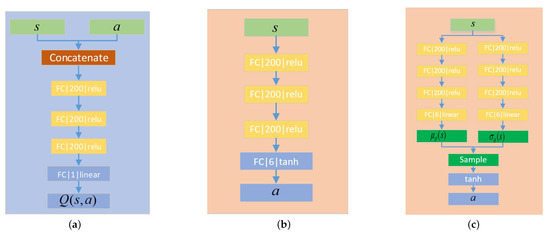

DDPG, TD3, and SAC all contain value function networks and policy networks. In this paper, all value function networks share the structure shown in Figure 4a. Three hidden layers process the concatenation of the observation and action vectors. Each hidden layer contains 200 nonlinear units with ReLU activation.

Figure 4.

Network architecture. (a) Value function network. (b) Policy network of DDPG and TD3. (c) Policy network of SAC.

The policy networks of DDPG and TD3 share the same structure, shown in Figure 4b. Each has three hidden layers with ReLU activations, followed by an output layer with Tanh activation that normalizes the action to [−1, 1].

Unlike the deterministic policies of DDPG and TD3, SAC learns a stochastic policy, whose network structure is shown in Figure 4c. The policy network has two branches with the same structure, which output the mean and the standard deviation of the Gaussian distribution, respectively. The action is then obtained by sampling from this distribution and applying the Tanh activation function.
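To give a sense of network size, note that a fully connected network has $\sum (n_{\text{in}} n_{\text{out}} + n_{\text{out}})$ trainable parameters over its layers. Assume, hypothetically, a 19-dimensional observation and a 6-dimensional action (one per thruster). A value network with three 200-unit hidden layers then has $25 \cdot 200 + 200 + 2(200 \cdot 200 + 200) + 201 = 85{,}801$ parameters:

```python
def mlp_param_count(sizes):
    """Weights plus biases of a fully connected network with the given layer sizes."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))


OBS_DIM, ACT_DIM = 19, 6  # hypothetical dimensions
critic = mlp_param_count([OBS_DIM + ACT_DIM, 200, 200, 200, 1])
actor = mlp_param_count([OBS_DIM, 200, 200, 200, ACT_DIM])
print(critic, actor)  # 85801 85606
```

The two 200-by-200 hidden layers account for most of the parameters, so the count depends only weakly on the observation and action dimensions.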

4. Simulation Results and Discussion

In this section, the simulation experiments are carried out, and the results and related discussion are presented.

4.1. Simulation Settings

We train the lander velocity controller in the 6DOF environment established in Section 2. The environment parameter settings are shown in Table 1.

Table 1.

Parameter settings of environment.

The hyperparameter settings of the DDPG, TD3, and SAC algorithms are shown in Table 2, Table 3, and Table 4, respectively. Because DDPG training is unstable, the learning rate of its value function is set lower than that of TD3.

Table 2.

Parameter settings of DDPG.

Table 3.

Parameter settings of TD3.

Table 4.

Parameter settings of SAC.

4.2. Simulation Results

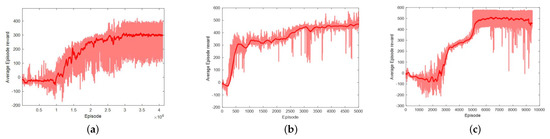

We trained the three algorithms with the environment and algorithm parameters listed above. The reward curves of DDPG, TD3, and SAC during training are shown in Figure 5.

Figure 5.

Accumulated reward of each training episode. (a) DDPG. (b) TD3. (c) SAC.

The dark red curve is the average reward. As Figure 5a shows, the DDPG episode reward starts to rise at around episode 10,000 and keeps increasing until episode 20,000. Although the average reward curve looks stable, the episode reward fluctuates between 100 and 400, which indicates very unstable training.

The reward curve in Figure 5b shows that the performance of the TD3 agent improves significantly after 700 training episodes. After 5000 episodes, the average reward converges to 450. Compared with DDPG, TD3 learns faster and more stably, which we attribute to its three tricks: Clipped Double-Q Learning, Target Policy Smoothing, and Delayed Policy Updates.

The SAC agent improved markedly at around episodes 3000 and 5000, and its reward finally stabilized and converged to 500 (Figure 5c). The training process of SAC fluctuates only within a very small range, and its exploration of the environment is sufficient. We attribute this to Clipped Double-Q Learning, which SAC shares with TD3, together with the inherent smoothing of its stochastic policy. The accumulated rewards of some episodes are close to 600, higher than those of DDPG and TD3.

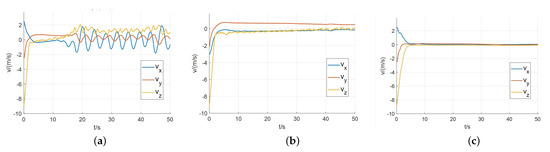

For a given reference velocity and initial velocity, the trained policies are used to control the speed of the lander. The resulting velocity deviation curves are shown in Figure 6.

Figure 6.

The curves of velocity deviation in velocity tracking experiments. (a) DDPG. (b) TD3. (c) SAC.

All of the trained controllers keep the velocity deviation within a bounded range, but the velocity controlled by the DDPG agent oscillates continuously. The agent trained with SAC performs best: it drives the velocity error to zero and keeps it there.

Besides the velocity tracking experiments, we validate the trained policy of each algorithm in soft landing tests. The landing statistics over 100 trials are shown in Table 5. Among the three, SAC achieves the highest landing success rate. DDPG terminates 26 times because the attitude exceeds its constraints, which is caused by its continuous oscillation when tracking the reference velocity. In the soft landing process, the reference velocity changes dynamically, which makes the oscillation more severe and leads to a higher failure rate.

Table 5.

Landing success rate.

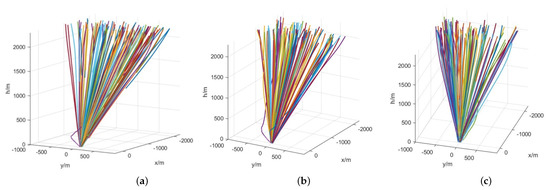

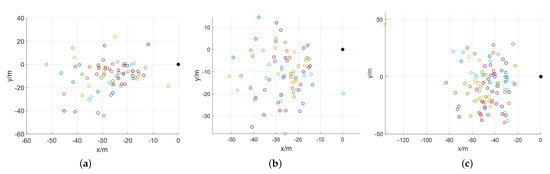

Taking the target landing site as the origin, Figure 7 and Figure 8 show the landing trajectories and landing point distributions of the three controllers, respectively. The landing point distributions show that DDPG and TD3 achieve nearly the same accuracy of 100 m in successful landings, while some landing points of SAC lie 200 m from the origin.

Figure 7.

Landing trajectories of the lander under the trained velocity controllers. (a) DDPG. (b) TD3. (c) SAC.

Figure 8.

2D distribution of landing points. (a) DDPG. (b) TD3. (c) SAC.

5. Conclusions

This paper presents an end-to-end soft landing control algorithm based on RL. First, a 6DOF soft landing dynamics model is established, the soft landing problem in the powered descent phase is formulated, and the theoretical basis of RL is briefly introduced. Then, to address the sparse-reward problem that makes it hard for the policy to converge, a reward function including process rewards is designed. In addition, fuel consumption and constraint violation penalties are included in the reward function to reduce fuel consumption and keep the attitude angles within their constraints. Next, the network architectures of the RL algorithms are designed. The value functions of DDPG, TD3, and SAC are approximated by deep neural networks with the same architecture. DDPG and TD3 each have a policy network that outputs a deterministic action, whereas the action of SAC is sampled from a Gaussian distribution parameterized by the output of its policy network. Finally, training simulations are carried out to evaluate the proposed algorithm. The results show that performance varies across RL frameworks and that the agent trained by SAC tracks the reference velocity best. In addition, the trained policies are deployed in soft landing experiments, whose results demonstrate the validity of the proposed algorithm. Future work will focus on stability guarantees for RL-based soft landing algorithms, which are essential for mission success in space exploration.

Author Contributions

Conceptualization, X.X., Y.C. and C.B.; methodology, X.X. and Y.C.; software, X.X.; validation, C.B.; formal analysis, C.B.; data curation, X.X.; writing—original draft preparation, X.X. and Y.C.; writing—review and editing, C.B. and Y.C.; visualization, X.X. and Y.C.; supervision, C.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant number 61973101, and the Aeronautical Science Foundation of China, grant number 20180577005.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| DRL | Deep reinforcement learning |

| DDPG | Deep deterministic policy gradient |

| TD3 | Twin Delayed DDPG |

| SAC | Soft Actor Critic |

| 6DOF | Six Degrees of Freedom |

| NLP | Nonlinear Programming |

| planet gravity | |

| thrust of each engine | |

| minimum thrust of each engine | |

| maximum thrust of each engine | |

| cant angle for the engines | |

| thrust vector composed of each engine | |

| thrust vector in lander body frame | |

| torque vector in lander body frame | |

| surface relative lander position vector | |

| velocity vector | |

| direction cosine matrix between lander body frame and planet surface frame | |

| angular rate vector in lander body frame | |

| attitude quaternion | |

| m | lander mass |

| inertia matrix of the lander | |

| specific impulse for thrusters | |

| lander sides of length | |

| J | optimization target |

| agent accumulated return in an episode | |

| limited range for velocity, attitude angle and angular rate to achieve soft landing | |

| reference velocity | |

| reward coefficients | |

| simulation sample time | |

| maximum simulation time in one episode | |

References

- Sanguino, T.D.J.M. 50 years of rovers for planetary exploration: A retrospective review for future directions. Robot. Auton. Syst. 2017, 94, 172–185.

- Lu, B. Review and prospect of the development of world lunar exploration. Space Int. 2019, 481, 12–18.

- Xu, X.; Bai, C.; Chen, Y.; Tang, H. A Survey of Guidance Technology for Moon/Mars Soft Landing. J. Astronaut. 2020, 41, 719–729.

- Sostaric, R.R. Powered descent trajectory guidance and some considerations for human lunar landing. In Proceedings of the 30th Annual AAS Guidance and Control Conference, Breckenridge, CO, USA, 3–7 February 2007.

- Tata, A.; Salvitti, C.; Pepi, F. From vacuum to atmospheric pressure: A review of ambient ion soft landing. Int. J. Mass Spectrom. 2020, 450, 116309.

- He, X.S.; Lin, S.Y.; Zhang, Y.F. Optimal Design of Direct Soft-Landing Trajectory of Lunar Prospector. J. Astronaut. 2007, 2, 409–413.

- Cheng, R.K. Lunar Terminal Guidance, Lunar Missions and Exploration. In University of California Engineering and Physical Sciences Extension Series; Leondes, C.T., Vance, R.W., Eds.; Wiley: New York, NY, USA, 1964; pp. 308–355.

- Citron, S.J.; Dunin, S.E.; Meissinger, H.F. A terminal guidance technique for lunar landing. AIAA J. 1964, 2, 503–509.

- Hull, D.G.; Speyer, J. Optimal reentry and plane-change trajectories. In Proceedings of the AIAA Astrodynamics Specialist Conference, Lake Tahoe, NV, USA, 3–5 August 1981.

- Pellegrini, E.; Russell, R.P. A multiple-shooting differential dynamic programming algorithm. Part 1: Theory. Acta Astronaut. 2020, 170, 686–700.

- Bolle, A.; Circi, C.; Corrao, G. Adaptive Multiple Shooting Optimization Method for Determining Optimal Spacecraft Trajectories. U.S. Patent 9,031,818, 12 May 2015.

- Bai, C.; Guo, J.; Zheng, H. Optimal Guidance for Planetary Landing in Hazardous Terrains. IEEE Trans. Aerosp. Electron. Syst. 2020, 56, 2896–2909.

- Chandler, D.C.; Smith, I.E. Development of the iterative guidance mode with its application to various vehicles and missions. J. Spacecr. Rocket. 1967, 4, 898–903.

- Song, Z.; Wang, C. Powered soft landing guidance method for launchers with non-cluster configured engines. Acta Astronaut. 2021, 189, 379–390.

- Amrutha, V.; Sreeja, S.; Sabarinath, A. Trajectory Optimization of Lunar Soft Landing Using Differential Evolution. In Proceedings of the 2021 IEEE Aerospace Conference (50100), Big Sky, MT, USA, 6–13 March 2021; pp. 1–9.

- Sánchez-Sánchez, C.; Izzo, D. Real-time optimal control via deep neural networks: Study on landing problems. J. Guid. Control Dyn. 2018, 41, 1122–1135.

- Furfaro, R.; Bloise, I.; Orlandelli, M.; Di Lizia, P.; Topputo, F.; Linares, R. Deep learning for autonomous lunar landing. In Proceedings of the 2018 AAS/AIAA Astrodynamics Specialist Conference, Snowbird, UT, USA, 19–28 August 2018; Volume 167, pp. 3285–3306.

- Furfaro, R.; Bloise, I.; Orlandelli, M.; Di Lizia, P.; Topputo, F.; Linares, R. A recurrent deep architecture for quasi-optimal feedback guidance in planetary landing. In Proceedings of the IAA SciTech Forum on Space Flight Mechanics and Space Structures and Materials, Moscow, Russia, 13–15 November 2018; pp. 1–24.

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; Riedmiller, M. Playing atari with deep reinforcement learning. arXiv 2013, arXiv:1312.5602.

- Kiran, B.R.; Sobh, I.; Talpaert, V.; Mannion, P.; Al Sallab, A.A.; Yogamani, S.; Pérez, P. Deep reinforcement learning for autonomous driving: A survey. arXiv 2020, arXiv:2002.00444.

- Mohammed, M.Q.; Chung, K.L.; Chyi, C.S. Review of Deep Reinforcement Learning-based Object Grasping: Techniques, Open Challenges and Recommendations. IEEE Access 2020, 8, 178450–178481.

- Acikmese, B.; Ploen, S.R. Convex programming approach to powered descent guidance for mars landing. J. Guid. Control Dyn. 2007, 30, 1353–1366.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).