Analysis of GNSS, Hydroacoustic and Optoelectronic Data Integration Methods Used in Hydrography

Abstract

1. Introduction

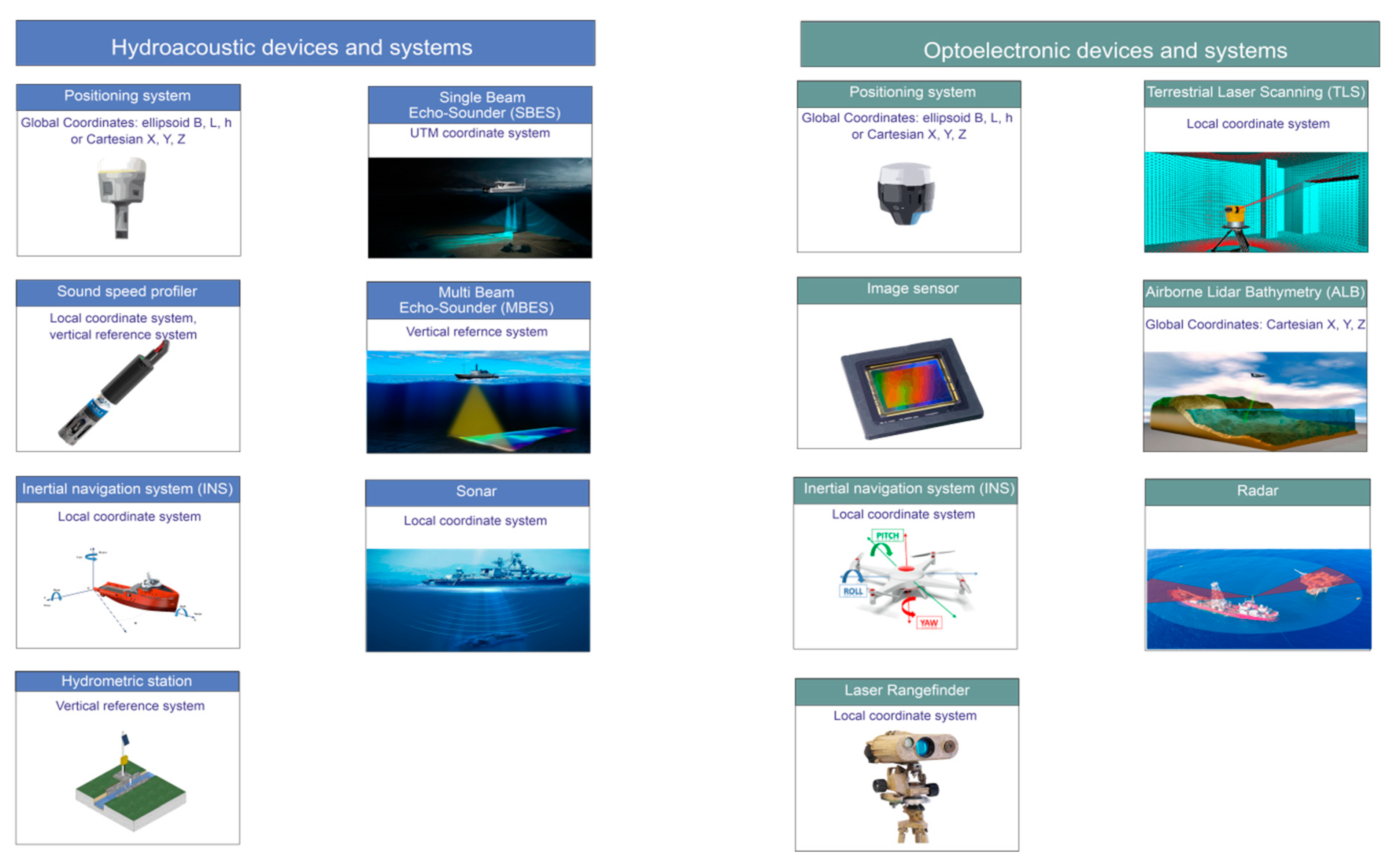

- A positioning system determines the coordinates of the vessel’s position. In hydrography, the most commonly applied positioning systems are a Differential Global Positioning System (DGPS) receiver and a Real Time Kinematic (RTK) receiver [7]. The position is expressed either in curvilinear coordinates (B, L, h) relative to the adopted reference ellipsoid or in Cartesian coordinates (X, Y, Z);

- A sound velocity probe determines the sound velocity in water. It can be measured directly with a Sound Velocity Profiler (SVP) or indirectly with a Conductivity, Temperature, Depth (CTD) sensor, which measures the conductivity, hydrostatic pressure and temperature of seawater and, based on these physicochemical variables, determines the sound velocity as well as the seawater density and salinity [8]. A measurement of the vertical distribution of sound velocity is expressed in a vertical datum, while a measurement in the water column is expressed in a local datum associated with the sensor location;

- An Inertial Navigation System (INS) records the roll, pitch and yaw angles (RPY angles) of a vessel. Knowledge of these angles enables the determination of the vehicle’s orientation in the presence of disturbances due to waves and wind. Devices equipped with sensors of this type measure linear accelerations along, and rotation angles about, three axes (X, Y, Z) in relation to a specified local system. Linear accelerations are measured by accelerometers, while the RPY angles are measured using gyroscopes;

- A hydrometric station records and collects data on the water level of a lake, reservoir or river. Water levels can be measured with a staff gauge or a tide gauge. Some hydrometric stations have a telemetric function that enables automated data transfer, e.g., via a General Packet Radio Service (GPRS) or radio modem. Information on water levels during bathymetric surveys allows the measured depths to be referred to a pre-determined vertical datum. Changes in water levels therefore need to be recorded and taken into account during hydrographic data processing;

- A Single Beam EchoSounder (SBES) is a device for measuring depth in the vertical direction. An SBES generates a single, narrow-angle acoustic pulse, so depth data are recorded only along sounding profiles [9]. The disadvantage of this measurement is the lack of information on the depths between the profiles. A measurement system built from a Global Navigation Satellite System (GNSS) receiver and an SBES can operate as a separate system that records X, Y, Z data synchronously in the Universal Transverse Mercator (UTM) system;

- A MultiBeam EchoSounder (MBES) is a system that records bathymetric data over a wide swath of the bottom, perpendicular to the direction of a vessel’s movement. Its transducer generates multiple acoustic beams, so bathymetric surveys conducted using an MBES can completely cover the studied bottom with depth data [10]. Multibeam echosounders are applied in both shallow and deep-water surveys. The data derived from this system are expressed in the vertical datum;

- A SOund Navigation And Ranging (SONAR) system is a device used to locate and classify submerged objects using sound waves. A sonar emits sound pulses into the water, which are reflected off the bottom, fish or vegetation. The returning sound pulses are converted into electrical signals. Combined with the velocity of sound propagation in water, the travel time of a pulse enables both the estimation of the depth of the object from which the wave was reflected and the identification of underwater objects. Sonar data provide information on underwater objects that may pose a navigational hazard for other vessels.

- A positioning system determines the position coordinates of an aerial vehicle. An optoelectronic system can be equipped with a GNSS receiver which records the position in curvilinear coordinates (B, L, h) relative to the adopted reference ellipsoid or in Cartesian coordinates (X, Y, Z). Where an optoelectronic device includes a low-accuracy GNSS receiver, it is reasonable to determine the coordinates of selected points in the field using satellite techniques. These points can then be used to correct the vehicle’s coordinates;

- An image sensor converts electromagnetic waves into electrical impulses, which the electronic system then converts into an image of the scanned surface. In optoelectronic devices, an image is obtained using a photodiode detector, a photomultiplier tube, or a Charge-Coupled Device (CCD) or Complementary Metal-Oxide-Semiconductor (CMOS) camera. Image sensors are used in photo cameras, radars, sonars and Unmanned Aerial Vehicles (UAVs) [12];

- An INS is a system for measuring accelerations, rotation angles about three axes (X, Y, Z), and the Earth’s magnetic field. One of the components of an INS installed on aircraft is an Inertial Measurement Unit (IMU), i.e., a device comprising sensors such as accelerometers, gyroscopes and magnetometers. The system provides information on the vehicle’s motion parameters, i.e., the acceleration and velocity as well as the orientation in space [13]. The IMU angles are measured in relation to the locally adopted coordinate system;

- A laser rangefinder is an instrument used to measure distance. A rangefinder sends out a laser pulse, which is reflected off the surface being measured and returns to the measurement instrument. The device circuit then calculates the distance based on the time between the emission and reception of the laser pulse. A rangefinder is a stand-alone instrument which is also available in the form of modules that are incorporated into considerably larger systems or integrated with additional systems, e.g., a camera or a GNSS receiver. Data are logged in a local system;

- Terrestrial Laser Scanning (TLS) is a technique that measures the angles and distance between the instrument and the surface being measured. From the reflection of a laser beam off the observed object, the distance between the instrument and the measurement point as well as the horizontal and vertical angles are determined. Surveys carried out using TLS provide geospatial data on the studied object or surface in the form of a point cloud, recorded in the local system of the device with the coordinates X, Y, Z [14];

- Airborne Lidar Bathymetry (ALB) is a technique that measures the distance from a flying aircraft to ground points. The airborne laser scanning system includes three main devices, i.e., a laser rangefinder and the GNSS and INS systems with which it interfaces. Integrating the data from these three measurement systems provides the position from which each distance measurement was taken, the distance itself and its direction in space. The device records the ground point coordinates in the rectangular coordinate system [15];

- A RAdio Detection And Ranging (RADAR) system is an instrument that determines the angle, distance (range) or velocity of an object. Its operation involves measuring the time between the signal transmission and the recording of its echo. During hydrographic surveys, the device supports automated measurement platforms in monitoring the environment and helps prevent hazardous events.
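Two of the measurement principles in the list above lend themselves to a short worked example: the time-of-flight distance of a laser rangefinder, and the conversion of a TLS observation (distance, horizontal angle, vertical angle) into local X, Y, Z coordinates. The sketch below is illustrative only. The function names are hypothetical, the vacuum speed of light is used (atmospheric refraction is ignored), and the vertical angle is assumed to be an elevation angle measured from the horizontal plane; instruments that report zenith angles would need the sine and cosine swapped.

```python
import math

SPEED_OF_LIGHT_MS = 299_792_458.0  # speed of light in vacuum, m/s


def tof_range(round_trip_time_s: float) -> float:
    """Distance from the round-trip time of a laser pulse (there and back)."""
    return SPEED_OF_LIGHT_MS * round_trip_time_s / 2.0


def polar_to_cartesian(distance_m: float, horizontal_angle_rad: float,
                       vertical_angle_rad: float) -> tuple[float, float, float]:
    """Convert one scanner observation to X, Y, Z in the instrument's local system.

    Assumption: vertical_angle_rad is the elevation above the horizontal plane
    and horizontal_angle_rad is measured counter-clockwise from the X axis.
    """
    horizontal_distance = distance_m * math.cos(vertical_angle_rad)
    x = horizontal_distance * math.cos(horizontal_angle_rad)
    y = horizontal_distance * math.sin(horizontal_angle_rad)
    z = distance_m * math.sin(vertical_angle_rad)
    return x, y, z


if __name__ == "__main__":
    rng = tof_range(200e-9)  # a 200 ns round trip
    print(f"range: {rng:.3f} m")
    print("local XYZ:", polar_to_cartesian(rng, math.radians(30.0), math.radians(10.0)))
```

Applying `polar_to_cartesian` to every observation of a scan yields the point cloud in the scanner's local system; expressing it in a global system requires a further transformation.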

2. Review of GNSS, Hydroacoustic and Optoelectronic Data Integration Methods

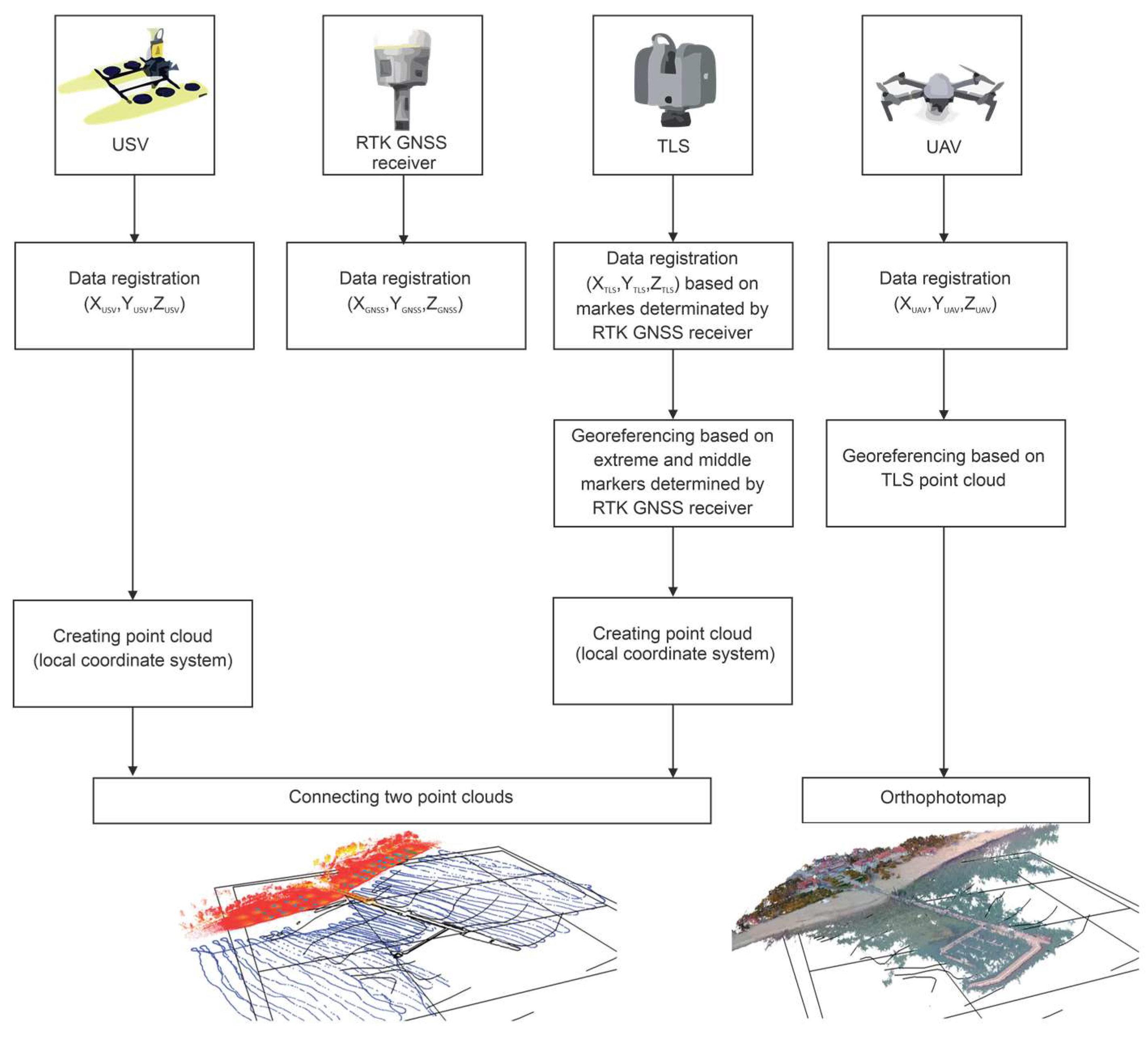

2.1. Method of GNSS, TLS, UAV and USV Data Integration according to Dąbrowski P.S. et al.

- —point coordinates in the local based coordinate system ;

- —point coordinates in the local modified coordinate system ;

- —rotation angle;

- —three-dimensional coordinates of the translation vector.

- —rotation matrix;

- —partial rotation matrices;

- —scaling matrix.

- —adjustment point coordinates in the corrected coordinate system;

- —adjustment point coordinates in the corrected coordinate system after rotation;

- —offset vector.
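The quantities listed above (rotation angles, partial rotation matrices, a scaling matrix and a translation or offset vector) are the ingredients of a rotation, scaling and translation between two coordinate systems. A generic sketch of such a transformation is given below. It is not the formulation of Dąbrowski et al.: the function names, the Z·Y·X composition order of the partial rotations, the sign conventions and the use of a single uniform scale factor are all assumptions made for illustration.

```python
import math

Matrix = list[list[float]]
Vector = tuple[float, float, float]


def rot_x(a: float) -> Matrix:
    """Partial rotation matrix about the X axis (right-handed, radians)."""
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(a: float) -> Matrix:
    """Partial rotation matrix about the Y axis."""
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(a: float) -> Matrix:
    """Partial rotation matrix about the Z axis."""
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Product of two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def transform(point: Vector, angles: Vector, scale: float,
              translation: Vector) -> Vector:
    """Apply p' = scale * R * p + t with R = Rz * Ry * Rx (assumed order)."""
    ax, ay, az = angles
    r = matmul(rot_z(az), matmul(rot_y(ay), rot_x(ax)))
    rotated = [sum(r[i][k] * point[k] for k in range(3)) for i in range(3)]
    return (scale * rotated[0] + translation[0],
            scale * rotated[1] + translation[1],
            scale * rotated[2] + translation[2])


if __name__ == "__main__":
    # Rotate a point by 90 degrees about Z, then shift it by (100, 200, 0).
    print(transform((1.0, 0.0, 0.0), (0.0, 0.0, math.pi / 2), 1.0, (100.0, 200.0, 0.0)))
```

When the parameters are unknown, they are usually estimated from adjustment points whose coordinates are known in both systems; that estimation step is not shown here.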

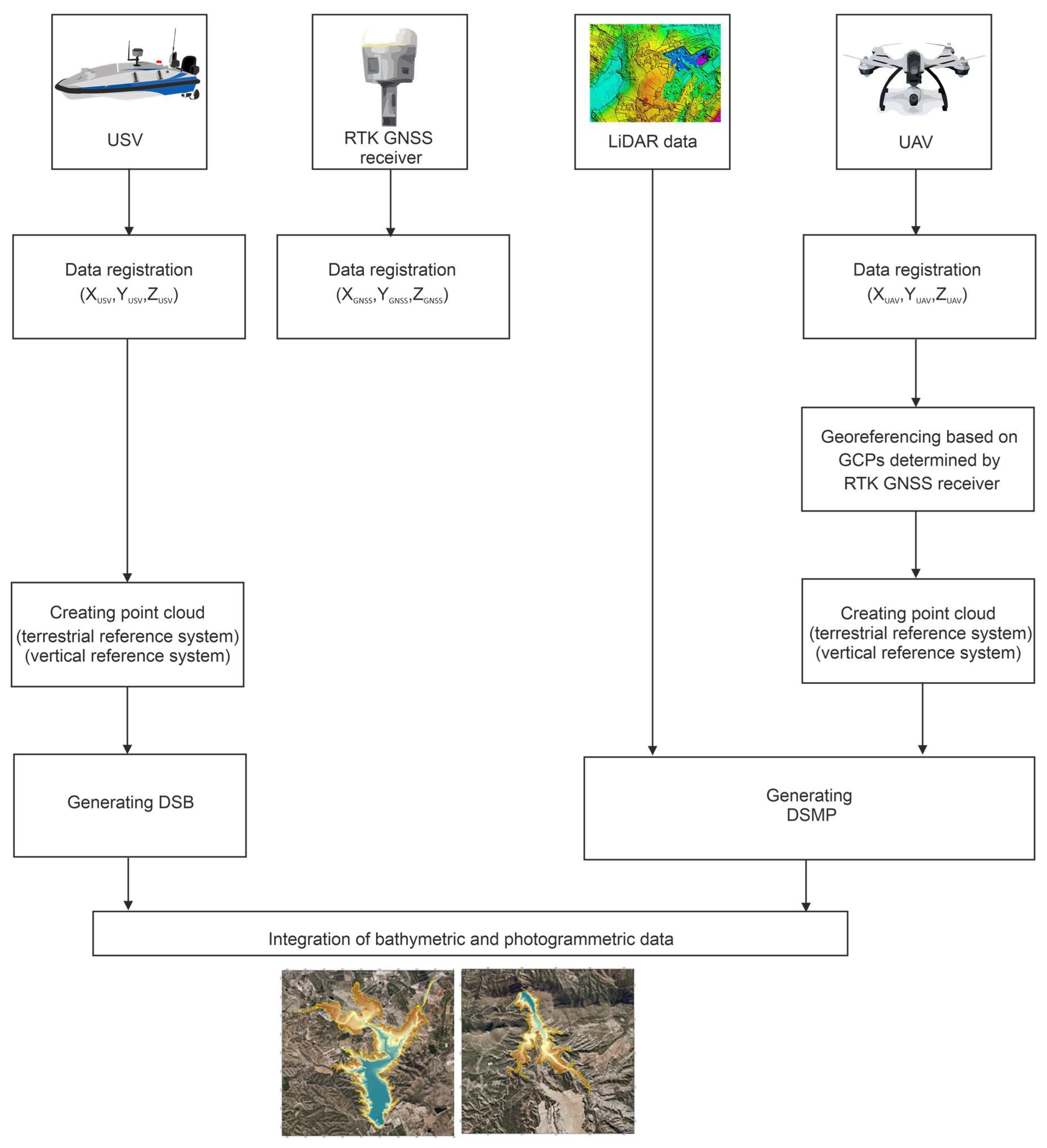

2.2. Method of GNSS, TLS, UAV and USV Data Integration according to Erena M. et al.

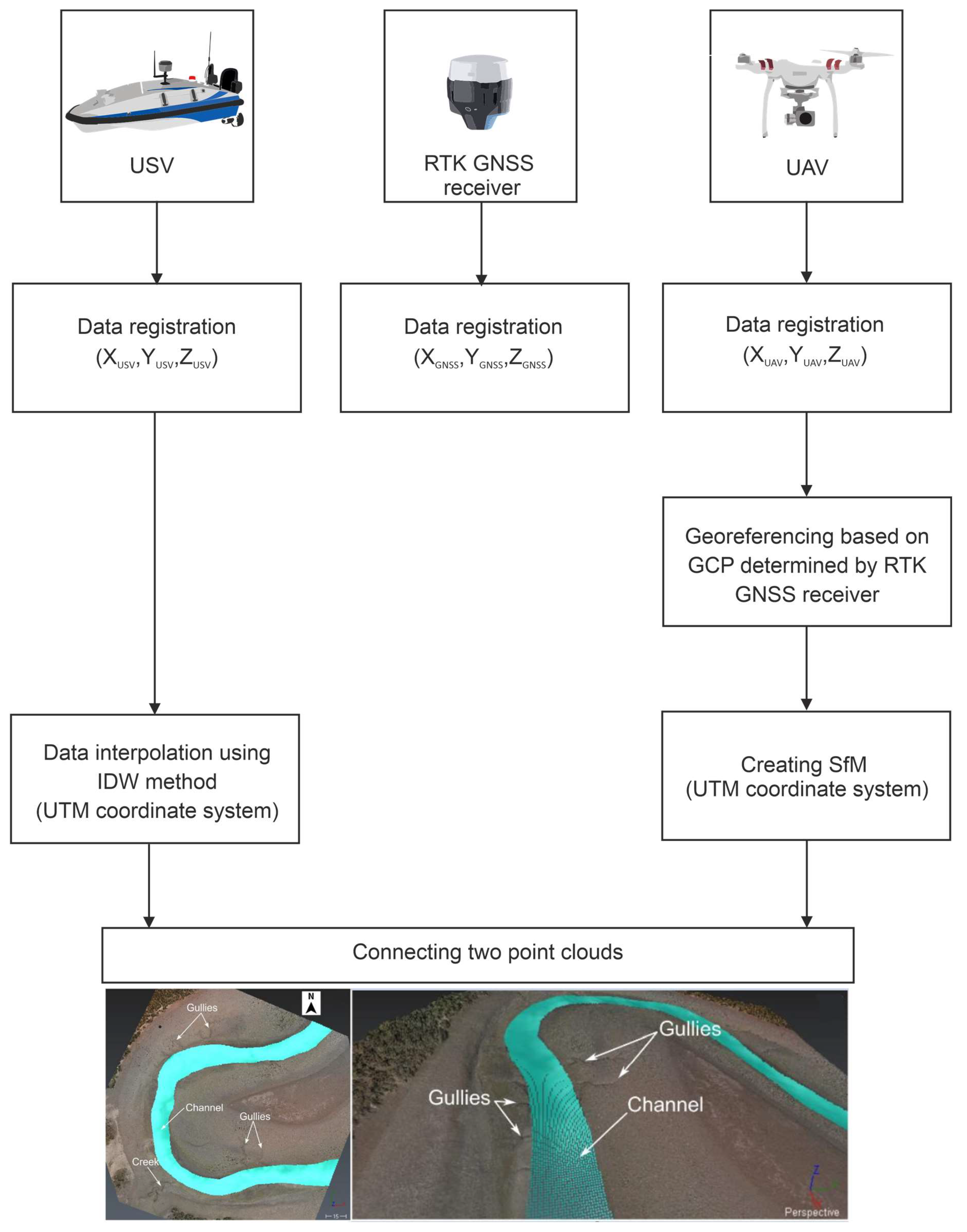

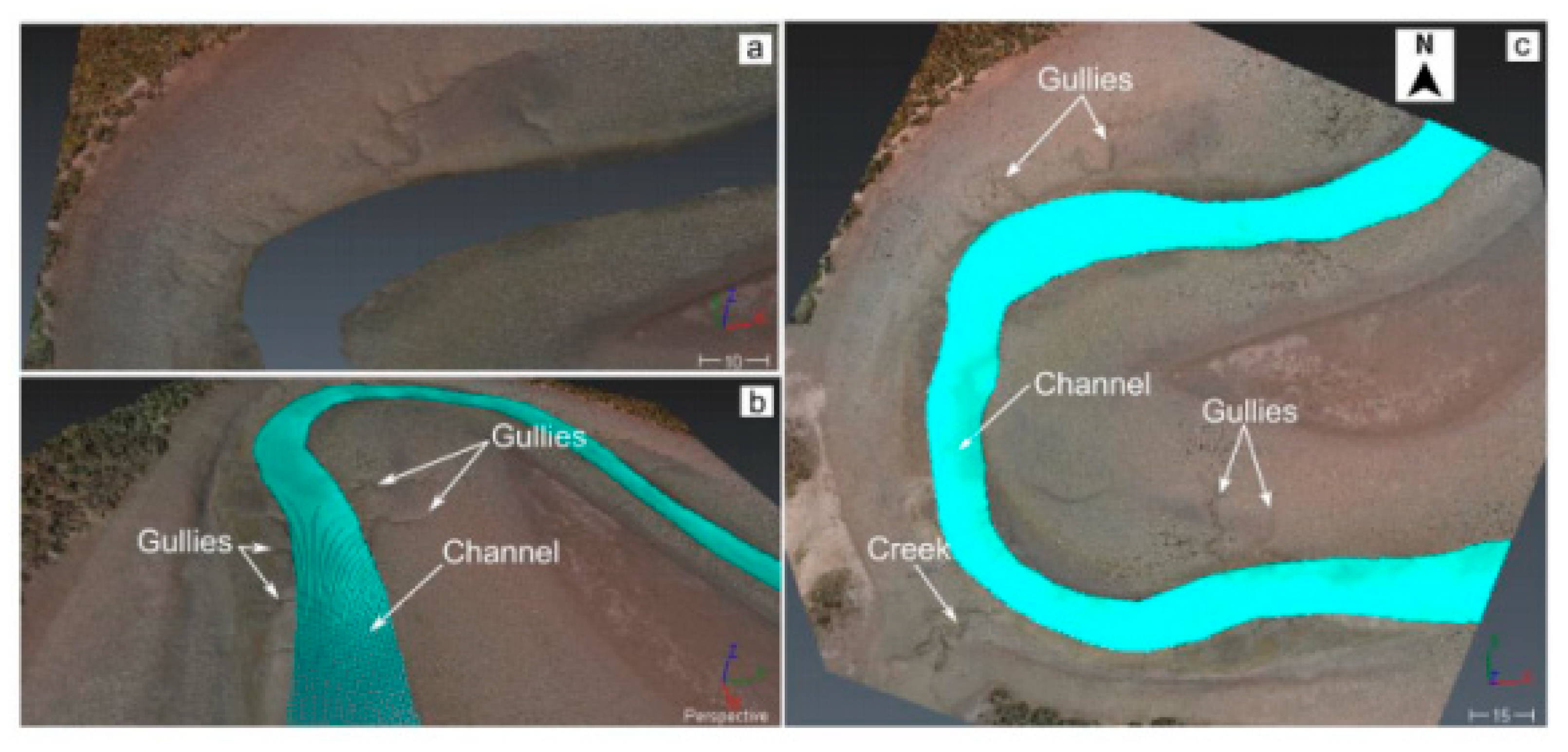

2.3. Method of UAV and USV Data Integration according to Genchi S.A. et al.

- —number of points;

- — value for observation ;

- —predicted value of for observation ;

- —arithmetic mean of value.
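The quantities defined above (the number of points, observed and predicted values, and an arithmetic mean) are those needed for the accuracy measures reported for this method: the Root Mean Square Error (RMSE), the Mean Absolute Error (MAE) and the coefficient of determination (R²). A minimal sketch using their standard definitions follows. The function names are hypothetical, and it is an assumption that the mean in R² is taken over the observed values, which is the usual convention.

```python
import math
from typing import Sequence


def rmse(observed: Sequence[float], predicted: Sequence[float]) -> float:
    """Root mean square error between observed and predicted values."""
    n = len(observed)
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(observed, predicted)) / n)


def mae(observed: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute error between observed and predicted values."""
    n = len(observed)
    return sum(abs(o - p) for o, p in zip(observed, predicted)) / n


def r_squared(observed: Sequence[float], predicted: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot.

    Assumption: SS_tot uses the arithmetic mean of the observed values.
    """
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    # Made-up elevations (m) at four check points, for illustration only.
    obs = [1.0, 2.0, 3.0, 4.0]
    pred = [1.0, 2.0, 3.0, 5.0]
    print(rmse(obs, pred), mae(obs, pred), r_squared(obs, pred))
```

RMSE penalises large residuals more heavily than MAE, so reporting both gives a fuller picture of how the errors are distributed.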

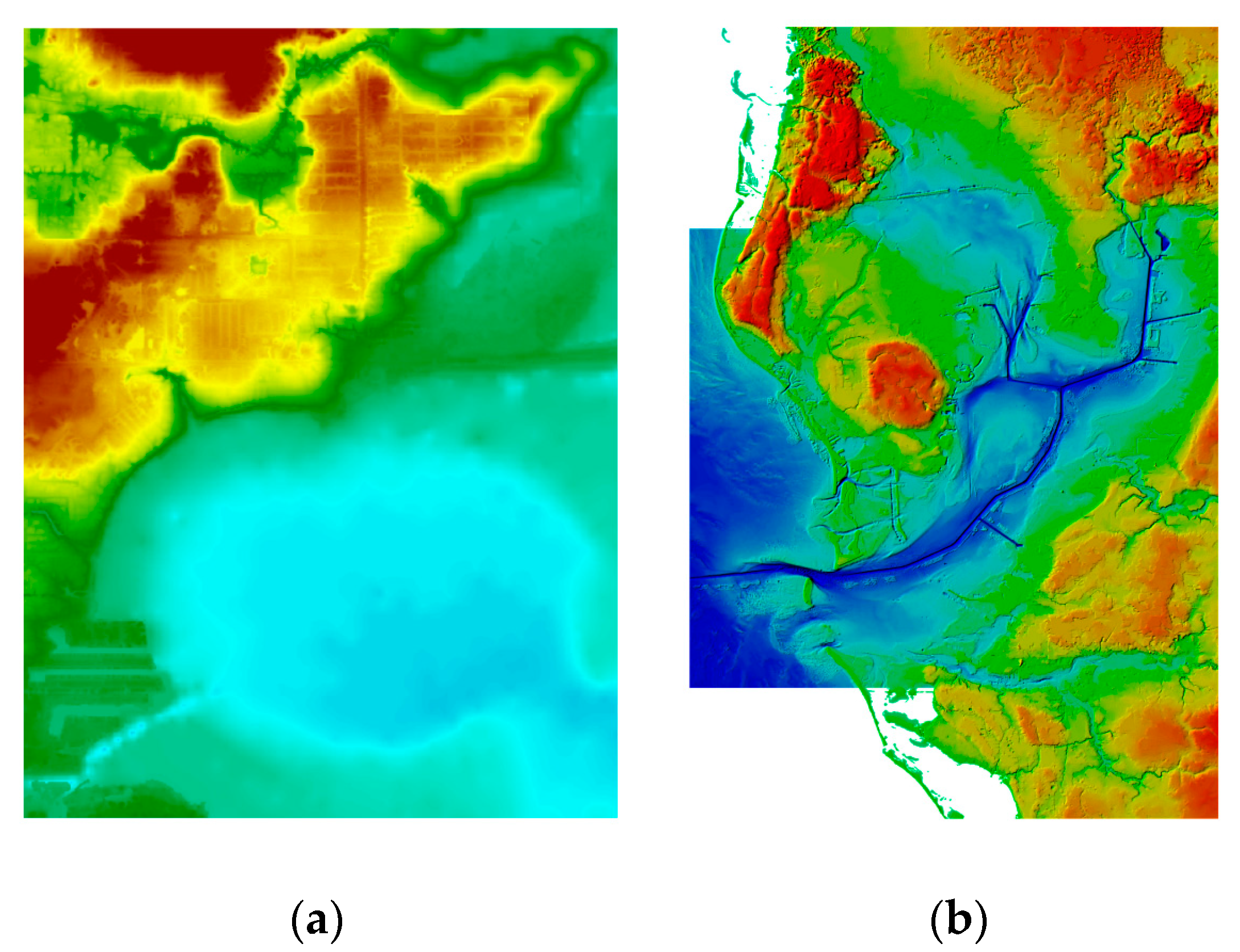

2.4. Method of LiDAR, NOAA and USGS Data Integration according to Gesch D. and Wilson R.

3. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Cao, W.; Wong, M.H. Current Status of Coastal Zone Issues and Management in China: A Review. Environ. Int. 2007, 33, 985–992.

2. Cicin-Sain, B.; Knecht, R.W. Integrated Coastal and Ocean Management: Concepts and Practices, 1st ed.; Island Press: Washington, DC, USA, 1998.

3. Postacchini, M.; Romano, A. Dynamics of the Coastal Zone. J. Mar. Sci. Eng. 2019, 7, 451.

4. Popielarczyk, D.; Templin, T. Application of Integrated GNSS/Hydroacoustic Measurements and GIS Geodatabase Models for Bottom Analysis of Lake Hancza: The Deepest Inland Reservoir in Poland. Pure Appl. Geophys. 2014, 171, 997–1011.

5. Kang, M. Overview of the Applications of Hydroacoustic Methods in South Korea and Fish Abundance Estimation Methods. Fish. Aquat. Sci. 2014, 17, 369–376.

6. Makar, A. Method of Determination of Acoustic Wave Reflection Points in Geodesic Bathymetric Surveys. Annu. Navig. 2008, 14, 1–89.

7. Marchel, Ł.; Specht, C.; Specht, M. Assessment of the Steering Precision of a Hydrographic USV along Sounding Profiles Using a High-precision GNSS RTK Receiver Supported Autopilot. Energies 2020, 13, 5637.

8. Makar, A. Influence of the Vertical Distribution of the Sound Speed on the Accuracy of Depth Measurement. Rep. Geod. 2001, 5, 31–34.

9. Parente, C.; Vallario, A. Interpolation of Single Beam Echo Sounder Data for 3D Bathymetric Model. Int. J. Adv. Comput. Sci. Appl. 2019, 10, 6–13.

10. Wlodarczyk-Sielicka, M.; Stateczny, A. Comparison of Selected Reduction Methods of Bathymetric Data Obtained by Multibeam Echosounder. In Proceedings of the 2016 Baltic Geodetic Congress (BGC 2016), Gdańsk, Poland, 2–4 June 2016.

11. Stateczny, A.; Włodarczyk-Sielicka, M.; Grońska, D.; Motyl, W. Multibeam Echosounder and LiDAR in Process of 360-degree Numerical Map Production for Restricted Waters with HydroDron. In Proceedings of the 2018 Baltic Geodetic Congress (BGC 2018), Gdańsk, Poland, 21–23 June 2018.

12. Kondo, H.; Ura, T. Navigation of an AUV for Investigation of Underwater Structures. Control Eng. Pract. 2004, 12, 1551–1559.

13. Noureldin, A.; Karamat, T.B.; Georgy, J. Inertial Navigation System. In Fundamentals of Inertial Navigation, Satellite-Based Positioning and Their Integration; Springer: Berlin/Heidelberg, Germany, 2013; pp. 125–166.

14. Williams, R.; Brasington, J.; Vericat, D.; Hicks, M.; Labrosse, F.; Neal, M. Chapter Twenty–Monitoring Braided River Change Using Terrestrial Laser Scanning and Optical Bathymetric Mapping. In Developments in Earth Surface Processes; Elsevier: Amsterdam, The Netherlands, 2011; Volume 15, pp. 507–532.

15. Wehr, A.; Lohr, U. Airborne Laser Scanning—An Introduction and Overview. ISPRS J. Photogramm. Remote Sens. 1999, 54, 68–82.

16. Abdalla, R. Introduction to Geospatial Information and Communication Technology (GeoICT); Springer: Berlin/Heidelberg, Germany, 2016; pp. 105–124.

17. Dąbrowski, P.S.; Specht, C.; Specht, M.; Burdziakowski, P.; Makar, A.; Lewicka, O. Integration of Multi-source Geospatial Data from GNSS Receivers, Terrestrial Laser Scanners, and Unmanned Aerial Vehicles. Can. J. Remote Sens. 2021, 47, 621–634.

18. Specht, C.; Lewicka, O.; Specht, M.; Dąbrowski, P.; Burdziakowski, P. Methodology for Carrying out Measurements of the Tombolo Geomorphic Landform Using Unmanned Aerial and Surface Vehicles near Sopot Pier, Poland. J. Mar. Sci. Eng. 2020, 8, 384.

19. Burdziakowski, P.; Specht, C.; Dabrowski, P.S.; Specht, M.; Lewicka, O.; Makar, A. Using UAV Photogrammetry to Analyse Changes in the Coastal Zone Based on the Sopot Tombolo (Salient) Measurement Project. Sensors 2020, 20, 4000.

20. EP, European Council. Directive 2007/2/EC of the European Parliament and of the Council of 14 March 2007 Establishing an Infrastructure for Spatial Information in the European Community (INSPIRE); EP, European Council: Brussels, Belgium, 2007.

21. Vaníček, P.; Steeves, R.R. Transformation of Coordinates between Two Horizontal Geodetic Datums. J. Geod. 1996, 70, 740–745.

22. Bronshtein, I.N.; Semendyayev, K.A.; Musiol, G.; Mühlig, H. Handbook of Mathematics, 6th ed.; Springer: Berlin/Heidelberg, Germany, 2015.

23. Hackeloeer, A.; Klasing, K.; Krisp, J.M.; Meng, L. Georeferencing: A Review of Methods and Applications. Ann. GIS 2014, 20, 61–69.

24. Erena, M.; Atenza, J.F.; García-Galiano, S.; Domínguez, J.A.; Bernabé, J.M. Use of Drones for the Topo-bathymetric Monitoring of the Reservoirs of the Segura River Basin. Water 2019, 11, 445.

25. Romero, M.A.; López Bermúdez, F.; Cabezas, F. Erosion and Fluvial Sedimentation in the River Segura Basin (Spain). Catena 1992, 19, 379–392.

26. Martínez-Carricondo, P.; Agüera-Vega, F.; Carvajal-Ramírez, F.; Mesas-Carrascosa, F.-J.; García-Ferrer, A.; Pérez-Porras, F.-J. Assessment of UAV-photogrammetric Mapping Accuracy Based on Variation of Ground Control Points. Int. J. Appl. Earth Obs. Geoinf. 2018, 72, 1–10.

27. Lee, J. Comparison of Existing Methods for Building Triangular Irregular Network, Models of Terrain from Grid Digital Elevation Models. Int. J. Geogr. Inf. Syst. 1991, 5, 267–285.

28. Brivio, P.A.; Colombo, R.; Maggi, M.; Tomasoni, R. Integration of Remote Sensing Data and GIS for Accurate Mapping of Flooded Areas. Int. J. Remote Sens. 2002, 23, 429–441.

29. Brown, D.G.; Riolo, R.; Robinson, D.T.; North, M.; Rand, W. Spatial Process and Data Models: Toward Integration of Agent-based Models and GIS. J. Geogr. Syst. 2005, 7, 25–47.

30. Genchi, S.A.; Vitale, A.J.; Perillo, G.M.E.; Seitz, C.; Delrieux, C.A. Mapping Topobathymetry in a Shallow Tidal Environment Using Low-cost Technology. Remote Sens. 2020, 12, 1394.

31. Friedrichs, C.T. Tidal Flat Morphodynamics. In Treatise on Estuarine and Coastal Science; Wolanski, E., McLusky, D., Eds.; Elsevier: Amsterdam, The Netherlands, 2011; Volume 3, pp. 137–170.

32. Mancini, F.; Dubbini, M.; Gattelli, M.; Stecchi, F.; Fabbri, S.; Gabbianelli, G. Using Unmanned Aerial Vehicles (UAV) for High-resolution Reconstruction of Topography: The Structure from Motion Approach on Coastal Environments. Remote Sens. 2013, 5, 6880–6898.

33. James, M.R.; Robson, S.; d’Oleire-Oltmanns, S.; Niethammer, U. Optimising UAV Topographic Surveys Processed with Structure-from-Motion: Ground Control Quality, Quantity and Bundle Adjustment. Geomorphology 2017, 280, 51–66.

34. Sanz-Ablanedo, E.; Chandler, J.; Rodríguez-Pérez, J.; Ordóñez, C. Accuracy of Unmanned Aerial Vehicle (UAV) and SfM Photogrammetry Survey as a Function of the Number and Location of Ground Control Points Used. Remote Sens. 2018, 10, 1606.

35. Bartier, P.M.; Keller, C.P. Multivariate Interpolation to Incorporate Thematic Surface Data Using Inverse Distance Weighting (IDW). Comput. Geosci. 1996, 22, 795–799.

36. Oliver, M.A.; Webster, R. Kriging: A Method of Interpolation for Geographical Information Systems. Int. J. Geogr. Inf. Syst. 1990, 4, 313–332.

37. Willmott, C.J.; Matsuura, K. Advantages of the Mean Absolute Error (MAE) over the Root Mean Square Error (RMSE) in Assessing Average Model Performance. Clim. Res. 2005, 30, 79–82.

38. Pham, H. A New Criterion for Model Selection. Mathematics 2019, 7, 1215.

39. Wikle, C.K.; Berliner, L.M. A Bayesian Tutorial for Data Assimilation. Phys. D Nonlinear Phenom. 2007, 230, 1–16.

40. Gesch, D.; Wilson, R. Development of a Seamless Multisource Topographic/Bathymetric Elevation Model of Tampa Bay. Mar. Technol. Soc. J. 2001, 35, 58–64.

41. Andreasen, C.; Pryor, D.E. Hydrographic and Bathymetric Systems for NOAA Programs. Mar. Geod. 1988, 12, 21–39.

42. Tyler, D.; Zawada, D.G.; Nayegandhi, A.; Brock, J.C.; Crane, M.P.; Yates, K.K.; Smith, K.E.L. Topobathymetric Data for Tampa Bay, Florida; USGS: Reston, VA, USA, 2007.

43. Myers, E.P. Review of Progress on VDatum, a Vertical Datum Transformation Tool. In Proceedings of the OCEANS 2005, Washington, DC, USA, 20–23 June 2005.

44. Nwankwo, U.C.; Howden, S.; Wells, D.; Connon, B. Validation of VDatum in Southeastern Louisiana and Western Coastal Mississippi. Mar. Geod. 2021, 44, 1–25.

45. White, S. Utilization of LIDAR and NOAA’s Vertical Datum Transformation Tool (VDatum) for Shoreline Delineation. In Proceedings of the OCEANS 2007, Vancouver, BC, Canada, 29 September–4 October 2007.

46. Keller, W.; Borkowski, A. Thin Plate Spline Interpolation. J. Geod. 2019, 93, 1251–1269.

47. Gesch, D.B.; Oimoen, M.J.; Greenlee, S.K.; Nelson, C.A.; Steuck, M.J.; Tyler, D.J. The National Elevation Data Set. Photogramm. Eng. Remote Sens. 2002, 68, 511.

48. IHO. IHO Standards for Hydrographic Surveys, 6th ed.; Special Publication No. 44; IHO: Monte Carlo, Monaco, 2020.

49. Dickens, K.; Armstrong, A. Application of Machine Learning in Satellite Derived Bathymetry and Coastline Detection. SMU Data Sci. Rev. 2019, 2, 4.

50. Dong, X.L.; Rekatsinas, T. Data Integration and Machine Learning: A Natural Synergy. In Proceedings of the 2018 International Conference on Management of Data (SIGMOD 2018), Houston, TX, USA, 10–15 June 2018.

| Measurement Accuracy | Method According to Dąbrowski P.S. et al. | Method According to Genchi S.A. et al. | Method According to Gesch D. and Wilson R. |
|---|---|---|---|
| dN ¹ | 0.023 m | – | – |
| dE ² | 0.16 m | – | – |
| dNH ³ | 0.027 m | – | – |
| RMSEx ⁴ | – | 0.15 m | – |
| RMSEy ⁵ | – | 0.18 m | – |
| RMSEz ⁶ | – | 0.007 m | – |
| RMSE ⁷ | – | 0.18 m | – |
| MAE ⁸ | – | 0.05 m | – |
| R² ⁹ | – | 0.90 | – |
| RMSE ¹⁰ | – | – | 0.43 m |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lewicka, O.; Specht, M.; Stateczny, A.; Specht, C.; Brčić, D.; Jugović, A.; Widźgowski, S.; Wiśniewska, M. Analysis of GNSS, Hydroacoustic and Optoelectronic Data Integration Methods Used in Hydrography. Sensors 2021, 21, 7831. https://doi.org/10.3390/s21237831