sEMG-Based Hand Posture Recognition Considering Electrode Shift, Feature Vectors, and Posture Groups

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

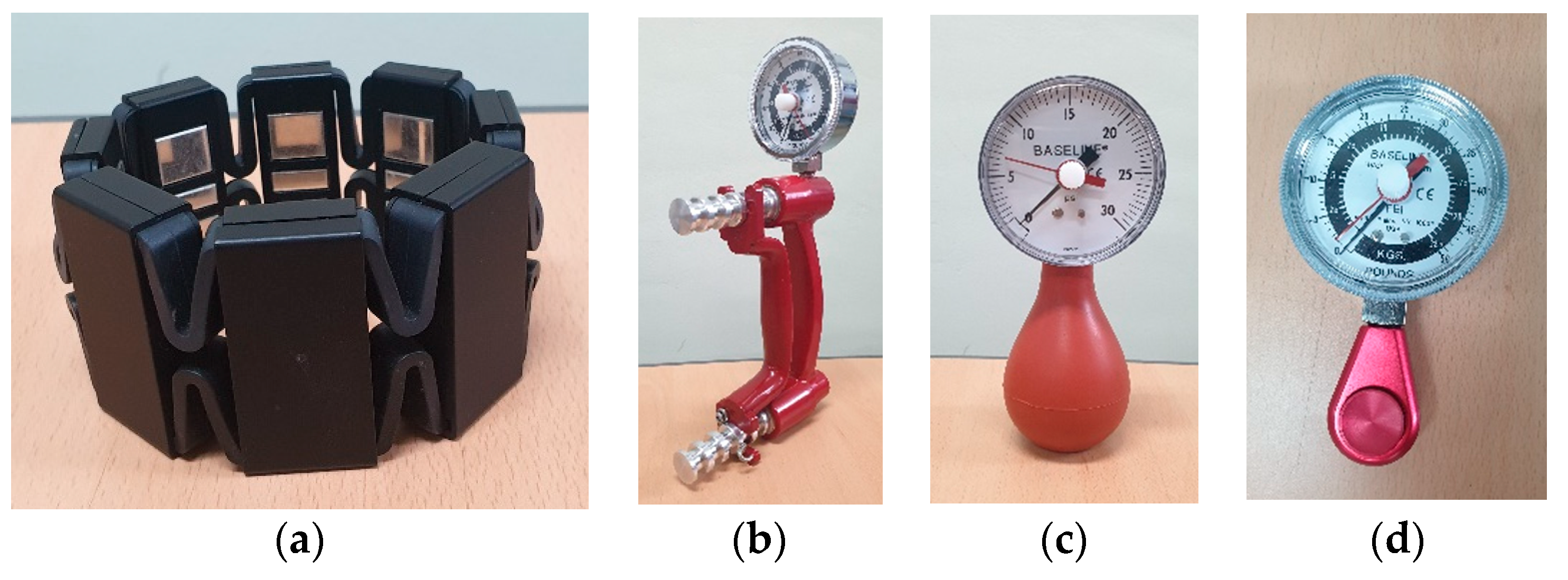

2.2. Equipment

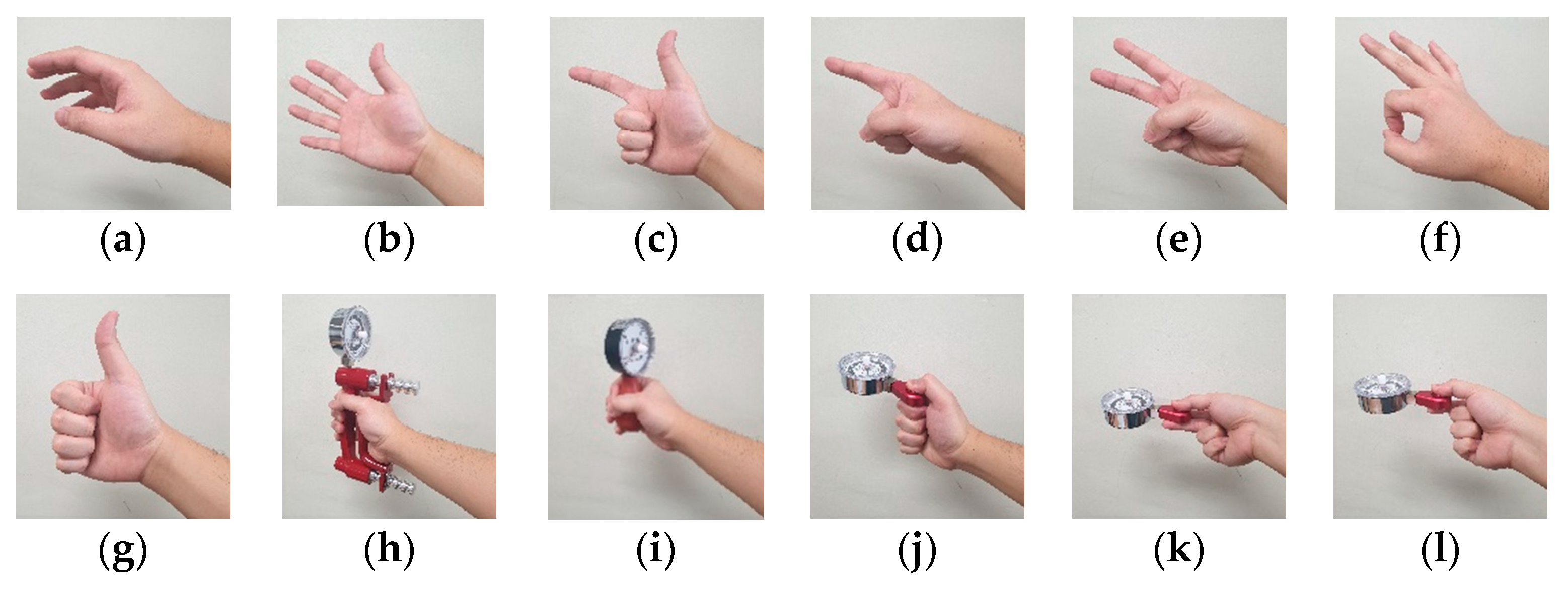

2.3. Experimental Procedures

2.4. Feature Vector Extraction

2.5. Classifier

2.6. Performance Evaluation

3. Results

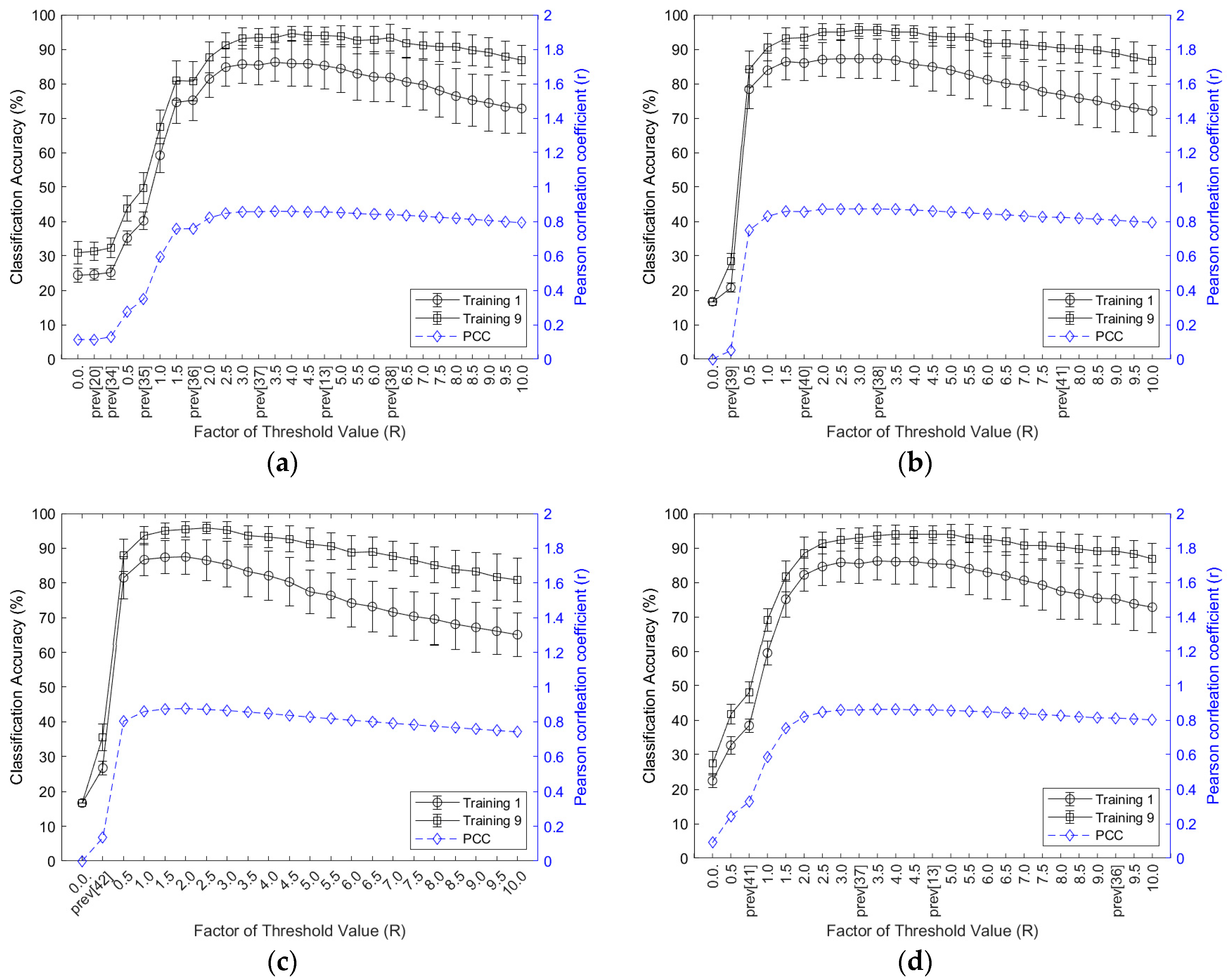

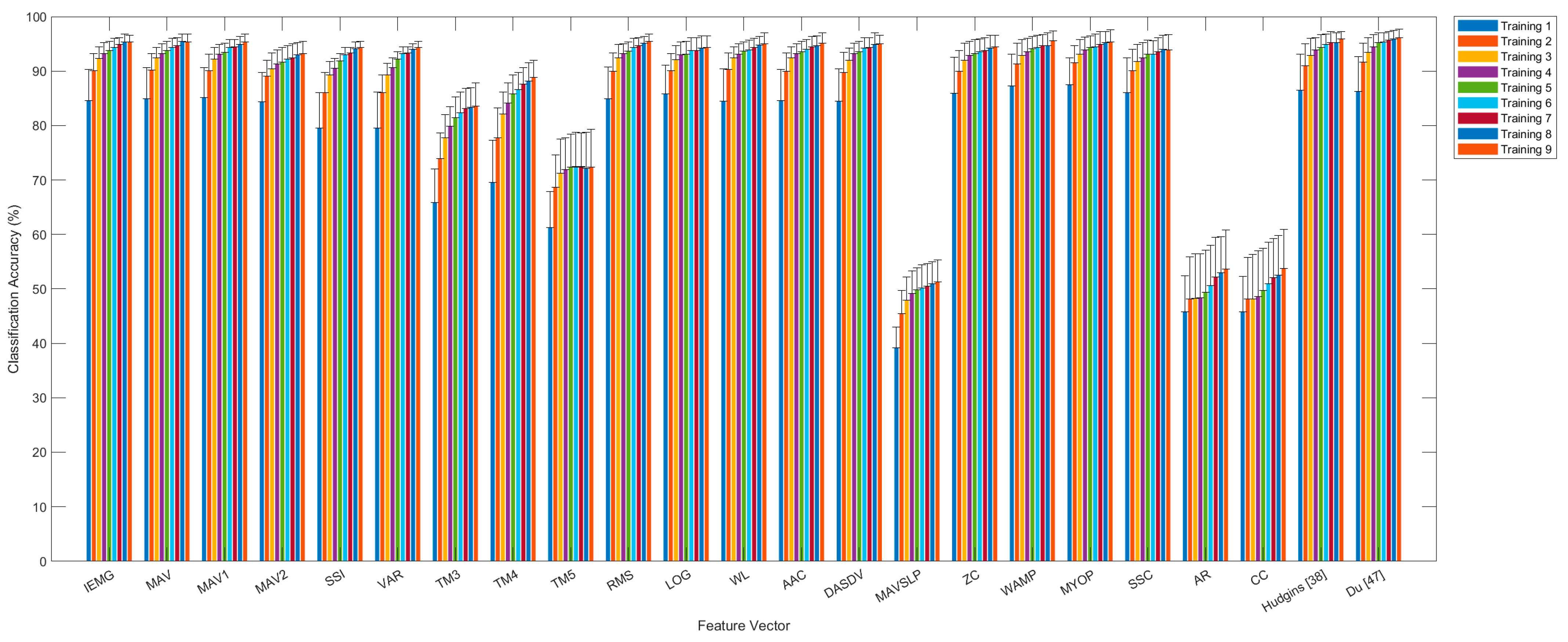

3.1. Classification Accuracy Based on Threshold Values and Feature Vector Orders

3.2. Classification Accuracy and Inter-Session PCC

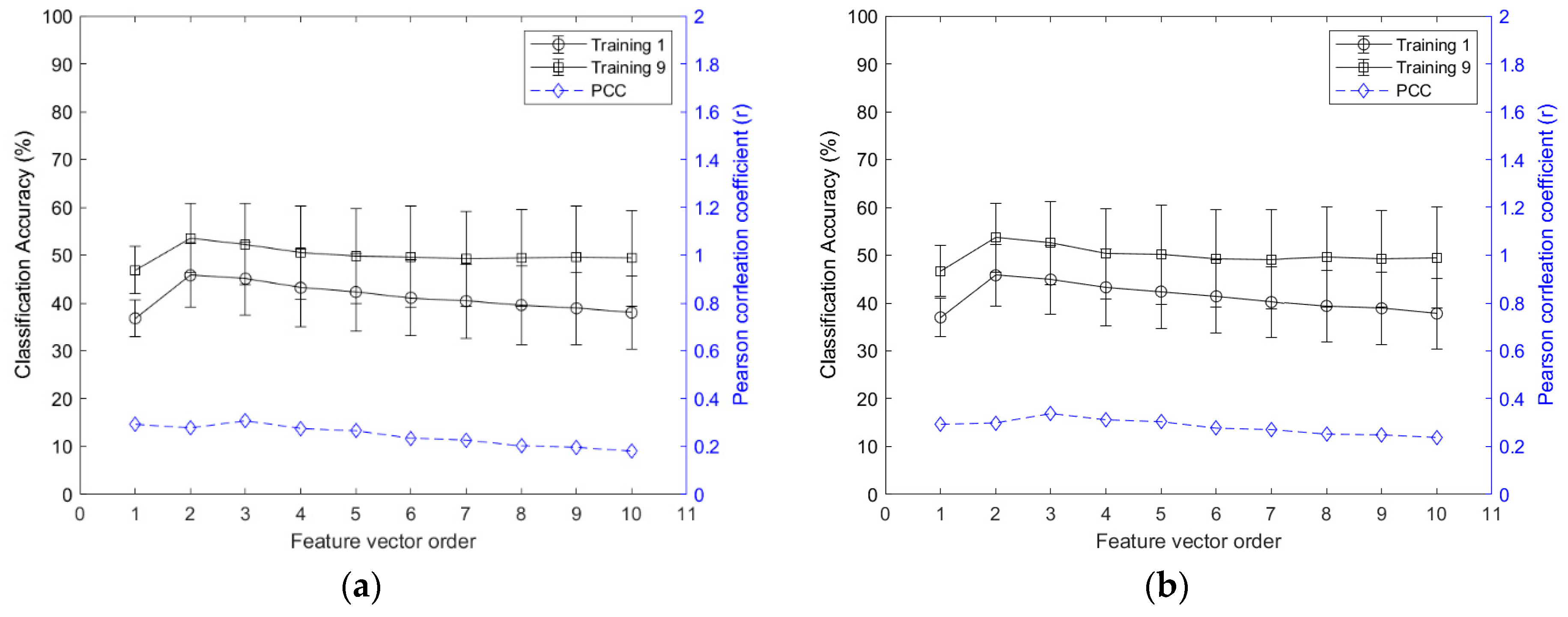

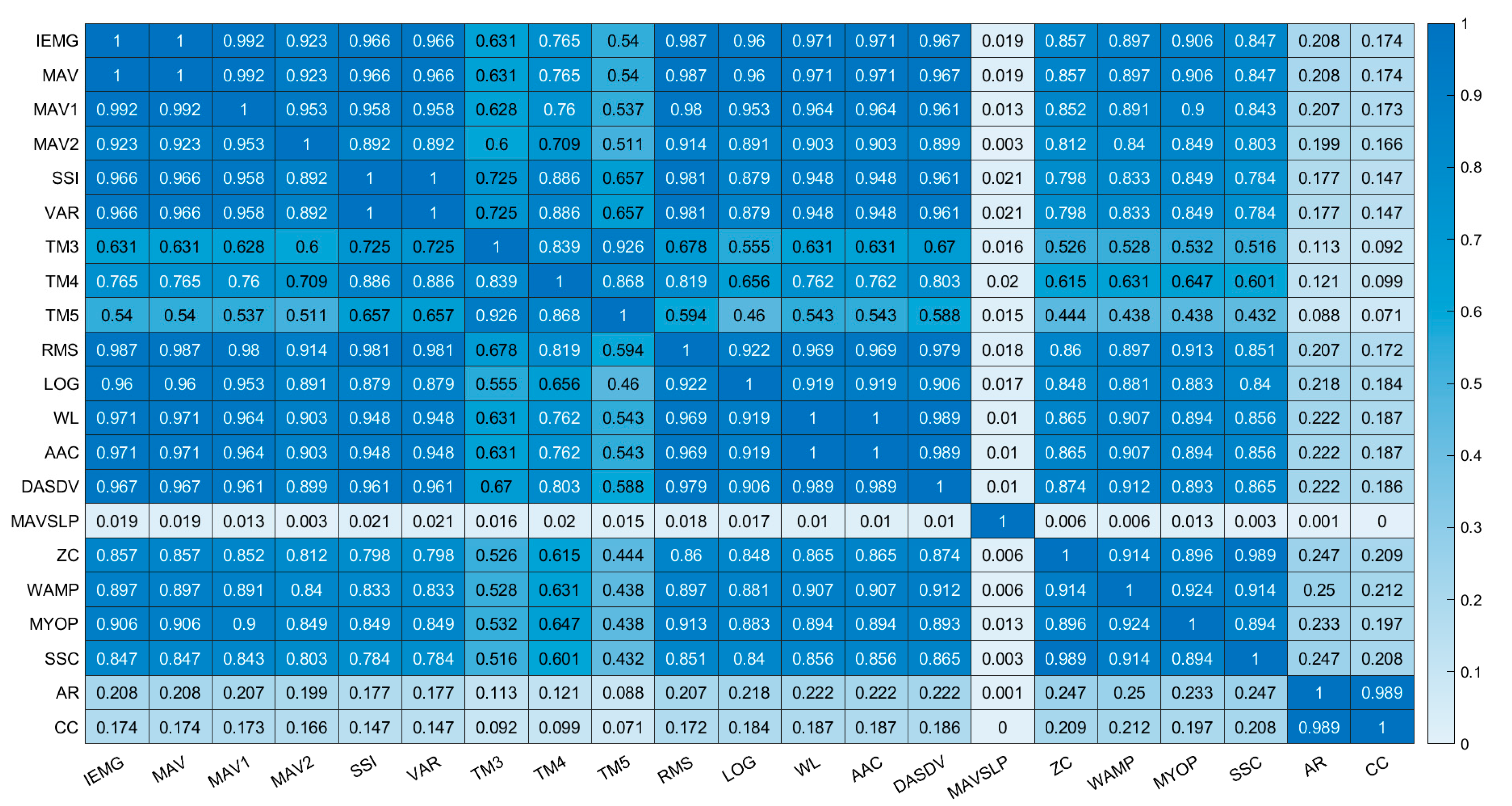

3.3. Classification Accuracy and Inter-Feature PCC

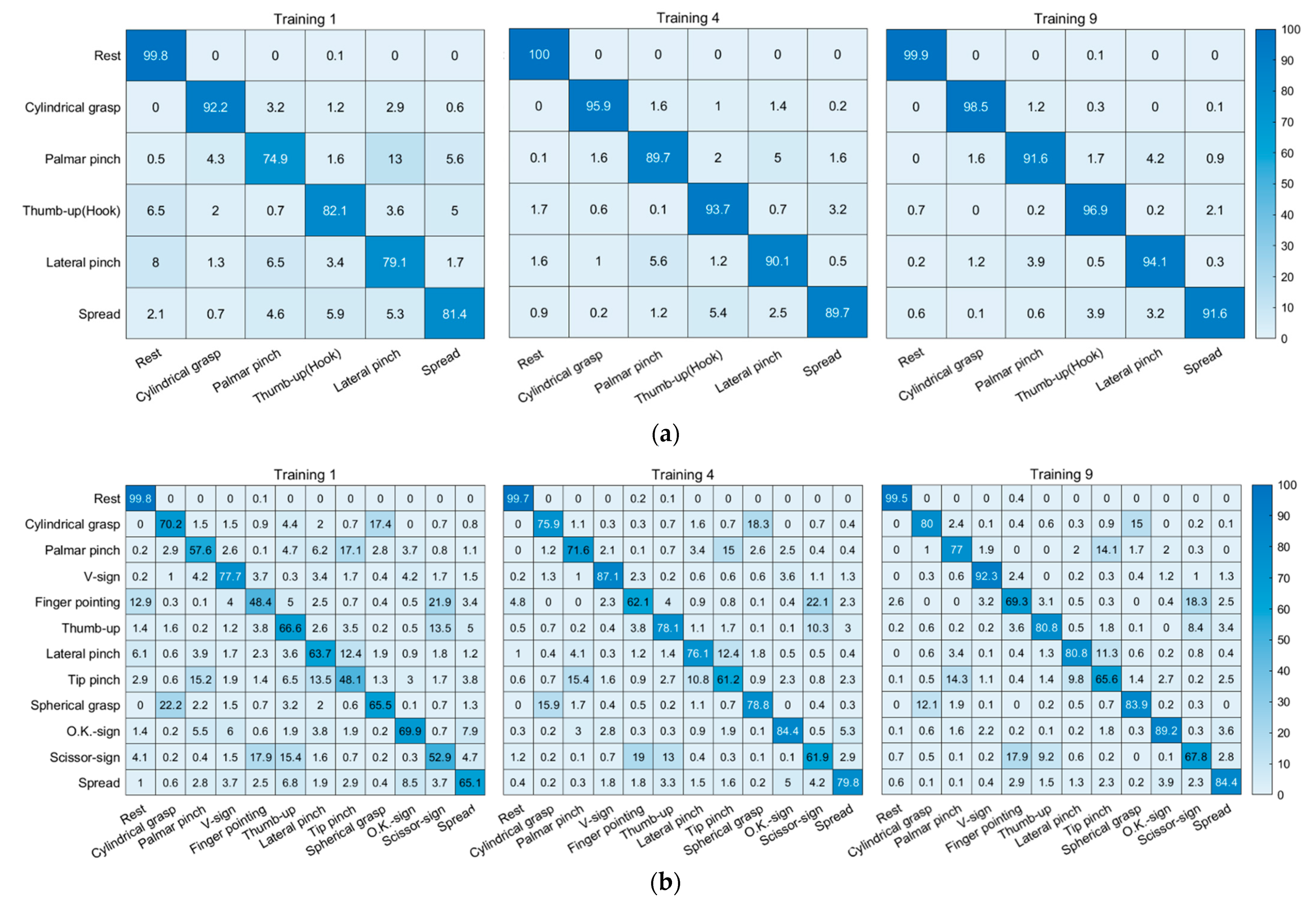

3.4. Classification Accuracies According to Hand Posture Groups

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Mitra, S.; Acharya, T. Gesture recognition: A survey. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2007, 37, 311–324.

- Chakraborty, B.K.; Sarma, D.; Bhuyan, M.K.; MacDorman, K.F. Review of constraints on vision-based gesture recognition for human–computer interaction. IET Comput. Vis. 2018, 12, 3–15.

- Wachs, J.P.; Kölsch, M.; Stern, H.; Edan, Y. Vision-based hand-gesture applications. Commun. ACM 2011, 54, 60–71.

- Shin, J.H.; Lee, J.S.; Kil, S.K.; Shen, D.F.; Ryu, J.G.; Lee, E.H.; Hong, S.H. Hand region extraction and gesture recognition using entropy analysis. Int. J. Comput. Sci. Netw. Secur. 2006, 6, 216–222.

- Stergiopoulou, E.; Papamarkos, N. Hand gesture recognition using a neural network shape fitting technique. Eng. Appl. Artif. Intell. 2009, 22, 1141–1158.

- Nam, Y.; Wohn, K. Recognition of space-time hand-gestures using hidden Markov model. In Proceedings of the ACM Symposium on Virtual Reality Software and Technology, Hong Kong, China, 1–4 July 1996; pp. 51–58.

- Yin, S.; Yang, J.; Qu, Y.; Liu, W.; Guo, Y.; Liu, H.; Wei, D. Research on gesture recognition technology of data glove based on joint algorithm. In Proceedings of the International Conference on Mechanical, Electronic, Control and Automation Engineering, Qingdao, China, 30–31 March 2018; pp. 1–10.

- Kim, J.; Mastnik, S.; André, E. EMG-based hand gesture recognition for real-time biosignal interfacing. In Proceedings of the 13th International Conference on Intelligent User Interfaces, Gran Canaria, Spain, 13–16 January 2008; pp. 30–39.

- Shi, W.T.; Lyu, Z.J.; Tang, S.T.; Chia, T.L.; Yang, C.Y. A bionic hand controlled by hand gesture recognition based on surface EMG signals: A preliminary study. Biocybern. Biomed. Eng. 2018, 38, 126–135.

- Jiang, S.; Lv, B.; Guo, W.; Zhang, C.; Wang, H.; Sheng, X.; Shull, P.B. Feasibility of wrist-worn, real-time hand, and surface gesture recognition via sEMG and IMU sensing. IEEE Trans. Ind. Inf. 2017, 14, 3376–3385.

- Abreu, J.G.; Teixeira, J.M.; Figueiredo, L.S.; Teichrieb, V. Evaluating sign language recognition using the myo armband. In Proceedings of the 2016 XVIII Symposium on Virtual and Augmented Reality, Gramado, Brazil, 21–24 June 2016; pp. 64–70.

- Phinyomark, A.; Hirunviriya, S.; Limsakul, C.; Phukpattaranont, P. Evaluation of EMG feature extraction for hand movement recognition based on Euclidean distance and standard deviation. In Proceedings of the ECTI-CON2010: The 2010 ECTI International Conference on Electrical Engineering/Electronics, Computer, Telecommunications and Information Technology, Chiang Mai, Thailand, 19–21 May 2010; pp. 856–860.

- Tkach, D.; Huang, H.; Kuiken, T.A. Study of stability of time-domain features for electromyographic pattern recognition. J. NeuroEng. Rehabil. 2010, 7, 1–13.

- Kakoty, N.M.; Hazarika, S.M.; Gan, J.Q. EMG feature set selection through linear relationship for grasp recognition. J. Med. Biol. Eng. 2016, 36, 883–890.

- Oskoei, M.A.; Hu, H. GA-based feature subset selection for myoelectric classification. In Proceedings of the 2006 IEEE International Conference on Robotics and Biomimetics, Kunming, China, 17–20 December 2006; pp. 1465–1470.

- Wahid, M.F.; Tafreshi, R.; Al-Sowaidi, M.; Langari, R. Subject-independent hand gesture recognition using normalization and machine learning algorithms. J. Comput. Sci. 2018, 27, 69–76.

- Zhang, Z.; Yang, K.; Qian, J.; Zhang, L. Real-time surface EMG pattern recognition for hand gestures based on an artificial neural network. Sensors 2019, 19, 3170.

- Castiblanco, J.C.; Ortmann, S.; Mondragon, I.F.; Alvarado-Rojas, C.; Jöbges, M.; Colorado, J.D. Myoelectric pattern recognition of hand motions for stroke rehabilitation. Biomed. Signal Process. Control 2020, 57, 101737.

- Kim, S.; Kim, J.; Ahn, S.; Kim, Y. Finger language recognition based on ensemble artificial neural network learning using armband EMG sensors. Technol. Health Care 2018, 26, 249–258.

- Kim, S.; Kim, J.; Koo, B.; Kim, T.; Jung, H.; Park, S.; Kim, Y. Development of an armband EMG module and a pattern recognition algorithm for the 5-finger myoelectric hand prosthesis. Int. J. Precis. Eng. Manuf. 2019, 20, 1997–2006.

- De Andrade, F.H.C.; Pereira, F.G.; Resende, C.Z.; Cavalieri, D.C. Improving sEMG-based hand gesture recognition using maximal overlap discrete wavelet transform and an autoencoder neural network. In Proceedings of the XXVI Brazilian Congress on Biomedical Engineering, Armação dos Búzios, Brazil, 21–25 October 2019; pp. 271–279.

- Suarez, J.; Murphy, R.R. Hand gesture recognition with depth images: A review. In Proceedings of the 2012 IEEE RO-MAN: The 21st IEEE International Symposium on Robot and Human Interactive Communication, Paris, France, 9–13 September 2012; pp. 411–417.

- Murthy, G.R.S.; Jadon, R.S. Hand gesture recognition using neural networks. In Proceedings of the 2010 IEEE 2nd International Advance Computing Conference (IACC), Patiala, India, 19–20 February 2010; pp. 134–138.

- Li, W.J.; Hsieh, C.Y.; Lin, L.F.; Chu, W.C. Hand gesture recognition for post-stroke rehabilitation using leap motion. In Proceedings of the 2017 International Conference on Applied System Innovation (ICASI), Sapporo, Japan, 13–17 May 2017; pp. 386–388.

- Chonbodeechalermroong, A.; Chalidabhongse, T.H. Dynamic contour matching for hand gesture recognition from monocular image. In Proceedings of the 2015 12th International Joint Conference on Computer Science and Software Engineering (JCSSE), Hatyai, Thailand, 22–24 July 2015; pp. 47–51.

- Ren, Z.; Meng, J.; Yuan, J.; Zhang, Z. Robust hand gesture recognition with Kinect sensor. In Proceedings of the 19th ACM International Conference on Multimedia, New York, NY, USA, 1–28 December 2011; pp. 759–760.

- Sayin, F.S.; Ozen, S.; Baspinar, U. Hand gesture recognition by using sEMG signals for human machine interaction applications. In Proceedings of the 2018 Signal Processing: Algorithms, Architectures, Arrangements, and Applications (SPA), Poznan, Poland, 19–21 September 2018; pp. 27–30.

- Yang, Y.; Fermuller, C.; Li, Y.; Aloimonos, Y. Grasp type revisited: A modern perspective on a classical feature for vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 400–408.

- Plouffe, G.; Cretu, A.M. Static and dynamic hand gesture recognition in depth data using dynamic time warping. IEEE Trans. Instrum. Meas. 2015, 65, 305–316.

- Apostol, B.; Mihalache, C.R.; Manta, V. Using spin images for hand gesture recognition in 3D point clouds. In Proceedings of the 2014 18th International Conference on System Theory, Control and Computing (ICSTCC), Sinaia, Romania, 17–19 October 2014; pp. 544–549.

- Frey Law, L.A.; Avin, K.G. Endurance time is joint-specific: A modelling and meta-analysis investigation. Ergonomics 2010, 53, 109–129.

- Oskoei, M.A.; Hu, H. Support vector machine-based classification scheme for myoelectric control applied to upper limb. IEEE Trans. Biomed. Eng. 2008, 55, 1956–1965.

- Englehart, K.; Hudgins, B.; Parker, P.A. A wavelet-based continuous classification scheme for multifunction myoelectric control. IEEE Trans. Biomed. Eng. 2001, 48, 302–311.

- Kamavuako, E.N.; Scheme, E.J.; Englehart, K.B. Determination of optimum threshold values for EMG time domain features; a multi-dataset investigation. J. Neural Eng. 2016, 13, 046011.

- Amrani, M.Z.E.A.; Daoudi, A.; Achour, N.; Tair, M. Artificial neural networks based myoelectric control system for automatic assistance in hand rehabilitation. In Proceedings of the 2017 26th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Lisbon, Portugal, 28 August–1 September 2017; pp. 968–973.

- Phinyomark, A.; Quaine, F.; Laurillau, Y. The relationship between anthropometric variables and features of electromyography signal for human–computer interface. In Applications, Challenges, and Advancements in Electromyography Signal Processing; Ganesh, R.N., Ed.; IGI Global: Hershey, PA, USA, 2014; pp. 321–353.

- Phinyomark, A.; Limsakul, C.; Phukpattaranont, P. A novel feature extraction for robust EMG pattern recognition. J. Comput. 2009, 1, 71–80.

- Hudgins, B.; Parker, P.; Scott, R.N. A new strategy for multifunction myoelectric control. IEEE Trans. Biomed. Eng. 1993, 40, 82–94.

- Phinyomark, A.; Limsakul, C.; Phukpattaranont, P. EMG feature extraction for tolerance of white Gaussian noise. In Proceedings of the International Workshop and Symposium Science Technology, Nong Khai, Thailand, 2 December 2008; pp. 178–183.

- Boostani, R.; Moradi, M.H. Evaluation of the forearm EMG signal features for the control of a prosthetic hand. Physiol. Meas. 2003, 24, 309–319.

- Phinyomark, A.; Limsakul, C.; Phukpattaranont, P. EMG feature extraction for tolerance of 50 Hz interference. In Proceedings of the PSU-UNS International Conference on Engineering Technologies, ICET, Novi Sad, Serbia, 28–30 April 2009; pp. 289–293.

- Phinyomark, A.; Hirunviriya, S.; Nuidod, A.; Phukpattaranont, P.; Limsakul, C. Evaluation of EMG feature extraction for movement control of upper limb prostheses based on class separation index. In Proceedings of the 5th Kuala Lumpur International Conference on Biomedical Engineering 2011, Kuala Lumpur, Malaysia, 20–23 June 2011; pp. 750–754.

- Fougner, A.L. Proportional Myoelectric Control of a Multifunction Upper-Limb Prosthesis. Master’s Thesis, Norwegian University of Science and Technology, Trondheim, Norway, June 2007.

- Cybenko, G. Approximation by superpositions of a sigmoidal function. Math. Control Signals Syst. 1989, 2, 303–314.

- Hornik, K. Approximation capabilities of multilayer feedforward networks. Neural Netw. 1991, 4, 251–257.

- Heaton, J. The Number of Hidden Layers. Available online: https://www.heatonresearch.com/2017/06/01/hidden-layers.html (accessed on 31 August 2021).

- Du, Y.C.; Lin, C.H.; Shyu, L.Y.; Chen, T. Portable hand motion classifier for multi-channel surface electromyography recognition using grey relational analysis. Expert Syst. Appl. 2010, 37, 4283–4291.

- Lu, Z.; Tong, K.Y.; Zhang, X.; Li, S.; Zhou, P. Myoelectric pattern recognition for controlling a robotic hand: A feasibility study in stroke. IEEE Trans. Biomed. Eng. 2018, 66, 365–372.

- Phinyomark, A.; Campbell, E.; Scheme, E. Surface electromyography (EMG) signal processing, classification, and practical considerations. In Biomedical Signal Processing; Springer: Singapore, 2020; pp. 3–29.

- Oskoei, M.A.; Hu, H. Myoelectric control systems—A survey. Biomed. Signal Process. Control 2007, 2, 275–294.

- Abbaspour, S.; Lindén, M.; Gholamhosseini, H.; Naber, A.; Ortiz-Catalan, M. Evaluation of surface EMG-based recognition algorithms for decoding hand movements. Med. Biol. Eng. Comput. 2020, 58, 83–100.

- Phinyomark, A.; Quaine, F.; Charbonnier, S.; Serviere, C.; Tarpin-Bernard, F.; Laurillau, Y. EMG feature evaluation for improving myoelectric pattern recognition robustness. Expert Syst. Appl. 2013, 40, 4832–4840.

- Phinyomark, A.; Phukpattaranont, P.; Limsakul, C. Feature reduction and selection for EMG signal classification. Expert Syst. Appl. 2012, 39, 7420–7431.

- Phinyomark, A.; Khushaba, R.N.; Scheme, E. Feature extraction and selection for myoelectric control based on wearable EMG sensors. Sensors 2018, 18, 1615.

| Function | Hand Postures |
|---|---|
| Holding objects | Cylindrical grasp [21,27,28] (for cylindrical objects); spherical grasp [21,28] (for spherical objects) |
| Holding small/thin/flat objects | Palmar pinch [21,28] (using thumb, index, and middle fingers for palm facing the objects); tip pinch [21,28] (using thumb and index fingers for palm facing the objects); lateral pinch [21,27] (using a thumb pad and the radial side of the index finger) |
| Releasing objects / Supporting loads | Spread [10,24,27,29,30] |
| Expression of emotion | Thumb-up (hook) [10,21,23,28,29,30]; V-sign [10,25,29,30]; O.K.-sign [10,22,29,30] |
| No activation | Rest |
| Pointing objects | Finger pointing [10,23,27] (using index finger, only); scissor-sign [23,26] (using thumb and index finger) |

| Posture Group | Hand Postures |
|---|---|
| Group 1 | Rest, cylindrical grasp, palmar pinch, hook (thumb-up), lateral pinch, spread |
| Group 2 | Group 1 + finger pointing |
| Group 3 | Group 1 + tip pinch |
| Group 4 | Group 1 + spherical grasp |
| Group 5 | Group 1 + scissor-sign |
| Group 6 | Rest, cylindrical grasp, palmar pinch, V-sign, finger pointing, thumb-up (hook), lateral pinch |
| Group 7 | All hand postures (12) |

N: window size; i: data sample; EMG_i: sEMG signal; w_i: white noise error term; p: function order.
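The symbols in the legend above (window size N, sample index i, signal EMG_i) are the ones that usually appear in time-domain sEMG feature definitions. As a rough illustration only, the following Python sketch computes a few of the features named in the results table (IEMG, MAV, RMS, WL, ZC, WAMP) over a single analysis window, using their common textbook definitions. The function names, the example window, and the threshold values are assumptions made for this sketch; the exact formulas and thresholds used in the study are not recoverable from the text here.

```python
import numpy as np


def iemg(window):
    """Integrated EMG: sum of absolute sample values in the window."""
    x = np.asarray(window, dtype=float)
    return float(np.sum(np.abs(x)))


def mav(window):
    """Mean absolute value: IEMG divided by the window size N."""
    x = np.asarray(window, dtype=float)
    return float(np.mean(np.abs(x)))


def rms(window):
    """Root mean square of the samples in the window."""
    x = np.asarray(window, dtype=float)
    return float(np.sqrt(np.mean(x ** 2)))


def waveform_length(window):
    """Waveform length: cumulative absolute difference of adjacent samples."""
    x = np.asarray(window, dtype=float)
    return float(np.sum(np.abs(np.diff(x))))


def zero_crossings(window, threshold=0.0):
    """Count sign changes whose amplitude step is at least `threshold`.

    The threshold (an assumed parameter here) suppresses crossings
    caused by low-level noise.
    """
    x = np.asarray(window, dtype=float)
    sign_change = x[:-1] * x[1:] < 0
    large_step = np.abs(x[:-1] - x[1:]) >= threshold
    return int(np.sum(sign_change & large_step))


def willison_amplitude(window, threshold):
    """Count adjacent-sample differences that reach `threshold`."""
    x = np.asarray(window, dtype=float)
    return int(np.sum(np.abs(np.diff(x)) >= threshold))


if __name__ == "__main__":
    # Hypothetical four-sample window, for illustration only.
    example = [1.0, -1.0, 2.0, -2.0]
    print(iemg(example), mav(example), rms(example))
    print(waveform_length(example), zero_crossings(example))
    print(willison_amplitude(example, threshold=2.5))
```

Since MAV is simply IEMG scaled by 1/N, the two carry the same information for a fixed window size, which is consistent with their nearly identical accuracies in the results table.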

Classification accuracy (%) for TRN1–TRN9 is reported as mean (standard deviation).

| Feature Vector | TRN1 | TRN2 | TRN3 | TRN4 | TRN5 | TRN6 | TRN7 | TRN8 | TRN9 | PCC (r) |
|---|---|---|---|---|---|---|---|---|---|---|
| IEMG | 84.6 (5.7) | 90.1 (3.1) | 92.3 (2.2) | 93.2 (2.0) | 93.8 (1.7) | 94.4 (1.6) | 94.9 (1.3) | 95.4 (1.4) | 95.4 (1.2) | 0.837 |
| MAV | 84.9 (5.8) | 90.2 (3.0) | 92.5 (1.9) | 93.2 (1.8) | 93.8 (1.7) | 94.3 (1.7) | 94.7 (1.5) | 95.5 (1.3) | 95.4 (1.4) | 0.837 |
| MAV1 | 85.2 (5.5) | 90.1 (3.0) | 92.2 (2.1) | 93.1 (1.8) | 93.5 (1.6) | 94.3 (1.5) | 94.5 (1.3) | 94.9 (1.5) | 95.4 (1.4) | 0.835 |
| MAV2 | 84.4 (5.3) | 89.1 (2.9) | 90.4 (2.9) | 91.3 (2.6) | 91.7 (2.7) | 92.2 (2.5) | 92.5 (2.5) | 93.0 (2.3) | 93.2 (2.3) | 0.808 |
| SSI | 79.6 (6.5) | 86.0 (3.8) | 89.3 (2.5) | 90.5 (1.8) | 91.9 (1.3) | 93.0 (1.3) | 93.3 (1.1) | 94.1 (1.3) | 94.4 (1.1) | 0.735 |
| VAR | 79.5 (6.7) | 86.0 (3.3) | 89.3 (2.1) | 90.6 (1.8) | 92.2 (1.4) | 93.2 (1.3) | 93.3 (1.2) | 94.0 (1.0) | 94.3 (1.2) | 0.735 |
| TM3 | 65.9 (6.1) | 73.9 (4.7) | 77.7 (4.3) | 79.9 (3.6) | 81.5 (3.8) | 82.4 (3.8) | 83.1 (3.7) | 83.4 (3.5) | 83.6 (4.2) | 0.392 |
| TM4 | 69.6 (7.7) | 77.8 (5.5) | 82.1 (4.1) | 84.1 (3.7) | 85.8 (3.5) | 86.6 (3.1) | 87.6 (3.1) | 88.2 (3.4) | 88.9 (3.1) | 0.428 |
| TM5 | 61.3 (6.6) | 68.7 (5.9) | 71.3 (6.2) | 71.9 (5.8) | 72.4 (6.0) | 72.5 (6.3) | 72.5 (6.1) | 72.1 (6.7) | 72.4 (6.9) | 0.248 |
| RMS | 84.9 (5.9) | 90.0 (3.3) | 92.4 (2.5) | 93.2 (1.8) | 93.7 (1.7) | 94.3 (1.7) | 94.7 (1.4) | 95.1 (1.3) | 95.5 (1.3) | 0.829 |
| LOG | 85.8 (5.3) | 90.1 (3.1) | 92.1 (2.6) | 93.0 (2.4) | 93.1 (2.4) | 93.8 (2.3) | 93.8 (2.4) | 94.2 (2.3) | 94.3 (2.2) | 0.859 |
| WL | 84.5 (5.9) | 90.3 (3.0) | 92.4 (2.1) | 93.1 (1.9) | 93.7 (1.7) | 93.9 (1.8) | 94.4 (1.6) | 94.8 (1.6) | 95.0 (2.1) | 0.832 |
| AAC | 84.6 (5.7) | 90.0 (3.3) | 92.4 (2.1) | 93.2 (1.8) | 93.6 (1.9) | 94.0 (1.8) | 94.5 (1.9) | 94.7 (1.8) | 95.1 (2.0) | 0.832 |
| DASDV | 84.5 (5.9) | 89.8 (3.7) | 92.0 (2.2) | 93.2 (1.9) | 93.6 (1.9) | 94.2 (1.9) | 94.3 (1.8) | 94.9 (2.1) | 95.0 (1.6) | 0.824 |
| MAVSLP | 39.2 (3.8) | 45.5 (4.2) | 47.9 (4.3) | 49.1 (4.2) | 49.8 (4.1) | 50.2 (4.2) | 50.5 (4.2) | 50.9 (4.1) | 51.3 (4.0) | 0.005 |
| ZC | 85.9 (6.7) | 90.0 (3.8) | 92.0 (3.1) | 92.9 (2.6) | 93.2 (2.6) | 93.6 (2.3) | 93.8 (2.3) | 94.2 (2.4) | 94.5 (2.1) | 0.858 |
| WAMP | 87.3 (5.8) | 91.3 (3.8) | 92.9 (2.9) | 93.6 (2.4) | 94.1 (2.3) | 94.3 (2.3) | 94.7 (2.0) | 94.7 (2.3) | 95.6 (1.8) | 0.873 |
| MYOP | 87.5 (5.0) | 91.5 (3.2) | 93.1 (2.7) | 93.9 (2.4) | 94.4 (2.1) | 94.5 (2.3) | 94.9 (2.4) | 95.2 (2.1) | 95.4 (2.2) | 0.876 |
| SSC | 86.0 (6.5) | 90.1 (3.9) | 91.8 (3.1) | 92.4 (2.8) | 93.1 (2.6) | 93.1 (2.5) | 93.6 (2.5) | 94.0 (2.6) | 93.9 (2.8) | 0.862 |
| AR | 45.8 (6.6) | 48.1 (7.8) | 48.3 (8.2) | 48.4 (8.0) | 49.4 (7.7) | 50.6 (7.4) | 52.2 (7.3) | 53.0 (6.6) | 53.6 (7.2) | 0.278 |
| CC | 45.8 (6.5) | 48.2 (7.6) | 48.1 (8.2) | 48.6 (8.4) | 49.7 (7.8) | 50.9 (7.7) | 52.1 (7.1) | 52.5 (7.3) | 53.7 (7.2) | 0.299 |
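The last column of the table reports a Pearson correlation coefficient (PCC, r) for each feature vector. The surviving text does not say exactly which quantities were correlated, so the sketch below only illustrates how r can be computed between two equally sized arrays, for example feature values of one feature recorded in two sessions. The function name `pearson_r` and the sample arrays are hypothetical and are not taken from the study.

```python
import numpy as np


def pearson_r(a, b):
    """Pearson correlation coefficient between two equally sized arrays.

    Returns NaN when either input has zero variance, because r is
    undefined in that case.
    """
    a = np.asarray(a, dtype=float).ravel()
    b = np.asarray(b, dtype=float).ravel()
    if a.shape != b.shape:
        raise ValueError("inputs must contain the same number of values")
    a_centered = a - a.mean()
    b_centered = b - b.mean()
    denom = np.sqrt(np.sum(a_centered ** 2) * np.sum(b_centered ** 2))
    if denom == 0.0:
        return float("nan")
    return float(np.sum(a_centered * b_centered) / denom)


if __name__ == "__main__":
    # Hypothetical feature values from two recording sessions.
    session_a = [1.0, 2.0, 3.0, 4.0]
    session_b = [1.0, 3.0, 2.0, 4.0]
    print(pearson_r(session_a, session_b))
```

Under this reading, a value of r near 1 would mean that a feature behaves almost identically across the two compared conditions, while a value near 0 (as for MAVSLP in the table) would mean almost no linear relationship.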

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kim, J.; Koo, B.; Nam, Y.; Kim, Y. sEMG-Based Hand Posture Recognition Considering Electrode Shift, Feature Vectors, and Posture Groups. Sensors 2021, 21, 7681. https://doi.org/10.3390/s21227681