Development and Experimental Validation of an Intelligent Camera Model for Automated Driving

Abstract

1. Introduction

1.1. Role of Cameras in ADAS/AD Functions

1.2. Virtual Testing of ADAS/AD Functions

1.3. Previous Work on Automotive Camera Modeling

1.4. Datasets for Automotive Camera Sensors

1.5. Scope of Work

1.6. Structure of the Article

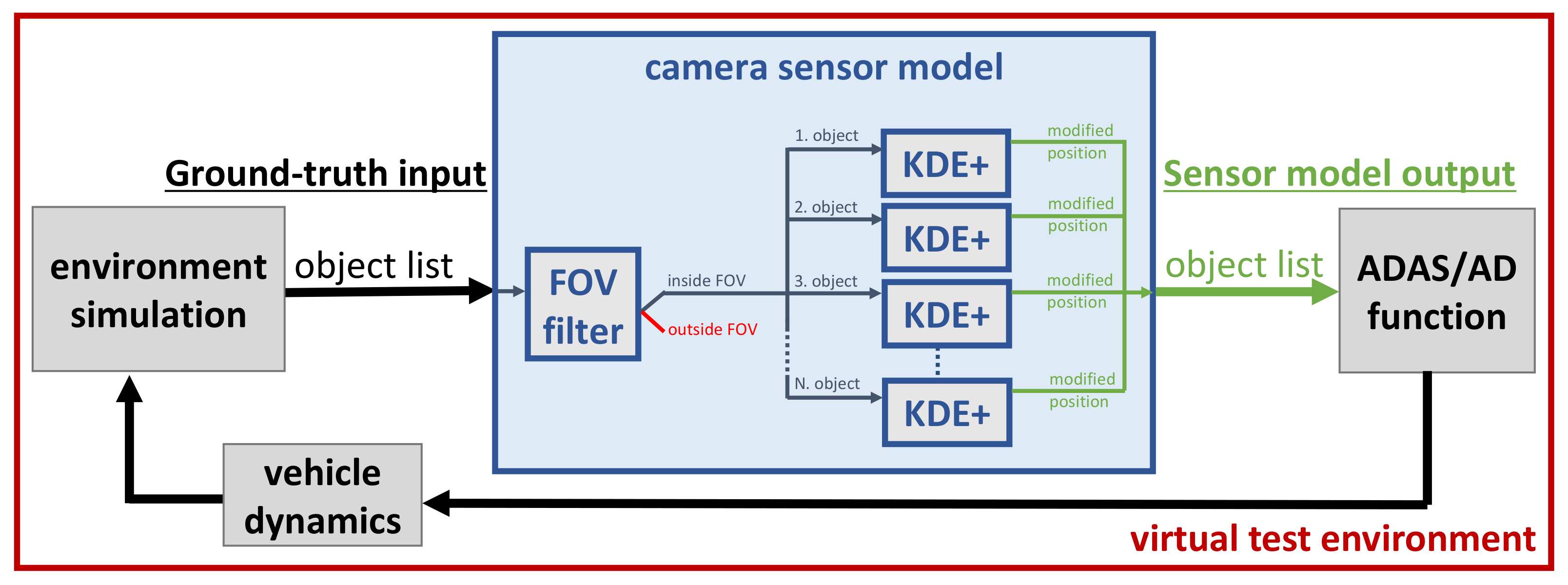

2. Object-List-Based Sensor Model

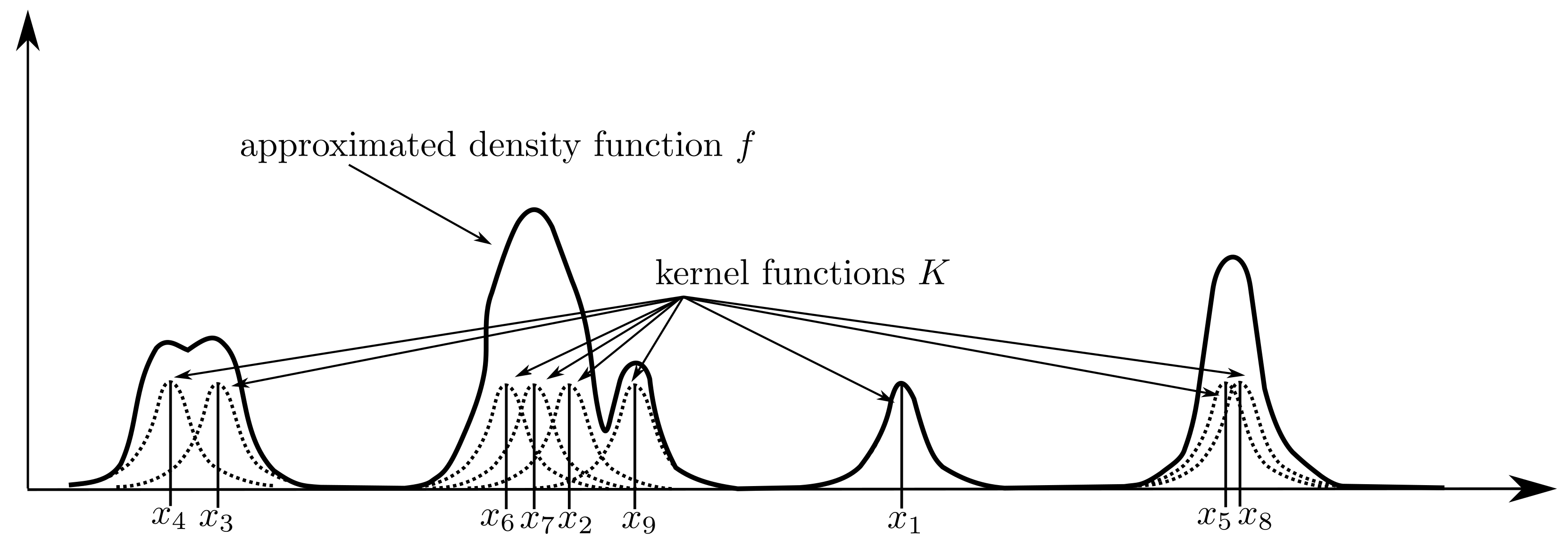

2.1. Kernel Density Estimation: A Short Introduction

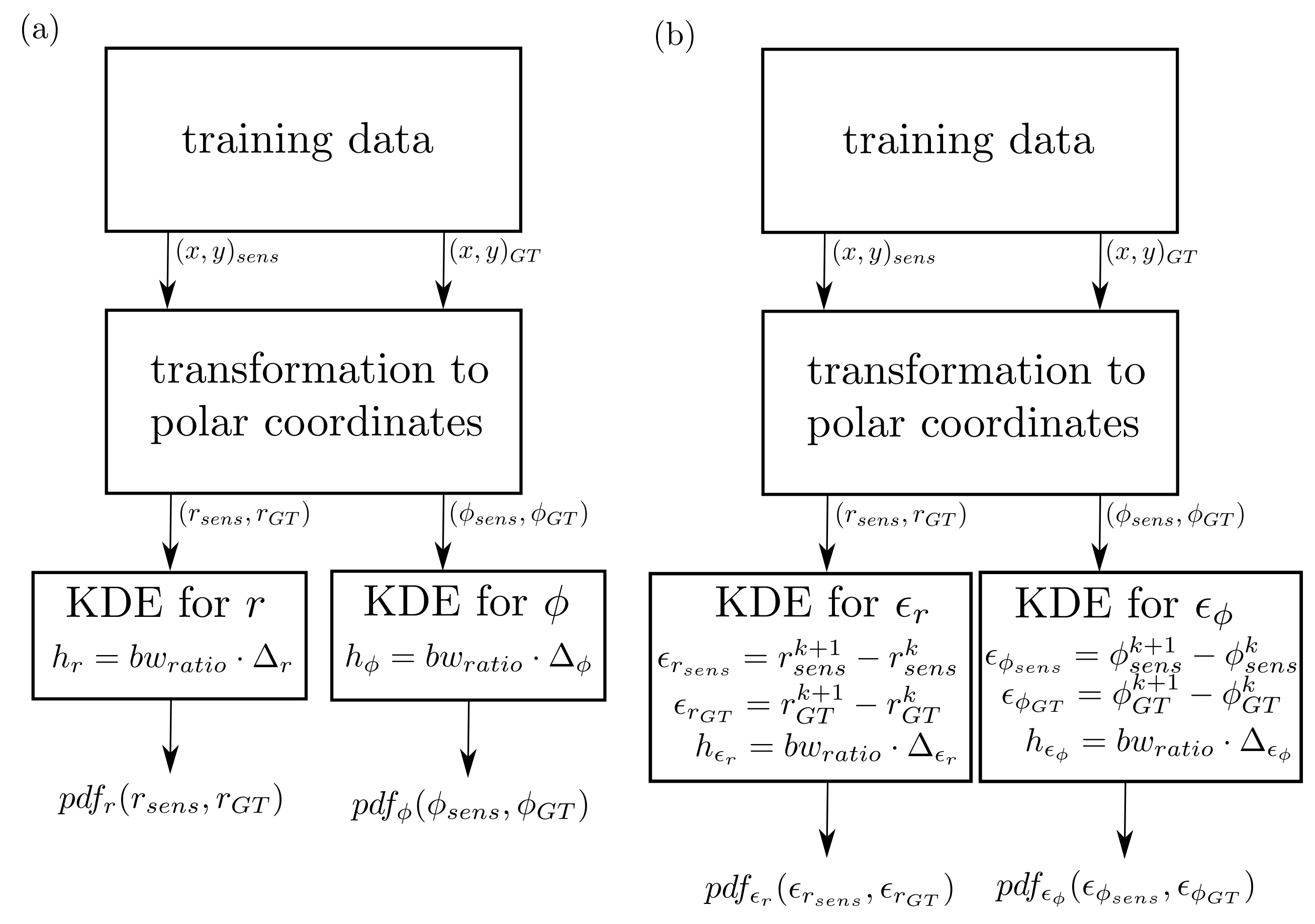

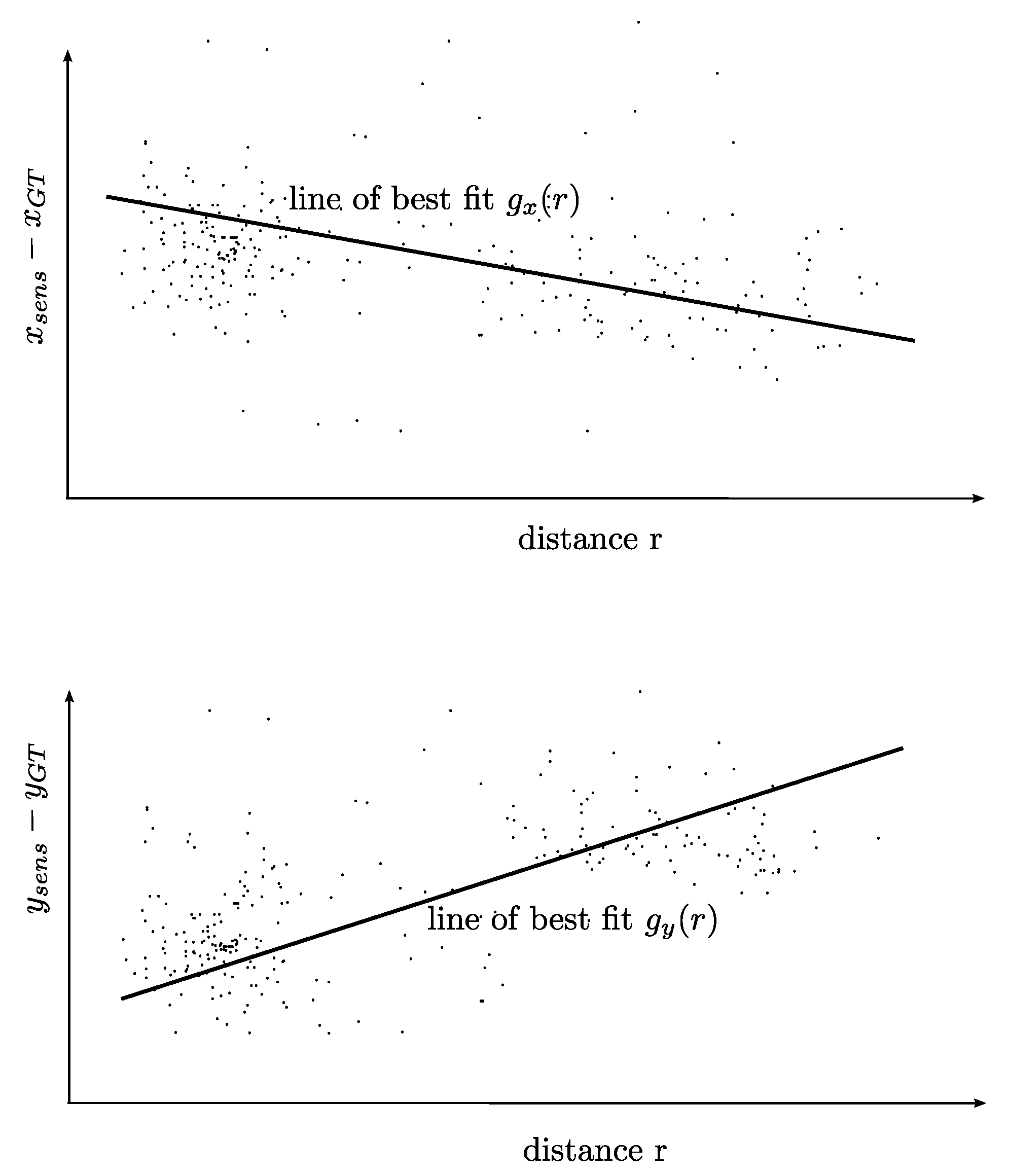
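As a minimal sketch of the technique named in this section's heading, the snippet below evaluates a one-dimensional kernel density estimate, f_hat(x) = 1/(n·h) · Σ K((x − x_i)/h). The Gaussian kernel, the fixed bandwidth, the function names, and the sample values are all assumptions made for illustration; none of them is taken from the article.

```python
import math
from typing import Sequence


def gaussian_kernel(u: float) -> float:
    """Standard normal density, used here as the smoothing kernel K (assumed)."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def kde(x: float, samples: Sequence[float], bandwidth: float) -> float:
    """Kernel density estimate at x: 1/(n*h) * sum of K((x - x_i) / h)."""
    if not samples:
        raise ValueError("samples must not be empty")
    if bandwidth <= 0:
        raise ValueError("bandwidth must be positive")
    n = len(samples)
    total = sum(gaussian_kernel((x - xi) / bandwidth) for xi in samples)
    return total / (n * bandwidth)


# Hypothetical one-dimensional measurement errors (illustrative values only).
errors = [-0.4, -0.1, 0.0, 0.2, 0.3]

# Estimated density of the error distribution at zero, with an assumed bandwidth.
density_at_zero = kde(0.0, errors, bandwidth=0.25)
```

A smaller bandwidth follows the individual samples more closely, while a larger one gives a smoother estimate; how the bandwidth is chosen is a separate question that this sketch does not address.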

2.2. Sensor Model Development

3. Validation Data: Measurement Campaign

3.1. Campaign Description

- Road section 1. Interchange area (red): The two carriageways follow different horizontal and vertical alignments as they leave the M85-M86 interchange. This section provides two 3.50 m-wide lanes for through traffic, plus additional acceleration/deceleration lanes linked to the junction ramps;

- Road section 2. Open highway (blue): A common, approximately 300 m-long dual-carriageway section with two 3.50 m-wide traffic lanes and a 3.00 m-wide hard shoulder on both sides.

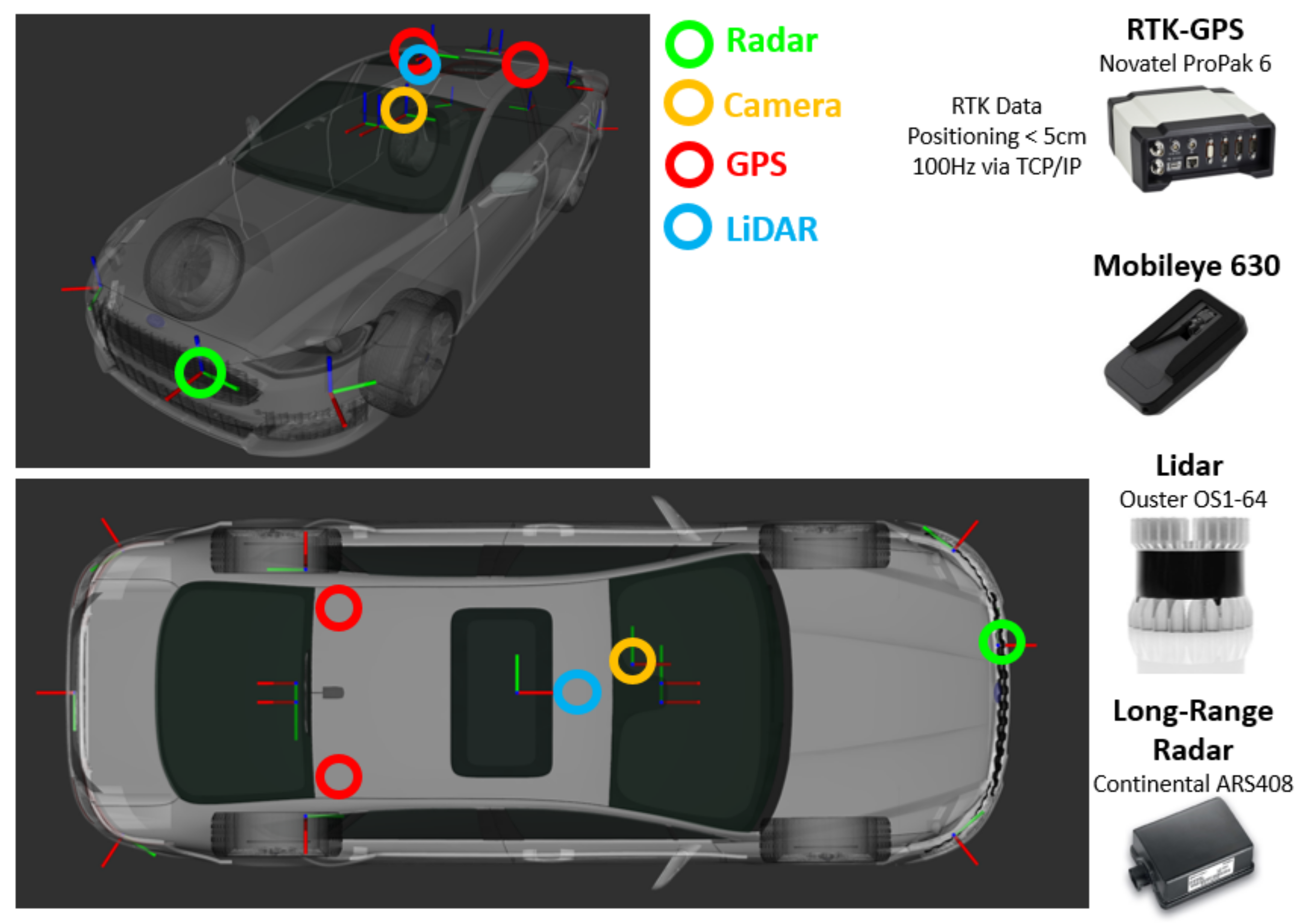

3.2. Test Setup and Measurement Hardware

3.3. Scenario Descriptions

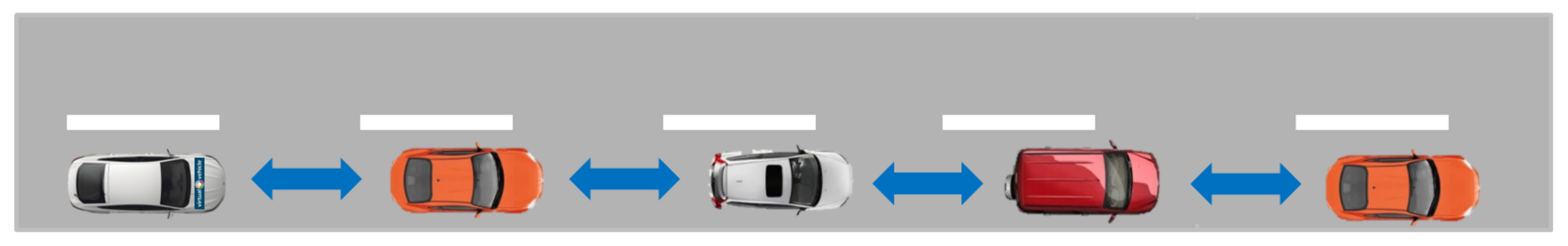

3.3.1. Sensor Scenario 1 (Cut-In)

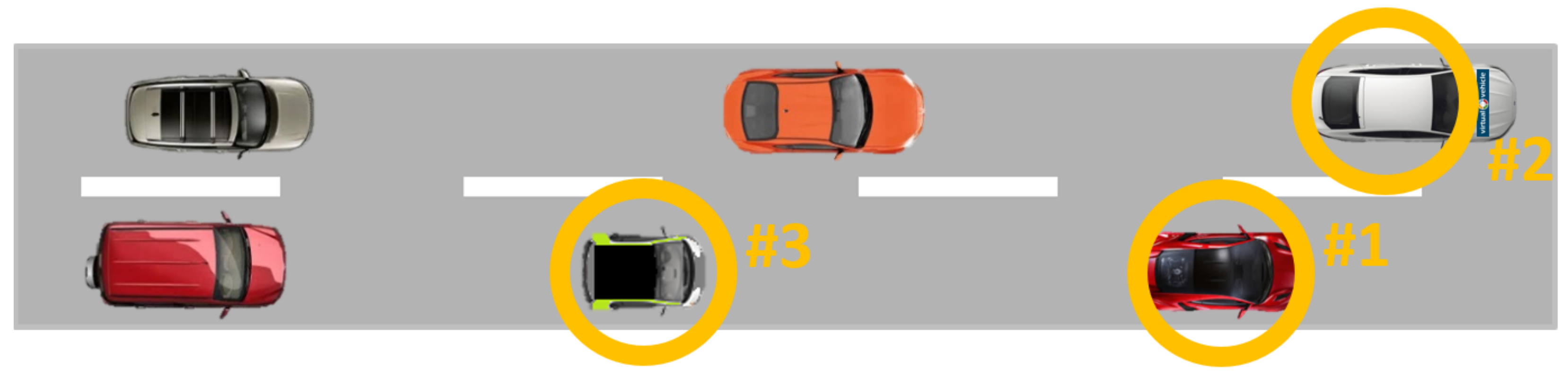

3.3.2. Sensor Scenario 2 (Occlusion)

3.3.3. Sensor Scenario 3 (Separability)

3.3.4. C-ITS Scenario 1 (Variable Speed Limits)

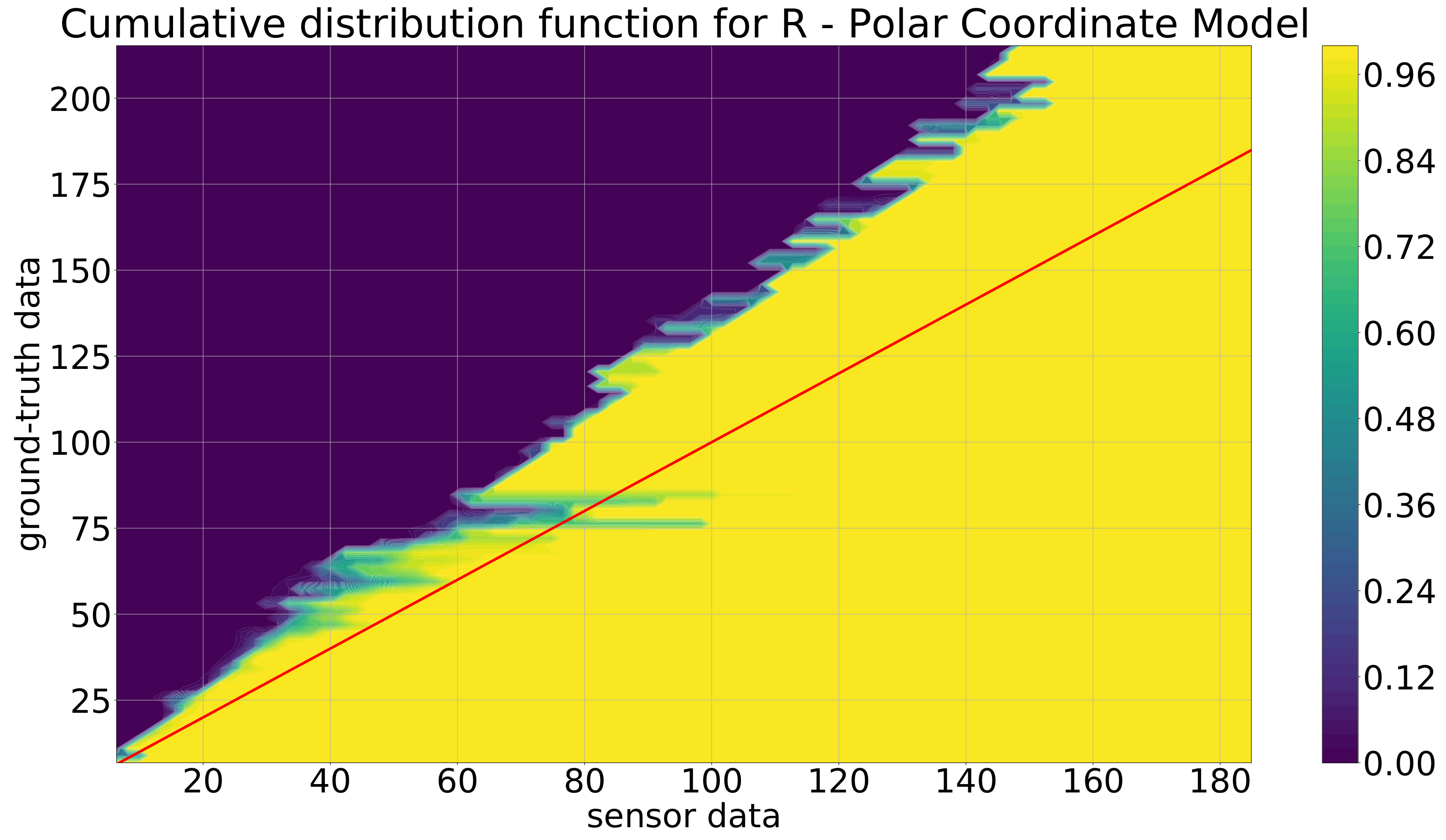

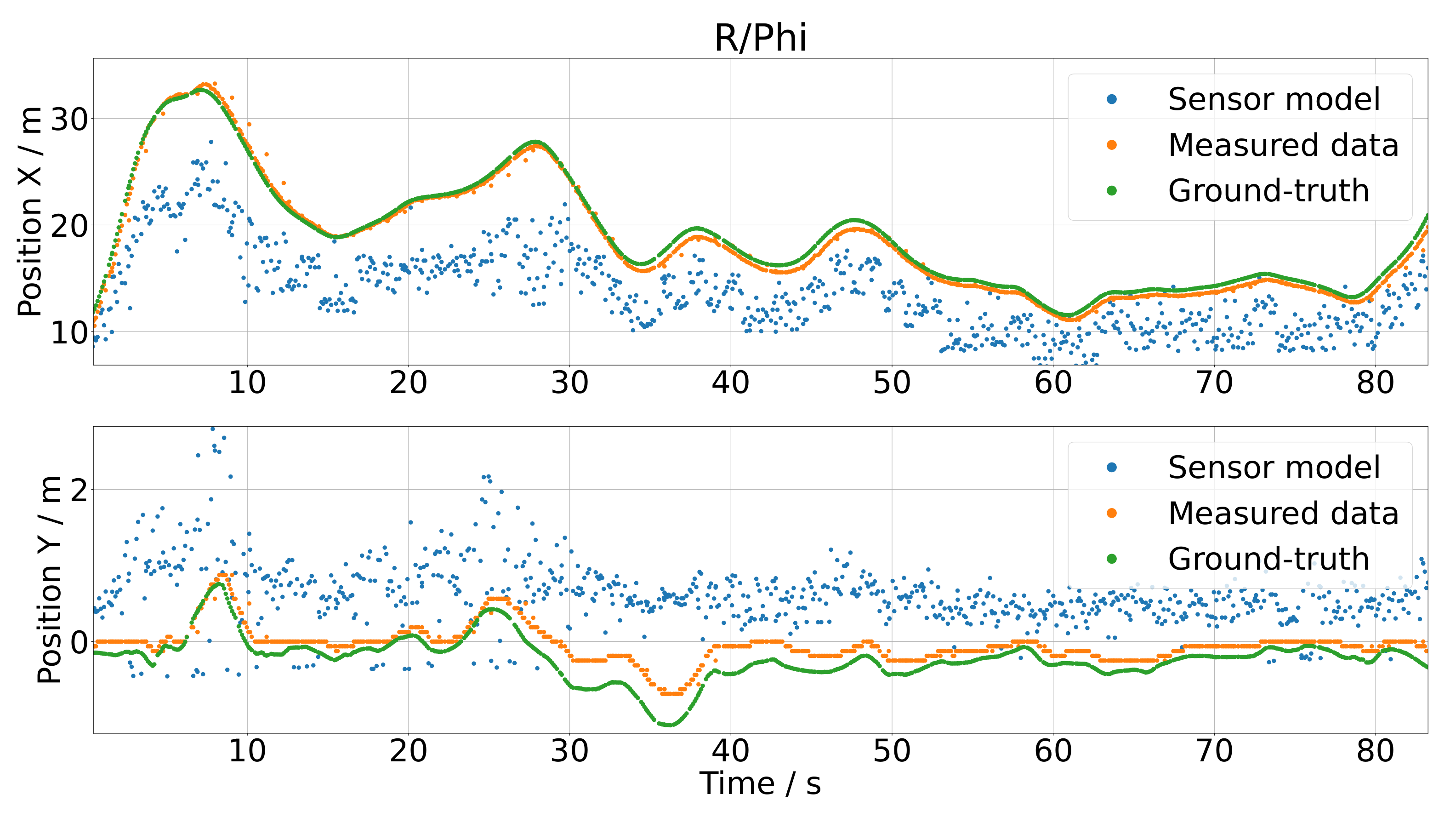

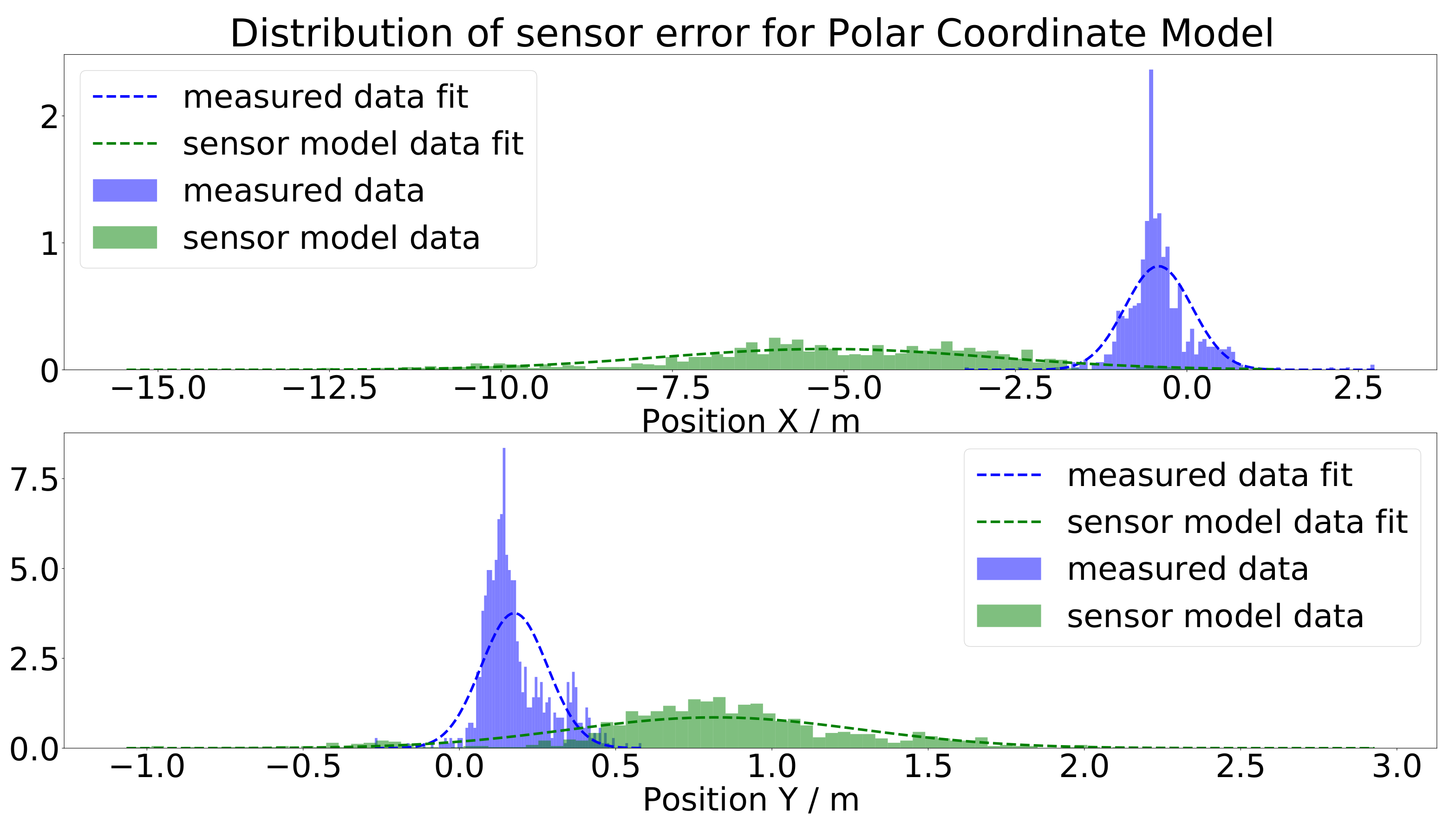

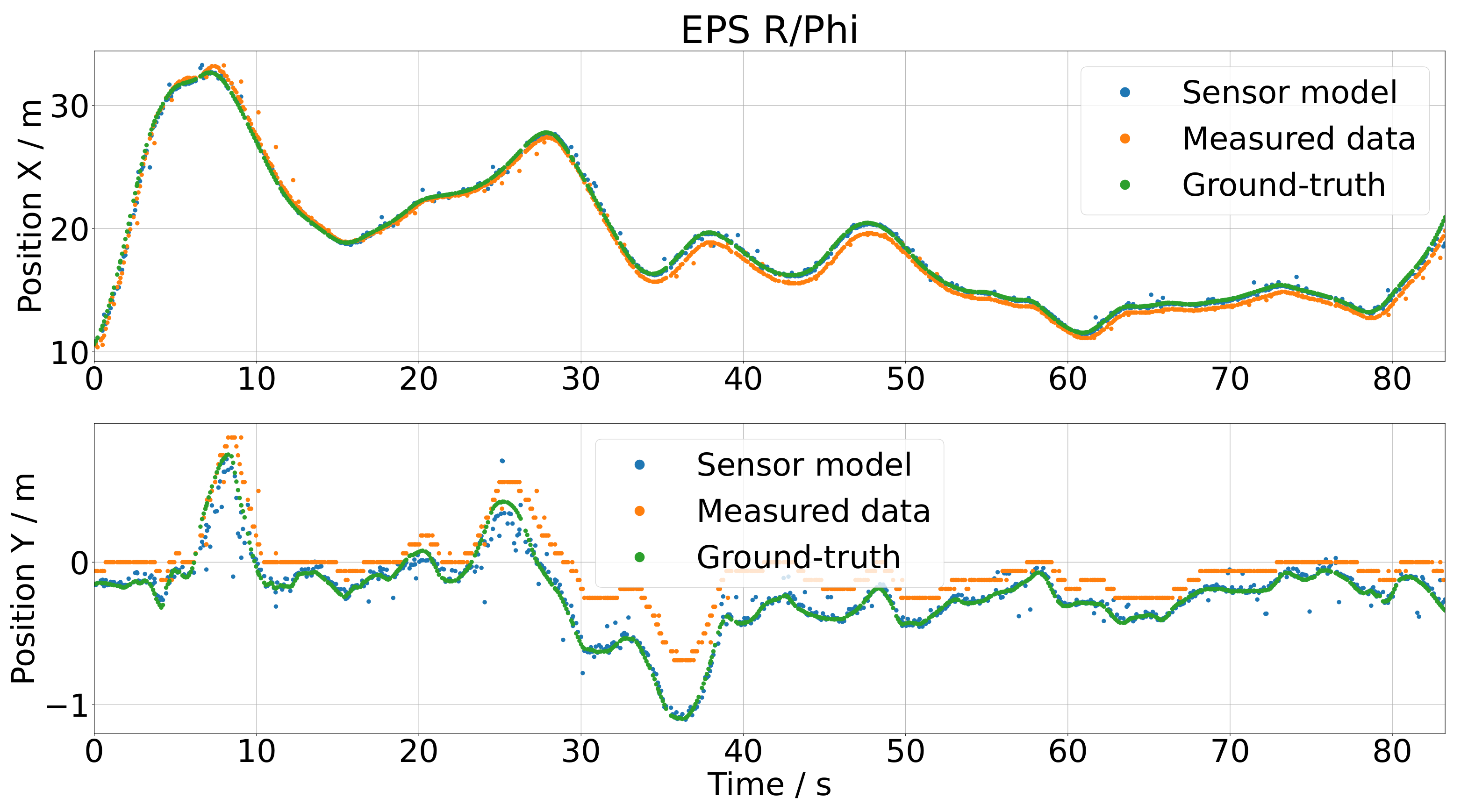

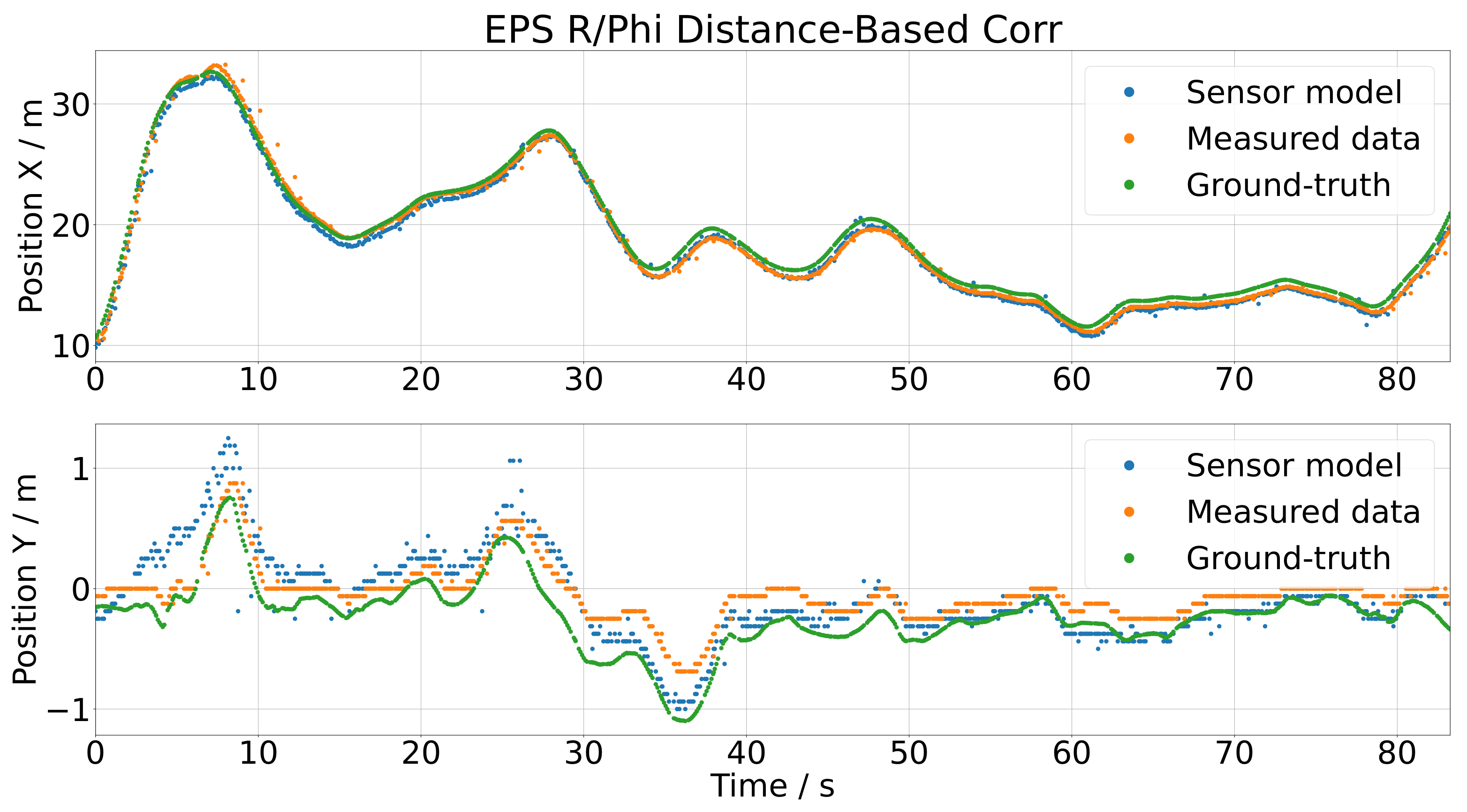

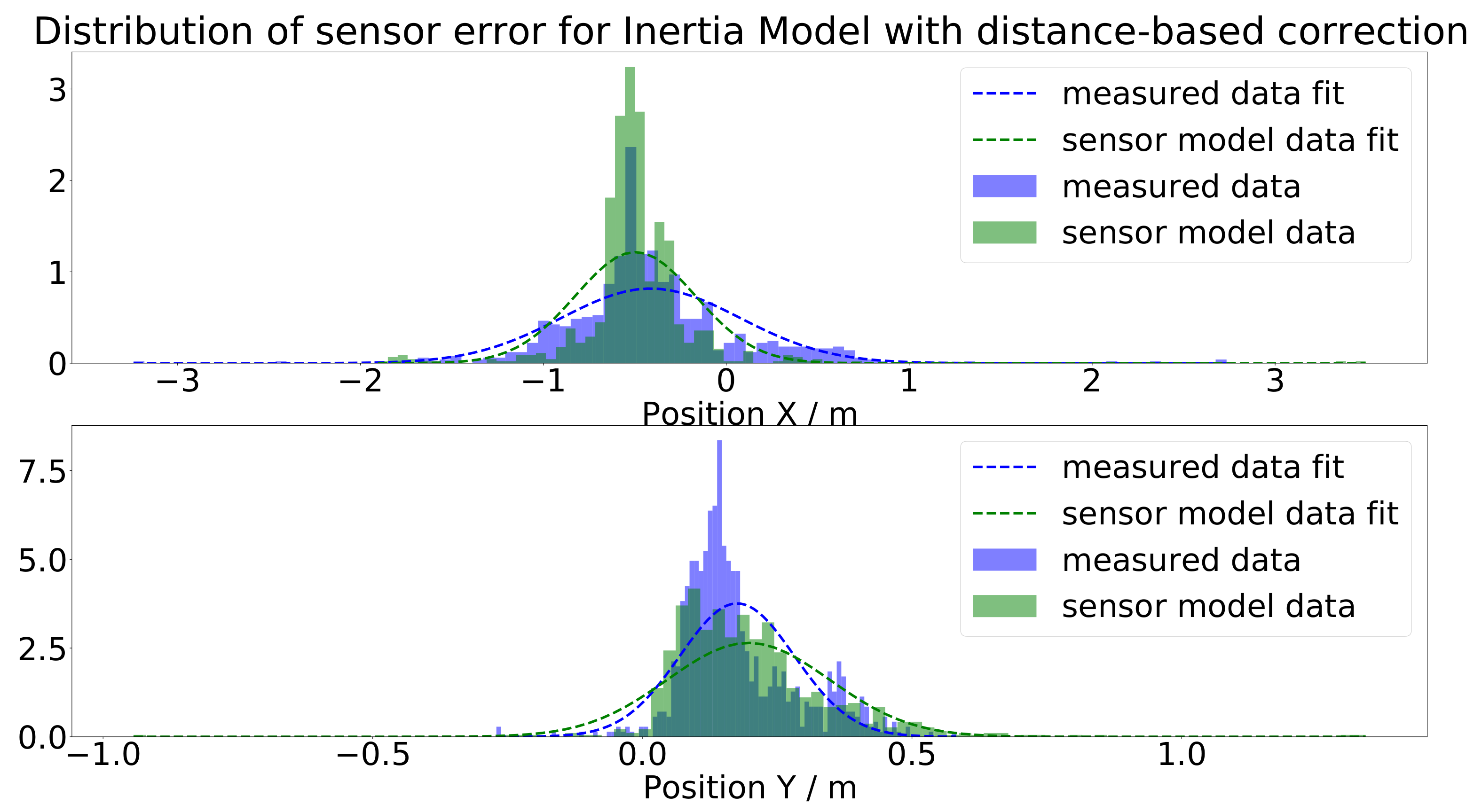

4. Sensor Model Evaluation

4.1. Sensor Models

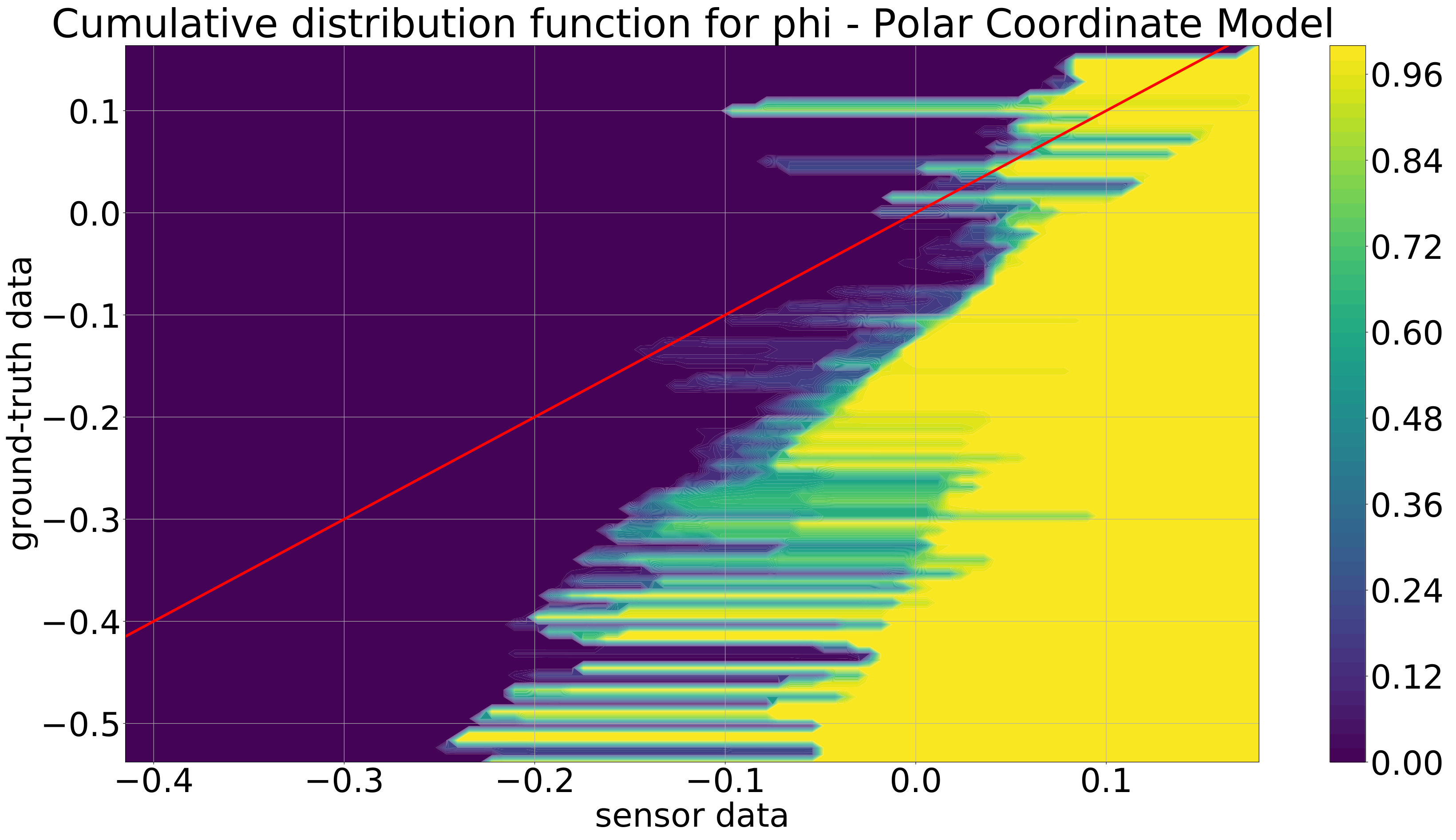

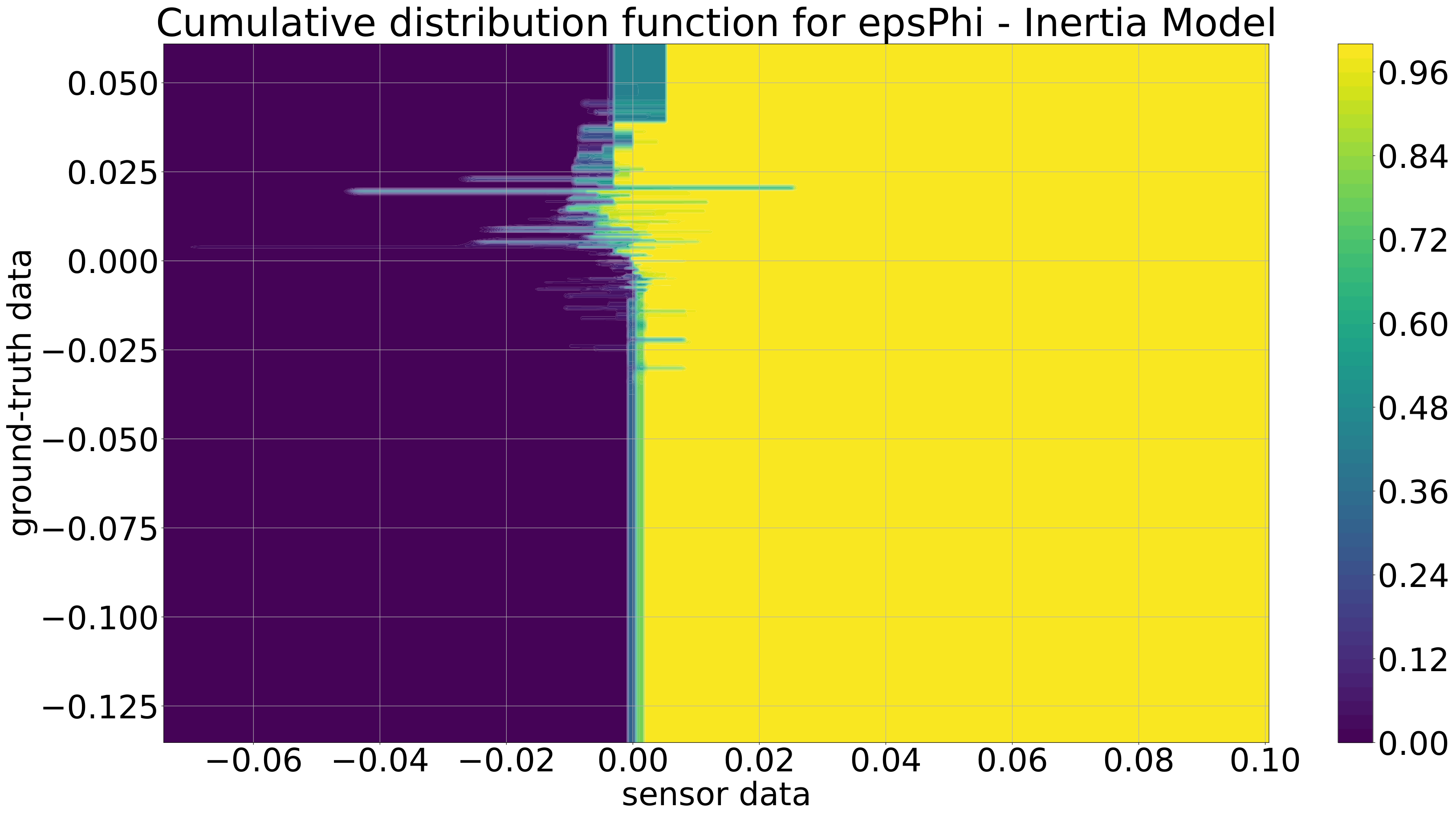

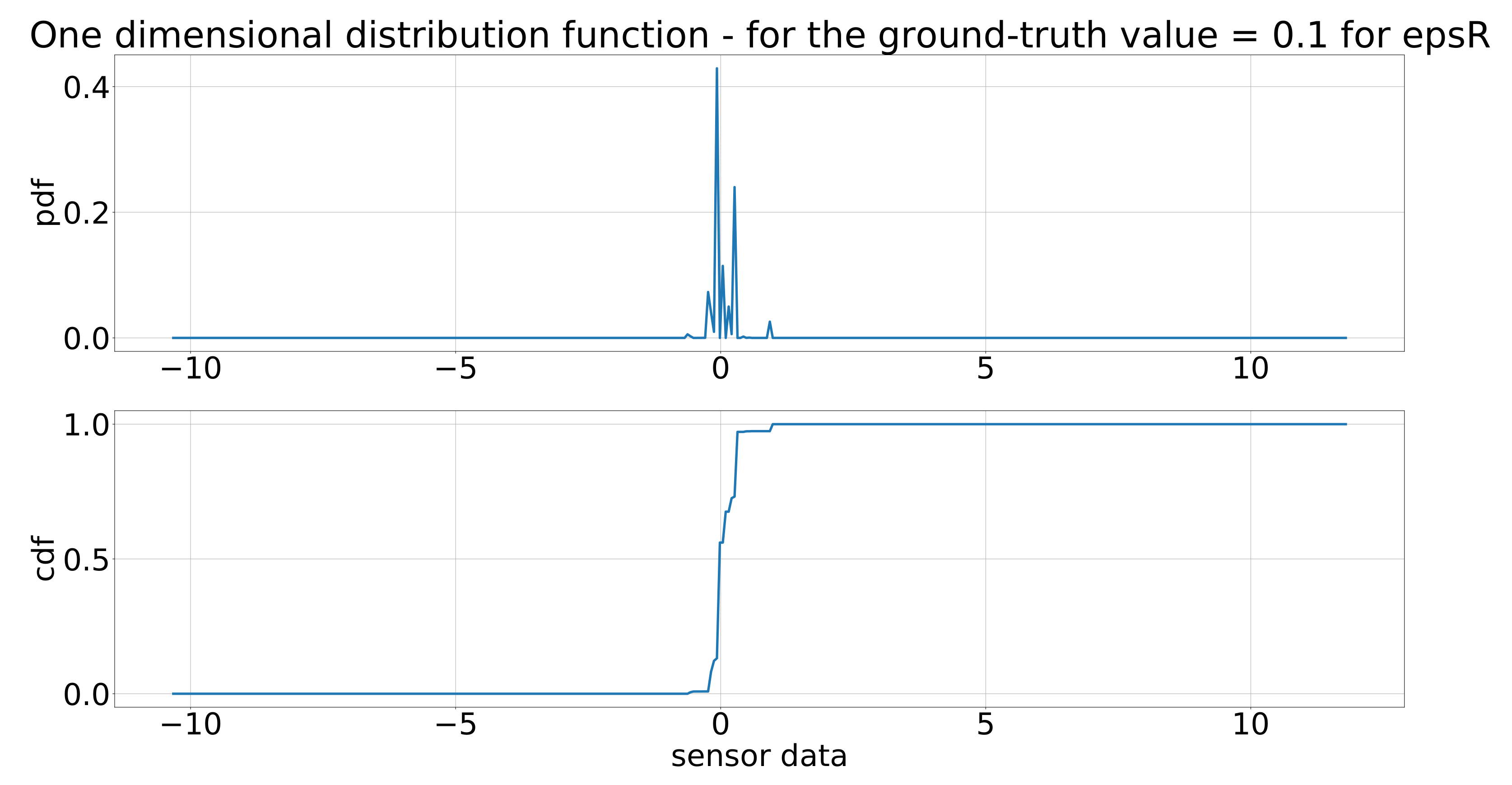

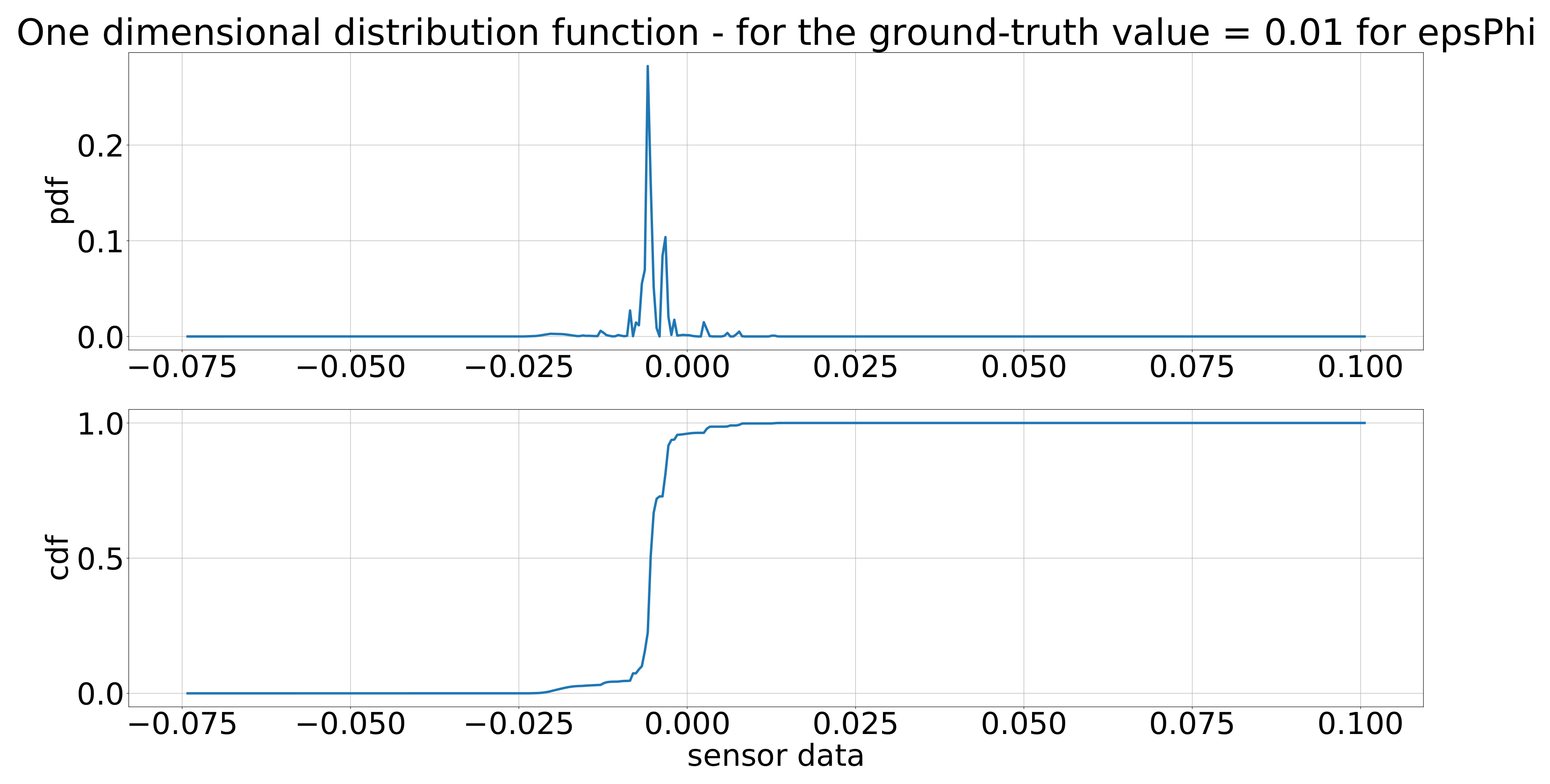

4.2. Results

5. Summary and Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Genser, S.; Muckenhuber, S.; Solmaz, S.; Reckenzaun, J. Development and Experimental Validation of an Intelligent Camera Model for Automated Driving. Sensors 2021, 21, 7583. https://doi.org/10.3390/s21227583