Applying State-of-the-Art Deep-Learning Methods to Classify Urban Cities of the Developing World

Abstract

1. Introduction

- We demonstrate the efficacy of the categorization method proposed in [2] in seven cities of the developing world.

- We show that three models built on different backbones can successfully perform socioeconomic segmentation.

- We present comparative results for the three models across all seven cities.

- We discuss the implications of the categorization method, together with deep-learning techniques, for policymaking in developing countries.

2. Research Background

2.1. Urban Environment

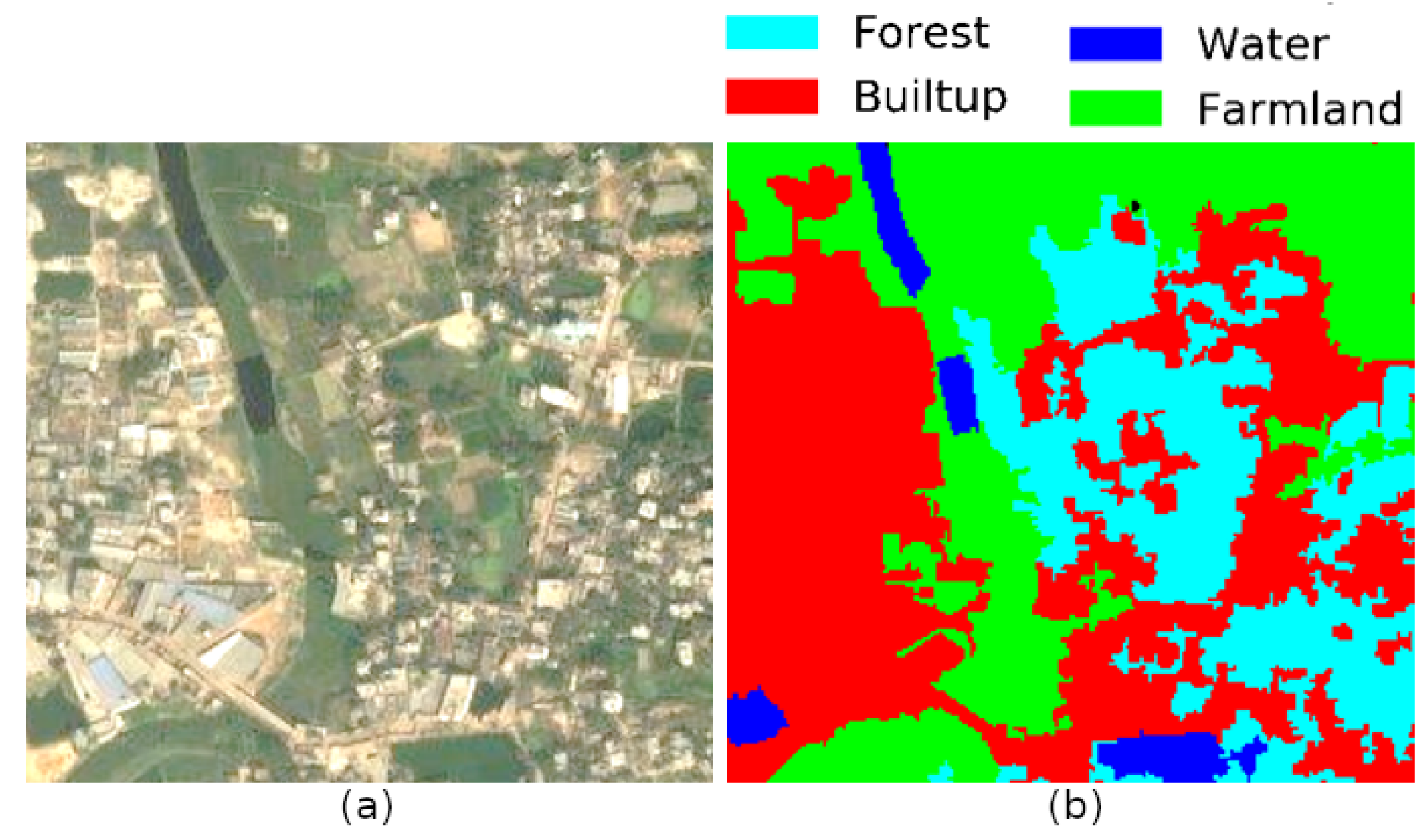

2.2. Traditional Machine Learning Techniques for Understanding Satellite Images

2.3. Semantic Segmentation for Urban Environment Development

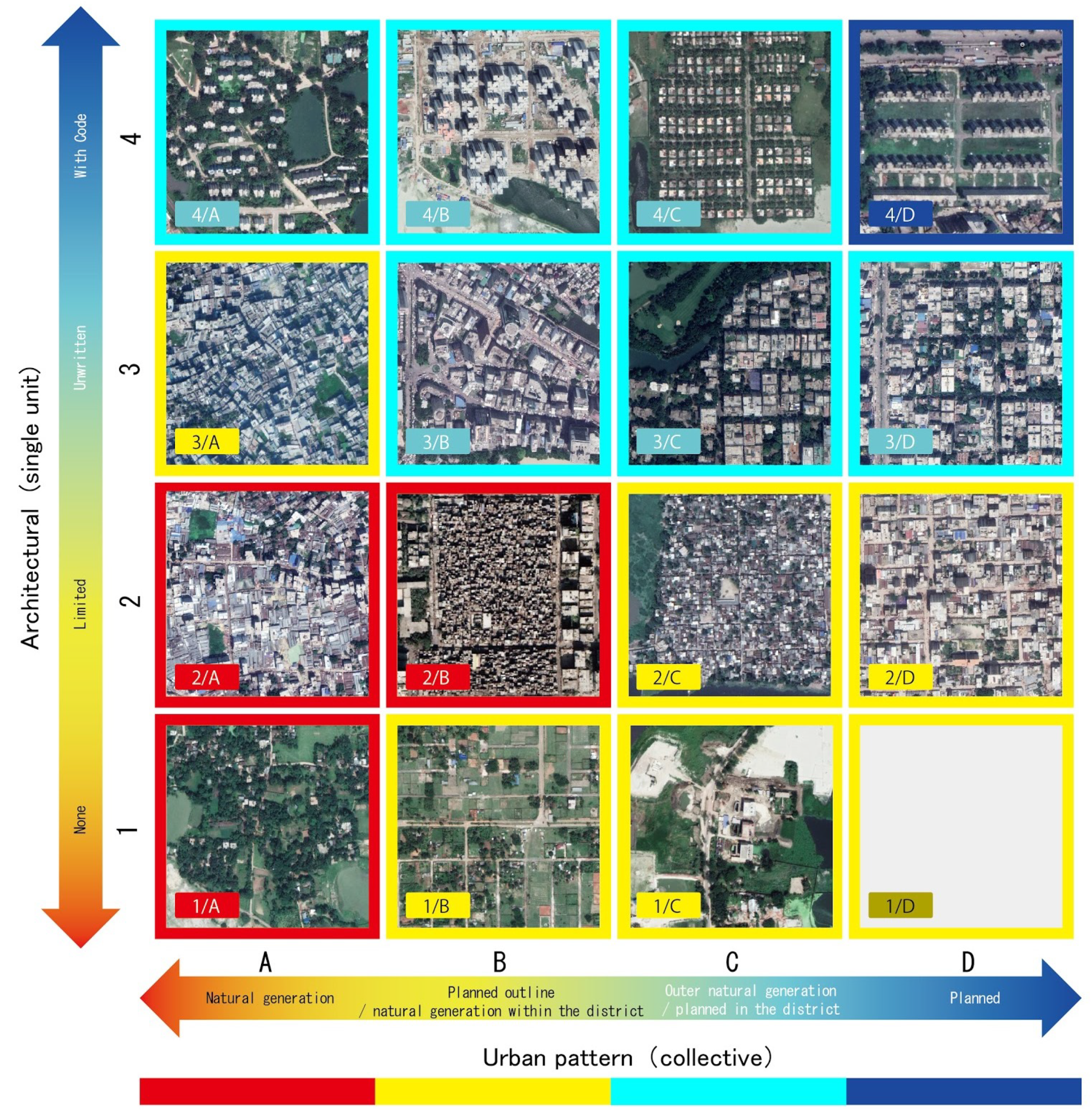

3. Urban Environment Categorization

3.1. Traditional Urban Environment Categorization

3.2. Summary of the Urban Environment Categorization Method

4. Study Area and Methodology

4.1. Geographic Details of Seven Cities

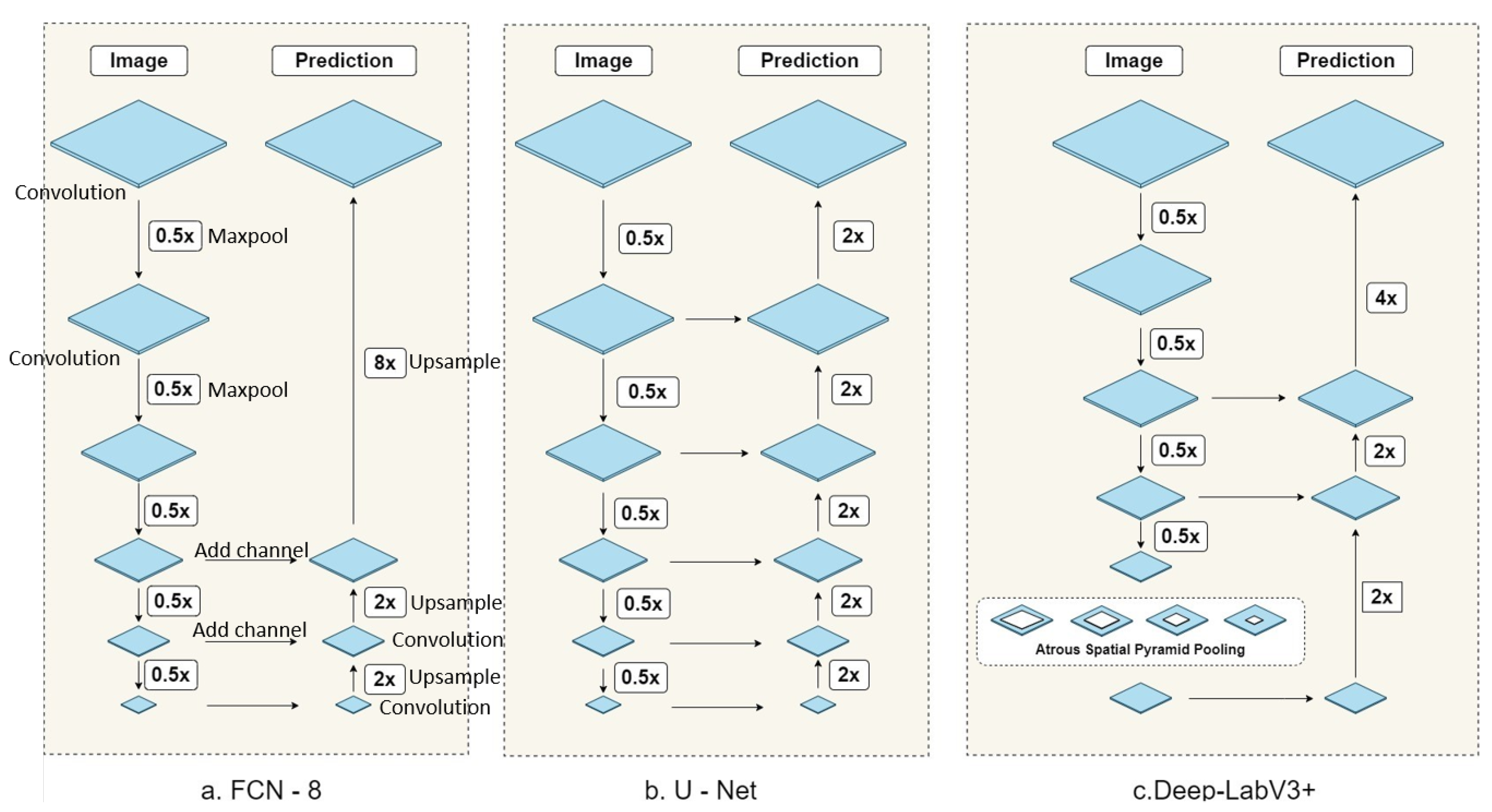

4.2. Deep Learning Algorithms for Automatic Building Categorization

4.2.1. Fully Convolutional Network (FCN-8)

4.2.2. U-Net

4.2.3. DeepLabv3+

5. Automatic Building Categorization

5.1. Data Collection, Annotation and Preprocessing

5.2. Producing Training Data with Overlap

5.3. Training Procedure

5.3.1. Training

5.3.2. Hyperparameters

5.4. Testing Procedure

5.5. Performance Evaluation

6. Experimental Results and Performance Comparison

7. Policy Implication and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

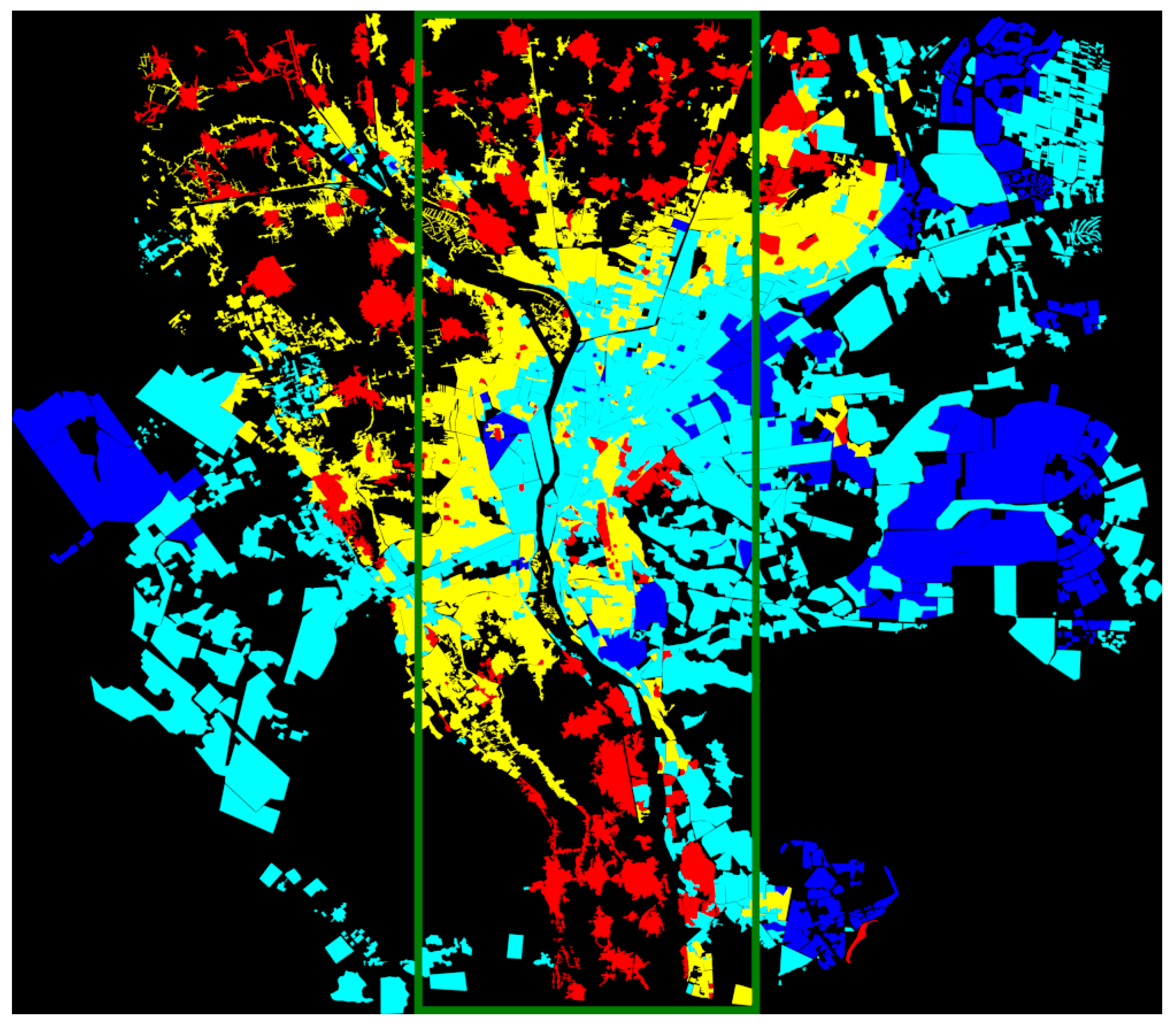

Appendix A

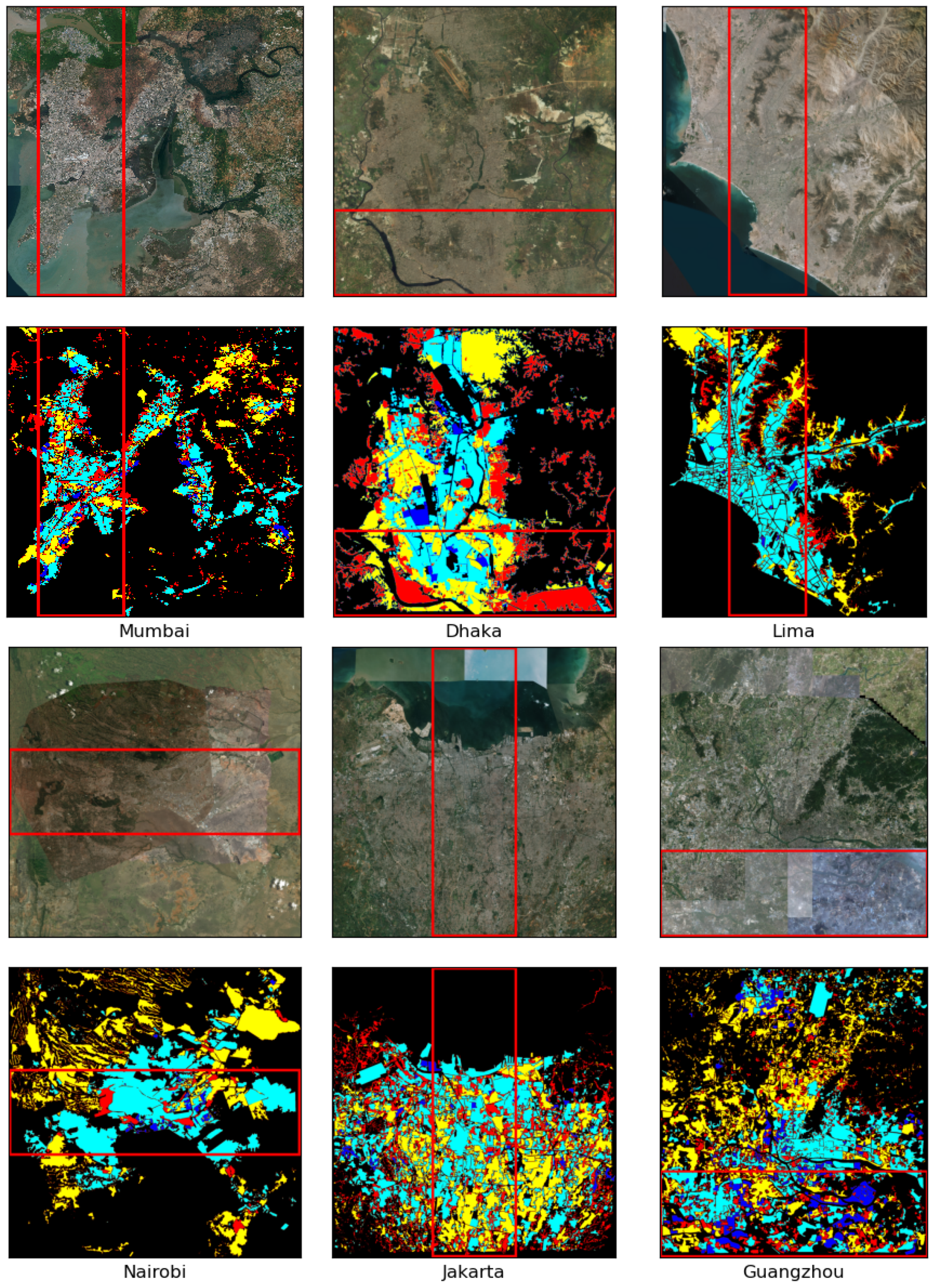

Appendix A.1. Dhaka

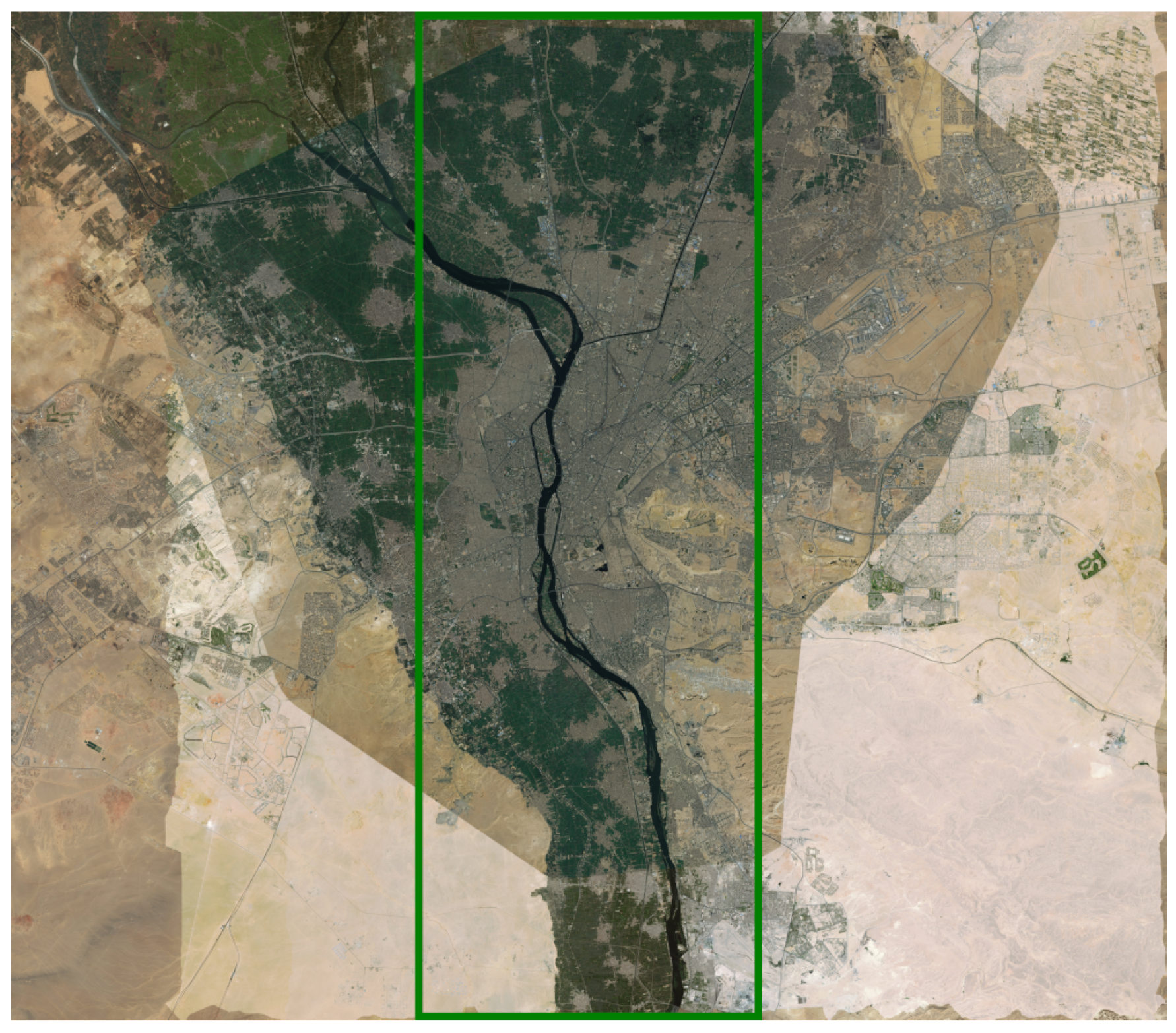

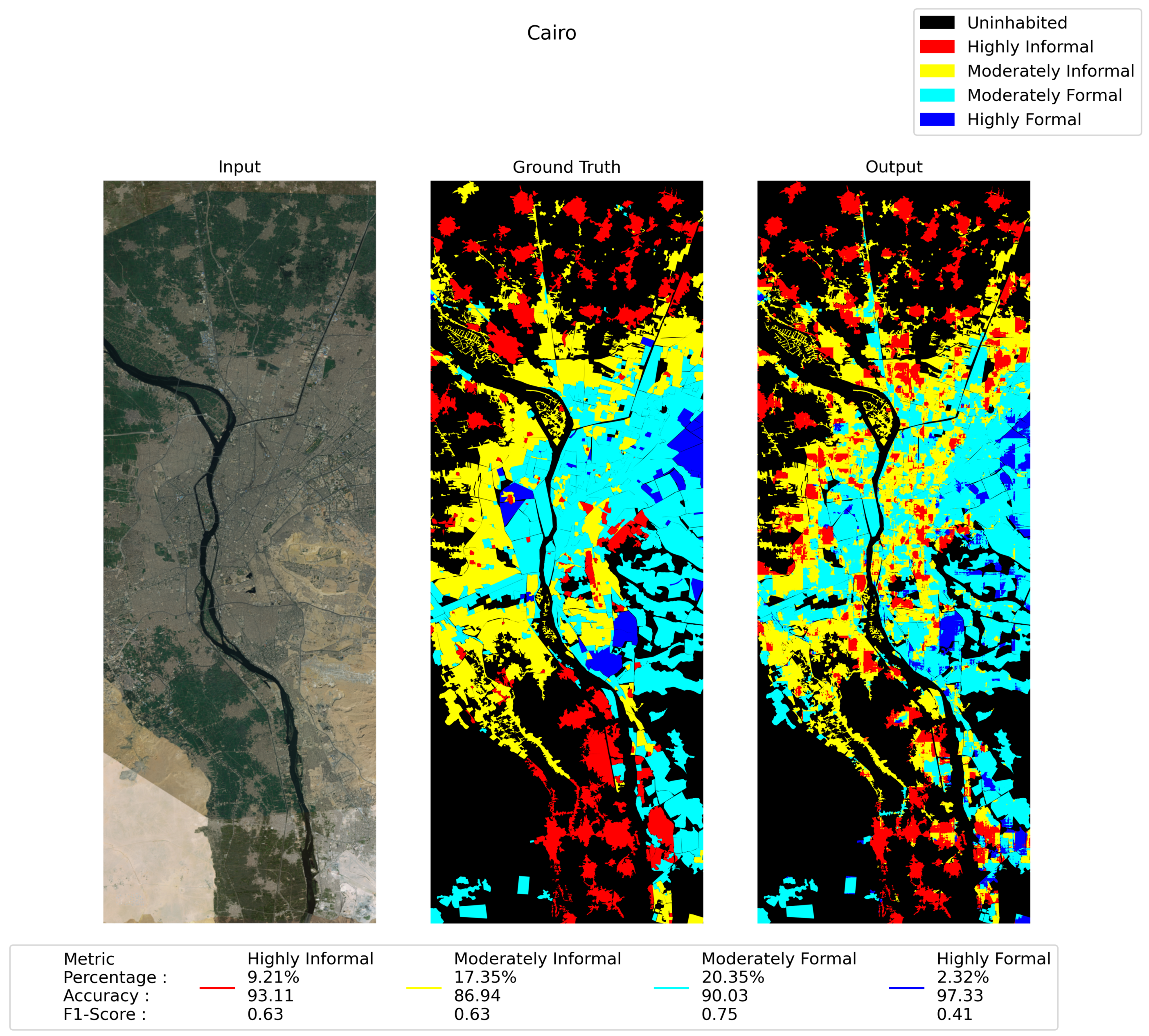

Appendix A.2. Cairo

Appendix A.3. Nairobi

Appendix A.4. Lima

Appendix A.5. Jakarta

Appendix A.6. Mumbai

Appendix A.7. Guangzhou

References

- UN DESA. 2018 Revision of World Urbanization Prospects; UN DESA: New York, NY, USA, 2018.

- Cheng, Q.; Rahman, A.; Sarker, A.; Nayem, A.B.S.; Paul, O.; Ali, A.A.; Amin, M.A.; Shibasaki, R.; Zaber, M. Deep-learning coupled with novel classification method to classify the urban environment of the developing world. arXiv 2020, arXiv:2011.12847.

- Frey, H. Designing the City: Towards a More Sustainable Urban Form; Taylor & Francis: Oxfordshire, UK, 2003.

- Haughton, G.; Hunter, C. Sustainable Cities; Routledge: Oxford, UK, 2004.

- Guo, Y.; Tang, Z.; Guo, J. Could a Smart City Ameliorate Urban Traffic Congestion? A Quasi-Natural Experiment Based on a Smart City Pilot Program in China. Sustainability 2020, 12, 2291.

- Hammami, F. The impact of optimizing delivery areas on urban traffic congestion. Res. Transp. Bus. Manag. 2020, 37, 100569.

- Robinson, C.; Hohman, F.; Dilkina, B. A deep learning approach for population estimation from satellite imagery. In Proceedings of the 1st ACM SIGSPATIAL Workshop on Geospatial Humanities, Redondo Beach, CA, USA, 7–10 November 2017; pp. 47–54.

- Tingzon, I.; Orden, A.; Go, K.; Sy, S.; Sekara, V.; Weber, I.; Fatehkia, M.; García-Herranz, M.; Kim, D. Mapping poverty in the Philippines using machine learning, satellite imagery, and crowd-sourced geospatial information. In Proceedings of the AI for Social Good ICML 2019 Workshop, Long Beach, CA, USA, 9–15 June 2019.

- Roy, P.K.; Hayakawa, S. Characteristics of Air Pollution Condition in Dhaka City Effect of Automobiles and Factories on Environment. J. Agric. Meteorol. 1997, 52, 529–532.

- Assembly, G. United Nations: Transforming Our World: The 2030 Agenda for Sustainable Development; UN: New York, NY, USA, 2015.

- Yang, J.; Zhang, J.; Wang, H. Urban traffic control in software defined internet of things via a multi-agent deep reinforcement learning approach. IEEE Trans. Intell. Transp. Syst. 2020, 22, 3742–3754.

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

- Taubenböck, H.; Standfuß, I.; Klotz, M.; Wurm, M. The physical density of the city—Deconstruction of the delusive density measure with evidence from two European megacities. ISPRS Int. J. Geo-Inf. 2016, 5, 206.

- Taubenböck, H.; Kraff, N.J.; Wurm, M. The morphology of the Arrival City—A global categorization based on literature surveys and remotely sensed data. Appl. Geogr. 2018, 92, 150–167.

- Dovey, K.; Rao, F.; Pafka, E. Agglomeration and assemblage: Deterritorialising urban theory. Urban Stud. 2018, 55, 263–273.

- Shashi, M.; Jain, K. Use of photogrammetry in 3D modeling and visualization of buildings. ARPN J. Eng. Appl. Sci. 2007, 2, 37–40.

- Owen, K.K.; Wong, D.W. An approach to differentiate informal settlements using spectral, texture, geomorphology and road accessibility metrics. Appl. Geogr. 2013, 38, 107–118.

- Glaeser, E.L.; Kahn, M.E. The greenness of cities: Carbon dioxide emissions and urban development. J. Urban Econ. 2010, 67, 404–418.

- Baud, I.; Kuffer, M.; Pfeffer, K.; Sliuzas, R.; Karuppannan, S. Understanding heterogeneity in metropolitan India: The added value of remote sensing data for analyzing sub-standard residential areas. Int. J. Appl. Earth Obs. Geoinf. 2010, 12, 359–374.

- Huang, J.; Lu, X.X.; Sellers, J.M. A global comparative analysis of urban form: Applying spatial metrics and remote sensing. Landsc. Urban Plan. 2007, 82, 184–197.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention (MICCAI); Springer: Berlin/Heidelberg, Germany, 2015; Volume 9351, pp. 234–241.

- Moreno, E.L. Concepts, Definitions and Data Sources for the Study of Urbanization: The 2030 Agenda for Sustainable Development. United Nations Expert Group Meeting on Sustainable Cities, Human Mobility and International Migration. Available online: https://www.un.org/en/development/desa/population/events/pdf/expert/27/papers/II/paper-Moreno-final.pdf (accessed on 25 October 2021).

- Rana, M.M.P. Urbanization and sustainability: Challenges and strategies for sustainable urban development in Bangladesh. Environ. Dev. Sustain. 2011, 13, 237–256.

- Kit, O.; Lüdeke, M.; Reckien, D. Defining the bull’s eye: Satellite imagery-assisted slum population assessment in Hyderabad, India. Urban Geogr. 2013, 34, 413–424.

- Habitat, U. Urbanization and Development: Emerging Futures; World City Report; UN Habitat: Nairobi, Kenya, 2016.

- Bruzzone, L.; Carlin, L. A multilevel context-based system for classification of very high spatial resolution images. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2587–2600.

- Cheng, G.; Han, J.; Guo, L.; Liu, Z.; Bu, S.; Ren, J. Effective and efficient midlevel visual elements-oriented land-use classification using VHR remote sensing images. IEEE Trans. Geosci. Remote Sens. 2015, 53, 4238–4249.

- Kumar, H.S.; Raja, K.; Venugopal, K.; Patnaik, L. Automatic image segmentation using wavelets. Int. J. Comput. Sci. Netw. Secur. 2009, 9, 305–313.

- Karoui, I.; Fablet, R.; Boucher, J.M.; Augustin, J.M. Variational region-based segmentation using multiple texture statistics. IEEE Trans. Image Process. 2010, 19, 3146–3156.

- Ball, J.E.; Anderson, D.T.; Chan Sr, C.S. Comprehensive survey of deep learning in remote sensing: Theories, tools, and challenges for the community. J. Appl. Remote Sens. 2017, 11, 042609.

- Nogueira, K.; Penatti, O.A.; Dos Santos, J.A. Towards better exploiting convolutional neural networks for remote sensing scene classification. Pattern Recognit. 2017, 61, 539–556.

- Andries, A.; Morse, S.; Lynch, J.; Woolliams, E.; Fonweban, J.; Murphy, R. Translation of Remote Sensing data into Sustainable Development Indicators. In Proceedings of the ISDRS24, Messina, Italy, 13–15 June 2018.

- You, J.; Li, X.; Low, M.; Lobell, D.; Ermon, S. Deep Gaussian process for crop yield prediction based on remote sensing data. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 31.

- Mahabir, R.; Croitoru, A.; Crooks, A.T.; Agouris, P.; Stefanidis, A. A critical review of high and very high-resolution remote sensing approaches for detecting and mapping slums: Trends, challenges and emerging opportunities. Urban Sci. 2018, 2, 8.

- Benza, M.; Weeks, J.R.; Stow, D.A.; Lopez-Carr, D.; Clarke, K.C. A pattern-based definition of urban context using remote sensing and GIS. Remote Sens. Environ. 2016, 183, 250–264.

- Maimaitijiang, M.; Ghulam, A.; Sandoval, J.O.; Maimaitiyiming, M. Drivers of land cover and land use changes in St. Louis metropolitan area over the past 40 years characterized by remote sensing and census population data. Int. J. Appl. Earth Obs. Geoinf. 2015, 35, 161–174.

- Ghosh, T.; Anderson, S.J.; Elvidge, C.D.; Sutton, P.C. Using nighttime satellite imagery as a proxy measure of human well-being. Sustainability 2013, 5, 4988–5019.

- Jean, N.; Burke, M.; Xie, M.; Davis, W.M.; Lobell, D.B.; Ermon, S. Combining satellite imagery and machine learning to predict poverty. Science 2016, 353, 790–794.

- Yue, W.; Gao, J.; Yang, X. Estimation of gross domestic product using multi-sensor remote sensing data: A case study in Zhejiang province, East China. Remote Sens. 2014, 6, 7260–7275.

- Oshri, B.; Hu, A.; Adelson, P.; Chen, X.; Dupas, P.; Weinstein, J.; Burke, M.; Lobell, D.; Ermon, S. Infrastructure quality assessment in Africa using satellite imagery and deep learning. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 July 2018; pp. 616–625.

- Tsoka, M.; Chunga, J.; Kayuni, H. Afrobarometer Round 6: The Quality of Democracy and Governance in Malawi, 2014; ICPSR: Ann Arbor, MI, USA, 2017.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 248–255.

- Glorot, X.; Bengio, Y. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics. JMLR Workshop and Conference Proceedings, Sardinia, Italy, 13–15 May 2010; pp. 249–256.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Copernicus Land Monitoring Service. Urban Atlas; European Environment Agency: Paris, France, 2020.

- Albert, A.; Kaur, J.; Gonzalez, M.C. Using convolutional networks and satellite imagery to identify patterns in urban environments at a large scale. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, 13–17 August 2017; pp. 1357–1366.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Basu, S.; Ganguly, S.; Mukhopadhyay, S.; DiBiano, R.; Karki, M.; Nemani, R. DeepSat: A learning framework for satellite imagery. In Proceedings of the 23rd SIGSPATIAL International Conference on Advances in Geographic Information Systems, Seattle, WA, USA, 3–6 November 2015; pp. 1–10.

- Piaggesi, S.; Gauvin, L.; Tizzoni, M.; Cattuto, C.; Adler, N.; Verhulst, S.; Young, A.; Price, R.; Ferres, L.; Panisson, A. Predicting City Poverty Using Satellite Imagery. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Long Beach, CA, USA, 16–20 June 2019.

- Barke, M. The importance of urban form as an object of study. In Teaching Urban Morphology; Springer: Berlin/Heidelberg, Germany, 2018; pp. 11–30.

- Kärrholm, M. The territorialisation of a pedestrian precinct in Malmö: Materialities in the commercialisation of public space. Urban Stud. 2008, 45, 1903–1924.

- Jabareen, Y.R. Sustainable urban forms: Their typologies, models, and concepts. J. Plan. Educ. Res. 2006, 26, 38–52.

- Jenks, M.; Dempsey, N. Future Forms and Design for Sustainable Cities; Routledge: London, UK, 2005.

- Seto, K.C.; Sánchez-Rodríguez, R.; Fragkias, M. The new geography of contemporary urbanization and the environment. Annu. Rev. Environ. Resour. 2010, 35, 167–194.

- Zhang, Q.; Seto, K.C. Mapping urbanization dynamics at regional and global scales using multi-temporal DMSP/OLS nighttime light data. Remote Sens. Environ. 2011, 115, 2320–2329.

- Song, Y.; Knaap, G.J. Measuring urban form: Is Portland winning the war on sprawl? J. Am. Plan. Assoc. 2004, 70, 210–225.

- Xie, M.; Jean, N.; Burke, M.; Lobell, D.; Ermon, S. Transfer learning from deep features for remote sensing and poverty mapping. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30.

- Kamalipour, H.; Dovey, K. Mapping the visibility of informal settlements. Habitat Int. 2019, 85, 63–75.

- Iannelli, G.C.; Lisini, G.; Dell’Acqua, F.; Feitosa, R.Q.; Costa, G.A.O.P.d.; Gamba, P. Urban area extent extraction in spaceborne HR and VHR data using multi-resolution features. Sensors 2014, 14, 18337–18352.

- Ahmed, I.; Ahmad, M.; Khan, F.A.; Asif, M. Comparison of deep-learning-based segmentation models: Using top view person images. IEEE Access 2020, 8, 136361–136373.

| Urban Environment Topology | Category | Color |
|---|---|---|
| 1/A, 2/A, 2/B | Highly informal | Red |
| 1/B, 1/C, 1/D, 2/C, 2/D, 3/A | Moderately informal | Yellow |
| 3/B, 3/C, 3/D, 4/A, 4/B, 4/C | Moderately formal | Cyan |
| 4/D | Highly formal | Blue |
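Read together, the four rows assign each of the sixteen typology codes (the numbers 1 to 4 combined with the letters A to D) to exactly one category. The short Python sketch below encodes the table as a lookup and checks that partition property; the names `TABLE`, `CATEGORY_BY_CODE`, and `category_of` are illustrative assumptions, not taken from the authors' code.

```python
from itertools import product

# Rows of the categorization table: (color, category, typology codes).
TABLE = [
    ("Red", "Highly informal", ["1/A", "2/A", "2/B"]),
    ("Yellow", "Moderately informal", ["1/B", "1/C", "1/D", "2/C", "2/D", "3/A"]),
    ("Cyan", "Moderately formal", ["3/B", "3/C", "3/D", "4/A", "4/B", "4/C"]),
    ("Blue", "Highly formal", ["4/D"]),
]

# Flatten into a code -> (color, category) lookup.
CATEGORY_BY_CODE = {
    code: (color, category) for color, category, codes in TABLE for code in codes
}


def category_of(code: str) -> str:
    """Return the socioeconomic category for a typology code such as '2/C'."""
    return CATEGORY_BY_CODE[code][1]


# Sanity checks: no code is listed twice, and every 1-4 / A-D combination is covered.
ALL_CODES = {f"{n}/{letter}" for n, letter in product("1234", "ABCD")}
assert sum(len(codes) for _, _, codes in TABLE) == len(CATEGORY_BY_CODE) == 16
assert set(CATEGORY_BY_CODE) == ALL_CODES

print(category_of("2/C"))  # Moderately informal
```

Keeping the rows in a single list and deriving the lookup from it means the partition checks fail loudly if a code is ever mistyped or assigned to two categories.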

| City | Socioeconomic Semantic Region | Area (%) | DeepLabv3+ Accuracy (%) | DeepLabv3+ F1-Score | U-Net Accuracy (%) | U-Net F1-Score | FCN-8 Accuracy (%) | FCN-8 F1-Score |
|---|---|---|---|---|---|---|---|---|
| Dhaka | Highly Informal | 20.86 | 86.47 | 0.66 | 85.72 | 0.65 | 80.52 | 0.59 |
| | Moderately Informal | 17.95 | 83.24 | 0.56 | 81.74 | 0.55 | 79.11 | 0.34 |
| | Moderately Formal | 12.28 | 94.33 | 0.77 | 93.95 | 0.78 | 89.01 | 0.59 |
| | Highly Formal | 0.95 | 99.10 | 0.18 | 98.98 | 0.05 | 98.20 | 0.11 |
| Mumbai | Highly Informal | 7.13 | 98.10 | 0.86 | 97.27 | 0.77 | 95.12 | 0.67 |
| | Moderately Informal | 8.29 | 91.16 | 0.45 | 88.94 | 0.36 | 89.53 | 0.26 |
| | Moderately Formal | 16.56 | 90.25 | 0.71 | 89.34 | 0.69 | 88.09 | 0.66 |
| | Highly Formal | 2.08 | 98.0 | 0.10 | 97.75 | 0.02 | 97.74 | 0.01 |
| Lima | Highly Informal | 4.81 | 98.05 | 0.81 | 97.32 | 0.79 | 96.43 | 0.56 |
| | Moderately Informal | 8.51 | 95.14 | 0.73 | 89.42 | 0.54 | 89.54 | 0.49 |
| | Moderately Formal | 33.20 | 95.03 | 0.93 | 90.48 | 0.86 | 89.32 | 0.85 |
| | Highly Formal | 0.72 | 99.01 | 0.17 | 99.18 | 0.01 | 99.20 | 0.00 |
| Jakarta | Highly Informal | 9.78 | 95.20 | 0.73 | 92.31 | 0.50 | 86.52 | 0.38 |
| | Moderately Informal | 19.25 | 91.02 | 0.79 | 84.81 | 0.63 | 78.69 | 0.43 |
| | Moderately Formal | 28.13 | 94.01 | 0.89 | 89.04 | 0.81 | 82.59 | 0.69 |
| | Highly Formal | 0.64 | 99.05 | 0.22 | 99.21 | 0.03 | 99.04 | 0.01 |
| Cairo | Highly Informal | 9.21 | 93.11 | 0.63 | 90.89 | 0.42 | 89.08 | 0.40 |
| | Moderately Informal | 17.35 | 86.94 | 0.63 | 84.07 | 0.58 | 83.58 | 0.54 |
| | Moderately Formal | 20.35 | 90.03 | 0.75 | 88.79 | 0.73 | 87.93 | 0.70 |
| | Highly Formal | 2.32 | 97.33 | 0.41 | 96.74 | 0.24 | 96.32 | 0.33 |
| Guangzhou | Highly Informal | 11.88 | 90.31 | 0.32 | 90.70 | 0.40 | 88.80 | 0.13 |
| | Moderately Informal | 13.19 | 71.11 | 0.36 | 73.03 | 0.36 | 76.30 | 0.28 |
| | Moderately Formal | 21.08 | 77.05 | 0.43 | 74.20 | 0.42 | 70.29 | 0.47 |
| | Highly Formal | 10.82 | 90.18 | 0.25 | 89.40 | 0.06 | 89.05 | 0.05 |
| Nairobi | Highly Informal | 3.6 | 94.04 | 0.41 | 96.34 | 0.16 | 96.11 | 0.16 |
| | Moderately Informal | 13.28 | 87.12 | 0.58 | 85.62 | 0.59 | 81.14 | 0.46 |
| | Moderately Formal | 32.55 | 87.08 | 0.77 | 86.41 | 0.77 | 81.50 | 0.69 |
| | Highly Formal | 1.91 | 98.00 | 0.19 | 98.14 | 0.00 | 98.12 | 0.00 |
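Accuracy and F1-score tell different stories for the Highly Formal class: in six of the seven cities its accuracy exceeds 96% for every model, yet its F1-score never rises above 0.41, and the class covers at most 2.32% of the area in those cities. A plausible explanation is class imbalance, assuming accuracy is computed per class in a one-vs-rest, pixel-wise fashion (the evaluation protocol is not spelled out in this section). The minimal sketch below uses a hypothetical label encoding and toy data to show how a model that never predicts a rare class can still reach 98% accuracy with an F1 of zero; `one_vs_rest_metrics` is an illustrative helper, not the authors' evaluation code.

```python
from typing import Sequence, Tuple


def one_vs_rest_metrics(
    y_true: Sequence[int], y_pred: Sequence[int], cls: int
) -> Tuple[float, float]:
    """Pixel-wise (accuracy, F1) for `cls` treated as positive, all other labels negative."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label sequences must be non-empty and of equal length")
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if p == cls:
            if t == cls:
                tp += 1
            else:
                fp += 1
        elif t == cls:
            fn += 1
        else:
            tn += 1
    accuracy = (tp + tn) / len(y_true)
    # F1 = 2TP / (2TP + FP + FN); set to 0.0 here when the class is absent
    # from both maps (a convention choice, since F1 is then undefined).
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0
    return accuracy, f1


# Hypothetical encoding: 0 = background, 1..4 = Highly Informal .. Highly Formal.
# Toy flattened maps of 100 pixels; class 4 covers only 2% of the ground truth.
truth = [0] * 60 + [2] * 20 + [3] * 18 + [4] * 2
pred = [0] * 60 + [2] * 20 + [3] * 20  # the model never predicts class 4

print(one_vs_rest_metrics(truth, pred, 4))  # (0.98, 0.0)
```

Because the true negatives of a rare class dominate the pixel count, one-vs-rest accuracy stays high almost regardless of how that class is predicted, which is why F1 is the more informative column for the small categories.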

| Socioeconomic Semantic Region | DeepLabv3+ Average F1 | DeepLabv3+ Stdev (F1) | U-Net Average F1 | U-Net Stdev (F1) | FCN-8 Average F1 | FCN-8 Stdev (F1) |
|---|---|---|---|---|---|---|
| Highly Informal | 0.63 | 0.20 | 0.53 | 0.23 | 0.41 | 0.21 |
| Moderately Informal | 0.59 | 0.15 | 0.52 | 0.11 | 0.40 | 0.11 |
| Moderately Formal | 0.75 | 0.16 | 0.72 | 0.14 | 0.66 | 0.12 |
| Highly Formal | 0.30 | 0.23 | 0.16 | 0.28 | 0.14 | 0.23 |
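The DeepLabv3+ averages for the Highly Informal, Moderately Informal, and Moderately Formal rows are consistent with an unweighted mean over the seven cities and a sample (n − 1) standard deviation. The sketch below recomputes those three rows from the per-city F1-scores with Python's `statistics` module; reading the reported spread as a sample rather than a population standard deviation is an inference from matching the numbers, not a stated detail of the method, and `DEEPLAB_F1` and `summarize` are illustrative names.

```python
from statistics import mean, stdev

# DeepLabv3+ F1-scores copied from the per-city table, in the order
# Dhaka, Mumbai, Lima, Jakarta, Cairo, Guangzhou, Nairobi.
DEEPLAB_F1 = {
    "Highly Informal": [0.66, 0.86, 0.81, 0.73, 0.63, 0.32, 0.41],
    "Moderately Informal": [0.56, 0.45, 0.73, 0.79, 0.63, 0.36, 0.58],
    "Moderately Formal": [0.77, 0.71, 0.93, 0.89, 0.75, 0.43, 0.77],
}


def summarize(scores):
    """Unweighted mean and sample (n - 1) standard deviation, rounded to 2 decimals."""
    return round(mean(scores), 2), round(stdev(scores), 2)


for region, scores in DEEPLAB_F1.items():
    print(region, summarize(scores))
```

With `statistics.pstdev` (population standard deviation) instead, the Highly Informal and Moderately Informal rows would come out as 0.19 and 0.14 rather than the reported 0.20 and 0.15, which is what points to the sample estimator.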

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rahman, A.K.M.M.; Zaber, M.; Cheng, Q.; Nayem, A.B.S.; Sarker, A.; Paul, O.; Shibasaki, R. Applying State-of-the-Art Deep-Learning Methods to Classify Urban Cities of the Developing World. Sensors 2021, 21, 7469. https://doi.org/10.3390/s21227469

Rahman AKMM, Zaber M, Cheng Q, Nayem ABS, Sarker A, Paul O, Shibasaki R. Applying State-of-the-Art Deep-Learning Methods to Classify Urban Cities of the Developing World. Sensors. 2021; 21(22):7469. https://doi.org/10.3390/s21227469

Chicago/Turabian Style: Rahman, A. K. M. Mahbubur, Moinul Zaber, Qianwei Cheng, Abu Bakar Siddik Nayem, Anis Sarker, Ovi Paul, and Ryosuke Shibasaki. 2021. "Applying State-of-the-Art Deep-Learning Methods to Classify Urban Cities of the Developing World" Sensors 21, no. 22: 7469. https://doi.org/10.3390/s21227469

APA Style: Rahman, A. K. M. M., Zaber, M., Cheng, Q., Nayem, A. B. S., Sarker, A., Paul, O., & Shibasaki, R. (2021). Applying State-of-the-Art Deep-Learning Methods to Classify Urban Cities of the Developing World. Sensors, 21(22), 7469. https://doi.org/10.3390/s21227469