Integration with 3D Visualization and IoT-Based Sensors for Real-Time Structural Health Monitoring

Abstract

1. Introduction

2. Related Works

3. Materials and Methods

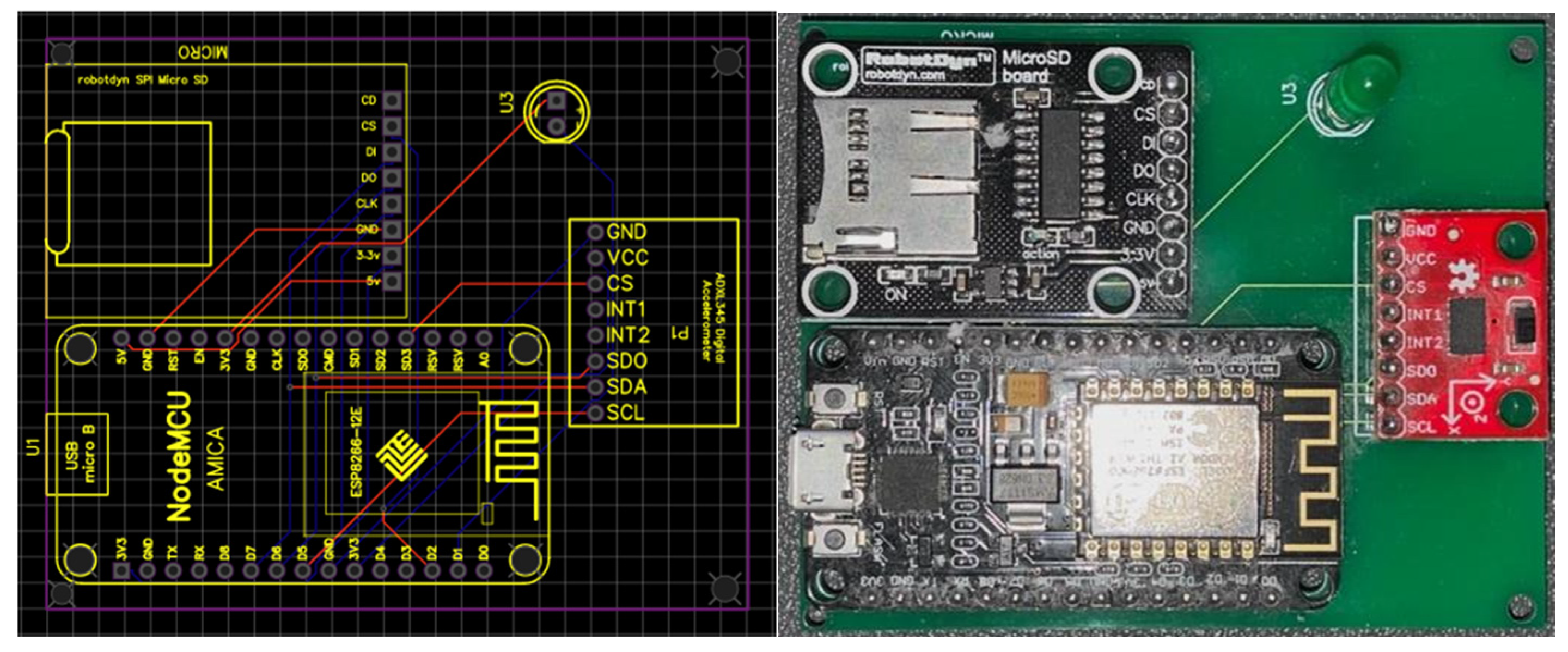

3.1. Sensor Device

3.2. System Architecture

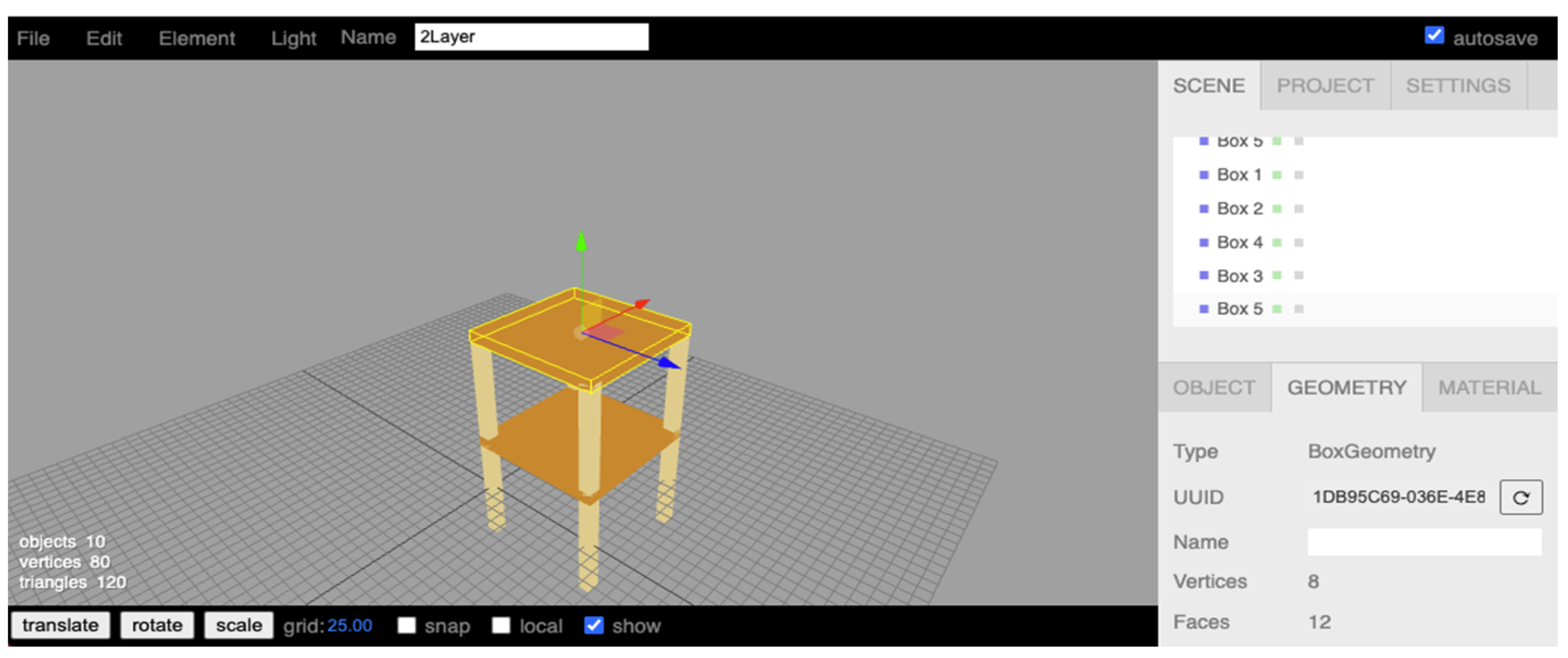

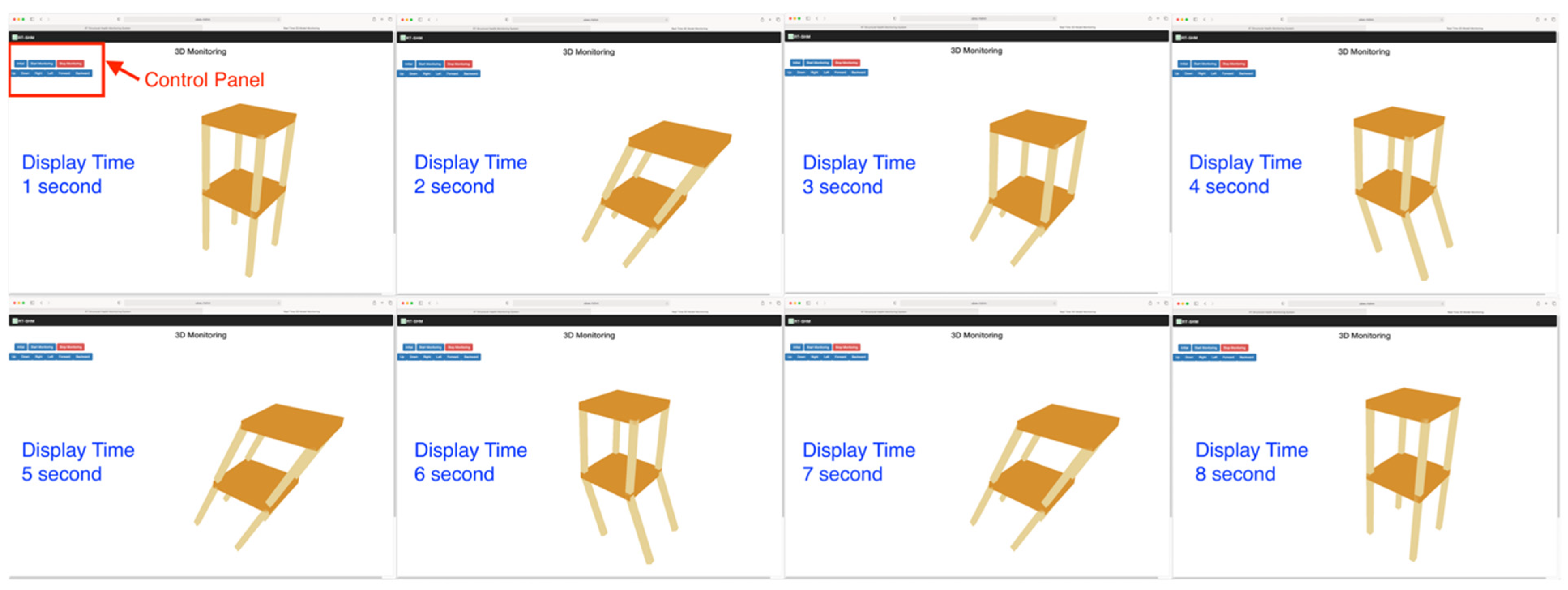

3.3. Three-Dimensional Structural Model Visualization

3.3.1. Challenges and Assumptions

3.3.2. Structural Global Displacement

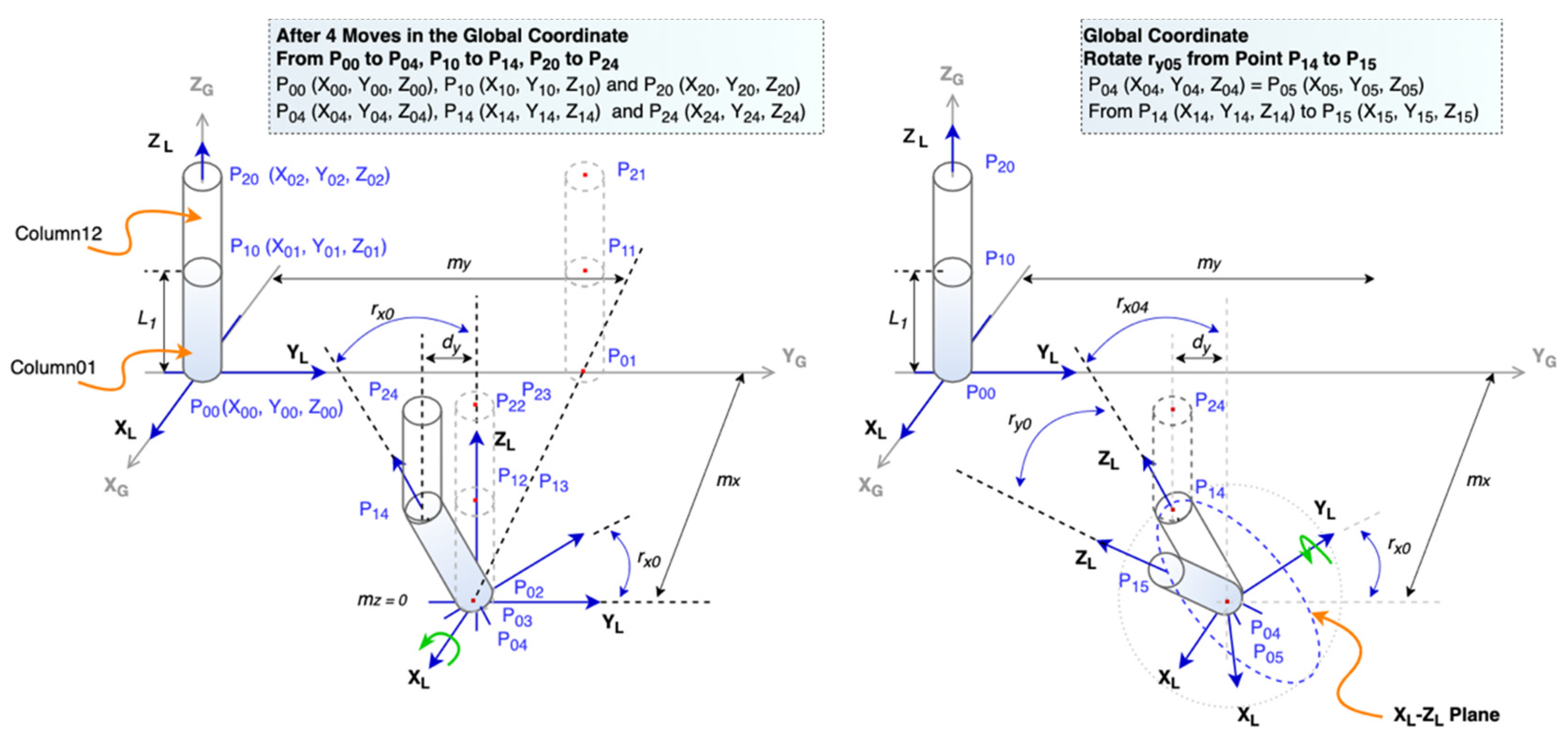

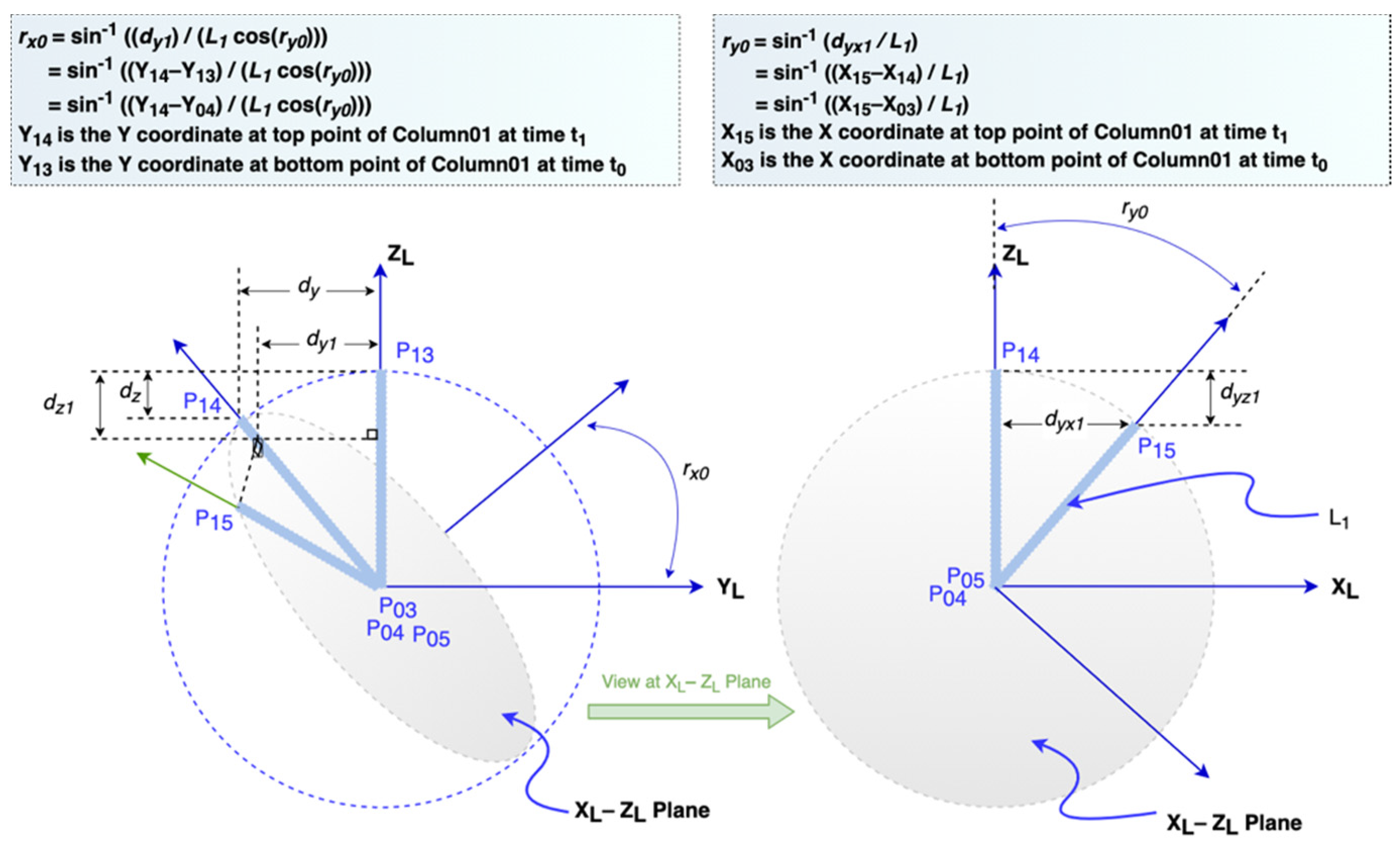

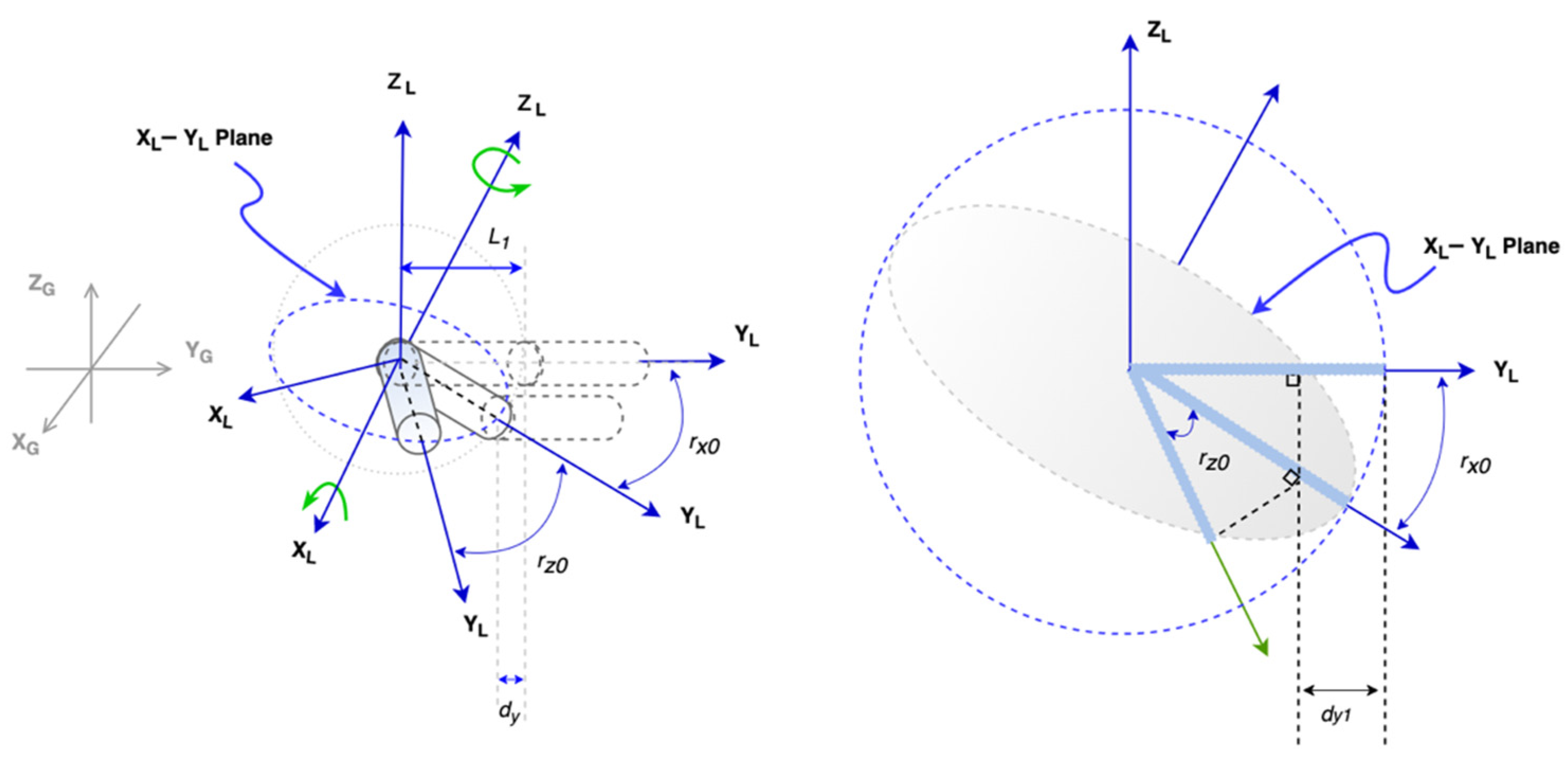

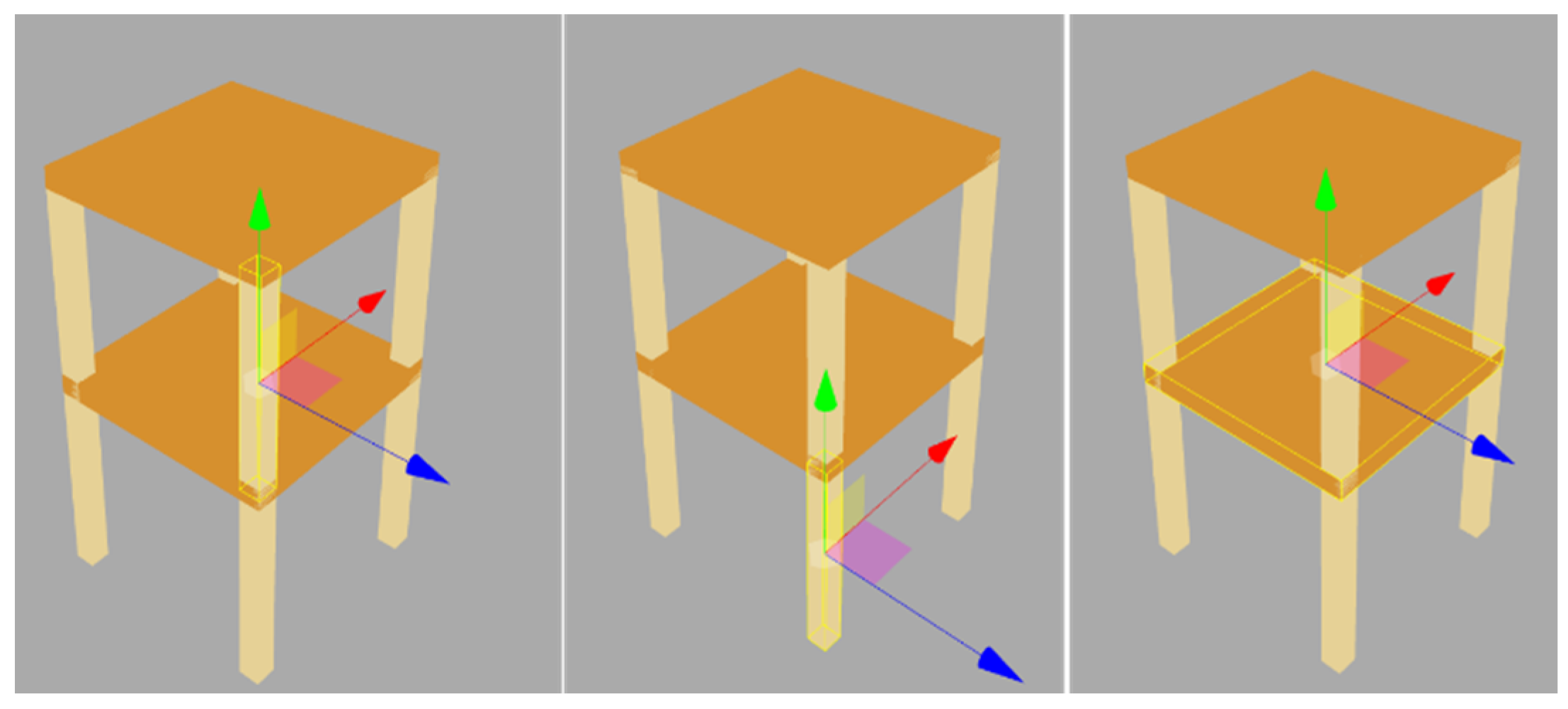

3.3.3. Conversion from Global Displacement to Element’s Local Rotation and Displacement

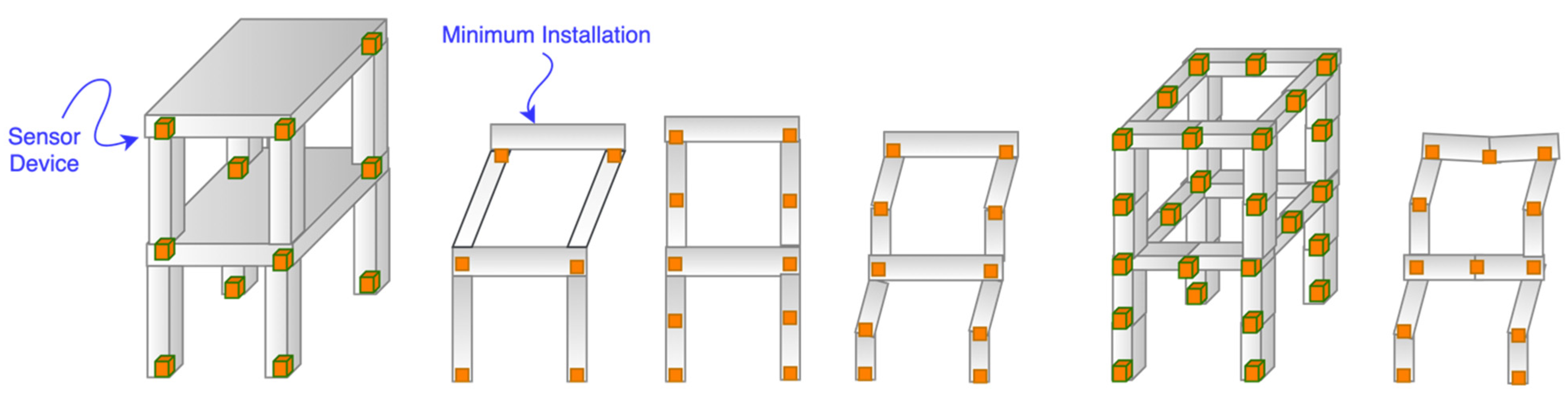

3.3.4. Sensor Deployment

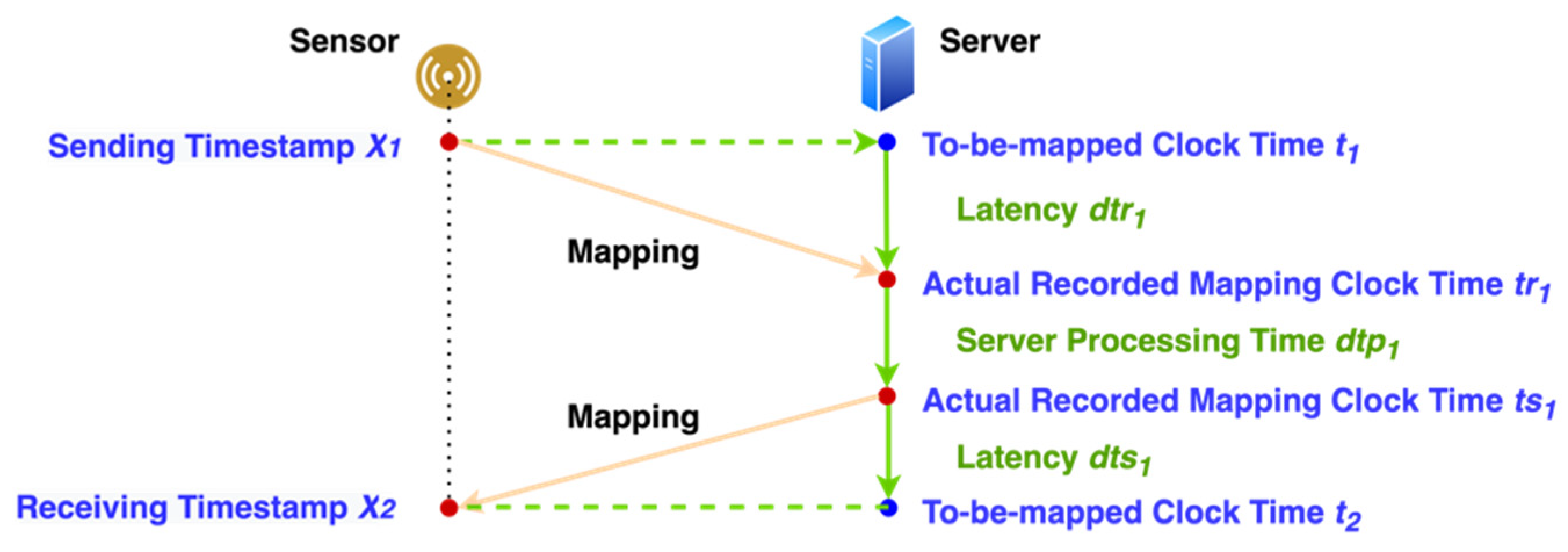

3.3.5. Timestamp

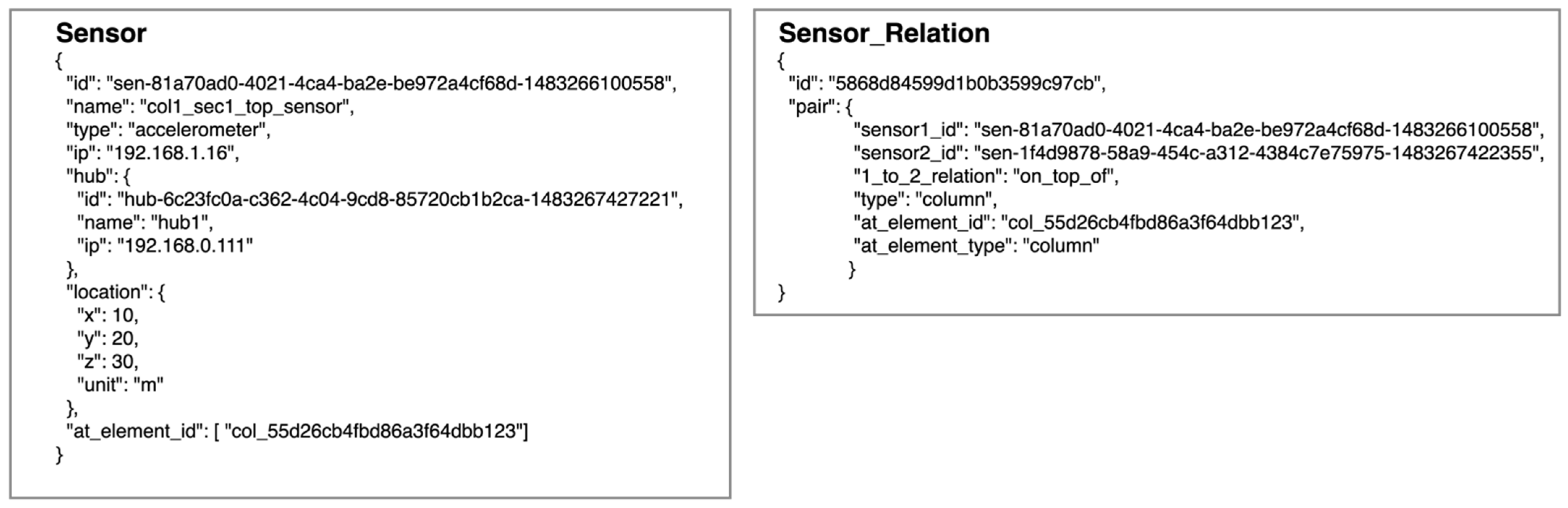

3.3.6. Data Format

4. Implementation and Validation

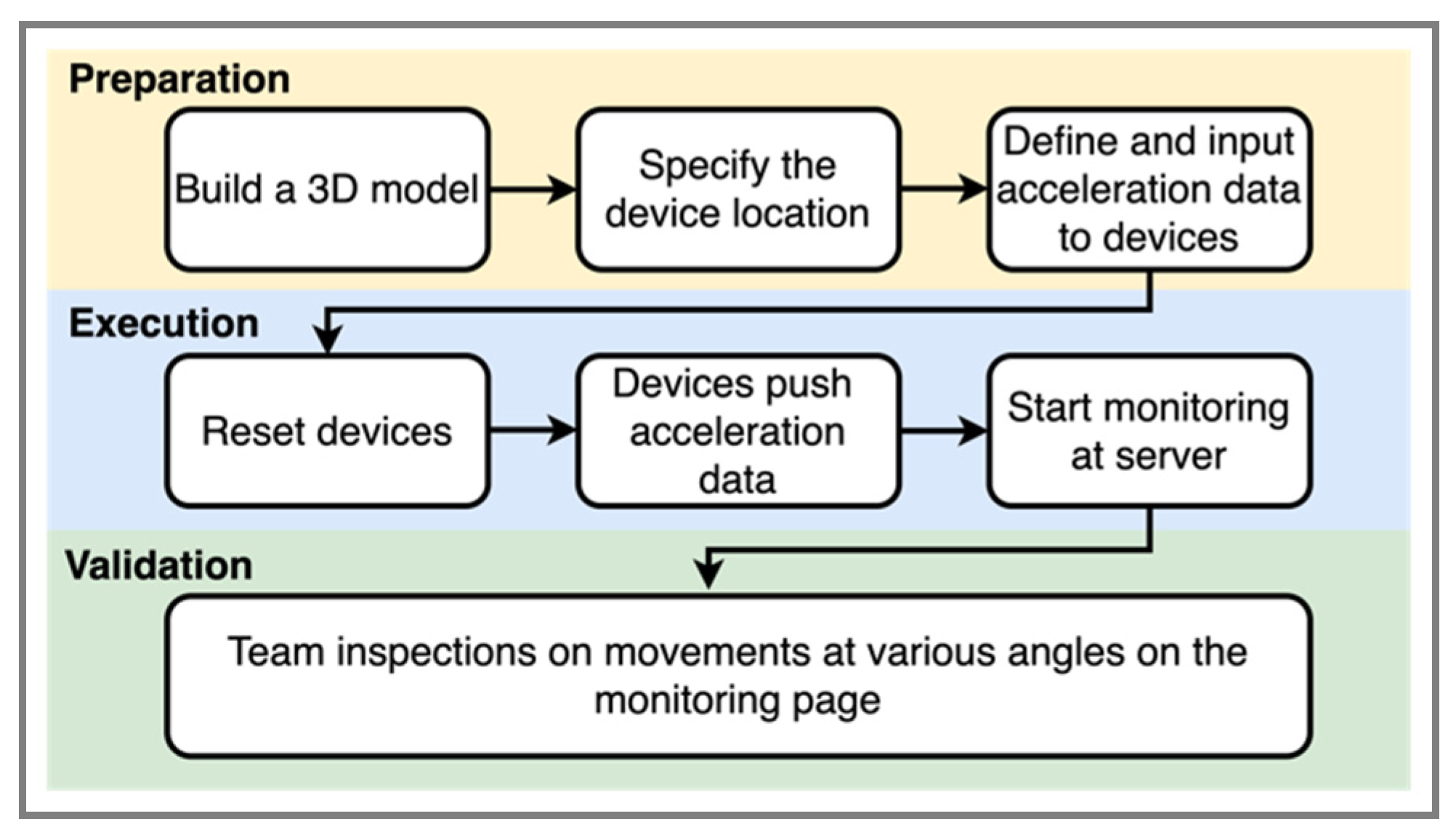

4.1. Implementation

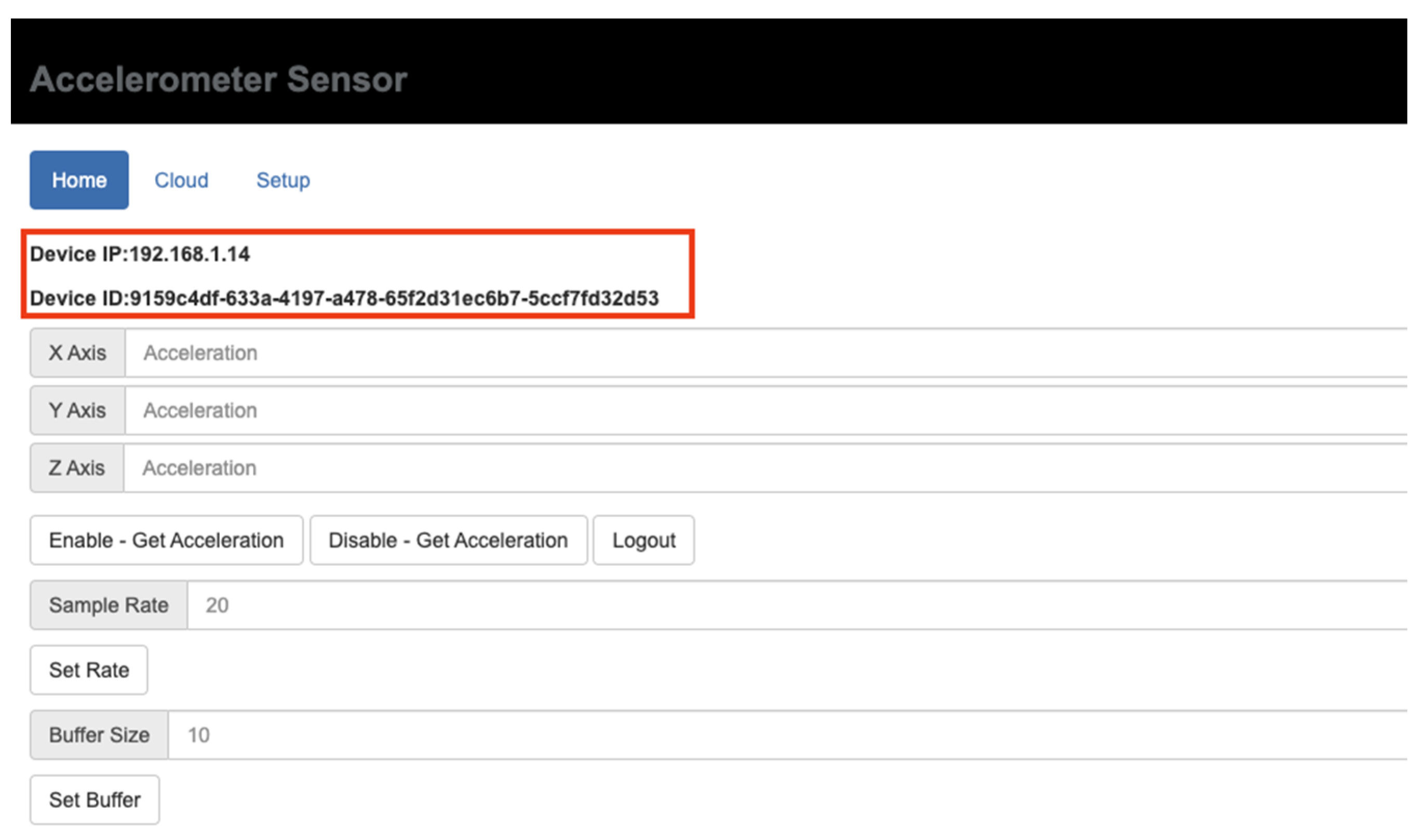

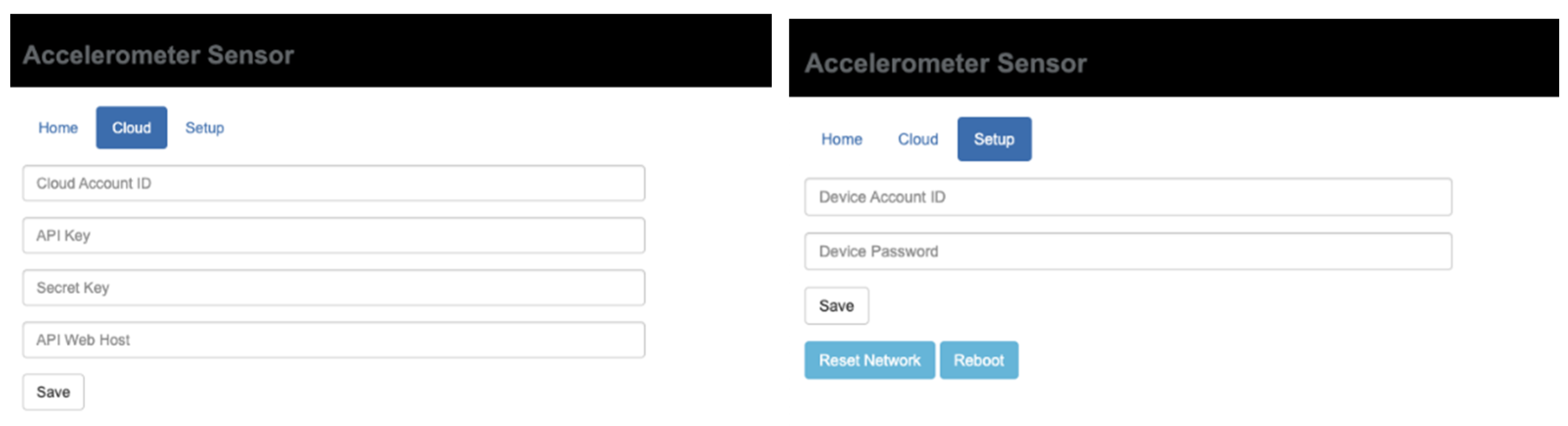

4.1.1. Sensor Device Software

4.1.2. Server Software

4.2. Validation

4.2.1. Time and Latency

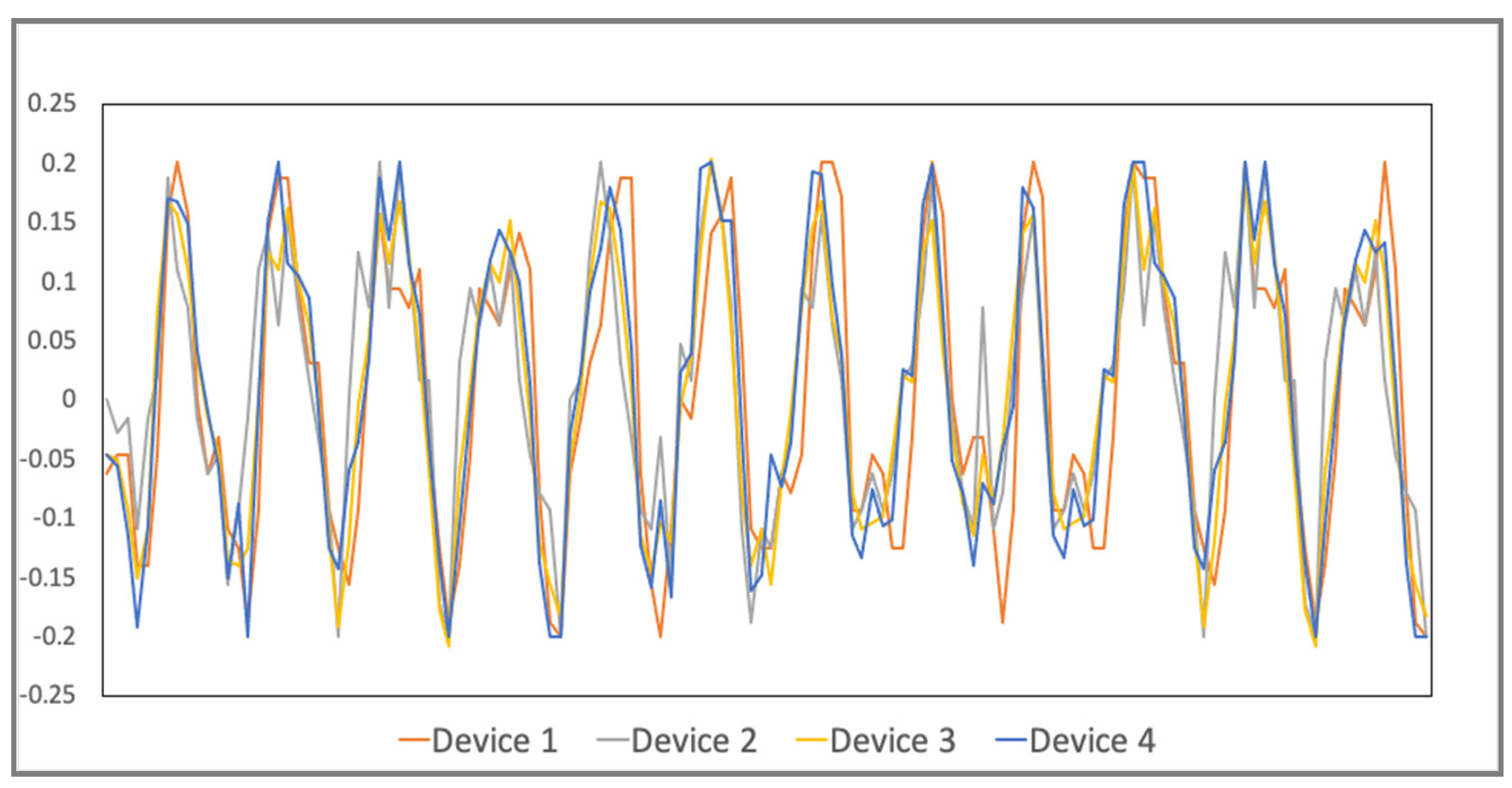

4.2.2. 3D Movement Calculation

5. Discussion and Conclusions

Author Contributions

Funding

Conflicts of Interest


| Maximum Standard Deviation | Minimum Standard Deviation |
|---|---|
| 0.0950045 | 0 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chang, H.-F.; Shokrolah Shirazi, M. Integration with 3D Visualization and IoT-Based Sensors for Real-Time Structural Health Monitoring. Sensors 2021, 21, 6988. https://doi.org/10.3390/s21216988
