Biosignal-Based Human–Machine Interfaces for Assistance and Rehabilitation: A Survey

Abstract

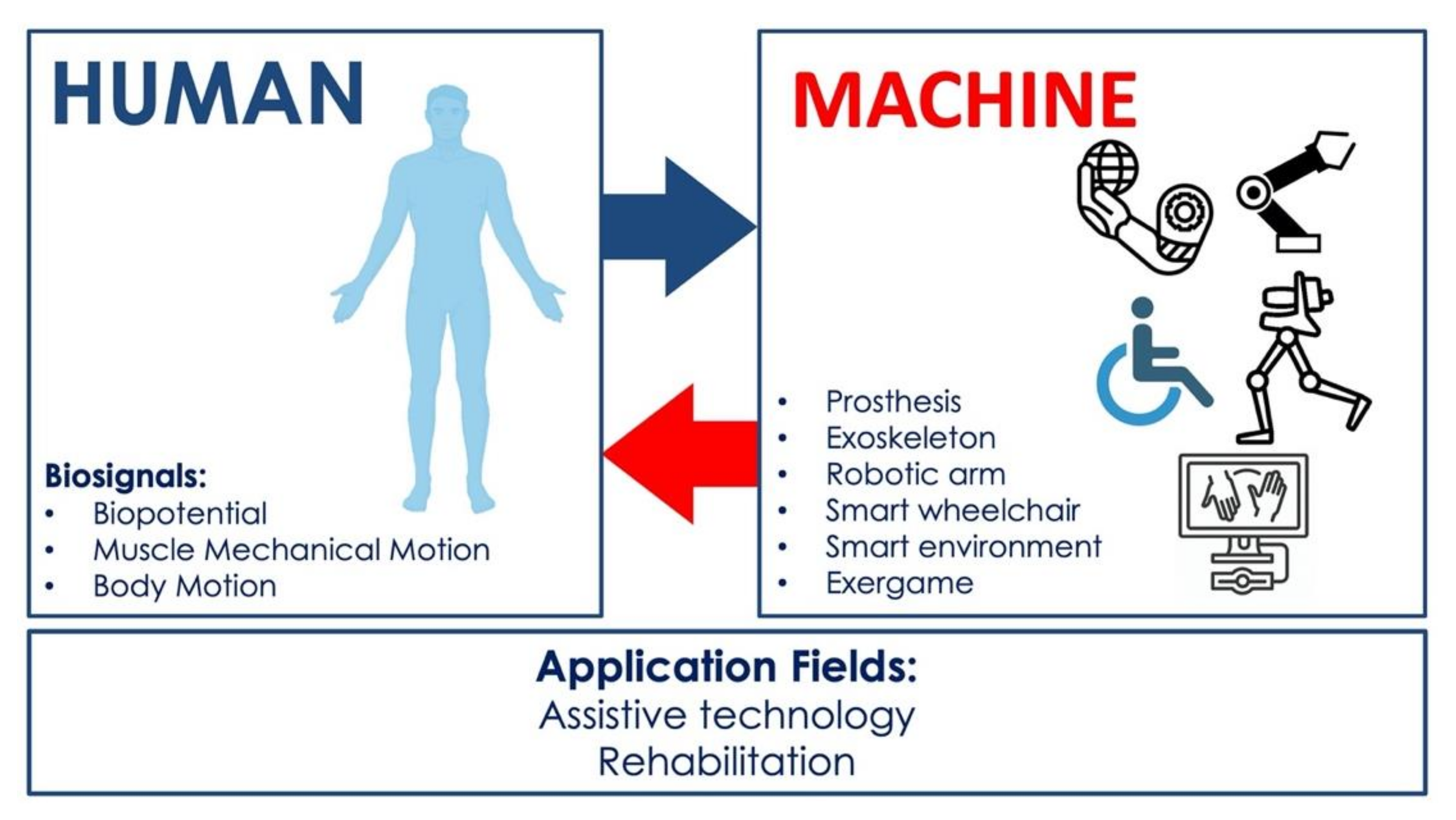

1. Introduction

2. Survey Method

- Exergaming: an exergame is a specific type of serious game (i.e., a game not designed purely for entertainment). It is a human-activated video game that tracks the user’s gestures or movements and reproduces them on a connected screen. Exergames are a potential rehabilitation tool for increasing physical activity and improving health and physical function in patients with neuromuscular diseases [29,30,31,32].

3. HMI Control Strategies

3.1. HMI Control Based on Biopotentials

3.1.1. EEG-Based HMIs

3.1.2. EMG-Based HMIs

3.1.3. ENG-Based HMIs

3.1.4. EOG-Based HMIs

3.1.5. Hybrid Biopotential-Based HMIs

3.2. HMI Control Based on Muscle Mechanical Motion

3.2.1. Muscle Gross Motion-Based HMIs

3.2.2. Muscle Vibrations-Based HMIs

3.2.3. Muscle–Tendons Movement-Based HMIs

3.2.4. Hybrid Muscle Mechanical Motion-Based HMIs

3.3. Body Motion-Based HMIs

3.3.1. Image-Based Body Motion HMIs

3.3.2. Nonimage-Based Body Motion HMIs

3.4. Hybrid HMIs

3.4.1. Biopotentials and Image-Based Systems

3.4.2. Biopotentials and Mechanical Motion Detection

3.4.3. Other Various Hybrid Controls

4. Discussion

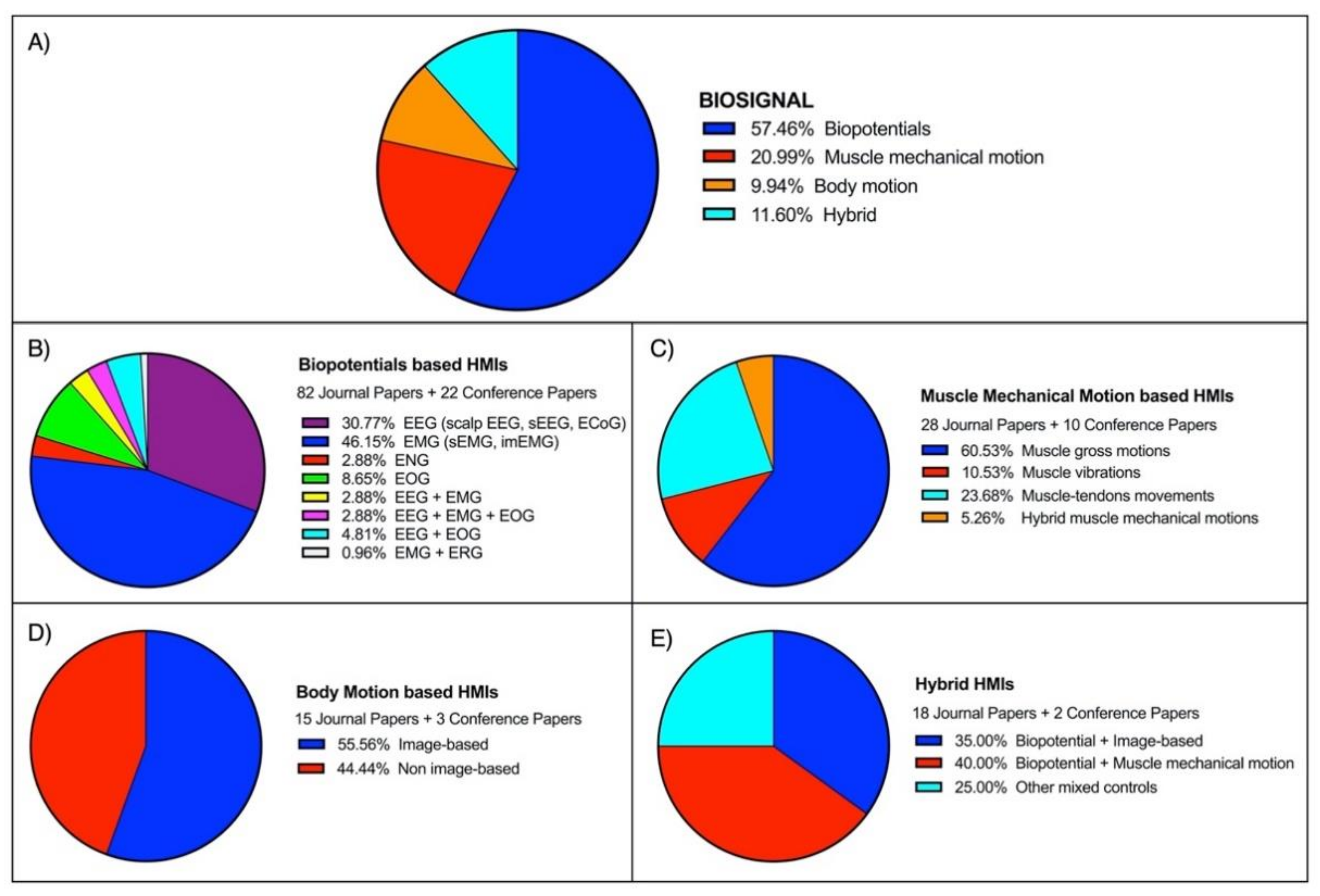

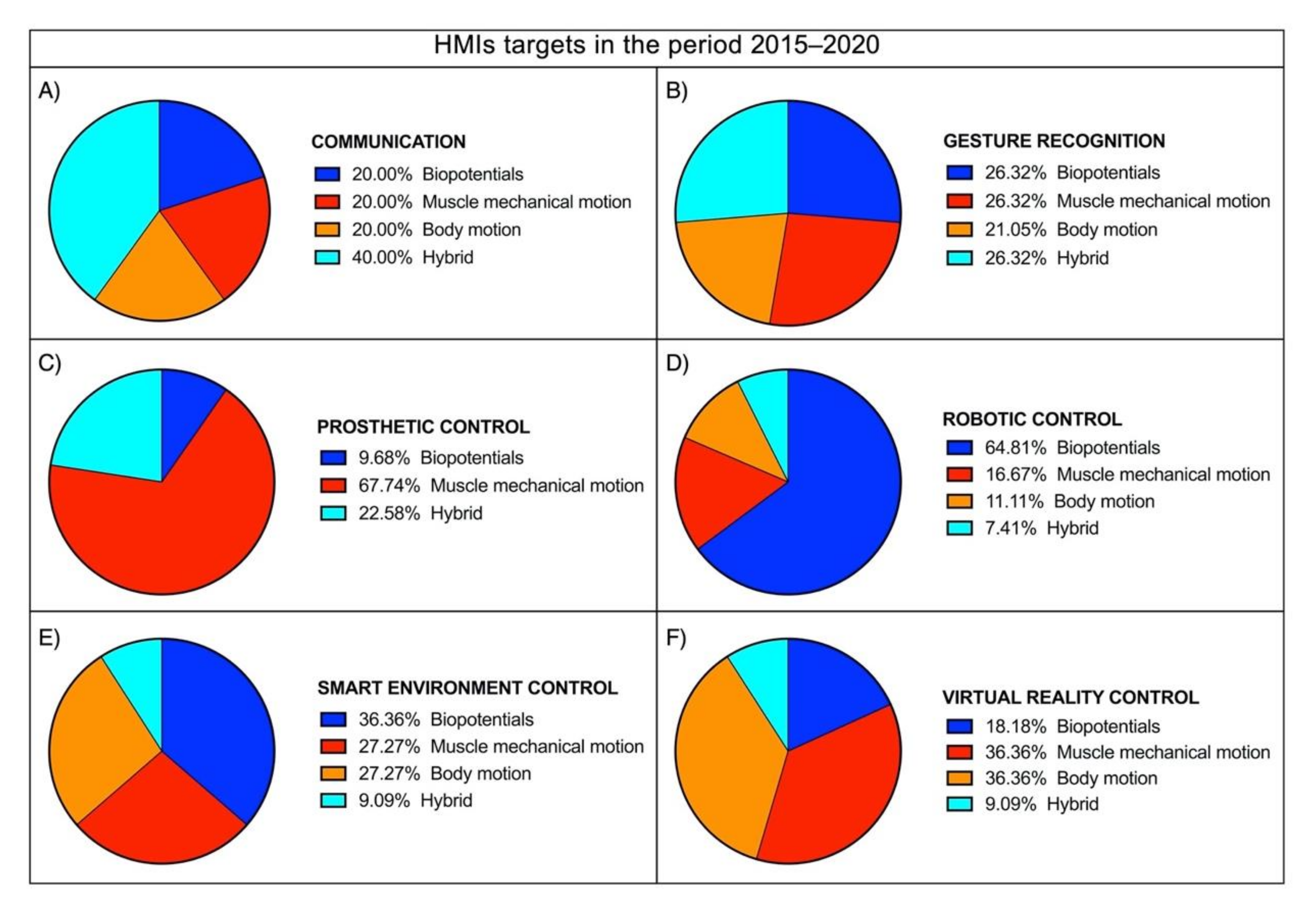

4.1. Statistical Analysis

4.2. Advantages and Disadvantages of Biosignal Categories

4.3. Latest Trends

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Singh, H.P.; Kumar, P. Developments in the Human Machine Interface Technologies and Their Applications: A Review. J. Med. Eng. Technol. 2021, 45, 552–573.

- Kakkos, I.; Miloulis, S.-T.; Gkiatis, K.; Dimitrakopoulos, G.N.; Matsopoulos, G.K. Human–Machine Interfaces for Motor Rehabilitation. In Advanced Computational Intelligence in Healthcare-7: Biomedical Informatics; Maglogiannis, I., Brahnam, S., Jain, L.C., Eds.; Studies in Computational Intelligence; Springer: Berlin/Heidelberg, Germany, 2020; pp. 1–16. ISBN 978-3-662-61114-2.

- Beck, T.W.; Housh, T.J.; Cramer, J.T.; Weir, J.P.; Johnson, G.O.; Coburn, J.W.; Malek, M.H.; Mielke, M. Mechanomyographic Amplitude and Frequency Responses during Dynamic Muscle Actions: A Comprehensive Review. Biomed. Eng. Online 2005, 4, 67.

- Xiao, Z.G.; Menon, C. A Review of Force Myography Research and Development. Sensors 2019, 19, 4557.

- Lazarou, I.; Nikolopoulos, S.; Petrantonakis, P.C.; Kompatsiaris, I.; Tsolaki, M. EEG-Based Brain–Computer Interfaces for Communication and Rehabilitation of People with Motor Impairment: A Novel Approach of the 21st Century. Front. Hum. Neurosci. 2018, 12, 14.

- Ptito, M.; Bleau, M.; Djerourou, I.; Paré, S.; Schneider, F.C.; Chebat, D.-R. Brain-Machine Interfaces to Assist the Blind. Front. Hum. Neurosci. 2021, 15, 638887.

- Baniqued, P.D.E.; Stanyer, E.C.; Awais, M.; Alazmani, A.; Jackson, A.E.; Mon-Williams, M.A.; Mushtaq, F.; Holt, R.J. Brain–Computer Interface Robotics for Hand Rehabilitation after Stroke: A Systematic Review. J. Neuroeng. Rehabil. 2021, 18, 15.

- Mrachacz-Kersting, N.; Jiang, N.; Stevenson, A.J.T.; Niazi, I.K.; Kostic, V.; Pavlovic, A.; Radovanovic, S.; Djuric-Jovicic, M.; Agosta, F.; Dremstrup, K.; et al. Efficient Neuroplasticity Induction in Chronic Stroke Patients by an Associative Brain-Computer Interface. J. Neurophysiol. 2016, 115, 1410–1421.

- Ahmadizadeh, C.; Khoshnam, M.; Menon, C. Human Machine Interfaces in Upper-Limb Prosthesis Control: A Survey of Techniques for Preprocessing and Processing of Biosignals. IEEE Signal Process. Mag. 2021, 38, 12–22.

- Grushko, S.; Spurný, T.; Černý, M. Control Methods for Transradial Prostheses Based on Remnant Muscle Activity and Its Relationship with Proprioceptive Feedback. Sensors 2020, 20, 4883.

- Úbeda, A.; Iáñez, E.; Azorín, J.M. Shared Control Architecture Based on RFID to Control a Robot Arm Using a Spontaneous Brain–Machine Interface. Robot. Auton. Syst. 2013, 61, 768–774.

- Ma, J.; Zhang, Y.; Cichocki, A.; Matsuno, F. A Novel EOG/EEG Hybrid Human-Machine Interface Adopting Eye Movements and ERPs: Application to Robot Control. IEEE Trans. Biomed. Eng. 2015, 62, 876–889.

- Xia, W.; Zhou, Y.; Yang, X.; He, K.; Liu, H. Toward Portable Hybrid Surface Electromyography/A-Mode Ultrasound Sensing for Human–Machine Interface. IEEE Sens. J. 2019, 19, 5219–5228.

- Xu, B.; Li, W.; He, X.; Wei, Z.; Zhang, D.; Wu, C.; Song, A. Motor Imagery Based Continuous Teleoperation Robot Control with Tactile Feedback. Electronics 2020, 9, 174.

- Varada, V.; Moolchandani, D.; Rohit, A. Measuring and Processing the Brain’s EEG Signals with Visual Feedback for Human Machine Interface. Int. J. Sci. Eng. Res. 2013, 4, 1–4.

- Zhu, M.; Sun, Z.; Zhang, Z.; Shi, Q.; He, T.; Liu, H.; Chen, T.; Lee, C. Haptic-Feedback Smart Glove as a Creative Human-Machine Interface (HMI) for Virtual/Augmented Reality Applications. Sci. Adv. 2020, 6, eaaz8693.

- National Library of Medicine—National Institutes of Health. Available online: https://www.nlm.nih.gov/ (accessed on 9 August 2021).

- Taylor, M.; Griffin, M. The Use of Gaming Technology for Rehabilitation in People with Multiple Sclerosis. Mult. Scler. 2015, 21, 355–371.

- Fatima, N.; Shuaib, A.; Saqqur, M. Intra-Cortical Brain-Machine Interfaces for Controlling Upper-Limb Powered Muscle and Robotic Systems in Spinal Cord Injury. Clin. Neurol. Neurosurg. 2020, 196, 106069.

- Garcia-Agundez, A.; Folkerts, A.-K.; Konrad, R.; Caserman, P.; Tregel, T.; Goosses, M.; Göbel, S.; Kalbe, E. Recent Advances in Rehabilitation for Parkinson’s Disease with Exergames: A Systematic Review. J. Neuroeng. Rehabil. 2019, 16, 17.

- Mohebbi, A. Human-Robot Interaction in Rehabilitation and Assistance: A Review. Curr. Robot. Rep. 2020, 1, 131–144.

- Frisoli, A.; Solazzi, M.; Loconsole, C.; Barsotti, M. New Generation Emerging Technologies for Neurorehabilitation and Motor Assistance. Acta Myol. 2016, 35, 141–144.

- Wright, J.; Macefield, V.G.; van Schaik, A.; Tapson, J.C. A Review of Control Strategies in Closed-Loop Neuroprosthetic Systems. Front. Neurosci. 2016, 10, 312.

- Ciancio, A.L.; Cordella, F.; Barone, R.; Romeo, R.A.; Bellingegni, A.D.; Sacchetti, R.; Davalli, A.; Di Pino, G.; Ranieri, F.; Di Lazzaro, V.; et al. Control of Prosthetic Hands via the Peripheral Nervous System. Front. Neurosci. 2016, 10, 116.

- Ngan, C.G.Y.; Kapsa, R.M.I.; Choong, P.F.M. Strategies for Neural Control of Prosthetic Limbs: From Electrode Interfacing to 3D Printing. Materials 2019, 12, 1927.

- Parajuli, N.; Sreenivasan, N.; Bifulco, P.; Cesarelli, M.; Savino, S.; Niola, V.; Esposito, D.; Hamilton, T.J.; Naik, G.R.; Gunawardana, U.; et al. Real-Time EMG Based Pattern Recognition Control for Hand Prostheses: A Review on Existing Methods, Challenges and Future Implementation. Sensors 2019, 19, 4596.

- Igual, C.; Pardo, L.A.; Hahne, J.M.; Igual, J. Myoelectric Control for Upper Limb Prostheses. Electronics 2019, 8, 1244.

- Kumar, D.K.; Jelfs, B.; Sui, X.; Arjunan, S.P. Prosthetic Hand Control: A Multidisciplinary Review to Identify Strengths, Shortcomings, and the Future. Biomed. Signal Process. Control 2019, 53, 101588.

- Da Gama, A.; Fallavollita, P.; Teichrieb, V.; Navab, N. Motor Rehabilitation Using Kinect: A Systematic Review. Games Health J. 2015, 4, 123–135.

- Laver, K.E.; Lange, B.; George, S.; Deutsch, J.E.; Saposnik, G.; Crotty, M. Virtual Reality for Stroke Rehabilitation. Cochrane Database Syst. Rev. 2017, 2017, CD008349.

- Mat Rosly, M.; Mat Rosly, H.; Davis Oam, G.M.; Husain, R.; Hasnan, N. Exergaming for Individuals with Neurological Disability: A Systematic Review. Disabil. Rehabil. 2017, 39, 727–735.

- Reis, E.; Postolache, G.; Teixeira, L.; Arriaga, P.; Lima, M.L.; Postolache, O. Exergames for Motor Rehabilitation in Older Adults: An Umbrella Review. Phys. Ther. Rev. 2019, 24, 84–99.

- Li, W.; Shi, P.; Yu, H. Gesture Recognition Using Surface Electromyography and Deep Learning for Prostheses Hand: State-of-the-Art, Challenges, and Future. Front. Neurosci. 2021, 15, 621885.

- Liang, H.; Zhu, C.; Iwata, Y.; Maedono, S.; Mochita, M.; Liu, C.; Ueda, N.; Li, P.; Yu, H.; Yan, Y.; et al. Feature Extraction of Shoulder Joint’s Voluntary Flexion-Extension Movement Based on Electroencephalography Signals for Power Assistance. Bioengineering 2018, 6, 2.

- He, Y.; Nathan, K.; Venkatakrishnan, A.; Rovekamp, R.; Beck, C.; Ozdemir, R.; Francisco, G.E.; Contreras-Vidal, J.L. An Integrated Neuro-Robotic Interface for Stroke Rehabilitation Using the NASA X1 Powered Lower Limb Exoskeleton. In Proceedings of the 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology, Chicago, IL, USA, 26–30 August 2014; pp. 3985–3988.

- Xu, R.; Jiang, N.; Mrachacz-Kersting, N.; Lin, C.; Asín Prieto, G.; Moreno, J.C.; Pons, J.L.; Dremstrup, K.; Farina, D. A Closed-Loop Brain-Computer Interface Triggering an Active Ankle-Foot Orthosis for Inducing Cortical Neural Plasticity. IEEE Trans. Biomed. Eng. 2014, 61, 2092–2101.

- Fall, C.L.; Gagnon-Turcotte, G.; Dube, J.-F.; Gagne, J.S.; Delisle, Y.; Campeau-Lecours, A.; Gosselin, C.; Gosselin, B. Wireless SEMG-Based Body-Machine Interface for Assistive Technology Devices. IEEE J. Biomed. Health Inform. 2017, 21, 967–977.

- Laksono, P.W.; Kitamura, T.; Muguro, J.; Matsushita, K.; Sasaki, M.; Amri bin Suhaimi, M.S. Minimum Mapping from EMG Signals at Human Elbow and Shoulder Movements into Two DoF Upper-Limb Robot with Machine Learning. Machines 2021, 9, 56.

- Alibhai, Z.; Burreson, T.; Stiller, M.; Ahmad, I.; Huber, M.; Clark, A. A Human-Computer Interface For Smart Wheelchair Control Using Forearm EMG Signals. In Proceedings of the 2020 3rd International Conference on Data Intelligence and Security (ICDIS), South Padre Island, TX, USA, 24–26 June 2020; pp. 34–39.

- Song, J.-H.; Jung, J.-W.; Lee, S.-W.; Bien, Z. Robust EMG Pattern Recognition to Muscular Fatigue Effect for Powered Wheelchair Control. J. Intell. Fuzzy Syst. 2009, 20, 3–12.

- Xu, X.; Zhang, Y.; Luo, Y.; Chen, D. Robust Bio-Signal Based Control of an Intelligent Wheelchair. Robotics 2013, 2, 187–197.

- Zhang, R.; He, S.; Yang, X.; Wang, X.; Li, K.; Huang, Q.; Yu, Z.; Zhang, X.; Tang, D.; Li, Y. An EOG-Based Human–Machine Interface to Control a Smart Home Environment for Patients with Severe Spinal Cord Injuries. IEEE Trans. Biomed. Eng. 2019, 66, 89–100.

- Bissoli, A.; Lavino-Junior, D.; Sime, M.; Encarnação, L.; Bastos-Filho, T. A Human–Machine Interface Based on Eye Tracking for Controlling and Monitoring a Smart Home Using the Internet of Things. Sensors 2019, 19, 859.

- Clark, J.W.J. The origin of biopotentials. In Medical Instrumentation: Application and Design, 4th ed.; Webster, J.G., Ed.; John Wiley & Sons: Hoboken, NJ, USA, 2010; pp. 126–181.

- Thakor, N.V. Biopotentials and Electrophysiology Measurements. In Measurement, Instrumentation, and Sensors Handbook: Electromagnetic, Optical, Radiation, Chemical, and Biomedical Measurement, 2nd ed.; Webster, J.G., Eren, H., Eds.; CRC Press: Boca Raton, FL, USA, 2017; pp. 1–7.

- Gao, H.; Luo, L.; Pi, M.; Li, Z.; Li, Q.; Zhao, K.; Huang, J. EEG-Based Volitional Control of Prosthetic Legs for Walking in Different Terrains. IEEE Trans. Autom. Sci. Eng. 2021, 18, 530–540.

- Gannouni, S.; Belwafi, K.; Aboalsamh, H.; AlSamhan, Z.; Alebdi, B.; Almassad, Y.; Alobaedallah, H. EEG-Based BCI System to Detect Fingers Movements. Brain Sci. 2020, 10, 965.

- Fuentes-Gonzalez, J.; Infante-Alarcón, A.; Asanza, V.; Loayza, F.R. A 3D-Printed EEG Based Prosthetic Arm. In Proceedings of the 2020 IEEE International Conference on E-health Networking, Application Services (HEALTHCOM), Shenzhen, China, 1–2 March 2021; pp. 1–5.

- Song, Y.; Cai, S.; Yang, L.; Li, G.; Wu, W.; Xie, L. A Practical EEG-Based Human-Machine Interface to Online Control an Upper-Limb Assist Robot. Front. Neurorobot. 2020, 14, 32.

- Korovesis, N.; Kandris, D.; Koulouras, G.; Alexandridis, A. Robot Motion Control via an EEG-Based Brain–Computer Interface by Using Neural Networks and Alpha Brainwaves. Electronics 2019, 8, 1387.

- Gordleeva, S.Y.; Lobov, S.A.; Grigorev, N.A.; Savosenkov, A.O.; Shamshin, M.O.; Lukoyanov, M.V.; Khoruzhko, M.A.; Kazantsev, V.B. Real-Time EEG–EMG Human–Machine Interface-Based Control System for a Lower-Limb Exoskeleton. IEEE Access 2020, 8, 84070–84081.

- Noce, E.; Dellacasa Bellingegni, A.; Ciancio, A.L.; Sacchetti, R.; Davalli, A.; Guglielmelli, E.; Zollo, L. EMG and ENG-Envelope Pattern Recognition for Prosthetic Hand Control. J. Neurosci. Methods 2019, 311, 38–46.

- Eisenberg, G.D.; Fyvie, K.G.H.M.; Mohamed, A.-K. Real-Time Segmentation and Feature Extraction of Electromyography: Towards Control of a Prosthetic Hand. IFAC-PapersOnLine 2017, 50, 151–156.

- Tavakoli, M.; Benussi, C.; Lourenco, J.L. Single Channel Surface EMG Control of Advanced Prosthetic Hands: A Simple, Low Cost and Efficient Approach. Expert Syst. Appl. 2017, 79, 322–332.

- Nguyen, A.T.; Xu, J.; Jiang, M.; Luu, D.K.; Wu, T.; Tam, W.-K.; Zhao, W.; Drealan, M.W.; Overstreet, C.K.; Zhao, Q.; et al. A Bioelectric Neural Interface towards Intuitive Prosthetic Control for Amputees. J. Neural Eng. 2020, 17, 066001.

- Golparvar, A.J.; Yapici, M.K. Toward Graphene Textiles in Wearable Eye Tracking Systems for Human–Machine Interaction. Beilstein J. Nanotechnol. 2021, 12, 180–189.

- Huang, Q.; He, S.; Wang, Q.; Gu, Z.; Peng, N.; Li, K.; Zhang, Y.; Shao, M.; Li, Y. An EOG-Based Human–Machine Interface for Wheelchair Control. IEEE Trans. Biomed. Eng. 2018, 65, 2023–2032.

- Arrow, C.; Wu, H.; Baek, S.; Iu, H.H.C.; Nazarpour, K.; Eshraghian, J.K. Prosthesis Control Using Spike Rate Coding in the Retina Photoreceptor Cells. In Proceedings of the 2021 IEEE International Symposium on Circuits and Systems (ISCAS), Daegu, Korea, 22–28 May 2021; pp. 1–5.

- Noce, E.; Gentile, C.; Cordella, F.; Ciancio, A.L.; Piemonte, V.; Zollo, L. Grasp Control of a Prosthetic Hand through Peripheral Neural Signals. J. Phys. Conf. Ser. 2018, 1026, 012006.

- Towe, B.C. Bioelectricity and its measurement. In Biomedical Engineering and Design Handbook, 2nd ed.; Kutz, M., Ed.; McGraw-Hill Education: New York, NY, USA, 2009; pp. 481–527.

- Barr, R.C. Basic Electrophysiology. In Biomedical Engineering Handbook; Bronzino, J.D., Ed.; CRC Press: Boca Raton, FL, USA, 1999; pp. 146–162.

- Miller, L.E.; Hatsopoulos, N. Neural activity in motor cortex and related areas. In Brain–Computer Interfaces: Principles and Practice; Wolpaw, J., Wolpaw, E.W., Eds.; Oxford University Press: New York, NY, USA, 2012; pp. 15–43.

- Pfurtscheller, G.; Lopes da Silva, F.H. Event-Related EEG/MEG Synchronization and Desynchronization: Basic Principles. Clin. Neurophysiol. 1999, 110, 1842–1857.

- Antoniou, E.; Bozios, P.; Christou, V.; Tzimourta, K.D.; Kalafatakis, K.; Tsipouras, M.G.; Giannakeas, N.; Tzallas, A.T. EEG-Based Eye Movement Recognition Using Brain–Computer Interface and Random Forests. Sensors 2021, 21, 2339.

- Matsushita, K.; Hirata, M.; Suzuki, T.; Ando, H.; Yoshida, T.; Ota, Y.; Sato, F.; Morris, S.; Sugata, H.; Goto, T.; et al. A Fully Implantable Wireless ECoG 128-Channel Recording Device for Human Brain–Machine Interfaces: W-HERBS. Front. Neurosci. 2018, 12, 511.

- Spataro, R.; Chella, A.; Allison, B.; Giardina, M.; Sorbello, R.; Tramonte, S.; Guger, C.; La Bella, V. Reaching and Grasping a Glass of Water by Locked-In ALS Patients through a BCI-Controlled Humanoid Robot. Front. Hum. Neurosci. 2017, 11, 68.

- López-Larraz, E.; Trincado-Alonso, F.; Rajasekaran, V.; Pérez-Nombela, S.; del-Ama, A.J.; Aranda, J.; Minguez, J.; Gil-Agudo, A.; Montesano, L. Control of an Ambulatory Exoskeleton with a Brain–Machine Interface for Spinal Cord Injury Gait Rehabilitation. Front. Neurosci. 2016, 10, 359.

- Hortal, E.; Planelles, D.; Costa, A.; Iáñez, E.; Úbeda, A.; Azorín, J.M.; Fernández, E. SVM-Based Brain–Machine Interface for Controlling a Robot Arm through Four Mental Tasks. Neurocomputing 2015, 151, 116–121.

- Wang, H.; Su, Q.; Yan, Z.; Lu, F.; Zhao, Q.; Liu, Z.; Zhou, F. Rehabilitation Treatment of Motor Dysfunction Patients Based on Deep Learning Brain–Computer Interface Technology. Front. Neurosci. 2020, 14, 595084.

- Hong, L.Z.; Zourmand, A.; Victor Patricks, J.; Thing, G.T. EEG-Based Brain Wave Controlled Intelligent Prosthetic Arm. In Proceedings of the 2020 IEEE 8th Conference on Systems, Process and Control (ICSPC), Melaka, Malaysia, 11–12 December 2020; pp. 52–57.

- Ortiz, M.; Ferrero, L.; Iáñez, E.; Azorín, J.M.; Contreras-Vidal, J.L. Sensory Integration in Human Movement: A New Brain-Machine Interface Based on Gamma Band and Attention Level for Controlling a Lower-Limb Exoskeleton. Front. Bioeng. Biotechnol. 2020, 8, 735.

- Kasim, M.A.A.; Low, C.Y.; Ayub, M.A.; Zakaria, N.A.C.; Salleh, M.H.M.; Johar, K.; Hamli, H. User-Friendly LabVIEW GUI for Prosthetic Hand Control Using Emotiv EEG Headset. Procedia Comput. Sci. 2017, 105, 276–281.

- Murphy, D.P.; Bai, O.; Gorgey, A.S.; Fox, J.; Lovegreen, W.T.; Burkhardt, B.W.; Atri, R.; Marquez, J.S.; Li, Q.; Fei, D.-Y. Electroencephalogram-Based Brain–Computer Interface and Lower-Limb Prosthesis Control: A Case Study. Front. Neurol. 2017, 8, 696.

- Li, G.; Jiang, S.; Xu, Y.; Wu, Z.; Chen, L.; Zhang, D. A Preliminary Study towards Prosthetic Hand Control Using Human Stereo-Electroencephalography (SEEG) Signals. In Proceedings of the 2017 8th International IEEE/EMBS Conference on Neural Engineering (NER), Shanghai, China, 25–28 May 2017; pp. 375–378.

- Bhagat, N.A.; Venkatakrishnan, A.; Abibullaev, B.; Artz, E.J.; Yozbatiran, N.; Blank, A.A.; French, J.; Karmonik, C.; Grossman, R.G.; O’Malley, M.K.; et al. Design and Optimization of an EEG-Based Brain Machine Interface (BMI) to an Upper-Limb Exoskeleton for Stroke Survivors. Front. Neurosci. 2016, 10, 122.

- Morishita, S.; Sato, K.; Watanabe, H.; Nishimura, Y.; Isa, T.; Kato, R.; Nakamura, T.; Yokoi, H. Brain-Machine Interface to Control a Prosthetic Arm with Monkey ECoGs during Periodic Movements. Front. Neurosci. 2014, 8, 417.

- Zhang, X.; Li, R.; Li, Y. Research on Brain Control Prosthetic Hand. In Proceedings of the 2014 11th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), Kuala Lumpur, Malaysia, 12–15 November 2014; pp. 554–557.

- Yanagisawa, T.; Hirata, M.; Saitoh, Y.; Goto, T.; Kishima, H.; Fukuma, R.; Yokoi, H.; Kamitani, Y.; Yoshimine, T. Real-Time Control of a Prosthetic Hand Using Human Electrocorticography Signals. J. Neurosurg. 2011, 114, 1715–1722.

- Tang, Z.; Sun, S.; Zhang, S.; Chen, Y.; Li, C.; Chen, S. A Brain-Machine Interface Based on ERD/ERS for an Upper-Limb Exoskeleton Control. Sensors 2016, 16, 2050.

- Randazzo, L.; Iturrate, I.; Perdikis, S.; Millán, J.D. Mano: A Wearable Hand Exoskeleton for Activities of Daily Living and Neurorehabilitation. IEEE Robot. Autom. Lett. 2018, 3, 500–507.

- Li, Z.; Li, J.; Zhao, S.; Yuan, Y.; Kang, Y.; Chen, C.L.P. Adaptive Neural Control of a Kinematically Redundant Exoskeleton Robot Using Brain–Machine Interfaces. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3558–3571.

- Kwak, N.-S.; Müller, K.-R.; Lee, S.-W. A Lower Limb Exoskeleton Control System Based on Steady State Visual Evoked Potentials. J. Neural Eng. 2015, 12, 056009.

- Araujo, R.S.; Silva, C.R.; Netto, S.P.N.; Morya, E.; Brasil, F.L. Development of a Low-Cost EEG-Controlled Hand Exoskeleton 3D Printed on Textiles. Front. Neurosci. 2021, 15, 661569.

- Kashihara, K. A Brain-Computer Interface for Potential Non-Verbal Facial Communication Based on EEG Signals Related to Specific Emotions. Front. Neurosci. 2014, 8, 244.

- Mahmoudi, B.; Erfanian, A. Single-Channel EEG-Based Prosthetic Hand Grasp Control for Amputee Subjects. In Proceedings of the Second Joint 24th Annual Conference and the Annual Fall Meeting of the Biomedical Engineering Society, Engineering in Medicine and Biology, Houston, TX, USA, 23–26 October 2002; Volume 3, pp. 2406–2407.

- De Luca, C.J. Electromyography. In Encyclopedia of Medical Devices and Instrumentation, 2nd ed.; Webster, J.G., Ed.; John Wiley & Sons: Hoboken, NJ, USA, 2006; pp. 98–109.

- Bai, D.; Liu, T.; Han, X.; Chen, G.; Jiang, Y.; Hiroshi, Y. Multi-Channel SEMG Signal Gesture Recognition Based on Improved CNN-LSTM Hybrid Models. In Proceedings of the 2021 IEEE International Conference on Intelligence and Safety for Robotics (ISR), Tokoname, Japan, 4–6 March 2021; pp. 111–116.

- Cao, T.; Liu, D.; Wang, Q.; Bai, O.; Sun, J. Surface Electromyography-Based Action Recognition and Manipulator Control. Appl. Sci. 2020, 10, 5823.

- Benatti, S.; Milosevic, B.; Farella, E.; Gruppioni, E.; Benini, L. A Prosthetic Hand Body Area Controller Based on Efficient Pattern Recognition Control Strategies. Sensors 2017, 17, 869.

- Ulloa, G.D.F.; Sreenivasan, N.; Bifulco, P.; Cesarelli, M.; Gargiulo, G.; Gunawardana, U. Cost Effective Electro-Resistive Band Based Myo Activated Prosthetic Upper Limb for Amputees in the Developing World. In Proceedings of the 2017 IEEE Life Sciences Conference (LSC), Sydney, NSW, Australia, 13–15 December 2017; pp. 250–253.

- Polisiero, M.; Bifulco, P.; Liccardo, A.; Cesarelli, M.; Romano, M.; Gargiulo, G.D.; McEwan, A.L.; D’Apuzzo, M. Design and Assessment of a Low-Cost, Electromyographically Controlled, Prosthetic Hand. Med. Devices 2013, 6, 97–104.

- Gailey, A.; Artemiadis, P.; Santello, M. Proof of Concept of an Online EMG-Based Decoding of Hand Postures and Individual Digit Forces for Prosthetic Hand Control. Front. Neurol. 2017, 8, 7.

- Bernardino, A.; Rybarczyk, Y.; Barata, J. Versatility of Human Body Control through Low-Cost Electromyographic Interface. In Proceedings of the International Conference on Applications of Computer Engineering, San Francisco, CA, USA, 22–24 October 2014.

- Zhao, J.; Jiang, L.; Shi, S.; Cai, H.; Liu, H.; Hirzinger, G. A Five-Fingered Underactuated Prosthetic Hand System. In Proceedings of the 2006 International Conference on Mechatronics and Automation, Luoyang, China, 25–28 June 2006; pp. 1453–1458.

- Carozza, M.C.; Cappiello, G.; Stellin, G.; Zaccone, F.; Vecchi, F.; Micera, S.; Dario, P. On the Development of a Novel Adaptive Prosthetic Hand with Compliant Joints: Experimental Platform and EMG Control. In Proceedings of the 2005 IEEE/RSJ International Conference on Intelligent Robots and Systems, Edmonton, AB, Canada, 2–6 August 2005; pp. 1271–1276.

- Jiang, Y.; Togane, M.; Lu, B.; Yokoi, H. SEMG Sensor Using Polypyrrole-Coated Nonwoven Fabric Sheet for Practical Control of Prosthetic Hand. Front. Neurosci. 2017, 11, 33.

- Brunelli, D.; Tadesse, A.M.; Vodermayer, B.; Nowak, M.; Castellini, C. Low-Cost Wearable Multichannel Surface EMG Acquisition for Prosthetic Hand Control. In Proceedings of the 2015 6th International Workshop on Advances in Sensors and Interfaces (IWASI), Gallipoli, Italy, 18–19 June 2015; pp. 94–99.

- Shair, E.F.; Jamaluddin, N.A.; Abdullah, A.R. Finger Movement Discrimination of EMG Signals Towards Improved Prosthetic Control Using TFD. Int. J. Adv. Comput. Sci. Appl. (IJACSA) 2020, 11, 244–251.

- Khushaba, R.N.; Kodagoda, S.; Takruri, M.; Dissanayake, G. Toward Improved Control of Prosthetic Fingers Using Surface Electromyogram (EMG) Signals. Expert Syst. Appl. 2012, 39, 10731–10738.

- Kamavuako, E.N.; Scheme, E.J.; Englehart, K.B. On the Usability of Intramuscular EMG for Prosthetic Control: A Fitts’ Law Approach. J. Electromyogr. Kinesiol. 2014, 24, 770–777.

- Dewald, H.A.; Lukyanenko, P.; Lambrecht, J.M.; Anderson, J.R.; Tyler, D.J.; Kirsch, R.F.; Williams, M.R. Stable, Three Degree-of-Freedom Myoelectric Prosthetic Control via Chronic Bipolar Intramuscular Electrodes: A Case Study. J. Neuroeng. Rehabil. 2019, 16, 147.

- Al-Timemy, A.H.; Bugmann, G.; Escudero, J.; Outram, N. Classification of Finger Movements for the Dexterous Hand Prosthesis Control with Surface Electromyography. IEEE J. Biomed. Health Inform. 2013, 17, 608–618.

- Zhang, T.; Wang, X.Q.; Jiang, L.; Wu, X.; Feng, W.; Zhou, D.; Liu, H. Biomechatronic Design and Control of an Anthropomorphic Artificial Hand for Prosthetic Applications. Robotica 2016, 34, 2291–2308.

- Dalley, S.A.; Varol, H.A.; Goldfarb, M. A Method for the Control of Multigrasp Myoelectric Prosthetic Hands. IEEE Trans. Neural Syst. Rehabil. Eng. 2012, 20, 58–67.

- Russo, R.E.; Fernández, J.; Rivera, R.; Kuzman, M.G.; López, J.; Gemin, W.; Revuelta, M.Á. Algorithm of Myoelectric Signals Processing for the Control of Prosthetic Robotic Hands. J. Comput. Sci. Technol. 2018, 18, 28–34.

- Stepp, C.E.; Heaton, J.T.; Rolland, R.G.; Hillman, R.E. Neck and Face Surface Electromyography for Prosthetic Voice Control after Total Laryngectomy. IEEE Trans. Neural Syst. Rehabil. Eng. 2009, 17, 146–155.

- Visconti, P.; Gaetani, F.; Zappatore, G.A.; Primiceri, P. Technical Features and Functionalities of Myo Armband: An Overview on Related Literature and Advanced Applications of Myoelectric Armbands Mainly Focused on Arm Prostheses. Int. J. Smart Sens. Intell. Syst. 2018, 11, 1–25.

- Lu, Z.; Zhou, P. Hands-Free Human-Computer Interface Based on Facial Myoelectric Pattern Recognition. Front. Neurol. 2019, 10, 444.

- Kumar, B.; Paul, Y.; Jaswal, R.A. Development of EMG Controlled Electric Wheelchair Using SVM and KNN Classifier for SCI Patients. In Proceedings of the Advanced Informatics for Computing Research, Shimla, India, 15–16 June 2019; pp. 75–83.

- Kalani, H.; Moghimi, S.; Akbarzadeh, A. Towards an SEMG-Based Tele-Operated Robot for Masticatory Rehabilitation. Comput. Biol. Med. 2016, 75, 243–256.

- Zhang, Y.; Zhu, X.; Dai, L.; Luo, Y. Forehead SEMG Signal Based HMI for Hands-Free Control. J. China Univ. Posts Telecommun. 2014, 21, 98–105.

- Hamedi, M.; Salleh, S.-H.; Swee, T.T.; Kamarulafizam. Surface Electromyography-Based Facial Expression Recognition in Bi-Polar Configuration. J. Comput. Sci. 2011, 7, 1407–1415.

- Wege, A.; Zimmermann, A. Electromyography Sensor Based Control for a Hand Exoskeleton. In Proceedings of the 2007 IEEE International Conference on Robotics and Biomimetics (ROBIO), Sanya, China, 15–18 December 2007.

- Ho, N.S.K.; Tong, K.Y.; Hu, X.L.; Fung, K.L.; Wei, X.J.; Rong, W.; Susanto, E.A. An EMG-Driven Exoskeleton Hand Robotic Training Device on Chronic Stroke Subjects: Task Training System for Stroke Rehabilitation. In Proceedings of the 2011 IEEE International Conference on Rehabilitation Robotics, Zurich, Switzerland, 29 June–1 July 2011; pp. 1–5.

- Loconsole, C.; Leonardis, D.; Barsotti, M.; Solazzi, M.; Frisoli, A.; Bergamasco, M.; Troncossi, M.; Foumashi, M.M.; Mazzotti, C.; Castelli, V.P. An Emg-Based Robotic Hand Exoskeleton for Bilateral Training of Grasp. In Proceedings of the 2013 World Haptics Conference (WHC), Daejeon, Korea, 14–17 April 2013; pp. 537–542.

- Hussain, I.; Spagnoletti, G.; Salvietti, G.; Prattichizzo, D. An EMG Interface for the Control of Motion and Compliance of a Supernumerary Robotic Finger. Front. Neurorobot. 2016, 10, 18.

- Abdallah, I.B.; Bouteraa, Y.; Rekik, C. Design and Development of 3d Printed Myoelectric Robotic Exoskeleton for Hand Rehabilitation. Int. J. Smart Sens. Intell. Syst. 2017, 10, 341–366.

- Secciani, N.; Bianchi, M.; Meli, E.; Volpe, Y.; Ridolfi, A. A Novel Application of a Surface ElectroMyoGraphy-Based Control Strategy for a Hand Exoskeleton System: A Single-Case Study. Int. J. Adv. Robot. Syst. 2019, 16, 1729881419828197.

- Song, R.; Tong, K.; Hu, X.; Zhou, W. Myoelectrically Controlled Wrist Robot for Stroke Rehabilitation. J. Neuroeng. Rehabil. 2013, 10, 52.

- Liu, Y.; Li, X.; Zhu, A.; Zheng, Z.; Zhu, H. Design and Evaluation of a Surface Electromyography-Controlled Lightweight Upper Arm Exoskeleton Rehabilitation Robot. Int. J. Adv. Robot. Syst. 2021, 18, 17298814211003460.

- Cai, S.; Chen, Y.; Huang, S.; Wu, Y.; Zheng, H.; Li, X.; Xie, L. SVM-Based Classification of SEMG Signals for Upper-Limb Self-Rehabilitation Training. Front. Neurorobot. 2019, 13, 31.

- Yin, G.; Zhang, X.; Chen, D.; Li, H.; Chen, J.; Chen, C.; Lemos, S. Processing Surface EMG Signals for Exoskeleton Motion Control. Front. Neurorobot. 2020, 14, 40.

- Tang, Z.; Zhang, K.; Sun, S.; Gao, Z.; Zhang, L.; Yang, Z. An Upper-Limb Power-Assist Exoskeleton Using Proportional Myoelectric Control. Sensors 2014, 14, 6677–6694.

- Lu, Z.; Chen, X.; Zhang, X.; Tong, K.-Y.; Zhou, P. Real-Time Control of an Exoskeleton Hand Robot with Myoelectric Pattern Recognition. Int. J. Neural Syst. 2017, 27, 1750009.

- Gui, K.; Liu, H.; Zhang, D. A Practical and Adaptive Method to Achieve EMG-Based Torque Estimation for a Robotic Exoskeleton. IEEE/ASME Trans. Mechatron. 2019, 24, 483–494.

- La Scaleia, V.; Sylos-Labini, F.; Hoellinger, T.; Wang, L.; Cheron, G.; Lacquaniti, F.; Ivanenko, Y.P. Control of Leg Movements Driven by EMG Activity of Shoulder Muscles. Front. Hum. Neurosci. 2014, 8, 838.

- Lyu, M.; Chen, W.-H.; Ding, X.; Wang, J.; Pei, Z.; Zhang, B. Development of an EMG-Controlled Knee Exoskeleton to Assist Home Rehabilitation in a Game Context. Front. Neurorobot. 2019, 13, 67.

- Martínez-Cerveró, J.; Ardali, M.K.; Jaramillo-Gonzalez, A.; Wu, S.; Tonin, A.; Birbaumer, N.; Chaudhary, U. Open Software/Hardware Platform for Human-Computer Interface Based on Electrooculography (EOG) Signal Classification. Sensors 2020, 20, 2443.

- Perez Reynoso, F.D.; Niño Suarez, P.A.; Aviles Sanchez, O.F.; Calva Yañez, M.B.; Vega Alvarado, E.; Portilla Flores, E.A. A Custom EOG-Based HMI Using Neural Network Modeling to Real-Time for the Trajectory Tracking of a Manipulator Robot. Front. Neurorobot. 2020, 14, 67.

- Choudhari, A.M.; Porwal, P.; Jonnalagedda, V.; Mériaudeau, F. An Electrooculography Based Human Machine Interface for Wheelchair Control. Biocybern. Biomed. Eng. 2019, 39, 673–685.

- Heo, J.; Yoon, H.; Park, K.S. A Novel Wearable Forehead EOG Measurement System for Human Computer Interfaces. Sensors 2017, 17, 1485.

- Guo, X.; Pei, W.; Wang, Y.; Chen, Y.; Zhang, H.; Wu, X.; Yang, X.; Chen, H.; Liu, Y.; Liu, R. A Human-Machine Interface Based on Single Channel EOG and Patchable Sensor. Biomed. Signal Process. Control 2016, 30, 98–105. [Google Scholar] [CrossRef]

- Wu, J.F.; Ang, A.M.S.; Tsui, K.M.; Wu, H.C.; Hung, Y.S.; Hu, Y.; Mak, J.N.F.; Chan, S.C.; Zhang, Z.G. Efficient Implementation and Design of a New Single-Channel Electrooculography-Based Human–Machine Interface System. IEEE Trans. Circuits Syst. II Express Briefs 2015, 62, 179–183. [Google Scholar] [CrossRef] [Green Version]

- Ferreira, A.; Silva, R.L.; Celeste, W.C.; Filho, T.F.B.; Filho, M.S. Human–Machine Interface Based on Muscular and Brain Signals Applied to a Robotic Wheelchair. J. Phys. Conf. Ser. 2007, 90, 012094. [Google Scholar] [CrossRef]

- Zhang, J.; Wang, B.; Zhang, C.; Xiao, Y.; Wang, M.Y. An EEG/EMG/EOG-Based Multimodal Human-Machine Interface to Real-Time Control of a Soft Robot Hand. Front. Neurorobot. 2019, 13, 7. [Google Scholar] [CrossRef] [Green Version]

- Huang, Q.; Zhang, Z.; Yu, T.; He, S.; Li, Y. An EEG-/EOG-Based Hybrid Brain-Computer Interface: Application on Controlling an Integrated Wheelchair Robotic Arm System. Front. Neurosci. 2019, 13, 1243. [Google Scholar] [CrossRef] [Green Version]

- Ma, J.; Zhang, Y.; Nam, Y.; Cichocki, A.; Matsuno, F. EOG/ERP Hybrid Human-Machine Interface for Robot Control. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 859–864. [Google Scholar]

- Rezazadeh, I.M.; Firoozabadi, M.; Hu, H.; Golpayegani, S.M.R.H. Co-Adaptive and Affective Human-Machine Interface for Improving Training Performances of Virtual Myoelectric Forearm Prosthesis. IEEE Trans. Affect. Comput. 2012, 3, 285–297. [Google Scholar] [CrossRef]

- Rezazadeh, I.M.; Firoozabadi, S.M.; Hu, H.; Hashemi Golpayegani, S.M.R. A Novel Human–Machine Interface Based on Recognition of Multi-Channel Facial Bioelectric Signals. Australas. Phys. Eng. Sci. Med. 2011, 34, 497–513. [Google Scholar] [CrossRef] [PubMed]

- Iáñez, E.; Úbeda, A.; Azorín, J.M. Multimodal Human-Machine Interface Based on a Brain-Computer Interface and an Electrooculography Interface. In Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–3 September 2011; pp. 4572–4575. [Google Scholar] [CrossRef]

- Laport, F.; Iglesia, D.; Dapena, A.; Castro, P.M.; Vazquez-Araujo, F.J. Proposals and Comparisons from One-Sensor EEG and EOG Human-Machine Interfaces. Sensors 2021, 21, 2220. [Google Scholar] [CrossRef] [PubMed]

- Neto, A.F.; Celeste, W.C.; Martins, V.R.; Filho, T.; Filho, M.S. Human-Machine Interface Based on Electro-Biological Signals for Mobile Vehicles. In Proceedings of the 2006 IEEE International Symposium on Industrial Electronics, Montreal, QC, Canada, 9–13 July 2006. [Google Scholar] [CrossRef]

- Esposito, D. A Piezoresistive Sensor to Measure Muscle Contraction and Mechanomyography. Sensors 2018, 18, 2553. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Prakash, A.; Sahi, A.K.; Sharma, N.; Sharma, S. Force Myography Controlled Multifunctional Hand Prosthesis for Upper-Limb Amputees. Biomed. Signal Process. Control 2020, 62, 102122. [Google Scholar] [CrossRef]

- Wu, Y.; Jiang, D.; Liu, X.; Bayford, R.; Demosthenous, A. A Human-Machine Interface Using Electrical Impedance Tomography for Hand Prosthesis Control. IEEE Trans. Biomed. Circuits Syst. 2018, 12, 1322–1333. [Google Scholar] [CrossRef] [Green Version]

- Asheghabadi, A.S.; Moqadam, S.B.; Xu, J. Multichannel Finger Pattern Recognition Using Single-Site Mechanomyography. IEEE Sens. J. 2021, 21, 8184–8193. [Google Scholar] [CrossRef]

- Chen, X.; Zheng, Y.-P.; Guo, J.-Y.; Shi, J. Sonomyography (SMG) Control for Powered Prosthetic Hand: A Study with Normal Subjects. Ultrasound Med. Biol. 2010, 36, 1076–1088. [Google Scholar] [CrossRef]

- Xiao, Z.; Menon, C. Performance of Forearm FMG and sEMG for Estimating Elbow, Forearm and Wrist Positions. J. Bionic Eng. 2017, 14, 284–295. [Google Scholar] [CrossRef]

- Sakr, M.; Jiang, X.; Menon, C. Estimation of User-Applied Isometric Force/Torque Using Upper Extremity Force Myography. Front. Robot. AI 2019, 6, 120. [Google Scholar] [CrossRef] [Green Version]

- Sakr, M.; Menon, C. Exploratory Evaluation of the Force Myography (FMG) Signals Usage for Admittance Control of a Linear Actuator. In Proceedings of the 2018 7th IEEE International Conference on Biomedical Robotics and Biomechatronics (BioRob), Enschede, The Netherlands, 26–29 August 2018. [Google Scholar] [CrossRef]

- Ahmadizadeh, C.; Pousett, B.; Menon, C. Investigation of Channel Selection for Gesture Classification for Prosthesis Control Using Force Myography: A Case Study. Front. Bioeng. Biotechnol. 2019, 7, 331. [Google Scholar] [CrossRef] [PubMed]

- Xiao, Z.; Elnady, A.M.; Menon, C. Control an Exoskeleton for Forearm Rotation Using FMG. In Proceedings of the 5th IEEE RAS/EMBS International Conference on Biomedical Robotics and Biomechatronics, Sao Paulo, Brazil, 12–15 August 2014. [Google Scholar] [CrossRef]

- Ferigo, D.; Merhi, L.-K.; Pousett, B.; Xiao, Z.; Menon, C. A Case Study of a Force-Myography Controlled Bionic Hand Mitigating Limb Position Effect. J. Bionic Eng. 2017, 14, 692–705. [Google Scholar] [CrossRef]

- Esposito, D. A Piezoresistive Array Armband with Reduced Number of Sensors for Hand Gesture Recognition. Front. Neurorobot. 2020, 13, 114. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Esposito, D.; Savino, S.; Andreozzi, E.; Cosenza, C.; Niola, V.; Bifulco, P. The “Federica” Hand. Bioengineering 2021, 8, 128. [Google Scholar] [CrossRef] [PubMed]

- Esposito, D.; Cosenza, C.; Gargiulo, G.D.; Andreozzi, E.; Niola, V.; Fratini, A.; D’Addio, G.; Bifulco, P. Experimental Study to Improve “Federica” Prosthetic Hand and Its Control System. In Proceedings of the XV Mediterranean Conference on Medical and Biological Engineering and Computing—MEDICON 2019, Coimbra, Portugal, 26–28 September 2019; pp. 586–593. [Google Scholar]

- Ha, N.; Withanachchi, G.P.; Yihun, Y. Force Myography Signal-Based Hand Gesture Classification for the Implementation of Real-Time Control System to a Prosthetic Hand. In Proceedings of the 2018 Design of Medical Devices Conference, Minneapolis, MN, USA, 9–12 April 2018. [Google Scholar]

- Ha, N.; Withanachchi, G.P.; Yihun, Y. Performance of Forearm FMG for Estimating Hand Gestures and Prosthetic Hand Control. J. Bionic Eng. 2019, 16, 88–98. [Google Scholar] [CrossRef]

- Fujiwara, E.; Wu, Y.T.; Suzuki, C.K.; de Andrade, D.T.G.; Neto, A.R.; Rohmer, E. Optical Fiber Force Myography Sensor for Applications in Prosthetic Hand Control. In Proceedings of the 2018 IEEE 15th International Workshop on Advanced Motion Control (AMC), Tokyo, Japan, 9–11 March 2018; pp. 342–347. [Google Scholar]

- Bifulco, P.; Esposito, D.; Gargiulo, G.D.; Savino, S.; Niola, V.; Iuppariello, L.; Cesarelli, M. A Stretchable, Conductive Rubber Sensor to Detect Muscle Contraction for Prosthetic Hand Control. In Proceedings of the 2017 E-Health and Bioengineering Conference (EHB), Sinaia, Romania, 22–24 June 2017; pp. 173–176. [Google Scholar]

- Radmand, A.; Scheme, E.; Englehart, K. High-Density Force Myography: A Possible Alternative for Upper-Limb Prosthetic Control. J. Rehabil. Res. Dev. 2016, 53, 443–456. [Google Scholar] [CrossRef]

- Cho, E.; Chen, R.; Merhi, L.-K.; Xiao, Z.; Pousett, B.; Menon, C. Force Myography to Control Robotic Upper Extremity Prostheses: A Feasibility Study. Front. Bioeng. Biotechnol. 2016, 4, 18. [Google Scholar] [CrossRef] [Green Version]

- Dong, B.; Yang, Y.; Shi, Q.; Xu, S.; Sun, Z.; Zhu, S.; Zhang, Z.; Kwong, D.-L.; Zhou, G.; Ang, K.-W.; et al. Wearable Triboelectric-Human-Machine Interface (THMI) Using Robust Nanophotonic Readout. ACS Nano 2020, 14, 8915–8930. [Google Scholar] [CrossRef]

- An, T.; Anaya, D.V.; Gong, S.; Yap, L.W.; Lin, F.; Wang, R.; Yuce, M.R.; Cheng, W. Self-Powered Gold Nanowire Tattoo Triboelectric Sensors for Soft Wearable Human-Machine Interface. Nano Energy 2020, 77, 105295. [Google Scholar] [CrossRef]

- Clemente, F.; Ianniciello, V.; Gherardini, M.; Cipriani, C. Development of an Embedded Myokinetic Prosthetic Hand Controller. Sensors 2019, 19, 3137. [Google Scholar] [CrossRef] [Green Version]

- Tarantino, S.; Clemente, F.; Barone, D.; Controzzi, M.; Cipriani, C. The Myokinetic Control Interface: Tracking Implanted Magnets as a Means for Prosthetic Control. Sci. Rep. 2017, 7, 17149. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Kumar, S.; Sultan, M.J.; Ullah, A.; Zameer, S.; Siddiqui, S.; Sami, S.K. Human Machine Interface Glove Using Piezoresistive Textile Based Sensors. IOP Conf. Ser. Mater. Sci. Eng. 2018, 414, 012041. [Google Scholar] [CrossRef]

- Castellini, C.; Kõiva, R.; Pasluosta, C.; Viegas, C.; Eskofier, B.M. Tactile Myography: An Off-Line Assessment of Able-Bodied Subjects and One Upper-Limb Amputee. Technologies 2018, 6, 38. [Google Scholar] [CrossRef] [Green Version]

- Dong, W.; Xiao, L.; Hu, W.; Zhu, C.; Huang, Y.; Yin, Z. Wearable Human–Machine Interface Based on PVDF Piezoelectric Sensor. Trans. Inst. Meas. Control 2017, 39, 398–403. [Google Scholar] [CrossRef]

- Lim, S.; Son, D.; Kim, J.; Lee, Y.B.; Song, J.-K.; Choi, S.; Lee, D.J.; Kim, J.H.; Lee, M.; Hyeon, T.; et al. Transparent and Stretchable Interactive Human Machine Interface Based on Patterned Graphene Heterostructures. Adv. Funct. Mater. 2015, 25, 375–383. [Google Scholar] [CrossRef]

- Rasouli, M.; Ghosh, R.; Lee, W.W.; Thakor, N.V.; Kukreja, S. Stable Force-Myographic Control of a Prosthetic Hand Using Incremental Learning. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 4828–4831. [Google Scholar]

- Islam, M.A.; Sundaraj, K.; Ahmad, R.B.; Sundaraj, S.; Ahamed, N.U.; Ali, M.A. Cross-Talk in Mechanomyographic Signals from the Forearm Muscles during Sub-Maximal to Maximal Isometric Grip Force. PLoS ONE 2014, 9, e96628. [Google Scholar] [CrossRef] [Green Version]

- Islam, M.A.; Sundaraj, K.; Ahmad, R.; Ahamed, N.; Ali, M. Mechanomyography Sensor Development, Related Signal Processing, and Applications: A Systematic Review. IEEE Sens. J. 2013, 13, 2499–2516. [Google Scholar] [CrossRef]

- Orizio, C.; Gobbo, M. Mechanomyography. In Encyclopedia of Medical Devices and Instrumentation, 2nd ed.; John Wiley & Sons: Hoboken, NJ, USA, 2006. [Google Scholar]

- Ibitoye, M.O.; Hamzaid, N.A.; Zuniga, J.M.; Abdul Wahab, A.K. Mechanomyography and Muscle Function Assessment: A Review of Current State and Prospects. Clin. Biomech. 2014, 29, 691–704. [Google Scholar] [CrossRef]

- Castillo, C.S.M.; Wilson, S.; Vaidyanathan, R.; Atashzar, S.F. Wearable MMG-Plus-One Armband: Evaluation of Normal Force on Mechanomyography (MMG) to Enhance Human-Machine Interfacing. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 196–205. [Google Scholar] [CrossRef]

- Wicaksono, D.H.B.; Soetjipto, J.; Ughi, F.; Iskandar, A.A.; Santi, F.A.; Biben, V. Wireless Synchronous Carbon Nanotube-Patch Mechanomyography of Leg Muscles. In Proceedings of the 2020 IEEE SENSORS, Rotterdam, The Netherlands, 25–28 October 2020; pp. 1–4. [Google Scholar]

- Xie, H.-B.; Zheng, Y.-P.; Guo, J.-Y. Classification of the Mechanomyogram Signal Using a Wavelet Packet Transform and Singular Value Decomposition for Multifunction Prosthesis Control. Physiol. Meas. 2009, 30, 441–457. [Google Scholar] [CrossRef] [PubMed]

- Huang, Y.; Yang, X.; Li, Y.; Zhou, D.; He, K.; Liu, H. Ultrasound-Based Sensing Models for Finger Motion Classification. IEEE J. Biomed. Health Inform. 2018, 22, 1395–1405. [Google Scholar] [CrossRef] [Green Version]

- Li, Y.; He, K.; Sun, X.; Liu, H. Human-Machine Interface Based on Multi-Channel Single-Element Ultrasound Transducers: A Preliminary Study. In Proceedings of the 2016 IEEE 18th International Conference on e-Health Networking, Applications and Services (Healthcom), Munich, Germany, 14–16 September 2016; pp. 1–6. [Google Scholar]

- Ortenzi, V.; Tarantino, S.; Castellini, C.; Cipriani, C. Ultrasound Imaging for Hand Prosthesis Control: A Comparative Study of Features and Classification Methods. In Proceedings of the 2015 IEEE International Conference on Rehabilitation Robotics (ICORR), Singapore, 11–14 August 2015; pp. 1–6. [Google Scholar]

- Sikdar, S.; Rangwala, H.; Eastlake, E.B.; Hunt, I.A.; Nelson, A.J.; Devanathan, J.; Shin, A.; Pancrazio, J.J. Novel Method for Predicting Dexterous Individual Finger Movements by Imaging Muscle Activity Using a Wearable Ultrasonic System. IEEE Trans. Neural Syst. Rehabil. Eng. 2014, 22, 69–76. [Google Scholar] [CrossRef]

- Sierra González, D.; Castellini, C. A Realistic Implementation of Ultrasound Imaging as a Human-Machine Interface for Upper-Limb Amputees. Front. Neurorobot. 2013, 7, 17. [Google Scholar] [CrossRef] [Green Version]

- Castellini, C.; Gonzalez, D.S. Ultrasound Imaging as a Human-Machine Interface in a Realistic Scenario. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 1486–1492. [Google Scholar]

- Shi, J.; Chang, Q.; Zheng, Y.-P. Feasibility of Controlling Prosthetic Hand Using Sonomyography Signal in Real Time: Preliminary Study. J. Rehabil. Res. Dev. 2010, 47, 87–98. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Booth, R.; Goldsmith, P. A Wrist-Worn Piezoelectric Sensor Array for Gesture Input. J. Med. Biol. Eng. 2018, 38, 284–295. [Google Scholar] [CrossRef]

- Maule, L.; Luchetti, A.; Zanetti, M.; Tomasin, P.; Pertile, M.; Tavernini, M.; Guandalini, G.M.A.; De Cecco, M. RoboEye, an Efficient, Reliable and Safe Semi-Autonomous Gaze Driven Wheelchair for Domestic Use. Technologies 2021, 9, 16. [Google Scholar] [CrossRef]

- Lin, C.-S.; Chan, C.-N.; Lay, Y.-L.; Lee, J.-F.; Yeh, M.-S. An Eye-Tracking Human-Machine Interface Using an Auto Correction Method. J. Med. Biol. Eng. 2007, 27, 105–109. [Google Scholar]

- Conci, N.; Ceresato, P.; De Natale, F.G.B. Natural Human-Machine Interface Using an Interactive Virtual Blackboard. In Proceedings of the 2007 IEEE International Conference on Image Processing, San Antonio, TX, USA, 16–19 September 2007; Volume 5, pp. V-181–V-184. [Google Scholar]

- Baklouti, M.; Bruin, M.; Guitteny, V.; Monacelli, E. A Human-Machine Interface for Assistive Exoskeleton Based on Face Analysis. In Proceedings of the 2008 2nd IEEE RAS EMBS International Conference on Biomedical Robotics and Biomechatronics, Scottsdale, AZ, USA, 19–22 October 2008; pp. 913–918. [Google Scholar]

- Chang, C.-M.; Lin, C.-S.; Chen, W.-C.; Chen, C.-T.; Hsu, Y.-L. Development and Application of a Human–Machine Interface Using Head Control and Flexible Numeric Tables for the Severely Disabled. Appl. Sci. 2020, 10, 7005. [Google Scholar] [CrossRef]

- Gautam, A.K.; Vasu, V.; Raju, U.S.N. Human Machine Interface for Controlling a Robot Using Image Processing. Procedia Eng. 2014, 97, 291–298. [Google Scholar] [CrossRef] [Green Version]

- Gómez-Portes, C.; Lacave, C.; Molina, A.I.; Vallejo, D. Home Rehabilitation Based on Gamification and Serious Games for Young People: A Systematic Mapping Study. Appl. Sci. 2020, 10, 8849. [Google Scholar] [CrossRef]

- Palaniappan, S.M.; Duerstock, B.S. Developing Rehabilitation Practices Using Virtual Reality Exergaming. In Proceedings of the 2018 IEEE International Symposium on Signal Processing and Information Technology (ISSPIT), Louisville, KY, USA, 6–8 December 2018; pp. 90–94. [Google Scholar]

- Nguyen, A.-V.; Ong, Y.-L.A.; Luo, C.X.; Thuraisingam, T.; Rubino, M.; Levin, M.F.; Kaizer, F.; Archambault, P.S. Virtual Reality Exergaming as Adjunctive Therapy in a Sub-Acute Stroke Rehabilitation Setting: Facilitators and Barriers. Disabil. Rehabil. Assist. Technol. 2019, 14, 317–324. [Google Scholar] [CrossRef]

- Chuang, W.-C.; Hwang, W.-J.; Tai, T.-M.; Huang, D.-R.; Jhang, Y.-J. Continuous Finger Gesture Recognition Based on Flex Sensors. Sensors 2019, 19, 3986. [Google Scholar] [CrossRef] [Green Version]

- Dong, W.; Yang, L.; Fortino, G. Stretchable Human Machine Interface Based on Smart Glove Embedded with PDMS-CB Strain Sensors. IEEE Sens. J. 2020, 20, 8073–8081. [Google Scholar] [CrossRef]

- Zhu, C.; Li, R.; Chen, X.; Chalmers, E.; Liu, X.; Wang, Y.; Xu, B.B.; Liu, X. Ultraelastic Yarns from Curcumin-Assisted ELD toward Wearable Human–Machine Interface Textiles. Adv. Sci. 2020, 7, 2002009. [Google Scholar] [CrossRef] [PubMed]

- Hang, C.-Z.; Zhao, X.-F.; Xi, S.-Y.; Shang, Y.-H.; Yuan, K.-P.; Yang, F.; Wang, Q.-G.; Wang, J.-C.; Zhang, D.W.; Lu, H.-L. Highly Stretchable and Self-Healing Strain Sensors for Motion Detection in Wireless Human-Machine Interface. Nano Energy 2020, 76, 105064. [Google Scholar] [CrossRef]

- Ueki, S.; Kawasaki, H.; Ito, S.; Nishimoto, Y.; Abe, M.; Aoki, T.; Ishigure, Y.; Ojika, T.; Mouri, T. Development of a Hand-Assist Robot With Multi-Degrees-of-Freedom for Rehabilitation Therapy. IEEE/ASME Trans. Mechatron. 2012, 17, 136–146. [Google Scholar] [CrossRef]

- Rahman, M.A.; Al-Jumaily, A. Design and Development of a Hand Exoskeleton for Rehabilitation Following Stroke. Procedia Eng. 2012, 41, 1028–1034. [Google Scholar] [CrossRef] [Green Version]

- Cortese, M.; Cempini, M.; de Almeida Ribeiro, P.R.; Soekadar, S.R.; Carrozza, M.C.; Vitiello, N. A Mechatronic System for Robot-Mediated Hand Telerehabilitation. IEEE/ASME Trans. Mechatron. 2015, 20, 1753–1764. [Google Scholar] [CrossRef]

- Han, H.; Yoon, S.W. Gyroscope-Based Continuous Human Hand Gesture Recognition for Multi-Modal Wearable Input Device for Human Machine Interaction. Sensors 2019, 19, 2562. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Wei, L.; Hu, H. A Hybrid Human-Machine Interface for Hands-Free Control of an Intelligent Wheelchair. Int. J. Mechatron. Autom. 2011, 1, 97–111. [Google Scholar] [CrossRef] [Green Version]

- Huang, Y.; Yang, J.; Liu, S.; Pan, J. Combining Facial Expressions and Electroencephalography to Enhance Emotion Recognition. Future Internet 2019, 11, 105. [Google Scholar] [CrossRef] [Green Version]

- Downey, J.E.; Weiss, J.M.; Muelling, K.; Venkatraman, A.; Valois, J.-S.; Hebert, M.; Bagnell, J.A.; Schwartz, A.B.; Collinger, J.L. Blending of Brain-Machine Interface and Vision-Guided Autonomous Robotics Improves Neuroprosthetic Arm Performance during Grasping. J. Neuroeng. Rehabil. 2016, 13, 28. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Bu, N.; Bandou, Y.; Fukuda, O.; Okumura, H.; Arai, K. A Semi-Automatic Control Method for Myoelectric Prosthetic Hand Based on Image Information of Objects. In Proceedings of the 2017 International Conference on Intelligent Informatics and Biomedical Sciences (ICIIBMS), Okinawa, Japan, 24–26 November 2017; pp. 23–28. [Google Scholar]

- Malechka, T.; Tetzel, T.; Krebs, U.; Feuser, D.; Graeser, A. SBCI-Headset—Wearable and Modular Device for Hybrid Brain-Computer Interface. Micromachines 2015, 6, 291–311. [Google Scholar] [CrossRef] [Green Version]

- McMullen, D.P.; Hotson, G.; Katyal, K.D.; Wester, B.A.; Fifer, M.S.; McGee, T.G.; Harris, A.; Johannes, M.S.; Vogelstein, R.J.; Ravitz, A.D.; et al. Demonstration of a Semi-Autonomous Hybrid Brain-Machine Interface Using Human Intracranial EEG, Eye Tracking, and Computer Vision to Control a Robotic Upper Limb Prosthetic. IEEE Trans. Neural Syst. Rehabil. Eng. 2014, 22, 784–796. [Google Scholar] [CrossRef] [Green Version]

- Frisoli, A.; Loconsole, C.; Leonardis, D.; Banno, F.; Barsotti, M.; Chisari, C.; Bergamasco, M. A New Gaze-BCI-Driven Control of an Upper Limb Exoskeleton for Rehabilitation in Real-World Tasks. IEEE Trans. Syst. Man Cybern. Part C (Appl. Rev.) 2012, 42, 1169–1179. [Google Scholar] [CrossRef]

- Dunai, L.; Novak, M.; García Espert, C. Human Hand Anatomy-Based Prosthetic Hand. Sensors 2021, 21, 137. [Google Scholar] [CrossRef]

- Krasoulis, A.; Kyranou, I.; Erden, M.S.; Nazarpour, K.; Vijayakumar, S. Improved Prosthetic Hand Control with Concurrent Use of Myoelectric and Inertial Measurements. J. Neuroeng. Rehabil. 2017, 14, 71. [Google Scholar] [CrossRef]

- Shahzad, W.; Ayaz, Y.; Khan, M.J.; Naseer, N.; Khan, M. Enhanced Performance for Multi-Forearm Movement Decoding Using Hybrid IMU–sEMG Interface. Front. Neurorobot. 2019, 13, 43. [Google Scholar] [CrossRef] [Green Version]

- Kyranou, I.; Krasoulis, A.; Erden, M.S.; Nazarpour, K.; Vijayakumar, S. Real-Time Classification of Multi-Modal Sensory Data for Prosthetic Hand Control. In Proceedings of the 2016 6th IEEE International Conference on Biomedical Robotics and Biomechatronics (BioRob), Singapore, 26–29 June 2016; pp. 536–541. [Google Scholar]

- Jaquier, N.; Connan, M.; Castellini, C.; Calinon, S. Combining Electromyography and Tactile Myography to Improve Hand and Wrist Activity Detection in Prostheses. Technologies 2017, 5, 64. [Google Scholar] [CrossRef] [Green Version]

- Guo, W.; Sheng, X.; Liu, H.; Zhu, X. Toward an Enhanced Human–Machine Interface for Upper-Limb Prosthesis Control with Combined EMG and NIRS Signals. IEEE Trans. Hum.-Mach. Syst. 2017, 47, 564–575. [Google Scholar] [CrossRef]

- Dwivedi, A.; Gerez, L.; Hasan, W.; Yang, C.-H.; Liarokapis, M. A Soft Exoglove Equipped with a Wearable Muscle-Machine Interface Based on Forcemyography and Electromyography. IEEE Robot. Autom. Lett. 2019, 4, 3240–3246. [Google Scholar] [CrossRef]

- Perez, E.; López, N.; Orosco, E.; Soria, C.; Mut, V.; Freire-Bastos, T. Robust Human Machine Interface Based on Head Movements Applied to Assistive Robotics. Sci. World J. 2013, 2013, e589636. [Google Scholar] [CrossRef] [Green Version]

- Bastos-Filho, T.F.; Cheein, F.A.; Müller, S.M.T.; Celeste, W.C.; de la Cruz, C.; Cavalieri, D.C.; Sarcinelli-Filho, M.; Amaral, P.F.S.; Perez, E.; Soria, C.M.; et al. Towards a New Modality-Independent Interface for a Robotic Wheelchair. IEEE Trans. Neural Syst. Rehabil. Eng. 2014, 22, 567–584. [Google Scholar] [CrossRef] [PubMed]

- Anwer, S.; Waris, A.; Sultan, H.; Butt, S.I.; Zafar, M.H.; Sarwar, M.; Niazi, I.K.; Shafique, M.; Pujari, A.N. Eye and Voice-Controlled Human Machine Interface System for Wheelchairs Using Image Gradient Approach. Sensors 2020, 20, 5510. [Google Scholar] [CrossRef] [PubMed]

- Gardner, M.; Mancero Castillo, C.S.; Wilson, S.; Farina, D.; Burdet, E.; Khoo, B.C.; Atashzar, S.F.; Vaidyanathan, R. A Multimodal Intention Detection Sensor Suite for Shared Autonomy of Upper-Limb Robotic Prostheses. Sensors 2020, 20, 6097. [Google Scholar] [CrossRef] [PubMed]

- Wu, C.-M.; Chen, Y.-J.; Chen, S.-C.; Yeng, C.-H. Wireless Home Assistive System for Severely Disabled People. Appl. Sci. 2020, 10, 5226. [Google Scholar] [CrossRef]

- Assistive Robotics and Rehabilitation. Available online: https://www.knowledge-share.eu/en/sector/assistive-robotics-and-rehabilitation/ (accessed on 26 August 2021).

- Andreoni, G.; Parini, S.; Maggi, L.; Piccini, L.; Panfili, G.; Torricelli, A. Human Machine Interface for Healthcare and Rehabilitation. In Advanced Computational Intelligence Paradigms in Healthcare-2; Vaidya, S., Jain, L.C., Yoshida, H., Eds.; Studies in Computational Intelligence; Springer: Berlin/Heidelberg, Germany, 2007; pp. 131–150. ISBN 978-3-540-72375-2. [Google Scholar]

| Authors [Reference] | Title | Topic |
|---|---|---|
| Taylor et al. [18] | The use of gaming technology for rehabilitation in people with multiple sclerosis | Exergaming |
| De Gama et al. [29] | Motor Rehabilitation Using Kinect: A Systematic Review | Exergaming |
| Laver et al. [30] | Virtual reality for stroke rehabilitation | Exergaming |
| Wright et al. [23] | A Review of Control Strategies in Closed-Loop Neuroprosthetic Systems | Prosthetic control |
| Ciancio et al. [24] | Control of Prosthetic Hands via the Peripheral Nervous System | Prosthetic control |
| Frisoli et al. [22] | New generation emerging technologies for neurorehabilitation and motor assistance | Wearable devices (exoskeletons) |
| Rosly et al. [31] | Exergaming for individuals with neurological disability: A systematic review | Exergaming |
| Lazarou et al. [5] | EEG-Based Brain–Computer Interfaces for Communication and Rehabilitation of People with Motor Impairment: A Novel Approach of the 21st Century | BCI |
| Ngan et al. [25] | Strategies for neural control of prosthetic limbs: From electrode interfacing to 3D printing | Prosthetic control |
| Parajuli et al. [26] | Real-Time EMG Based Pattern Recognition Control for Hand Prostheses: A Review on Existing Methods, Challenges, and Future Implementation | Prosthetic control |
| Igual et al. [27] | Myoelectric Control for Upper Limb Prostheses | Prosthetic control |
| Kumar et al. [28] | Prosthetic hand control: A multidisciplinary review to identify strengths, shortcomings, and the future | Prosthetic control |
| Reis et al. [32] | Exergames for motor rehabilitation in older adults: An umbrella review | Exergaming |
| Garcia-Agundez et al. [20] | Recent advances in rehabilitation for Parkinson’s Disease with exergames: A Systematic Review | Exergaming |
| Fatima et al. [19] | Intracortical brain–machine interfaces for controlling upper-limb-powered muscle and robotic systems in spinal cord injury | Prosthetic control |
| Grushko et al. [10] | Control Methods for Transradial Prostheses Based on Remnant Muscle Activity and Its Relationship with Proprioceptive Feedback | Prosthetic control |
| Mohebbi et al. [21] | Human–Robot Interaction in Rehabilitation and Assistance: A Review | Robotic control |
| Ptito et al. [6] | Brain–Machine Interfaces to Assist the Blind | BCI |
| Li et al. [33] | Gesture Recognition Using Surface Electromyography and Deep Learning for Prostheses Hand: State-of-the-Art, Challenges, and Future | Prosthetic control |
| Ahmadizadeh et al. [9] | Human Machine Interfaces in Upper-Limb Prosthesis Control: A Survey of Techniques for Preprocessing and Processing of Biosignals | Prosthetic control |
| Baniqued et al. [7] | Brain–computer interface robotics for hand rehabilitation after stroke: A systematic review | BCI |

| Authors [Reference] | Kind of Biopotential | Target | Field |
|---|---|---|---|
| Gao et al. [46] | Scalp EEG | Prosthetic Control | Assistance |
| Gannouni et al. [47] | Scalp EEG | Prosthetic Control | Assistance, Rehabilitation |
| Fuentes-Gonzalez et al. [48] | Scalp EEG | Prosthetic Control | Assistance |
| Song et al. [49] | Scalp EEG | Robotic Control | Assistance, Rehabilitation |
| Korovesis et al. [50] | Scalp EEG | Robotic Control | Assistance |
| Antoniou et al. [64] | Scalp EEG | Robotic Control | Rehabilitation |
| Xu et al. [14] | Scalp EEG | Robotic Control | Assistance, Rehabilitation |
| Liang et al. [34] | Scalp EEG | Robotic Control | Assistance, Rehabilitation |
| Matsushita et al. [65] | ECoG | Robotic Control | Assistance |
| Spataro et al. [66] | Scalp EEG | Robotic Control | Assistance |
| López-Larraz et al. [67] | Scalp EEG | Robotic Control | Rehabilitation |
| Xu et al. [36] | Scalp EEG | Robotic Control | Rehabilitation |
| Kwak et al. [82] | Scalp EEG | Robotic Control | Rehabilitation |
| Hortal et al. [68] | Scalp EEG | Robotic Control | Assistance, Rehabilitation |
| Varada et al. [15] | Scalp EEG | Robotic Control, Smart Environment Control | Assistance, Rehabilitation |
| Wang et al. [69] | Scalp EEG | Robotic Control, Prosthetic Control | Rehabilitation |
| Zhan Hong et al. [70] | Scalp EEG | Prosthetic Control | Assistance |
| Ortiz et al. [71] | Scalp EEG | Robotic Control | Assistance, Rehabilitation |
| Kasim et al. [72] | Scalp EEG | Prosthetic Control | Assistance |
| Murphy et al. [73] | Scalp EEG | Prosthetic Control | Assistance |
| Li et al. [74] | sEEG | Prosthetic Control | Assistance |
| Bhagat et al. [75] | Scalp EEG | Robotic Control | Rehabilitation |
| Morishita et al. [76] | ECoG | Prosthetic Control | Rehabilitation |
| Zhang et al. [77] | Scalp EEG | Prosthetic Control | Assistance, Rehabilitation |
| Yanagisawa et al. [78] | ECoG | Prosthetic Control | Assistance, Rehabilitation |
| He et al. [35] | Scalp EEG | Robotic Control | Rehabilitation |
| Tang et al. [79] | Scalp EEG | Robotic Control | Assistance |
| Randazzo et al. [80] | Scalp EEG | Robotic Control | Assistance, Rehabilitation |
| Li et al. [81] | Scalp EEG | Robotic Control | Assistance, Rehabilitation |
| Araujo et al. [83] | Scalp EEG | Robotic Control | Rehabilitation |
| Kashihara et al. [84] | Scalp EEG | Communication | Assistance |
| Mahmoudi and Erfanian [85] | Scalp EEG | Prosthetic Control | Assistance |

| Authors [Reference] | Kind of Biopotential | Target | Field |
|---|---|---|---|
| Eisenberg et al. [53] | sEMG | Gesture Recognition, Prosthetic Control | Assistance |
| Tavakoli et al. [54] | sEMG | Gesture Recognition, Prosthetic Control | Assistance |
| Bai et al. [87] | sEMG | Gesture Recognition, Prosthetic Control | Assistance |
| Cao et al. [88] | sEMG | Prosthetic Control | Assistance |
| Benatti et al. [89] | sEMG | Gesture Recognition, Prosthetic Control | Assistance |
| Ulloa et al. [90] | sEMG | Prosthetic Control | Assistance |
| Polisiero et al. [91] | sEMG | Prosthetic Control | Assistance |
| Gailey et al. [92] | sEMG | Gesture Recognition, Prosthetic Control | Assistance |
| Bernardino et al. [93] | sEMG | Gesture Recognition, Prosthetic Control | Assistance |
| Zhao et al. [94] | sEMG | Gesture Recognition, Prosthetic Control | Assistance |
| Carrozza et al. [95] | sEMG | Prosthetic Control | Assistance |
| Jiang et al. [96] | sEMG | Gesture Recognition, Prosthetic Control | Assistance |
| Brunelli et al. [97] | sEMG | Gesture Recognition, Prosthetic Control | Assistance |
| Shair et al. [98] | sEMG | Prosthetic Control | Assistance |
| Khushaba et al. [99] | sEMG | Gesture Recognition, Prosthetic Control | Assistance |
| Kamavuako et al. [100] | imEMG | Prosthetic Control | Assistance |
| Dewald et al. [101] | imEMG | Gesture Recognition, Prosthetic Control, Virtual Reality Control | Assistance |
| Al-Timemy et al. [102] | sEMG | Gesture Recognition, Prosthetic Control | Assistance |
| Zhang et al. [103] | sEMG | Prosthetic Control | Assistance |
| Dalley et al. [104] | sEMG | Prosthetic Control | Assistance |
| Russo et al. [105] | sEMG | Gesture Recognition, Prosthetic Control | Assistance |
| Stepp et al. [106] | sEMG | Prosthetic Control | Rehabilitation |
| Visconti et al. [107] | sEMG | Gesture Recognition, Prosthetic Control, Robotic Control, Smart Environment Control, Virtual Reality Control | Assistance, Rehabilitation |
| Lu and Zhou [108] | sEMG | Smart Environment Control | Assistance |
| Kumar et al. [109] | sEMG | Robotic Control | Assistance |
| Kalani et al. [110] | sEMG | Robotic Control | Rehabilitation |
| Alibhai et al. [39] | sEMG | Gesture Recognition, Robotic Control | Assistance |
| Fall et al. [37] | sEMG | Robotic Control | Assistance |
| Song et al. [40] | sEMG | Gesture Recognition, Robotic Control | Assistance |
| Laksono et al. [38] | sEMG | Robotic Control | Assistance |
| Xu et al. [41] | sEMG | Robotic Control | Assistance |
| Zhang et al. [111] | sEMG | Robotic Control | Assistance |
| Hamedi et al. [112] | sEMG | Gesture Recognition | Assistance, Rehabilitation |
| Wege and Zimmermann [113] | sEMG | Robotic Control | Rehabilitation |
| Ho et al. [114] | sEMG | Robotic Control | Rehabilitation |
| Loconsole et al. [115] | sEMG | Robotic Control | Rehabilitation |
| Hussain et al. [116] | sEMG | Gesture Recognition, Robotic Control | Assistance |
| Abdallah et al. [117] | sEMG | Robotic Control | Rehabilitation |
| Secciani et al. [118] | sEMG | Robotic Control | Assistance |
| Song et al. [119] | sEMG | Robotic Control | Rehabilitation |
| Liu et al. [120] | sEMG | Robotic Control | Rehabilitation |
| Cai et al. [121] | sEMG | Robotic Control | Rehabilitation |
| Yin et al. [122] | sEMG | Robotic Control | Rehabilitation |
| Tang et al. [123] | sEMG | Robotic Control | Rehabilitation |
| Lu et al. [124] | sEMG | Robotic Control | Rehabilitation |
| Gui et al. [125] | sEMG | Robotic Control | Rehabilitation |
| La Scaleia et al. [126] | sEMG | Robotic Control, Virtual Reality Control | Assistance, Rehabilitation |
| Lyu et al. [127] | sEMG | Robotic Control | Rehabilitation |

| Authors [Reference] | Target | Field |
|---|---|---|
| Noce et al. [52] | Gesture Recognition, Prosthetic Control | Assistance |
| Nguyen et al. [55] | Prosthetic Control | Assistance |
| Noce et al. [59] | Gesture Recognition, Prosthetic Control | Assistance |

| Authors [Reference] | Target | Field |
|---|---|---|
| Golparvar and Yapici [56] | Robotic Control, Smart Environment Control | Assistance |
| Zhang et al. [42] | Smart Environment Control | Assistance |
| Huang et al. [57] | Robotic Control | Assistance |
| Martínez-Cerveró et al. [128] | Communication | Assistance |
| Perez Reynoso et al. [129] | Robotic Control | Assistance |
| Choudhari et al. [130] | Robotic Control | Assistance |
| Heo et al. [131] | Communication, Robotic Control | Assistance |
| Guo et al. [132] | Smart Environment Control | Assistance |
| Wu et al. [133] | Robotic Control, Smart Environment Control | Assistance, Rehabilitation |

| Authors [Reference] | Kind of Biopotential | Target | Field |
|---|---|---|---|
| Gordleeva et al. [51] | EEG + EMG | Robotic Control | Rehabilitation |
| Ferreira et al. [134] | EEG + EMG | Robotic Control | Assistance |
| Zhang et al. [135] | EEG + EMG + EOG | Gesture Recognition, Robotic Control | Assistance, Rehabilitation |
| Huang et al. [136] | EEG + EOG | Robotic Control | Assistance |
| Ma et al. [12] | EEG + EOG | Robotic Control | Assistance |
| Ma et al. [137] | EEG + EOG | Robotic Control | Assistance |
| Arrow et al. [58] | EMG + ERG | Prosthetic Control | Assistance |
| Rezazadeh et al. [138] | EEG + EMG | Virtual Reality Control | Assistance |
| Rezazadeh et al. [139] | EEG + EMG + EOG | Communication, Gesture Recognition | Assistance, Rehabilitation |
| Iáñez et al. [140] | EEG + EOG | Smart Environment Control | Assistance |
| Laport et al. [141] | EEG + EOG | Smart Environment Control | Assistance |
| Neto et al. [142] | EEG + EMG + EOG | Robotic Control | Assistance |

| Authors [Reference] | Kind of Sensor | Application Site | Target | Field |
|---|---|---|---|---|
| Prakash et al. [144] | FSR | Forearm | Prosthetic Control | Assistance |
| Clemente et al. [165] | Magnetic Field | Forearm | Prosthetic Control | Assistance |
| Xiao et al. [152] | FSR | Forearm | Robotic Control | Rehabilitation |
| Ferigo et al. [153] | FSR | Forearm | Prosthetic Control | Assistance |
| Esposito et al. [154] | FSR | Forearm | Gesture Recognition, Prosthetic Control | Assistance |
| Esposito et al. [155] | FSR | Forearm | Prosthetic Control | Assistance |
| Esposito et al. [156] | FSR | Forearm | Prosthetic Control | Assistance |
| Ha et al. [157] | Piezoelectric | Forearm | Prosthetic Control | Assistance |
| Ha et al. [158] | Piezoelectric | Forearm | Prosthetic Control | Assistance |
| Ahmadizadeh et al. [151] | FSR | Forearm | Prosthetic Control | Assistance |
| Fujiwara et al. [159] | Optical Fibre | Forearm | Gesture Recognition, Prosthetic Control, Virtual Reality Control | Assistance, Rehabilitation |
| Bifulco et al. [160] | Resistive | Forearm | Prosthetic Control | Assistance |
| Radmand et al. [161] | FSR | Forearm | Prosthetic Control | Assistance |
| Cho et al. [162] | FSR | Forearm | Prosthetic Control | Assistance |
| Dong et al. [163] | Triboelectric | Hand | Robotic Control, Virtual Reality Control | Assistance, Rehabilitation |
| Zhu et al. [16] | Triboelectric | Hand | Robotic Control, Virtual Reality Control | Assistance, Rehabilitation |
| An et al. [164] | Triboelectric | Arm | Robotic Control, Smart Environment Control | Assistance |
| Tarantino et al. [166] | Magnetic Field | Forearm | Prosthetic Control | Assistance |
| Kumar et al. [167] | Piezoresistive | Hand | Communication, Smart Environment Control | Assistance |
| Castellini et al. [168] | Resistive | Forearm | Prosthetic Control | Assistance |
| Dong et al. [169] | Piezoelectric | Wrist | Prosthetic Control | Assistance |
| Lim et al. [170] | Piezoelectric | Forearm, Wrist | Robotic Control | Assistance |
| Rasouli et al. [171] | Piezoelectric | Forearm | Prosthetic Control | Assistance |

| Authors [Reference] | Kind of Sensor | Application Site | Target | Field |
|---|---|---|---|---|
| Asheghabadi et al. [146] | Piezoelectric + Strain Gauge | Forearm | Prosthetic Control | Assistance |
| Castillo et al. [176] | Microphone | Forearm | Prosthetic Control | Assistance |
| Wicaksono et al. [177] | Piezoresistive | Lower limb | Prosthetic Control, Robotic Control | Assistance, Rehabilitation |
| Xie et al. [178] | Accelerometer | Forearm | Gesture Recognition, Prosthetic Control | Assistance |

| Authors [Reference] | Kind of Sensor | Application Site | Target | Field |
|---|---|---|---|---|
| Wu et al. [145] | Bioamplifier | Forearm | Gesture Recognition, Prosthetic Control | Assistance |
| Chen et al. [147] | US probe | Forearm | Prosthetic Control | Assistance |
| Huang et al. [179] | US probe | Forearm | Gesture Recognition, Prosthetic Control, Robotic Control | Assistance |
| Li et al. [180] | US transducer | Forearm | Gesture Recognition, Robotic Control | Rehabilitation |
| Ortenzi et al. [181] | US probe | Forearm | Prosthetic Control | Assistance |
| Sikdar et al. [182] | US probe | Forearm | Prosthetic Control | Assistance |
| Sierra González et al. [183] | US probe | Forearm | Robotic Control | Rehabilitation |
| Castellini et al. [184] | US probe | Forearm | Robotic Control | Rehabilitation |
| Shi et al. [185] | US probe | Forearm | Prosthetic Control | Assistance |

| Authors [Reference] | Kind of Sensor | Application Site | Target | Field |
|---|---|---|---|---|
| Esposito et al. [143] | FSR | Forearm | Prosthetic Control | Assistance |
| Booth et al. [186] | Piezoelectric | Wrist | Gesture Recognition, Prosthetic Control, Robotic Control, Smart Environment Control, Virtual Reality Control | Assistance, Rehabilitation |

| Authors [Reference] | Tracked Body Part | Target | Field |
|---|---|---|---|
| Maule et al. [187] | Eyes | Robotic Control | Assistance |
| Bissoli et al. [43] | Eyes | Smart Environment Control | Assistance |
| Lin et al. [188] | Eyes | Smart Environment Control | Assistance |
| Conci et al. [189] | Hands | Gesture Recognition, Smart Environment Control | Assistance |
| Baklouti et al. [190] | Head/Mouth | Robotic Control | Rehabilitation |
| Chang et al. [191] | Head | Communication | Assistance |
| Gautam et al. [192] | Head | Robotic Control | Assistance |
| Gómez-Portes et al. [193] | Whole body | Virtual Reality Control | Rehabilitation |
| Palaniappan et al. [194] | Upper limb | Virtual Reality Control | Rehabilitation |
| Nguyen et al. [195] | Whole body with “JRS”; wrist and elbow with “MHT” | Virtual Reality Control | Rehabilitation |

| Authors [Reference] | Kind of Sensors | Application Sites of Sensors | Target | Field |
|---|---|---|---|---|
| Chuang et al. [196] | Resistive flex sensors | Embedded in a glove | Gesture Recognition | Assistance |
| Dong et al. [197] | Piezoresistive strain sensors (based on PDMS-CB) | Embedded in a glove | Gesture Recognition, Robotic Control | Assistance, Rehabilitation |
| Zhu et al. [198] | Stretchable conductive yarns | Embedded in a glove | Robotic Control, Smart Environment Control | Assistance |
| Hang et al. [203] | PAAm hydrogel-based strain sensor | Various body positions | Gesture Recognition, Robotic Control | Assistance |
| Ueki et al. [199] | Force/torque sensors and 3D motion sensor | Embedded in a glove, hand and forearm | Robotic Control, Virtual Reality Control | Rehabilitation |
| Rahman et al. [200] | Flex sensors | Embedded in a glove, hand | Robotic Control | Rehabilitation |
| Cortese et al. [201] | MEMS accelerometers | Embedded in a glove, hand | Robotic Control | Rehabilitation |
| Han et al. [202] | Three-axis gyroscope | Hand back | Gesture Recognition, Smart Environment Control | Assistance |

| Authors [Reference] | Kind of Sensors | Application Site of Electrodes | Location of Video System/s | Target | Field |
|---|---|---|---|---|---|
| Wei and Hu [204] | EMG electrodes + Video camera | Forehead | Towards the subject’s face | Robotic Control | Assistance |
| Haung et al. [205] | Video camera + EEG electrodes | 10–20 EEG international system | Towards the subject’s face | Communication | Assistance |
| Downey et al. [206] | Intracortical microelectrode arrays + RGB–D camera | Motor cortex | On the arm of the robot | Robotic Control | Assistance |
| Bu et al. [207] | EMG electrodes + Video camera | Forearm | Towards the target objects | Prosthetic Control | Assistance |
| Malechka et al. [208] | EEG electrodes + 3 video cameras | 10–10 EEG international system | Two video cameras towards the subject’s face (one for each eye tracking); one video camera towards the target objects | Smart Environment Control | Assistance |
| McMullen et al. [209] | ECoG and depth electrodes + Microsoft Kinect + video camera | Motor cortex | Kinect sensor towards the target objects; video camera towards the subject’s face | Prosthetic Control | Assistance |
| Frisoli et al. [210] | EEG electrodes + scene camera (i.e., 2 infrared cameras + 2 infrared LEDs + 1 wide-angle camera) + Microsoft Kinect | Over sensorimotor cortex | Scene camera mounted on glasses; Kinect sensor towards the target objects | Robotic Control | Rehabilitation |

| Authors [Reference] | Kind of Hybrid Sensors | Application Sites of Hybrid Sensors | Target | Field |
|---|---|---|---|---|
| Dunai et al. [217] | sEMG electrodes + FSR sensors | sEMG electrodes on Extensor digitorum (forearm). FSR sensors on prosthetic fingertips. | Prosthetic Control | Assistance |
| Krasoulis et al. [211] | Hybrid sEMG/IMU sensors | Eight hybrid sensors are equally spaced around the forearm (3 cm below the elbow); two are placed on the extrinsic hand muscles superficialis; two are placed on the biceps and triceps brachii muscles. | Prosthetic Control | Assistance |
| Shahzad et al. [212] | sEMG electrodes + IMU | Two sEMG sensors are placed on the forearm flexors, and the other two are placed on the forearm extensors. The forearm IMU is placed proximal to the wrist, and the upper arm IMU is placed over the biceps brachii muscle. | Gesture Recognition, Prosthetic Control | Assistance |
| Kyranou et al. [213] | Hybrid sEMG/IMU | Twelve hybrid sensors are placed on the proximal forearm via an elastic bandage. | Gesture Recognition, Prosthetic Control | Assistance |
| Jaquier et al. [214] | sEMG electrodes + pressure sensors (resistive elastomers) | Ten sEMG sensors are placed on the proximal forearm. Ten pressure sensors (via a bracelet) are placed on the proximal forearm. | Gesture Recognition, Prosthetic Control | Assistance |