Action Recognition Using Close-Up of Maximum Activation and ETRI-Activity3D LivingLab Dataset

Abstract

1. Introduction

2. Related Works

Contributions

- We use a self-attention map [42] to localize the most activated region of a video, and the close-up of maximum activation process magnifies that region so the action model can examine the part most meaningful for recognition in detail. The proposed method mitigates the influence of the background and lets the action model focus on the foreground.
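The localization step described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation. It assumes, following the attention-based dropout layer of [42], that the self-attention map is the channel-wise average of a convolutional feature map, and it works on a single frame's feature map stored as nested lists of shape C×H×W. How the peak is mapped back to the frame and how large the close-up is are not specified in this section, so the cell-centre mapping, the fixed `ratio`, and the function names `self_attention_map`, `max_activation`, and `close_up_box` are hypothetical.

```python
from typing import List, Tuple

# Assumed layout: one frame's feature map as nested lists, C x H x W.
FeatureMap = List[List[List[float]]]
Box = Tuple[int, int, int, int]  # (top, left, height, width) in frame pixels


def self_attention_map(features: FeatureMap) -> List[List[float]]:
    """Average the feature map over channels, giving an H x W self-attention map."""
    channels = len(features)
    height, width = len(features[0]), len(features[0][0])
    return [
        [sum(features[c][y][x] for c in range(channels)) / channels for x in range(width)]
        for y in range(height)
    ]


def max_activation(attention: List[List[float]]) -> Tuple[int, int]:
    """(row, col) of the largest value; ties keep the first cell in row-major order."""
    best_y, best_x = 0, 0
    for y, row in enumerate(attention):
        for x, value in enumerate(row):
            if value > attention[best_y][best_x]:
                best_y, best_x = y, x
    return best_y, best_x


def close_up_box(peak: Tuple[int, int], map_size: Tuple[int, int],
                 frame_size: Tuple[int, int], ratio: float) -> Box:
    """Crop covering `ratio` (0 < ratio <= 1) of each frame side, centred on the
    peak cell and shifted, if needed, so that it stays inside the frame."""
    (py, px), (mh, mw), (fh, fw) = peak, map_size, frame_size
    # Centre of the peak cell, rescaled from map coordinates to frame pixels.
    cy = (py + 0.5) * fh / mh
    cx = (px + 0.5) * fw / mw
    ch, cw = max(1, round(fh * ratio)), max(1, round(fw * ratio))
    top = min(max(0, round(cy - ch / 2)), fh - ch)
    left = min(max(0, round(cx - cw / 2)), fw - cw)
    return top, left, ch, cw


# Toy 2-channel, 4x4 feature map whose strongest response is at row 1, column 2.
features = [
    [[0.0, 0.1, 0.0, 0.0], [0.0, 0.2, 0.9, 0.1], [0.0, 0.0, 0.3, 0.0], [0.0, 0.0, 0.0, 0.0]],
    [[0.0, 0.0, 0.0, 0.0], [0.1, 0.1, 0.7, 0.0], [0.0, 0.0, 0.1, 0.0], [0.0, 0.0, 0.0, 0.0]],
]
attention = self_attention_map(features)
peak = max_activation(attention)
box = close_up_box(peak, (4, 4), (224, 224), ratio=0.5)
print(peak, box)  # (1, 2) (28, 84, 112, 112)
```

Shifting the box at the frame border, rather than shrinking it, keeps every close-up the same size, which is convenient if the crops are later resized to a fixed network input; this is a design choice of the sketch, not something stated in the paper.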

3. System Model

3.1. Overview of System Model

3.2. Maximum Activation Search

3.3. Close-Up of Maximum Activation

3.4. Training and Testing

Algorithm 1: Training a video input by using the close-up of maximum activation.

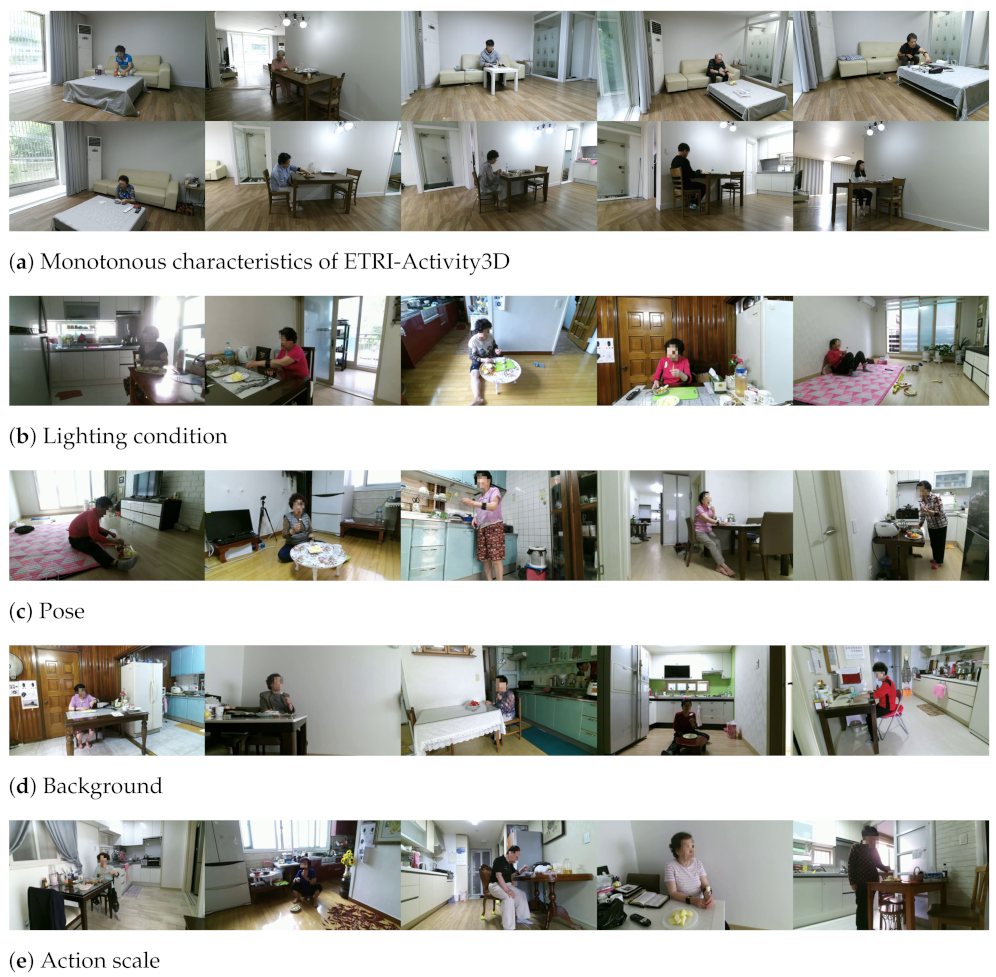
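Algorithm 1 appears only as an image here, and the results tables report 2- to 5-scale inputs built with RandCrop and ActCrop. The sketch below shows one way such multi-scale inputs could be produced, assuming that scale 1 is the full frame, that each further scale is a tighter crop, and that ActCrop centres every crop on the maximum activation while RandCrop centres it on a random point. The linear ratio schedule, the minimum ratio of 0.5, and the helper names `crop_ratios`, `centred_box`, and `multi_scale_boxes` are hypothetical choices, not values from the paper.

```python
import random
from typing import List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (top, left, height, width) in frame pixels


def crop_ratios(num_scales: int, min_ratio: float = 0.5) -> List[float]:
    """Hypothetical schedule: scale 1 is the full frame, later scales shrink linearly to min_ratio."""
    if num_scales < 1:
        raise ValueError("num_scales must be at least 1")
    if num_scales == 1:
        return [1.0]
    step = (1.0 - min_ratio) / (num_scales - 1)
    return [1.0 - k * step for k in range(num_scales)]


def centred_box(cy: float, cx: float, ratio: float, frame: Tuple[int, int]) -> Box:
    """Crop covering `ratio` of each side, centred at (cy, cx) and shifted into the frame."""
    fh, fw = frame
    ch, cw = max(1, round(fh * ratio)), max(1, round(fw * ratio))
    top = min(max(0, round(cy - ch / 2)), fh - ch)
    left = min(max(0, round(cx - cw / 2)), fw - cw)
    return top, left, ch, cw


def multi_scale_boxes(frame: Tuple[int, int], num_scales: int,
                      peak: Optional[Tuple[float, float]] = None,
                      rng: Optional[random.Random] = None) -> List[Box]:
    """ActCrop-style boxes when `peak` (frame pixel coordinates) is given; otherwise
    RandCrop-style boxes, each centred on a random point drawn from `rng`."""
    rng = rng or random.Random()
    fh, fw = frame
    boxes = []
    for ratio in crop_ratios(num_scales):
        if peak is not None:
            cy, cx = peak
        else:
            cy, cx = rng.uniform(0, fh), rng.uniform(0, fw)
        boxes.append(centred_box(cy, cx, ratio, frame))
    return boxes


# A 3-scale ActCrop input for a 224x224 frame whose maximum activation is at (84, 140).
act = multi_scale_boxes((224, 224), 3, peak=(84.0, 140.0))
# The RandCrop counterpart, seeded for reproducibility.
rand = multi_scale_boxes((224, 224), 3, rng=random.Random(0))
print(act)  # [(0, 0, 224, 224), (0, 56, 168, 168), (28, 84, 112, 112)]
```

Resizing each box back to the network's input resolution (not shown) is what turns a smaller box into a stronger close-up.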

4. Datasets

5. Implementation Details

6. Experimental Results

6.1. Intra-Dataset (ETRI-Activity3D)

6.2. Inter-Dataset (ETRI-Activity3D and ETRI-Activity3D-LivingLab)

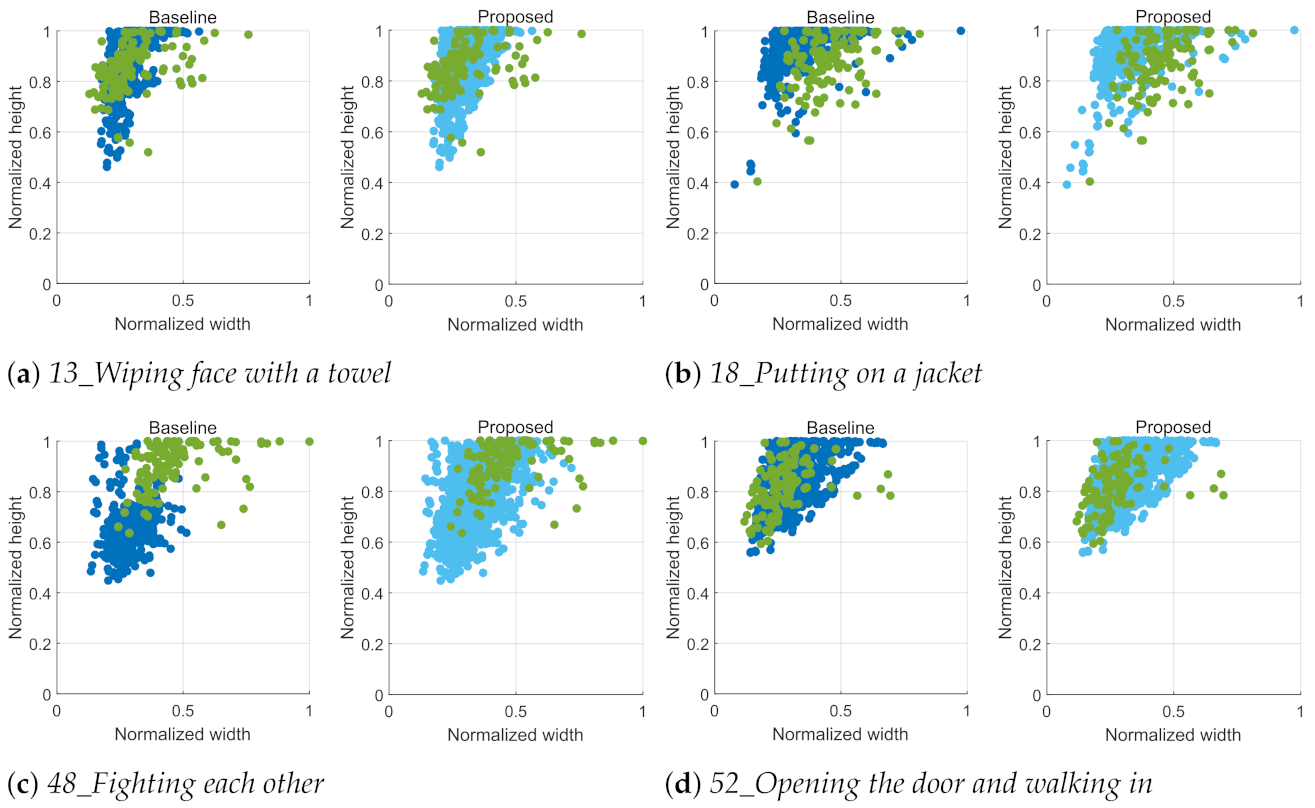

6.3. Dataset Shift Analysis: ETRI-Activity3D and ETRI-Activity3D-LivingLab

6.4. Inter-Dataset (KIST SynADL, ETRI-Activity3D, and ETRI-Activity3D-LivingLab)

6.5. Dataset Shift Analysis: KIST SynADL, ETRI-Activity3D, and ETRI-Activity3D-LivingLab

6.6. Application to Other Methods

6.7. Visualization of Self-Attention Map

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Carreira, J.; Zisserman, A. Quo vadis, action recognition? A new model and the Kinetics dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6299–6308.

- Baradel, F.; Wolf, C.; Mille, J.; Taylor, G.W. Glimpse clouds: Human activity recognition from unstructured feature points. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 469–478.

- Tran, D.; Wang, H.; Torresani, L.; Ray, J.; LeCun, Y.; Paluri, M. A closer look at spatiotemporal convolutions for action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6450–6459.

- Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-local neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7794–7803.

- Wang, X.; Gupta, A. Videos as space-time region graphs. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 399–417.

- Feichtenhofer, C.; Fan, H.; Malik, J.; He, K. SlowFast networks for video recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 6202–6211.

- Girdhar, R.; Carreira, J.; Doersch, C.; Zisserman, A. Video action transformer network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 244–253.

- Feichtenhofer, C. X3D: Expanding architectures for efficient video recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 203–213.

- Bloom, V.; Makris, D.; Argyriou, V. G3D: A gaming action dataset and real time action recognition evaluation framework. In Proceedings of the 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Providence, RI, USA, 16–21 June 2012; pp. 7–12.

- Soomro, K.; Zamir, A.R.; Shah, M. UCF101: A dataset of 101 human actions classes from videos in the wild. arXiv 2012, arXiv:1212.0402.

- Kuehne, H.; Jhuang, H.; Garrote, E.; Poggio, T.; Serre, T. HMDB: A large video database for human motion recognition. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2556–2563.

- Karpathy, A.; Toderici, G.; Shetty, S.; Leung, T.; Sukthankar, R.; Fei-Fei, L. Large-scale video classification with convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1725–1732.

- Kim, J.; Lee, S. Fully deep blind image quality predictor. IEEE J. Sel. Top. Signal Process. 2016, 11, 206–220.

- Lee, S.; Pattichis, M.S.; Bovik, A.C. Foveated video compression with optimal rate control. IEEE Trans. Image Process. 2001, 10, 977–992.

- Lee, S.; Pattichis, M.S.; Bovik, A.C. Foveated video quality assessment. IEEE Trans. Multimed. 2002, 4, 129–132.

- Kim, J.; Zeng, H.; Ghadiyaram, D.; Lee, S.; Zhang, L.; Bovik, A.C. Deep convolutional neural models for picture-quality prediction: Challenges and solutions to data-driven image quality assessment. IEEE Signal Process. Mag. 2017, 34, 130–141.

- Kim, J.; Nguyen, A.; Lee, S. Deep CNN-based blind image quality predictor. IEEE Trans. Neural Networks Learn. Syst. 2018, 30, 11–24.

- Shahroudy, A.; Liu, J.; Ng, T.; Wang, G. NTU RGB+D: A large scale dataset for 3D human activity analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1010–1019.

- Liu, J.; Shahroudy, A.; Perez, M.; Wang, G.; Duan, L.; Kot, A.C. NTU RGB+D 120: A large-scale benchmark for 3D human activity understanding. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 42, 2684–2701.

- Jang, J.; Kim, D.; Park, C.; Jang, M.; Lee, J.; Kim, J. ETRI-Activity3D: A large-scale RGB-D dataset for robots to recognize daily activities of the elderly. In Proceedings of the International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; pp. 10990–10997.

- Hwang, H.; Jang, C.; Park, G.; Cho, J.; Kim, I. ElderSim: A synthetic data generation platform for human action recognition in eldercare applications. IEEE Access 2021.

- Kwon, B.; Kim, J.; Lee, K.; Lee, Y.K.; Park, S.; Lee, S. Implementation of a virtual training simulator based on 360° multi-view human action recognition. IEEE Access 2017, 5, 12496–12511.

- Lee, K.; Lee, I.; Lee, S. Propagating LSTM: 3D pose estimation based on joint interdependency. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 119–135.

- Liu, J.; Shahroudy, A.; Xu, D.; Wang, G. Spatio-temporal LSTM with trust gates for 3D human action recognition. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2016; pp. 816–833.

- Song, S.; Lan, C.; Xing, J.; Zeng, W.; Liu, J. An end-to-end spatio-temporal attention model for human action recognition from skeleton data. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 31.

- Ke, Q.; Bennamoun, M.; An, S.; Sohel, F.; Boussaid, F. A new representation of skeleton sequences for 3D action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3288–3297.

- Liu, J.; Wang, G.; Hu, P.; Duan, L.; Kot, A.C. Global context-aware attention LSTM networks for 3D action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1647–1656.

- Lee, I.; Kim, D.; Kang, S.; Lee, S. Ensemble deep learning for skeleton-based action recognition using temporal sliding LSTM networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1012–1020.

- Shahroudy, A.; Ng, T.; Gong, Y.; Wang, G. Deep multimodal feature analysis for action recognition in RGB+D videos. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 1045–1058.

- Zolfaghari, M.; Oliveira, G.L.; Sedaghat, N.; Brox, T. Chained multi-stream networks exploiting pose, motion, and appearance for action classification and detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2904–2913.

- Yan, S.; Xiong, Y.; Lin, D. Spatial temporal graph convolutional networks for skeleton-based action recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

- Shi, L.; Zhang, Y.; Cheng, J.; Lu, H. Two-stream adaptive graph convolutional networks for skeleton-based action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12026–12035.

- Lee, I.; Kim, D.; Lee, S. 3-D human behavior understanding using generalized TS-LSTM networks. IEEE Trans. Multimed. 2020, 43, 415–428.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the International Conference on Learning Representations, Banff, AB, Canada, 14–16 April 2014.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Identity mappings in deep residual networks. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2016; pp. 630–645.

- Huang, G.; Liu, Z.; Maaten, L.V.D.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning deep features for discriminative localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2921–2929.

- Choe, J.; Shim, H. Attention-based dropout layer for weakly supervised object localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2219–2228.

- Zhang, P.; Lan, C.; Xing, J.; Zeng, W.; Xue, J.; Zheng, N. View adaptive neural networks for high performance skeleton-based human action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 1963–1978.

- Huang, Z.; Shen, X.; Tian, X.; Li, H.; Huang, J.; Hua, X. Spatio-temporal inception graph convolutional networks for skeleton-based action recognition. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 2122–2130.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Ganin, Y.; Lempitsky, V. Unsupervised domain adaptation by backpropagation. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 1180–1189.

| Method | Modalities | CS | CV |
|---|---|---|---|
| Glimpse [2] | RGB | 80.2% | 80.0% |
| ST-GCN [44] | Skeleton | 83.4% | 77.9% |
| VA_CNN [43] | Skeleton | 82.0% | 79.7% |
| FSA-CNN [20] | RGB | 90.1% | - |
| | Skeleton | 90.6% | - |
| | RGB + Skeleton | 93.7% | - |
| Baseline | RGB | 95.0% | 95.3% |
| Proposed | RGB | 95.5% | 95.7% |

| Method | | | | | | |
|---|---|---|---|---|---|---|
| Baseline | | 50.4% | - | - | - | - |
| 2-scale | RandCrop | 54.1% | 50.1% | - | - | - |
| | ActCrop | 54.4% | 51.0% | - | - | - |
| 3-scale | RandCrop | 53.7% | 52.5% | 47.5% | - | - |
| | ActCrop | 58.1% | 56.6% | 48.8% | - | - |
| 4-scale | RandCrop | 54.7% | 53.4% | 51.4% | 44.4% | - |
| | ActCrop | 58.8% | 57.8% | 55.6% | 49.1% | - |
| 5-scale | RandCrop | 54.1% | 54.8% | 53.1% | 48.2% | 39.0% |
| | ActCrop | 59.4% | 60.0% | 60.0% | 57.8% | 49.3% |

| Method | Action # | <0.6 | <0.7 | <0.8 | <0.9 | <1.0 |
|---|---|---|---|---|---|---|
| Baseline | 1 | 0.034 | 0.067 | 0.092 | 0.160 | 0.647 |
| | 8 | - | 0.059 | 0.119 | 0.110 | 0.712 |
| | 44 | - | - | - | 0.067 | 0.933 |
| | 48 | - | - | 0.042 | 0.025 | 0.932 |
| Proposed | 1 | - | - | - | - | 1.000 |
| | 8 | - | - | 0.017 | 0.085 | 0.898 |
| | 44 | - | - | - | 0.008 | 0.992 |
| | 48 | - | - | - | - | 1.000 |
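Each row of the table above sums to about 1 (for example, 0.034 + 0.067 + 0.092 + 0.160 + 0.647 = 1.000 for Baseline, action 1), which suggests that every cell is the fraction of that action's samples falling into the bin whose upper edge is given by the column header. The score being binned is not defined in this section, so the sketch below is generic: it assumes scores in [0, 1], bins [0, 0.6), [0.6, 0.7), [0.7, 0.8), [0.8, 0.9), and [0.9, 1.0], and a hypothetical helper `bin_fractions`.

```python
from bisect import bisect_right
from typing import Dict, Sequence

# Upper bin edges and labels copied from the column headers.
EDGES = [0.6, 0.7, 0.8, 0.9, 1.0]
LABELS = ["<0.6", "<0.7", "<0.8", "<0.9", "<1.0"]


def bin_fractions(scores: Sequence[float]) -> Dict[str, float]:
    """Fraction of scores in [0, 0.6), [0.6, 0.7), [0.7, 0.8), [0.8, 0.9), [0.9, 1.0].

    A score of exactly 1.0 is counted in the last bin so that every score in
    [0, 1] lands somewhere and the fractions sum to 1.
    """
    if not scores:
        raise ValueError("need at least one score")
    counts = [0] * len(EDGES)
    for s in scores:
        if not 0.0 <= s <= 1.0:
            raise ValueError(f"score {s} is outside [0, 1]")
        # bisect_right gives the index of the first edge strictly greater than s.
        counts[min(bisect_right(EDGES, s), len(EDGES) - 1)] += 1
    return {label: c / len(scores) for label, c in zip(LABELS, counts)}


# Five hypothetical scores for one action class.
print(bin_fractions([0.55, 0.65, 0.95, 0.99, 1.0]))
# {'<0.6': 0.2, '<0.7': 0.2, '<0.8': 0.0, '<0.9': 0.0, '<1.0': 0.6}
```

Read this way, a shift of mass toward the `<1.0` column, as in the Proposed rows, means more samples of that action receive scores of at least 0.9.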

| Training Data | Method | Baseline | Proposed |
|---|---|---|---|
| (1) KIST SynADL | Supervised | 32.5% | 34.2% |
| (2) KIST SynADL (S) + ETRI-Activity3D (T) | UDA [46] | 42.7% | 43.9% |
| (3) ETRI-Activity3D | Supervised | 50.4% | 59.4% |
| (4) KIST SynADL + ETRI-Activity3D | Supervised | 61.6% | 67.2% |

| Training Dataset | Method | Action # | <0.6 | <0.7 | <0.8 | <0.9 | <1.0 |
|---|---|---|---|---|---|---|---|
| KIST SynADL | Baseline | 13 | - | 0.017 | 0.050 | 0.092 | 0.842 |
| | | 18 | - | - | 0.008 | 0.083 | 0.908 |
| | | 48 | 0.034 | 0.078 | 0.138 | 0.362 | 0.388 |
| | | 52 | - | - | 0.025 | 0.076 | 0.898 |
| | Proposed | 13 | - | 0.017 | 0.050 | 0.075 | 0.858 |
| | | 18 | - | - | 0.008 | 0.058 | 0.933 |
| | | 48 | - | - | 0.008 | 0.034 | 0.958 |
| | | 52 | - | - | 0.025 | 0.076 | 0.898 |
| KIST SynADL + ETRI-Activity3D | Baseline | 13 | - | - | 0.017 | 0.059 | 0.924 |
| | | 18 | - | - | - | 0.008 | 0.992 |
| | | 48 | - | - | 0.042 | 0.008 | 0.949 |
| | | 52 | - | - | - | 0.025 | 0.975 |
| | Proposed | 13 | - | - | - | 0.008 | 0.992 |
| | | 18 | - | - | - | 0.008 | 0.992 |
| | | 48 | - | - | - | 0.025 | 0.975 |
| | | 52 | - | - | - | - | 1.000 |

| Method | 1-Scale | 2-Scale | 3-Scale | 4-Scale | 5-Scale |
|---|---|---|---|---|---|
| Non-local | 51.2% | 54.7% | 58.3% | 60.1% | 61.6% |
| SlowFast | 50.3% | 55.2% | 59.1% | 60.9% | 62.3% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, D.; Lee, I.; Kim, D.; Lee, S. Action Recognition Using Close-Up of Maximum Activation and ETRI-Activity3D LivingLab Dataset. Sensors 2021, 21, 6774. https://doi.org/10.3390/s21206774

Kim D, Lee I, Kim D, Lee S. Action Recognition Using Close-Up of Maximum Activation and ETRI-Activity3D LivingLab Dataset. Sensors. 2021; 21(20):6774. https://doi.org/10.3390/s21206774

Chicago/Turabian Style: Kim, Doyoung, Inwoong Lee, Dohyung Kim, and Sanghoon Lee. 2021. "Action Recognition Using Close-Up of Maximum Activation and ETRI-Activity3D LivingLab Dataset." Sensors 21, no. 20: 6774. https://doi.org/10.3390/s21206774

APA Style: Kim, D., Lee, I., Kim, D., & Lee, S. (2021). Action Recognition Using Close-Up of Maximum Activation and ETRI-Activity3D LivingLab Dataset. Sensors, 21(20), 6774. https://doi.org/10.3390/s21206774