Smart Wearables with Sensor Fusion for Fall Detection in Firefighting

Abstract

1. Introduction

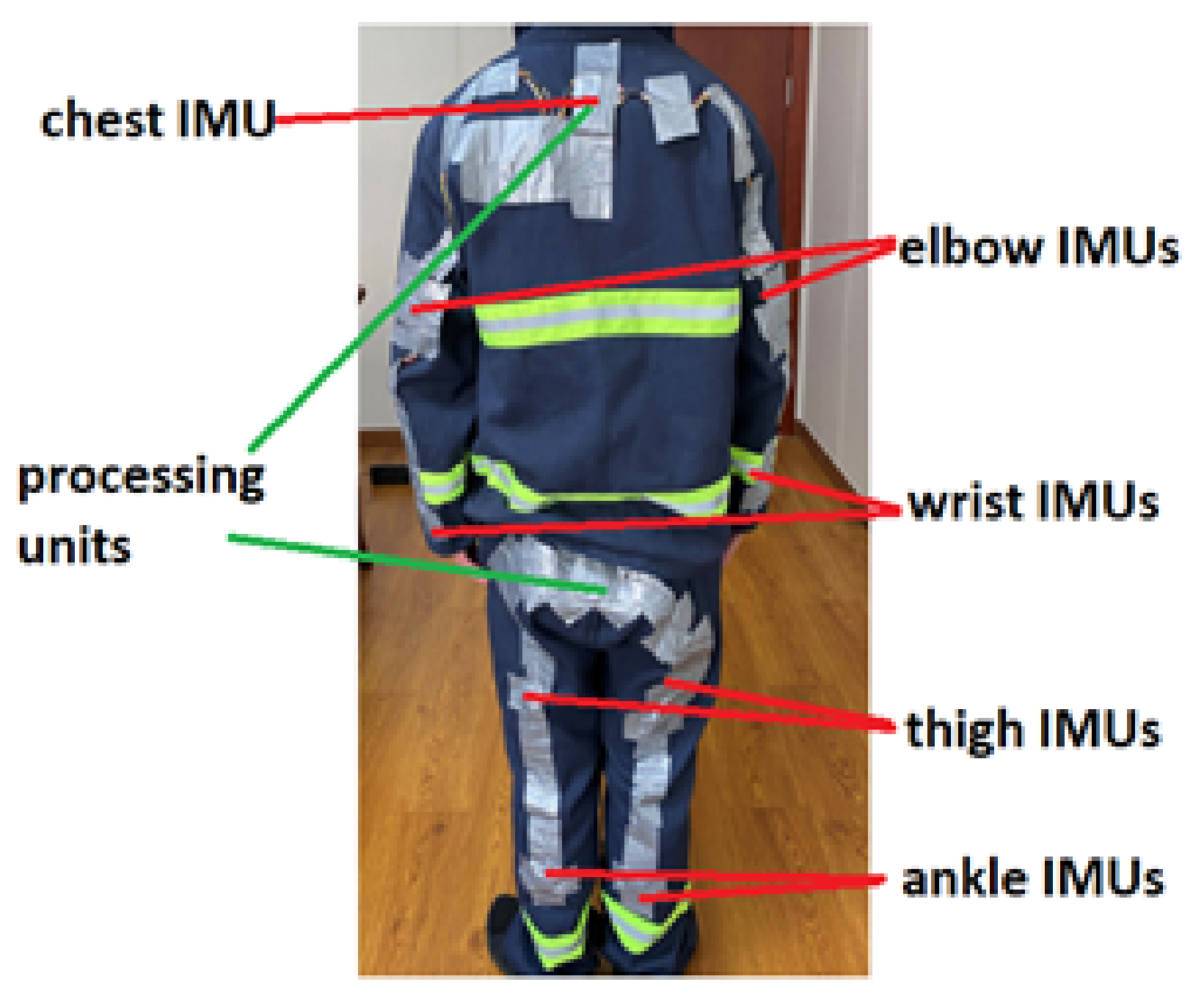

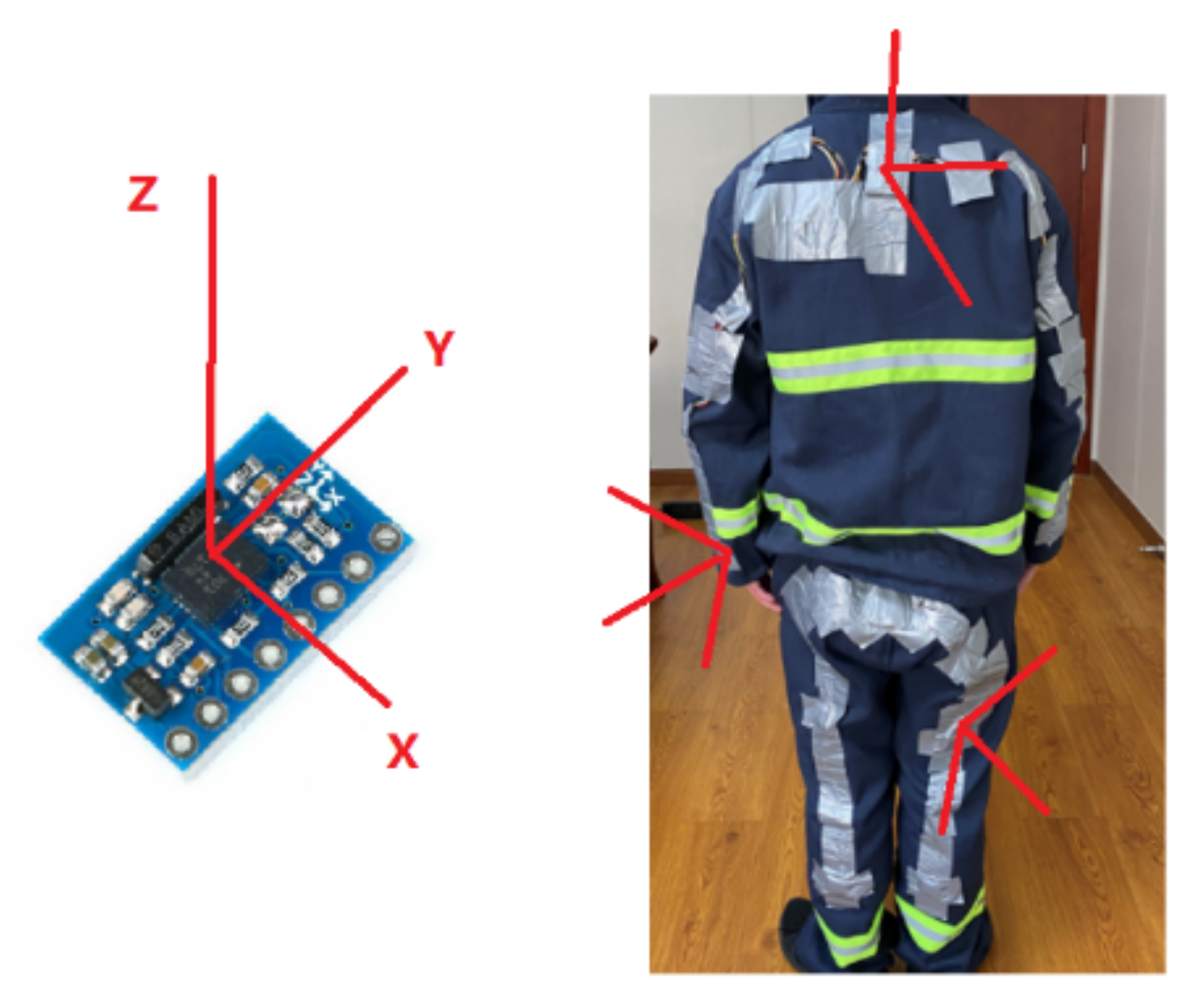

- Evaluating the performance of firefighter fall detection based on motion sensors placed at different body locations on the personal protective clothing (PPC): chest, elbows, wrists, thighs, and ankles.

- Building a highly realistic dataset of fall-related movements in collaboration with real firefighters.

- Proposing a novel fall-detection model, trained with a deep learning approach, that can distinguish actual falls from fall-like events.

2. Related Works

- (1) Building a dataset of motion data collected from actual firefighters, including falls and fall-like activities, for academic research purposes;

- (2) Investigating the optimal quantity and placement of motion sensors on firefighter protective clothing for fall-activity classification; and

- (3) Presenting a fall-detection framework for firefighters, who often work in high-stress situations.

3. Materials and Methods

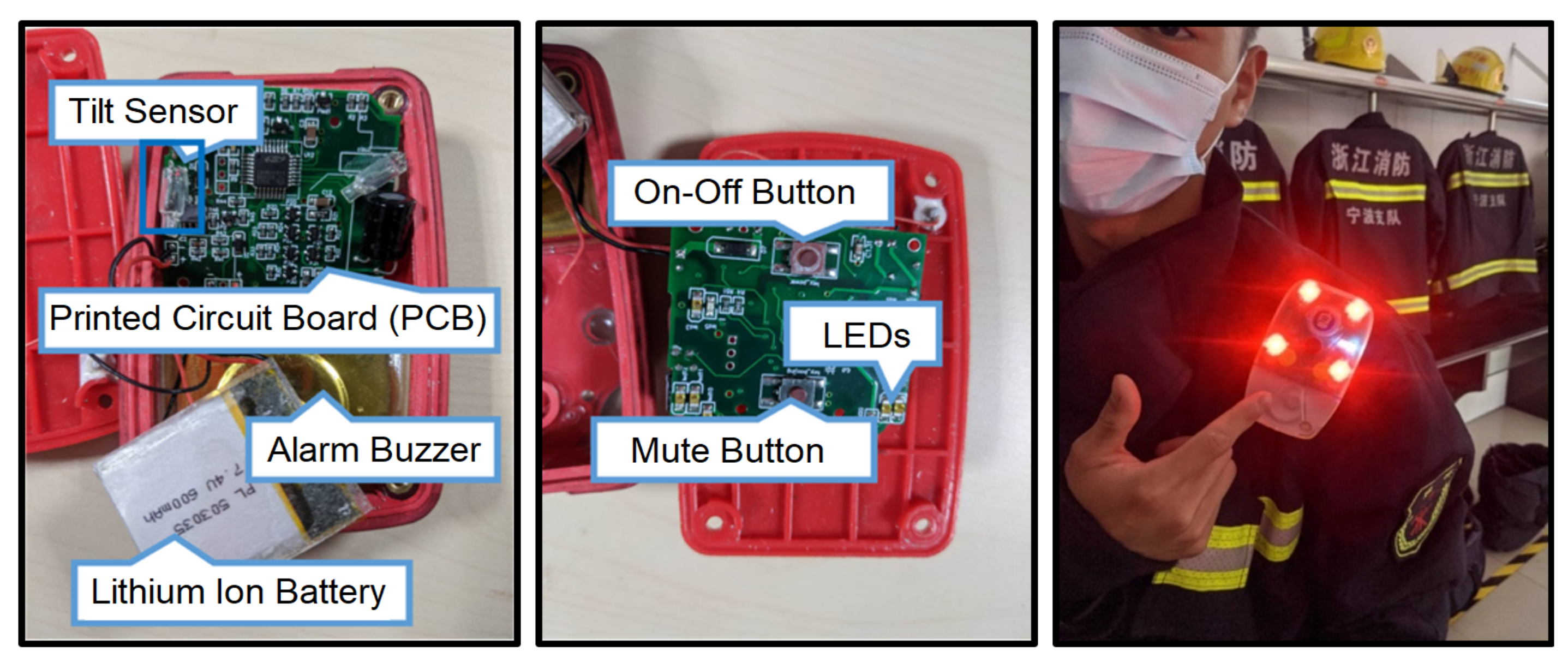

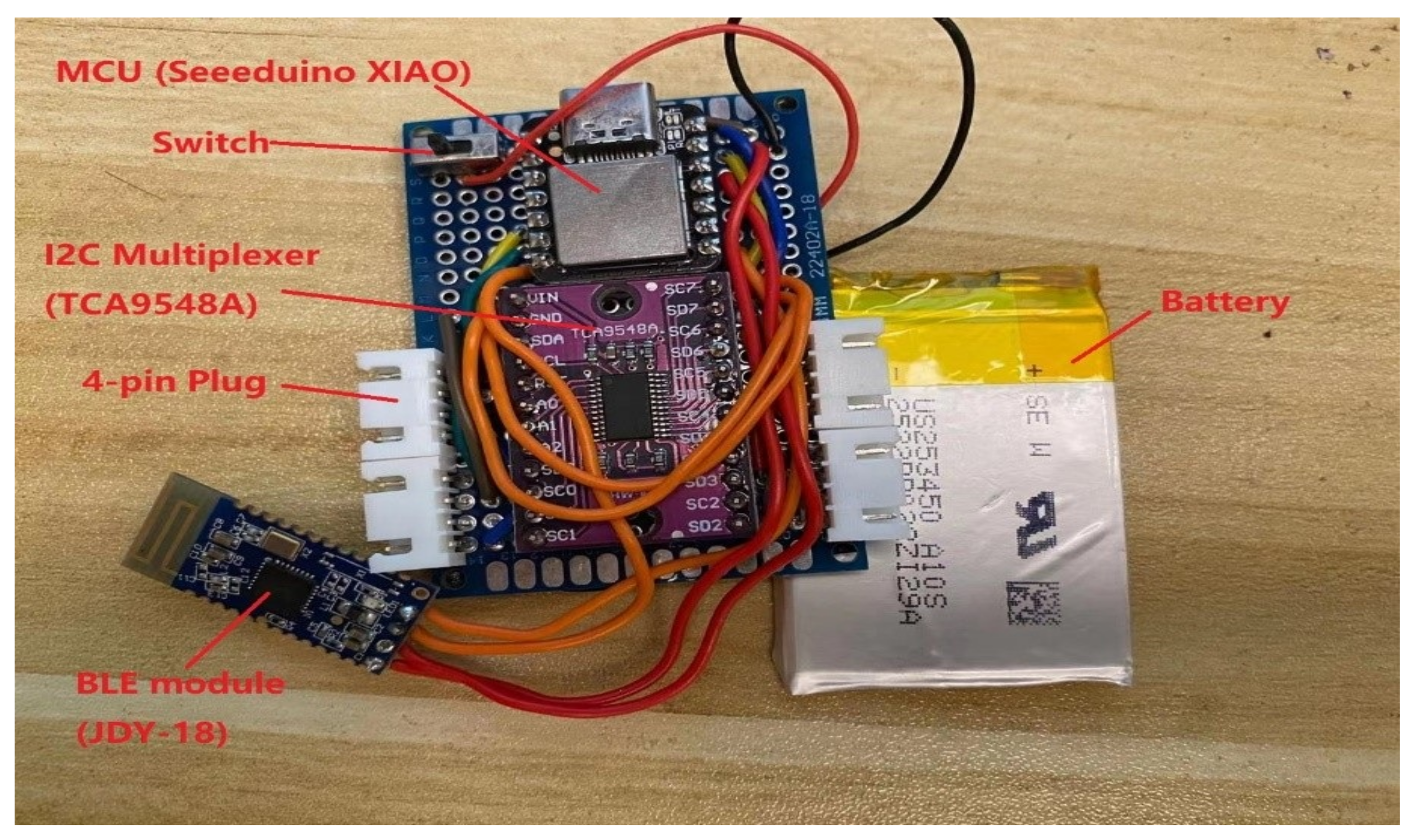

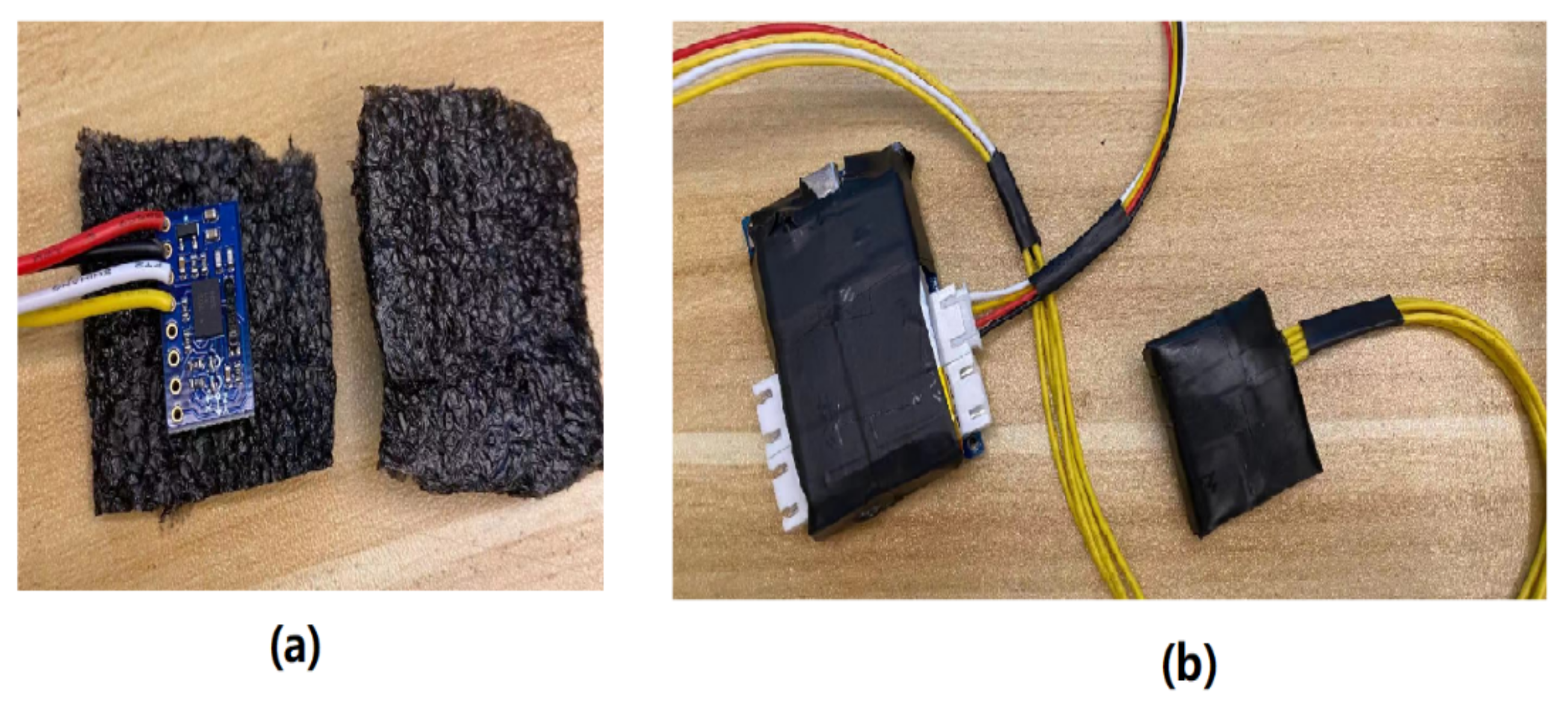

3.1. Smart PPC Prototype

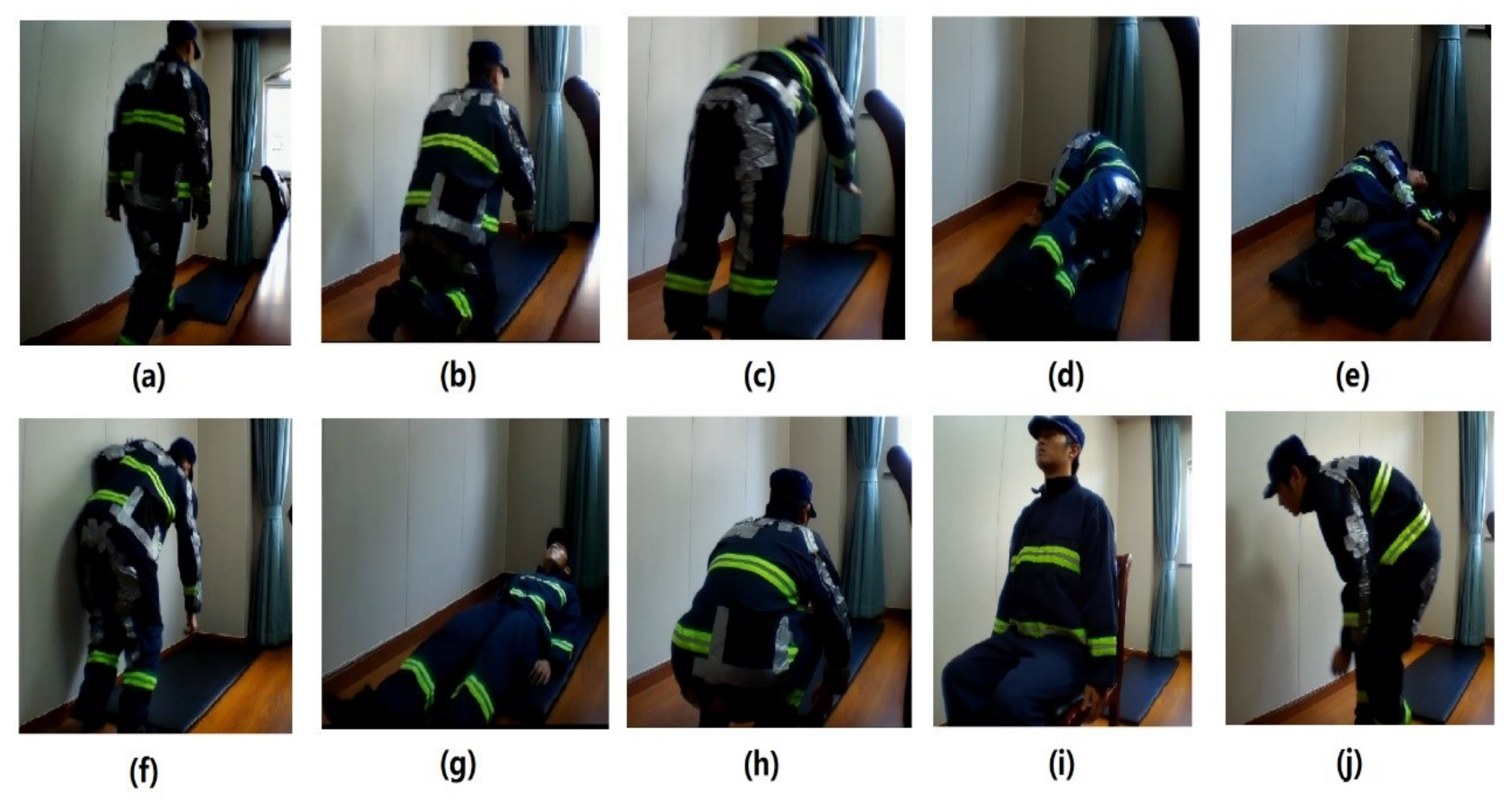

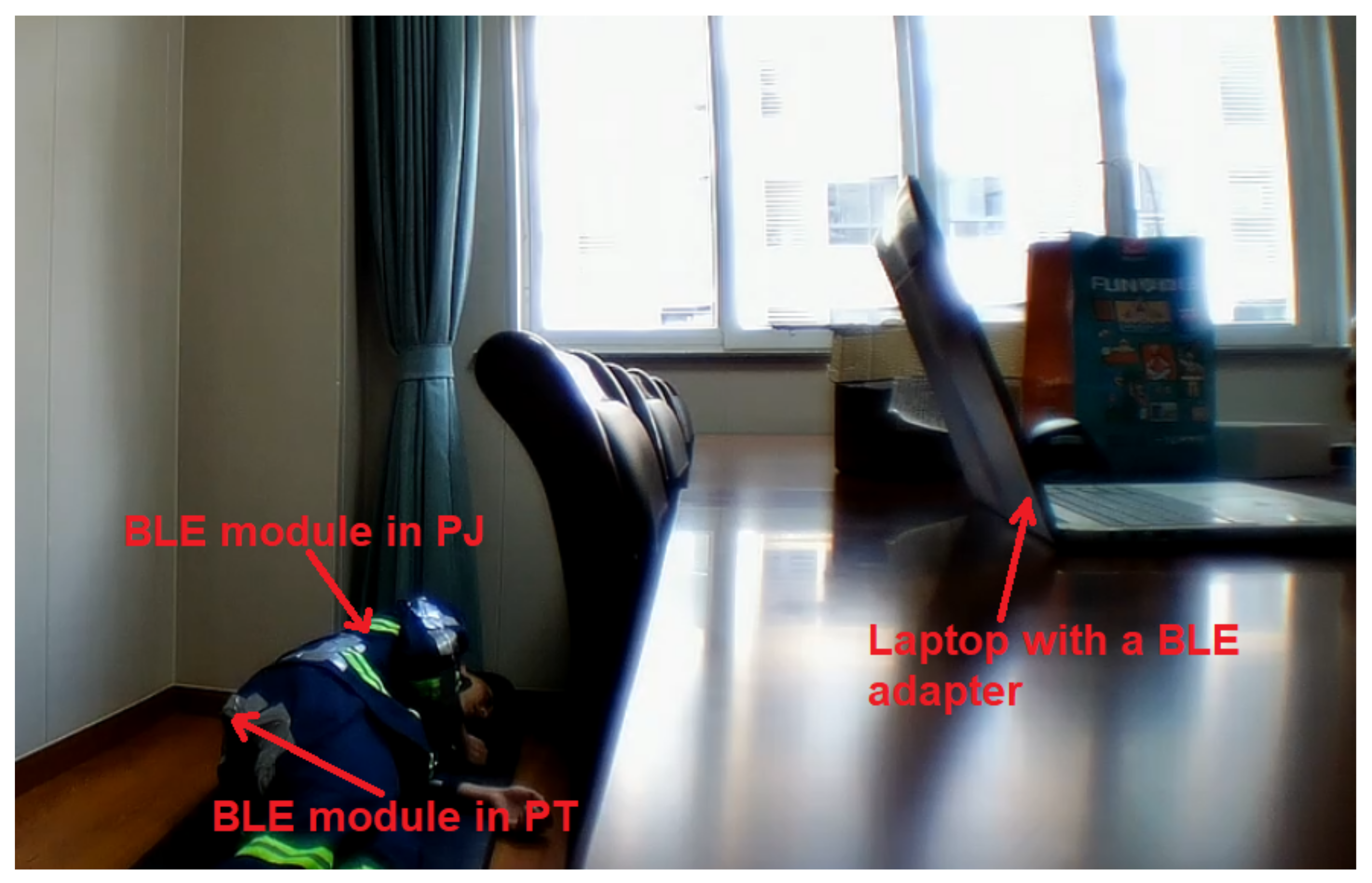

3.2. Dataset Collection

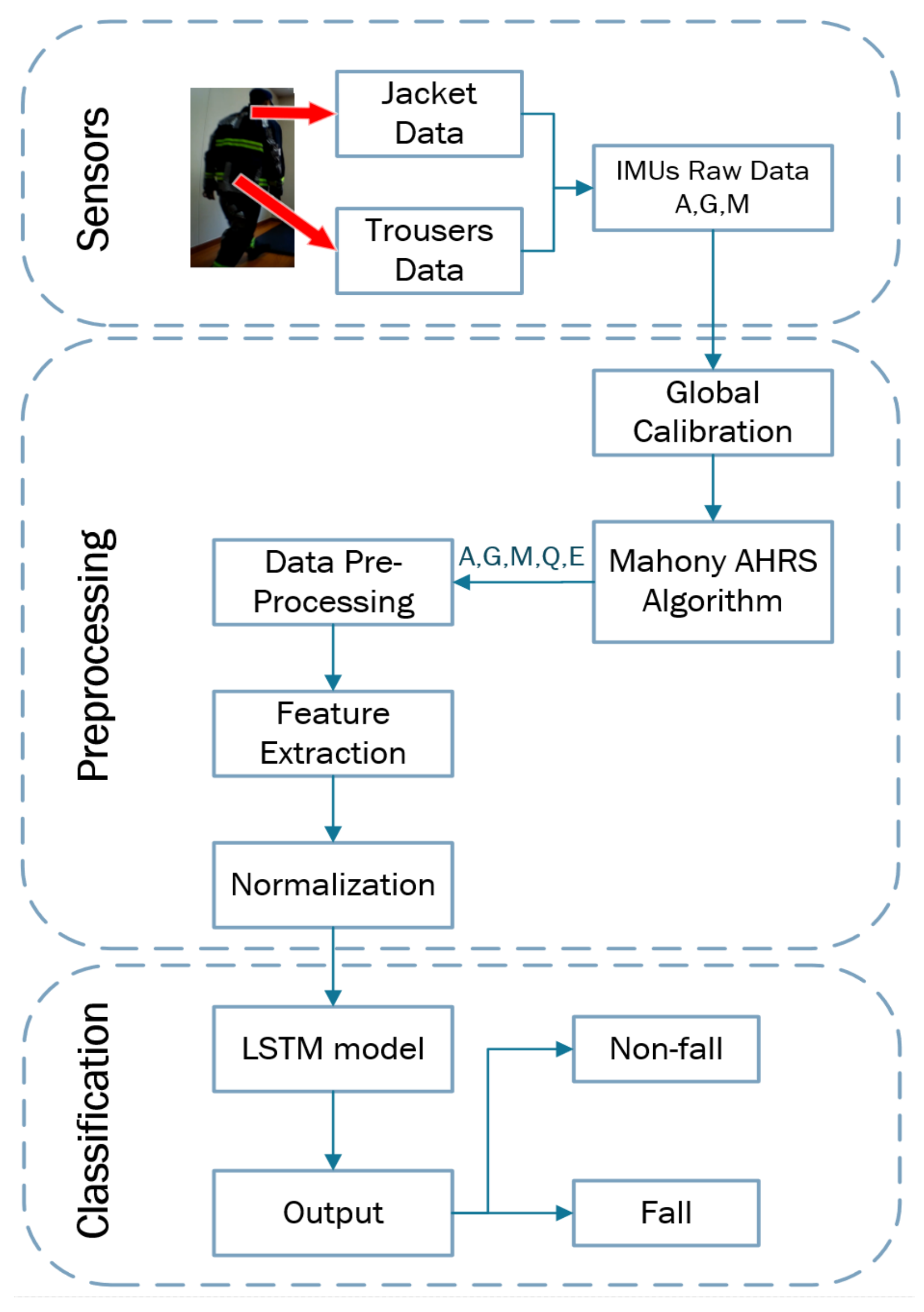

3.3. Framework

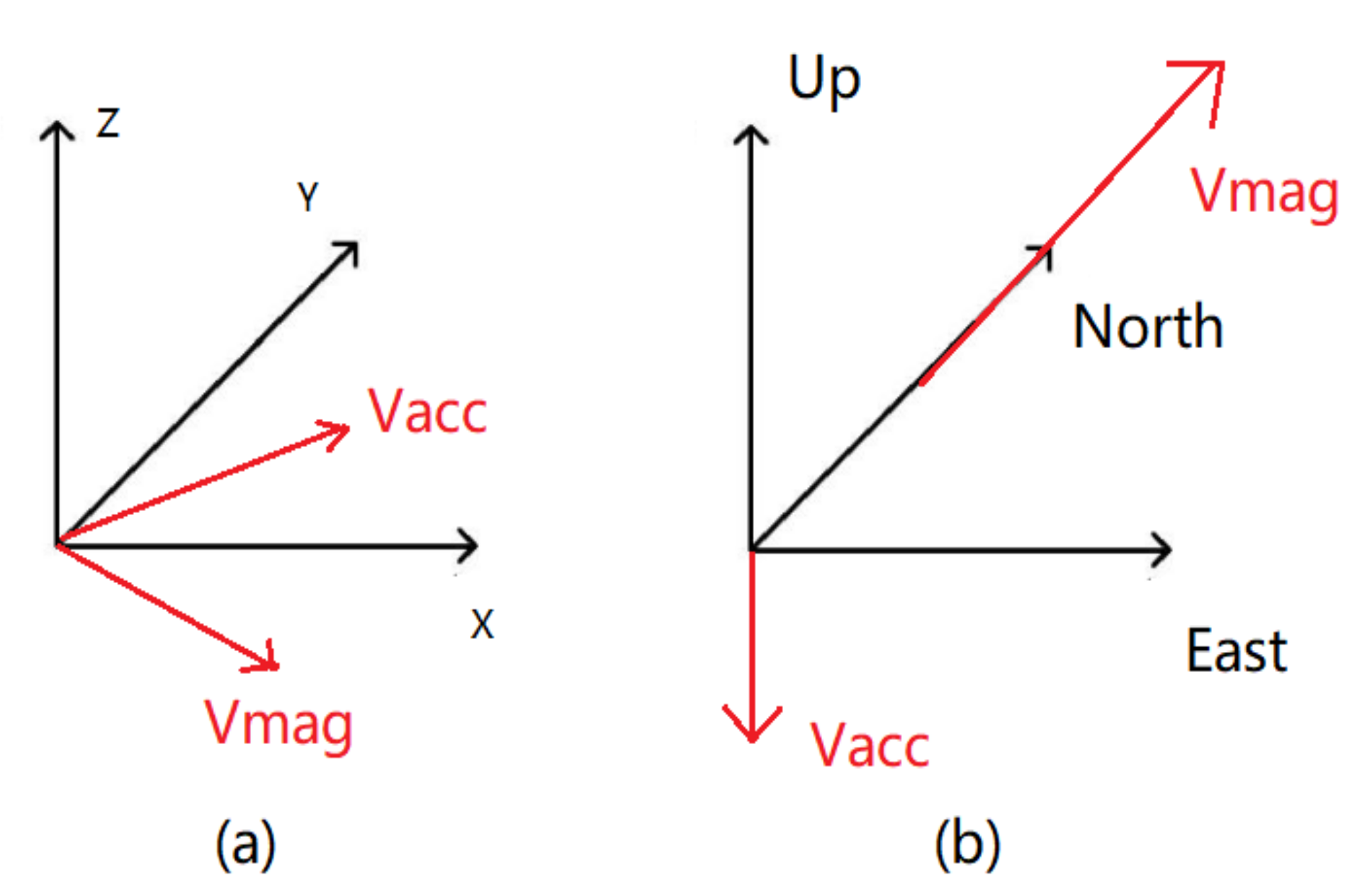

3.3.1. Global Calibration of IMUs

3.3.2. Data Pre-Processing

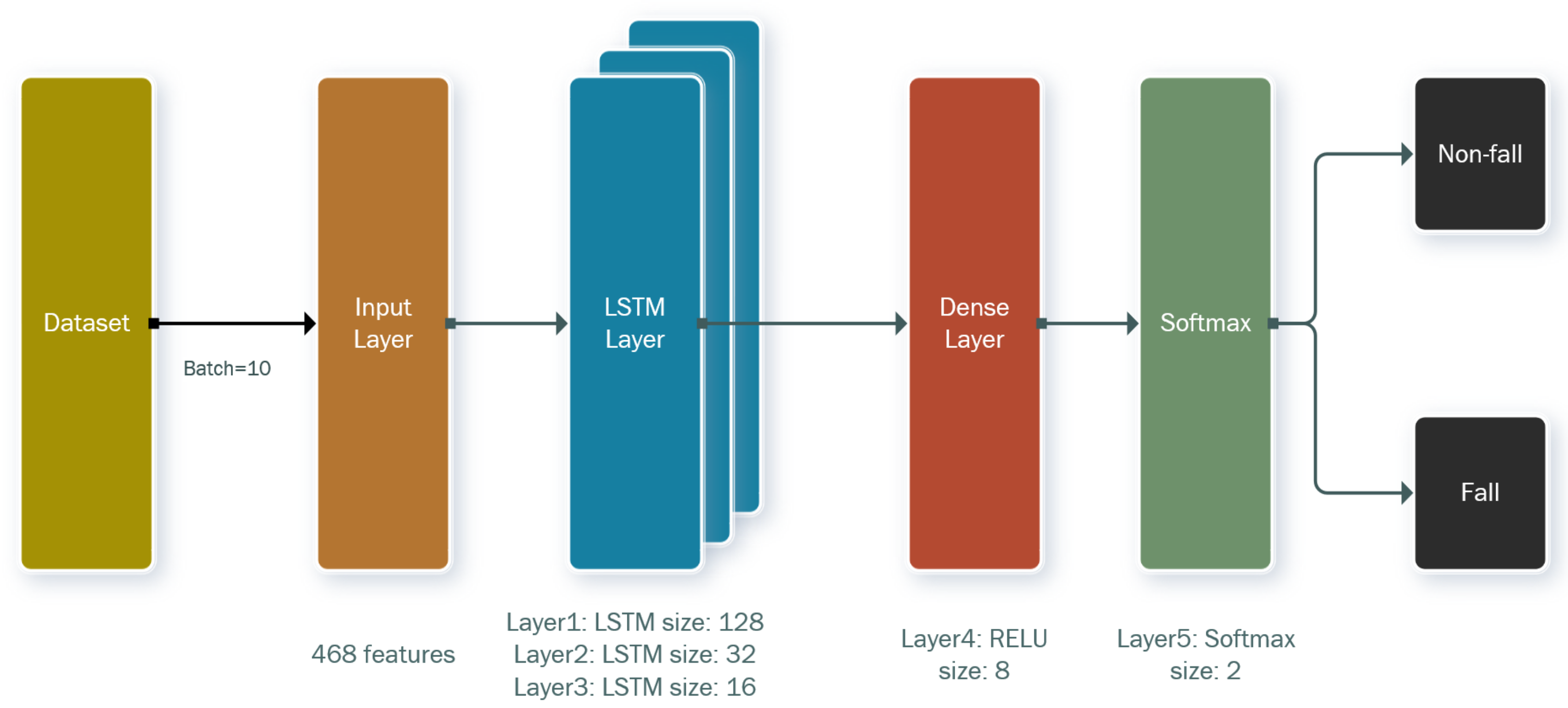

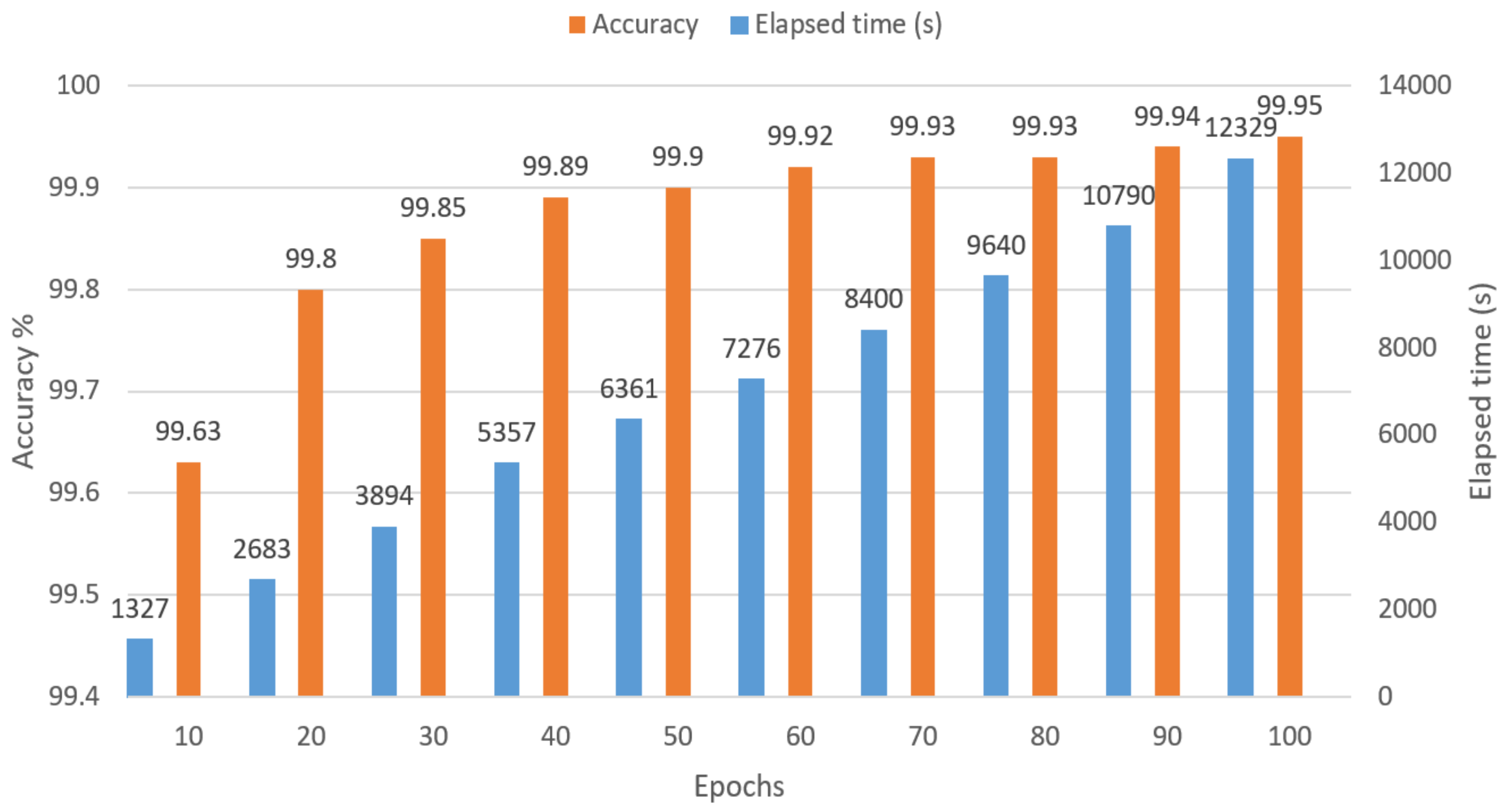

3.3.3. Recurrent Neural Network Classifier

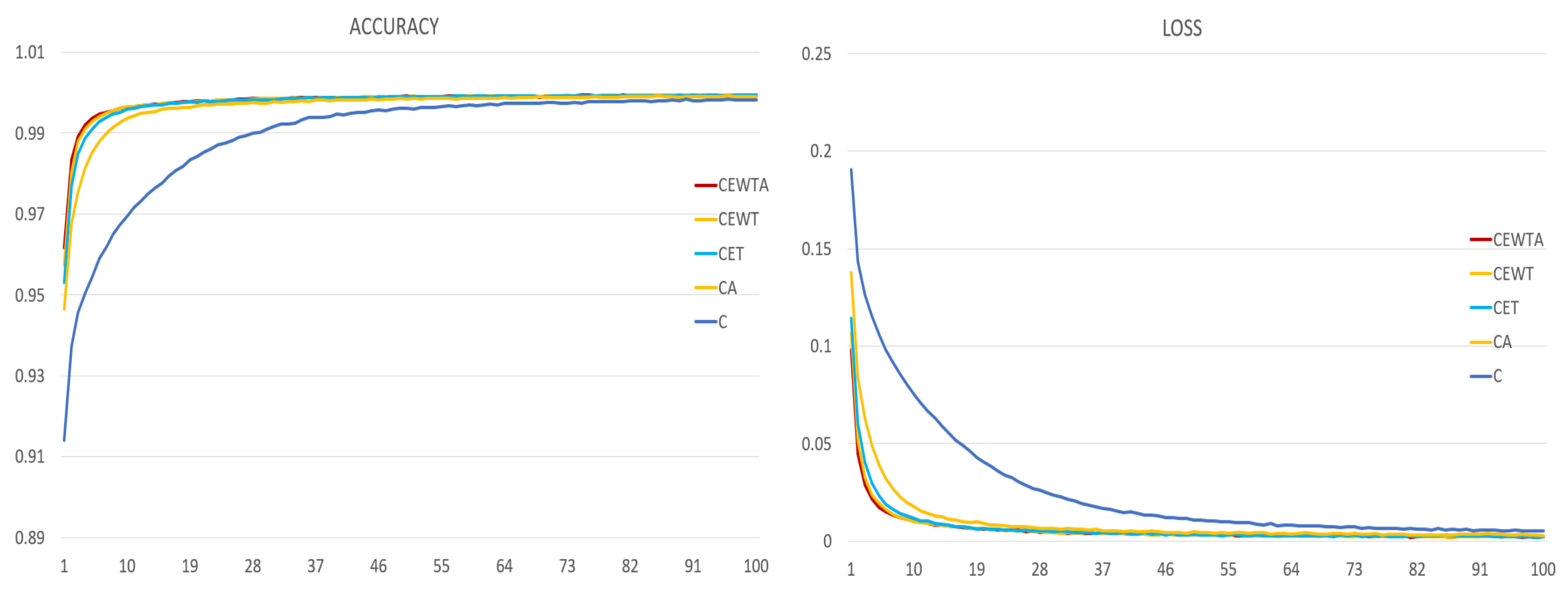

4. Results

- AUC: area under the receiver operating characteristic (ROC) curve.

- Specificity (Sp): the proportion of actual non-fall samples that are correctly classified as non-falls.

- Sensitivity (Se): also called recall; the proportion of actual fall samples that are correctly classified as falls.

- Accuracy (Ac): the proportion of all samples, both positive (fall) and negative (non-fall), that are classified correctly.
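The three ratio metrics above can be sketched from confusion-matrix counts as follows. This is a minimal illustration rather than the authors' evaluation code: the `ConfusionCounts` class, the function names, and the example counts are hypothetical, and falls are assumed to be the positive class.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ConfusionCounts:
    """Counts for a binary fall / non-fall classifier (fall = positive class)."""
    tp: int  # falls classified as falls
    fn: int  # falls classified as non-falls
    tn: int  # non-falls classified as non-falls
    fp: int  # non-falls classified as falls


def _ratio(numerator: int, denominator: int) -> Optional[float]:
    # Return None when the ratio is undefined (for example, no positive samples).
    return numerator / denominator if denominator else None


def sensitivity(c: ConfusionCounts) -> Optional[float]:
    # Se = TP / (TP + FN): share of actual falls that were detected.
    return _ratio(c.tp, c.tp + c.fn)


def specificity(c: ConfusionCounts) -> Optional[float]:
    # Sp = TN / (TN + FP): share of actual non-falls that were rejected.
    return _ratio(c.tn, c.tn + c.fp)


def accuracy(c: ConfusionCounts) -> Optional[float]:
    # Ac = (TP + TN) / all samples.
    return _ratio(c.tp + c.tn, c.tp + c.fn + c.tn + c.fp)


if __name__ == "__main__":
    counts = ConfusionCounts(tp=45, fn=5, tn=190, fp=10)  # made-up counts
    print(sensitivity(counts), specificity(counts), accuracy(counts))
```

AUC is left out of the sketch because it needs the classifier's continuous scores rather than thresholded counts.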

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- China Fire Protection Yearbook; Yunnan People’s Publishing House: Kunming, China, 2018.

- Fahy, R.F.; Molis, J.L. Firefighter Fatalities in the US-2018. Available online: https://www.nfpa.org/-/media/Files/News-and-Research/Fire-statistics-and-reports/Emergency-responders/2019FFF.ashx (accessed on 19 May 2021).

- Sun, Z. Research on safety safeguard measures for fire fighting and Rescue. Fire Daily 2001, 3, 52–58. (In Chinese)

- Brushlinsky, N.; Ahrens, M.; Sokolov, S.; Wagner, P. World Fire Statistics. 2021. Available online: https://ctif.org/sites/default/files/2021-06/CTIF_Report26_0.pdf (accessed on 5 August 2021).

- Fan, M.; Yang, Q.; Feng, S.; Zhao, C.; Pu, J. Research on Casualties of Chinese Firefighters in Various Firefighting and Rescue Tasks. Ind. Saf. Environ. Prot. 2015.

- Zhu, L.; Zhou, P.; Pan, A.; Guo, J.; Sun, W.; Wang, L.; Chen, X.; Liu, Z. A Survey of Fall Detection Algorithm for Elderly Health Monitoring. In Proceedings of the 2015 IEEE Fifth International Conference on Big Data and Cloud Computing, Dalian, China, 26–28 August 2015; pp. 270–274.

- Mubashir, M.; Shao, L.; Seed, L. A Survey on Fall Detection: Principles and Approaches. Neurocomputing 2013, 100, 144–152.

- Noury, N.; Fleury, A.; Rumeau, P.; Bourke, A.; ÓLaighin, G.; Rialle, V.; Lundy, J.E. Fall detection – Principles and Methods. In Proceedings of the 2007 29th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Lyon, France, 22–26 August 2007; pp. 1663–1666.

- Casilari, E.; Santoyo-Ramon, J.A.; Cano-Garcia, J.M. Analysis of Public Datasets for Wearable Fall Detection Systems. Sensors 2017, 17, 1513.

- Li, C.; Teng, G.; Zhang, Y. A survey of fall detection model based on wearable sensor. In Proceedings of the 2019 12th International Conference on Human System Interaction (HSI), Richmond, VA, USA, 25–27 June 2019; pp. 181–186.

- Ramachandran, A.; Ramesh, A.; Karuppiah, A. Evaluation of Feature Engineering on Wearable Sensor-based Fall Detection. In Proceedings of the 2020 International Conference on Information Networking (ICOIN), Barcelona, Spain, 7–10 January 2020; pp. 110–114.

- Iazzi, A.; Rziza, M.; Oulad Haj Thami, R. Fall Detection System-Based Posture-Recognition for Indoor Environments. J. Imag. 2021, 7, 42.

- Diraco, G.; Leone, A.; Siciliano, P. An active vision system for fall detection and posture recognition in elderly healthcare. In Proceedings of the 2010 Design, Automation Test in Europe Conference Exhibition (DATE 2010), Dresden, Germany, 8–12 March 2010; pp. 1536–1541.

- Rougier, C.; Meunier, J. Demo: Fall detection using 3D head trajectory extracted from a single camera video sequence. J. Telemed. Telecare 2005, 11, 7–9.

- Jansen, B.; Deklerck, R. Context aware inactivity recognition for visual fall detection. In Proceedings of the 2006 Pervasive Health Conference and Workshops, Innsbruck, Austria, 29 November–1 December 2006; pp. 1–4.

- Lin, C.L.; Chiu, W.C.; Chu, T.C.; Ho, Y.H.; Chen, F.H.; Hsu, C.C.; Hsieh, P.H.; Chen, C.H.; Lin, C.C.K.; Sung, P.S.; et al. Innovative Head-Mounted System Based on Inertial Sensors and Magnetometer for Detecting Falling Movements. Sensors 2020, 20, 5774.

- Waheed, M.; Afzal, H.; Mehmood, K. NT-FDS—A Noise Tolerant Fall Detection System Using Deep Learning on Wearable Devices. Sensors 2021, 21, 2006.

- Vavoulas, G.; Pediaditis, M.; Spanakis, E.G.; Tsiknakis, M. The MobiFall dataset: An initial evaluation of fall detection algorithms using smartphones. In Proceedings of the 13th IEEE International Conference on BioInformatics and BioEngineering, Chania, Greece, 10–13 November 2013; pp. 1–4.

- Medrano, C.; Igual, R.; Plaza, I.; Castro, M. Detecting Falls as Novelties in Acceleration Patterns Acquired with Smartphones. PLoS ONE 2014, 9, e94811.

- Wertner, A.; Czech, P.; Pammer, V. An Open Labelled Dataset for Mobile Phone Sensing Based Fall Detection. In Proceedings of the ICST (Institute for Computer Sciences, Social-Informatics and Telecommunications Engineering), Gent, Belgium, 22–24 July 2015.

- Vavoulas, G.; Chatzaki, C.; Malliotakis, T.; Pediaditis, M.; Tsiknakis, M. The MobiAct Dataset: Recognition of Activities of Daily Living using Smartphones. In Proceedings of the International Conference on Information and Communication Technologies for Ageing Well and e-Health—ICT4AWE, (ICT4AGEINGWELL 2016), Rome, Italy, 21–22 April 2016; pp. 143–151.

- Micucci, D.; Mobilio, M.; Napoletano, P. UniMiB SHAR: A Dataset for Human Activity Recognition Using Acceleration Data from Smartphones. Appl. Sci. 2017, 7, 1101.

- Martinez-Villasenor, L.; Ponce, H.; Brieva, J.; Moya-Albor, E.; Nunez-Martínez, J.; Penafort-Asturiano, C. UP-Fall Detection Dataset: A Multimodal Approach. Sensors 2019, 19, 1988.

- Lee, D.W.; Jun, K.; Naheem, K.; Kim, M.S. Deep Neural Network–Based Double-Check Method for Fall Detection Using IMU-L Sensor and RGB Camera Data. IEEE Access 2021, 9, 48064–48079.

- Kwolek, B.; Kepski, M. Improving fall detection by the use of depth sensor and accelerometer. Neurocomputing 2015, 168, 637–645.

- Chen, X.; Jiang, S.; Lo, B. Subject-Independent Slow Fall Detection with Wearable Sensors via Deep Learning. In Proceedings of the 2020 IEEE SENSORS, Online, 25–28 October 2020; pp. 1–4.

- Van Thanh, P.; Nguyen, T.; Nga, H.; Thi, L.; Ha, T.; Lam, D.; Chinh, N.; Tran, D.T. Development of a Real-time Supported System for Firefighters in Emergency Cases. In Proceedings of the International Conference on the Development of Biomedical Engineering in Vietnam, Ho Chi Minh, Vietnam, 27–29 June 2017.

- Van Thanh, P.; Le, Q.B.; Nguyen, D.A.; Dang, N.D.; Huynh, H.T.; Tran, D.T. Multi-Sensor Data Fusion in A Real-Time Support System for On-Duty Firefighters. Sensors 2019, 19, 4746.

- Geng, Y.; Chen, J.; Fu, R.; Bao, G.; Pahlavan, K. Enlighten Wearable Physiological Monitoring Systems: On-Body RF Characteristics Based Human Motion Classification Using a Support Vector Machine. IEEE Trans. Mob. Comput. 2016, 15, 656–671.

- Blecha, T.; Soukup, R.; Kaspar, P.; Hamacek, A.; Reboun, J. Smart firefighter protective suit - functional blocks and technologies. In Proceedings of the 2018 IEEE International Conference on Semiconductor Electronics (ICSE), Kuala Lumpur, Malaysia, 15–17 August 2018; p. C4.

- BNO055 Inertial Measurement Unit. Available online: https://item.taobao.com/item.htm?spm=a230r.1.14.16.282a69630O3V2r&id=541798409353&ns=1&abbucket=5#detail (accessed on 10 May 2021).

- Seeeduino XIAO MCU. Available online: https://detail.tmall.com/item.htm?id=612336208350&spm=a1z09.2.0.0.48d12e8d1z1cxA&_u=ajtqea1c090 (accessed on 3 June 2021).

- TCA9548A I2C Multiplexer. Available online: https://detail.tmall.com/item.htm?id=555889112029&spm=a1z09.2.0.0.48d12e8d1z1cxA&_u=ajtqea191ec (accessed on 19 May 2021).

- JDY-18 Bluetooth Low Energy 4.2 Module. Available online: https://detail.tmall.com/item.htm?id=561783372873&spm=a1z09.2.0.0.48d12e8d1z1cxA&_u=ajtqea15f8b (accessed on 1 June 2021).

- 3.7 V 400 mAh Lithium-Ion Battery. Available online: https://item.taobao.com/item.htm?id=619553965700&ali_refid=a3_430008_1006:1102265936:N:%2BblvRi4iO%2FgjtUw1Rz5DMnH2RFqSzBpj:1cecdd7aee090b757014f8c1916c98e1&ali_trackid=1_1cecdd7aee090b757014f8c1916c98e1&spm=a230r.1.0.0 (accessed on 22 May 2021).

- Yan, Y.; Ou, Y. Accurate fall detection by nine-axis IMU sensor. In Proceedings of the 2017 IEEE International Conference on Robotics and Biomimetics (ROBIO), Macao, China, 5–8 December 2017; pp. 854–859.

- O’Donovan, K.J.; Kamnik, R.; O’Keeffe, D.T.; Lyons, G.M. An inertial and magnetic sensor based technique for joint angle measurement. J. Biomech. 2007, 40, 2604–2611.

- Li, Q.; Stankovic, J.A.; Hanson, M.A.; Barth, A.T.; Lach, J.; Zhou, G. Accurate, Fast Fall Detection Using Gyroscopes and Accelerometer-Derived Posture Information. In Proceedings of the 2009 Sixth International Workshop on Wearable and Implantable Body Sensor Networks, Berkeley, CA, USA, 3–5 June 2009; pp. 138–143.

- Wu, F.; Zhao, H.; Zhao, Y.; Zhong, H. Development of a Wearable-Sensor-Based Fall Detection System. Int. J. Telemed. Appl. 2015, 2015, 576364.

- Ahn, S.; Kim, J.; Koo, B.; Kim, Y. Evaluation of Inertial Sensor-Based Pre-Impact Fall Detection Algorithms Using Public Dataset. Sensors 2019, 19, 774.

- Mahony, R.; Hamel, T.; Pflimlin, J.M. Nonlinear Complementary Filters on the Special Orthogonal Group. IEEE Trans. Autom. Control. 2008, 53, 1203–1218.

- Shi, J.; Chen, D.; Wang, M. Pre-Impact Fall Detection with CNN-Based Class Activation Mapping Method. Sensors 2020, 20, 4750.

- Luna-Perejon, F.; Munoz-Saavedra, L.; Civit-Masot, J.; Civit, A.; Dominguez-Morales, M. AnkFall—Falls, Falling Risks and Daily-Life Activities Dataset with an Ankle-Placed Accelerometer and Training Using Recurrent Neural Networks. Sensors 2021, 21, 1889.

- Kiprijanovska, I.; Gjoreski, H.; Gams, M. Detection of Gait Abnormalities for Fall Risk Assessment Using Wrist-Worn Inertial Sensors and Deep Learning. Sensors 2020, 20, 5373.

| Components | Specification |
|---|---|
| IMU | Triaxial accelerometer |
| | Triaxial gyroscope |
| | Triaxial magnetometer |
| | Operating voltage: 3 V to 5 V |
| Seeeduino XIAO MCU | Operating voltage: 3.3 V/5 V |
| | CPU: 40 MHz ARM Cortex-M0+ |
| | Flash memory: 256 KB |
| | RAM: 32 KB |
| | Size: 20 × 17.5 × 3.5 mm |
| | I2C: 1 pair |
| TCA9548A multiplexer | Operating voltage: 3 V to 5 V |
| | I2C: 8 pairs |
| JDY-18 BLE | Operating voltage: 1.8 V to 3.6 V |
| | BLE version: 4.2 |
| | Frequency: 2.4 GHz |
| | Size: 27 × 12.8 × 1.6 mm |
| Lithium-ion battery | Power supply: 3.7 V |
| | Capacity: 400 mAh |

| Code | Type | Activity | Trials for Each Subject | Total Trials |
|---|---|---|---|---|
| F1 | Falls | forward falls using knees | 5 | 70 |
| F2 | Falls | forward falls using hands | 5 | 70 |
| F3 | Falls | inclined falls left | 4 | 56 |
| F4 | Falls | inclined falls right | 4 | 56 |
| F5 | Falls | slow forward falls with crouch first | 3 | 42 |
| F6 | Falls | backward falls | 3 | 42 |
| FL1 | Fall-like | crouch | 4 | 56 |
| FL2 | Fall-like | walk with stoop | 4 | 56 |
| FL3 | Fall-like | sit | 3 | 42 |

| Placement | Chest | Elbows | Wrists | Thighs | Ankles |
|---|---|---|---|---|---|
| Code | C | E | W | T | A |

| IMU Quantity | Combination | AUC | Se | Sp | Ac |
|---|---|---|---|---|---|
| 9 | CEWTA | 0.97 | 92.25% | 94.59% | 94.10% |
| 7 | CEWT | 0.98 | 91.22% | 94.72% | 93.98% |
| 7 | CEWA | 0.95 | 89.04% | 94.25% | 93.15% |
| 7 | CETA | 0.95 | 88.01% | 95.37% | 93.82% |
| 7 | CWTA | 0.95 | 90.35% | 94.21% | 93.38% |
| 8 | EWTA | 0.94 | 90.72% | 90.21% | 90.32% |
| 5 | CEW | 0.94 | 88.72% | 91.94% | 91.26% |
| 5 | CEA | 0.95 | 88.39% | 92.24% | 91.43% |
| 5 | CWT | 0.98 | 88.39% | 92.24% | 91.43% |
| 6 | EWA | 0.93 | 85.06% | 92.42% | 90.87% |
| 6 | EWT | 0.96 | 89.14% | 91.42% | 90.94% |
| 5 | CWA | 0.96 | 90.84% | 93.02% | 92.56% |
| 5 | CET | 0.97 | 91.61% | 94.06% | 93.55% |
| 5 | ETA | 0.96 | 90.54% | 92.30% | 91.93% |
| 5 | WTA | 0.93 | 90.54% | 92.30% | 91.93% |
| 3 | CE | 0.96 | 92.88% | 89.92% | 90.54% |
| 3 | CW | 0.95 | 90.96% | 93.49% | 92.96% |
| 3 | CT | 0.95 | 86.94% | 92.97% | 91.70% |
| 3 | CA | 0.94 | 90.23% | 93.97% | 93.18% |
| 4 | TA | 0.92 | 83.92% | 90.61% | 89.20% |
| 4 | ET | 0.91 | 85.34% | 92.19% | 90.75% |
| 4 | EA | 0.95 | 87.40% | 93.49% | 92.21% |
| 4 | WT | 0.91 | 85.99% | 84.76% | 85.02% |
| 4 | WA | 0.90 | 81.98% | 90.72% | 88.88% |
| 4 | EW | 0.94 | 83.01% | 90.75% | 89.14% |
| 2 | E | 0.91 | 85.08% | 88.70% | 87.94% |
| 2 | W | 0.84 | 71.99% | 80.65% | 78.83% |
| 2 | T | 0.88 | 78.56% | 86.56% | 84.87% |
| 2 | A | 0.89 | 73.97% | 92.44% | 88.55% |
| 1 | C | 0.96 | 92.82% | 92.43% | 92.51% |
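The IMU quantities in the table above are consistent with a single IMU on the chest and one IMU per side for each paired placement (elbows, wrists, thighs, ankles). The sketch below encodes that reading; the per-placement counts are an assumption inferred from the table values, and the names `IMUS_PER_PLACEMENT`, `imu_count`, and `all_combinations` are hypothetical.

```python
from itertools import combinations

# Assumption inferred from the table: one chest IMU, and two IMUs
# (left and right) for every other placement code.
IMUS_PER_PLACEMENT = {"C": 1, "E": 2, "W": 2, "T": 2, "A": 2}


def imu_count(combination: str) -> int:
    """Return the number of IMUs used by a placement combination such as 'CET'."""
    return sum(IMUS_PER_PLACEMENT[code] for code in combination)


def all_combinations(codes: str = "CEWTA") -> list[str]:
    """Enumerate every non-empty subset of placement codes, largest first."""
    return [
        "".join(subset)
        for size in range(len(codes), 0, -1)
        for subset in combinations(codes, size)
    ]


if __name__ == "__main__":
    for combo in all_combinations():
        print(f"{combo:>5}: {imu_count(combo)} IMUs")
```

For example, `imu_count("CEWTA")` gives 9 and `imu_count("EWTA")` gives 8, matching the first column of the table.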

| Activity | CEWTA Se | CEWTA Sp | CEWTA Ac | CEWT Se | CEWT Sp | CEWT Ac | CET Se | CET Sp | CET Ac |
|---|---|---|---|---|---|---|---|---|---|
| F1 | 91.45% | 96.87% | 95.42% | 87.28% | 98.40% | 95.42% | 89.11% | 98.25% | 95.80% |
| F2 | 94.48% | 98.34% | 97.15% | 93.54% | 98.26% | 96.81% | 95.22% | 98.38% | 97.41% |
| F3 | 96.55% | 97.20% | 97.00% | 95.69% | 97.58% | 97.07% | 97.09% | 97.10% | 97.10% |
| F4 | 95.02% | 98.72% | 97.62% | 94.91% | 99.02% | 97.79% | 95.37% | 98.72% | 97.72% |
| F5 | 96.62% | 97.40% | 97.22% | 98.46% | 97.22% | 97.50% | 97.23% | 97.22% | 97.22% |
| F6 | 78.38% | 99.05% | 92.80% | 80.70% | 99.27% | 93.66% | 76.71% | 99.11% | 92.33% |
| FL1 | 0% | 90.31% | 90.31% | 0% | 90.06% | 90.06% | 0% | 89.38% | 89.38% |
| FL2 | 0% | 89.92% | 89.92% | 0% | 84.17% | 84.17% | 0% | 82.21% | 82.21% |
| FL3 | 0% | 87.79% | 87.79% | 0% | 89.87% | 89.87% | 0% | 87.60% | 87.60% |
| Total | 92.25% | 94.59% | 94.10% | 91.22% | 94.72% | 93.98% | 91.61% | 94.06% | 93.55% |

| Activity | CA Se | CA Sp | CA Ac | C Se | C Sp | C Ac | Average Se | Average Sp | Average Ac |
|---|---|---|---|---|---|---|---|---|---|
| F1 | 91.25% | 94.31% | 93.49% | 90.84% | 95.31% | 94.11% | 89.99% | 96.63% | 94.85% |
| F2 | 81.84% | 95.89% | 91.58% | 97.66% | 95.81% | 96.38% | 92.55% | 97.34% | 95.87% |
| F3 | 93.64% | 97.44% | 96.27% | 99.68% | 95.80% | 97.00% | 96.53% | 97.02% | 96.89% |
| F4 | 94.61% | 98.08% | 94.07% | 84.14% | 96.61% | 92.90% | 92.81% | 98.23% | 96.02% |
| F5 | 98.46% | 96.32% | 96.80% | 96.61% | 97.04% | 96.94% | 97.48% | 97.04% | 97.14% |
| F6 | 78.38% | 97.60% | 91.79% | 88.55% | 97.88% | 95.06% | 80.54% | 98.58% | 93.13% |
| FL1 | 0% | 83.72% | 83.72% | 0% | 99.61% | 99.61% | 0% | 90.62% | 90.62% |
| FL2 | 0% | 86.88% | 86.88% | 0% | 80.88% | 80.88% | 0% | 84.81% | 84.81% |
| FL3 | 0% | 89.99% | 89.99% | 0% | 93.05% | 93.05% | 0% | 89.66% | 89.66% |
| Total | 90.23% | 93.97% | 93.18% | 92.82% | 92.43% | 92.51% | / | / | / |

| Reference | Application | Methodology | Algorithm | Sampling rate (SR) | Se | Sp | Ac |
|---|---|---|---|---|---|---|---|
| Van et al. (2018) [27] | Firefighters | One 3-DOF accelerometer and one barometer in the thigh pocket, and one CO sensor on the mask (four algorithms proposed in [27] and one in [28]) | Algorithm 1 | 100 Hz | 100% | 100% | 100% |
| Van et al. (2018) [27] | Firefighters | Same as above | Algorithm 2 | 100 Hz | 100% | 94.44% | 95.83% |
| Van et al. (2018) [27] | Firefighters | Same as above | Algorithm 3 | 100 Hz | 100% | 90.74% | 93.05% |
| Van et al. (2018) [27] | Firefighters | Same as above | Algorithm 4 | 100 Hz | 100% | 91.67% | 93.75% |
| Van et al. (2018) [28] | Firefighters | Same as above | Algorithm 1 | 100 Hz | 88.9% | 94.45% | 91.67% |
| Shi et al. (2020) [42] | Elderly | 1 IMU on waist | / | 100 Hz | 95.54% | 96.38% | 95.96% |
| AnkFall (2021) [43] | Elderly | 1 IMU on ankle | / | 100 Hz | 76.8% | 92.8% | / |
| Kiprijanovska et al. (2020) [44] | General population | 2 IMUs in 2 smartwatches | / | 100 Hz | 90.6% | 86.2% | 88.9% |
| Proposed method | Firefighters | 9 9-DOF IMUs on the chest, wrists, elbows, thighs and ankles | / | 15 Hz | 92.25% | 94.59% | 94.10% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chai, X.; Wu, R.; Pike, M.; Jin, H.; Chung, W.-Y.; Lee, B.-G. Smart Wearables with Sensor Fusion for Fall Detection in Firefighting. Sensors 2021, 21, 6770. https://doi.org/10.3390/s21206770
