1. Introduction

As the combination of a wide spectrum of cutting-edge technologies, autonomous vehicles (AVs) are destined to fundamentally change and reform the whole mobility system [1]. AVs have great potential for improving safety and mobility [2,3,4], reducing fuel consumption and emissions [5,6], and redefining civil infrastructure systems, such as road networks [7,8,9], parking spaces [10,11,12], and public transit systems [13,14]. Over the past two decades, many advanced driver assistance systems (ADAS) (e.g., lane keeping, adaptive cruise control) have been deployed in various types of production vehicles. Currently, both traditional car manufacturers and high-tech companies are competing to lead the development of full autonomy technologies. For example, Waymo’s AVs alone were driving 25,000 miles every day in 2018 [15], and Uber has operated commercialized AVs in multiple cities [16].

Despite the rapid development of AV technologies, there is still a long way to go to reach full autonomy and to completely replace all conventional vehicles with AVs. We will witness a long period over which AVs and conventional vehicles co-exist on public roads. How to sense, model and manage the mixed transportation systems presents a great challenge to public agencies. To the best of our knowledge, most current studies view AVs as controllers and focus on modeling and managing the mixed traffic networks [17]. For example, novel system optimal (SO) and user equilibrium (UE) models are established to include AVs [18,19], coordinated intersections are proposed to improve the traffic throughput [20,21,22], vehicle platooning strategies are developed to reduce highway congestion [23,24], and AVs can also complement conventional vehicles to solve last-mile problems [25,26]. However, there is a lack of studies on traffic sensing methods for the mixed traffic networks.

In this paper, we advocate the great potential of AVs as moving observers in high-resolution traffic sensing. We note that traffic sensing with AVs in this paper is different from the perception of AVs [27]. The perception of AVs is the key to safe and reliable AVs, and it refers to the ability of AVs to collect information and extract relevant knowledge from the environment through various sensors [28], while traffic sensing with AVs refers to estimating the traffic conditions, such as flow, density and speed, using the information perceived by AVs [29]. To be precise, traffic sensing with AVs is built on top of the perception technologies on AVs, and in this paper, we will discuss the impact of different perception technologies on traffic sensing.

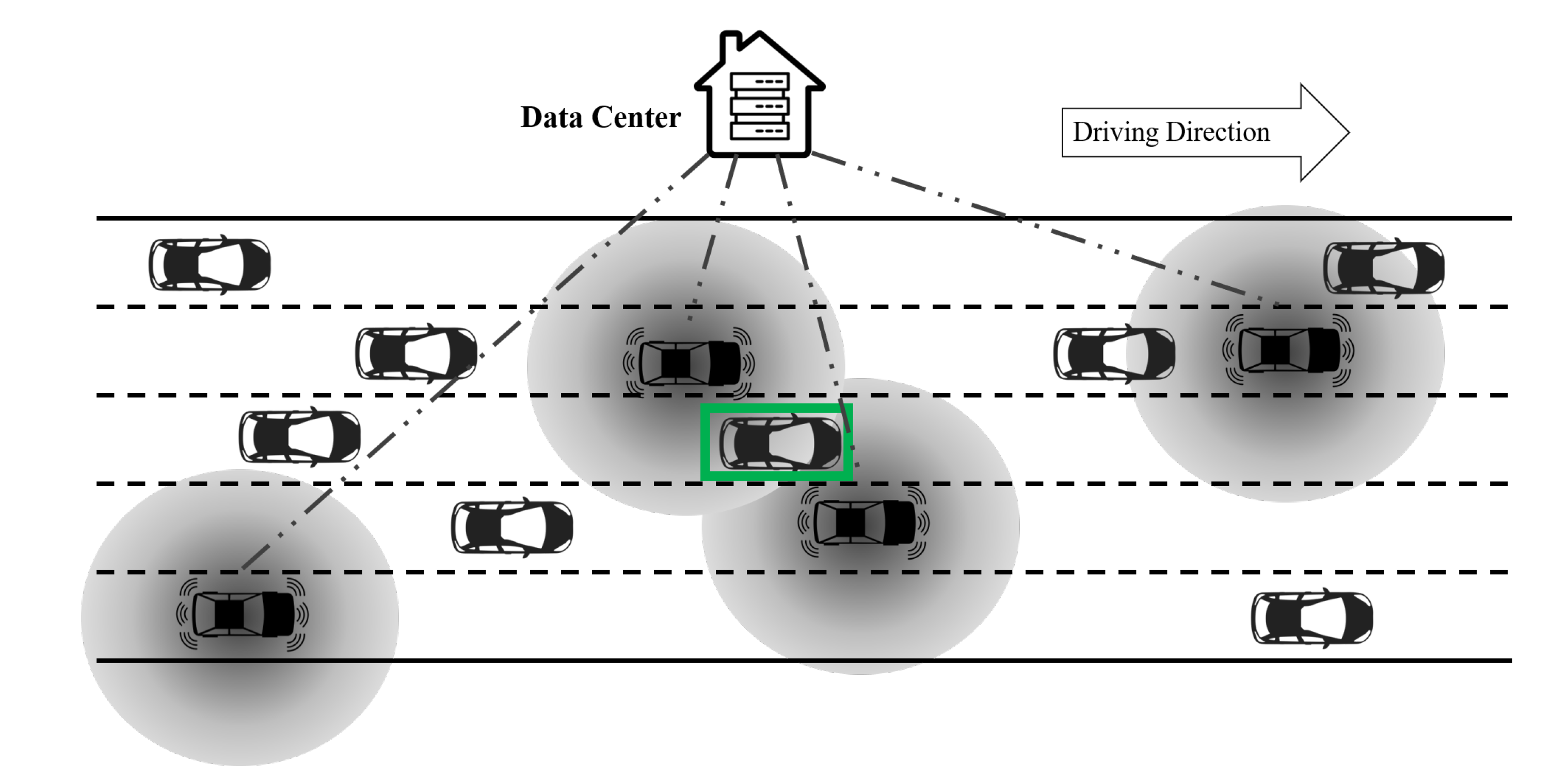

In fact, a fleet of autonomous vehicles (AVs) can serve as floating (or probe) sensors, detecting and tracking the surrounding traffic conditions to infer traffic information when cruising around the roadway network. Enabling traffic sensing with AVs is cost effective. Although AVs equipped with various sensors and data analytics capabilities may be costly, those sensors and data are used primarily to detect and track adjacent objects to enable safe AV driving in the first place. Therefore, traffic sensing is a secondary use of these data and incurs no additional data collection cost.

High-resolution traffic sensing is central to traffic management and public policies. For instance, local municipalities would need information regarding how public space (e.g., curbs) is being utilized to set up optimal parking duration limits; metropolitan planning agencies would need various types of traffic/passenger information, including travel speed, traffic density and traffic flow by vehicle classifications, as well as pedestrians and cyclists. In addition, non-emergent and emergent incidents are reported by citizens through the 311 and 911 systems, respectively. Automated traffic sensing, both historical and in real time, can complement those systems to enhance their timeliness, accuracy and accessibility. In general, accurate and ubiquitous information on infrastructure and usage patterns in public space is currently missing.

By leveraging the rich data collected through AVs, we are able to detect and track various objects in transportation networks. The objects include, but are not limited to, moving vehicles by vehicle classifications, parked vehicles, pedestrians, cyclists, and signage in public space. When all those objects are continuously tracked at high spatio-temporal resolution, the data can be translated into useful traffic information for public policies and decision making. Traffic sensing based on autonomous vehicle sensors has three key features: it is inexpensive, ubiquitous and reliable. The data are collected by automotive manufacturers for guiding autonomous driving in the first place, which promises great scalability for this approach. With minimal additional effort, the same data can be effectively translated into information useful for the community. For instance, how much time in public space at a particular location is utilized by different classifications of vehicles and by what travel modes, respectively? Can we effectively evaluate the accessibility, mobility and safety of the mobility networks? The sensing coverage will become ubiquitous in the near future, given an increasing market share of autonomous vehicles. Data acquired from individual autonomous vehicles can be compared, validated, corrected, consolidated, generalized and anonymized to retrieve the most reliable and ubiquitous traffic information. In addition, the traffic sensing approach in this paper also opens the possibility of future interventions for effective and timely traffic management. It enables real-time traffic monitoring, potentially safer traffic operation, faster emergency response, and smarter infrastructure management.

The rest of this paper focuses on a critical problem, namely estimating the fundamental traffic state variables (flow, density and speed) in high resolution, to demonstrate the sensing power of AVs. In addition to traffic sensing, there are many aspects and data in community sensing that could be explored in the near future. For example, the perception of AVs can be used for monitoring urban forest health, air quality, street surface roughness and many other applications of municipal asset management [30,31,32,33].

Traffic state variables (e.g., flow, density and speed) play a key role in traffic operation and management. Over the past several decades, traffic state estimation (TSE) methods have been developed for not only stationary sensors (i.e., Eulerian data) but also moving observers (i.e., Lagrangian data) [34]. Stationary sensors, including loop detectors, cameras and radar, monitor the traffic conditions at a fixed location. Due to the high installation and maintenance cost, stationary sensors are usually sparsely installed in the network, and hence the collected data are not sufficient for practical traffic operation and management [35]. Data collected by moving observers (e.g., probe vehicles, ride-sourcing vehicles, unmanned aerial vehicles, mobile phones) have better spatial coverage and hence enable cost-effective TSE in large-scale networks [36]. Though the TSE method with moving observers dates back to 1954 [37], recent advances in communication and Internet of Things (IoT) technologies have catalyzed the development and deployment of various moving observers in the real world. Readers are referred to Seo et al. [29] and Wang and Papageorgiou [38] for a comprehensive review of existing TSE models.

To highlight our contributions, we present studies that are closely related to this paper. The moving observers can be categorized into four types: originally defined moving observers, probe vehicles (PVs), unmanned aerial vehicles (UAVs) and AVs. Their characteristics and related TSE models are presented as follows:

Originally defined moving observers. The moving observer method for TSE was originally proposed by Wardrop and Charlesworth [37]. The proposed method requires a probe vehicle to traverse the road and count the number of slower vehicles overtaken by the probe vehicle and the number of faster vehicles that overtake the probe vehicle [39]. Though the setting of the originally defined moving observers is too idealized for practice, it demonstrates the value of using Lagrangian data for TSE.

PVs. PVs refer to all vehicles that can be geo-tracked, including, but not limited to, taxis, buses, trucks, connected vehicles, and ride-sourcing vehicles [40]. PV data have great advantages in estimating speed, while they hardly contain density/flow information. Studies have explored the sensing power of PVs [41]. PV data are usually used to complement stationary sensor data to enhance the traffic state estimation [42,43]. PVs with spacing measurement equipment can estimate traffic flow and speed simultaneously [44,45,46,47].

UAVs. By flying over the roads and viewing from top-view perspectives, UAVs are able to monitor a segment of road or even the entire network [48,49,50]. UAVs have the advantage of better spatial coverage, while extra purchase of UAVs and the corresponding maintenance cost are required. Traffic sensing with UAVs has been extensively studied in recent years, including vehicle identification algorithms [50,51,52], sensing frameworks [53,54], and UAV routing mechanisms [55,56].

AVs. AVs can be viewed as probe vehicles equipped with more sensors and hence have better perception capabilities. Not only can the AV itself be geo-tracked, but the vehicles surrounding the AV can also be detected and tracked. AVs also share some similarities with UAVs because AVs can scan a continuous segment of road. We believe that AVs fall in between PVs and UAVs, and hence existing TSE methods can hardly be applied to AVs. Furthermore, there are few studies on TSE with AVs. Chen et al. [57] present a cyber-physical system to model the traffic flow near AVs based on flow theory, while TSE for the whole road is not studied. Recently, Uber ATG conducted an experiment to explore the possibility of TSE using AVs [58], but no rigorous quantitative analysis is provided.

The characteristics of TSE methods using different sensors are compared in Table 1. One can see that AVs have unique characteristics that differ from those of any other moving observers. Given the unique characteristics of AVs, there is a great need to study AV-based TSE methods. However, as discussed above, this research area is still under-explored. In view of this, we develop a data-driven framework that estimates high-resolution traffic state variables, namely flow, density and speed, using the massive data collected by AVs. The framework clearly defines the task of TSE with AVs involved and considers different perception levels of AVs. A two-step TSE method is proposed under a low AV market penetration rate. While this paper focuses on road-level traffic state estimation, the proposed approach could be further extended to network-wide TSE, which is left for future research. The main contributions of this paper are summarized as follows:

We raise and clearly define, for the first time, the problem of TSE with multi-source data collected by AVs.

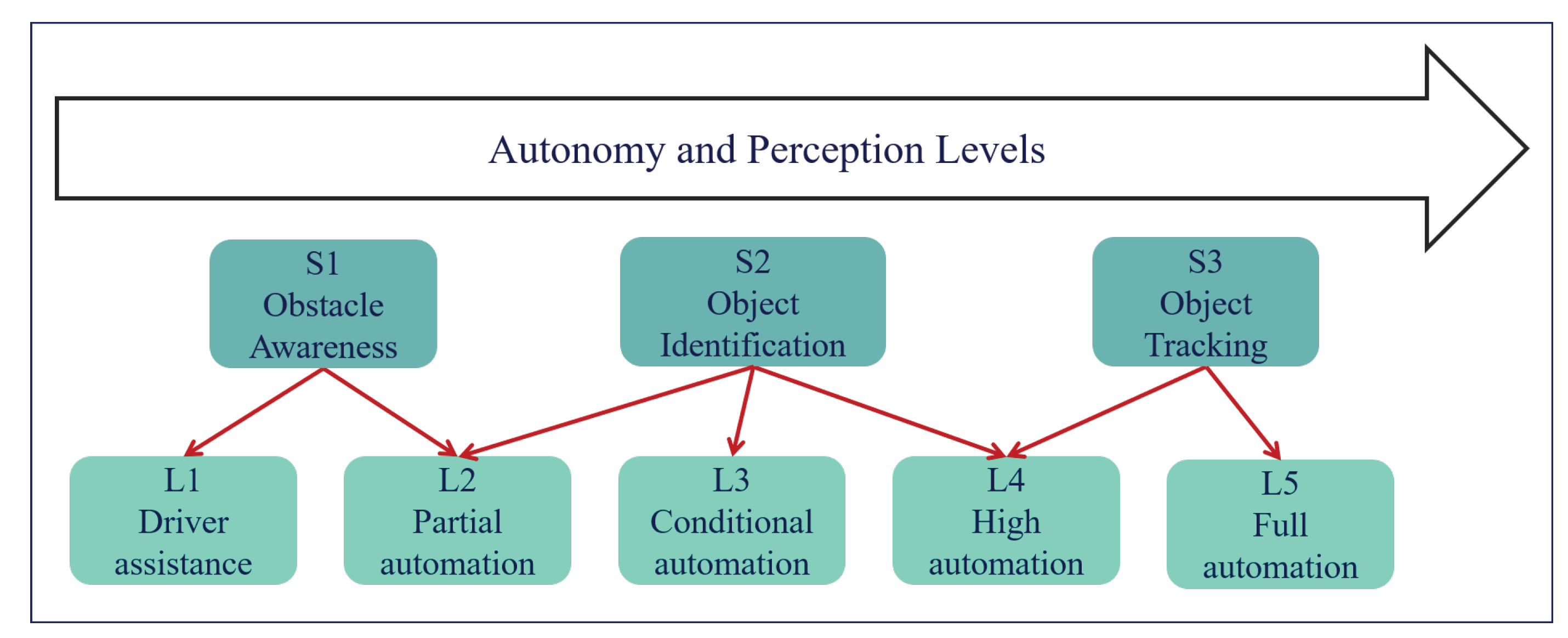

We discuss the functionality and role of various AV sensors in traffic state estimation. The sensing power of AVs is categorized into three levels.

We rigorously formulate the AV-based TSE problem. A two-step framework that leverages the sensing power of AVs to estimate high-resolution traffic state variables is developed. The first step directly translates the information observed by AVs to spatio-temporal traffic states and the second step employs data-driven methods to estimate the traffic states that are not observed by AVs. The proposed estimation methods are data-driven and consistent with the traffic flow theory.

The next generation simulation (NGSIM) data are adopted to examine the accuracy and robustness of the proposed framework. Experimental results are compelling, satisfactory and interpretable. Sensitivity analyses regarding AV penetration rate, sensor configuration, and perception accuracy are also conducted.

Since TSE with AV data has not been explored in previous studies, this paper is probably the first attempt to rigorously tackle this problem. To this end, we first review the existing AV technologies that can contribute to traffic sensing, then we rigorously formulate the TSE problem. Finally, we propose and examine the solution framework. An overview of the paper structure is presented in Figure 1. Section 2 discusses the sensing power of AVs. Section 3 rigorously formulates the high-resolution TSE framework with AVs, followed by a discussion of the solution algorithms in Section 4. In Section 5, numerical experiments are conducted with NGSIM data to demonstrate the effectiveness of the proposed framework. Lastly, conclusions are drawn in Section 6.

3. Formulation

Now, we are ready to rigorously formulate the traffic state estimation (TSE) framework with AVs. We first present the notations, and then define the traffic state variables. A two-step estimation method is proposed: the first step directly translates the information observed by AVs to spatio-temporal traffic states, and the second step employs data-driven methods to estimate the traffic states that are not observed by AVs.

3.1. Notations

All the notations will be introduced in context, and Table 4 provides a summary of the commonly used notations for reference.

3.2. Modeling Traffic States in Time-Space Region

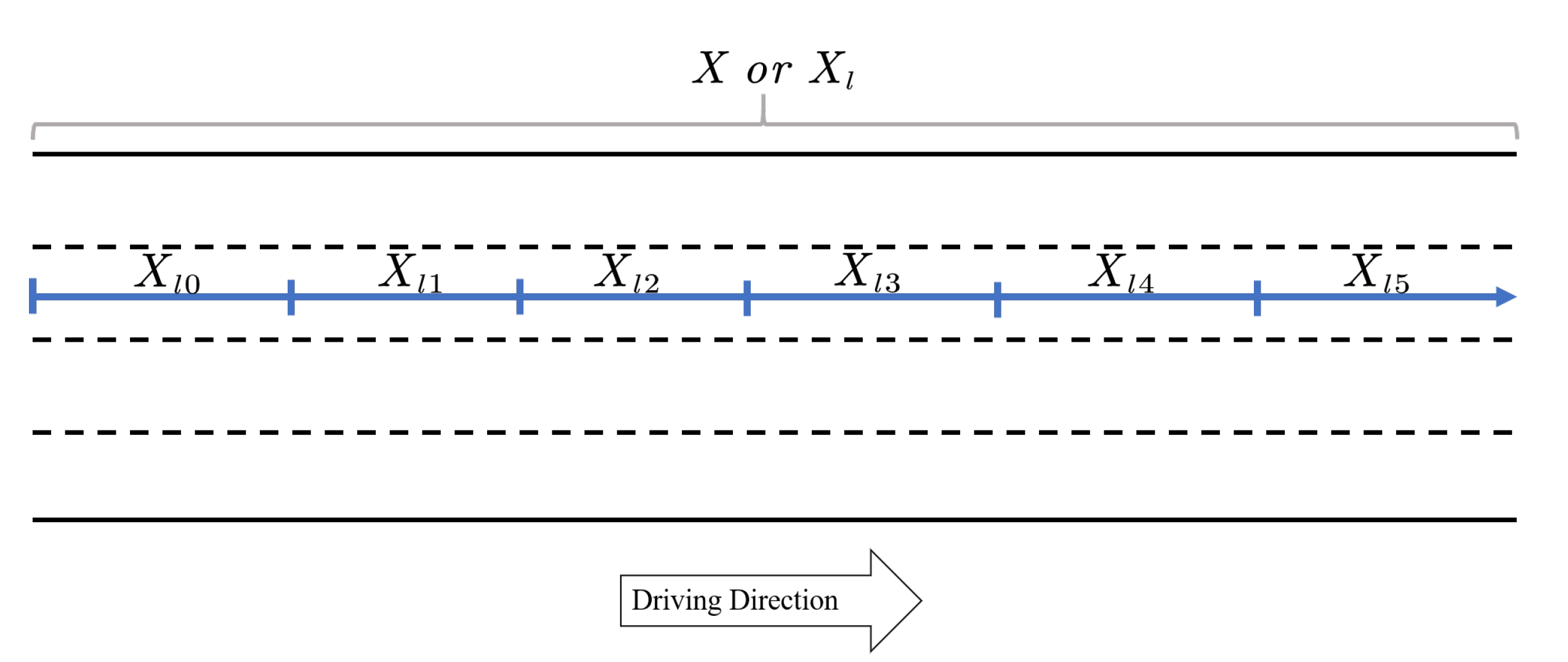

We consider a highway with

lanes, where

. The operator

is the counting measure for countable sets. For each lane

, we denote

as the set of longitudinal locations on lane

l. In this paper, we treat each lane as a one-dimensional line. Without loss of generality, we set the starting point of

to be 0, hence

, where

is the length of lane

l. Throughout the paper, we denote operator

as the Lebesgue measure in either one or two dimensional Euclidean space, and it represents the length or area for one or two dimensional space. Note in this paper we assume the length of each lane is the same

, while the proposed estimation method can be easily extended to accommodate different lane lengths. We further discretize the road

to

equal distance road segments and each road segment is denoted by

, where

is the index of the road segment and

. Hence, we have

and

. The above road discretization is visualized in

Figure 6.

We denote

i as the index of a vehicle and

I as the set of all vehicle indices. We further define the location

, speed

, and space headway

at a time point

, where

is the longitudinal location of vehicle

i at time

t,

is the lane in which vehicle

i is located at time

t, and

T is the set of all time points in the study period. We assume that each vehicle

i only enters the highway once. If a vehicle enters the highway multiple times, the vehicle at each entrance will be treated as a different vehicle. To obtain the traffic states, we construct the distance

and time

and headway area

from vehicle location

, speed

, and headway

for a vehicle

i based on Edie [

74]. Throughout the paper, each vehicle is represented by a point, which is located at the center of each vehicle shape. When the vehicle is at the border of two lanes, function

will randomly assign the vehicle to either of the lanes.

Suppose

denotes the time point when the vehicle enters the highway and

denotes the time point when the vehicle exits the highway, we denote the distance traveled and time spent on lane

l by vehicle

i as

and

, respectively. Mathematically,

and

are presented in Equation (

1).

We use the headway area

to represent the headway between vehicle

i and its preceding vehicle on lane

l in the time-space region, and it is represented by Equation (

2).

When we have the trajectories of all vehicles on the road, we can model the traffic states of each lane in a time-space region. Without loss of generality, we set the starting point of t to be zero, hence we have , where is the length of the study period. We discretize the study period T to equal time intervals, where . We denote as the set of time points for interval h, where . Therefore, we have , where . In this paper, we use uniform discretization for and T to simplify the formulation, while the proposed estimation methods work for the arbitrary discretization scheme.

We use

to denote a cell in the time-space region for road segment

and time period

, as presented in Equation (

3).

We denote the headway area of vehicle

i in cell

by

, as presented in Equation (

3). The headway area can be thought of as the multiplication of the space headway and time headway in the time-space region.

Example 1 (Variable representation in time-space region).

In this example, we illustrate the variables defined in the time-space region. We consider a one-lane road and the lane index is l. Furthermore, is segmented into 6 road segments (), and T is segmented into 10 time intervals (), as presented in Figure 7. The cell is the intersection of and , is the intersection of and .Each green line in the time-space region represents the trajectory of a vehicle. In Figure 7, we highlight the first (), second () and the 8th () vehicle trajectory. The distance traveled by each vehicle is the same, hence . We also highlight in Figure 7, which represents the time spent by each vehicle on lane l. The headway area of vehicle , denoted by , is represented by the green shaded area. The red shaded area, which represents , is the intersection of and , based on Equation (3). According to Seo et al. [

46], Edie [

74], we compute the traffic states variables, namely flow

, density

and speed

, for each road segment

and time period

, as presented in Equation (

4).

We treat the traffic states, (e.g., flow , density and speed ) estimated from full samples of vehicles I as ground truth and unknown. In the following sections, we will develop a data-driven framework to estimate the traffic states from the partially observed traffic information obtained from autonomous vehicles under different levels of perception power.
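The cell-level definitions above can be illustrated with a minimal sketch of Edie's generalized definitions [74] on a uniform time-space grid for a single lane. This is only an illustration under stated assumptions: the function name `edie_states`, the input layout (a dictionary of time-sorted `(t, x)` samples per vehicle), and the midpoint rule for assigning each step to a cell are hypothetical choices, and the exact form of Equation (4) in this paper may differ.

```python
from collections import defaultdict


def edie_states(trajectories, seg_len, dt):
    """Edie's generalized flow, density and speed on a uniform grid (one lane).

    trajectories: dict vehicle_id -> list of (t, x) samples sorted by time
                  (hypothetical input layout, t in seconds, x in meters).
    seg_len:      length of each road segment in meters.
    dt:           length of each time interval in seconds.
    Returns a dict (h, s) -> (flow, density, speed) in veh/s, veh/m and m/s.
    """
    dist = defaultdict(float)        # total distance travelled in each cell
    time_spent = defaultdict(float)  # total time spent in each cell
    for samples in trajectories.values():
        for (t0, x0), (t1, x1) in zip(samples, samples[1:]):
            # Assumption: each short step is attributed to the cell that
            # contains its midpoint in the time-space region.
            h = int(((t0 + t1) / 2) // dt)
            s = int(((x0 + x1) / 2) // seg_len)
            dist[(h, s)] += x1 - x0
            time_spent[(h, s)] += t1 - t0

    area = seg_len * dt  # area of one cell in the time-space region
    states = {}
    for cell, total_time in time_spent.items():
        flow = dist[cell] / area
        density = total_time / area
        speed = flow / density if density > 0 else float("nan")
        states[cell] = (flow, density, speed)
    return states
```

For a single vehicle travelling at a constant 10 m/s through a 100 m by 10 s cell, the sketch gives a density of 0.01 veh/m and a flow of 0.1 veh/s, so that flow equals density times speed.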

3.3. Challenges in the High-Resolution TSE with AVs

As summarized in Table 1, TSE with AVs is a unique problem that has not been explored. In this section, we highlight the difficulties of this unique problem, and further motivate the proposed traffic sensing framework in the following sections.

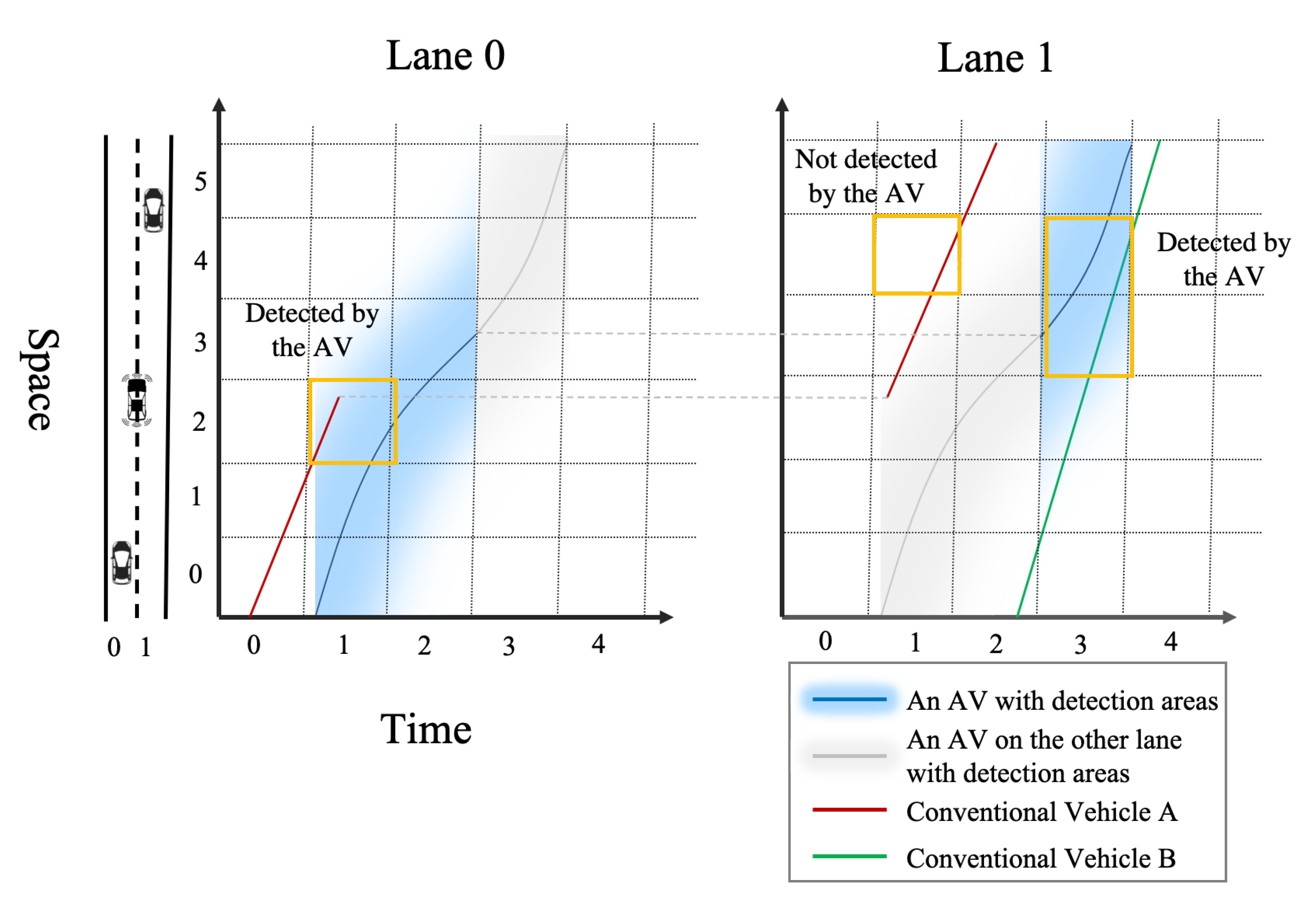

As the vehicle trajectories in the high-resolution time-space region are complicated, the information collected by the AVs is also highly complicated and fragmented. To illustrate, we consider a two-lane road with three vehicles (one AV, and two conventional vehicles A and B), as shown in Figure 8. The trajectory of the AV is represented by the blue line, and its detection area is represented by the areas shaded in blue and grey. Even when the AV is on lane 0, it can still detect the vehicles on lane 1, thanks to the characteristics of LiDAR and cameras. Hence, a blue area means that the AV is on the current lane, and a grey area means that the AV is on the other lane.

As shown in Figure 8, both the AV and vehicle A change lanes during the trip. Vehicle A changes lanes in the middle of time interval 1, and the AV changes lanes at the beginning of time interval 3. One can see that vehicle A can be detected by the AV in the cell

on both lane 0 and lane 1, and vehicle B can be detected in the cell

and

on lane 1. For the cell

on lane 1, it is not straightforward to see whether vehicle A is detected or not, hence rigorous mathematical formulations should be developed to determine which cells are observable and which cells should be estimated. In real-world congested roads, vehicles might change lanes frequently, and hence their trajectories can be very complicated. From the example, we can see that, due to the complicated vehicle trajectories on the roads, the information collected by the AVs is non-uniformly distributed in the time-space region, and most of the existing TSE methods cannot be applied to such data. Therefore, solving the TSE with AV data calls for a new estimation framework.

3.4. Overview of the Traffic Sensing Framework

In this section, we present an overview of the traffic sensing framework with AVs. We assume a subset of vehicles are AVs, namely

, where

denotes the index set of all AVs. The goal of the traffic sensing framework is to estimate the density and speed of each cell in the time-space region, using the information observed by AVs. Once the speed and density are estimated accurately, the traffic flow can be obtained by the conservation law [75].

The TSE methods can be categorized into two types: model-driven methods and data-driven methods. The model-driven methods rely on physical models such as traffic flow theory, while the data-driven methods automatically learn the relationship between different variables. In the case of AV-based TSE, the observed information is fragmented and lacking in certain patterns, so it is challenging to establish the physical model. In contrast, the data-driven methods can be built easily, thanks to the massive data collected by the AVs. Therefore, this paper focuses on the data-driven approach.

The proposed framework consists of two major parts: direct observation and data-driven estimation, as presented in Figure 9.

In the direct observation step, density and speed are observed directly through AVs. Since AVs are moving observers [37], traffic states can only be observed partially for a set of time intervals and road segments (i.e., cells) in a time-space region. Section 3.5 will rigorously determine the set of cells that can be directly observed by AVs and compute the direct observations from information obtained by AVs. We will discuss the direct observation with different levels of sensing power. The second part aims at filling in the unobserved information with data-driven estimation methods. The functions

and

are used to estimate the unobserved density and speed on lane

l, respectively. Details will be presented in Section 3.6.

3.5. Direct Observation

In this section, we present how to compute traffic states using the information that is directly observed by AVs under different levels of perception. We define

as the set of time-space indices

of the cells that are observed by the AVs, and

j is the index of the detection area. The set

presents all

detected by

, and

present all

detected by

. Details are presented in Appendix A. Now we formulate the traffic states that can be directly observed by AVs under different levels of perception. As a notational convention, we use ⋆ to represent the information that cannot be directly observed by AVs, and

denote the directly observed density and speed, respectively.

3.5.1. : Tracking the Preceding Vehicle

In the perception level

, an AV can only detect and track its preceding vehicle, and hence its detection area for density and speed is

. The observed density and speed are given in Equation (5).

Equation (5) has been shown to be an accurate estimator of the traffic states [46]. We note that some AVs also have an LRR mounted to track the vehicle following behind them, and this situation can be accommodated by replacing the set

with

in Equation (5), where

represents all the vehicles that follow AVs in

.

When the AV market penetration rate is low, only covers a small fraction of all cells in the time-space region, especially for multi-lane highways. In contrast, covers more cells than . Practically, it implies that the LiDAR and cameras are the major sensors for traffic sensing with AVs.
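To make the first perception level concrete, the following is a minimal sketch of a probe-vehicle estimator of the kind reported by Seo et al. [46], in which the density of a cell is the total time spent by the tracked vehicles divided by their total headway area, and the speed is total distance over total time. The function name `probe_cell_states` and the record layout are hypothetical, and since Equation (5) is not reproduced here, this form should be read as an assumption rather than the paper's exact formula.

```python
def probe_cell_states(records):
    """Estimate density and speed of one cell from tracked headway areas.

    records: list of (d, t, a) tuples, one per AV observed in the cell, where
             d = distance travelled in the cell (m),
             t = time spent in the cell (s),
             a = headway area to the preceding vehicle in the cell (m*s).
             (Hypothetical input layout.)
    Returns (density in veh/m, speed in m/s), or None when the cell is not
    observed, which corresponds to the ⋆ case in the text.
    """
    total_d = sum(d for d, _, _ in records)
    total_t = sum(t for _, t, _ in records)
    total_a = sum(a for _, _, a in records)
    if total_a <= 0 or total_t <= 0:
        return None
    density = total_t / total_a  # vehicles per meter
    speed = total_d / total_t    # meters per second
    return density, speed
```

For example, an AV that spends 10 s in a cell while keeping a 25 m space headway sweeps a headway area of 250 m·s, which corresponds to a density of 0.04 veh/m.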

3.5.2. : Locating Surrounding Vehicles

In the perception level

, both

and

are enabled by the LRR, LiDAR and cameras, while

can only detect the location of surrounding vehicles. Hence, the density can be observed in both

and

, and the speed is only observed in

. The estimation method for

cannot be used for

, since the preceding vehicles of the detected vehicle might not be detected, hence

cannot be estimated accurately. Instead,

provides a snapshot of the traffic density at a certain time point, and we can compute the density of time interval

h by taking the average of all snapshots, as presented in Equation (6).

where

represents the set of time indices when

is covered by the

in

, and

represents the set of vehicles detected by

on

at time

t. Detailed formulations are presented in Appendix A.
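The snapshot-averaging idea described in this subsection can be sketched as follows. The function name `snapshot_density` and the input layout are hypothetical, and the sketch assumes that only time points at which the whole road segment is covered by the detection area are passed in.

```python
def snapshot_density(vehicle_counts, seg_len):
    """Average the snapshot densities of one road segment over a time interval.

    vehicle_counts: dict time_index -> number of vehicles detected on the
                    segment at that time point. Only time points at which the
                    segment is covered by the detection area should be
                    included (assumption of this sketch).
    seg_len:        length of the road segment in meters.
    Returns the density in veh/m, or None if the segment was never covered.
    """
    if not vehicle_counts:
        return None
    snapshots = [count / seg_len for count in vehicle_counts.values()]
    return sum(snapshots) / len(snapshots)
```

With three snapshots that detect 2, 4 and 3 vehicles on a 100 m segment, the averaged density is 0.03 veh/m.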

3.5.3. : Tracking Surrounding Vehicles

In the perception level

, both localization and tracking are enabled by the LRR, LiDAR and cameras. In addition to the information obtained by

, speed information of surrounding vehicles in

is also available. Similar to the density estimation, we first compute the instantaneous speed of a cell at a certain time point by taking the harmonic mean of the speeds of all detected vehicles, and then the average speed of a cell is computed by averaging over all time points, as presented in Equation (7).

where

represents the harmonic mean. Though

provides the most speed information, the directly observed density is the same for

and

. Overall, the sensing power of AV increases as more cells are directly observed from

to

. In the following section, we will present how to fill the ⋆ entries using data-driven methods.
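The two-stage speed computation described in this subsection (harmonic mean across detected vehicles at each time point, then an arithmetic mean across time points) can be sketched with the Python standard library. The function name `cell_speed` and the input layout are hypothetical.

```python
from statistics import harmonic_mean, mean


def cell_speed(speeds_by_time):
    """Average speed of one cell from tracked surrounding vehicles.

    speeds_by_time: dict time_index -> list of speeds (m/s) of the vehicles
                    detected in the cell at that time point
                    (hypothetical input layout).
    Returns the cell speed in m/s, or None if nothing was detected.
    """
    # Instantaneous speed at each time point: harmonic mean over vehicles.
    instantaneous = [
        harmonic_mean(speeds) for speeds in speeds_by_time.values() if speeds
    ]
    # Cell speed: arithmetic mean of the instantaneous speeds over time.
    return mean(instantaneous) if instantaneous else None
```

For instance, detecting speeds of 10 and 30 m/s at one time point gives an instantaneous speed of 15 m/s, and averaging with a second time point at 20 m/s gives 17.5 m/s.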

3.6. Data-Driven Estimation Method

In this section, we propose a data-driven framework to estimate the unobserved density and speed in . To differentiate the density (speed) before and after the estimation, we use and to represent the estimated density and speed for time interval h road segment s and lane l, while denote the density and speed before the estimation (i.e. after the direct observation). The method consists of two steps: (1) estimate the unobserved density given the observed density ; (2) estimate the unobserved speed given that the density is fully known from estimation and speed is partially known from direct observation.

We present the generalized form for estimating the unobserved density and speed in Equations (8) and (9), respectively.

where

is a generalized function that takes the observed density

and time/space index

as input and outputs the estimated density.

is also a generalized function to estimate speed, while its inputs include the observed speed

, the estimated density

, and the time/space index

. In this paper, we propose matrix completion-based methods for

, and both matrix completion-based and regression-based methods for

. The details are presented in the following subsections.

3.6.1. Matrix Completion-Based Methods

The matrix completion-based model can be used to estimate either density or speed. We first assume that densities (speeds) in certain cells are directly observed by the AVs, as presented in Equation (10).

where we denote

and

as the detection area for density and speed of lane

l in time-space region with a certain sensing power. Precisely, for

,

; for

,

; and for

,

.

For each lane

l, the estimated density

(or speed

) forms a matrix in the time-space region, and each row represents a road segment

s, and each column represents a time interval

h. Some entries (

or

) in the density matrix (or speed matrix) are missing. To fill the missing entries, many standard matrix completion methods can be used. Examples include naive imputation (imputing with the average values across all time intervals or across all cells), k-nearest neighbor (k-NN) imputation [76], and the singular-value decomposition (SVD)-based SoftImpute algorithm [77]. In the numerical experiments, the above-mentioned methods will be benchmarked using real-world data.
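Two of the completion methods named above can be sketched in NumPy, with missing cells encoded as NaN. This is an illustrative sketch rather than the implementation used in the experiments: the function names, the default regularization `lam`, and the stopping rule are assumptions, and `soft_impute` follows the basic iterative soft-thresholded SVD idea of SoftImpute [77] without the refinements of a production library.

```python
import numpy as np


def naive_impute(X):
    """Replace each NaN with the mean of the observed entries in its column.

    Assumes every column has at least one observed entry.
    """
    X = np.asarray(X, dtype=float)
    filled = X.copy()
    col_mean = np.nanmean(X, axis=0)
    rows, cols = np.where(np.isnan(X))
    filled[rows, cols] = col_mean[cols]
    return filled


def soft_impute(X, lam=0.1, n_iter=200, tol=1e-6):
    """Fill NaNs with a low-rank estimate via soft-thresholded SVD iterations."""
    X = np.asarray(X, dtype=float)
    observed = ~np.isnan(X)
    Z = np.where(observed, X, 0.0)  # initial guess: zeros in missing cells
    for _ in range(n_iter):
        # Keep observed entries and use the current estimate elsewhere.
        filled = np.where(observed, X, Z)
        U, sing, Vt = np.linalg.svd(filled, full_matrices=False)
        sing = np.maximum(sing - lam, 0.0)  # soft-threshold singular values
        Z_new = (U * sing) @ Vt
        change = np.linalg.norm(Z_new - Z) / max(np.linalg.norm(Z), 1e-12)
        Z = Z_new
        if change < tol:
            break
    # Observed entries are returned unchanged; only missing cells are filled.
    return np.where(observed, X, Z)
```

In this layout each row would be a road segment and each column a time interval, matching the matrix described above; larger values of `lam` push the estimate toward lower rank.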

3.6.2. Regression-Based Methods

The speed data can also be estimated by a regression-based model, given that the density

is fully estimated. We train a regression model

to estimate the speed from densities for lane

l, as presented in Equation (11).

where

represent the number of nearby lanes, time intervals and road segments considered in the regression model. The intuition behind the regression model is that the speed of a cell can be inferred by the densities of its neighboring cells. The choice of Equation (

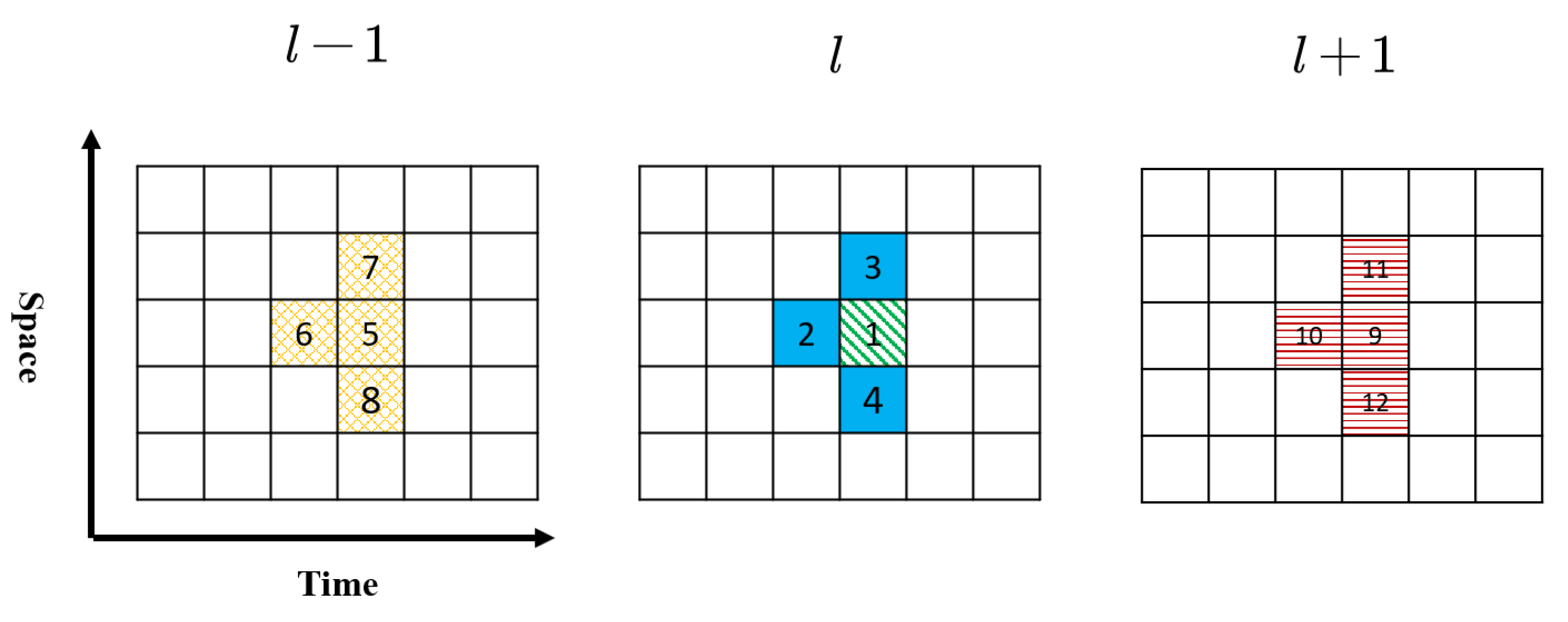

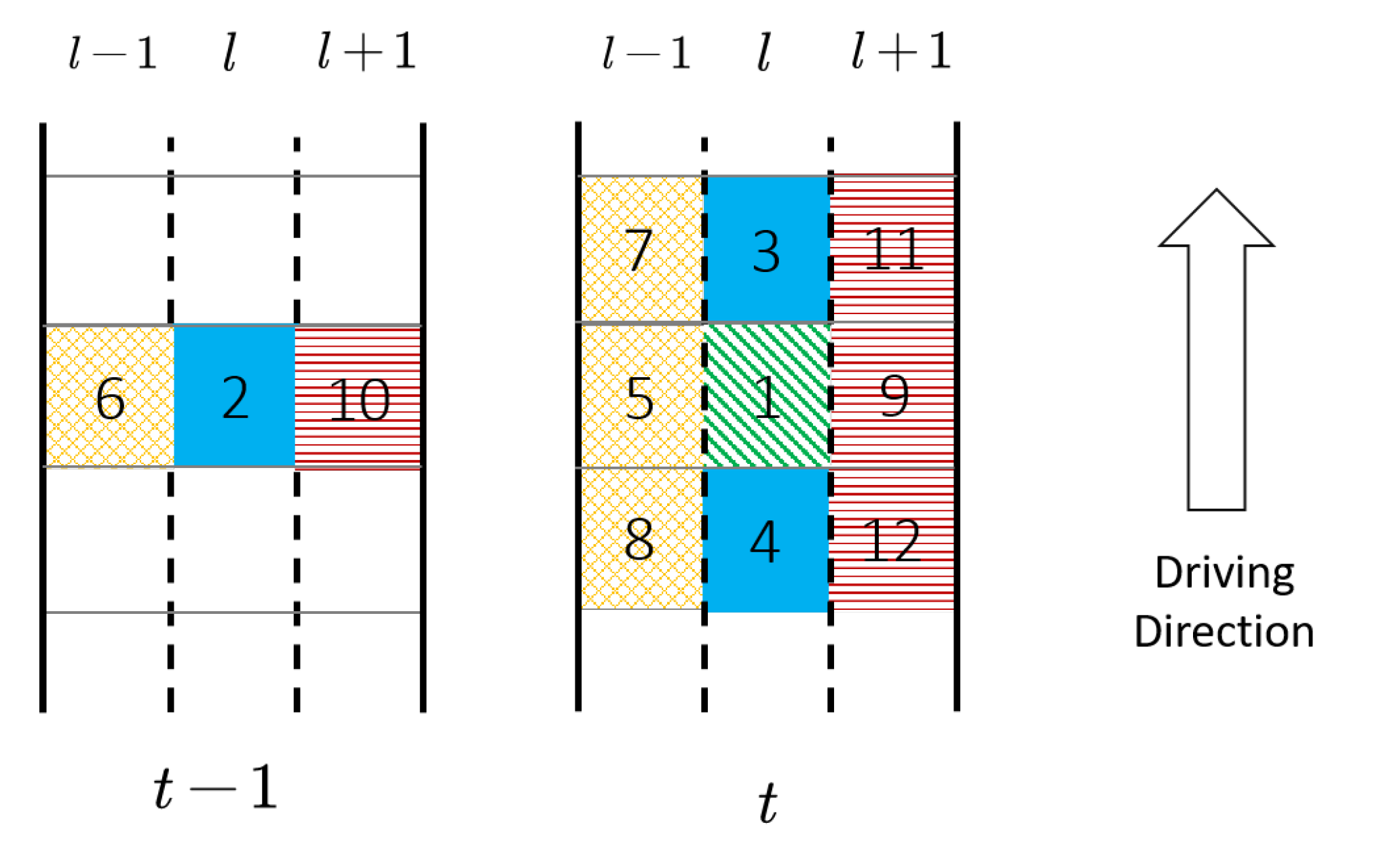

11) is inspired by the traffic flow theory (e.g., fundamental diagrams and car-following models) as the interactions between vehicles result in the road volume/speed. A specific example of

is the fundamental diagram [

78], which is formulated as

by setting

.

In this paper, we adopt a simplified function

presented in Figure 10. Suppose we want to estimate the speed for cell 1; there are 12 neighboring cells (including cell 1) considered as inputs. The regression methods adopted in this paper are Lasso [79] and random forests [80]. We also map the cells to the physical roads in time

t and

, as presented in Figure 11.

Figure 11 shows a three-lane road in time

t and

, and the numbers marked for each road segment exactly match the cell numbers in Figure 10.
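The regression idea (predicting the speed of a cell from the densities of neighboring cells) can be sketched as below. Several choices here are assumptions made only for illustration: the neighborhood `OFFSETS` of 3 lanes × 2 time intervals × 2 road segments is a hypothetical layout that happens to give 12 cells and is not necessarily the one in Figure 10, boundary cells are handled by clipping indices, and ordinary least squares is used as a plain stand-in for the Lasso and random forest models adopted in the paper.

```python
import itertools

import numpy as np

# Hypothetical neighborhood: (lane, time, segment) offsets, 3 x 2 x 2 = 12 cells.
OFFSETS = list(itertools.product((-1, 0, 1), (-1, 0), (0, 1)))


def neighbor_features(density, l, h, s, offsets=OFFSETS):
    """Densities of the cells around (l, h, s); indices are clipped at borders."""
    n_lane, n_time, n_seg = density.shape
    return [
        density[
            min(max(l + dl, 0), n_lane - 1),
            min(max(h + dh, 0), n_time - 1),
            min(max(s + ds, 0), n_seg - 1),
        ]
        for dl, dh, ds in offsets
    ]


def fit_predict_speed(density, speed):
    """Fill NaN speeds using a linear model on neighboring densities.

    density: fully estimated array of shape (lanes, intervals, segments).
    speed:   array of the same shape, NaN where speed is unobserved.
    """
    cells = list(np.ndindex(density.shape))  # C order, matches reshape(-1)
    A = np.array([neighbor_features(density, *c) + [1.0] for c in cells])
    y = speed.reshape(-1)
    seen = ~np.isnan(y)
    # Ordinary least squares as a stand-in for Lasso / random forests.
    coef, *_ = np.linalg.lstsq(A[seen], y[seen], rcond=None)
    filled = y.copy()
    filled[~seen] = A[~seen] @ coef
    return filled.reshape(speed.shape)
```

Restricting `offsets` to the cell itself reduces the model to a speed-density relation on a single cell, which is the sense in which a fundamental diagram is a special case of the regression.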

5. Numerical Experiments

In this section, we conduct numerical experiments with NGSIM data to examine the proposed TSE framework. All the experiments below are conducted on a desktop with an Intel Core i7-6700K CPU @ 4.00 GHz × 8, 2 × 16 GB of 2133 MHz RAM, and a 500 GB SSD; the programming language is Python 3.6.8.

5.1. Data and Experiment Setups

We use next generation simulation (NGSIM) data to validate the proposed framework. NGSIM data contain high-resolution vehicle trajectory data on different roads [83]. Our experiments are conducted on I-80, US-101 and Lankershim Boulevard, and overviews of the three roads are presented in Figure 12. NGSIM data are collected using a digital video camera, and the temporal resolution is 100 ms. Details of the three roads can be found in Alexiadis et al. [83] and He [84].

We assume that a random set of vehicles are AVs and the AVs can perceive the surrounding traffic conditions. Given the limited information collected by AVs, we estimate the traffic states using the proposed framework. We further compare the estimation results with the ground truth computed from the full vehicle trajectory data. The normalized root mean squared error (NRMSE) and two symmetric mean absolute percentage errors (SMAPE1, SMAPE2) are used to examine the estimation accuracy, as presented in Equation (12). SMAPE2 is considered a robust version of SMAPE1 [86]. All three measures are unitless.

where

z is the true vector,

is the estimated vector,

is the index of the vector, and

is the set of indices in vector

z and

. When comparing two matrices, we flatten the matrices into vectors and then conduct the comparison.
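For readers who want to reproduce the accuracy measures, the sketch below uses commonly seen forms of NRMSE and of two SMAPE variants. These forms are assumptions: Equation (12) is not reproduced in this text, and its normalization may differ. The sketch also assumes strictly positive true and estimated values so that no denominator is zero.

```python
import math


def flatten(matrix):
    """Flatten a list-of-lists matrix into a single list (row by row)."""
    return [value for row in matrix for value in row]


def nrmse(z, z_hat):
    """Root of the squared error sum, normalized by the norm of the truth."""
    num = math.sqrt(sum((a - b) ** 2 for a, b in zip(z, z_hat)))
    den = math.sqrt(sum(a ** 2 for a in z))
    return num / den


def smape1(z, z_hat):
    """Mean of per-entry symmetric relative errors."""
    return sum(abs(a - b) / (a + b) for a, b in zip(z, z_hat)) / len(z)


def smape2(z, z_hat):
    """Ratio of summed absolute errors to summed magnitudes.

    It avoids per-entry division, which makes it less sensitive to
    entries that are close to zero.
    """
    num = sum(abs(a - b) for a, b in zip(z, z_hat))
    den = sum(a + b for a, b in zip(z, z_hat))
    return num / den
```

Two matrices can be compared by calling, for example, `nrmse(flatten(Z), flatten(Z_hat))`.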

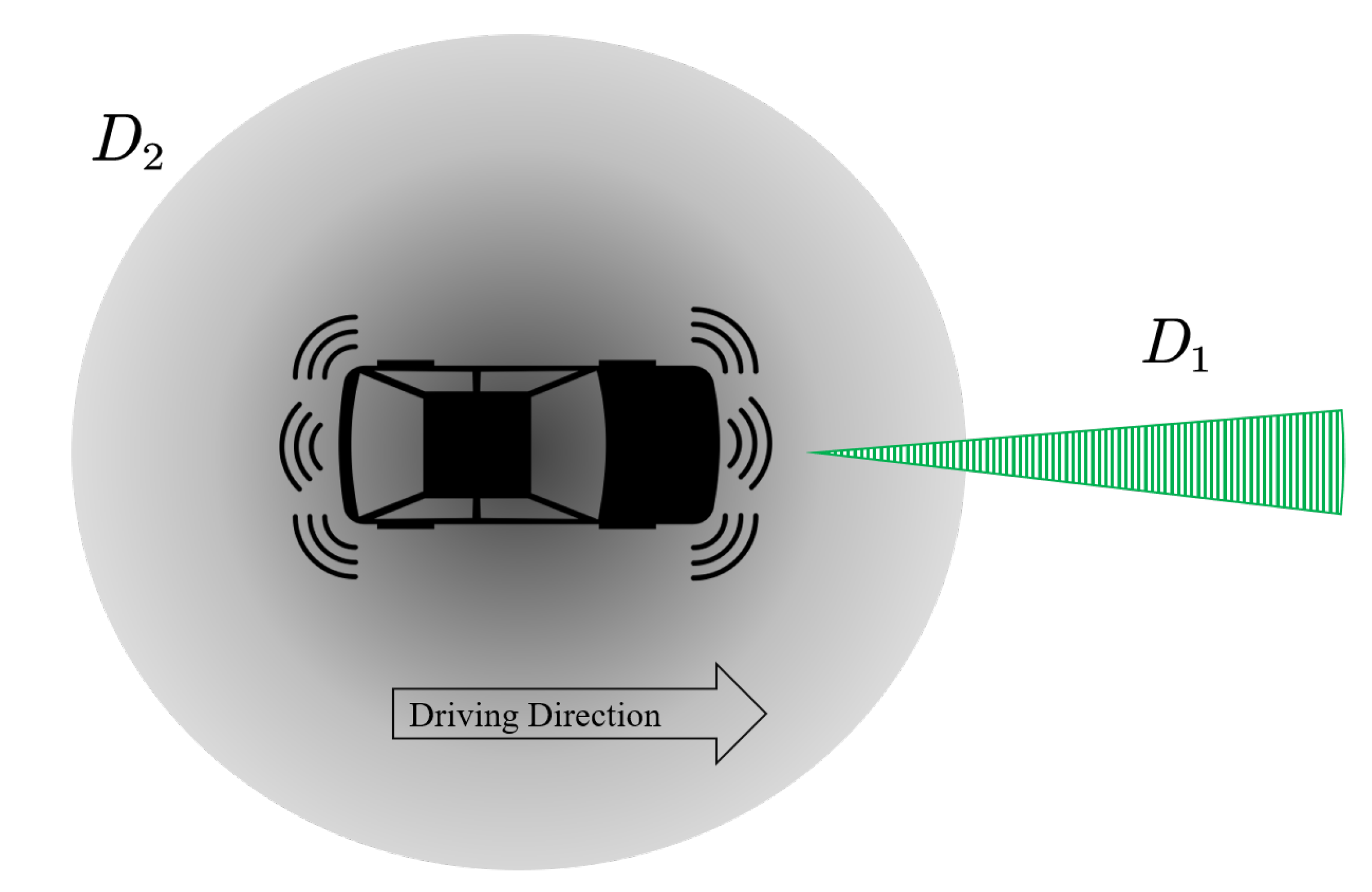

Here, we describe all the factors that affect the estimation results. The market penetration rate denotes the portion of AVs in the fleet. In the experiments, we assume that AVs are uniformly distributed in the fleet. The detection area

is a ray fulfilled by LRR and

is a circle fulfilled by LiDAR. We assume that LiDAR has a detection range (radius of the circle) and it might also oversee a vehicle with a certain probability (referred to as missing rate). The AVs can be at one level of perception, as discussed in

Section 2. The sampling rate of data center can be different. In addition, different data-driven estimation methods are used to estimate the density and speed, as presented in

Section 3.6. We define LR1 and LR2 as Lasso regressions, and RF1 and RF2 as random forests regressions. The number

1 means that only cells 1 to 4 are used as inputs, while the number

2 means that all 12 cells in

Figure 10 are used as inputs. SI denotes the

SoftImpute, KNN denotes the k-nearest neighbor imputation, and NI denotes the naive imputation by simply replacing missing entries with the mean of each column.
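Of the three imputation methods, NI is the simplest and can be sketched in a few lines of Python. This is a minimal illustration rather than the implementation used in the experiments; the function name, the use of `None` to mark unobserved entries, and the fallback value of 0.0 for a column with no observations are assumptions.

```python
def naive_impute(matrix):
    """Replace missing entries (None) with the mean of the observed entries in the same column."""
    n_cols = len(matrix[0])
    col_means = []
    for j in range(n_cols):
        observed = [row[j] for row in matrix if row[j] is not None]
        # Assumption: a column without any observation falls back to 0.0.
        col_means.append(sum(observed) / len(observed) if observed else 0.0)
    return [
        [col_means[j] if value is None else value for j, value in enumerate(row)]
        for row in matrix
    ]

# Hypothetical partially observed density matrix.
observed_density = [
    [10.0, None, 30.0],
    [None, 22.0, 34.0],
    [14.0, 26.0, None],
]
print(naive_impute(observed_density))
```

Because NI ignores the structure of the time-space region, it serves as a simple reference point for KNN and SI.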

Baseline setting: the market penetration rate of AVs is . The detection range of LRR is 150 m, and the detection range of LiDAR is 50 m with missing rate. The level of perception is , and the speed is detected without any noise. The sampling rate of data center is 1 Hz. SI is used to estimate density and LR2 is used to estimate speed. We set and .

5.2. Basic Results

We first run the proposed estimation method with the baseline setting. The estimation method takes around 7 minutes to estimate all three roads, and the most time-consuming parts are the information aggregation in the data center (discussed in

Section 2.4) and the computation of Equation (

7). The estimation accuracy is computed by averaging the NRMSE, SMAPE1 and SMAPE2 over all lanes, and the results are presented in

Table 5. In addition to the unitless measures, we also include the mean absolute error (MAE) in the table.

In general, the estimation method yields accurate estimates on the highways (I-80 and US-101), while it underperforms on the complex arterial road (Lankershim Boulevard). The estimation accuracy of speed is always higher than that of density, because density estimation requires every vehicle to be sensed, while speed estimation only needs a small fraction of vehicles to be sensed [

87].

Estimation accuracy on different lanes. We then examine the performance of the proposed method on each lane separately, and the estimation accuracy of each lane is summarized in

Table 6.

One can read from

Table 6 that the proposed method performs similarly on most lanes. One interesting observation is that the proposed method performs well on Lane 6 of I-80, Lane 5 of US-101 and Lane 1 of Lankershim Blvd, all of which merge with ramps. This implies that the proposed method has the potential to work well on merging intersections.

The proposed method performs differently on lanes that are near the edge of the road. For example, the proposed method yields the worst density estimation and the best speed estimation on Lane 1 of I-80, which is an HOV lane. The vehicle headway is relatively large on the HOV lane, hence estimating density is more challenging given the limited detection range of LiDAR. In contrast, the speed on the HOV lane is relatively stable, making the speed estimation easy. In addition, the estimation accuracy on Lane 4 of Lankershim Blvd is low, as a result of the physical discontinuity of the lane.

One noteworthy point is that the estimation accuracy also depends on the traffic conditions. For example, traffic conditions on the HOV lane of I-80 tend to be free-flow and low-density, so the estimation accuracy differs from that of the other lanes, which tend to be dense and congested.

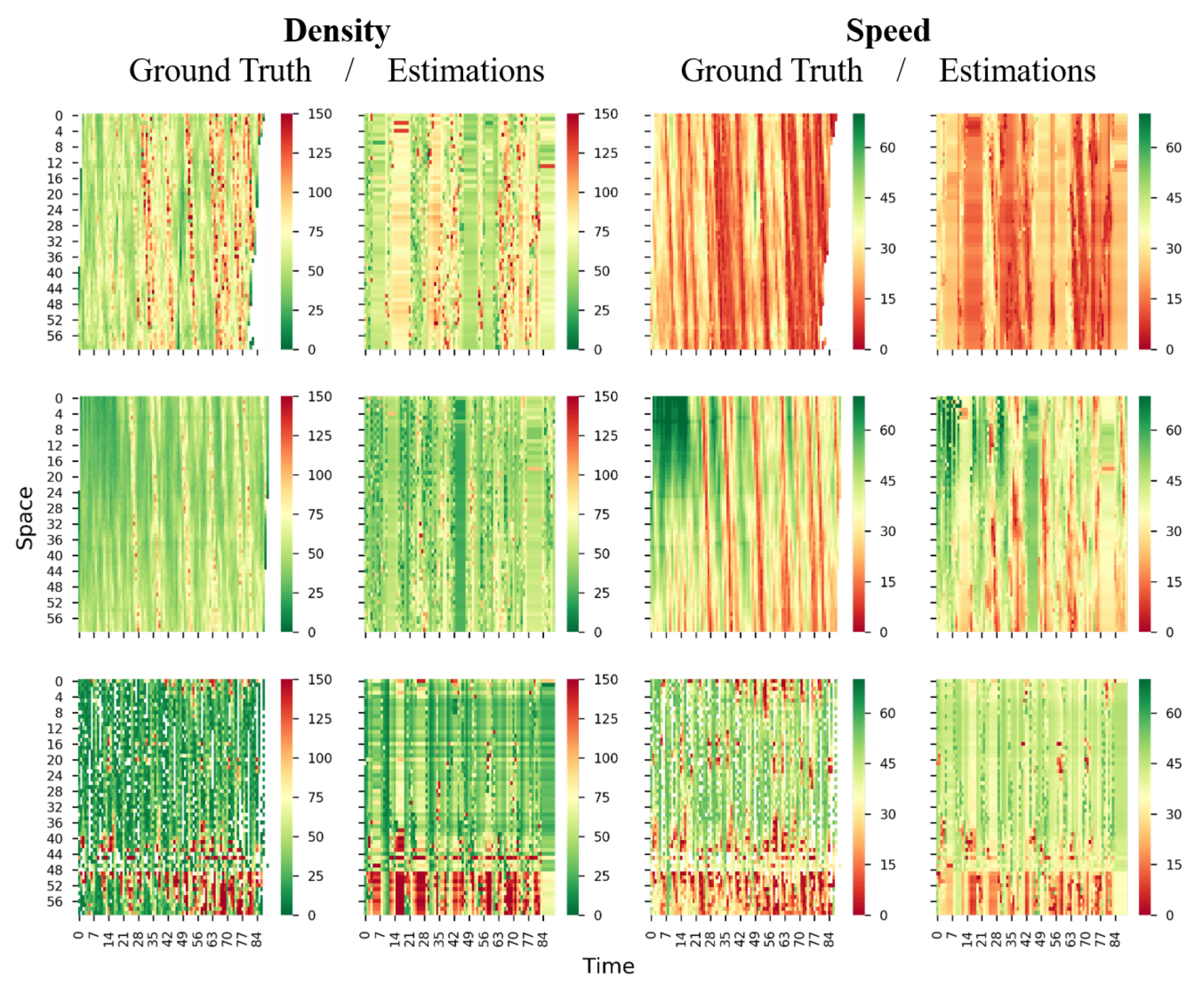

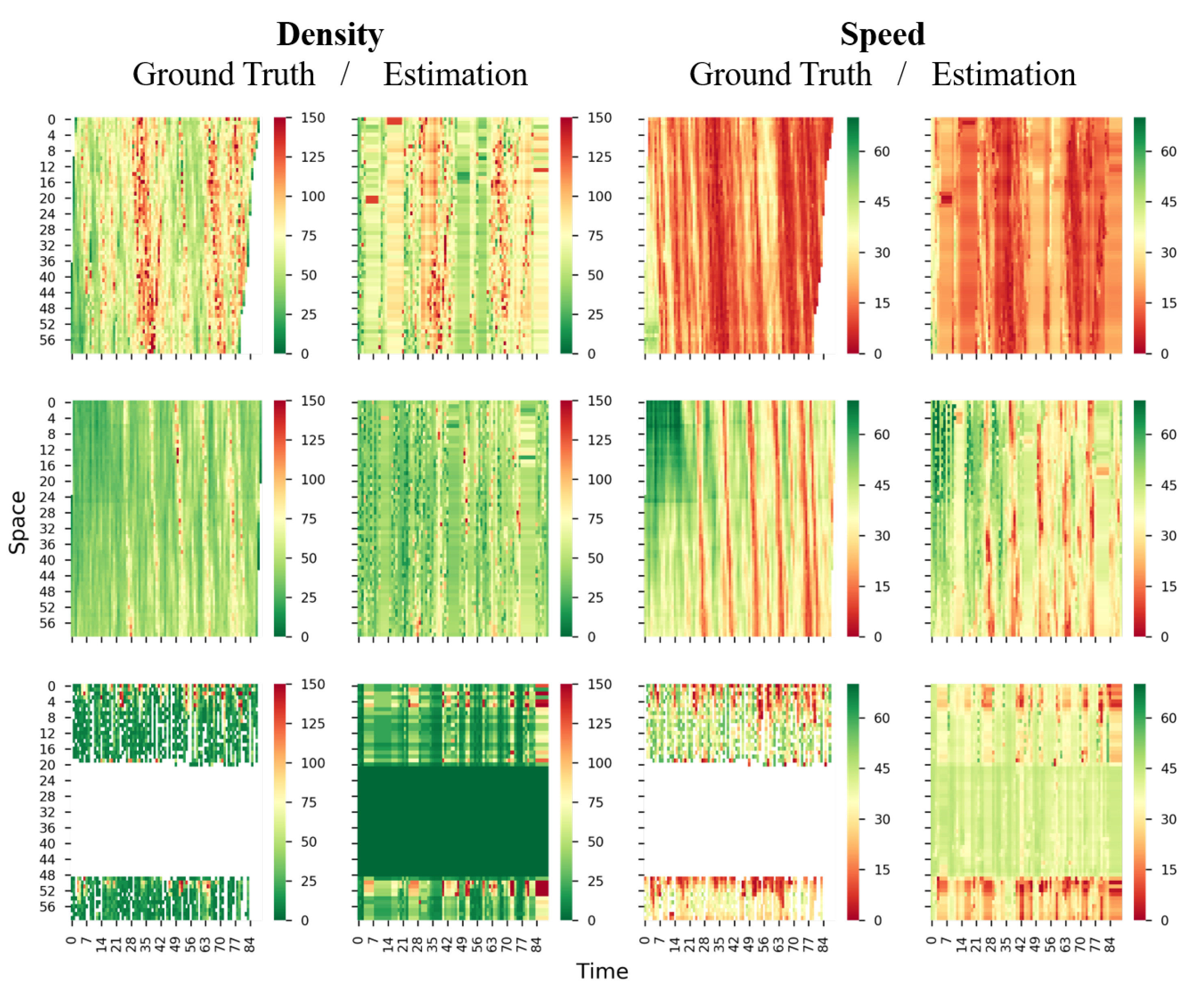

To visually inspect the estimation accuracy, we plot the true and estimated density and speed in the time-space region for Lane 2 and Lane 4 of all three roads in

Figure 13 and

Figure 14. It can be seen that the estimated density and speed resemble the ground truth, even when the congestion is discontinuous in the time-space region (see Lankershim Blvd in

Figure 13). Again, Lane 4 of Lankershim Blvd is physically discontinuous, hence a large block of entries is entirely missing in the time-space region (see the third row of

Figure 14), and this block-wise missingness may affect the proposed method and increase the estimation errors.

Effects of Densities on Speeds in the Regression-Based Models

We use the LR2 method to estimate the speed. After fitting the LR2 model, we look at the fitted regression coefficients and interpret the coefficients from the perspective of traffic flow theories. In particular, we select Lane 2 on US-101 and summarize the fitted coefficients in

Table 7. The regression coefficients for other lanes and networks can be found in the supplementary material.

The R-squared value for Lane 2 on US-101 is 0.832, indicating that the regression model is fairly accurate. From

Table 7, one can see that the

Intercept is positive and it represents the free flow speed when the density is zero. Coefficients for

x1 to

x12 are all negative with high confidence, and this implies that higher density generally yields lower speed.

Recall

Figure 10; suppose we want to estimate the speed for cell 1, we refer to cell

as the surrounding cells in the current lane and cell

as the surrounding cells in the nearby lanes. The coefficients of

x1 to

x4 are the most negative, indicating that the densities of the surrounding cells in the current lane have the highest impact on the speed. The densities of the surrounding cells in the nearby lanes also have a negative impact on the speed, but with a smaller magnitude.
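The sign pattern described above can be illustrated on synthetic data. The following is a minimal sketch, not the authors' implementation: it fits a Lasso regression by coordinate descent to hypothetical data in which the speed falls linearly with the (normalized) densities of 12 surrounding cells. The free-flow speed of 30, the true coefficients, the noise level, the penalty `alpha`, and all function names are assumptions made for illustration only.

```python
import random

def soft_threshold(value, threshold):
    """Shrink a value toward zero by the threshold (the Lasso proximal step)."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0

def fit_lasso(X, y, alpha, n_iter=50):
    """Coordinate descent for (1 / 2n) * ||y - b - Xw||^2 + alpha * ||w||_1."""
    n, p = len(X), len(X[0])
    x_mean = [sum(row[j] for row in X) / n for j in range(p)]
    y_mean = sum(y) / n
    # Center the data so that the intercept can be recovered afterwards.
    cols = [[row[j] - x_mean[j] for row in X] for j in range(p)]
    col_sq = [sum(v * v for v in col) for col in cols]
    w = [0.0] * p
    resid = [yi - y_mean for yi in y]  # residual for w = 0
    for _ in range(n_iter):
        for j in range(p):
            col = cols[j]
            # Correlation of column j with the residual that excludes feature j.
            rho = sum(c * r for c, r in zip(col, resid)) + col_sq[j] * w[j]
            new_wj = soft_threshold(rho, alpha * n) / col_sq[j]
            delta = new_wj - w[j]
            if delta != 0.0:
                resid = [r - c * delta for r, c in zip(resid, col)]
                w[j] = new_wj
    intercept = y_mean - sum(m * wj for m, wj in zip(x_mean, w))
    return intercept, w

# Hypothetical data: cells 1 to 4 (current lane) affect the speed more strongly
# than cells 5 to 12 (nearby lanes).
random.seed(0)
true_w = [-4.0] * 4 + [-1.0] * 8
free_flow_speed = 30.0
X = [[random.random() for _ in range(12)] for _ in range(400)]
y = [
    free_flow_speed + sum(wj * xj for wj, xj in zip(true_w, row)) + random.gauss(0.0, 0.5)
    for row in X
]

intercept, w = fit_lasso(X, y, alpha=0.01)
print("intercept:", round(intercept, 2))
print("coefficients:", [round(wj, 2) for wj in w])
```

In this synthetic setting the fitted intercept plays the role of the speed at zero density, and the coefficients of the first four cells are larger in magnitude than the rest, mirroring the interpretation given for Table 7.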

5.3. Comparing TSE Methods with Different Types of Probe Vehicles (PVs)

In this section, we compare the proposed method with other TSE methods using different types of PVs. Consistent with

Table 1, we consider the following three types of PVs:

All three methods are implemented with the baseline setting, and in each case 5% of the vehicles act as conventional PVs, PVs with spacing measurement, or AVs. The estimation accuracy in terms of SMAPE1 is presented in

Figure 15.

One can see that, by using more information collected by AVs, the proposed method outperforms the other TSE methods using different PVs for both speed and density estimation. This experiment further highlights the great potential of using AVs for TSE.

5.4. Comparing Different Algorithms

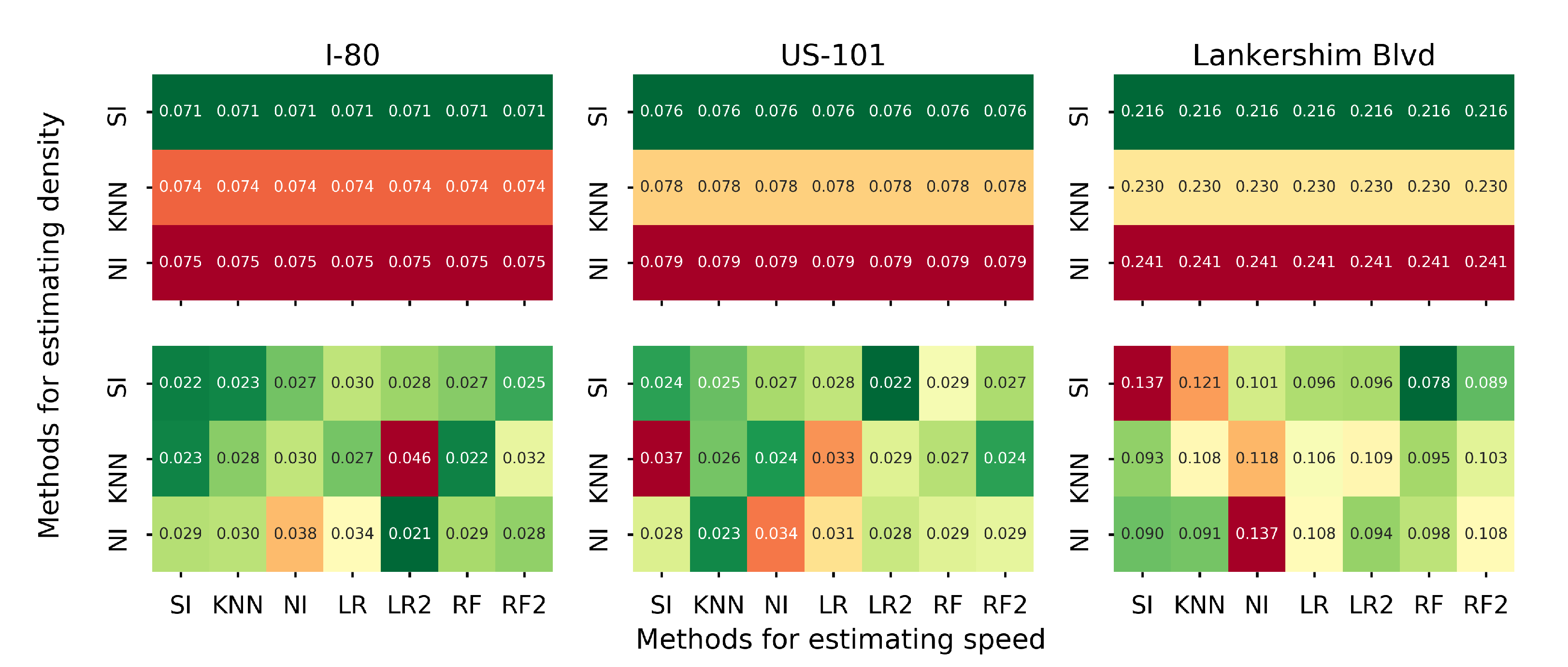

In this section, we examine different methods in estimating density and speed. Recall in

Section 3.6 that the matrix completion-based methods can estimate both density and speed, while the regression-based methods can only estimate the speed. We run the proposed estimation method with different combinations of estimation methods for density and speed, and the rest of the settings are the same as the baseline setting. To be precise, three methods are used to estimate density: naive imputation (NI), k-nearest neighbor imputation (KNN) and

SoftImpute (SI). Seven methods are used to estimate speed: NI, KNN, SI, LR1, LR2, RF1 and RF2. We plot the heatmap of SMAPE1 for each road separately, as presented in

Figure 16.

The speed estimation does not affect the density estimation, as the density estimation is conducted first. SI always outperforms KNN and NI for density estimation. Different combinations of algorithms perform differently on each road. We use A-B to denote the method that uses A for density estimation and B for speed estimation. NI-LR2 on I-80, SI-LR2 on US-101 and SI-RF on Lankershim Blvd outperform the rest of the methods in terms of speed estimation. Overall, SI-LR2 generates accurate estimates on all three roads.

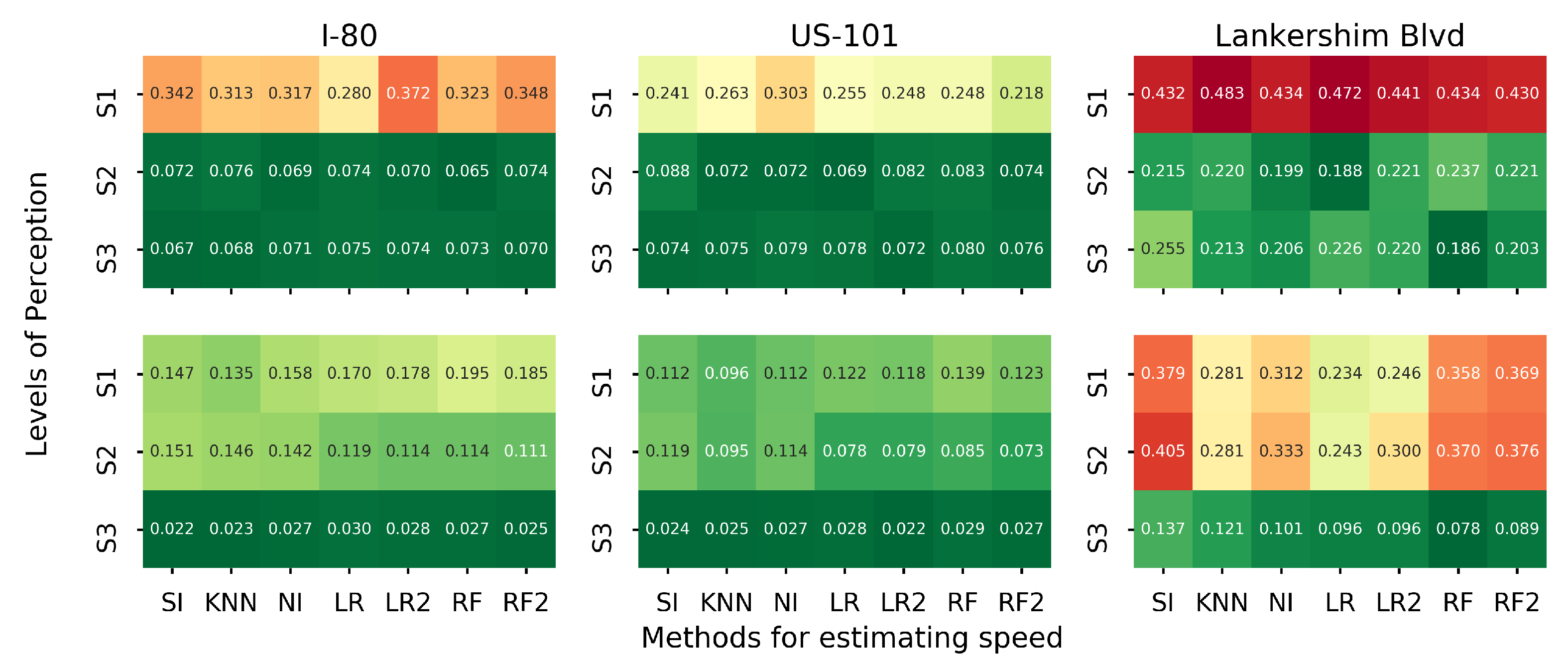

5.5. Impact of Sensing Power

We analyze the impact of sensing power of AVs on the estimation accuracy. Recalling

Section 2.2 and

Section 3.5, we consider three levels of perception for AVs. Based on Equations (

5)–(

7), more entries in the time-space region are directly observed when the perception level increases. We run the proposed estimation method with different perception levels and different methods for speed estimation. Other settings are the same as the baseline setting. The heatmap of SMAPE1 for each road is presented in

Figure 17.

As shown in

Figure 17, the proposed method performs the best on US-101 and the worst on Lankershim Blvd. With 5% market penetration rate, at least S2 is required for I-80 and US-101 to obtain an accurate traffic state estimation. Similarly, S3 is required for Lankershim Blvd to ensure the estimation quality. Later, we will discuss the impact of market penetration rate on the estimation accuracy under different perception levels.

The estimation accuracy improves for all speed estimation algorithms and all three roads when the perception level increases. Different speed estimation algorithms perform differently on different roads within the same perception level. For example, in S2, the imputation-based methods outperform the regression-based method on I-80 and US-101, while the Lasso regression outperforms the rest on Lankershim Blvd. In S3, all the density estimation methods perform similarly on I-80 and US-101, while the regression-based method significantly outperforms the imputation-based methods on Lankershim Blvd in terms of density estimation.

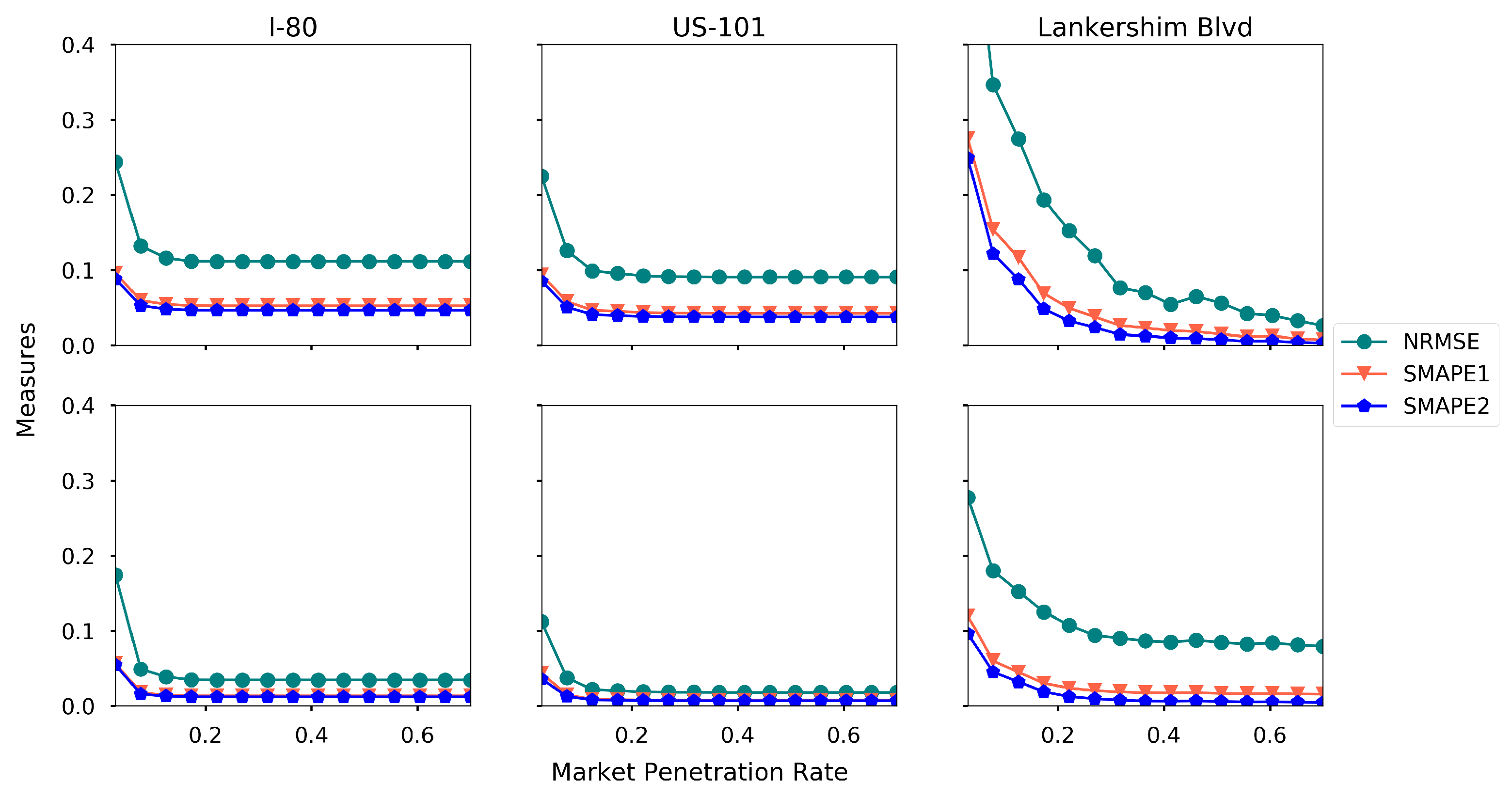

5.6. Impact of AV Market Penetration Rate

To examine the impact of AV market penetration rate, we run the proposed method with different market penetration rates ranging from

to

, and the rest of the settings are the baseline settings. The experimental results are presented in

Figure 18.

Generally, the estimation accuracy increases when the AV market penetration rate increases. Moreover,

penetration rate is a tipping point for an accurate estimation for I-80 and US-101, while Lankershim Blvd requires a larger penetration rate. To further investigate the impact of the market penetration rate under different levels of perception, we run the experiment with different penetration rates under the three levels of perception, and the results are presented in

Figure 19.

One can read that S2 and S3 yield the same density estimation, as vehicle detection is sufficient for density estimation. Better speed estimation can be achieved with S3, since more vehicles are tracked and their speeds are measured. Again,

Figure 19 indicates that at least S2 is required for I-80 and US-101 to obtain an accurate traffic state estimation, and S3 is required for Lankershim Blvd to ensure the estimation quality. For S1 and S2 on Lankershim Blvd, the estimation accuracy for speed decreases when the market penetration rate increases, probably due to overfitting of the LR2 method.

We remark that AVs in S1 level are equivalent to connected vehicles with spacing measurements [

46], and hence

Figure 19 also presents a comparison between the proposed framework and the existing method. The results demonstrate that by using more information collected by AVs, the proposed framework outperforms the existing methods significantly when the market penetration rate is low.

In addition to the above findings, another interesting finding is that when the market penetration rate is low, the regression methods usually outperform the matrix completion-based methods, while the matrix completion-based methods outperform the regression-based methods when the market penetration rate is high.

5.7. Platooning

In the baseline setting, AVs are uniformly distributed in the fleet, while many studies suggest that a dedicated lane for platooning can further enhance mobility [

88]. In this case, AVs are not uniformly distributed on the road. To simulate the dedicated lane, we view all vehicles on Lane 1 of I-80, Lane 1 of US-101, and Lanes 1 and 2 of Lankershim Blvd as AVs, and all the vehicles on the other lanes as conventional vehicles. For comparison, we also construct a scenario in which the same number of vehicles are treated as AVs but are uniformly distributed on the roads. We run the proposed method on both scenarios with the rest of the settings being the baseline setting, and the results are presented in

Table 8.

As can be seen from

Table 8, the distribution of AVs has a marginal impact on the estimation accuracy. The proposed method performs similarly under the dedicated-lane and uniform-distribution scenarios for all three roads, which is probably because the detection range of LiDAR is large enough to cover the width of the roads.

5.8. Effects of Sensing Errors

As the object detection and tracking depend on the accuracy of sensors and algorithms, the ability of AVs in sensing varies. In this section, we study the effects of sensing errors on the estimation accuracy, and the sensing errors can be categorized into detection missing rate, speed detection noise, and distance measurement errors.

Detection missing rate. The AVs might overlook a certain vehicle during the detection, and we use the missing rate to denote the probability of this happening. We examine the impact of the missing rate by running the proposed estimation method with different missing rates ranging from 0.01 to 0.9, and the rest of the settings are the baseline settings. We plot the estimation accuracy for each road separately, as presented in

Figure 20.

From

Figure 20, one can read that the estimation error increases when the missing rate increases for all three roads. The density estimation is much more sensitive to the missing rate than the speed estimation. This is because overlooking vehicles has a significant impact on density estimation, while speed estimation only needs a small fraction of vehicles to be observed.
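A minimal sketch of how the missing rate could be simulated is given below, assuming that each vehicle within range is overlooked independently with the same probability. The function name, the independence assumption, and the single-AV setting are illustrative and are not taken from the experiments.

```python
import random

def detect_vehicles(vehicles_in_range, missing_rate, rng):
    """Return the detected vehicles.

    Assumption: each vehicle is overlooked independently with
    probability `missing_rate`.
    """
    return [v for v in vehicles_in_range if rng.random() >= missing_rate]

rng = random.Random(0)
vehicles = list(range(10_000))  # hypothetical vehicle IDs within LiDAR range
for rate in (0.0, 0.3, 0.9):
    seen = detect_vehicles(vehicles, rate, rng)
    # The detected share shrinks roughly like (1 - rate), so a count-based
    # density is biased downward, whereas a mean speed needs only a few samples.
    print(rate, len(seen) / len(vehicles))
```

In a real deployment several AVs may observe the same cell, which would partly compensate for individual misses; the sketch ignores that effect.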

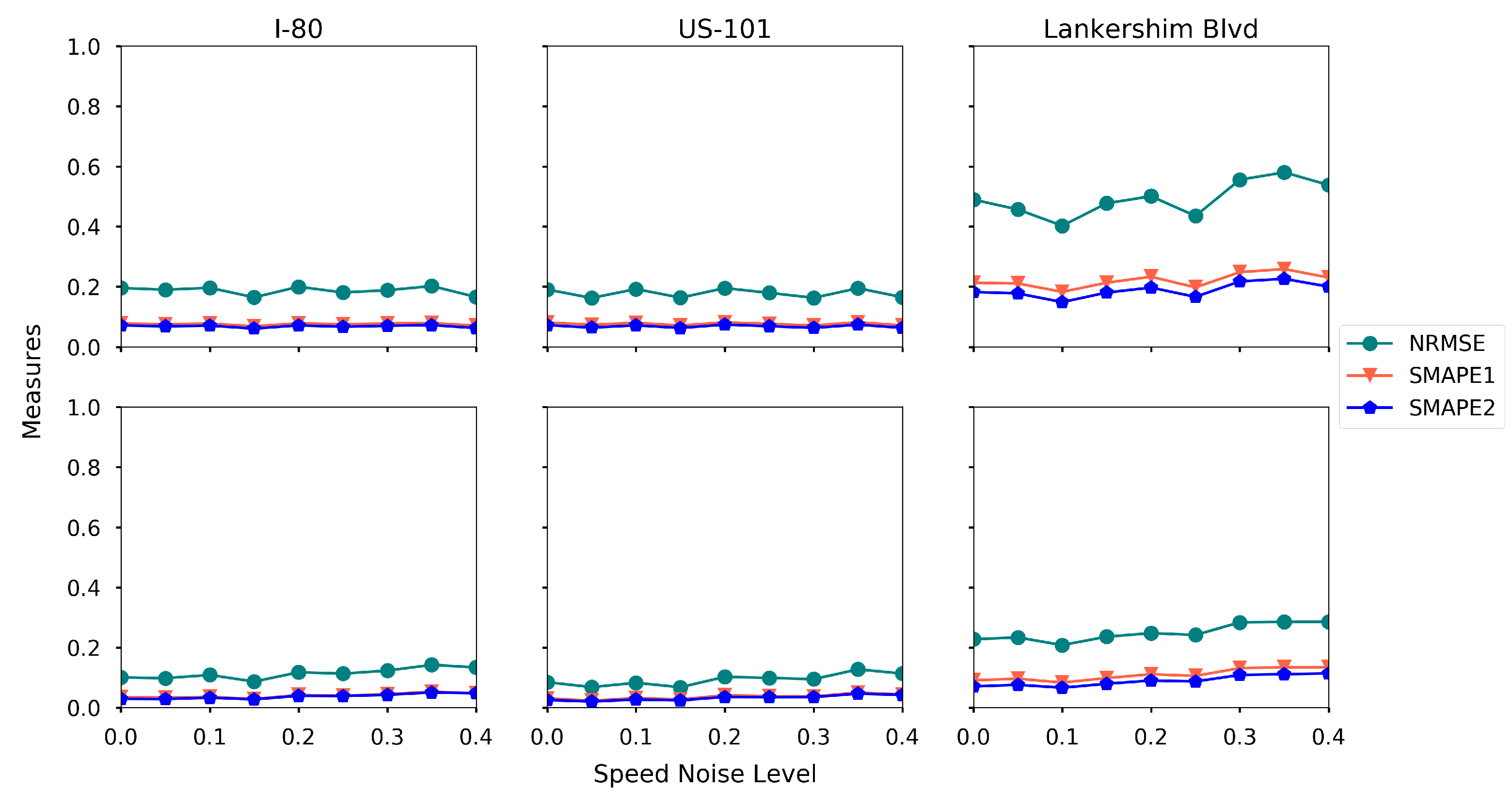

Noise level in speed detection. We further look at the impact of noise in speed detection. We assume that the speed of a vehicle is detected with noise, and the noise level is denoted as

. If the true vehicle speed is

, we sample

from the uniform distribution

, and then the detected vehicle speed is assumed to be

. The reason that we define this noise is that the observation noise is usually proportional to the scale of the observation. We run the proposed estimation method by sweeping

from

to

, and the rest of the settings are baseline settings. The estimation accuracy is presented in

Figure 21.

Surprisingly, the proposed method is robust to the noise in speed detection, as the estimation errors remain stable when the speed noise level increases. One explanation is that the speed of each cell is computed by averaging the detected speeds of multiple vehicles, so the detection noise largely cancels out by the law of large numbers.
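The averaging argument can be checked with a small simulation. The sketch below is written under stated assumptions: the symbols of the noise model are not legible in this text, so the code assumes a multiplicative form in which the detected speed equals the true speed times (1 + ε), with ε drawn uniformly from [-noise_level, noise_level]. This matches the statement that the noise is proportional to the scale of the observation, but the exact form and the function names are hypothetical.

```python
import random

def detected_speed(true_speed, noise_level, rng):
    """Assumed noise model: multiplicative uniform noise around the true speed."""
    eps = rng.uniform(-noise_level, noise_level)
    return true_speed * (1.0 + eps)

def cell_mean_speed(true_speed, n_vehicles, noise_level, rng):
    """Average the noisy speeds of all vehicles observed in one cell."""
    speeds = [detected_speed(true_speed, noise_level, rng) for _ in range(n_vehicles)]
    return sum(speeds) / len(speeds)

rng = random.Random(0)
true_speed = 20.0  # hypothetical cell speed in m/s
for n in (1, 10, 100, 1000):
    error = abs(cell_mean_speed(true_speed, n, 0.2, rng) - true_speed)
    # The absolute error of the cell average tends to shrink as n grows.
    print(n, error)
```

Because the assumed noise has zero mean, the cell average converges to the true speed as more vehicles are observed, which is consistent with the stable errors reported above.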

Distance measurement errors. When AVs detect or track certain vehicles, they measure the distance between themselves and the detected/tracked vehicles in order to locate them. The distance measurement is conducted either by the sensors (e.g., LiDAR) or by computer vision algorithms, and hence the measurement might incur errors.

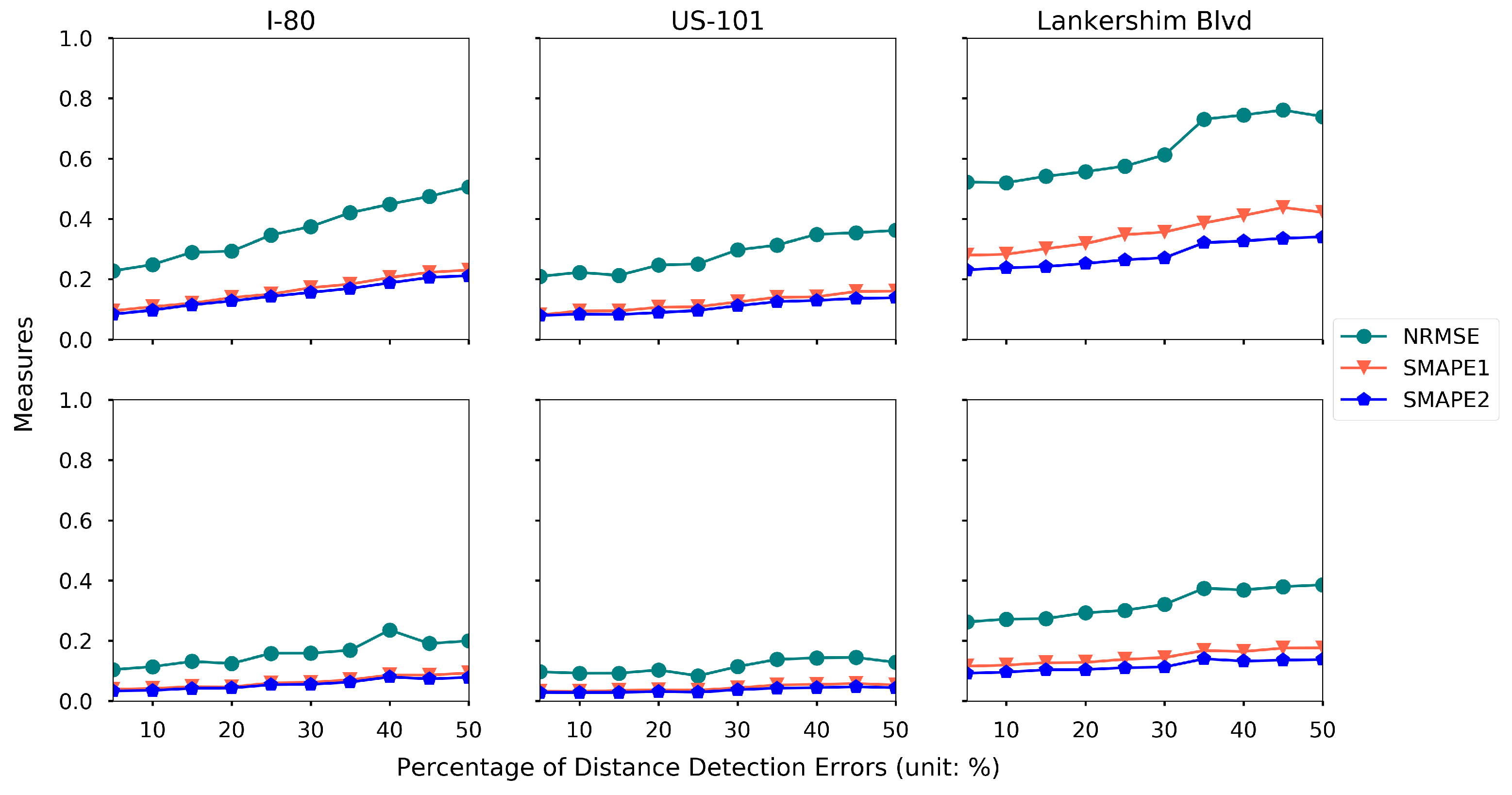

We categorize the distance measurement errors into two components: (1) frequency of the error happening, which is quantified by the percentage of the distance measurement that are associated with an error; (2) magnitude of the error, which is quantified by the number of cells that are offset from the true cell. For example, we assume that the distance measurement is and 5 cells, that means of the distance measures are associated with an error, and the detected location is at most 5 cells away from the true cell (the cell in which the detected vehicle is actually located).
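The two components can be combined into a simple perturbation of the cell index, sketched below. This is an illustration under assumptions: the offset is drawn uniformly from the nonzero integers within the maximum magnitude, in either direction, and the reported cell is clamped to the road segment. The function name, the 10% error frequency, and the segment length of 100 cells are hypothetical.

```python
import random

def observed_cell(true_cell, error_rate, max_offset, n_cells, rng):
    """Return the cell index reported for a vehicle located in `true_cell`."""
    if rng.random() >= error_rate:
        return true_cell  # measurement without error
    # Assumption: a nonzero offset drawn uniformly from [-max_offset, max_offset].
    offsets = [k for k in range(-max_offset, max_offset + 1) if k != 0]
    shifted = true_cell + rng.choice(offsets)
    # Keep the reported cell inside the road segment.
    return min(max(shifted, 0), n_cells - 1)

rng = random.Random(0)
# Hypothetical setting: 10% of measurements are wrong, by at most 5 cells.
reports = [observed_cell(50, 0.1, 5, 100, rng) for _ in range(1000)]
wrong = sum(1 for cell in reports if cell != 50)
print(wrong / len(reports))
```

Separating frequency from magnitude in this way makes it possible to vary one while holding the other fixed.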

To quantify the effects of the distance measurement errors, two experiments are conducted. Keeping the other settings the same as the baseline setting, we set the magnitude of the distance measurement error to 5 cells, and then we vary the percentage of the errors from

to

. The results are presented in

Figure 22. Similarly, we set the percentage of the errors to be

and vary the magnitude from 1 cell to 9 cells, and the results are presented in

Figure 23.

Both figures indicate that increasing the frequency or the magnitude of the distance measurement errors reduces the estimation accuracy. The proposed framework is more robust to the magnitude of the measurement error, while it is more sensitive to the frequency of the measurement error.

5.9. Sensitivity Analysis

In this section, we examine the sensitivity of the results to other important factors in our experiments (e.g., LiDAR detection range, sampling rate, and discretization size).

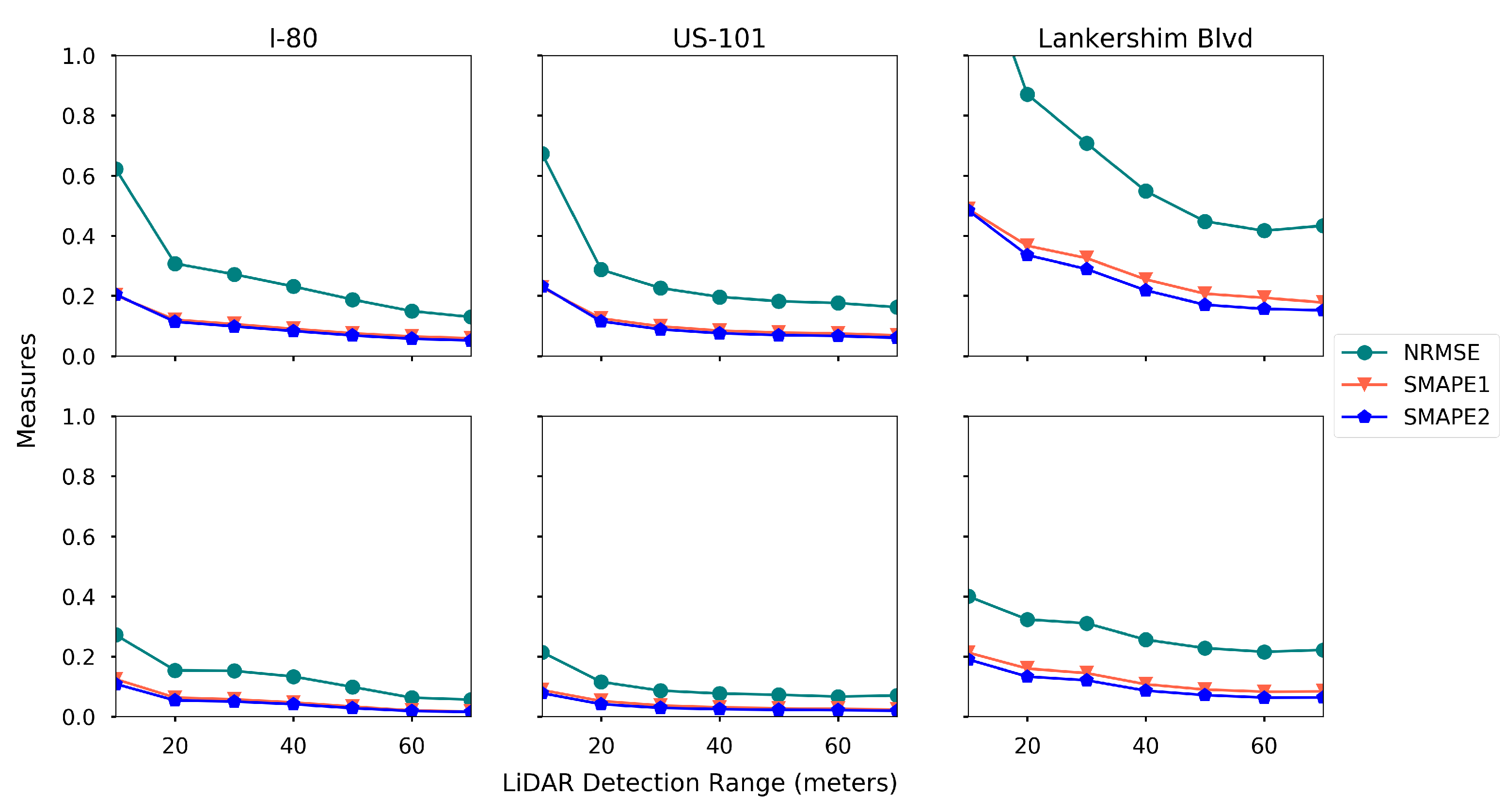

LiDAR detection range. The detection range of LiDAR varies in a wide range for different brands [

89]. We run the proposed estimation method using different detection ranges from 10 m to 70 m, and the rest of the settings are the baseline settings. The estimation accuracy for each road is presented in

Figure 24.

One can read that the estimation error decreases for both density and speed when the detection range increases. The gain in estimation accuracy becomes marginal when the detection range is large. For example, when the detection range exceeds 40 m on US-101, the improvement of the estimation accuracy is negligible. Another interesting observation is that, on Lankershim Blvd, even a 70-m detection range cannot yield a good density estimation with a 5% market penetration rate.

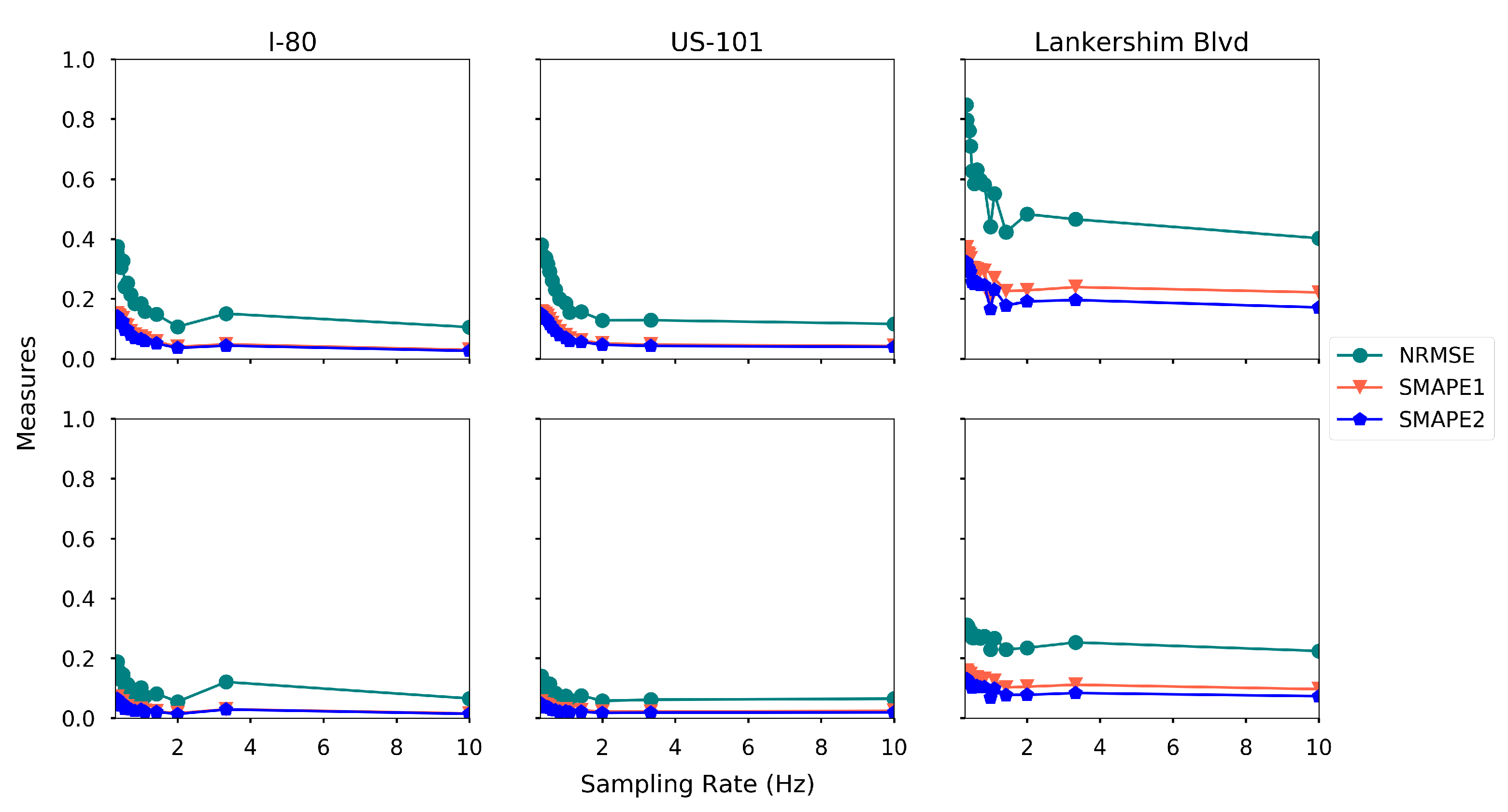

Sampling rate. Recall that the sampling rate denotes the frequency of the message (which contains the location/speed of itself and detected vehicles) sent to the data center, as discussed in

Section 2.4. When the sampling rate is low, we conjecture that the data center receives fewer messages, which increases the estimation error. To verify this conjecture, we run the proposed estimation method with different sampling rates ranging from 0.3 Hz to 10 Hz, and the rest of the settings are the baseline settings. The estimation accuracy on each road is plotted in

Figure 25.

The estimation accuracy increases when the sampling rate increases for all three roads, as expected. The density estimation is more sensitive to the sampling rate than the speed estimation. This is probably because the density changes dramatically in time-space region, while the speed is relatively stable.

Different discretization sizes. In this section, we demonstrate how the different discretization sizes affect the estimation accuracy.

and

in the baseline setting, and we change that to

and

. The other settings remain the same, and the comparison results are presented in

Table 9.

One can see that a larger discretization size yields better estimation accuracy, because the speed and density vary more smoothly over larger cells. This result also suggests that finer-grained TSE is more challenging and hence requires more coverage of the observation data. A proper discretization size should be chosen based on the requirements for the estimation resolution and the available data coverage.

6. Conclusions

This paper proposes a high-resolution traffic sensing framework with probe autonomous vehicles (AVs). The framework leverages the perception power of AVs to estimate the fundamental traffic state variables, namely, flow, density and speed, and the underlying idea is to use AVs as moving observers to detect and track the vehicles surrounding them. We discuss the potential usage of each sensor mounted on AVs, and categorize the sensing power of AVs into three levels of perception. The powerful sensing capabilities of those probe AVs enable a data-driven traffic sensing framework, which is then rigorously formulated. The proposed framework consists of two steps: (1) direct observation of the traffic states using AVs; (2) data-driven estimation of the unobserved traffic states. In the first step, we define the direct observations under different perception levels. The second step is done by estimating the unobserved density using matrix completion methods, followed by the estimation of the unobserved speed using either matrix completion methods or regression-based methods. The implementation details of the whole framework are further discussed.

The next generation simulation (NGSIM) data are adopted to examine the accuracy and robustness of the proposed framework. The proposed estimation framework is examined extensively on I-80, US-101 and Lankershim Boulevard. In general, the proposed framework estimates the traffic states accurately with a low AV market penetration rate. The speed estimation is always easier than density estimation, as expected. Results show that, with 5% AV market penetration rate, at least S2 is required for I-80 and US-101 to obtain an accurate traffic state estimation, while S3 is required for Lankershim Blvd to ensure the estimation quality. During the estimation of speed, all the coefficients in the Lasso regression are consistent with the fundamental diagrams. In addition, a sensitivity analysis regarding AV penetration rates, sensor configurations, speed detection noise and perception accuracy is conducted.

This study would help policymakers and private sectors (e.g., Uber, Waymo and other AV manufacturers) understand the value of AVs in traffic operation and management, especially the value of the massive data collected by AVs. Hopefully, new business models for commercializing the data [

90] or collaborations between private sectors and public agencies can be established for smart communities. In the near future, we will examine the sensing capabilities of AVs at network level and extend the proposed traffic sensing framework to large-scale networks. We also plan to develop a traffic simulation environment to enable the comprehensive analysis of the proposed framework under different traffic conditions. Another interesting research direction is to investigate the privacy issue when AVs share the observed information with the data center.