Recovery of Ionospheric Signals Using Fully Convolutional DenseNet and Its Challenges

Abstract

1. Introduction

2. Experimental Data from Ionosondes

2.1. Ionograms

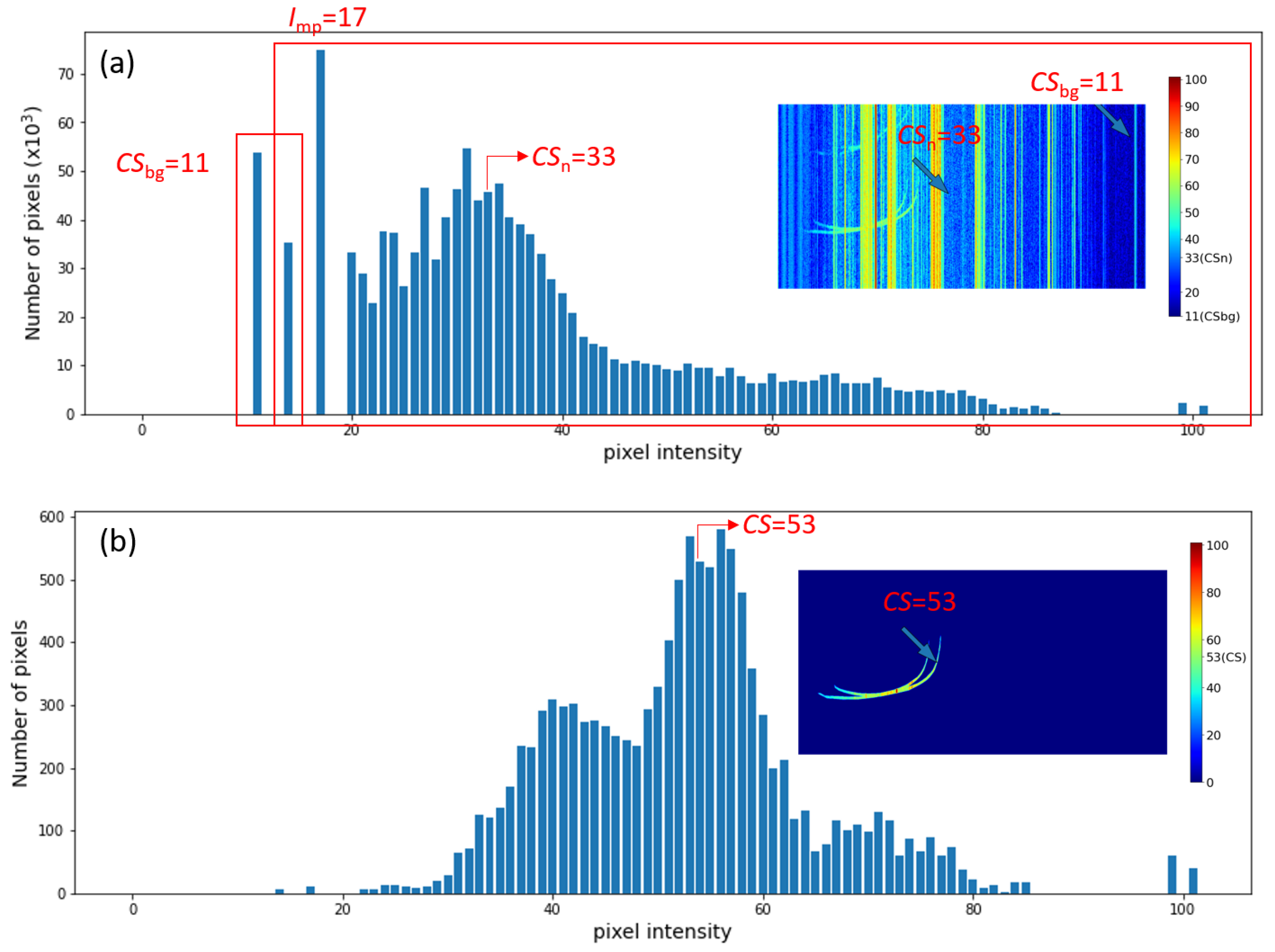

2.2. Signal-to-Noise Ratio

2.3. Shape Parameter

3. Materials and Methods

3.1. Convolutional Neural Networks

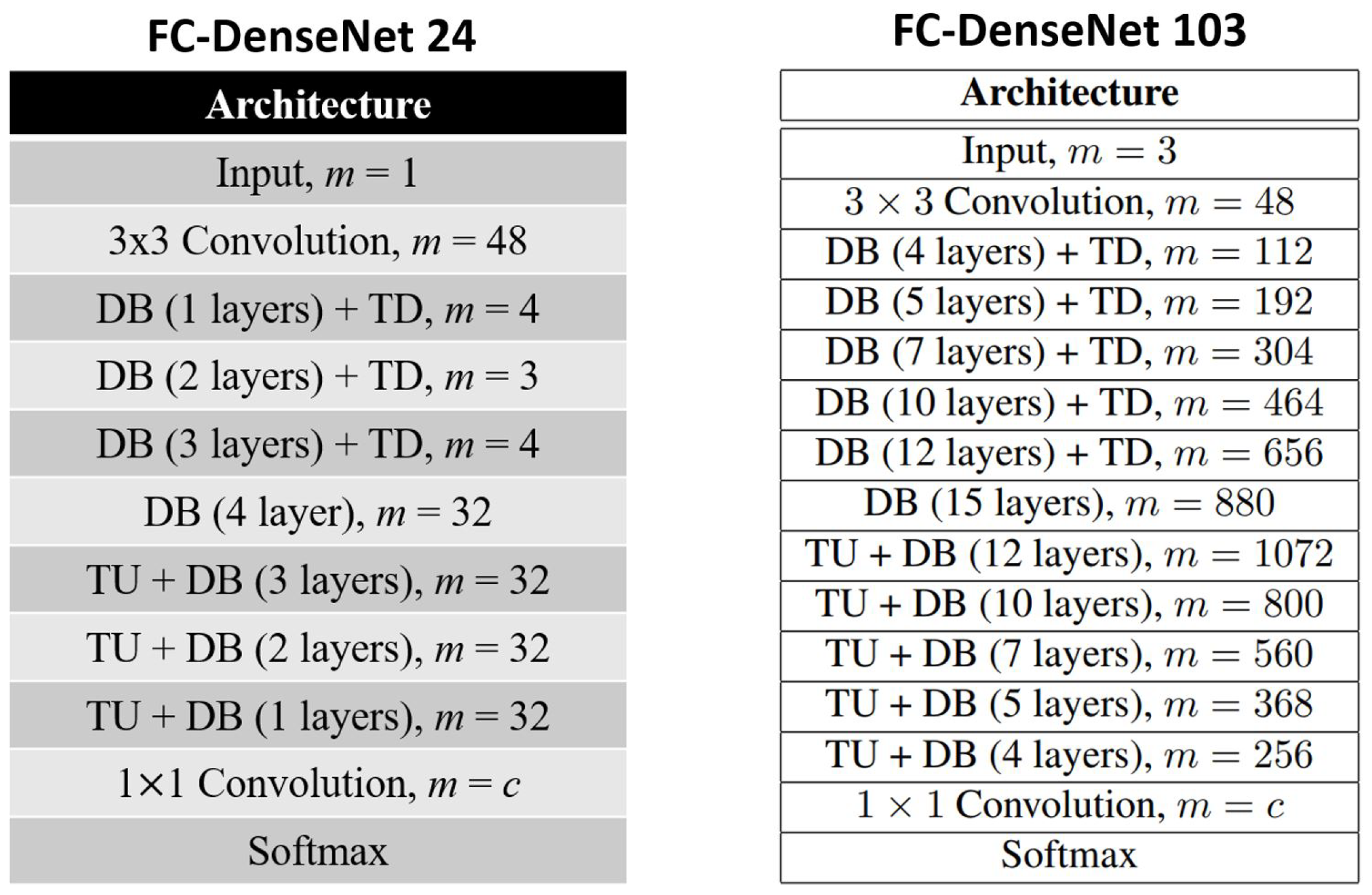

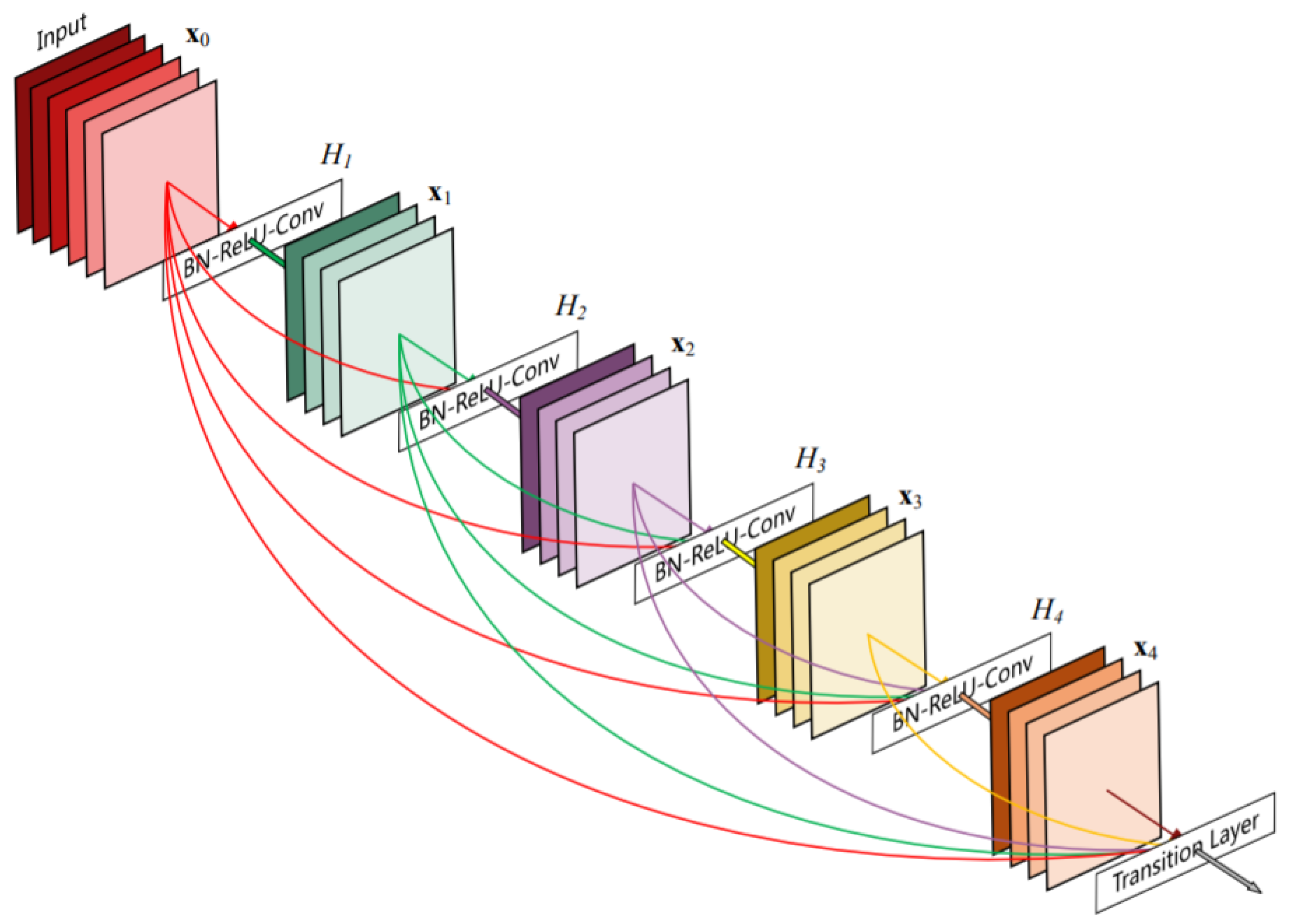

3.2. Fully Convolutional DenseNet

3.3. Filtering Algorithm

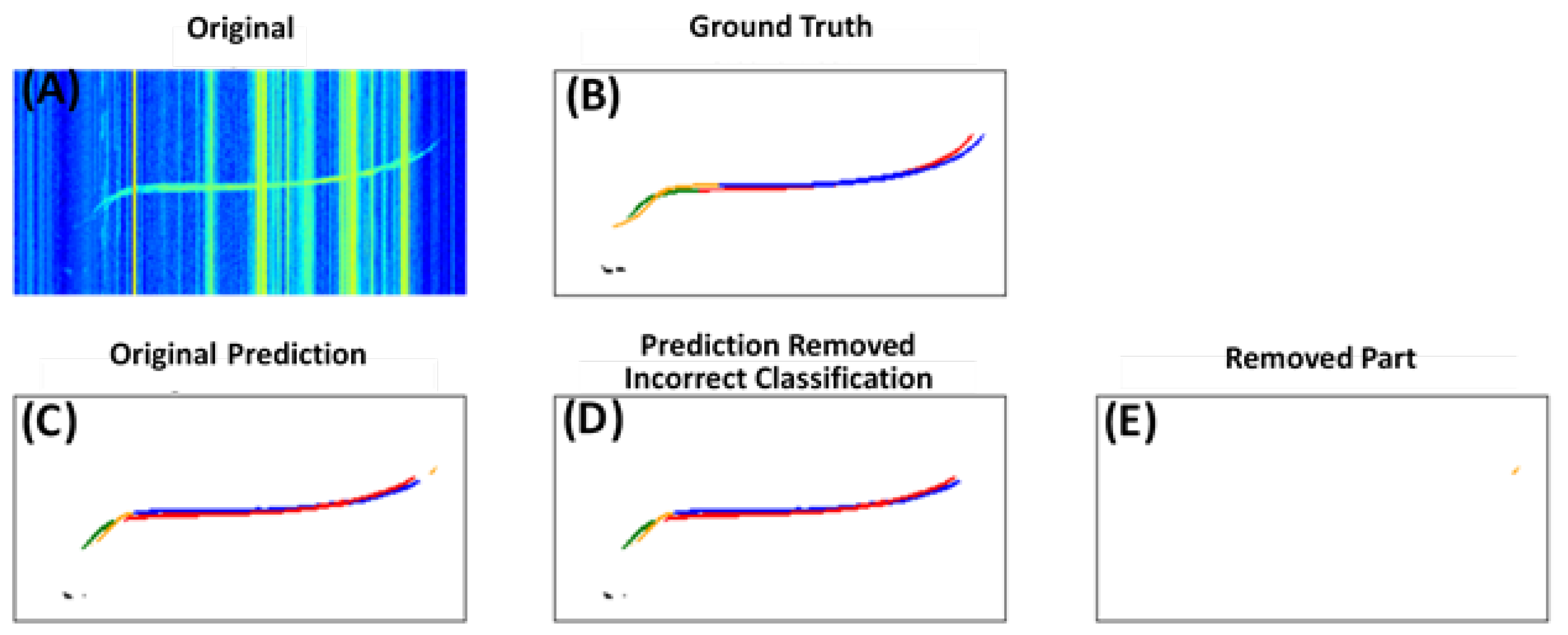

3.3.1. Filter for Incorrect Classification

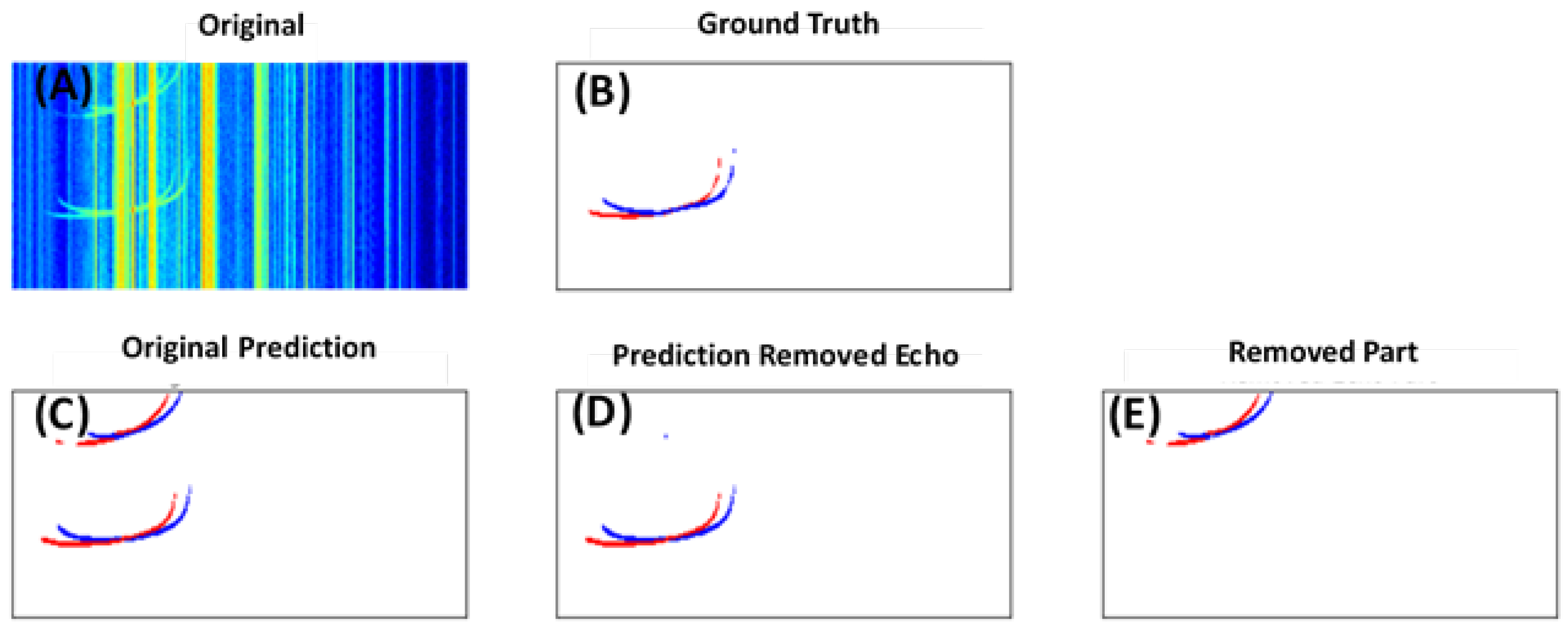

3.3.2. Filter for Secondary Ionospheric Echoes

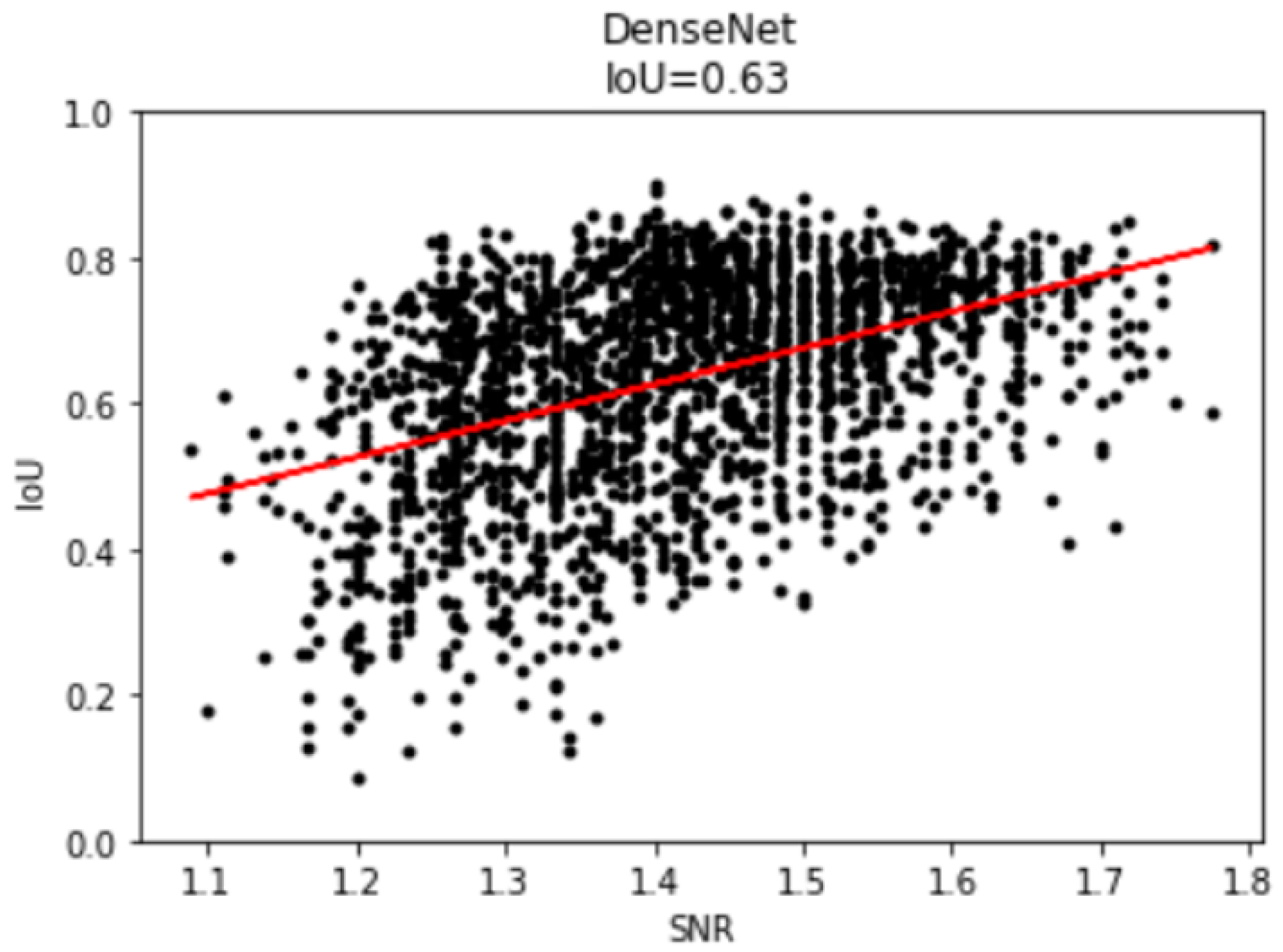

4. Results

- 1.

- The percentage of signal samples in the dataset:A comparison of Table 3 and Table 4 revealed that the sample percentages for eo and ex were both lower than 10%, the percentage for fax was less than 40%, and that for fao was barely 40%. This suggests that the sample percentage in a dataset needs to be higher than 40% in order for our model to adequately learn the characteristics of the signal and be able to recover it from the noisy ionograms;

- 2.

- Mixing with other signals:In addition to the sample percentage, another factor that can affect the model performance is whether the location of a signal is overlapping with other signal classes. Figure 9B,C show a clear example of an overlapping signal in our ground truth and predictions, respectively. Although the sample percentage of fbx, which was nearly 90%, was significantly higher than that of esa, which was slightly over 50%, esa was located distinctly away from any F signal classes. In contrast, fbo and fbx signals were often overlapped, which caused difficulty in distinguishing and separating them even by a human. In addition, F1 layers in the ionograms were often seen connected (continued) to F2 layers without a distinctive dividing point, affecting the determination of their critical frequencies. Both factors contributed to the unimpressive IoU for fbx signals;

- 3.

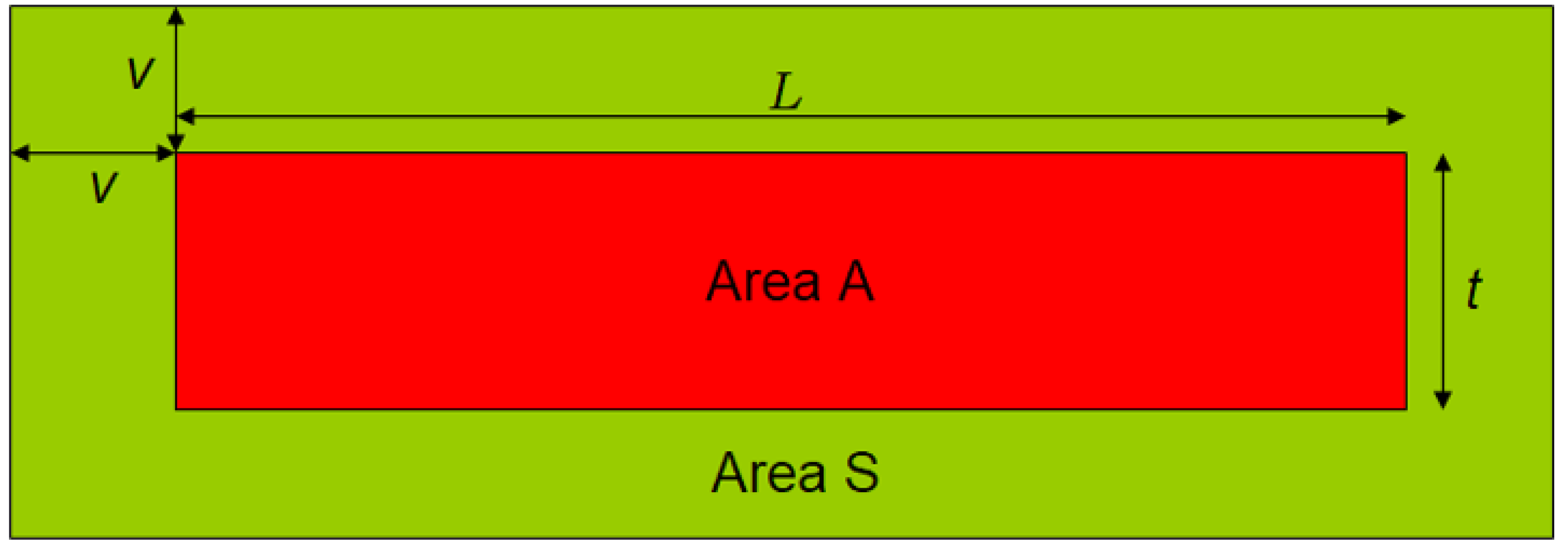

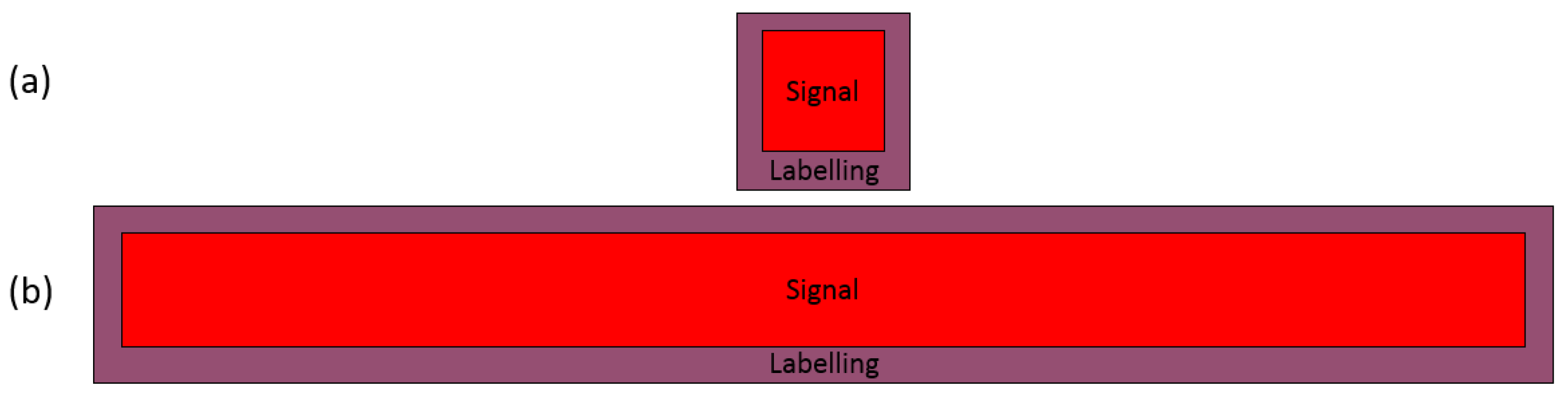

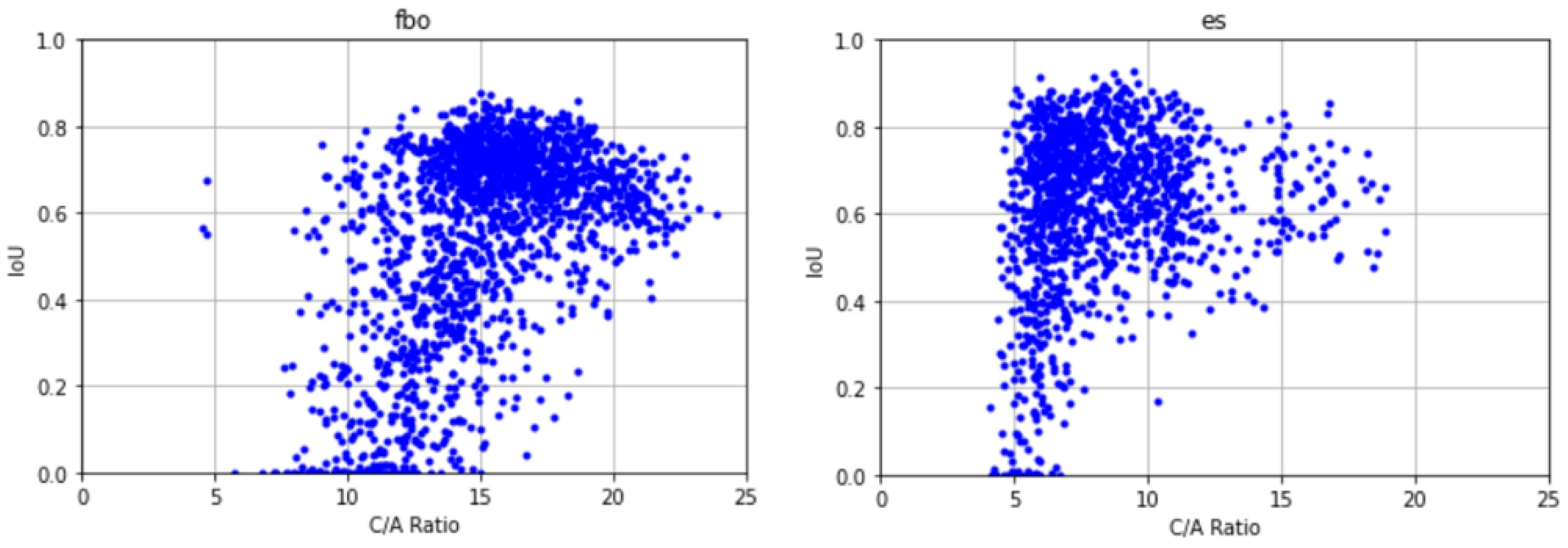

- C/A ratio:As explained earlier, our “ground truth” occupied an area larger than the area of the true signal because we labeled around the outer edge of the signal. Therefore, even if the model perfectly predicted the true signal, the IoU, which compares the prediction with the “ground truth”, would still be less than one. The derivation in the earlier section showed that the lower the C/A, the lower the IoU of the perfect prediction. As can be seen from the ionogram layers in Figure 1, F1 layers usually had a lower C/A than Es layers, which can contribute to the lower IoU for fao and fax. Figure 12 shows the scatter plots for compact and elongated signals for Es layers and F2 layers, respectively. The elongated F2 signals show that they had a high IoU in the regions where C/A was high. For the Es signals, we see a similar result wherein the high IoU cases were associated with the high C/A cases. It is evident that there was an inevitable decrease in the IoU as a result of labeling for both the compact and the elongated cases of the signal shape.
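The effect of over-sized labels on the IoU can be illustrated with a toy calculation. The sketch below is not the authors' labeling procedure or their C/A derivation: it assumes a hypothetical label that extends one pixel beyond the true signal on every side, represents masks as sets of pixel coordinates, and uses two made-up rectangles of equal area. It only shows that a prediction matching the true signal exactly still scores an IoU below one, and that the size of the penalty depends on the signal shape.

```python
def rectangle(height, width):
    """Set of (row, col) pixels covering a height x width rectangle."""
    return {(r, c) for r in range(height) for c in range(width)}


def dilate(pixels, margin=1):
    """Grow a pixel set by `margin` pixels in every direction (square neighbourhood)."""
    offsets = range(-margin, margin + 1)
    return {(r + dr, c + dc) for (r, c) in pixels for dr in offsets for dc in offsets}


def iou(a, b):
    """Intersection over union of two pixel sets."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


# Two hypothetical "true" signals with the same area (64 pixels each).
compact = rectangle(8, 8)
elongated = rectangle(2, 32)

for name, signal in (("compact", compact), ("elongated", elongated)):
    label = dilate(signal, margin=1)  # assumed label: one pixel too wide on every side
    # The "prediction" is the true signal itself, i.e. a perfect prediction.
    print(name, round(iou(signal, label), 3))
# compact 0.64
# elongated 0.471
```

In this toy setting the label of the 8 × 8 square covers 10 × 10 pixels and that of the 2 × 32 strip covers 4 × 34 pixels, so the best achievable IoU is 64/100 and 64/136, respectively.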

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Shape and Contamination

Appendix A.1. The Case of a Square (Regular Tetragon) Signal

Appendix A.2. The Case of a Very Elongated Signal


| Ionospheric Layer | Class Label |
|---|---|
| E layer ordinary mode | eo |
| E layer extraordinary mode | ex |
| Sporadic E layer ordinary mode | eso |
| Sporadic E layer extraordinary mode | esx |
| Sporadic E layer | es |
| F1 layer ordinary mode | fao |
| F1 layer extraordinary mode | fax |
| F2 layer ordinary mode | fbo |
| F2 layer extraordinary mode | fbx |
| F3 layer ordinary mode | fco |
| F3 layer extraordinary mode | fcx |

| Dense Block (DB) | Transition Down (TD) | Transition Up (TU) |
|---|---|---|
| Batch Normalization | Batch Normalization | 3 × 3 Transposed Convolution (stride = 2) |
| ReLU | ReLU | |
| 3 × 3 Convolution | 1 × 1 Convolution | |
| Dropout (p = 0.2) | Dropout (p = 0.2) | |
| | 2 × 2 Max Pooling | |
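How the three building blocks in the table above change the size of a feature map can be traced with simple bookkeeping. The following is a minimal sketch, not the authors' implementation: the growth rate, number of layers, and input size are hypothetical values, the 3 × 3 convolutions are assumed to preserve the spatial size, and the transposed convolution is assumed to be padded so that it exactly doubles height and width while keeping the channel count.

```python
def dense_block(channels, n_layers, growth_rate):
    """Each BN-ReLU-Conv3x3-Dropout layer adds `growth_rate` feature maps,
    which are concatenated to everything the block has produced so far."""
    for _ in range(n_layers):
        channels += growth_rate
    return channels


def transition_down(channels, height, width):
    """The 1x1 convolution keeps the channel count; 2x2 max pooling halves the size."""
    return channels, height // 2, width // 2


def transition_up(channels, height, width):
    """A stride-2 transposed convolution doubles the spatial size
    (assumption: padding chosen so the output is exactly 2x, channels unchanged)."""
    return channels, height * 2, width * 2


# Hypothetical settings, not taken from the paper.
channels, height, width = 48, 64, 64
growth_rate, n_layers = 16, 4

channels = dense_block(channels, n_layers, growth_rate)  # 48 + 4 * 16 = 112
channels, height, width = transition_down(channels, height, width)
print(channels, height, width)  # 112 32 32

channels, height, width = transition_up(channels, height, width)
print(channels, height, width)  # 112 64 64
```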

| | eo | ex | eso | esx | es | esa | fao | fax | fbo | fbx | fco | fcx |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Train | 7.6 | 1.1 | 12.6 | 10.0 | 39.9 | 51.9 | 39.3 | 26.4 | 94.6 | 87.8 | 0.15 | 0.13 |
| Test | 9.2 | 1.1 | 14.8 | 11.2 | 40.8 | 55.1 | 38.7 | 25.5 | 94.2 | 87.7 | 0.08 | 0.08 |
| Validation | 7.5 | 0.5 | 14.5 | 12.2 | 38.0 | 52.1 | 39.4 | 25.3 | 95.5 | 89.8 | 0.10 | 0.40 |
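The percentages in the table above do not sum to 100 across classes, which is consistent with each value being the share of ionograms in which a class appears at least once. Under that assumption, such percentages could be computed as sketched below; the function name and the example annotations are hypothetical and are not the authors' data or code.

```python
from collections import Counter


def class_percentages(annotations):
    """Percentage of ionograms in which each class label occurs at least once."""
    total = len(annotations)
    if total == 0:
        return {}
    # set(labels) ensures a class is counted once per ionogram.
    counts = Counter(label for labels in annotations for label in set(labels))
    return {label: 100.0 * n / total for label, n in counts.items()}


# Hypothetical annotations: one collection of class labels per ionogram.
annotations = [
    {"fbo", "fbx", "esa"},
    {"fbo", "fbx"},
    {"fbo", "fao", "fax"},
    {"fbo", "fbx", "esa", "eo"},
]

percentages = class_percentages(annotations)
for label in sorted(percentages):
    print(f"{label}: {percentages[label]:.1f}%")
# eo: 25.0%
# esa: 50.0%
# fao: 25.0%
# fax: 25.0%
# fbo: 100.0%
# fbx: 75.0%
```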

| | eo | ex | eso | esx | es | esa | fao | fax | fbo | fbx | fco | fcx |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Original | 0.00 | 0.00 | 0.37 | 0.16 | 0.51 | 0.56 | 0.47 | 0.33 | 0.59 | 0.48 | 0.00 | 0.00 |
| Filtered | 0.00 | 0.00 | 0.38 | 0.16 | 0.57 | 0.66 | 0.47 | 0.33 | 0.61 | 0.50 | 0.00 | 0.00 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation: Mendoza, M.M.; Chang, Y.-C.; Dmitriev, A.V.; Lin, C.-H.; Tsai, L.-C.; Li, Y.-H.; Hsieh, M.-C.; Hsu, H.-W.; Huang, G.-H.; Lin, Y.-C.; Tsogtbaatar, E. Recovery of Ionospheric Signals Using Fully Convolutional DenseNet and Its Challenges. Sensors 2021, 21, 6482. https://doi.org/10.3390/s21196482