1. Introduction

Blockchain is an emerging technology that plays an important role in decentralized technologies and applications, such as storage, calculation, security, interaction, and transactions. Since 2008, along with the increasing popularity of cryptocurrencies (e.g., Bitcoin and Ether) in the financial market, the related blockchain technology has also been maturing and developing and has become one of the most promising network information technologies for ensuring security and privacy [

1,

2]. As opposed to conventional security schemes that focus on the path traversed by data, the blockchain is essentially a decentralized shared ledger, which focuses on protecting data and providing immutability and authentication.

Smart contracts, as the programs running on the blockchain, have been applied in a variety of business areas to achieve automatic point-to-point trustable transactions [

3,

4,

5].

A number of blockchain platforms, such as Ethereum, provide some application program interfaces (APIs) for the development of smart contracts. When a developer deploys a smart contract to blockchain platforms, the source code of the smart contract will be compiled into bytecode and reside on the blockchain platforms [

3,

4,

6,

7]. Then, every node on the blockchain can receive the bytecode of the smart contract, and everyone can call the smart contract by sending the transaction to the corresponding smart contract address.

Blockchain and smart contracts have been applied to a variety of fields, such as the Internet of Things (IoT). For example, the Commonwealth Bank of Australia, Wells Fargo, and Brighann Cotton conducted the first interbank trade transaction in the world, which combined IoT, blockchain technologies, and smart contracts (

https://www.gtreview.com/news/global/landmark-transaction-merges-blockchain-smart-contracts-and-iot/ accessed on 31 July 2021). They employed IoT technologies with a GPS device to track the geographic location of goods in transit. When the goods reached their final destination, the release of funds was automatically triggered by the smart contracts. With the help of smart contracts, paperwork that takes a few days using manual processes can be completed in minutes, which largely reduces the time cost and improves the trade efficiency. In addition, smart contracts make transactions more transparent, because the transaction data are updated in real time in the same system. Meanwhile, smart contracts cannot be tampered after deployment; thus, security is greatly enhanced, and the risk of fraud is reduced.

However, due to the high complexity of blockchain-related technologies, it is generally difficult for investors to understand the business logic of smart contracts in depth, and they can only comprehend the operation mechanism of the business through some descriptive information about smart contracts. As a result, some speculators have introduced the classic form of financial investment fraud—the Ponzi scheme—into blockchain transactions and brought extremely costly losses to investors. The highly complex smart contract program makes Ponzi schemes more confusing. For the endless stream of smart contract-based Ponzi schemes, a post on a popular Bitcoin forum (bitcointalk.org) showed that more than 1800 Ponzi scheme contracts emerged between June 2011 and November 2016, where the financial losses caused were even harder to estimate [

8]. As Ponzi schemes become more prevalent in blockchain transactions, researchers need to find a way to automatically detect Ponzi scheme contracts.

Some existing works in the literature [

7,

9] focused on manually extracting features from the smart contract code and the transaction history of smart contracts. Specifically, Chen et al. [

7,

9] compiled the smart contract code to generate bytecode and then decompiled it into operating code (Opcode) using external tools to extract the Opcode features. In addition, they extracted the statistical account features from the transaction history of smart contracts. Finally, the random forest algorithm was used as the classification model based on the composited features to detect Ponzi scheme contracts. However, the source code of smart contracts has a well-defined structure and semantic information, which the hand-crafted features cannot capture well. Therefore, the detection performance of the existing works is not satisfactory enough.

With the development of deep learning technologies, many researchers have tried to apply deep learning algorithms to extract more powerful features from source code to conduct the related tasks. However, to the best of our knowledge, there is no investigation on the significance of deep learning for Ponzi scheme contract detection. The challenges of automatic Ponzi scheme contract detection using deep learning usually include:

(1) How to extract the structural features of the smart contract code well.

Using the plain source code of smart contracts as the input ignores the structural information of smart contracts. How to achieve the serialization transformation of the code without destroying the structural semantics of the code and conform to the input requirements of the deep learning model after the transformation is a problem that needs to be considered.

(2) How to capture the long-range dependencies between code tokens of smart contracts.

The source code of smart contract in our experimental dataset is very long. For the long sequence training, traditional deep learning models (e.g., LSTM [

10] and GRU [

11]) have the problem of gradient disappearance. The more relevant the output of the last time step is, the later the input is. Furthermore, an earlier input causes more information to be lost in the transmission process. Obviously, such logic does not make sense in the context of semantic understanding. This phenomenon is manifested in the model as gradient disappearance. Therefore, in the long sequence training, we need a model that can capture the long-range dependencies efficiently and without gradient disappearance.

To address these problems, we propose a Ponzi scheme contract detection approach called MTCformer based on the multi-channel TextCNN (MTC) and Transformer. The MTCformer first parses the smart contract code into an Abstract Syntax Tree (AST). Then, in order to reserve the structural information, the MTCformer employs the Structure-Based Traversal (SBT) method proposed by Hu et al. [

12] to convert the AST to the SBT sequence. After that, the MTCformer employs the multi-channel TextCNN to learn feature representations based on neighboring words (tokens) to obtain local structural and semantic feature of the source code. The multi-channel TextCNN contains multiple filters of different sizes, which can learn multiple different dimensions of information and capture more complete local features in the same window. Next, the MTCformer uses Transformer to capture the long-range dependencies between code tokens. Finally, a fully connected neural network with a cost-sensitive loss function is used for classification.

We conduct experiments on a Ponzi scheme contract detection dataset, which contains 200 Ponzi scheme contracts and 3588 non-Ponzi scheme contracts. We extensively compare the performance of the MTCformer against the three recently proposed methods (i.e., Account, Opcode, Account + Opcode). The experimental results show that (1) the MTCformer outperforms Account by 51.56% in terms of precision, 315% in terms of recall, and 297% in terms of F-score; (2) the MTCformer performs better than Opcode by 3.19%, 13.7%, and 8.54% in terms of the three metrics; and (3) the MTCformer also outperforms Account + Opcode by 2.1%, 20.29%, and 12.66% in terms of precision, recall, and F-score, respectively. We also evaluate the MTCformer against the variants, and the experimental results indicate that the MTCformer outperforms its variants in terms of the three metrics.

In summary, the primary contributions of this paper are as follows:

(1) We propose an MTCformer method combining the multi-channel TextCNN and Transformer for Ponzi scheme contract detection. The MTCformer can both extract the local structural and semantic features and capture the long-range dependencies between code tokens.

(2) We compare the MTCformer with the state-of-the-art methods and their variants. The experimental results show that the MTCformer achieves more encouraging results than the compared methods.

The remainder of this paper is organized as follows.

Section 2 introduces the related work and background.

Section 3 proposes our MTCformer method to detect Ponzi scheme contracts.

Section 4 presents the experimental setup and results.

Section 5 discusses the impact of the parameters. Finally,

Section 6 concludes the paper and enumerates ideas for future studies.

2. Related Work and Background

This section provides background information on topics relevant to this paper.

Section 2.1 describes the application of blockchain in the Internet of Things (IoTs).

Section 2.2 briefly explains the basic concepts of Ethereum and smart contracts.

Section 2.3 introduces the related work of the Ponzi scheme contract detection.

Section 2.4 briefly introduces the Abstract Syntax Tree and Structure-Based Traversal used to structure the source code of the smart contract.

Section 2.6 briefly introduces the text-based Convolutional Neural Network and Transformer.

2.1. Blockchain and IoT

Blockchain refers to a series of decentralized and tamper-proof ledgers combined into a network. It provides a service to the end-users with lower transaction costs and without unnecessary intervention. Because of its uniqueness, blockchain has offered many benefits to business and management, such as decentralization, intractability and strategic applications, security and behavior, and operations and strategic decision making [

13]. Currently, blockchain is already widely used in the Internet of Things (IoT). Singh et al. [

14] used blockchain and artificial intelligence to design and develop IoT architectures to support effective big data analysis. Tsang et al. [

15] explored the intellectual cores of the blockchain–Internet of Things (BIoT). Zhang et al. [

16] proposed an e-commerce model for IoT E-business to realize the transaction of smart property. Puri et al. [

17] designed a strategy based on smart contracts to handle the security and privacy issues in an IoT network. Zhang et al. [

18] studied key access control issues in IoT and proposed a smart contract-based model to enable reliable access control for IoT systems. By integrating the IoT with blockchain systems and smart contracts, Ellul et al. [

19] provided the automatic verification of physical processes involving different parties.

2.2. Ethereum and Smart Contracts

Ethereum is a blockchain platform that provides a Turing-complete programming language (Solidity) and a corresponding runtime environment (i.e., EVM) [

20]. The platform allows users to develop blockchain applications using short code [

21]. Currently, Ethereum is the largest platform that provides an execution environment for smart contracts [

22]. The smart contracts running on Ethereum are a series of EVM bytecodes residing on the blockchain that can be triggered for execution. These bytecodes are compiled by the EVM compiler from the smart contract source code. Deployment is accomplished by uploading bytecode to the blockchain through an Ethereum client. These codes implement certain predefined rules and are “autonomous agents” that exist in the Ethereum execution environment. Once deployed, smart contracts cannot be changed, and the execution of their coding functions produces the same result for anyone running them.

In Ethereum, two types of accounts exist. One is an externally owned account (EOA) and the other is a contract account [

23,

24]. EOAs have a private key that provides access to the corresponding Ethereum or contract. On the other hand, contract accounts have smart contract codes. Contract accounts cannot run their own smart contracts. Running a smart contract requires an external account to initiate a transaction to the contract account, which initiates the execution of the code within it.

2.3. Ponzi Scheme Contract

A Ponzi scheme is a type of investment scam in the financial market. Organizers of Ponzi schemes use the funds of new investors to pay interest and short-term returns to previous investors. The organizers often package the investment project with the illusion of low risk and high and stable returns, which are used to confuse investors who are unfamiliar with the industry or have a fluke mentality.

In the blockchain era, many Ponzi schemes are disguised in smart contracts. We refer to these Ponzi schemes as smart Ponzi schemes and refer to the corresponding smart contracts as Ponzi scheme contracts [

9]. Due to their self-executing and non-tamper-evident characteristics, smart contracts have become a powerful tool for Ponzi schemes to attract victims. More importantly, the originators of Ponzi schemes are anonymous.

Machine learning and data mining technologies have been used to detect Ponzi scheme contracts. Ngai et al. [

25] proposed a technology based on data mining to detect financial fraud, and it is used for detecting Bitcoin Ponzi schemes [

26]. Chen et al. [

7,

9] used the transaction history of smart contracts in Ethereum and the Opcode of smart contracts as hand-crafted features to detect smart Ponzi schemes. Different from their studies, our paper focuses on automatically learning the hidden rich semantic features from the source code to detect Ponzi scheme contracts by using deep learning and natural language processing technologies.

2.4. Abstract Syntax Tree and Structure-Based Traversal

In the field of natural language processing, the processing of text data includes syntactic analysis, lexical analysis, dependency analysis, and machine translation. Generally, ordinary text is unstructured data, which requires to be structured before analysis and understanding. The structured data is more conducive to learning semantic features and dependencies in the text.

An abstract syntax tree (AST) is a tree-like representation of the abstract syntactic structure of the source code, where each node is a construct occurring in the code [

27,

28,

29]. The reason for the abstraction is that the abstract syntax tree does not represent every detail of the appearance of the real syntax. For example, nested brackets are implied in the structure of the tree and are not presented in the form of nodes. In short, it is the conversion of unstructured code into a tree structure according to certain rules.

The Structure-Based Traversal (SBT) method proposed by Hu et al. [

12] converts the abstract syntax trees into specially formatted sequences via globally traversing the trees. Existing code representation works [

30,

31,

32] have proved that the SBT method has a strong ability in preserving the code structure and lexical information. Therefore, we also employ the SBT method to structure the source code.

2.5. Text-Based Convolutional Neural Network

Convolutional Neural Networks (CNN) were initially applied in the field of computer vision. Subsequently, they have been proven to achieve excellent results in traditional natural language processing field, such as semantic analysis [

33,

34,

35,

36], search query [

37], sentence modeling [

38], etc. The TextCNN is a deep learning algorithm with high performance in feature learning [

39].

The core goal of the TextCNN is to capture local features. All words need to be converted to low-dimensional dense vectors. During the training process, if these word vectors are fixed, it is called CNN-static. Otherwise, as the word embeddings are updated, the corresponding model is called CNN-non-static [

40]. In general, the

i-th word can be represented as a

k-dimensional word vector

in the sentence. A sentence of length

n is expressed as

. In this way,

is similar to an image that can be used as input to CNN. In the convolution layer, many filters with different window sizes are sliding over

. Each filter convolves

to generate a different feature mapping. Correspondingly, for the text, local features are sliding windows consisting of several words, similar to N-grams. The advantage of Convolutional Neural Networks is that they can automatically combine and filter N-gram features to obtain local semantic information at different levels of abstraction [

41,

42,

43,

44,

45,

46]. Then, the maximum pooling operation is applied to the feature mapping to obtain the maximum value as input to the Transformer layer. Generally, some regularization techniques such as dropout and batch normalization can be used after the pooling layer to prevent model overfitting.

2.6. Transformer-Related Structures

With traditional RNN-based models (e.g., LSTM, GRU, etc.), the computation can only be done sequentially from left to right or from right to left when text is used as input. There are two problems with this mechanism:

The computation of time step t relies on the results of the computation at moment . This limits the parallel computing capability of the model.

LSTM and GRU can solve the problem of back-and-forth dependence of long sequences to some extent, but the performance will drop sharply when encountering particularly long sequences.

Both problems are addressed to some extent by the Transformer model [

47] proposed by Google in 2017. Unlike CNN and RNN, the entire network structure of the Transformer is composed entirely of the attention mechanism. More precisely, the Transformer only consists of self-attention and a Feed Forward Neural Network. A trainable neural network based on Transformer can be built by stacking the Transformer.

The Transformer model does not need to process words sequentially in sequence and can train all words at the same time, which greatly improves the degree of parallelism and increases the computational efficiency. Furthermore, the attention mechanism pays attention to all words of the whole input sequence, making the model associate the words of the context. It helps the model to encode the text better. However, the attention mechanism itself cannot capture positional information. Therefore, the “positional encoding” approach is proposed. Specifically, positional encoding adds the positional information of words to the word vector and uses the word embedding and positional embedding together as the input of Transformer. It makes the model understand the position of each word in the sentence, not just the semantics of the word itself.

In this paper, Ponzi scheme contract detection is a classification task that does not require the use of the full Transformer model but instead uses positional encoding and an attention encoder for feature learning.

3. Smart Ponzi Scheme Detection Model

3.1. Overall Process

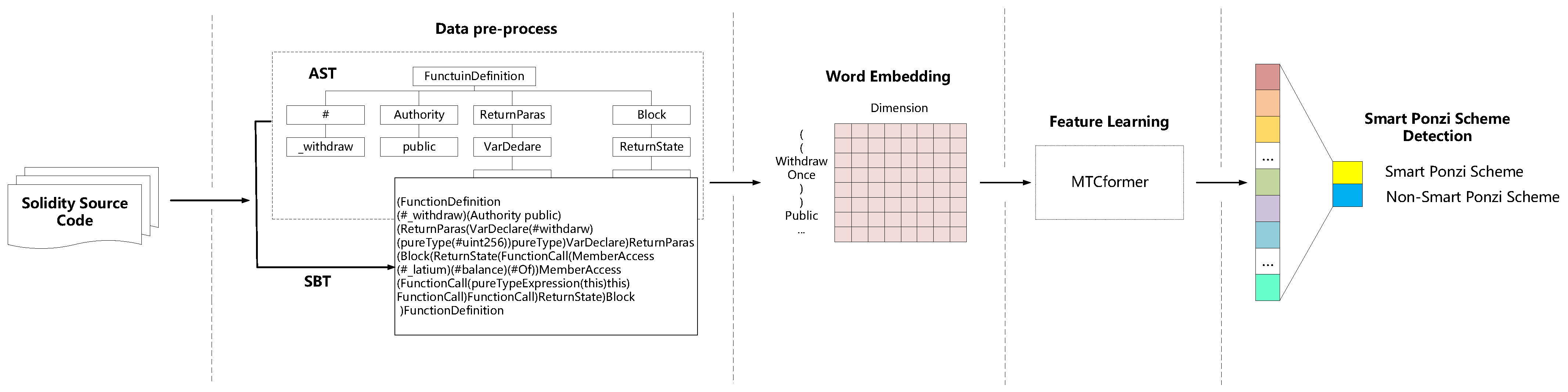

As shown in

Figure 1, the overall process of Ponzi scheme contract detection consists of four steps:

Data pre-processing: The source code of the smart contract is firstly parsed into an Abstract Syntax Tree (AST) according to the ANTLR syntax rules. Then, we employ the SBT method to convert the AST into a SBT sequence to reserve the structure information.

Word embedding: The pre-processed SBT sequences are fed into the embedding layer for word embedding, and the words (tokens) in each sequence will be converted into fixed dimensional word vectors. Then, an SBT sequence will be converted into word embedding matrices.

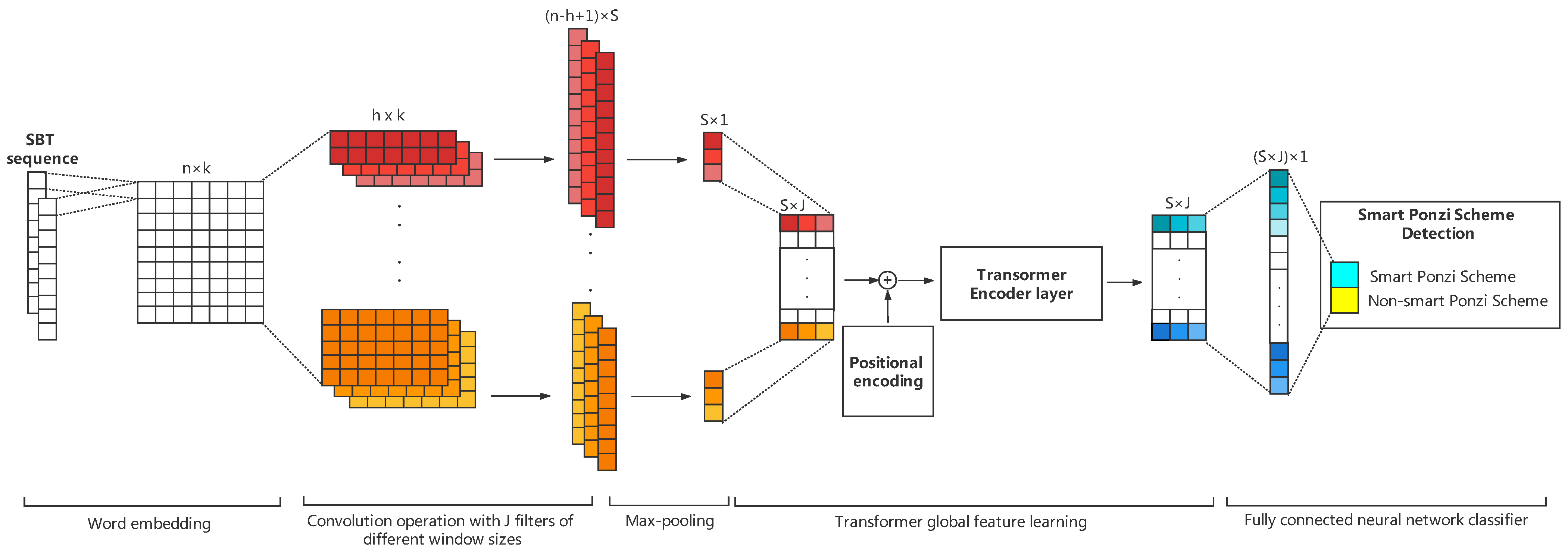

Feature learning: We use the multi-channel TextCNN and Transformer to automatically generate structural and semantic features of smart contract code from the input word embedding matrices. The feature learning process is shown in

Figure 2.

Ponzi scheme contract detection: We use a fully connected layer neural network to do the final classification and conduct a calculation on the real label (presence of a smart Ponzi scheme) to optimize the loss function.

3.2. Data Pre-Processing

The source code of the smart contract is in unstructured form; thus, we need to learn the structure features of the smart contract code for better Ponzi scheme contract detection [

48,

49,

50]. Therefore, instead of using the plain source code directly as the input of the model, we parse the source code into an Abstract Syntax Tree (AST) according to the ANTLR [

51] syntax rules and then generate a Structure-Based Traversal (SBT) sequence from the AST using the SBT method [

12].

The detailed process of the SBT method is as follows:

Starting with the root node, the method firstly uses a pair of parentheses to represent the tree structure and places the root node itself after the right parenthesis.

Next, the method traverses the subtree of the root node and places all root nodes of the subtree in parentheses.

Finally, the method recursively traverses each subtree until all nodes are traversed to obtain the final sequence.

As shown in

Figure 3, we firstly use the parsing tool solidity-parser-antlr (

https://github.com/federicobond/solidity-parser-antlr accessed on 31 July 2021) to parse the source code to the AST and then convert the AST to the SBT sequence. The StockExchange contract defines a function called ‘withdraw’, in which non-leaf nodes are represented by type (e.g., the root node of the contract is FunctionDefinition, and variable, function name, return value name, etc., are represented by “#”). The leaf nodes represent the value of each type.

3.3. Embedding Layer

The word embedding matrix can be initialized using random initialization or using pre-trained vectors learned by models such as CodeBert [

52], Word2Vec [

53], GloVe [

54], FastText [

55], ELMo [

56], etc. The pre-trained word embedding can leverage other corpora to obtain more prior knowledge, while word vectors trained by the current network can better capture the features associated with the current task. Random initialization is used in this paper due to the absence of the pre-trained model of the smart contract code.

The is the k-dimensional word vector corresponding to the i-th word in the SBT sentence. A sequence of length n can be expressed as a matrix . Then, matrix is taken as the input to the convolution layer.

3.4. Convolutional Layer

In the convolution layer, in order to extract local features,

J filters of different sizes are convolved on

. The width of each filter window is the same as

; only the height is different. In this way, different filters can obtain the relationship of words in different ranges. Each filter has

convolution kernels. The Convolutional Neural Networks learn parameters in the convolutional kernel, and each filter has its own focus, so that multiple filters can learn multiple different pieces of information. Multiple convolutional kernels of the same size are designed to learn features that are complementary to each other from the same window. The detailed formula is as follows:

where the

denotes the weight of the

j-th

filter of the convolution operation, the

is the new feature resulting from the convolution operation,

is a bias, and

f is a non-linear function. Many filters with varying window sizes slide over the full rows of

, generating a feature map

. The most important feature value

was obtained by 1-max pooling for one scalar and mathematically written as:

S convolution kernels are computed to obtain

S feature values, which are concatenated to obtain a feature vector

:

Finally, the feature vector of all filters is stacked into a complete feature mapping matrix

:

which is used as the input of the Transformer layer. Generally, some regularization techniques such as dropout and batch normalization can be imposed after the pooling layer to prevent model overfitting [

40].

3.5. Transformer

Since the multi-head attention is not the convolution and recurrent structure, it needs position encoding to utilize the sequence order of the feature matrix

M. This kind of positional encoding rule is as follows:

where

is the token position in the sequence,

i is the dimension index,

d is the dimensions of the complete feature mapping

M, and

is the position encoding matrix isomorphic to

M.

The matrix

is fed into multi-head attention to capture long-range dependencies. The details are given by the following equations:

The matrices of queries, keys, and values are denoted by

, respectively, while

represent their splitted matrices for

. Specifically,

. The

are three weight trainable matrices. Equation (

10) describes the output of

. After the concatenating from all heads and the linear transformation with

, we can obtain the output of the multi-head attention [

47,

57].

Then, the output of the multi-head attention is delivered to the FFN (Feed Forward Network). The FFN contains two linear transformation layers and the activation function (ReLU) in between. The detailed equation of the FFN is as follows:

where

and

are the weight matrices of each layer,

and

are their corresponding bias, and

x is the input matrix. Finally, the matrix

is reshaped into a one-dimensional vector

, which is the input to the fully connected network classifier.

3.6. Loss Function

The smart Ponzi scheme detection problem studied in this paper can be considered as a binary classification task. Therefore, there are two types of misclassifications:

A non-Ponzi scheme contract is wrongly predicted to be a Ponzi scheme contract. At this point, we can manually check the smart contract to confirm security. Even if a transaction is generated, it will not cause financial loss.

A Ponzi scheme contract is wrongly predicted to be a non-Ponzi scheme contract. Therefore, the Ponzi scheme contract will be deployed and reside on the blockchain platforms.

As the failure to find a Ponzi scheme contract can lead to large economic loss, misclassifying a Ponzi scheme contract results in a higher cost than misclassifying a non-Ponzi scheme contract. In addition, there are far fewer contracts with Ponzi schemes than the non-Ponzi scheme contracts in the smart Ponzi scheme detection dataset. The smart Ponzi scheme detection model trained on the imbalanced dataset will focus more on the non-Ponzi scheme contracts and is prone to predict that the new contract to be a non-Ponzi scheme contract. Therefore, a cost-sensitive loss function is used for the fitting. In the training set, we suppose the number of contracts with a Ponzi scheme is

u, and the number of contracts without a Ponzi scheme is

v. The cross entropy loss function with weights is defined as follows:

where

is the true label,

is the predicted outcome, and

c denotes each contract.

3.7. Time Complexity Analysis

The time complexity has an important impact on the model, which qualitatively describes the running time of the algorithm. The MTCformer model proposed in this paper has the structure of both the multi-channel TextCNN and Transformer. Its specific time complexity is analyzed as follows.

There are m filters in the multi-channel TextCNN, where each filter has s convolution kernels. Furthermore, we perform n convolution kernel operations, and each convolution kernel size is . Thus, the total time complexity of the multi-channel TextCNN is .

The Transformer consists of the self-attention stage and the multi-head attention stage. Let the size of K, Q, and V be . In the self-attention stage, the time complexity of the similarity calculation, the softmax calculating for each line, and the weighted sum are , , and , respectively. Therefore, the time complexity of the self-attention stage is .

In the multi-head attention stage, the time complexity of the input linear projection, attention operation, and output linear projection are , , and , respectively. Therefore, the time complexity of the multi-head attention stage is .

In summary, the time complexity of MTCformer is . After ignoring the constant term and taking the highest power, the time complexity of MTCformer is .