Brain-Computer Interface: Advancement and Challenges

Abstract

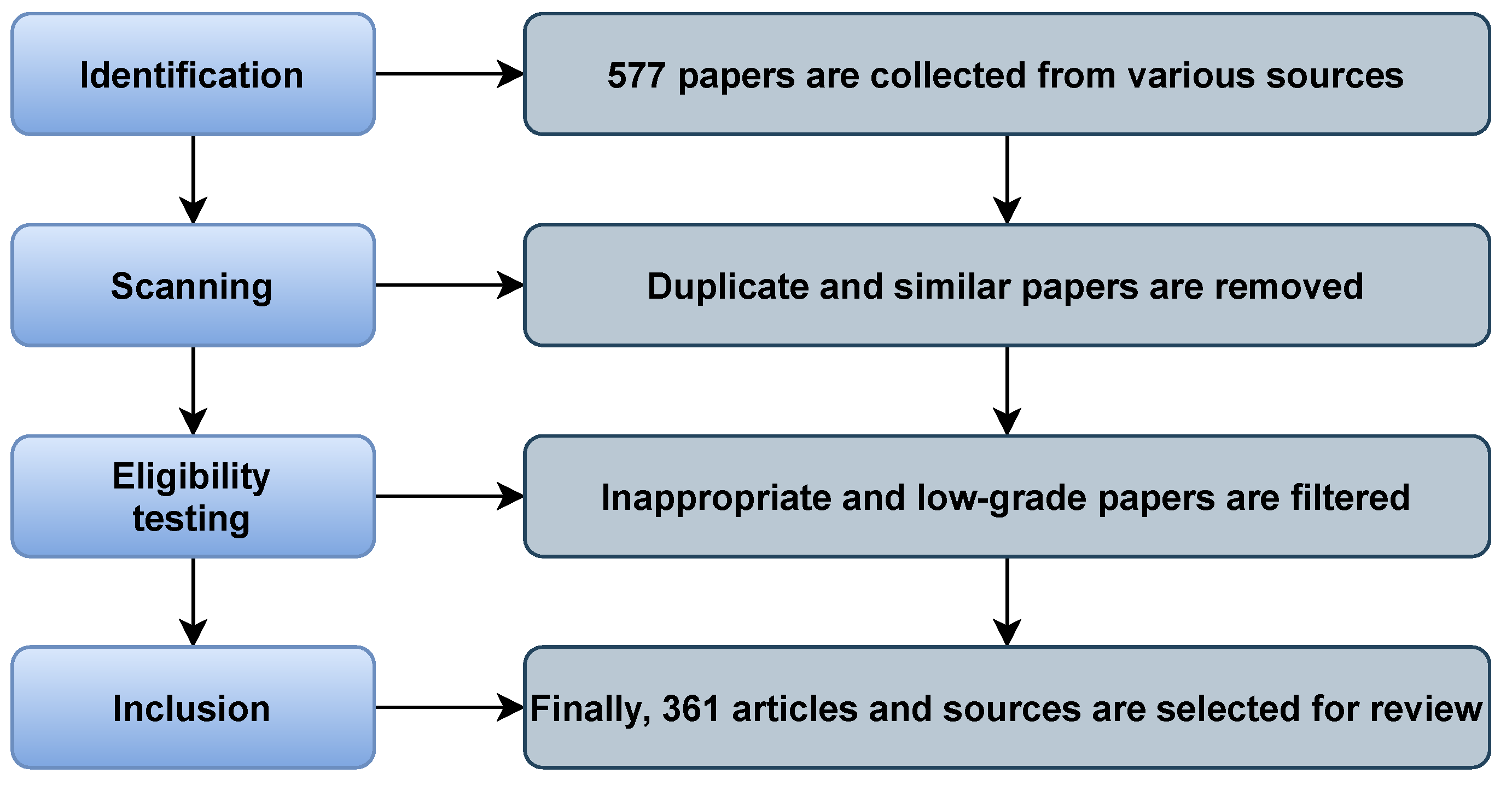

1. Introduction

- The paper illustrates the past, present, and future trends and technologies of Brain-Computer Interfaces (BCIs).

- The paper presents a taxonomy of BCI and elaborates on a few traditional BCI systems, covering their workflow and architectural concepts.

- The paper investigates some BCI tools and datasets. The datasets are also classified by BCI research domain.

- In addition, the paper demonstrates applications of BCI, explores several unsolved challenges, and analyzes the opportunities.

2. Applications of BCI

2.1. Biomedical Applications

2.1.1. Substitute to CNS

2.1.2. Assessment and Diagnosis

2.1.3. Therapy or Rehabilitation

2.1.4. Affective Computing

2.2. Non-Biomedical Applications

2.2.1. Gaming

2.2.2. Industry

2.2.3. Artistic Application

2.2.4. Transport

3. Structure of BCI

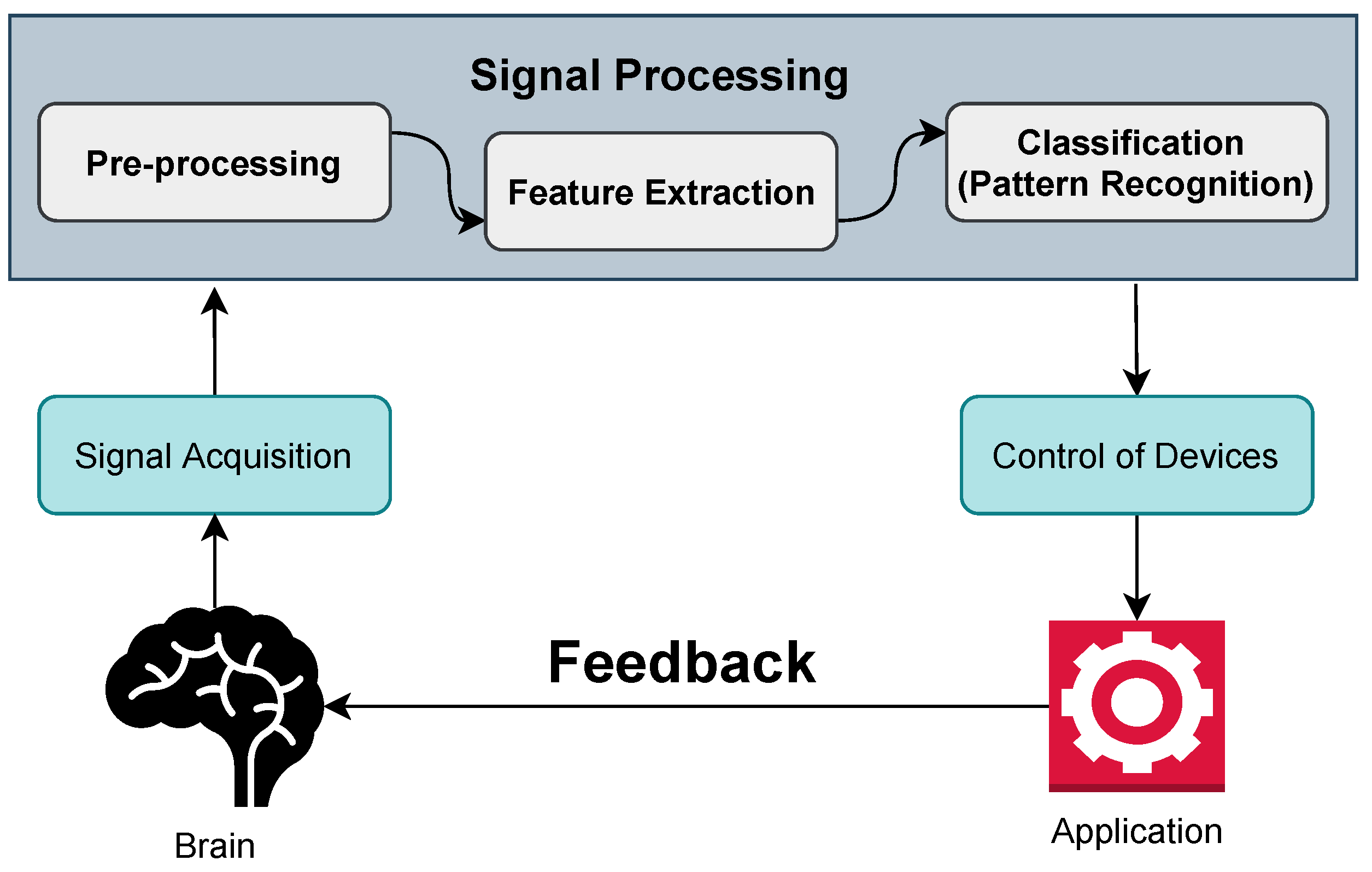

- Signal acquisition: In BCI, this is the process of sampling signals that measure brain activity so that they can be translated into commands that control a virtual or real-world application. The various signal acquisition techniques used in BCI are described later.

- Pre-processing: After acquisition, the signals must be pre-processed. In most cases, the signals collected from the brain are noisy and contaminated with artifacts. This step, also called signal enhancement, removes the noise and artifacts using various methods and filters.

- Feature extraction: The next stage is feature extraction, which analyzes the signal and extracts informative data from it. Because brain activity signals are complex, it is hard to extract useful information by inspecting them directly. Processing algorithms are therefore needed to extract features that reflect brain states, such as a person's intent.

- Classification: Classification techniques are then applied to the artifact-free features. Classification determines the type of mental task the person is performing or the command the person intends to issue.

- Control of devices: The output of the classification step is sent as a command to the feedback device or application. This may be a computer, where the signal is used to move a cursor, or a robotic arm, where the signal is used to move the arm.

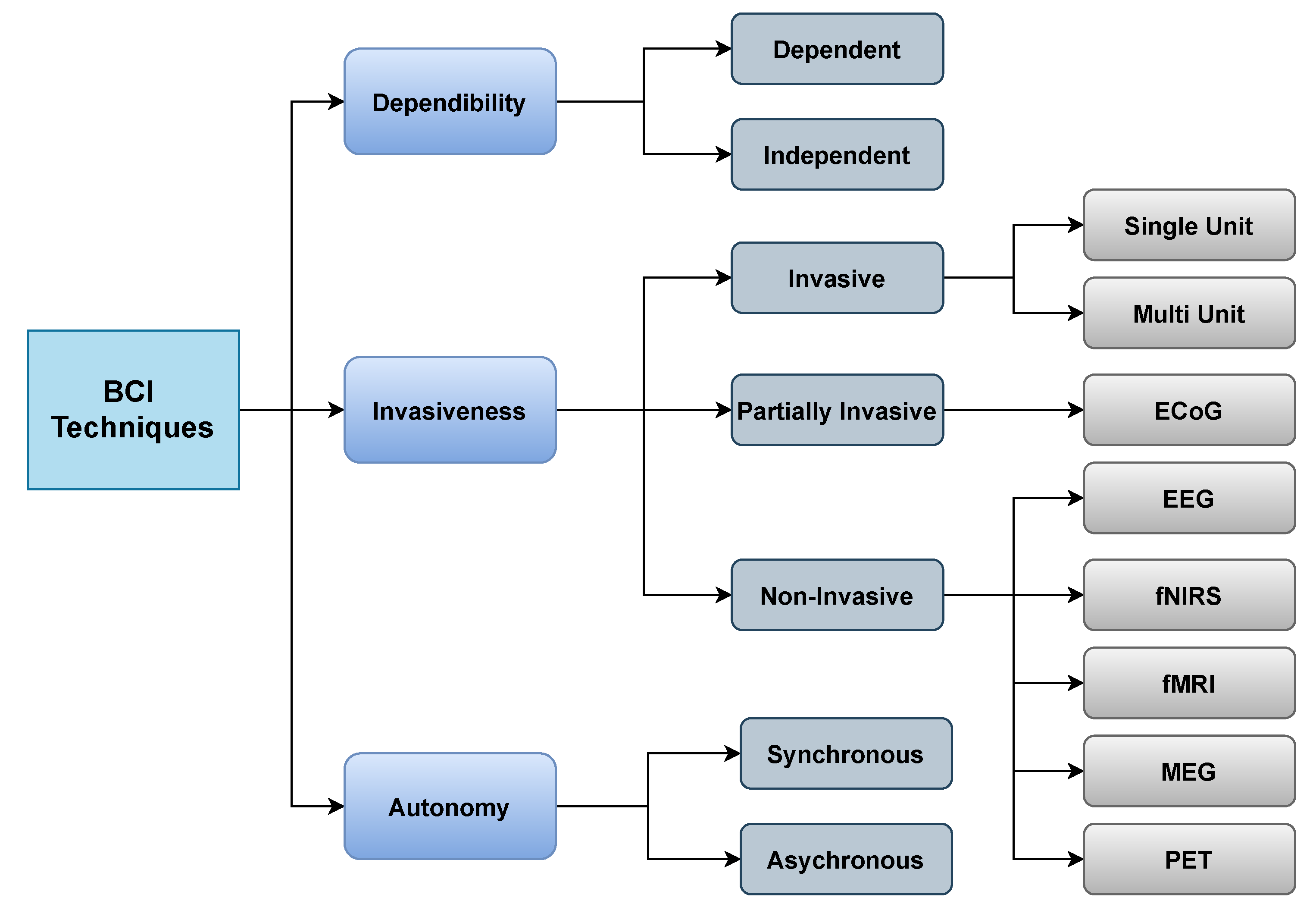

- Dependability: BCIs can be classified as dependent or independent. Dependent BCIs require some form of motor control from the operator or healthy subject, such as gaze control. Independent BCIs, on the other hand, do not require the individual to exert any form of motor control; this type of BCI is appropriate for stroke patients or severely disabled patients.

- Invasiveness: BCIs are also classified into three types according to invasiveness: invasive, partially invasive, and non-invasive. Invasive BCIs are by far the most accurate because they are implanted directly into the cortex, allowing researchers to record the activity of individual neurons. They are inserted directly into the brain during neurosurgery. There are two types of invasive BCIs: single-unit BCIs, which detect signals from a single site of brain cells, and multi-unit BCIs, which detect signals from several sites. Partially invasive (semi-invasive) BCIs use Electrocorticography (ECoG), a recording platform in which electrodes are placed on the exposed surface of the brain to detect electrical impulses originating from the cerebral cortex. Although this procedure is less intrusive, it still requires a surgical opening in the skull. Noninvasive BCIs use external sensors rather than brain implants. Electroencephalography (EEG), Magnetoencephalography (MEG), Positron emission tomography (PET), Functional magnetic resonance imaging (fMRI), and Functional near-infrared spectroscopy (fNIRS) are all noninvasive techniques used to analyze the brain. Among these, EEG is the most commonly used because of the low cost and portability of its equipment.

- Autonomy: BCIs can operate either synchronously or asynchronously; that is, the interaction between the user and the system can be time-dependent or time-independent. A BCI is called synchronous if the interaction takes place within a specific time window in response to a cue supplied by the system. In an asynchronous BCI, the subject can initiate a mental task at any time to engage with the system. Synchronous BCIs are less user-friendly than asynchronous BCIs; however, they are substantially easier to design.

3.1. Invasive

3.2. Partially Invasive

Electrocorticography (ECoG)

3.3. Noninvasive

3.3.1. Electroencephalography (EEG)

3.3.2. Magnetoencephalography (MEG)

3.3.3. Functional Magnetic Resonance Imaging (fMRI)

3.3.4. Functional Near-Infrared Spectroscopy (fNIRS)

3.3.5. Positron Emission Tomography (PET)

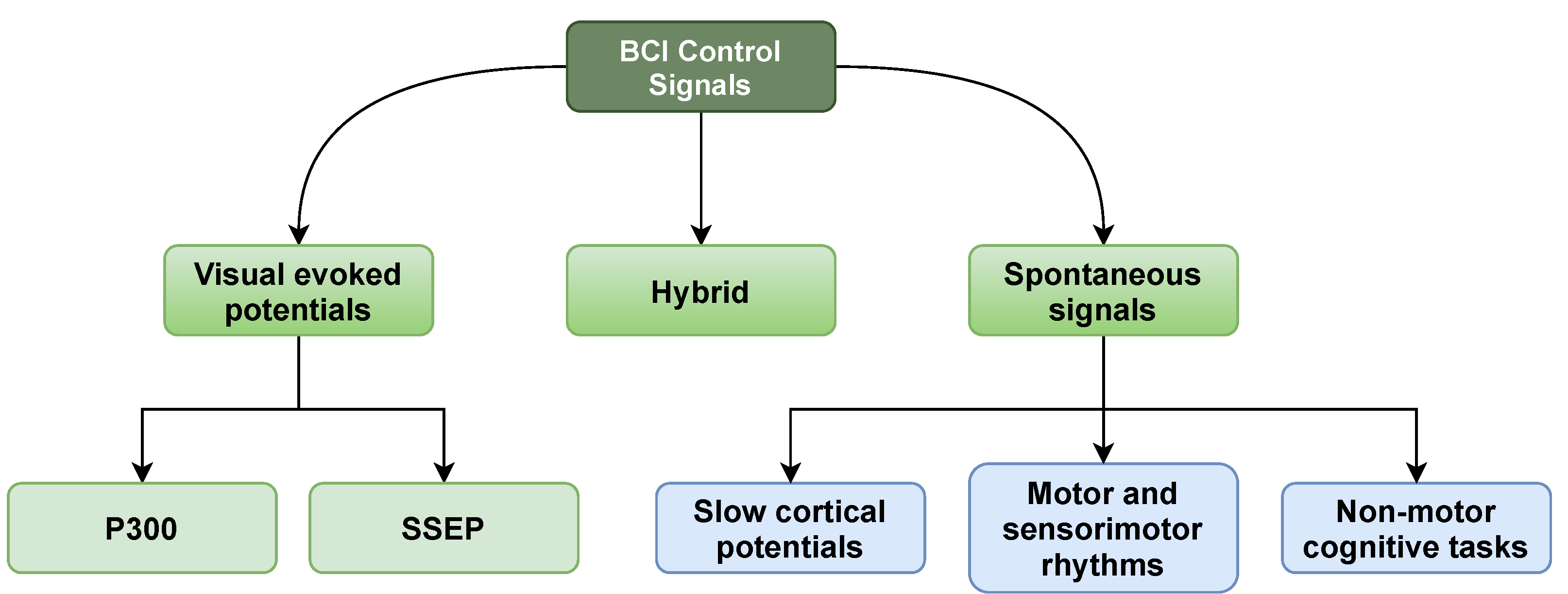

4. Brain Control Signals

4.1. Visual Evoked Potentials

4.1.1. Steady-State Evoked Potential (SSEP)

4.1.2. P300 Evoked Potentials (P300)

4.2. Spontaneous Signals

4.2.1. Motor and Sensorimotor Rhythms

4.2.2. Slow Cortical Potentials (SCP)

4.2.3. Non-Motor Cognitive Tasks

4.3. Hybrid Signals

5. Dataset

6. Signal Preprocessing and Signal Enhancement

6.1. Independent Component Analysis (ICA)

6.2. Common Average Reference (CAR)

6.3. Adaptive Filters

6.4. Principal Component Analysis (PCA)

6.5. Surface Laplacian (SL)

6.6. Signal De-Noising

- Wavelet de-noising and thresholding: Multi-resolution analysis is used to transform the EEG signal into the discrete wavelet domain. A constant or adaptive threshold level is then used to shrink the coefficients associated with the noise [261]. In a well-matched wavelet representation, small coefficients tend to capture the noise characteristics across time and scale, whereas the signal is concentrated in a few large coefficients. Threshold selection is therefore one of the most critical aspects of successful wavelet de-noising, since the threshold is what separates the signal from the noise, and thresholding approaches come in several forms. In hard thresholding, all coefficients whose magnitude is below a predetermined threshold are set to zero and the others are left unchanged. In soft thresholding, those coefficients are likewise set to zero, and the magnitudes of the remaining coefficients are reduced by the threshold value [262].

- Empirical mode decomposition (EMD): EMD is a signal analysis algorithm for nonlinear and non-stationary signals. It breaks a signal down into a series of zero-mean, amplitude- and frequency-modulated components, widely known as intrinsic mode functions (IMFs). EMD is often compared with wavelet decomposition; unlike wavelets, however, it extracts the IMFs adaptively from the data through an iterative sifting process rather than projecting the signal onto a predefined basis. An IMF is a function with only one extremum between successive zero crossings and a (local) mean value of zero. Once the IMFs have been extracted, a residue remains. These IMFs, together with the residue, are sufficient to characterize the signal [263].
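The hard and soft thresholding rules used in wavelet de-noising can be sketched in Python with NumPy. This is a minimal sketch under stated assumptions, not the method of [261,262]: it uses a single-level Haar DWT only because it needs no wavelet library (multi-level decompositions with db4 or other mother wavelets are more typical for EEG), and it chooses the threshold with the universal rule $\lambda = \hat{\sigma}\sqrt{2\ln N}$, estimating the noise level $\hat{\sigma}$ from the median absolute deviation of the detail coefficients. Soft thresholding here follows its standard definition: small coefficients are zeroed and the rest are shrunk toward zero by $\lambda$. All function names are hypothetical.

```python
import numpy as np

def haar_dwt(x):
    """One-level Haar DWT (x must have even length)."""
    x = np.asarray(x, dtype=float)
    approx = (x[0::2] + x[1::2]) / np.sqrt(2)
    detail = (x[0::2] - x[1::2]) / np.sqrt(2)
    return approx, detail

def haar_idwt(approx, detail):
    """Inverse of haar_dwt."""
    x = np.empty(2 * approx.size)
    x[0::2] = (approx + detail) / np.sqrt(2)
    x[1::2] = (approx - detail) / np.sqrt(2)
    return x

def hard_threshold(c, lam):
    # Zero every coefficient whose magnitude is below lam; keep the others unchanged.
    return np.where(np.abs(c) >= lam, c, 0.0)

def soft_threshold(c, lam):
    # Zero small coefficients and shrink the remaining ones toward zero by lam.
    return np.sign(c) * np.maximum(np.abs(c) - lam, 0.0)

def wavelet_denoise(x, mode="soft"):
    """Threshold the detail coefficients of a one-level Haar DWT, then invert."""
    approx, detail = haar_dwt(x)
    sigma = np.median(np.abs(detail)) / 0.6745      # robust noise estimate (MAD)
    lam = sigma * np.sqrt(2 * np.log(len(x)))       # universal threshold (assumption)
    shrink = soft_threshold if mode == "soft" else hard_threshold
    return haar_idwt(approx, shrink(detail, lam))
```

For example, `soft_threshold(np.array([-3.0, -0.5, 0.5, 3.0]), 1.0)` gives `[-2, 0, 0, 2]`, while hard thresholding keeps `-3` and `3` unchanged.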
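The EMD sifting process can be illustrated with a deliberately simplified Python/NumPy sketch; it is not a faithful EMD implementation. Assumptions: the upper and lower envelopes are built by linear interpolation through the local extrema (standard EMD uses cubic splines), each sift runs a fixed number of iterations instead of applying a formal stopping criterion, and end effects are handled crudely by pinning the envelopes to the end samples. All function names are hypothetical.

```python
import numpy as np

def local_extrema(x):
    """Indices of interior local maxima and minima of a 1-D array."""
    d = np.diff(x)
    maxima = np.where((d[:-1] > 0) & (d[1:] <= 0))[0] + 1
    minima = np.where((d[:-1] < 0) & (d[1:] >= 0))[0] + 1
    return maxima, minima

def envelope(x, idx):
    """Envelope through x[idx], linearly interpolated and pinned to both end samples.

    Simplification: standard EMD fits cubic splines through the extrema.
    """
    knots = np.concatenate(([0], idx, [x.size - 1]))
    return np.interp(np.arange(x.size), knots, x[knots])

def sift(x, n_iter=10):
    """Extract one IMF-like component by repeatedly removing the mean envelope."""
    h = x.copy()
    for _ in range(n_iter):                      # fixed count instead of a stopping criterion
        maxima, minima = local_extrema(h)
        if maxima.size < 2 or minima.size < 2:
            break
        mean_env = (envelope(h, maxima) + envelope(h, minima)) / 2
        h = h - mean_env
    return h

def emd(x, max_imfs=5):
    """Decompose x into IMF-like components plus a residue; sum(imfs) + residue == x."""
    residue = np.asarray(x, dtype=float).copy()
    imfs = []
    for _ in range(max_imfs):
        maxima, minima = local_extrema(residue)
        if maxima.size < 2 or minima.size < 2:   # residue is (nearly) monotonic: stop
            break
        imf = sift(residue)
        imfs.append(imf)
        residue = residue - imf
    return imfs, residue
```

Applied to the sum of a 40 Hz and a 4 Hz sine sampled at 1 kHz, the first IMF-like component should mainly capture the faster oscillation, and by construction the components plus the residue reconstruct the input.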

7. Feature Extraction

7.1. EEG-Based Feature Extraction

7.1.1. Time Domain

- Event related potentials: Event-related potentials (ERPs) are very small voltages generated in brain regions in response to specific events or stimuli. They are time-locked EEG changes that provide a safe and noninvasive way to study psychophysiological aspects of mental activity. A wide range of sensory, cognitive, or motor stimuli can trigger ERPs [269,270]. ERPs are useful for measuring the time needed to process a stimulus and produce a response. Changoluisa, V. et al. [271] used ERPs to build an adaptive strategy for identifying and detecting changeable ERPs; continuous monitoring of the ERP component curves takes account of both their temporal and spatial information. The main limitation of ERPs is their poor spatial resolution, even though their temporal resolution is remarkable [272]. Furthermore, a significant drawback of ERPs is the difficulty of determining where in the brain the electrical activity originates.

- Statistical features: Several researchers [273,274,275] have employed the following statistical characteristics in their work:

  - Mean absolute value: $\mathrm{MAV} = \frac{1}{N}\sum_{n=1}^{N} |x(n)|$

  - Power: $P = \frac{1}{N}\sum_{n=1}^{N} x(n)^2$

  - Standard deviation: $\mathrm{SD} = \sqrt{\frac{1}{N}\sum_{n=1}^{N} \left(x(n) - \mu\right)^2}$

  - Root mean square (RMS): $\mathrm{RMS} = \sqrt{\frac{1}{N}\sum_{n=1}^{N} x(n)^2}$

  - Square root of amplitude (SRA): $\mathrm{SRA} = \left(\frac{1}{N}\sum_{n=1}^{N} \sqrt{|x(n)|}\right)^2$

  - Skewness value (SV): $\mathrm{SV} = \dfrac{\frac{1}{N}\sum_{n=1}^{N} \left(x(n) - \mu\right)^3}{\mathrm{SD}^3}$

  - Kurtosis value (KV): $\mathrm{KV} = \dfrac{\frac{1}{N}\sum_{n=1}^{N} \left(x(n) - \mu\right)^4}{\mathrm{SD}^4}$

  where $x(n)$ is the pre-processed EEG signal with $N$ samples and $\mu$ is the mean of the samples. Statistical features are useful because of their low computational cost.

- Hjorth features: Bo Hjorth introduced the Hjorth parameters in 1970 [276]; these three statistical parameters used in time-domain signal processing are activity, mobility, and complexity. Dagdevir, E. et al. [277] proposed a motor imagery-based BCI system in which the features were extracted from the dataset using the Hjorth algorithm. Hjorth features are well suited to real-time analysis because of their low computational cost. However, their estimates of the signal parameters are subject to statistical bias.

- Phase lag index (PLI): Functional connectivity is determined by calculating the PLI for pairs of channels. Because it reflects the actual interaction between sources, this index may help estimate phase synchronization in EEG time series. PLI measures the asymmetry of the distribution of phase differences between two signals. Its advantage is that it is less affected by zero-lag phase coupling: it quantifies only the nonzero phase lag between the time series of two sources, which makes it less vulnerable to common sources such as volume conduction. Feng, L.Z. et al. [278] studied the effectiveness of functional connectivity features evaluated by PLI, weighted phase lag index (wPLI), and phase-locking value (PLV) for motor imagery (MI) classification.
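Because ERPs are small relative to the background EEG, they are usually recovered by averaging many stimulus-locked epochs: the time-locked response survives, while activity that is not phase-locked to the stimulus is attenuated (its amplitude falls roughly as $1/\sqrt{K}$ for $K$ trials). The following Python/NumPy sketch assumes a single-channel recording and a list of stimulus onset sample indices; the function name and the default epoch window of -0.1 s to 0.6 s are illustrative choices, not values from the cited studies.

```python
import numpy as np

def average_erp(eeg, onsets, fs, tmin=-0.1, tmax=0.6):
    """Average stimulus-locked epochs of a single-channel EEG recording.

    eeg: 1-D array; onsets: stimulus onset sample indices; fs: sampling rate in Hz.
    Returns (times in s, averaged ERP). Epochs are baseline-corrected with their
    pre-stimulus mean; epochs that would run past the recording edges are skipped.
    """
    start, stop = int(round(tmin * fs)), int(round(tmax * fs))
    epochs = []
    for onset in onsets:
        if onset + start < 0 or onset + stop > eeg.size:
            continue
        epoch = eeg[onset + start:onset + stop].astype(float)   # copy, eeg is untouched
        if start < 0:
            epoch -= epoch[:-start].mean()       # baseline = samples before the stimulus
        epochs.append(epoch)
    times = np.arange(start, stop) / fs
    return times, np.mean(epochs, axis=0)
```

The latency of a component (for instance, a P300-like peak) can then be read off as `times[np.argmax(erp)]` within a chosen search window.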
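The statistical features listed above (MAV, power, SD, RMS, SRA, skewness, and kurtosis) and the Hjorth parameters can be computed from one pre-processed EEG segment with a few NumPy calls. This is a minimal sketch: it uses the population (1/N) standard deviation for SD, skewness, and kurtosis, and it uses the standard Hjorth definitions, activity $= \operatorname{var}(x)$, mobility $= \sqrt{\operatorname{var}(x')/\operatorname{var}(x)}$, and complexity $= \operatorname{mobility}(x')/\operatorname{mobility}(x)$, with derivatives approximated by first differences (an assumption). The function names are hypothetical.

```python
import numpy as np

def statistical_features(x):
    """Time-domain statistical features of a pre-processed EEG segment x(n)."""
    x = np.asarray(x, dtype=float)
    mu, sd = x.mean(), x.std()          # population std (1/N normalization)
    return {
        "mav": np.mean(np.abs(x)),
        "power": np.mean(x ** 2),
        "sd": sd,
        "rms": np.sqrt(np.mean(x ** 2)),
        "sra": np.mean(np.sqrt(np.abs(x))) ** 2,
        "skewness": np.mean((x - mu) ** 3) / sd ** 3,
        "kurtosis": np.mean((x - mu) ** 4) / sd ** 4,
    }

def hjorth(x):
    """Hjorth activity, mobility and complexity; derivatives via first differences."""
    x = np.asarray(x, dtype=float)
    dx = np.diff(x)
    ddx = np.diff(dx)
    activity = np.var(x)
    mobility = np.sqrt(np.var(dx) / activity)
    complexity = np.sqrt(np.var(ddx) / np.var(dx)) / mobility
    return activity, mobility, complexity
```

For a pure sinusoid the complexity is close to 1, and it grows as the signal becomes less sine-like, which is why complexity is often read as a measure of bandwidth.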
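The PLI can be written as $\mathrm{PLI} = \left|\langle \operatorname{sign}(\sin \Delta\phi(t)) \rangle\right|$, where $\Delta\phi(t)$ is the instantaneous phase difference between two channels and $\langle\cdot\rangle$ is the average over time. A minimal Python/NumPy sketch follows, assuming both channels have already been band-pass filtered to a narrow band (instantaneous phase is only meaningful for narrow-band signals); the analytic signal is built with the FFT, which is the usual Hilbert-transform construction. Function names are hypothetical.

```python
import numpy as np

def analytic_signal(x):
    """Analytic signal via the FFT: keep DC (and Nyquist), double positive bins, drop negative bins."""
    x = np.asarray(x, dtype=float)
    n = x.size
    h = np.zeros(n)
    if n % 2 == 0:
        h[0] = h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[0] = 1.0
        h[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x) * h)

def phase_lag_index(x, y):
    """PLI = |mean(sign(sin(phase_x - phase_y)))| for two band-limited channels."""
    dphi = np.angle(analytic_signal(x)) - np.angle(analytic_signal(y))
    return np.abs(np.mean(np.sign(np.sin(dphi))))
```

Two identical channels (pure zero-lag coupling, as volume conduction would produce) give a PLI of 0, whereas a consistent quarter-cycle lag gives a PLI of 1.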

7.1.2. Frequency Domain

- Fast Fourier transform (FFT): The Fourier transform is a mathematical transformation that converts a time-domain signal into the frequency domain. The Discrete Fourier Transform (DFT) [279], Short-Time Fourier Transform (STFT) [280,281], and Fast Fourier Transform (FFT) [282] are the Fourier transforms most commonly used for EEG-based emotion identification. Djamal, E.C. et al. [283] developed a wireless device that records a player's brain activity and extracts each action using the FFT. The FFT computes the DFT in $O(N \log N)$ operations instead of $O(N^2)$, which makes it suitable for real-time applications. It is a valuable tool for analyzing stationary signals. Its limitations are that it can only transform a finite segment of waveform data and that a window weighting function must be applied to the waveform to compensate for spectral leakage.
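A common FFT-based EEG feature is the power in each frequency band, computed after applying a window to limit the spectral leakage mentioned above. The sketch below, in Python with NumPy, assumes a single-channel segment, a Hann window, and conventional band edges (theta 4–8 Hz, alpha 8–13 Hz, beta 13–30 Hz); the exact edges vary between studies, and the function name is hypothetical.

```python
import numpy as np

# Conventional EEG band edges in Hz (illustrative; exact edges vary between studies).
BANDS = {"theta": (4.0, 8.0), "alpha": (8.0, 13.0), "beta": (13.0, 30.0)}

def band_powers(x, fs, bands=BANDS):
    """Band power of one EEG segment from a Hann-windowed FFT periodogram."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()                               # remove DC offset
    w = np.hanning(x.size)                         # window weighting to reduce leakage
    spectrum = np.fft.rfft(x * w)
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    psd = np.abs(spectrum) ** 2 / (fs * np.sum(w ** 2))
    if x.size % 2 == 0:
        psd[1:-1] *= 2                             # one-sided: double all bins except DC and Nyquist
    else:
        psd[1:] *= 2                               # odd length: there is no Nyquist bin
    df = freqs[1] - freqs[0]
    return {name: psd[(freqs >= lo) & (freqs < hi)].sum() * df
            for name, (lo, hi) in bands.items()}
```

With this scaling, the band powers of a unit-amplitude 10 Hz sine add up to about 0.5 (its mean power), almost all of it in the alpha band.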

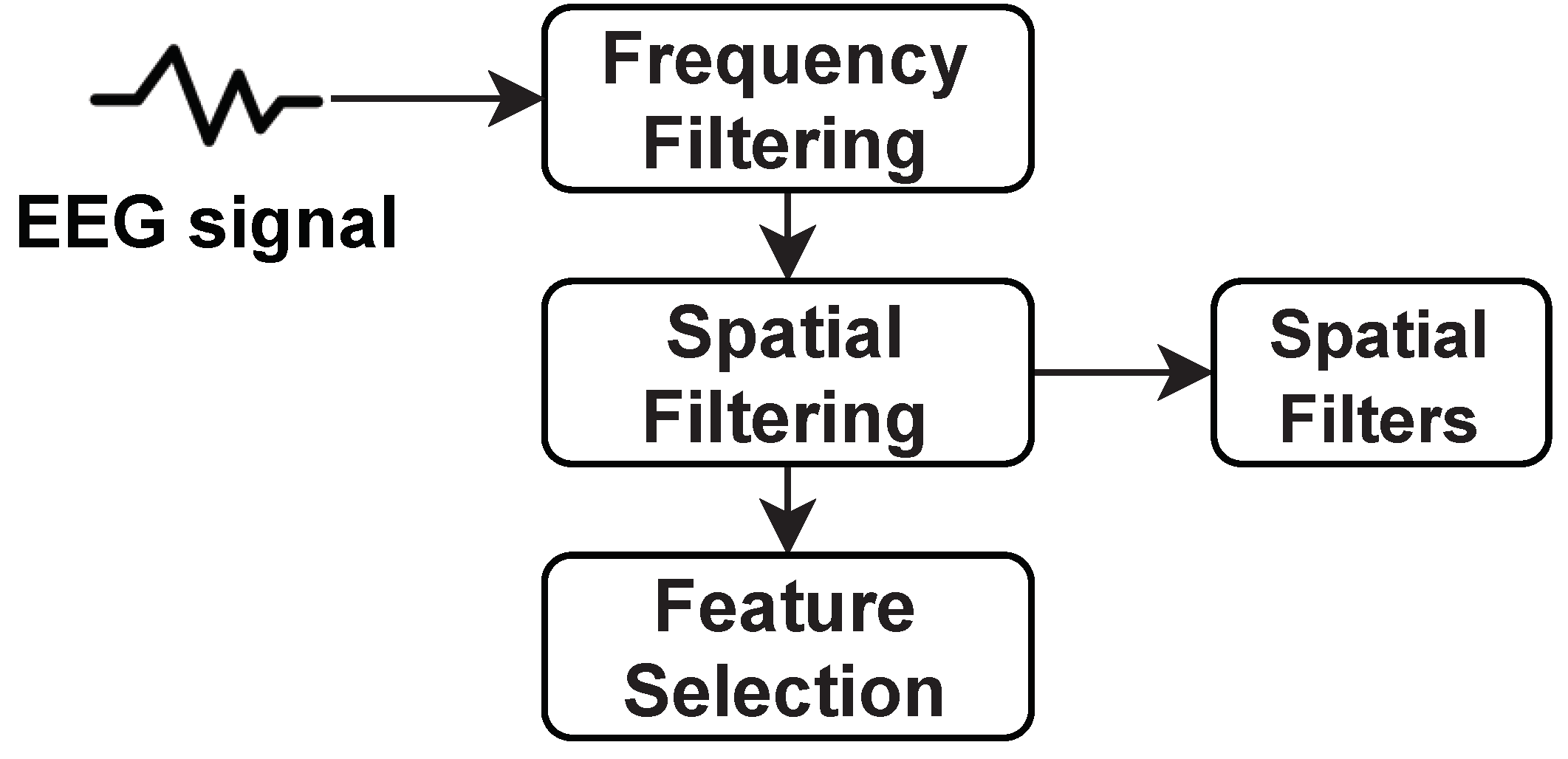

- Common spatial patterns (CSP): CSP is a spatial filtering technique usually employed in EEG- and ECoG-based BCIs to extract classification-relevant information [284]. Given two classes of data, it maximizes the ratio of their variances to increase the separability of the two classes. When a separate dimensionality reduction step precedes CSP, CSP appears to perform better and to generalize better. The basic structure of CSP is shown in Figure 5. CSP provides spatial filters that minimize the variance of one class while simultaneously maximizing the variance of the other class. The multichannel EEG signal is first band-pass filtered to select the frequency band of interest. After this frequency filtering, the spatial filters are applied to extract spatial information from the signal. Spatial information is essential for differentiating intent patterns in multichannel EEG recordings for BCI. Because the performance of this spatial filtering depends on the operational frequency band of the EEG, CSP is categorized as a frequency-domain feature. However, CSP also acts as a signal enhancement step, as it requires no prior expert knowledge of subject-specific frequency bands.

- Higher-order spectra (HOS): Second-order signal measures include the auto-correlation function and the power spectrum. Second-order measures work satisfactorily if the signal follows a Gaussian probability distribution. However, most real-world signals are non-Gaussian. Higher-Order Spectra (HOS) [285] extend second-order measures and work well for non-Gaussian signals. In addition, most physiological signals are nonlinear and non-stationary, and HOS are well suited to detecting such deviations from linearity or stationarity. HOS are calculated from the Fourier transform at various frequencies; the third-order spectrum (bispectrum), for example, is

  $$B(k, l) = E\left[X(k)\,X(l)\,X^{*}(k + l)\right],$$

  where $X(k)$ is the Fourier transform of the raw EEG signal, $X^{*}$ denotes its complex conjugate, $E[\cdot]$ denotes the expectation (in practice, an average over segments), and $l$ is a shifting parameter.
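The CSP filters can be computed by whitening the composite covariance of the two classes and then diagonalizing the whitened covariance of one class, which is equivalent to solving the generalized eigenvalue problem for the two class covariances. The Python/NumPy sketch below assumes two-class trials that are already band-pass filtered and zero-mean, stored as arrays of shape (trials, channels, samples), and uses the normalized log-variance of the filtered signals as features; function names are hypothetical.

```python
import numpy as np

def mean_normalized_cov(trials):
    """Average trace-normalized spatial covariance; trials: (n_trials, channels, samples)."""
    covs = []
    for x in trials:
        c = x @ x.T
        covs.append(c / np.trace(c))
    return np.mean(covs, axis=0)

def csp_filters(trials_1, trials_2, n_pairs=1):
    """Return 2*n_pairs CSP spatial filters as rows.

    The first n_pairs rows minimize class-1 variance (maximize class-2 variance);
    the last n_pairs rows maximize class-1 variance.
    """
    c1 = mean_normalized_cov(trials_1)
    c2 = mean_normalized_cov(trials_2)
    evals, evecs = np.linalg.eigh(c1 + c2)
    whitening = np.diag(evals ** -0.5) @ evecs.T          # maps c1 + c2 to the identity
    d, b = np.linalg.eigh(whitening @ c1 @ whitening.T)    # ascending eigenvalues d
    w = b.T @ whitening        # row i gives class-1 variance d[i] and class-2 variance 1 - d[i]
    keep = np.r_[np.arange(n_pairs), np.arange(w.shape[0] - n_pairs, w.shape[0])]
    return w[keep]

def log_var_features(w, x):
    """Normalized log-variance of the spatially filtered trial x (channels, samples)."""
    v = np.var(w @ x, axis=1)
    return np.log(v / v.sum())
```

In practice, the filters are fitted on training trials only and the resulting log-variance features are passed to a classifier such as LDA.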
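The bispectrum $B(k, l) = E[X(k)\,X(l)\,X^{*}(k + l)]$ can be estimated directly from the FFT by replacing the expectation with an average over signal segments. The Python/NumPy sketch below is a minimal direct estimator without windowing or smoothing; the three-tone test signal is a hypothetical example of quadratic phase coupling, the kind of nonlinear interaction that second-order measures such as the power spectrum cannot detect.

```python
import numpy as np

def bispectrum(segments, k, l):
    """Direct (FFT-based) estimate of B(k, l) = E[X(k) X(l) X*(k + l)].

    segments: array (n_segments, n_samples); the expectation is replaced by an
    average over segments. k, l: integer frequency-bin indices.
    """
    X = np.fft.fft(segments, axis=1)
    return np.mean(X[:, k] * X[:, l] * np.conj(X[:, k + l]))

def three_tone_segments(rng, n_segments=64, n=64, k1=5, k2=9, coupled=True):
    """Hypothetical test signal: tones at bins k1, k2 and k1 + k2 with random phases.

    With coupled=True the third phase equals the sum of the first two
    (quadratic phase coupling); otherwise all three phases are independent.
    """
    t = np.arange(n) / n
    segs = np.empty((n_segments, n))
    for s in range(n_segments):
        p1, p2, p3 = rng.uniform(0, 2 * np.pi, 3)
        if coupled:
            p3 = p1 + p2
        segs[s] = (np.cos(2 * np.pi * k1 * t + p1) + np.cos(2 * np.pi * k2 * t + p2)
                   + np.cos(2 * np.pi * (k1 + k2) * t + p3))
    return segs
```

The power spectra of the coupled and uncoupled signals are identical, but `abs(bispectrum(segs, 5, 9))` is large only for the coupled one, because the random phases cancel in the triple product.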

7.1.3. Time–Frequency Domain

- Autoregressive model: The Autoregressive (AR) model has been frequently employed for EEG analysis. Its central premise is that real EEG can be approximated by an AR process. Under this premise, the order and parameters of the approximating AR model are chosen to fit the observed EEG as precisely as possible. AR produces an overly smooth spectrum if the model order is too low and spurious peaks if it is too high [287]. AR also reduces leakage and improves frequency resolution, but choosing the model order in spectral estimation is difficult. The observed data $x(n)$ are assumed to result from a linear system with an all-pole transfer function $H(z)$ driven by white noise, so that $x(n)$ follows an AR model of order $p$ [288]:

  $$x(n) = \sum_{k=1}^{p} a_k\, x(n-k) + e(n),$$

  where $a_k$ are the AR parameters, $x(n)$ are the observations, and $e(n)$ is the excitation white noise. The most challenging parts of AR EEG modeling are choosing the correct model order and tracking the changing spectrum correctly.

- Wavelet Transform (WT): The WT encodes the original EEG data using wavelets, which serve as simple building blocks. It analyzes irregular or transient data patterns using variable windows: wide windows for low frequencies and narrow windows for high frequencies. WT is considered an advanced approach because it offers simultaneous localization in time and frequency, which is a significant advantage. The wavelets can be discrete or continuous and describe the signal's characteristics in the time–frequency domain. The Discrete Wavelet Transform (DWT) and the Continuous Wavelet Transform (CWT) are both used frequently in EEG analysis [289]. DWT is now more widely used than CWT because CWT is highly redundant. DWT decomposes a signal into approximation and detail coefficients corresponding to distinct frequency ranges while maintaining the temporal information in the signal. Because selecting a mother wavelet is challenging, many researchers try several candidate wavelets before choosing the one that produces the best results. In wavelet-based feature extraction, the Daubechies wavelet of order 4 (db4) is the most commonly employed [290].
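AR parameters are commonly estimated with the Yule-Walker equations, which match the model to the sample autocorrelation; the AR spectrum then follows in closed form. The Python/NumPy sketch below assumes the model $x(n) = \sum_{k=1}^{p} a_k x(n-k) + e(n)$ with white noise $e(n)$ and a model order $p$ chosen in advance (selecting $p$, for example with an information criterion, is not shown). Function names are hypothetical.

```python
import numpy as np

def yule_walker(x, p):
    """Estimate AR(p) coefficients a_1..a_p and the noise variance.

    Model: x(n) = sum_{k=1}^{p} a_k x(n-k) + e(n), with e(n) white noise.
    """
    x = np.asarray(x, dtype=float) - np.mean(x)
    n = x.size
    # Biased autocorrelation estimates r(0)..r(p).
    r = np.array([np.dot(x[:n - k], x[k:]) / n for k in range(p + 1)])
    # Toeplitz system R a = [r(1), ..., r(p)].
    R = np.array([[r[abs(i - j)] for j in range(p)] for i in range(p)])
    a = np.linalg.solve(R, r[1:])
    sigma2 = r[0] - np.dot(a, r[1:])
    return a, sigma2

def ar_psd(a, sigma2, freqs, fs):
    """AR power spectral density sigma2 / (fs * |1 - sum_k a_k exp(-j 2 pi f k / fs)|^2)."""
    k = np.arange(1, len(a) + 1)
    denom = 1.0 - np.exp(-2j * np.pi * np.outer(freqs, k) / fs) @ a
    return sigma2 / (fs * np.abs(denom) ** 2)
```

Fitting a simulated AR(2) process with coefficients 0.6 and -0.3 recovers values close to them; because the spectrum is a smooth rational function of frequency, it can be evaluated on any frequency grid without the windowing needed by the FFT.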
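The multilevel DWT splits a signal into one approximation and several detail coefficient sets, each covering roughly one octave. For a sampling rate of 256 Hz, for example, the details D1 to D5 cover approximately 64–128, 32–64, 16–32, 8–16, and 4–8 Hz, and the approximation A5 covers 0–4 Hz, which is close to the classical EEG bands. The Python/NumPy sketch below uses the Haar wavelet purely so that it needs no wavelet library; it is an assumption for illustration, and db4 (as noted above) is the more common choice, while Haar filters separate the bands only coarsely. Function names are hypothetical.

```python
import numpy as np

def haar_wavedec(x, levels):
    """Multilevel Haar DWT returning [cA_L, cD_L, ..., cD_1].

    len(x) must be divisible by 2**levels.
    """
    approx = np.asarray(x, dtype=float)
    details = []
    for _ in range(levels):
        even, odd = approx[0::2], approx[1::2]
        details.append((even - odd) / np.sqrt(2))   # detail: upper half of the current band
        approx = (even + odd) / np.sqrt(2)          # approximation: lower half, half the samples
    return [approx] + details[::-1]

def haar_waverec(coeffs):
    """Inverse of haar_wavedec."""
    approx = coeffs[0]
    for detail in coeffs[1:]:
        x = np.empty(2 * approx.size)
        x[0::2] = (approx + detail) / np.sqrt(2)
        x[1::2] = (approx - detail) / np.sqrt(2)
        approx = x
    return approx
```

Because the Haar transform is orthonormal, the energy of the coefficients equals the energy of the signal, so per-level energies or variances can be used directly as features.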

7.2. ECoG-Based Features

7.2.1. Linear Filtering

7.2.2. Spatial Filtering

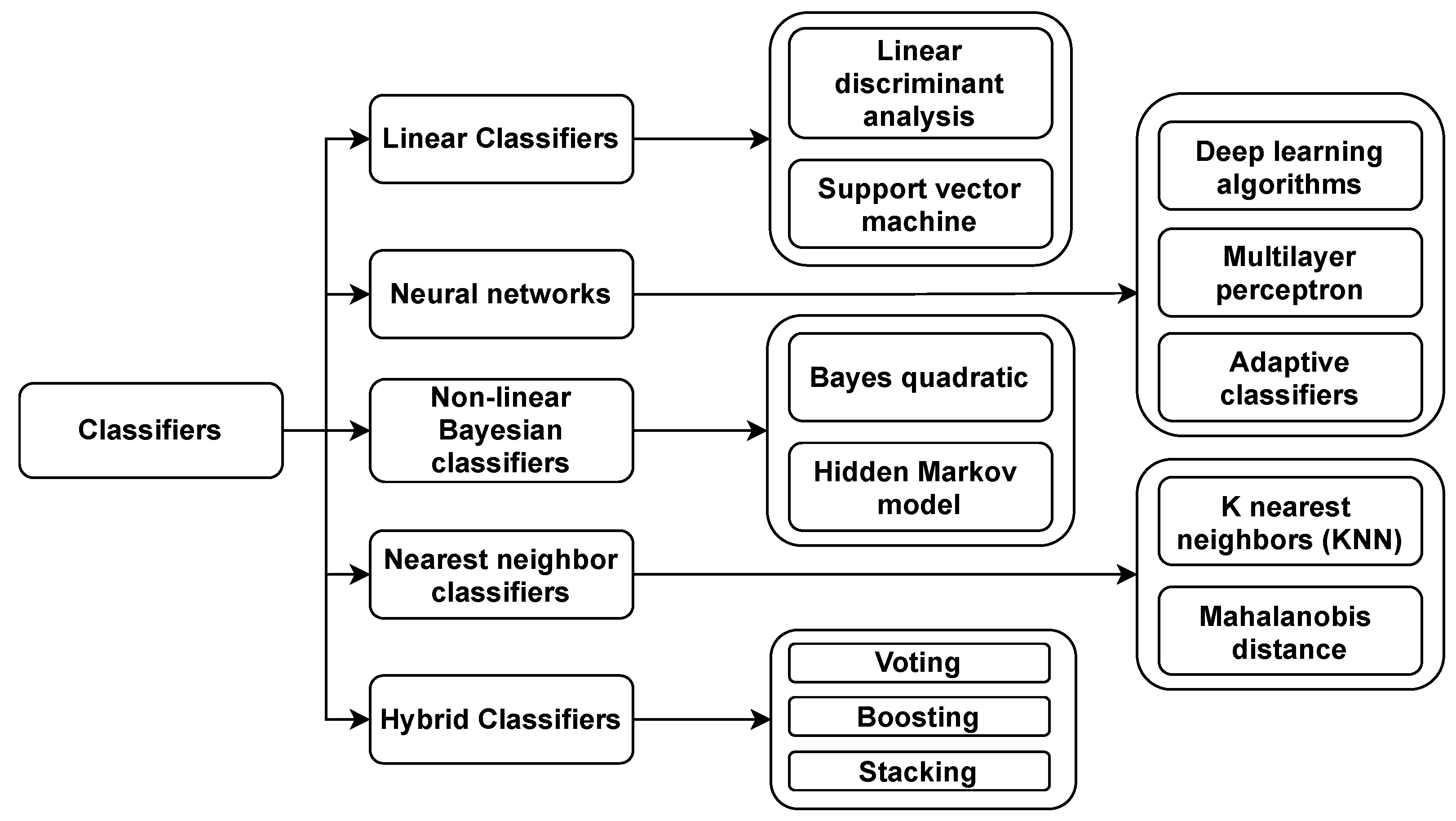

8. BCI Classifiers

8.1. Linear Classifiers

8.1.1. Linear Discriminant Analysis (LDA)

8.1.2. Support Vector Machine (SVM)

8.2. Neural Networks (NN)

8.2.1. Deep Learning (DL) Models

- Convolutional Neural Network (CNN): A CNN is an ANN originally designed to analyze visual input, as in image recognition and processing. A CNN is built from three main types of layers: convolutional, pooling, and fully connected layers. A CNN can reduce the input data to compact representations with minimal information loss while capturing the characteristic spatial relationships of EEG patterns. EEG-based BCI applications that use CNNs include fatigue detection, sleep stage classification, stress detection, motor imagery data processing, and emotion recognition. In BCI, CNN models are applied to the input brain signals to exploit latent semantic dependencies.

- Generative Adversarial Network (GAN): GANs are a recent ML technique. A GAN uses two ANN models, a generator and a discriminator, that compete with each other and are trained simultaneously. GANs allow machines to generate new images on their own. In EEG-based BCI, the signals are first recorded and then passed to GAN techniques to regenerate images [299]. A major application of GAN-based BCI systems is data augmentation, which increases the amount of available training data and makes it feasible to train more complex DL models. It can also reduce overfitting and increase classifier accuracy and robustness. In the context of BCI, generative algorithms, including GANs, are frequently used to rebuild or generate sets of brain signal recordings to enlarge the training set.

- Recurrent Neural Network (RNN): In its basic form, an RNN is a layer whose output is fed back into its input. Because it has access to data from past time steps, the RNN architecture allows the model to store memory [300,301]. Since RNNs and CNNs have strong temporal and spatial feature extraction abilities, respectively, it is logical to combine them for joint temporal and spatial feature learning. An RNN can be considered a more powerful version of a hidden Markov model (HMM) and has been used to classify EEG accurately [302]. Long short-term memory (LSTM) is a kind of RNN whose architecture allows it to learn long-term dependencies, which standard RNNs have difficulty capturing. It contains a dedicated memory cell and uses a series of “gates” to manage the flow of data. RNNs and LSTMs have proven effective for modeling time-series tasks such as handwriting and speech recognition [303].
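The three CNN layer types can be made concrete with a forward pass written in plain NumPy. This is an untrained, illustrative sketch, not a published EEG architecture: the input shape (8 channels by 250 samples, that is, 1 s at an assumed 250 Hz), the four 25-sample kernels spanning all channels, the pooling size, and the random weights are all hypothetical choices. Real models are trained with a deep learning framework.

```python
import numpy as np

def conv1d(x, kernels, bias):
    """'Valid' 1-D convolution (implemented as cross-correlation, as in most DL libraries).

    x: (channels, time); kernels: (filters, channels, width); bias: (filters,)
    Returns an array of shape (filters, time - width + 1).
    """
    n_filters, _, width = kernels.shape
    t_out = x.shape[1] - width + 1
    out = np.empty((n_filters, t_out))
    for i in range(t_out):
        window = x[:, i:i + width]
        out[:, i] = np.tensordot(kernels, window, axes=([1, 2], [0, 1])) + bias
    return out

def max_pool1d(x, size):
    """Non-overlapping max pooling along time; leftover samples are dropped."""
    t = x.shape[1] // size * size
    return x[:, :t].reshape(x.shape[0], -1, size).max(axis=2)

def cnn_forward(x, params):
    """Convolutional layer -> ReLU -> pooling layer -> fully connected layer -> softmax."""
    h = np.maximum(conv1d(x, params["conv_w"], params["conv_b"]), 0.0)
    h = max_pool1d(h, params["pool"]).ravel()
    logits = params["fc_w"] @ h + params["fc_b"]
    p = np.exp(logits - logits.max())      # numerically stable softmax
    return p / p.sum()

# Usage with random (untrained) weights: 8 channels, 250 samples, 2 classes.
rng = np.random.default_rng(0)
x = rng.standard_normal((8, 250))
params = {
    "conv_w": 0.1 * rng.standard_normal((4, 8, 25)),   # 4 filters, each spanning all channels
    "conv_b": np.zeros(4),
    "pool": 4,
    "fc_w": 0.1 * rng.standard_normal((2, 4 * 56)),    # (250 - 25 + 1) // 4 = 56 pooled steps
    "fc_b": np.zeros(2),
}
probs = cnn_forward(x, params)   # class probabilities, shape (2,)
```

Each kernel spans all channels, so the convolution mixes spatial and temporal information, and pooling shortens the sequence before the fully connected layer.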
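The recurrence and the LSTM gates can be shown with a single-layer forward pass in NumPy. This is a minimal sketch of the standard LSTM cell: the previous hidden state is fed back with each new input, the forget, input, and output gates control the memory cell, and the weights are random and untrained. The stacked gate order (input, forget, output, candidate) and the function names are assumptions made for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_forward(x_seq, W, U, b):
    """Run one LSTM layer over a sequence.

    x_seq: (time, n_in); W: (4*n_h, n_in); U: (4*n_h, n_h); b: (4*n_h,)
    Stacked gate order (an assumption): input, forget, output, candidate.
    Returns the hidden state at every time step, shape (time, n_h).
    """
    n_h = U.shape[1]
    h = np.zeros(n_h)
    c = np.zeros(n_h)                            # memory cell
    hs = []
    for x_t in x_seq:
        z = W @ x_t + U @ h + b                  # previous output h is fed back in
        i = sigmoid(z[:n_h])                     # input gate: how much new content to write
        f = sigmoid(z[n_h:2 * n_h])              # forget gate: how much old memory to keep
        o = sigmoid(z[2 * n_h:3 * n_h])          # output gate: how much memory to expose
        g = np.tanh(z[3 * n_h:])                 # candidate cell update
        c = f * c + i * g
        h = o * np.tanh(c)
        hs.append(h)
    return np.array(hs)
```

For EEG, `x_seq` would typically hold one multichannel sample (or one feature vector) per time step, and the last hidden state, or all of them, would feed a classifier.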

8.2.2. Multilayer Perceptron (MLP)

8.2.3. Adaptive Classifiers

8.3. Nonlinear Bayesian Classifiers

8.3.1. Bayes Quadratic

8.3.2. Hidden Markov Model

8.4. Nearest Neighbor Classifiers

8.4.1. K Nearest Neighbors

8.4.2. Mahalanobis Distance

8.5. Hybrid

8.5.1. Boosting

8.5.2. Voting

8.5.3. Stacking

9. Evaluation Measurement

9.1. Generally Used Evaluation Metrics

9.1.1. The Confusion Matrix

9.1.2. Classification Accuracy and Error Rate

9.1.3. Information Transfer Rate

- Target detection accuracy: Target identification accuracy may be enhanced by increasing the Signal-to-Noise Ratio (SNR) and the separability of the classes. Several techniques, such as trial averaging, spatial filtering, and eliciting stronger task-related EEG signals, are employed in the preprocessing step to increase the SNR. Many applications use trial averaging across subjects to improve the performance of a single BCI. Mental states that elicit stronger task-related EEG signals may likewise be used to raise the SNR [53].

- Number of classes: Increasing the number of classes can raise the ITR and makes it possible to build more sophisticated applications. Stimulus coding techniques adopted for BCI systems include time-division multiple access (TDMA), frequency-division multiple access (FDMA), and code-division multiple access (CDMA) [243,329]. P300-based BCIs, for example, use TDMA to code the target stimulus, whereas FDMA and CDMA have been used in VEP-based BCI systems.

- Target detection time: The detection time is the interval between the moment a user first expresses their intent and the moment the system makes a decision. One goal of BCI systems is to improve the ITR by reducing the target detection time. Adaptive techniques, such as the “dynamic halting” method, may be used to minimize the target detection time [330].
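The three factors above (accuracy $P$, number of classes $N$, and time per selection $T$) enter the widely used Wolpaw definition of ITR: $B = \log_2 N + P\log_2 P + (1-P)\log_2\frac{1-P}{N-1}$ bits per selection, and $\mathrm{ITR} = B \cdot 60/T$ bits/min for $T$ in seconds. The formula assumes equiprobable targets and errors spread evenly over the other $N-1$ classes. The Python sketch below returns 0 at or below chance accuracy, which is a common convention rather than part of the formula; the function name is hypothetical.

```python
import math

def wolpaw_itr(n_classes, accuracy, seconds_per_selection):
    """Wolpaw information transfer rate in bits/min.

    Assumes equiprobable targets and errors spread evenly over the other N-1 classes.
    """
    n, p = n_classes, accuracy
    if p <= 1.0 / n:
        return 0.0                      # convention: no information at or below chance
    bits = math.log2(n) + p * math.log2(p)
    if p < 1.0:                         # the error term vanishes when p == 1
        bits += (1 - p) * math.log2((1 - p) / (n - 1))
    return bits * 60.0 / seconds_per_selection
```

For example, a 4-class BCI with 90% accuracy and 2 s per selection yields about 1.37 bits per selection, or roughly 41.2 bits/min; raising accuracy to 100% at the same speed gives 60 bits/min.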

9.1.4. Cohen’s Kappa Coefficient

9.2. Continuous BCI System Evaluation

9.2.1. Correlation Coefficient

9.2.2. Accuracy

9.2.3. Fitts’s Law

9.3. User-Centric BCI System Evaluation

9.3.1. Usability

- Effectiveness or accuracy: It depicts the overall accuracy of the BCI system as experienced from the end user’s perspective [333].

- Efficiency or information transfer rate: It refers to the speed and timing with which a task is accomplished. It therefore reflects the overall speed, throughput, and latency of the BCI system from the end user's perspective [333].

- Learnability: It reflects how well the BCI system lets users feel that they can use the product effectively and quickly learn additional features. Learnability affects both the end user and the provider [338].

- Satisfaction: It is based on participants' actual feelings while using BCI systems and reflects the user's favorable attitude toward using the system. Satisfaction can be measured with rating scales or qualitative methods [333].

9.3.2. Affect

9.3.3. Ergonomics

9.3.4. Quality of Life

10. Limitations and Challenges

10.1. Based on Usability

10.1.1. Training Time

10.1.2. Fatigue

10.1.3. Mobility to Users

10.1.4. Psychophysiological and Neurological Challenges

10.2. Technical Challenges

10.2.1. Non-Linearity

10.2.2. Non-Stationarity

10.2.3. Transfer Rate of Signals

10.2.4. Signal Processing

10.2.5. Training Sets

10.2.6. Lack of Data Analysis Method

10.2.7. Performance Evaluation Metrics

10.2.8. Low ITR of BCI Systems

10.2.9. Specifically Allocated Lab for BCI Technology

10.3. Ethical Challenges

11. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Berger, H. Über das elektroenkephalogramm des menschen. Archiv. Psychiatr. 1929, 87, 527–570. [Google Scholar] [CrossRef]

- Lindsley, D.B. Psychological phenomena and the electroencephalogram. Electroencephalogr. Clin. Neurophysiol. 1952, 4, 443–456. [Google Scholar] [CrossRef]

- Vidal, J.J. Toward direct brain-computer communication. Annu. Rev. Biophys. Bioeng. 1973, 2, 157–180. [Google Scholar] [CrossRef]

- Zeng, F.G.; Rebscher, S.; Harrison, W.; Sun, X.; Feng, H. Cochlear implants: System design, integration, and evaluation. IEEE Rev. Biomed. Eng. 2008, 1, 115–142. [Google Scholar] [CrossRef] [PubMed]

- Nicolas-Alonso, L.F.; Gomez-Gil, J. Brain computer interfaces: A review. Sensors 2012, 12, 1211–1279. [Google Scholar] [CrossRef]

- Abiri, R.; Borhani, S.; Sellers, E.W.; Jiang, Y.; Zhao, X. A comprehensive review of EEG-based brain–computer interface paradigms. J. Neural Eng. 2019, 16, 011001. [Google Scholar] [CrossRef]

- Tiwari, N.; Edla, D.R.; Dodia, S.; Bablani, A. Brain computer interface: A comprehensive survey. Biol. Inspired Cogn. Archit. 2018, 26, 118–129. [Google Scholar] [CrossRef]

- Vasiljevic, G.A.M.; de Miranda, L.C. Brain–computer interface games based on consumer-grade EEG Devices: A systematic literature review. Int. J. Hum. Comput. Interact. 2020, 36, 105–142. [Google Scholar] [CrossRef]

- Martini, M.L.; Oermann, E.K.; Opie, N.L.; Panov, F.; Oxley, T.; Yaeger, K. Sensor modalities for brain-computer interface technology: A comprehensive literature review. Neurosurgery 2020, 86, E108–E117. [Google Scholar] [CrossRef]

- Bablani, A.; Edla, D.R.; Tripathi, D.; Cheruku, R. Survey on brain-computer interface: An emerging computational intelligence paradigm. ACM Comput. Surv. (CSUR) 2019, 52, 20. [Google Scholar] [CrossRef]

- Fleury, M.; Lioi, G.; Barillot, C.; Lécuyer, A. A Survey on the Use of Haptic Feedback for Brain-Computer Interfaces and Neurofeedback. Front. Neurosci. 2020, 14, 528. [Google Scholar] [CrossRef] [PubMed]

- Torres, P.E.P.; Torres, E.A.; Hernández-Álvarez, M.; Yoo, S.G. EEG-based BCI emotion recognition: A survey. Sensors 2020, 20, 5083. [Google Scholar] [CrossRef] [PubMed]

- Zhang, X.; Yao, L.; Wang, X.; Monaghan, J.J.; Mcalpine, D.; Zhang, Y. A survey on deep learning-based non-invasive brain signals: Recent advances and new frontiers. J. Neural Eng. 2021, 18, 031002. [Google Scholar] [CrossRef]

- Gu, X.; Cao, Z.; Jolfaei, A.; Xu, P.; Wu, D.; Jung, T.P.; Lin, C.T. EEG-based brain-computer interfaces (BCIs): A survey of recent studies on signal sensing technologies and computational intelligence approaches and their applications. IEEE/ACM Trans. Comput. Biol. Bioinform. 2021. [Google Scholar] [CrossRef] [PubMed]

- Kitchenham, B.; Charters, S. Guidelines for Performing Systematic Literature Reviews in Software Engineering; EBSE Technical Report; Keele University and Durham University Joint Report: Durham, UK, 2007. [Google Scholar]

- Kitchenham, B. Procedures for Performing Systematic Reviews; Technical Report TR/SE-0401; Keele University: Keele, UK, 2004; Volume 33, pp. 1–26. [Google Scholar]

- Nijholt, A. The future of brain-computer interfacing (keynote paper). In Proceedings of the 2016 5th International Conference on Informatics, Electronics and Vision (ICIEV), Dhaka, Bangladesh, 13–14 May 2016; pp. 156–161. [Google Scholar]

- Padfield, N.; Zabalza, J.; Zhao, H.; Masero, V.; Ren, J. EEG-based brain-computer interfaces using motor-imagery: Techniques and challenges. Sensors 2019, 19, 1423. [Google Scholar] [CrossRef] [PubMed]

- Hara, Y. Brain plasticity and rehabilitation in stroke patients. J. Nippon. Med Sch. 2015, 82, 4–13. [Google Scholar] [CrossRef]

- Bousseta, R.; El Ouakouak, I.; Gharbi, M.; Regragui, F. EEG based brain computer interface for controlling a robot arm movement through thought. Irbm 2018, 39, 129–135. [Google Scholar] [CrossRef]

- Perales, F.J.; Riera, L.; Ramis, S.; Guerrero, A. Evaluation of a VR system for Pain Management using binaural acoustic stimulation. Multimed. Tools Appl. 2019, 78, 32869–32890. [Google Scholar] [CrossRef]

- Shim, M.; Hwang, H.J.; Kim, D.W.; Lee, S.H.; Im, C.H. Machine-learning-based diagnosis of schizophrenia using combined sensor-level and source-level EEG features. Schizophr. Res. 2016, 176, 314–319. [Google Scholar] [CrossRef]

- Sharanreddy, M.; Kulkarni, P. Detection of primary brain tumor present in EEG signal using wavelet transform and neural network. Int. J. Biol. Med. Res. 2013, 4, 2855–2859. [Google Scholar]

- Poulos, M.; Felekis, T.; Evangelou, A. Is it possible to extract a fingerprint for early breast cancer via EEG analysis? Med. Hypotheses 2012, 78, 711–716. [Google Scholar] [CrossRef]

- Christensen, J.A.; Koch, H.; Frandsen, R.; Kempfner, J.; Arvastson, L.; Christensen, S.R.; Sorensen, H.B.; Jennum, P. Classification of iRBD and Parkinson’s disease patients based on eye movements during sleep. In Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013; pp. 441–444. [Google Scholar]

- Mikołajewska, E.; Mikołajewski, D. The prospects of brain—Computer interface applications in children. Open Med. 2014, 9, 74–79. [Google Scholar] [CrossRef]

- Mane, R.; Chouhan, T.; Guan, C. BCI for stroke rehabilitation: Motor and beyond. J. Neural Eng. 2020, 17, 041001. [Google Scholar] [CrossRef]

- Van Dokkum, L.; Ward, T.; Laffont, I. Brain computer interfaces for neurorehabilitation–its current status as a rehabilitation strategy post-stroke. Ann. Phys. Rehabil. Med. 2015, 58, 3–8. [Google Scholar] [CrossRef] [PubMed]

- Soekadar, S.R.; Silvoni, S.; Cohen, L.G.; Birbaumer, N. Brain-machine interfaces in stroke neurorehabilitation. In Clinical Systems Neuroscience; Springer: Berlin/Heidelberg, Germany, 2015; pp. 3–14. [Google Scholar]

- Beudel, M.; Brown, P. Adaptive deep brain stimulation in Parkinson’s disease. Park. Relat. Disord. 2016, 22, S123–S126. [Google Scholar] [CrossRef]

- Mohagheghian, F.; Makkiabadi, B.; Jalilvand, H.; Khajehpoor, H.; Samadzadehaghdam, N.; Eqlimi, E.; Deevband, M. Computer-aided tinnitus detection based on brain network analysis of EEG functional connectivity. J. Biomed. Phys. Eng. 2019, 9, 687. [Google Scholar] [CrossRef] [PubMed]

- Fernández-Caballero, A.; Navarro, E.; Fernández-Sotos, P.; González, P.; Ricarte, J.J.; Latorre, J.M.; Rodriguez-Jimenez, R. Human-avatar symbiosis for the treatment of auditory verbal hallucinations in schizophrenia through virtual/augmented reality and brain-computer interfaces. Front. Neuroinformatics 2017, 11, 64. [Google Scholar] [CrossRef]

- Dyck, M.S.; Mathiak, K.A.; Bergert, S.; Sarkheil, P.; Koush, Y.; Alawi, E.M.; Zvyagintsev, M.; Gaebler, A.J.; Shergill, S.S.; Mathiak, K. Targeting treatment-resistant auditory verbal hallucinations in schizophrenia with fMRI-based neurofeedback–exploring different cases of schizophrenia. Front. Psychiatry 2016, 7, 37. [Google Scholar] [CrossRef] [PubMed]

- Ehrlich, S.; Guan, C.; Cheng, G. A closed-loop brain-computer music interface for continuous affective interaction. In Proceedings of the 2017 International Conference on Orange Technologies (ICOT), Singapore, 8–10 September 2017; pp. 176–179. [Google Scholar]

- Placidi, G.; Cinque, L.; Di Giamberardino, P.; Iacoviello, D.; Spezialetti, M. An affective BCI driven by self-induced emotions for people with severe neurological disorders. In International Conference on Image Analysis and Processing; Springer: Berlin/Heidelberg, Germany, 2017; pp. 155–162. [Google Scholar]

- Kerous, B.; Skola, F.; Liarokapis, F. EEG-based BCI and video games: A progress report. Virtual Real. 2018, 22, 119–135. [Google Scholar] [CrossRef]

- Stein, A.; Yotam, Y.; Puzis, R.; Shani, G.; Taieb-Maimon, M. EEG-triggered dynamic difficulty adjustment for multiplayer games. Entertain. Comput. 2018, 25, 14–25. [Google Scholar] [CrossRef]

- Zhang, B.; Wang, J.; Fuhlbrigge, T. A review of the commercial brain-computer interface technology from perspective of industrial robotics. In Proceedings of the 2010 IEEE International Conference on Automation and Logistics, Hong Kong, China, 16–20 August 2010; pp. 379–384. [Google Scholar]

- Van De Laar, B.; Brugman, I.; Nijboer, F.; Poel, M.; Nijholt, A. BrainBrush, a multimodal application for creative expressivity. In Proceedings of the Sixth International Conference on Advances in Computer-Human Interactions (ACHI 2013), Nice, France, 24 February–1 March 2013; pp. 62–67. [Google Scholar]

- Todd, D.; McCullagh, P.J.; Mulvenna, M.D.; Lightbody, G. Investigating the use of brain-computer interaction to facilitate creativity. In Proceedings of the 3rd Augmented Human International Conference, Megève, France, 8–9 March 2012; pp. 1–8. [Google Scholar]

- Liu, Y.T.; Wu, S.L.; Chou, K.P.; Lin, Y.Y.; Lu, J.; Zhang, G.; Lin, W.C.; Lin, C.T. Driving fatigue prediction with pre-event electroencephalography (EEG) via a recurrent fuzzy neural network. In Proceedings of the 2016 IEEE International Conference on Fuzzy Systems (FUZZ-IEEE), Vancouver, BC, Canada, 24–29 July 2016; pp. 2488–2494. [Google Scholar]

- Binias, B.; Myszor, D.; Cyran, K.A. A machine learning approach to the detection of pilot’s reaction to unexpected events based on EEG signals. Comput. Intell. Neurosci. 2018, 2018, 2703513. [Google Scholar] [CrossRef] [PubMed]

- Waldert, S. Invasive vs. non-invasive neuronal signals for brain-machine interfaces: Will one prevail? Front. Neurosci. 2016, 10, 295. [Google Scholar] [CrossRef]

- Panoulas, K.J.; Hadjileontiadis, L.J.; Panas, S.M. Brain-computer interface (BCI): Types, processing perspectives and applications. In Multimedia Services in Intelligent Environments; Springer: Berlin/Heidelberg, Germany, 2010; pp. 299–321. [Google Scholar]

- Wikipedia Contributors. Electrocorticography—Wikipedia, The Free Encyclopedia. 2021. Available online: https://en.wikipedia.org/w/index.php?title=Electrocorticography&oldid=1032187616 (accessed on 8 July 2021).

- Kuruvilla, A.; Flink, R. Intraoperative electrocorticography in epilepsy surgery: Useful or not? Seizure 2003, 12, 577–584. [Google Scholar] [CrossRef]

- Homan, R.W.; Herman, J.; Purdy, P. Cerebral location of international 10–20 system electrode placement. Electroencephalogr. Clin. Neurophysiol. 1987, 66, 376–382. [Google Scholar] [CrossRef]

- Cohen, D. Magnetoencephalography: Evidence of magnetic fields produced by alpha-rhythm currents. Science 1968, 161, 784–786. [Google Scholar] [CrossRef] [PubMed]

- Wikipedia Contributors. Human Brain—Wikipedia, The Free Encyclopedia. 2021. Available online: https://en.wikipedia.org/w/index.php?title=Human_brain&oldid=1032229379 (accessed on 8 July 2021).

- Zimmerman, J.; Thiene, P.; Harding, J. Design and operation of stable rf-biased superconducting point-contact quantum devices, and a note on the properties of perfectly clean metal contacts. J. Appl. Phys. 1970, 41, 1572–1580.

- Wilson, J.A.; Felton, E.A.; Garell, P.C.; Schalk, G.; Williams, J.C. ECoG factors underlying multimodal control of a brain-computer interface. IEEE Trans. Neural Syst. Rehabil. Eng. 2006, 14, 246–250.

- Weiskopf, N.; Veit, R.; Erb, M.; Mathiak, K.; Grodd, W.; Goebel, R.; Birbaumer, N. Physiological self-regulation of regional brain activity using real-time functional magnetic resonance imaging (fMRI): Methodology and exemplary data. Neuroimage 2003, 19, 577–586.

- Ramadan, R.A.; Vasilakos, A.V. Brain computer interface: Control signals review. Neurocomputing 2017, 223, 26–44.

- Huisman, T. Diffusion-weighted and diffusion tensor imaging of the brain, made easy. Cancer Imaging 2010, 10, S163.

- Borkowski, K.; Krzyżak, A.T. Analysis and correction of errors in DTI-based tractography due to diffusion gradient inhomogeneity. J. Magn. Reson. 2018, 296, 5–11.

- Purnell, J.; Klopfenstein, B.; Stevens, A.; Havel, P.J.; Adams, S.; Dunn, T.; Krisky, C.; Rooney, W. Brain functional magnetic resonance imaging response to glucose and fructose infusions in humans. Diabetes Obes. Metab. 2011, 13, 229–234.

- Tai, Y.; Piccini, P. Applications of positron emission tomography (PET) in neurology. J. Neurol. Neurosurg. Psychiatry 2004, 75, 669–676.

- Walker, S.M.; Lim, I.; Lindenberg, L.; Mena, E.; Choyke, P.L.; Turkbey, B. Positron emission tomography (PET) radiotracers for prostate cancer imaging. Abdom. Radiol. 2020, 45, 2165–2175.

- Wang, Y.; Wang, R.; Gao, X.; Hong, B.; Gao, S. A practical VEP-based brain-computer interface. IEEE Trans. Neural Syst. Rehabil. Eng. 2006, 14, 234–240.

- Lim, J.H.; Hwang, H.J.; Han, C.H.; Jung, K.Y.; Im, C.H. Classification of binary intentions for individuals with impaired oculomotor function: ‘eyes-closed’ SSVEP-based brain–computer interface (BCI). J. Neural Eng. 2013, 10, 026021.

- Bera, T.K. Noninvasive electromagnetic methods for brain monitoring: A technical review. In Brain-Computer Interfaces; Springer: Berlin/Heidelberg, Germany, 2015; pp. 51–95.

- Zhu, D.; Bieger, J.; Garcia Molina, G.; Aarts, R.M. A survey of stimulation methods used in SSVEP-based BCIs. Comput. Intell. Neurosci. 2010, 2010, 702357.

- Polich, J. Updating P300: An integrative theory of P3a and P3b. Clin. Neurophysiol. 2007, 118, 2128–2148.

- Golub, M.D.; Chase, S.M.; Batista, A.P.; Yu, B.M. Brain–computer interfaces for dissecting cognitive processes underlying sensorimotor control. Curr. Opin. Neurobiol. 2016, 37, 53–58.

- Kim, J.H.; Kim, B.C.; Byun, Y.T.; Jhon, Y.M.; Lee, S.; Woo, D.H.; Kim, S.H. All-optical AND gate using cross-gain modulation in semiconductor optical amplifiers. Jpn. J. Appl. Phys. 2004, 43, 608.

- Dobrea, M.C.; Dobrea, D.M. The selection of proper discriminative cognitive tasks—A necessary prerequisite in high-quality BCI applications. In Proceedings of the 2009 2nd International Symposium on Applied Sciences in Biomedical and Communication Technologies, Bratislava, Slovakia, 24–27 November 2009; pp. 1–6.

- Penny, W.D.; Roberts, S.J.; Curran, E.A.; Stokes, M.J. EEG-based communication: A pattern recognition approach. IEEE Trans. Rehabil. Eng. 2000, 8, 214–215.

- Amiri, S.; Fazel-Rezai, R.; Asadpour, V. A review of hybrid brain-computer interface systems. Adv. Hum. Comput. Interact. 2013, 2013, 187024.

- Mustafa, M. Auditory Evoked Potential (AEP) Based Brain-Computer Interface (BCI) Technology: A Short Review. Adv. Robot. Autom. Data Anal. 2021, 1350, 272.

- Cho, H.; Ahn, M.; Ahn, S.; Kwon, M.; Jun, S.C. EEG datasets for motor imagery brain–computer interface. GigaScience 2017, 6, gix034.

- Gaur, P.; Gupta, H.; Chowdhury, A.; McCreadie, K.; Pachori, R.B.; Wang, H. A Sliding Window Common Spatial Pattern for Enhancing Motor Imagery Classification in EEG-BCI. IEEE Trans. Instrum. Meas. 2021, 70, 1–9.

- Long, J.; Li, Y.; Yu, T.; Gu, Z. Target selection with hybrid feature for BCI-based 2-D cursor control. IEEE Trans. Biomed. Eng. 2011, 59, 132–140.

- Ahn, S.; Ahn, M.; Cho, H.; Jun, S.C. Achieving a hybrid brain-computer interface with tactile selective attention and motor imagery. J. Neural Eng. 2014, 11, 066004.

- Wang, H.; Li, Y.; Long, J.; Yu, T.; Gu, Z. An asynchronous wheelchair control by hybrid EEG–EOG brain-computer interface. Cogn. Neurodyn. 2014, 8, 399–409.

- Alomari, M.H.; AbuBaker, A.; Turani, A.; Baniyounes, A.M.; Manasreh, A. EEG mouse: A machine learning-based brain computer interface. Int. J. Adv. Comput. Sci. Appl. 2014, 5, 193–198.

- Xu, B.G.; Song, A.G. Pattern recognition of motor imagery EEG using wavelet transform. J. Biomed. Sci. Eng. 2008, 1, 64.

- Wang, X.; Hersche, M.; Tömekce, B.; Kaya, B.; Magno, M.; Benini, L. An accurate eegnet-based motor-imagery brain–computer interface for low-power edge computing. In Proceedings of the 2020 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Bari, Italy, 1 June–1 July 2020; pp. 1–6.

- Kayikcioglu, T.; Aydemir, O. A polynomial fitting and k-NN based approach for improving classification of motor imagery BCI data. Pattern Recognit. Lett. 2010, 31, 1207–1215.

- Loboda, A.; Margineanu, A.; Rotariu, G.; Lazar, A.M. Discrimination of EEG-based motor imagery tasks by means of a simple phase information method. Int. J. Adv. Res. Artif. Intell. 2014, 3, 10.

- Alexandre, B.; Rafal, C. Grasp-and-Lift EEG Detection, Identify Hand Motions from EEG Recordings Competition Dataset. Available online: https://www.kaggle.com/c/grasp-and-lift-eeg-detection/data (accessed on 19 August 2021).

- Chen, X.; Zhao, B.; Wang, Y.; Xu, S.; Gao, X. Control of a 7-DOF robotic arm system with an SSVEP-based BCI. Int. J. Neural Syst. 2018, 28, 1850018.

- Lin, B.; Deng, S.; Gao, H.; Yin, J. A multi-scale activity transition network for data translation in EEG signals decoding. IEEE/ACM Trans. Comput. Biol. Bioinform. 2020.

- Neuper, C.; Müller-Putz, G.R.; Scherer, R.; Pfurtscheller, G. Motor imagery and EEG-based control of spelling devices and neuroprostheses. Prog. Brain Res. 2006, 159, 393–409.

- Ko, W.; Yoon, J.; Kang, E.; Jun, E.; Choi, J.S.; Suk, H.I. Deep recurrent spatio-temporal neural network for motor imagery based BCI. In Proceedings of the 2018 6th International Conference on Brain-Computer Interface (BCI), Gangwon, Korea, 15–17 January 2018; pp. 1–3.

- Duan, F.; Lin, D.; Li, W.; Zhang, Z. Design of a multimodal EEG-based hybrid BCI system with visual servo module. IEEE Trans. Auton. Ment. Dev. 2015, 7, 332–341.

- Kaya, M.; Binli, M.K.; Ozbay, E.; Yanar, H.; Mishchenko, Y. A large electroencephalographic motor imagery dataset for electroencephalographic brain computer interfaces. Sci. Data 2018, 5, 1–16.

- Duan, L.; Zhong, H.; Miao, J.; Yang, Z.; Ma, W.; Zhang, X. A voting optimized strategy based on ELM for improving classification of motor imagery BCI data. Cogn. Comput. 2014, 6, 477–483.

- Hossain, I.; Khosravi, A.; Hettiarachchi, I.; Nahavandi, S. Multiclass informative instance transfer learning framework for motor imagery-based brain-computer interface. Comput. Intell. Neurosci. 2018, 2018, 6323414.

- Khan, M.A.; Das, R.; Iversen, H.K.; Puthusserypady, S. Review on motor imagery based BCI systems for upper limb post-stroke neurorehabilitation: From designing to application. Comput. Biol. Med. 2020, 123, 103843.

- Duan, L.; Bao, M.; Miao, J.; Xu, Y.; Chen, J. Classification based on multilayer extreme learning machine for motor imagery task from EEG signals. Procedia Comput. Sci. 2016, 88, 176–184.

- Velasco-Álvarez, F.; Ron-Angevin, R.; da Silva-Sauer, L.; Sancha-Ros, S. Audio-cued motor imagery-based brain–computer interface: Navigation through virtual and real environments. Neurocomputing 2013, 121, 89–98.

- Ahn, M.; Jun, S.C. Performance variation in motor imagery brain–computer interface: A brief review. J. Neurosci. Methods 2015, 243, 103–110.

- Blankertz, B.; Müller, K.R.; Krusienski, D.; Schalk, G.; Wolpaw, J.R.; Schlögl, A.; Pfurtscheller, G.; Millán, J.d.R.; Schröder, M.; Birbaumer, N. BCI Competition III. 2005. Available online: http://www.bbci.de/competition/iii/ (accessed on 19 August 2021).

- Blankertz, B.; Muller, K.R.; Krusienski, D.J.; Schalk, G.; Wolpaw, J.R.; Schlogl, A.; Pfurtscheller, G.; Millan, J.R.; Schroder, M.; Birbaumer, N. The BCI competition III: Validating alternative approaches to actual BCI problems. IEEE Trans. Neural Syst. Rehabil. Eng. 2006, 14, 153–159.

- Jin, J.; Miao, Y.; Daly, I.; Zuo, C.; Hu, D.; Cichocki, A. Correlation-based channel selection and regularized feature optimization for MI-based BCI. Neural Netw. 2019, 118, 262–270.

- Lemm, S.; Schafer, C.; Curio, G. BCI competition 2003-data set III: Probabilistic modeling of sensorimotor μ rhythms for classification of imaginary hand movements. IEEE Trans. Biomed. Eng. 2004, 51, 1077–1080.

- Tangermann, M.; Müller, K.R.; Aertsen, A.; Birbaumer, N.; Braun, C.; Brunner, C.; Leeb, R.; Mehring, C.; Miller, K.J.; Mueller-Putz, G.; et al. Review of the BCI competition IV. Front. Neurosci. 2012, 6, 55.

- Park, Y.; Chung, W. Frequency-optimized local region common spatial pattern approach for motor imagery classification. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1378–1388.

- Wang, D.; Miao, D.; Blohm, G. Multi-class motor imagery EEG decoding for brain-computer interfaces. Front. Neurosci. 2012, 6, 151.

- Nguyen, T.; Hettiarachchi, I.; Khatami, A.; Gordon-Brown, L.; Lim, C.P.; Nahavandi, S. Classification of multi-class BCI data by common spatial pattern and fuzzy system. IEEE Access 2018, 6, 27873–27884.

- Satti, A.; Guan, C.; Coyle, D.; Prasad, G. A covariate shift minimisation method to alleviate non-stationarity effects for an adaptive brain-computer interface. In Proceedings of the 2010 20th International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 105–108.

- Sakhavi, S.; Guan, C.; Yan, S. Parallel convolutional-linear neural network for motor imagery classification. In Proceedings of the 2015 23rd European Signal Processing Conference (EUSIPCO), Nice, France, 31 August–4 September 2015; pp. 2736–2740.

- Raza, H.; Cecotti, H.; Li, Y.; Prasad, G. Adaptive learning with covariate shift-detection for motor imagery-based brain–computer interface. Soft Comput. 2016, 20, 3085–3096.

- Selim, S.; Tantawi, M.M.; Shedeed, H.A.; Badr, A. A CSP∖AM-BA-SVM Approach for Motor Imagery BCI System. IEEE Access 2018, 6, 49192–49208.

- Hersche, M.; Rellstab, T.; Schiavone, P.D.; Cavigelli, L.; Benini, L.; Rahimi, A. Fast and accurate multiclass inference for MI-BCIs using large multiscale temporal and spectral features. In Proceedings of the 2018 26th European Signal Processing Conference (EUSIPCO), Rome, Italy, 3–7 September 2018; pp. 1690–1694.

- Sakhavi, S.; Guan, C.; Yan, S. Learning temporal information for brain-computer interface using convolutional neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 5619–5629.

- Hossain, I.; Khosravi, A.; Nahavandi, S. Active transfer learning and selective instance transfer with active learning for motor imagery based BCI. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; pp. 4048–4055.

- Zhu, X.; Li, P.; Li, C.; Yao, D.; Zhang, R.; Xu, P. Separated channel convolutional neural network to realize the training free motor imagery BCI systems. Biomed. Signal Process. Control. 2019, 49, 396–403.

- Sun, L.; Feng, Z.; Chen, B.; Lu, N. A contralateral channel guided model for EEG based motor imagery classification. Biomed. Signal Process. Control. 2018, 41, 1–9.

- Uran, A.; Van Gemeren, C.; van Diepen, R.; Chavarriaga, R.; Millán, J.d.R. Applying transfer learning to deep learned models for EEG analysis. arXiv 2019, arXiv:1907.01332.

- Gandhi, V.; Prasad, G.; Coyle, D.; Behera, L.; McGinnity, T.M. Evaluating Quantum Neural Network filtered motor imagery brain-computer interface using multiple classification techniques. Neurocomputing 2015, 170, 161–167.

- Ha, K.W.; Jeong, J.W. Motor imagery EEG classification using capsule networks. Sensors 2019, 19, 2854.

- Schirrmeister, R.T.; Springenberg, J.T.; Fiederer, L.D.J.; Glasstetter, M.; Eggensperger, K.; Tangermann, M.; Hutter, F.; Burgard, W.; Ball, T. Deep learning with convolutional neural networks for EEG decoding and visualization. Hum. Brain Mapp. 2017.

- Ahn, M.; Cho, H.; Ahn, S.; Jun, S.C. High theta and low alpha powers may be indicative of BCI-illiteracy in motor imagery. PLoS ONE 2013, 8, e80886.

- Amin, S.U.; Alsulaiman, M.; Muhammad, G.; Mekhtiche, M.A.; Hossain, M.S. Deep Learning for EEG motor imagery classification based on multi-layer CNNs feature fusion. Future Gener. Comput. Syst. 2019, 101, 542–554.

- Li, Y.; Zhang, X.R.; Zhang, B.; Lei, M.Y.; Cui, W.G.; Guo, Y.Z. A channel-projection mixed-scale convolutional neural network for motor imagery EEG decoding. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1170–1180.

- Ahn, M.; Ahn, S.; Hong, J.H.; Cho, H.; Kim, K.; Kim, B.S.; Chang, J.W.; Jun, S.C. Gamma band activity associated with BCI performance: Simultaneous MEG/EEG study. Front. Hum. Neurosci. 2013, 7, 848.

- Wang, W.; Degenhart, A.D.; Sudre, G.P.; Pomerleau, D.A.; Tyler-Kabara, E.C. Decoding semantic information from human electrocorticographic (ECoG) signals. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2011, 2011, 6294–6298.

- Williams, J.J.; Rouse, A.G.; Thongpang, S.; Williams, J.C.; Moran, D.W. Differentiating closed-loop cortical intention from rest: Building an asynchronous electrocorticographic BCI. J. Neural Eng. 2013, 10, 046001.

- Li, Z.; Qiu, L.; Li, R.; He, Z.; Xiao, J.; Liang, Y.; Wang, F.; Pan, J. Enhancing BCI-Based emotion recognition using an improved particle swarm optimization for feature selection. Sensors 2020, 20, 3028.

- Onose, G.; Grozea, C.; Anghelescu, A.; Daia, C.; Sinescu, C.; Ciurea, A.; Spircu, T.; Mirea, A.; Andone, I.; Spânu, A.; et al. On the feasibility of using motor imagery EEG-based brain–computer interface in chronic tetraplegics for assistive robotic arm control: A clinical test and long-term post-trial follow-up. Spinal Cord 2012, 50, 599–608.

- Meng, J.; Streitz, T.; Gulachek, N.; Suma, D.; He, B. Three-dimensional brain–computer interface control through simultaneous overt spatial attentional and motor imagery tasks. IEEE Trans. Biomed. Eng. 2018, 65, 2417–2427.

- Kosmyna, N.; Tarpin-Bernard, F.; Rivet, B. Towards brain computer interfaces for recreational activities: Piloting a drone. In IFIP Conference on Human-Computer Interaction; Springer: Berlin/Heidelberg, Germany, 2015; pp. 506–522.

- Dua, D.; Graff, C. UCI Machine Learning Repository; University of California: Irvine, CA, USA, 2017.

- Sonkin, K.M.; Stankevich, L.A.; Khomenko, J.G.; Nagornova, Z.V.; Shemyakina, N.V. Development of electroencephalographic pattern classifiers for real and imaginary thumb and index finger movements of one hand. Artif. Intell. Med. 2015, 63, 107–117.

- Müller-Putz, G.R.; Pokorny, C.; Klobassa, D.S.; Horki, P. A single-switch BCI based on passive and imagined movements: Toward restoring communication in minimally conscious patients. Int. J. Neural Syst. 2013, 23, 1250037.

- Eskandari, P.; Erfanian, A. Improving the performance of brain-computer interface through meditation practicing. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2008, 2008, 662–665.

- Edelman, B.J.; Baxter, B.; He, B. EEG source imaging enhances the decoding of complex right-hand motor imagery tasks. IEEE Trans. Biomed. Eng. 2015, 63, 4–14.

- Lotte, F.; Jeunet, C. Defining and quantifying users’ mental imagery-based BCI skills: A first step. J. Neural Eng. 2018, 15, 046030.

- Jeunet, C.; N’Kaoua, B.; Subramanian, S.; Hachet, M.; Lotte, F. Predicting mental imagery-based BCI performance from personality, cognitive profile and neurophysiological patterns. PLoS ONE 2015, 10, e0143962.

- Rathee, D.; Cecotti, H.; Prasad, G. Single-trial effective brain connectivity patterns enhance discriminability of mental imagery tasks. J. Neural Eng. 2017, 14, 056005.

- Sadiq, M.T.; Yu, X.; Yuan, Z.; Aziz, M.Z. Identification of motor and mental imagery EEG in two and multiclass subject-dependent tasks using successive decomposition index. Sensors 2020, 20, 5283.

- Lotte, F.; Jeunet, C. Online classification accuracy is a poor metric to study mental imagery-based bci user learning: An experimental demonstration and new metrics. In Proceedings of the 7th international BCI conference, Pacific Grove, CA, USA, 21–25 May 2017.

- Wierzgała, P.; Zapała, D.; Wojcik, G.M.; Masiak, J. Most popular signal processing methods in motor-imagery BCI: A review and meta-analysis. Front. Neuroinformatics 2018, 12, 78.

- Park, C.; Looney, D.; ur Rehman, N.; Ahrabian, A.; Mandic, D.P. Classification of motor imagery BCI using multivariate empirical mode decomposition. IEEE Trans. Neural Syst. Rehabil. Eng. 2012, 21, 10–22.

- Alexandre, B.; Rafal, C. BCI Challenge @ NER 2015, A Spell on You If You Cannot Detect Errors! Available online: https://www.kaggle.com/c/inria-bci-challenge/data (accessed on 19 August 2021).

- Mahmud, M.; Kaiser, M.S.; McGinnity, T.M.; Hussain, A. Deep learning in mining biological data. Cogn. Comput. 2021, 13, 1–33.

- Cruz, A.; Pires, G.; Nunes, U.J. Double ErrP detection for automatic error correction in an ERP-based BCI speller. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 26, 26–36.

- Bhattacharyya, S.; Konar, A.; Tibarewala, D.N.; Hayashibe, M. A generic transferable EEG decoder for online detection of error potential in target selection. Front. Neurosci. 2017, 11, 226.

- Jrad, N.; Congedo, M.; Phlypo, R.; Rousseau, S.; Flamary, R.; Yger, F.; Rakotomamonjy, A. sw-SVM: Sensor weighting support vector machines for EEG-based brain–computer interfaces. J. Neural Eng. 2011, 8, 056004.

- Zeyl, T.; Yin, E.; Keightley, M.; Chau, T. Partially supervised P300 speller adaptation for eventual stimulus timing optimization: Target confidence is superior to error-related potential score as an uncertain label. J. Neural Eng. 2016, 13, 026008.

- Wirth, C.; Dockree, P.; Harty, S.; Lacey, E.; Arvaneh, M. Towards error categorisation in BCI: Single-trial EEG classification between different errors. J. Neural Eng. 2019, 17, 016008.

- Combaz, A.; Chumerin, N.; Manyakov, N.V.; Robben, A.; Suykens, J.A.; Van Hulle, M.M. Towards the detection of error-related potentials and its integration in the context of a P300 speller brain–computer interface. Neurocomputing 2012, 80, 73–82.

- Zeyl, T.; Yin, E.; Keightley, M.; Chau, T. Improving bit rate in an auditory BCI: Exploiting error-related potentials. Brain-Comput. Interfaces 2016, 3, 75–87.

- Spüler, M.; Niethammer, C. Error-related potentials during continuous feedback: Using EEG to detect errors of different type and severity. Front. Hum. Neurosci. 2015, 9, 155.

- Kreilinger, A.; Neuper, C.; Müller-Putz, G.R. Error potential detection during continuous movement of an artificial arm controlled by brain–computer interface. Med. Biol. Eng. Comput. 2012, 50, 223–230.

- Kreilinger, A.; Hiebel, H.; Müller-Putz, G.R. Single versus multiple events error potential detection in a BCI-controlled car game with continuous and discrete feedback. IEEE Trans. Biomed. Eng. 2015, 63, 519–529.

- Dias, C.L.; Sburlea, A.I.; Müller-Putz, G.R. Masked and unmasked error-related potentials during continuous control and feedback. J. Neural Eng. 2018, 15, 036031.

- Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. Deap: A database for emotion analysis; using physiological signals. IEEE Trans. Affect. Comput. 2011, 3, 18–31.

- Atkinson, J.; Campos, D. Improving BCI-based emotion recognition by combining EEG feature selection and kernel classifiers. Expert Syst. Appl. 2016, 47, 35–41.

- Lan, Z.; Sourina, O.; Wang, L.; Scherer, R.; Müller-Putz, G.R. Domain adaptation techniques for EEG-based emotion recognition: A comparative study on two public datasets. IEEE Trans. Cogn. Dev. Syst. 2018, 11, 85–94.

- Al-Nafjan, A.; Hosny, M.; Al-Wabil, A.; Al-Ohali, Y. Classification of human emotions from electroencephalogram (EEG) signal using deep neural network. Int. J. Adv. Comput. Sci. Appl 2017, 8, 419–425.

- Chen, J.; Zhang, P.; Mao, Z.; Huang, Y.; Jiang, D.; Zhang, Y. Accurate EEG-based emotion recognition on combined features using deep convolutional neural networks. IEEE Access 2019, 7, 44317–44328.

- Sánchez-Reolid, R.; García, A.S.; Vicente-Querol, M.A.; Fernández-Aguilar, L.; López, M.T.; Fernández-Caballero, A.; González, P. Artificial neural networks to assess emotional states from brain-computer interface. Electronics 2018, 7, 384.

- Yang, Y.; Wu, Q.; Fu, Y.; Chen, X. Continuous convolutional neural network with 3d input for eeg-based emotion recognition. In International Conference on Neural Information Processing; Springer: Berlin/Heidelberg, Germany, 2018; pp. 433–443.

- Liu, J.; Wu, G.; Luo, Y.; Qiu, S.; Yang, S.; Li, W.; Bi, Y. EEG-based emotion classification using a deep neural network and sparse autoencoder. Front. Syst. Neurosci. 2020, 14, 43.

- Lim, W.; Sourina, O.; Wang, L. STEW: Simultaneous task EEG workload data set. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 2106–2114.

- Savran, A.; Ciftci, K.; Chanel, G.; Mota, J.; Hong Viet, L.; Sankur, B.; Akarun, L.; Caplier, A.; Rombaut, M. Emotion detection in the loop from brain signals and facial images. In Proceedings of the eNTERFACE 2006 Workshop, Dubrovnik, Croatia, 17 July–11 August 2006.

- Onton, J.A.; Makeig, S. High-frequency broadband modulation of electroencephalographic spectra. Front. Hum. Neurosci. 2009, 3, 61.

- Data-EEG-25-users-Neuromarketing, Recorded EEG Signals While Viewing Consumer Products on Computer Screen, Indian Institute of Technology, Roorkee, India. Available online: https://drive.google.com/file/d/0B2T1rQUvyyWcSGVVaHZBZzRtTms/view?resourcekey=0-wuVvZnp9Ub89GMoErrxSrQ (accessed on 19 August 2021).

- Yadava, M.; Kumar, P.; Saini, R.; Roy, P.P.; Dogra, D.P. Analysis of EEG signals and its application to neuromarketing. Multimed. Tools Appl. 2017, 76, 19087–19111.

- Aldayel, M.; Ykhlef, M.; Al-Nafjan, A. Deep learning for EEG-based preference classification in neuromarketing. Appl. Sci. 2020, 10, 1525.

- Zheng, W.; Liu, W.; Lu, Y.; Lu, B.; Cichocki, A. EmotionMeter: A Multimodal Framework for Recognizing Human Emotions. IEEE Trans. Cybern. 2018, 1–13.

- Seidler, T.G.; Plotkin, J.B. Seed dispersal and spatial pattern in tropical trees. PLoS Biol. 2006, 4, e344.

- Getzin, S.; Wiegand, T.; Hubbell, S.P. Stochastically driven adult–recruit associations of tree species on Barro Colorado Island. Proc. R. Soc. Biol. Sci. 2014, 281, 20140922.

- Kong, X.; Kong, W.; Fan, Q.; Zhao, Q.; Cichocki, A. Task-independent eeg identification via low-rank matrix decomposition. In Proceedings of the 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Madrid, Spain, 3–6 December 2018; pp. 412–419.

- González, J.; Ortega, J.; Damas, M.; Martín-Smith, P.; Gan, J.Q. A new multi-objective wrapper method for feature selection–Accuracy and stability analysis for BCI. Neurocomputing 2019, 333, 407–418.

- Dalling, J.W.; Brown, T.A. Long-term persistence of pioneer species in tropical rain forest soil seed banks. Am. Nat. 2009, 173, 531–535.

- Aznan, N.K.N.; Atapour-Abarghouei, A.; Bonner, S.; Connolly, J.D.; Al Moubayed, N.; Breckon, T.P. Simulating brain signals: Creating synthetic eeg data via neural-based generative models for improved ssvep classification. In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019; pp. 1–8.

- Zhong, P.; Wang, D.; Miao, C. EEG-based emotion recognition using regularized graph neural networks. IEEE Trans. Affect. Comput. 2020.

- Li, H.; Jin, Y.M.; Zheng, W.L.; Lu, B.L. Cross-subject emotion recognition using deep adaptation networks. In International Conference on Neural Information Processing; Springer: Berlin/Heidelberg, Germany, 2018; pp. 403–413.

- Thejaswini, S.; Kumar, D.K.; Nataraj, J.L. Analysis of EEG based emotion detection of DEAP and SEED-IV databases using SVM. In Proceedings of the Second International Conference on Emerging Trends in Science & Technologies For Engineering Systems (ICETSE-2019), Bengaluru, India, 17–18 May 2019.

- Liu, W.; Qiu, J.L.; Zheng, W.L.; Lu, B.L. Multimodal emotion recognition using deep canonical correlation analysis. arXiv 2019, arXiv:1908.05349.

- Rim, B.; Sung, N.J.; Min, S.; Hong, M. Deep learning in physiological signal data: A survey. Sensors 2020, 20, 969.

- Cimtay, Y.; Ekmekcioglu, E. Investigating the use of pretrained convolutional neural network on cross-subject and cross-dataset EEG emotion recognition. Sensors 2020, 20, 2034.

- Zheng, W.L.; Lu, B.L. A multimodal approach to estimating vigilance using EEG and forehead EOG. J. Neural Eng. 2017, 14, 026017.

- Ma, B.Q.; Li, H.; Zheng, W.L.; Lu, B.L. Reducing the subject variability of eeg signals with adversarial domain generalization. In International Conference on Neural Information Processing; Springer: Berlin/Heidelberg, Germany, 2019; pp. 30–42.

- Ko, W.; Oh, K.; Jeon, E.; Suk, H.I. VIGNet: A Deep Convolutional Neural Network for EEG-based Driver Vigilance Estimation. In Proceedings of the 2020 8th International Winter Conference on Brain-Computer Interface (BCI), Gangwon, Korea, 26–28 February 2020; pp. 1–3. [Google Scholar]

- Zhang, G.; Etemad, A. RFNet: Riemannian Fusion Network for EEG-based Brain-Computer Interfaces. arXiv 2020, arXiv:2008.08633. [Google Scholar]

- Munoz, R.; Olivares, R.; Taramasco, C.; Villarroel, R.; Soto, R.; Barcelos, T.S.; Merino, E.; Alonso-Sánchez, M.F. Using black hole algorithm to improve eeg-based emotion recognition. Comput. Intell. Neurosci. 2018, 2018, 3050214. [Google Scholar] [CrossRef]

- Izquierdo-Reyes, J.; Ramirez-Mendoza, R.A.; Bustamante-Bello, M.R.; Pons-Rovira, J.L.; Gonzalez-Vargas, J.E. Emotion recognition for semi-autonomous vehicles framework. Int. J. Interact. Des. Manuf. 2018, 12, 1447–1454. [Google Scholar] [CrossRef]

- Xu, H.; Plataniotis, K.N. Subject independent affective states classification using EEG signals. In Proceedings of the 2015 IEEE Global Conference on Signal and Information Processing (GlobalSIP), Orlando, FL, USA, 14–16 December 2015; pp. 1312–1316. [Google Scholar]

- Drouin-Picaro, A.; Falk, T.H. Using deep neural networks for natural saccade classification from electroencephalograms. In Proceedings of the 2016 IEEE EMBS International Student Conference (ISC), Ottawa, ON, Canada, 29–31 May 2016; pp. 1–4. [Google Scholar]

- Al-Nafjan, A.; Hosny, M.; Al-Ohali, Y.; Al-Wabil, A. Review and classification of emotion recognition based on EEG brain-computer interface system research: A systematic review. Appl. Sci. 2017, 7, 1239. [Google Scholar] [CrossRef]

- Soleymani, M.; Pantic, M. Multimedia implicit tagging using EEG signals. In Proceedings of the 2013 IEEE International Conference on Multimedia and Expo (ICME), San Jose, CA, USA, 15–19 July 2013; pp. 1–6. [Google Scholar]

- Soroush, M.Z.; Maghooli, K.; Setarehdan, S.K.; Nasrabadi, A.M. A review on EEG signals based emotion recognition. Int. Clin. Neurosci. J. 2017, 4, 118. [Google Scholar] [CrossRef]

- Faller, J.; Cummings, J.; Saproo, S.; Sajda, P. Regulation of arousal via online neurofeedback improves human performance in a demanding sensory-motor task. Proc. Natl. Acad. Sci. USA 2019, 116, 6482–6490. [Google Scholar] [CrossRef]

- Gaume, A.; Dreyfus, G.; Vialatte, F.B. A cognitive brain–computer interface monitoring sustained attentional variations during a continuous task. Cogn. Neurodynamics 2019, 13, 257–269. [Google Scholar] [CrossRef]

- Pattnaik, P.K.; Sarraf, J. Brain Computer Interface issues on hand movement. J. King Saud-Univ.-Comput. Inf. Sci. 2018, 30, 18–24. [Google Scholar] [CrossRef]

- Weiskopf, N.; Scharnowski, F.; Veit, R.; Goebel, R.; Birbaumer, N.; Mathiak, K. Self-regulation of local brain activity using real-time functional magnetic resonance imaging (fMRI). J. Physiol.-Paris 2004, 98, 357–373. [Google Scholar] [CrossRef]

- Cattan, G.; Rodrigues, P.L.C.; Congedo, M. EEG Alpha Waves Dataset. Ph.D. Thesis, GIPSA-LAB, University Grenoble-Alpes, Saint-Martin-d’Hères, France, 2018. [Google Scholar]

- Grégoire, C.; Rodrigues, P.; Congedo, M. EEG Alpha Waves Dataset; Centre pour la Communication Scientifique Directe: Grenoble, France, 2019.

- Tirupattur, P.; Rawat, Y.S.; Spampinato, C.; Shah, M. Thoughtviz: Visualizing human thoughts using generative adversarial network. In Proceedings of the 26th ACM International Conference on Multimedia, Seoul, Korea, 22–26 October 2018; pp. 950–958.

- Walker, I.; Deisenroth, M.; Faisal, A. Deep Convolutional Neural Networks for Brain Computer Interface Using Motor Imagery; Imperial College of Science, Technology and Medicine, Department of Computing: London, UK, 2015; p. 68.

- Spampinato, C.; Palazzo, S.; Kavasidis, I.; Giordano, D.; Souly, N.; Shah, M. Deep learning human mind for automated visual classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6809–6817.

- Tan, C.; Sun, F.; Zhang, W. Deep transfer learning for EEG-based brain computer interface. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 916–920.

- Xu, G.; Shen, X.; Chen, S.; Zong, Y.; Zhang, C.; Yue, H.; Liu, M.; Chen, F.; Che, W. A deep transfer convolutional neural network framework for EEG signal classification. IEEE Access 2019, 7, 112767–112776.

- Fahimi, F.; Zhang, Z.; Goh, W.B.; Lee, T.S.; Ang, K.K.; Guan, C. Inter-subject transfer learning with an end-to-end deep convolutional neural network for EEG-based BCI. J. Neural Eng. 2019, 16, 026007.

- Tang, J.; Liu, Y.; Hu, D.; Zhou, Z. Towards BCI-actuated smart wheelchair system. Biomed. Eng. Online 2018, 17, 1–22.

- Lawhern, V.J.; Solon, A.J.; Waytowich, N.R.; Gordon, S.M.; Hung, C.P.; Lance, B.J. EEGNet: A compact convolutional neural network for EEG-based brain–computer interfaces. J. Neural Eng. 2018, 15, 056013.

- Bashivan, P.; Bidelman, G.M.; Yeasin, M. Spectrotemporal dynamics of the EEG during working memory encoding and maintenance predicts individual behavioral capacity. Eur. J. Neurosci. 2014, 40, 3774–3784.

- Sprague, S.A.; McBee, M.T.; Sellers, E.W. The effects of working memory on brain–computer interface performance. Clin. Neurophysiol. 2016, 127, 1331–1341.

- Ramsey, N.F.; Van De Heuvel, M.P.; Kho, K.H.; Leijten, F.S. Towards human BCI applications based on cognitive brain systems: An investigation of neural signals recorded from the dorsolateral prefrontal cortex. IEEE Trans. Neural Syst. Rehabil. Eng. 2006, 14, 214–217.

- Cutrell, E.; Tan, D. BCI for passive input in HCI. In Proceedings of the CHI, Florence, Italy, 5–10 April 2008; Volume 8, pp. 1–3.

- Riccio, A.; Simione, L.; Schettini, F.; Pizzimenti, A.; Inghilleri, M.; Olivetti Belardinelli, M.; Mattia, D.; Cincotti, F. Attention and P300-based BCI performance in people with amyotrophic lateral sclerosis. Front. Hum. Neurosci. 2013, 7, 732.

- Schabus, M.D.; Dang-Vu, T.T.; Heib, D.P.J.; Boly, M.; Desseilles, M.; Vandewalle, G.; Schmidt, C.; Albouy, G.; Darsaud, A.; Gais, S.; et al. The fate of incoming stimuli during NREM sleep is determined by spindles and the phase of the slow oscillation. Front. Neurol. 2012, 3, 40.

- Sun, Y.; Ye, N.; Xu, X. EEG analysis of alcoholics and controls based on feature extraction. In Proceedings of the 2006 8th International Conference on Signal Processing, Guilin, China, 16–20 November 2006; Volume 1.

- Nguyen, P.; Tran, D.; Huang, X.; Sharma, D. A proposed feature extraction method for EEG-based person identification. In Proceedings of the 2012 International Conference on Artificial Intelligence, Las Vegas, NV, USA, 16–19 July 2012; pp. 826–831.

- Kjøbli, J.; Tyssen, R.; Vaglum, P.; Aasland, O.; Grønvold, N.T.; Ekeberg, O. Personality traits and drinking to cope as predictors of hazardous drinking among medical students. J. Stud. Alcohol 2004, 65, 582–585.

- Huang, X.; Altahat, S.; Tran, D.; Sharma, D. Human identification with electroencephalogram (EEG) signal processing. In Proceedings of the 2012 International Symposium on Communications and Information Technologies (ISCIT), Gold Coast, QLD, Australia, 2–5 October 2012; pp. 1021–1026.

- Palaniappan, R.; Raveendran, P.; Omatu, S. VEP optimal channel selection using genetic algorithm for neural network classification of alcoholics. IEEE Trans. Neural Netw. 2002, 13, 486–491.

- Zhong, S.; Ghosh, J. HMMs and coupled HMMs for multi-channel EEG classification. In Proceedings of the 2002 International Joint Conference on Neural Networks, Honolulu, HI, USA, 12–17 May 2002; Volume 2, pp. 1154–1159.

- Wang, H.; Li, Y.; Hu, X.; Yang, Y.; Meng, Z.; Chang, K.M. Using EEG to Improve Massive Open Online Courses Feedback Interaction. In AIED Workshops; Springer: Berlin/Heidelberg, Germany, 2013.

- Wang, H. Confused Student EEG Brainwave Data, EEG Data from 10 Students Watching MOOC Videos. 2018. Available online: https://www.kaggle.com/wanghaohan/confused-eeg/ (accessed on 19 August 2021).

- Fahimirad, M.; Kotamjani, S.S. A review on application of artificial intelligence in teaching and learning in educational contexts. Int. J. Learn. Dev. 2018, 8, 106–118.

- Kanoga, S.; Nakanishi, M.; Mitsukura, Y. Assessing the effects of voluntary and involuntary eyeblinks in independent components of electroencephalogram. Neurocomputing 2016, 193, 20–32.

- Abe, K.; Sato, H.; Ohi, S.; Ohyama, M. Feature parameters of eye blinks when the sampling rate is changed. In Proceedings of the TENCON 2014–2014 IEEE Region 10 Conference, Bangkok, Thailand, 22–25 October 2014; pp. 1–6.

- Narejo, S.; Pasero, E.; Kulsoom, F. EEG based eye state classification using deep belief network and stacked autoencoder. Int. J. Electr. Comput. Eng. 2016, 6, 3131–3141.

- Reddy, T.K.; Behera, L. Online eye state recognition from EEG data using deep architectures. In Proceedings of the 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Budapest, Hungary, 9–12 October 2016; pp. 712–717.

- Lim, C.K.A.; Chia, W.C.; Chin, S.W. A mobile driver safety system: Analysis of single-channel EEG on drowsiness detection. In Proceedings of the 2014 International Conference on Computational Science and Technology (ICCST), Kota Kinabalu, Malaysia, 27–28 August 2014; pp. 1–5.

- Chun, J.; Bae, B.; Jo, S. BCI based hybrid interface for 3D object control in virtual reality. In Proceedings of the 2016 4th International Winter Conference on Brain-Computer Interface (BCI), Gangwon, Korea, 22–24 February 2016; pp. 1–4.

- Agarwal, M.; Sivakumar, R. Blink: A fully automated unsupervised algorithm for eye-blink detection in EEG signals. In Proceedings of the 2019 57th Annual Allerton Conference on Communication, Control, and Computing (Allerton), Monticello, IL, USA, 24–27 September 2019; pp. 1113–1121.

- Andreev, A.; Cattan, G.; Congedo, M. Engineering study on the use of Head-Mounted display for Brain-Computer Interface. arXiv 2019, arXiv:1906.12251.

- Agarwal, M.; Sivakumar, R. Charge for a whole day: Extending battery life for BCI wearables using a lightweight wake-up command. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–14.

- Rösler, O.; Suendermann, D. A First Step towards Eye State Prediction Using EEG. 2013. Available online: https://www.kaggle.com/c/vibcourseml2020/data/ (accessed on 19 August 2021).

- Zhang, Y.; Xu, P.; Guo, D.; Yao, D. Prediction of SSVEP-based BCI performance by the resting-state EEG network. J. Neural Eng. 2013, 10, 066017.

- Hamilton, C.R.; Shahryari, S.; Rasheed, K.M. Eye state prediction from EEG data using boosted rotational forests. In Proceedings of the 2015 IEEE 14th International Conference on Machine Learning and Applications (ICMLA), Miami, FL, USA, 9–11 December 2015; pp. 429–432.

- Kim, Y.; Lee, C.; Lim, C. Computing intelligence approach for an eye state classification with EEG signal in BCI. In Proceedings of the 2015 International Conference on Software Engineering and Information Technology (SEIT2015), Guilin, China, 26–28 June 2016; pp. 265–270.

- Agarwal, M. Publicly Available EEG Datasets. 2021. Available online: https://openbci.com/community/publicly-available-eeg-datasets/ (accessed on 19 August 2021).

- Pan, J.; Li, Y.; Gu, Z.; Yu, Z. A comparison study of two P300 speller paradigms for brain–computer interface. Cogn. Neurodynamics 2013, 7, 523–529.

- Vareka, L.; Bruha, P.; Moucek, R. Event-related potential datasets based on a three-stimulus paradigm. GigaScience 2014, 3, 2047-217X-3-35.

- Gao, W.; Guan, J.A.; Gao, J.; Zhou, D. Multi-ganglion ANN based feature learning with application to P300-BCI signal classification. Biomed. Signal Process. Control. 2015, 18, 127–137.

- Marathe, A.R.; Ries, A.J.; Lawhern, V.J.; Lance, B.J.; Touryan, J.; McDowell, K.; Cecotti, H. The effect of target and non-target similarity on neural classification performance: A boost from confidence. Front. Neurosci. 2015, 9, 270.

- Shin, J.; Von Lühmann, A.; Kim, D.W.; Mehnert, J.; Hwang, H.J.; Müller, K.R. Simultaneous acquisition of EEG and NIRS during cognitive tasks for an open access dataset. Sci. Data 2018, 5, 1–16.

- Håkansson, B.; Reinfeldt, S.; Eeg-Olofsson, M.; Östli, P.; Taghavi, H.; Adler, J.; Gabrielsson, J.; Stenfelt, S.; Granström, G. A novel bone conduction implant (BCI): Engineering aspects and pre-clinical studies. Int. J. Audiol. 2010, 49, 203–215.

- Guger, C.; Krausz, G.; Allison, B.Z.; Edlinger, G. Comparison of dry and gel based electrodes for P300 brain–computer interfaces. Front. Neurosci. 2012, 6, 60.

- Shahriari, Y.; Vaughan, T.M.; McCane, L.; Allison, B.Z.; Wolpaw, J.R.; Krusienski, D.J. An exploration of BCI performance variations in people with amyotrophic lateral sclerosis using longitudinal EEG data. J. Neural Eng. 2019, 16, 056031.

- McCane, L.M.; Sellers, E.W.; McFarland, D.J.; Mak, J.N.; Carmack, C.S.; Zeitlin, D.; Wolpaw, J.R.; Vaughan, T.M. Brain-computer interface (BCI) evaluation in people with amyotrophic lateral sclerosis. Amyotroph. Lateral Scler. Front. Degener. 2014, 15, 207–215.

- Miller, K.J.; Schalk, G.; Hermes, D.; Ojemann, J.G.; Rao, R.P. Spontaneous decoding of the timing and content of human object perception from cortical surface recordings reveals complementary information in the event-related potential and broadband spectral change. PLoS Comput. Biol. 2016, 12, e1004660.

- Bobrov, P.; Frolov, A.; Cantor, C.; Fedulova, I.; Bakhnyan, M.; Zhavoronkov, A. Brain-computer interface based on generation of visual images. PLoS ONE 2011, 6, e20674.

- Cancino, S.; Saa, J.D. Electrocorticographic signals classification for brain computer interfaces using stacked-autoencoders. Applications of Machine Learning 2020. Int. Soc. Opt. Photonics 2020, 11511, 115110J.

- Wei, Q.; Liu, Y.; Gao, X.; Wang, Y.; Yang, C.; Lu, Z.; Gong, H. A Novel c-VEP BCI Paradigm for Increasing the Number of Stimulus Targets Based on Grouping Modulation With Different Codes. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 1178–1187.

- Bin, G.; Gao, X.; Wang, Y.; Li, Y.; Hong, B.; Gao, S. A high-speed BCI based on code modulation VEP. J. Neural Eng. 2011, 8, 025015.

- Gembler, F.W.; Benda, M.; Rezeika, A.; Stawicki, P.R.; Volosyak, I. Asynchronous c-VEP communication tools—Efficiency comparison of low-target, multi-target and dictionary-assisted BCI spellers. Sci. Rep. 2020, 10, 17064.

- Spüler, M.; Rosenstiel, W.; Bogdan, M. Online adaptation of a c-VEP brain-computer interface (BCI) based on error-related potentials and unsupervised learning. PLoS ONE 2012, 7, e51077.

- Kapeller, C.; Hintermüller, C.; Abu-Alqumsan, M.; Prückl, R.; Peer, A.; Guger, C. A BCI using VEP for continuous control of a mobile robot. In Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013; pp. 5254–5257.

- Spüler, M.; Rosenstiel, W.; Bogdan, M. One Class SVM and Canonical Correlation Analysis increase performance in a c-VEP based Brain-Computer Interface (BCI). ESANN 2012.

- Bin, G.; Gao, X.; Wang, Y.; Hong, B.; Gao, S. VEP-based brain-computer interfaces: Time, frequency, and code modulations [Research Frontier]. IEEE Comput. Intell. Mag. 2009, 4, 22–26.

- Zhang, Y.; Yin, E.; Li, F.; Zhang, Y.; Tanaka, T.; Zhao, Q.; Cui, Y.; Xu, P.; Yao, D.; Guo, D. Two-stage frequency recognition method based on correlated component analysis for SSVEP-based BCI. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 1314–1323.

- Wang, Y.; Chen, X.; Gao, X.; Gao, S. A benchmark dataset for SSVEP-based brain–computer interfaces. IEEE Trans. Neural Syst. Rehabil. Eng. 2016, 25, 1746–1752.