Green IoT and Edge AI as Key Technological Enablers for a Sustainable Digital Transition towards a Smart Circular Economy: An Industry 5.0 Use Case

Abstract

1. Introduction

- The essential concepts and background knowledge necessary for the development of Edge-AI G-IoT systems are detailed.

- The most recent Edge-AI G-IoT communications architectures are described together with their main subsystems to allow future researchers to design their own systems.

- The latest trends on the convergence of AI and edge computing are detailed. Moreover, a cross-analysis is provided in order to determine the main issues that arise when combining G-IoT and Edge-AI.

- The energy consumption of a practical Industry 5.0 application case is analyzed to illustrate the theoretical concepts introduced in the article.

- The most relevant future challenges for the successful development of Edge-AI G-IoT systems are outlined to provide a roadmap for future researchers.

2. Background

2.1. Digital Circular Economy

2.1.1. Circular Economy

2.1.2. Digital Circular Economy (DCE)

2.1.3. G-IoT and Edge-AI for Digital Circular Economy (DCE)

2.2. Industry 5.0 and Society 5.0

2.3. Technology Enablers

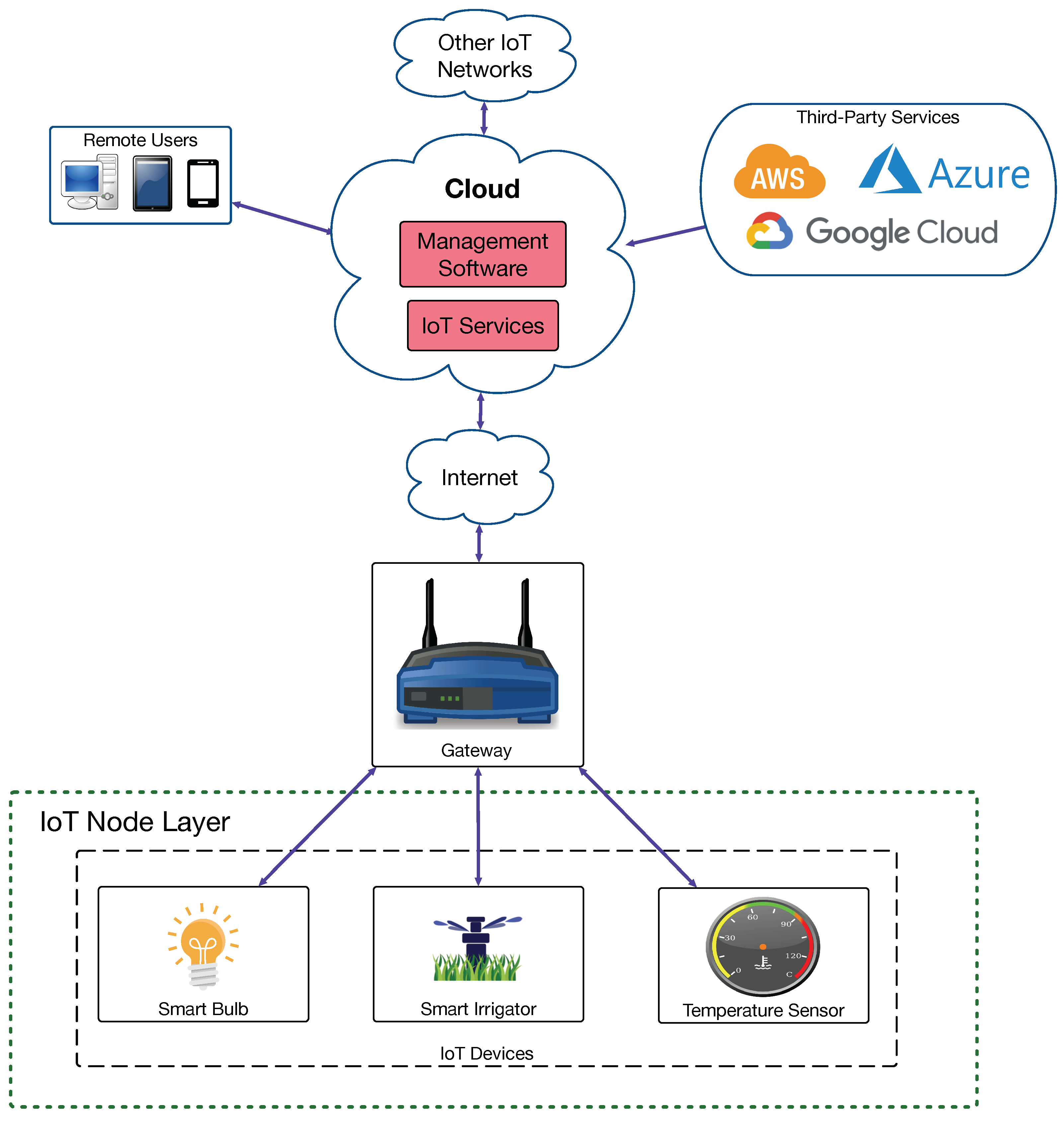

2.3.1. IoT and IIoT

2.3.2. Cloud and Edge Computing

2.3.3. AI

3. Energy Efficiency for IoT: Developing Green IoT Systems

3.1. Communications Architectures for G-IoT Systems

3.2. Types of G-IoT Devices

3.3. Hardware of the Control and Power Subsystems

3.4. Communications Subsystem

- ZigBee [56]. It was conceived for deploying Wireless Sensor Networks (WSNs) whose nodes achieve low overall energy consumption by sleeping most of the time and waking up only periodically. In addition, ZigBee networks are easy to scale, since they can create mesh networks to extend the IoT node communications range.

- LoRa (Long Range) and LoRaWAN (Long-Range Wide Area Network) [57]. These technologies have been devised to deploy wide-area IoT networks while providing low energy consumption.

- Ultrawideband (UWB). It is able to provide low-energy wide-bandwidth communications as well as centimeter-level positioning accuracy in short-range indoor applications. Mazhar et al. [58] evaluate different UWB positioning methods, algorithms, and implementations. The authors conclude that some techniques (e.g., hybrid techniques combining both Time-of-Arrival (TOA) and Angle-of-Arrival (AOA)), although more complex, are able to offer additional advantages in terms of power consumption and performance.

- Wi-Fi HaLow/IEEE 802.11ah. In contrast to conventional Wi-Fi, it offers very low energy consumption by adopting novel power-saving strategies that ensure an efficient use of the energy resources available in IoT nodes. It was specifically created to address the needs of Machine-to-Machine (M2M) communications that involve many devices (e.g., hundreds or thousands), long range, sporadic traffic, and substantial energy constraints [59].
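The duty-cycling idea behind several of the technologies listed above (nodes that sleep most of the time and wake up periodically) can be illustrated with a minimal sketch. The function names and all numeric values below are hypothetical and chosen only for illustration; they are not taken from any datasheet or from the measurements of this article, and the battery model is idealized.

```python
def average_current_ma(active_ma: float, sleep_ma: float, duty_cycle: float) -> float:
    """Time-weighted mean current of a node that is awake a fraction `duty_cycle` of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be in [0, 1]")
    return duty_cycle * active_ma + (1.0 - duty_cycle) * sleep_ma


def battery_life_hours(capacity_mah: float, avg_current_ma: float) -> float:
    """Idealized battery life: ignores self-discharge, temperature, and voltage effects."""
    if avg_current_ma <= 0.0:
        raise ValueError("avg_current_ma must be positive")
    return capacity_mah / avg_current_ma


# Hypothetical node: 20 mA when awake, 5 uA when asleep, awake 1% of the time.
avg = average_current_ma(active_ma=20.0, sleep_ma=0.005, duty_cycle=0.01)
life = battery_life_hours(capacity_mah=1000.0, avg_current_ma=avg)
print(f"average current: {avg:.5f} mA, idealized battery life: {life:.0f} h")
```

Under these assumed figures the active phase dominates the average current even though it only lasts 1% of the time, which is why shortening wake-up periods tends to matter more than further reducing the sleep current.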

3.5. Green Control Software

3.6. Energy Efficient Security Mechanisms

3.7. G-IoT Carbon Footprint

- Hardware power consumption. IoT hardware is the basis of the IoT network, so it should be as energy efficient as possible while preserving its functionality and the required computing power.

- IoT node software energy consumption. Software needs to be optimized together with the hardware, so developers need to introduce energy-aware constraints during the development of G-IoT solutions. Such optimizations are especially critical for certain digital signal processing tasks such as compression, feature extraction, or machine learning training [80].

- IoT protocol energy efficiency. The IoT relies on protocols that enable communications among the multiple nodes and routing devices involved in an IoT network. As a consequence, such protocols need to be energy efficient in terms of software implementation and should minimize the usage of communication interfaces. For instance, Peer-to-Peer (P2P) protocols are well-known for being intensive in terms of the number of communications they manage, although some research has been dedicated to reducing their energy consumption [81,82,83,84,85].

- RF spectrum management optimization. The increasing number of deployed IoT nodes will lead to the congestion of the RF spectrum, so its management will need to be further optimized to minimize node energy consumption [77].

- Datacenter sustainability. As the demand for IoT devices grows, ever-increasing amounts of energy are needed to power the datacenters where remote cloud services are provided. This issue is especially critical for corporations such as Google or Microsoft, which rely on huge datacenters. In fact, the U.S. Environmental Protection Agency (EPA) already warned about this problem in 2007 [86]. As a consequence of such a warning, carbon footprint estimations were performed in order to determine the emissions related to the construction and operation of a datacenter [87].

- Data storage energy usage. In cloud-centric architectures, most of the data are stored in a server or in a farm of servers in a remote datacenter, but some of the latest architectures decentralize data storage to prevent single-point-of-failure issues and avoid high operation costs. Thus, for such decentralized architectures, G-IoT requires minimizing node energy consumption and communications. This is not so easy, since devices are physically scattered, and they usually make use of heterogeneous platforms whose energy optimization may differ significantly.

- Use of green power sources. IoT networks can become greener by making use of renewable power sources such as wind, solar, or thermal energy. IoT nodes can also make use of energy-harvesting techniques to minimize their dependence on batteries or extend their battery life [46,47,48,49]. Moreover, battery manufacturing and end-of-life processes have their own carbon footprint and impact the environment with their toxicity. Furthermore, IoT architectures can be powered in part through decentralized green smart grids, which can collaborate with one another to distribute the generated energy [78].

- Green task offloading. Traditional centralized architectures have tended to offload the computing and storage resources of IoT devices to a remote cloud, which requires additional power consumption and network communications that are proportional to the tasks to be performed and to the latency of the network. In contrast, architectures such as the ones described in Section 3.1 can selectively choose which tasks to offload to the cloud. Thus, most of the node requests are processed at the edge of the network, which reduces latency and network resource consumption due to the decrease in the number of involved gateways and routers [88]. Nonetheless, G-IoT designers must be aware of the energy implications of decentralized systems [89].
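The selective offloading described in the last item can be sketched as a simple energy comparison made by the node. This is only an illustrative model under strong assumptions: it accounts solely for node-side energy (local computation versus radio transmission), it ignores latency as well as the energy spent by gateways, routers, and the cloud, and the names `Task`, `joules_per_cycle`, and `joules_per_bit` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    cpu_cycles: float    # work needed to run the task on the node
    payload_bits: float  # data the node must transmit if the task is offloaded


def local_energy_j(task: Task, joules_per_cycle: float) -> float:
    """Energy the node spends when it executes the task itself."""
    return task.cpu_cycles * joules_per_cycle


def offload_energy_j(task: Task, joules_per_bit: float) -> float:
    """Energy the node spends on transmitting the task input (node side only)."""
    return task.payload_bits * joules_per_bit


def should_offload(task: Task, joules_per_cycle: float, joules_per_bit: float) -> bool:
    """Offload only when transmitting costs the node less energy than computing."""
    return offload_energy_j(task, joules_per_bit) < local_energy_j(task, joules_per_cycle)


# Hypothetical tasks and energy costs, for illustration only.
heavy_compute = Task(cpu_cycles=1e9, payload_bits=8e3)   # much computation, little data
heavy_payload = Task(cpu_cycles=1e6, payload_bits=8e6)   # little computation, much data
print(should_offload(heavy_compute, joules_per_cycle=1e-9, joules_per_bit=1e-6))
print(should_offload(heavy_payload, joules_per_cycle=1e-9, joules_per_bit=1e-6))
```

A complete assessment would also need to include the energy consumed outside the node, which is precisely the concern raised about decentralized systems.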

4. AI and Edge Computing Convergence

- DL can automatically identify and select the features that will be used in the classification stage. In contrast, traditional ML requires the features to be provided manually (i.e., automatic feature learning vs. manual feature engineering).

- DL requires high-end hardware and large training data sets to deliver accurate results, as opposed to traditional ML, which can operate on low-end hardware with smaller data sets in the training stage (i.e., ML is typically adopted in resource-constrained embedded hardware).

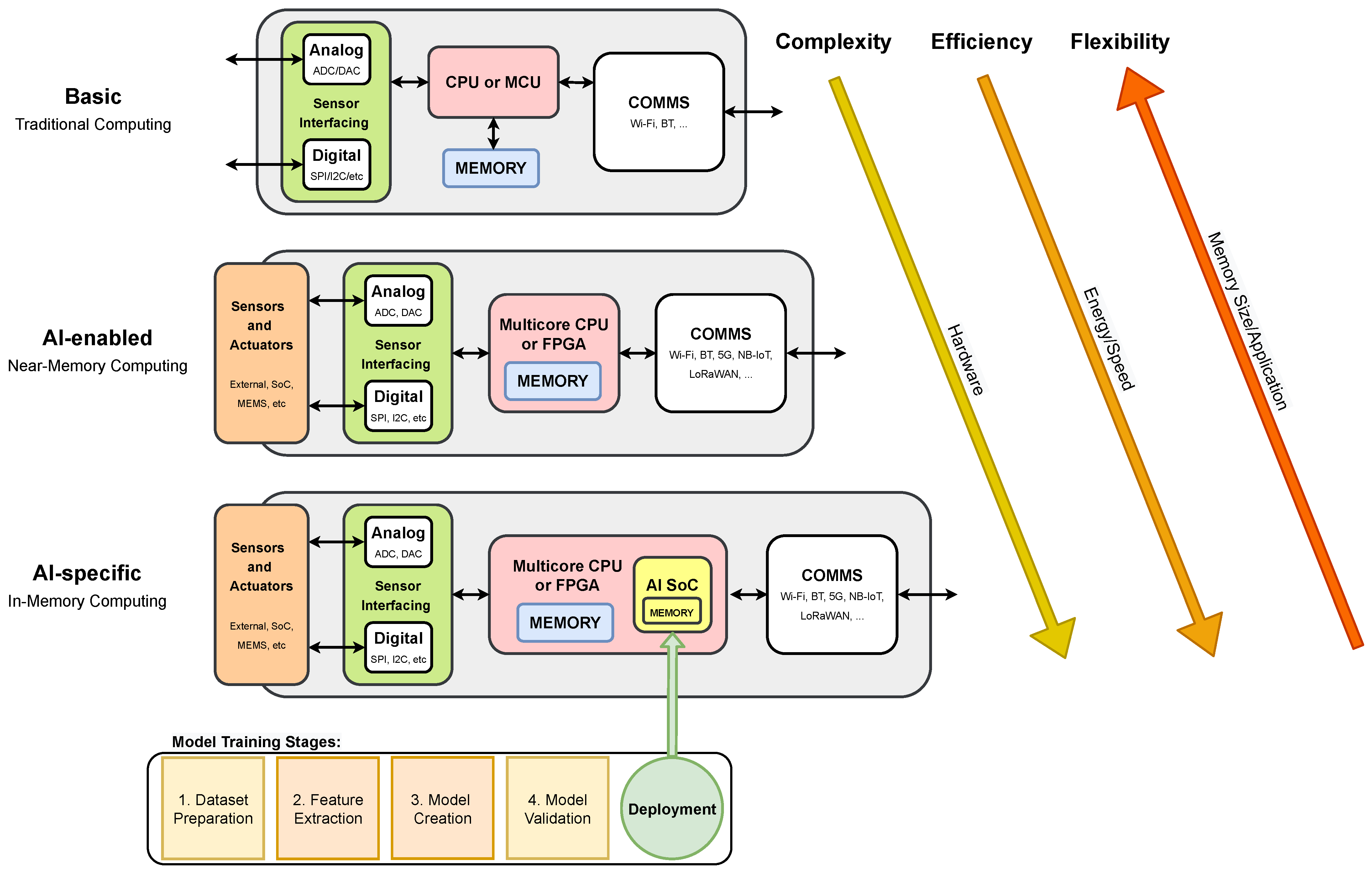

4.1. AI-Enabled IoT Hardware

4.1.1. Common Edge-AI Device Architectures

4.1.2. Embedded AI SoC Architectures

4.1.3. AI-Enabled IoT Hardware Selection Criteria

- Reliable Connectivity: data can be gathered and processed on the same device instead of relying on a network connection to transmit data to the cloud, which reduces the probability of network connection problems.

- Reduced Latency: when processing is performed locally, all communications-related latencies are avoided, resulting in an overall latency that converges to the inference latency.

- Increased Security and Privacy: reducing the need for communicating between the IoT edge device and the cloud means reducing the risk that data will be compromised, lost, stolen, or leaked.

- Bandwidth Efficiency: reducing the communications between IoT edge devices and the cloud also reduces bandwidth needs and the overall communications cost.

4.2. Edge Intelligence or Edge-AI

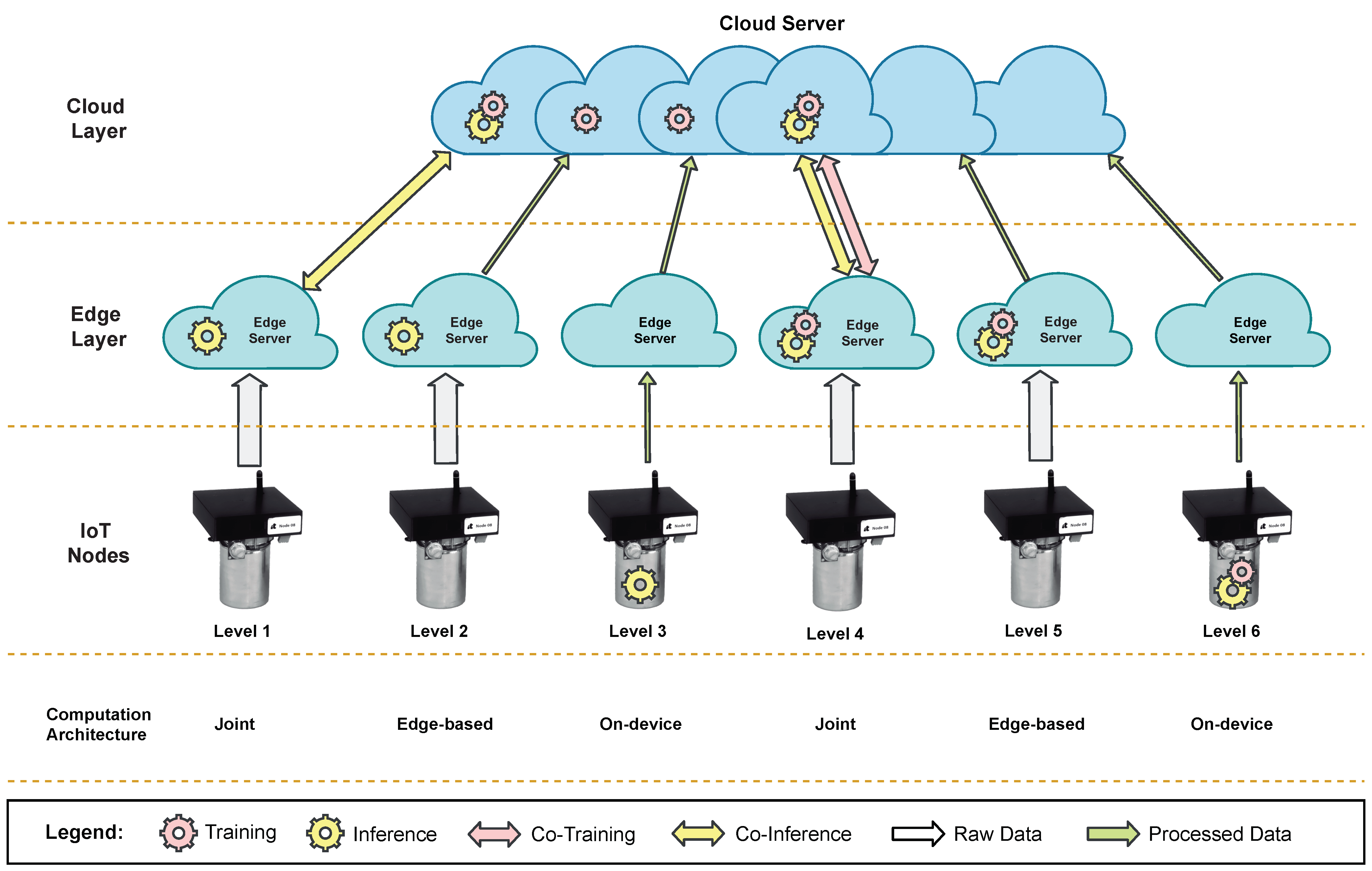

4.2.1. Model Inference Architectures

- (i) On-device computation: it relies on AI techniques (e.g., Deep Neural Networks (DNNs)) that are executed on the end device.

- (ii) Edge-based computation: it computes on edge devices the information collected from end devices.

- (iii) Joint computation: it allows for processing data on the cloud during training and inference stages.
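As a rough illustration of how a designer might choose among the three options above, the following sketch applies a memory-fit rule. The rule itself, the function name, and the parameters are assumptions made for this example; real deployments would also weigh latency, energy, and privacy.

```python
from enum import Enum


class InferencePlacement(Enum):
    ON_DEVICE = "on-device computation"
    EDGE_BASED = "edge-based computation"
    JOINT = "joint computation"


def choose_placement(model_kib: float, device_free_kib: float,
                     edge_free_kib: float) -> InferencePlacement:
    """Hypothetical rule: run the model at the closest tier where it fits in memory."""
    if model_kib <= device_free_kib:
        return InferencePlacement.ON_DEVICE
    if model_kib <= edge_free_kib:
        return InferencePlacement.EDGE_BASED
    # The model fits on neither the end device nor the edge device: involve the cloud.
    return InferencePlacement.JOINT


# Hypothetical sizes in KiB, for illustration only.
print(choose_placement(model_kib=200, device_free_kib=256, edge_free_kib=4_000_000))
print(choose_placement(model_kib=50_000, device_free_kib=256, edge_free_kib=4_000_000))
```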

4.2.2. Edge-AI Levels

4.2.3. Embedded ML

- Visual Wake Words (VWW): classification task for binary images that detects the presence of a person. For instance, an application use case is in-home security monitoring.

- Image Classification (IC): small image classification benchmark with 10 classes, with several use cases in smart video recognition applications.

- Keyword Spotting (KWS): uses a neural network to detect keywords from a spectrogram, with several use cases in consumer end devices, such as virtual assistants.

- Anomaly Detection (AD): uses a neural network to identify anomalies in machine operating sounds, and has several application cases in industrial manufacturing (e.g., predictive maintenance, asset tracking, and monitoring).

4.3. Edge-AI Computational Cost

4.4. Measuring Edge-AI Energy Consumption and Carbon Footprint

4.4.1. Energy Consumption

4.4.2. CO2 Emissions

4.5. Measuring Edge-AI Performance

4.5.1. Accuracy

4.5.2. Memory Bandwidth

4.5.3. Energy Efficiency

4.5.4. Execution Time

5. Cross-Analysis of G-IoT and Edge-AI: Key Findings

- Communications between G-IoT nodes and Edge-AI devices are essential, so developers should consider the challenges related to the use of energy-efficient transceivers and fast-response architectures. Thus, researchers need to contemplate aspects such as the use of low-power communications technologies (e.g., ZigBee, LoRa, UWB, and Wi-Fi HaLow), the management of the RF spectrum, or the design of distributed AI training, learning algorithms, and architectures that achieve low-latency inference (either distributed or decentralized [107]).

- The most straightforward way to implement Edge-AI systems is to deploy the entire model on edge devices, which eliminates any communications overhead. However, when the model size is large or the computational requirements are very high, this approach is unfeasible, and it is necessary to include additional techniques that involve cooperation among nodes to accomplish the different AI training and inference tasks (e.g., federated learning techniques [107]). Such techniques should minimize the network traffic load and communications overhead in resource-constrained devices.

- Edge-AI G-IoT systems should consider that the different nodes of the architecture (e.g., mist nodes, edge computing devices, and cloudlets) have heterogeneous capabilities in terms of communications, computation, storage, and power; therefore, the tasks to be performed should be distributed in a smart way among the available devices according to their capabilities.

- Besides heterogeneity, developers should take into account that G-IoT node hardware constrains the performance of the developed Edge-AI systems. Such hardware must be far more powerful than traditional IoT nodes and provide a suitable trade-off between performance and power consumption. In addition, such hardware should be customized to the selected Edge-AI G-IoT architecture and application.

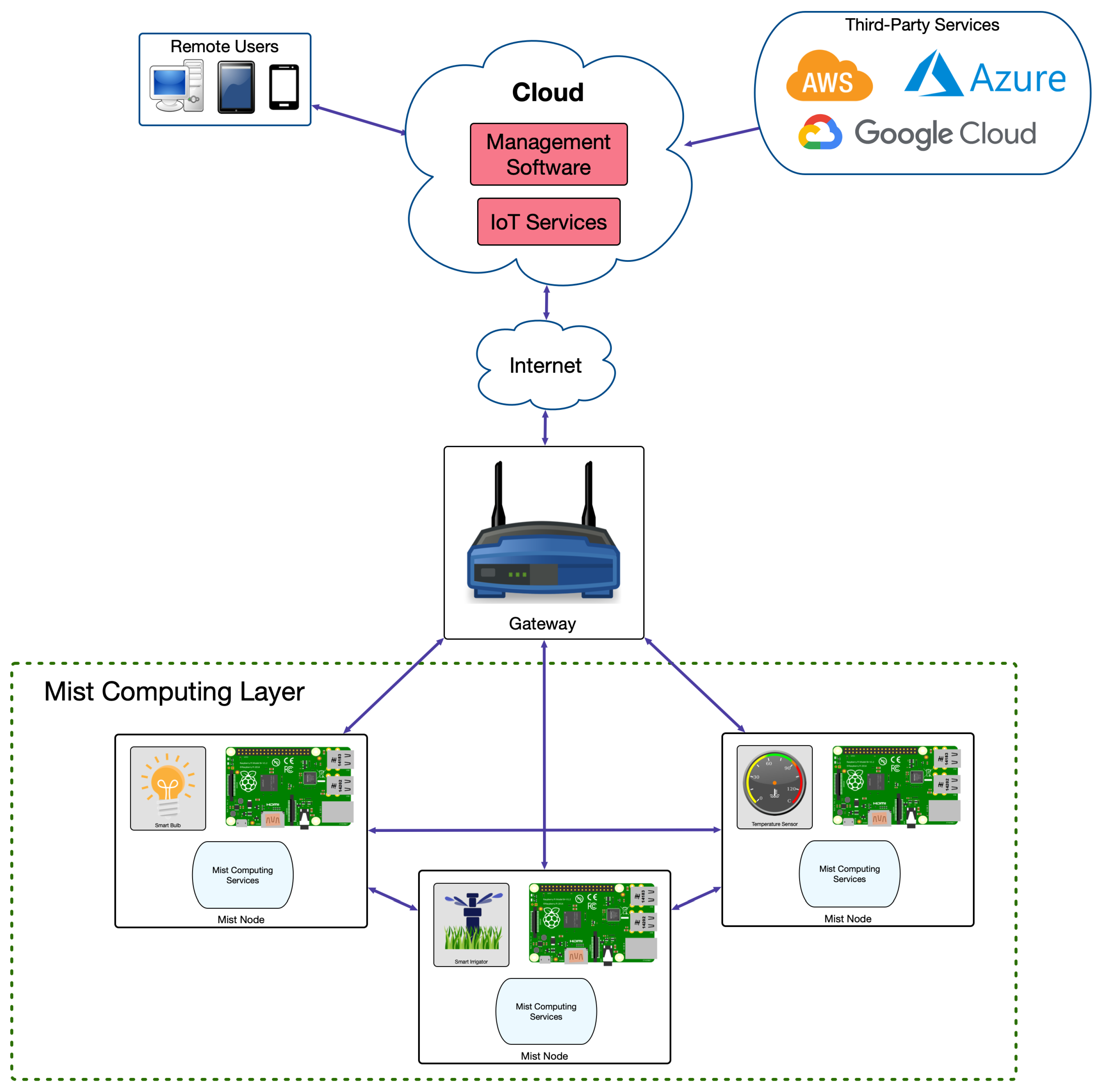

- Currently, most G-IoT systems rely on traditional cloud computing architectures, which do not meet some of the needs of Edge-AI G-IoT applications in terms of high availability, low latency, high network bandwidth, and low power consumption. Moreover, current cloud-based approaches may be compromised by cyberattacks; therefore, new architectures such as the ones based on fog, mist, and edge computing should be considered to increase the robustness against cyberattacks and to allow for choosing which AI tasks to offload to the cloud, if any, while reducing network resource consumption.

- Green power sources and energy-harvesting capabilities for Edge-AI G-IoT systems still need to be studied further. Although batteries are typically used to meet power requirements, future developers should analyze the use of renewable power sources or energy-harvesting mechanisms to minimize energy consumption. In addition, the use of decentralized green smart grids for Edge-AI G-IoT architectures can be considered.

- High-security mechanisms are usually not efficient in terms of energy consumption, so it is important to analyze their performance and carry out practical energy measurements for the developed Edge-AI G-IoT systems.

- Developers should consider using energy efficiency metrics for the developed AI solutions. For instance, in [123] the authors propose four key indicators for an objective assessment of AI models (i.e., accuracy, memory bandwidth, energy efficiency, and execution time). The trade-off between such metrics will depend on the environment where the model will be employed (e.g., "increased safety" scenarios impose low execution time).
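The trade-off among the four indicators mentioned in the last item (accuracy, memory bandwidth, energy efficiency, and execution time) can be sketched as a constrained selection. The selection rule, the field names, the use of energy per inference as a proxy for energy efficiency, and the candidate values are all assumptions made for illustration; they are not the procedure proposed in [123].

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class ModelMetrics:
    name: str
    accuracy: float                  # fraction of correct predictions, in [0, 1]
    memory_bandwidth_mb_s: float     # recorded for reporting; unused by the rule below
    energy_per_inference_mj: float   # assumed proxy for energy efficiency
    execution_time_ms: float


def pick_model(candidates: Iterable[ModelMetrics],
               max_execution_time_ms: float) -> Optional[ModelMetrics]:
    """Keep the models that meet the deadline, then prefer accuracy, then lower energy."""
    feasible = [m for m in candidates if m.execution_time_ms <= max_execution_time_ms]
    if not feasible:
        return None
    return max(feasible, key=lambda m: (m.accuracy, -m.energy_per_inference_mj))


# Hypothetical candidates, for illustration only.
small = ModelMetrics("small", 0.85, 50.0, 5.0, 20.0)
large = ModelMetrics("large", 0.95, 400.0, 60.0, 200.0)
print(pick_model([small, large], max_execution_time_ms=50.0))   # safety-like deadline
print(pick_model([small, large], max_execution_time_ms=500.0))  # relaxed deadline
```

With a tight deadline, as in an "increased safety" scenario, only the faster model qualifies; with a relaxed deadline the more accurate model is preferred.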

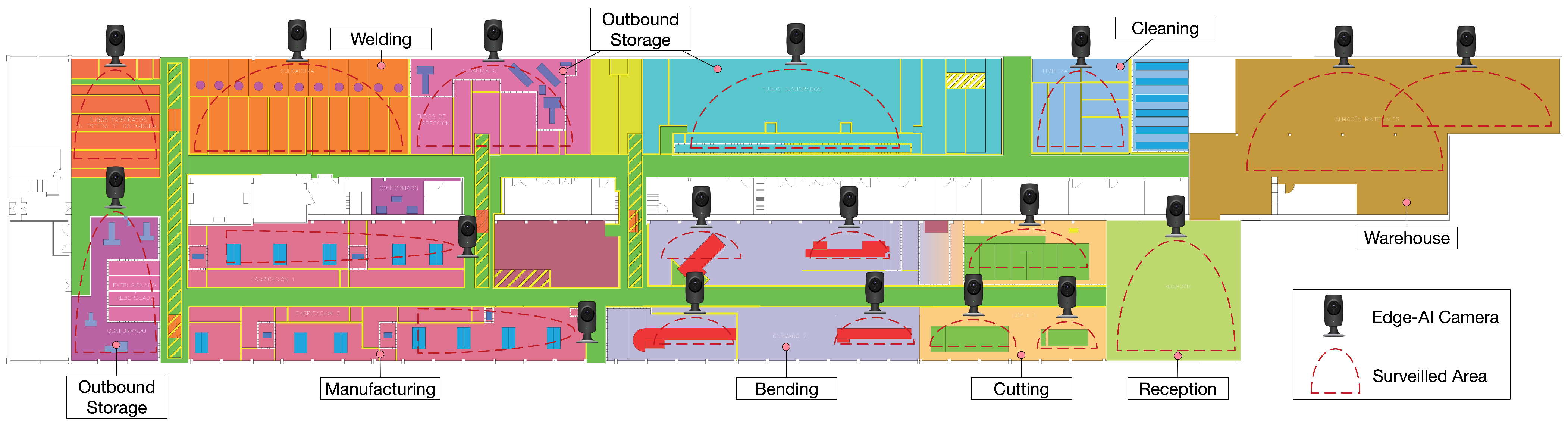

6. Application Case: Developing a Smart Workshop

6.1. Workshop Characterization and Edge-AI System Main Goals

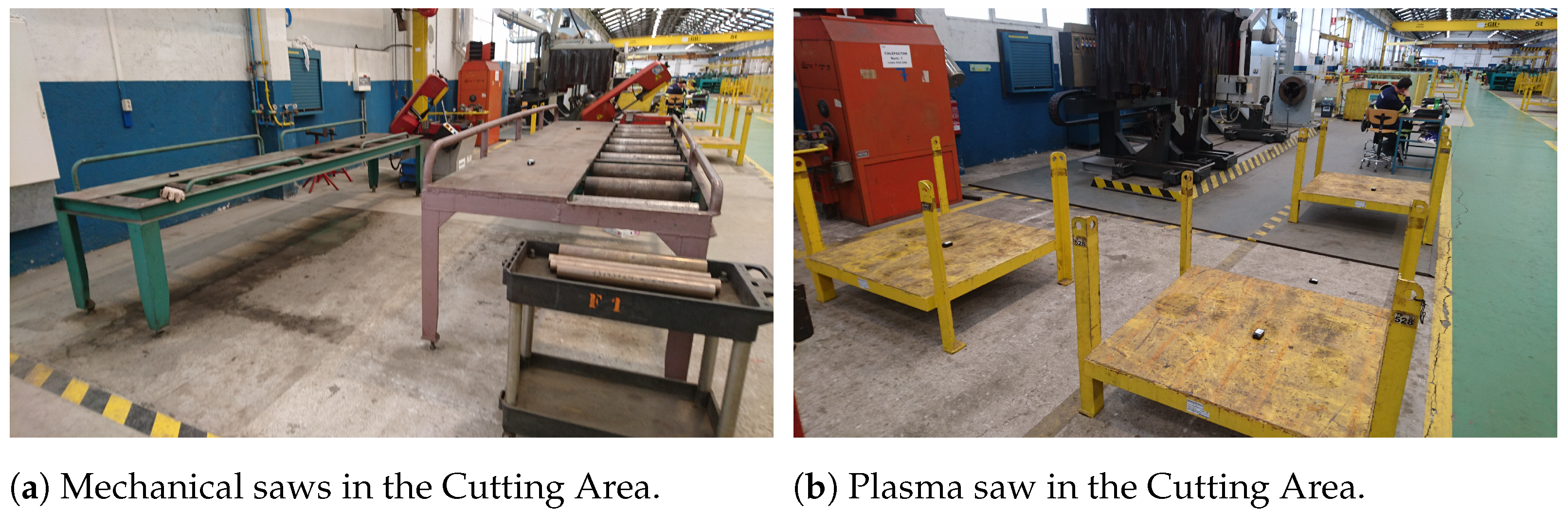

- First, raw pipes are stored in the Reception Area (shown in Figure 6a), from which workers collect them as needed. If the pipes are delivered with dirt or grease, they are cleaned in the Cleaning Area (Figure 6b) before being stored in the Reception Area. Operators need to keep away from the Cleaning Area unless authorized because of the presence of dangerous chemical products (e.g., hydrochloric acid, caustic soda) and hot pressurized water.

- Second, every pipe is cut in the Cutting Area according to the required dimensions. Very powerful (and dangerous) mechanical and plasma saws (shown in Figure 7a,b) are used in the Cutting Area. It is important to note that pipes are moved from the Reception Area to the Cutting Area (or from one area to any other area) by stacking them on pallets, which are carried by big gantries installed in the ceiling of the workshop (several pallets can be seen in the foreground of Figure 7b).

- Third, pipes are bent in the Bending Area. There are three large bending machines in such an area. Operators always need to keep a safe distance and wear safety glasses when operating a bending machine.

- Fourth, pipes are cleaned and moved to the Manufacturing Area, where accessories are added. For instance, operators may need to weld a valve to a pipe. Welding requires taking specific safety measures, and only authorized operators can access the welding area when someone is working.

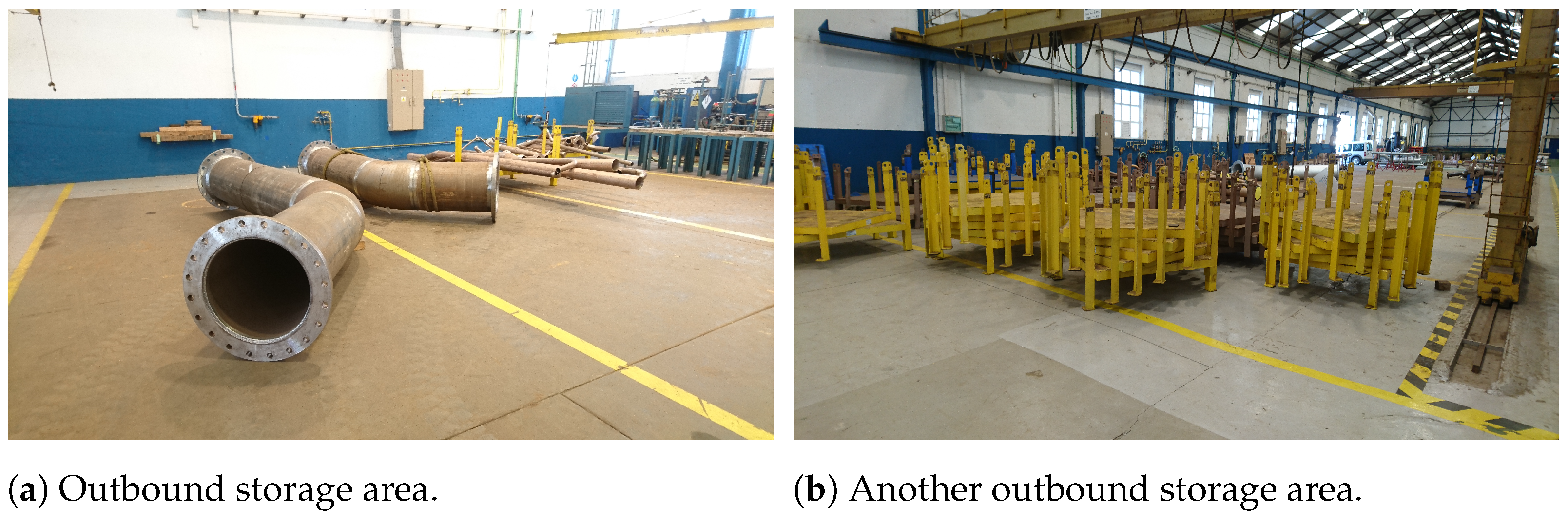

- Finally, pipes are stacked into pallets, packed, and then stored in two different areas of the workshop (shown in Figure 8a,b).

- Increased Safety: automatically detect humans in the proximity of machinery that is operating. After detection, a sound warning should be physically generated in the surrounding zone. After triggering the sound warning, if the detection persists and the estimated distance between the operating machine and the human does not increase, a shutdown command should be sent to the operating machine.

- Operation Tracking: automatically detect and track human operators and moving machinery. The tracking information is then used for the continuous improvement of manufacturing processes.

- Smarter use of resources: the detection of the presence of operators allows for determining when machinery should be working and when it should be shut down.

- Reduction of total annual greenhouse gas emissions: the smarter use of resources decreases energy consumption and, as a consequence, carbon footprint.

- Enhanced process safety: human proximity detection allows for protecting against possible incidents or accidents with the deployed industrial devices and machinery.
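The "Increased Safety" behavior listed above (warn on detection, then shut down if the detection persists and the estimated distance does not increase) can be sketched as a small state machine. The class and method names are hypothetical, and two points are assumptions rather than part of the described system: persistence is evaluated on consecutive updates instead of a time window, and the warning simply continues while the person moves away.

```python
from enum import Enum
from typing import Optional


class Action(Enum):
    NONE = "none"
    SOUND_WARNING = "sound_warning"
    SHUTDOWN = "shutdown"


class SafetyMonitor:
    """Tracks one operating machine; fed with the estimated human distance per frame."""

    def __init__(self) -> None:
        self._last_distance_m: Optional[float] = None  # None: no warning is active

    def update(self, human_distance_m: Optional[float]) -> Action:
        """`human_distance_m` is None when no human is detected near the machine."""
        if human_distance_m is None:
            self._last_distance_m = None  # detection ended: reset the warning state
            return Action.NONE
        if self._last_distance_m is None:
            self._last_distance_m = human_distance_m
            return Action.SOUND_WARNING  # first detection: warn
        if human_distance_m <= self._last_distance_m:
            return Action.SHUTDOWN  # detection persists and distance did not increase
        self._last_distance_m = human_distance_m
        return Action.SOUND_WARNING  # person is moving away: keep warning (assumption)


monitor = SafetyMonitor()
trace = [monitor.update(d) for d in (None, 3.0, 4.0, 4.0)]
print([a.value for a in trace])
```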

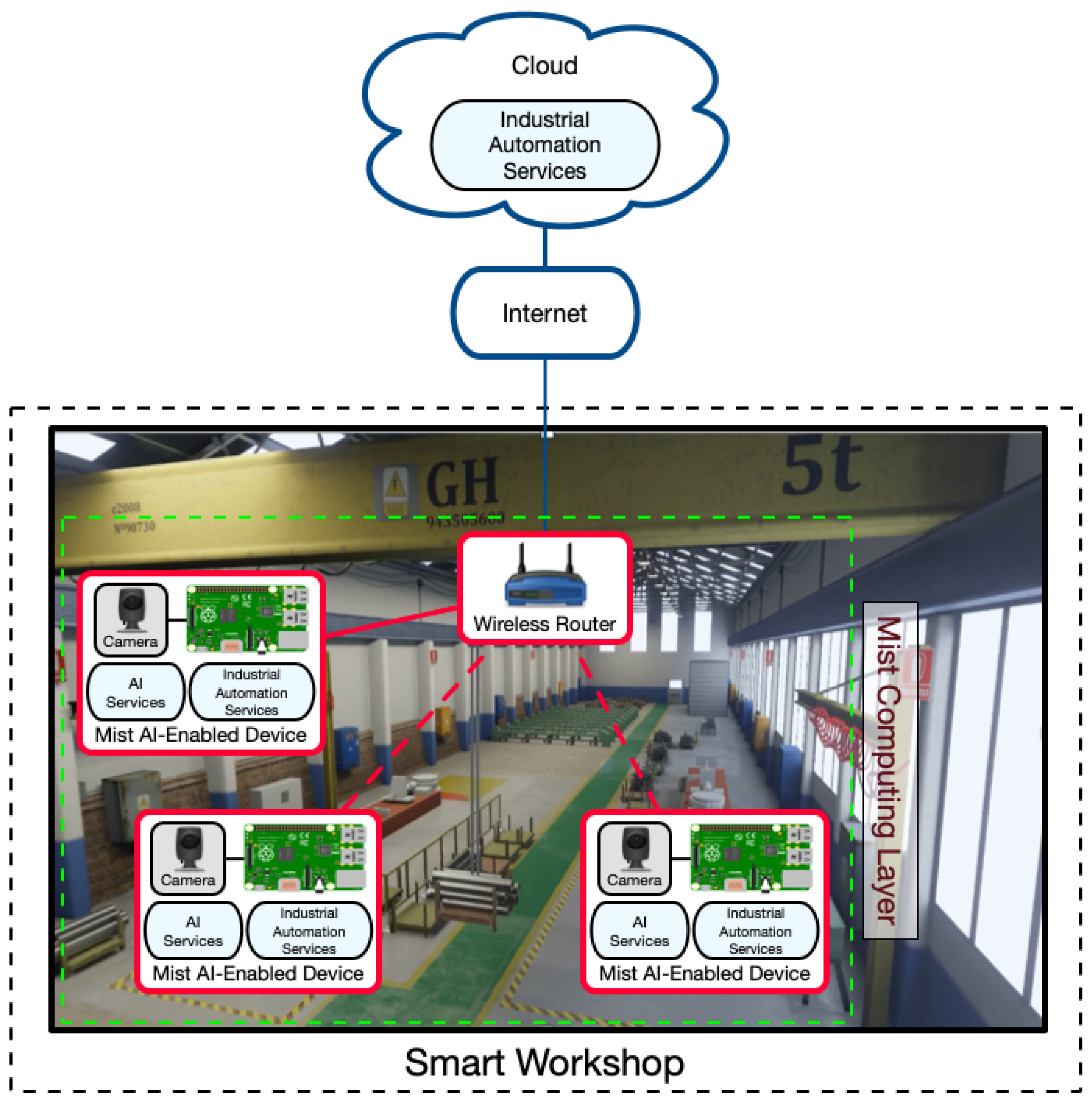

6.2. System Architecture

- Mist Computing Layer: it is composed of AI-enabled IIoT nodes that run AI algorithms locally. Thus, after the AI training stage, nodes avoid exchanging image data through the network with edge computing devices or with the cloud, benefiting from:

  - Lower latency. Since most of the processing is carried out locally, the mist computing device can respond faster.

  - Fewer communications problems in complex environments. Local processing avoids continuous communications with local edge devices or remote clouds. Thus, potential communications problems are reduced, which is especially important in industrial scenarios that require wireless communications [126].

  - Fewer privacy issues. Camera images do not need to be sent to other devices through the network, so potential attacks on such devices or man-in-the-middle attacks can be prevented, thus avoiding image leakages.

  - Improved local communications with other nodes. Mist devices can implement additional logic to communicate directly with other mist devices and machines, so responses and data exchanges are faster, and less traffic is generated because intermediate devices such as edge computing servers or the cloud are not needed.

Despite the benefits of using mist AI-enabled nodes, it is important to note that such IIoT nodes, since they integrate cameras/sensors and the control hardware, are more expensive and complex (i.e., there are more hardware parts that can fail).

- Cloud: it behaves as in the edge-computing-based architecture: it deals with the requests of the mist devices that cannot be handled locally.

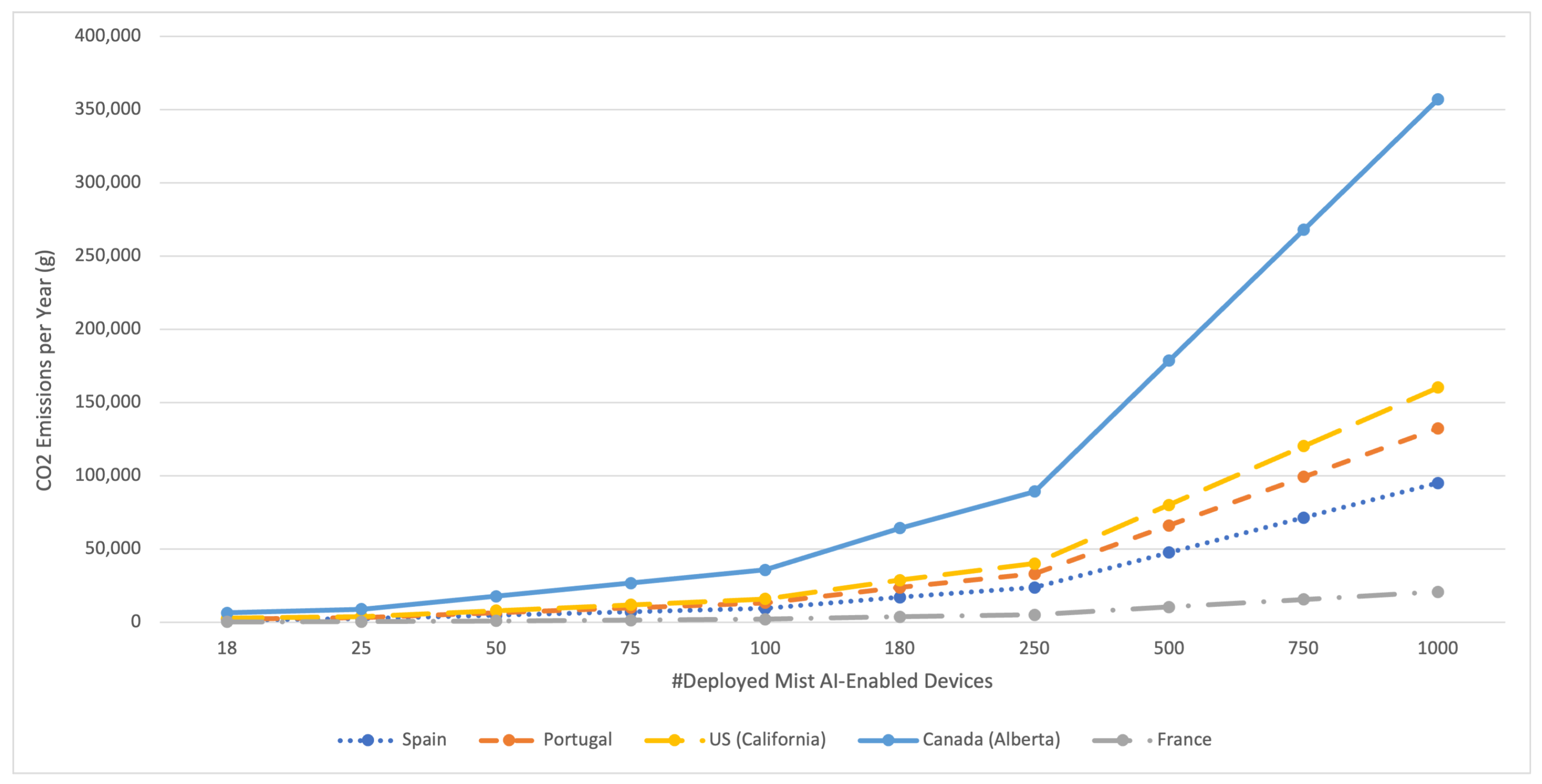

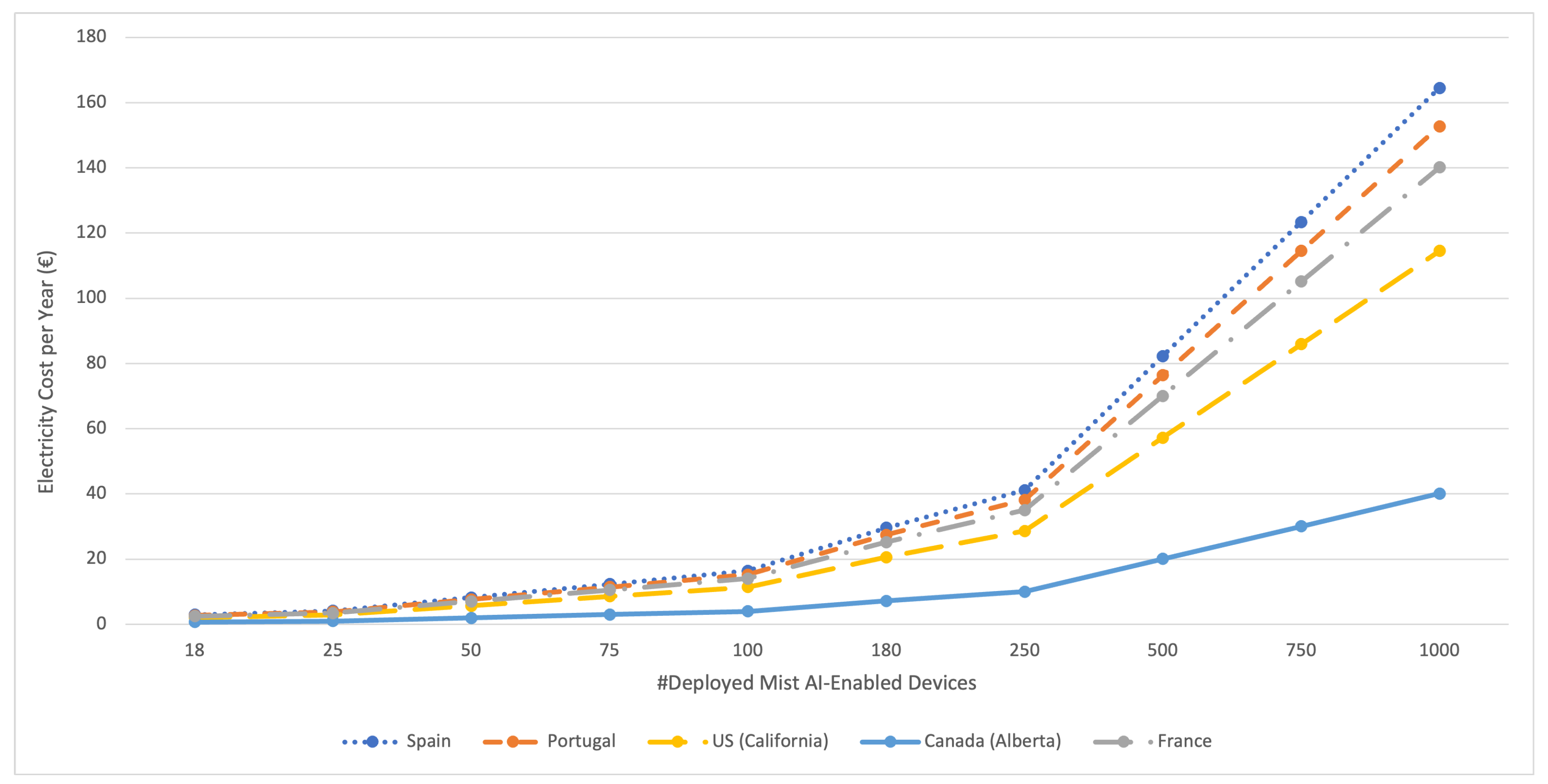

6.3. Energy Consumption of the Mist AI-Enabled Model

6.4. Carbon Footprint

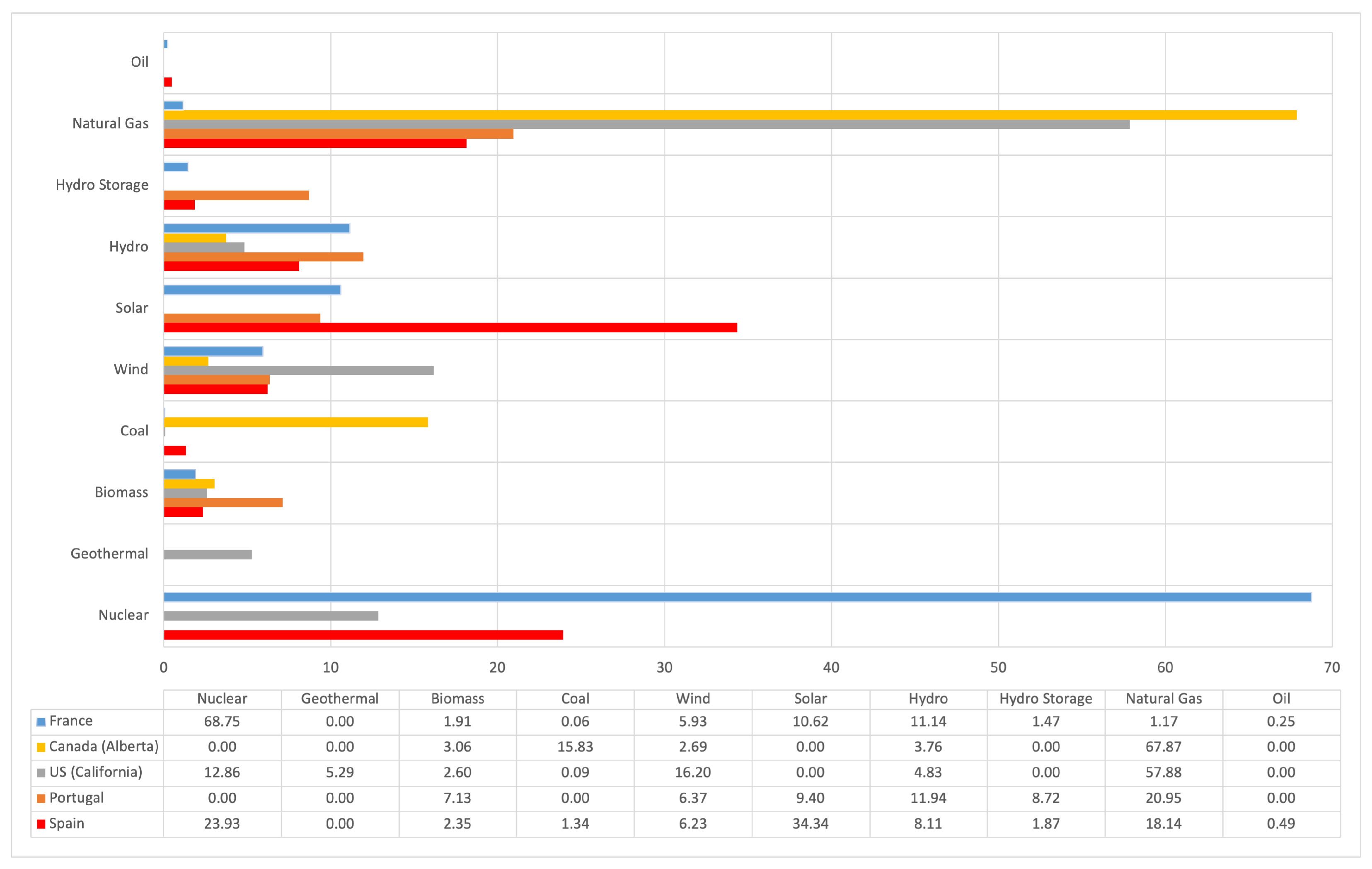

- France (data source: Réseau de Transport d’Electricité (RTE)): it has almost got rid of CO2-intensive energy sources thanks to generating most of its electricity through nuclear power. Nonetheless, on 25 July 2021, when the data in Figure 11 were collected, only roughly 31% of France’s energy came from renewable sources.

- Portugal (data source: European Network of Transmission System Operators for Electricity (ENTSOE)): its most relevant energy source is natural gas, but, when the data were gathered, approximately 43% of its energy came from renewable sources and none from nuclear power.

- Spain (data source: ENTSOE): like Portugal, it has a dependency on natural gas, but, thanks to a powerful solar energy sector, it generates roughly 53% of its energy from renewable sources. In addition, almost 24% of the Spanish energy comes from nuclear power, so a total of 77% of the energy is generated from low-carbon technologies.

- California (data source: California Independent System Operator (CAISO)): in spite of being a state of the U.S., it was selected due to its crucial role in IT and cloud-based services. Nearly 42% of its energy on 25 July 2021 was generated through low-carbon technologies, but almost 58% came from natural gas.

- Alberta (data source: Alberta Electric System Operator (AESO)): it was included as an example of a rich area with a key role in oil and natural gas production in North America. As can be observed in Figure 11, most of its energy (almost 84%) is generated by natural gas and coal, which results in a large amount of CO2 emissions.
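The dependence of the carbon footprint on the energy mix of each region can be illustrated with the usual first-order estimate: emissions equal the consumed energy multiplied by the carbon intensity of the electricity. The sketch below is not the calculation performed in this article; the device power, the number of devices, and the two carbon intensities are hypothetical placeholders and are not values read from Figure 11.

```python
def energy_kwh(power_w: float, hours: float, devices: int = 1) -> float:
    """Energy consumed by `devices` identical nodes drawing `power_w` for `hours`."""
    return power_w * hours * devices / 1000.0


def co2_kg(consumed_kwh: float, intensity_g_per_kwh: float) -> float:
    """First-order estimate: energy times the carbon intensity of the electricity mix."""
    return consumed_kwh * intensity_g_per_kwh / 1000.0


# Hypothetical deployment: 100 nodes drawing 5 W each during one year (8760 h).
yearly_kwh = energy_kwh(power_w=5.0, hours=8760.0, devices=100)

# Placeholder carbon intensities in gCO2/kWh, for illustration only.
for label, intensity in (("low-carbon mix", 50.0), ("fossil-heavy mix", 600.0)):
    print(f"{label}: {co2_kg(yearly_kwh, intensity):.0f} kg CO2 per year")
```

Under these assumed values the same deployment emits twelve times more CO2 in the fossil-heavy case, which is the kind of regional difference that the comparison above is intended to expose.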

7. Future Challenges of Edge-AI G-IoT Systems

- Additional mechanisms are needed to offer protection against network, physical, software, and encryption attacks. In addition, it is critical to have protection against adversarial attacks during on-device learning [130].

- Future communications networks. 5G/6G are intended to deliver low-latency communications and large capacity; therefore, moving the processing tasks to the network edge will demand higher edge computing power, which puts G-IoT and Edge-AI convergence as fundamental technology enablers for the next 6G mobile infrastructure. Moreover, the rapid proliferation of new products and devices and their native connectivity (at a global level) will force the convergence of not only G-IoT and Edge-AI, but also 5G/6G communication technologies, the latter being a fundamental prerequisite for future deployments. Indeed, future communications services should also provide better dependability and increased flexibility to effectively cope with a continuously changing environment.

- Edge-AI G-IoT Infrastructure. The IoT market is currently fragmented, so it is necessary to provide a comprehensive standardized framework that can handle all the requirements of Edge-AI G-IoT systems.

- Decentralized storage. Cloud architectures store data in remote data centers and digital infrastructures that require substantial levels of energy. Luckily, recent architectures for Edge-AI G-IoT systems are able to decentralize data storage to prevent cyberattacks and avoid high operating costs; however, to achieve energy optimizations for such decentralized architectures, sophisticated P2P protocols are needed.

- G-IoT supply chain visibility and transparency. To increase the adoption of the DCE and limit the environmental impact of a huge number of connected devices, further integration of value chains and digital enabling technologies (e.g., functional electronics, UAVs, blockchain) is needed. End-to-end trustworthy G-IoT supply chains that produce, utilize, and recycle efficiently are required.

- Development of Edge-AI G-IoT applications for Industry 5.0. The applications to be developed should first be analyzed in terms of their critical requirements (e.g., latency, fault tolerance) together with the appropriate communications architecture, while considering their alignment with social fairness, sustainability, and environmental impact. In addition, hardware should be customized to the selected Edge-AI G-IoT architecture and the specific application.

- Complete energy consumption assessment. For the sake of fairness, researchers should consider the energy consumption of all the components and subsystems involved in an Edge-AI G-IoT system (e.g., communications hardware, remote processing, communications protocols, communications infrastructure, G-IoT nodes, and data storage), which may be difficult in some practical scenarios and when using global networks.

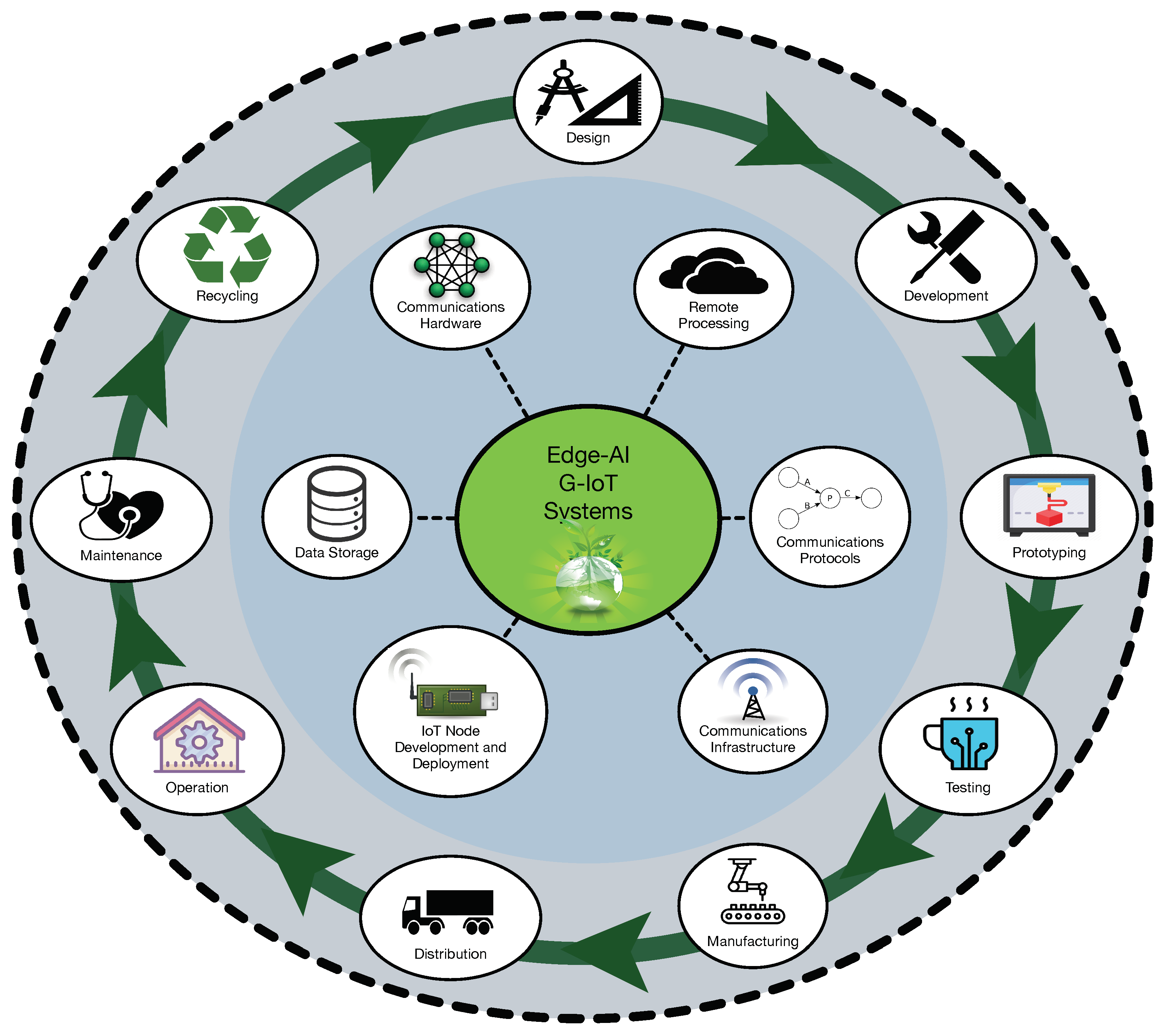

- Digital circular life cycle of Edge-AI G-IoT systems. In order to assess the impact of circular economy based applications, all the different stages of the digital circular life cycle (i.e., design, development, prototyping, testing, manufacturing, distribution, operation, maintenance, and recycling stages) should be contemplated.

- CO2 emission minimization for large-scale deployments. Future developers will need to consider that CO2 emissions increase with the number of deployed Edge-AI IoT devices. In addition, such growth changes dramatically from one country to another depending on the available energy sources.

- Corporate governance, corporate strategy, and culture. Organization willingness to explore new business strategies and long-term investments will be critical in the adoption of Edge-AI G-IoT systems, as a collaborative approach is required to involve all the stakeholders and establish new ways for creating value while reducing the carbon footprint. New business models will emerge (e.g., Edge-AI as a service, such as NVIDIA Clara [131]).
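The "complete energy consumption assessment" challenge listed above can be made concrete with a small bookkeeping sketch that sums the measured energy of each subsystem and reports the ones that have not been measured. The component names come from that item; the function name, the data layout, and the sample values are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# Components named in the "complete energy consumption assessment" item.
REQUIRED_COMPONENTS = (
    "communications hardware",
    "remote processing",
    "communications protocols",
    "communications infrastructure",
    "G-IoT nodes",
    "data storage",
)


def assess(measurements_wh: Dict[str, Optional[float]]) -> Tuple[float, List[str]]:
    """Return the measured total (Wh) and the components that still lack a measurement."""
    missing = [c for c in REQUIRED_COMPONENTS if measurements_wh.get(c) is None]
    total = sum(measurements_wh[c] for c in REQUIRED_COMPONENTS if c not in missing)
    return total, missing


# Hypothetical partial measurements, for illustration only.
total_wh, missing = assess({"G-IoT nodes": 12.0, "data storage": 3.0, "remote processing": None})
print(f"measured total: {total_wh} Wh; still unmeasured: {missing}")
```

Reporting the unmeasured components alongside the total makes it explicit when a published figure covers only part of the system.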

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- UN General Assembly, Transforming Our World: The 2030 Agenda for Sustainable Development, 21 October 2015, A/RES/70/1. Available online: https://www.refworld.org/docid/57b6e3e44.html (accessed on 28 July 2021).

- World Economic Forum, Internet of Things Guidelines for Sustainability, Future of Digital Economy and Society System Initiative. 2018. Available online: http://www3.weforum.org/docs/IoTGuidelinesforSustainability.pdf (accessed on 28 July 2021).

- Miranda, J.; Mäkitalo, N.; Garcia-Alonso, J.; Berrocal, J.; Mikkonen, T.; Canal, C.; Murillo, J.M. From the Internet of Things to the Internet of People. IEEE Internet Comput. 2015, 19, 40–47.

- Miraz, M.H.; Ali, M.; Excell, P.S.; Picking, R. A review on Internet of Things (IoT), Internet of Everything (IoE) and Internet of Nano Things (IoNT). In Proceedings of the 2015 Internet Technologies and Applications (ITA), Wrexham, UK, 8–11 September 2015; pp. 219–224.

- Arshad, R.; Zahoor, S.; Shah, M.A.; Wahid, A.; Yu, H. Green IoT: An Investigation on Energy Saving Practices for 2020 and Beyond. IEEE Access 2017, 5, 15667–15681.

- Albreem, M.A.; Sheikh, A.M.; Alsharif, M.H.; Jusoh, M.; Mohd Yasin, M.N. Green Internet of Things (GIoT): Applications, Practices, Awareness, and Challenges. IEEE Access 2021, 9, 38833–38858.

- Zhu, C.; Leung, V.C.M.; Shu, L.; Ngai, E.C.-H. Green Internet of Things for Smart World. IEEE Access 2015, 3, 2151–2162.

- Mulay, A. Sustaining Moore’s Law: Uncertainty Leading to a Certainty of IoT Revolution. Morgan Claypool 2015, 1, 1–109.

- Communication from the Commission to the European Parliament, the European Council, the Council, the European Economic and Social Committee and the Committee of the Regions. The European Green Deal. Brussels. 11 December 2019. Available online: https://ec.europa.eu/info/sites/info/files/european-green-deal-communication_en.pdf (accessed on 28 July 2021).

- United Nation’s 2030 Agenda and Sustainable Development Goals. Available online: https://sustainabledevelopment.un.org/ (accessed on 28 July 2021).

- Askoxylakis, I. A Framework for Pairing Circular Economy and the Internet of Things. In Proceedings of the 2018 IEEE International Conference on Communications (ICC), Kansas City, MO, USA, 20–24 May 2018; pp. 1–6.

- European Commission. Circular Economy Action Plan. Available online: https://ec.europa.eu/environment/strategy/circular-economy-action-plan_es (accessed on 28 July 2021).

- World Economic Forum. Circular Economy and Material Value Chains. Available online: https://www.weforum.org/projects/circular-economy (accessed on 28 July 2021).

- Explore the Circularity Gap Report 2021. Available online: https://www.circularity-gap.world (accessed on 28 July 2021).

- Digital Circular Economy: A Cornerstone of a Sustainable European Industry Transformation. White Paper-ECERA European Circular Economy Research Alliance. 20 October 2020. Available online: https://www.era-min.eu/sites/default/files/publications/201023_ecera_white_paper_on_digital_circular_economy.pdf (accessed on 28 July 2021).

- PACE (Platform for Accelerating the Circular Economy) and World Economic Forum. A New Circular Vision for Electronics. Time for a Global Reboot. January 2019. Available online: http://www3.weforum.org/docs/WEF_A_New_Circular_Vision_for_Electronics.pdf (accessed on 28 July 2021).

- Fernández-Caramés, T.M.; Fraga-Lamas, P. Design of a Fog Computing, Blockchain and IoT-Based Continuous Glucose Monitoring System for Crowdsourcing mHealth. Proceedings 2019, 4, 37.

- De Donno, M.; Tange, K.; Dragoni, N. Foundations and Evolution of Modern Computing Paradigms: Cloud, IoT, Edge, and Fog. IEEE Access 2019, 7, 150936–150948.

- Fraga-Lamas, P.; Ramos, L.; Mondéjar-Guerra, V.; Fernández-Caramés, T.M. A Review on IoT Deep Learning UAV Systems for Autonomous Obstacle Detection and Collision Avoidance. Remote Sens. 2019, 11, 2144.

- Alsamhi, S.H.; Afghah, F.; Sahal, R.; Hawbani, A.; Al-qaness, A.A.; Lee, B.; Guizani, M. Green internet of things using UAVs in B5G networks: A review of applications and strategies. Ad Hoc Netw. 2021, 117, 102505.

- Kibria, M.G.; Nguyen, K.; Villardi, G.P.; Zhao, O.; Ishizu, K.; Kojima, F. Big Data Analytics, Machine Learning, and Artificial Intelligence in Next-Generation Wireless Networks. IEEE Access 2018, 6, 32328–32338.

- European Commission, Industry 5.0: Towards a Sustainable, Human-centric and Resilient European Industry. January 2021. Available online: https://ec.europa.eu/info/news/industry-50-towards-more-sustainable-resilient-and-human-centric-industry-2021-jan-07_en (accessed on 28 July 2021).

- Nahavandi, S. Industry 5.0—A Human-Centric Solution. Sustainability 2019, 11, 16.

- Paschek, D.; Mocan, A.; Draghici, A. Industry 5.0—The expected impact of next industrial revolution. In Proceedings of the MakeLearn & TIIM Conference, Piran, Slovenia, 15–17 May 2019; pp. 1–8.

- Council for Science, Technology and Innovation, Government of Japan, Report on The 5th Science and Technology Basic Plan. 18 December 2015. Available online: https://www8.cao.go.jp/cstp/kihonkeikaku/5basicplan_en.pdf (accessed on 28 July 2021).

- Keidanren (Japan Business Federation), Society 5.0: Co-Creating the Future. November 2018. Available online: https://www.keidanren.or.jp/en/policy/2018/095.html (accessed on 28 July 2021).

- Blanco-Novoa, O.; Fernández-Caramés, T.M.; Fraga-Lamas, P.; Castedo, L. An Electricity-Price Aware Open-Source Smart Socket for the Internet of Energy. Sensors 2017, 17, 643.

- Suárez-Albela, M.; Fraga-Lamas, P.; Fernández-Caramés, T.M.; Dapena, A.; González-López, M. Home Automation System Based on Intelligent Transducer Enablers. Sensors 2016, 16, 1595.

- Pérez-Expósito, J.M.; Fernández-Caramés, T.M.; Fraga-Lamas, P.; Castedo, L. VineSens: An Eco-Smart Decision Support Viticulture System. Sensors 2017, 3, 465.

- Wang, H.; Osen, O.L.; Li, G.; Li, W.; Dai, H.-N.; Zeng, W. Big data and industrial Internet of Things for the maritime industry in Northwestern Norway. In Proceedings of the TENCON, Macao, China, 1–4 November 2015.

- Shu, L.; Mukherjee, M.; Pecht, M.; Crespi, N.; Han, S.N. Challenges and Research Issues of Data Management in IoT for Large-Scale Petrochemical Plants. IEEE Syst. J. 2017, 99, 1–15.

- Fraga-Lamas, P.; Fernández-Caramés, T.M.; Suárez-Albela, M.; Castedo, L.; González-López, M. A Review on Internet of Things for Defense and Public Safety. Sensors 2016, 16, 1644.

- Kshetri, N. Can Blockchain Strengthen the Internet of Things? IT Prof. 2017, 19, 68–72.

- Gartner. Forecast: The Internet of Things, Worldwide. 2013. Available online: https://www.gartner.com/en/documents/2625419/forecast-the-internet-of-things-worldwide-2013 (accessed on 28 July 2021).

- Bonomi, F.; Milito, R.; Zhu, J.; Addepalli, S. Fog Computing and its Role in the Internet of Things. In Proceedings of the First Edition of the MCC Workshop on Mobile Cloud Computing, Helsinki, Finlad, 17 August 2012; pp. 13–16. [Google Scholar]

- Dolui, K.; Datta, S.K. Comparison of edge computing implementations: Fog computing, cloudlet and mobile edge computing. In Proceedings of the Global Internet of Things Summit (GIoTS), Geneva, Switzerland, 6–9 June 2017. [Google Scholar]

- Suárez-Albela, M.; Fernández-Caramés, T.M.; Fraga-Lamas, P.; Castedo, L. A Practical Evaluation of a High-Security Energy-Efficient Gateway for IoT Fog Computing Applications. Sensors 2017, 17, 1978. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Fraga-Lamas, P.; Fernández-Caramés, T.M.; Blanco-Novoa, Ó.; Vilar-Montesinos, M. A Review on Industrial Augmented Reality Systems for the Industry 4.0 Shipyard. IEEE Access 2018, 6, 13358–13375. [Google Scholar] [CrossRef]

- Schuetz, S.; Venkatesh, V. The Rise of Human Machines: How Cognitive Computing Systems Challenge Assumptions of User-System Interaction. J. Assoc. Inf. Syst. 2020, 21, 460–482. [Google Scholar]

- Preden, J.S.; Tammemäe, K.; Jantsch, A.; Leier, M.; Riid, A.; Calis, E. The Benefits of Self-Awareness and Attention in Fog and Mist Computing. Computer 2015, 48, 37–45. [Google Scholar] [CrossRef]

- Fernández-Caramés, T.M.; Fraga-Lamas, P.; Suárez-Albela, M.; Vilar-Montesinos, M. A Fog Computing and Cloudlet Based Augmented Reality System for the Industry 4.0 Shipyard. Sensors 2018, 18, 1798. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Suárez-Albela, M.; Fraga-Lamas, P.; Fernández-Caramés, T.M. A Practical Evaluation on RSA and ECC-Based Cipher Suites for IoT High-Security Energy-Efficient Fog and Mist Computing Devices. Sensors 2018, 18, 3868. [Google Scholar] [CrossRef] [Green Version]

- Markakis, E.K.; Karras, K.; Zotos, N.; Sideris, A.; Moysiadis, T.; Corsaro, A.; Alexiou, G.; Skianis, C.; Mastorakis, G.; Mavromoustakis, C.X.; et al. EXEGESIS: Extreme Edge Resource Harvesting for a Virtualized Fog Environment. IEEE Commun. Mag. 2017, 55, 173–179. [Google Scholar] [CrossRef]

- Yeow, K.; Gani, A.; Ahmad, R.W.; Rodrigues, J.J.P.C.; Ko, K. Decentralized Consensus for Edge-Centric Internet of Things: A Review, Taxonomy, and Research Issues. IEEE Access 2018, 6, 1513–1524. [Google Scholar] [CrossRef]

- Radner, H.; Stange, J.; Büttner, L.; Czarske, J. Field-Programmable System-on-Chip-Based Control System for Real-Time Distortion Correction in Optical Imaging. IEEE Trans. Ind. Electron. 2021, 68, 3370–3379. [Google Scholar] [CrossRef]

- De Mil, P.; Jooris, B.; Tytgat, L.; Catteeuw, R.; Moerman, I.; Demeester, P.; Kamerman, A. Design and implementation of a generic energy-harvesting framework applied to the evaluation of a large-scale electronic shelf-labeling wireless sensor network. J. Wireless Com. Netw. 2010, 2010, 343690. [Google Scholar] [CrossRef] [Green Version]

- Ercan, A.Ö.; Sunay, M.O.; Akyildiz, I.F. RF Energy Harvesting and Transfer for Spectrum Sharing Cellular IoT Communications in 5G Systems. IEEE Trans. Mob. Comput. 2018, 17, 1680–1694. [Google Scholar] [CrossRef]

- Ejaz, W.; Naeem, M.; Shahid, A.; Anpalagan, A.; Jo, M. Efficient Energy Management for the Internet of Things in Smart Cities. IEEE Commun. Mag. 2017, 55, 84–91. [Google Scholar] [CrossRef] [Green Version]

- Lazaro, A.; Villarino, R.; Girbau, D. A Survey of NFC Sensors Based on Energy Harvesting for IoT Applications. Sensors 2018, 18, 3746. [Google Scholar] [CrossRef] [Green Version]

- Coskun, V.; Ok, K.; Ozdenizci, B. Near Field Communications: From Theory to Practice, 1st ed.; Wiley: Chichester, UK, 2012. [Google Scholar]

- Fraga-Lamas, P.; Fernández-Caramés, T.M. Reverse engineering the communications protocol of an RFID public transportation card. In Proceedings of the IEEE International Conference RFID, Phoenix, AZ, USA, 9–11 May 2017; pp. 30–35. [Google Scholar]

- Murofushi, R.H.; Tavares, J.J.P.Z.S. Towards fourth industrial revolution impact: Smart product based on RFID technology. IEEE Instrum. Meas. Mag. 2017, 20, 51–56. [Google Scholar] [CrossRef]

- Fernández-Caramés, T.M. An Intelligent Power Outlet System for the Smart Home of the Internet-of-Things. Int. J. Distrib. Sens. Netw. 2015, 11, 214805. [Google Scholar] [CrossRef] [Green Version]

- Held, I.; Chen, A. Channel Estimation and Equalization Algorithms for Long Range Bluetooth Signal Reception. In Proceedings of the IEEE Vehicular Technology Conference, Taipei, Taiwan, 16–19 May 2010. [Google Scholar]

- Hernández-Rojas, D.L.; Fernández-Caramés, T.M.; Fraga-Lamas, P.; Escudero, C.J. Design and Practical Evaluation of a Family of Lightweight Protocols for Heterogeneous Sensing through BLE Beacons in IoT Telemetry Applications. Sensors 2017, 18, 57. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- ZigBee Alliance. Available online: http://www.zigbee.org (accessed on 28 July 2021).

- Khutsoane, O.; Isong, B.; Abu-Mahfouz, A.M. IoT devices and applications based on LoRa/LoRaWAN. In Proceedings of the Annual Conference of the IEEE Industrial Electronics Society, Beijing, China, 29 October–1 November 2017. [Google Scholar]

- Mazhar, F.; Khan, M.G.; Sällberg, B. Precise indoor positioning using UWB: A review of methods, algorithms and implementations. Wirel. Pers. Commun. 2017, 97, 4467–4491. [Google Scholar] [CrossRef]

- Adame, T.; Bel, A.; Bellalta, B.; Barcelo, J.; Oliver, M. IEEE 802.11AH: The WiFi approach for M2M communications. IEEE Wirel. Commun. 2014, 21, 144–152. [Google Scholar] [CrossRef] [Green Version]

- Xie, J.; Zhang, B.; Zhang, C. A Novel Relay Node Placement and Energy Efficient Routing Method for Heterogeneous Wireless Sensor Networks. IEEE Access 2020, 8, 202439–202444. [Google Scholar] [CrossRef]

- Bagula, A.; Abidoye, A.P.; Zodi, G.-A.L. Service-Aware Clustering: An Energy-Efficient Model for the Internet-of-Things. Sensors 2016, 16, 9. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Kaur, N.; Sood, S.K. An Energy-Efficient Architecture for the Internet of Things (IoT). IEEE Syst. J. 2017, 11, 796–805. [Google Scholar] [CrossRef]

- Xia, S.; Yao, Z.; Li, Y.; Mao, S. Online Distributed Offloading and Computing Resource Management with Energy Harvesting for Heterogeneous MEC-enabled IoT. IEEE Trans. Wirel. Commun. 2021. [Google Scholar] [CrossRef]

- Xiao, L.; Wan, X.; Lu, X.; Zhang, Y.; Wu, D. IoT Security Techniques Based on Machine Learning: How Do IoT Devices Use AI to Enhance Security? IEEE Signal Process. Mag. 2018, 35, 41–49. [Google Scholar] [CrossRef]

- Suárez-Albela, M.; Fernández-Caramés, T.M.; Fraga-Lamas, P.; Castedo, L. A Practical Performance Comparison of ECC and RSA for Resource-Constrained IoT Devices. In Proceedings of the 2018 Global Internet of Things Summit (GIoTS), Bilbao, Spain, 4–7 June 2018; pp. 1–6. [Google Scholar]

- Rivest, R.L.; Shamir, A.; Adleman, L.M. A method for obtaining digital signatures and public-key cryptosystems. Commun. ACM 1978, 21, 120–126. [Google Scholar] [CrossRef]

- Koblitz, N. Elliptic curve cryptosystems. Math. Comput. 1987, 48, 203–209. [Google Scholar] [CrossRef]

- Miller, V.S. Use of elliptic curves in cryptography. Proc. Adv. Cryptol. 1985, 218, 417–426. [Google Scholar]

- Diffie, W.; Hellman, M.E. New directions in cryptography. IEEE Trans. Inf. Theory 1976, IT-22, 644–654. [Google Scholar] [CrossRef] [Green Version]

- The Transport Layer Security (TLS) Protocol Version 1.3. Available online: https://datatracker.ietf.org/doc/html/rfc8446 (accessed on 28 July 2021).

- Burg, A.; Chattopadhyay, A.; Lam, K. Wireless Communication and Security Issues for Cyber-Physical Systems and the Internet-of-Things. Proc. IEEE 2018, 106, 38–60. [Google Scholar] [CrossRef]

- Scharfglass, K.; Weng, D.; White, J.; Lupo, C. Breaking Weak 1024-bit RSA Keys with CUDA. In Proceedings of the 2012 13th International Conference on Parallel and Distributed Computing, Applications and Technologies, Beijing, China, 14–16 December 2012; pp. 207–212. [Google Scholar]

- Liu, Z.; Großschädl, J.; Hu, Z.; Järvinen, K.; Wang, H.; Verbauwhede, I. Elliptic Curve Cryptography with Efficiently Computable Endomorphisms and Its Hardware Implementations for the Internet of Things. IEEE Trans. Comput. 2017, 66, 773–785. [Google Scholar] [CrossRef]

- Chaves, R.; Kuzmanov, G.; Sousa, L.; Vassiliadis, S. Cost-Efficient SHA Hardware Accelerators. IEEE Trans. Very Large Scale Integr. Syst. 2008, 16, 999–1008. [Google Scholar] [CrossRef] [Green Version]

- Gielata, A.; Russek, P.; Wiatr, K. AES hardware implementation in FPGA for algorithm acceleration purpose. In Proceedings of the 2008 International Conference on Signals and Electronic Systems, Krakow, Poland, 14–17 September 2008; pp. 137–140. [Google Scholar]

- IoT Analytics, State of IoT Q4/2020 & Outlook 2021. November 2020. Available online: https://iot-analytics.com/product/state-of-iot-q4-2020-outlook-2021/ (accessed on 28 July 2021).

- Salameh, H.B.; Irshaid, M.B.; Ajlouni, A.A.; Aloqaily, M. Energy-Efficient Cross-layer Spectrum Sharing in CR Green IoT Networks. IEEE Trans. Green Commun. Netw. 2021, 5, 1091–1100. [Google Scholar] [CrossRef]

- Sharma, P.K.; Kumar, N.; Park, J.H. Blockchain Technology Toward Green IoT: Opportunities and Challenges. IEEE Netw. 2020, 34, 4. [Google Scholar] [CrossRef]

- Bol, D.; de Streel, G.; Flandre, D. Can we connect trillions of IoT sensors in a sustainable way? A technology/circuit perspective. In Proceedings of the IEEE SOI-3D-Subthreshold Microelectronics Technology Unified Conference (S3S), Rohnert Park, CA, USA, 5–8 October 2015. [Google Scholar]

- Fafoutis, X.; Marchegiani, L. Rethinking IoT Network Reliability in the Era of Machine Learning. In Proceedings of the 2019 International Conference on Internet of Things (iThings) and IEEE Green Computing and Communications (GreenCom) and IEEE Cyber, Physical and Social Computing (CPSCom) and IEEE Smart Data (SmartData), Atlanta, GA, USA, 14–17 July 2019; pp. 1112–1119. [Google Scholar]

- Zhou, Z.; Xie, M.; Zhu, T.; Xu, W.; Yi, P.; Huang, Z.; Zhang, Q.; Xiao, S. EEP2P: An energy-efficient and economy-efficient P2P network protocol. In Proceedings of the International Green Computing Conference, Dallas, TX, USA, 3–5 November 2014. [Google Scholar]

- Sharifi, L.; Rameshan, N.; Freitag, F.; Veiga, L. Energy Efficiency Dilemma: P2P-cloud vs. Datacenter. In Proceedings of the IEEE 6th International Conference on Cloud Computing Technology and Science, Singapore, 15–18 December 2014. [Google Scholar]

- Zhang, P.; Helvik, B.E. Towards green P2P: Analysis of energy consumption in P2P and approaches to control. In Proceedings of the International Conference on High Performance Computing & Simulation (HPCS), Madrid, Spain, 2–6 July 2012. [Google Scholar]

- Miyake, S.; Bandai, M. Energy-Efficient Mobile P2P Communications Based on Context Awareness. In Proceedings of the IEEE 27th International Conference on Advanced Information Networking and Applications (AINA), Barcelona, Spain, 25–28 March 2013. [Google Scholar]

- Liao, C.C.; Cheng, S.M.; Domb, M. On Designing Energy Efficient Wi-Fi P2P Connections for Internet of Things. In Proceedings of the IEEE 85th Vehicular Technology Conference (VTC Spring), Sydney, Australia, 4–7 June 2017. [Google Scholar]

- EPA, Report on Server and Data Center Energy Efficiency. August 2007. Available online: https://www.energystar.gov/ia/partners/prod_development/downloads/EPA_Report_Exec_Summary_Final.pdf (accessed on 28 July 2021).

- Schneider Electric. White Paper: Estimating a Data Center’s Electrical Carbon Footprint; Schneider Electric: Le Creusot, France, 2010. [Google Scholar]

- Fernández-Caramés, T.M.; Fraga-Lamas, P.; Suárez-Albela, M.; Díaz-Bouza, M.A. A Fog Computing Based Cyber-Physical System for the Automation of Pipe-Related Tasks in the Industry 4.0 Shipyard. Sensors 2018, 18, 1691. [Google Scholar] [CrossRef] [Green Version]

- Yan, M.; Chen, B.; Feng, G.; Qin, S. Federated Cooperation and Augmentation for Power Allocation in Decentralized Wireless Networks. IEEE Access 2020, 8, 48088–48100. [Google Scholar] [CrossRef]

- Ray, S. A Quick Review of Machine Learning Algorithms. In Proceedings of the 2019 International Conference on Machine Learning, Big Data, Cloud and Parallel Computing (COMITCon), Faridabad, India, 14–16 February 2019; pp. 35–39. [Google Scholar]

- Samek, W.; Montavon, G.; Vedaldi, A.; Hansen, L.K.; Muller, K.-R. Explainable AI: Interpreting, Explaining and Visualizing Deep Learning; Lecture Notes in Computer Science Book Series (LNCS, Volume 11700); Springer Nature Switzerland AG: Cham, Switzerland, 2019. [Google Scholar]

- Rausch, T.; Dustdar, S. Edge Intelligence: The Convergence of Humans, Things, and AI. In Proceedings of the 2019 IEEE International Conference on Cloud Engineering (IC2E), Prague, Czech Republic, 24–27 June 2019; pp. 86–96. [Google Scholar]

- Bhardwaj, K.; Suda, N.; Marculescu, R. EdgeAI: A Vision for Deep Learning in IoT Era. IEEE Des. Test 2021, 38, 37–43. [Google Scholar] [CrossRef] [Green Version]

- Martins, P.; Lopes, S.I.; Rosado da Cruz, A.M.; Curado, A. Towards a Smart & Sustainable Campus: An Application-Oriented Architecture to Streamline Digitization and Strengthen Sustainability in Academia. Sustainability 2021, 13, 3189. [Google Scholar]

- Martins, P.; Lopes, S.I.; Curado, A.; Rocha, Á.; Adeli, H.; Dzemyda, G.; Moreira, F.; Ramalho Correia, A.M. (Eds.) Designing a FIWARE-Based Smart Campus with IoT Edge-Enabled Intelligence. In Trends and Applications in Information Systems and Technologies; WorldCIST 2021; Advances in Intelligent Systems and Computing; Springer Nature Switzerland AG: Cham, Switzerland, 2021; Volume 1367. [Google Scholar]

- Alvarado, I. AI-enabled IoT, Network Complexity and 5G. In Proceedings of the 2019 IEEE Green Energy and Smart Systems Conference (IGESSC), Long Beach, CA, USA, 4–5 November 2019; pp. 1–6. [Google Scholar]

- Zou, Z.; Jin, Y.; Nevalainen, P.; Huan, Y.; Heikkonen, J.; Westerlund, T. Edge and Fog Computing Enabled AI for IoT-An Overview. In Proceedings of the 2019 IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS), Hsinchu, Taiwan, 18–20 March 2019; pp. 51–56. [Google Scholar]

- Mauro, A.D.; Conti, F.; Schiavone, P.D.; Rossi, D.; Benini, L. Always-On 674μW@4GOP/s Error Resilient Binary Neural Networks with Aggressive SRAM Voltage Scaling on a 22-nm IoT End-Node. IEEE Trans. Circuits Syst. I Regul. Pap. 2020, 67, 3905–3918. [Google Scholar] [CrossRef]

- Qin, H.; Gong, R.; Liu, X.; Bai, X.; Song, J.; Sebe, N. Binary neural networks: A survey. Pattern Recognit. 2020, 105, 107281. [Google Scholar] [CrossRef] [Green Version]

- TensorFlow Lite Guide. Available online: https://www.tensorflow.org/lite/guide (accessed on 28 July 2021).

- Arduino Nano 33 BLE Sense. Available online: https://store.arduino.cc/arduino-nano-33-ble-sense (accessed on 28 July 2021).

- SparkFun Edge Development Board-Apollo3 Blue. Available online: https://www.sparkfun.com/products/15170 (accessed on 28 July 2021).

- Adafruit EdgeBadge-Tensorflow Lite for Microcontrollers. Available online: https://www.adafruit.com/product/4400 (accessed on 28 July 2021).

- Espressif Systems. ESP32 Overview. Available online: https://www.espressif.com/en/products/devkits/esp32-devkitc/overview (accessed on 28 July 2021).

- Espressif Systems. ESP-EYE-Enabling a Smarter Future with Audio and Visual AIoT. Available online: https://www.espressif.com/en/products/devkits/esp-eye/overview (accessed on 28 July 2021).

- STM32, Nucleo-L4R5ZI Product Overview. Available online: https://www.st.com/en/evaluation-tools/nucleo-l4r5zi.html (accessed on 28 July 2021).

- Shi, Y.; Yang, K.; Jiang, T.; Zhang, J.; Letaief, K.B. Communication-Efficient Edge AI: Algorithms and Systems. IEEE Commun. Surv. Tutor. 2020, 22, 2167–2191. [Google Scholar] [CrossRef]

- Rocha, A.; Adeli, H.; Dzemyda, G.; Moreira, F.; Ramalho Correia, A.M. Trends and Applications in Information Systems and Technologies Features. In Proceedings of the 9th World Conference on Information Systems and Technologies (WorldCIST’21), Terceira Island, Azores, Portugal, 30 March–2 April 2021; Volume 3. [Google Scholar]

- Merenda, M.; Porcaro, C.; Iero, D. Edge Machine Learning for AI-Enabled IoT Devices: A Review. Sensors 2020, 20, 2533. [Google Scholar] [CrossRef]

- Zhou, Z.; Chen, X.; Li, E.; Zeng, L.; Luo, K.; Zhang, J. Edge Intelligence: Paving the Last Mile of Artificial Intelligence With Edge Computing. Proc. IEEE 2019, 107, 1738–1762. [Google Scholar] [CrossRef] [Green Version]

- Karras, K.; Pallis, E.; Mastorakis, G.; Nikoloudakis, Y.; Batalla, J.M.; Mavromoustakis, C.X.; Markakis, E. A Hardware Acceleration Platform for AI-Based Inference at the Edge. Circuits Syst. Signal Process. 2020, 39, 1059–1070. [Google Scholar] [CrossRef]

- Kim, Y.; Kong, J.; Munir, A. CPU-Accelerator Co-Scheduling for CNN Acceleration at the Edge. IEEE Access 2020, 8, 211422–211433. [Google Scholar] [CrossRef]

- Kim, B.; Lee, S.; Trivedi, A.R.; Song, W.J. Energy-Efficient Acceleration of Deep Neural Networks on Realtime-Constrained Embedded Edge Devices. IEEE Access 2020, 8, 216259–216270. [Google Scholar] [CrossRef]

- Reddi, V.J.; Plancher, B.; Kennedy, S.; Moroney, L.; Warden, P.; Agarwal, A.; Banbury, C.; Banzi, M.; Bennett, M.; Brown, B.; et al. Widening Access to Applied Machine Learning with TinyML. arXiv 2021, arXiv:2106.04008. [Google Scholar]

- MLCommons™, MLPerf™ Tiny Inference Benchmark v0.5 Benchmark, San Francisco, CA. 16 June 2021. Available online: https://mlcommons.org/en/inference-tiny-05/ (accessed on 28 July 2021).

- Banbury, C.; Reddi, V.J.; Torelli, P.; Holleman, J.; Jeffries, N.; Kiraly, C.; Montino, P.; Kanter, D.; Ahmed, S.; Pau, D.; et al. MLPerf Tiny Benchmark. arXiv 2021, arXiv:2106.07597. [Google Scholar]

- Amodei, D.; Hernandez, D. AI and Compute. May 2018. Available online: https://openai.com/blog/ai-and-compute/ (accessed on 28 July 2021).

- Schwartz, R.; Dodge, J.; Smith, N.A.; Etzioni, O. Green AI. arXiv 2019, arXiv:1907.10597. [Google Scholar] [CrossRef]

- Huang, J.; Rathod, V.; Sun, C.; Zhu, M.; Korattikara, A.; Fathi, A.; Fischer, I.; Wojna, Z.; Song, Y.; Guadarrama, S.; et al. Speed/Accuracy Trade-Offs for Modern Convolutional Object Detectors. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3296–3297. [Google Scholar]

- Pinto, G.; Castor, F. Energy efficiency: A new concern for application software developers. Commun. ACM 2017, 60, 68–75. [Google Scholar] [CrossRef]

- Strubell, E.; Ganesh, A.; McCallum, A. Energy and Policy Considerations for Deep Learning in NLP. arXiv 2019, arXiv:1906.02243. [Google Scholar]

- World Data Bank, CO2 Emissions (Metric Tons per Capita). Available online: https://data.worldbank.org/indicator/EN.ATM.CO2E.PC (accessed on 28 July 2021).

- Lundegård, A.D.Y. GreenML—A Methodology for Fair Evaluation of Machine Learning Algorithms with Respect to Resource Consumption. Master’s Thesis, Department of Computer and Information Science, Linköping University, Linköping, Sweden, 2019. [Google Scholar]

- Jouppi, N.P.; Young, C.; Patil, N.; Patterson, D.; Agrawal, G.; Bajwa, R.; Bates, S.; Bhatia, S.; Boden, N.; Borchers, A.; et al. In-Datacenter Performance Analysis of a Tensor Processing Unit. In Proceedings of the 44th Annual International Symposium on Computer Architecture (ISCA ’17), Toronto, ON, Canada, 24–28 June 2017; pp. 1–12. [Google Scholar]

- Fraga-Lamas, P.; Fernández-Caramés, T.M.; Noceda-Davila, D.; Díaz-Bouza, M.A.; Pena-Agras, J.D.; Castedo, L. Enabling automatic event detection for the pipe workshop of the shipyard 4.0. In Proceedings of the 2017 56th FITCE Congress, Madrid, Spain, 14–15 September 2017; pp. 20–27. [Google Scholar]

- Fraga-Lamas, P.; Fernández-Caramés, T.M.; Noceda-Davila, D.; Vilar-Montesinos, M. RSS stabilization techniques for a real-time passive UHF RFID pipe monitoring system for smart shipyards. In Proceedings of the 2017 IEEE International Conference on RFID (RFID), Phoenix, AZ, USA, 9–11 May 2017; pp. 161–166. [Google Scholar]

- Fraga-Lamas, P.; Noceda-Davila, D.; Fernández-Caramés, T.M.; Díaz-Bouza, M.A.; Vilar-Montesinos, M. Smart Pipe System for a Shipyard 4.0. Sensors 2016, 16, 2186. [Google Scholar] [CrossRef] [Green Version]

- Stoll, C.; Klaaßen, L.; Gallersdörfer, U. The Carbon Footprint of Bitcoin. Joule 2019, 3, 1647–1661. [Google Scholar] [CrossRef]

- Electricity Maps Public Website. Available online: https://www.electricitymap.org (accessed on 28 July 2021).

- Dong, Y.; Cheng, J.; Hossain, M.; Leung, V.C.M. Secure distributed on-device learning networks with byzantine adversaries. IEEE Netw. 2019, 33, 180–187. [Google Scholar] [CrossRef]

- NVIDIA, NVIDIA Clara: An Application Framework Optimized for Healthcare and Life Sciences Developers. Available online: https://developer.nvidia.com/clara (accessed on 28 July 2021).

| Technology | Power Consumption | Frequency Band | Maximum Range | Data Rate | Main Features | Popular Applications |
|---|---|---|---|---|---|---|
| NFC | Tags require no batteries, no power | 13.56 MHz | <20 cm | 424 kbit/s | Low cost | Ticketing and payments |
| Bluetooth 5 LE | 1–20 mW, low power and rechargeable (days to weeks) | 2.4 GHz | <400 m | 1360 kbit/s | Trade-off among different PHY modes | Beacons, wireless headsets |
| EnOcean | Very low consumption or battery-less thanks to using energy harvesting | 868–915 MHz | 300 m | 120 kbit/s | Up to nodes | Energy harvesting building automation applications |
| HF RFID | Tags require no batteries | 3–30 MHz (13.56 MHz) | A few meters | <640 kbit/s | NLOS, low cost | Smart Industry, payments, asset tracking |
| LF RFID | Tags require no batteries | 30–300 kHz (125 kHz) | <10 cm | <640 kbit/s | NLOS, durability, low cost | Smart Industry and security access |
| UHF RFID | Batteries last from days to years | 30 MHz–3 GHz | Tens of meters | <640 kbit/s | NLOS, durability, low cost | Smart Industry, asset tracking and toll payment |
| UWB/IEEE 802.15.3a | Low power, rechargeable (hours to days) | 3.1 to 10.6 GHz | <10 m | >110 Mbit/s | Low interference | Fine location, short-distance streaming |
| Wi-Fi (IEEE 802.11b/g/n/ac) | High power consumption, rechargeable (hours) | 2.4–5 GHz | <150 m | Up to 433 Mbit/s (one stream) | High-speed, ubiquity, easy to deploy and access | Wireless LAN connectivity, Internet access |
| Wi-Fi HaLow/IEEE 802.11ah | Power consumption of 1 mW | 868–915 MHz | <1 km | 100 kbit/s per channel | Low power, different QoS levels (8192 stations per AP) | IoT applications |
| ZigBee | Very low power consumption, 100–500 µW, batteries last months to years | 868–915 MHz, 2.4 GHz | <100 m | Up to 250 kbit/s | Up to 65,536 nodes | Smart Home and industrial applications |
| LoRa | Long battery life, it lasts >10 years | 2.4 GHz | Kilometers | 0.25−50 kbit/s | High range, resistant to interference | Smart cities, M2M applications |
| SigFox | Battery lasts 10 years sending 1 message, <10 years sending 6 messages | 868–902 MHz | 50 km | 100 kbit/s | Global cellular network | M2M applications |

| Board | Processor | Power | Connectivity | Architecture Type | Cryptographic Engine | Cost |
|---|---|---|---|---|---|---|
| Arduino Nano 33 BLE Sense [101] | ARM Cortex-M0 32-bit@64 MHz | 52 µA/MHz | BLE | AI-enabled | Yes | €27 |
| SparkFun Edge [102] | ARM Cortex-M4F 32-bit@48/96 MHz | 6 µA/MHz | BLE 5 | AI-enabled | Yes | €15 |
| Adafruit EdgeBadge [103] | ATSAMD51J19A 32-bit@120 MHz | 65 µA/MHz | BLE/WiFi | AI-enabled | Yes | €35 |
| ESP32-DevKitC [104] | Xtensa dual-core 32-bit@160/240 MHz | 2 mA/MHz | BLE/WiFi | AI-enabled | Yes | €10 |
| ESPEYE-DevKit [105] | Xtensa dual-core 32-bit@160/240 MHz | 2 mA/MHz | BLE/WiFi | AI-enabled | Yes | €50 |
| STM32 Nucleo-144 [106] | ARM Cortex-M4 Nucleo-L4R5ZI 32-bit@160/120 MHz | 43 µA/MHz | Ethernet | AI-enabled | No | €100 |

| Task | Data | Model | Accuracy |
|---|---|---|---|
| #1 - VWW | Visual Wake Words Dataset | MobileNetV1 (0.25x) | 80% (Top 1) |
| #2 - IC | CIFAR-10 | ResNet-V1 | 85% (Top 1) |
| #3 - KS | Google Speech Commands | DSCNN | 90% (Top 1) |
| #4 - AD | ToyADMOS (ToyCar) | FC AutoEncoder | 0.85 (AUC) |

| ID | Submitter | Device | Processor | Software | VWW Latency (ms) | VWW Energy (µJ) | IC Latency (ms) | IC Energy (µJ) | KS Latency (ms) | KS Energy (µJ) | AD Latency (ms) | AD Energy (µJ) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0.5-464 | Harvard (Reference) | Nucleo-L4R5ZI | Arm Cortex M4 w/ FPU | TensorFlow Lite for Microcontrollers | 603.14 | 24,320.84 | 704.23 | 29,207.01 | 181.92 | 7373.70 | 10.40 | 416.31 |
| 0.5-465 | Peng Cheng Laboratory | PCL Scepu02 | RV32IMAC with FPU | TensorFlow Lite for Microcontrollers 2.3.1 (modified) | 846.74 | - | 1239.16 | - | 325.63 | - | 13.65 | - |
| 0.5-466 | Latent AI | RPi 4 | Broadcom BCM2711 | LEIP Framework | 3.75 | - | 1.31 | - | 0.39 | - | 0.17 | - |
| 0.5-467 | Latent AI | RPi 4 | Broadcom BCM2711 | LEIP Framework | 2.60 | - | 1.07 | - | 0.42 | - | 0.19 | - |
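In the MLPerf Tiny results above, only the reference submission (Nucleo-L4R5ZI) reports both latency and energy per inference. As a minimal sketch, the average power drawn during an inference can be derived from those two columns, since energy in µJ divided by latency in ms gives mW. The dictionary and function names below are illustrative assumptions and are not part of MLPerf Tiny or of the article; the figures are copied from the reference row of the table.

```python
# Reference-submission figures from the MLPerf Tiny v0.5 table
# (Nucleo-L4R5ZI): task -> (latency in ms, energy per inference in µJ).
# The names used here are illustrative, not part of any benchmark API.
REFERENCE_RESULTS = {
    "VWW": (603.14, 24320.84),
    "IC": (704.23, 29207.01),
    "KS": (181.92, 7373.70),
    "AD": (10.40, 416.31),
}


def average_power_mw(latency_ms: float, energy_uj: float) -> float:
    """Average power during one inference, in mW (µJ / ms = mW)."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return energy_uj / latency_ms


def inferences_per_joule(energy_uj: float) -> float:
    """Number of inferences that one joule of energy can pay for."""
    if energy_uj <= 0:
        raise ValueError("energy must be positive")
    return 1e6 / energy_uj


if __name__ == "__main__":
    for task, (latency_ms, energy_uj) in REFERENCE_RESULTS.items():
        power = average_power_mw(latency_ms, energy_uj)
        per_joule = inferences_per_joule(energy_uj)
        print(f"{task}: {power:.2f} mW average, {per_joule:.1f} inferences/J")
```

Under these assumptions, all four tasks come out at roughly 40 mW on the reference board, which suggests that the energy differences between tasks are driven almost entirely by inference latency rather than by differences in power draw.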

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fraga-Lamas, P.; Lopes, S.I.; Fernández-Caramés, T.M. Green IoT and Edge AI as Key Technological Enablers for a Sustainable Digital Transition towards a Smart Circular Economy: An Industry 5.0 Use Case. Sensors 2021, 21, 5745. https://doi.org/10.3390/s21175745
