DBSCAN-Based Tracklet Association Annealer for Advanced Multi-Object Tracking

Abstract

1. Introduction

- A new density-based method is proposed that strengthens tracklet association and thereby improves the performance of tracking-by-detection multi-object trackers.

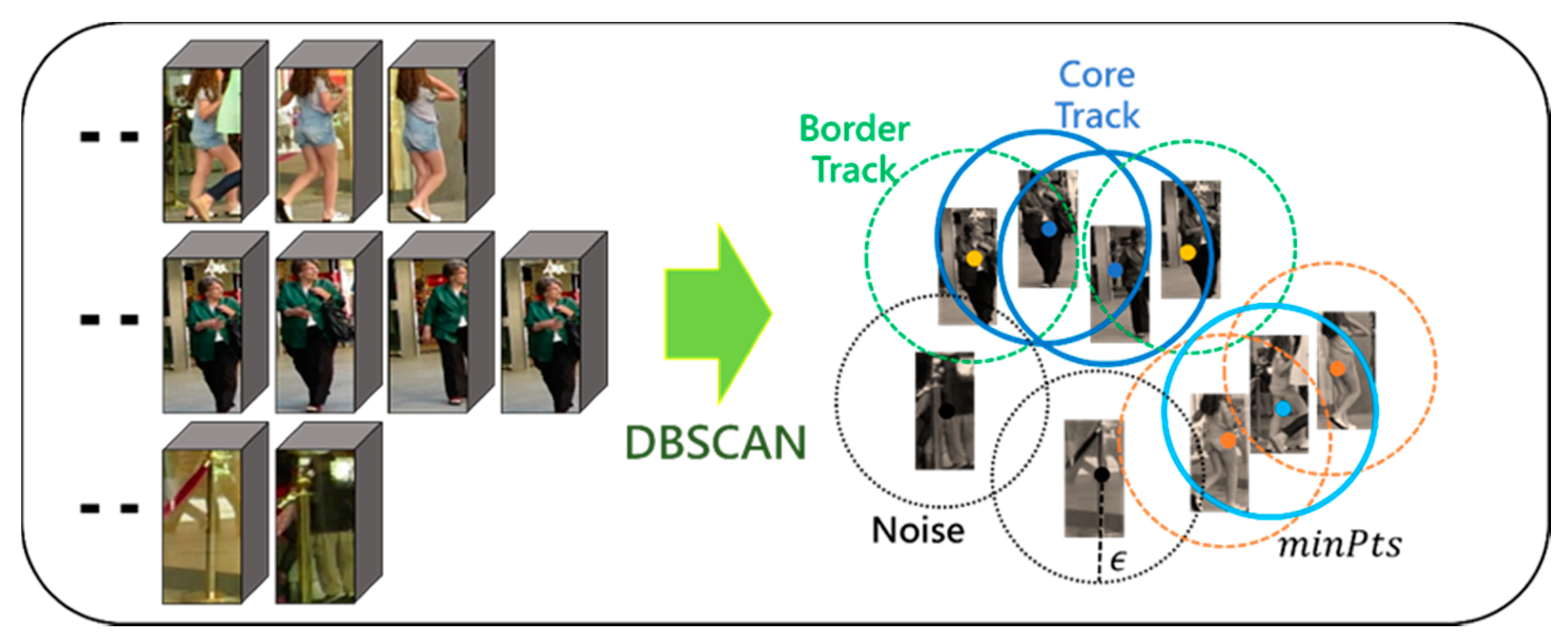

- A clustering technique suited to multi-object tracking is introduced: DBSCAN groups the reduced-dimensional feature vectors obtained from a CNN, so that highly similar tracklets are combined.

- The method reduces the severe vulnerability of multi-object tracking to small external interference and eases the separation of multi-object trajectories, and it keeps tracking real-time because it is a simple extension of the existing tracker structure.
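
The clustering idea in the points above can be illustrated with a minimal sketch. It is not the authors' implementation: the cosine distance, the `eps` and `min_pts` values, the helper name `merge_tracklets`, and the toy feature vectors are all assumptions made for illustration, and the sketch ignores temporal constraints (for example, two tracklets that overlap in time cannot belong to the same object).

```python
import math

NOISE = -1  # label for points that belong to no cluster


def cosine_distance(a, b):
    """1 - cosine similarity; assumed metric, not taken from the paper."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def dbscan(points, eps, min_pts, dist=cosine_distance):
    """Plain DBSCAN. Returns one label per point: 0..k-1, or NOISE."""
    n = len(points)
    labels = [None] * n  # None means "not visited yet"

    def neighbors(i):
        # The eps-neighborhood includes the point itself.
        return [j for j in range(n) if dist(points[i], points[j]) <= eps]

    cluster = 0
    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE  # may later be claimed as a border point
            continue
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point joins the cluster
            if labels[j] is not None:
                continue
            labels[j] = cluster
            reachable = neighbors(j)
            if len(reachable) >= min_pts:  # j is a core point: expand
                queue.extend(reachable)
        cluster += 1
    return labels


def merge_tracklets(features, eps=0.05, min_pts=2):
    """Map each tracklet ID to the smallest ID in its DBSCAN cluster.

    `features` is a dict {tracklet_id: appearance feature vector}.
    Tracklets labelled as noise keep their own ID.
    """
    ids = sorted(features)
    labels = dbscan([features[t] for t in ids], eps, min_pts)
    representative = {}
    mapping = {}
    for tid, label in zip(ids, labels):
        if label == NOISE:
            mapping[tid] = tid
        else:
            mapping[tid] = representative.setdefault(label, tid)
    return mapping


# Toy appearance features: tracklets 1, 2 and 4 look alike, 3 does not.
features = {
    1: [1.0, 0.0, 0.1],
    2: [0.9, 0.1, 0.1],
    3: [0.0, 1.0, 0.0],
    4: [0.98, 0.02, 0.12],
}
print(merge_tracklets(features))  # {1: 1, 2: 1, 3: 3, 4: 1}
```

In this toy run, tracklets 1, 2, and 4 fall into one dense cluster and are given a common identity, while tracklet 3 is treated as noise and left unchanged.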

2. Density-Based Tracklet Association

2.1. DBSCAN

2.2. Appearance Feature Extraction

2.3. Tracklets Association Using DBSCAN

| Algorithm 1. Strengthening tracklet association process through DBSCAN clustering |


3. Experimental Results

| Tracker | MOTA↑ | FAR↓ | IDS↓ | Frag↓ | Hz↑ |
|---|---|---|---|---|---|
| Public | | | | | |
| SORT [27] | 26.0 | 1.20 | 779 | 1171 | 100+ |
| IOU Tracker [28] | 25.8 | 1.53 | 689 | 1120 | 100+ |
| SST [44] | 31.5 | 1.86 | 1262 | 1542 | 10 |
| DTAA (ours) | 26.8 | 0.93 | 421 | 879 | 30 |
| Private | | | | | |
| JDE [45] | 35.5 | 3.68 | 520 | 823 | 15 |
| TubeTK [46] | 59.6 | 1.02 | 858 | 1103 | 6 |
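
For readers unfamiliar with the column headers, the following sketch shows how two of the reported metrics are conventionally computed. It uses the standard CLEAR MOT definition of MOTA and takes FAR to be the number of false alarms per frame. The function names and the counts in the example are hypothetical and are not taken from the paper's experiments.

```python
def mota(false_negatives, false_positives, id_switches, num_gt):
    """Multi-object tracking accuracy as a fraction (multiply by 100 for %).

    Counts are summed over all frames; num_gt is the total number of
    ground-truth object instances over all frames.
    """
    return 1.0 - (false_negatives + false_positives + id_switches) / num_gt


def false_alarm_rate(false_positives, num_frames):
    """Average number of false alarms (false positives) per frame."""
    return false_positives / num_frames


# Hypothetical counts, for illustration only.
score = mota(false_negatives=300, false_positives=100, id_switches=20, num_gt=1000)
rate = false_alarm_rate(false_positives=100, num_frames=500)
print(round(score * 100, 1), rate)  # 58.0 0.2
```

IDS (identity switches) and Frag (track fragmentations) are raw counts, so lower values are better, as the arrows in the header indicate.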

| Tracker | MOTA↑ | FAR↓ | IDS↓ | Frag↓ |
|---|---|---|---|---|
| MOT15 [36] Public | | | | |
| SORT [27] + DTAA | 27.5 (+5.7%) | 1.00 (−16.7%) | 442 (−48.4%) | 879 (−4.9%) |
| IOU Tracker [28] + DTAA | 26.4 (+2.3%) | 1.42 (−7.2%) | 518 (−24.8%) | 751 (−2.9%) |
| SST [44] + DTAA | 33.7 (+7.0%) | 1.39 (−25.3%) | 717 (−3.2%) | 1112 (−7.9%) |
| Private | | | | |
| JDE [45] + DTAA | 39.1 (+10.1%) | 3.31 (−10.1%) | 374 (−28.1%) | 685 (−6.8%) |
| TubeTK [46] + DTAA | 58.4 (−0.2%) | 0.98 (−4.7%) | 798 (−32.2%) | 851 (−7.8%) |
| MOT16 [37] Public | | | | |
| SORT [27] + DTAA | 22.6 (−) | 2.15 (−60%) | 1366 (−65%) | 4713 (−53%) |
| IOU Tracker [28] + DTAA | 27.5 (+1.3%) | 0.20 (−12%) | 751 (−21%) | 841 (−13%) |
| SST [44] + DTAA | 27.9 (+3.4%) | 1.30 (−14%) | 1095 (−14%) | 2786 (−6.2%) |
| Private | | | | |
| JDE [45] + DTAA | 72.8 (−0.9%) | 1.05 (−16%) | 1248 (−6.3%) | 1510 (−32.1%) |
| TubeTK [46] + DTAA | 73.5 (+0.1%) | 1.02 (−12.1%) | 653 (−12.2%) | 1123 (−8.4%) |
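
The parenthesised values appear to be relative changes with respect to the corresponding baseline tracker. The sketch below illustrates that reading, assuming the first table lists the MOT15 baselines. It uses the JDE [45] rows for MOTA, FAR, and IDS. The helper name `relative_change` is hypothetical.

```python
def relative_change(baseline, with_dtaa):
    """Percentage change of `with_dtaa` relative to `baseline`."""
    return (with_dtaa - baseline) / baseline * 100.0


# JDE [45] values copied from the tables: baseline vs. JDE + DTAA.
jde_baseline = {"MOTA": 35.5, "FAR": 3.68, "IDS": 520}
jde_dtaa = {"MOTA": 39.1, "FAR": 3.31, "IDS": 374}

changes = {
    metric: round(relative_change(jde_baseline[metric], jde_dtaa[metric]), 1)
    for metric in jde_baseline
}
print(changes)  # {'MOTA': 10.1, 'FAR': -10.1, 'IDS': -28.1}
```

A positive change is an improvement for MOTA, whereas a negative change is an improvement for FAR, IDS, and Frag.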

| Tracker | MOTA↑ | FAR↓ | IDS↓ | Frag↓ |
|---|---|---|---|---|
| SORT [27] | 18.6 | 0.90 | 859 | 1906 |
| IOU Tracker [28] | 19.9 | 1.17 | 1306 | 1722 |
| SST [44] | 23.2 | 1.38 | 1240 | 1682 |
| SORT [27] + DTAA | 18.6 (−) | 0.90 (−) | 707 (−17.7%) | 1477 (−22.5%) |
| IOU Tracker [28] + DTAA | 22.3 (+12.1%) | 1.01 (−13.7%) | 503 (−61.5%) | 1566 (−9.1%) |
| SST [44] + DTAA | 24.9 (+7.3%) | 1.10 (−20.3%) | 761 (−38.6%) | 1432 (−14.9%) |

| Tracker | MOTA↑ | FAR↓ | IDS↓ | Frag↓ |
|---|---|---|---|---|
| SORT [27] | 12.8 | 0.70 | 954 | 1454 |
| IOU Tracker [28] | 7.5 | 1.41 | 1192 | 2340 |
| SST [44] | 13.8 | 0.84 | 1046 | 1762 |
| SORT [27] + DTAA | 14.4 (+12.5%) | 0.40 (−42.8%) | 399 (−58.2%) | 788 (−45.8%) |
| IOU Tracker [28] + DTAA | 10.8 (+44.0%) | 1.12 (−20.6%) | 676 (−43.3%) | 1977 (−15.5%) |
| SST [44] + DTAA | 15.1 (+9.4%) | 0.76 (−9.5%) | 785 (−24.9%) | 1482 (−15.9%) |

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ciaparrone, G.; Sánchez, F.L.; Tabik, S.; Troiano, L.; Tagliaferri, R.; Herrera, F. Deep learning in video multi-object tracking: A survey. Neurocomputing 2020, 381, 61–88.

- Brasó, G.; Leal-Taixé, L. Learning a neural solver for multiple object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 6247–6257.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; IEEE: Piscataway, NJ, USA, 2005; Volume 1, pp. 886–893.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 91–99.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 7263–7271.

- Zhang, Y.; Sheng, H.; Wu, Y.; Wang, S.; Lyu, W.; Ke, W.; Xiong, Z. Long-term tracking with deep tracklet association. IEEE Trans. Image Process. 2020, 29, 6694–6706.

- Peng, J.; Wang, T.; Lin, W.; Wang, J.; See, J.; Wen, S.; Ding, E. TPM: Multiple object tracking with tracklet-plane matching. Pattern Recognit. 2020, 107, 107480.

- Leal-Taixé, L.; Canton-Ferrer, C.; Schindler, K. Learning by tracking: Siamese CNN for robust target association. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Las Vegas, NV, USA, 26 June–1 July 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 33–40.

- Voigtlaender, P.; Krause, M.; Osep, A.; Luiten, J.; Sekar, B.B.G.; Geiger, A.; Leibe, B. Mots: Multi-object tracking and segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 7942–7951.

- Bergmann, P.; Meinhardt, T.; Leal-Taixe, L. Tracking without bells and whistles. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 941–951.

- Janai, J.; Güney, F.; Behl, A.; Geiger, A. Computer vision for autonomous vehicles: Problems, datasets and state of the art. Found. Trends® Comput. Graph. Vis. 2020, 12, 1–308.

- Luo, W.; Xing, J.; Milan, A.; Zhang, X.; Liu, W.; Kim, T.K. Multiple object tracking: A literature review. Artif. Intell. 2020, 293, 103448.

- Berclaz, J.; Fleuret, F.; Fua, P. Robust people tracking with global trajectory optimization. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 17–22 June 2006; Volume 1, pp. 744–750.

- Pirsiavash, H.; Ramanan, D.; Fowlkes, C.C. Globally-optimal greedy algorithms for tracking a variable number of objects. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 1201–1208.

- Zhang, S.; Huang, J.B.; Lim, J.; Gong, Y.; Wang, J.; Ahuja, N.; Yang, M.H. Tracking persons-of-interest via unsupervised representation adaptation. Int. J. Comput. Vis. 2020, 128, 96–120.

- Tang, S.; Andriluka, M.; Andres, B.; Schiele, B. Multiple people tracking by lifted multicut and person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 3539–3548.

- Choi, W.; Savarese, S. A unified framework for multi-target tracking and collective activity recognition. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 215–230.

- Sharma, S.; Ansari, J.A.; Murthy, J.K.; Krishna, K.M. Beyond pixels: Leveraging geometry and shape cues for online multi-object tracking. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–26 May 2018; pp. 3508–3515.

- Kim, C.; Li, F.; Ciptadi, A.; Rehg, J.M. Multiple hypothesis tracking revisited. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4696–4704.

- Mahmoudi, N.; Ahadi, S.M.; Rahmati, M. Multi-target tracking using CNN-based features: CNNMTT. Multimed. Tools Appl. 2019, 78, 7077–7096.

- Huang, C.L. Exploring effective data augmentation with TDNN-LSTM neural network embedding for speaker recognition. In Proceedings of the 2019 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), Singapore, 14–18 December 2019; pp. 291–295.

- Ullah, M.; Cheikh, F.A. Deep feature based end-to-end transportation network for multi-target tracking. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 3738–3742.

- Fang, K.; Xiang, Y.; Li, X.; Savarese, S. Recurrent autoregressive networks for online multi-object tracking. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 466–475.

- Kim, H.U.; Koh, Y.J.; Kim, C.S. Online Multiple Object Tracking Based on Open-Set Few-Shot Learning. IEEE Access 2020, 8, 190312–190326.

- Ristani, E.; Tomasi, C. Features for multi-target multi-camera tracking and re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6036–6046.

- Zhang, Z.; Wu, J.; Zhang, X.; Zhang, C. Multi-target, multi-camera tracking by hierarchical clustering: Recent progress on dukemtmc project. arXiv 2017, arXiv:1712.09531.

- Bochinski, E.; Eiselein, V.; Sikora, T. High-speed tracking-by-detection without using image information. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017; pp. 1–6.

- Bewley, A.; Ge, Z.; Ott, L.; Ramos, F.; Upcroft, B. Simple online and realtime tracking. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, Arizona, 25–28 September 2016; pp. 3464–3468.

- Zhu, H.; Zhou, M. Efficient role transfer based on Kuhn–Munkres algorithm. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2011, 42, 491–496.

- Wojke, N.; Bewley, A.; Paulus, D. Simple online and realtime tracking with a deep association metric. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3645–3649.

- Fu, H.; Wu, L.; Jian, M.; Yang, Y.; Wang, X. MF-SORT: Simple online and Realtime tracking with motion features. In Proceedings of the International Conference on Image and Graphics, Beijing, China, 23–25 August 2019; pp. 157–168.

- Xiao, B.; Wu, H.; Wei, Y. Simple baselines for human pose estimation and tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 466–481.

- Ester, M.; Kriegel, H.P.; Sander, J.; Xu, X. A Density-Based Algorithm for Discovering Clusters in Large Spatial Databases with Noise. In Proceedings of the 2nd International Conference on Knowledge Discovery and Data Mining, Portland, OR, USA, 2–4 August 1996; pp. 226–231.

- Kim, J.; Cho, J. Delaunay triangulation-based spatial clustering technique for enhanced adjacent boundary detection and segmentation of LiDAR 3D point clouds. Sensors 2019, 19, 3926.

- Kim, J.; Cho, J. An online graph-based anomalous change detection strategy for unsupervised video surveillance. EURASIP J. Image Video Process. 2019, 2019, 76.

- Leal-Taixé, L.; Milan, A.; Reid, I.; Roth, S.; Schindler, K. Motchallenge 2015: Towards a benchmark for multi-target tracking. arXiv 2015, arXiv:1504.01942.

- Milan, A.; Leal-Taixé, L.; Reid, I.; Roth, S.; Schindler, K. MOT16: A benchmark for multi-object tracking. arXiv 2016, arXiv:1603.00831.

- Schubert, E.; Sander, J.; Ester, M.; Kriegel, H.P.; Xu, X. DBSCAN revisited, revisited: Why and how you should (still) use DBSCAN. ACM Trans. Database Syst. (TODS) 2017, 42, 1–21.

- Peng, S.; Huang, H.; Chen, W.; Zhang, L.; Fang, W. More trainable inception-ResNet for face recognition. Neurocomputing 2020, 411, 9–19.

- Schroff, F.; Kalenichenko, D.; Philbin, J. Facenet: A unified embedding for face recognition and clustering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 815–823.

- Hornakova, A.; Henschel, R.; Rosenhahn, B.; Swoboda, P. Lifted disjoint paths with application in multiple object tracking. In Proceedings of the International Conference on Machine Learning, Vienna, Austria, 12–18 July 2020; pp. 4364–4375.

- Ristani, E.; Solera, F.; Zou, R.; Cucchiara, R.; Tomasi, C. Performance measures and a data set for multi-target, multi-camera tracking. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 17–35.

- Sun, S.; Akhtar, N.; Song, H.; Mian, A.; Shah, M. Deep affinity network for multiple object tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 104–119.

- Wang, Z.; Zheng, L.; Liu, Y.; Li, Y.; Wang, S. Towards real-time multi-object tracking. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 107–122.

- Pang, B.; Li, Y.; Zhang, Y.; Li, M.; Lu, C. Tubetk: Adopting tubes to track multi-object in a one-step training model. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 6308–6318.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, J.; Cho, J. DBSCAN-Based Tracklet Association Annealer for Advanced Multi-Object Tracking. Sensors 2021, 21, 5715. https://doi.org/10.3390/s21175715
