Abstract

3D visual recognition is a prerequisite for most autonomous robotic systems operating in the real world. It empowers robots to perform a variety of tasks, such as tracking, understanding the environment, and human–robot interaction. Autonomous robots equipped with 3D recognition capability can better perform their social roles through supportive task assistance in professional jobs and effective domestic services. For active assistance, social robots must recognize their surroundings, including objects and places to perform the task more efficiently. This article first highlights the value-centric role of social robots in society by presenting recently developed robots and describes their main features. Instigated by the recognition capability of social robots, we present the analysis of data representation methods based on sensor modalities for 3D object and place recognition using deep learning models. In this direction, we delineate the research gaps that need to be addressed, summarize 3D recognition datasets, and present performance comparisons. Finally, a discussion of future research directions concludes the article. This survey is intended to show how recent developments in 3D visual recognition based on sensor modalities using deep-learning-based approaches can lay the groundwork to inspire further research and serves as a guide to those who are interested in vision-based robotics applications.

1. Introduction

Today, robotic systems with social characteristics are considered an important keystone in household chores, healthcare services, and modern industrial production [1]. 3D visual recognition is the fundamental component of these social robots. Social robots [2] are autonomous robots that are currently being developed on a large scale for safe and secure robot interactions in human-centric environments [3]. The appearance and applications of these robotic systems vary; however, object and place recognition plays a central role in these systems for semantic understanding of the environment. This article starts with the impact of social robots and lists the key features of some recently developed social robots that are tailored for public, domestic, hospital, and industrial use.

These robots are designed to interact and exhibit social behaviors with broad human-like capabilities, which integrate visual recognition, knowledge representation, task planning, localization, and navigation. Among all these, we focus on a systematic review of the approaches that address the most essential robotic capability, known as visual recognition. In this direction, we present data representation methods based on sensor modalities for 3D recognition using deep learning (DL) and examine the approaches for both 3D object recognition (3DOR) and 3D place recognition (3DPR).

Visual recognition is a vital component for robotic systems that operate in human environments. The methods to perform visual recognition tasks generally fall into two categories. Traditional machine-learning-based approaches first require feature definition, e.g., using the scale-invariant feature transform [4] or the histogram of oriented gradients [5], followed by a classification technique such as a support vector machine [6]. Deep learning (DL)-based approaches perform the recognition task using convolutional neural networks (CNNs) [7] without explicitly defining the features.

Autonomous robotic systems deal with a large amount of real-world data. Therefore, the manually designed models of traditional machine learning algorithms are not feasible [8] for real-world robotics applications. On the other hand, the flexibility of DL-based models and their better performance as the scale of data increases make them well suited for use in robotics applications. Over the last few years, CNN-based DL models, starting in 2D space using two-stage [9,10] and one-stage object detectors [11,12,13,14,15,16], have achieved state-of-the-art object recognition results with the output of 2D bounding boxes (BBoxes).

Typically, two-stage detectors, such as R-CNN [17], Fast R-CNN [18], and Faster R-CNN [9], exploit region proposal networks in a first step to propose regions of interest (RoI). Afterward, they send region proposals to the network pipeline for object prediction by calculating features over RoI. As a trade-off for run time, one-stage detectors, such as YOLOv3 [15], YOLOv4 [19], Scaled-YOLOv4 [20], and single shot multibox detector [12] do not involve region proposal.

Researchers [12,15] have handled object detection as a regression problem and directly learned class probabilities to detect the object with bounding box coordinates. One-stage detectors are faster and capable of real-time performance; however, their accuracy is lower than that of two-stage detectors [21]. The task of place recognition is similar to object retrieval [22] and has been performed using dynamic object detection [23] or by constructing object maps that contain object information in a place [24]. Although extensive research has been conducted on 2D recognition, it has limitations compared with 3D recognition.

With the recent monumental innovations in sensor technology, a wide variety of DL-based 3D object [25,26,27,28] and place recognition approaches [29,30,31] have been developed for different types of sensors. LiDAR and camera are two frequently used and increasingly popular sensors [32] that have been employed for object and place recognition in robotic systems. 3D object recognition predicts 3D information of objects, such as the pose, volume, and shape of the object with 3D BBoxes and class labels. It plays an important role in the intelligent perception of robotic systems.

In contrast to 2D object detection, it requires richer input data and efficient algorithms to estimate six degrees of freedom (DoF) poses [33] and the dimensions of oriented 3D BBoxes [34,35] with high precision. 3D place recognition involves distinguishing two identical places based on their sensor information [36]. Different approaches for place recognition are used, such as matching several feature maps between images, learning representative features [37], and calculating the pixel-wise distance between camera images.
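To make the notion of an oriented 3D BBox concrete, the following minimal sketch computes the eight corners of a box from its center, dimensions, and heading angle. It is an illustration only: the function name `box3d_corners`, the axis convention (z up, yaw about z), and the example values are assumptions, and a full six-DoF pose would replace the single yaw rotation with a general 3D rotation.

```python
import numpy as np

def box3d_corners(center, size, yaw):
    """Return the 8 corners (8x3) of a 3D box rotated by `yaw` about the z-axis.

    Assumed convention (not taken from the survey): size = (length, width, height),
    length along the box's local x-axis, z pointing up.
    """
    l, w, h = size
    # Corner offsets in the box's own frame, centred at the origin.
    x = np.array([1, 1, -1, -1, 1, 1, -1, -1]) * (l / 2.0)
    y = np.array([1, -1, -1, 1, 1, -1, -1, 1]) * (w / 2.0)
    z = np.array([-1, -1, -1, -1, 1, 1, 1, 1]) * (h / 2.0)
    corners = np.stack([x, y, z], axis=1)  # shape (8, 3)

    # Rotate about the vertical axis, then translate to the box center.
    c, s = np.cos(yaw), np.sin(yaw)
    rot_z = np.array([[c, -s, 0.0],
                      [s, c, 0.0],
                      [0.0, 0.0, 1.0]])
    return corners @ rot_z.T + np.asarray(center, dtype=float)

# Hypothetical car-sized box turned by 90 degrees.
corners = box3d_corners(center=(10.0, 2.0, 0.8), size=(4.0, 1.8, 1.6), yaw=np.pi / 2)
```

With a 90-degree yaw, the box's length ends up along the world y-axis, which is why oriented boxes need an explicit heading in addition to their dimensions.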

LiDAR-based methods for place recognition concentrate on developing local [38] and global [39] descriptors from structural information, segmenting [40] 3D LiDAR point cloud (PC) data, and applying CNN techniques to 3D LiDAR PCs by projecting range sensor data onto 2D images [41]. However, the synchronization of camera and LiDAR sensors [42] is essential for capturing detailed information of objects and for large-scale place recognition.

1.1. Contributions

During the last decade, there has been rapid progress in the domain of social robots, including autonomous vehicles. Parts of this success rely on the implementation of both 3D object and place visual recognition tasks.

Previous reviews, shown in Table 1, concentrated only on 3D object recognition and did not address the 3D place recognition methods. In contrast to the previous studies, this article reviews and analyzes sensor-based data representation methods for both 3D object and place recognition (3DOPR) using state-of-the-art DL-based approaches. Moreover, we also discuss recently developed social robots.

Table 1.

The Contributions of This Survey.

This review is concentrated on 3D visual recognition approaches that have their applications in the domain of robotics, while approaches in the domain of smart environments are beyond the scope of the current survey. We aim at facilitating novice researchers and experts to overcome the challenging task of determining and utilizing the most suitable visual recognition approach for their intended robotic system, as one can quickly explore the recent research progress through this review.

Compared to the existing survey papers, shown in Table 1, the present review is different in the following terms, to the best of our knowledge:

- We discuss the latest representative social robots that have been developed recently (Section 2).

- The present study is the first article that comes up with a combined review of two robotic capabilities: 3D object recognition and 3D place recognition in a comprehensive assessment. It provides data representation modalities based on camera and LiDAR for both 3D recognition tasks using DL-based approaches (Section 3).

- It reviews 14 3D object detection datasets.

- The current survey presents a comparison of existing results to evaluate the performance on datasets.

- It yields an analysis of selected approaches from the domain of robotics, delineates the advantages, summarizes the current main research trends, discusses the limitations, and outlines the possible future directions.

- Compared to the earlier surveys, this study is more concerned with the most recent work. Therefore, it provides the reader an important opportunity to advance their understanding of state-of-the-art robotic 3D recognition methods.

1.2. Survey Structure

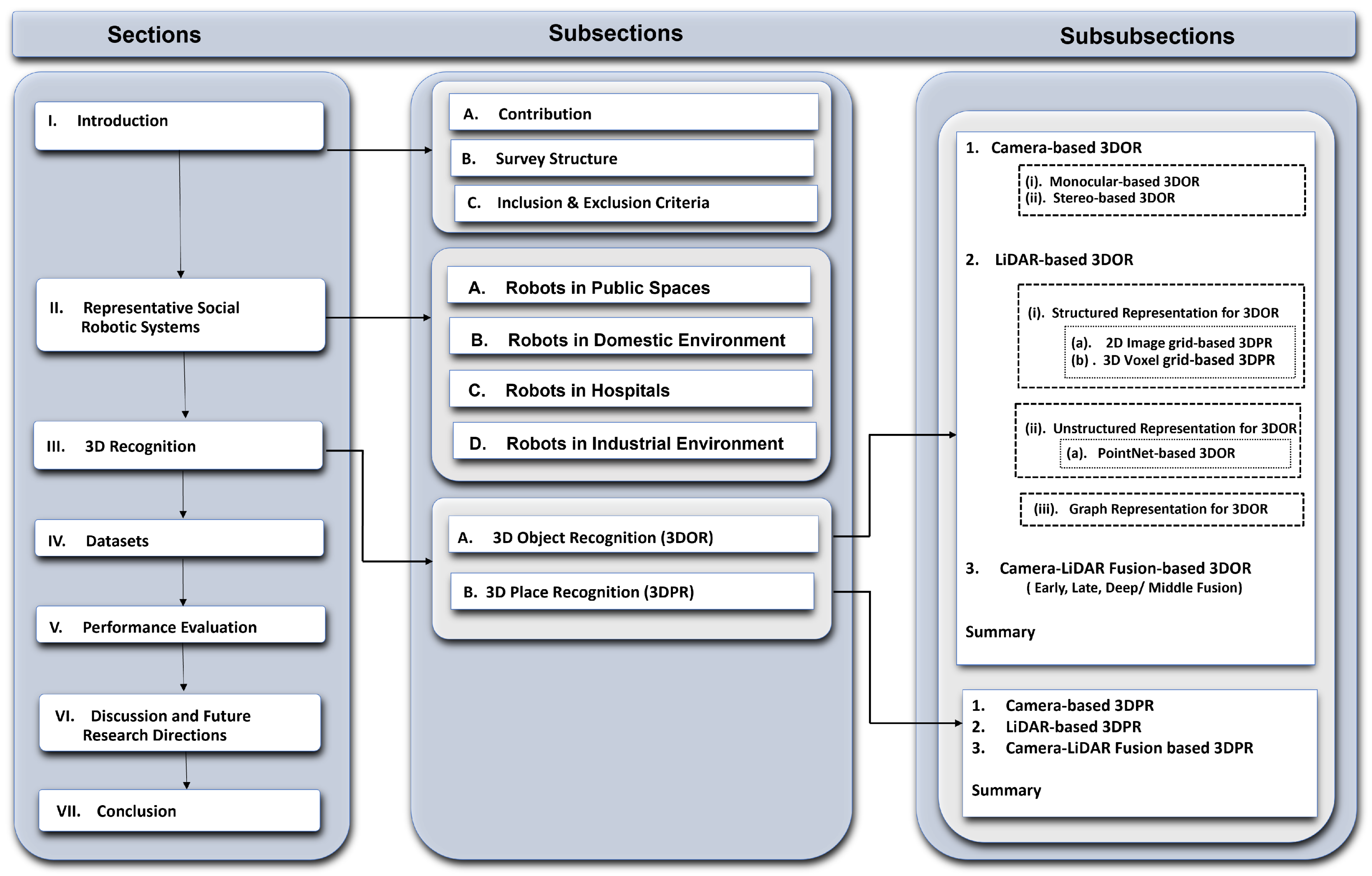

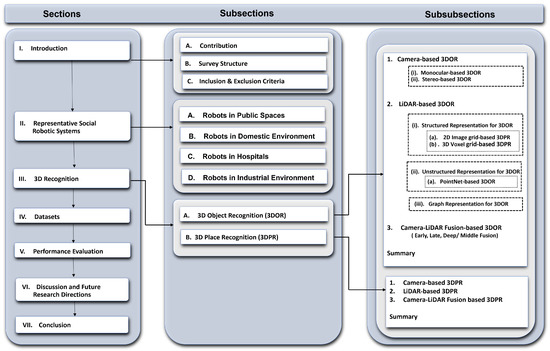

The survey has been organized in a top-down manner. The overall structure of the survey with corresponding topics and subsections is diagrammatically illustrated in Figure 1. In Section 2, the aim is to provide fresh insight to the readers into recently developed social robots with their impact on society, use cases, sensors, tasks (i.e., recognition), and semantic functions (i.e., assisting) in public places (Section 2.1), domestic (Section 2.2), hospitals (Section 2.3), and industrial environments (Section 2.4).

Figure 1.

The overall structure of the survey that shows all the topics discussed in each section.

In Section 3, inspired by the recognition capabilities of social robots described in Section 2, the article examines the sensor-based (camera and LiDAR) data representation approaches used for 3D object (Section 3.1) and place (Section 3.2) recognition with DL-based models. In addition, it gives a brief overview of datasets (Section 4) that have been used for the evaluation of 3D recognition methods. Subsequently, in Section 6, the article discusses current research challenges and future research directions, and finally we conclude the survey with a summary in Section 7.

1.3. Inclusion and Exclusion Criteria

The inclusion and exclusion criteria are mainly focused on Section 3 for 3DOR and 3DPR methods. Section 2 does not involve comparison (instead it highlights the importance of visual recognition capability by giving the examples of recently developed robots from different sectors); therefore, it is not restricted to follow the same time span as Section 3. However, Section 3 performs the literature analysis for 3DOR and 3DPR methods; therefore, all studies in Section 3 are restricted to follow a specific time span based on inclusion and exclusion criteria. For 3DOR (Section 3.1) and 3DPR (Section 3.2), the inclusion criteria are as follows:

- The research publications must be from 2014 to 2021.

- Their domain must be a robotic system.

- They must be either journal or conference publications.

- They must address 3DOR or 3DPR methods using deep-learning approaches based on Camera and LiDAR sensor modalities.

Table 2 represents both inclusion and exclusion criteria that were applied to perform the paper selection, and the results of the systematic approach for paper filtering process are described below.

Table 2.

3D Recognition: Inclusion and Exclusion Criteria.

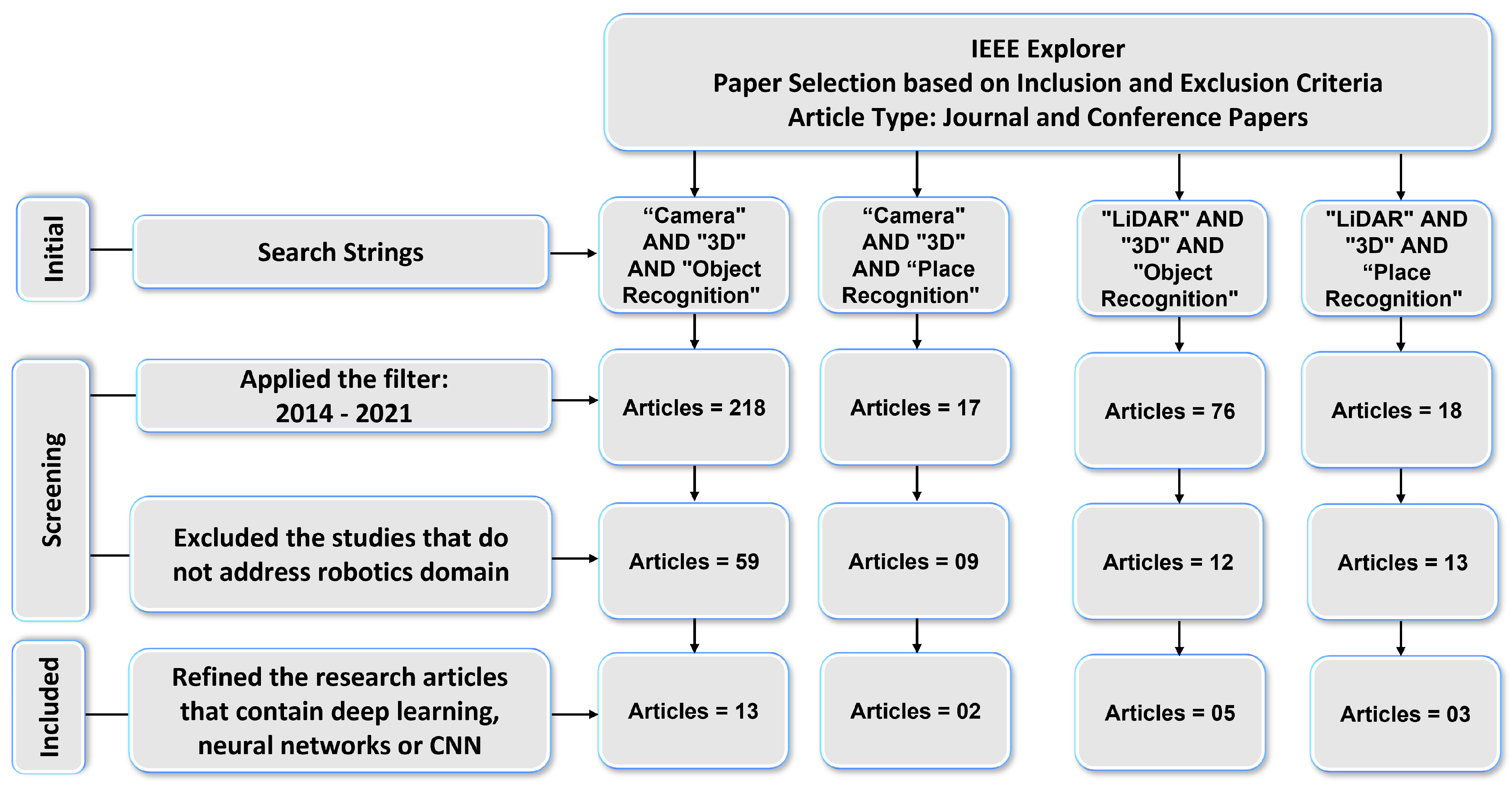

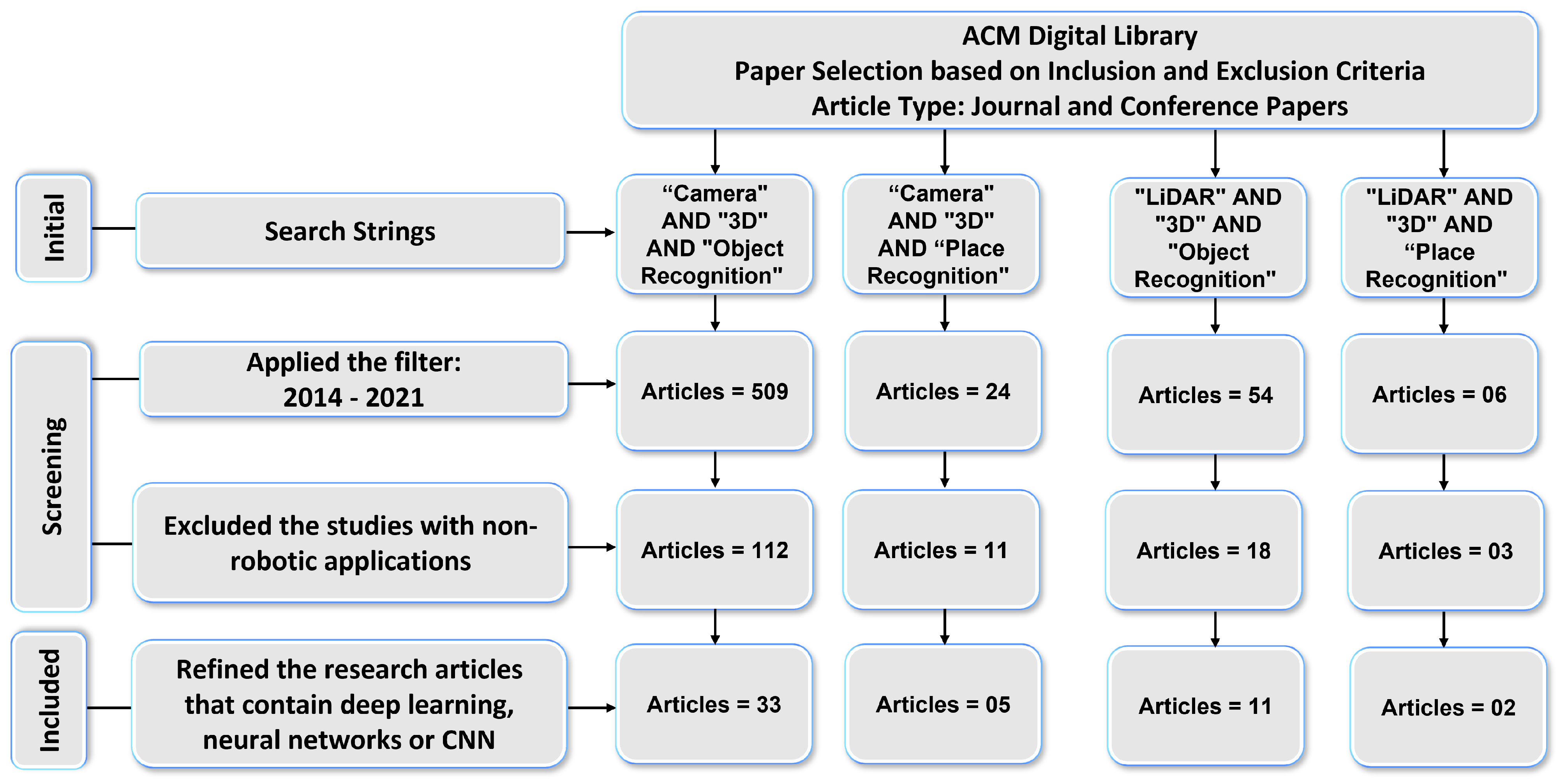

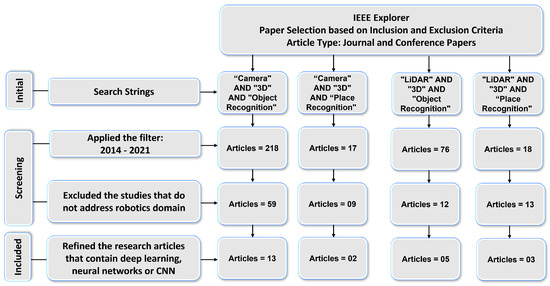

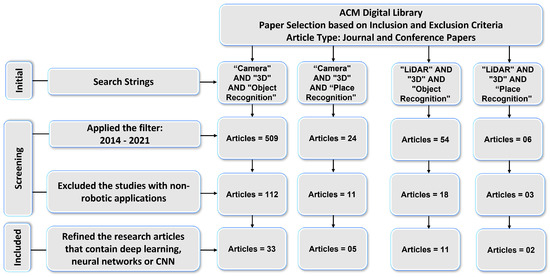

Results of the Paper Selection Process

We conducted a systematic literature review for Section 3 to determine which DL-based models are being used for 3D object and place recognition based on sensor modalities. We used four search strings (“Camera” AND “3D” AND “Object Recognition”, “LiDAR” AND “3D” AND “Object Recognition”, “Camera” AND “3D” AND “Place Recognition”, and “LiDAR” AND “3D” AND “Place Recognition”) to extract the research articles from two key digital databases of academic articles: IEEE Xplore and the ACM Digital Library. The paper selection process of this article consists of four steps, as shown in Figure 2 and Figure 3.

Figure 2.

Results: IEEE Xplore paper selection based on the inclusion and exclusion criteria.

Figure 3.

Results: ACM Digital Library paper selection based on the inclusion and exclusion criteria.

First, the relevant articles for the survey were collected from the digital libraries using search strings that correspond to the type of sensor (camera and LiDAR) and category of 3D recognition (object and place). In the second step, 329 articles in IEEE Xplore and 593 articles in the ACM Digital Library were extracted by applying the time period filter. The third step narrowed these down to 93 articles from IEEE Xplore and 144 articles from the ACM Digital Library that belonged to the robotics category. We used MS Access database management software to find duplicates among these articles. For this, we ran an SQL query on the database table and found that 35 articles in the ACM Digital Library and 21 articles in IEEE Xplore were duplicates.
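The duplicate check described above can be sketched with a grouped SQL query. The example below is not the query that was actually run: it uses Python's built-in `sqlite3` module as a stand-in for MS Access, and the table name `articles`, its columns, the placeholder titles, and the choice to match on a lower-cased, trimmed title are all assumptions made for illustration.

```python
import sqlite3

# Hypothetical records: (source library, title). Titles are placeholders.
records = [
    ("IEEE", "Paper A"),
    ("IEEE", "Paper B"),
    ("IEEE", "paper a "),  # same title with different case and a trailing space
    ("ACM", "Paper C"),
    ("ACM", "Paper C"),
]

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE articles (id INTEGER PRIMARY KEY, source TEXT, title TEXT)"
)
conn.executemany("INSERT INTO articles (source, title) VALUES (?, ?)", records)

# Group rows by library and normalised title; any group with more than one
# row is a set of duplicates, and COUNT(*) - 1 is the number of extra copies.
query = """
    SELECT source, LOWER(TRIM(title)) AS norm_title, COUNT(*) - 1 AS extra_copies
    FROM articles
    GROUP BY source, LOWER(TRIM(title))
    HAVING COUNT(*) > 1
    ORDER BY source
"""
duplicates = conn.execute(query).fetchall()
conn.close()
```

In practice, matching on a persistent identifier such as a DOI would be more robust than matching on titles, if that field is available in the exported records.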

After removing the duplicate articles, the fourth step involved selecting the articles that used deep-learning-based approaches, which resulted in 23 articles from IEEE Xplore and 51 articles from the ACM Digital Library that met the inclusion and exclusion criteria. Lastly, the selected articles were arranged into 3DOR and 3DPR categories based on their sensor data representation methods: 17 articles from IEEE Xplore and 44 articles from the ACM Digital Library are related to the 3DOR task, and five articles from IEEE Xplore and seven articles from the ACM Digital Library are related to the 3DPR task.

2. Representative Social Robotic Systems

This section presents recently developed social robotic systems that demonstrate recognition tasks and semantic understanding to perform a function in public (Section 2.1), domestic (Section 2.2), medical (Section 2.3), and industrial (Section 2.4) environments.

2.1. Robots in Public Spaces

Robots in public spaces refers to social robots used in places that are generally accessible to everyone, such as airports, supermarkets, libraries, and museums. Amazon launched a six-wheeled autonomous Scout delivery robot [45] in its Seattle-based research and development lab. After a long test run, it is commercially available in a few places in the USA, namely Atlanta, Georgia, and Franklin, Tennessee [90]. It uses an array of cameras and ultrasonic sensors for route planning and navigation on sidewalks at a walking pace and for climbing up the front porch for package delivery. It is capable of semantic task understanding, such as recognizing people and pets and detecting and avoiding obstacles using machine learning algorithms.

AIMBOT [46] is an anti-epidemic autonomous driving robot that is designed for crowded indoor public environments, including schools, hospitals, and office buildings, to provide safe and efficient COVID-19 protection. It is available for commercial use. It recognizes 200 people per minute, uses an infrared thermal imaging camera to measure their body temperature, detects whether individuals are wearing masks, and sends a voice reminder to people without a mask. Table 3 lists the sensors, purpose, and tasks as well as their algorithm, appearances, semantic functions, and development status.

Table 3.

Robots in Public Spaces.

2.2. Robots in Domestic Environment

Robots in the domestic environment refer to robots that are used at home for household chores, entertainment, or personal assistance. At the Consumer Electronics Show 2020, Samsung showcased a robotic chef’s assistant [47], which consists of a pair of arms that mimic human gestures to cook a meal and perform tasks on voice commands. It downloads the appropriate skills and performs tasks such as slicing by picking up the knife, pouring the ingredients, and mixing them. It is equipped with sensors and cameras and relies on AI and computer vision algorithms for the recognition task. The prototype of the Samsung chef robot was first unveiled at KBIS 2019 [91]. It is not available commercially.

Amazon’s Astro [48] is an Alexa-based home assistant robot that combines Alexa, computer vision, and AI software. It is a commercially available robot for home security, including a six-month free trial of Ring Protect Pro that allows saving videos in Ring’s cloud storage [92]. It obeys voice commands, such as “follow me” or “go to a specific room”. It performs face recognition to deliver items to a specific person. It acts as a family companion and entertains children by playing music. It cares for elderly people by reminding them to take medicine and record their blood pressure. It also assists with voice or video calls. It uses SLAM for mapping the environment and roaming around the house. It automatically attaches itself to the charging dock. House members can use its mobile application for remote monitoring when they are away from home.

Table 4 presents the sensors, usability, and tasks of domestic robots along with their algorithm, appearances, semantic functions, and development status.

Table 4.

Robots in Domestic Environments.

2.3. Robots in Hospitals

Robots in hospitals are used in healthcare and treatment centers for relieving medical personnel either by aiding in surgery or caring for the patients. Moxi is a robotic assistant [49] in semi-structured hospital environments that is commercially available. The Medical City Dallas Heart and Spine Hospital is the first North Texas health care provider using the Moxi robot to combat a lack of nursing personnel in hospital systems [93]. It uses AI and machine learning algorithms to reduce the cognitive workload of nurses by performing tasks that do not require interaction with patients, such as delivering supplies to patient rooms, fetching items, and removing linen bags. Table 5 illustrates its characteristics, which include the robot’s sensors, purpose, and tasks, algorithm, appearances, semantic functions, and development status.

Table 5.

Robots in Hospitals.

Ahn et al. [50] developed a multi-robot system consisting of ReceptionistBot and CareBot for the hospital environment that performs the tasks of receptionist, nurse assistant, and medical server. Both ReceptionistBot and CareBot are in the prototype stage and are not available commercially. ReceptionistBot communicates with patients and obtains their personal information. If visitors want to meet the medical staff, it guides them to meet CareBot for treatment. CareBot collects data about the patient’s health condition by asking questions. It assists the nurse using different healthcare devices to measure the blood pressure, pulse rate, and oxygen level of the patients. It also communicates with RoboGen, which is a secure server for managing patient information. MAiRA [51] is a multi-sensing intelligent robot that assists in complex medical procedures. This intelligent assistant is a commercially available cognitive robot [94]. It has voice recognition capability.

It performs human–robot interaction in a collaborative industrial environment. It can learn from instructions given through voice commands or gestures. It can perform object detection, pose estimation, and object grasping tasks either with professionals or wholly autonomously.

2.4. Robots in Industrial Environment

Robots in industry are used to assist in manufacturing by automating repetitive tasks, such as welding, assembly, and shipping. Handle is an autonomous mobile manipulation robot [52] developed by Boston Dynamics for moving boxes in a warehouse and unloading them from shipping containers. It relies on a 2D and 3D perception learning-based vision system to detect boxes. Table 6 enumerates the sensors, usability, tasks, algorithm, appearances, semantic functions, and development status. Handle will be available for sale in two years according to Playter, the Chief Executive Officer at Boston Dynamics [96]. LARA [53] is a recently developed collaborative industrial robotic arm. Its prototype is complete, and it is expected to be released soon for commercial use [97]. It is available in two sizes with 5 and 10 kg payload capacities. Its 3D vision allows detection and recognition of an object for a manipulation task.

Table 6.

Robots in Industrial Environments.

Stretch [54] is a recently designed robot for autonomously moving boxes around the warehouses. Boston Dynamics expects that the robot will be commercially available from 2022 [98]. The strength of its arm makes it unique for potential entry into robotic warehouses. It is flexible and can do different tasks, such as loading, unloading boxes, and building up pallets.

3. 3D Recognition

With the recent breakthroughs in deep learning (DL) and significant improvements in sensor technologies, 3D recognition has made great progress, which leads toward rapid development in autonomous robotic systems, including autonomous driving. In this section, we concentrate on camera and LiDAR-based data representation methods employed for both 3D object recognition (3DOR) (Section 3.1) and 3D place recognition (3DPR) (Section 3.2) using DL models. Recently developed autonomous robotic systems (as described in Section 2) are mostly equipped with both cameras and LiDAR for visual perception tasks.

LiDAR is suitable for real-time autonomous systems in both indoor and outdoor environments, although most perception approaches focus on the use of LiDAR in autonomous vehicles. However, recent trends in deep-learning-based end-to-end approaches have also drawn researchers’ interest to the innovative use of LiDAR in autonomous robots for recognition tasks that benefit from the detailed 3D PC data to detect objects accurately. The PC data provided by the LiDAR sensor retains information related to the object’s position and reflection intensity as well as the shape representation of different objects in complex scenes.

Hence, integrating this 3D PC information with DL-based recognition models is indispensable to perform precise 3D recognition. On the other hand, monocular and stereo cameras are less expensive sensors than LiDAR for 3D object detection but require post-processing techniques to determine the size and relative distance. The detection capability and reliability of the camera and LiDAR are limited in different environments. Table 7 summarizes the advantages and limitations of both sensors. Camera-LiDAR fusion is used to overcome these issues.

Table 7.

Comparison of Camera and LiDAR Sensors.

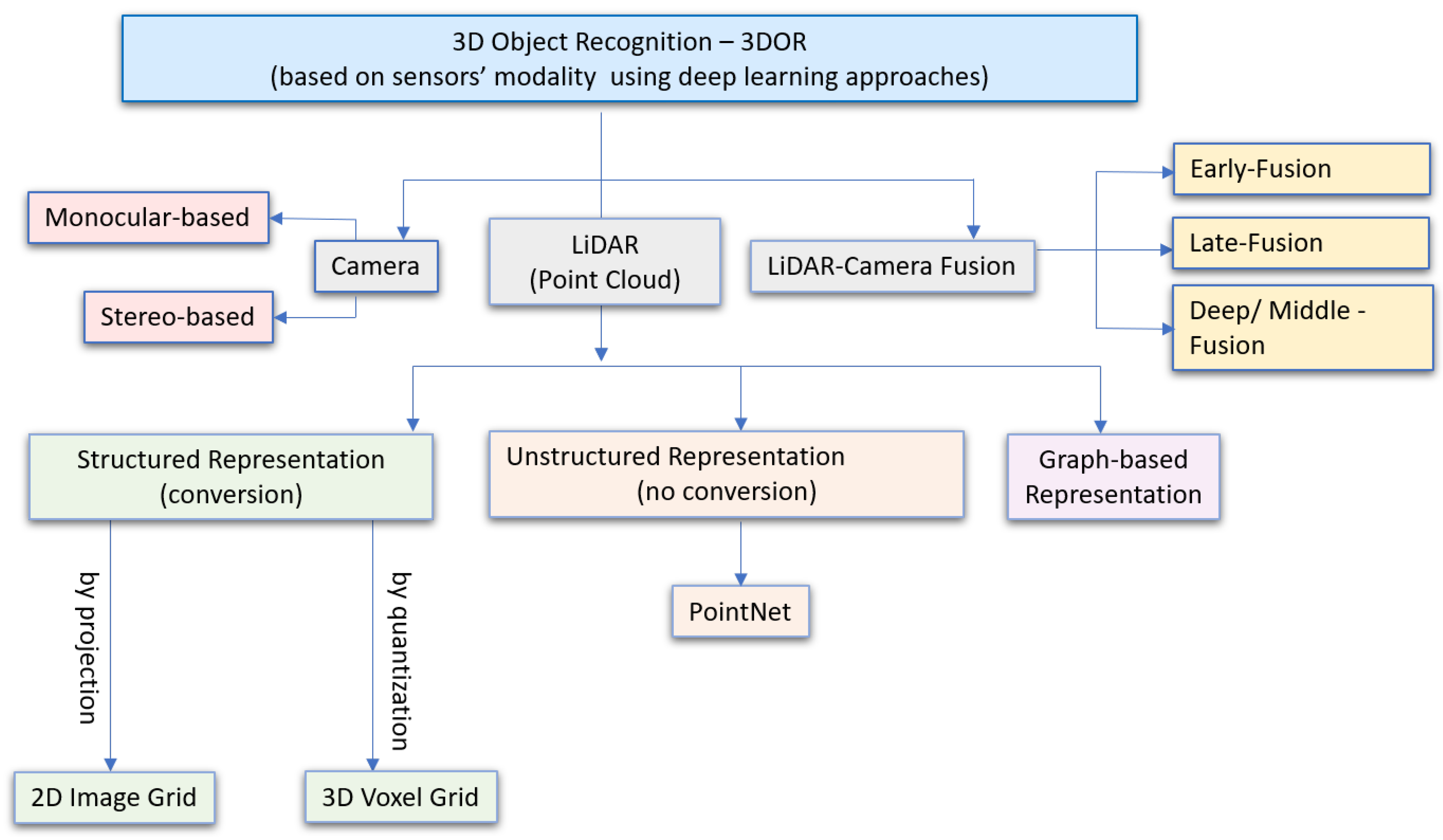

3.1. 3D Object Recognition (3DOR)

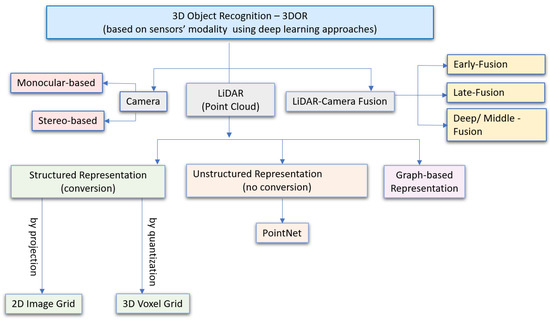

This section categorizes data representation methods based on sensors’ modalities for 3D object recognition using deep learning in autonomous robotic systems. Compared with traditional recognition methods, the success of DL in the past ten years for robust and accurate object detection has made deep CNN the most promising method to perform 3D vision recognition tasks for robotic systems. The overall taxonomy is shown in Figure 4, which illustrates data representation in visual sensors that include a camera (Section 3.1.1), LiDAR (Section 3.1.2), and camera-LiDAR fusion (Section 3.1.3).

Figure 4.

Camera and LiDAR-based Data Representation Modalities for 3D Object Recognition (3DOR).

3.1.1. Camera-Based 3DOR

This section explores the methods that perform 3DOR by estimating 3D bounding boxes (BBoxes) based on either monocular or stereo camera images, as discussed in Table 8 along with their limitations and research gaps. We first give an overview of camera-based methods and then describe their advantages and limitations in Table 9.

Table 8.

Methodology and Limitations: Camera-based 3DOR.

Table 9.

Literature Analysis: Camera-based 3D Object Detection Methods.

- (i) Monocular-Based 3DOR

A monocular camera is essential for the deployment of low power and low-cost systems in the real-world application of robotics or autonomous driving [99]. Therefore, researchers have shown increasing interest in monocular 3D object detection in recent years [34,100,101,102,103,104]. Even though existing 3D detectors have achieved good accuracy, most of them do not consider the information related to occluded objects, which are partially visible. To this end, Chen et al. [55] improved 3D object detection by establishing a relationship of paired samples, which allows modeling spatial constraints for occluded objects. Its 3D detector introduced an uncertainty-aware prediction module for computing object location and object-to-object distances.

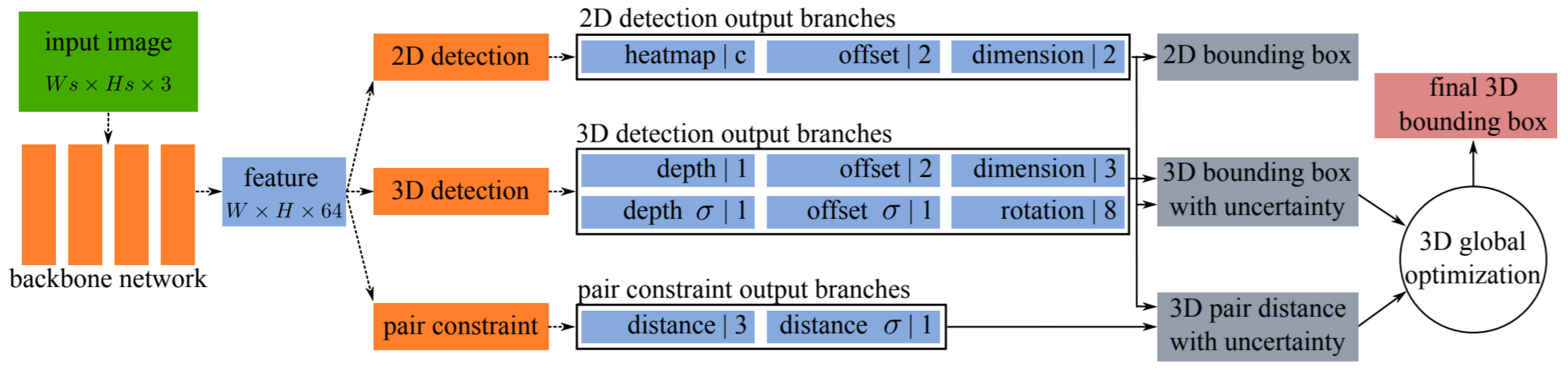

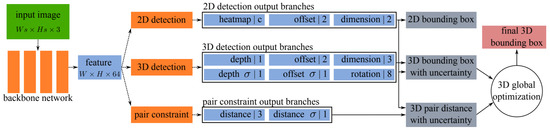

This method adopted a one-stage architecture that shares the design of anchor-free 2D object detection approaches, consisting of one backbone and several task-specific dense prediction branches. The backbone accepted one monocular image as input and produced an output feature map of size W × H × 64. It had eleven output branches, as shown in Figure 5, which were divided into three parts: three for 2DOR, six for 3DOR, and two for the prediction of pairwise geometric constraints, which were estimated among adjacent objects using key points on the feature map.

Figure 5.

The architecture [55] overview with eleven prediction branches divided into 2DOR, 3DOR, and pair constraints.

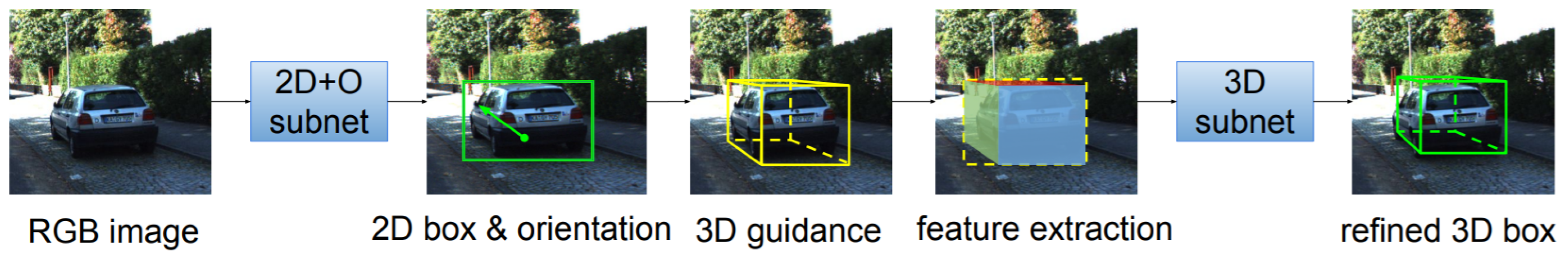

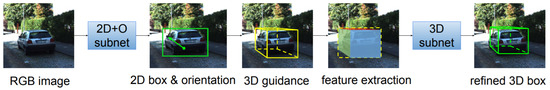

Li et al. [56] presented a 3D object detection method that extracted 3D information from a 2D image and generated accurate 3D BBoxes by obtaining coarse cuboids from predicted 2D boxes. In contrast to typical methods that rely on feature extraction from 2D BBoxes, it exploited 3D structural information by employing visual features and used the features extracted from surfaces to eliminate the feature ambiguity problem of 2D bounding boxes. It modified Faster R-CNN for orientation prediction by including a new branch. Figure 6 shows an overview of its proposed framework, in which a single RGB image was passed as input and processed in four steps. First, a CNN-based detector, known as the 2D+O subnet, was used for extracting 2D BBoxes and orientations of the objects.

Figure 6.

The proposed 3D object detection paradigm [56] consisting of a CNN based model (2D+O subnet), 3D guidance generated using the obtained output of 2D+O subnet, and extracted features utilized by the refinement model (3D subnet).

In the second step, these were combined with prior knowledge of the driving scenario to generate basic cuboids, which were called guidance. In the third step, this guidance was projected onto the image plane, and features were fused as distinguishable structural information to eliminate the ambiguity. In the fourth step, another CNN, called the 3D subnet, used the fused features as network input to refine the guidance.
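Projecting a cuboid onto the image plane, as in the third step, is commonly done with a pinhole camera model. The sketch below illustrates that general operation and is not the implementation of [56]: the intrinsic matrix `K`, the cuboid coordinates, and the helper names `project_points` and `enclosing_box_2d` are hypothetical, and the corners are assumed to be expressed in the camera frame with the z-axis pointing forward.

```python
import numpy as np

def project_points(points_cam, K):
    """Project Nx3 camera-frame points (z forward) to Nx2 pixel coordinates."""
    points_cam = np.asarray(points_cam, dtype=float)
    uvw = points_cam @ K.T            # homogeneous image coordinates
    return uvw[:, :2] / uvw[:, 2:3]   # perspective divide by depth

def enclosing_box_2d(pixels):
    """Axis-aligned 2D box (u_min, v_min, u_max, v_max) around projected points."""
    return np.concatenate([pixels.min(axis=0), pixels.max(axis=0)])

# Hypothetical intrinsics: focal length 700 px, principal point (600, 180).
K = np.array([[700.0, 0.0, 600.0],
              [0.0, 700.0, 180.0],
              [0.0, 0.0, 1.0]])

# Hypothetical cuboid: 8 corners, all in front of the camera (z > 0).
cuboid = np.array([[x, y, z]
                   for x in (-1.0, 1.0)
                   for y in (-0.5, 1.0)
                   for z in (10.0, 14.0)])

pixels = project_points(cuboid, K)
box = enclosing_box_2d(pixels)
```

The enclosing 2D box of the projected corners is one simple way to compare a 3D hypothesis with a 2D detection; the perspective divide is only valid for points with positive depth.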

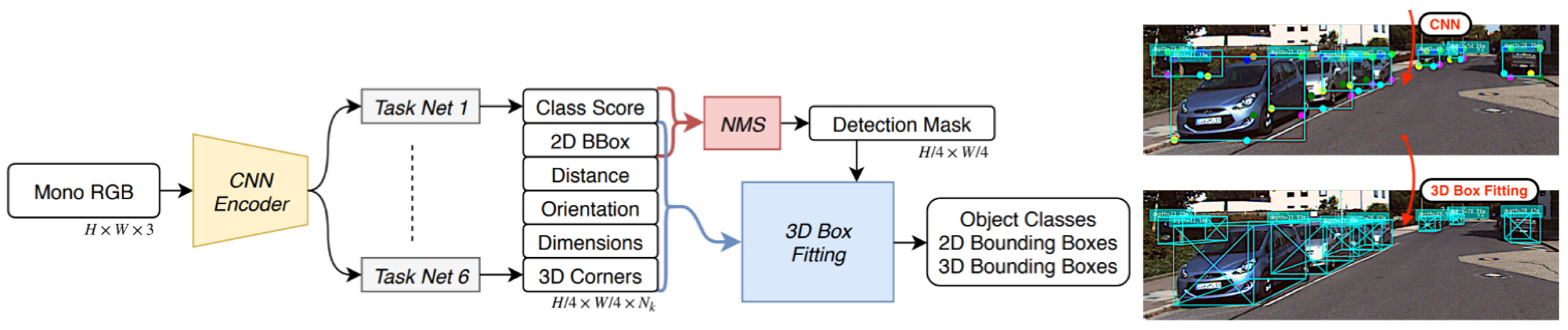

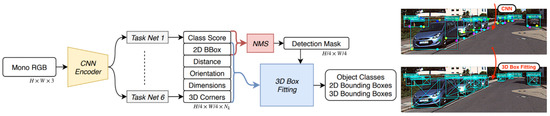

Jörgensen et al. [57] proposed the single-stage monocular 3D (SS3D) architecture. It contained two main parts: a CNN for detecting objects by regressing a surrogate 3D representation and a 3D BBox optimizer for fitting the respective 3D BBoxes. SS3D regressed 2D and 3D BBoxes simultaneously after specifying the object’s center, and its 2D and 3D BBox tuple contained 26 surrogate elements. Its proposed pipeline is illustrated in Figure 7 and consists of three steps. The first step is object detection with class scores and regression for fitting 3D BBoxes, while the second step involves non-maximum suppression to eliminate redundant detections. Finally, 3D BBoxes are produced by an optimizer using learned weights; these 3D BBoxes are fitted independently and in parallel using the non-linear least squares method.

Figure 7.

The pipeline of SS3D [57] for 3DOR from a single view.
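The non-maximum suppression step in such a pipeline can be illustrated with the standard greedy procedure on 2D boxes. This is a generic sketch rather than the variant used in SS3D, whose details are not given here; the function names, the box format `(x1, y1, x2, y2)`, the threshold of 0.5, and the example detections are assumptions.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the best-scoring box, drop boxes that overlap it too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep box i only if it does not overlap any already-kept box too strongly.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep

# Two heavily overlapping detections and one separate detection.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
```

Here the second box overlaps the first with an IoU of 81/119 (about 0.68), so it is suppressed, while the third box is kept because it does not overlap either of the others.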

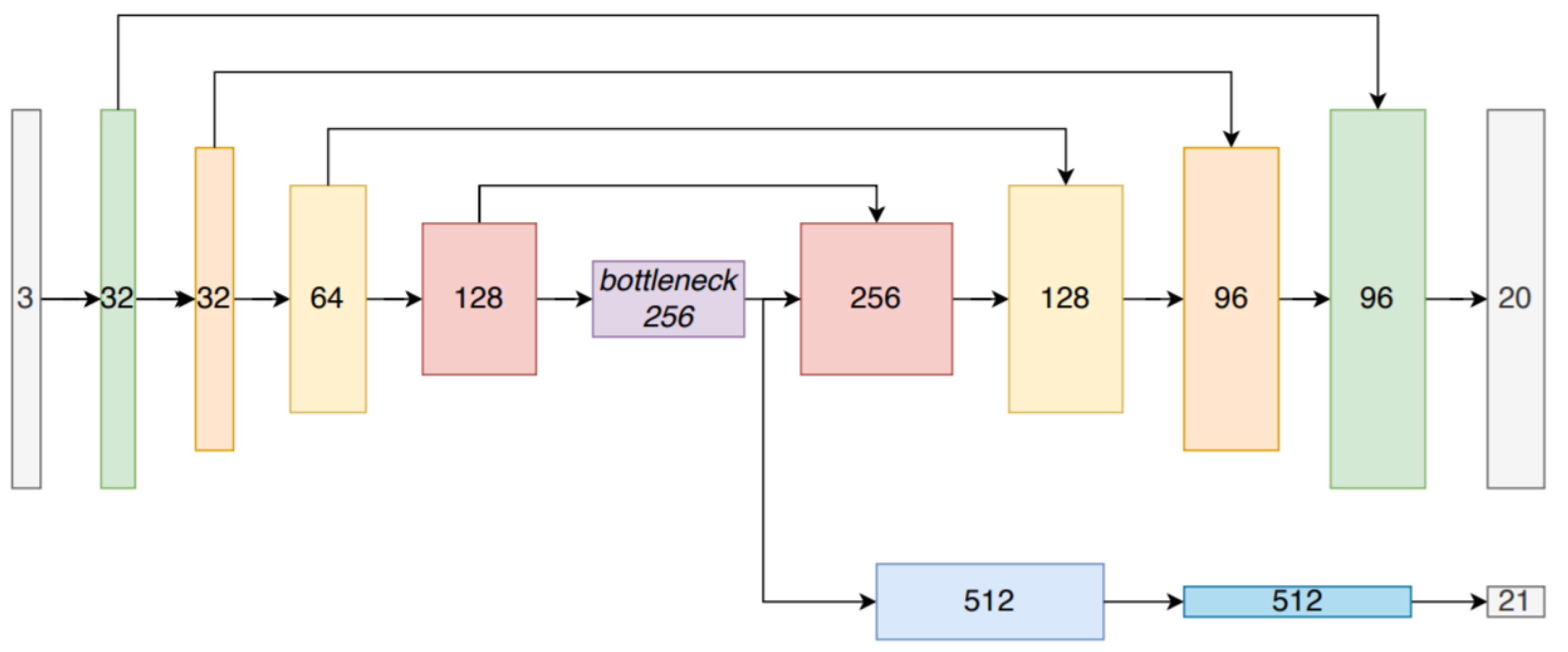

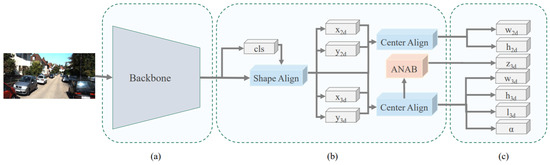

Luo et al. [58] introduced a monocular 3D single stage object detector (M3DSSD) to overcome the feature mismatching issue of anchor-based monocular 3DOR methods by proposing a two-step feature alignment approach. The major components of its architecture, shown in Figure 8, are a backbone network that is a modified version of [105], feature alignment, an attention block, and a prediction head. Its asymmetric non-local attention block (ANAB) extracted depth-wise features for representing the global information. Its feature alignment consisted of two steps to handle the misalignment of 2D and 3D BBoxes. The first step obtained the target region based on the classification confidence and allowed the receptive field of the feature map to concentrate on the anchor regions. The second step used the 2D/3D center prediction for feature offset estimation to reduce the gap between predictions and feature maps.

Figure 8.

The architecture of M3DSSD [58] for monocular 3D object detection (a) Framework backbone. (b) The two-step feature alignment, classification and regression heads with ANAB for depth prediction. (c) Other regression heads.

- (ii) Stereo-Based 3DOR

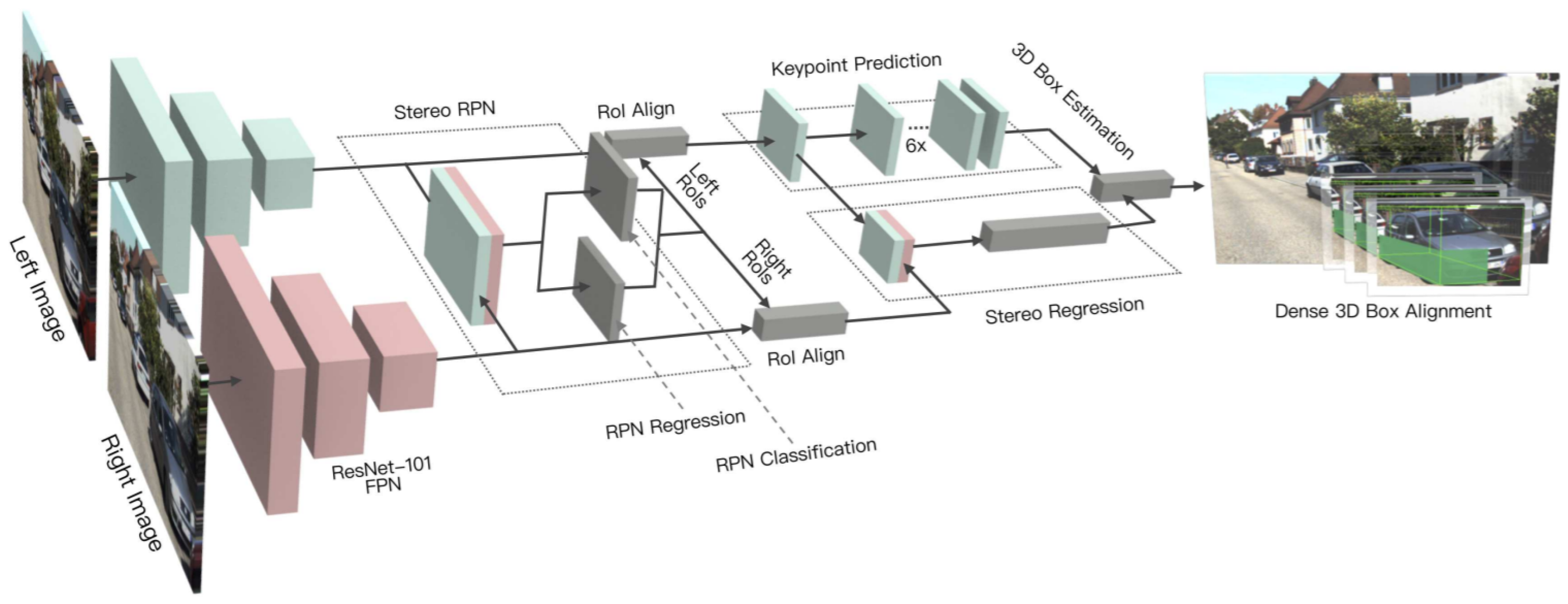

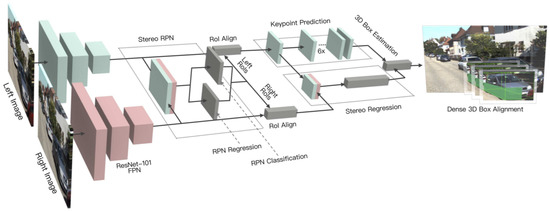

Compared to the monocular camera, there are relatively fewer studies that utilize stereo vision for 3D object detection. Li et al. [59] exploited semantic and geometric information in the stereo image by proposing a stereo R-CNN based 3D object detector, which was an extension of Faster R-CNN. The stereo region proposal network, stereo R-CNN, and key points branch were three major components of its architecture as shown in Figure 9.

Figure 9.

The architecture of Stereo R-CNN [59], which outputs key points, stereo boxes, along with the viewpoint angle and dimensions, followed by 3D BBox estimation.

The stereo region proposal network module generated right and left RoI proposals. The stereo R-CNN module applied RoI-Align [10] on the feature maps and concatenated them for object classification. It added a stereo regression branch for accurate regression of 2D stereo boxes. The key point branch took left RoI features for detecting object key points. It performed 3D box estimation by projecting the relations between 2D right-left boxes with 3D box corners and key points. It obtained accurate 3D bounding boxes and object localization by employing a dense region-based photometric alignment method.

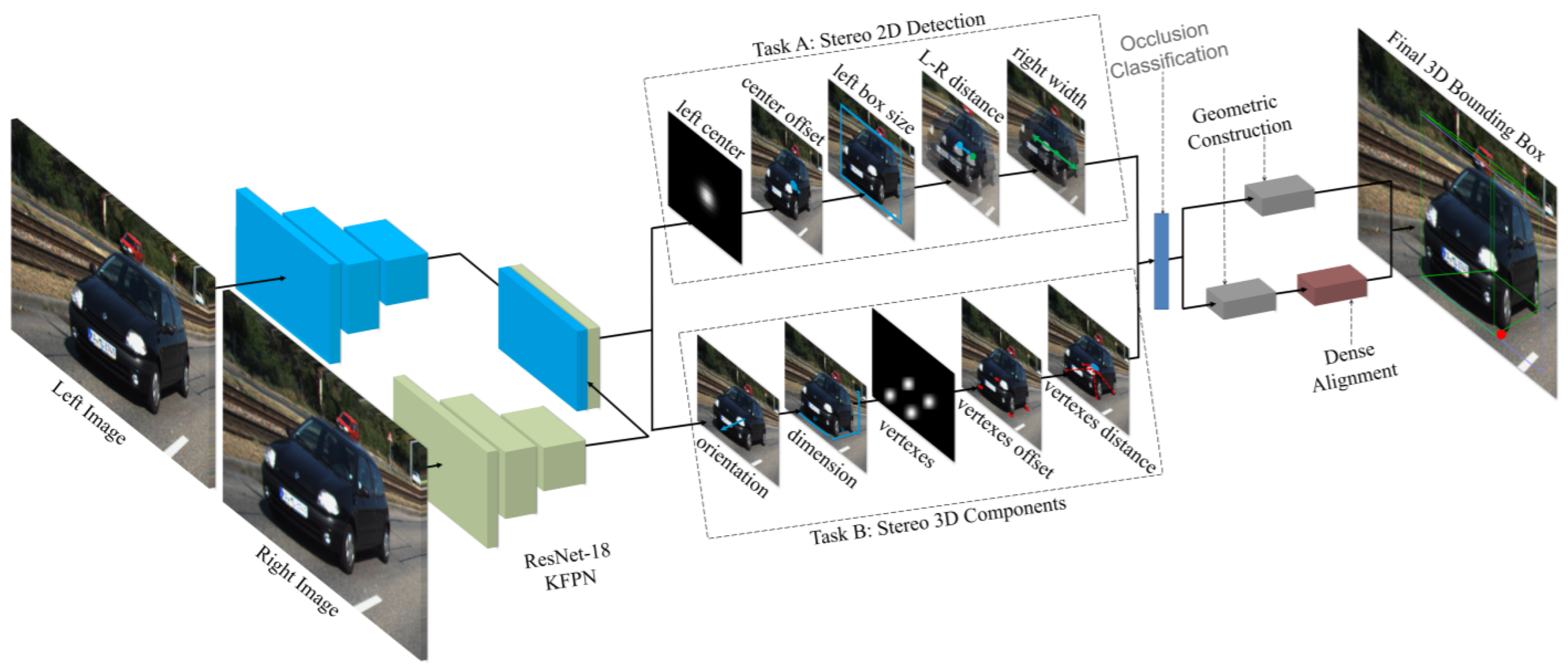

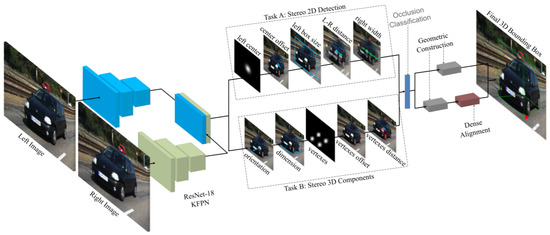

Inspired by CenterNet [106] and Stereo R-CNN [59], Shi et al. [60] proposed a 3D object detection method to recognize the target by extracting semantic and geometric features from stereo RGB images without relying on depth information. It used 2D left-right boxes and predicted four semantic key points of the object’s 3D BBoxes while optimizing the position of 3D BBoxes using a photometric alignment module. Its network, shown in Figure 10, was built on CenterNet and extracted features from the left and right images using a weight-sharing backbone with 10 output sub-branches. It performed two tasks. The first task was stereo 2D detection, in which five sub-branches estimated the center, offset, and BBox of the left object. The second task was the stereo 3D component, in which five sub-branches estimated the dimension, orientation, vertices, and center distance of 3D BBoxes for left objects.

Figure 10.

Network architecture of Stereo CenterNet [60] with 10 outputs and sub-branches for two tasks and the estimated 3D BBoxes.

3.1.2. LiDAR-Based 3DOR

LiDAR gives accurate depth information of the environment for 3DOR by discretizing the whole 3D space [107]. The major challenges in applying DL-based approaches to LiDAR-based 3D object recognition are the unordered, irregular, discrete, and sparse data representation of PCs, which makes it difficult to process point cloud data directly with CNN-based models. This is because CNN models rely on the convolution operation, which takes ordered, regular, and structured data. More recently, literature has emerged with different methods to address PC data processing challenges using CNNs for 3D recognition. This section divides DL-based 3D recognition methods for LiDAR point clouds into three categories: structured (ordered), unstructured (unordered), and graph-based representation.

- (i) Structured Representation for 3DOR

This section discusses 2D image grid-based and 3D voxel grid-based representations for LiDAR-based 3DOR via deep-learning approaches.

- (a) 2D Image Grid-Based 3DOR

Much of the current literature on 3DOR pays particular attention to projecting discrete 3D PC data onto a 2D grid representation for use with DL-based models. Table 10 gives a brief overview of 2D image grid-based 3DOR methods with their current restrictions and research gaps. The studies, along with their advantages and limitations, are discussed in Table 11.

Table 10.

Methodology and Limitation(s): 2D Image Grid-based 3DOR Methods.

Table 11.

Literature Analysis: 2D Image Grid-based 3DOR Methods.

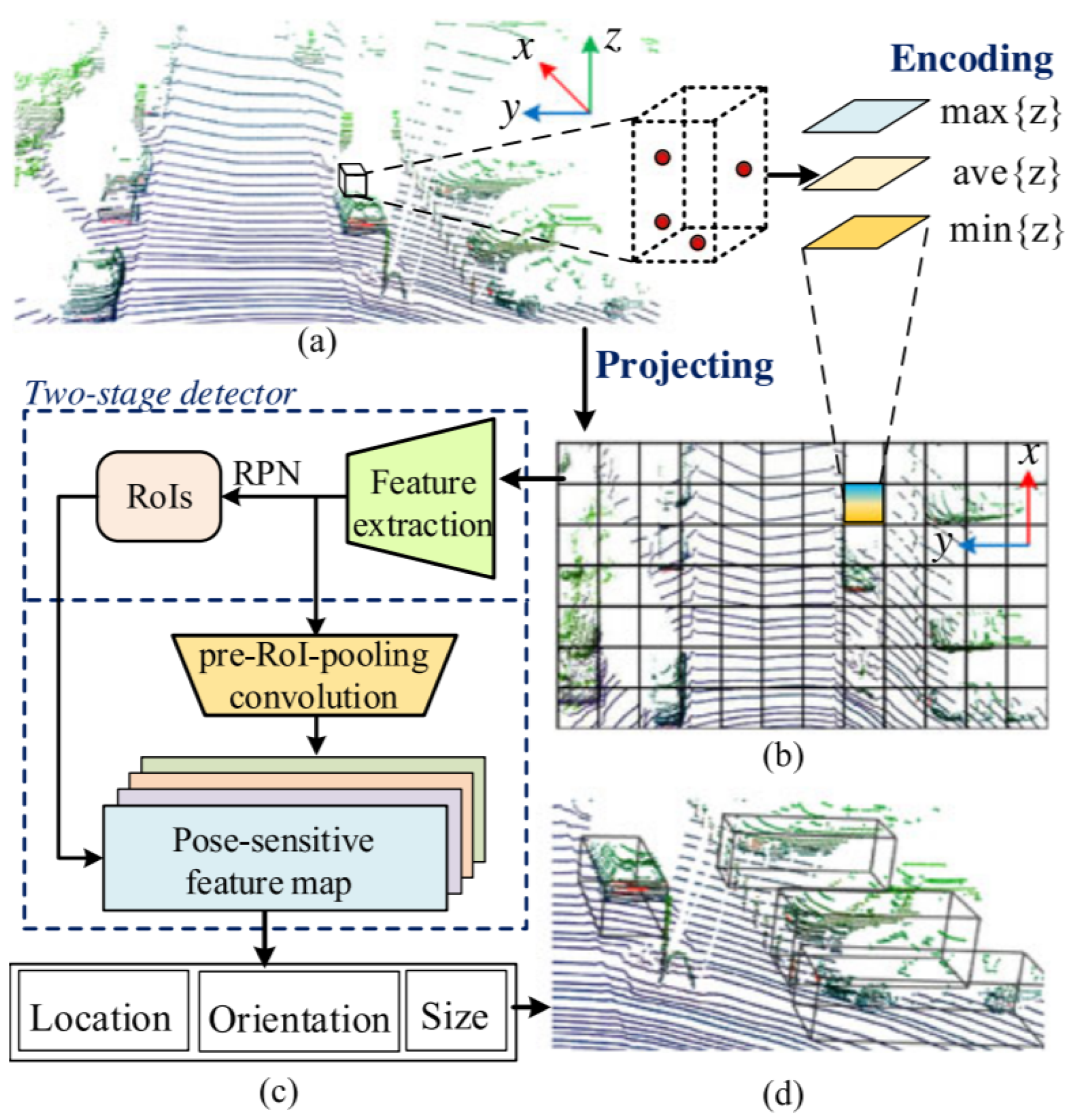

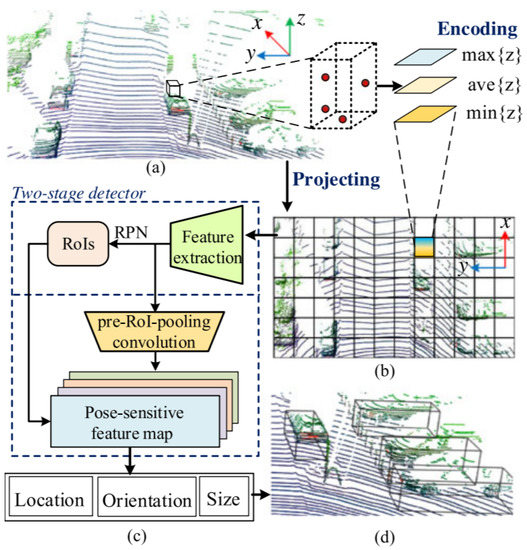

Zeng et al. [61] projected pure LiDAR PC onto a 2D grid and introduced a real-time 3D detection method, RT3D, illustrated in Figure 11, which uses two sub-networks: a region proposal network and a classification sub-network. Its pipeline contained three major steps. First, sparse 3D point clouds were projected onto a 2D grid representation to convert them into the input format of a CNN. Second, height information from the point data was embedded in the 2D grid for 3D object detection. Third, the 2D grid information was passed to a two-stage CNN detector, which generated region proposals.

Figure 11.

The pipeline of RT3D [61]: (a) LiDAR-based 3D point cloud on (b) a depth map encoded with height information of points; (c) a CNN-based two-stage detector is utilized for region proposals generation and their classification on pose-sensitive feature maps; (d) visualization of detected vehicles with orientated 3D BBoxes.
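The first two steps of such a pipeline, projecting sparse points onto a 2D grid and embedding height information in it, can be illustrated with a minimal sketch. The grid extent, the cell size, and the choice of storing the maximum height per cell are assumptions made for illustration and are not the settings reported for RT3D.

```python
import math


def height_grid(points, x_range=(0.0, 4.0), y_range=(-2.0, 2.0), cell=1.0):
    """Project (x, y, z) points onto a 2D grid that stores the max height per cell.

    The ranges, the cell size, and the max-height encoding are illustrative
    assumptions. Empty cells are marked with None.
    """
    rows = int(math.ceil((x_range[1] - x_range[0]) / cell))
    cols = int(math.ceil((y_range[1] - y_range[0]) / cell))
    grid = [[None] * cols for _ in range(rows)]
    for x, y, z in points:
        inside_x = x_range[0] <= x < x_range[1]
        inside_y = y_range[0] <= y < y_range[1]
        if not (inside_x and inside_y):
            continue  # the point falls outside the grid
        r = int((x - x_range[0]) // cell)
        c = int((y - y_range[0]) // cell)
        if grid[r][c] is None or z > grid[r][c]:
            grid[r][c] = z
    return grid


# Hypothetical toy cloud: two points share a cell, one lies outside the grid.
cloud = [(0.5, -1.5, 0.2), (0.7, -1.2, 1.4), (3.9, 1.9, 0.8), (9.0, 0.0, 1.0)]
grid = height_grid(cloud)
occupied = sum(1 for row in grid for value in row if value is not None)
```

Most cells stay empty in this toy grid, which mirrors the sparsity problem that grid-based detectors have to handle.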

The network was initialized with a pre-trained ResNet-50 model [108]. It adopted Faster-RCNN [109] techniques to generate region proposals on the feature map and introduced pre-RoI pooling convolution before the RoI operations to improve computational efficiency. Subsequently, classification and location regression were performed for each RoI to estimate the location, orientation, and size with a pose-sensitive feature map. Two problems related to the sparsity of PC were addressed: first, empty anchors that contained no data on the feature maps were deleted; second, hard examples were selected automatically using online hard example mining [110] to provide efficient and effective end-to-end network training.

Most PC-based 3D object detection methods use anchor-based detection methods, which have two major disadvantages. First, these methods require Non-Maximum Suppression (NMS) to filter redundant, overlapped, and imprecise bounding boxes (BBoxes), which causes non-trivial computational costs. Second, they require tricky anchor tuning, which is time-consuming.
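To make the cost of this post-processing step concrete, the following is a minimal sketch of greedy NMS. It assumes axis-aligned boxes given as (x1, y1, x2, y2), whereas 3D detectors usually work with rotated boxes, and the IoU threshold is a hypothetical value.

```python
def iou(a, b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the best-scoring box, drop boxes that overlap it too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Every candidate is compared against all boxes kept so far.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


# Hypothetical detections: the first two boxes overlap heavily.
boxes = [(0.0, 0.0, 2.0, 2.0), (0.1, 0.0, 2.1, 2.0), (5.0, 5.0, 7.0, 7.0)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
```

The pairwise comparisons grow quadratically with the number of candidate boxes in the worst case, which is the overhead that anchor-free designs try to avoid.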

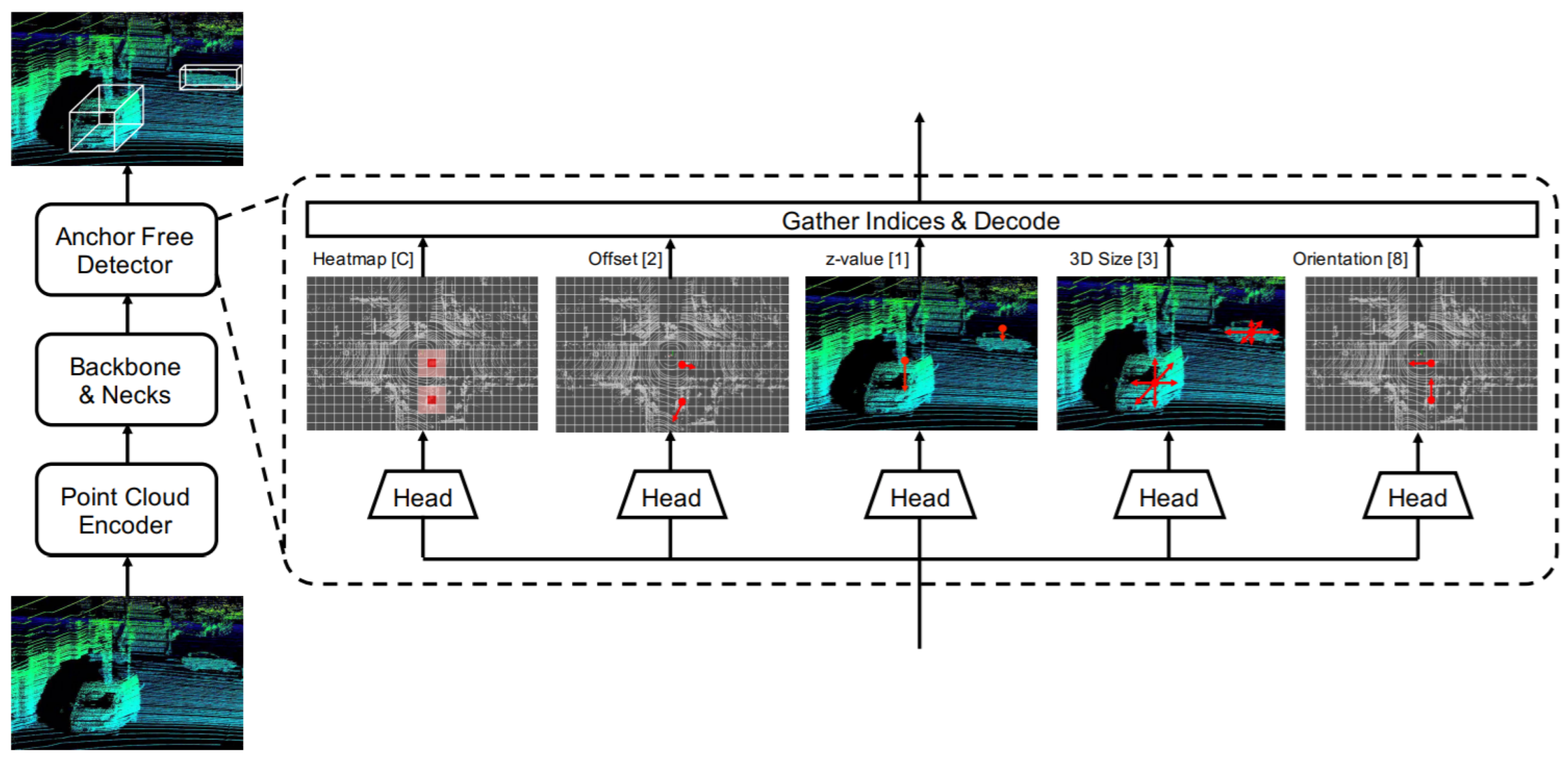

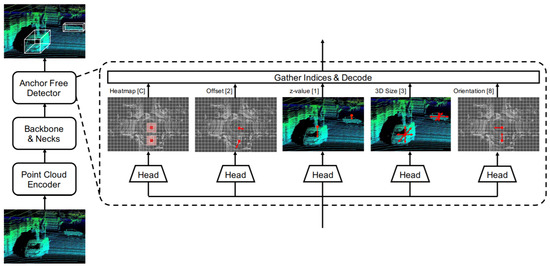

In this direction, Ge et al. [62] proposed AFDet, the first anchor-free and NMS-free one-stage 3D object detector for PC with straightforward post-processing. Its detection pipeline consisted of four major components: a point cloud encoder, a backbone, necks, and an anchor-free detector, as shown in Figure 12. It encoded the PC into image-like feature maps in bird’s eye view (BEV) using [111]. Then, it used a CNN with up-sampling necks, which were connected to five different heads that predicted object centers in the BEV plane using a key point heat map and regressed the 3D BBoxes. The head outputs were combined to generate the detection results. During inference, every heat map peak was selected by a max pooling operation, which eliminated the need for NMS.

Figure 12.

3D detection pipeline of AFDet [62]. The numbers in square brackets represent output channels of the last convolution layer, and C indicates the number of categories.
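The idea of selecting heat map peaks with a max pooling operation instead of NMS can be sketched as follows. This is a minimal illustration, not the AFDet implementation: the 3 × 3 window, the score threshold, and the toy heat map are assumptions.

```python
def heatmap_peaks(heatmap, threshold=0.3):
    """Return (row, col, score) for cells that are the maximum of their 3x3 window.

    This mimics comparing a heat map with its 3x3 max-pooled version:
    a cell survives only if pooling leaves its value unchanged.
    Tied maxima inside one window would all be kept.
    """
    rows, cols = len(heatmap), len(heatmap[0])
    peaks = []
    for r in range(rows):
        for c in range(cols):
            score = heatmap[r][c]
            if score < threshold:
                continue
            window = [
                heatmap[rr][cc]
                for rr in range(max(0, r - 1), min(rows, r + 2))
                for cc in range(max(0, c - 1), min(cols, c + 2))
            ]
            if score >= max(window):
                peaks.append((r, c, score))
    return peaks


# Hypothetical BEV center heat map for one object category.
heatmap = [
    [0.1, 0.2, 0.1, 0.0],
    [0.2, 0.9, 0.3, 0.0],
    [0.1, 0.3, 0.2, 0.6],
    [0.0, 0.0, 0.1, 0.4],
]
peaks = heatmap_peaks(heatmap)
```

Each surviving peak corresponds to one object center, so no pairwise box comparison is needed afterwards.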

- (b) 3D Voxel Grid-Based 3DOR

Many LiDAR-based 3DOR techniques use a voxel grid representation [112]. Table 12 briefly describes the methodology, limitations, and research gap, and Table 13 summarizes the reviewed models with their advantages and limitations.

Table 12.

Methodology and Limitation(s): 3D Voxel Grid-based 3DOR Methods.

Table 13.

Literature Analysis: 3D Voxel Grid-based 3D Object Recognition Methods.

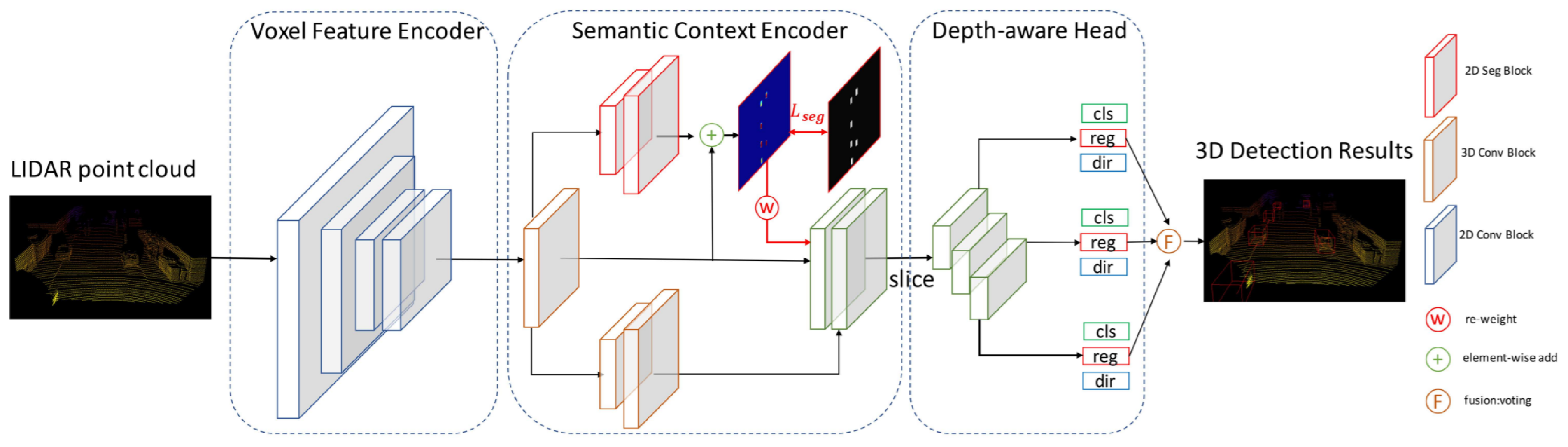

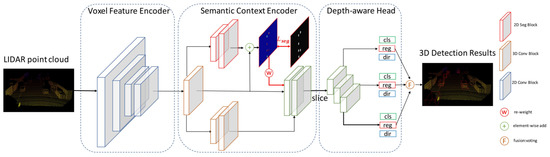

LiDAR PC-based 3D vehicle detection is important for obstacle avoidance in real-world robotics applications, such as autonomous driving. The semantic context information in LiDAR-based sensors is not deeply explored in the literature. Therefore, despite significant progress, vehicle ambiguity and the varying distribution of PC across different depths are two main problems. Yi et al. [63] addressed these issues by developing free-of-charge BEV semantic masks and a depth-aware learning head in the fully convolutional network. They proposed a one-stage detection framework, SegVNet, consisting of three major components: a voxel feature encoder (VFE), semantic context encoder (SCE), and depth-aware head as shown in Figure 13.

Figure 13.

The SegVNet [63] with major components VFE, SCE, and depth aware head.

They introduced a VFE for voxelized feature representation of raw PC and developed an SCE that takes the BEV feature maps from the VFE as input and generates semantic context encoded feature maps as output for 3D detection. The SCE shared the VFE feature maps between its two branches: the first was adopted from [113], while the second learned BEV semantic mask predictions. The depth-aware head, consisting of convolution layers with different kernel sizes, was designed to learn distinctive depth-aware features across different depths in autonomous driving scenarios.

Many recent PC-based 3D detectors are optimized for classes such as cars, pedestrians, and cyclists using multiple models. Running multiple models to obtain the desired detection results requires a large amount of resources, which is not desirable for autonomous driving vehicles with limited resources.

Muramatsu et al. [64] addressed this problem by developing the SECOND-DX model, which supports multi-class LiDAR-based 3D object detection in real time with only a single model. It extended the models of [113,114] and [111] to support three classes: cars, pedestrians, and cyclists. It divided the PC into a 3D spatial grid and extracted fine local features using high-resolution voxels. It contained three sub-networks: the first [111] converted points to voxel-wise representations, the second improved the spatial feature map and encoded it into a 2D feature map, and the last, a region proposal network, handled class probabilities and direction classification.
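Dividing a point cloud into a 3D spatial grid can be illustrated with a short sketch. The voxel size, the dictionary-based storage of non-empty voxels, and the centroid used as a per-voxel feature are assumptions for illustration; learned voxel feature encoders replace the centroid in the reviewed models.

```python
import math
from collections import defaultdict


def voxelize(points, voxel_size=(0.5, 0.5, 0.5)):
    """Group (x, y, z) points by integer voxel index; only non-empty voxels are stored."""
    voxels = defaultdict(list)
    for p in points:
        index = tuple(int(math.floor(p[d] / voxel_size[d])) for d in range(3))
        voxels[index].append(p)
    return dict(voxels)


def voxel_centroids(voxels):
    """A simple hand-crafted voxel feature: the mean of the points in each voxel."""
    return {
        index: tuple(sum(p[d] for p in pts) / len(pts) for d in range(3))
        for index, pts in voxels.items()
    }


# Hypothetical toy cloud: two points fall into the same voxel.
cloud = [(0.1, 0.1, 0.1), (0.4, 0.2, 0.3), (0.6, 0.1, 0.1), (-0.1, 0.0, 0.0)]
voxels = voxelize(cloud)
centroids = voxel_centroids(voxels)
```

A smaller voxel size keeps finer local geometry but increases the number of voxels to process, which is the trade-off behind high-resolution voxel designs.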

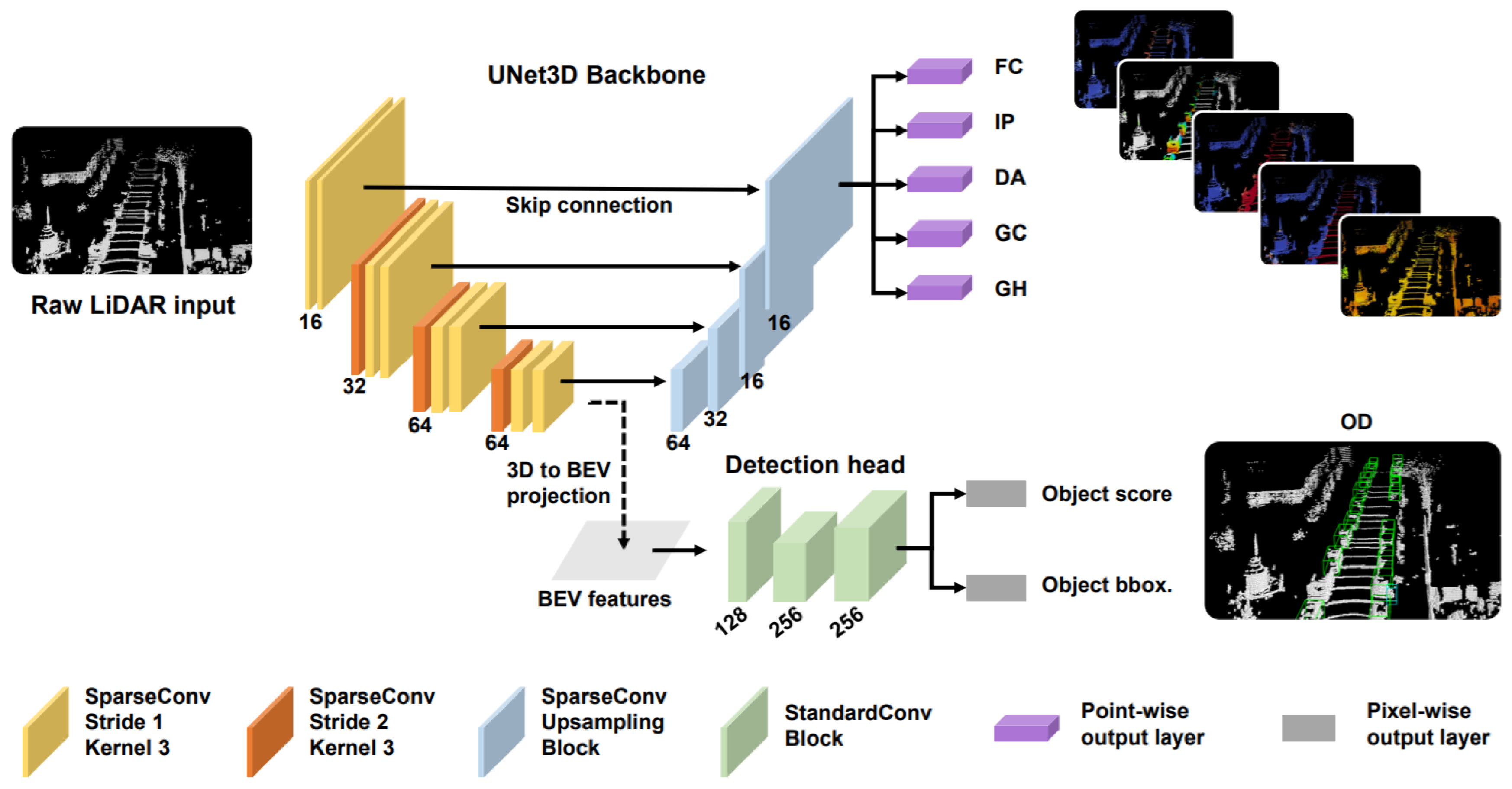

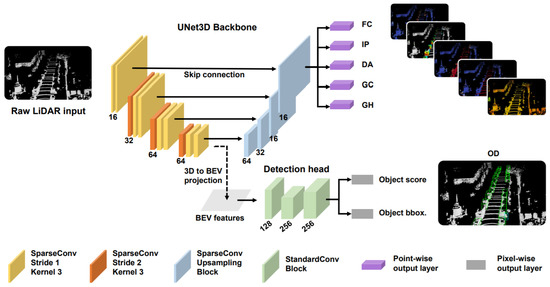

Feng et al. [65] proposed a LiDAR-based multi-task learning network (LidarMTL) to perform six perception tasks in a unified network for 3DOR. Its network architecture, shown in Figure 14, voxelized the 3D space of the LiDAR point cloud into regular voxels and preserved the geometric information well by choosing a proper voxel size. It used a UNet architecture with added task-specific heads and trained the entire network with a multi-task loss. Following [115], they extended the encoder–decoder-based UNet architecture [116] to process 3D LiDAR points efficiently, representing them as voxels and using 3D sparse convolution [113].

Figure 14.

The network [65] is based on a UNet backbone with 3D sparse convolution and deconvolution to perform object detection on the Lidar BEV.

- (ii) Unstructured Representation for 3DOR

This section focuses on Point-nets, and we analyze methods with their advantages and limitations in Table 14.

Table 14.

Literature Analysis: PointNet-based 3DOR Methods.

- (a) PointNet-Based 3DOR

Point-nets directly handle the irregularities by taking raw LiDAR PC data as the input. This aims to reduce the information loss in 3D space caused by projection or quantization methods. Table 15 illustrates the brief methodology, limitations, and research gaps of PointNet-based 3DOR techniques, while Table 14 gives a literature analysis of the reviewed studies.

Table 15.

Methodology and Limitation(s): PointNet-based 3DOR Methods.

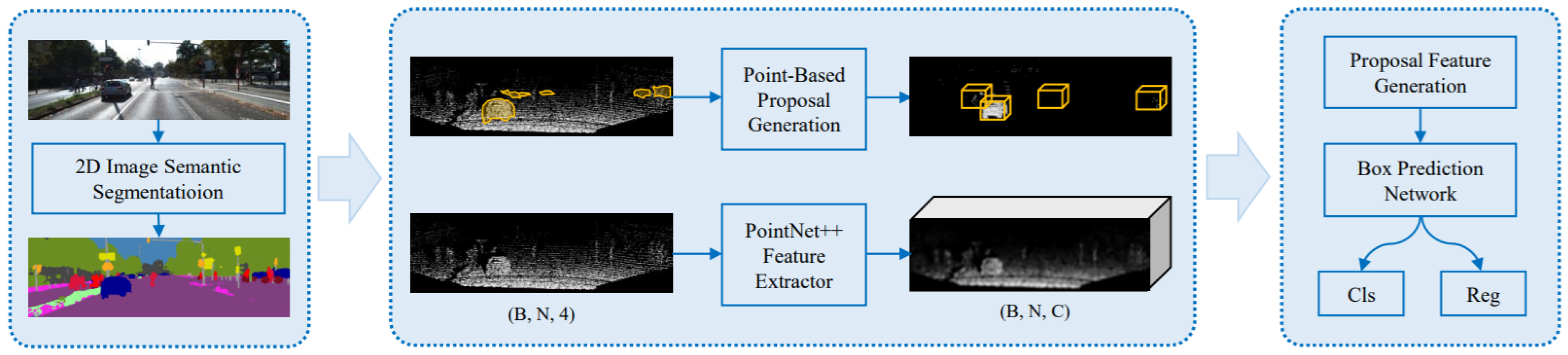

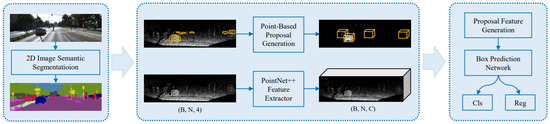

Most of the existing methods encode 3D PCs into 2D grid images by projection [73,117] or into a 3D voxel grid [114,118] and then apply a CNN. However, the detection performance obtained through these representations is not always optimal. Moreover, a limitation of these methods is their dependency on the detection results of 2D image detectors, which do not give satisfactory performance in large, cluttered environments. Yang et al. [66] addressed these issues by proposing the IPOD framework for 3D object detection on raw PC, which provided a high recall rate. It seeded object proposals on all points of the cloud without losing localization information.

It also extracted the local and context information of the proposals, which was fed to PointNet for result generation through inference. It produced 3D BBoxes from point-based object proposals and introduced techniques for ambiguity reduction. Its network architecture, shown in Figure 15, consisted of a backbone network based on PointNet++ [119], a proposal feature generation module with two parts for feature map extraction, and a BBox prediction network for predicting the object’s size, shape, class, and orientation. It followed [114,120] in training one network for cars and another for cyclists and pedestrians. 3D object detection from raw PC has been deeply investigated compared to other 3D detection methods.

Figure 15.

Illustration of IPOD [66] consisting of a sub-sampling network, point-based proposal generation, and the components of network architecture, which classifies and regresses the generated proposals.

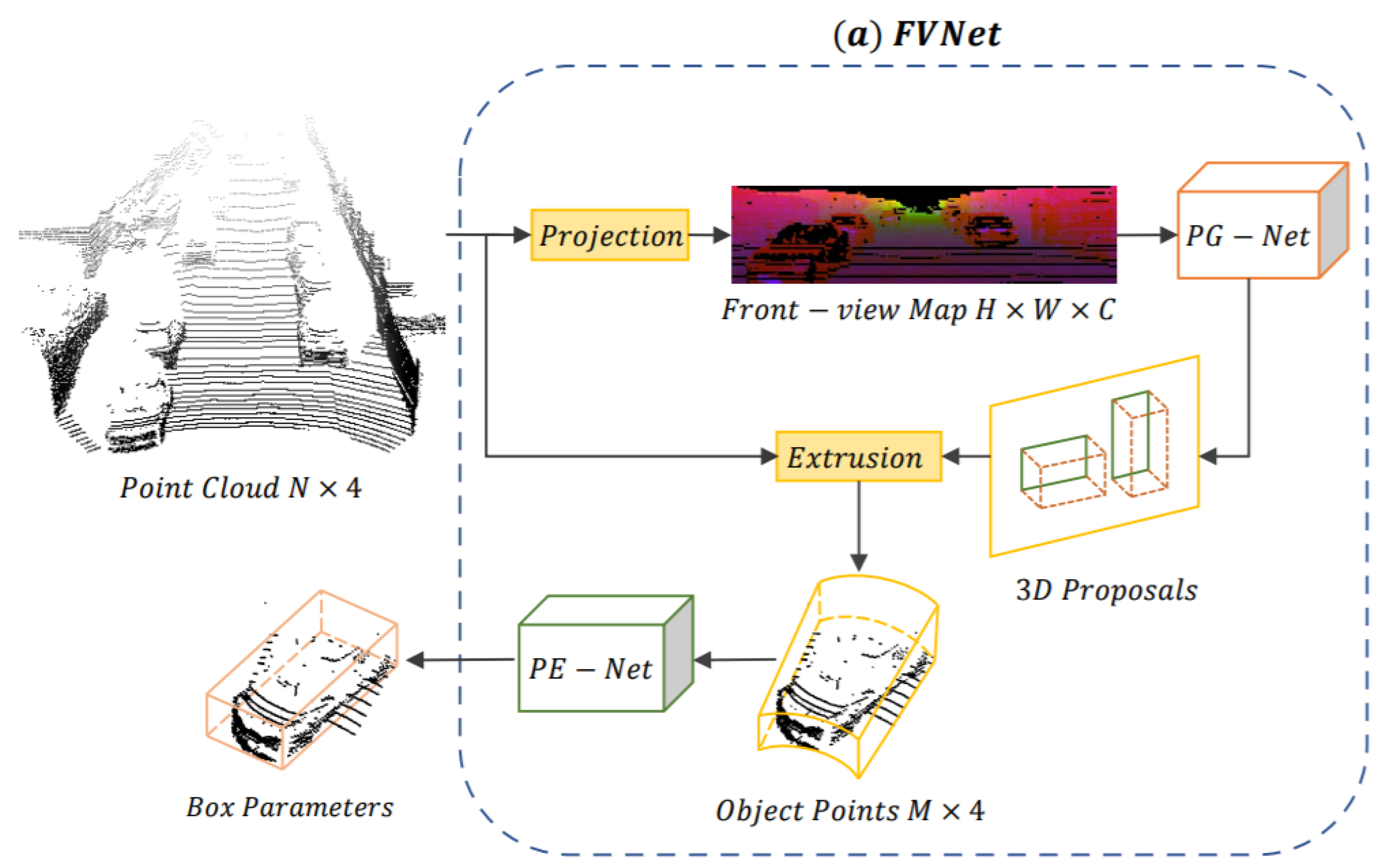

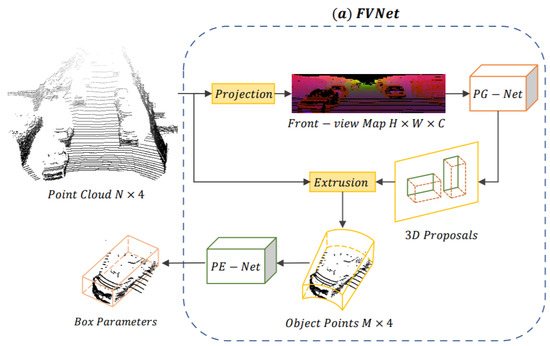

In a seminal study, Zhou et al. [67] presented the FVNet framework for 3D object detection and front-view proposal generation from raw PC. Learning directly from PC is challenging because its points are sparse and irregular. FVNet circumvented this issue by projecting the raw PC onto a cylindrical surface to generate front-view feature maps, which took advantage of both the 2D image grid and the 3D voxel grid while retaining the rich information of the 3D PC. The architecture of FVNet, shown in Figure 16, was composed of two sub-networks. It used a proposal generation network (PG-Net) to predict region proposals from the generated maps.

Figure 16.

The network pipeline of FVNet [67], composed of PG-Net and PE-Net.

Then, these maps were used for the prediction of 3D region proposals. Finally, the parameter estimation network (PE-Net), which extended the PointNet [121] structure, was used to extract point-wise features and regress the 3D BBox parameters.
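Projecting a raw point cloud onto a cylindrical surface can be sketched in a few lines. The form below is one common cylindrical projection and is not taken from the FVNet paper: the angular and vertical resolutions, the minimum height, and the choice of storing the radial distance of the closest point per pixel are all assumptions.

```python
import math


def cylindrical_pixel(point, d_theta_deg=1.0, d_z=0.5, z_min=-2.0):
    """Map an (x, y, z) point to (row, col, rho) on a cylinder around the z axis."""
    x, y, z = point
    theta_deg = math.degrees(math.atan2(y, x))  # azimuth in (-180, 180]
    n_cols = int(round(360.0 / d_theta_deg))
    # The modulo wraps the azimuth of exactly 180 degrees back to column 0.
    col = int(math.floor((theta_deg + 180.0) / d_theta_deg)) % n_cols
    row = int(math.floor((z - z_min) / d_z))
    rho = math.hypot(x, y)  # distance from the cylinder axis
    return row, col, rho


def front_view_map(points, **kwargs):
    """Keep, for every front-view pixel, the radial distance of the closest point."""
    front_view = {}
    for p in points:
        row, col, rho = cylindrical_pixel(p, **kwargs)
        if (row, col) not in front_view or rho < front_view[(row, col)]:
            front_view[(row, col)] = rho
    return front_view


# Hypothetical toy cloud: the first two points share one front-view pixel.
cloud = [(10.0, 0.0, -1.0), (20.0, 0.0, -1.0), (0.0, 5.0, 0.25)]
front_view = front_view_map(cloud)
```

The result is a regular 2D map that a CNN can process, while each pixel can still be traced back to the 3D point that produced it.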

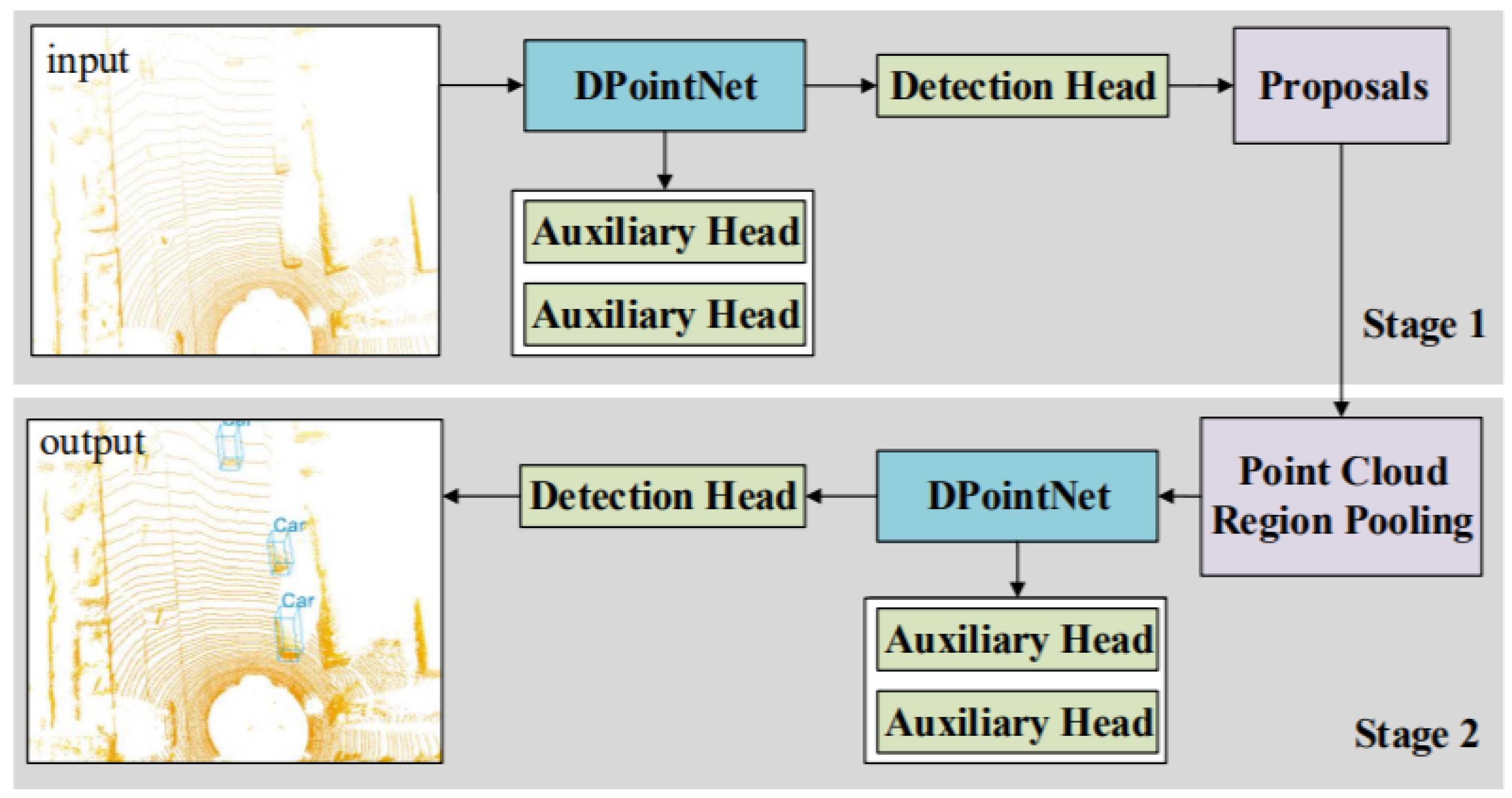

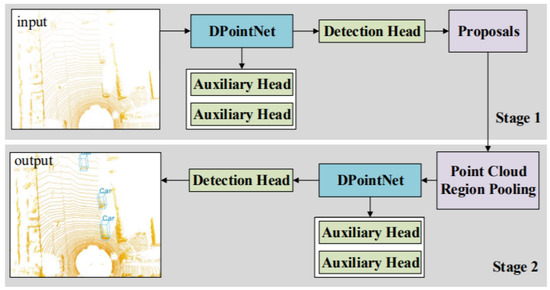

Li et al. [68] proposed the density-oriented PointNet (DPointNet), shown in Figure 17, to overcome the inhomogeneity of point clouds for 3DOR and verified its effectiveness by applying it to PointRCNN [122]. The network was built from two kinds of layers: an SG (Sampling and Grouping) layer and several FA (Fusion and Abstraction) layers. The SG layer sampled the seeds and their neighbors, and the FA layers fused and abstracted the seed features. The seeds were sampled from the input point cloud using farthest point sampling, and repeated random sampling was used if the neighbors were not sufficient.

Figure 17.

The architecture with DPointNet [68] detector consisting of two stages for 3D proposal generation and proposal refinement.

The seed neighbors were divided into several groups according to the number of FA layers. The next step was performed by the FA layers, which used all the neighbor information from the SG layer. The FA layers were designed around three schemes for fusing and abstracting information for each seed. First, the feature appending scheme transformed the features of all groups in the FA layer. Second, the coordinate concatenation scheme adopted the ‘concatenation’ mechanism for fusion using coordinate information. Third, the feature concatenation scheme combined the first and second schemes for sufficient feature extraction and feature fusion. Auxiliary heads were applied to PointRCNN during the training process.
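Farthest point sampling, which the SG layer uses to pick seeds, can be sketched as follows. This is a generic version of the technique rather than the DPointNet code; the starting index and the toy cloud are assumptions, and the neighbor grouping step is omitted.

```python
def farthest_point_sampling(points, num_seeds, start=0):
    """Iteratively pick the point that is farthest from all seeds chosen so far."""

    def sq_dist(a, b):
        return sum((a[d] - b[d]) ** 2 for d in range(3))

    selected = [start]
    # Squared distance from every point to its nearest selected seed.
    min_dist = [sq_dist(p, points[start]) for p in points]
    while len(selected) < min(num_seeds, len(points)):
        nxt = max(range(len(points)), key=lambda i: min_dist[i])
        selected.append(nxt)
        for i, p in enumerate(points):
            min_dist[i] = min(min_dist[i], sq_dist(p, points[nxt]))
    return selected


# Hypothetical cloud with a dense cluster near the origin and two distant points.
cloud = [
    (0.0, 0.0, 0.0),
    (1.0, 0.0, 0.0),
    (10.0, 0.0, 0.0),
    (0.5, 0.0, 0.0),
    (5.0, 0.0, 0.0),
]
seeds = farthest_point_sampling(cloud, num_seeds=3)
```

Because each new seed maximizes the distance to the existing ones, the seeds spread over the whole cloud instead of concentrating in dense regions.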

- (iii) Graph Representation for 3DOR

Graph-based representation preserves the irregularity of PC. However, only a few studies have investigated graph neural networks for 3D object detection in LiDAR PC. This section first discusses recent graph-based 3DOR methods and then analyzes them with their advantages and limitations as shown in Table 16.

Table 16.

Literature Analysis: Graph-based Representation for 3DOR.

Instead of converting PC data into a grid or voxel representation, Shi et al. [69] proposed Point-GNN, a graph neural network for compact representation of PC in which neighborhood points were linked by graph edges. It enabled accurate single-shot detection of multiple objects with 3D BBoxes in LiDAR PC. The points were aligned by an auto-registration method, while the detection results from different vertices were integrated by box merging and scoring operations.
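A graph in which neighboring points are linked by edges can be built with a fixed-radius rule, sketched below. The radius value and the brute-force pairwise search are assumptions for illustration; practical systems use spatial indexing to avoid comparing all pairs.

```python
def radius_graph(points, radius):
    """Return directed edges (i, j) between distinct points closer than `radius`."""
    r2 = radius * radius
    edges = []
    for i, a in enumerate(points):
        for j, b in enumerate(points):
            if i == j:
                continue
            if sum((a[d] - b[d]) ** 2 for d in range(3)) <= r2:
                edges.append((i, j))
    return edges


def neighbors(edges, num_points):
    """Adjacency list built from the edge list."""
    adjacency = [[] for _ in range(num_points)]
    for i, j in edges:
        adjacency[i].append(j)
    return adjacency


# Hypothetical cloud: two nearby points and one isolated point.
cloud = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (5.0, 0.0, 0.0)]
edges = radius_graph(cloud, radius=1.5)
adjacency = neighbors(edges, len(cloud))
```

Unlike a grid or voxel representation, the graph keeps the original point positions, and the vertex features can then be refined by exchanging information along the edges.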

The overall architecture of Point-GNN contains three components. The first is graph construction, in which a voxel down-sampled point cloud was used to reduce the density of the point cloud. The second is a GNN of T iterations, in which a graph convolutional neural network was designed to refine the vertex states. The third is bounding box merging and scoring, in which the merged boxes were calculated by considering the entire overlapped box cluster.

Existing 3D object detectors recognize objects individually without considering their relationships in learning and inference. Feng et al. [70] presented a 3D relation graph network that builds an object–object relation model by learning pseudo centers and direction vectors to improve the prediction accuracy. It was composed of two main parts. In the first, 3D BBoxes were predicted by the proposal generation module directly on the PC with a PointNet++ [119] backbone. The second introduced the relation module, which used point attention pooling to convert the point features into a uniform vector and performed relational reasoning using a 3D object–object relation graph. It applied a 3D NMS post-processing step to extract high-quality 3D BBox candidates.

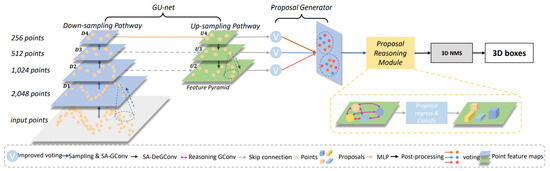

3D object recognition requires both geometric and semantic information (e.g., the object’s shape). However, many PC-based object detectors do not effectively capture the semantic characteristics of PCs. In this direction, Chen et al. [71] introduced the hierarchical graph network (HGNet), shown in Figure 18, which processes raw PCs using multi-level semantics for 3D object detection. It contained three main parts: a graph convolution-based U-shape network called GU-net, a proposal generator, and a proposal reasoning module (referred to as the ProRe Module).

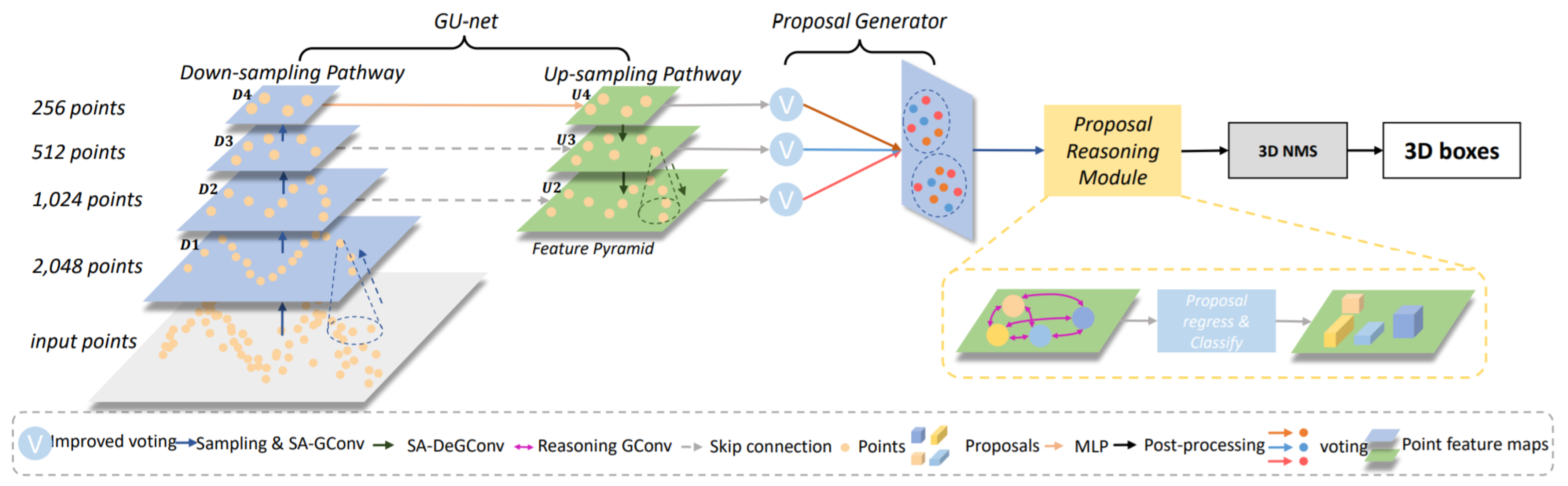

Figure 18.

3D object detection pipeline of HGNet [71] framework with three main components: GU-net, Proposal Generator, and ProRe Module.

It depicted the shape information of objects by extracting local features from the geometric positions of the points. It employed shape-attentive graph convolution in a U-shape network to map multi-level features through the voting module, and used the ProRe Module to reason about proposals for BBox prediction by taking advantage of global scene semantics. The proposal features were updated by GConv, which combined the global scene semantics and included the proposals’ relative positions as an attention map.

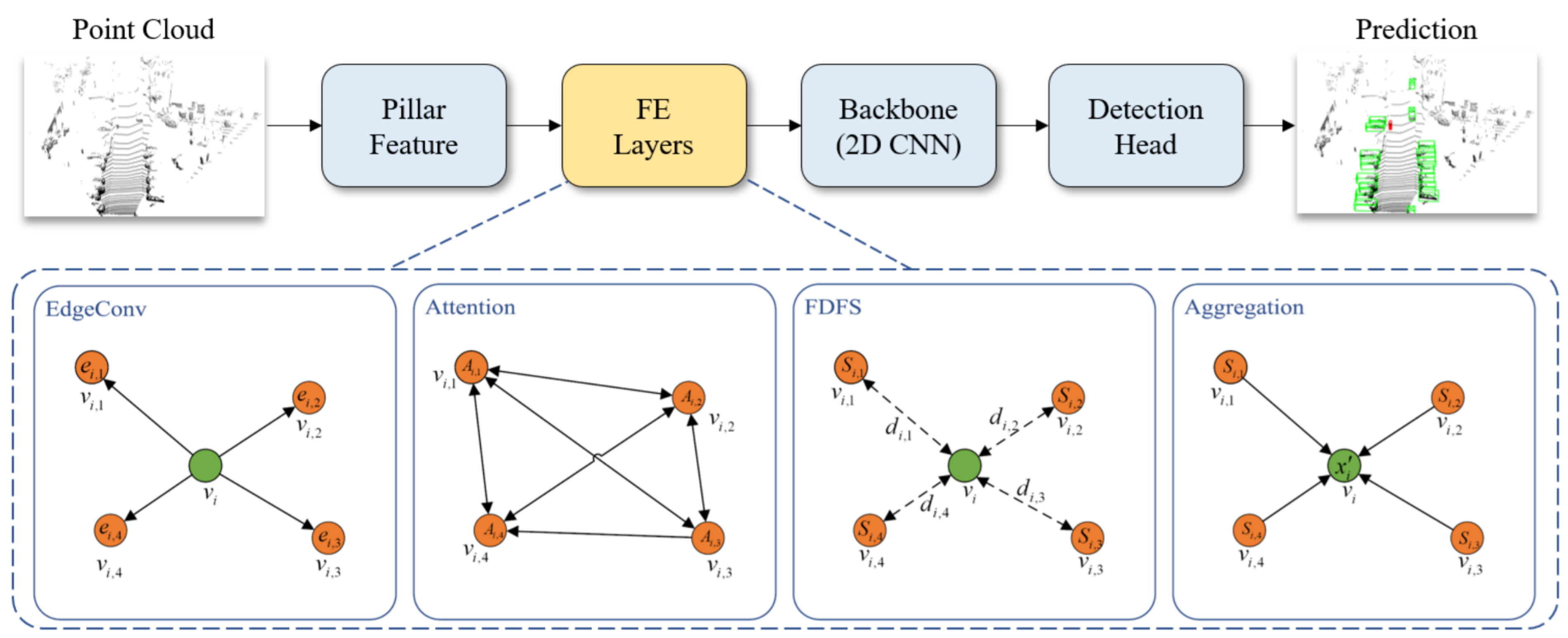

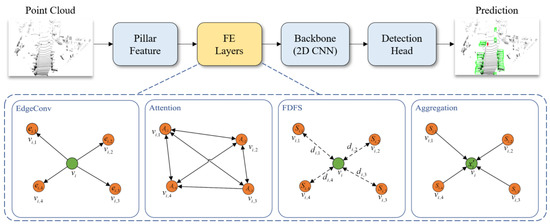

Wang et al. [72] overcame the inherent drawbacks of partition-based methods, which limit the 3DOR of small objects, by proposing the spatial-attention graph convolution (S-AT GCN), which includes EdgeConv, attention, far-distance feature suppression, and aggregation steps, as shown in Figure 19. During the partition operation, a single instance (e.g., a pedestrian) can be sliced into several parts, which is called the partition effect. The partition effect degrades the performance of 3DOR, particularly in the case of small object detection.

Figure 19.

PointPillars [72] with a feature enhancement layer.

An extra layer, called the feature enhancement (FE) layer, was included after the partition operation. S-AT GCN blocks were cascaded to form the FE layer, and its effectiveness was demonstrated by adding it to the baseline model, PointPillars [121]. A spatial attention mechanism for the GCN was used to extract geometric information, which enabled the network to extract more accurate foreground features.

3.1.3. LiDAR-Camera Fusion-Based 3DOR

This section discusses 3D object detection based on camera-LiDAR fusion [123] using DL approaches to overcome the limitations and uncertainties of a single sensor. Camera-LiDAR fusion has become a practical approach for 3DOR [124]. Relying on a single sensor can be risky for the accurate understanding of the surrounding environment. Therefore, it is advantageous to equip robotic systems with a second sensor to achieve robust environment perception for the detection of 3D objects. To this end, sensor fusion, which leverages the data derived from multiple sensors and gives less uncertain information than an individual sensor, has become an emerging research area. Table 17 presents the methodology and limitations along with the research gap of camera-LiDAR fusion-based 3DOR techniques.

Table 17.

Methodology and Limitation(s): Camera-LiDAR fusion-based 3DOR Methods.

The fusion approaches can be divided into three categories. Early fusion (EF), also called data fusion, first combines the inputs from multiple sensors into a new representation, which is then used for transformations (e.g., convolutions). Late fusion (LF), also known as decision fusion, first transforms the inputs of each sensor and then combines the results. Deep fusion (DF), or middle fusion (MF) [125], is a combination of EF and LF. We review some camera-LiDAR fusion methods and present their literature analysis in Table 18.
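The difference between early and late fusion lies in the order of combining and transforming, which the toy sketch below makes explicit. The one-neuron "network", the weights, and the averaging rule used to combine the late-fusion outputs are hypothetical and stand in for real convolutional branches.

```python
def encode(features, weights):
    """Toy stand-in for a network branch: a weighted sum followed by ReLU."""
    return max(0.0, sum(w * f for w, f in zip(weights, features)))


def early_fusion(camera, lidar, weights):
    """Combine the raw inputs first, then transform the joint representation."""
    fused_input = list(camera) + list(lidar)
    return encode(fused_input, weights)


def late_fusion(camera, lidar, camera_weights, lidar_weights):
    """Transform each modality separately, then combine the two outputs."""
    camera_out = encode(camera, camera_weights)
    lidar_out = encode(lidar, lidar_weights)
    return 0.5 * (camera_out + lidar_out)


# Hypothetical feature vectors and weights.
camera = [1.0, 2.0]
lidar = [2.0]
early = early_fusion(camera, lidar, weights=[0.5, -1.0, 1.0])
late = late_fusion(camera, lidar, camera_weights=[0.5, -1.0], lidar_weights=[1.0])
```

With identical weights the two schemes give different outputs here, because in late fusion the nonlinearity is applied to each modality before the modalities can interact.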

Table 18.

Literature Analysis: Camera-LiDAR Fusion-based 3D Object Recognition Methods.

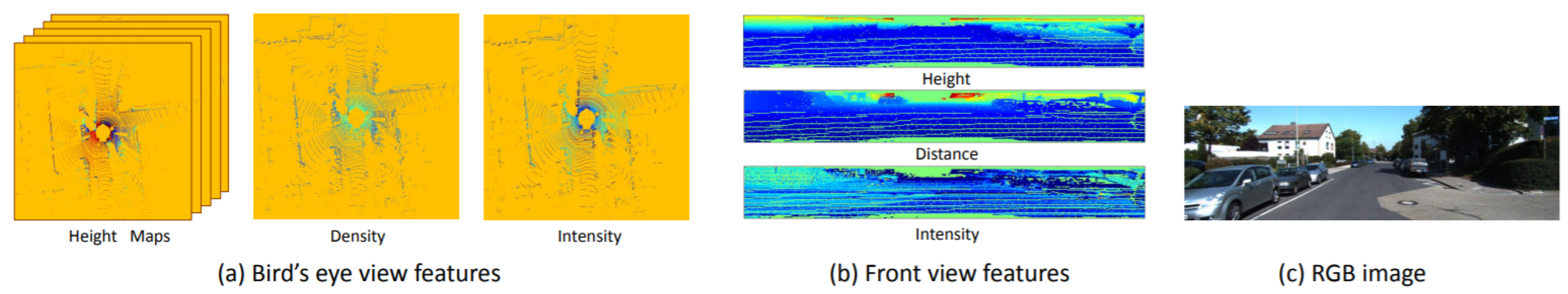

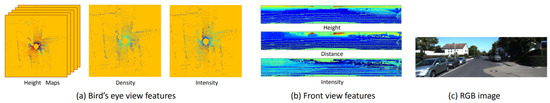

Fusion approaches for 3D object detection are either very complicated or rely on late fusion; therefore, they do not provide interaction between modalities at the early stages. In this direction, Chen et al. [73] proposed a multi-view 3D (MV3D) network that takes a bird’s eye view and a front view of the LiDAR point cloud and an image as input, as shown in Figure 20. The bird’s eye view representation was encoded by height, intensity, and density, while complementary information was provided by the front view representation. The network fused the LiDAR PC and RGB camera images and predicted 3D BBoxes.

Figure 20.

The input features of the MV3D [73] network.

MV3D was composed of two sub-networks for the generation of 3D object proposals from BEV PC representation and fusion of multi-view features. It provided a deep fusion scheme after region proposal for combining region-wise features and enabled intermediate layer interaction. MV3D used 3D proposals to support different modalities and performed 3D box regression for accurate detection of objects, location, orientation, and size in 3D space.

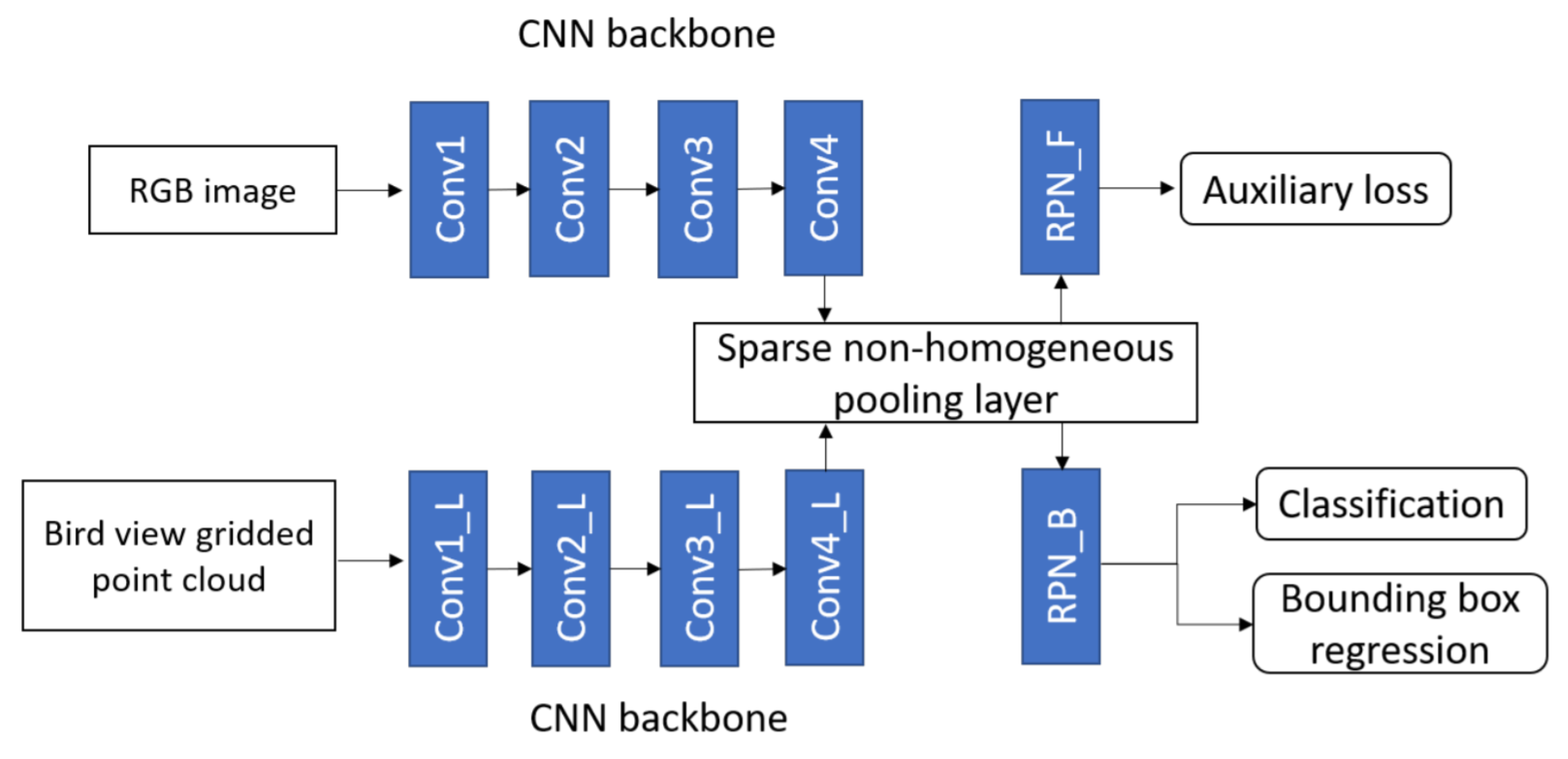

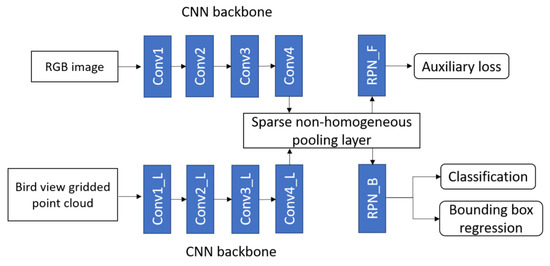

Wang et al. [74] used a deep CNN in a camera-LiDAR fusion architecture to detect 3D objects in autonomous driving scenarios and efficiently transformed features between the BEV and the front view by developing a sparse non-homogeneous pooling layer. The main idea was to transform feature maps between views using the point cloud and matrix multiplication. The structure of the one-stage fusion-based detection network is shown in Figure 21; it contained two fully convolutional backbones for the image and LiDAR units.

Figure 21.

The fusion-based one-stage object detection [74] network.

An RPN, similar to those of many camera-based one-stage detectors, was used in the image convolutional network. However, the region proposal was not used during testing. An auxiliary loss was applied to obtain supervision from the labels and the 3D proposals in the front view. The two views were mapped to each other through the sparse PC, and a pooling layer performed multi-view fusion before the proposal stage by transforming the entire feature map. The one-stage detector was built with two kinds of CNN backbone: VGG for camera-LiDAR, with a feature map down-sampled four times for BEV and eight times in the front view; and MS-CNN [126] for camera-VoxelNet [114], with a feature map down-sampled two times for BEV and eight times in the front view.

Roth et al. [75] performed deep end-to-end 3D person detection with a camera and LiDAR PC, using a deep CNN to estimate the 3D location and extent of people in automotive scenes. Its architecture refined 2D anchor proposals with a region proposal network (RPN) and a subsequent detection network. It extracted high-level features from camera images using a VGG-like CNN, obtained PC features through Voxel Feature Encoders [114], and performed end-to-end learning. The deep CNN learned low-level features from the camera images and 3D LiDAR point clouds, fused the high-level representations of both modalities, and passed them to the regression model as input for estimating the 3D person BBoxes.

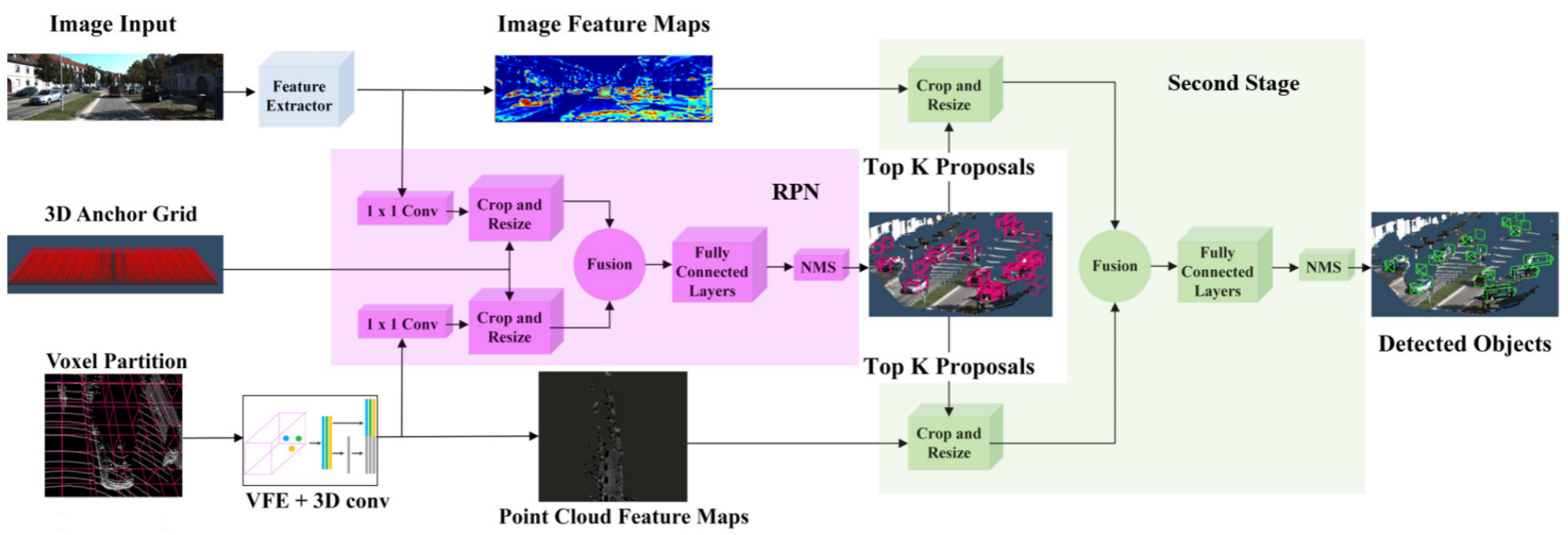

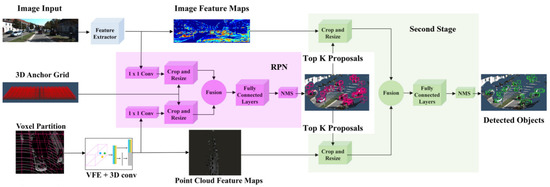

Figure 22 illustrates the network architecture, which was inspired by AVOD [120]. It adopted the VGG16 network to extract image features, while features from the point cloud were extracted using voxel partitions, to which VFE layers and 3D convolutions were applied. The size of the feature map was reduced by applying a 1 × 1 convolution in the RPN. The proposals were obtained by projecting 3D anchors onto the feature map. The features from both modalities were fused after resizing, and the object’s location was estimated by applying a fully convolutional neural network. In the second stage, the best proposals were cropped from the full feature maps and fused. Fully connected layers on the fused crops implemented the object detection layers. This allowed the end-to-end network to estimate the 3D locations of persons from camera image and LiDAR point cloud data.

Figure 22.

Aggregate view object detection [120] network pipeline for 3D object detection in the context of autonomous driving.

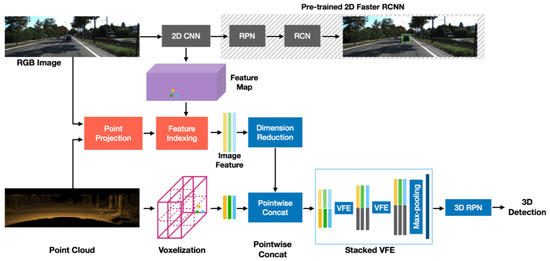

Sindagi et al. [76] extended VoxelNet [114] by introducing two fusion techniques. Point fusion, an early-fusion scheme, projected the PC onto the image feature space using a known calibration matrix, extracted features using a 2D detector, and performed point-level concatenation of the image features. Voxel fusion, a late-fusion strategy, projected the non-empty 3D voxels generated by VoxelNet, extracted features in 2D ROIs, and performed voxel-level concatenation of the pooled features.

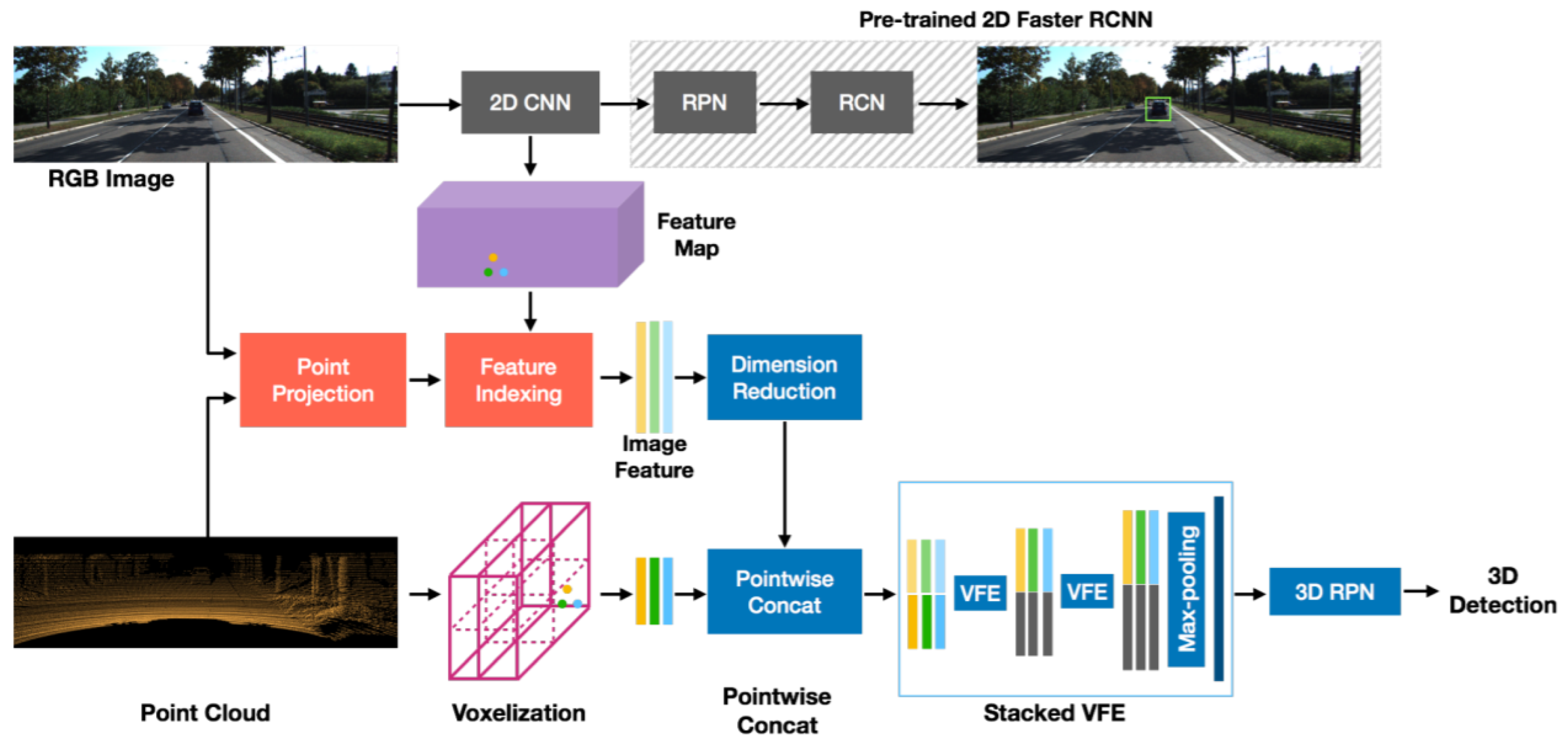

Voxel fusion was a later fusion technique that could handle empty voxels. MVX-Net effectively fused multimodal information. Its PointFusion-based method is illustrated in Figure 23, in which the convolutional filters of Faster RCNN were used to extract the image feature map. The 3D points were projected onto the image using the calibration information, and the corresponding image features were appended to the 3D points. The 3D RPN and voxel feature enhancement layers were used to process the aggregated data and produce the 3D detections.

Figure 23.

The overview of the MVX-Net [76] PointFusion method.
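The projection and point-level concatenation steps can be sketched as follows. This is a minimal illustration of the general idea, not the MVX-Net code: the 3 × 4 projection matrix, the tiny feature map, and the nearest-pixel lookup are assumptions.

```python
import math


def project_point(projection, point):
    """Project a 3D point with a 3x4 matrix; return (u, v) or None if behind the camera."""
    homogeneous = [point[0], point[1], point[2], 1.0]
    u, v, w = (
        sum(projection[r][k] * homogeneous[k] for k in range(4)) for r in range(3)
    )
    if w <= 0:
        return None
    return u / w, v / w


def point_fusion(points, projection, feature_map):
    """Append the image feature found at each point's projected pixel to the point."""
    rows, cols = len(feature_map), len(feature_map[0])
    fused = []
    for p in points:
        uv = project_point(projection, p)
        if uv is None:
            continue
        col, row = int(math.floor(uv[0])), int(math.floor(uv[1]))
        if 0 <= row < rows and 0 <= col < cols:
            fused.append(tuple(p) + tuple(feature_map[row][col]))
    return fused


# Hypothetical calibration: focal length 2, principal point (2, 1).
projection = [
    [2.0, 0.0, 2.0, 0.0],
    [0.0, 2.0, 1.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
]
# Hypothetical 2 x 4 feature map with one channel per pixel.
feature_map = [[(r * 10.0 + c,) for c in range(4)] for r in range(2)]
cloud = [(0.0, 0.0, 4.0), (1.0, 0.0, 2.0), (0.0, 0.0, -1.0)]
fused = point_fusion(cloud, projection, feature_map)
```

Points that project outside the image or lie behind the camera receive no image feature in this sketch and are dropped; a real pipeline would need a policy for such points.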

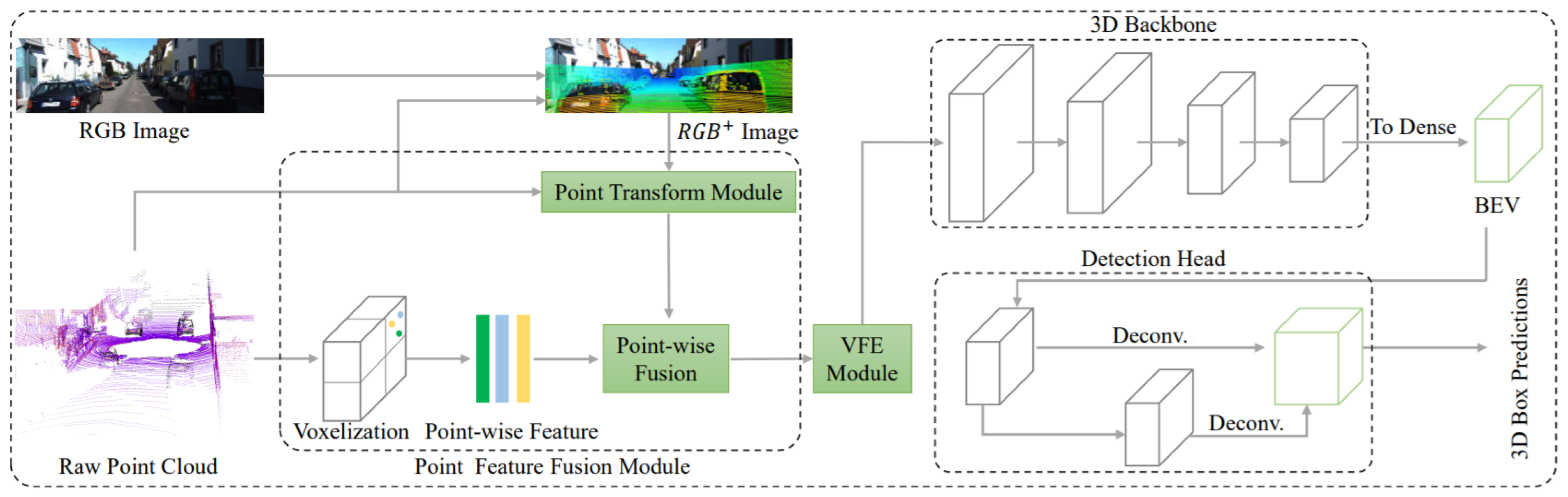

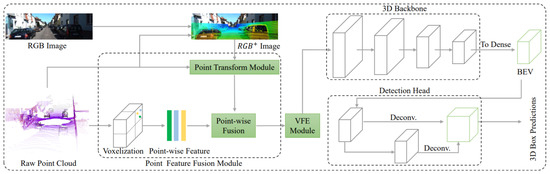

Wen et al. [77] proposed an early-fusion method that uses both camera and LiDAR data for efficient 3DOR with a single-backbone network architecture. It extracted point-wise features from RGB images, which were fed into a 3D neural network. It used two strategies to reduce information loss in the 3D voxel grid-based point cloud representation: the first was using a small voxel size, and the second was projecting point cloud features onto the RGB images. As shown in Figure 24, its one-stage 3D multi-class object detection model had four main components: a point feature fusion module, a voxel feature encoder module, a detection head, and a loss function.

Figure 24.

The pipeline of 3D object detection [77] network for the LiDAR and camera, including input, the point feature fusion module, the 3D backbone, and the detection head.

The point clouds and RGB images were used as inputs, while the predictions of oriented 3D BBoxes for cars, pedestrians, and cyclists were the output. The point feature fusion module extracted point features from the image and fused them with the corresponding point cloud features. The voxel feature encoder module and the 3D backbone produced a high-level representation of the fused point-wise features, and the detection head classified and regressed the 3D BBoxes.

Summary

This subsection summarizes 3DOR according to the studies reviewed in Section 3.1 and listed in Table 9, Table 11, Table 13, Table 14, Table 16, and Table 18. Current applications of 3DOR are generally categorized into two environments, outdoor and indoor, with the first category being more frequently studied (19 vs. 4 studies). The article divides these 3DOR studies according to sensor modalities that include camera-based (monocular, five studies; stereo cameras, two studies), image grid-based (two studies), 3D voxel grid-based (three studies), PointNet-based (three studies), graph-based (four studies), and camera-LiDAR fusion-based (five studies). These 3DOR methods use state-of-the-art DL-based object recognition networks that follow either one-stage (nine studies) or two-stage (14 studies) object detection pipelines.

The advantages and limitations of 3DOR methods show that developing DL-based multi-modal recognition systems is a particularly challenging task for ADV in outdoor environments because it requires a high level of accuracy and real-time performance, while current models cannot generate consistent predictions over time. On the other hand, object recognition is a challenge in indoor environments that consist of cluttered scenes with many occluded objects. In addition, the fusion of multiple sensors and different feature representations, as well as the optimal fusion architecture for 3DOR, are still open questions that require further research.

3.2. 3D Place Recognition (3DPR)

3D place recognition is the task of identifying the location shown in a view of a place by querying similar images that belong to the same location in a large geo-tagged database [127]. It retrieves the database images according to the robot pose and the current query image taken by the robot’s sensor (i.e., camera) to find the association between query images and database images of known places. Robots and automated vehicles on the road use place recognition approaches to recognize locations accurately and to identify revisited places efficiently.
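The retrieval step can be illustrated with a minimal sketch in which each place is represented by a global descriptor and the query is matched by cosine similarity. The place names, the three-dimensional descriptors, and the similarity measure are hypothetical; the reviewed methods learn much higher-dimensional descriptors.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two descriptor vectors (0.0 for zero vectors)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def recognize_place(query, database, top_k=1):
    """Rank database entries (place_id, descriptor) by similarity to the query."""
    ranked = sorted(
        database,
        key=lambda item: cosine_similarity(query, item[1]),
        reverse=True,
    )
    return [place_id for place_id, _ in ranked[:top_k]]


# Hypothetical geo-tagged database of global descriptors.
database = [
    ("lobby", [1.0, 0.0, 0.0]),
    ("kitchen", [0.0, 1.0, 0.0]),
    ("lab", [0.7, 0.7, 0.0]),
]
query = [0.9, 0.1, 0.0]
matches = recognize_place(query, database, top_k=2)
```

A revisited place is detected when the best match is similar enough to the query, so the quality of the descriptor largely determines the recognition performance.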

Place recognition systems can also benefit from existing research on object recognition by detecting objects in the context of scene knowledge [128]. However, place recognition approaches concentrate more on larger-scale targets called place landmarks [129]. Another major characteristic that distinguishes place recognition from other visual recognition tasks is that it has to perform condition-invariant recognition to a degree that many other recognition tasks do not require. Moreover, an architecture that is apt for 3DOR may not fit well into 3DPR tasks because their visual cues are different.

Place recognition is an active research area and a key capability of autonomous mobile robots. However, it is still a challenging task to achieve. The recent literature on place recognition concentrates on replacing traditional handcrafted feature extractors [4,130,131,132,133,134,135,136,137] with CNN for feature extraction [138,139,140,141], which aids in the direct learning of 3D structural descriptors. Camera and LiDAR are two main sensors to perform place recognition tasks.

Camera-based place recognition methods contain efficient descriptive information, but they struggle to cope with illumination and occlusion problems [142]. LiDAR-based place recognition approaches are invariant to appearance change [143]; however, rich descriptive representation is still an open research question for LiDAR-based place recognition, and it suffers from limited ranging and motion distortion issues [114,144,145]. Therefore, fusing information from both sensors provides better solutions.

This section reviews data representation methods for 3D place recognition based on Camera and LiDAR sensors using DL models. It is subdivided as Camera-based 3DPR (Section 3.2.1), LiDAR-based 3DPR (Section 3.2.2), and Camera-LiDAR Fusion-based 3DPR (Section 3.2.3).

3.2.1. Camera-Based 3DPR

Visual place recognition (VPR) is the problem of recognizing a place from the robot’s current camera images based on the visual appearance [146,147]. It has been around for many years. However, research in this field is growing rapidly due to recent developments in camera technologies [148] and their compatibility with DL-based techniques. In this direction, 3D depth vision cameras and event-based cameras have drawn researchers’ attention. 3D depth cameras have made it possible to collect 3D data with ease. However, the limited depth range, less accurate distance information, and the training of DL-based models on 3D data remain open challenges [149].

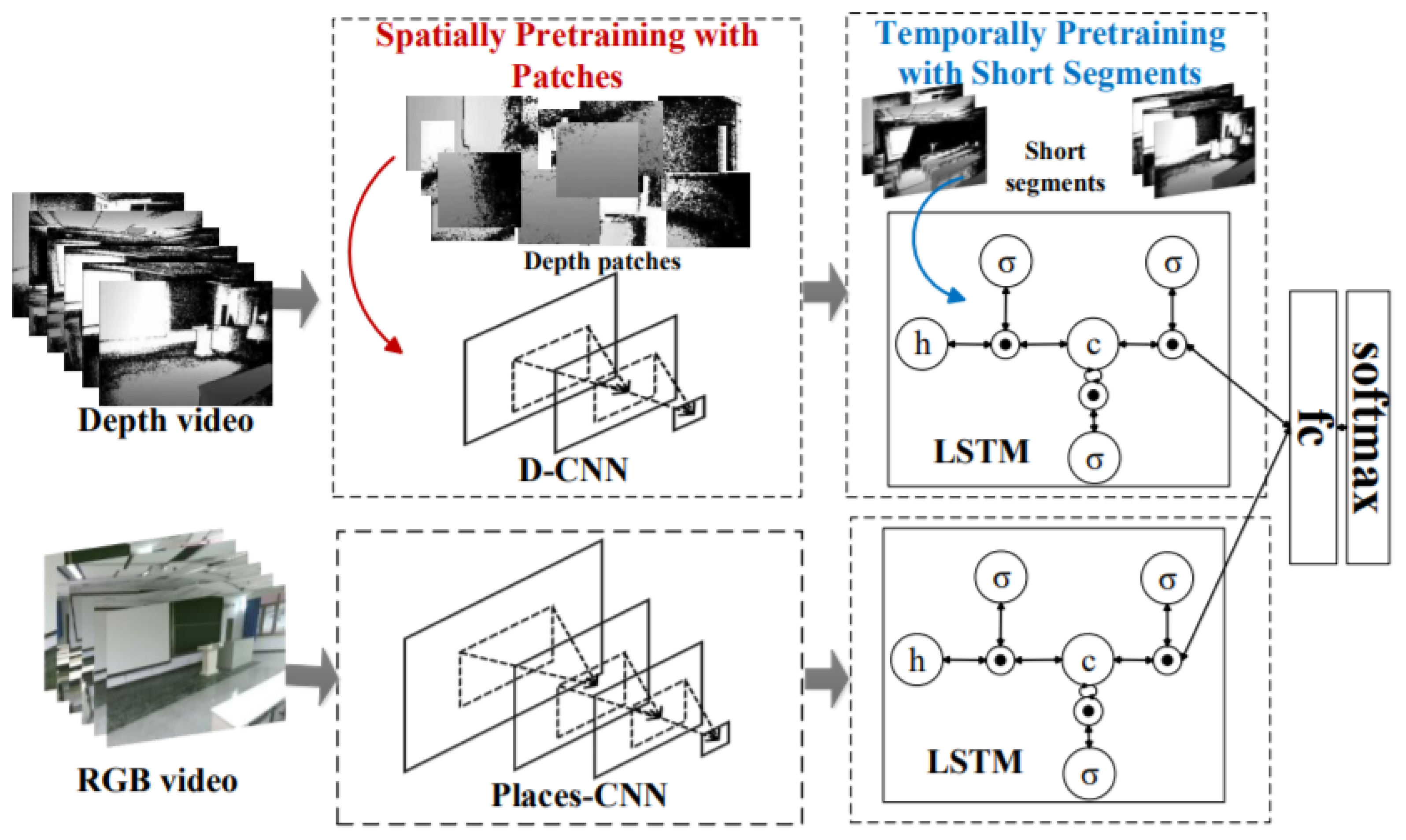

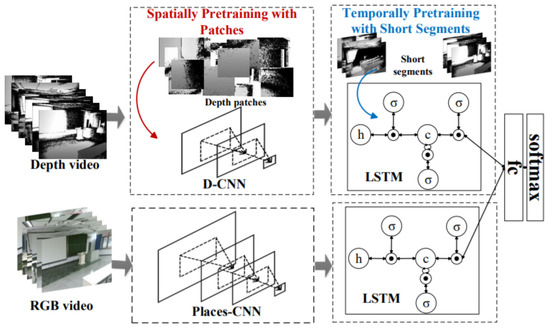

DL models typically rely on networks trained only on RGB data, which lack depth features. In this direction, Song et al. [78] addressed this limitation by using RGB-D videos to take advantage of the richer depth and RGB information. They introduced a two-step training approach that involves weakly supervised pre-training via patches to learn powerful depth-specific features. Their proposed CNN-RNN framework was used to model RGB-D scenes for recognition.

Inspired by the two-step CNN techniques that were trained on still images, a three-step training strategy was introduced for the CNN-RNN architecture to obtain a significant gain through the integration of depth videos. It created a joint embedding by combining convolutional and recurrent neural networks to capture spatial and temporal information, as shown in Figure 25. LSTM blocks were used to implement the recurrent neural networks. It used independent branches for RGB and depth data. The LSTM-based temporal embedding was modality-specific, and late fusion was performed using a fully connected layer, while the combined architecture was trained jointly end-to-end.

Figure 25.

The CNN-RNN [78] architecture for video recognition.

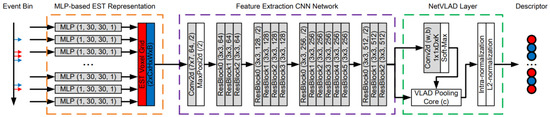

To the best of our knowledge, there are very few studies that use an event-based camera for place recognition. Among them, Kong et al. [79] proposed Event-VPR, the first end-to-end VPR method using an event camera. These cameras work differently from frame-based cameras because they are neuromorphic visual sensors that are inspired by the biological retina and have the advantages of low latency, low bandwidth, and low power consumption [150]. The key idea of Event-VPR, as shown in Figure 26, was to apply NetVLAD to an EST voxel grid generated from event streams.

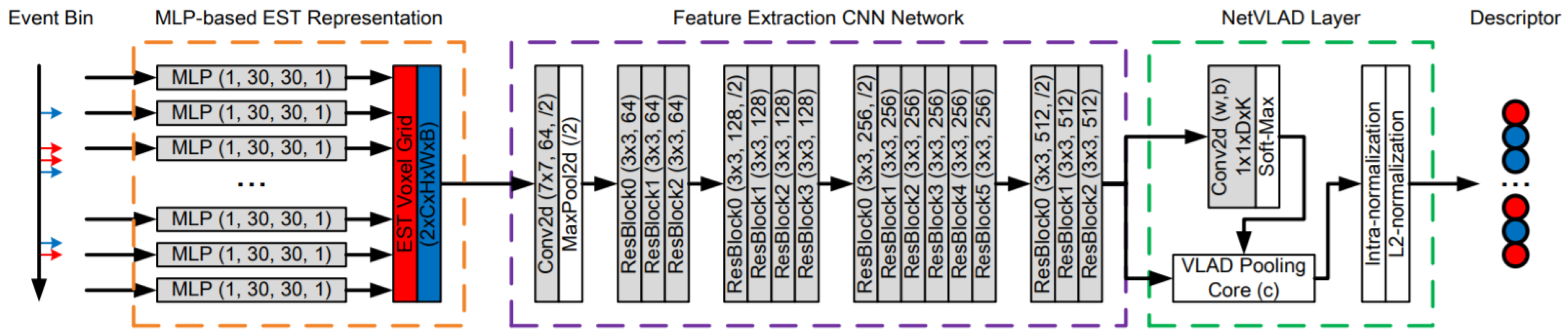

Figure 26.

The pipeline of Event-VPR [79] for 3DPR.

Event-VPR took event streams as input and divided each consecutive event stream into bins. These bins were converted into EST voxel grids using an MLP-based kernel. The visual features of the EST voxel grids were then extracted using ResNet34 [108], and feature descriptor aggregation was performed by a VLAD-based aggregated description layer. Finally, corresponding positive and negative event bins were selected, and the network was trained with weak supervision to learn the global descriptor vectors of the bins for 3DPR.
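The binning step described above can be illustrated with a minimal sketch. It is a simplification and not the Event-VPR implementation: each event votes with weight ±1 into its nearest temporal bin, whereas EST learns this weighting with an MLP-based kernel. The function name `events_to_voxel_grid`, the `(x, y, t, polarity)` event layout, and the grid size are assumptions made for illustration.

```python
import numpy as np

def events_to_voxel_grid(events, num_bins, height, width):
    """Accumulate events (x, y, t, polarity) into a (num_bins, H, W) grid.

    Assumption: hard assignment to the nearest temporal bin with weight +1/-1.
    EST would replace this fixed weighting with a learned MLP kernel.
    """
    events = np.asarray(events, dtype=np.float64)
    grid = np.zeros((num_bins, height, width), dtype=np.float64)
    if len(events) == 0:
        return grid
    x = events[:, 0].astype(int)
    y = events[:, 1].astype(int)
    t = events[:, 2]
    polarity = np.where(events[:, 3] > 0, 1.0, -1.0)
    span = t.max() - t.min()
    # Normalize timestamps to [0, 1] so that bin indices do not depend on units.
    t_norm = (t - t.min()) / span if span > 0 else np.zeros_like(t)
    bins = np.minimum((t_norm * num_bins).astype(int), num_bins - 1)
    # np.add.at accumulates correctly when several events hit the same cell.
    np.add.at(grid, (bins, y, x), polarity)
    return grid

# Four hypothetical events on a 2x2 sensor, timestamps in milliseconds.
events = [(0, 0, 0.0, 1), (1, 0, 1.0, -1), (1, 1, 5.0, 1), (0, 0, 10.0, 1)]
grid = events_to_voxel_grid(events, num_bins=2, height=2, width=2)
```

The resulting tensor has the layout of a multi-channel image, which is why an image backbone such as ResNet34 can consume it directly.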

3.2.2. LiDAR-Based 3DPR

Place recognition using LiDAR-based 3D PC is still an open issue and is harder in large-scale dynamic environments due to the difficulty of feature extraction from raw 3D PC and of global descriptor generation [151]. This article focuses on recent LiDAR point-cloud-based methods for 3D place recognition using DL-based techniques and provides their comparison in Table 19.

Table 19.

Literature Analysis: 3D Place Recognition (3DPR) Methods.

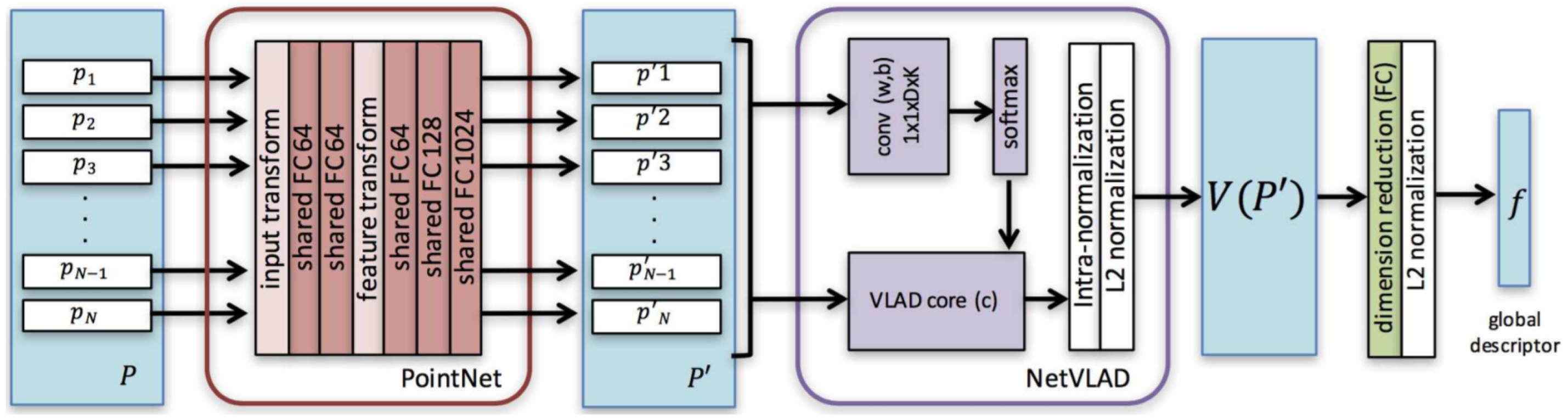

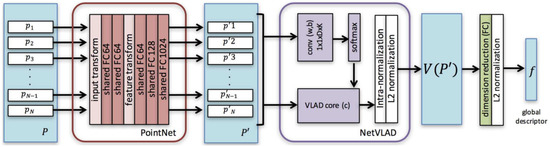

In contrast to their image-based counterparts, few 3D recognition studies have dealt with LiDAR PC for place recognition, owing to the difficulty of extracting local descriptors that can later be converted into global descriptors. Angelina et al. [80] applied DL networks and introduced PointNetVLAD to address PC-based place recognition using NetVLAD [152] and PointNet [121]. It proposed lazy triplet and quadruplet loss functions to extract more general global features, and mapped 3D PC to discriminative global descriptors by training PointNetVLAD using metric learning [153].
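To make the idea of a lazy triplet loss concrete, the following is a minimal sketch under stated assumptions. It computes a hinge term for every negative and keeps only the largest, so that only the hardest negative contributes. The use of plain Euclidean distance, the margin value, and the function name `lazy_triplet_loss` are illustrative assumptions rather than the settings of [80].

```python
import numpy as np

def lazy_triplet_loss(anchor, positive, negatives, margin=0.5):
    """max_j [margin + d(anchor, positive) - d(anchor, negative_j)]_+ .

    Assumption: Euclidean distance between global descriptors.
    """
    anchor = np.asarray(anchor, dtype=np.float64)
    positive = np.asarray(positive, dtype=np.float64)
    negatives = np.asarray(negatives, dtype=np.float64)
    d_pos = np.linalg.norm(anchor - positive)
    d_neg = np.linalg.norm(negatives - anchor, axis=1)
    hinge = np.maximum(margin + d_pos - d_neg, 0.0)
    # "Lazy": only the hardest (closest) negative drives the loss.
    return float(hinge.max())

# Hypothetical 2D descriptors: one far negative and one close negative.
loss = lazy_triplet_loss([0.0, 0.0], [1.0, 0.0], [[3.0, 0.0], [1.2, 0.0]])
```

In this toy case the far negative already satisfies the margin, so the loss is determined entirely by the close negative.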

PointNetVLAD combined the existing PointNet [121] and NetVLAD [152], as shown in Figure 27, to extract a global descriptor from a given 3D point cloud through end-to-end training and inference. It included the first block of PointNet, cropped before the maxpool aggregation layer. Its input was the same as that of PointNet, consisting of a set of 3D points. A local feature descriptor was extracted from each input 3D point. These descriptors were fed to the NetVLAD layer, which was originally designed to aggregate local image features from VGG/AlexNet into a global descriptor vector. The VLAD descriptor [154] was the output of the NetVLAD layer.

Figure 27.

The architecture of the PointNetVLAD [80] network.
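The aggregation performed by a NetVLAD-style layer can be sketched as follows. This is a forward pass only, with randomly initialized parameters standing in for learned ones; the names `netvlad_aggregate`, `centers`, `weights`, and `biases` are assumptions for illustration, and any later dimensionality-reduction layer is omitted.

```python
import numpy as np

def netvlad_aggregate(local_feats, centers, weights, biases):
    """Aggregate N local features (N, D) into one global descriptor (K * D,).

    centers: (K, D) cluster centers; weights: (K, D) and biases: (K,) define
    the soft assignment. All parameters would be learned in practice.
    """
    logits = local_feats @ weights.T + biases            # (N, K)
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    assign = np.exp(logits)
    assign /= assign.sum(axis=1, keepdims=True)          # softmax over clusters
    residuals = local_feats[:, None, :] - centers[None, :, :]  # (N, K, D)
    vlad = (assign[:, :, None] * residuals).sum(axis=0)        # (K, D)
    vlad /= np.linalg.norm(vlad, axis=1, keepdims=True) + 1e-12  # intra-normalization
    vlad = vlad.ravel()
    return vlad / (np.linalg.norm(vlad) + 1e-12)               # final L2 normalization

rng = np.random.default_rng(0)
feats = rng.normal(size=(128, 8))      # hypothetical per-point features
centers = rng.normal(size=(4, 8))
weights = rng.normal(size=(4, 8))
biases = rng.normal(size=4)
descriptor = netvlad_aggregate(feats, centers, weights, biases)
```

Because the residuals are summed over all points, the descriptor does not depend on the order of the input points, which suits unordered point clouds.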

Place recognition and scene understanding are also important areas of research in the indoor environment. However, in contrast to the outdoor environment, there are fewer studies of place recognition from 3D PC data for the indoor environment. An autonomous robot must be aware of different places, such as rooms, hallways, and kitchens, in an indoor environment to perform its task. Huang et al. [81] performed 3D PC (voxel) based scene recognition in an indoor environment by combining semantic segmentation with a multi-task framework. It treated indoor scene recognition as a supervised classification problem solved with a neural network.

The network was composed of an encoder to extract a feature representation from the input scene and a classification head to obtain the class-conditional likelihood. Two options were explored for the encoder: the first worked on a subsampled version of the original PC with point-based networks (PointNet [121], PointNet++ [119], DGCNN [155]), while the second used sparse voxel grid networks (ResNet14 [108]). The study demonstrated that multi-task learning with semantic segmentation improves the performance of scene recognition by sharing information among related tasks.

The multi-task network was composed of an encoder for converting the scene into a feature representation, and two output heads: a semantic segmentation head (top) and a classification head (bottom) for computing the class likelihood, as shown in Figure 28. For semantic segmentation, the sparse ResNet14 variant was extended with a U-Net-style decoder that mirrored the encoder with skip connections. The encoder weights were then frozen and only the scene classification head was trained. Finally, the network was fine-tuned with a small learning rate to yield better recognition.

Figure 28.

The multi-task network structure [81] for scene recognition in indoor environments.

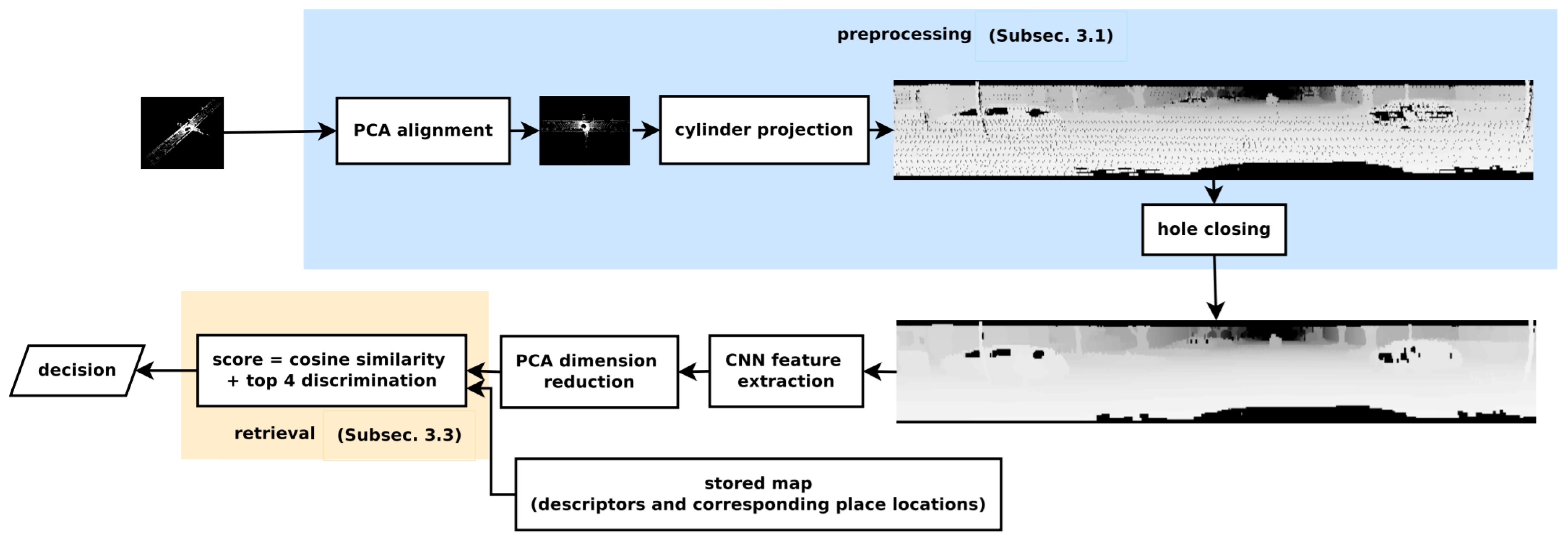

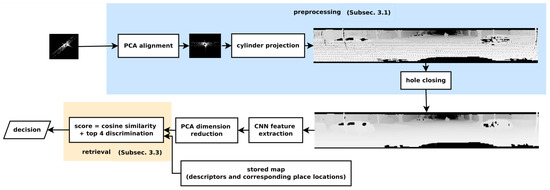

An efficient place recognition system is invariant to illumination variation and object motion in that place [156]. Sun et al. [82] presented PC-based place recognition using a CNN pre-trained on color images, which was robust to moving objects and invariant to rotation and illumination. As shown in Figure 29, the 3D place recognition system first aligned the PC with its principal directions and then projected it onto a cylindrical image plane. It performed feature extraction using the CNN, followed by principal component analysis for dimension reduction, and specified a threshold to determine the trade-off between recall and precision.

Figure 29.

The system overview [82] for point-cloud-based place recognition using CNN feature extraction.

In the preprocessing step, a 3D PC created by a Velodyne LiDAR was used to cover the full 360° environmental view. PCA was used to align the PC by finding its orthogonal directions and to obtain more compact features. The range image was generated by projecting the PC onto a cylindrical plane, and features were extracted by the convolutional layers of the CNN. Fully connected layers were used to perform reshaping, and a pooling layer on top of the hidden layer was used for dimension reduction. Since each place was represented by one descriptor, the variance of each dimension indicated its discrimination ability. For retrieval, the descriptor vector of each PC was normalized, and the cosine distance was used as the similarity metric.
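Two of the steps above, PCA-based alignment and cosine-similarity retrieval, can be sketched in a few lines. This is a minimal illustration and not the pipeline of [82]: the function names `pca_align` and `cosine_similarity` are hypothetical, the test cloud is synthetic, and the sign ambiguity of the principal axes is left unresolved.

```python
import numpy as np

def pca_align(points):
    """Center a point cloud (N, 3) and rotate it onto its principal directions.

    After alignment, the variance is largest along x and smallest along z.
    Note: the sign of each axis is ambiguous and is not fixed here.
    """
    points = np.asarray(points, dtype=np.float64)
    centered = points - points.mean(axis=0)
    cov = centered.T @ centered / len(points)
    eigvals, eigvecs = np.linalg.eigh(cov)   # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1]        # reorder to descending variance
    return centered @ eigvecs[:, order]

def cosine_similarity(a, b):
    """Cosine similarity between two descriptor vectors."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# Synthetic anisotropic cloud, rotated about z and shifted away from the origin.
rng = np.random.default_rng(0)
cloud = rng.normal(size=(500, 3)) * np.array([0.5, 5.0, 2.0])
c, s = np.cos(0.7), np.sin(0.7)
cloud = cloud @ np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]).T
cloud = cloud + np.array([10.0, -3.0, 2.0])
aligned = pca_align(cloud)
```

Aligning every scan to its own principal directions gives scans of the same place a common orientation before projection, which is one way to obtain the rotation invariance described above.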

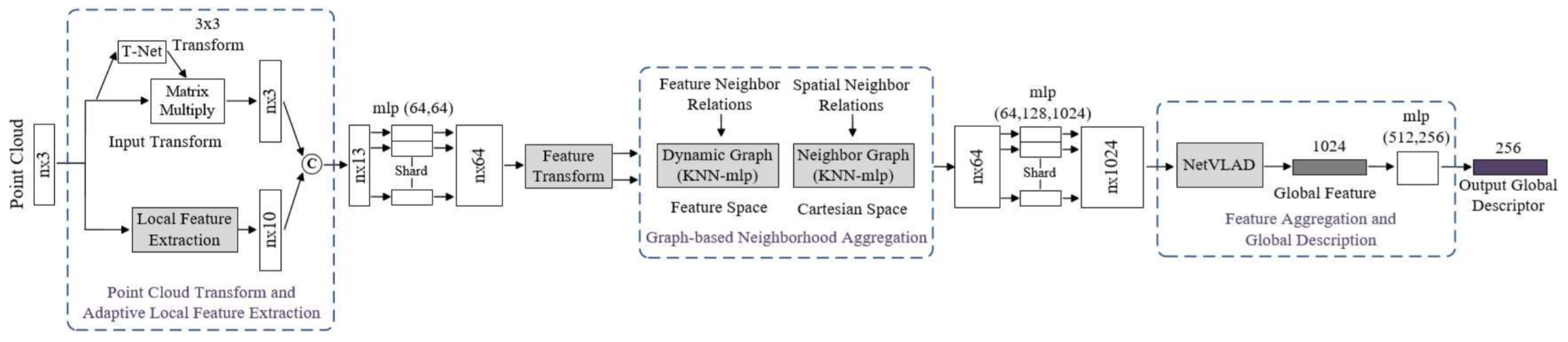

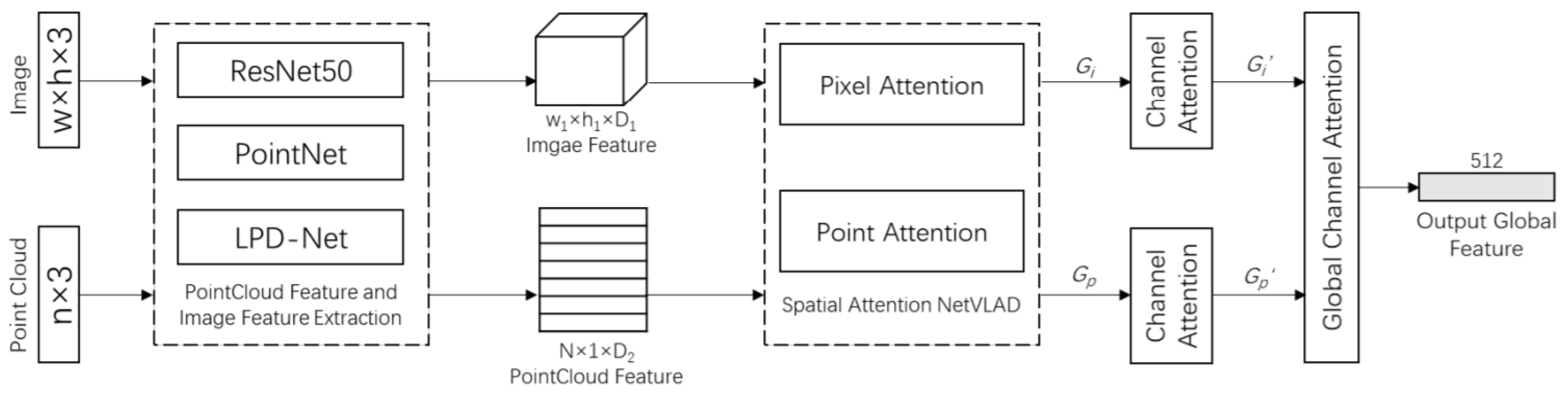

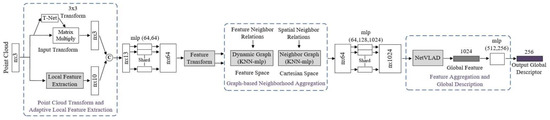

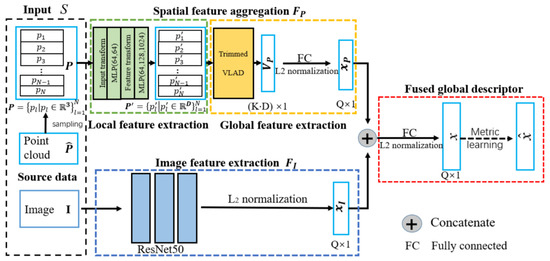

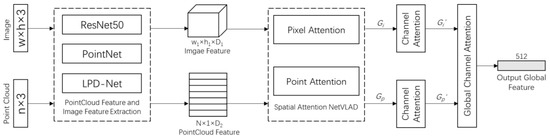

Liu et al. [83] proposed a large-scale place description network (LPD-Net) for extracting distinct and general global feature descriptors from 3D PC. It used local features rather than isolated point positions as the network input. The network architecture was composed of three major modules to handle large-scale environments, as shown in Figure 30. The adaptive local feature extraction module was used to obtain the PC distribution and the local features. The graph-based neighborhood aggregation module was used in both the feature space and the Cartesian space to learn the structure information of the PC. The resulting vectors were passed to NetVLAD [152] for the generation of a global descriptor.

Figure 30.

The LPD-Net [83] for large scale place recognition.

The computational and storage complexity was reduced by extracting a global descriptor, which made real-time place recognition feasible. The feature network captured the local structure using features around each point in its local neighborhood. The raw PC data was passed as input to the Transformation Net [121], which aimed at ensuring rotational and translational invariance, and to the adaptive local feature extractor, which considered the statistical local distribution.

The appropriate neighborhood size in different situations was selected using an adaptive neighborhood structure, and the resulting local features were merged into feature vectors. The output of the feature network was passed to a graph network as input, and feature aggregation was performed using the kNN graph network in the Cartesian space. LPD-Net adopted the relational representation of the GNN: scene compositions were represented as graph nodes, and their intrinsic relationships and the scene descriptors were generated by the GNN.
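The neighborhood aggregation over a kNN graph in Cartesian space can be illustrated with a minimal sketch. It only max-pools existing features over each point's nearest neighbors; the learned transformations that a graph network such as the one in LPD-Net applies are omitted, and the name `knn_max_aggregate` and the toy data are assumptions.

```python
import numpy as np

def knn_max_aggregate(xyz, feats, k):
    """For each point, max-pool features over its k nearest neighbors.

    xyz: (N, 3) coordinates; feats: (N, C) per-point features.
    The neighborhood includes the point itself (distance zero).
    """
    xyz = np.asarray(xyz, dtype=np.float64)
    feats = np.asarray(feats, dtype=np.float64)
    # Brute-force pairwise squared distances; fine for a small illustration.
    d2 = ((xyz[:, None, :] - xyz[None, :, :]) ** 2).sum(axis=-1)  # (N, N)
    neighbors = np.argsort(d2, axis=1)[:, :k]                     # (N, k)
    return feats[neighbors].max(axis=1)                           # (N, C)

# Two well-separated pairs of points with one scalar feature each.
xyz = [[0.0, 0.0, 0.0], [0.1, 0.0, 0.0], [5.0, 0.0, 0.0], [5.1, 0.0, 0.0]]
feats = [[1.0], [2.0], [3.0], [4.0]]
pooled = knn_max_aggregate(xyz, feats, k=2)
```

Each point ends up with the strongest response in its own cluster, which shows how local structure is shared between neighboring points before global aggregation.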

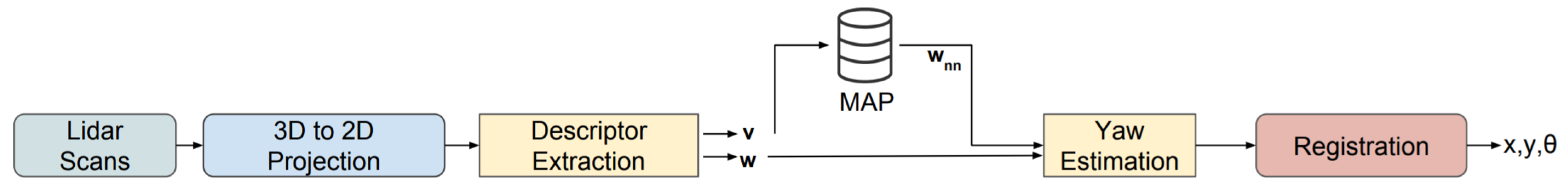

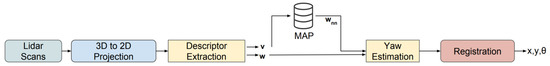

Most research on place recognition [80,157,158] has not fully addressed the problem of three-DoF transformation. Schaupp et al. [84] dealt with this issue by proposing an efficient data-driven framework for extracting compact descriptors from 3D LiDAR PC using a CNN, which aimed at recognizing the place and regressing the orientation between point clouds. The network was trained with a triplet loss function, and a hard-negative mining scheme was applied to improve the descriptor extractor. The framework provided metric global localization in the map reference frame from a single scan of 3D LiDAR PC.

For this, it used four sequential components, namely point cloud projection, descriptor extraction, yaw estimation, and local point cloud registration, as shown in Figure 31. In the first step, PC projection used a spherical model for PC representation and converted the LiDAR point cloud scan into a 2D range image. In the second step, descriptor extraction was implemented with a CNN to represent the place and derive orientation details.

Figure 31.

The methodology [84] of oriented recognition from 3D point clouds.
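The first component, projecting a LiDAR scan onto a 2D range image with a spherical model, can be sketched as follows. The image size, the vertical field of view, and the function name `spherical_range_image` are assumptions chosen for illustration and do not reproduce the settings of [84].

```python
import numpy as np

def spherical_range_image(points, height=16, width=64,
                          fov_up_deg=15.0, fov_down_deg=-15.0):
    """Project points (N, 3) onto a (height, width) range image.

    Columns index yaw (azimuth) and rows index pitch (elevation).
    Empty pixels are 0; if several points share a pixel, the nearest wins.
    """
    points = np.asarray(points, dtype=np.float64)
    r = np.linalg.norm(points, axis=1)
    points, r = points[r > 0], r[r > 0]
    yaw = np.arctan2(points[:, 1], points[:, 0])       # in [-pi, pi]
    pitch = np.arcsin(points[:, 2] / r)
    fov_up, fov_down = np.radians(fov_up_deg), np.radians(fov_down_deg)
    u = 0.5 * (1.0 - yaw / np.pi) * width                   # column coordinate
    v = (fov_up - pitch) / (fov_up - fov_down) * height     # row coordinate
    u = np.clip(np.floor(u), 0, width - 1).astype(int)
    v = np.clip(np.floor(v), 0, height - 1).astype(int)
    image = np.full((height, width), np.inf)
    np.minimum.at(image, (v, u), r)      # keep the closest return per pixel
    image[np.isinf(image)] = 0.0
    return image

# Two points along +x (same pixel) and one point along +y.
scan = [[10.0, 0.0, 0.0], [5.0, 0.0, 0.0], [0.0, 3.0, 0.0]]
range_image = spherical_range_image(scan)
```

With this layout, rotating the sensor about its vertical axis shifts the image columns cyclically, which is the property that makes a yaw estimate recoverable from range images.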

For this, 2D range images were taken as input and two compact descriptor vectors were generated: one provided a rotation-invariant representation of the place, and the other encoded the yaw angle discrepancy between the query PC and the PC of the nearest place in the map. Finally, a local registration method was applied to obtain the three-DoF pose estimate using the planar coordinates and the orientation estimate. The deep CNN architecture based on [159,160] learned a mapping from the range image encoding of the 3D PC to a feature vector representation to effectively perform oriented place recognition.

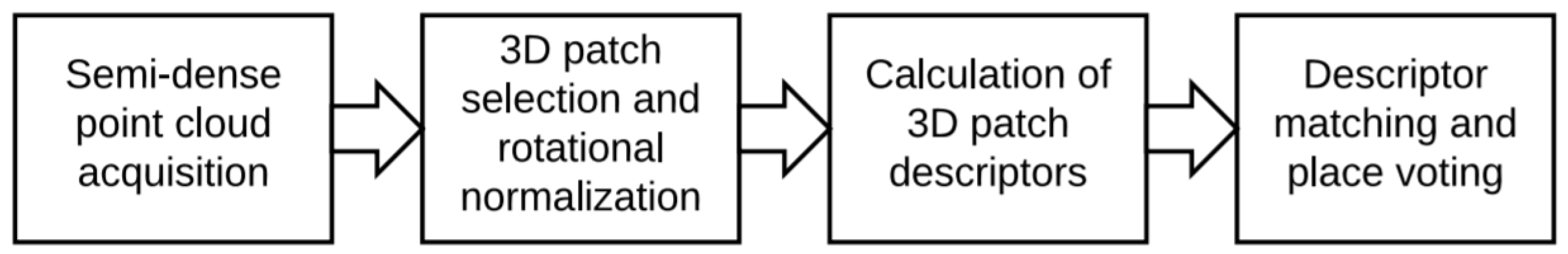

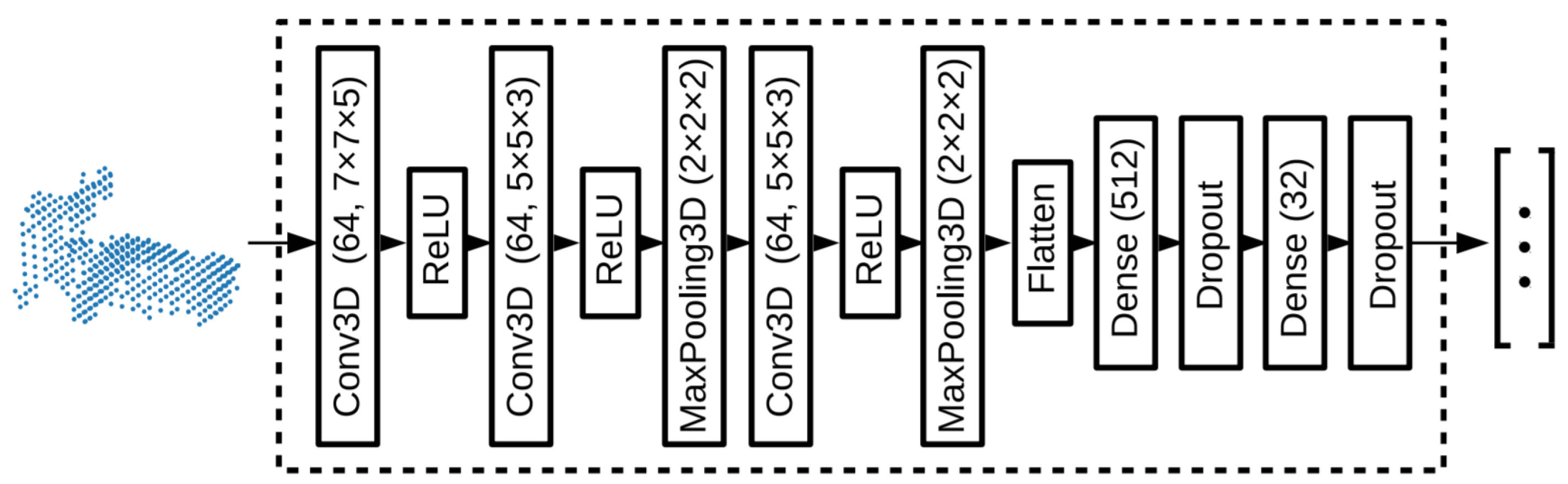

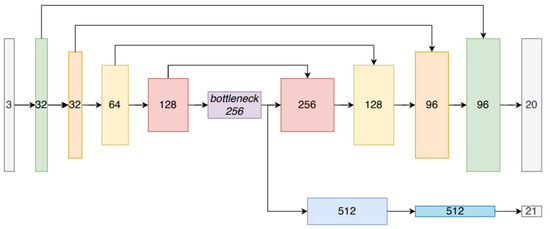

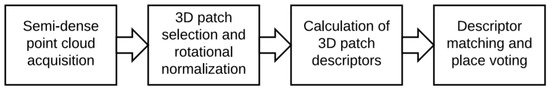

Robust place recognition can be achieved using 3D scene structure. Ye et al. [85] represented structural information of the scene with semi-dense point clouds using DSO [132] and developed local descriptor matching to perform place recognition. It used 3D CNN like [118,161] and generated discriminative descriptors by learning features from a 3D-voxel grid. Its place recognition pipeline as shown in Figure 32 was composed of four main components. It used DSO [162] to acquire the information in semi-dense point cloud. It extracted the local patches from semi-dense point clouds and normalized them.

Figure 32.

The place recognition pipeline of [85] in semi-dense maps.

In the next step, keypoints were selected as a random 5% of the resulting points, and local cylindrical patches were extracted around them, with the patch size chosen to be as small as possible. These patches were represented using CNN-based descriptors; the network contained two 3D convolutional layers, ReLU, a pooling layer, and two fully connected layers for mapping from the voxel grid to a 512-dimensional descriptor. Finally, the resulting descriptors were matched to the descriptors stored in the database, and their matches were aggregated into keyframe matches. PCA was also used to reduce the dimensionality, which resulted in efficient matching.
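The final step, matching local descriptors against a database and aggregating the matches per keyframe, can be sketched with a simple voting scheme. The voting rule, the distance threshold `max_dist`, and the name `match_keyframe` are hypothetical choices for illustration; the aggregation used in [85] may differ.

```python
import numpy as np
from collections import Counter

def match_keyframe(query_desc, db_desc, db_keyframe_ids, max_dist=0.5):
    """Return the database keyframe that collects the most descriptor matches.

    Each query descriptor votes for the keyframe of its nearest database
    descriptor, provided the distance is within max_dist (an assumed threshold).
    Returns None when no descriptor finds an acceptable match.
    """
    query_desc = np.asarray(query_desc, dtype=np.float64)
    db_desc = np.asarray(db_desc, dtype=np.float64)
    votes = Counter()
    for q in query_desc:
        dists = np.linalg.norm(db_desc - q, axis=1)
        nearest = int(np.argmin(dists))
        if dists[nearest] <= max_dist:
            votes[db_keyframe_ids[nearest]] += 1
    return votes.most_common(1)[0][0] if votes else None

# Hypothetical 2D descriptors: two from keyframe 0 and two from keyframe 1.
db_desc = [[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [5.0, 6.0]]
db_ids = [0, 0, 1, 1]
best = match_keyframe([[5.1, 5.0], [5.0, 5.9], [0.0, 0.1]], db_desc, db_ids)
```

Aggregating many local matches makes the decision tolerant to a few wrong descriptor matches, and reducing the descriptor dimension with PCA beforehand makes each nearest-neighbor search cheaper.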

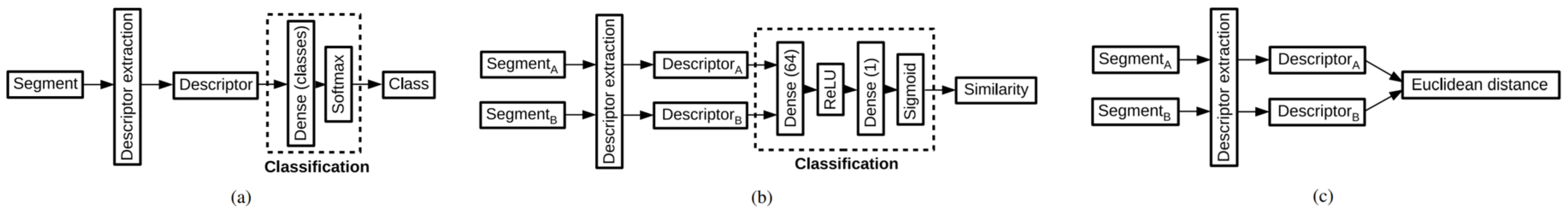

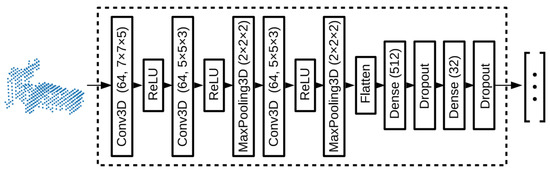

Cramariuc et al. [86] used segment extraction combined with a matching method to perform the place recognition task in LiDAR-based 3D point clouds. It used CNN to generate descriptors for 3D PC segments and introduced a segment recognition approach based on learned descriptors, which outperformed the SegMatch descriptors [163]. It extended the structures of [164,165] to the 3D domain for generating learning-based descriptors. It implemented place recognition task using three different CNNs as shown in Figure 33 for generating descriptors for 3D point cloud segments.

Figure 33.

Three network structures [86] (a) group based CNN (b) Siamese CNN, and (c) descriptor extraction CNN trained using contrastive loss.

For preprocessing, an alignment method was chosen to increase the robustness and make the extraction process less sensitive. Augmentation techniques were used to make multiple copies of the segmented data by rotating each copy by a different angle. Then, the segments were scaled to fit and centered inside the voxel grid. A CNN was proposed for feature extraction. Figure 34 shows the structure of the descriptor extraction CNN; different depths and sizes of layers and filters were tested to keep the network small enough to run on a mobile robot platform. The amount of dropout in the final layers was tuned separately to ensure correct regularization.
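The preprocessing described above (rotation augmentation, then scaling and centering a segment inside a voxel grid) can be sketched as follows. The grid size, the rotation about the vertical axis, and the function names are assumptions for illustration and are not taken from [86].

```python
import numpy as np

def rotate_about_z(points, angle_rad):
    """Rotate points (N, 3) about the z axis; used here as augmentation."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    rot = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    return np.asarray(points, dtype=np.float64) @ rot.T

def voxelize_segment(points, grid_size=8):
    """Scale a segment to fit a cubic grid, center it, and mark occupied voxels."""
    points = np.asarray(points, dtype=np.float64)
    mins, maxs = points.min(axis=0), points.max(axis=0)
    extent = max((maxs - mins).max(), 1e-9)             # longest side of the box
    scaled = (points - mins) / extent * (grid_size - 1)  # longest side spans the grid
    offset = ((grid_size - 1) - scaled.max(axis=0)) / 2.0  # center the shorter sides
    idx = np.rint(scaled + offset).astype(int)
    grid = np.zeros((grid_size,) * 3, dtype=bool)
    grid[idx[:, 0], idx[:, 1], idx[:, 2]] = True
    return grid

# A tiny hypothetical segment and four rotated copies of it.
segment = [[0.0, 0.0, 0.0], [2.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
grid = voxelize_segment(segment, grid_size=5)
augmented = [voxelize_segment(rotate_about_z(segment, a), grid_size=5)
             for a in np.linspace(0.0, np.pi, 4)]
```

Scaling by the longest side preserves the aspect ratio of the segment, so the network sees its shape rather than its absolute size.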

Figure 34.

The descriptor extraction network [86] used in the three CNNs.

The first approach was group-based classification. In this approach, training the CNN for segment classification was based on the groups that represent the classes. The layer before the classification was used as descriptor [166]. The closeness between the descriptors of segments of same group in the Euclidean space was loosely enforced by the classification layer. The probability of a segment belonging to a class was considered proportional to the dot product. The descriptors with small Euclidean distance were classified belonging to the same group. The candidate matches were generated by correlation between similarity and Euclidean distance between descriptors. The network was trained using SGD for minimizing the categorical cross-entropy.

The second approach was training a Siamese convolutional neural network [167], in which two inputs were passed to two CNNs. These two CNNs were two identical descriptor extraction networks. The combination of the outputs of the two networks was then given to a third network, which generated the final output. The advantage of the Siamese architecture over two-stage approaches was that it allowed the feature extraction and the classifier to be trained simultaneously. Feature extraction and the classifier were used independently during inference to boost the performance. SGD was also used for training to reduce the binary cross-entropy of the network.

The third approach was training the classifier with contrastive loss [165] for minimizing the Euclidean distance between the matching vectors while maximizing it for non-matching pairs. It recalculated the hard pairs (which had lowest Euclidean distance between their descriptors but the segments did not match and vice versa) at the end of each training epoch to increase the performance and avoid the local minima.
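A contrastive loss of the kind described above can be written compactly. The following minimal sketch uses the common margin-based formulation: matching pairs are pulled together, and non-matching pairs are pushed apart until they are at least `margin` away. The margin value and the name `contrastive_loss` are assumptions, and the hard-pair recalculation is not shown.

```python
import numpy as np

def contrastive_loss(desc_a, desc_b, is_match, margin=1.0):
    """Mean contrastive loss over pairs of descriptors.

    desc_a, desc_b: (N, D) descriptors; is_match: (N,) with 1 for matching
    pairs and 0 for non-matching pairs.
    """
    desc_a = np.asarray(desc_a, dtype=np.float64)
    desc_b = np.asarray(desc_b, dtype=np.float64)
    is_match = np.asarray(is_match, dtype=np.float64)
    d = np.linalg.norm(desc_a - desc_b, axis=1)
    pull = is_match * d ** 2                                   # matching pairs
    push = (1.0 - is_match) * np.maximum(margin - d, 0.0) ** 2  # non-matching pairs
    return float(np.mean(pull + push) / 2.0)

# One perfect match and one non-matching pair that is too close (d = 0.5).
loss = contrastive_loss([[0.0, 0.0], [0.0, 0.0]],
                        [[0.0, 0.0], [0.5, 0.0]],
                        is_match=[1, 0])
```

Non-matching pairs that are already farther apart than the margin contribute nothing, which is why re-selecting hard pairs during training keeps the loss informative.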

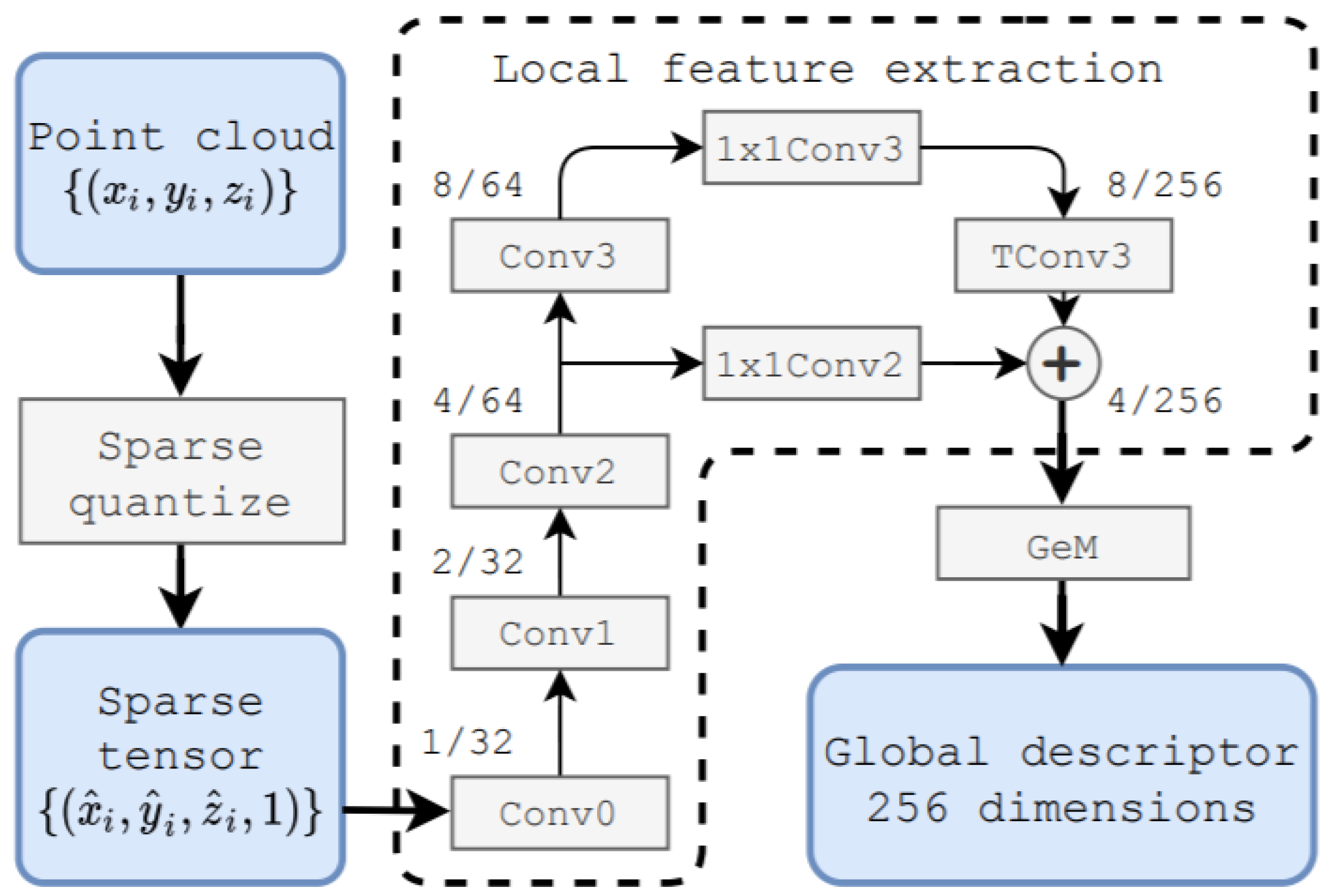

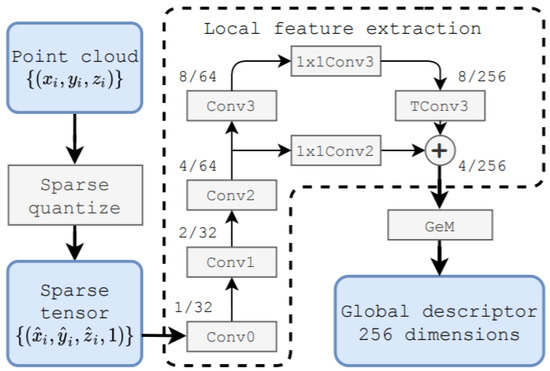

Komorowski et al. [87] used a 3D FPN [168] and a sparse voxelized point cloud representation inspired by MinkowskiNet [169] to propose a discriminative 3D point cloud descriptor for place recognition. The local feature extraction network and the generalized mean (GeM) pooling [170] layer were the two main parts of its network architecture for PC-based place recognition, as shown in Figure 35. A set of 3D point coordinates was passed as input and quantized into a sparse, single-channel tensor. A 3D Feature Pyramid Network [168] was used for local feature extraction. GeM, which is a generalization of global max and average pooling, was used to generate the global descriptor vector.

Figure 35.

The network of MinkLoc3D [87] for point-cloud-based place recognition.
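The GeM pooling mentioned above can be illustrated with a short sketch. It shows why GeM generalizes average and max pooling: with exponent p = 1 it reduces to the mean, and as p grows it approaches the maximum. The fixed exponent, the clipping constant, and the name `gem_pool` are assumptions; in a trained network p is typically a learnable parameter.

```python
import numpy as np

def gem_pool(feats, p=3.0, eps=1e-6):
    """Generalized mean pooling of local features (N, C) into a vector (C,).

    Features are clipped to a small positive value so that fractional
    powers are well defined.
    """
    feats = np.asarray(feats, dtype=np.float64)
    clipped = np.maximum(feats, eps)
    return np.mean(clipped ** p, axis=0) ** (1.0 / p)

local_feats = [[1.0, 2.0], [3.0, 4.0]]
as_mean = gem_pool(local_feats, p=1.0)       # equals average pooling
near_max = gem_pool(local_feats, p=200.0)    # close to max pooling
```

Intermediate values of p weight strong activations more heavily than the mean does without discarding all other responses, as max pooling would.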

The network model was composed of four convolutional blocks in its bottom-up part, which generated sparse 3D feature maps, and transposed convolutions in its top-down part. The top-down part generated the upsampled feature map, which was concatenated through lateral connections with the features from the bottom-up layers. The design was intended to produce a feature map with a large receptive field and high spatial resolution.

The bottom-up blocks from convolutional layer 1 to layer 3 each contained a convolution with a stride of two for decreasing the spatial resolution, followed by a residual block. A batch normalization [171] layer and ReLU non-linearity were used for all layers in the bottom-up blocks. Two 1x1 convolution blocks were aimed at unifying the number of feature map channels of the bottom-up blocks before they were concatenated in the top-down pass.