Abstract

The recent growth of the elderly population, together with the increasing prevalence of solitary living, has created a need for constant home monitoring. Such monitoring protects older people who live alone from adverse events such as falls or deterioration caused by disease. However, although wearable devices and camera-based systems can provide relatively precise information about human motion, they invade the privacy of the elderly. One way to detect the abnormal behavior of elderly residents while preserving their privacy is to equip the resident’s house with an Internet of Things system based on a non-invasive binary motion sensor array. We propose to concatenate external features (previous activity and begin time-stamp) with features extracted by a bi-directional long short-term memory (Bi-LSTM) neural network to recognize the activities of daily living with higher accuracy. The concatenated features are classified by a fully connected neural network (FCNN). The proposed model was evaluated on an open dataset from the Center for Advanced Studies in Adaptive Systems (CASAS) at Washington State University. The experimental results show that the proposed method outperformed state-of-the-art models on the same dataset by a margin of more than 6.25% in F1 score.

1. Introduction

The world’s population has aged over the past few decades. In 2018, for the first time, there were more people aged 65 and over than those younger than five, and the elderly population is likely to have doubled by 2050 [1,2]. Moreover, in 2050, the 1.5 billion people older than 65 will outnumber those aged between 15 and 24. This dramatic increase in the elderly population is due to improved quality of life and better healthcare [3,4,5,6], especially the decrease in tobacco use in men and cardiovascular disease in recent decades [3]. Another important factor that affects the growth of the elderly population is the falling birth rate; the average number of live births per woman was only 2.5 worldwide in 2019 and is likely to decrease further [1]. Studies have shown that both high- and low-income countries are experiencing increased life expectancy [4,5].

Elderly people tend to live alone [7,8,9,10,11]. For example, in the United States of America, the percentage of elderly people living alone was 40% in 1990 and 36% in 2016 [12]. In the Republic of Korea, 22.8% of elderly people live alone, more than one in five [8]. One of the reasons is that some elderly people prefer to preserve their privacy [13,14]. However, elderly people living alone are more susceptible to loneliness, illness, and home accidents than those who live with a partner or family [9,15]. Early detection of illness and home accidents is crucial if elderly people living alone are to receive timely and potentially life-saving help [16,17].

As the latest technological development, the Internet of Things (IoT) enables consumers and businesses to have versatile devices connected to the Internet [18,19,20,21,22]. In elderly care and monitoring systems, the use of the IoT is becoming prevalent [23,24,25,26], and monitoring the activities of daily living (ADLs) of elderly people is crucial in indicating their activity level [27].

Previous studies have proposed elderly-monitoring systems based on wearable devices [23,28,29,30,31,32] with the main function of classifying the ADLs of elderly people. However, some people are uncomfortable with wearable devices, and those who do choose to wear one prefer to wear it on the wrist [33]. In addition to recognizing ADLs, monitoring elderly people living alone requires detecting (i) abnormal activities such as falling [34,35], (ii) early signs of some diseases, and (iii) unusual instances for people with certain diseases [28,36,37]. Moreover, although wearable devices provide accurate information about motion, they are inconvenient for daily use because of problems such as the need to attach sensors to the body or skin, limited battery life, and the likelihood of abandonment once initial curiosity fades [38,39,40,41]. Although many different activity classification methods have been suggested for wearable devices, the recent prominence of machine learning (ML) has caused researchers to focus in particular on human activity recognition (HAR) models based on deep learning [38,42,43,44,45].

Camera-based monitoring systems [38,46,47,48,49] solve the problem of having an inconvenient wearable device attached to one’s body or skin. Although various HAR models have been suggested for recognizing ADLs, those based on ML are now playing a major role [22,48,49,50,51,52]. An example is HAR based on a dynamic Bayesian network for detecting the abnormal actions of elderly people from camera video [53]. However, although camera-based systems provide accurate information about human posture, privacy is a major concern [54,55,56]. Moreover, previous research [54] showed that elderly people tend to change their behavior once they are aware of the camera. To minimize the invasion of privacy associated with camera-based technology, low-resolution infrared or depth-camera systems have been suggested [57,58,59,60]. Privacy concerns mean that elderly people prefer to be monitored unobtrusively rather than by camera-based systems [56].

One solution to the privacy issue is to install passive infrared (PIR) sensors in the living environment of the elderly to monitor elderly residents unobtrusively with an ADL classification model [61,62,63,64]. Previous research [65] suggested a new smart radar sensor system that uses an ultra-wideband signal to detect motion. Such radar sensors have a low signal-to-noise ratio and are highly sensitive to environmental changes.

Various indoor activity detection models have been proposed [66,67,68,69,70,71,72,73], most of which use ML to recognize the activities. As stated in [74], deep learning and RNN models have promising results and need to be investigated further for non-intrusive activity recognition. Open datasets from real-life scenarios are used to train and test these models, and the Aruba dataset from the Center for Advanced Studies in Adaptive Systems (CASAS) at Washington State University is often used [75,76].

The authors have published several studies [55,58,77] that used the CASAS Aruba dataset. In [55], the travel patterns of a resident living alone were detected from PIR binary sensor data. In contrast, [58,77] recognized the activities of a resident by converting the temporal sensor events of each activity sample into an image that is fed into a deep convolutional neural network (DCNN): features are first extracted by the convolutional layers, and the activity is then classified by a fully connected neural network (FCNN).

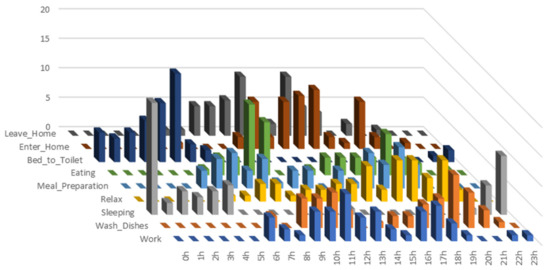

The model proposed in this study outperformed the existing methods on the Aruba dataset [62,72,78,79]. None of the state-of-the-art (SoTA) methods tested on the Aruba dataset for ADL recognition uses temporal features, in particular previous activity and begin time-stamp, on which the current activity depends significantly (see Table 1 and Figure 1).

Table 1.

Number of previous activity instances for activities selected in train and test sets.

Figure 1.

Number of current activity instances in terms of begin time-stamp.

Herein, we propose a deep-learning model for classifying ADLs from PIR binary sensor data. The model uses a bidirectional long short-term memory (Bi-LSTM), a type of recurrent neural network (RNN) and a fully connected neural network (FCNN) to extract features and classify activities, respectively. The work is not focused on generalizing the model over different houses and for residents with different habits.

The main contributions of this study are as follows:

- Use of the external temporal features, previous activity and begin time-stamp, that are concatenated with extracted features by the Bi-LSTM before being fed into the FCNN for classification;

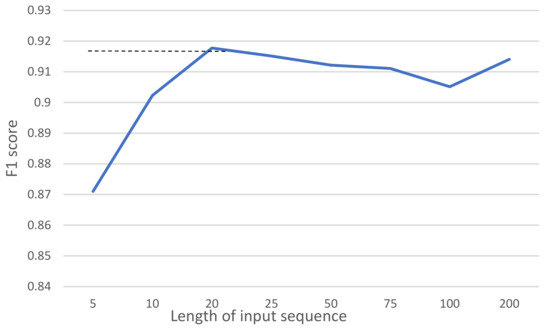

- For the Bi-LSTM, the input length is empirically set to 20, the value that gave the highest accuracy;

- The F1 score difference between the models with and without external features was 28.8%;

- A comparison study of different model architectures, with and without external features and with various numbers of Bi-LSTM nodes, is conducted;

- For a fair comparison, the proposed model is evaluated on the CASAS Aruba public dataset [76];

- The method outperforms the existing methods [62,72,78,79] with a relatively high F1 score of 0.917, which is an improvement of 6.25% compared with the existing best F1 score.

The rest of this paper is organized as follows. Section 2 reviews other activity recognition methods, and Section 3 describes the present method for recognizing ADLs. Section 4 describes how the model was trained, tested, and compared with the other methods. Section 5 discusses the results and Section 6 presents the conclusions.

2. Related Works

Previous studies have proposed models that use ML to recognize and classify ADLs from the main data sources for doing so, namely wearable devices and smart homes equipped with depth cameras and binary sensors.

An algorithm [31] was suggested for classifying six ADLs with two inertial measurement units. The algorithm has four stages: filtering, phase detection, posture detection, and activity detection. It detects the body posture during static phases and recognizes types of dynamic activities between postures using a rule-based approach. The model achieves an overall accuracy of 84–97% for different types of activities. However, such methods require intensive handcrafting when other activities are added and are sensitive to distortion of the input data. Deep-learning methods are used intensively to extract the features for activity recognition. Bianchi et al. [32] proposed an HAR system based on a wearable IoT sensor, for which the feature extractor was a CNN. The model achieved an accuracy of 92.5% on a standard dataset from the UCI ML Repository. A previous study [45] suggested a model that detects falling and its precursor activity from an open dataset. For classifying falls, the authors employed support vector machines (SVMs), random forest, and k-nearest neighbors, which achieved F1 scores of 0.997, 0.994, and 0.997, respectively; for classifying the precursor activities, these methods achieved F1 scores of 0.756, 0.799, and 0.671, respectively. The results of activity classification are not as good as those of other models. Furthermore, although the systems based on wearable devices provide accurate information about human activity, complications arise, such as (i) continuing to wear the device, (ii) maintaining the battery level, and (iii) attaching the device to the skin.

Systems based on RGB (red–green–blue) cameras [46,47,48] solve the aforementioned problems associated with wearable devices. In a previous study [49], automated ML and transfer learning were used to detect ADLs by analyzing the video from an RGB camera. However, the use of RGB cameras raises privacy concerns.

Activity detection based on depth cameras is another popular method with high precision and less invasion of privacy compared to normal camera images. Anitha et al. [53] proposed an elderly-monitoring system that detects abnormal activities such as falls, chest pain, headache, and vomiting from video sequences with a model based on a dynamic Bayesian network. Image silhouettes are extracted from a normal video sequence that is input to the model, and the model achieves an activity detection accuracy of 82.2%. Jalal et al. [59] developed an HAR model using multiple hidden Markov models, each trained for a specific action. For training and recognition, the model extracts features from human depth silhouettes and body-joint information. The model achieved recognition accuracies of 98.1% and 66.7% on the MSR Action 3D open dataset and a self-annotated dataset, respectively. Hbali et al. [51] presented a method that extracts a human-body skeletal model from depth-camera images, with the classifier being the extremely-randomized-trees algorithm. Although it does not outperform similar models, it provides promising results with an accuracy of 73.43% on the MSR Daily Activity 3D dataset. Activity recognition systems based on depth cameras are applicable and preferable for detailed activities such as arm waving or forward kicking, but they do not address the privacy issue fully.

Equipping the living environment of an elderly person with binary sensors invades her/his privacy less than a depth camera does, and it offers greater comfort by avoiding the need to wear a device. Yala et al. [61] introduced several traditional ML methods preceded by different feature-extraction techniques, where the highest F1 score among the tested methods was 0.662. Machot et al. [78] proposed an activity recognition model that finds the best sensor set for each activity. They used an SVM as the classifier and achieved an F1 score of 0.82 on the Aruba dataset. Yatbaz et al. [72] suggested two ADL recognition methods based on scanpath trend analysis (STA), one of which gives the highest F1 score of 0.863 among the existing SoTA models [62,78,79] that are tested on the Aruba dataset. Krishnan et al. [64] introduced the notion of previous activity in their two-step activity recognition method.

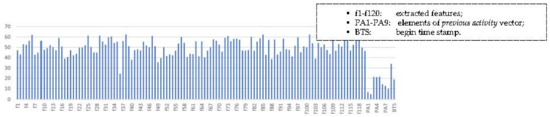

However, none of the aforementioned methods, except [64], uses temporal features such as begin time-stamp and previous activity. Figure 2 illustrates the prominence of the external features with a stacked column chart derived from the correlation matrix of the feature elements, where the vertical axis represents the sum of the absolute values in each column of the matrix, for both extracted and external features. The external feature elements have the smallest values in the chart, which shows that they are less correlated with the other features. Our proposed model concatenates these temporal features, begin time-stamp and previous activity, with the features extracted by the RNN, and it outperforms the existing SoTA models with an F1 score of 0.917 on the Aruba dataset.

Figure 2.

Stacked column graph of absolute values from correlation matrix of features.

3. Methods

The proposed model uses a Bi-LSTM, a type of RNN, to extract feature vectors from an input data sequence; these are then combined with the external features, namely previous activity and begin time-stamp. The activity recognition is performed by an FCNN. The model structure was selected empirically from extensive experiments on different combinations of modules (see Section 4.5 and Table 7).

3.1. Model Architecture

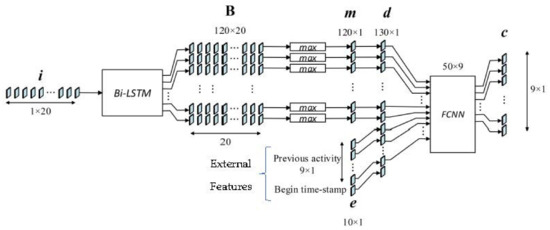

Figure 3 shows the architecture of the proposed model, where a pre-processed sequence of sensor data $i^{T} = \{i_0, i_1, i_2, \ldots, i_{19}\}$ (see Section 3.3) is input to a Bi-LSTM, which consists of an RNN with 60 nodes. The sensors do not report their status at a consistent frequency. Instead, they send event messages with an “ON” or “OFF” status upon activation. Therefore, the length of the sensor data sequence varies between activity instances. To align the sequence lengths, zero padding is added in front of the data when the sequence is shorter than 20. For sequences longer than 20, the last 20 elements of the sequence form the input data, formulated as follows:

$$i^{T} = \begin{cases} [z^{T}, s^{T}], & l < 20, \\ [s_{l-19}, s_{l-18}, \ldots, s_{l}], & l \geq 20, \end{cases} \tag{1}$$

where s is the activity sequence with length l.

Figure 3.

Architecture of the proposed model with its data flow.

The vector z represents the zero padding which converts the length of the input sequence to 20 if it was shorter than 20.
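The padding and truncation rule can be illustrated with a minimal Python sketch. It assumes that each sensor event has already been encoded as a non-zero integer, so that 0 can serve as the padding value; this encoding and the function name `fit_sequence` are illustrative assumptions, not part of the original method description.

```python
from typing import List, Sequence

INPUT_LEN = 20  # input length reported in the text


def fit_sequence(events: Sequence[int], length: int = INPUT_LEN) -> List[int]:
    """Return a sequence of exactly `length` elements.

    Shorter sequences are zero-padded at the front; longer sequences keep
    only their last `length` events. Event codes are assumed to be non-zero.
    """
    events = list(events)
    if len(events) >= length:
        return events[-length:]  # keep the most recent events
    return [0] * (length - len(events)) + events  # front zero padding


short = fit_sequence([3, 5, 7])           # 17 zeros followed by 3, 5, 7
long = fit_sequence(list(range(1, 26)))   # events 6..25 (the last 20)
```

Padding at the front keeps the most recent events aligned at the end of the input, which matches the choice of retaining the last 20 events for long sequences.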

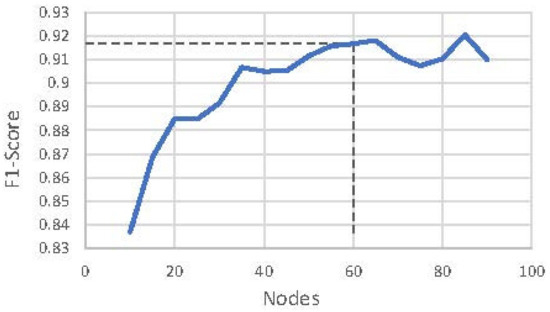

Empirically, we chose the Bi-LSTM over a unidirectional LSTM because of its higher performance; the F1 score of the LSTM-based model is 0.842 (see Table 7). Moreover, the number of nodes (60) was chosen empirically as the value at which the F1 score stabilizes (Figure 4). Because the RNN is bidirectional (Bi-LSTM), its output dimensionality (120) is twice its number of nodes (60).

Figure 4.

Graph of F1 score versus Bi-LSTM node.

The output vectors of the Bi-LSTM form a matrix B, where each row represents a feature value and each column is a feature vector generated by the Bi-LSTM from the corresponding element of the input sequence. Therefore, the size of matrix B (120 × 20) follows from the 60 nodes in each of the two directions of the Bi-LSTM and the 20 time steps in the input.

On top of this, the feature vector m is formed by a max-pooling layer that selects the maximum value from each row of matrix B. This eliminates the time-step dependency of the features for an activity and selects the maximum value of each feature after taking the whole sequence into account. Each element of the feature vector is selected as

$$m_k = \max(b_{k1}, b_{k2}, b_{k3}, \ldots, b_{k20}), \tag{2}$$

where k indexes the elements of the vector and runs over the rows of B.

The external feature vector e consists of previous activity p and begin time-stamp ts [Equation (3)]. Vector e and the extracted feature vector m are concatenated to form vector d [Equation (4)], which is then fed into the FCNN classifier:

$$e^{T} = [p^{T}, t_{s}], \tag{3}$$

$$d^{T} = [m^{T}, e^{T}]. \tag{4}$$
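The max pooling over time and the concatenation in Equations (3) and (4) can be sketched in plain Python as follows. The ordering of the one-hot positions in `ACTIVITIES`, the function names, and the use of the raw hour as the time-stamp value (without any scaling) are assumptions made for illustration only.

```python
from typing import List, Sequence

# The nine activity classes kept from the Aruba annotations; the ordering of
# the one-hot positions is an assumption made for this sketch.
ACTIVITIES = [
    "Meal_Preparation", "Relax", "Eating", "Work", "Sleeping",
    "Wash_Dishes", "Bed_to_Toilet", "Enter_Home", "Leave_Home",
]


def max_pool_over_time(B: Sequence[Sequence[float]]) -> List[float]:
    """m_k is the maximum over the time steps (columns) of row k of B."""
    return [max(row) for row in B]


def one_hot(activity: str) -> List[float]:
    """Nine-element one-hot encoding of the previous activity p."""
    return [1.0 if name == activity else 0.0 for name in ACTIVITIES]


def build_classifier_input(
    B: Sequence[Sequence[float]], previous_activity: str, begin_hour: int
) -> List[float]:
    """Concatenate m (120 values) with e = [p, ts] (9 + 1 values) to form d."""
    m = max_pool_over_time(B)
    e = one_hot(previous_activity) + [float(begin_hour)]
    return m + e


# Toy 120 x 20 matrix standing in for the Bi-LSTM output.
B = [[float(r * 20 + c) for c in range(20)] for r in range(120)]
d = build_classifier_input(B, "Sleeping", 23)  # 130-element vector
```

With 120 pooled features, a nine-element one-hot vector, and one time-stamp value, the classifier input has 130 elements.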

Previous activity p is given as a 9 × 1 one-hot vector in which each element represents an activity. Begin time-stamp ts is the hour at which the current activity begins, and it is associated with the current activity; the current activity is also known to depend on the previous activity. Table 1 tabulates the number of previous-activity instances with respect to the current activity in the balanced dataset, where the values show a clear association between the current activity and the one that preceded it; for example, Sleeping mostly precedes Bed_to_Toilet. Moreover, the stacked column chart of absolute values (Figure 2) from the correlation matrix of all features, both extracted and external, reveals that the previous-activity vector elements and the begin time-stamp are less correlated with the other features.

The number of instances of begin time-stamp in terms of daily hours is represented in Figure 1, where the three-dimensional graph exposes the associations between activities and their starting time interval.

The FCNN performs the classification and consists of a 50-node hidden layer and a nine-node output layer. The activation functions of the hidden and output layers are ReLU and sigmoid, respectively. The input vector d consists of three components [Equations (3) and (4)]: the feature vector m, the previous activity p, and the begin time-stamp ts. The fully connected classification network is defined as

where ah and ao are the outputs of the hidden and output layers, respectively, of the network.
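For reference, a standard two-layer formulation that is consistent with this description (50 ReLU hidden nodes, nine sigmoid output nodes, and a 130-element input d) can be written as below. The weight and bias symbols $W_h$, $b_h$, $W_o$, and $b_o$ are notation assumed here for illustration; they do not appear in the text above.

```latex
% Assumed notation: W_h in R^{50 x 130}, b_h in R^{50},
%                   W_o in R^{9 x 50},   b_o in R^{9}.
\begin{aligned}
a_h &= \mathrm{ReLU}(W_h d + b_h), \\
a_o &= \sigma(W_o a_h + b_o),
\end{aligned}
```

Under this reading, the predicted activity would be the class whose entry in $a_o$ is largest.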

3.2. Dataset

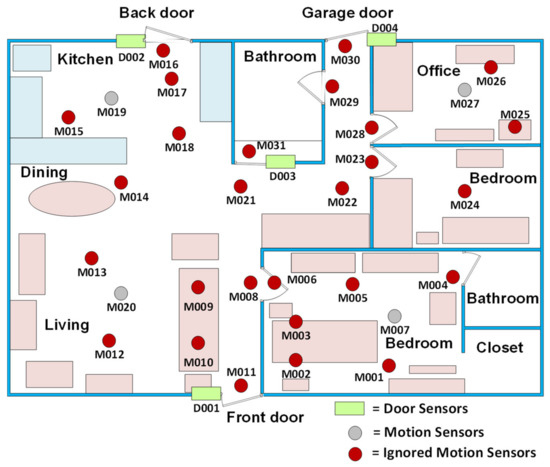

We used the Aruba open dataset from the CASAS smart home project [76] to train and evaluate our model. CASAS assembled 64 open datasets from equipped smart houses inhabited by single or multiple residents for certain amounts of time. The duration of occupancy and the frequent use of the dataset in model evaluations led us to use the Aruba testbed dataset in the present work. As shown in Figure 5, Aruba is a smart house in which an elderly lady lived alone for seven months. The house is equipped with 31 wireless binary motion sensors, four temperature sensors, and four door sensors. Because we used only the data from the 31 motion sensors and four door sensors, the temperature sensors are not depicted in Figure 5 [77].

Figure 5.

Aruba testbed layout where motion and door sensors are depicted.

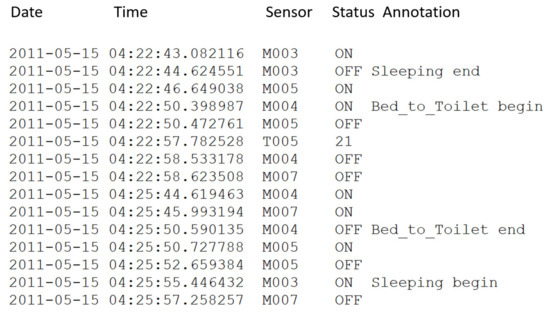

The open dataset is formatted as shown in Figure 6, where each instance consists of date, time, sensor status, and annotations. The dataset covers 219 days, from 4 November 2010 to 11 June 2011, and it comprises 1′719′557 registered events in total. Figure 6 lists the sensor instances of two actions, namely Sleeping and Bed_to_Toilet, which happened during the night of 15 May 2011. Here, the activity Bed_to_Toilet happens between two Sleeping activities, which is intuitive.

Figure 6.

Format of Aruba dataset.

3.3. Preprocessing of Dataset

For a fair comparison, we used the same data preprocessing method as the one described in [72]. The Aruba dataset contains 1′719′557 raw sensor samples in total. First, we removed all irrelevant samples, i.e., temperature sensor samples, from the dataset, leaving 1′602′981 samples. Moreover, for consistent formatting, the door sensor statuses of “OPEN” and “CLOSE” were replaced with “ON” and “OFF”, respectively. Various incorrect labels of the sensor status “OFF” (e.g., “OF” and “OFF5”) were replaced with “OFF”. After these steps, the external features of each activity, i.e., previous activity and begin time-stamp, were extracted from the dataset and merged with the sensor data of each activity instance. We employed 10-fold cross-validation to evaluate the proposed method, ignoring the activities of Housekeeping and Respirate because they had only 33 and six samples, respectively. The Aruba dataset is imbalanced in terms of the number of samples for each class, ranging from six to 2919 as shown in Table 2. To balance the dataset, 60 samples were selected randomly from each class; thus, six samples per class were allocated to each fold. Therefore, in each fold evaluation, 90% (54 samples) and 10% (six samples) of the samples of each class were used as the training set and the testing set, respectively.
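The status clean-up and the balanced fold allocation can be sketched as follows. This is a minimal illustration under stated assumptions: the function names, the prefix rule used to catch malformed “OFF” labels, and the fixed random seed are hypothetical and are not taken from the preprocessing code of [72].

```python
import random
from typing import Dict, Hashable, List, Sequence, Tuple


def normalize_status(status: str) -> str:
    """Map door statuses and malformed OFF labels to plain ON/OFF."""
    s = status.strip().upper()
    if s == "OPEN":
        return "ON"
    if s == "CLOSE" or s.startswith("OF"):  # covers "OF", "OFF", "OFF5"
        return "OFF"
    return s


def balanced_folds(
    instances_by_class: Dict[Hashable, Sequence],
    per_class: int = 60,
    n_folds: int = 10,
    seed: int = 0,
) -> List[List[Tuple[Hashable, object]]]:
    """Draw `per_class` random instances per class and spread them over folds.

    Each fold receives per_class / n_folds instances of every class, so it
    can serve as a class-balanced test set while the other folds form the
    training set.
    """
    rng = random.Random(seed)
    folds: List[List[Tuple[Hashable, object]]] = [[] for _ in range(n_folds)]
    for label, instances in instances_by_class.items():
        chosen = rng.sample(list(instances), per_class)
        for i, instance in enumerate(chosen):
            folds[i % n_folds].append((label, instance))
    return folds


# Toy data: nine classes with 100 placeholder instances each.
toy = {f"class_{c}": list(range(100)) for c in range(9)}
folds = balanced_folds(toy)
```

With nine classes, each fold holds 6 × 9 = 54 test instances, and the remaining nine folds supply 54 training instances per class.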

Table 2.

Number of Instances for Activities.

Figure 7 plots the F1 score against the input data length. Empirically, the highest F1 score of 0.917 is obtained with an input data length of 20. Therefore, we set the input data length to 20, which also limits the computational complexity. When the data length of an activity is less than 20, zero padding is used to fit the sequence to the model input.

Figure 7.

Sample distribution in terms of sensor events.

3.4. Evaluation Measures

We assessed our model with stratified 10-fold cross-validation, one of the most commonly used model-validation techniques. For a fair comparison, we selected 60 samples randomly from each activity sample set, resulting in 540 random samples in total. The selected sample set was partitioned into two subsets, namely the training set and the testing set, with 90% and 10%, respectively, of the samples of each activity. Therefore, 54 and six samples of each activity were allocated to the training and testing sets, respectively.

The proposed model was evaluated in terms of the following measures: Recall, Precision, F1 score, Specificity, Accuracy, and Error. These measures were calculated from the model’s numbers of true and false predictions: TP (true positive), TN (true negative), FP (false positive), and FN (false negative) [62]. The reported evaluation scores are averages over the results of five different models trained and tested on five different sample sets.
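The measures can be computed from the four counts as in the sketch below. It uses the standard textbook definitions, which are assumed here to match those of [62]; the function name and the zero-division fallback are illustrative choices.

```python
from typing import Dict


def evaluation_measures(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    """Standard per-class measures derived from a one-vs-rest confusion count."""

    def ratio(numerator: float, denominator: float) -> float:
        # Fall back to 0.0 when a measure is undefined (empty denominator).
        return numerator / denominator if denominator else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    specificity = ratio(tn, tn + fp)
    f1 = ratio(2 * precision * recall, precision + recall)
    accuracy = ratio(tp + tn, tp + tn + fp + fn)
    return {
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
        "accuracy": accuracy,
        "error": 1.0 - accuracy,
    }


# Toy one-vs-rest counts for one class in a 54-sample test fold.
scores = evaluation_measures(tp=6, tn=47, fp=1, fn=0)
```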

3.5. Technical Specifications

Model training was performed on a DGX1 supercomputer, whereas the testing was performed on an ordinary server computer. The server computer was a Dell Workstation 7910 with a six-core Intel(R) Xeon(R) CPU E5-2603 v3 @ 1.60 GHz, 16 GB RAM, and GTX Titan X GPU.

4. Results

We used stratified 10-fold cross-validation to evaluate the models. Each row of Table 3, Table 4, and Table 7 represents the weighted average of the 10-fold evaluation results of five different models that are trained on five different datasets.

Table 3.

Confusion Matrix for Activities and Evaluation of Model with Previous Activity Feature.

Table 4.

Confusion Matrix for Activities and Evaluation of model without Previous Activity Feature.

4.1. Activity Recognition with Extra Features

Table 3 presents the results for the model with the external features of previous activity and begin time-stamp. The normalized values of the confusion matrix for the activities are presented on the left side of the table, while the performance measures of Precision, Recall, Specificity, F1 score, Accuracy, and Error are presented on the right side. The best performance of the model was achieved for the Enter_Home activity, where its Precision, Recall, Specificity, F1 score and Accuracy were 0.997, 1.000, 1.000, 0.998, and 99.96%, respectively. The second and third-best performances were for the Bed_to_Toilet and Leave_Home activities, with F1 scores of 0.990 and 0.987, respectively. The worst-recognized activities were Meal_Preparation and Wash_Dishes, with F1 scores of 0.824 and 0.821, respectively. The F1 score of Wash_Dishes was 17.6% lower than that of the best-recognized activity (Enter_Home).

4.2. Activity Recognition without Previous Activity Feature

Table 4 tabulates the results for the model without accounting for the external feature of previous activity. The best-recognized activity was Bed_to_Toilet, where its Precision, Recall, Specificity, F1 score, and Accuracy were 0.907, 0.973, 0.988, 0.939, and 98.59%, respectively. The second-best-recognized activity was Sleeping, where its Precision, Recall, Specificity, F1 score, and Accuracy were 0.952, 0.793, 0.995, 0.865, and 97.26%, respectively. The worst-recognized activity was Leave_Home, with an F1 score of 0.526, which was 44% lower than the highest F1 score of the Bed_to_Toilet activity.

Furthermore, the best F1 score of this model was 5.9% lower than the highest F1 score of the model with the previous activity feature (Table 3). On the other hand, the lowest F1 score of this model was 35.9% lower than the worst F1 score of the model with the previous activity.

4.3. Classification Results on the Remaining Dataset

The confusion matrix and the Recall measure on the remaining dataset are presented in Table 5. The best-recognized activities are Enter_Home, Bed_to_Toilet and Leave_Home, with Recall values of 0.998, 0.997 and 0.990, respectively. The worst-recognized activities are Meal_Preparation and Wash_Dishes, with Recall values of 0.825 and 0.860, respectively. The Recall of the worst-recognized activity, Meal_Preparation, is 17.3% lower than that of the best-recognized activity. The overall average Recall on the remaining dataset is 0.923.

Table 5.

Confusion Matrix for Remaining Activities and Recall Measure.

4.4. Real-Time Activity Recognition with a Predicted Previous Activity Feature

For all the previously mentioned experiments, the previous activity feature was the ground truth extracted from the dataset. Table 6 presents weekly activity recognition results for a real-life scenario on the whole six-month dataset, where the previous activity feature was predicted by the proposed method rather than taken from the ground truth. For the sake of simplicity, we chose to report the weekly results because the daily results were similar. Each week was set to start with a Sleeping activity, and this first activity, taken from the ground truth, was used as the previous activity feature for the second activity. After the second activity is predicted, each prediction is used as the previous activity feature for the next activity. Table 6 tabulates the confusion matrix and the Recall measure of the test result (Recall is chosen because the dataset is severely imbalanced). The best-recognized activities are Work, Leave_Home and Enter_Home, with Recall values of 0.977, 0.972 and 0.970, respectively. The worst-recognized activities are Meal_Preparation and Eating, with Recall values of 0.714 and 0.850, respectively.
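The chained use of predictions can be sketched as a simple loop. Here `classify` is a hypothetical callable standing in for the trained model, and the representation of an activity instance as a pair of sensor-event sequence and begin hour is an assumption made for illustration.

```python
from typing import Callable, List, Sequence, Tuple

# (sensor-event sequence, begin hour) for one activity instance; this
# representation is an assumption of the sketch.
Instance = Tuple[Sequence[int], int]
Classifier = Callable[[Sequence[int], str, int], str]


def recognize_week(
    instances: Sequence[Instance],
    classify: Classifier,
    first_activity: str = "Sleeping",
) -> List[str]:
    """Predict a week of activities, feeding each prediction forward.

    Only the first activity of the week is taken from the ground truth;
    every later step uses the previous prediction as its external feature.
    """
    predictions = [first_activity]
    previous = first_activity
    for events, begin_hour in instances[1:]:
        previous = classify(events, previous, begin_hour)
        predictions.append(previous)
    return predictions


def toy_classifier(events: Sequence[int], previous: str, hour: int) -> str:
    """Stand-in for the trained model: alternates between two activities."""
    return "Bed_to_Toilet" if previous == "Sleeping" else "Sleeping"


week = [([1], 0), ([2], 2), ([3], 3), ([4], 7)]
predicted = recognize_week(week, toy_classifier)
```

Because every prediction becomes the next step's input, a single misclassification can propagate along the chain, which is consistent with the lower Recall values reported for this setting.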

Table 6.

Confusion Matrix for Activities and Evaluation of model with Predicted Previous Activity Feature.

For comparison, the best Recall of the model with the predicted previous activity feature is 2.14% lower than the best Recall of the model with the ground-truth previous activity feature (Table 5), while the Recall of the worst-recognized activity with the predicted feature is 15.54% lower than that of the worst-recognized activity with the ground-truth feature (Table 5).

4.5. Comparison Study of Proposed Model with Different Combinations of Internal Modules

Table 7 represents the 10-fold cross-validation results of a comparative study of the proposed model with and without external features for unidirectional and bidirectional LSTM RNNs with different numbers of nodes. The best F1 score is 0.917 for model 3, which has a Bi-LSTM with 60 nodes as a feature extractor and two external features, previous activity and begin time-stamp. The second-best F1 score was 0.905 for model 4, which consists of external features and a Bi-LSTM with 50 nodes. Furthermore, the third-best F1 score was 0.892 for model 7, which has only one external feature (previous activity) and a Bi-LSTM with 60 nodes. The worst F1 score was 0.495 for model 2, which has a 60-node unidirectional LSTM feature extractor but no external features.

Table 7.

Comparison Results of Proposed Model with Different Combinations of Modules.

4.6. Training vs. Testing Accuracy

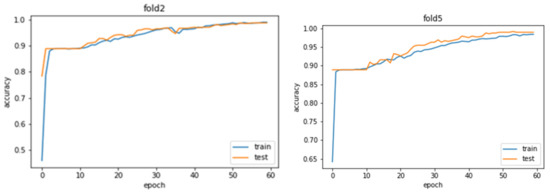

The model was trained and tested with 10-fold cross-validation (see Section 3.2, Section 3.3 and Section 3.4). Figure 8 shows the training and testing curves for accuracy and loss over 60 epochs. The training and testing curves converge, and the final values exceed 0.9 for accuracy and are lower than 0.1 for the loss function.

Figure 8.

Train and Test accuracy.

4.7. Classification Latency

The average classification latency of the model for each activity is 26.4 ms, and the maximum latency is 30.3 ms, as presented in Table 8. Because even the maximum classification latency is far below the industrial IoT system latency requirement of 100 ms [80], the model can be used in an IoT-based real-time privacy-preserving ADL recognition system. The latency measurement was performed on a server with moderate specifications (Section 3.5).
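A latency measurement of this kind can be sketched with a wall-clock timer around each classification call. The text does not describe the measurement procedure, so the function below is only one plausible approach; `predict` is a hypothetical callable standing in for the trained model.

```python
import time
from typing import Callable, Iterable, Tuple


def measure_latency_ms(
    predict: Callable[[object], object], inputs: Iterable[object]
) -> Tuple[float, float]:
    """Return (average, maximum) per-call latency in milliseconds."""
    latencies = []
    for x in inputs:
        start = time.perf_counter()
        predict(x)  # the classification call being timed
        latencies.append((time.perf_counter() - start) * 1000.0)
    if not latencies:
        raise ValueError("at least one input is required")
    return sum(latencies) / len(latencies), max(latencies)


# Toy stand-in for the model: sums a 20-element sequence.
average_ms, maximum_ms = measure_latency_ms(sum, [[1] * 20 for _ in range(5)])
```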

Table 8.

Maximum Classification Latency for Each Activity.

4.8. Comparison with SoTA Models

Table 9 (fair) and Table 10 (non-fair) compare the proposed model with existing SoTA models that were evaluated on the CASAS Aruba dataset: [62] by Gochoo et al., [72] by Yatbaz et al., and [78,79] by Machot et al. Table 9 represents a fair comparison with two models [62,72] that were evaluated with the same technique in their studies (Section 3.4). The proposed model outperformed these two SoTA models in a fair comparison with an F1 score of 0.917, which is 6.26% and 16% higher than those of the second-best-performing model STA [72] and our previous study [62], respectively (Table 9).

Table 9.

Comparing Present Model with Existing Models.

Table 10.

Non-fair Comparing Present Model with Existing Models.

Machot et al. [78,79] employed an SVM and an RNN, respectively, as the classifier, and both models were evaluated on the CASAS Aruba dataset. While both studies [78,79] used 10-fold cross-validation to evaluate their classification models, [78] used the imbalanced dataset with a classification penalty and [79] used the synthetic minority oversampling technique [81] to balance the dataset. Table 10 shows that our model achieves an F1 score that is 11.8% and 7.88% higher than those of the models in [78,79], respectively. Although [72], the latest and highest performer on the classification task on the CASAS Aruba dataset, claims to outperform the models of [78,79], Table 10 is not a fair comparison because of the different sampling methods used in [78,79] for preparing the datasets.
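The relative margins quoted in this section can be reproduced from the F1 scores stated in the text (0.917 for the proposed model, 0.863 for STA [72], and 0.82 for the SVM model [78]). The helper name below is illustrative.

```python
def relative_gain_percent(new_score: float, baseline: float) -> float:
    """Relative improvement of `new_score` over `baseline`, in percent."""
    return (new_score - baseline) / baseline * 100.0


gain_vs_sta = relative_gain_percent(0.917, 0.863)  # about 6.26
gain_vs_svm = relative_gain_percent(0.917, 0.82)   # about 11.8
```

These are relative gains, computed with respect to the baseline score, rather than differences in percentage points.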

5. Discussion

Concatenating the external features of previous activity and begin time-stamp to the extracted features from Bi-LSTM gives remarkable results on classifying ADLs from binary sensor data. Our model outperforms all the SoTA models on the Aruba testbed dataset, where its F1 scores range between 0.821 and 0.998 with an average of 0.917.

The model predicts some activities much better than do other models, such as Meal_Preparation, Eating, Wash_Dishes, Enter_Home, and Leave_Home, because of adding extra features, especially previous activity. For example, the Enter_Home activity is classified with 99.96% accuracy (Table 3) because it always occurs after Leave_Home.

We chose 60 nodes for the Bi-LSTM as a trade-off between computational complexity and accuracy (see Figure 4). As can be seen from Table 7, the F1 score of the model decreases to 0.905 and 0.855 when only 50 and 20 nodes are used, respectively.

The training and testing accuracy and loss curves indicate a good fit of the model: they converge, with accuracy values greater than 0.9 and loss values less than 0.1 (Figure 8).

The worst activity recognition of our model is on Wash_Dishes with an F1 score of 0.821; nevertheless, this is 0.12% and 3.92% higher than the average F1 scores of existing models [62,78], respectively.

The model was also tested on the remaining data, which were not part of the balanced dataset used to train and test the model. Because this remaining part of the dataset is imbalanced, Recall rather than the F1 score is taken as the main performance measure, and it shows reasonable results.
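The preference for Recall on imbalanced data can be illustrated with a small worked example. The counts and rates below are invented for illustration and are not results from this study. A classifier with fixed per-class rates is applied to a balanced and an imbalanced split: Recall of the minority class depends only on that class's own samples and stays the same, whereas precision (and therefore F1) falls as false positives from the majority class grow.

```python
def precision_recall_f1(tp, fp, fn):
    """Return precision, recall and F1 for one class from raw counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def minority_metrics(n_minority, n_majority, hit_rate=0.9, false_alarm_rate=0.05):
    """Metrics for the minority class given hypothetical per-class rates."""
    tp = hit_rate * n_minority            # minority samples correctly recognized
    fn = n_minority - tp                  # minority samples missed
    fp = false_alarm_rate * n_majority    # majority samples mislabeled as minority
    return precision_recall_f1(tp, fp, fn)


balanced = minority_metrics(n_minority=100, n_majority=100)
imbalanced = minority_metrics(n_minority=100, n_majority=2000)
print("balanced   P=%.3f R=%.3f F1=%.3f" % balanced)
print("imbalanced P=%.3f R=%.3f F1=%.3f" % imbalanced)
```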

The model was also evaluated on weekly activity sequences with a predicted (rather than ground-truth) previous activity feature to simulate real-life activity recognition (Table 6). The results show a reasonably high Recall of 0.927. However, as expected, the best and worst Recall values degraded by 2.14% and 15.54%, respectively, compared with the model performance with the ground-truth previous activity feature (Table 5).

As well as outperforming the SoTA models, the classification latency of the proposed model is less than 30 ms, which is fast enough for an IoT-based real-time privacy-preserving activity recognition system.
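As an illustration of how such a latency figure might be checked, the following sketch times repeated calls to a classifier with a wall-clock timer and reports the median. The function `dummy_classify` is a hypothetical stand-in for the trained model's inference call, and the 30 ms budget is taken from the text above; the measurement procedure itself is an assumption, not the one used in this study.

```python
import statistics
import time


def measure_latency_ms(classify, sample, repeats=100):
    """Return the median wall-clock time of classify(sample) in milliseconds."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        classify(sample)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings)


def dummy_classify(sample):
    """Hypothetical stand-in for the trained model's inference call."""
    return sum(sample) > 0


latency_ms = measure_latency_ms(dummy_classify, [0.0] * 120)
print(f"median latency: {latency_ms:.3f} ms; within 30 ms budget: {latency_ms < 30.0}")
```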

The model’s worst performance is on classifying between the activities of Meal_Preparation and Wash_Dishes due to the two actions occurring in the same location in the house, namely the kitchen. This classification could be improved by placing other sensors (e.g., temperature, humidity) in the kitchen.

Although the external feature begin time-stamp improved the performance of the model, its contribution to the F1 score was not as great as that of the external feature previous activity (Table 7). Although the external feature previous activity has a prominent effect on the model performance, it might lead to misclassification when a wrong previous activity is generated automatically by the previous classification step.
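The way a wrong previous activity can propagate may be illustrated with a toy sketch. The rule-based `toy_classify` function, the segment names, and the deliberate error on the "hall" segment are hypothetical and only stand in for the trained network. The loop either feeds back the ground-truth previous activity or the model's own previous prediction.

```python
def recognize_sequence(segments, classify, true_labels=None, start="Other"):
    """Classify segments in order, feeding back the previous activity.

    If true_labels is given, the ground-truth previous activity is fed back;
    otherwise the model's own previous prediction is used.
    """
    predictions = []
    previous = start
    for index, features in enumerate(segments):
        predicted = classify(features, previous)
        predictions.append(predicted)
        previous = true_labels[index] if true_labels is not None else predicted
    return predictions


def toy_classify(features, previous_activity):
    """Hypothetical stand-in for the trained model (not the network from this study)."""
    if features == "hall":
        return "Leave_Home"  # deliberate misclassification to seed an error
    if features == "door":
        # The door sensor alone is ambiguous; the previous activity resolves it.
        return "Enter_Home" if previous_activity == "Leave_Home" else "Leave_Home"
    return "Other"


segments = ["hall", "door", "door"]
truth = ["Other", "Leave_Home", "Enter_Home"]

with_truth = recognize_sequence(segments, toy_classify, true_labels=truth)
with_predicted = recognize_sequence(segments, toy_classify)
print(with_truth)      # one error, confined to the first segment
print(with_predicted)  # the first error also flips the two following door events
```

In this toy case a single early mistake corrupts every later decision that relies on the fed-back label, which is the mechanism behind the Recall degradation reported for predicted previous activities.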

Owing to the lack of similar datasets, our model was trained and tested only on the CASAS dataset. If the model is employed to classify the daily activities of a new resident, it will need a learning phase to capture that resident's daily activity pattern.

6. Conclusions and Future Works

We proposed a privacy-preserving activity recognition model concatenating Bi-LSTM extracted features and external temporal features for classifying the ADLs of an elderly person living alone. The dataset used to train and test the model was the CASAS Aruba open dataset, which was collected from binary sensors in a smart home in which an elderly resident lived alone for seven months. The model outperformed the existing SoTA models with the highest F1 score of 0.917, which was 6.26% better than that of the best existing model. Moreover, a classification latency of less than 30 ms allows our model to be placed in a server of an IoT-based ADL recognition system.

For future work, the worst activity classifications, namely those of Meal_Preparation and Wash_Dishes, both of which take place in the kitchen, should be improved by adding other sensors such as a thermometer, a humidity meter, and an ammeter for electrical appliances. Moreover, a multi-resident activity recognition model should be developed for elderly-monitoring IoT systems.

Author Contributions

Conceptualization, M.G. and T.-H.T.; methodology, M.G., L.B. and W.-X.Z.; software, L.B. and W.-X.Z.; validation, L.B. and W.-X.Z.; formal analysis, M.G. and L.B.; resources, F.S.A.; writing—original draft preparation, L.B.; writing—review and editing, M.G., T.-H.T. and J.-W.H.; supervision, T.-H.T. and M.G.; project administration, T.-H.T. and M.G.; funding acquisition, T.-H.T. and M.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the College of Information Technology, United Arab Emirates University (UAEU), grant number 31T136.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Institutional Review Board of Washington State University (protocol code and date of approval are not available).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The Aruba dataset is provided by the Center for Advanced Studies in Adaptive Systems (CASAS) in the School of Electrical Engineering and Computer Science of Washington State University (http://casas.wsu.edu/datasets/). The dataset was accessed on 11 July 2021.

Acknowledgments

The authors thank the AI and Robotics Lab of United Arab Emirates University for offering facilities such as a DGX-1 supercomputer for this research work.

Conflicts of Interest

The authors declare no conflict of interest.

References

- United Nations Department of Economic and Social Affairs. World Population Prospects 2019; United Nations: New York, NY, USA, 2019; ISBN 9789211483161.

- Grundy, E.M.; Murphy, M. Population ageing in Europe. In Oxford Textbook of Geriatric Medicine; Jean-Pierre, M., Lynn, B.B., Martin Finbarr, C., Walston Jeremy, D., Eds.; Oxford University Press: Oxford, UK, 2017; p. 11.

- Mathers, C.D.; Stevens, G.A.; Boerma, T.; White, R.A.; Tobias, M.I. Causes of international increases in older age life expectancy. Lancet 2015, 385, 540–548.

- Cutler, D.; Deaton, A.; Lleras-Muney, A. The determinants of mortality. J. Econ. Perspect. 2006, 20, 97–120.

- Peltzman, S. Mortality inequality. J. Econ. Perspect. 2009, 23, 175–190.

- Preston, S.H. The changing relation between mortality and level of economic development. Popul. Stud. 1975, 29, 231–248.

- Johnstone, G.; Dickins, M.; Lowthian, J.; Renehan, E.; Enticott, J.; Mortimer, D.; Ogrin, R. Interventions to improve the health and wellbeing of older people living alone: A mixed-methods systematic review of effectiveness and accessibility. Ageing Soc. 2021, 41, 1587–1636.

- Kwon, H.J.; Jeong, J.U.; Choi, M. Social relationships and suicidal ideation among the elderly who live alone in Republic of Korea: A logistic model. Inq. J. Health Care Organ. Provis. Financ. 2018, 55, 0046958018774177.

- Zali, M.; Farhadi, A.; Soleimanifar, M.; Allameh, H.; Janani, L. Loneliness, fear of falling, and quality of life in community-dwelling older women who live alone and live with others. Educ. Gerontol. 2017, 43, 582–588.

- Snell, K.D.M. The rise of living alone and loneliness in history. Soc. Hist. 2017, 42, 2–28.

- Reher, D.; Requena, M. Living alone in later life: A global perspective. Popul. Dev. Rev. 2018, 44, 427–454.

- Stepler, R. Smaller Share of Women Ages 65 and Older Are Living Alone. Available online: http://www.pewsocialtrends.org/2016/02/18/smaller-share-of-women-ages-65-and-older-are-living-alone/ (accessed on 7 January 2017).

- Lee, S.M.; Edmonston, B. Living alone among older adults in Canada and the U.S. Healthcare 2019, 7, 68.

- Toot, S.; Swinson, T.; Devine, M.; Challis, D.; Orrell, M. Causes of nursing home placement for older people with dementia: A systematic review and meta-analysis. Int. Psychogeriatr. 2017, 29, 195–208.

- Tabue Teguo, M.; Simo-Tabue, N.; Stoykova, R.; Meillon, C.; Cogne, M.; Amiéva, H.; Dartigues, J.F. Feelings of loneliness and living alone as predictors of mortality in the elderly: The PAQUID study. Psychosom. Med. 2016, 78, 904–909.

- Jokanovic, B.; Amin, M.; Ahmad, F. Radar fall motion detection using deep learning. In Proceedings of the 2016 IEEE Radar Conference (RadarConf), Philadelphia, PA, USA, 2–6 May 2016; pp. 1–6.

- Sánchez, V.G.; Lysaker, O.M.; Skeie, N.O. Human behaviour modelling for welfare technology using hidden Markov models. Pattern Recognit. Lett. 2019, 137, 71–79.

- Tzounis, A.; Katsoulas, N.; Bartzanas, T.; Kittas, C. Internet of things in agriculture, recent advances and future challenges. Biosyst. Eng. 2017, 164, 31–48.

- Ansari, S.; Aslam, T.; Poncela, J.; Otero, P.; Ansari, A. Internet of Things-Based Healthcare Applications; IGI Global: Hershey, PA, USA, 2019; pp. 1–28.

- Risteska Stojkoska, B.L.; Trivodaliev, K.V. A review of internet of things for smart home: Challenges and solutions. J. Clean. Prod. 2017, 140, 1454–1464.

- Laplante, P.A.; Laplante, N. The internet of things in healthcare: Potential applications and challenges. IT Prof. 2016, 18, 2–4.

- Gochoo, M.; Tan, T.H.; Huang, S.C.; Batjargal, T.; Hsieh, J.W.; Alnajjar, F.S.; Chen, Y.F. Novel IoT-based privacy-preserving yoga posture recognition system using low-resolution infrared sensors and deep learning. IEEE Internet Things J. 2019, 6, 7192–7200.

- Maskeliunas, R.; Damaševicius, R.; Segal, S. A review of internet of things technologies for ambient assisted living environments. Futur. Internet 2019, 11, 259.

- Padikkapparambil, J.; Ncube, C.; Singh, K.K.; Singh, A. Internet of Things technologies for elderly health-care applications. In Emergence of Pharmaceutical Industry Growth with Industrial IoT Approach; Academic Press: Cambridge, MA, USA, 2020; pp. 217–243. ISBN 9780128195932.

- Baig, M.M.; Afifi, S.; GholamHosseini, H.; Mirza, F. A systematic review of wearable sensors and IoT-based monitoring applications for older adults—A focus on ageing population and independent living. J. Med. Syst. 2019, 43, 233.

- Khan, I.; Amaro, A.C.; Oliveira, L. IoT-based systems for improving older adults' wellbeing: A systematic review. In Proceedings of the 2019 14th Iberian Conference on Information Systems and Technologies (CISTI), Coimbra, Portugal, 19–22 June 2019; pp. 1–6.

- Rodríguez, I.; Cajamarca, G.; Herskovic, V.; Fuentes, C.; Campos, M. Helping elderly users report pain levels: A study of user experience with mobile and wearable interfaces. Mob. Inf. Syst. 2017, 2017, 9302328.

- Wang, Z.; Yang, Z.; Dong, T. A review of wearable technologies for elderly care that can accurately track indoor position, recognize physical activities and monitor vital signs in real time. Sensors 2017, 17, 341.

- Jalal, A.; Quaid, M.A.K.; Hasan, A.S. Wearable sensor-based human behavior understanding and recognition in daily life for smart environments. In Proceedings of the 2018 International Conference on Frontiers of Information Technology, FIT, Islamabad, Pakistan, 17–19 December 2018; pp. 105–110.

- Brodie, M.A.D.; Coppens, M.J.M.; Lord, S.R.; Lovell, N.H.; Gschwind, Y.J.; Redmond, S.J.; Del Rosario, M.B.; Wang, K.; Sturnieks, D.L.; Persiani, M.; et al. Wearable pendant device monitoring using new wavelet-based methods shows daily life and laboratory gaits are different. Med. Biol. Eng. Comput. 2016, 54, 663–674.

- Liu, J.; Sohn, J.; Kim, S. Classification of daily activities for the elderly using wearable sensors. J. Healthc. Eng. 2017, 2017, 893816.

- Bianchi, V.; Bassoli, M.; Lombardo, G.; Fornacciari, P.; Mordonini, M.; De Munari, I. IoT wearable sensor and deep learning: An integrated approach for personalized human activity recognition in a smart home environment. IEEE Internet Things J. 2019, 6, 8553–8562.

- Fang, Y.M.; Chang, C.C. Users' psychological perception and perceived readability of wearable devices for elderly people. Behav. Inf. Technol. 2016, 35, 225–232.

- Özdemir, A.T. An analysis on sensor locations of the human body for wearable fall detection devices: Principles and practice. Sensors 2016, 16, 1161.

- Thilo, F.J.S.; Bilger, S.; Halfens, R.J.G.; Schols, J.M.G.A.; Hahn, S. Involvement of the end user: Exploration of older people's needs and preferences for a wearable fall detection device–A qualitative descriptive study. Patient Prefer. Adherence 2017, 11, 11–22.

- Al-khafajiy, M.; Baker, T.; Chalmers, C.; Asim, M.; Kolivand, H.; Fahim, M.; Waraich, A. Remote health monitoring of elderly through wearable sensors. Multimed. Tools Appl. 2019, 78, 24681–24706.

- Schlachetzki, J.C.M.; Aminian, K.; Klucken, J.; Kohl, Z.; Marxreiter, F.; Barth, J.; Reinfelder, S.; Gassner, H.; Eskofier, B.M.; Winkler, J.; et al. Wearable sensors objectively measure gait parameters in Parkinson's disease. PLoS ONE 2017, 12, e0183989.

- Grossi, G.; Lanzarotti, R.; Napoletano, P.; Noceti, N.; Odone, F. Positive technology for elderly well-being: A review. Pattern Recognit. Lett. 2019, 137, 61–70.

- Kekade, S.; Hseieh, C.H.; Islam, M.M.; Atique, S.; Mohammed Khalfan, A.; Li, Y.C.; Abdul, S.S. The usefulness and actual use of wearable devices among the elderly population. Comput. Methods Programs Biomed. 2018, 153, 137–159.

- Kononova, A.; Li, L.; Kamp, K.; Bowen, M.; Rikard, R.V.; Cotten, S.; Peng, W. The use of wearable activity trackers among older adults: Focus group study of tracker perceptions, motivators, and barriers in the maintenance stage of behavior change. J. Med. Internet Res. 2019, 7, e9832.

- Habibipour, A.; Padyab, A.; Ståhlbröst, A. Social, ethical and ecological issues in wearable technologies. In Proceedings of the 25th Americas Conference on Information Systems, Cancun, Mexico, 15–17 August 2019; pp. 1–10.

- Papagiannaki, A.; Zacharaki, E.I.; Kalouris, G.; Kalogiannis, S.; Deltouzos, K.; Ellul, J.; Megalooikonomou, V. Recognizing physical activity of older people from wearable sensors and inconsistent data. Sensors 2019, 19, 880.

- Nweke, H.F.; Teh, Y.W.; Al-garadi, M.A.; Alo, U.R. Deep learning algorithms for human activity recognition using mobile and wearable sensor networks: State of the art and research challenges. Expert Syst. Appl. 2018, 105, 233–261.

- Hassan, M.M.; Huda, S.; Uddin, M.Z.; Almogren, A.; Alrubaian, M. Human activity recognition from body sensor data using deep learning. J. Med. Syst. 2018, 42, 99.

- Hussain, F.; Hussain, F.; Ehatisham-Ul-Haq, M.; Azam, M.A. Activity-aware fall detection and recognition based on wearable sensors. IEEE Sens. J. 2019, 19, 4528–4536.

- Poularakis, S.; Avgerinakis, K.; Briassouli, A.; Kompatsiaris, I. Efficient motion estimation methods for fast recognition of activities of daily living. Signal Process. Image Commun. 2017, 53, 1–12.

- Zouba, N.; Boulay, B.; Bremond, F.; Thonnat, M. Monitoring activities of daily living (ADLs) of elderly based on 3D key human postures. Lect. Notes Comput. Sci. 2008, 5329, 37–50.

- Gabrielli, M.; Leo, P.; Renzi, F.; Bergamaschi, S. Action recognition to estimate Activities of Daily Living (ADL) of elderly people. In Proceedings of the 2019 IEEE 23rd International Symposium on Consumer Technologies (ISCT), Ancona, Italy, 19–21 June 2019; pp. 261–264.

- Mishra, S.R.; Mishra, T.K.; Sanyal, G.; Sarkar, A.; Satapathy, S.C. Real time human action recognition using triggered frame extraction and a typical CNN heuristic. Pattern Recognit. Lett. 2020, 135, 329–336.

- Lu, N.; Wu, Y.; Feng, L.; Song, J. Deep learning for fall detection: Three-dimensional CNN Combined with LSTM on video kinematic data. IEEE J. Biomed. Health Inform. 2018, 23, 314–323.

- Hbali, Y.; Hbali, S.; Ballihi, L.; Sadgal, M. Skeleton-based human activity recognition for elderly monitoring systems. IET Comput. Vis. 2017, 12, 16–26.

- Park, Y.J.; Ro, H.; Lee, N.K.; Han, T.D. Deep-cARe: Projection-based home care augmented reality system with deep learning for elderly. Appl. Sci. 2019, 9, 3897.

- Anitha, G.; Baghavathi Priya, S. Posture based health monitoring and unusual behavior recognition system for elderly using dynamic Bayesian network. Clust. Comput. 2019, 22, 13583–13590.

- Caine, K.; Šabanović, S.; Carter, M. The effect of monitoring by cameras and robots on the privacy enhancing behaviors of older adults. In Proceedings of the 7th Annual ACM/IEEE International Conference on Human-Robot Interaction (HRI'12), Boston, MA, USA, 5–8 March 2012; pp. 343–350.

- Gochoo, M.; Tan, T.H.; Velusamy, V.; Liu, S.H.; Bayanduuren, D.; Huang, S.C. Device-free non-privacy invasive classification of elderly travel patterns in a smart house using PIR sensors and DCNN. IEEE Sens. J. 2017, 18, 390–400.

- Boise, L.; Wild, K.; Mattek, N.; Ruhl, M.; Dodge, H.H.; Kaye, J. Willingness of older adults to share data and privacy concerns after exposure to unobtrusive home monitoring. Gerontechnology 2013, 11, 428–435.

- Eldib, M.; Deboeverie, F.; Philips, W.; Aghajan, H. Behavior analysis for elderly care using a network of low-resolution visual sensors. J. Electron. Imaging 2016, 25, 041003.

- Gochoo, M.; Tan, T.H.; Batjargal, T.; Seredin, O.; Huang, S.C. Device-free non-privacy invasive indoor human posture recognition using low-resolution infrared sensor-based wireless sensor networks and DCNN. In Proceedings of the 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Miyazaki, Japan, 7–10 October 2018; pp. 2311–2316.

- Jalal, A.; Kamal, S.; Azurdia-Meza, C.A. Depth maps-based human segmentation and action recognition using full-body plus body color cues via recognizer engine. J. Electr. Eng. Technol. 2019, 14, 455–461.

- Kim, K.; Jalal, A.; Mahmood, M. Vision-based human activity recognition system using depth silhouettes: A smart home system for monitoring the residents. J. Electr. Eng. Technol. 2019, 14, 2567–2573.

- Yala, N.; Fergani, B.; Fleury, A. Towards improving feature extraction and classification for activity recognition on streaming data. J. Ambient. Intell. Humaniz. Comput. 2017, 8, 177–189.

- Gochoo, M.; Tan, T.H.; Liu, S.H.; Jean, F.R.; Alnajjar, F.S.; Huang, S.C. Unobtrusive activity recognition of elderly people living alone using anonymous binary sensors and DCNN. IEEE J. Biomed. Health Inform. 2018, 23, 693–702.

- Susnea, I.; Dumitriu, L.; Talmaciu, M.; Pecheanu, E.; Munteanu, D. Unobtrusive monitoring the daily activity routine of elderly people living alone, with low-cost binary sensors. Sensors 2019, 19, 2264.

- Krishnan, N.C.; Cook, D.J. Activity recognition on streaming sensor data. Pervasive Mob. Comput. 2014, 10, 138–154.

- Diraco, G.; Leone, A.; Siciliano, P. A radar-based smart sensor for unobtrusive elderly monitoring in ambient assisted living applications. Biosensors 2017, 7, 55.

- Javier Ordóñez, F.; de Toledo, P.; Sanchis, A. Activity recognition using hybrid generative/discriminative models on home environments using binary sensors. Sensors 2013, 13, 5460–5477.

- Tahir, S.F.; Fahad, L.G.; Kifayat, K. Key feature identification for recognition of activities performed by a smart-home resident. J. Ambient. Intell. Humaniz. Comput. 2019, 11, 1–11.

- Wang, J.; Chen, Y.; Hao, S.; Peng, X.; Hu, L. Deep learning for sensor-based activity recognition: A survey. Pattern Recognit. Lett. 2019, 119, 3–11.

- Casagrande, F.D.; Torresen, J.; Zouganeli, E. Predicting sensor events, activities, and time of occurrence using binary sensor data from homes with older adults. IEEE Access 2019, 7, 111012–111029.

- Arifoglu, D.; Bouchachia, A. Activity recognition and abnormal behaviour detection with recurrent neural networks. Procedia Comput. Sci. 2017, 110, 86–93.

- Guo, J.; Li, Y.; Hou, M.; Han, S.; Ren, J. Recognition of daily activities of two residents in a smart home based on time clustering. Sensors 2020, 20, 1457.

- Yatbaz, H.Y.; Eraslan, S.; Yesilada, Y.; Ever, E. Activity recognition using binary sensors for elderly people living alone: Scanpath trend analysis approach. IEEE Sens. J. 2019, 19, 7575–7582.

- Singh, D.; Merdivan, E.; Psychoula, I.; Kropf, J.; Hanke, S.; Geist, M.; Holzinger, A. Human activity recognition using recurrent neural networks. In Proceedings of the International Cross-Domain Conference for Machine Learning and Knowledge Extraction, Regio, Italy, 29 August–1 September 2017; pp. 267–274.

- Lentzas, A.; Vrakas, D. Non-intrusive human activity recognition and abnormal behavior detection on elderly people: A review. Artif. Intell. Rev. 2020, 53, 1975–2021.

- Cook, D.J. Learning setting-generalized activity models for smart spaces. IEEE Intell. Syst. 2010, 99, 1.

- Cook, D.J.; Crandall, A.S.; Thomas, B.L.; Krishnan, N.C. CASAS: A smart home in a box. Computer 2012, 46, 62–69.

- MacHot, F.A.; Mosa, A.H.; Ali, M.; Kyamakya, K. Activity recognition in sensor data streams for active and assisted living environments. IEEE Trans. Circuits Syst. Video Technol. 2017, 28, 2933–2945.

- MacHot, F.A.; Ranasinghe, S.; Plattner, J.; Jnoub, N. Human activity recognition based on real life scenarios. In Proceedings of the 2018 IEEE International Conference on Pervasive Computing and Communications Workshops, (PerCom Workshops), Athens, Greece, 19–23 March 2018; pp. 3–8.

- Gochoo, M.; Tan, T.H.; Huang, S.C.; Liu, S.H.; Alnajjar, F.S. DCNN-based elderly activity recognition using binary sensors. In Proceedings of the 2017 International Conference on Electrical and Computing Technologies and Applications (ICECTA), Ras Al Khaimah, United Arab Emirates, 21–23 November 2017; pp. 1–5.

- Pang, Z.; Luvisotto, M.; Dzung, D. Wireless high-performance communications: The challenges and opportunities of a new target. IEEE Ind. Electron. Mag. 2017, 11, 20–25.

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intell. Res. 2002, 16, 321–357.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).