1. Introduction

With the outbreak of the novel Coronavirus Disease 2019 (COVID-19) [1], social distancing (SD) has emerged as an effective countermeasure. Maintaining social distancing in public areas such as transit stations, shopping malls, and university campuses is crucial to prevent or slow the spread of the virus. The practice of SD may continue in the coming years until the spread of the virus is completely stopped. However, social distancing is easily violated unintentionally, as people are not accustomed to keeping the necessary 2-meter bubble around each individual. This work proposes a vision-based automatic warning system that can detect social distancing statuses and identify a critical pedestrian density threshold to modulate inflow to crowded areas. Besides serving as an automated monitoring and warning system, the proposed framework can also be used as a tool to measure key variables and statistics for local and global virus control.

Vision-based automatic detection and control systems [2,3,4,5,6,7,8,9] are economical and effective solutions to mitigate the spread of COVID-19 in public areas. Although the concept is straightforward, the design and deployment of such systems require careful engineering and serious ethical consideration.

First, the system must be fast and real-time. Only a real-time system can detect social distancing statuses immediately and issue a warning. Privacy concerns [10,11,12] can be mitigated with a real-time system that does not store sensitive image data and keeps only aggregate statistics, such as the number of SD violations. With a real-time active surveillance system, appropriate measures can be taken as quickly as possible to reduce the further spread of COVID-19.

The second design objective is that the system must be accurate and effective, but not discriminatory. The safest way to achieve this is to build an AI-based detection system. AI-based vision detectors have become the state of the art in people-detection tasks, achieving higher scores on most vision benchmarks than detectors with hand-crafted feature extractors. Furthermore, hand-crafted features may lead to biased designs, whereas an end-to-end AI-based system, such as a deep neural network without any feature-based input space, is much fairer, with one caveat: the training data distribution must be fair.

The third objective aims to provide a more advanced measure than pure social distancing monitoring to further reduce the spread of COVID-19. This leads to our proposed approach of critical pedestrian density identification. The critical density may serve as an indicator that informs the space manager when to control the entry port and regulate the incoming pedestrian flow. An online warning is also possible, but it should be non-alarming. For example, the system can send a non-intrusive audio-visual cue to the vicinity of the social distancing violation. Individuals in this region can then make their own decisions based on this cue.

We identify the fourth design objective as trust establishment. The whole system and its implementation must be open-sourced. Open-sourcing is crucial for establishing trust between the active surveillance system and society. In addition, researchers and developers can freely and quickly access relevant material and further improve their own designs according to their requirements. This can hasten the development and deployment of anti-COVID-19 technologies, helping to stop the spread of the deadly disease and save lives.

Against this backdrop, we propose a non-intrusive, AI-based active surveillance system for social distancing detection, monitoring, analysis, and control. An overview of the system is shown in Figure 1. The proposed system first uses a pre-trained deep convolutional neural network (CNN) [13,14] to detect individuals with bounding boxes in a given monocular camera frame. Then, detections in the image domain are transformed into real-world bird's-eye-view coordinates for social distancing detection. Once social distancing statuses are determined, the information is passed to two branches for further processing. One branch is online monitoring and control. If a social distancing violation occurs, the system emits a non-alarming audio-visual cue. Simultaneously, the system measures the social (pedestrian) density. If the social density exceeds a critical threshold, the system sends an advisory inflow modulation signal to prevent overcrowding. The other branch is offline analysis, which provides the information needed for overcrowding prevention and policymaking. The main analysis is the identification of a critical social density. If the pedestrian density is kept below this critical value, the probability of social distancing violations will be kept near zero. Finally, the regulator can receive both the offline aggregate statistics and the online status of social distancing control, and can act as quickly as possible if immediate action is required.

The overall system never stores personal information. Only processed aggregate results, such as the number of violations and the pedestrian density, are stored, which is essential for protecting privacy. Our system is also open-sourced for further development.
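The "store only aggregates" policy can be illustrated with a small running-statistics object. This is a minimal sketch under our own assumptions: the class name `AggregateStats` and its fields are hypothetical, and each frame is assumed to have already been reduced to a violation count and a density (pedestrians per square meter) before the frame itself is discarded.

```python
from dataclasses import dataclass


@dataclass
class AggregateStats:
    """Hypothetical running aggregates; no frames, boxes, or positions are kept."""

    frames: int = 0
    total_violations: int = 0
    density_sum: float = 0.0
    max_density: float = 0.0

    def update(self, num_violations: int, density: float) -> None:
        """Fold one processed frame into the aggregates (the frame is not stored)."""
        self.frames += 1
        self.total_violations += num_violations
        self.density_sum += density
        self.max_density = max(self.max_density, density)

    @property
    def mean_density(self) -> float:
        return self.density_sum / self.frames if self.frames else 0.0

    @property
    def mean_violations(self) -> float:
        return self.total_violations / self.frames if self.frames else 0.0


stats = AggregateStats()
for v, rho in [(0, 0.05), (2, 0.12), (1, 0.10)]:  # illustrative per-frame values
    stats.update(v, rho)
print(stats.total_violations, round(stats.mean_density, 3))
```

Only four numbers survive per monitoring period, which is the kind of output the offline branch would consume.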

Our main contributions are:

A novel, vision-based, real-time social distancing and critical social density detection system.

Definition of critical social density and a statistical approach to measuring it.

Measurements of social distancing and critical density statistics in common crowded places, such as New York Grand Central Terminal, an indoor mall, and a busy town center in Oxford.

Quantitative validation of the proposed approach to detect social distancing and critical density.

2. Related Work

Social distancing for COVID-19. COVID-19 has caused severe acute respiratory syndromes around the world since December 2019 [15]. Social distancing, defined as keeping a minimum of 2 meters (6 feet) apart from other individuals to avoid possible contact, is an effective measure to slow the spread of COVID-19 [1]. Further analysis [16] also suggests that social distancing has substantial economic benefits. COVID-19 may not be completely eliminated in the short term, but an automated system that helps monitor and analyze social distancing measures can greatly benefit society. Statistics from recent work [1] have demonstrated that strong social distancing measures can indeed reduce the growth rate of COVID-19.

The requirement of social distancing has shaped the development of IoT sensors and smart city technologies, which must now account for preventing the spread of COVID-19 and alerting authorities to outbreaks. A recent work [17] reviews potential solutions and recent approaches, such as IoT sensors, social media, personal gadgets, and public agents for COVID-19 outbreak alerting. Another work [18] summarizes IoT and associated sensor technologies for virus tracing, tracking, and spread mitigation, and highlights the challenges of deploying such sensor hardware.

With the help of the above technologies, social distancing can be better practiced, which will eventually alleviate the spread of the virus and “flatten the curve”.

Social distancing monitoring. In public areas, social distancing is mostly monitored by vision-based IoT systems with pedestrian detection capabilities. Appropriate measures are subsequently taken on this basis.

Pedestrian detection can be viewed either as a sub-task of generic object detection or as a dedicated task of detecting pedestrians only. A detailed survey of 2D object detectors and the corresponding datasets, metrics, and fundamentals can be found in [19]. Another survey [20] focuses on deep-learning-based approaches for both generic object detectors and pedestrian detectors. Generally speaking, state-of-the-art detectors are divided into two categories. One category is two-stage detectors, most of which are based on R-CNN [13,21]; they start with region proposals and then perform classification and bounding box regression. The other category is one-stage detectors. Prominent models include YOLO [14,22,23], SSD [24], and EfficientDet [25]. Detectors can also be classified as anchor-based [13,14,21,23,24,25] or anchor-free [26,27] approaches. The major difference between them is whether a set of predefined bounding boxes is used as candidate positions for the objects. These approaches are usually evaluated on the Pascal VOC [28] and MS COCO [29] datasets. Their accuracy and real-time performance are good enough for deploying pre-trained models for social distancing detection.

Several emerging technologies assist in the practice of social distancing. A recent work [2] identified how emerging technologies such as wireless, networking, and artificial intelligence (AI) can enable or even enforce social distancing. The work discussed possible basic concepts, measurements, models, and practical scenarios for social distancing. Another work [3] classified various emerging techniques into human-centric and smart-space categories, along with a SWOT analysis of the discussed techniques. Social distancing monitoring is also defined as a visual social distancing (VSD) problem in [4]. That work introduced a skeleton-detection-based approach for measuring inter-personal distances. It also discussed the effect of social context on people's social distancing and raised privacy concerns. These discussions are inspiring but, again, do not produce concrete results for social distancing monitoring and leave the question open. A specific social distancing monitoring approach [5] that utilizes YOLOv3 and DeepSORT was proposed to detect and track pedestrians and then calculate a violation index for non-social-distancing behaviors. The approach is interesting, but the results do not contain any statistical analysis. Furthermore, apart from the violation index, there is no discussion of implementation or privacy. Another work [6] developed a DNN model called DeepSOCIAL for people detection, tracking, and distance estimation. In addition to social distancing monitoring, it also performed dynamic risk assessment. However, this work did not specifically evaluate the performance of pure violation detection or provide a solution to prevent overcrowding. More recently, [30] provided a data-driven, deep-learning-based framework for the sustainable development of a smart city. Some other works [7,8,9] also proposed vision-based solutions. A comparison of the above methods with our proposed method can be found in Table 1.

Several prototypes utilizing machine learning and sensing technologies have already been developed. Landing AI [31] was among the first to introduce a social distancing detector that uses a surveillance camera to highlight people whose physical distance is below the recommended value. A similar system [9] was deployed in a manufacturing plant to monitor worker activity and send real-time voice alerts. In addition to surveillance cameras, LiDAR-based [32] and stereo-camera-based [33] systems were also proposed, demonstrating that other types of sensors can also help.

The above systems are interesting, but recording data and sending intrusive alerts might be unacceptable to some people. In contrast, we propose a non-intrusive warning system with softer, omnidirectional audio-visual cues. In addition, our system evaluates the critical social density and modulates inflow into a region of interest.

3. Preliminaries

Object detection with deep learning. Object detection in the image domain is a fundamental computer vision problem. The goal is to detect instances of semantic objects that belong to certain classes, such as humans, cars, and buildings. Recently, object detection benchmarks have been dominated by deep Convolutional Neural Network (CNN) models [13,14,21,23,24,25]. For example, top scores on MS COCO [29] have almost doubled thanks to recent breakthroughs in deep CNNs. MS COCO has over 123K images and 896K objects in the training-validation set and 80K images in the testing set, covering 80 categories.

These models are usually trained by supervised learning, with techniques such as data augmentation [34] to increase the variety of the data.

Model generalization. The generalization capability [35] of state-of-the-art detectors is good enough for deploying pre-trained models to new environments. For 2D object detection, pre-trained models can still achieve good performance even with different camera models, angles, and illumination conditions.

Therefore, a pre-trained state-of-the-art deep-learning-based pedestrian detector can be directly utilized for the task of social distancing monitoring.

4. Method

We propose to use a fixed monocular camera to detect individuals in a region of interest (ROI) and measure the inter-personal distances in real time without recording data. The proposed system sends a non-intrusive audio-visual cue to warn the crowd if any social distancing breach is detected. Furthermore, we define a novel critical social density metric and propose to advise against entering the ROI if the density is higher than this value. An overview of our approach is given in Figure 1, and the formal description follows.

4.1. Problem Formulation

We define a scene at time $t$ as a sextuple $S = (I, A_0, r, c_s, c_p, \rho_c)$, where $I \in \mathbb{R}^{H \times W \times 3}$ is an RGB image captured from a fixed monocular camera with height $H$ and width $W$. $A_0$ is the area of the ROI on the ground plane in the real world and $r$ is the required minimum physical distance. $c_s$ is a binary control signal for sending a non-intrusive audio-visual cue if any inter-pedestrian distance is less than $r$. $c_p$ is another binary control signal for controlling the entrance to the ROI to prevent overcrowding. Overcrowding is detected with our novel definition of critical social density $\rho_c$, which ensures that the social distancing violation occurrence probability stays lower than a threshold $\epsilon$. The threshold $\epsilon$ should be set as small as possible to reduce the probability of social distancing violation. For example, $\epsilon$ could be chosen as the tail probability that lies outside a given confidence interval of a normal distribution. Other choices of $\epsilon$ also work, depending on the specific requirements of social distancing monitoring.

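Problem 1. Given $S$, we are interested in finding a list of pedestrian position vectors $X = (\mathbf{p}'_1, \mathbf{p}'_2, \ldots, \mathbf{p}'_n)$, $\mathbf{p}'_i \in \mathbb{R}^2$, in real-world coordinates on the ground plane and a corresponding list of inter-pedestrian distances $D = (d_{1,2}, \ldots, d_{i,j}, \ldots, d_{n-1,n})$, $1 \le i < j \le n$. $n$ is the number of pedestrians in the ROI. Additionally, we are interested in finding a critical social density value $\rho_c$. $\rho_c$ should ensure that the probability $P(\min D \ge r \mid \rho \le \rho_c)$ stays above $1 - \epsilon$, where we define the social density as $\rho = n / A_0$.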

Once Problem 1 is solved, the following control algorithm can be used to warn/advise the population in the ROI.

Algorithm 1. If $\min D < r$, then a non-intrusive audio-visual cue is activated by setting the control signal $c_s = 1$; otherwise, $c_s = 0$. In addition, if $\rho > \rho_c$, then entering the area is not advised, which is signaled by setting $c_p = 1$; otherwise, $c_p = 0$.
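Algorithm 1 reduces to two threshold comparisons per frame. The sketch below is illustrative only: the function name and the argument names (`distances`, `density`, `r`, `rho_c`) are our own, standing for the inter-pedestrian distances, the social density, the minimum physical distance, and the critical social density. It returns the audio-visual cue signal and the entrance signal as a pair of 0/1 flags.

```python
from typing import Sequence, Tuple


def control_signals(distances: Sequence[float], density: float,
                    r: float, rho_c: float) -> Tuple[int, int]:
    """Return (cue, entrance) flags following Algorithm 1.

    cue = 1 if any inter-pedestrian distance is below r;
    entrance = 1 if the social density exceeds rho_c (entering not advised).
    """
    cue = 1 if len(distances) > 0 and min(distances) < r else 0
    entrance = 1 if density > rho_c else 0
    return cue, entrance


print(control_signals([3.1, 1.4, 2.6], density=0.08, r=2.0, rho_c=0.1))  # (1, 0)
```

An empty distance list (zero or one pedestrian) never triggers the cue, which matches the fact that no pair can violate the distance requirement.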

Our solution to Problem 1 starts below.

4.2. Pedestrian Detection in the Image Domain

First, pedestrians are detected in the image domain with a deep CNN model trained on a real-world dataset:

$$f: I \mapsto \{(l_i, \mathbf{b}_i, s_i)\}_{i=1}^{n}.$$

$f$ maps an image $I$ into $n$ tuples $(l_i, \mathbf{b}_i, s_i)$, where $n$ is the number of detected objects. $l_i \in L$ is the object class label, where $L$ is the set of object labels. $\mathbf{b}_i = [\mathbf{b}_{i,1}, \mathbf{b}_{i,2}, \mathbf{b}_{i,3}, \mathbf{b}_{i,4}]$ is the associated bounding box (BB) with four corners. $\mathbf{b}_{i,j} = [x_{i,j}, y_{i,j}]$ gives pixel indices in the image domain; the second sub-index $j$ indicates the top-left, top-right, bottom-left, and bottom-right corners, respectively. $s_i$ is the corresponding detection score. Implementation details of $f$ are given in Section 5.1.

We are only interested in the case of $l_i =$ ‘person’. We define $\mathbf{p}_i$, the pixel pose vector of person $i$, as the middle point of the bottom edge of the BB:

$$\mathbf{p}_i = \frac{\mathbf{b}_{i,3} + \mathbf{b}_{i,4}}{2}.$$
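The person filtering and bottom-edge midpoint described above can be sketched as follows. The detection format is an assumption: each detection is a `(label, corners, score)` tuple whose four corners follow the top-left, top-right, bottom-left, bottom-right order described above. The `min_score` cut-off is a hypothetical parameter, not a value taken from this work.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Detection = Tuple[str, Sequence[Point], float]  # (label, 4 corners, score)


def pixel_poses(detections: Sequence[Detection],
                min_score: float = 0.5) -> List[Point]:
    """Bottom-edge midpoints of 'person' boxes.

    Corners are ordered top-left, top-right, bottom-left, bottom-right, so the
    bottom edge runs from corners[2] to corners[3].
    min_score is a hypothetical confidence cut-off.
    """
    poses: List[Point] = []
    for label, corners, score in detections:
        if label != "person" or score < min_score:
            continue
        (x_bl, y_bl), (x_br, y_br) = corners[2], corners[3]
        poses.append(((x_bl + x_br) / 2.0, (y_bl + y_br) / 2.0))
    return poses


detections = [
    ("person", [(10, 20), (30, 20), (10, 80), (30, 80)], 0.9),
    ("car", [(0, 0), (1, 0), (0, 1), (1, 1)], 0.99),
]
print(pixel_poses(detections))  # [(20.0, 80.0)]
```

The bottom-edge midpoint is used because it approximates where the person touches the ground plane, which is what the subsequent ground-plane mapping assumes.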

4.3. Image to Real-World Mapping

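The next step is obtaining the second mapping function $h: \mathbf{p}_i \mapsto \mathbf{p}'_i$. $h$ is an inverse perspective transformation function that maps $\mathbf{p}_i$ in image coordinates to $\mathbf{p}'_i \in \mathbb{R}^2$ in real-world coordinates. $\mathbf{p}'_i$ is in 2D bird's-eye-view (BEV) coordinates, obtained by assuming the ground plane $z = 0$. We use the following well-known inverse homography transformation [36] for this task:

$$\tilde{\mathbf{p}}'_i = M^{-1} \tilde{\mathbf{p}}_i,$$

where $M \in \mathbb{R}^{3 \times 3}$ is a transformation matrix describing the rotation and translation from world coordinates to image coordinates, $\tilde{\mathbf{p}}_i = [x_i, y_i, 1]^\top$ is the homogeneous representation of $\mathbf{p}_i = [x_i, y_i]^\top$ in image coordinates, and $\tilde{\mathbf{p}}'_i = [\tilde{x}'_i, \tilde{y}'_i, \tilde{w}'_i]^\top$ is the homogeneous representation of the mapped pose vector.

The transformation matrix $M$ can be found by identifying the geometric relationship among some key points in both the real world and the image, and then calculating $M$ based on homography [36]. More details on camera calibration in this particular work can be found in Section 5.1.

The world pose vector is derived from $\tilde{\mathbf{p}}'_i$ as $\mathbf{p}'_i = [\tilde{x}'_i / \tilde{w}'_i,\ \tilde{y}'_i / \tilde{w}'_i]^\top$.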
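A dependency-free sketch of the inverse homography mapping: invert the 3×3 world-to-image matrix, apply the inverse to the homogeneous pixel pose, and divide by the scale component. The helper names `invert3` and `pixel_to_world` are hypothetical, and the example matrix is illustrative rather than a calibration from this work; in practice a numerical library would normally perform the inversion.

```python
from typing import List, Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]


def invert3(m: Matrix3) -> List[List[float]]:
    """Inverse of a 3x3 matrix via the adjugate (cofactor) formula."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("homography matrix is singular")
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[x / det for x in row] for row in adj]


def pixel_to_world(m_inv: Matrix3, p: Tuple[float, float]) -> Tuple[float, float]:
    """Map a pixel pose to ground-plane (z = 0) BEV coordinates using M^-1."""
    x, y = p
    # Homogeneous product M^-1 [x, y, 1]^T.
    hx, hy, hw = (row[0] * x + row[1] * y + row[2] for row in m_inv)
    # Dehomogenize by the third (scale) component.
    return (hx / hw, hy / hw)


M = [[2.0, 0.0, 5.0], [0.0, 3.0, 7.0], [0.0, 0.0, 1.0]]  # illustrative world-to-image matrix
M_inv = invert3(M)  # compute once per fixed camera, reuse for every pedestrian
print(pixel_to_world(M_inv, (7.0, 13.0)))  # approximately (1.0, 2.0)
```

Because the camera is fixed, the inverse only needs to be computed once; each pedestrian then costs a single matrix-vector product and a division.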

4.4. Social Distancing Detection

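After getting $X = (\mathbf{p}'_1, \ldots, \mathbf{p}'_n)$ in real-world coordinates, obtaining the corresponding list of inter-pedestrian distances $D$ is straightforward. The distance $d_{i,j}$ between pedestrians $i$ and $j$ is the Euclidean distance between their pose vectors:

$$d_{i,j} = \lVert \mathbf{p}'_i - \mathbf{p}'_j \rVert_2.$$

The total number of social distancing violations $v$ in a scene can be calculated by:

$$v = \sum_{i=1}^{n-1} \sum_{j=i+1}^{n} \mathbb{1}(d_{i,j}),$$

where $\mathbb{1}(d_{i,j}) = 1$ if $d_{i,j} < r$, otherwise 0.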
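The distance list and violation count can be computed as below. This sketch counts each unordered pair once ($i < j$), which is our reading of the sum; the 2.0 m default for `r` follows the 2-meter rule mentioned earlier, and the function names are hypothetical.

```python
import math
from itertools import combinations
from typing import Dict, Sequence, Tuple

Point = Tuple[float, float]


def pairwise_distances(poses: Sequence[Point]) -> Dict[Tuple[int, int], float]:
    """Euclidean distance d_ij for every unordered pair i < j of world poses (m)."""
    return {(i, j): math.dist(poses[i], poses[j])
            for i, j in combinations(range(len(poses)), 2)}


def count_violations(distances: Dict[Tuple[int, int], float], r: float = 2.0) -> int:
    """Number of pairs whose distance is below the minimum distance r."""
    return sum(1 for d in distances.values() if d < r)


poses = [(0.0, 0.0), (1.0, 0.0), (5.0, 0.0)]  # illustrative BEV positions in meters
D = pairwise_distances(poses)
print(count_violations(D))  # 1: only pedestrians 0 and 1 are closer than 2 m
```

The pairwise computation is quadratic in the number of pedestrians, which is typically negligible next to CNN inference at the crowd sizes of a single camera view.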

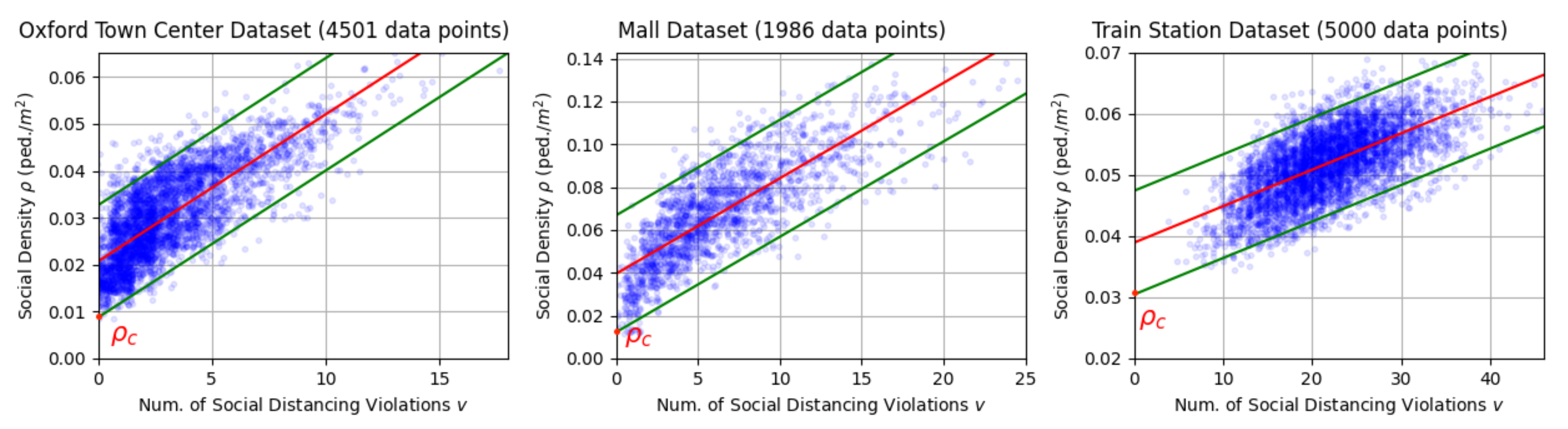

4.5. Critical Social Density Estimation

Finally, we want to find a critical social density value $\rho_c$ that can ensure the social distancing violation occurrence probability stays below $\epsilon$. It should be noted that the trivial solution $\rho_c = 0$ ensures $P(v > 0 \mid \rho \le \rho_c) = 0$, but it has no practical use. Instead, we want to find the maximum critical social density that can still be considered safe.

To find $\rho_c$, we propose to conduct a simple linear regression using the social density $\rho$ as the dependent variable and the total number of violations $v$ as the independent variable:

$$\rho = \beta_0 + \beta_1 v + e,$$

where $\boldsymbol{\beta} = [\beta_0, \beta_1]^\top$ is the regression parameter vector and $e$ is the error term, which is assumed to be normally distributed. The regression model is fitted with the ordinary least squares method. Fitting this model requires training data. However, once the model is learned, the data are no longer required. After deployment, the surveillance system operates without recording data.

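Once the model is fitted, we can obtain the predicted social density $\hat{\rho}_{v=0} = \hat{\beta}_0$ when there is no social distancing violation ($v = 0$). To further reduce the probability of social distancing violation occurrence, instead of using $\hat{\rho}_{v=0}$, we propose to determine the critical social density as:

$$\rho_c = \hat{\rho}^{\,l}_{v=0},$$

where $\hat{\rho}^{\,l}_{v=0}$ is the lower bound of the prediction interval $[\hat{\rho}^{\,l}_{v=0}, \hat{\rho}^{\,u}_{v=0}]$ at $v = 0$, as illustrated in Figure 2.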

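If we keep the social density of a scene smaller than the lower bound of the social density's prediction interval at $v = 0$, the probability of social distancing violation occurrence can be pushed near zero. This is because, under the linear regression assumption and with the density increasing with the number of violations ($\hat{\beta}_1 > 0$), the cumulative probability that a scene with violations ($v \ge 1$) has a density below $\rho_c$ is approximately bounded by the lower-tail probability of the prediction interval at $v = 0$, which is very small.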
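The regression and critical-density steps can be sketched with the Python standard library alone. Assumptions to note: the prediction interval uses a normal quantile in place of the Student-t quantile with $n - 2$ degrees of freedom (a large-sample approximation), the default `level=0.95` is an illustrative choice rather than the value used in this work, and the function names are hypothetical. The inputs are per-frame violation counts and social densities.

```python
import math
from statistics import NormalDist, fmean
from typing import Sequence, Tuple


def fit_ols(v: Sequence[float], rho: Sequence[float]) -> Tuple[float, float]:
    """OLS estimates (beta0, beta1) for rho = beta0 + beta1 * v + e."""
    v_bar, rho_bar = fmean(v), fmean(rho)
    sxx = sum((x - v_bar) ** 2 for x in v)
    if sxx == 0:
        raise ValueError("violation counts must not all be equal")
    beta1 = sum((x - v_bar) * (y - rho_bar) for x, y in zip(v, rho)) / sxx
    return rho_bar - beta1 * v_bar, beta1


def critical_density(v: Sequence[float], rho: Sequence[float],
                     level: float = 0.95) -> float:
    """Lower bound of the two-sided `level` prediction interval for rho at v = 0."""
    n = len(v)
    if n != len(rho) or n < 3:
        raise ValueError("need at least three paired observations")
    beta0, beta1 = fit_ols(v, rho)
    v_bar = fmean(v)
    sxx = sum((x - v_bar) ** 2 for x in v)
    # Residual standard error with n - 2 degrees of freedom.
    sse = sum((y - (beta0 + beta1 * x)) ** 2 for x, y in zip(v, rho))
    s = math.sqrt(sse / (n - 2))
    # Standard error for a new observation at v = 0 (includes parameter uncertainty).
    se_pred = s * math.sqrt(1 + 1 / n + v_bar ** 2 / sxx)
    # Normal quantile as a large-sample stand-in for the Student-t quantile.
    z = NormalDist().inv_cdf(1 - (1 - level) / 2)
    return beta0 - z * se_pred


v = [0, 0, 1, 1, 2, 2]  # illustrative violation counts per frame
rho = [0.08, 0.12, 0.18, 0.22, 0.28, 0.32]  # illustrative densities (people/m^2)
print(fit_ols(v, rho), critical_density(v, rho))
```

With a two-sided interval at `level`, the lower tail below the bound holds $(1 - \text{level})/2$ of the probability mass. For small samples, replacing `z` with a Student-t quantile (e.g., from `scipy.stats.t.ppf`) gives a slightly lower, more conservative bound.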

4.6. Broader Implementation

To further utilize the obtained critical social density $\rho_c$, subsequent measures must be taken to prevent the spread of COVID-19. There are two branches of post-processing mechanisms.

First, social distancing can be monitored and controlled online. Non-alarming audio-visual cues are sent to people in areas where the social density is larger than the critical value $\rho_c$. In this way, people are immediately aware that they are violating the social distancing practice. The system can also send inflow modulation signals, which site managers can use to keep the pedestrian density below $\rho_c$ and thus prevent overcrowding.

Second, the critical density $\rho_c$, as well as the statistics, can be used for offline analysis. Analyzed offline data, such as the average pedestrian densities of certain public areas or the trends of pedestrian density at public events, can be utilized by regulators for better policymaking and large-event organization.

Combining both the offline and online information provided by the proposed system, wider prevention measures can be taken as quickly as possible when necessary. The above procedures are illustrated in Figure 1.

5. Experiments

We conducted three case studies to evaluate the proposed method. Each case utilizes a different pedestrian crowd dataset: the Oxford Town Center Dataset (an urban street) [37], the Mall Dataset (an indoor mall) [38], and the Train Station Dataset (New York City Grand Central Terminal) [39]. Table 2 shows detailed information about these datasets.

To validate the effectiveness of the proposed method in detecting social distancing violations, we conducted experiments on the Oxford Town Center Dataset to determine its accuracy.

5.1. Implementation

The first step was finding the perspective transformation matrix $M$ for the scene of each dataset. For the Oxford Town Center Dataset, we directly used the transformation matrix available on its official website. The other two datasets do not provide transformation matrices, so we found them manually. We first identified the real distances among four key points in the scene and the corresponding coordinates of these points in the image. Then, these four points were used to identify the perspective transformation (homography) [36] so that the transformation matrix $M$ could be calculated. For the Train Station Dataset, we found the floor plan of NYC Grand Central Terminal and measured the exact distances among the key points. For the Mall Dataset, we first estimated the size of a reference object in the image by comparing it with the width of detected pedestrians and then utilized the key points of the reference object.
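The four-point homography estimation described above can be sketched as a direct linear solve with the bottom-right entry of $M$ fixed to 1, which yields eight linear equations in eight unknowns. This is a dependency-free illustration with hypothetical helper names; OpenCV's `cv2.getPerspectiveTransform` computes the same kind of 3×3 matrix from four point pairs. World points are in meters on the ground plane and image points are in pixels, matching the world-to-image direction of $M$.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[k]] for k, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda k: abs(m[k][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for k in range(col + 1, n):
            factor = m[k][col] / m[col][col]
            for c in range(col, n + 1):
                m[k][c] -= factor * m[col][c]
    x = [0.0] * n
    for k in reversed(range(n)):
        x[k] = (m[k][n] - sum(m[k][c] * x[c] for c in range(k + 1, n))) / m[k][k]
    return x


def homography_from_4_points(world: Sequence[Point],
                             image: Sequence[Point]) -> List[List[float]]:
    """World-to-image homography M with M[2][2] = 1 from four point pairs."""
    if len(world) != 4 or len(image) != 4:
        raise ValueError("exactly four correspondences are required")
    a: List[List[float]] = []
    b: List[float] = []
    for (X, Y), (x, y) in zip(world, image):
        # x = (h11 X + h12 Y + h13) / (h31 X + h32 Y + 1), and likewise for y.
        a.append([X, Y, 1.0, 0.0, 0.0, 0.0, -X * x, -Y * x])
        b.append(x)
        a.append([0.0, 0.0, 0.0, X, Y, 1.0, -X * y, -Y * y])
        b.append(y)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


world = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]  # illustrative 1 m square
image = [(5.0, 7.0), (7.0, 7.0), (7.0, 10.0), (5.0, 10.0)]  # its pixel coordinates
M = homography_from_4_points(world, image)
```

The four key points must be in general position (no three collinear in either view); otherwise the linear system is singular and the sketch raises an error.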

The second step was applying the pedestrian detector to each dataset. The experiments were conducted on a regular PC with an Intel Core i7-4790 CPU, 32 GB RAM, and an Nvidia GeForce GTX 1070 Ti GPU, running the 64-bit Ubuntu 16.04 LTS operating system. Once the pedestrians were detected, their positions were converted from image coordinates into real-world coordinates.

The last step was conducting the social distancing measurement and finding the critical density $\rho_c$. Only the pedestrians within the ROI were considered. The statistics of the social density $\rho$, the inter-pedestrian distances $D$, and the number of violations $v$ were recorded over time.
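Restricting the statistics to the ROI and computing the social density (pedestrian count divided by the ROI area) can be sketched as below, assuming the ROI is given as a simple polygon in ground-plane coordinates (meters). The ray-casting inside test and the shoelace area formula are standard choices on our part, not necessarily the ones used in this work, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def polygon_area(poly: Sequence[Point]) -> float:
    """Area of a simple polygon via the shoelace formula (vertices in order)."""
    s = 0.0
    for (x1, y1), (x2, y2) in zip(poly, list(poly[1:]) + [poly[0]]):
        s += x1 * y2 - x2 * y1
    return abs(s) / 2.0


def inside(p: Point, poly: Sequence[Point]) -> bool:
    """Even-odd ray-casting point-in-polygon test."""
    x, y = p
    result = False
    n = len(poly)
    for k in range(n):
        (x1, y1), (x2, y2) = poly[k], poly[(k + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal ray
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                result = not result
    return result


def roi_density(poses: Sequence[Point], roi: Sequence[Point]) -> Tuple[List[Point], float]:
    """Keep poses inside the ROI and return them with rho = n / A_0 (people per m^2)."""
    area = polygon_area(roi)
    if area <= 0:
        raise ValueError("ROI polygon must have positive area")
    kept = [p for p in poses if inside(p, roi)]
    return kept, len(kept) / area


roi = [(0.0, 0.0), (10.0, 0.0), (10.0, 5.0), (0.0, 5.0)]  # illustrative 10 m x 5 m ROI
kept, rho = roi_density([(1.0, 1.0), (9.0, 4.0), (11.0, 1.0), (5.0, -1.0)], roi)
print(len(kept), rho)
```

Filtering in ground-plane coordinates keeps the ROI definition independent of the camera view, so the same polygon and area can be reused for every frame.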

7. Conclusions

This work proposed a real-time system based on AI and a monocular camera to detect and monitor social distancing. In addition, our system utilized the proposed critical social density value to avoid overcrowding by modulating inflow to the ROI. The proposed approach was demonstrated using three different pedestrian crowd datasets. Quantitative validation was conducted on the Oxford Town Center Dataset, which provides ground-truth pedestrian detections.

There were some missed detections in the Mall Dataset and the Train Station Dataset, as the pedestrian density is extremely high in some areas and occlusions occur. However, our qualitative and quantitative analysis showed that most pedestrians were successfully captured and that the missed detections have a minor effect on the proposed method. One future direction could be testing and verifying the proposed method on more datasets of various scenes.

Finally, in this work we did not consider that a group of people might belong to a single family or have some other connection that does not require social distancing. Understanding and addressing this issue could be a further direction of study. Nevertheless, one may argue that even individuals who have close relationships should still try to practice social distancing in public areas.