Abstract

Terrain classification is a vital component in providing suitable control of a walking assistive device across various walking conditions. Although surface electromyography (sEMG) signals have been combined with inputs from other sensors to detect walking intention, no study has yet classified walking environments using sEMG only. Therefore, the purpose of this study is to classify the current walking environment based on the entire sEMG profile gathered from selected muscles in the lower extremities. The activations of selected lower-extremity muscles were measured in 27 participants while they walked on flat-ground, upstairs, downstairs, uphill, and downhill. An artificial neural network (ANN) was employed to classify these walking environments using the entire sEMG profile recorded for all muscles during the stance phase. The results show that the ANN classified the current walking environment with a high accuracy of 96.3% when using activation from all muscles. When muscle activations from the flexor/extensor groups of the knee, ankle, and metatarsophalangeal joints were used individually to classify the environment, the triceps surae muscle activation showed the highest classification accuracy of 88.9%. In conclusion, the current walking environment was classified with high accuracy using an ANN based on sEMG signals only.

1. Introduction

Recent research on robotic walking-assistance exoskeletons and prostheses includes many studies that aim to achieve natural movement of the assisting robot through communication between the user and the robot [,,]. This human-robot interaction is intended to enable robots to recognize the user’s intended motion through cognitive interactions between the human user and the robot over various communication channels [,]. Intention recognition is important for synchronizing the motion of the robot with that of the human [,]. Other studies have reported various methods for recognizing the user’s intended movement based on bioelectrical signals, such as electromyography (EMG), electroencephalography (EEG), and electrooculography (EOG) signals [,,].

Surface electromyography (sEMG) signals contain neural information associated with human movement [,]. Human motion intention can be recognized by analyzing EMG signals, and that motion can also be classified to appropriately control any assisting devices [,,]. However, analyzing sEMG signals is difficult due to their complicated patterns and non-linear nature []. Neural networks are able to model and analyze complex systems; as such, in recent years they have been applied in many fields such as pattern recognition and adaptive control [,]. In particular, Morbidoni et al. used artificial neural networks (ANNs) to classify the gait phase by applying the sEMG signals as input data []. Therefore, neural network methods and EMG signals can be effectively exploited to recognize the intended motion and to classify human motion.

Previously, both machine learning and traditional pattern recognition methods have utilized handcrafted features that were manually extracted from EMG signals in order to classify human movement [,]. However, the performance of these algorithms was greatly influenced by their handcrafted features, meaning their performance often depends on the experience of the engineer and the design of the feature extraction method [,]. Recently, it has been shown that extracting features using deep learning is more robust than relying on handcrafted features. For instance, Morbidoni et al. reported that a deep learning feature-based method was able to classify the gait phase with higher accuracy than a handcrafted features-based approach []. Roy et al. also proposed a deep learning-based classification framework that classifies hand motion with high accuracy []. In that study, the authors adopted an approach where features were extracted using deep learning as this leads to better overall classification performance.

Users of walking assistive devices will inevitably encounter various kinds of environments other than flat, level ground during their daily life. Proper changes to the control of those walking assistive devices are required to adapt to the changes that occur in the sEMG signals and in the kinematics/kinetics of the joints in the lower extremities as users tackle various walking environments [,,,]. As such, classifying and/or recognizing the current walking environment is the first requirement for appropriately controlling the assistive device []. To the best of our knowledge, however, no study has attempted to classify the five competing conditions of flat-ground, upstairs, downstairs, uphill, and downhill walking using only sEMG signals. sEMG signals have often been used as inputs to machine learning algorithms or artificial neural networks (ANNs) for pattern classification; relying on these kinds of signals has been shown to be a valid approach to classifying nonlinear data or complicated patterns []. Motivated by the state of research described above, the purpose of this study is to classify the current walking environment based on the entire sEMG profile from selected muscles in the lower extremities using an ANN.

2. Materials and Methods

2.1. Participants

Twenty-seven male students (age: 24.5 ± 2.7 years, height: 1.73 ± 0.04 m, mass: 69.0 ± 7.99 kg, BMI: 22.9 ± 2.2 kg/m²) participated in this study. Prior to participation, all participants were asked to sign an informed consent form approved by the Institutional Review Board (IRB); all participants were capable of ascending and descending stairs and slopes without any external assistance.

2.2. Experimental Protocol

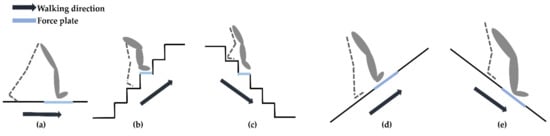

All participants walked barefoot in the following five environments: on flat-ground, upstairs, downstairs, uphill, and downhill (Figure 1). For the flat-ground environment, the participants walked along a straight and level 6 m walkway. For the stairs, the participants walked up and down a total of 5 steps (with each step 0.60 m in length, 0.25 m in width, 0.24 m in height), and a force platform was embedded in the third step. For the uphill and downhill environments, a walkway with a 15° slope was used. The slope angle of 15° was selected because previous research has suggested that, at this angle, the effects of slope on uphill and downhill walking are apparent [,]. The sloped walkway consisted of three pieces, with each piece being 0.61 m in length and 0.76 m in width. The three pieces were joined together so that the participants could take a few natural steps along the walkway; a force plate was embedded below the second piece. Prior to the actual trials, each participant was instructed to perform several practice runs in the five experimental environments to become familiar with the procedures and instrumentation. During the tests, the participants were instructed to walk at a self-selected speed and to step on the force plate with their dominant leg each time. The dominant leg was defined as the more comfortable leg when kicking a ball [,]. All participants rested between each of the five walking tasks to prevent muscle fatigue. sEMG data from five successful trials were recorded for each environment; any trials in which the participants did not correctly place their foot on the force plate were discarded.

Figure 1.

The five walking environments tested in this work: (a) flat-ground, (b) upstairs, (c) downstairs, (d) uphill, and (e) downhill. A force plate was embedded in a different position for each walking environment, as shown by the blue line in each diagram. The force plate was embedded in the floor of the flat-ground environment as shown in (a). The experimental staircase was designed with five steps; here, the force plate was embedded in the third step as shown in (b,c). The sloped walkway was made of three pieces joined together; the force plate was embedded in the second piece as shown in (d,e).

2.3. Data Collection

A wireless EMG system (Wave plus wireless, Cometa, Milan, Italy) was used to record muscle activation data from the participants’ rectus femoris (RF), vastus medialis and lateralis (VM and VL), semitendinosus (ST), biceps femoris (BF), tibialis anterior (TA), soleus (Sol), medial and lateral gastrocnemius (MG and LG), flexor hallucis longus (FHL), and extensor digitorum longus (EDL) at a sampling rate of 1200 Hz while walking. The sEMG sensors were attached to the muscle bellies at the recommended locations with an inter-electrode distance of 20 mm (Figure 2).

Figure 2.

Attachment of electrodes. RF, VL, VM, ST, BF, MG, LG, Sol, TA, FHL, and EDL labels indicate the rectus femoris, vastus lateralis, vastus medialis, semitendinosus, biceps femoris, medial gastrocnemius, lateral gastrocnemius, soleus, tibialis anterior, flexor hallucis longus, and extensor digitorum longus, respectively.

The force plate (9260AA6; Kistler, Winterthur, Switzerland) recorded data at a sampling rate of 1200 Hz, and its data collection was synchronized with that of the wireless EMG system to identify the stance phase in each participant’s walk. The force plate and EMG data for each walking environment were recorded simultaneously; in particular, the vertical ground reaction force (vGRF) was used to find the stance phase in each trial. The force plate was embedded in different positions as appropriate for each walking environment (Figure 1).

2.4. Data Processing

Muscle activation data were collected during the stance phase of each participant’s walk, defined as the period between initial heel contact and toe-off. Initial heel contact was identified as the first frame in which the vGRF exceeded 20 N [,]. Toe-off was identified as the first frame after initial heel contact in which the vGRF returned to 0 N.
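The two threshold rules above can be sketched as a small function. This is only an illustration of the stated rules, not the authors' implementation: the function name `stance_phase` and the `toe_off_level` parameter are hypothetical, and measured force data rarely return to exactly 0 N, so in practice a small tolerance would probably be needed.

```python
def stance_phase(vgrf, contact_threshold=20.0, toe_off_level=0.0):
    """Return (heel_contact, toe_off) frame indices, or None if not found.

    vgrf: sequence of vertical ground reaction force samples in newtons.
    contact_threshold: 20 N, as stated in the text.
    toe_off_level: 0 N in the text; exposed as a parameter (assumption)
                   because real recordings contain noise around zero.
    """
    # Initial heel contact: first frame where vGRF exceeds the threshold.
    heel = next((i for i, f in enumerate(vgrf) if f > contact_threshold), None)
    if heel is None:
        return None
    # Toe-off: first later frame where vGRF has returned to the zero level.
    toe = next(
        (i for i in range(heel + 1, len(vgrf)) if vgrf[i] <= toe_off_level),
        None,
    )
    if toe is None:
        return None
    return heel, toe


# Toy force trace (N), purely illustrative.
trace = [0, 5, 25, 400, 700, 300, 10, 0, 0]
print(stance_phase(trace))
```

With a 1200 Hz force plate, the returned frame indices can be applied directly to the synchronized sEMG samples to crop each trial to its stance phase.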

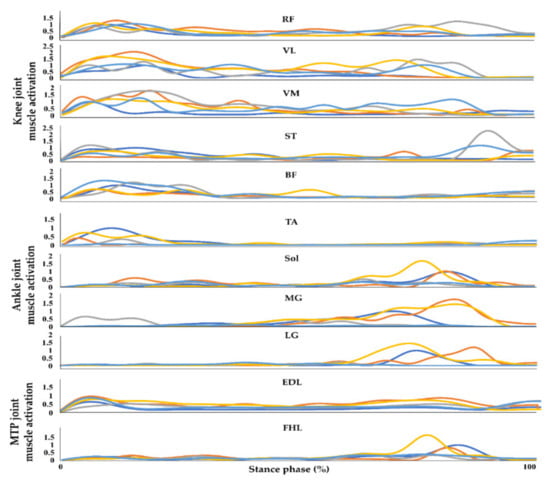

The muscle activation data from the selected muscles in the lower extremities were processed using MATLAB (MATLAB R2017b, MathWorks, Inc., Natick, MA, USA) []. The raw sEMG signals were processed to extract linear envelopes. Raw sEMG signals from walking on flat-ground, uphill, downhill, upstairs, and downstairs were passed through a fourth-order Butterworth band-pass filter (20–500 Hz) before being full-wave rectified. The rectified sEMG signals were subsequently passed through a fourth-order Butterworth low-pass filter at 10 Hz. The processed sEMG signals for all environments were normalized against each individual’s peak muscle activation amplitude during the flat-ground trial [,]. The individual peak muscle activation amplitude was defined as the maximum amplitude in the stance phase while walking on flat-ground. All sEMG signal data for each muscle during the stance phase were linearly interpolated to 1000 points to match the length of the input dataset before training and testing the model. An entire sEMG profile consists of the data collected during the stance phase from all muscles that were monitored. These data, from each trial of each individual, after being processed as described above, were used as the sole input to the ANN, which then attempts to classify the walking environment (Figure 3). The number of input data points used for training and testing each model is shown in Table A1.

Figure 3.

Example of an entire normalized sEMG profile from one trial of a subject collected during the stance phase while walking on flat-ground, upstairs, downstairs, uphill, and downhill. RF, VL, VM, ST, BF, TA, Sol, MG, LG, FHL, and EDL indicate the rectus femoris, vastus lateralis, vastus medialis, semitendinosus, biceps femoris, tibialis anterior, soleus, medial gastrocnemius, lateral gastrocnemius, flexor hallucis longus, and extensor digitorum longus, respectively. The blue, orange, grey, yellow, and sky-blue lines indicate walking on flat-ground, upstairs, downstairs, uphill, and downhill, respectively.
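The processing chain described in Section 2.4 (band-pass filtering, full-wave rectification, low-pass filtering, normalization to the flat-ground peak, and interpolation to 1000 points) can be sketched in Python with SciPy. The original processing was done in MATLAB, so this is a stand-in rather than the authors' code. Several details are assumptions not stated in the text: zero-phase filtering via `sosfiltfilt`, the meaning of "fourth-order" as the `N=4` argument to `butter`, and filtering only the stance-phase segment. The function names are hypothetical.

```python
import numpy as np
from scipy import signal

FS = 1200.0  # Hz, sEMG sampling rate reported in the text


def linear_envelope(raw, fs=FS):
    """Band-pass (20-500 Hz), full-wave rectify, then low-pass (10 Hz)."""
    # Assumption: zero-phase filtering; the text does not say whether the
    # filters were applied forward only or forward and backward.
    sos_bp = signal.butter(4, [20.0, 500.0], btype="bandpass", fs=fs, output="sos")
    band = signal.sosfiltfilt(sos_bp, raw)
    rectified = np.abs(band)
    sos_lp = signal.butter(4, 10.0, btype="lowpass", fs=fs, output="sos")
    return signal.sosfiltfilt(sos_lp, rectified)


def resample_to(x, n_points=1000):
    """Linearly interpolate a stance-phase signal to a fixed length."""
    old_axis = np.linspace(0.0, 1.0, num=len(x))
    new_axis = np.linspace(0.0, 1.0, num=n_points)
    return np.interp(new_axis, old_axis, x)


def process_trial(raw_stance, flat_ground_peak):
    """Envelope -> normalize by the subject's flat-ground peak -> 1000 points."""
    envelope = linear_envelope(raw_stance)
    return resample_to(envelope / flat_ground_peak)


# Synthetic example: 0.7 s of noise standing in for one muscle's raw sEMG.
rng = np.random.default_rng(0)
raw = rng.standard_normal(840) * np.hanning(840)
flat_peak = linear_envelope(raw).max()  # peak of the flat-ground envelope
profile = process_trial(raw, flat_peak)
print(profile.shape)
```

Because the normalization uses the flat-ground peak, profiles from the other four environments can exceed 1.0 whenever a muscle is more active there than during level walking.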

2.5. Walking Environment Classification

The processed sEMG signals from all walking environments were sorted by muscle, then the sorted sEMG signals were labeled to match the actual walking environment they were collected from (Table 1). Entire sEMG profiles of 135 successful trials for each walking environment obtained from 27 subjects during the stance phase were used as the sole input to the classification model. The input data was divided into 2 parts: 80% was used for training the classification model and 20% was used for testing it.

Table 1.

Labeling table. The walking environments are labelled in a sequence.
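The 80%/20% division described above can be illustrated with a short sketch. The text does not say whether the split was random, stratified by environment, or grouped by subject, so the shuffled split below is an assumption, as are the function name `split_indices` and the seed. Only the counts follow from the text: 27 subjects, 5 environments, and 5 successful trials per environment.

```python
import random

N_SUBJECTS = 27
N_ENVIRONMENTS = 5
N_TRIALS = 5  # successful trials per subject and environment


def split_indices(n_profiles, train_fraction=0.8, seed=0):
    """Shuffle profile indices and cut them into train and test lists."""
    indices = list(range(n_profiles))
    random.Random(seed).shuffle(indices)  # assumption: simple random split
    n_train = round(n_profiles * train_fraction)
    return indices[:n_train], indices[n_train:]


n_profiles = N_SUBJECTS * N_ENVIRONMENTS * N_TRIALS
train_idx, test_idx = split_indices(n_profiles)
print(n_profiles, len(train_idx), len(test_idx))
```

A subject-wise split (holding out all trials of some participants) would be a stricter test of generalization to new users; the text does not indicate which variant was used.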

An ANN was used as the classifier, with the processed sEMG muscle activation data as its input. The ANN model consisted of an input layer, a single hidden layer with the rectified linear unit (ReLU) activation function, and an output layer. Entire sEMG profiles from the muscles of each joint being monitored during the stance phase were fed to the input layer. The output layer then classified the input data as being from one of the five walking environments. Softmax cross-entropy with logits was employed as the loss function, and Adaptive Moment Estimation (Adam) was used as the optimization algorithm to minimize the loss function [,,,].
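To make the architecture concrete, the following NumPy sketch implements a network of the kind described: one ReLU hidden layer, a softmax cross-entropy loss computed from logits, and Adam updates. It is a generic illustration, not the authors' model. The hidden-layer width, weight initialization, learning rate, and Adam constants are not reported in the text and are hypothetical here, as are all function names.

```python
import numpy as np

N_INPUT = 11 * 1000  # 11 muscles x 1000 samples (all-muscle model)
N_HIDDEN = 64        # hypothetical: hidden width is not reported
N_CLASSES = 5        # flat-ground, upstairs, downstairs, uphill, downhill


def init_params(rng, n_in=N_INPUT, n_hidden=N_HIDDEN, n_out=N_CLASSES):
    return {
        "W1": rng.standard_normal((n_in, n_hidden)) * np.sqrt(2.0 / n_in),
        "b1": np.zeros(n_hidden),
        "W2": rng.standard_normal((n_hidden, n_out)) * np.sqrt(2.0 / n_hidden),
        "b2": np.zeros(n_out),
    }


def forward(params, x):
    hidden = np.maximum(0.0, x @ params["W1"] + params["b1"])  # ReLU
    logits = hidden @ params["W2"] + params["b2"]
    return hidden, logits


def softmax_cross_entropy(logits, labels):
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    loss = -log_probs[np.arange(len(labels)), labels].mean()
    return loss, np.exp(log_probs)


def loss_and_grads(params, x, labels):
    hidden, logits = forward(params, x)
    loss, probs = softmax_cross_entropy(logits, labels)
    d_logits = probs.copy()
    d_logits[np.arange(len(labels)), labels] -= 1.0
    d_logits /= len(labels)
    d_hidden = d_logits @ params["W2"].T
    d_hidden[hidden <= 0.0] = 0.0  # ReLU gradient
    grads = {
        "W1": x.T @ d_hidden, "b1": d_hidden.sum(axis=0),
        "W2": hidden.T @ d_logits, "b2": d_logits.sum(axis=0),
    }
    return loss, grads


def init_adam(params):
    zeros = lambda: {k: np.zeros_like(v) for k, v in params.items()}
    return {"t": 0, "m": zeros(), "v": zeros()}


def adam_step(params, grads, state, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    state["t"] += 1
    for k in params:
        state["m"][k] = beta1 * state["m"][k] + (1 - beta1) * grads[k]
        state["v"][k] = beta2 * state["v"][k] + (1 - beta2) * grads[k] ** 2
        m_hat = state["m"][k] / (1 - beta1 ** state["t"])
        v_hat = state["v"][k] / (1 - beta2 ** state["t"])
        params[k] -= lr * m_hat / (np.sqrt(v_hat) + eps)


def predict(params, x):
    return forward(params, x)[1].argmax(axis=1)  # index of the largest logit
```

Training then amounts to repeatedly calling `loss_and_grads` on batches of training profiles and passing the gradients to `adam_step`; `predict` returns the class index with the largest logit for each test profile.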

Classification models were created for each muscle and for combinations of muscles, and each model’s accuracy was calculated on the test data. Specifically, a separate classification model was created for the flexor and extensor muscles of each joint (knee, ankle, and metatarsophalangeal (MTP) joint), and the classification accuracy of each model was then calculated using the test dataset as input (Figure 4).

Figure 4.

Schematics of the classification procedures using sEMG signals as the input to an artificial neural network (a) training the artificial neural network, (b) classification of walking environment.
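One way to build the per-group inputs described above is to store all profiles in a single array and select the rows for the muscles of interest. The sketch below assumes an array of shape (trials, muscles, samples) and assumes that the selected muscle profiles are concatenated into one input vector per trial; neither detail is stated in the text. The muscle groupings match those reported in the Results, while the group labels other than "ankle extensors" are descriptive names chosen here.

```python
import numpy as np

# Muscle order along axis 1 of the profile array (assumed ordering).
MUSCLES = ["RF", "VL", "VM", "ST", "BF", "TA", "Sol", "MG", "LG", "FHL", "EDL"]

# Groupings as reported in the Results; labels are illustrative.
GROUPS = {
    "knee extensors": ["VL", "VM", "RF"],
    "knee flexors": ["ST", "BF"],
    "ankle extensors": ["MG", "LG", "Sol"],
    "ankle flexor": ["TA"],
    "MTP flexor": ["FHL"],
    "MTP extensor": ["EDL"],
}


def group_input(profiles, muscles):
    """Select the given muscles and flatten each trial to one input vector.

    profiles: array of shape (n_trials, 11, 1000).
    Returns an array of shape (n_trials, len(muscles) * 1000).
    """
    rows = [MUSCLES.index(m) for m in muscles]
    return profiles[:, rows, :].reshape(len(profiles), -1)


# Placeholder data standing in for processed stance-phase profiles.
profiles = np.zeros((4, len(MUSCLES), 1000))
for name, muscles in GROUPS.items():
    print(name, group_input(profiles, muscles).shape)
```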

Accuracy, sensitivity, and specificity are model evaluation indicators commonly used with classification problems []. For our ANN model, accuracy and confusion matrices were used to evaluate its classification performance. The accuracy of the ANN model is defined by Equation (1):

$$\text{Accuracy} = \frac{N_{\text{correct}}}{N_{\text{total}}} \times 100\% \tag{1}$$

where $N_{\text{correct}}$ is the number of correctly classified environments, and $N_{\text{total}}$ is the total number of tests.

A confusion matrix is used to better quantify the specifics of the classification performance []. This matrix is defined as follows:

$$C = \begin{bmatrix} c_{11} & c_{12} & \cdots & c_{15} \\ c_{21} & c_{22} & \cdots & c_{25} \\ \vdots & \vdots & \ddots & \vdots \\ c_{51} & c_{52} & \cdots & c_{55} \end{bmatrix}$$

where the elements of the matrix are defined by Equation (2):

$$c_{ij} = \frac{n_{ij}}{n_{i}} \times 100\% \tag{2}$$

where $n_{ij}$ is the number of samples for the $i$th walking terrain that are identified as the $j$th walking terrain, and $n_{i}$ is the total number of samples for the $i$th walking terrain. The diagonal elements in the matrix represent the percentage of correct classification events and are used to find the model’s accuracy, while the other elements in the matrix show the percentage of misclassified events.

In addition, sensitivity and specificity were calculated to further evaluate the model’s performance. They are defined by Equations (3) and (4):

$$\text{Sensitivity} = \frac{TP}{TP + FN} \tag{3}$$

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{4}$$

where true positive (TP), true negative (TN), false positive (FP), and false negative (FN) are defined in Table 2.

Table 2.

The definitions of TP, FP, FN, and TN.

In this study, the current walking environment was considered as positive; the other four walking environments were then considered negative for that particular trial. As such, five sensitivities and specificities were calculated, for when each walking environment was taken as the positive result.
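The evaluation measures of Equations (1) to (4) can be computed from predicted and actual labels as in the following sketch. It uses the standard definitions with the one-versus-rest convention described above (one environment positive, the other four negative). The integer class codes and function names are hypothetical, and the toy labels are not data from this study.

```python
def confusion_counts(actual, predicted, n_classes=5):
    """counts[i][j] = number of samples of class i classified as class j."""
    counts = [[0] * n_classes for _ in range(n_classes)]
    for a, p in zip(actual, predicted):
        counts[a][p] += 1
    return counts


def accuracy(counts):
    """Percentage of correctly classified samples (diagonal over total)."""
    total = sum(sum(row) for row in counts)
    correct = sum(counts[i][i] for i in range(len(counts)))
    return 100.0 * correct / total


def row_percentages(counts):
    """Confusion matrix with each row expressed as a percentage of its class."""
    return [
        [100.0 * c / sum(row) if sum(row) else 0.0 for c in row]
        for row in counts
    ]


def sensitivity_specificity(counts, positive):
    """One-versus-rest sensitivity and specificity for class `positive`."""
    total = sum(sum(row) for row in counts)
    tp = counts[positive][positive]
    fn = sum(counts[positive]) - tp
    fp = sum(row[positive] for row in counts) - tp
    tn = total - tp - fn - fp
    return tp / (tp + fn), tn / (tn + fp)


# Toy example with three classes (illustrative labels only).
actual = [0, 0, 1, 1, 2, 2]
predicted = [0, 1, 1, 1, 2, 0]
counts = confusion_counts(actual, predicted, n_classes=3)
print(counts)
print(accuracy(counts))
print(sensitivity_specificity(counts, positive=1))
```

Calling `sensitivity_specificity` once per class yields the five sensitivity and specificity values mentioned above.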

3. Results

The ANN was able to classify each walking environment with a high degree of accuracy, achieving a success rate of 96.3% when using activation data from all the muscles being monitored (RF, VL, VM, ST, BF, MG, LG, Sol, TA, EDL, and FHL) (Table 3 and Table 4). The sensitivity and specificity for our model’s walking environment classification are shown in Table 4.

Table 3.

Confusion matrix for walking environment classification using sEMG signals as input.

Table 4.

The accuracy, sensitivity, and specificity of the model when using all muscle profiles.

When separate flexor and extensor muscle group activations for each joint were used as the classifying parameters, data from MG, LG, and Sol, which are the ankle extensor muscles, achieved the highest classification accuracy (MG, LG, and Sol: 88.9%; ST and BF: 75.6%; VL, VM, and RF: 68.1%; FHL: 67.4%; TA: 63.0%; EDL: 45.2%; Table 5).

Table 5.

The confusion matrix for classifying the walking environment using only sEMG signals from the flexor and extensor muscle groups of the ankle, knee, and metatarsophalangeal (MTP) joint.

When individual muscle activation was used as the classifying parameter, the highest classification accuracy was obtained when using MG muscle activation data (MG: 81.5%, LG: 77.0%, ST: 72.6%, VM: 68.9%, Sol: 68.1%, RF: 67.4%, FHL: 67.4%, BF: 66.7%, TA: 63.0%, VL: 57.0%, and EDL: 45.2%; Table 6).

Table 6.

The confusion matrix for classifying the walking environment using only sEMG signals from the individual muscles around the ankle, knee, and MTP joints.
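As a quick arithmetic check on the accuracies reported in this section, each percentage can be converted into an implied number of correctly classified test profiles. This assumes every model was evaluated on the same 135 test profiles (20% of 675), which is consistent with Table A1 but not stated explicitly for each model; the correct-count values printed below are therefore inferred, not reported.

```python
N_TEST = 135  # assumption: 20% of 27 subjects x 5 environments x 5 trials

# Accuracies (%) as reported in the Results section.
REPORTED = {
    "All muscles": 96.3,
    "MG+LG+Sol": 88.9, "ST+BF": 75.6, "VL+VM+RF": 68.1,
    "MG": 81.5, "LG": 77.0, "ST": 72.6, "VM": 68.9, "Sol": 68.1,
    "RF": 67.4, "FHL": 67.4, "BF": 66.7, "TA": 63.0, "VL": 57.0, "EDL": 45.2,
}


def implied_correct(accuracy_percent, n_test=N_TEST):
    """Nearest whole number of correct classifications for a given accuracy."""
    return round(accuracy_percent / 100.0 * n_test)


for name, acc in REPORTED.items():
    k = implied_correct(acc)
    print(f"{name:12s} {acc:5.1f}%  ~ {k}/{N_TEST} = {100.0 * k / N_TEST:.1f}%")
```

Every reported value matches a whole number of correct classifications out of 135 after rounding to one decimal place, so the percentages are at least internally consistent with a 135-profile test set.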

4. Discussion

This study proposed an artificial neural network-based approach to classify whether a human user was walking on flat-ground, upstairs, downstairs, up a ramp, or down a ramp using only sEMG profiles collected from muscles in the lower extremities. When the flexor and extensor muscle groups of each joint (i.e., the knee, ankle, and MTP joints) were used separately as the input to the model, the ankle extensors provided the best classification performance. This study demonstrates that it is possible to accurately classify the current walking environment with an ANN using only sEMG signals. The results of this study show that classification accuracy was highest (96.3%) when using muscle activation data from all monitored muscles: the VM, VL, RF, ST, BF, TA, MG, LG, Sol, FHL, and EDL. It should be noted that this accuracy is comparable to, or even higher than, the accuracies of other studies that used a combination of multiple types of sensors. Kyeong et al. reported a classification accuracy of 96.1% when training and testing the model using the data obtained from multiple sensors (including sEMG, position sensors, GRF sensors, and interaction force sensors). However, the classification accuracy was 76.7% when training and testing the model using only the data obtained from the sEMG sensors []. Joshi et al. reported that their model classified the walking environment correctly 67.1% of the time when relying only on data from sEMG sensors []. In these studies, the walking environment was classified using a machine learning method with time-domain features, calculated in a feature extraction process, as the parameters. In our study, by contrast, we utilized the entire sEMG profile collected during each participant’s stance phase as input data for our deep learning method. Because the muscle activation data from every time point are retained, the input may reflect any peak amplitude characteristics of the sEMG signals during the stance phase; we believe this is the reason for the higher accuracy of our model compared to other studies. Therefore, our results suggest that it is possible to classify the walking environment with a high degree of accuracy using only sEMG sensors when the entire muscle activation profile is used as input to the classification model.

This study found that muscle activation data from the ankle extensor group of muscles (i.e., the MG, LG, and Sol) gave the highest accuracy when classifying the walking environment. This result indicates that ankle extensor muscle activation provides the most important data when classifying the walking environment. This finding might be linked to differences in the lower limb joint kinetics of the sagittal plane when walking in different environments. Lay et al. reported that there were significant differences in the peak ankle joint moment in both early and late stance phases when walking on flat-ground, uphill, or downhill []. In addition, significant differences were found in the peak knee joint moment during the late stance phase between walking on flat-ground and downhill []. In the case of walking up and down stairs, the peak ankle joint moment has shown significant differences in the early stance phase between walking up stairs and walking down []. Differences in the peak knee joint moment appear in the late stance phase, regardless of whether we are walking upstairs or walking down, compared to walking on flat-ground []. Taking these previous findings together, we may conclude that the ankle joint has more significance than the knee joint in relation to classifying the current walking environment; this, in combination with the results of this study, suggests that muscle activation data from the ankle extensors should be monitored to properly control walking assistive devices as the user moves between different environments.

Although this study showed a high degree of accuracy in classifying the current walking environment, there is still room to increase the classification rate of our system for terrain detection and to apply proper control of walking assistive devices. This study considered only the current, steady-state condition while walking in various environments; however, a system that could provide early detection of transitions between terrains would be preferable to enable timely control of walking assistive devices. Thus, future study into detecting transitions between walking terrains is warranted. In addition, only male subjects were included in this study, so the current results cannot be generalized to females. To further enhance classification performance and generalize the classification model, more investigation into classifying various walking terrains with a larger sample size, including female subjects, is warranted.

5. Conclusions

This study proposed an ANN-based approach to classifying the user’s walking condition as flat-ground, upstairs, downstairs, uphill, or downhill. The main contribution of this study is the classification of the walking environment by applying an entire sEMG profile from the stance phase as the only input to the ANN classification model. This study suggests that using all the sEMG data from every monitored muscle group in the lower extremities is sufficient to determine a user’s gait characteristics as they change according to the walking conditions and that current deep learning methods can extract these gait characteristics successfully from these inputs. In conclusion, the current walking environment could be accurately classified using an ANN with only sEMG signals as input.

Author Contributions

Conceptualization, P.K. and J.L.; methodology, P.K.; software, P.K. and J.L.; validation, P.K. and J.L.; formal analysis, P.K.; investigation, P.K. and J.L.; resources, P.K. and J.L.; data curation, P.K.; writing—original draft preparation, P.K.; writing—review and editing, C.S.S.; visualization, P.K.; supervision, C.S.S.; project administration, C.S.S.; and funding acquisition, C.S.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (NRF-2019R1A2C1089522). This research was supported by Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (NRF-2020R1I1A1A01072585).

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Institutional Review Board of Sogang University (approval no. SGUIRB-A-1910-42 on 14 October 2019).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data sharing not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

The number of input data points used for training and testing each model.

| Muscle Used for Input | Number of Training Data Points | Number of Testing Data Points |
|---|---|---|
| All muscles | 5,940,000 | 1,485,000 |
| VL, VM, RF | 1,620,000 | 405,000 |
| ST, BF | 1,080,000 | 270,000 |
| LG, MG, Sol | 1,620,000 | 405,000 |
| FHL | 540,000 | 135,000 |
| EDL | 540,000 | 135,000 |
| RF | 540,000 | 135,000 |
| VL | 540,000 | 135,000 |
| VM | 540,000 | 135,000 |
| ST | 540,000 | 135,000 |
| BF | 540,000 | 135,000 |
| MG | 540,000 | 135,000 |
| LG | 540,000 | 135,000 |
| Sol | 540,000 | 135,000 |
| TA | 540,000 | 135,000 |

RF, VL, VM, ST, BF, MG, LG, Sol, TA, FHL, and EDL indicate the rectus femoris, vastus lateralis, vastus medialis, semitendinosus, biceps femoris, medial gastrocnemius, lateral gastrocnemius, soleus, tibialis anterior, flexor hallucis longus, and extensor digitorum longus, respectively.
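The entries of Table A1 follow from the study design, as the short check below illustrates. It assumes that each data point is one interpolated sEMG sample, so that a model using k muscles receives k × 1000 points per trial, with 540 training and 135 testing trials (80% and 20% of 675). This interpretation is inferred from the numbers rather than stated in the text, and the function name is hypothetical.

```python
POINTS_PER_MUSCLE = 1000  # stance phase interpolated to 1000 points
N_TRAIN_TRIALS = 540      # 80% of 27 subjects x 5 environments x 5 trials
N_TEST_TRIALS = 135       # remaining 20%


def data_points(n_muscles):
    """Return (training, testing) data point counts for a model input."""
    per_trial = n_muscles * POINTS_PER_MUSCLE
    return per_trial * N_TRAIN_TRIALS, per_trial * N_TEST_TRIALS


for label, n_muscles in [("All muscles", 11), ("VL, VM, RF", 3),
                         ("ST, BF", 2), ("Single muscle", 1)]:
    train, test = data_points(n_muscles)
    print(f"{label:14s} {train:>9,d} {test:>9,d}")
```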

References

- Han, J.; Ding, Q.; Xiong, A.; Zhao, X. A state-space EMG model for the estimation of continuous joint movements. IEEE Trans. Ind. Electron. 2015, 62, 4267–4275.
- Huang, R.; Cheng, H.; Qiu, J.; Zhang, J. Learning Physical Human-Robot Interaction with Coupled Cooperative Primitives for a Lower Exoskeleton. IEEE Trans. Autom. Sci. Eng. 2019, 16, 1566–1574.
- Li, K.D.; Zhang, J.; Wang, L.; Zhang, M.; Li, J.; Bao, S. A review of the key technologies for sEMG-based human-robot interaction systems. Biomed. Signal Process. Control 2020, 62, 102074.
- Huang, J.; Huo, W.; Xu, W.; Mohammed, S.; Amirat, Y. Control of Upper-Limb Power-Assist Exoskeleton Using a Human-Robot Interface Based on Motion Intention Recognition. IEEE Trans. Autom. Sci. Eng. 2015, 12, 1257–1270.
- Wang, W.; Qin, L.; Yuan, X.; Ming, X.; Sun, T.; Liu, Y. Bionic control of exoskeleton robot based on motion intention for rehabilitation training. Adv. Robot. 2019, 33, 590–601.
- Suzuki, K.; Mito, G.; Kawamoto, H.; Hasegawa, Y.; Sankai, Y. Intention-based walking support for paraplegia patients with Robot Suit HAL. Adv. Robot. 2007, 21, 1441–1469.
- Vallery, H.; van Asseldonk, E.H.F.; Buss, M.; van der Kooij, H. Reference trajectory generation for rehabilitation robots: Complementary limb motion estimation. IEEE Trans. Neural Syst. Rehabil. Eng. 2009, 17, 23–30.
- Accogli, A.; Grazi, L.; Crea, S.; Panarese, A.; Carpaneto, J.; Vitiello, N.; Micera, S. EMG-based detection of user’s intentions for human-machine shared control of an assistive upper-limb exoskeleton. In Wearable Robotics: Challenges and Trends; Springer: Berlin, Germany, 2017; pp. 181–185.
- Nam, Y.; Koo, B.; Cichocki, A.; Choi, S. GOM-face: GKP, EOG, and EMG-based multimodal interface with application to humanoid robot control. IEEE Trans. Biomed. Eng. 2014, 61, 453–462.
- Wang, C.; Wu, X.; Wang, Z.; Ma, Y. Implementation of a Brain-Computer Interface on a Lower-Limb Exoskeleton. IEEE Access 2018, 6, 38524–38534.
- Khoshdel, V.; Akbarzadeh, A. An optimized artificial neural network for human-force estimation: Consequences for rehabilitation robotics. Ind. Robot 2018, 45, 416–423.
- Young, A.J.; Smith, L.H.; Rouse, E.J.; Hargrove, L.J. Classification of simultaneous movements using surface EMG pattern recognition. IEEE Trans. Biomed. Eng. 2013, 60, 1250–1258.
- Young, A.J.; Gannon, H.; Ferris, D.P. A Biomechanical Comparison of Proportional Electromyography Control to Biological Torque Control Using a Powered Hip Exoskeleton. Front. Bioeng. Biotechnol. 2017, 5.
- Zhai, X.; Jelfs, B.; Chan, R.H.M.; Tin, C. Self-recalibrating surface EMG pattern recognition for neuroprosthesis control based on convolutional neural network. Front. Neurosci. 2017, 11, 379.
- Alkan, A.; Günay, M. Identification of EMG signals using discriminant analysis and SVM classifier. Expert Syst. Appl. 2012, 39, 44–47.
- Yen, V.T.; Nan, W.Y.; van Cuong, P. Recurrent fuzzy wavelet neural networks based on robust adaptive sliding mode control for industrial robot manipulators. Neural Comput. Appl. 2019, 31, 6945–6958.
- Morbidoni, C.; Cucchiarelli, A.; Fioretti, S.; Di Nardo, F. A deep learning approach to EMG-based classification of gait phases during level ground walking. Electronics 2019, 8, 894.
- Ahsan, M.R.; Ibrahimy, M.I.; Khalifa, O.O. Electromygraphy (EMG) signal based hand gesture recognition using artificial neural network (ANN). In Proceedings of the 2011 International Conference on Mechatronics (ICOM), Kuala Lumpur, Malaysia, 17–19 May 2011.
- Kyeong, S.; Shin, W.; Yang, M.; Heo, U.; Feng, J.; Kim, J. Recognition of walking environments and gait period by surface electromyography. Front. Inf. Technol. Electron. Eng. 2019, 20, 342–352.
- Chowdhury, R.H.; Reaz, M.B.I.; Ali, M.A.B.M.; Bakar, A.A.A.; Chellappan, K.; Chang, T.G. Surface electromyography signal processing and classification techniques. Sensors 2013, 13, 12431–12466.
- Nazmi, N.; Rahman, M.A.A.; Yamamoto, S.I.; Ahmad, S.A.; Zamzuri, H.; Mazlan, S.A. A review of classification techniques of EMG signals during isotonic and isometric contractions. Sensors 2016, 16, 1304.
- Roy, S.S.; Samanta, K.; Chatterjee, S.; Dey, S.; Nandi, A.; Bhowmik, R.; Mondal, S. Hand Movement Recognition Using Cross Spectrum Image Analysis of EMG Signals-A Deep Learning Approach. In Proceedings of the 2020 National Conference on Emerging Trends on Sustainable Technology and Engineering Applications (NCETSTEA), Durgapur, India, 7–8 February 2020.
- Alexander, N.; Schwameder, H. Effect of sloped walking on lower limb muscle forces. Gait Posture 2016, 47, 62–67.
- Gottschall, J.S.; Okita, N.; Sheehan, R.C. Muscle activity patterns of the tensor fascia latae and adductor longus for ramp and stair walking. J. Electromyogr. Kinesiol. 2012, 22, 67–73.
- Sheehan, R.C.; Gottschall, J.S. At similar angles, slope walking has a greater fall risk than stair walking. Appl. Ergon. 2012, 43, 473–478.
- Riener, R.; Rabuffetti, M.; Frigo, C. Stair Ascent and Descent at Different Inclinations. Gait Posture 2002, 15, 32–44.
- Lawson, B.E.; Varol, H.A.; Huff, A.; Erdemir, E.; Goldfarb, M. Control of stair ascent and descent with a powered transfemoral prosthesis. IEEE Trans. Neural Syst. Rehabil. Eng. 2013, 21, 466–473.
- Zhang, Z.; Yang, K.; Qian, J.; Zhang, L. Real-Time Surface EMG Pattern Recognition for Hand Gestures Based on an Artificial Neural Network. Sensors 2019, 19, 3170.
- Earhart, G.M.; Bastian, A.J. Form switching during human locomotion: Traversing wedges in a single step. J. Neurophysiol. 2000, 84, 605–615.
- Lee, J.; Yoon, Y.J.; Shin, C.S. The Effect of Backpack Load Carriage on the Kinetics and Kinematics of Ankle and Knee Joints During Uphill Walking. J. Appl. Biomech. 2017, 33, 397–405.
- Hong, Y.N.G.; Lee, J.; Shin, C.S. Transition versus continuous slope walking: Adaptation to change center of mass velocity in young men. Appl. Bionics Biomech. 2018, 2018, 2028638.
- Masuda, K.; Kikuhara, N.; Takahashi, H.; Yamanaka, K. The relationship between muscle cross-sectional area and strength in various isokinetic movements among soccer players. J. Sports Sci. 2003, 21, 851–858.
- Lee, J.; Song, Y.; Shin, C.S. Effect of the sagittal ankle angle at initial contact on energy dissipation in the lower extremity joints during a single-leg landing. Gait Posture 2018, 62, 99–104.
- Jeong, J.; Choi, D.H.; Song, Y.; Shin, C.S. Muscle Strength Training Alters Muscle Activation of the Lower Extremity during Side-Step Cutting in Females. J. Mot. Behav. 2020, 52, 703–712.
- Merletti, R. Standards for reporting EMG data. J. Electromyogr. Kinesiol. 1999, 9, 3–4.
- Bartlett, J.L.; Sumner, B.; Ellis, R.G.; Kram, R. Activity and functions of the human gluteal muscles in walking, running, sprinting, and climbing. Am. J. Phys. Anthropol. 2014, 153, 124–131.
- Hong, Y.N.G.; Lee, J.; Kim, P.; Shin, C.S. Gender Differences in the Activation and Co-activation of Lower Extremity Muscles During the Stair-to-Ground Descent Transition. Int. J. Precis. Eng. Manuf. 2020, 21, 1563–1570.
- Giarmatzis, G.; Zacharaki, E.I.; Moustakas, K. Real-time prediction of joint forces by motion capture and machine learning. Sensors 2020, 20, 6933.
- Jang, H.K.; Han, H.; Yoon, S.W. Comprehensive Monitoring of Bad Head and Shoulder Postures by Wearable Magnetic Sensors and Deep Learning. IEEE Sens. J. 2020, 20, 13768–13775.
- Kingma, D.P.; Ba, J.L. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.
- Yin, S.; Chen, C.; Zhu, H.; Wang, X.; Chen, W. Neural networks for pathological gait classification using wearable motion sensors. In Proceedings of the 2019 IEEE Biomedical Circuits and Systems Conference (BioCAS), Nara, Japan, 17–19 October 2019.
- Nair, S.S.; French, R.M.; Laroche, D.; Thomas, E. The application of machine learning algorithms to the analysis of electromyographic patterns from arthritic patients. IEEE Trans. Neural Syst. Rehabil. Eng. 2010, 18, 174–184.
- Gao, S.; Wang, Y.; Fang, C.; Xu, L. A smart terrain identification technique based on electromyography, ground reaction force, and machine learning for lower limb rehabilitation. Appl. Sci. 2020, 10, 2638.
- Joshi, D.; Hahn, M.E. Terrain and direction classification of locomotion transitions using neuromuscular and mechanical input. Ann. Biomed. Eng. 2016, 44, 1275–1284.
- Lay, A.N.; Hass, C.J.; Gregor, R.J. The effects of sloped surfaces on locomotion: A kinematic and kinetic analysis. J. Biomech. 2006, 39, 1621–1628.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).