First Step toward Gestural Recognition in Harsh Environments

Abstract

1. Introduction

2. Related Work

2.1. Ground and Aerial Robots in Harsh Environments

2.2. Gestural Recognition in Harsh Environments

2.3. Remote Sensing in Harsh Environments

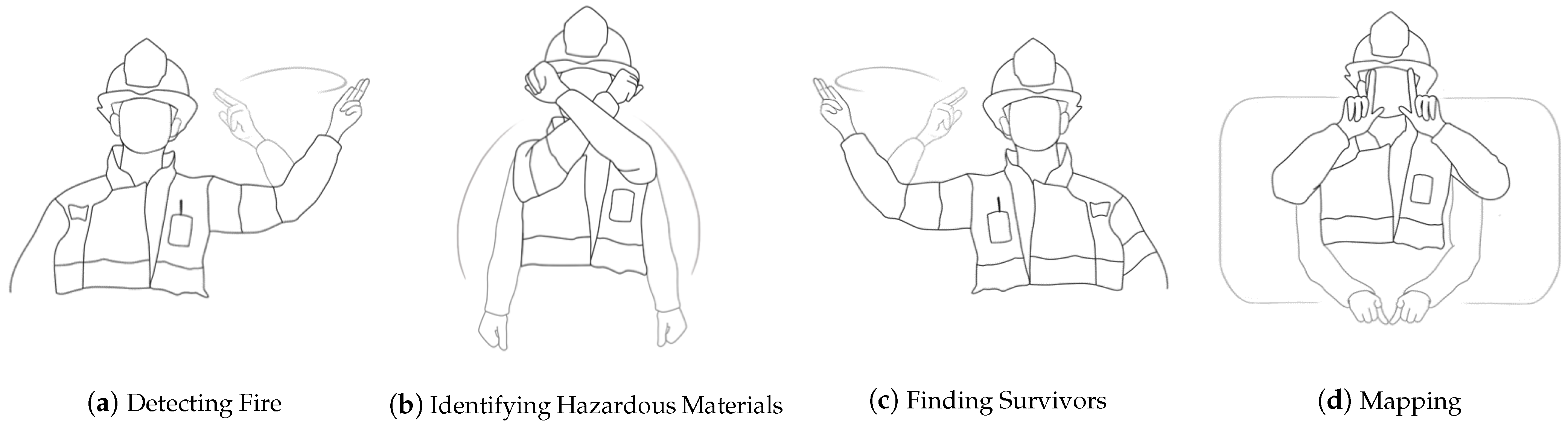

3. Gestures for Gestural Communication in Harsh Environments

- Mapping. The firefighters envisioned sending the drone to map a floor or even an entire building: finding out how many rooms the building contains and what its structure looks like, and creating a map of it.

- Identifying Hazardous Materials. The firefighters proposed that the drone could identify the type of hazardous material (such as gas or chemicals) and the source and size of the leak, which is a scenario that they encounter in industrial buildings.

- Detecting Fires. The firefighters proposed that the drone could identify fire spots. This is in line with current drone usage in the country.

- Finding Survivors. The firefighters suggested sending the drone to search for trapped civilians or people otherwise in danger. They would like the drone to gather information on the number of people, their condition, and their location. They also proposed that the drone could directly help people who have fallen into holes or deep tunnels. The firefighters further mentioned a situation that they referred to as “fear for human life and aid to civilians”. This covers cases in which there is concern for civilians who are locked in their homes, do not answer the door, and have usually not been seen by relatives or neighbors, who then call the firefighters to break in. Participants mentioned that a drone could fly into an apartment through a window to help them decide whether they need to break in.

4. Gesture Recognition System Prototyping

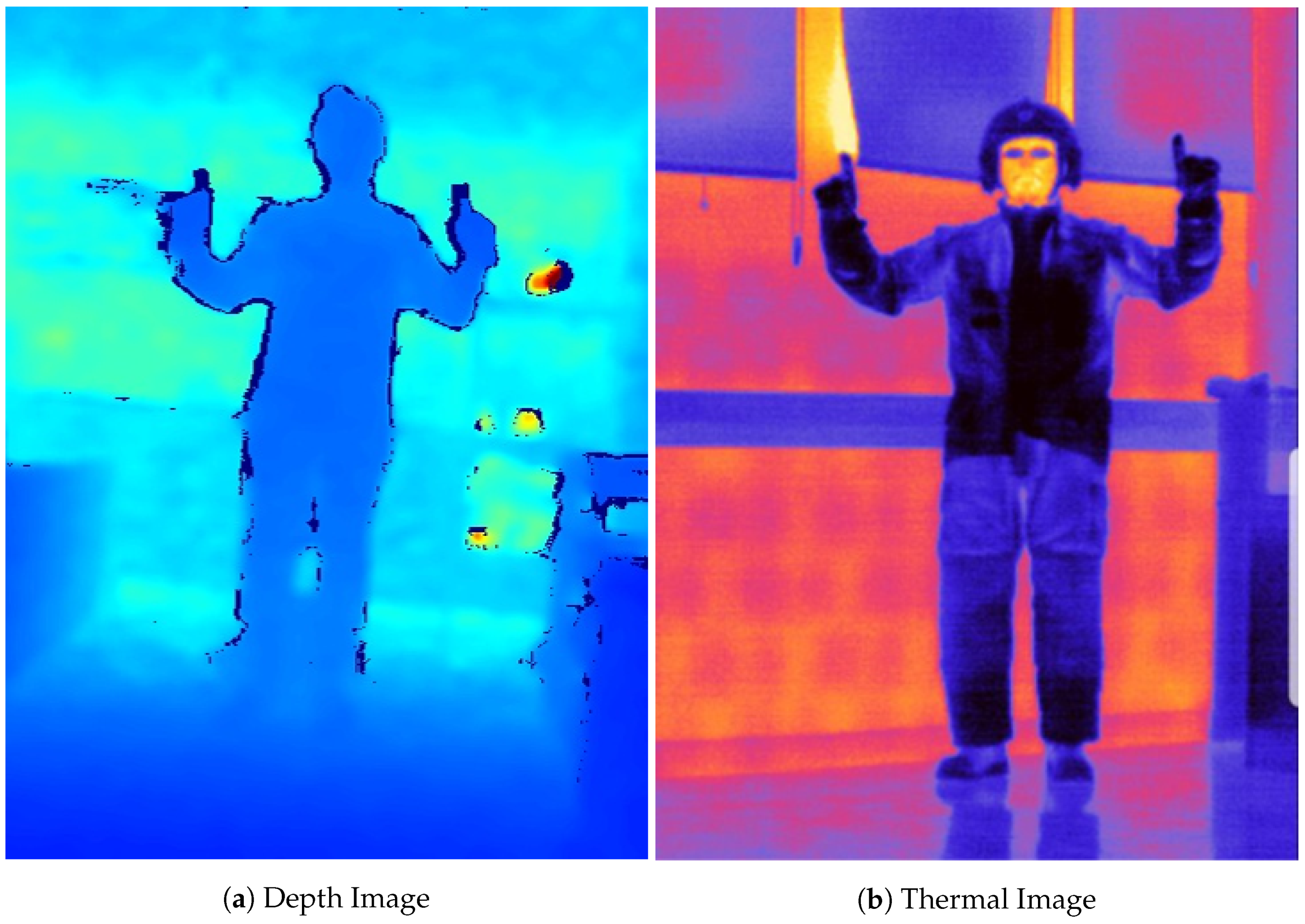

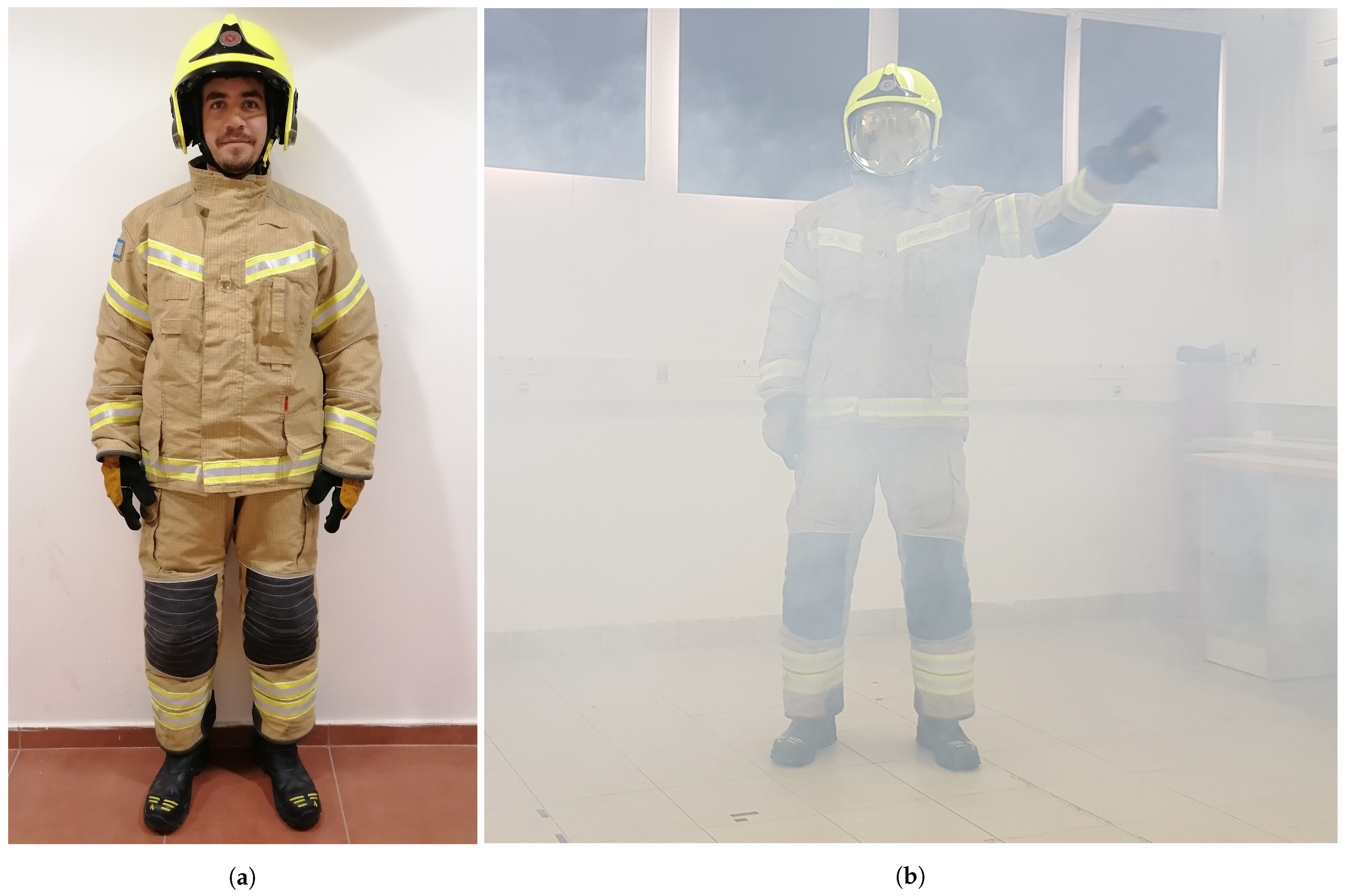

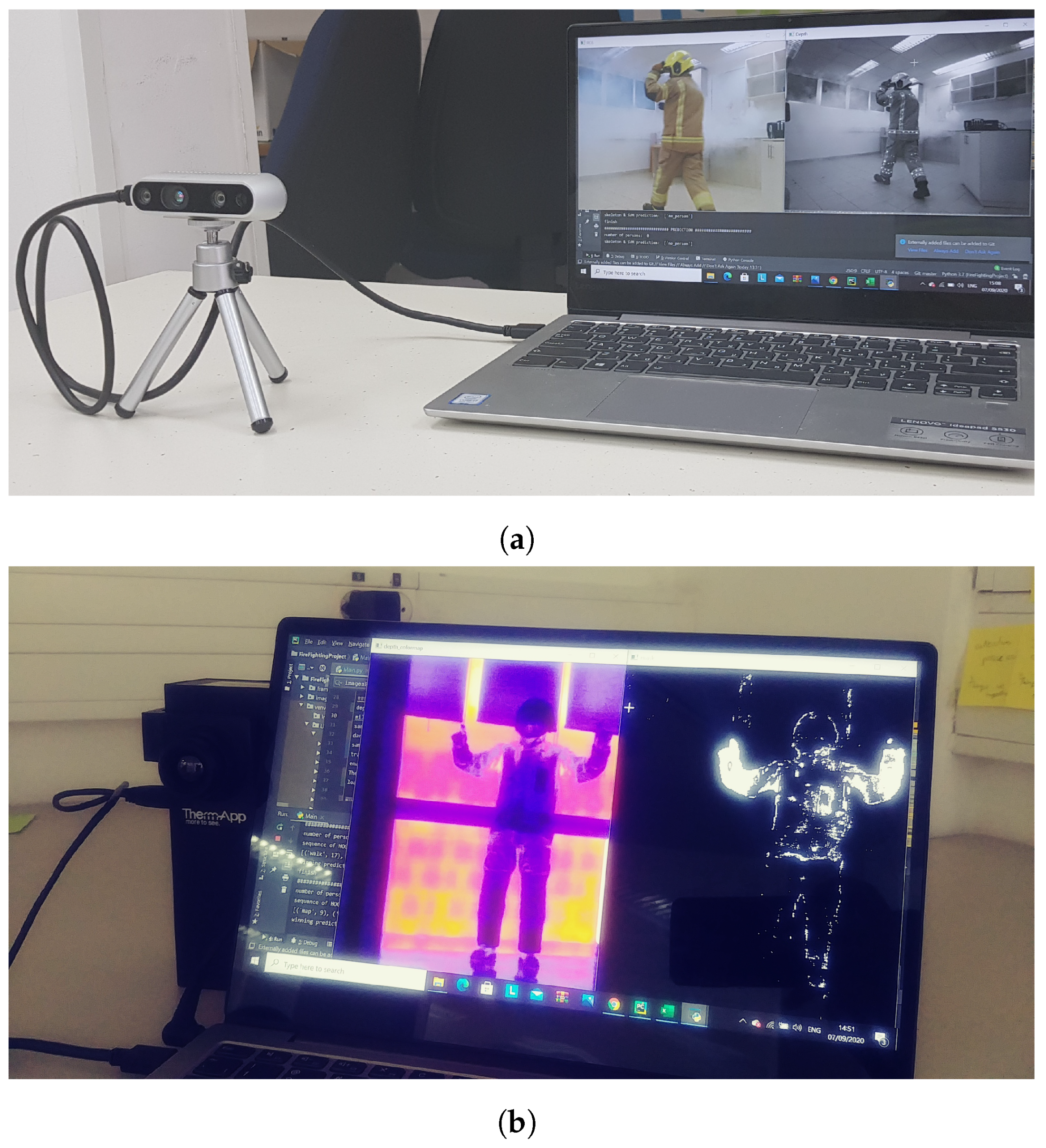

4.1. Visual Sensors

4.2. Algorithms

- Skeleton Extraction. The person's key points are extracted using the RealSense SDK (Cubemos), which provides a vector of 18 (x, y) coordinates for each frame. Each gesture consists of 20 frames, which form a vector of 720 values (20 frames × 18 key points × 2 coordinates). Skeleton extraction is an established approach for extracting features for body gesture recognition (e.g., [54]) and can be implemented with various APIs, such as OpenPose [55]. Compared to other feature extraction techniques, such as 3D CNNs, which are time-consuming and can be difficult to train, skeleton features are concise and are produced by a pre-trained CNN [54].

- Histogram of oriented gradients (HOG). Each frame is represented by a HOG descriptor [56], so each gesture forms a vector of 20 concatenated HOG vectors. We used scikit-image to extract the HOG features with 10 × 10 pixels per cell, 9 orientation bins, 2 × 2 cells per block, and L2 block normalization [57]. To improve accuracy, we applied motion-based background subtraction with OpenCV's BackgroundSubtractorMOG2 [58] before HOG extraction, using the following parameters: history = 150 (number of recent frames that affect the background model), varThreshold = 40 (variance threshold for matching a pixel to the background model), and detectShadows = False.

- Frame Vote. We designed this algorithm to analyze each frame individually, a different paradigm from the previous two algorithms, which classify the 20-frame sequence as a whole. For each frame, a machine learning model (HOG + SVM or CNN) classifies the gesture. After 20 frames, the per-frame predictions are compared and the gesture predicted most often is selected (majority vote). The algorithm is illustrated in Figure 3. Because this method ignores the order of the frames, it may be more robust when sensing continuity is unreliable, as can be the case in harsh environments. It is also a good fit for the continuous, repeated gestures elicited in the previous study, for which the order in which the movements are performed matters less.
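The skeleton-extraction step above flattens 20 frames of 18 (x, y) key points into a single 720-value vector. The following is a minimal NumPy sketch of that flattening only, assuming the key points for each frame have already been obtained (for example from the Cubemos SDK, whose API is not reproduced here) and stored as an array of shape (20, 18, 2). The function name `skeleton_gesture_vector` and the frame-major ordering of the values are illustrative assumptions, not the authors' code.

```python
import numpy as np

N_FRAMES = 20      # frames per gesture, as stated in the text
N_KEYPOINTS = 18   # skeleton key points per frame
N_COORDS = 2       # (x, y)


def skeleton_gesture_vector(keypoints):
    """Flatten per-frame skeleton key points into one gesture feature vector.

    `keypoints` is assumed (hypothetically) to have shape (20, 18, 2):
    frames x key points x (x, y). The output is frame-major:
    [f0_k0_x, f0_k0_y, f0_k1_x, ..., f19_k17_y].
    """
    arr = np.asarray(keypoints, dtype=np.float32)
    expected = (N_FRAMES, N_KEYPOINTS, N_COORDS)
    if arr.shape != expected:
        raise ValueError(f"expected shape {expected}, got {arr.shape}")
    return arr.reshape(-1)  # 20 * 18 * 2 = 720 values


# Random coordinates stand in for real SDK output.
rng = np.random.default_rng(0)
fake_keypoints = rng.uniform(0, 640, size=(N_FRAMES, N_KEYPOINTS, N_COORDS))
vec = skeleton_gesture_vector(fake_keypoints)
print(vec.shape)  # (720,)
```

Stacking one such vector per recorded gesture yields an (n_gestures, 720) matrix that could be passed to an SVM such as scikit-learn's `sklearn.svm.SVC`; the kernel and hyperparameters the authors used are not stated in this section.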
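The HOG step above reports concrete parameters for scikit-image's `hog` and OpenCV's `BackgroundSubtractorMOG2`. The sketch below wires them together under stated assumptions: frames are 8-bit single-channel images of equal size, the MOG2 foreground mask is used to zero out background pixels before HOG is computed (the text does not say whether HOG runs on the mask itself or on the masked frame), and a single subtractor persists across the video stream. The helper `hog_feature_length` reproduces scikit-image's block-counting rule so the size of the 20-frame vector can be checked without either library; both function names are hypothetical.

```python
import numpy as np

# HOG parameters as reported in the text.
PIXELS_PER_CELL = (10, 10)
CELLS_PER_BLOCK = (2, 2)
ORIENTATIONS = 9
N_FRAMES = 20


def hog_feature_length(height, width):
    """Length of one flattened scikit-image HOG vector for a height x width frame."""
    cells_y = height // PIXELS_PER_CELL[0]
    cells_x = width // PIXELS_PER_CELL[1]
    # Blocks slide one cell at a time, as in skimage.feature.hog.
    blocks_y = cells_y - CELLS_PER_BLOCK[0] + 1
    blocks_x = cells_x - CELLS_PER_BLOCK[1] + 1
    return blocks_y * blocks_x * CELLS_PER_BLOCK[0] * CELLS_PER_BLOCK[1] * ORIENTATIONS


def hog_gesture_vector(frames, subtractor=None):
    """Background-subtract each frame, compute its HOG, and concatenate them.

    OpenCV and scikit-image are imported lazily so that hog_feature_length
    can be used without them installed.
    """
    import cv2
    from skimage.feature import hog

    if subtractor is None:
        # A fresh subtractor only sees these frames; in a live system, pass one
        # that has been fed the whole stream so history=150 is meaningful.
        subtractor = cv2.createBackgroundSubtractorMOG2(
            history=150, varThreshold=40, detectShadows=False)
    features = []
    for frame in frames:
        fg_mask = subtractor.apply(frame)              # nonzero = moving foreground
        foreground = np.where(fg_mask > 0, frame, 0)   # assumption: mask the frame
        features.append(hog(foreground,
                            orientations=ORIENTATIONS,
                            pixels_per_cell=PIXELS_PER_CELL,
                            cells_per_block=CELLS_PER_BLOCK,
                            block_norm="L2"))
    return np.concatenate(features)
```

For a hypothetical 480 × 640 frame, `hog_feature_length(480, 640)` gives 47 × 63 blocks × 36 values = 106,596 features per frame, so a 20-frame gesture vector would hold 2,131,920 values. The frame resolution actually used for training is not given in this section; downscaling the frames shrinks the vector roughly quadratically.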
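The Frame Vote algorithm above can be expressed as a small, classifier-agnostic function. In this sketch the per-frame classifier (HOG + SVM or a CNN in the text) is passed in as a callable; the name `frame_vote`, the example labels, and the tie-breaking rule are assumptions for illustration, since the text does not say how ties are resolved.

```python
from collections import Counter
from typing import Callable, Hashable, Sequence, TypeVar

F = TypeVar("F")


def frame_vote(frames: Sequence[F], classify_frame: Callable[[F], Hashable]) -> Hashable:
    """Classify each frame independently and return the majority label.

    Frame order does not affect the vote counts, and a dropped frame simply
    contributes no vote. Ties go to the label predicted first, because
    Counter.most_common orders equal counts by first occurrence.
    """
    if not frames:
        raise ValueError("need at least one frame")
    votes = Counter(classify_frame(f) for f in frames)
    label, _count = votes.most_common(1)[0]
    return label


# Toy example: the "classifier" reads a precomputed label for each of 20 frames.
predictions = ["fire"] * 11 + ["mapping"] * 6 + ["survivors"] * 3
print(frame_vote(predictions, classify_frame=lambda p: p))  # fire
```

Keeping the classifier as a parameter mirrors the text, where the same voting step is reused with both HOG + SVM and CNN frame classifiers.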

4.3. Data Collection and Training

4.4. Evaluation

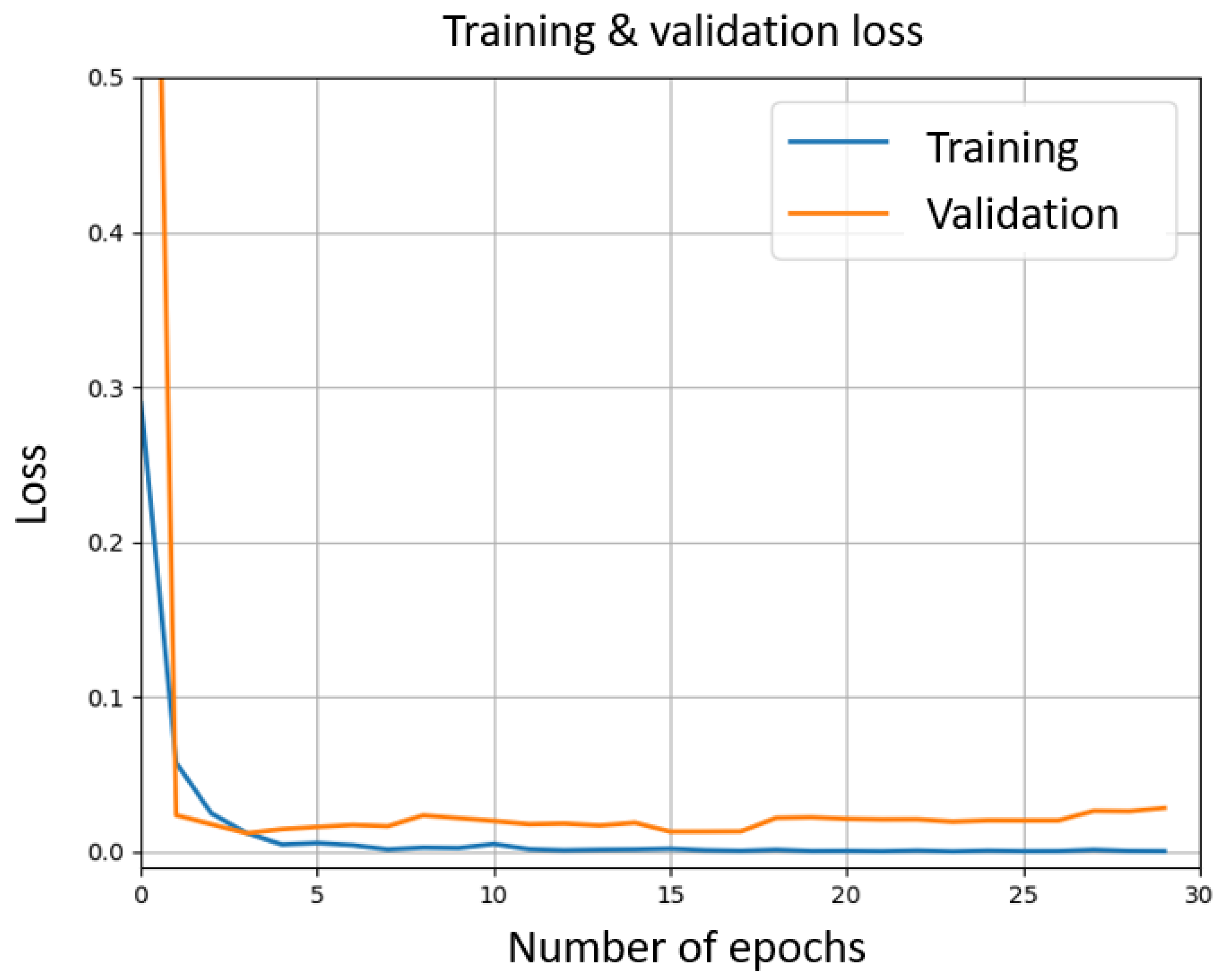

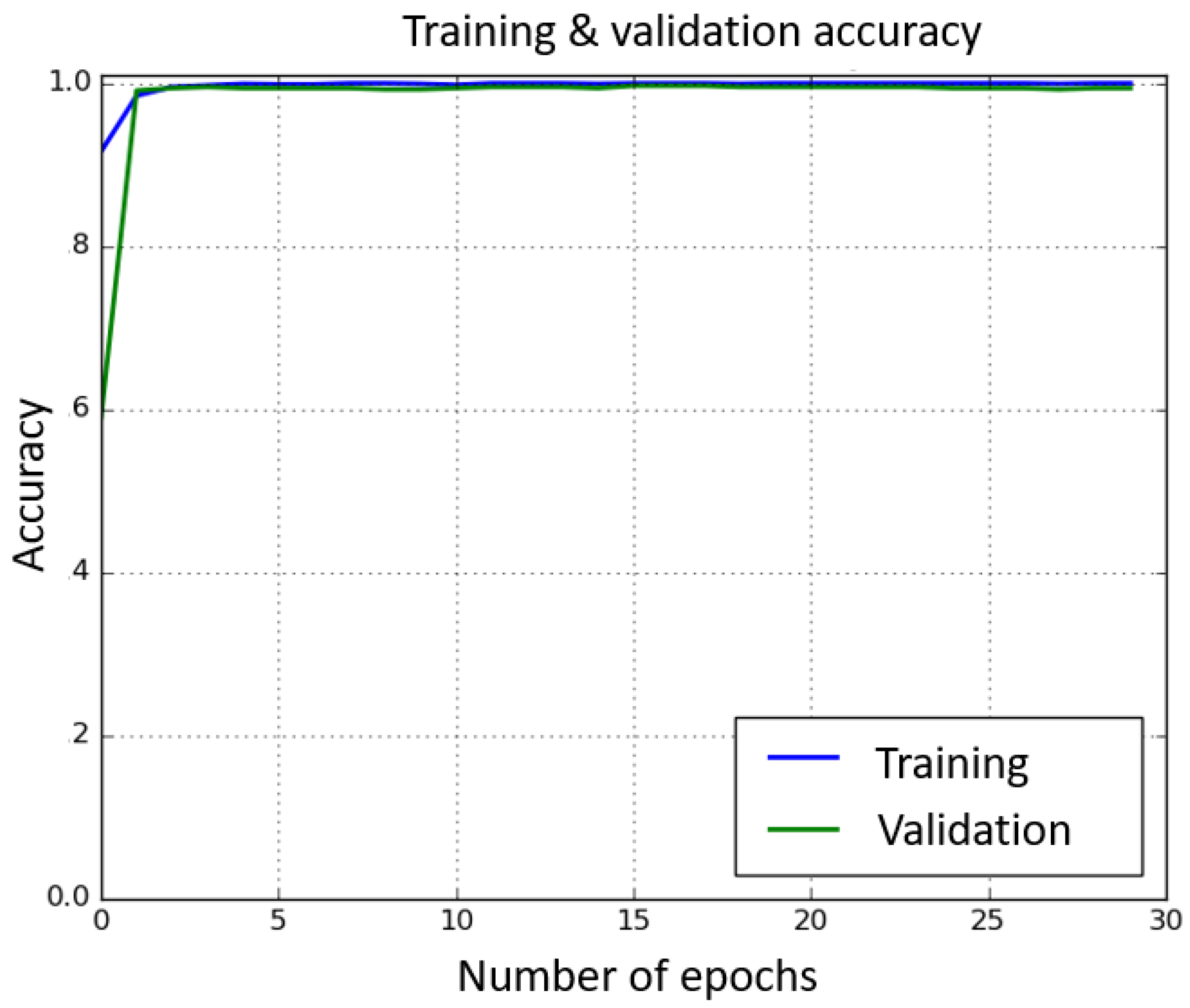

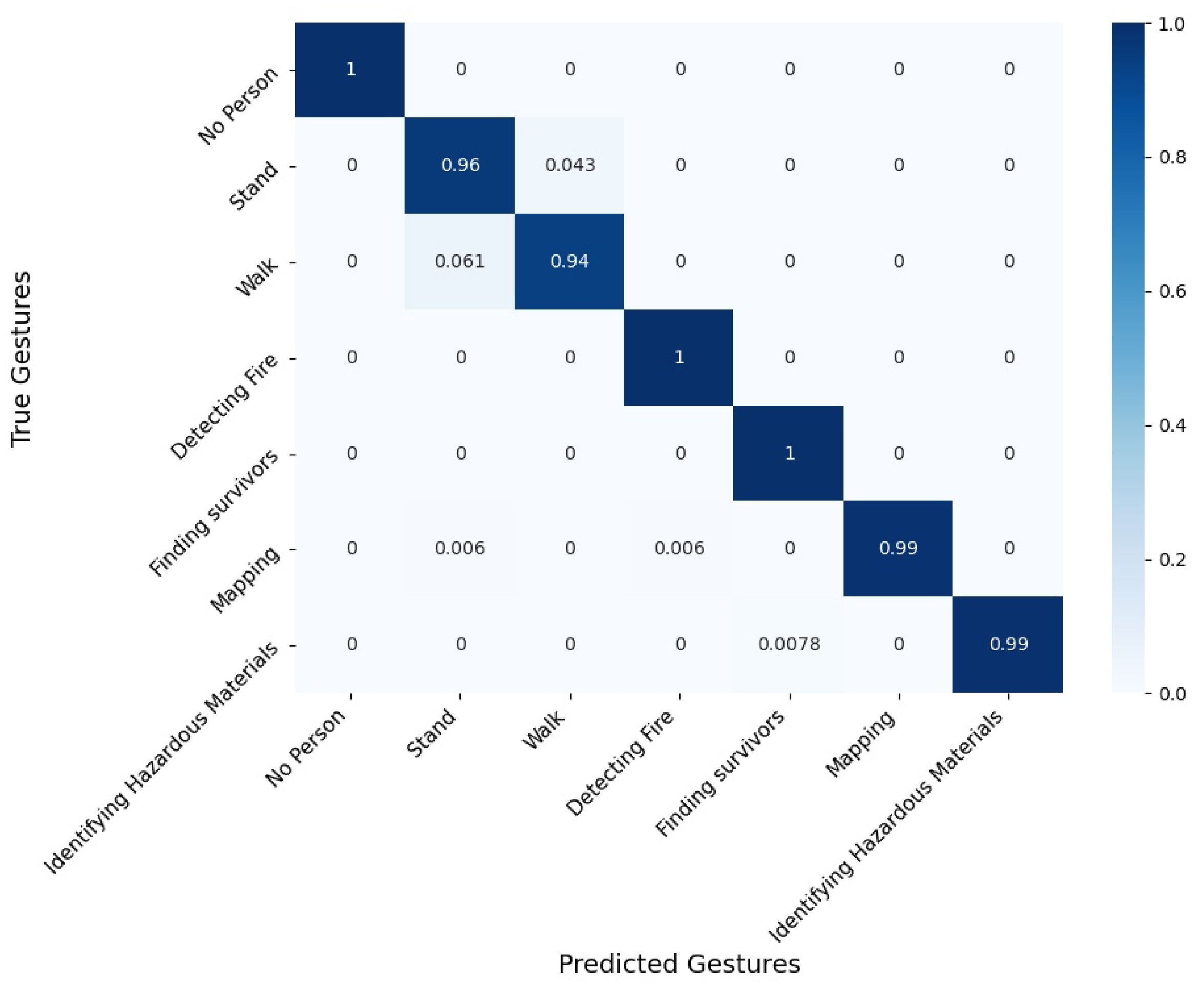

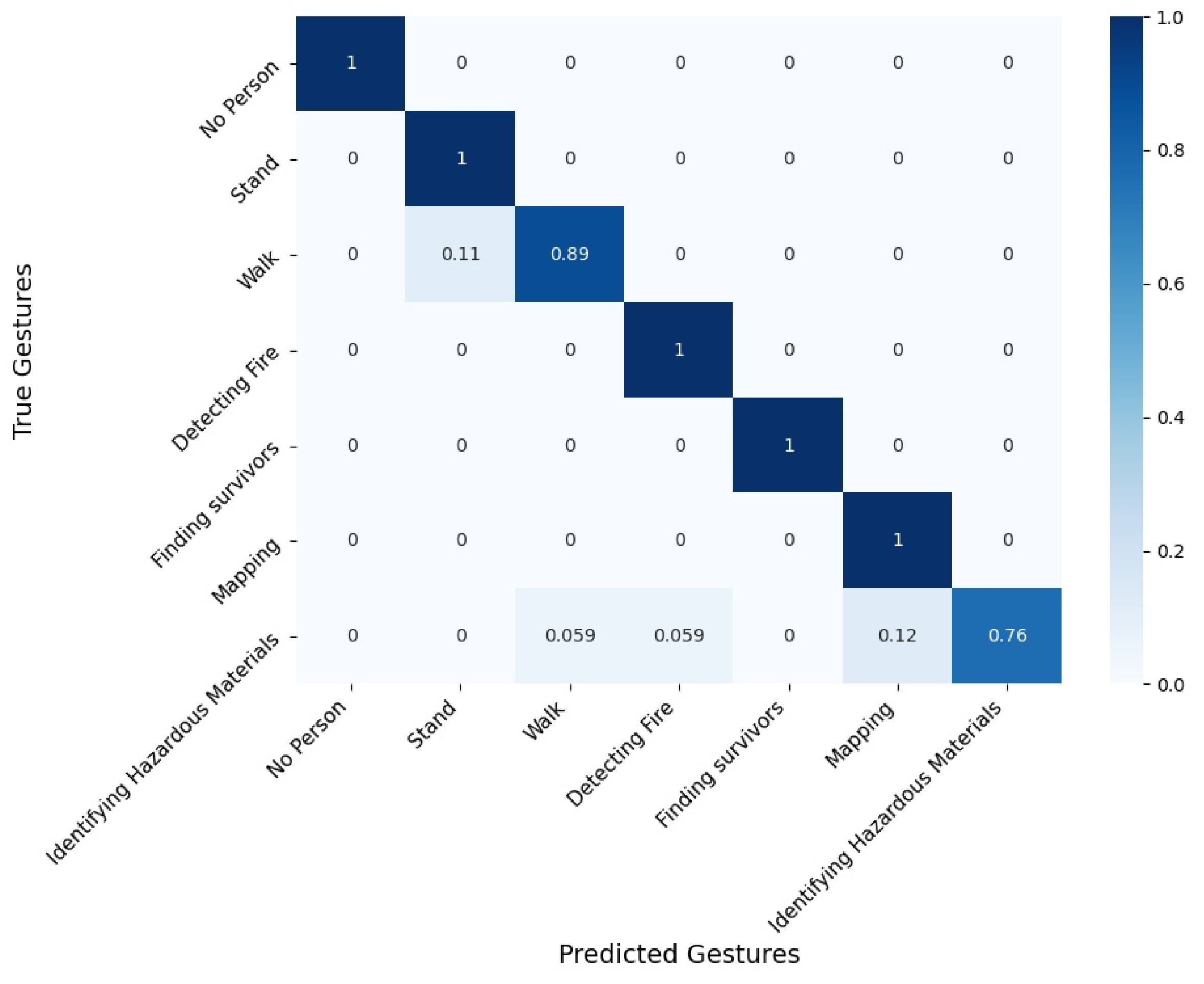

4.5. Results and Interpretation

5. Discussion

6. Limitations and Future Work

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| HRI | Human–robot interaction |
| HCI | Human–computer interaction |
| HDI | Human–drone interaction |
| SVM | Support vector machine |
| HOG | Histogram of oriented gradients |
| CNN | Convolutional neural network |
| RNN | Recurrent neural network |
| LSTM | Long short-term memory |

References

1. Basiratzadeh, S.; Mir, M.; Tahseen, M.; Shapsough, A.; Dorrikhteh, M.; Saeidi, A. Autonomous UAV for Civil Defense Applications. In Proceedings of the 3rd World Congress on Computer Applications and Information Systems, Kuala Lumpur, Malaysia, 6–8 September 2016.
2. Apvrille, L.; Tanzi, T.; Dugelay, J.L. Autonomous drones for assisting rescue services within the context of natural disasters. In Proceedings of the 2014 XXXIth URSI General Assembly and Scientific Symposium (URSI GASS), Beijing, China, 16–23 August 2014; pp. 1–4.
3. Croizé, P.; Archez, M.; Boisson, J.; Roger, T.; Monsegu, V. Autonomous measurement drone for remote dangerous source location mapping. Int. J. Environ. Sci. Dev. 2015, 6, 391.
4. Martinson, E.; Lawson, W.E.; Blisard, S.; Harrison, A.M.; Trafton, J.G. Fighting fires with human robot teams. In Proceedings of the IROS, Vilamoura, Portugal, 7–12 October 2012; pp. 2682–2683.
5. Chavez, A.G.; Mueller, C.A.; Doernbach, T.; Chiarella, D.; Birk, A. Robust gesture-based communication for underwater human-robot interaction in the context of search and rescue diver missions. arXiv 2018, arXiv:1810.07122.
6. Cacace, J.; Finzi, A.; Lippiello, V.; Furci, M.; Mimmo, N.; Marconi, L. A control architecture for multiple drones operated via multimodal interaction in search & rescue mission. In Proceedings of the 2016 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Lausanne, Switzerland, 23–27 October 2016; pp. 233–239.
7. De Cillis, F.; Oliva, G.; Pascucci, F.; Setola, R.; Tesei, M. On field gesture-based human-robot interface for emergency responders. In Proceedings of the 2013 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Linkoping, Sweden, 21–26 October 2013; pp. 1–6.
8. Wong, C.; Yang, E.; Yan, X.T.; Gu, D. Autonomous robots for harsh environments: A holistic overview of current solutions and ongoing challenges. Syst. Sci. Control. Eng. 2018, 6, 213–219.
9. Wong, C.; Yang, E.; Yan, X.T.; Gu, D. An overview of robotics and autonomous systems for harsh environments. In Proceedings of the 2017 23rd International Conference on Automation and Computing (ICAC), Huddersfield, UK, 7–8 September 2017; pp. 1–6.
10. Bausys, R.; Cavallaro, F.; Semenas, R. Application of sustainability principles for harsh environment exploration by autonomous robot. Sustainability 2019, 11, 2518.
11. Roldán-Gómez, J.J.; González-Gironda, E.; Barrientos, A. A Survey on Robotic Technologies for Forest Firefighting: Applying Drone Swarms to Improve Firefighters’ Efficiency and Safety. Appl. Sci. 2021, 11, 363.
12. Ruangpayoongsak, N.; Roth, H.; Chudoba, J. Mobile robots for search and rescue. In Proceedings of the IEEE International Safety, Security and Rescue Robotics Workshop, Kobe, Japan, 6–9 June 2005; pp. 212–217.
13. Jones, H.L.; Rock, S.M.; Burns, D.; Morris, S. Autonomous robots in swat applications: Research, design, and operations challenges. In Proceedings of the AUVSI’02, Orlando, FL, USA, 9–11 July 2002.
14. Patil, D.; Ansari, M.; Tendulkar, D.; Bhatlekar, R.; Pawar, V.N.; Aswale, S. A Survey On Autonomous Military Service Robot. In Proceedings of the 2020 International Conference on Emerging Trends in Information Technology and Engineering (ic-ETITE), Vellore, India, 24–25 February 2020; pp. 1–7.
15. Matsuno, F.; Tadokoro, S. Rescue robots and systems in Japan. In Proceedings of the 2004 IEEE International Conference on Robotics and Biomimetics, Shenyang, China, 22–26 August 2004; pp. 12–20.
16. Kinaneva, D.; Hristov, G.; Raychev, J.; Zahariev, P. Early forest fire detection using drones and artificial intelligence. In Proceedings of the 2019 42nd International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 20–24 May 2019; pp. 1060–1065.
17. Tan, C.F.; Liew, S.M.; Alkahari, M.R.; Ranjit, S.S.S.; Said, M.R.; Chen, W.; Sivakumar, D. Fire fighting mobile robot: State of the art and recent development. Aust. J. Basic Appl. Sci. 2013, 7, 220–230.
18. Rossi, M.; Brunelli, D. Autonomous Gas Detection and Mapping With Unmanned Aerial Vehicles. IEEE Trans. Instrum. Meas. 2016, 65, 765–775.
19. Hrabia, C.E.; Hessler, A.; Xu, Y.; Brehmer, J.; Albayrak, S. EfFeu project: Efficient operation of unmanned aerial vehicles for industrial fire fighters. In Proceedings of the 4th ACM Workshop on Micro Aerial Vehicle Networks, Systems, and Applications, Munich, Germany, 10–15 June 2018; pp. 33–38.
20. Radu, V.T.; Kristensen, A.S.; Mehmood, S. Use of Drones for Firefighting Operations. Master’s Thesis, Aalborg University, Aalborg, Denmark, 2019.
21. Hassanein, A.; Elhawary, M.; Jaber, N.; El-Abd, M. An autonomous firefighting robot. In Proceedings of the 2015 International Conference on Advanced Robotics (ICAR), Istanbul, Turkey, 27–31 July 2015; pp. 530–535.
22. Fraune, M.R.; Khalaf, A.S.; Zemedie, M.; Pianpak, P.; NaminiMianji, Z.; Alharthi, S.A.; Dolgov, I.; Hamilton, B.; Tran, S.; Toups, Z. Developing Future Wearable Interfaces for Human-Drone Teams through a Virtual Drone Search Game. Int. J. Hum. Comput. Stud. 2021, 147, 102573.
23. Alon, O.; Fyodorov, C.; Rabinovich, S.; Cauchard, J.R. Drones in Firefighting: A User-Centered Design Perspective. In Proceedings of MobileHCI’21: ACM International Conference on Mobile Human-Computer Interaction (accepted for publication), Toulouse, France, 27–30 September 2021; pp. 1–17.
24. Cauchard, J.R.; Khamis, M.; Garcia, J.; Kljun, M.; Brock, A.M. Toward a roadmap for human-drone interaction. Interactions 2021, 28, 76–81.
25. Waldherr, S.; Romero, R.; Thrun, S. A Gesture Based Interface for Human-Robot Interaction. Auton. Robot. 2000, 9, 151–173.
26. Cauchard, J.R.; Zhai, K.Y.; Landay, J.A. Drone & me: An exploration into natural human-drone interaction. In Proceedings of the 2015 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Osaka, Japan, 7–11 September 2015; pp. 361–365.
27. Jane, L.E.; Ilene, L.E.; Landay, J.A.; Cauchard, J.R. Drone & Wo: Cultural Influences on Human-Drone Interaction Techniques. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (CHI’17), Denver, CO, USA, 6–11 May 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 6794–6799.
28. Cooney, M.D.; Becker-Asano, C.; Kanda, T.; Alissandrakis, A.; Ishiguro, H. Full-body gesture recognition using inertial sensors for playful interaction with small humanoid robot. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; pp. 2276–2282.
29. Sigalas, M.; Baltzakis, H.; Trahanias, P. Gesture recognition based on arm tracking for human-robot interaction. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; pp. 5424–5429.
30. Lv, Z.; Halawani, A.; Feng, S.; Li, H.; Réhman, S.U. Multimodal hand and foot gesture interaction for handheld devices. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2014, 11, 10.
31. Wen, H.; Ramos Rojas, J.; Dey, A.K. Serendipity: Finger gesture recognition using an off-the-shelf smartwatch. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; pp. 3847–3851.
32. Escalera, S.; Athitsos, V.; Guyon, I. Challenges in multi-modal gesture recognition. In Gesture Recognition; Springer: Berlin/Heidelberg, Germany, 2017; pp. 1–60.
33. Monajjemi, M.; Bruce, J.; Sadat, S.A.; Wawerla, J.; Vaughan, R. UAV, do you see me? Establishing mutual attention between an uninstrumented human and an outdoor UAV in flight. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 3614–3620.
34. Wu, M.; Balakrishnan, R. Multi-finger and whole hand gestural interaction techniques for multi-user tabletop displays. In Proceedings of the 16th Annual ACM Symposium on User Interface Software and Technology, Vancouver, BC, Canada, 2–5 November 2003; pp. 193–202.
35. Broccia, G.; Livesu, M.; Scateni, R. Gestural Interaction for Robot Motion Control. In Proceedings of the Eurographics Italian Chapter Conference, Llandudno, UK, 11–15 April 2011; pp. 61–66.
36. Ng, W.S.; Sharlin, E. Collocated interaction with flying robots. In Proceedings of the 2011 Ro-Man, Atlanta, GA, USA, 31 July–3 August 2011; pp. 143–149.
37. Cauchard, J.R.; Tamkin, A.; Wang, C.Y.; Vink, L.; Park, M.; Fang, T.; Landay, J.A. Drone.Io: A Gestural and Visual Interface for Human-Drone Interaction. In Proceedings of the 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI’19), Daegu, Korea, 11–14 March 2019; pp. 153–162.
38. Rodríguez-Moreno, I.; Martínez-Otzeta, J.M.; Sierra, B.; Rodriguez, I.; Jauregi, E. Video activity recognition: State-of-the-art. Sensors 2019, 19, 3160.
39. Berman, S.; Stern, H. Sensors for gesture recognition systems. IEEE Trans. Syst. Man, Cybern. Part C (Appl. Rev.) 2011, 42, 277–290.
40. Freeman, W.T.; Roth, M. Orientation histograms for hand gesture recognition. In Proceedings of the International Workshop on Automatic Face and Gesture Recognition, Zurich, Switzerland, 26–28 June 1995; Volume 12, pp. 296–301.
41. Vandersteegen, M.; Reusen, W.; Van Beeck, K.; Goedemé, T. Low-Latency Hand Gesture Recognition With a Low-Resolution Thermal Imager. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 98–99.
42. Ren, Z.; Meng, J.; Yuan, J. Depth camera based hand gesture recognition and its applications in human-computer-interaction. In Proceedings of the 2011 8th International Conference on Information, Communications & Signal Processing, Singapore, 13–16 December 2011; pp. 1–5.
43. Starr, J.W.; Lattimer, B. Evaluation of navigation sensors in fire smoke environments. Fire Technol. 2014, 50, 1459–1481.
44. Department of the Army. Training Circular 3-21.60 (FM 21-60) Visual Signals; Technical Report; CreateSpace Publishing: Scotts Valley, CA, USA, 2017; pp. 1–92.
45. Kawatsu, C.; Koss, F.; Gillies, A.; Zhao, A.; Crossman, J.; Purman, B.; Stone, D.; Dahn, D. Gesture recognition for robotic control using deep learning. In Proceedings of the NDIA Ground Vehicle Systems Engineering and Technology Symposium, Novi, MI, USA, 8–10 August 2017.
46. Chen, Q.; Georganas, N.D.; Petriu, E.M. Real-time vision-based hand gesture recognition using haar-like features. In Proceedings of the 2007 IEEE Instrumentation & Measurement Technology Conference IMTC, Warsaw, Poland, 1–3 May 2007; pp. 1–6.
47. Appenrodt, J.; Al-Hamadi, A.; Michaelis, B. Data gathering for gesture recognition systems based on single color-, stereo color- and thermal cameras. Int. J. Signal Process. Image Process. Pattern Recognit. 2010, 3, 37–50.
48. Intel. Realsense Depth Camera D435. Available online: https://www.intelrealsense.com/depth-camera-d435/ (accessed on 7 June 2021).
49. Opgal. Therm-App Hz. Available online: https://therm-app.com/ (accessed on 7 June 2021).
50. Brock, A.M.; Chatain, J.; Park, M.; Fang, T.; Hachet, M.; Landay, J.A.; Cauchard, J.R. FlyMap: Interacting with Maps Projected from a Drone. In Proceedings of the 7th ACM International Symposium on Pervasive Displays (PerDis’18), Munich, Germany, 6–8 June 2018; Association for Computing Machinery: New York, NY, USA, 2018.
51. De Smedt, Q.; Wannous, H.; Vandeborre, J.P. Heterogeneous hand gesture recognition using 3D dynamic skeletal data. Comput. Vis. Image Underst. 2019, 181, 60–72.
52. Žemgulys, J.; Raudonis, V.; Maskeliūnas, R.; Damaševičius, R. Recognition of basketball referee signals from videos using Histogram of Oriented Gradients (HOG) and Support Vector Machine (SVM). Procedia Comput. Sci. 2018, 130, 953–960.
53. De Oliveira, D.C.; Wehrmeister, M.A. Using deep learning and low-cost RGB and thermal cameras to detect pedestrians in aerial images captured by multirotor UAV. Sensors 2018, 18, 2244.
54. Liu, C.; Szirányi, T. Real-Time Human Detection and Gesture Recognition for On-Board UAV Rescue. Sensors 2021, 21, 2180.
55. Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.E.; Sheikh, Y. OpenPose: Realtime multi-person 2D pose estimation using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 172–186.
56. Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893.
57. Dadi, H.S.; Pillutla, G.M. Improved face recognition rate using HOG features and SVM classifier. IOSR J. Electron. Commun. Eng. 2016, 11, 34–44.
58. OpenCV. BackgroundSubtractorMOG2. Available online: https://docs.opencv.org/master/d7/d7b/classcv_1_1BackgroundSubtractorMOG2.html (accessed on 7 June 2021).
59. Keras API Reference. Resnet. Available online: https://keras.io/api/applications/resnet/ (accessed on 7 June 2021).
60. He, K.; Zhang, X.; Ren, S.; Sun, J. Identity mappings in deep residual networks. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 630–645.
61. Bianco, S.; Cadene, R.; Celona, L.; Napoletano, P. Benchmark Analysis of Representative Deep Neural Network Architectures. IEEE Access 2018, 6, 64270–64277.
62. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.
63. Hendel, I.G.; Ross, G.M. Efficacy of Remote Sensing in Early Forest Fire Detection: A Thermal Sensor Comparison. Can. J. Remote Sens. 2020, 46, 414–428.
64. Brunner, C.; Peynot, T.; Vidal-Calleja, T.; Underwood, J. Selective combination of visual and thermal imaging for resilient localization in adverse conditions: Day and night, smoke and fire. J. Field Robot. 2013, 30, 641–666.

| Task | Description | Chosen Gesture |
|---|---|---|
| Detecting Fire | Detect number and location of fire spots | (a) |
| Identifying Hazardous Materials | Identify source and size of gas or chemical leak | (b) |
| Finding Survivors | Search for trapped citizens or people in danger and collect information on their number and location | (c) |
| Mapping | Create a map of the floor or building based on exploration | (d) |

| Sensor | Algorithm | Recognition accuracy (without smoke, without equipment) | Recognition accuracy (without smoke, with equipment) | Recognition accuracy (with smoke, with equipment) |
|---|---|---|---|---|
| Depth | “Frame Vote” (HOG + SVM) | 78% | 70% | N/A |
| Depth | “Frame Vote” (CNN) | 81% | 72% | N/A |
| Depth | HOG + SVM | 92% | 84% | N/A |
| RGB | skeleton extraction + SVM | 98% | 96% | 90% |
| Thermal | “Frame Vote” (HOG + SVM) | 90% | 86% | 70% |
| Thermal | “Frame Vote” (CNN) | 90% | 84% | 71% |
| Thermal | HOG + SVM | 86% | 86% | 56% |
| Thermal | skeleton extraction + SVM | 94% | 96% | 88% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alon, O.; Rabinovich, S.; Fyodorov, C.; Cauchard, J.R. First Step toward Gestural Recognition in Harsh Environments. Sensors 2021, 21, 3997. https://doi.org/10.3390/s21123997
