On the Uncertainty Identification for Linear Dynamic Systems Using Stochastic Embedding Approach with Gaussian Mixture Models

Abstract

1. Introduction

- (i) We obtain the likelihood function for a linear dynamic system modeled with the SE approach, where the error-model distribution is a finite Gaussian mixture. The likelihood function is computed by marginalizing out the vector of error-model parameters, which is treated as a hidden variable.

- (ii) We propose an EM algorithm to solve the associated ML estimation problem with GMMs. It yields estimates of the parameter vector that defines the nominal model, together with closed-form expressions for the GMM estimators of the error-model distribution.
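A minimal sketch of the marginalization in contribution (i), under an assumption of ours: each experiment r follows y_r = Φ_r θ + Ψ_r η_r + v_r, where the error-model parameter vector η_r enters linearly, η_r is drawn from a K-component GMM (weights α_k, means μ_k, covariances Σ_k), and v_r is white Gaussian noise with variance σ². Under that assumption, integrating out η_r gives a Gaussian mixture for y_r with means Φ_r θ + Ψ_r μ_k and covariances Ψ_r Σ_k Ψ_rᵀ + σ² I. The function and argument names are hypothetical, and the paper's own parameterization may differ.

```python
import numpy as np
from scipy.special import logsumexp
from scipy.stats import multivariate_normal


def se_gmm_loglik(ys, Phis, Psis, theta, alpha, mu, Sig, s2):
    """Marginal log-likelihood of R experiments under an ASSUMED linear SE model.

    Hypothetical model: y_r = Phi_r @ theta + Psi_r @ eta_r + v_r,
    eta_r ~ sum_k alpha[k] N(mu[k], Sig[k]),  v_r ~ N(0, s2 * I).
    Marginalizing eta_r out gives a K-component Gaussian mixture for each y_r.
    """
    total = 0.0
    for y, Phi, Psi in zip(ys, Phis, Psis):
        N = y.size
        # Log of alpha_k times the Gaussian density of component k, for every k.
        comp = [
            np.log(alpha[k])
            + multivariate_normal.logpdf(
                y,
                mean=Phi @ theta + Psi @ mu[k],
                cov=Psi @ Sig[k] @ Psi.T + s2 * np.eye(N),
            )
            for k in range(len(alpha))
        ]
        # log sum_k alpha_k N(y; mean_k, cov_k), computed stably.
        total += logsumexp(comp)
    return total
```

With a single component (α = [1]) this reduces to an ordinary Gaussian log-likelihood, and splitting that component into two identical halves leaves the value unchanged, which makes a quick sanity check.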

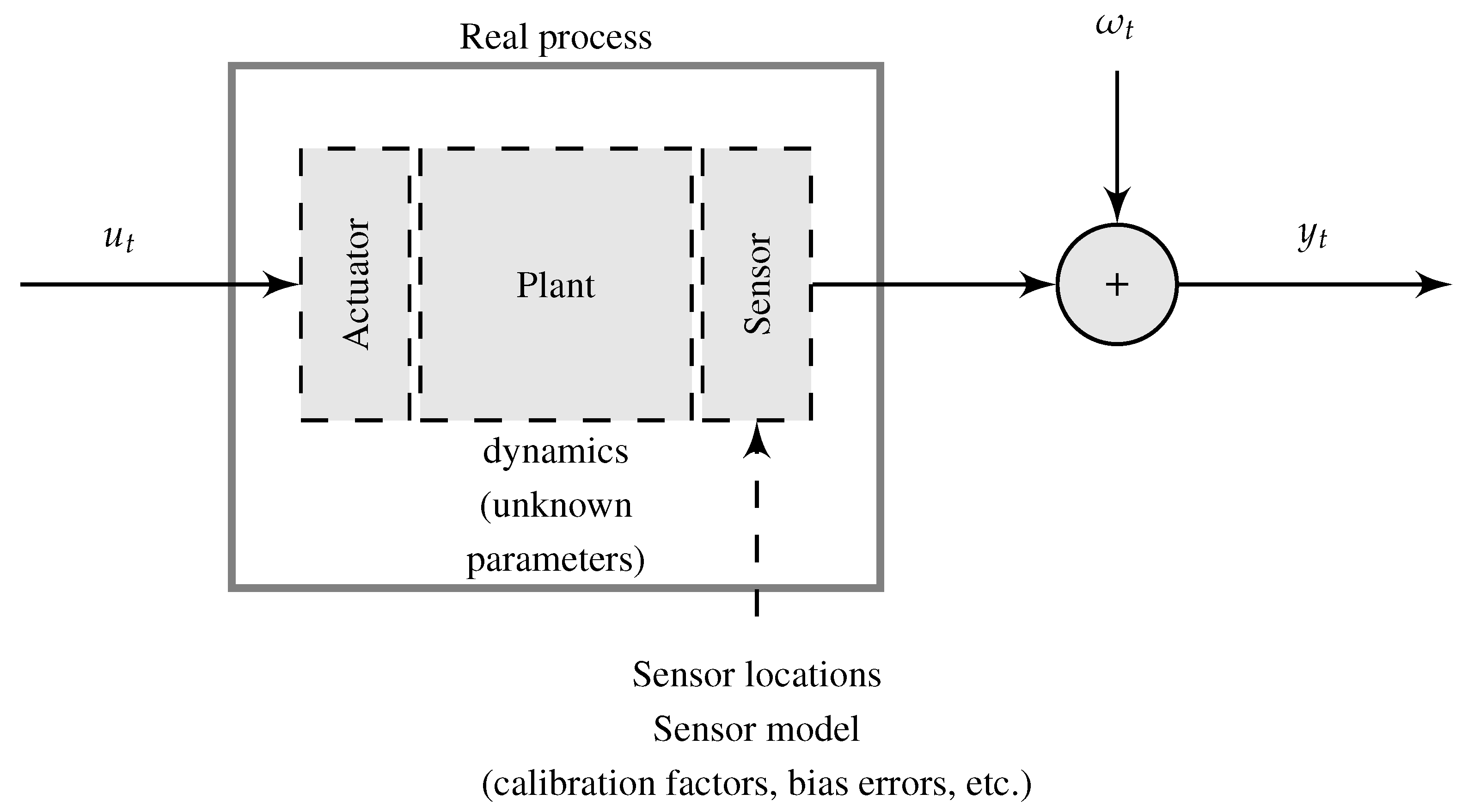

2. Uncertainty Modeling for Linear Dynamic Systems Using Stochastic Embedding Approach

2.1. System Description

2.2. Standing Assumptions

3. Maximum Likelihood Estimation for Uncertainty Modeling Using GMMs

4. An EM Algorithm with GMMs for Uncertainty Modeling in Linear Dynamic Systems

- (1) Fixing the parameter vector at its value from the current iteration and optimizing Equation (19) with respect to the GMM parameters and the noise variance.

- (2) Fixing the GMM parameters and the noise variance at their updated values from step (1) and solving the optimization problem in Equation (20) to obtain the updated parameter vector.

Algorithm 1 (EM algorithm with GMMs).
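The two alternating steps can be illustrated with a sketch under an assumed linear model y_r = Φ_r θ + Ψ_r η_r + v_r, with η_r following a K-component GMM and v_r white Gaussian noise (an assumption of ours, not necessarily the model behind Algorithm 1). The E-step computes the component responsibilities and the Gaussian posterior moments of η_r; step (1) then updates the GMM weights, means, covariances and the noise variance in closed form with θ fixed, and step (2) updates θ with those quantities fixed. Because θ enters linearly in this assumed model, step (2) reduces to a least-squares solve, whereas Equation (20) is stated as a general optimization problem. Applying the updates in turn gives an ECM-style variant of EM, so the recorded log-likelihood should not decrease. The initial values, the fixed iteration count and all names are hypothetical, and the sketch has no safeguards against empty or collapsing components.

```python
import numpy as np
from scipy.special import logsumexp
from scipy.stats import multivariate_normal


def em_se_gmm(ys, Phis, Psis, K, n_iter=100, seed=0):
    """Hypothetical EM sketch for y_r = Phi_r @ theta + Psi_r @ eta_r + v_r,
    eta_r ~ K-component GMM, v_r ~ N(0, s2 I). Returns the final estimates
    and the marginal log-likelihood evaluated at each iteration's parameters."""
    rng = np.random.default_rng(seed)
    R, N, d = len(ys), ys[0].size, Psis[0].shape[1]
    # Simple initial values (assumed; not the initialization used in the paper).
    theta = np.linalg.lstsq(np.vstack(Phis), np.concatenate(ys), rcond=None)[0]
    alpha = np.full(K, 1.0 / K)
    mu = rng.normal(scale=0.1, size=(K, d))
    Sig = np.stack([np.eye(d)] * K)
    s2 = 1.0
    A = sum(Phi.T @ Phi for Phi in Phis)  # normal-equation matrix for theta
    history = []
    for _ in range(n_iter):
        # E-step: log-responsibilities and posterior moments of eta_r given z_r = k.
        logw = np.empty((R, K))
        m = np.empty((R, K, d))
        P = np.empty((R, K, d, d))
        for r, (y, Phi, Psi) in enumerate(zip(ys, Phis, Psis)):
            e = y - Phi @ theta
            for k in range(K):
                C = Psi @ Sig[k] @ Psi.T + s2 * np.eye(N)
                logw[r, k] = np.log(alpha[k]) + multivariate_normal.logpdf(e, Psi @ mu[k], C)
                Sinv = np.linalg.inv(Sig[k])
                P[r, k] = np.linalg.inv(Sinv + Psi.T @ Psi / s2)
                m[r, k] = P[r, k] @ (Sinv @ mu[k] + Psi.T @ e / s2)
        ll = logsumexp(logw, axis=1)
        history.append(ll.sum())
        w = np.exp(logw - ll[:, None])  # responsibilities, each row sums to 1
        # Step (1): theta fixed, closed-form updates of the GMM and of s2.
        Nk = w.sum(axis=0)
        alpha = Nk / R
        mu = np.einsum("rk,rkd->kd", w, m) / Nk[:, None]
        for k in range(K):
            dm = m[:, k] - mu[k]
            Sig[k] = (np.einsum("r,ri,rj->ij", w[:, k], dm, dm)
                      + np.einsum("r,rij->ij", w[:, k], P[:, k])) / Nk[k]
        sq = 0.0
        for r, (y, Phi, Psi) in enumerate(zip(ys, Phis, Psis)):
            e = y - Phi @ theta
            for k in range(K):
                res = e - Psi @ m[r, k]
                sq += w[r, k] * (res @ res + np.trace(Psi @ P[r, k] @ Psi.T))
        s2 = sq / (R * N)
        # Step (2): GMM and s2 fixed, theta minimizes the expected squared error.
        eta_bar = np.einsum("rk,rkd->rd", w, m)  # posterior mean of each eta_r
        b = sum(Phi.T @ (y - Psi @ eb) for y, Phi, Psi, eb in zip(ys, Phis, Psis, eta_bar))
        theta = np.linalg.solve(A, b)
    return theta, alpha, mu, Sig, s2, history
```

Checking that `history` is non-decreasing is a cheap way to catch mistakes in the update formulas when adapting the sketch to another model.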

5. Numerical Examples

- (1) The data length is .

- (2) The number of experiments is .

- (3) The number of Monte Carlo (MC) simulations is 25.

- (4) The stopping criterion is satisfied when: or when 2000 iterations of the EM algorithm have been reached, where ‖·‖ denotes a generic vector norm.
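The iteration cap in item (4) can be wrapped around any update map. The sketch below pairs it with a relative-change test on the iterate; that test, the tolerance `tol`, the Euclidean norm and the function name are assumptions for illustration, and the exact criterion used in the paper may differ.

```python
import numpy as np


def iterate_until_converged(update, x0, tol=1e-6, max_iter=2000):
    """Apply `update` repeatedly until the relative change of the iterate is at
    most `tol` (an ASSUMED criterion) or `max_iter` iterations are reached.
    Returns the last iterate and the number of iterations performed."""
    x = np.asarray(x0, dtype=float)
    for it in range(1, max_iter + 1):
        x_new = np.asarray(update(x), dtype=float)
        # Relative change with the Euclidean norm; the floor avoids dividing by zero.
        if np.linalg.norm(x_new - x) <= tol * max(np.linalg.norm(x), 1e-12):
            return x_new, it
        x = x_new
    return x, max_iter
```

For example, `iterate_until_converged(lambda x: 0.5 * x + 1, np.zeros(1))` stops after 20 iterations close to the fixed point 2, while a map that never settles, such as `lambda x: x + 1`, runs until `max_iter`.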

- (1) From all the estimated FIR models, compute the average value of the coefficient corresponding to each tap.

- (2) Evenly space the initial means of the GMM components between the minimum and maximum estimated values of each tap.

- (3) Set the covariance matrix of each GMM component to a diagonal matrix with the sample variance of each tap on its diagonal.

- (4) Set all mixing weights equal, i.e., each GMM component receives a weight equal to the reciprocal of the number of components.
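Steps (1) to (4) translate almost directly into array operations. The sketch assumes the FIR estimates are stacked as an R × n array with one estimated model per row and one column per tap, uses the unbiased sample variance (`ddof=1`), and simply returns the tap averages of step (1), since how they are used afterwards is not detailed here. The function name and array layout are hypothetical.

```python
import numpy as np


def init_gmm_from_fir(fir_coeffs, K):
    """Initial GMM values from FIR estimates, following steps (1)-(4).

    fir_coeffs: array (R, n), one estimated FIR model per row (hypothetical layout).
    Returns tap averages (n,), means (K, n), covariances (K, n, n), weights (K,).
    """
    fir = np.asarray(fir_coeffs, dtype=float)
    tap_mean = fir.mean(axis=0)                                # step (1): average per tap
    means = np.linspace(fir.min(axis=0), fir.max(axis=0), K)   # step (2): K evenly spaced means
    cov = np.diag(fir.var(axis=0, ddof=1))                     # step (3): diagonal sample variances
    covs = np.repeat(cov[None, :, :], K, axis=0)               # same covariance for every component
    weights = np.full(K, 1.0 / K)                              # step (4): equal mixing weights
    return tap_mean, means, covs, weights
```

For three estimated two-tap models `[[0, 10], [2, 14], [4, 12]]` and K = 3, the initial means are `[[0, 10], [2, 12], [4, 14]]` and each covariance is `diag(4, 4)`.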

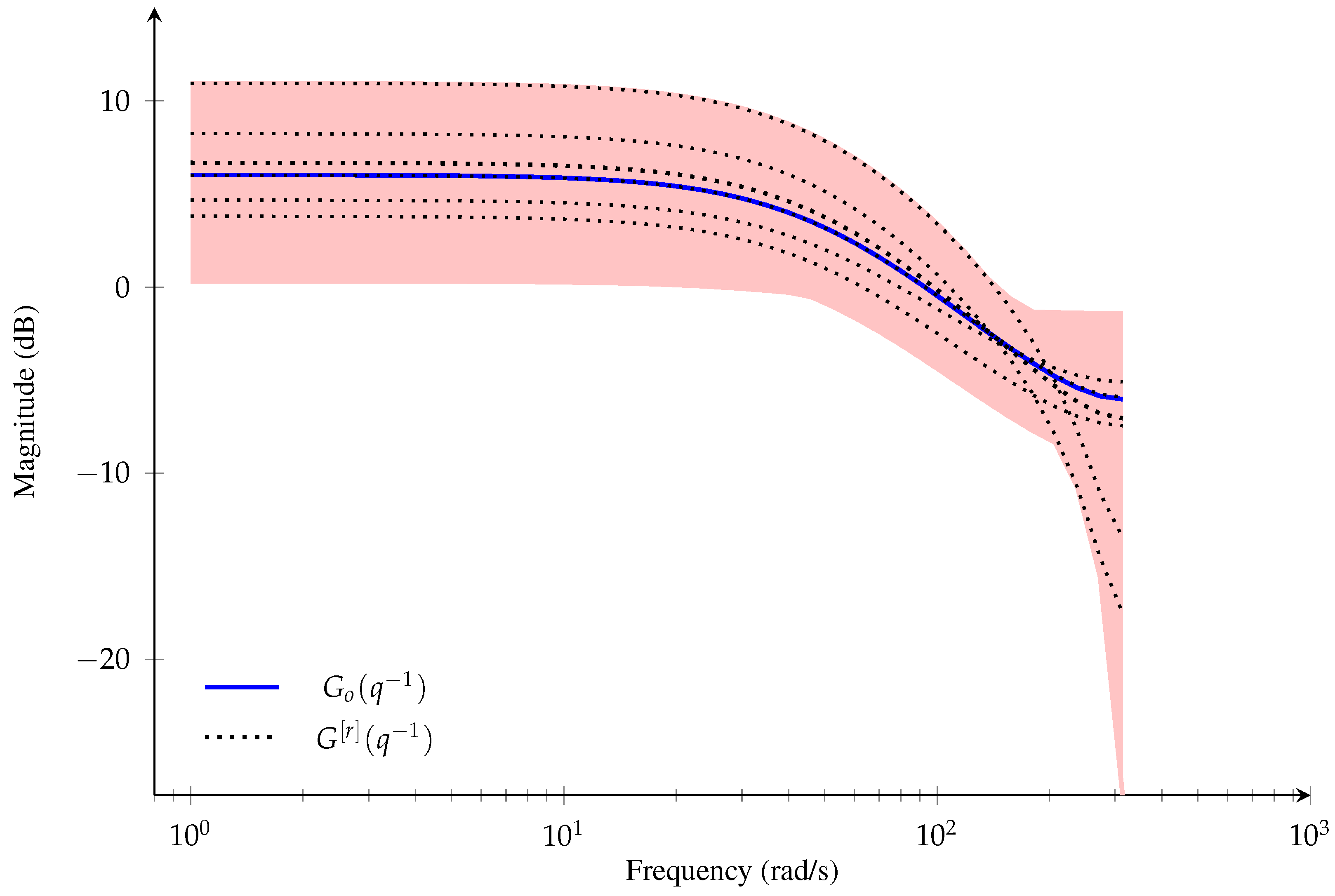

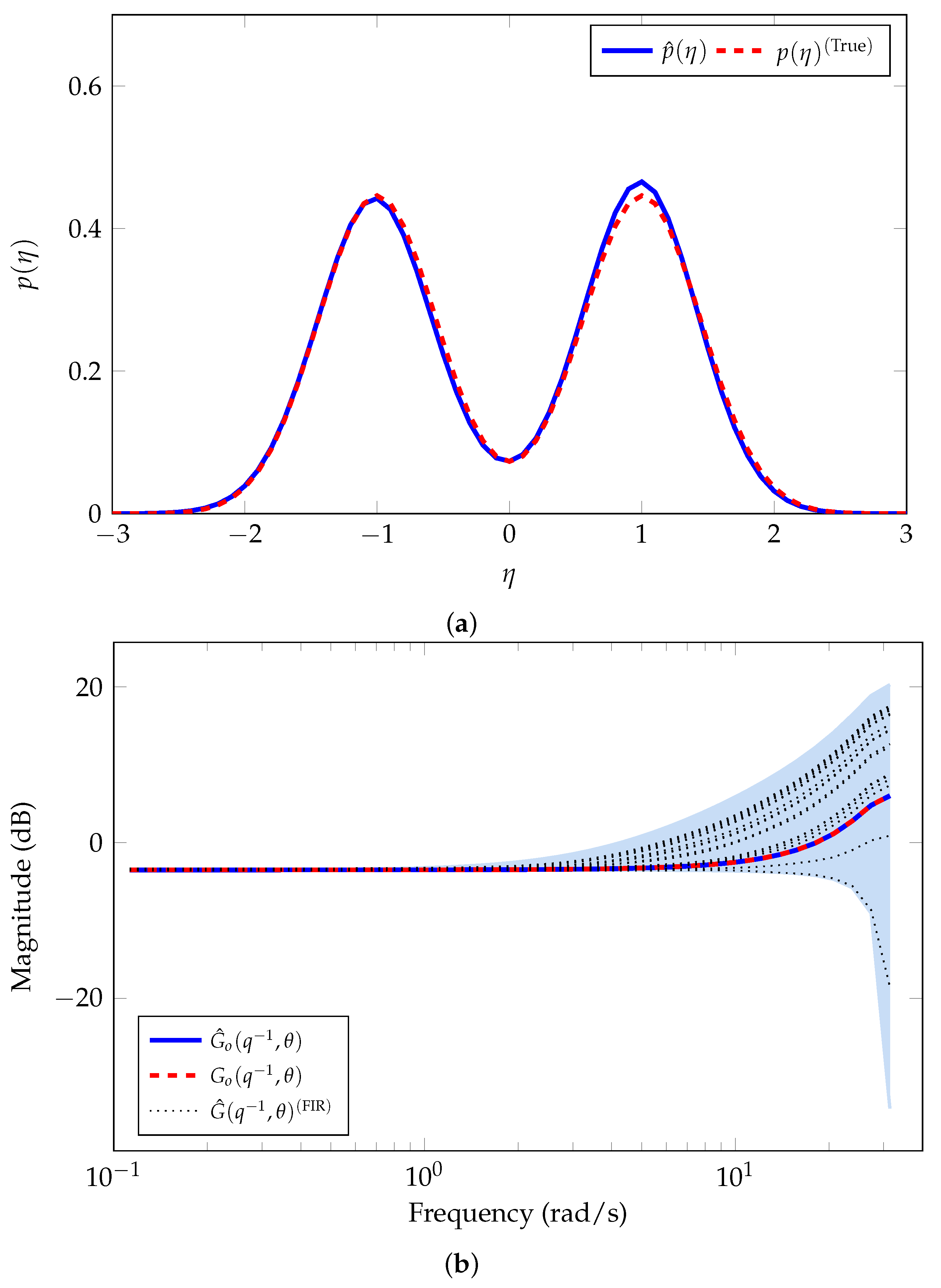

5.1. Example 1: A General System with a Gaussian Mixture Error-Model Distribution

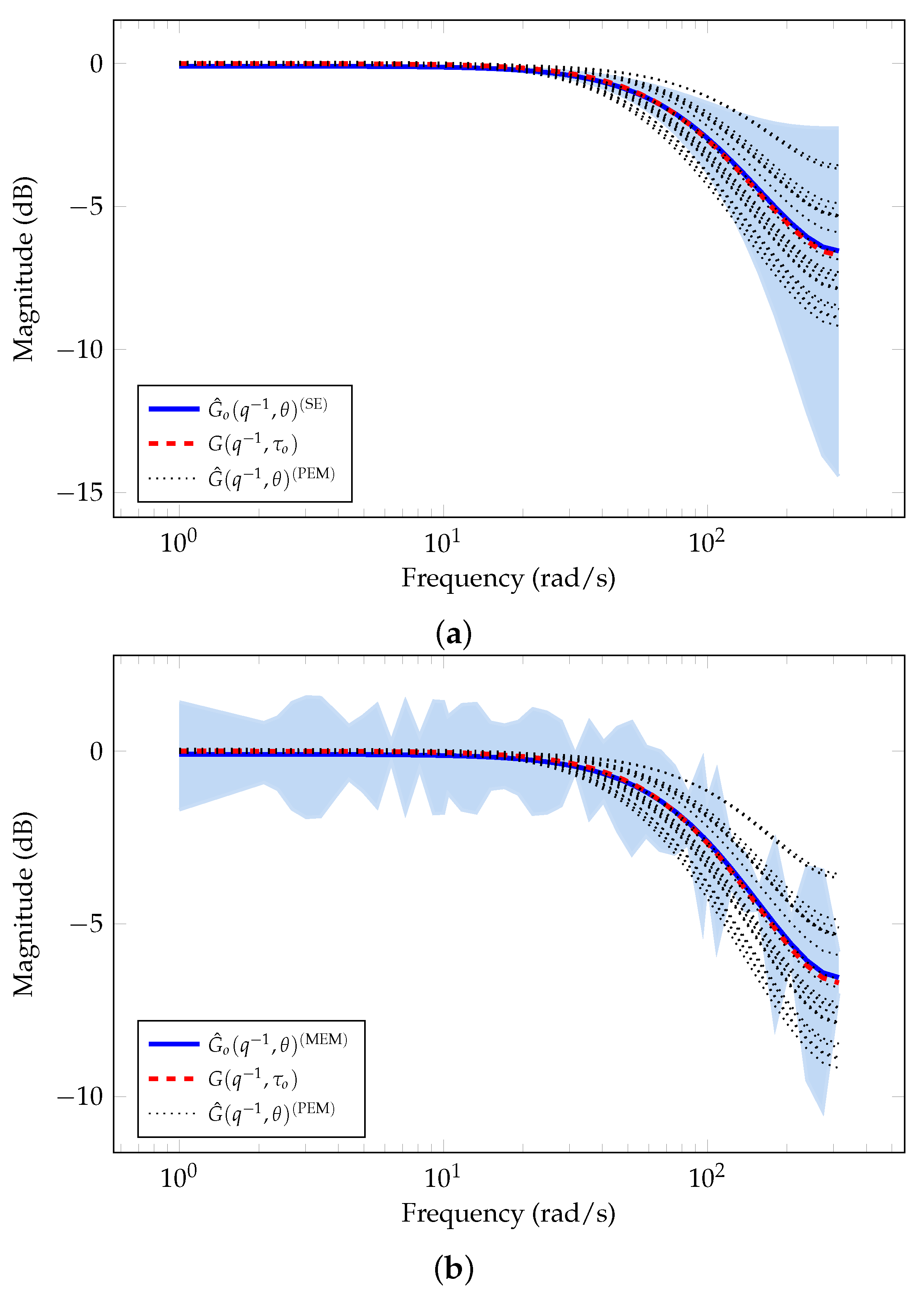

5.2. Example 2: A Resistor-Capacitor System Model with Non-Gaussian-Sum Uncertainties

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| EM | Expectation-Maximization |
| FIR | Finite impulse response |
| GMM | Gaussian mixture model |
| MC | Monte Carlo |
| MEM | Model error modeling |
| ML | Maximum likelihood |
| PDF | Probability density function |
| PEM | Prediction error method |
| SE | Stochastic embedding |

Appendix A. Maximum Likelihood Formulation with SE Approach

Appendix B. Computing the Complete Likelihood Function

Appendix C. EM Algorithm with GMMs Formulation

Appendix C.1. Computing the Auxiliary Function

Appendix C.2. Optimizing the Auxiliary Function


| Parameter | True Value | Estimated Value |
|---|---|---|
| 1 | | |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Orellana, R.; Carvajal, R.; Escárate, P.; Agüero, J.C. On the Uncertainty Identification for Linear Dynamic Systems Using Stochastic Embedding Approach with Gaussian Mixture Models. Sensors 2021, 21, 3837. https://doi.org/10.3390/s21113837
