Auto-Refining Reconstruction Algorithm for Recreation of Limited Angle Humanoid Depth Data

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

3.1. Dataset

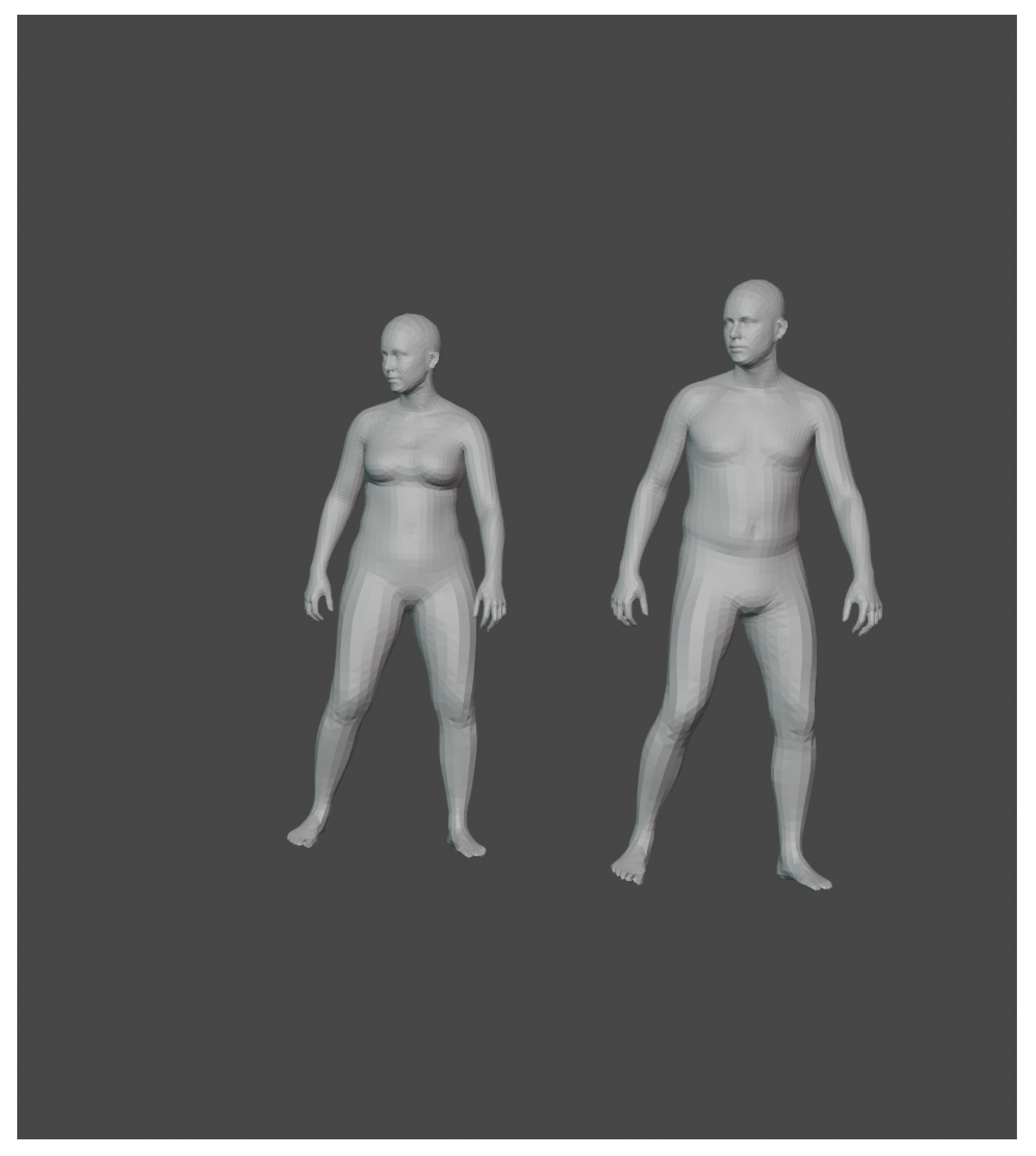

3.1.1. Synthetic Dataset

Algorithm 1. Convert depth image to pointcloud
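Only the title of Algorithm 1 survives in this text. A common way to turn a depth image into a pointcloud is pinhole back-projection: a pixel (u, v) with depth z maps to x = (u - cx) * z / fx, y = (v - cy) * z / fy. The minimal sketch below assumes known camera intrinsics `fx`, `fy`, `cx`, `cy` and treats zero or non-finite depth as missing. The function name and these conventions are illustrative assumptions, not necessarily the authors' exact procedure.

```python
import numpy as np


def depth_to_pointcloud(depth: np.ndarray, fx: float, fy: float,
                        cx: float, cy: float) -> np.ndarray:
    """Back-project an HxW depth map into an (N, 3) array of camera-space points.

    Assumption: pinhole camera with focal lengths (fx, fy) and principal point
    (cx, cy) in pixels; pixels with depth <= 0 or non-finite depth are skipped.
    """
    h, w = depth.shape
    # Pixel coordinate grids of shape (h, w): u indexes columns, v indexes rows.
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    valid = np.isfinite(depth) & (depth > 0)
    z = depth[valid]
    # Invert the pinhole projection u = fx * x / z + cx (and likewise for v).
    x = (u[valid] - cx) * z / fx
    y = (v[valid] - cy) * z / fy
    return np.stack([x, y, z], axis=1)


depth = np.array([[0.0, 2.0],
                  [1.0, 0.0]])
points = depth_to_pointcloud(depth, fx=1.0, fy=1.0, cx=0.0, cy=0.0)
print(points)  # two valid pixels -> two 3D points, in row-major pixel order
```

Vectorising with a boolean mask keeps the conversion a single pass over the image, and missing pixels simply drop out, so the resulting pointcloud size varies with how much of the subject the sensor actually captured.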

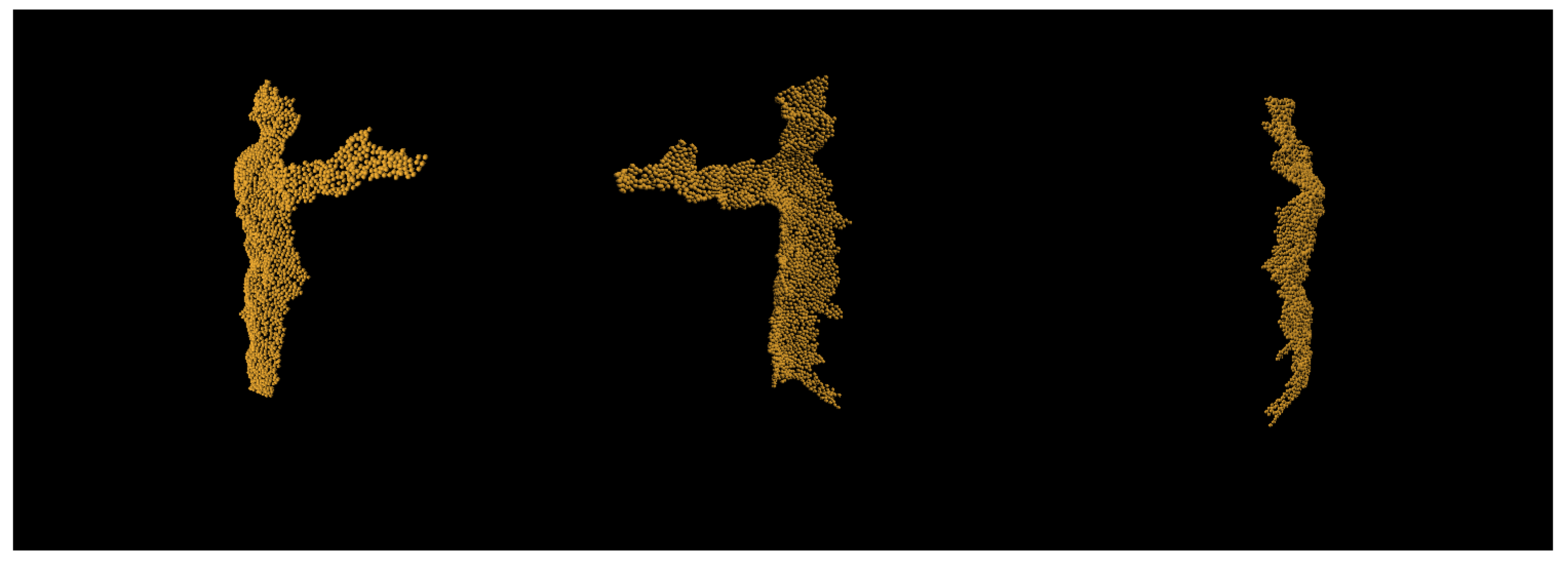

3.1.2. Real World Dataset

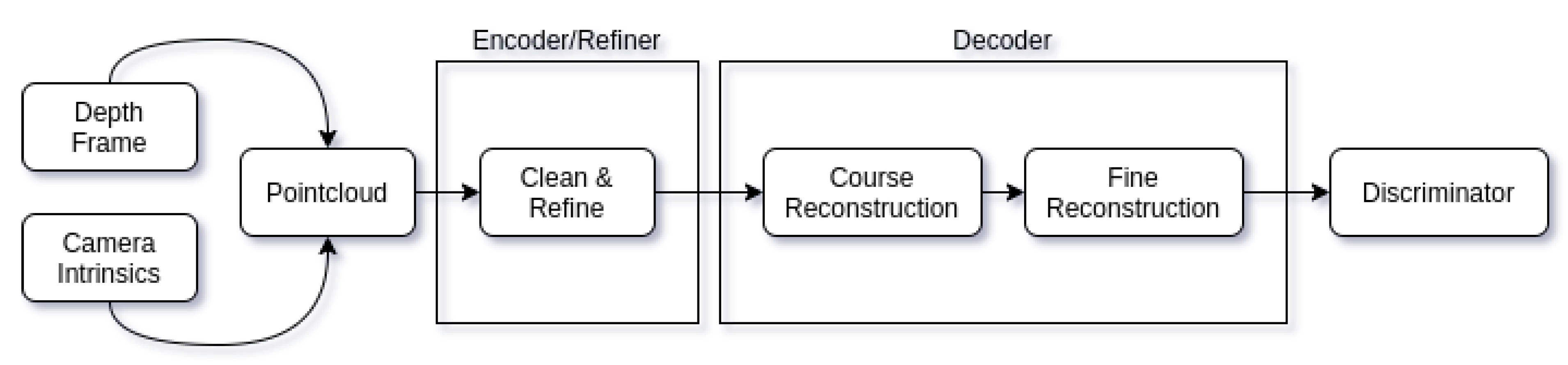

3.2. Proposed Adversarial Auto-Refiner Network Architecture

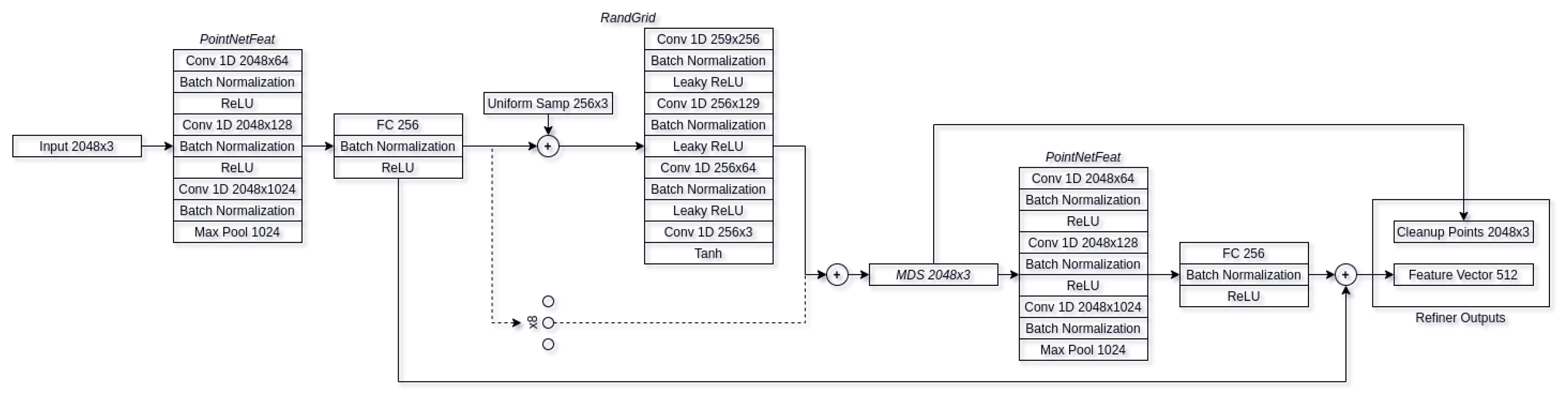

3.2.1. Refiner

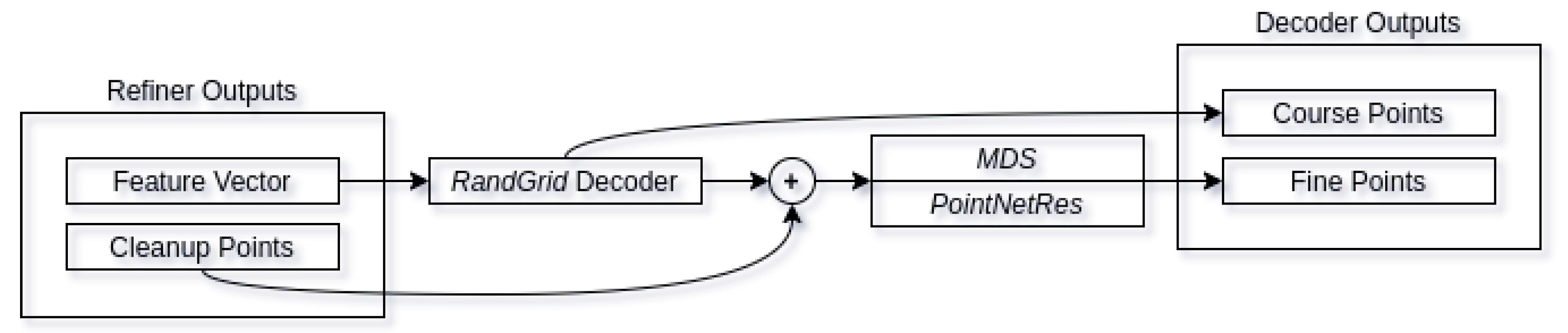

3.2.2. Decoder

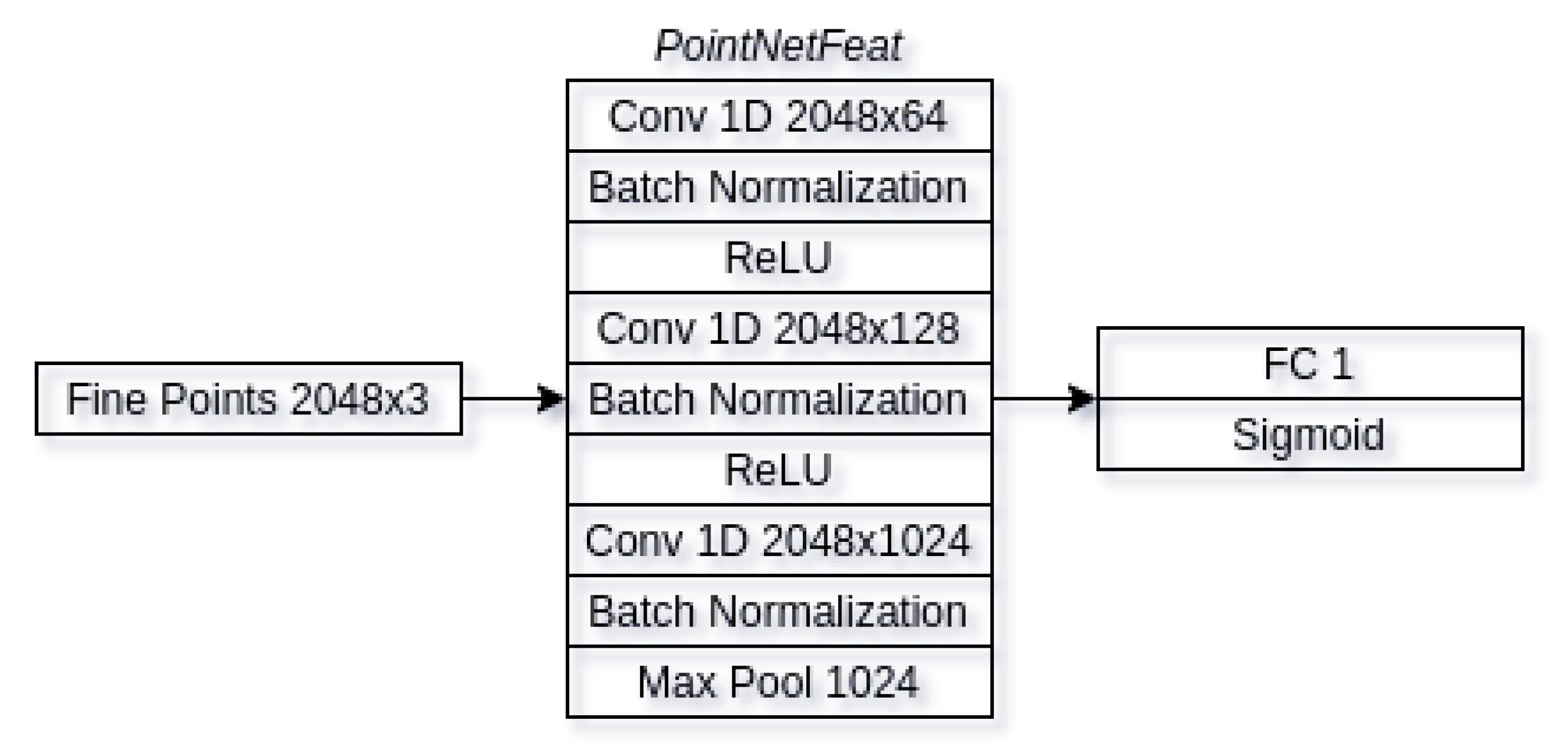

3.2.3. Discriminator

3.3. Training Procedure

3.3.1. Phase I

3.3.2. Phase II

3.3.3. Phase III

3.3.4. Phase IV

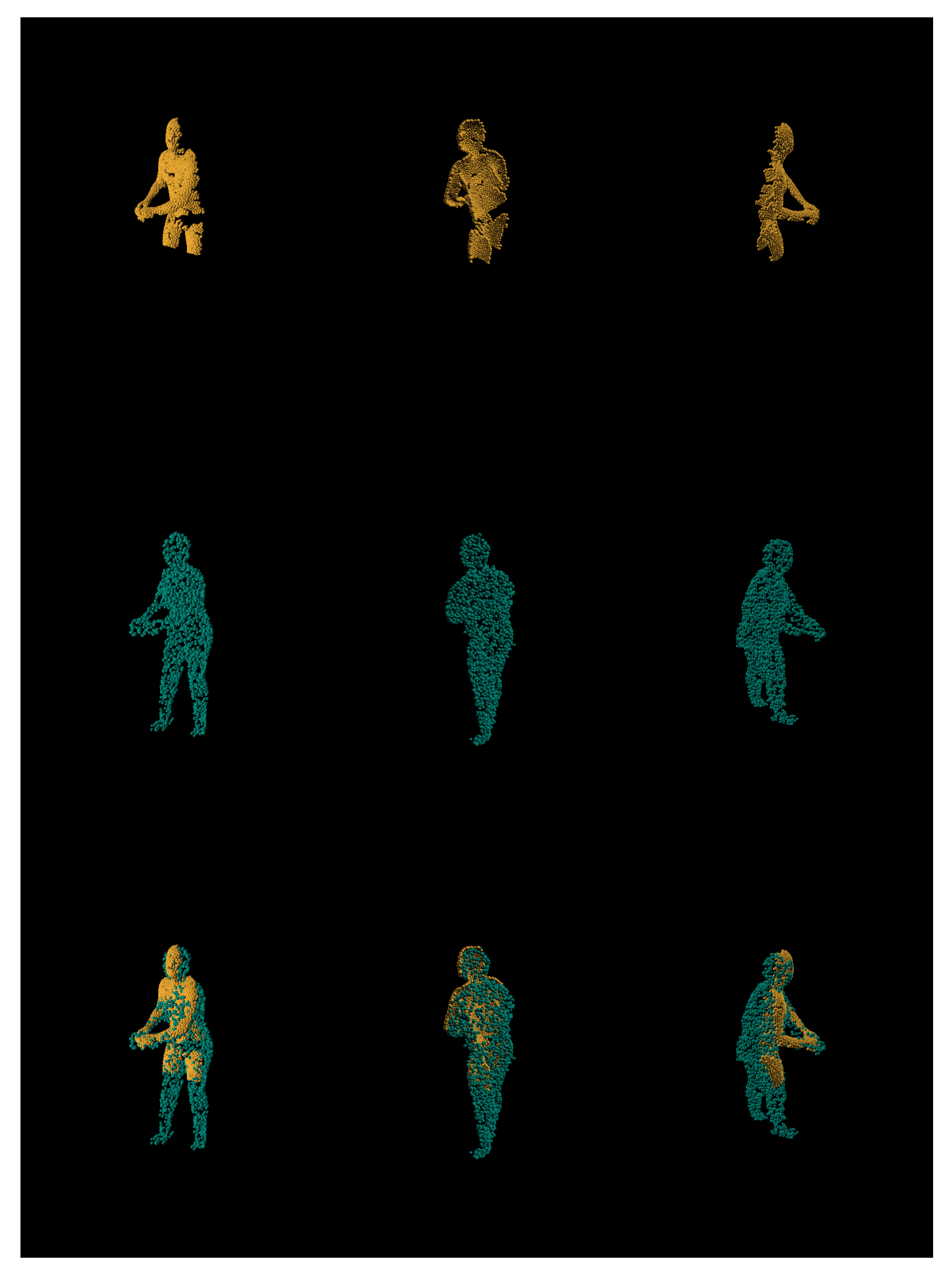

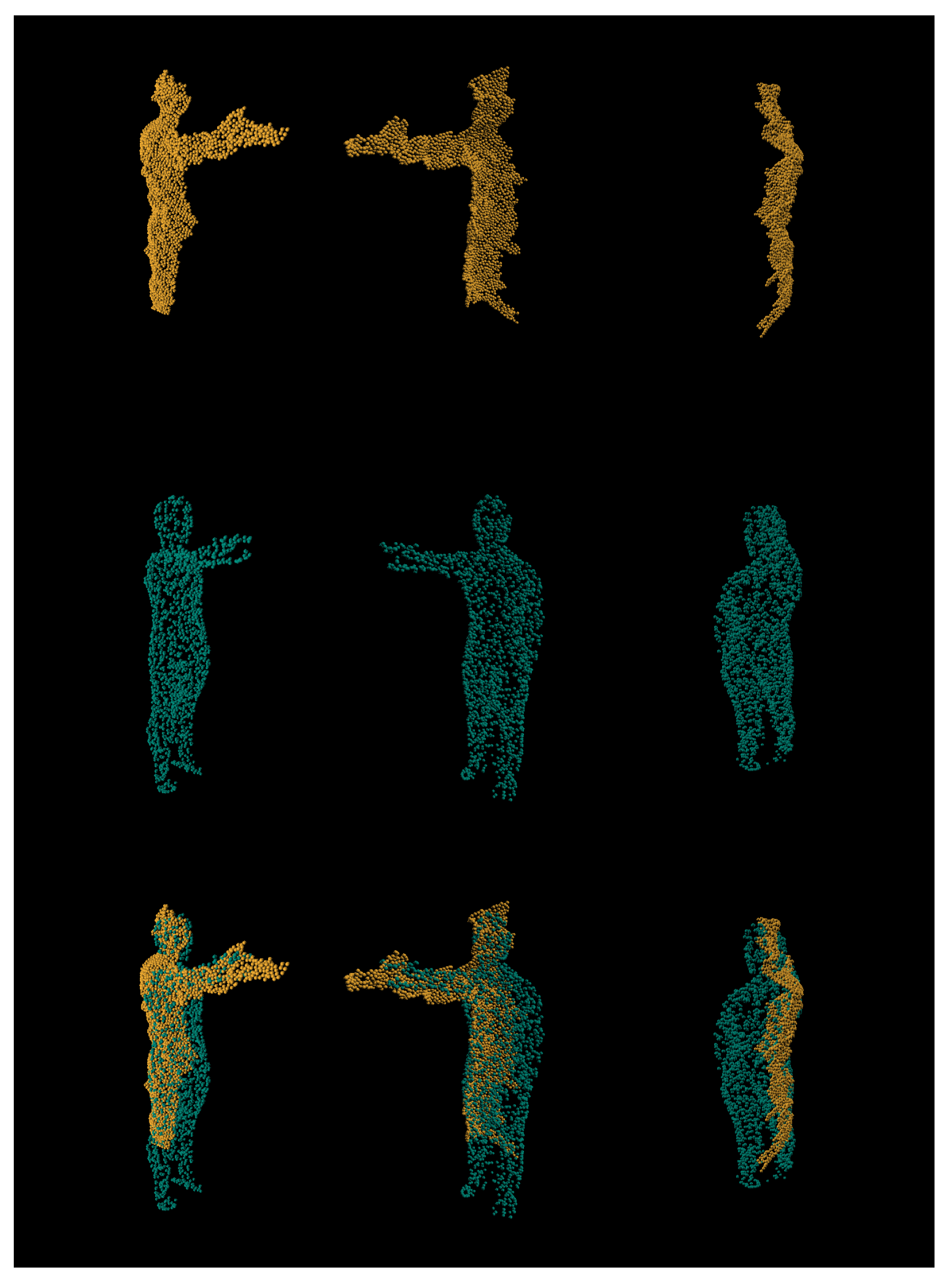

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Shotton, J.; Fitzgibbon, A.; Cook, M.; Sharp, T.; Finocchio, M.; Moore, R.; Kipman, A.; Blake, A. Real-time human pose recognition in parts from single depth images. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 1297–1304.
2. Bozgeyikli, G.; Bozgeyikli, E.; Işler, V. Introducing tangible objects into motion controlled gameplay using Microsoft® Kinect™. Comput. Animat. Virtual Worlds 2013, 24, 429–441.
3. Lozada, R.M.; Escriba, L.R.; Molina Granja, F.T. MS-Kinect in the development of educational games for preschoolers. Int. J. Learn. Technol. 2018, 13, 277–305.
4. Cary, F.; Postolache, O.; Girao, P.S. Kinect based system and serious game motivating approach for physiotherapy assessment and remote session monitoring. Int. J. Smart Sens. Intell. Syst. 2014, 7, 2–4.
5. Ryselis, K.; Petkus, T.; Blažauskas, T.; Maskeliūnas, R.; Damaševičius, R. Multiple Kinect based system to monitor and analyze key performance indicators of physical training. Hum. Centric Comput. Inf. Sci. 2020, 10, 1–22.
6. Camalan, S.; Sengul, G.; Misra, S.; Maskeliūnas, R.; Damaševičius, R. Gender detection using 3d anthropometric measurements by kinect. Metrol. Meas. Syst. 2018, 25, 253–267.
7. Lourenco, F.; Araujo, H. Intel realsense SR305, D415 and L515: Experimental evaluation and comparison of depth estimation. In Proceedings of the 16th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2021); Science and Technology Publications: Setúbal, Portugal, 2021; Volume 4, pp. 362–369.
8. Zhang, Y.; Caspi, A. Stereo imagery based depth sensing in diverse outdoor environments: Practical considerations. In Proceedings of the 2nd ACM/EIGSCC Symposium on Smart Cities and Communities, SCC 2019, Portland, OR, USA, 10–12 September 2019.
9. Jacob, S.; Menon, V.G.; Joseph, S. Depth Information Enhancement Using Block Matching and Image Pyramiding Stereo Vision Enabled RGB-D Sensor. IEEE Sens. J. 2020, 20, 5406–5414.
10. Díaz-Álvarez, A.; Clavijo, M.; Jiménez, F.; Serradilla, F. Inferring the Driver’s Lane Change Intention through LiDAR-Based Environment Analysis Using Convolutional Neural Networks. Sensors 2021, 21, 475.
11. Latella, M.; Sola, F.; Camporeale, C. A Density-Based Algorithm for the Detection of Individual Trees from LiDAR Data. Remote Sens. 2021, 13, 322.
12. Sousa, M.J.; Moutinho, A.; Almeida, M. Thermal Infrared Sensing for Near Real-Time Data-Driven Fire Detection and Monitoring Systems. Sensors 2020, 20, 6803.
13. Pérez, J.; Bryson, M.; Williams, S.B.; Sanz, P.J. Recovering Depth from Still Images for Underwater Dehazing Using Deep Learning. Sensors 2020, 20, 4580.
14. Ren, W.; Ma, O.; Ji, H.; Liu, X. Human Posture Recognition Using a Hybrid of Fuzzy Logic and Machine Learning Approaches. IEEE Access 2020, 8, 135628–135639.
15. Kulikajevas, A.; Maskeliūnas, R.; Damaševičius, R. Detection of sitting posture using hierarchical image composition and deep learning. PeerJ Comput. Sci. 2021, 7.
16. Coolen, B.; Beek, P.J.; Geerse, D.J.; Roerdink, M. Avoiding 3D Obstacles in Mixed Reality: Does It Differ from Negotiating Real Obstacles? Sensors 2020, 20, 1095.
17. Fanini, B.; Pagano, A.; Ferdani, D. A Novel Immersive VR Game Model for Recontextualization in Virtual Environments: The uVRModel. Multimodal Technol. Interact. 2018, 2, 20.
18. Ibañez-Etxeberria, A.; Gómez-Carrasco, C.J.; Fontal, O.; García-Ceballos, S. Virtual Environments and Augmented Reality Applied to Heritage Education. An Evaluative Study. Appl. Sci. 2020, 10, 2352.
19. Fast-Berglund, Å.; Gong, L.; Li, D. Testing and validating Extended Reality (xR) technologies in manufacturing. Procedia Manuf. 2018, 25, 31–38.
20. Fan, H.; Su, H.; Guibas, L. A Point Set Generation Network for 3D Object Reconstruction from a Single Image. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.
21. Charles, R.Q.; Su, H.; Kaichun, M.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 77–85.
22. Choy, C.B.; Xu, D.; Gwak, J.; Chen, K.; Savarese, S. 3D-R2N2: A Unified Approach for Single and Multi-view 3D Object Reconstruction. In Proceedings of the European Conference on Computer Vision (ECCV); Springer: Berlin/Heidelberg, Germany, 2016.
23. Song, H.O.; Xiang, Y.; Jegelka, S.; Savarese, S. Deep Metric Learning via Lifted Structured Feature Embedding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June 2016–1 July 2016.
24. Chang, A.X.; Funkhouser, T.A.; Guibas, L.J.; Hanrahan, P.; Huang, Q.X.; Li, Z.; Savarese, S.; Savva, M.; Song, S.; Su, H.; et al. ShapeNet: An Information-Rich 3D Model Repository. arXiv 2015, arXiv:1512.03012.
25. Greff, K.; Srivastava, R.K.; Koutník, J.; Steunebrink, B.R.; Schmidhuber, J. LSTM: A Search Space Odyssey. IEEE Trans. Neural Netw. Learn. Syst. 2017, 28, 2222–2232.
26. Kong, W.; Dong, Z.Y.; Jia, Y.; Hill, D.J.; Xu, Y.; Zhang, Y. Short-Term Residential Load Forecasting Based on LSTM Recurrent Neural Network. IEEE Trans. Smart Grid 2019, 10, 841–851.
27. Kulikajevas, A.; Maskeliūnas, R.; Damaševičius, R.; Ho, E.S.L. 3D Object Reconstruction from Imperfect Depth Data Using Extended YOLOv3 Network. Sensors 2020, 20, 2025.
28. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.
29. Kulikajevas, A.; Maskeliūnas, R.; Damaševičius, R.; Misra, S. Reconstruction of 3D Object Shape Using Hybrid Modular Neural Network Architecture Trained on 3D Models from ShapeNetCore Dataset. Sensors 2019, 19, 1553.
30. Tatarchenko, M.; Dosovitskiy, A.; Brox, T. Octree Generating Networks: Efficient Convolutional Architectures for High-resolution 3D Outputs. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2107–2115.
31. Mi, Z.; Luo, Y.; Tao, W. SSRNet: Scalable 3D Surface Reconstruction Network. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 967–976.
32. Ma, T.; Kuang, P.; Tian, W. An improved recurrent neural networks for 3d object reconstruction. Appl. Intell. 2019.
33. Xu, X.; Lin, F.; Wang, A.; Hu, Y.; Huang, M.; Xu, W. Body-Earth Mover’s Distance: A Matching-Based Approach for Sleep Posture Recognition. IEEE Trans. Biomed. Circuits Syst. 2016, 10, 1023–1035.
34. Wu, W.; Qi, Z.; Fuxin, L. PointConv: Deep Convolutional Networks on 3D Point Clouds. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 9613–9622.
35. Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. arXiv 2016, arXiv:1612.00593v1.
36. Yuan, W.; Khot, T.; Held, D.; Mertz, C.; Hebert, M. PCN: Point Completion Network. arXiv 2018, arXiv:1808.00671.
37. Groueix, T.; Fisher, M.; Kim, V.G.; Russell, B.C.; Aubry, M. AtlasNet: A Papier-Mâché Approach to Learning 3D Surface Generation. arXiv 2018, arXiv:1802.05384.
38. Liu, M.; Sheng, L.; Yang, S.; Shao, J.; Hu, S.M. Morphing and Sampling Network for Dense Point Cloud Completion. Proc. AAAI Conf. Artif. Intell. 2020, 34, 11596–11603.
39. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Computer Vision – ECCV 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 740–755.
40. Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.
41. Dai, A.; Chang, A.X.; Savva, M.; Halber, M.; Funkhouser, T.A.; Nießner, M. ScanNet: Richly-annotated 3D Reconstructions of Indoor Scenes. arXiv 2017, arXiv:1702.04405.
42. Haque, A.; Peng, B.; Luo, Z.; Alahi, A.; Yeung, S.; Fei-Fei, L. ITOP Dataset (Version 1.0); Zenodo: Geneva, Switzerland, 2016.
43. Ganapathi, V.; Plagemann, C.; Koller, D.; Thrun, S. Real-Time Human Pose Tracking from Range Data. In Computer Vision – ECCV 2012; Fitzgibbon, A., Lazebnik, S., Perona, P., Sato, Y., Schmid, C., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 738–751.
44. Flaischlen, S.; Wehinger, G.D. Synthetic Packed-Bed Generation for CFD Simulations: Blender vs. STAR-CCM+. ChemEngineering 2019, 3, 52.
45. Ghorbani, S.; Mahdaviani, K.; Thaler, A.; Kording, K.; Cook, D.J.; Blohm, G.; Troje, N.F. MoVi: A Large Multipurpose Motion and Video Dataset. arXiv 2020, arXiv:2003.01888.
46. Mahmood, N.; Ghorbani, N.; Troje, N.F.; Pons-Moll, G.; Black, M.J. AMASS: Archive of Motion Capture as Surface Shapes. In Proceedings of the International Conference on Computer Vision, Seoul, Korea, 27 October 2019–2 November 2019; pp. 5442–5451.
47. Kainz, F.; Bogart, R.R.; Hess, D.K. The OpenEXR Image File Format; ACM Press: New York, NY, USA, 2004.
48. Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space. In Proceedings of the 31st International Conference on Neural Information Processing Systems; NIPS’17; Curran Associates Inc.: Red Hook, NY, USA, 2017; pp. 5105–5114.
49. Jin, Y.; Zhang, J.; Li, M.; Tian, Y.; Zhu, H.; Fang, Z. Towards the Automatic Anime Characters Creation with Generative Adversarial Networks. arXiv 2017, arXiv:1708.05509.
50. Karras, T.; Laine, S.; Aila, T. A Style-Based Generator Architecture for Generative Adversarial Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.
51. Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-Image Translation with Conditional Adversarial Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.
52. Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks. arXiv 2020, arXiv:1703.10593.
53. Atapattu, C.; Rekabdar, B. Improving the realism of synthetic images through a combination of adversarial and perceptual losses. In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019; pp. 1–7.
54. Zhang, K.; Zuo, W.; Chen, Y.; Meng, D.; Zhang, L. Beyond a Gaussian Denoiser: Residual Learning of Deep CNN for Image Denoising. IEEE Trans. Image Process. 2017, 26, 3142–3155.
55. Wu, S.; Li, G.; Deng, L.; Liu, L.; Wu, D.; Xie, Y.; Shi, L. L1-Norm Batch Normalization for Efficient Training of Deep Neural Networks. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 2043–2051.
56. He, K.; Zhang, X.; Ren, S.; Sun, J. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1026–1034.
57. Gao, T.; Chai, Y.; Liu, Y. Applying long short term momory neural networks for predicting stock closing price. In Proceedings of the 2017 8th IEEE International Conference on Software Engineering and Service Science (ICSESS), Beijing, China, 24–26 November 2017; pp. 575–578.

| Name | Voxels | Pointcloud | Input | EMD | CD | Standalone |
|---|---|---|---|---|---|---|
| 3D-R2N2 | ✓ | ✗ | RGB | — | — | ✗ |
| YoloExt | ✓ | ✗ | RGB-D | — | — | ✓ |
| PointOutNet | ✗ | ✓ | RGB | ✓ | ✓ | ✗ |
| PointNet w FCAE | ✗ | ✓ | Pointcloud | ✗ | ✓ | ✗ |
| PCN | ✗ | ✓ | Pointcloud | ✗ | ✓ | ✗ |
| AtlasNet | ✗ | ✓ | Pointcloud | ✗ | ✓ | ✗ |
| MSN | ✗ | ✓ | Pointcloud | ✓ | ✗ | ✗ |
| Ours | ✗ | ✓ | Depth | ✓ | ✗ | ✓ |

| Method | ShapeNet EMD | ShapeNet CD | AMASS EMD | AMASS CD |
|---|---|---|---|---|
| PointNet w FCAE [21] | 0.0832 | 0.0182 | 3.3806 | 4.9042 |
| PCN [36] | 0.0734 | 0.0121 | 3.0456 | 4.0955 |
| AtlasNet [37] | 0.0653 | 0.0182 | 2.0875 | 6.4343 |
| MSN [38] | 0.0378 | 0.0114 | 1.1525 | 0.8016 |
| Our method (Cleanup) | N/A | N/A | 0.0603 | 0.0292 |
| Our method (Reconstruction) | N/A | N/A | 0.0590 | 0.0790 |
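The comparison above reports Earth Mover's Distance (EMD) and Chamfer Distance (CD). As a hedged illustration of what these two metrics measure, the sketch below computes one common symmetric CD variant (mean squared nearest-neighbour distance in each direction, summed) and an exact EMD for two equal-size point sets (mean Euclidean distance under the optimal one-to-one matching). The function names are ours, and papers differ in squaring, averaging and scaling, so this code is not expected to reproduce the numbers in the table. The brute-force EMD enumerates all matchings and is only usable on a handful of points; practical pipelines rely on approximate or assignment-based solvers instead.

```python
import itertools

import numpy as np


def chamfer_distance(a: np.ndarray, b: np.ndarray) -> float:
    """Symmetric Chamfer distance between point sets a (N, 3) and b (M, 3).

    Variant assumed here: mean squared nearest-neighbour distance a->b plus b->a.
    """
    # (N, M) matrix of pairwise squared Euclidean distances.
    d2 = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
    return float(d2.min(axis=1).mean() + d2.min(axis=0).mean())


def earth_movers_distance(a: np.ndarray, b: np.ndarray) -> float:
    """Exact EMD for equal-size sets: mean distance under the optimal bijection.

    Brute force over all permutations (O(N!)), for illustration on tiny sets only.
    """
    if len(a) != len(b):
        raise ValueError("this EMD sketch requires point sets of equal size")
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    n = len(a)
    best = min(sum(d[i, p[i]] for i in range(n))
               for p in itertools.permutations(range(n)))
    return float(best / n)


a = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
c = np.array([[0.0, 0.0, 0.0], [3.0, 0.0, 0.0]])
print(chamfer_distance(a, c), earth_movers_distance(a, c))
```

The contrast between the two is the design point: CD lets many points share one nearest neighbour, so it tolerates uneven point density, while EMD forces a one-to-one matching and therefore also penalises points that are distributed differently over the surface.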

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kulikajevas, A.; Maskeliūnas, R.; Damaševičius, R.; Wlodarczyk-Sielicka, M. Auto-Refining Reconstruction Algorithm for Recreation of Limited Angle Humanoid Depth Data. Sensors 2021, 21, 3702. https://doi.org/10.3390/s21113702
