A Sawn Timber Tree Species Recognition Method Based on AM-SPPResNet

Abstract

1. Introduction

2. Materials and Methods

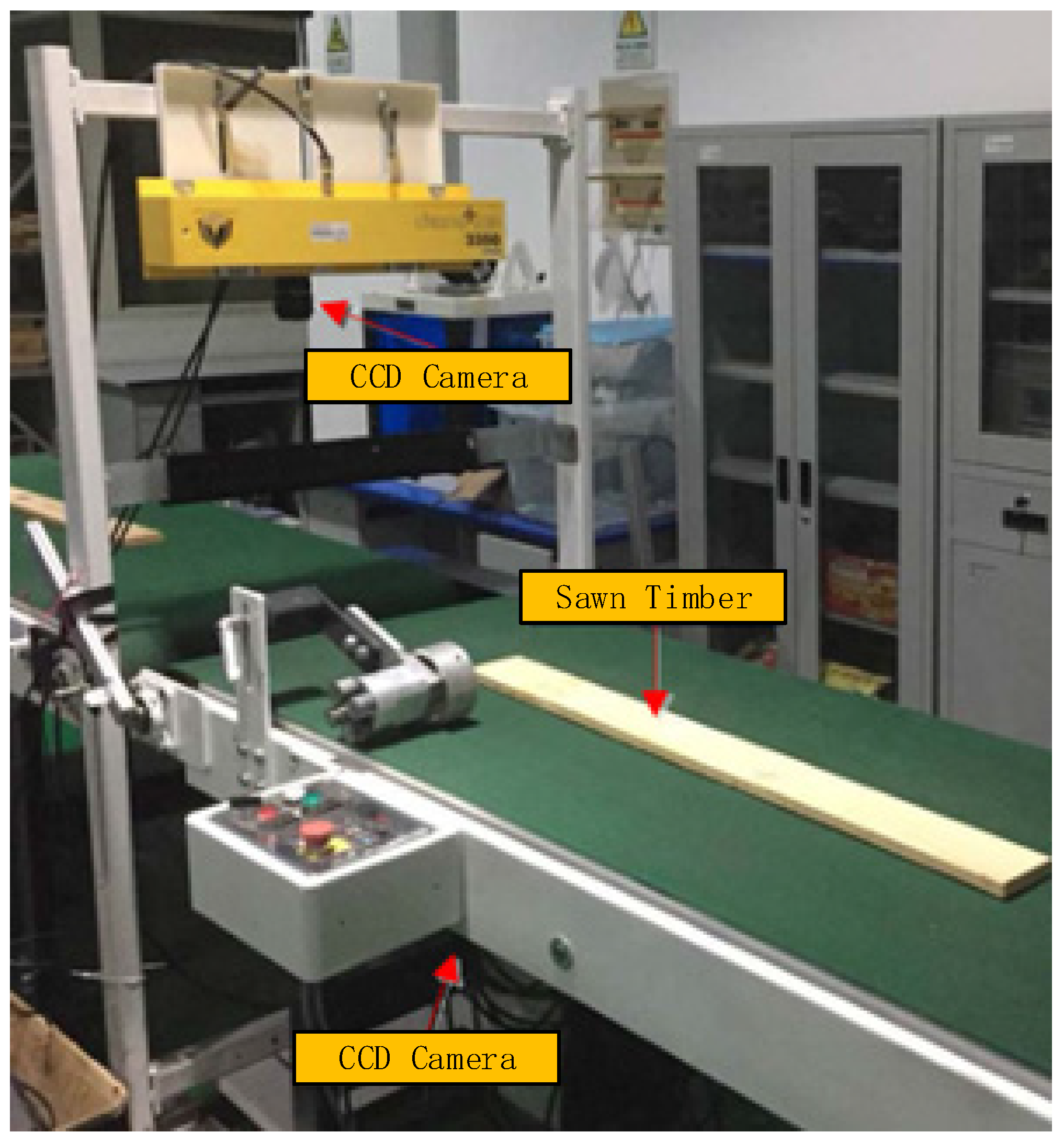

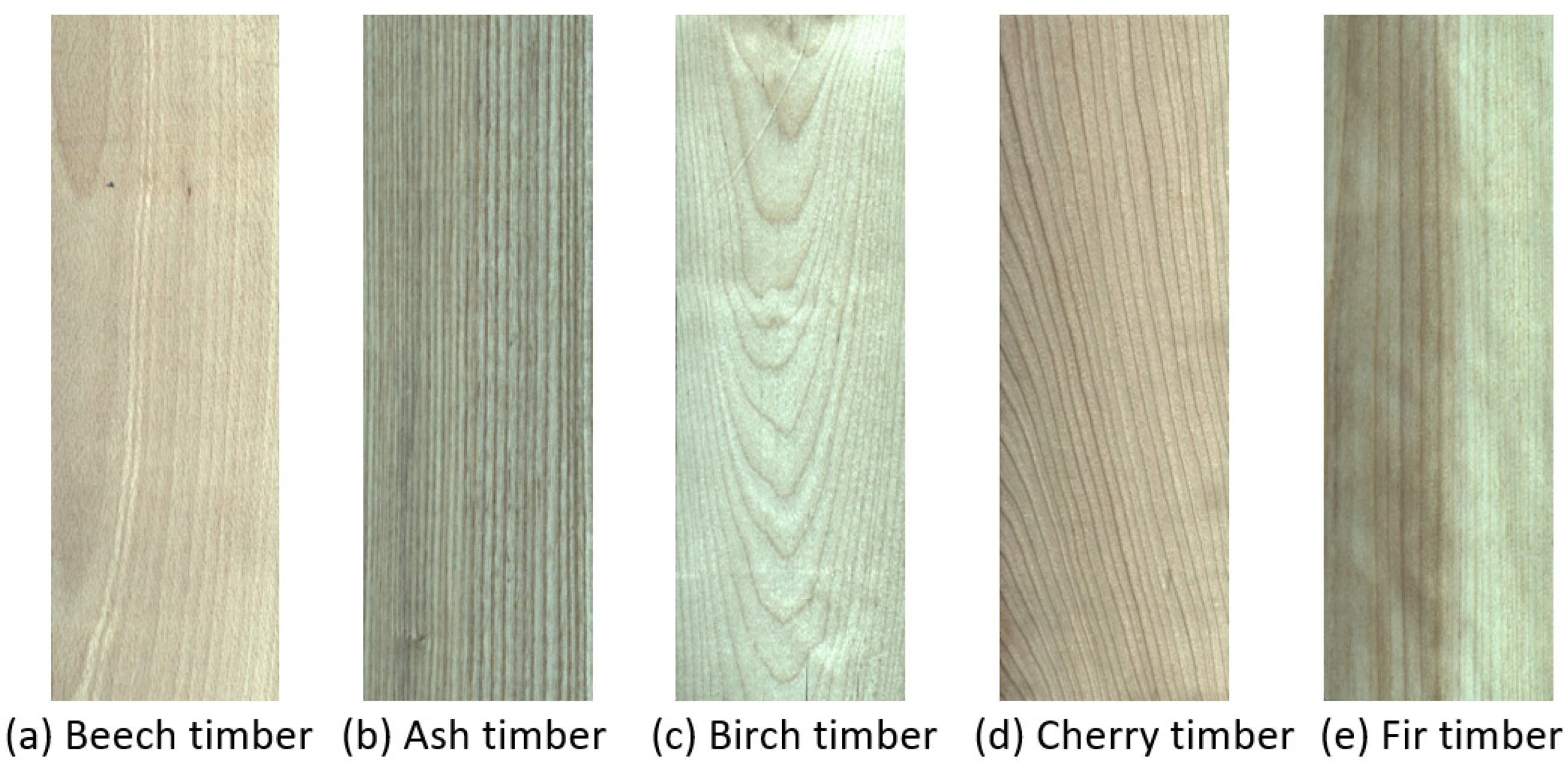

2.1. Acquisition and Partition of Dataset

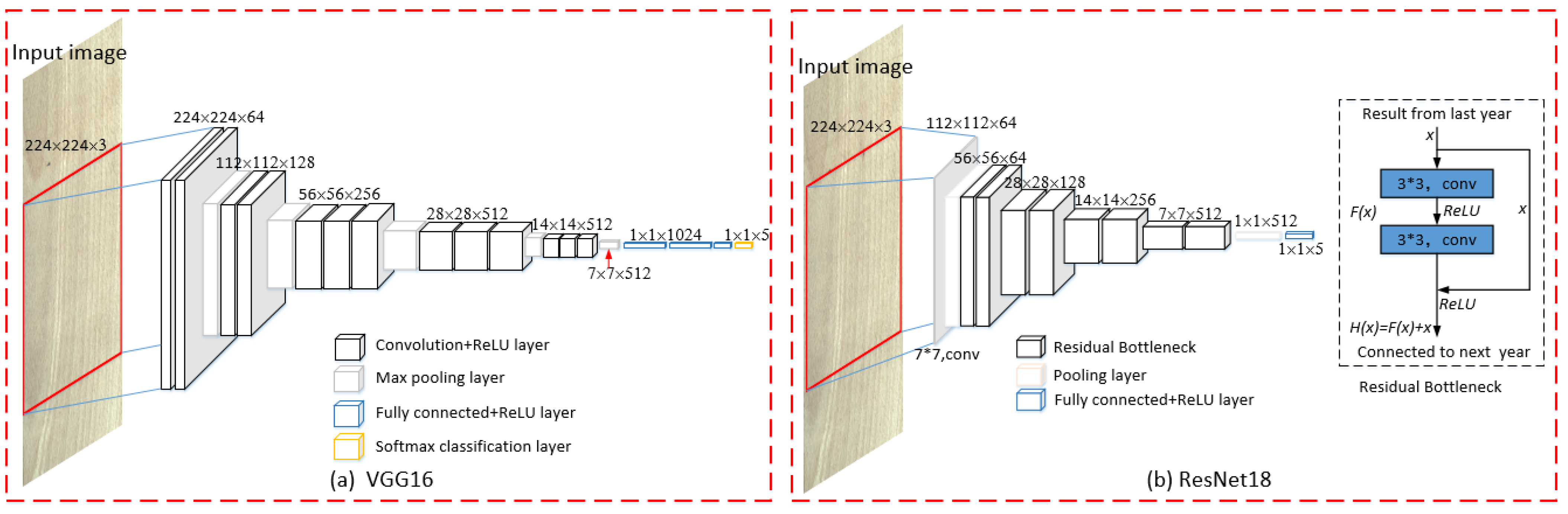

2.2. Convolutional Neural Networks

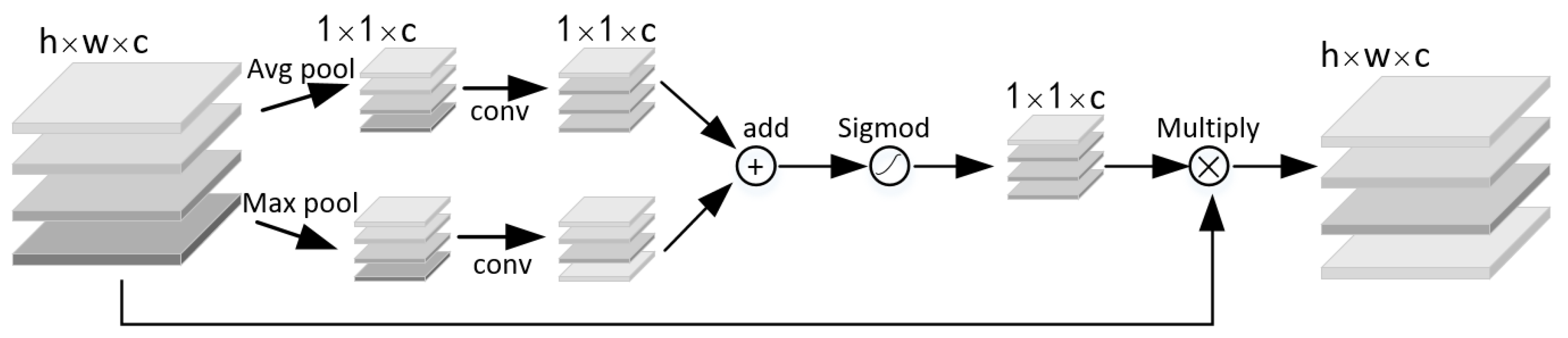

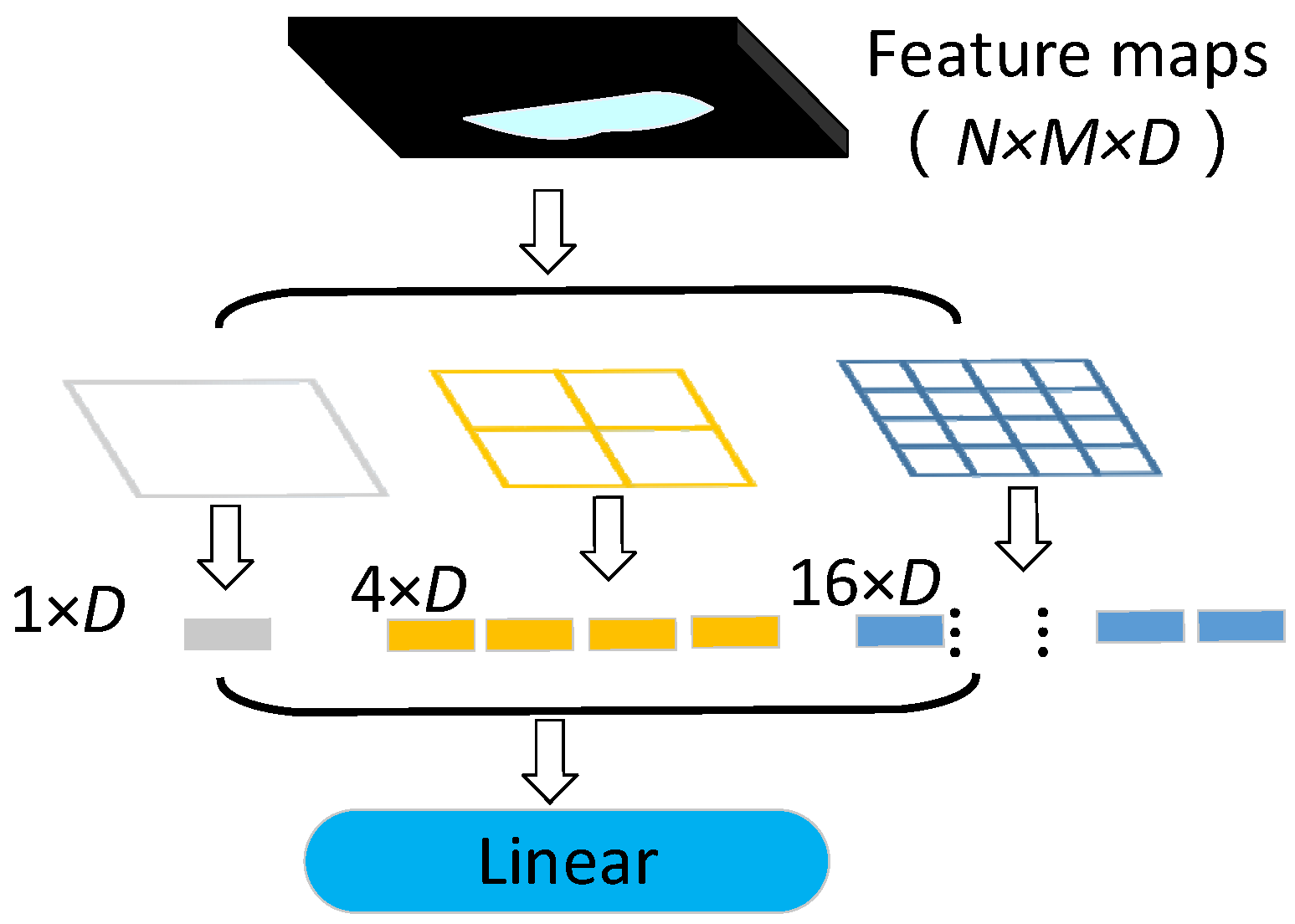

2.3. Optimized Convolutional Neural Network

3. Results

3.1. Model Evaluation Index

3.2. Experimental Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameter | Value |
|---|---|
| Cameras | DALSA LA-GC-02K05B |
| Camera lens | Nikon AF Nikkor 50 mm f/1.8 D |
| System | Windows 10 x64 |
| CPU | Intel Xeon W-2155 @ 3.30 GHz |
| GPU | Nvidia GeForce GTX 1080 Ti (11 GB) |
| Environment configuration | PyTorch 1.7.1 + Python 3.7.0 + CUDA 10.1 |

| Dataset | Fold | Beech Timber | Ash Timber | Birch Timber | Cherry Timber | Fir Timber |
|---|---|---|---|---|---|---|
| Train dataset | Fold 1 | 161 | 159 | 161 | 160 | 193 |
| Train dataset | Fold 2 | 161 | 159 | 161 | 160 | 194 |
| Train dataset | Fold 3 | 161 | 159 | 161 | 160 | 194 |
| Train dataset | Fold 4 | 162 | 160 | 161 | 160 | 194 |
| Test dataset | | 162 | 160 | 161 | 161 | 194 |
| Total | | 807 | 797 | 805 | 801 | 969 |
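
The per-species counts in the partition table are consistent with dividing each species' images into five near-equal parts (four training folds plus one test set), with any leftover images going to the last parts. The following is a minimal sketch of that arithmetic only. The function name `split_sizes` and the rule for placing the remainder are assumptions inferred from the counts, not a procedure stated by the authors.

```python
def split_sizes(total, parts=5):
    """Split `total` items into `parts` near-equal groups.

    Assumption: the remainder is given, one item each, to the LAST groups,
    which matches the counts listed in the partition table.
    """
    base, extra = divmod(total, parts)
    return [base + (1 if i >= parts - extra else 0) for i in range(parts)]


# Totals per species, taken from the "Total" row of the table.
totals = {"Beech": 807, "Ash": 797, "Birch": 805, "Cherry": 801, "Fir": 969}

for species, total in totals.items():
    *folds, test = split_sizes(total)
    print(species, "train folds:", folds, "test:", test)
```

Each list holds the four training folds followed by the test set, so the last element can be compared directly with the "Test dataset" row.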

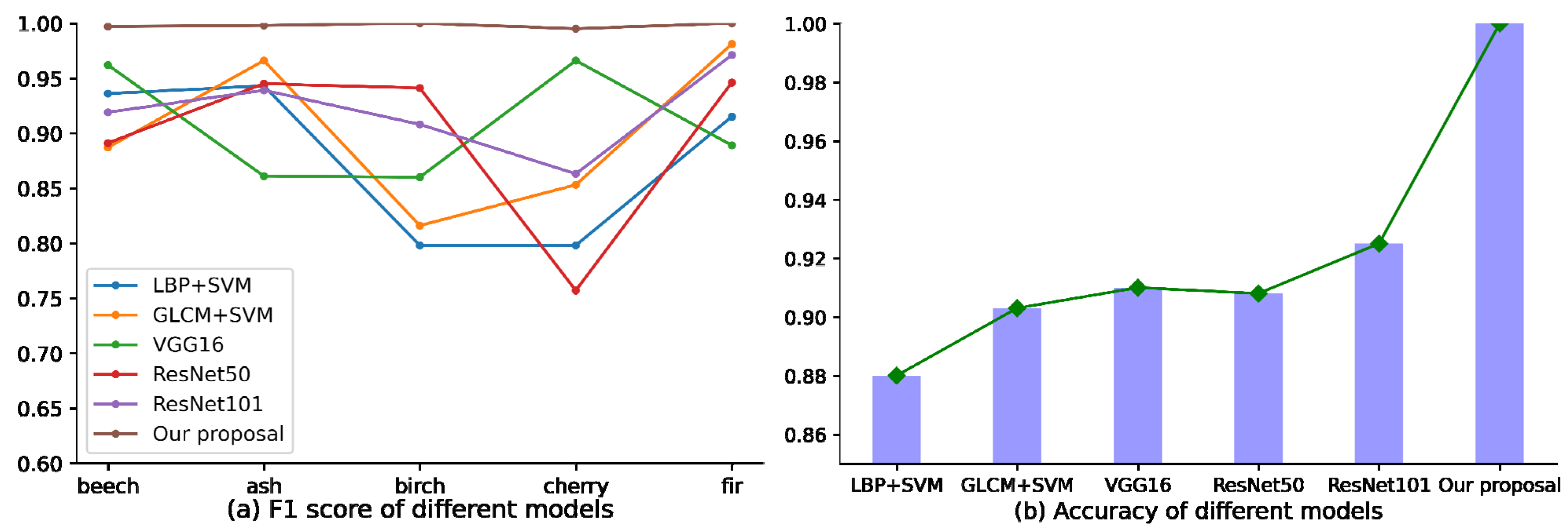

| Model | Metric | Beech | Ash | Birch | Cherry | Fir |
|---|---|---|---|---|---|---|
| ResNet101 | Precision | 0.895 | 0.900 | 0.970 | 0.970 | 0.990 |
| ResNet101 | Recall | 0.970 | 0.995 | 0.875 | 0.833 | 0.953 |
| ResNet101 | F1-Score | 0.919 | 0.939 | 0.908 | 0.863 | 0.971 |
| ResNet101 | Accuracy (overall) | 0.925 | | | | |
| AM-SPPResNet | Precision | 0.943 | 0.985 | 1.000 | 0.998 | 0.983 |
| AM-SPPResNet | Recall | 1.000 | 0.983 | 0.958 | 0.968 | 0.970 |
| AM-SPPResNet | F1-Score | 0.970 | 0.985 | 0.978 | 0.982 | 0.976 |
| AM-SPPResNet | Accuracy (overall) | 0.978 | | | | |
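
The result tables report per-class precision, recall and F1-score together with an overall accuracy. As a hedged illustration of how these indices are conventionally computed from a confusion matrix, here is a small sketch using the standard definitions. The function name `classification_metrics` and the 3-class confusion matrix are hypothetical and are not taken from the paper's experiments.

```python
def classification_metrics(confusion):
    """Per-class precision, recall, F1 and overall accuracy.

    confusion[i][j] counts samples of true class i predicted as class j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    precision, recall, f1 = [], [], []
    for k in range(n):
        tp = confusion[k][k]
        predicted_k = sum(confusion[i][k] for i in range(n))  # TP + FP
        actual_k = sum(confusion[k])                          # TP + FN
        p = tp / predicted_k if predicted_k else 0.0
        r = tp / actual_k if actual_k else 0.0
        f = 2 * p * r / (p + r) if (p + r) else 0.0
        precision.append(p)
        recall.append(r)
        f1.append(f)
    correct = sum(confusion[k][k] for k in range(n))
    accuracy = correct / total if total else 0.0
    return precision, recall, f1, accuracy


# Hypothetical confusion matrix for three classes (rows: true, columns: predicted).
example = [
    [8, 2, 0],
    [1, 9, 0],
    [0, 0, 10],
]
precision, recall, f1, accuracy = classification_metrics(example)
print([round(v, 3) for v in precision], [round(v, 3) for v in recall])
print([round(v, 3) for v in f1], round(accuracy, 3))
```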

| Model | Metric | Beech | Ash | Birch | Cherry | Fir |
|---|---|---|---|---|---|---|
| ResNet101 + XGBoost | Precision | 0.990 | 0.850 | 0.978 | 0.995 | 0.878 |
| ResNet101 + XGBoost | Recall | 0.998 | 0.887 | 0.908 | 0.985 | 0.898 |
| ResNet101 + XGBoost | F1-Score | 0.994 | 0.868 | 0.942 | 0.990 | 0.888 |
| ResNet101 + XGBoost | Accuracy (overall) | 0.930 | | | | |
| ResNet101 + SVM | Precision | 0.980 | 0.988 | 0.918 | 0.918 | 0.973 |
| ResNet101 + SVM | Recall | 0.958 | 0.938 | 0.965 | 0.978 | 0.925 |
| ResNet101 + SVM | F1-Score | 0.968 | 0.961 | 0.938 | 0.945 | 0.948 |
| ResNet101 + SVM | Accuracy (overall) | 0.950 | | | | |

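| Model | Metric | Beech | Ash | Birch | Cherry | Fir |
|---|---|---|---|---|---|---|
| AM-SPPResNet + XGBoost | Precision | 0.998 | 0.998 | 0.995 | 0.995 | 0.990 |
| AM-SPPResNet + XGBoost | Recall | 1.000 | 0.993 | 0.990 | 0.990 | 0.998 |
| AM-SPPResNet + XGBoost | F1-Score | 0.999 | 0.995 | 0.992 | 0.992 | 0.994 |
| AM-SPPResNet + XGBoost | Accuracy (overall) | 0.995 | | | | |
| AM-SPPResNet + SVM Classifier | Precision | 0.995 | 0.998 | 1.000 | 0.998 | 1.000 |
| AM-SPPResNet + SVM Classifier | Recall | 1.000 | 0.998 | 1.000 | 0.993 | 1.000 |
| AM-SPPResNet + SVM Classifier | F1-Score | 0.997 | 0.998 | 1.000 | 0.995 | 1.000 |
| AM-SPPResNet + SVM Classifier | Accuracy (overall) | 1.000 | | | | |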

| Metric | Beech | Ash | Birch | Cherry | Fir |
|---|---|---|---|---|---|
| Precision | 1.00 | 0.99 | 0.98 | 1.00 | 0.99 |
| Recall | 1.00 | 0.98 | 0.99 | 0.99 | 0.99 |
| F1-score | 1.000 | 0.985 | 0.985 | 0.995 | 0.990 |
| Accuracy (overall) | 0.99 | | | | |

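| Model | Metric | Beech | Ash | Birch | Cherry | Fir |
|---|---|---|---|---|---|---|
| LBP (P = 8, R = 1) + SVM Classifier | Precision | 0.990 | 0.965 | 0.720 | 0.825 | 0.925 |
| LBP (P = 8, R = 1) + SVM Classifier | Recall | 0.888 | 0.923 | 0.895 | 0.775 | 0.905 |
| LBP (P = 8, R = 1) + SVM Classifier | F1-Score | 0.936 | 0.943 | 0.798 | 0.798 | 0.915 |
| LBP (P = 8, R = 1) + SVM Classifier | Accuracy (overall) | 0.880 | | | | |
| LBP (P = 8, R = 1) + SVM Classifier | Average time of single image | 21.3 ms | | | | |
| GLCM (angle = [0°, 45°, 90°, 135°], d = [2, 4]) + SVM Classifier | Precision | 0.898 | 0.973 | 0.783 | 0.888 | 0.975 |
| GLCM (angle = [0°, 45°, 90°, 135°], d = [2, 4]) + SVM Classifier | Recall | 0.878 | 0.960 | 0.853 | 0.823 | 0.988 |
| GLCM (angle = [0°, 45°, 90°, 135°], d = [2, 4]) + SVM Classifier | F1-Score | 0.887 | 0.966 | 0.816 | 0.853 | 0.981 |
| GLCM (angle = [0°, 45°, 90°, 135°], d = [2, 4]) + SVM Classifier | Accuracy (overall) | 0.903 | | | | |
| GLCM (angle = [0°, 45°, 90°, 135°], d = [2, 4]) + SVM Classifier | Average time of single image | 25.6 ms | | | | |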
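
One of the baselines in this comparison is a local binary pattern descriptor with P = 8 neighbours at radius R = 1. As a rough illustration of what such a descriptor computes, the sketch below implements the basic 3 × 3 LBP in pure Python. It is an assumption-laden simplification: it uses the eight pixels of the square neighbourhood (no circular interpolation), an arbitrary clockwise bit ordering, and the hypothetical helper names `lbp_codes` and `lbp_histogram`. The paper's actual feature extraction settings beyond P and R are not given here.

```python
def lbp_codes(image):
    """Basic LBP code (P=8, R=1) for every interior pixel of a 2-D grey-level list."""
    # Clockwise neighbour offsets starting at the top-left pixel (assumed ordering).
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    rows, cols = len(image), len(image[0])
    codes = []
    for r in range(1, rows - 1):
        row_codes = []
        for c in range(1, cols - 1):
            centre = image[r][c]
            code = 0
            for bit, (dr, dc) in enumerate(offsets):
                # A neighbour at least as bright as the centre sets its bit.
                if image[r + dr][c + dc] >= centre:
                    code |= 1 << bit
            row_codes.append(code)
        codes.append(row_codes)
    return codes


def lbp_histogram(image):
    """256-bin histogram of LBP codes, usable as a texture feature vector."""
    hist = [0] * 256
    for row in lbp_codes(image):
        for code in row:
            hist[code] += 1
    return hist


# Hypothetical 3 x 3 grey-level patch; only the centre pixel has a full neighbourhood.
patch = [
    [6, 5, 2],
    [7, 6, 1],
    [9, 8, 7],
]
print(lbp_codes(patch))
```

A histogram such as the one returned by `lbp_histogram` is the kind of fixed-length vector that could then be passed to a classifier like an SVM.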

| Model | Metric | Beech | Ash | Birch | Cherry | Fir |
|---|---|---|---|---|---|---|
| VGG16 | Precision | 0.970 | 0.968 | 0.913 | 0.963 | 0.860 |
| VGG16 | Recall | 0.955 | 0.828 | 0.828 | 0.970 | 0.953 |
| VGG16 | F1-Score | 0.962 | 0.861 | 0.860 | 0.966 | 0.889 |
| VGG16 | Accuracy (overall) | 0.910 | | | | |
| VGG16 | Average time of single image | 10.5 ms | | | | |
| ResNet50 | Precision | 0.858 | 0.920 | 0.943 | 0.968 | 0.993 |
| ResNet50 | Recall | 0.965 | 0.983 | 0.948 | 0.745 | 0.908 |
| ResNet50 | F1-Score | 0.891 | 0.945 | 0.941 | 0.757 | 0.946 |
| ResNet50 | Accuracy (overall) | 0.908 | | | | |
| ResNet50 | Average time of single image | 35.5 ms | | | | |

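| Model | Metric | Beech | Ash | Birch | Cherry | Fir |
|---|---|---|---|---|---|---|
| ResNet101 | Precision | 0.895 | 0.900 | 0.970 | 0.970 | 0.990 |
| ResNet101 | Recall | 0.970 | 0.995 | 0.875 | 0.833 | 0.953 |
| ResNet101 | F1-Score | 0.919 | 0.939 | 0.908 | 0.863 | 0.971 |
| ResNet101 | Accuracy (overall) | 0.925 | | | | |
| ResNet101 | Average time of single image | 30.5 ms | | | | |
| AM-SPPResNet + SVM Classifier | Precision | 0.995 | 0.998 | 1.000 | 0.998 | 1.000 |
| AM-SPPResNet + SVM Classifier | Recall | 1.000 | 0.998 | 1.000 | 0.993 | 1.000 |
| AM-SPPResNet + SVM Classifier | F1-Score | 0.997 | 0.998 | 1.000 | 0.995 | 1.000 |
| AM-SPPResNet + SVM Classifier | Accuracy (overall) | 1.000 | | | | |
| AM-SPPResNet + SVM Classifier | Average time of single image | 63.8 ms | | | | |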
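
The "Average time of single image" rows report a per-image latency in milliseconds. How the authors measured it is not stated here, so the following is only a generic sketch of one common approach: time a batch of predictions with a monotonic clock and divide by the number of calls. The helper name `average_time_ms` and the stand-in `predict` callable are hypothetical.

```python
import time


def average_time_ms(predict, images, repeats=1):
    """Mean wall-clock time per image, in milliseconds.

    `predict` is any callable taking one image; `repeats` re-runs the whole
    set to smooth out timer noise. For GPU models, the device would also
    need to be synchronised before reading the clock (not shown here).
    """
    if not images:
        raise ValueError("images must not be empty")
    start = time.perf_counter()
    for _ in range(repeats):
        for image in images:
            predict(image)
    elapsed = time.perf_counter() - start
    return 1000.0 * elapsed / (repeats * len(images))


# Stand-in "model": sums the pixel values of a tiny fake image.
fake_images = [[1, 2, 3, 4]] * 100
print(f"{average_time_ms(sum, fake_images, repeats=10):.4f} ms per image")
```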

| Class Index | Botanical Name | Category |
|---|---|---|
| 1 | Fagus sylvatica | Diffuse-porous hardwood |
| 2 | Juglans regia | Semi-diffuse-porous hardwood |
| 3 | Castanea sativa | Ring-porous hardwood |
| 4 | Quercus cerris | Ring-porous hardwood |
| 5 | Alnus glutinosa | Diffuse-porous hardwood |
| 6 | Fraxinus ornus | Ring-porous hardwood |
| 7 | Picea abies | Softwood |
| 8 | | Softwood |
| 9 | Ailanthus altissima | Ring-porous hardwood |
| 10 | Robinia pseudoacacia | Ring-porous hardwood |
| 11 | Cupressus sempervirens | Softwood |
| 12 | Platanus orientalis | Diffuse-porous hardwood |

| VGG16 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Precision | 0.84 | 0.73 | 0.89 | 0.91 | 0.80 | 0.84 | 0.94 | 0.91 | 0.92 | 0.75 | 0.92 | 0.83 |
| Recall | 0.95 | 0.88 | 0.85 | 0.89 | 0.78 | 0.85 | 0.91 | 0.92 | 0.73 | 0.92 | 0.88 | 0.63 |
| F1-score | 0.89 | 0.80 | 0.87 | 0.90 | 0.79 | 0.84 | 0.92 | 0.91 | 0.81 | 0.83 | 0.90 | 0.72 |
| Accuracy (overall) | 0.85 | | | | | | | | | | | |
| Average time of single image | 6.2 ms | | | | | | | | | | | |

| ResNet50 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Precision | 0.98 | 0.76 | 0.94 | 0.95 | 0.95 | 0.79 | 0.87 | 0.71 | 1.00 | 1.00 | 0.95 | 0.95 |
| Recall | 0.96 | 0.98 | 0.92 | 0.93 | 0.83 | 0.88 | 0.79 | 1.00 | 0.80 | 0.94 | 0.99 | 0.91 |
| F1-score | 0.97 | 0.86 | 0.93 | 0.94 | 0.89 | 0.83 | 0.83 | 0.83 | 0.89 | 0.97 | 0.97 | 0.93 |
| Accuracy (overall) | 0.92 | | | | | | | | | | | |
| Average time of single image | 9.4 ms | | | | | | | | | | | |

| ResNet101 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Precision | 0.94 | 1.00 | 0.89 | 0.80 | 0.96 | 0.95 | 0.89 | 0.93 | 1.00 | 0.97 | 0.98 | 0.89 |
| Recall | 0.91 | 0.92 | 0.96 | 0.97 | 0.81 | 0.79 | 0.98 | 0.98 | 0.86 | 0.95 | 0.96 | 0.95 |
| F1-score | 0.92 | 0.96 | 0.92 | 0.88 | 0.88 | 0.86 | 0.93 | 0.95 | 0.92 | 0.96 | 0.97 | 0.92 |
| Accuracy (overall) | 0.92 | | | | | | | | | | | |
| Average time of single image | 17.5 ms | | | | | | | | | | | |

| AM-SPPResNet | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Precision | 0.98 | 0.98 | 0.93 | 0.99 | 0.94 | 0.98 | 0.96 | 0.97 | 0.93 | 0.95 | 0.94 | 0.97 |
| Recall | 0.99 | 0.85 | 0.96 | 0.83 | 0.97 | 0.97 | 0.99 | 0.91 | 1.00 | 0.95 | 0.98 | 0.95 |
| F1-score | 0.98 | 0.91 | 0.94 | 0.90 | 0.95 | 0.97 | 0.97 | 0.94 | 0.96 | 0.95 | 0.96 | 0.96 |
| Accuracy (overall) | 0.96 | | | | | | | | | | | |
| Average time of single image | 15.9 ms | | | | | | | | | | | |

| AM-SPPResNet + SVM | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Precision | 0.98 | 1.00 | 0.98 | 1.00 | 0.98 | 1.00 | 0.98 | 1.00 | 0.99 | 1.00 | 0.98 | 0.98 |
| Recall | 1.00 | 0.92 | 0.99 | 0.98 | 0.99 | 0.97 | 0.99 | 0.99 | 1.00 | 0.97 | 1.00 | 0.97 |
| F1-score | 0.99 | 0.96 | 0.98 | 0.99 | 0.98 | 0.98 | 0.98 | 0.99 | 0.99 | 0.98 | 0.99 | 0.97 |
| Accuracy (overall) | 0.99 | | | | | | | | | | | |
| Average time of single image | 26.3 ms | | | | | | | | | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ding, F.; Liu, Y.; Zhuang, Z.; Wang, Z. A Sawn Timber Tree Species Recognition Method Based on AM-SPPResNet. Sensors 2021, 21, 3699. https://doi.org/10.3390/s21113699
