Comparison of Depth Camera and Terrestrial Laser Scanner in Monitoring Structural Deflections

Abstract

1. Introduction

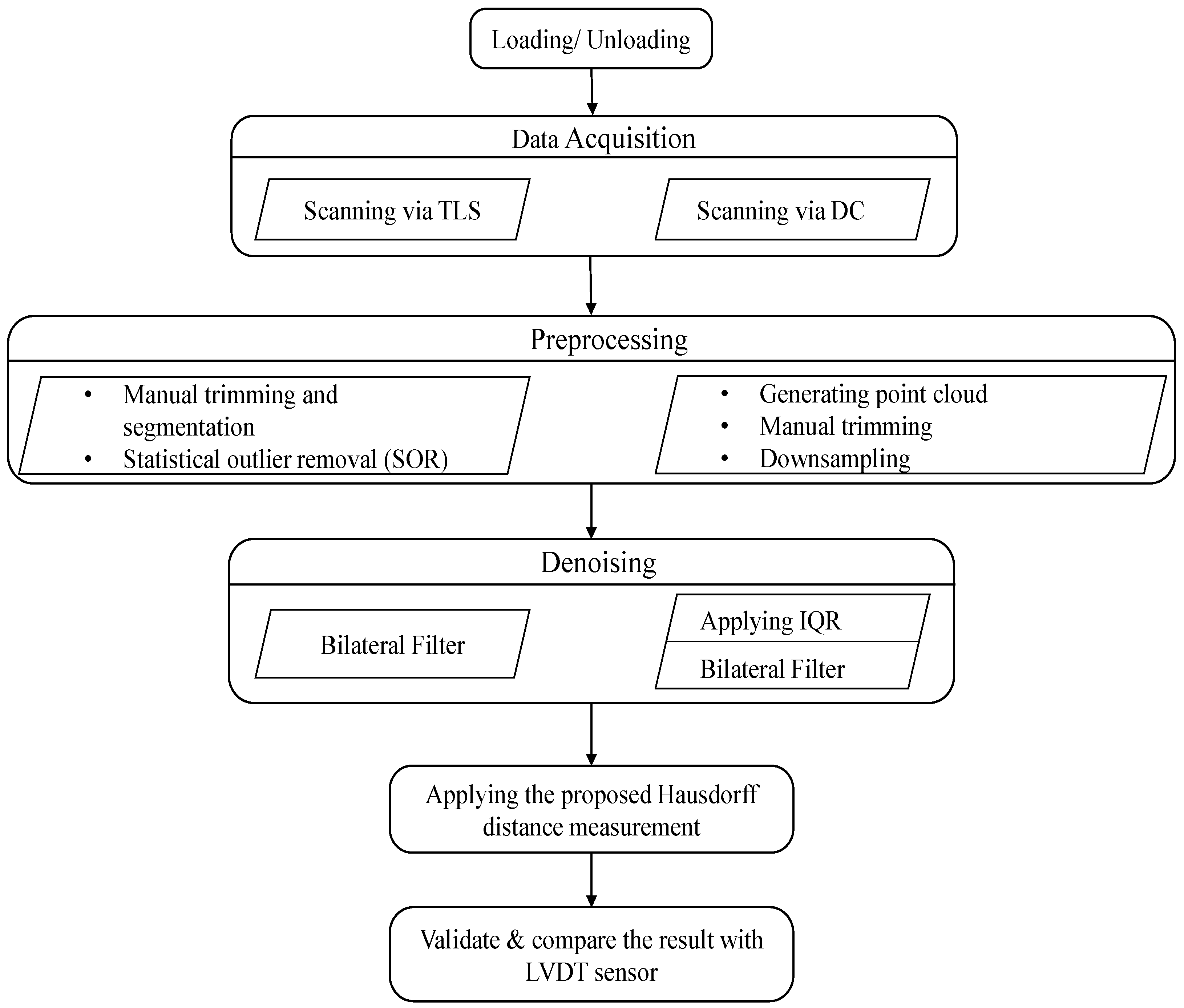

2. Data Preparation

2.1. Data Acquisition and Pre-Processing

2.2. Denoising

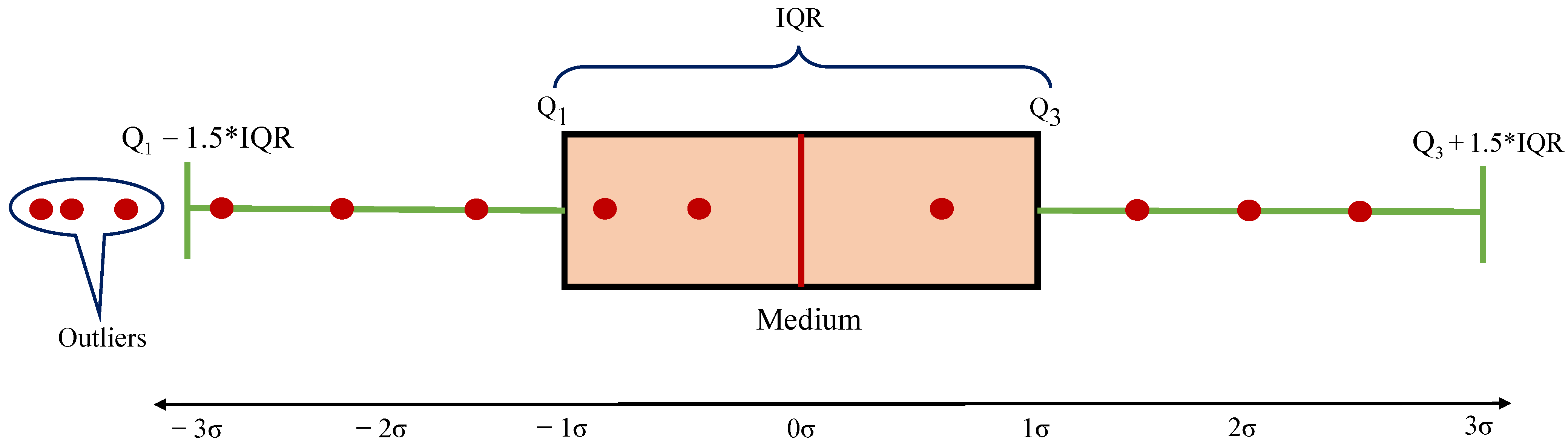

2.2.1. Interquartile Range

- Sorting the data in ascending order.

- Obtaining the median and the first and third quartiles, $Q_1$ and $Q_3$, which are used in determining the interquartile range, $Q_3 - Q_1$.

- Scaling the interquartile range by 1.5.

- Adding the scaled value to $Q_3$ and subtracting it from $Q_1$ to obtain the upper and lower bounds, respectively.

- Removing the data that fall outside these two bounds.
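The steps above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function name `iqr_filter`, the sample values, and the choice of the "inclusive" quartile method are all assumptions, and the filter is applied to a plain list of scalar values (for a point cloud, one would presumably apply it to a single coordinate such as depth).

```python
import statistics


def iqr_filter(values, scale=1.5):
    """Return the values lying inside the IQR bounds (hypothetical helper)."""
    ordered = sorted(values)  # step 1: ascending order
    # Step 2: quartiles; the "inclusive" method is an assumption, since the
    # source does not say how the quartiles are interpolated.
    q1, _median, q3 = statistics.quantiles(ordered, n=4, method="inclusive")
    margin = scale * (q3 - q1)  # step 3: scale the IQR by 1.5
    lower, upper = q1 - margin, q3 + margin  # step 4: bounds around Q1 and Q3
    # Step 5: drop everything outside the two bounds (result stays sorted).
    return [v for v in ordered if lower <= v <= upper]


# Made-up sample values with one obvious outlier.
depths_mm = [0.9, 1.1, 1.0, 1.05, 0.95, 1.02, 0.98, 5.0]
cleaned = iqr_filter(depths_mm)
print(cleaned)
```

The scale factor of 1.5 is kept as a parameter so that a stricter or looser bound can be tried without changing the function.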

2.2.2. Bilateral Filter

- $p$ is the noisy point from TLS or DC.

- $p'$ is the denoised point of $p$.

- $n_p$ is the normal vector of point $p$.

- $w_d$ is the 2D Gaussian filter for smoothing.

- $w_n$ is the 1D Gaussian weight function for preserving edge features.

- $q$ is a neighborhood point within the distance range, $r$, from $p$.

- $g = q - p$ is the geometric distance between $p$ and $q$.

- $\langle n_p, g \rangle$ is the inner product between the normal of point $p$ and the geometric distance, $g$.

- $\sigma_d$ and $\sigma_n$ are parameters defined as the standard deviation of the neighborhood distance of point $p$ and a factor of the projection of the distance vector from point $p$ to its neighborhood point on the normal vector, $n_p$, of point $p$, respectively.
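For orientation, the quantities listed above fit the bilateral update commonly given for point clouds, for example by Digne and de Franchis [26]. The form below is a sketch under that assumption, not a transcription of this paper's own equations: the symbols $N_p$ (the neighborhood of $p$ within radius $r$) and $\delta_p$ (the scalar offset along the normal) are introduced here for illustration, and $p'$, $n_p$, $w_d$, $w_n$, $\sigma_d$, $\sigma_n$ denote the denoised point, the normal, the two Gaussian weights, and their parameters.

```latex
\begin{aligned}
p' &= p + \delta_p \, n_p, \\
\delta_p &= \frac{\sum_{q \in N_p} w_d\!\left(\lVert q - p \rVert\right)\,
                 w_n\!\left(\langle n_p, q - p \rangle\right)\,
                 \langle n_p, q - p \rangle}
                {\sum_{q \in N_p} w_d\!\left(\lVert q - p \rVert\right)\,
                 w_n\!\left(\langle n_p, q - p \rangle\right)}, \\
w_d(x) &= \exp\!\left(-\frac{x^2}{2\sigma_d^2}\right), \qquad
w_n(x) = \exp\!\left(-\frac{x^2}{2\sigma_n^2}\right).
\end{aligned}
```

In this form the point moves only along its estimated normal, which is why planar regions are smoothed while sharp edges, where neighbors have large normal offsets and hence small $w_n$, are largely preserved.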

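| Algorithm 1. Bilateral denoising |
|---|
| Input: points from TLS and/or DC obtained from deflected and/or undeflected beam, $p$; neighborhood radius, $r$; parameters $\sigma_d$ and $\sigma_n$ |
| Output: denoised point $p'$ |
| $N_p$ ← neighborhood of a selected point, $p$, within radius $r$ of its surroundings. |
| Evaluate the unit normal vector, $n_p$, to the regression plane fitted to $N_p$ |
| For each $q \in N_p$ do: accumulate the weight $w = w_d(\lVert q - p \rVert)\, w_n(\langle n_p, q - p \rangle)$ and the weighted offset $w \, \langle n_p, q - p \rangle$ |
| End |
| $p'$ ← $p + \delta_p \, n_p$, where $\delta_p$ is the sum of the weighted offsets divided by the sum of the weights |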
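A possible NumPy rendering of the bilateral denoising procedure is sketched below. It follows the standard point-cloud bilateral filter (as in Digne and de Franchis [26]) rather than the authors' own code, which is not available here. The function name `bilateral_denoise_point`, the parameter names, the SVD-based plane fit, and the synthetic test data are all assumptions made for illustration.

```python
import numpy as np


def bilateral_denoise_point(p, cloud, r, sigma_d, sigma_n):
    """Move point p along its local normal by a bilateral-weighted offset.

    Hypothetical helper: p is a 3-vector, cloud is an (N, 3) array.
    """
    p = np.asarray(p, dtype=float)
    cloud = np.asarray(cloud, dtype=float)

    offsets = cloud - p                      # vectors q - p
    dists = np.linalg.norm(offsets, axis=1)  # geometric distances
    mask = dists <= r                        # neighborhood within radius r
    if mask.sum() < 3:                       # too few points to fit a plane
        return p.copy()

    # Unit normal of the regression plane: the right singular vector that
    # belongs to the smallest singular value of the centered neighborhood.
    nbrs = cloud[mask]
    _, _, vt = np.linalg.svd(nbrs - nbrs.mean(axis=0), full_matrices=False)
    normal = vt[-1]

    d_d = dists[mask]                        # distance term
    d_n = offsets[mask] @ normal             # offset along the normal
    w = np.exp(-d_d**2 / (2 * sigma_d**2)) * np.exp(-d_n**2 / (2 * sigma_n**2))
    total = w.sum()
    if total == 0.0:                         # all weights underflowed
        return p.copy()
    return p + (np.sum(w * d_n) / total) * normal


# Synthetic check: a flat 5 x 5 patch at z = 0 and one point lifted by 0.05.
xs = np.linspace(0.0, 1.0, 5)
plane = np.array([(x, y, 0.0) for x in xs for y in xs])
noisy = np.array([0.5, 0.5, 0.05])
denoised = bilateral_denoise_point(noisy, plane, r=1.0, sigma_d=0.5, sigma_n=0.1)
print(denoised)
```

The sign of the fitted normal is arbitrary, but the result does not depend on it because the offset along the normal changes sign together with the normal. To filter a whole cloud, the function would be called once per point, ideally with a spatial index instead of the brute-force distance computation used here.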

3. Experimental Study

3.1. Instrumentation

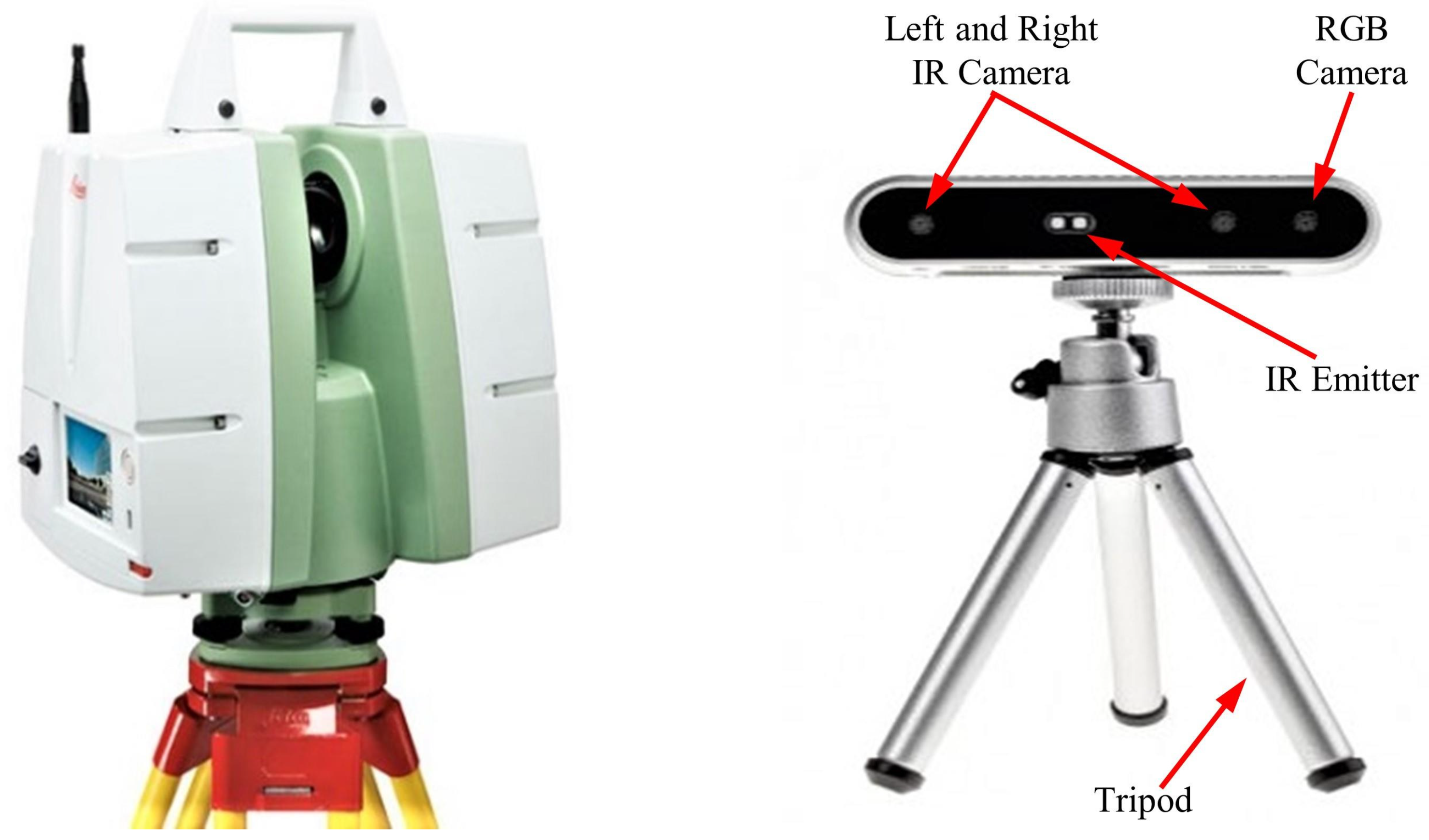

3.1.1. Depth Camera

3.1.2. Terrestrial Laser Scanning

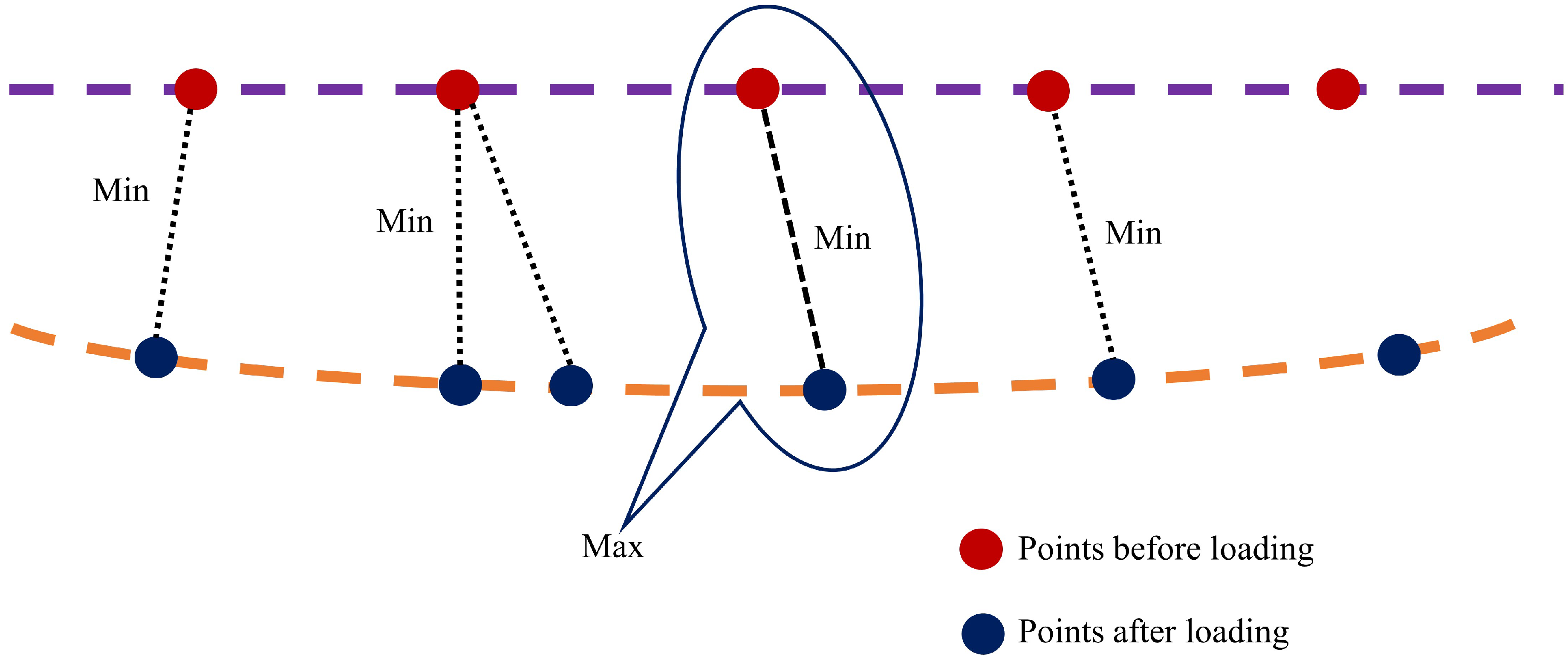

3.2. Proposed Approach for Estimating Structural Deflection Using TLS and DC

Hausdorff Distance

3.3. Experimental Design

4. Results and Discussion

4.1. Depth Camera Data Processing

- The D415 depth camera was fixed on a level horizontal surface directly beneath the center of the span, as shown in Figure 5.

- All scans were conducted from the same position. Figure 6 depicts the data acquired using the Intel RealSense D415. The data were converted from the .bin file format first into .pts and then into .txt, which made them easy to interpret in the CloudCompare and MATLAB software packages. The necessary pre-processing steps, namely removing unwanted data, downsampling, statistical outlier removal (SOR), and manual cropping of the farthest outliers, were performed in CloudCompare using the .pts file format.

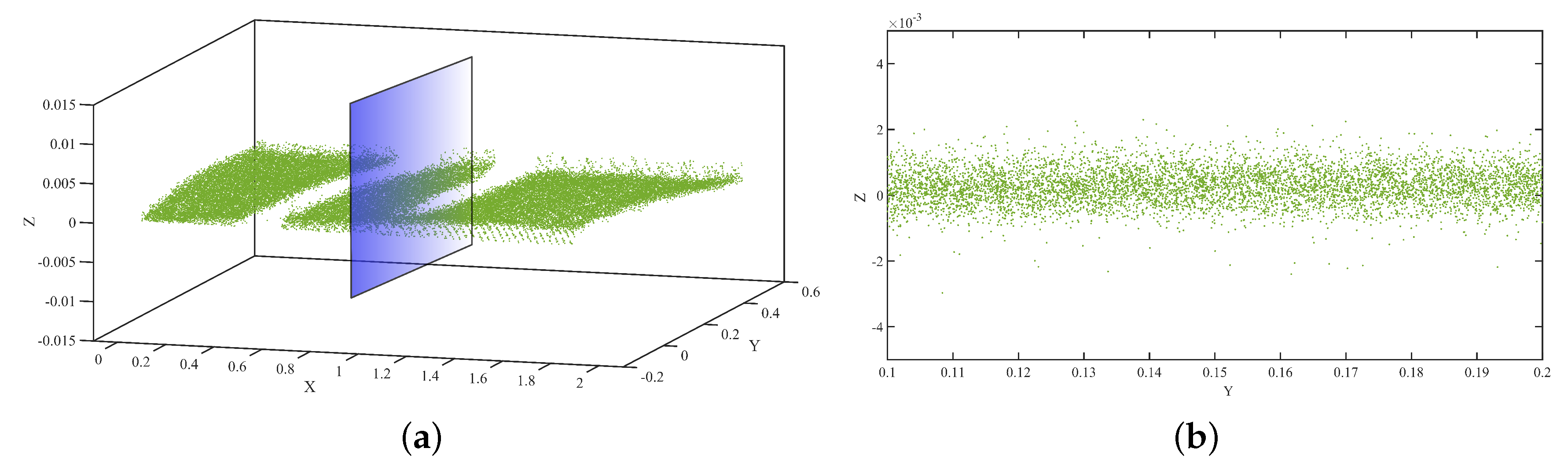

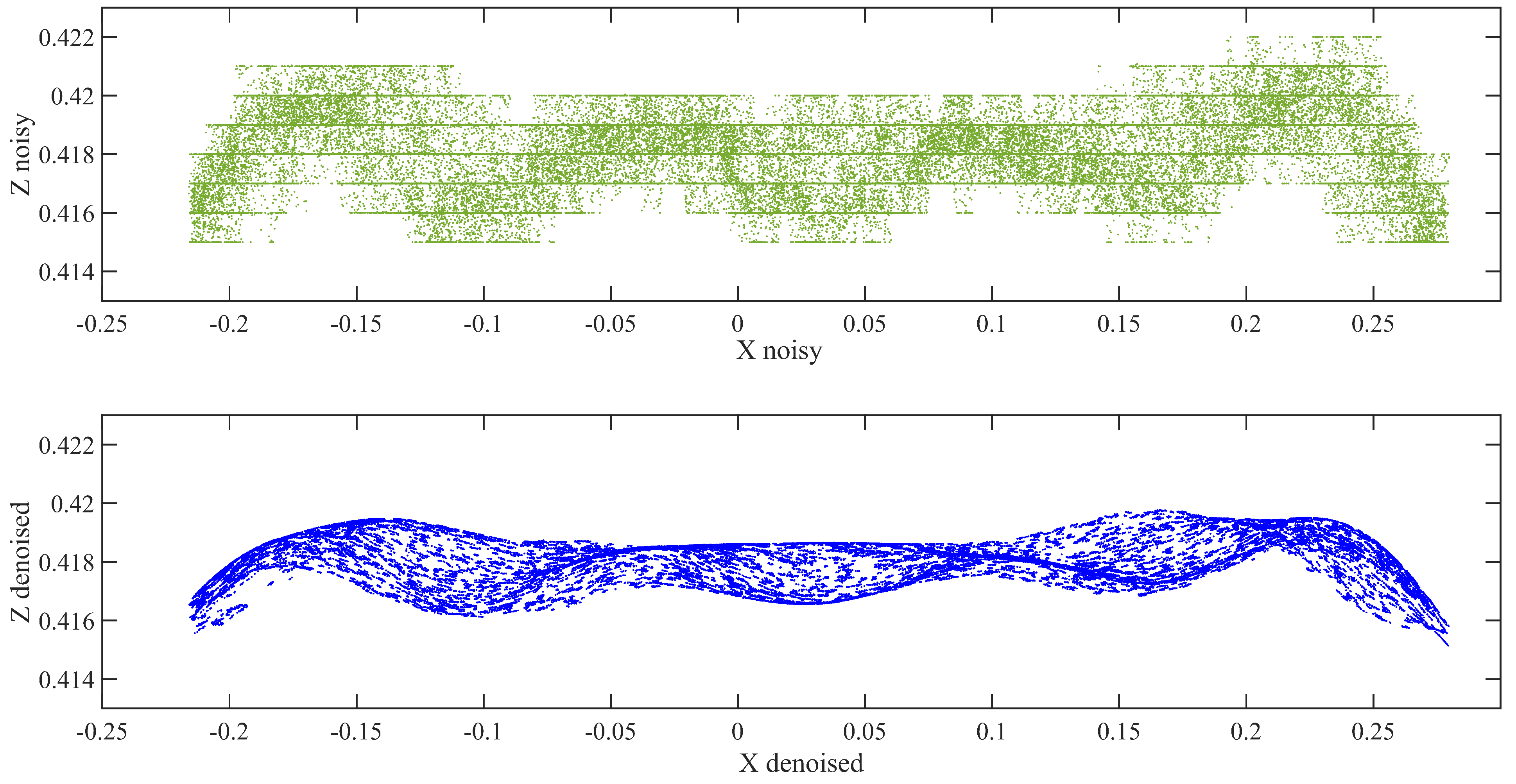

- Because DC data are highly sensitive to inherent noise, noise treatment was given priority. The IQR score was first used to eliminate outliers, and bilateral filtering was then applied following the pseudocode described in Section 2.2.2. The resulting point cloud of the scene after eliminating the outliers is shown in Figure 7.

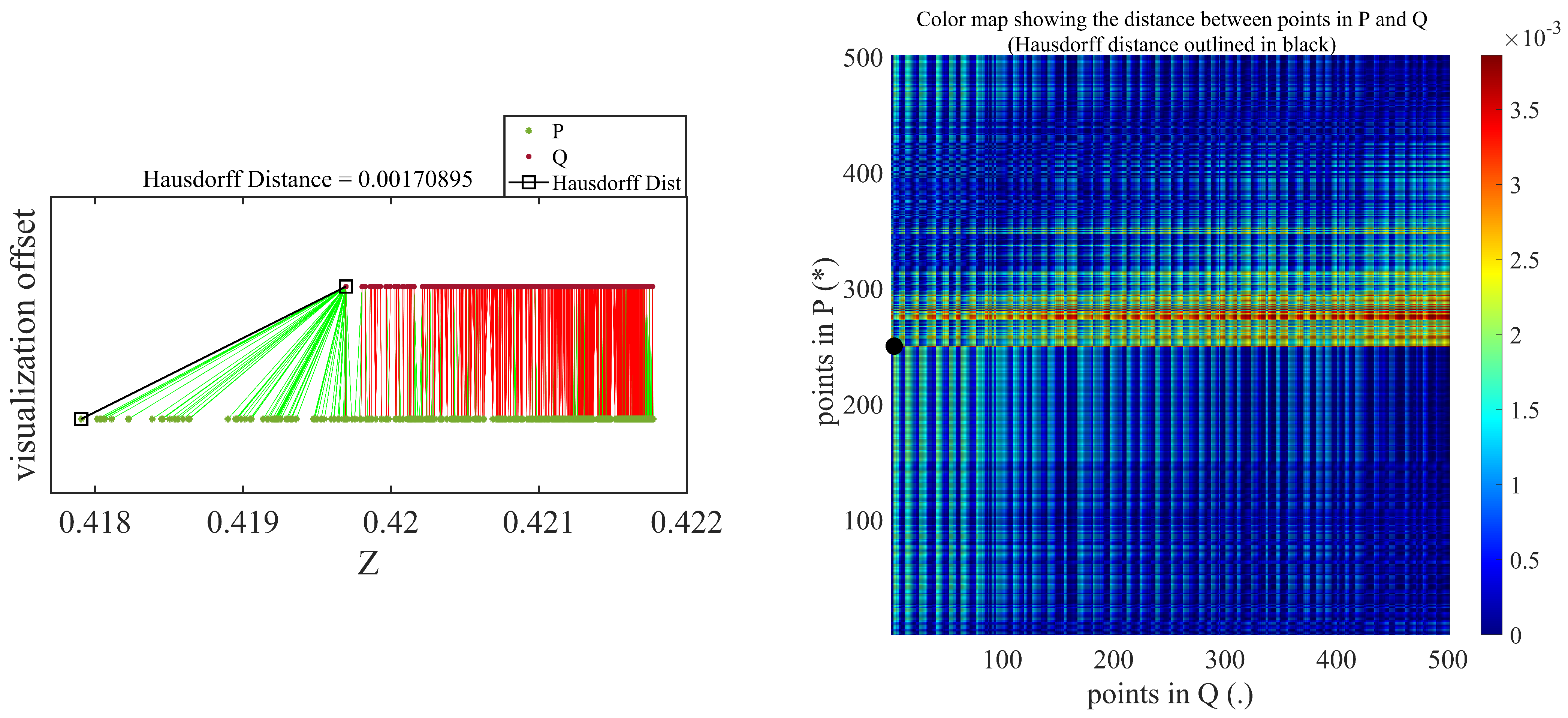

- The proposed Hausdorff distance measurement algorithm was executed using the points obtained from the loading and unloading scenarios per Equation (3).

- Once we obtained the Hausdorff distance for each loading scenario, we compared it with the LVDT output.
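As an illustration of the distance computation described in this list, the sketch below evaluates the symmetric Hausdorff distance between an unloaded and a loaded point set by brute force. It uses the textbook definition (the larger of the two directed distances), which may differ in detail from the paper's Equation (3). The function names and the five-point toy beam are hypothetical.

```python
import math


def directed_hausdorff(a, b):
    """Largest distance from a point of a to its nearest point of b."""
    return max(min(math.dist(pa, pb) for pb in b) for pa in a)


def hausdorff(a, b):
    """Symmetric Hausdorff distance between two point sets."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))


# Toy beam: five points along the span; the loaded shape sags by up to 2 units.
unloaded = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0),
            (3.0, 0.0, 0.0), (4.0, 0.0, 0.0)]
loaded = [(0.0, 0.0, 0.0), (1.0, 0.0, -1.0), (2.0, 0.0, -2.0),
          (3.0, 0.0, -1.0), (4.0, 0.0, 0.0)]

deflection = hausdorff(unloaded, loaded)
print(deflection)
```

In this toy case the Hausdorff distance coincides with the largest vertical sag because the mid-span point of the loaded set has no closer neighbor in the unloaded set. The brute-force search is quadratic in the number of points, so for real scans an accelerated algorithm such as those in [37,38] would be the natural choice.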

4.2. Terrestrial Laser Scanning Data Processing

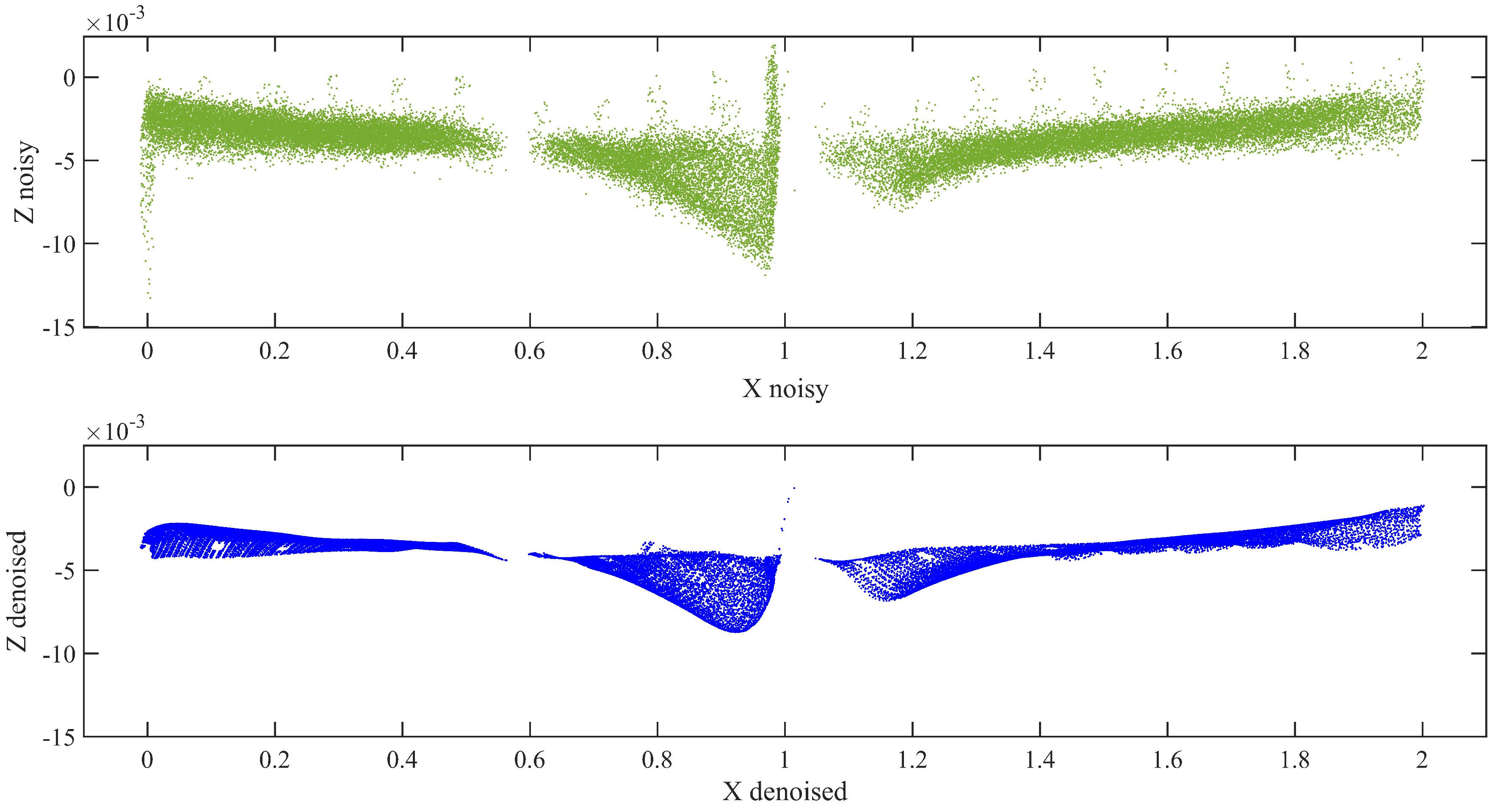

- The Leica C5 scanner was placed 2.5 m away from the specimen during the laboratory experiment. The incident angle and range of the scanner were selected based on the factors affecting the accuracy of the data [39]. Scanning was started immediately after the required load had been applied and the LVDT reading for the nominal deflection had been attained. The raw data acquired from the scanner, shown in Figure 8, were converted into the .pts or .xyz file format for easy analysis in CloudCompare.

- The necessary pre-processing steps, including SOR, manual trimming, and segmentation, were applied to the raw data to decrease noise and increase accuracy. In this approach, the bottom flange was the most effective part of the section for determining the deflection. Therefore, the aim of the segmentation was to extract the bottom flange.

- As in the analysis of the DC data, bilateral filtering was also applied to the TLS data for thorough noise removal, as shown in Figure 9.

- Once we obtained a clean, representative point cloud of the specimen, we applied the Hausdorff distance approach to the loading and unloading data separately. We then compared and validated these results against those obtained using the DC and LVDT sensors.

4.3. Validated Result and Comparison

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 3D | Three-Dimensional |
| LIDAR | Light Detection and Ranging |
| TLS | Terrestrial Laser Scanning |
| DC | Depth Camera |
| LVDT | Linear Variable Differential Transformer |
| IQR | Interquartile Range |
| RGB | Red Green Blue |
| ToF | Time of Flight |
| UTM | Universal Testing Machine |

References

1. Brownjohn, J.M. Structural health monitoring of civil infrastructure. Philos. Trans. R. Soc. Math. Phys. Eng. Sci. 2007, 365, 589–622.

2. Li, H.N.; Li, D.S.; Song, G.B. Recent applications of fiber optic sensors to health monitoring in civil engineering. Eng. Struct. 2004, 26, 1647–1657.

3. Lichti, D.D.; Jamtsho, S.; El-Halawany, S.I.; Lahamy, H.; Chow, J.; Chan, T.O.; El-Badry, M. Structural deflection measurement with a range camera. J. Surv. Eng. 2012, 138, 66–76.

4. Hieu, N.; Wang, Z.; Jones, P.; Zhao, B. 3D shape, deformation, and vibration measurements using infrared Kinect sensors and digital image correlation. Appl. Opt. 2017, 56, 9030–9037.

5. Dhakal, D.R.; Neupane, K.; Thapa, C.; Ramanjaneyulu, G.V. Different techniques of structural health monitoring. Res. Dev. IJCSEIERD 2013, 3, 55–66.

6. Kim, I.; Jeon, H.; Baek, S.; Hong, W.; Jung, H. Application of crack identification techniques for an aging concrete bridge inspection using an unmanned aerial vehicle. Sensors 2018, 18, 1881.

7. Seon, P.H.; Lee, H.M.; Adeli, H.; Lee, I. A new approach for health monitoring of structures: Terrestrial laser scanning. Comput. Civ. Infrastruct. Eng. 2007, 22, 19–30.

8. Shen, Y.; Wu, L.; Wang, Z. Identification of inclined buildings from aerial lidar data for disaster management. In Proceedings of the 2010 18th International Conference on Geoinformatics, Beijing, China, 18–20 June 2010; pp. 1–5.

9. Chinrungrueng, J.; Sunantachaikul, U.; Triamlumlerd, S. Smart parking: An application of optical wireless sensor network. In Proceedings of the 2007 International Symposium on Applications and the Internet Workshops, Hiroshima, Japan, 15–19 January 2007; p. 66.

10. Daponte, P.; De Vito, L.; Mazzilli, G.; Picariello, F.; Rapuano, S.; Riccio, M. Metrology for drone and drone for metrology: Measurement systems on small civilian drones. In Proceedings of the 2015 IEEE Metrology for Aerospace (MetroAeroSpace), Benevento, Italy, 4–5 June 2015; pp. 306–311.

11. Giovanna, S.; Trebeschi, M.; Docchio, F. State-of-the-art and applications of 3D imaging sensors in industry, cultural heritage, medicine, and criminal investigation. Sensors 2009, 9, 568–601.

12. Qi, X.; Lichti, D.D.; El-Badry, M.; Chan, T.O.; El-Halawany, S.I.; Lahamy, H.; Steward, J. Structural dynamic deflection measurement with range cameras. Photogramm. Rec. 2014, 29, 89–107.

13. Kim, K.; Kim, J. Dynamic displacement measurement of a vibratory object using a terrestrial laser scanner. Meas. Sci. Technol. 2015, 26, 045002.

14. Qi, X.; Lichti, D.; El-Badry, M.; Chow, J.; Ang, K. Vertical dynamic deflection measurement in concrete beams with the Microsoft Kinect. Sensors 2014, 14, 3293–3307.

15. Cabaleiro, M.; Riveiro, B.; Arias, P.; Caamaño, J.C. Algorithm for beam deformation modeling from LiDAR data. Measurement 2015, 76, 20–31.

16. Gordon, S.J.; Lichti, D.D. Modeling terrestrial laser scanner data for precise structural deformation measurement. J. Surv. Eng. 2007, 133, 72–80.

17. Maru, M.B.; Lee, D.; Cha, G.; Park, S. Beam Deflection Monitoring Based on a Genetic Algorithm Using Lidar Data. Sensors 2020, 20, 2144.

18. Sayyar-Roudsari, S.; Hamoush, S.A.; Szeto, T.M.V.; Yi, S. Using a 3D Computer Vision System for Inspection of Reinforced Concrete Structures. In Proceedings of the Science and Information Conference, Tokyo, Japan, 16–19 March 2019; Springer: Cham, Switzerland, 2019; pp. 608–618.

19. Chen, Y.L.; Abdelbarr, M.; Jahanshahi, M.R.; Masri, S.F. Color and depth data fusion using an RGB-D sensor for inexpensive and contactless dynamic displacement-field measurement. Struct. Control. Health Monit. 2017, 24, e2000.

20. Kim, K.; Sohn, H. Dynamic displacement estimation by fusing LDV and LiDAR measurements via smoothing based Kalman filtering. Mech. Syst. Signal Process. 2017, 82, 339–355.

21. Wang, Z.; Yang, C.; Ju, Z.; Li, Z.; Su, C. Preprocessing and transmission for 3d point cloud data. In Proceedings of the International Conference on Intelligent Robotics and Applications, Wuhan, China, 16–18 August 2017; Springer: Cham, Switzerland, 2017; pp. 438–449.

22. Salgado, C.M.; Azevedo, C.; Proença, H.; Vieira, S.M. Noise versus outliers. In Secondary Analysis of Electronic Health Records; Springer: Cham, Switzerland, 2016; pp. 163–183.

23. Toshniwal, D.; Yadav, S. Adaptive outlier detection in streaming time series. In Proceedings of the International Conference on Asia Agriculture and Animal, ICAAA, Hong Kong, China, 2–3 July 2011; Volume 13, pp. 186–192.

24. Tomasi, C.; Manduchi, R. Bilateral filtering for gray and color images. In Proceedings of the Sixth International Conference on Computer Vision (IEEE Cat. No. 98CH36271), Bombay, India, 7 January 1998; pp. 839–846.

25. Fleishman, S.; Drori, I.; Cohen-Or, D. Bilateral mesh denoising. In ACM SIGGRAPH 2003 Papers; Association for Computing Machinery: New York, NY, USA, 2003; pp. 950–953.

26. Digne, J.; Franchis, C.D. The bilateral filter for point clouds. Image Process. Online 2017, 7, 278–287.

27. Wang, L.; Yuan, B.; Chen, J. Robust fuzzy c-means and bilateral point clouds denoising. In Proceedings of the 2006 8th International Conference on Signal Processing, Beijing, China, 16–20 November 2006; Volume 2.

28. Merklinger, H.M. Focusing the View Camera; Seaboard Printing Limited: Dartmouth, NS, Canada, 1996.

29. Intel® RealSense™. RealSense D400 Series User Manual. 2018. Available online: https://www.intel.com/content/dam/support/us/en/documents/emerging-technologies/intel-realsense-technology/Intel-RealSense-Viewer-User-Guide.pdf (accessed on 30 December 2020).

30. Lemmens, M. Terrestrial laser scanning. In Geo-Information; Springer: Dordrecht, The Netherlands, 2011; pp. 101–121.

31. Vosselman, G.; Maas, H. Airborne and Terrestrial Laser Scanning; CRC Press: Boca Raton, FL, USA, 2010.

32. Nuttens, T.; Wulf, A.D.; Deruyter, G.; Stal, C.; Backer, H.D.; Schotte, K. Application of laser scanning for deformation measurements: A comparison between different types of scanning instruments. In Proceedings of the FIG Working Week, Rome, Italy, 6–10 May 2012.

33. Romsek, B.R. Terrestrial Laser Scanning: Comparison of Time-of-Flight and Phase Based Measuring Systems. Master’s Thesis, Master of Science in Engineering, Purdue University, West Lafayette, IN, USA, 2008.

34. Fröhlich, C.; Mettenleiter, M. Terrestrial laser scanning – new perspectives in 3D surveying. Int. Arch. Photogramm. Remote. Sens. Spat. Inf. Sci. 2004, 36, W2.

35. Ullrich, A.; Pfennigbauer, M. Echo digitization and waveform analysis in airborne and terrestrial laser scanning. In Proceedings of the Photogrammetric Week, Stuttgart, Germany, 5–9 September 2011; Volume 11.

36. Geosystems, Leica. Leica ScanStation C10/C5 User Manual. 2011. Available online: https://www.google.com/search?q=Geosystems%2C+Leica.+Leica+ScanStation+C10%2FC5+User+Manual&oq=Geosystems%2C+Leica.+Leica+ScanStation+C10%2FC5+User+Manual&aqs=chrome..69i57.550j0j9&sourceid=chrome&ie=UTF-8# (accessed on 30 December 2020).

37. Nutanong, S.; Jacox, E.H.; Samet, H. An incremental Hausdorff distance calculation algorithm. Proc. VLDB Endow. 2011, 4, 506–517.

38. Taha, A.A.; Hanbury, A. An efficient algorithm for calculating the exact Hausdorff distance. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 2153–2163.

39. Bolkas, D.; Martinez, A. Effect of target color and scanning geometry on terrestrial LiDAR point-cloud noise and plane fitting. J. Appl. Geod. 2018, 12, 109–127.

| Parameter | Terrestrial Laser Scanner | Depth Camera |
|---|---|---|
| Brand | Leica | Intel RealSense |
| Model | C5 | D415 |
| Range | 300 m @ 90%; 134 m @ 18% albedo (minimum range 0.1 m) | ~10 m |
| Field of View | 360° × 270° (H × V) | 69.4° × 42.5° × 77° (H × V × D) |
| Range measurement principle | Pulsed (Time of Flight) | Active IR Stereo |
| Scan rate | 50,000 points/s | - |
| Resolution | - | 1280 × 720 |
| Precision | 2 mm | - |
| Baseline | - | 55 mm |
| Point spacing | Fully selectable horizontal and vertical; <1 mm minimum spacing, through full range; single point dwell capacity. | - |
| IR Projector | - | Standard |
| Camera | Auto adjusting, integrated high-resolution digital camera with zoom video | Full HD RGB camera calibrated and synchronized with depth data |

| Nominal Deflection (mm) | LVDT 1.00 m (mm) | Loading (kN) | TLS Noised (mm) | TLS Denoised (mm) | TLS Error Noised (%) | TLS Error Denoised (%) | DC Noised (mm) | DC Denoised (mm) | DC Error Noised (%) | DC Error Denoised (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| Unloading | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 1 | −1.005 | 57.33 | −1.286 | −1.058 | 27.96 | 5.27 | −0.709 | −0.795 | 29.45 | 20.90 |
| 2 | −2.014 | 200.85 | −2.373 | −2.087 | 17.83 | 3.62 | −1.714 | −1.791 | 14.90 | 11.07 |
| 3 | −3.022 | 380.85 | −2.970 | −2.988 | 1.72 | 1.13 | −2.711 | −3.043 | 10.29 | 0.69 |
| 4 | −4.029 | 480.84 | −4.174 | −3.967 | 3.60 | 1.54 | −4.310 | −4.129 | 6.97 | 2.48 |
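The percentage-error columns in the table above appear consistent with the absolute difference between a sensor reading and the LVDT reading, divided by the magnitude of the LVDT reading. The short sketch below recomputes a few entries under that assumption. The function name `percent_error` is hypothetical, and the formula is inferred from the tabulated numbers rather than quoted from the paper.

```python
def percent_error(measured_mm, reference_mm):
    """Absolute error relative to the reference reading, in percent."""
    return abs(measured_mm - reference_mm) / abs(reference_mm) * 100.0


# Nominal deflection of 1 mm: the LVDT reads -1.005 mm.
tls_noised = percent_error(-1.286, -1.005)
tls_denoised = percent_error(-1.058, -1.005)
dc_denoised = percent_error(-0.795, -1.005)

print(f"TLS noised:   {tls_noised:.2f} %")
print(f"TLS denoised: {tls_denoised:.2f} %")
print(f"DC denoised:  {dc_denoised:.2f} %")
```

Because the error is relative to the LVDT reading, the same absolute discrepancy weighs more heavily at small deflections, which is one reason the percentages at 1 mm are the largest in the table.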

| Nominal Deflection (mm) | LVDT 1.00 m (mm) | Loading (kN) | TLS_Denoised Case 1 | TLS_Denoised Case 2 | TLS_Denoised Case 3 | TLS_Denoised Case 4 | TLS_Denoised Case 5 | TLS_Denoised Case 6 | TLS_Denoised Case 7 | TLS_Denoised Case 8 | TLS_Denoised Case 9 | DC_Denoised Case 1 | DC_Denoised Case 2 | DC_Denoised Case 3 | DC_Denoised Case 4 | DC_Denoised Case 5 | DC_Denoised Case 6 | DC_Denoised Case 7 | DC_Denoised Case 8 | DC_Denoised Case 9 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Unloading | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 4 | −4.029 | 480.84 | 3.82 | 3.79 | 3.98 | 4.07 | 3.98 | 4.04 | 3.88 | 4.02 | 4.13 | 4.43 | 4.29 | 4.27 | 4.13 | 4.07 | 4.08 | 3.98 | 3.99 | 3.92 |
| Average | | | −3.9667 (TLS_Denoised, cases 1–9) | | | | | | | | | −4.1295 (DC_Denoised, cases 1–9) | | | | | | | | |
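The averages in the last row can be checked by taking the mean of the nine tabulated cases for each sensor. The sketch below does this with the rounded values printed in the table, so the results agree with the reported averages only to about the third decimal place. The variable names are hypothetical, and the case values are entered as magnitudes, as printed in the table, whereas the reported averages carry a negative sign for downward deflection.

```python
import statistics

# Denoised deflection magnitudes for the nine cases at 4 mm nominal deflection.
tls_cases = [3.82, 3.79, 3.98, 4.07, 3.98, 4.04, 3.88, 4.02, 4.13]
dc_cases = [4.43, 4.29, 4.27, 4.13, 4.07, 4.08, 3.98, 3.99, 3.92]
lvdt_mm = 4.029  # magnitude of the LVDT reading for this load step

tls_mean = statistics.fmean(tls_cases)
dc_mean = statistics.fmean(dc_cases)

for name, mean in (("TLS", tls_mean), ("DC", dc_mean)):
    error_pct = abs(mean - lvdt_mm) / lvdt_mm * 100.0
    print(f"{name}: mean = {mean:.4f} mm, error vs LVDT = {error_pct:.2f} %")
```

Averaging over repeated cases reduces the influence of any single scan, which matches how the denoised 4 mm entries of the previous table relate to the individual cases listed here.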

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Maru, M.B.; Lee, D.; Tola, K.D.; Park, S. Comparison of Depth Camera and Terrestrial Laser Scanner in Monitoring Structural Deflections. Sensors 2021, 21, 201. https://doi.org/10.3390/s21010201

Maru MB, Lee D, Tola KD, Park S. Comparison of Depth Camera and Terrestrial Laser Scanner in Monitoring Structural Deflections. Sensors. 2021; 21(1):201. https://doi.org/10.3390/s21010201

Chicago/Turabian Style: Maru, Michael Bekele, Donghwan Lee, Kassahun Demissie Tola, and Seunghee Park. 2021. "Comparison of Depth Camera and Terrestrial Laser Scanner in Monitoring Structural Deflections" Sensors 21, no. 1: 201. https://doi.org/10.3390/s21010201

APA Style: Maru, M. B., Lee, D., Tola, K. D., & Park, S. (2021). Comparison of Depth Camera and Terrestrial Laser Scanner in Monitoring Structural Deflections. Sensors, 21(1), 201. https://doi.org/10.3390/s21010201