Investigating an Integrated Sensor Fusion System for Mental Fatigue Assessment for Demanding Maritime Operations

Abstract

1. Introduction

2. Materials and Methods

2.1. Sensor Framework

- Phase 1: data acquisition. A set of disparate sensors collects physiological data from the operator, and a micro-controller centralizes the resulting data streams. The data are also preprocessed in this phase to remove noise and unwanted artifacts that could disturb the fusion and classification processes.

- Phase 2: mental fatigue assessment. A sensor fusion algorithm is applied to the preprocessed data. It fuses the disparate data channels and outputs a mental fatigue (MF) indicator. This indicator is recorded and can be used to evaluate the risk level of the operation.

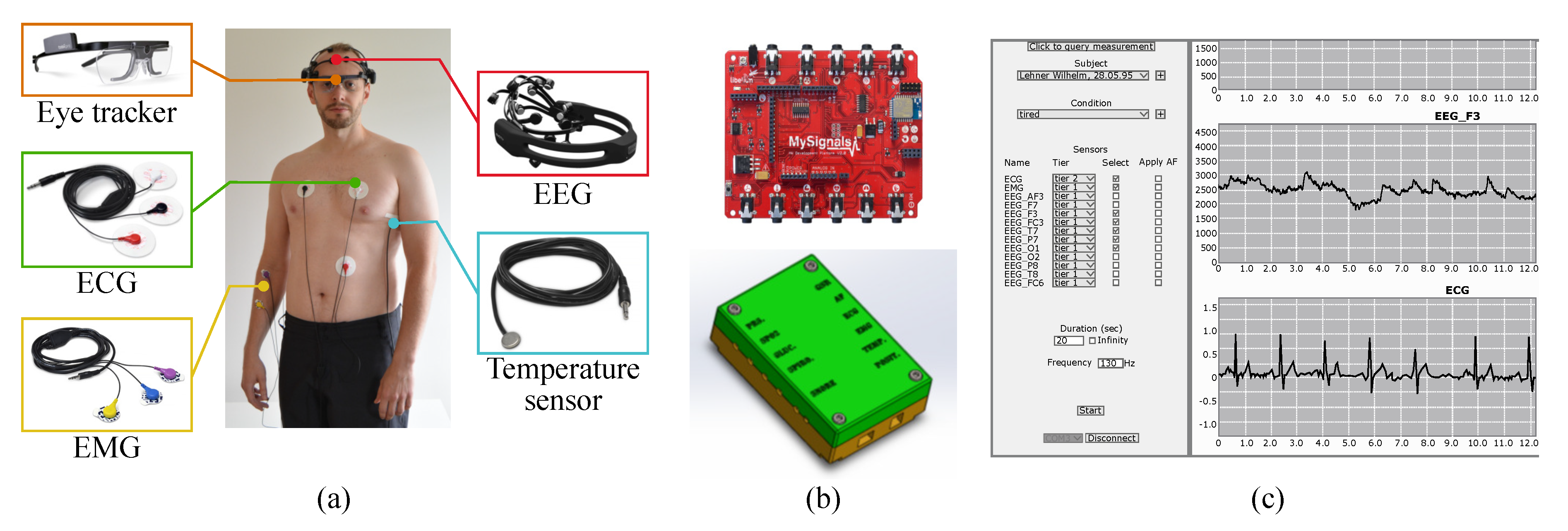
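The centralization and preprocessing step of Phase 1 can be illustrated with a minimal sketch. It assumes that each sensor delivers timestamped samples at its own rate, that the channels are brought onto a shared time base by linear interpolation, and that noise is reduced with a simple moving average. None of these choices is specified in this section: the function names, sampling rates, and filter width below are hypothetical placeholders, not the filters used in the study.

```python
import numpy as np

def resample(t, x, fs_out, duration_s):
    """Linearly interpolate one channel onto a uniform time base (assumed approach)."""
    n_out = int(round(duration_s * fs_out))
    t_out = np.arange(n_out) / fs_out
    return np.interp(t_out, t, x)

def smooth(x, width=5):
    """Moving-average filter with edge padding; a stand-in for the real denoising."""
    if width % 2 == 0:
        raise ValueError("width must be odd so the output keeps the input length")
    padded = np.pad(x, width // 2, mode="edge")
    return np.convolve(padded, np.ones(width) / width, mode="valid")

def centralize(channels, fs_out, duration_s):
    """channels: dict name -> (timestamps, samples). Returns (names, channels x samples)."""
    names = sorted(channels)
    rows = [smooth(resample(*channels[name], fs_out, duration_s)) for name in names]
    return names, np.vstack(rows)

# Hypothetical example: a 100 Hz ECG-like signal and a 2 Hz temperature signal.
rng = np.random.default_rng(0)
t_ecg = np.arange(1000) / 100.0
t_temp = np.arange(20) / 2.0
channels = {
    "ecg": (t_ecg, np.sin(2 * np.pi * 1.2 * t_ecg) + 0.1 * rng.standard_normal(t_ecg.size)),
    "temp": (t_temp, 36.5 + 0.01 * t_temp),
}
names, data = centralize(channels, fs_out=50, duration_s=10)
print(names, data.shape)
```

The result is a single array with one row per channel, a convenient form for the fusion step of Phase 2.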

2.2. Sensors Setup

2.2.1. ECG

2.2.2. EMG

2.2.3. Body Temperature Sensor

2.2.4. EEG

2.2.5. Eye Tracker

2.2.6. Data Centralization

2.3. Data Collection and Preprocessing

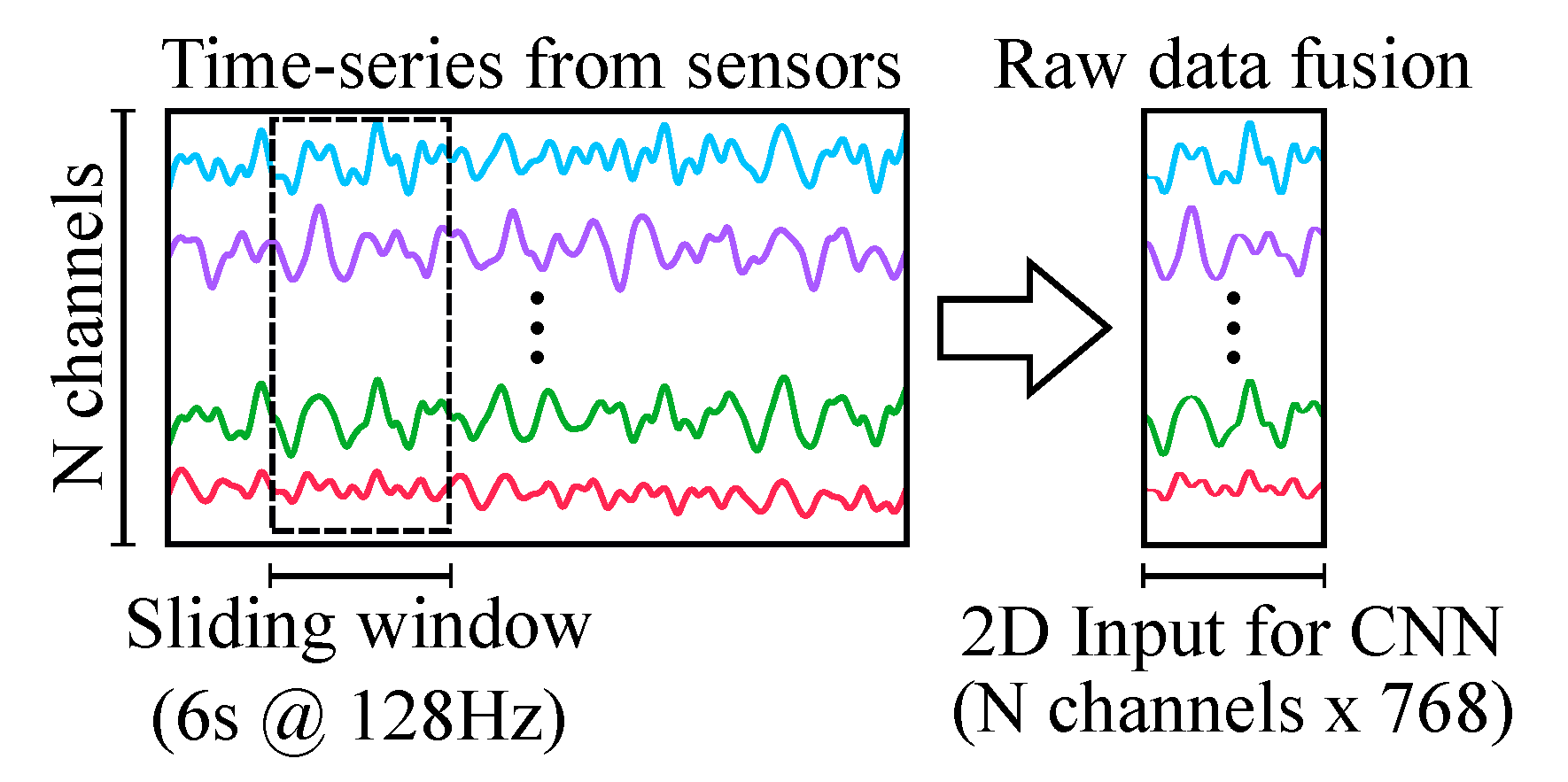

2.4. Sensor Fusion

2.5. Mental Fatigue Assessment

2.6. Experimental Setup

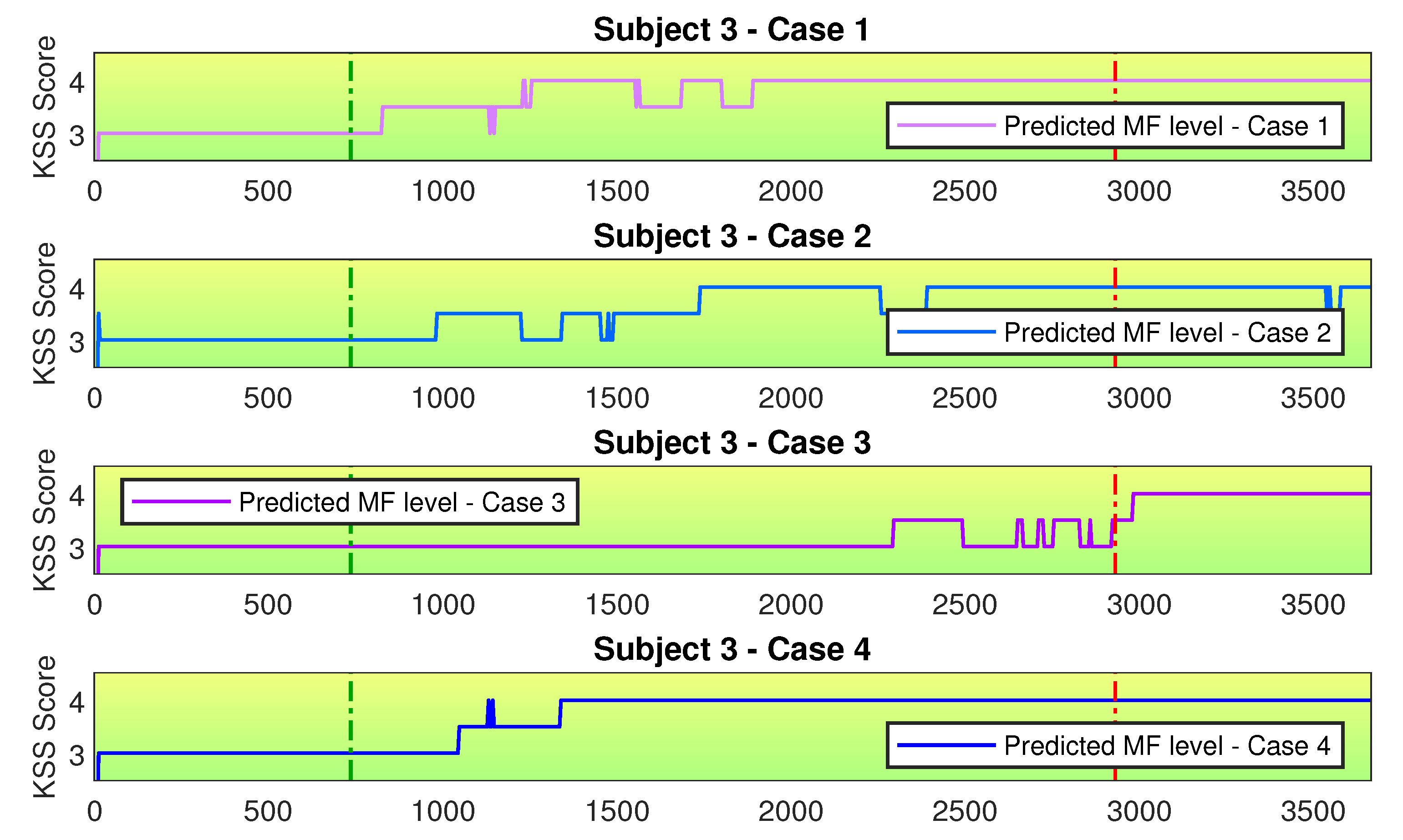

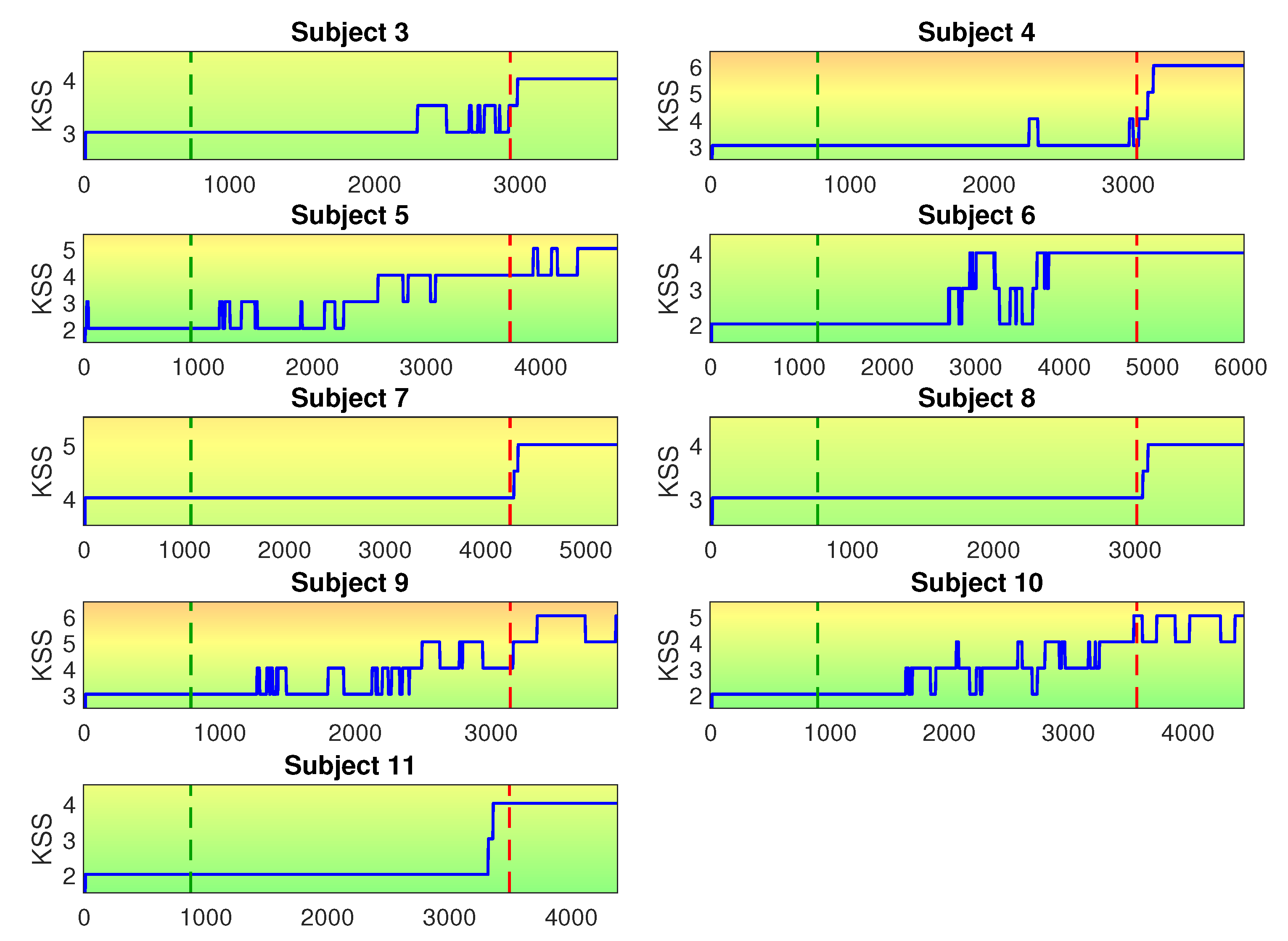

3. Results

4. Discussion

4.1. Impact of Sensor Combination on CNN Generalization

| Algorithm 1 Mental fatigue assessment. |
|---|
| 1: procedure MENTAL FATIGUE SCALE |
| 2: Continuously read and preprocess data from physiological sensors |
| 3: loop every 4 s: |
| 4: Segment time-series with sliding window (length = 6 s, overlap = 2 s) |
| 5: Fuse time-series segments into 2D input |
| 6: Feed 2D input to CNN and get classification output |
| 7: Obtain MF level by averaging CNN output with averaging window (length = 5 steps) |
| 8: Plot MF level |
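The loop in Algorithm 1 can be sketched in a few lines of Python. The window length (6 s), overlap (2 s), and averaging length (5 steps) are taken from the algorithm, and a 6 s window with 2 s overlap advances by 4 s, which matches the loop period. Everything else is an assumption made for illustration: the channels are assumed to share one sampling rate `fs`, fusion is assumed to mean stacking the channels as rows of a 2D array, and `dummy_classifier` is a hypothetical stand-in for the trained CNN that returns a single score.

```python
from collections import deque

import numpy as np

WINDOW_S = 6                    # sliding-window length (Algorithm 1)
OVERLAP_S = 2                   # overlap between consecutive windows (Algorithm 1)
STEP_S = WINDOW_S - OVERLAP_S   # 4 s advance, equal to the loop period
AVG_STEPS = 5                   # averaging window over CNN outputs (Algorithm 1)

def sliding_windows(data, fs):
    """Yield 2D segments (channels x samples) from a channels x samples array."""
    win, step = WINDOW_S * fs, STEP_S * fs
    for start in range(0, data.shape[1] - win + 1, step):
        yield data[:, start:start + win]

def dummy_classifier(segment):
    """Hypothetical placeholder for the CNN: maps a segment to a score in (0, 1)."""
    return float(1.0 / (1.0 + np.exp(-segment.mean())))

def mf_levels(data, fs, classify=dummy_classifier):
    """Average the most recent classifier outputs to obtain one MF level per step."""
    recent = deque(maxlen=AVG_STEPS)
    levels = []
    for segment in sliding_windows(data, fs):
        recent.append(classify(segment))
        levels.append(sum(recent) / len(recent))
    return levels

fs = 10                          # assumed common sampling rate in Hz
data = np.zeros((3, 30 * fs))    # 3 channels, 30 s of placeholder data
levels = mf_levels(data, fs)
print(len(levels), levels[0])
```

During the first four steps the average is taken over fewer than five outputs, so the MF level is noisier at the start of a session. A real-time version would replace the array slicing with a buffer that is refilled every 4 s.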

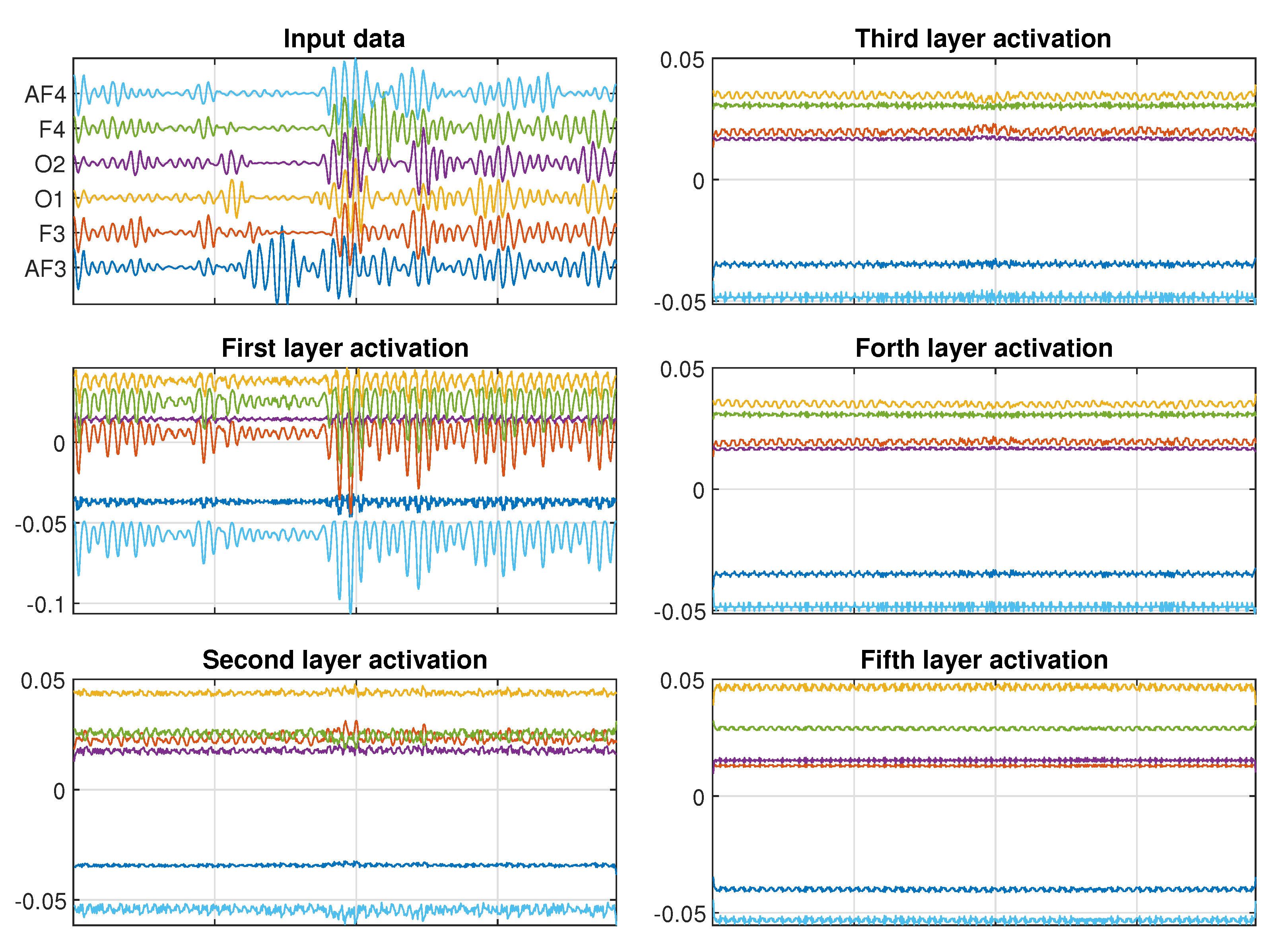

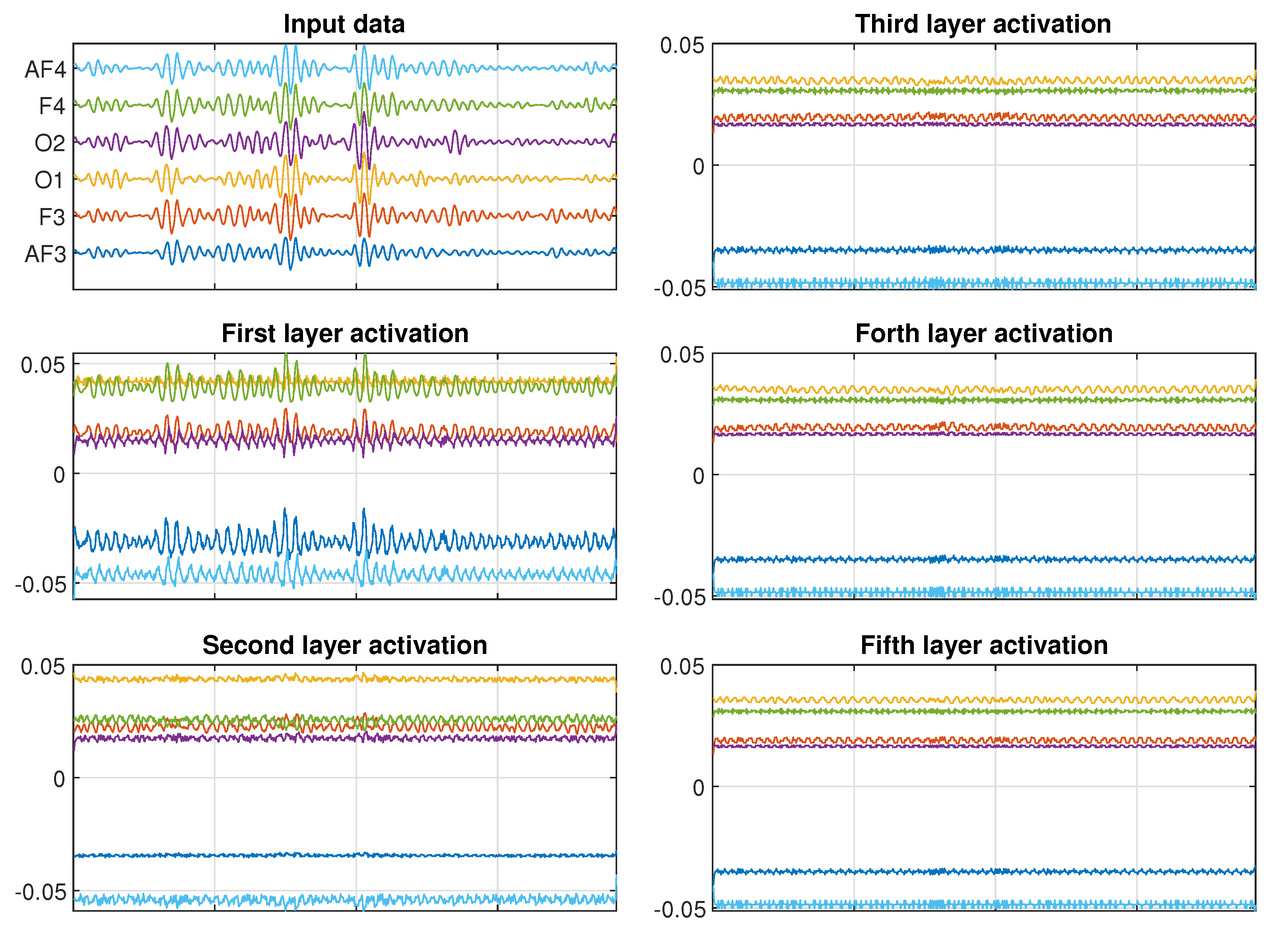

4.2. Looking Inside the CNN

4.3. Limitations

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | convolutional neural network |
| ECG | electrocardiogram |
| EEG | electroencephalogram |
| EMG | electromyogram |
| EOG | electrooculogram |
| KSS | Karolinska Sleepiness Scale |
| MF | mental fatigue |


| Subject | Case 1: test acc (avg ± std) | Case 2: test acc (avg ± std) | Case 3: test acc (avg ± std) | Case 4: test acc (avg ± std) |
|---|---|---|---|---|
| Subj. 1 | 0.63 ± 0.13 | 0.82 ± 0.03 | - | 0.82 ± 0.04 |
| Subj. 2 | 0.94 ± 0.02 | 0.80 ± 0.05 | - | 0.93 ± 0.04 |
| Subj. 3 | 0.93 ± 0.03 | 0.77 ± 0.04 | 0.99 ± 0.01 | 0.95 ± 0.02 |
| Subj. 4 | 0.81 ± 0.05 | 0.88 ± 0.04 | 0.99 ± 0.01 | 0.90 ± 0.05 |
| Subj. 5 | 0.94 ± 0.03 | - | 0.95 ± 0.03 | 0.96 ± 0.02 |
| Subj. 6 | 0.99 ± 0.01 | - | 0.98 ± 0.02 | 0.99 ± 0.01 |
| Subj. 7 | 0.97 ± 0.02 | - | 1.00 ± 0.01 | 0.97 ± 0.02 |
| Subj. 8 | 0.84 ± 0.07 | - | 0.99 ± 0.02 | 0.85 ± 0.08 |
| Subj. 9 | 0.66 ± 0.13 | - | 0.91 ± 0.04 | 0.87 ± 0.04 |
| Subj. 10 | 0.94 ± 0.03 | - | 0.98 ± 0.02 | 0.96 ± 0.02 |
| Subj. 11 | 0.81 ± 0.18 | - | 1.00 ± 0.00 | 0.81 ± 0.17 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Monteiro, T.G.; Li, G.; Skourup, C.; Zhang, H. Investigating an Integrated Sensor Fusion System for Mental Fatigue Assessment for Demanding Maritime Operations. Sensors 2020, 20, 2588. https://doi.org/10.3390/s20092588