Deep Learning-Based Monocular Depth Estimation Methods—A State-of-the-Art Review

Abstract

1. Introduction

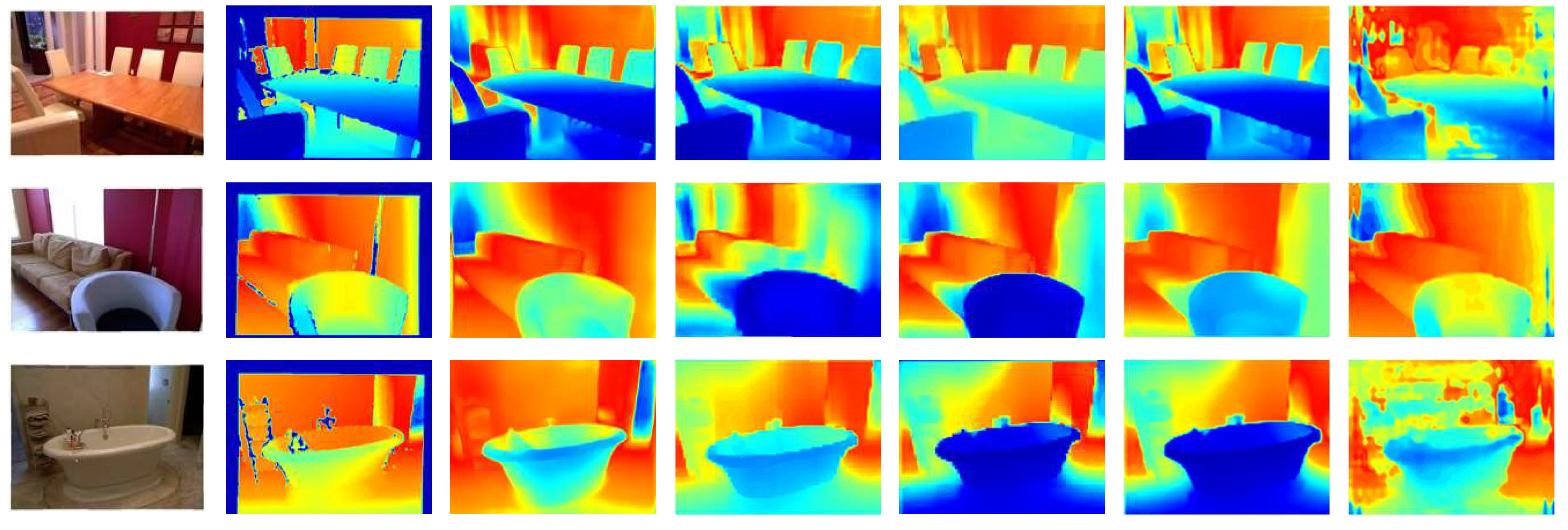

2. An Overview of Monocular Depth Estimation

2.1. Problem Representation

2.2. Traditional Methods for Depth Estimation

2.3. Datasets for Depth Estimation

- NYU-v2: the NYU-v2 dataset for depth estimation was introduced in [29]. It contains 1449 RGB images densely labelled with depth images. The full dataset comprises 407K frames from 464 scenes taken in three different cities. It is used for indoor-scene depth estimation, segmentation and classification.

- Make3D: the Make3D dataset, introduced in [30], contains 400 outdoor images for training and 134 for testing. It covers different types of outdoor, indoor and synthetic scenes, presenting a more complex set of features for depth estimation.

- KITTI: the KITTI dataset, introduced in [31], has two versions and comprises 394 road scenes, providing RGB stereo sets and corresponding ground truth depth maps. The KITTI dataset is further divided into RD: KITTI Raw Depth [31]; CD: KITTI Continuous Depth [31,32]; SD: KITTI Semi-Dense Depth [31,32]; ES: Eigen Split [33]; ID: KITTI Improved Depth [34]. KITTI datasets are commonly used for tasks including 3D object detection and depth estimation. The high-quality ground truth depth is captured using a Velodyne laser scanner.

- Pandora: the Pandora dataset, introduced in [35], contains 250K full-resolution RGB images and corresponding depth images, together with their annotations. The Pandora dataset is used for head centre localization, head pose estimation and shoulder pose estimation.

- SceneFlow: this was introduced in [36] as one of the very first large-scale synthetic datasets, consisting of 39K stereo images with corresponding disparity, depth, optical flow and segmentation masks.
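Several of the datasets above provide stereo imagery together with disparity or depth. For a rectified stereo pair the two quantities are related by Z = f · B / d, where f is the focal length in pixels, B the baseline and d the disparity. The following is a minimal sketch of that conversion; the function name `disparity_to_depth` and the camera values are illustrative assumptions and are not taken from any of the datasets listed.

```python
from typing import List, Optional


def disparity_to_depth(
    disparity_px: List[float],
    focal_length_px: float,
    baseline_m: float,
) -> List[Optional[float]]:
    """Convert disparities (pixels) to metric depth via Z = f * B / d.

    Non-positive disparities have no valid depth and are mapped to None.
    """
    depths: List[Optional[float]] = []
    for d in disparity_px:
        if d > 0:
            depths.append(focal_length_px * baseline_m / d)
        else:
            depths.append(None)
    return depths


# Hypothetical camera: 700 px focal length, 0.5 m baseline (assumed values).
example = disparity_to_depth([70.0, 35.0, 0.0], focal_length_px=700.0, baseline_m=0.5)
print(example)  # [5.0, 10.0, None]
```

Halving the disparity doubles the depth, which is why small disparity errors matter most for distant points.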

3. Deep Learning and Monocular Depth Estimation

3.1. Supervised Methods

3.2. Self-Supervised Methods

3.3. Semi-Supervised Methods

4. Evaluation Metrics and Criteria

5. Discussion

5.1. Comparison Analysis Based on Performance

5.2. Future Research Directions

- Complex deep networks are very expensive in terms of memory requirements, which is a major issue when dealing with high-resolution images and when aiming to predict high-resolution depth images.

- Developments in high-performance computing can address the memory and computational issues. However, developing lighter deep network architectures remains desirable, especially if they are to be deployed in smart consumer devices.

- Another challenge is achieving higher accuracy in general, which is affected by complex scenarios such as occlusions, highly cluttered scenes and objects with complex material properties.

- Deep-learning methods rely heavily on training datasets annotated with ground truth depth labels, which are very expensive to obtain in the real world.

- We expect to see the emergence of large databases for 3D reconstruction in the future. Emerging self-adaptation methods that can adapt to new circumstances in real time or with minimal supervision are one of the promising future directions for research in depth estimation.
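The memory concern in the first point can be illustrated with simple arithmetic: a dense feature map needs storage proportional to height × width × channels, so doubling the input resolution roughly quadruples the memory for every such map. The sketch below is a back-of-the-envelope estimate only. It assumes float32 activations (4 bytes per value), and the function name, channel count and resolutions are illustrative assumptions rather than figures from any reviewed method.

```python
def feature_map_bytes(height: int, width: int, channels: int, bytes_per_value: int = 4) -> int:
    """Bytes needed to store one dense feature map (float32 by default)."""
    return height * width * channels * bytes_per_value


# Hypothetical 64-channel feature map at two resolutions (assumed values).
low = feature_map_bytes(240, 320, 64)
high = feature_map_bytes(480, 640, 64)

# Report sizes in MiB and the growth factor between the two resolutions.
print(low / 2**20, high / 2**20, high / low)  # 18.75 75.0 4.0
```

A real network keeps many such maps alive during training, so the total is far larger than this single-layer figure.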

Author Contributions

Funding

Conflicts of Interest

Appendix A

Low-Performance Monocular Depth Estimation Methods

| Method | Input | Type | Optimizer | Parameters | Output | GPU Memory | RMSE | GPU Model |
|---|---|---|---|---|---|---|---|---|
| Zhou et al. [70] | 416 K | CNN | Adam | N/A | 416 K | N/A | 4.975 | N/A |
| Casser et al. [73] | × 416 K | CNN | Adam | N/A | 416 K | 11 GB | 4.7503 | 1080 Ti |
| Guizilini et al. [74] | 192 K | FC | Adam | 86M | × 192 K | N/A | 4.601 | N/A |
| Godard et al. [15] | 192 K | FC | Adam | 31M | × 192 K | 12 GB | 4.935 | TITAN Xp |
| Eigen et al. [33] | 184 K | CNN | Adam | N/A | × 184 | 6 GB | N/A | TITAN Black |
| Guizilini et al. [75] | 192 K | ED | Adam | 79M | × 192 | × 16 GB | 4.270 | Tesla V100 |
| Tang et al. [76] | 192 K | CNN | RMSprop | 80M | 192 | 12 GB | N/A | N/A |
| Ramamonjisoa et al. [40] | 480 N | ED | Adam | 69M | 480 N | 11 GB | 0.401 | 1080 Ti |
| Riegler et al. [39] | N/A | ED | Adam | N/A | N/A | N/A | N/A | N/A |
| Ji et al. [37] | 240 N | ED | Adam | N/A | 240 N | 12 GB | 0.704 | TITAN Xp |
| Almalioglu et al. [77] | 416 K | GAN | RMSprop | 63M | 416 K | 12 GB | 5.448 | TITAN V |
| Pillai et al. [41] | 416 K | CNN | Adam | 97M | 416 K | × 16 GB | 4.958 | Tesla V100 |
| Wofk et al. [24] | 224 N | ED | SGD | N/A | 224 N | N/A | 0.604 | N/A |
| Watson et al. [78] | 416 K | ED | SGD | N/A | 416 K | N/A | N/A | N/A |
| Chen et al. [79] | × 512 K | ED | Adam | N/A | 512 K | 11 GB | 3.871 | 1080 Ti |
| Lee et al. [80] | 480 N | CNN | SGD | 61M | 480 N | N/A | 0.538 | N/A |

References

- Chen, L.; Tang, W.; John, N.W.; Wan, T.R.; Zhang, J.J. Augmented Reality for Depth Cues in Monocular Minimally Invasive Surgery. arXiv Prepr. 2017, arXiv:1703.01243.

- Liu, X.; Sinha, A.; Ishii, M.; Hager, G.D.; Reiter, A.; Taylor, R.H.; Unberath, M. Dense Depth Estimation in Monocular Endoscopy with Self-supervised Learning Methods. IEEE Trans. Med. Imaging 2019, 1.

- Palafox, P.R.; Betz, J.; Nobis, F.; Riedl, K.; Lienkamp, M. SemanticDepth: Fusing Semantic Segmentation and Monocular Depth Estimation for Enabling Autonomous Driving in Roads without Lane Lines. Sensors 2019, 19, 3224.

- Laidlow, T.; Czarnowski, J.; Leutenegger, S. DeepFusion: Real-Time Dense 3D Reconstruction for Monocular SLAM using Single-View Depth and Gradient Predictions. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 4068–4074.

- Schönberger, J.L.; Frahm, J.M. Structure-from-Motion Revisited. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 4104–4113.

- Dai, Y.; Li, H.; He, M. Projective Multiview Structure and Motion from Element-Wise Factorization. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2238–2251.

- Yu, F.; Gallup, D. 3D Reconstruction from Accidental Motion. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 3986–3993.

- Javidnia, H.; Corcoran, P. Accurate Depth Map Estimation From Small Motions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2453–2461.

- Basha, T.; Avidan, S.; Hornung, A.; Matusik, W. Structure and motion from scene registration. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 1426–1433.

- Scharstein, D.; Pal, C. Learning conditional random fields for stereo. In Proceedings of the 2007 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2007), Minneapolis, MN, USA, 18–23 June 2007; pp. 1–8.

- Scharstein, D.; Szeliski, R. A Taxonomy and Evaluation of Dense Two-Frame Stereo Correspondence Algorithms. Int. J. Comput. Vis. 2002, 47, 7–42.

- Heikkila, J.; Silven, O. A four-step camera calibration procedure with implicit image correction. In Proceedings of the 1997 Conference on Computer Vision and Pattern Recognition (CVPR ’97), San Juan, PR, USA, 17–19 June 1997; pp. 1106–1112.

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

- Javidnia, H.; Corcoran, P. A Depth Map Post-Processing Approach Based on Adaptive Random Walk with Restart. IEEE Access 2016, 4, 5509–5519.

- Godard, C.; Mac Aodha, O.; Brostow, G.J. Unsupervised Monocular Depth Estimation with Left-Right Consistency. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6602–6611.

- Kuznietsov, Y.; Stückler, J.; Leibe, B. Semi-Supervised Deep Learning for Monocular Depth Map Prediction. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2215–2223.

- Bazrafkan, S.; Javidnia, H.; Lemley, J.; Corcoran, P. Semiparallel deep neural network hybrid architecture: First application on depth from monocular camera. J. Electron. Imaging 2018, 27, 1–19.

- Fu, H.; Gong, M.; Wang, C.; Batmanghelich, K.; Tao, D. Deep ordinal regression network for monocular depth estimation. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2002–2011.

- Xu, D.; Wang, W.; Tang, H.; Liu, H.; Sebe, N.; Ricci, E. Structured attention guided convolutional neural fields for monocular depth estimation. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3917–3925.

- Microsoft. Kinect for Windows. 2010. Available online: https://developer.microsoft.com/en-us/windows/kinect/ (accessed on 22 March 2020).

- Microsoft. Kinect for Xbox One. 2017. Available online: https://www.xbox.com/en-US/xbox-one/accessories/kinect (accessed on 22 March 2020).

- Javidnia, H. Contributions to the Measurement of Depth in Consumer Imaging; National University of Ireland Galway: Galway, Ireland, 2018.

- Elkerdawy, S.; Zhang, H.; Ray, N. Lightweight monocular depth estimation model by joint end-to-end filter pruning. In Proceedings of the 2019 IEEE International Conference on Image Processing, ICIP 2019, Taipei, Taiwan, 22–25 September 2019; pp. 4290–4294.

- Wofk, D.; Ma, F.; Yang, T.-J.; Karaman, S.; Sze, V. Fastdepth: Fast monocular depth estimation on embedded systems. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 6101–6108.

- Poggi, M.; Aleotti, F.; Tosi, F.; Mattoccia, S. Towards real-time unsupervised monocular depth estimation on CPU. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems, IROS 2018, Madrid, Spain, 1–5 October 2018; pp. 5848–5854.

- Scharstein, D.; Szeliski, R. High-accuracy stereo depth maps using structured light. In Proceedings of the 2003 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2003), Madison, WI, USA, 16–22 June 2003; Volume 1, pp. I-195–I-202.

- Luo, H.; Gao, B.; Xu, J.; Chen, K. An approach for structured light system calibration. In Proceedings of the 2013 IEEE International Conference on Cyber Technology in Automation, Control and Intelligent Systems, Nanjing, China, 26–29 May 2013; pp. 428–433.

- Velodyne Lidar, Inc. Available online: https://velodynelidar.com/ (accessed on 22 March 2020).

- Silberman, N.; Hoiem, D.; Kohli, P.; Fergus, R. Indoor segmentation and support inference from rgbd images. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 746–760.

- Saxena, A.; Sun, M.; Ng, A.Y. Make3d: Learning 3d scene structure from a single still image. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 31, 824–840.

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

- Dos Santos Rosa, N.; Guizilini, V.; Grassi, V. Sparse-to-Continuous: Enhancing Monocular Depth Estimation using Occupancy Maps. In Proceedings of the 2019 19th International Conference on Advanced Robotics (ICAR), Belo Horizonte, Brazil, 2–6 December 2019; pp. 793–800.

- Eigen, D.; Puhrsch, C.; Fergus, R. Depth Map Prediction from a Single Image Using a Multi-scale Deep Network. In Proceedings of the 27th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 2366–2374.

- Uhrig, J.; Schneider, N.; Schneider, L.; Franke, U.; Brox, T.; Geiger, A. Sparsity Invariant CNNs. In Proceedings of the 2017 International Conference on 3D Vision (3DV), Qingdao, China, 10–12 October 2017; pp. 11–20.

- Borghi, G.; Venturelli, M.; Vezzani, R.; Cucchiara, R. Poseidon: Face-from-depth for driver pose estimation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4661–4670.

- Mayer, N.; Ilg, E.; Hausser, P.; Fischer, P.; Cremers, D.; Dosovitskiy, A.; Brox, T. A Large Dataset to Train Convolutional Networks for Disparity, Optical Flow, and Scene Flow Estimation. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 4040–4048.

- Ji, R.; Li, K.; Wang, Y.; Sun, X.; Guo, F.; Guo, X.; Wu, Y.; Huang, F.; Luo, J. Semi-Supervised Adversarial Monocular Depth Estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 1.

- Tosi, F.; Aleotti, F.; Poggi, M.; Mattoccia, S. Learning monocular depth estimation infusing traditional stereo knowledge. In Proceedings of the 2019 IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 9799–9809.

- Riegler, G.; Liao, Y.; Donne, S.; Koltun, V.; Geiger, A. Connecting the Dots: Learning Representations for Active Monocular Depth Estimation. In Proceedings of the 2019 IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 7624–7633.

- Ramamonjisoa, M.; Lepetit, V. Sharpnet: Fast and accurate recovery of occluding contours in monocular depth estimation. In Proceedings of the 2019 IEEE International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019.

- Pillai, S.; Ambruş, R.; Gaidon, A. Superdepth: Self-supervised, super-resolved monocular depth estimation. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 9250–9256.

- Godard, C.; Mac Aodha, O.; Firman, M.; Brostow, G.J. Digging into self-supervised monocular depth estimation. In Proceedings of the 2019 IEEE International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 3828–3838.

- Chen, W.; Fu, Z.; Yang, D.; Deng, J. Single-image depth perception in the wild. In Proceedings of the Advances in Neural Information Processing Systems 29: Annual Conference on Neural Information Processing Systems 2016, Barcelona, Spain, 5–10 December 2016; pp. 730–738.

- Xie, J.; Girshick, R.; Farhadi, A. Deep3d: Fully automatic 2d-to-3d video conversion with deep convolutional neural networks. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 842–857.

- Garg, R.; BG, V.K.; Carneiro, G.; Reid, I. Unsupervised CNN for Single View Depth Estimation: Geometry to the Rescue. In Computer Vision—ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11-14, 2016, Proceedings, Part VII; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 740–756.

- Chen, Y.; Zhao, H.; Hu, Z. Attention-based context aggregation network for monocular depth estimation. arXiv Prepr. 2019, arXiv:1901.10137.

- Alhashim, I.; Wonka, P. High quality monocular depth estimation via transfer learning. arXiv Prepr. 2018, arXiv:1812.11941.

- Yin, W.; Liu, Y.; Shen, C.; Yan, Y. Enforcing geometric constraints of virtual normal for depth prediction. In Proceedings of the 2019 IEEE International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 5684–5693.

- Lee, J.H.; Han, M.-K.; Ko, D.W.; Suh, I.H. From big to small: Multi-scale local planar guidance for monocular depth estimation. arXiv Prepr. 2019, arXiv:1907.10326.

- Teed, Z.; Deng, J. Deepv2d: Video to depth with differentiable structure from motion. arXiv Prepr. 2018, arXiv:1812.04605.

- Goldman, M.; Hassner, T.; Avidan, S. Learn stereo, infer mono: Siamese networks for self-supervised, monocular, depth estimation. In Proceedings of the 2019 IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019.

- Guizilini, V.; Ambrus, R.; Pillai, S.; Gaidon, A. Packnet-sfm: 3d packing for self-supervised monocular depth estimation. arXiv Prepr. 2019, arXiv:1905.02693.

- Andraghetti, L.; Myriokefalitakis, P.; Dovesi, P.L.; Luque, B.; Poggi, M.; Pieropan, A.; Mattoccia, S. Enhancing self-supervised monocular depth estimation with traditional visual odometry. In Proceedings of the 2019 International Conference on 3D Vision (3DV), Quebec City, QC, Canada, 16–19 September 2019; pp. 424–433.

- Zhao, S.; Fu, H.; Gong, M.; Tao, D. Geometry-aware symmetric domain adaptation for monocular depth estimation. In Proceedings of the 2019 IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 9788–9798.

- Ramos, F.; Ott, L. Hilbert maps: Scalable continuous occupancy mapping with stochastic gradient descent. Int. J. Rob. Res. 2016, 35, 1717–1730.

- Laina, I.; Rupprecht, C.; Belagiannis, V.; Tombari, F.; Navab, N. Deeper Depth Prediction with Fully Convolutional Residual Networks. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 239–248.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Liu, W.; Rabinovich, A.; Berg, A.C. Parsenet: Looking wider to see better. arXiv Prepr. 2015, arXiv:1506.04579.

- Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-local neural networks. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7794–7803.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Li, F.-F. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2009), Miami, FL, USA, 20–25 June 2009.

- Lehtinen, J.; Munkberg, J.; Hasselgren, J.; Laine, S.; Karras, T.; Aittala, M.; Aila, T. Noise2noise: Learning image restoration without clean data. arXiv Prepr. 2018, arXiv:1803.04189.

- Dai, A.; Chang, A.X.; Savva, M.; Halber, M.; Funkhouser, T.; Nießner, M. Scannet: Richly-annotated 3d reconstructions of indoor scenes. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5828–5839.

- Chen, L.-C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv Prepr. 2017, arXiv:1706.05587.

- Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H.S. Fully-convolutional siamese networks for object tracking. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 850–865.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv Prepr. 2014, arXiv:1409.1556.

- Huber, P.J. Robust Estimation of a Location Parameter. In Breakthroughs in Statistics: Methodology and Distribution; Kotz, S., Johnson, N.L., Eds.; Springer New York: New York, NY, USA, 1992; pp. 492–518.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The cityscapes dataset for semantic urban scene understanding. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

- Zhou, T.; Brown, M.; Snavely, N.; Lowe, D.G. Unsupervised learning of depth and ego-motion from video. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1851–1858.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015: 18th International Conference, Munich, Germany, October 5-9, 2015, Proceedings, Part III; Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241.

- Zhu, J.-Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Casser, V.; Pirk, S.; Mahjourian, R.; Angelova, A. Depth prediction without the sensors: Leveraging structure for unsupervised learning from monocular videos. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence (AAAI-19), Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 8001–8008.

- Guizilini, V.; Hou, R.; Li, J.; Ambrus, R.; Gaidon, A. Semantically-Guided Representation Learning for Self-Supervised Monocular Depth. arXiv Prepr. 2020, arXiv:2002.12319.

- Guizilini, V.; Li, J.; Ambrus, R.; Pillai, S.; Gaidon, A. Robust Semi-Supervised Monocular Depth Estimation with Reprojected Distances. arXiv Prepr. 2019, arXiv:1910.01765.

- Tang, C.; Tan, P. Ba-net: Dense bundle adjustment network. arXiv Prepr. 2018, arXiv:1806.04807.

- Almalioglu, Y.; Saputra, M.R.U.; Gusmão, P.P.B.d.; Markham, A.; Trigoni, N. GANVO: Unsupervised Deep Monocular Visual Odometry and Depth Estimation with Generative Adversarial Networks. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 5474–5480.

- Watson, J.; Firman, M.; Brostow, G.J.; Turmukhambetov, D. Self-Supervised Monocular Depth Hints. In Proceedings of the 2019 IEEE International Conference on Computer Vision, Seoul, Korea, 27–28 October 2019; pp. 2162–2171.

- Chen, P.-Y.; Liu, A.H.; Liu, Y.-C.; Wang, Y.-C.F. Towards scene understanding: Unsupervised monocular depth estimation with semantic-aware representation. In Proceedings of the 2019 IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 2624–2632.

- Lee, J.-H.; Kim, C.-S. Monocular depth estimation using relative depth maps. In Proceedings of the 2019 IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 9729–9738.

| Dataset | Labelled Images | Annotation | Brief Description |
|---|---|---|---|
| NYU-v2 [29] | 1449 | Depth + Segmentation | Red-green-blue (RGB) and depth images taken from indoor scenes. |
| Make3D [30] | 534 | Depth | RGB and depth images taken from outdoor scenes. |
| KITTI [31] | 94K | Depth aligned with RAW data + Optical Flow | RGB and depth from 394 road scenes. |
| Pandora [35] | 250K | Depth + Annotation | RGB and depth images. |
| SceneFlow [36] | 39K | Depth + Disparity + Optical Flow + Segmentation Map | Stereo image sets rendered from synthetic data with ground truth depth, disparity and optical flow. |

| Method | Architecture | Category |
|---|---|---|
| EMDEOM [32] | FC | Supervised |
| ACAN [46] | Encoder-Decoder | Supervised |
| DenseDepth [47] | Encoder-Decoder | Supervised |
| DORN [18] | CNN | Supervised |
| VNL [48] | Encoder-Decoder | Supervised |
| BTS [49] | Encoder-Decoder | Supervised |
| DeepV2D [50] | CNN | Supervised |
| LSIM [51] | Encoder-Decoder | Self-supervised |
| monoResMatch [38] | CNN | Self-supervised |
| PackNet-SfM [52] | CNN | Self-supervised |
| VOMonodepth [53] | Auto-Decoder | Self-supervised |
| monodepth2 [42] | CNN | Self-supervised |
| GASDA [54] | CNN | Semi-supervised |

| Method | Input | Type | Optimizer | Parameters | Output | GPU Memory | GPU Model |
|---|---|---|---|---|---|---|---|
| BTS [49] | 704 K | ED | Adam | 47M | 704 K | 11 GB | 1080 Ti |
| DORN [18] | 513 K | CNN | Adam | 123.4M | × 385 K | 12 GB | TITAN Xp |
| VNL [48] | 384 N | ED | SGD | 2.7M | × 384 N | N/A | N/A |
| ACAN [46] | 352 N | ED | SGD | 80M | × 352 N | 11 GB | 1080 Ti |
| VOMonodepth [53] | × 512 K | AD | Adam | 35M | 512 K | 12 GB | TITAN Xp |
| LSIM [51] | × 375 K | ED | Adam | 73.3M | × 375 K | 12 GB | TITAN Xp |
| GASDA [54] | × 640 K | CNN | Adam | 70M | × 640 K | N/A | N/A |
| DenseDepth [47] | × 480 N | ED | Adam | 42.6M | × 240 N | 12 GB | TITAN Xp |
| monoResMatch [38] | × 640 K | CNN | Adam | 42.5M | × 640 K | 12 GB | TITAN Xp |
| EMDEOM [32] | × 228 K | FC | Adam | 63M | × 160 K | 12 GB | TITAN Xp |
| PackNet-SfM [52] | × 192 K | CNN | Adam | 128M | × 192 K | 16 GB | Tesla V100 |
| monodepth2 [42] | 192 K | CNN | Adam | 70M | × 192 K | 12 GB | TITAN Xp |
| DeepV2D [50] | × 480 N | CNN | RMSProp | 32M | × 480 N | 11 GB | 1080 Ti |
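The Parameters column above can be turned into a rough estimate of weight storage by multiplying the parameter count by the bytes per parameter. The sketch below assumes float32 weights (4 bytes each), which the table does not state, and the function name `weight_storage_mb` is hypothetical. The figure covers the weights only: activations, gradients and optimizer state add to the GPU memory needed during training.

```python
def weight_storage_mb(num_parameters: float, bytes_per_parameter: int = 4) -> float:
    """Approximate weight storage in megabytes (1 MB = 10**6 bytes)."""
    return num_parameters * bytes_per_parameter / 1e6


# Parameter counts taken from the table above; float32 storage is an assumption.
parameter_counts = {"BTS": 47e6, "DORN": 123.4e6, "VNL": 2.7e6}

for name, count in parameter_counts.items():
    print(f"{name}: {weight_storage_mb(count):.1f} MB")
# BTS: 188.0 MB
# DORN: 493.6 MB
# VNL: 10.8 MB
```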

| Method | Train | Test | Abs Rel | Sq Rel | RMSE | RMSE log | δ < 1.25 | δ < 1.25² | δ < 1.25³ |
|---|---|---|---|---|---|---|---|---|---|
| BTS [49] | ES(RD) | ES(RD) | 0.060 | 0.182 | 2.005 | 0.092 | 0.959 | 0.994 | 0.999 |
| DORN [18] | ES(RD) | ES(RD) | 0.071 | 0.268 | 2.271 | 0.116 | 0.936 | 0.985 | 0.995 |
| VNL [48] | ES(RD) | ES(RD) | 0.072 | 0.883 | 3.258 | 0.117 | 0.938 | 0.990 | 0.998 |
| ACAN [46] | ES(RD) | ES(RD) | 0.083 | 0.437 | 3.599 | 0.127 | 0.919 | 0.982 | 0.995 |
| VOMonodepth [53] | ES(RD) | ES(RD) | 0.091 | 0.548 | 3.790 | 0.181 | 0.892 | 0.956 | 0.979 |
| LSIM [51] | FT | RD | 0.169 | 0.6531 | 3.790 | 0.195 | 0.867 | 0.954 | 0.979 |
| GASDA [54] | ES(RD) | ES(RD) | 0.143 | 0.756 | 3.846 | 0.217 | 0.836 | 0.946 | 0.976 |
| DenseDepth [47] | ES(RD) | ES(RD) | 0.093 | 0.589 | 4.170 | 0.171 | 0.886 | 0.965 | 0.986 |
| monoResMatch [38] | ES(RD) | ES(RD) | 0.096 | 0.673 | 4.351 | 0.184 | 0.890 | 0.961 | 0.981 |
| EMDEOM [32] | RD, CD | SD | 0.118 | 0.630 | 4.520 | 0.209 | 0.898 | 0.966 | 0.985 |
| monodepth2 [42] | ES(RD) | ES(RD) | 0.115 | 0.903 | 4.863 | 0.193 | 0.877 | 0.959 | 0.981 |
| PackNet-SfM [52] | ES(RD) | ID | 0.078 | 0.420 | 3.485 | 0.121 | 0.931 | 0.986 | 0.996 |
| DeepV2D [50] | ES(RD) | ES(RD) | 0.037 | 0.174 | 2.005 | 0.074 | 0.977 | 0.993 | 0.997 |
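The error columns in the table above (Abs Rel, Sq Rel, RMSE, RMSE log) and the threshold accuracies that usually accompany them can be computed from paired predicted and ground truth depths. The following is a minimal sketch using the definitions commonly adopted in the depth estimation literature. It is not taken from any reviewed method's code, and the function name `depth_metrics` and the sample values are hypothetical. All depths are assumed to be strictly positive, with invalid pixels already removed.

```python
import math
from typing import Dict, Sequence


def depth_metrics(pred: Sequence[float], gt: Sequence[float]) -> Dict[str, float]:
    """Compute common depth-estimation metrics over paired positive depths."""
    if len(pred) != len(gt) or len(pred) == 0:
        raise ValueError("pred and gt must be non-empty and of equal length")
    n = len(pred)
    pairs = list(zip(pred, gt))

    # Error metrics: lower is better.
    abs_rel = sum(abs(p - g) / g for p, g in pairs) / n
    sq_rel = sum((p - g) ** 2 / g for p, g in pairs) / n
    rmse = math.sqrt(sum((p - g) ** 2 for p, g in pairs) / n)
    rmse_log = math.sqrt(sum((math.log(p) - math.log(g)) ** 2 for p, g in pairs) / n)

    # Threshold accuracy: fraction of pixels with max(p/g, g/p) < 1.25**k.
    ratios = [max(p / g, g / p) for p, g in pairs]
    result = {"abs_rel": abs_rel, "sq_rel": sq_rel, "rmse": rmse, "rmse_log": rmse_log}
    for k in (1, 2, 3):
        result[f"delta_{k}"] = sum(r < 1.25 ** k for r in ratios) / n
    return result


# Hypothetical depths in metres, for illustration only.
metrics = depth_metrics(pred=[2.0, 4.0, 10.0], gt=[2.0, 5.0, 8.0])
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Lower values are better for the four error metrics, while higher values are better for the threshold accuracies.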

| Method | Abs Rel | Sq Rel | RMSE | RMSE log | δ < 1.25 | δ < 1.25² | δ < 1.25³ |
|---|---|---|---|---|---|---|---|
| BTS [49] | 0.112 | 0.025 | 0.352 | 0.047 | 0.882 | 0.979 | 0.995 |
| VNL [48] | 0.113 | 0.034 | 0.364 | 0.054 | 0.815 | 0.990 | 0.993 |
| DenseDepth [47] | 0.123 | 0.045 | 0.465 | 0.053 | 0.846 | 0.970 | 0.994 |
| ACAN [46] | 0.123 | 0.101 | 0.496 | 0.174 | 0.826 | 0.974 | 0.990 |
| DORN [18] | 0.138 | 0.051 | 0.509 | 0.653 | 0.825 | 0.964 | 0.992 |
| monoResMatch [38] | 1.356 | 1.156 | 0.694 | 1.125 | 0.825 | 0.965 | 0.967 |
| monodepth2 [42] | 2.344 | 1.365 | 0.734 | 1.134 | 0.826 | 0.958 | 0.979 |
| EMDEOM [32] | 2.035 | 1.630 | 0.620 | 1.209 | 0.896 | 0.957 | 0.984 |
| LSIM [51] | 2.344 | 1.156 | 0.835 | 1.175 | 0.815 | 0.943 | 0.975 |
| PackNet-SfM [52] | 2.343 | 1.158 | 0.887 | 1.234 | 0.821 | 0.945 | 0.968 |
| GASDA [54] | 1.356 | 1.156 | 0.963 | 1.223 | 0.765 | 0.897 | 0.968 |
| VOMonodepth [53] | 2.456 | 1.192 | 0.985 | 1.234 | 0.756 | 0.884 | 0.965 |
| DeepV2D [50] | 0.061 | 0.094 | 0.403 | 0.026 | 0.956 | 0.989 | 0.996 |

| Method | Inference Time | Network Type |
|---|---|---|
| BTS [49] | 0.22 s | Encoder-decoder |
| VNL [48] | 0.25 s | Auto-decoder |
| DeepV2D [50] | 0.36 s | CNN |
| ACAN [46] | 0.89 s | Encoder-decoder |
| VOMonodepth [53] | 0.34 s | CNN |
| LSIM [51] | 0.54 s | CNN |
| GASDA [54] | 0.57 s | Encoder-decoder |
| DenseDepth [47] | 0.35 s | Encoder-decoder |
| monoResMatch [38] | 0.37 s | CNN |
| EMDEOM [32] | 0.63 s | FC |
| DORN [18] | 0.98 s | Encoder-decoder |
| PackNet-SfM [52] | 0.97 s | CNN |
| monodepth2 [42] | 0.56 s | CNN |
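The inference times above can be read as throughput by taking the reciprocal. The sketch below assumes each time is the latency for a single image processed sequentially, which the table does not state explicitly. The function name `frames_per_second` is hypothetical, and only three rows are copied from the table for brevity.

```python
def frames_per_second(seconds_per_frame: float) -> float:
    """Convert per-image latency in seconds to frames per second."""
    if seconds_per_frame <= 0:
        raise ValueError("latency must be positive")
    return 1.0 / seconds_per_frame


# Inference times in seconds, copied from the table above.
inference_time_s = {"BTS": 0.22, "VNL": 0.25, "DORN": 0.98}

for name, latency in inference_time_s.items():
    print(f"{name}: {frames_per_second(latency):.2f} FPS")
# BTS: 4.55 FPS
# VNL: 4.00 FPS
# DORN: 1.02 FPS
```

Under this reading, even the fastest listed method runs at under 5 frames per second on the hardware used.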

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Khan, F.; Salahuddin, S.; Javidnia, H. Deep Learning-Based Monocular Depth Estimation Methods—A State-of-the-Art Review. Sensors 2020, 20, 2272. https://doi.org/10.3390/s20082272
