Abstract

This paper studies the limitations of binocular vision technology in monitoring accuracy. The factors affecting the surface displacement monitoring of the slope are analyzed mainly from system structure parameters and environment parameters. Based on the error analysis theory, the functional relationship between the structure parameters and the monitoring error is studied. The error distribution curve is obtained through laboratory testing and sensitivity analysis, and parameter selection criteria are proposed. Corresponding image optimization methods are designed according to the error distribution curve of the environment parameters, and a large number of tests proved that the methods effectively improved the measurement accuracy of slope deformation monitoring. Finally, the reliability and accuracy of the proposed system and method are verified by displacement measurement of a slope on site.

1. Introduction

Landslides are among the most common and catastrophic natural hazards, causing numerous casualties every year [1]. Extracting slope deformation information makes it possible to predict landslides and issue early warnings [2]. Historically, various monitoring methods have been employed to measure slope deformation, including geodesy [3], GPS [4], InSAR [5], 3D laser scanning [6] and digital photogrammetry [7,8]. However, the construction industry seeks a more intelligent yet low-cost technology for dynamic, high-accuracy monitoring. Binocular vision technology is a promising approach to slope deformation monitoring [9], and it has been increasingly used in bridge vibration measurement [10], multipoint displacement measurement [11], material strain detection [12], depth estimation [13], and target tracking [14].

The accuracy of binocular vision technology is limited by the camera calibration parameters [15], system structure parameters [16], and environment parameters [17,18]. To eliminate the influence of camera calibration parameters on monitoring, Li et al. [19] proposed a high-precision camera calibration method based on a multiview template and alternative bundle adjustment. Liu et al. [20] sought to minimize the error between the geometric relation of 3D reconstructed feature points and the ground truth. Jin et al. [21] improved the calibration accuracy of the camera through distortion correction. Yang et al. [22] studied the influence of camera distortion, the number of calibration images, the number of targets and the position of the calibration board on the calibration accuracy. The system structure parameters mainly include the angle between the camera optical axis and the baseline, the baseline distance and the monitoring distance [22,23,24]. Lu et al. [25] established the relationship between structure parameters (baseline, focal length and object distance) and distance measurement errors. Deng et al. [26] studied the effect of vision measurement parameters on the accuracy of binocular reconstruction at high temperatures. Xu et al. [27] determined the optimal configuration of the system by comprehensively analyzing the baseline distance and measurement distance. Tang et al. [28] analyzed the relationship between depth errors, fields of view and distances through measurement tests, and obtained error correction curves. Xu et al. [29] deduced that, for the double reflection system, the best virtual baseline distance is 1–1.5 times the measurement distance.

The camera calibration and system structure parameters can be adjusted by the operator. In contrast, the influence of environment parameters can only be reduced by applying image processing to the monitoring data. The most popular image sharpening technologies fall into two categories: image enhancement based on image processing, and image restoration based on a physical model. Image enhancement [30] processes the pixels of the image directly, without considering the causes of image quality deterioration, in order to improve the visual quality of the image; examples include histogram equalization [31], the curvelet transform [32], and the homomorphic filtering algorithm [33]. Image restoration [34] uses prior knowledge of the degradation phenomenon to model the degradation process and invert it, so as to restore the original appearance of the image; examples include the physical model of atmospheric scattering [35], guided filtering [36] and the improved mean filtering algorithm [37]. However, there is very little research into the above parameters of binocular vision technology for slope deformation monitoring.

The main purpose of this study is to investigate structure parameters and environment parameters in order to improve the accuracy of the binocular vision measurement system for slope deformation monitoring. The error distribution curves were obtained through laboratory tests. The sensitivity of the structure parameters was analyzed, and parameter selection criteria were proposed. In addition, the environmental influences were reduced by image processing. Finally, field tests were conducted to verify the reliability and accuracy of the proposed system and method.

2. Binocular Vision Measurement System

2.1. Laser Rangefinder-Camera Station

Figure 1 shows the binocular vision measurement system (BVMS) for slope deformation monitoring. To complete the site layout of the BVMS accurately and quickly in engineering monitoring, we designed a simple laser rangefinder-camera station. It consists of a tripod, laser rangefinders, a camera, and an angle-measuring ruler. The laser rangefinders and the angle-measuring ruler are installed between the camera and the tripod. The laser rangefinder is fixed on the angle-measuring ruler, which makes it convenient to measure the baseline distance and the monitoring distance. One end of the angle-measuring ruler is hinged so that it can be adjusted to the specific field of view. To make the layout system universal, the base is connected to the tripod with screws, and the lower tray holds the angle-measuring ruler and the laser rangefinders. The camera is connected to the tray with screws, and its direction can be rotated freely as required. The connection is equipped with a bubble level so that the station can be leveled during monitoring.

Figure 1. Binocular vision measurement system: (a) image of 3D simulation; (b) site layout diagram.

2.2. Slope Deformation Monitoring Based on BVMS

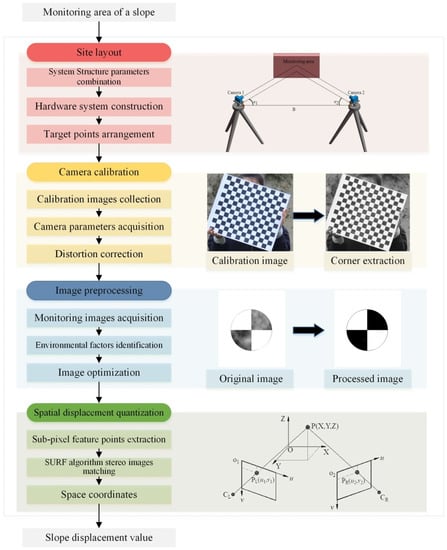

Figure 2 illustrates the process of slope deformation monitoring based on BVMS. Specific steps are as follows:

Figure 2. Slope deformation monitoring process based on binocular vision measurement system.

Step 1: Site layout. The laser rangefinder-camera station hardware system is built according to the system structure parameters, and then the target points are reasonably arranged in the monitoring area based on the field of view.

Step 2: Camera calibration. The improved Zhang’s calibration method [38] is used to obtain the camera parameters and correct the lens distortion. Note that the camera was not refocused during the subsequent tests, since refocusing changes the calibrated camera parameters.

Step 3: Image preprocessing. The image capture frequency can be set according to the monitoring requirements, for example one acquisition every half hour. For each acquisition, multiple (≥5) images should be taken in succession within one second, which effectively reduces random errors in the subsequent data processing. The environmental factors present in the images are then identified manually. Finally, the preprocessing system designed in this paper is used to optimize the images.

Step 4: Spatial displacement quantization. Feature points are extracted from the optimized images [39]. The three-dimensional coordinates of the target points are obtained by stereo matching with the Speeded-Up Robust Features (SURF) algorithm [40]. The displacement is then calculated from these coordinates.
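The role of the repeated captures in Step 3 and the displacement calculation in Step 4 can be illustrated with a minimal sketch. It assumes that the 3D coordinates of one target have already been recovered for every image of a burst; the function names and the coordinate values are hypothetical and are not taken from the authors' software.

```python
import math
from statistics import mean

def average_position(burst):
    """Average the (x, y, z) coordinates of one target over a burst of images."""
    xs, ys, zs = zip(*burst)
    return (mean(xs), mean(ys), mean(zs))

def displacement(p_before, p_after):
    """Euclidean displacement between two averaged 3D positions."""
    return math.dist(p_before, p_after)

# Hypothetical coordinates (mm) of one target, five images per acquisition.
epoch_0 = [(100.2, 50.1, 2000.3), (99.9, 49.8, 1999.8), (100.1, 50.0, 2000.1),
           (99.8, 50.2, 1999.9), (100.0, 49.9, 1999.9)]
# Second acquisition: the same target after a simulated shift of (3, 4, 0) mm.
epoch_1 = [(x + 3.0, y + 4.0, z) for (x, y, z) in epoch_0]

d = displacement(average_position(epoch_0), average_position(epoch_1))
print(round(d, 3))
```

Averaging within a burst before differencing between acquisitions is one simple way to suppress random error; other estimators, such as the median, would serve the same purpose.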

3. Accuracy Analysis of System Structure Parameters

3.1. System Monitoring Model

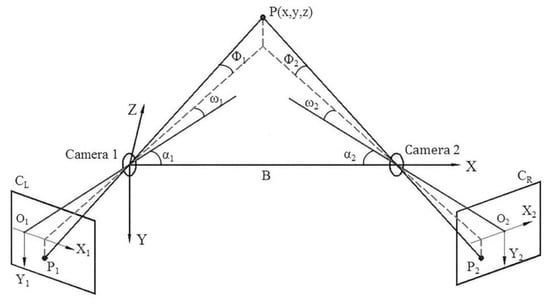

Figure 3 presents the space geometry model of the BVMS [41]. Each camera has its own imaging plane and image coordinate system, in which the image coordinates of the target point are expressed. The model is parameterized by the angles between the baseline and the optical axes of the left and right cameras, and by the focal length. The projection geometry of the target point on the left and right cameras is described by the horizontal and vertical field of view angles of each camera. If the two cameras are placed horizontally and the coordinate system of the left camera is taken as the reference coordinate system, the coordinates of the target point in the reference coordinate system can be calculated by Equation (1).

Figure 3. The monitoring model of binocular vision measurement.
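To make the geometry of such a model concrete, the following sketch triangulates a target from the two viewing directions. It is a standard angle-based triangulation under stated assumptions, not necessarily the exact form of Equation (1): the left camera is the origin, the x-axis runs along the baseline, and the names `triangulate`, `alpha`, `omega` and `phi1` are chosen here for illustration only.

```python
import math

def triangulate(B, alpha1, alpha2, omega1, omega2, phi1):
    """Locate a target from two viewing directions (angles in radians).

    Assumed convention (not taken from the paper): the left camera is the
    origin, x runs along the baseline toward the right camera, z is the
    horizontal distance from the baseline, and y is the height.
    alpha: angle between an optical axis and the baseline.
    omega: horizontal angle from the optical axis to the target ray.
    phi1:  elevation angle of the target ray seen from the left camera.
    """
    theta1 = alpha1 + omega1  # ray-to-baseline angle at the left camera
    theta2 = alpha2 + omega2  # ray-to-baseline angle at the right camera
    z = B / (1 / math.tan(theta1) + 1 / math.tan(theta2))
    x = z / math.tan(theta1)
    y = z * math.tan(phi1) / math.sin(theta1)
    return x, y, z

# Round-trip check with a hypothetical target and layout (metres).
target = (4.0, 1.5, 10.0)
B = 10.0
alpha = math.radians(60)
theta1 = math.atan2(target[2], target[0])
theta2 = math.atan2(target[2], B - target[0])
phi1 = math.atan2(target[1], math.hypot(target[0], target[2]))
recovered = triangulate(B, alpha, alpha, theta1 - alpha, theta2 - alpha, phi1)
print(recovered)
```

In this sketch the baseline, the two axis angles and the ray angles fully determine the recovered position, which is why errors in any of them propagate into the measured coordinates.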

Therefore, the position information of the target point in the world coordinate system depends on the system structure parameters, including the monitoring distance, the baseline distance, the angle between the optical axis and the baseline, and the focal length of the camera. Equation (1) can be expressed as a vector function of these parameters, as shown in Equation (2).

According to the error analysis theory [42], the position error of the monitored target point in the world coordinate system is given by Equation (3), in which each term is the error generated by one influencing parameter, taken over the structure parameters listed above. By synthesizing the errors in the three coordinate directions, the comprehensive monitoring error of the position information is obtained as Equation (4). Introducing the error transfer function of each parameter, defined in Equation (6), Equation (4) can be rewritten as Equation (5).

According to Equations (1)–(6), the error transfer functions of the space coordinates of the target point are given by Equations (7)–(10).
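The idea of an error transfer function can be sketched numerically. The example below assumes first-order (linear) error propagation, sums the absolute per-parameter contributions on each axis as a worst-case bound, and combines the three axes by root-sum-square; these combination rules, the function `propagate` and the stand-in `toy_model` are illustrative assumptions rather than the closed-form Equations (3)–(10).

```python
import math

def propagate(func, params, deltas, h=1e-6):
    """First-order error of func(**params) for per-parameter errors `deltas`."""
    base = func(**params)
    per_axis = [0.0] * len(base)
    for name, delta in deltas.items():
        step = h * max(1.0, abs(params[name]))
        hi = func(**{**params, name: params[name] + step})
        lo = func(**{**params, name: params[name] - step})
        for k in range(len(base)):
            # Numerical partial derivative: the transfer coefficient of `name`.
            transfer = (hi[k] - lo[k]) / (2 * step)
            per_axis[k] += abs(transfer * delta)
    # Combine the x, y and z errors into one comprehensive error.
    total = math.sqrt(sum(e * e for e in per_axis))
    return per_axis, total

def toy_model(B, D):
    """Hypothetical stand-in for the coordinate function; returns (x, y, z)."""
    return (0.5 * B, 0.0, D)

per_axis, total = propagate(toy_model, {"B": 10.0, "D": 20.0},
                            {"B": 0.02, "D": 0.03})
print(per_axis, total)
```

Replacing `toy_model` with an actual coordinate function gives a quick numerical cross-check of analytically derived transfer functions.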

3.2. Model Verification

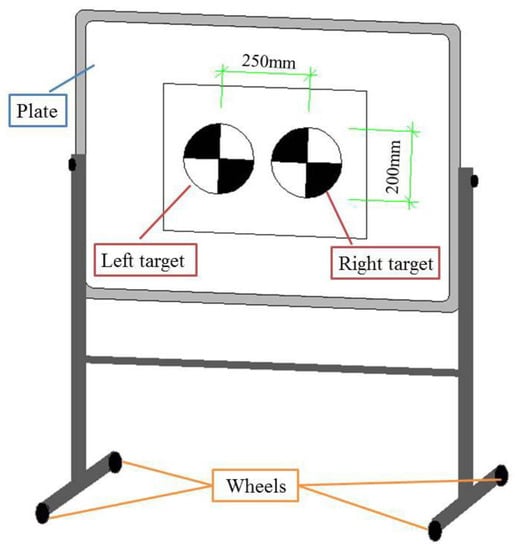

A simulation test of slope slide was designed to verify the feasibility of the proposed system and further to analyze the influence of the structure parameters described above on the binocular system monitoring.

We used the laser rangefinder-camera station described above, with a Nikon D3100 digital camera with a resolution of 4608 × 3072 (14.2 million pixels). The homemade calibration board is a chessboard with 14 × 13 blocks, where each block is 30 mm × 30 mm. Because moving a single target by hand could introduce displacement errors, we printed two identical targets with a diameter of 200 mm on A2 paper to simulate displacement deformation. Their center distance is 250 mm, that is, the true value of the displacement is 250 mm. To facilitate movement, we pasted the targets on the removable platform, as shown in Figure 4. During the tests, the camera was recalibrated each time the structure parameters were changed. At each position, five images of the targets were collected in succession to calculate the distance between the two targets.

Figure 4.

Diagram of removable platform of sliding deformation simulation test.

Center positioning was performed on the targets in each image to obtain their pixel coordinates. The calibrated camera parameters were then used to convert these into three-dimensional space coordinates. The center distance of the two targets was calculated according to Equation (11). For each combination of structure parameters, there are five measured displacement values, as shown in Figure 5.

In Equation (11), the measured displacement value is computed from the three-dimensional coordinates of the centers of the left and right targets in the same image.
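As a sketch, assuming that Equation (11) is the ordinary Euclidean distance between the two target centers, it can be written as follows. The symbols are chosen here for illustration: $d$ is the measured displacement, and $(x_l, y_l, z_l)$ and $(x_r, y_r, z_r)$ are the three-dimensional coordinates of the left and right target centers.

```latex
d = \sqrt{(x_l - x_r)^2 + (y_l - y_r)^2 + (z_l - z_r)^2}
```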

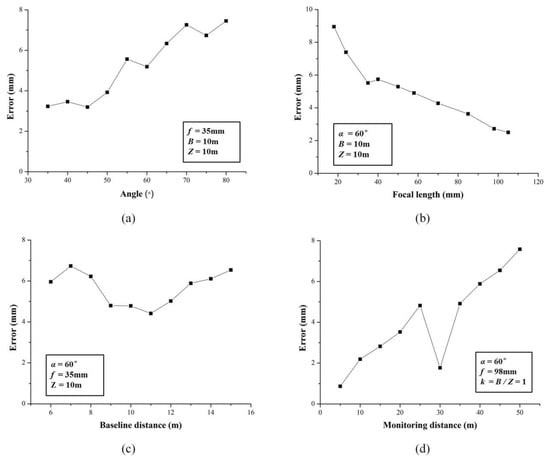

Figure 5. The total displacements measured by the binocular vision measurement system with different structure parameters: (a) the angle between optical axis and baseline; (b) focal length; (c) baseline distance; (d) monitoring distance.

Ideally, the five points corresponding to the same abscissa should coincide, that is, the displacement values measured at the same position should be the same. However, Figure 5 shows that some groups of data deviated considerably. This was attributed to other effects during the monitoring process, such as imperfect instrument structures and improper camera shutter operation. Therefore, in the subsequent analysis of the error distribution curve, we discarded the images whose data showed larger relative errors. Finally, Equation (12) was used to calculate the root mean square error (RMSE) of each group of data to evaluate the deviation between the observed value and the true value, and the error distribution curve was obtained as shown in Figure 6.

In Equation (12), the deviations are taken from the true displacement value and averaged over the number of valid measurements in each group.
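The filtering and RMSE evaluation can be sketched as follows. The 250 mm true displacement comes from the test setup described earlier, whereas the measured values and the 2% relative-error threshold are hypothetical, since the rejection criterion is not stated numerically in the text.

```python
import math

def filter_by_relative_error(measured, true_value, max_rel_error):
    """Keep only measurements whose relative error is within the threshold."""
    return [m for m in measured
            if abs(m - true_value) / true_value <= max_rel_error]

def rmse(measured, true_value):
    """Root mean square error of the valid measurements against the true value."""
    return math.sqrt(sum((m - true_value) ** 2 for m in measured) / len(measured))

TRUE_DISPLACEMENT = 250.0  # mm, center distance of the two printed targets

# Hypothetical displacements (mm) from five images at one position.
measured = [250.8, 249.5, 251.2, 262.0, 249.9]

valid = filter_by_relative_error(measured, TRUE_DISPLACEMENT, max_rel_error=0.02)
error = rmse(valid, TRUE_DISPLACEMENT)
print(len(valid), round(error, 3))
```

The RMSE is computed over the valid measurements only, so the sample size shrinks whenever an image is rejected.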

Figure 6. Distribution curve of displacement errors under different structure parameters: (a) the angle between optical axis and baseline; (b) focal length; (c) baseline distance; (d) monitoring distance.

Figure 6a shows how the error varies with the angle between the optical axis and the baseline. Between 35° and 80°, the error generally increased with the angle, and it reached a minimum at 45°. The proposed BVMS can therefore be considered more reliable for monitoring when the angle is less than 50°. Figure 6b shows that the monitoring error decreased as the focal length increased. As the focal length increased, the field of view narrowed, so the image contained relatively little interfering information. Therefore, a camera lens with as large a focal length as possible should be used in practical applications. The third group of tests focused on the effect of the baseline distance on the error. In the BVMS, the baseline distance is an important parameter and is often considered in conjunction with the monitoring distance. As shown in Figure 6c, the error first decreased and then increased. When the ratio of the baseline distance to the monitoring distance was between 0.9 and 1.2, the error was small and the result was acceptable. Figure 6d illustrates the relationship between the monitoring distance and the error: as the monitoring distance increased, the error also increased. Although the measured displacement error may exceed 8 mm when the monitoring distance exceeds 50 m, we expect that the development of vision sensors will extend the usable monitoring distance of the BVMS and enable remote, high-precision monitoring.
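The selection guidance in the paragraph above can be expressed as a simple configuration check. This is a minimal sketch: the function name and messages are hypothetical, and the thresholds are simply the values quoted in the text (angle below 50°, baseline-to-distance ratio between 0.9 and 1.2, possible error above 8 mm beyond 50 m), not a definitive rule set.

```python
def check_structure_parameters(angle_deg, baseline_m, distance_m):
    """Return a list of warnings for a candidate BVMS layout."""
    warnings = []
    if angle_deg >= 50:
        warnings.append("angle between optical axis and baseline is not below 50 degrees")
    ratio = baseline_m / distance_m
    if not 0.9 <= ratio <= 1.2:
        warnings.append(f"baseline/distance ratio {ratio:.2f} is outside 0.9-1.2")
    if distance_m > 50:
        warnings.append("monitoring distance exceeds 50 m; error may exceed 8 mm")
    return warnings

# Hypothetical layouts: one within the quoted ranges, one outside all of them.
print(check_structure_parameters(angle_deg=45, baseline_m=20, distance_m=20))
print(check_structure_parameters(angle_deg=60, baseline_m=5, distance_m=55))
```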

3.3. Sensitivity Analysis and Optimization Design

Different system structure parameters influence the binocular measurement results to different degrees. If the sensitivity of each parameter can be determined, the high-sensitivity parameters can be controlled first, and the monitoring accuracy can be improved quickly and effectively.

For the sensitivity analysis, the parameter combination set should be established first, as listed in Table 1. The value range of each parameter was determined according to the previous analysis and the tests. In addition, since the structure parameters have different physical units, their sensitivities cannot be ranked directly, so the sensitivity factor of each parameter was normalized to a dimensionless form [43], as shown in Equation (13).

In Equation (13), the normalized sensitivity factor of each evaluated parameter is obtained from a reference value of the system accuracy and from the maximum and minimum values of the corresponding accuracy within the value range of that parameter.
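One plausible reading of this normalization, offered only as an assumption since Equation (13) is not reproduced here, is the spread of the accuracy over the parameter range divided by the reference accuracy. The sketch below uses that reading; the function name and all numerical values are hypothetical.

```python
def normalized_sensitivity(accuracy_values, reference):
    """Dimensionless sensitivity: accuracy spread over the range / reference value.

    Assumed form, not necessarily the paper's Equation (13).
    """
    return (max(accuracy_values) - min(accuracy_values)) / reference

# Hypothetical errors (mm) obtained while sweeping each parameter over its range.
errors_by_parameter = {
    "monitoring distance": [2.0, 4.0, 8.0],
    "baseline distance": [3.0, 2.5, 3.5],
}
reference_error = 4.0  # hypothetical reference accuracy (mm)

ranking = sorted(
    errors_by_parameter,
    key=lambda name: normalized_sensitivity(errors_by_parameter[name], reference_error),
    reverse=True,
)
print(ranking)
```

Because every factor is divided by the same reference value, parameters with different physical units can be ranked on one scale.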

Table 1. The structure parameters combination set for sensitivity analysis.

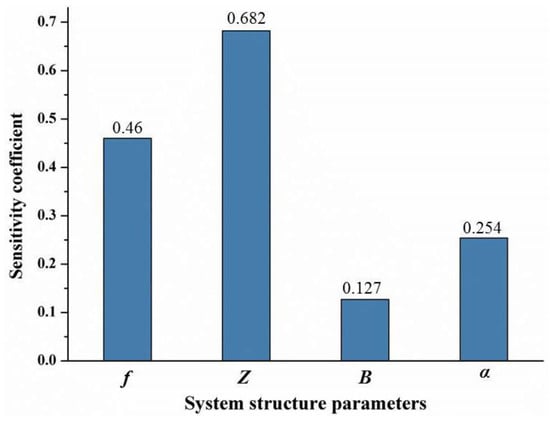

According to the error synthesis theory [42], the total error is equal to the sum of the errors caused by each parameter. The error range caused by the baseline distance and by the angle between the optical axis and the baseline can be deduced from Equations (7)–(10). The focal length and the monitoring distance affect the system accuracy by changing the actual physical size represented by one pixel, and the resulting error can be calculated by Equation (14) [44]. Finally, the sensitivity coefficient of each parameter was obtained, as shown in Figure 7.

In Equation (14), the pixel size was set to 6.25 μm in our experiment.
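The dependence of the physical size of one pixel on focal length and monitoring distance can be illustrated with the pinhole similar-triangle relation. This is a generic sketch and not necessarily the exact form of Equation (14); the 6.25 μm pixel size is taken from the text, while the 50 mm focal length and 50 m distance are hypothetical inputs.

```python
def pixel_footprint(pixel_size, focal_length, distance):
    """Object-space length covered by one pixel (all inputs in the same unit).

    Pinhole similar triangles: footprint / distance = pixel_size / focal_length.
    """
    return pixel_size * distance / focal_length

# 6.25 um pixel, hypothetical 50 mm lens, hypothetical 50 m monitoring distance.
footprint_mm = pixel_footprint(pixel_size=6.25e-3, focal_length=50.0,
                               distance=50_000.0)
print(footprint_mm)
```

Under this relation the footprint grows linearly with distance and shrinks with focal length, which is consistent with the trends reported for these two parameters.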

Figure 7. The sensitivity coefficient of the system structure parameters.

Figure 7 shows that the sensitivity coefficients of two parameters, the monitoring distance and the camera focal length, were significantly higher than those of the other parameters, which means that these two parameters had a larger influence on the total monitoring error. Therefore, when the BVMS is applied, these two parameters should be configured carefully according to the specific situation to reduce the monitoring error.

In order to facilitate the application of engineering monitoring, we designed the corresponding binocular system structure parameters combinations according to the former analysis, as listed in Table 2. In engineering applications, appropriate cameras and structure parameters combination can be selected according to specific precision requirements, monitoring scene and project budget.

Table 2. Recommendation of structure parameters combination using the binocular vision measurement system.

4. Accuracy Analysis of Environment Parameters

In vision-based slope monitoring, the main work is to process the acquired slope images. Landslides occur frequently in rain, fog and other adverse weather conditions, so the acquired images contain complex environmental interference. If this interference can be filtered out rapidly and effectively, object extraction, image matching and positioning become easier, which in turn improves the accuracy and efficiency of slope deformation monitoring.

4.1. The Influence of Each Parameter

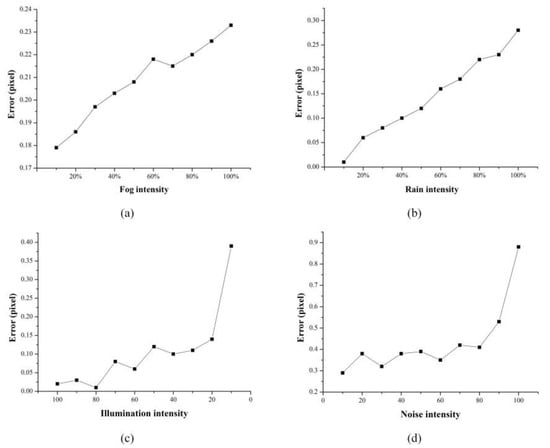

Simulation tests were designed in this section to study the influence of four common environmental factors on the image center positioning results. The interference of each environment parameter was simulated by adding filters, adjusting color levels, modifying layer modes and other basic operations in Photoshop. The center point positioning result of the disturbed image was compared with that of the original image, and the error distribution curve was obtained, as shown in Figure 8. The size of all images was 1095 × 956 pixels, and the central coordinate of the original image was (466.977, 565.527).

Figure 8. The influence of environment parameters on image center positioning results: (a) fog; (b) rain; (c) low illumination; (d) noise.
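How a disturbance shifts a positioning result can be shown with a toy example. The sketch below uses an intensity-weighted centroid, which is a simple stand-in and not the center positioning method used in the paper, and it models fog as a uniform brightness offset on a tiny synthetic image; all values are hypothetical.

```python
import math

def weighted_centroid(image):
    """Intensity-weighted centroid (column, row) of a grayscale image."""
    total = sum_x = sum_y = 0.0
    for row_idx, row in enumerate(image):
        for col_idx, value in enumerate(row):
            total += value
            sum_x += col_idx * value
            sum_y += row_idx * value
    return (sum_x / total, sum_y / total)

# Synthetic 5 x 5 image with a single bright target pixel at (1, 1).
clean = [[0.0] * 5 for _ in range(5)]
clean[1][1] = 10.0
# Uniform haze added to every pixel as a crude fog model.
foggy = [[value + 1.0 for value in row] for row in clean]

c_clean = weighted_centroid(clean)
c_foggy = weighted_centroid(foggy)
error_px = math.hypot(c_foggy[0] - c_clean[0], c_foggy[1] - c_clean[1])
print(c_clean, c_foggy, error_px)
```

In this toy case the haze pulls the centroid toward the image center, so the positioning error grows with the haze level relative to the target brightness.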

For the simulation of fog, we changed the fog intensity by adjusting the amount of fog filled into the image. As can be seen from Figure 8a, the denser the fog, the greater the image positioning error. In foggy environments, the fog and dust suspended in the air blur the scene, so the acquired image is significantly degraded and features such as the contrast and color of the target are attenuated, which reduces the accuracy of target positioning.

Similarly, rain of different intensities was simulated by changing the amount of rain filled into the image. Figure 8b shows that the error is positively correlated with the amount of rain. The impact of rain on the imaging quality was concentrated in three aspects. First, the raindrops partly blocked the target, making it difficult to locate the target accurately in the image. Second, the rain streaks caused errors in the detection of target edges. Third, the image became blurred, which reduced the robustness and adaptability of the vision monitoring system and hindered subsequent target positioning and calculation.

The simulation of low illumination was relatively simple; we just changed the brightness value of the image directly. Figure 8c shows that although the error distribution curve fluctuates, the general trend is that the error increases with the decrease of brightness. In the case of poor lighting conditions, such as dusk and dawn, the imaging quality was severely deteriorated, mainly due to the large dark area of the image, blurred content and loss of details. Therefore, it is difficult to meet the needs of monitoring systems.

Noise simulation was achieved by adding filters, and the intensity of the added noise could be set. Through a large number of experiments, we found that the effect of noise on image positioning was the greatest. Figure 8d illustrates the relationship between noise intensity and positioning error: the higher the noise intensity, the more unnecessary or redundant interference information in the image data. In the field of monitoring, the error caused by noise will be amplified in subsequent processing, and with the transmission of errors, the results will show a non-negligible error.

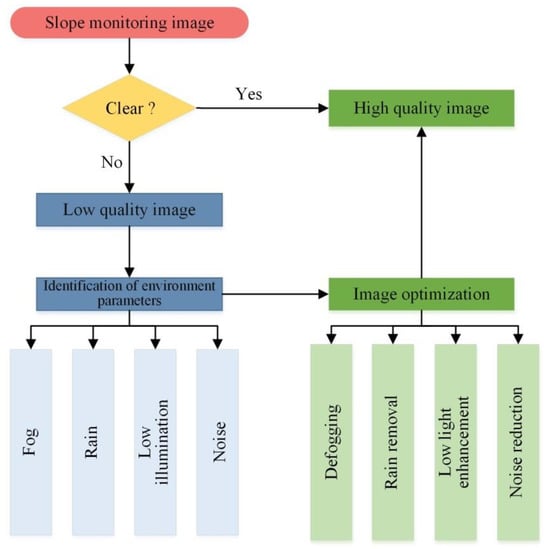

4.2. Optimization Design

From the previous analysis, it can be seen that the interference of environment parameters will cause deviations in image positioning. If the image cannot accurately reflect the actual situation of the slope measurement points, it is difficult to quantify the deformation. Therefore, this paper designed the corresponding image processing technology for the monitoring images under the above four types of environmental interferences to achieve image optimization (see Figure 9).

Figure 9. The process of slope monitoring image optimization.

- (1) Fog

Fog degrades the collected images, reduces the positioning accuracy, and can even cause target points to be lost. Therefore, the dark channel prior technology [45] was adopted to remove haze from the images. On this basis, guided filtering [36] was used to refine the transmittance, so as to achieve image defogging.

- (2) Rain

Rainfall decreases the image contrast and blurs the target. Based on deep learning [46], a neural network model [47] was employed to remove the rain streaks.

- (3) Low illumination

Insufficient light leaves large dark areas in the captured image, which makes it difficult to meet the requirements of subsequent processing. In this paper, the image main structure extraction technology [48] and a low-illumination image degradation model [49] were used to enhance low-light images.

- (4) Noise

Various factors introduce noise during image capture and transmission, and the resulting error is continuously amplified in the subsequent processing. The Block-Matching and 3D filtering (BM3D) algorithm [50] was adopted to reduce image noise. Based on the self-similarity of images, the algorithm evaluates the similarity between image blocks at different positions, so as to estimate and restore the original gray level of the image pixels.
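As an illustration of the defogging step in item (1), the sketch below computes the dark channel of an image and the coarse transmission estimate t = 1 − ω · dark(I / A) used by the dark channel prior. It is a minimal pure-Python sketch, not the authors' implementation: the guided-filter refinement is omitted, the atmospheric light A is assumed to be known, and the value ω = 0.95, the patch radius and the tiny test images are illustrative choices.

```python
def dark_channel(image, radius=1):
    """Dark channel: minimum over color channels, then over a local patch.

    `image` is a list of rows, each row a list of (r, g, b) tuples in [0, 1].
    """
    height, width = len(image), len(image[0])
    channel_min = [[min(pixel) for pixel in row] for row in image]
    dark = []
    for r in range(height):
        row_out = []
        for c in range(width):
            window = [channel_min[rr][cc]
                      for rr in range(max(0, r - radius), min(height, r + radius + 1))
                      for cc in range(max(0, c - radius), min(width, c + radius + 1))]
            row_out.append(min(window))
        dark.append(row_out)
    return dark

def estimate_transmission(image, atmospheric_light, omega=0.95, radius=1):
    """Coarse transmission map: t = 1 - omega * dark_channel(I / A)."""
    normalized = [[tuple(v / a for v, a in zip(pixel, atmospheric_light))
                   for pixel in row] for row in image]
    return [[1.0 - omega * d for d in row]
            for row in dark_channel(normalized, radius)]

# Uniformly hazy 3 x 3 image: every pixel is bright in all channels.
hazy = [[(0.8, 0.8, 0.8)] * 3 for _ in range(3)]
t_hazy = estimate_transmission(hazy, atmospheric_light=(1.0, 1.0, 1.0))

# Same image with one saturated red pixel: its patch has a zero dark channel.
clear = [row[:] for row in hazy]
clear[1][1] = (1.0, 0.0, 0.0)
t_clear = estimate_transmission(clear, atmospheric_light=(1.0, 1.0, 1.0))
print(t_hazy[1][1], t_clear[1][1])
```

A low transmission value marks a region dominated by haze, and the haze-free radiance is then recovered by inverting the atmospheric scattering model with this map.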

4.3. Performance Verification

This section describes two tests to verify the image optimization method proposed in this study.

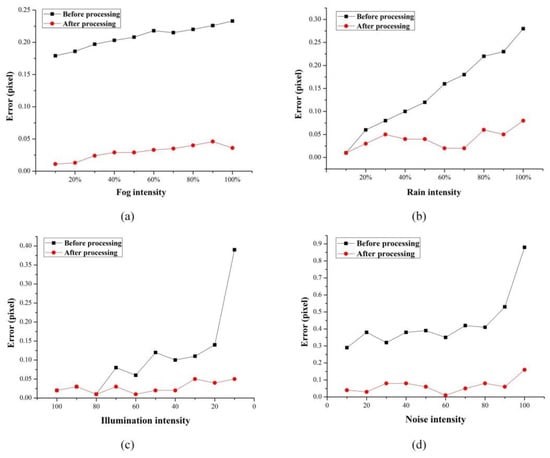

- (1) Interference of single environment parameter

In the previous study of the influence of each environment parameter on the center positioning results, images were obtained under each type of environmental interference. These images were processed with the corresponding optimization methods, and the center positioning results of the optimized images were compared with those of the original image to obtain the error distribution curve after processing, shown as the red curve in Figure 10. The black curve is the error distribution curve before processing.

Figure 10. Target center positioning errors under single environment parameter interference before and after processing: (a) fog; (b) rain; (c) low illumination; (d) noise.

Figure 10 shows that interference from each of the four environment parameters leads to positioning deviation. However, for fog, rain and low illumination, the maximum error of the positioning results before processing did not exceed 0.4 pixels, indicating that the proposed BVMS has good robustness. Although the error was relatively large when the noise intensity was high, the positioning accuracy was significantly improved after optimization. Overall, the maximum error of the optimized positioning results was 0.146 pixels, and only one group of errors exceeded 0.1 pixels. This shows that the designed image optimization methods can effectively reduce the influence of environmental parameters on the monitoring images.

- (2) Interference of combined environment parameters

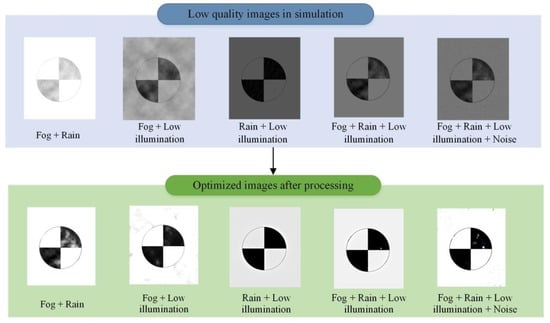

In practice, images are often affected by more than one type of environmental interference, so it is important to study the interference of multiple environment parameters. Five sets of simulation tests were designed for slope monitoring. Figure 11 shows the images before and after processing. Note that the interference intensity of a given environment parameter was the same across the different combinations, which was achieved by keeping the corresponding simulation parameters in Photoshop unchanged. Center point positioning was also performed on the images before and after optimization. The error results are listed in Table 3.

Figure 11. The simulated images before and after processing under the combined interference of multi-environment parameters.

Table 3. The positioning results of target center before and after image processing.

As shown in Figure 11, under the interference of combined environment parameters, the image quality was seriously degraded and the target area was easily blurred, which is likely to result in positioning failure. Judging from the positioning results before processing (see Table 3), in general, the more types of environmental interference the image contained, the greater the positioning error. During optimization, low illumination was the most challenging factor to handle. Different optimization parameters had to be tried, and the best-performing images were selected for subsequent analysis. In addition, by comparing a large number of test data, we found that different processing orders lead to different positioning results. Optimizing the factors that are easy to identify first, and then processing the factors that are difficult to discern, yields better positioning for images that could not otherwise be recognized. In short, the optimized positioning error was no more than 0.11 pixels, which indicates that the optimization methods proposed in this paper are well suited to processing slope-monitoring images.

5. Field Tests

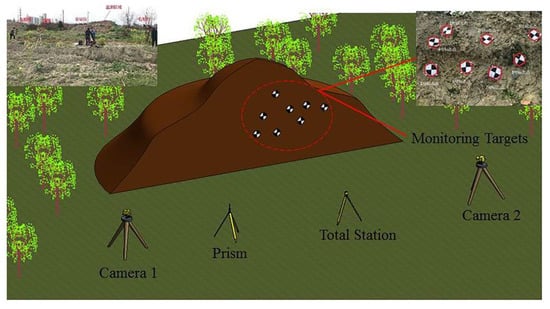

After optimizing the binocular measurement system and realizing the deformation monitoring of the target, we conducted further field tests. The deformation of the slope surface was simulated in the field, and the applicability and accuracy of the method proposed in this paper were verified by comparative analysis of the monitoring data.

In total, eight target points were set randomly on the slope surface, as shown in Figure 12. In order to verify the efficiency of the proposed BVMS, the surface deformation of the slope was simulated by artificially moving these points and compared with the measurement results of the total station. The combination of structure parameters is listed in Table 4. The influence of environment parameters was optimized before calculation.

Figure 12. General arrangement of field tests.

Table 4. The designed combination of structure parameters.

Comparing the measurement results of the total station and BVMS, the root mean square errors of the eight targets in the X, Y, and Z directions and the total deformation were obtained, as shown in Table 5.

Table 5. The displacement error results measured by binocular vision measurement system.

We can conclude from Table 5 that the BVMS and the method proposed in this paper can be well applied to structural deformation monitoring. The final measured error is within the range of the theoretical error, which also verifies the practicability of the proposed structure parameters combination recommendation table (see Table 2). Although the current results show that the monitoring distance is best kept within 60 m, the system can already be used for monitoring small slopes and small foundation pit projects. In addition, with the development of binocular vision technology, its application range and monitoring distance are expected to increase further.

6. Conclusions

In this paper, the factors influencing the accuracy of the BVMS in slope monitoring are analyzed systematically. The conclusions can be drawn as follows:

- (1) By combining the principle of binocular vision technology and the error analysis theory, the functional relationship between structure parameters and monitoring results was studied. Further laboratory tests were performed, and the error distribution curves under the influence of the angle between optical axis and baseline, focal length, baseline distance, and monitoring distance were obtained, respectively. Furthermore, the recommendation of structure parameters combination using the BVMS was also proposed.

- (2) Through the environmental interference simulation tests, the error distribution curves under the influence of fog, rain, low illumination and noise were obtained. On this basis, image optimization methods were designed to deal with the interference of these four environment parameters. They rely on modifying the pixel distribution of the image to improve the contrast of the target, so as to further improve the environmental adaptability of the proposed system.

- (3) Finally, the slope movement was simulated on site, and the deformation was monitored with the proposed method. The results not only verify the validity of the structure parameters combination recommendation table, but also reflect the application potential of the BVMS for cost-effective slope health monitoring. However, its application range and monitoring distance still need to be improved.

Author Contributions

The asterisk indicates the corresponding author, and the first two authors contributed equally to this work. The binocular vision measurement system is developed by Z.F. and Z.S. under the supervision of Q.H. and L.H. Theoretical analysis of system accuracy is carried out by Z.F. under the guidance of Q.H. Experiment planning, setup and measurement of laboratory and field tests are conducted by J.T., Y.B., Y.G., L.H., J.Z. and Q.C. provided valuable insight in preparing this manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Grant No. 51574201), Research and Innovation Team of Provincial Universities in Sichuan (18TD0014), and Excellent Youth Foundation of Sichuan Scientific Committee (2019JDJQ0037).

Conflicts of Interest

The authors declare no conflict of interest.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).