De-Skewing LiDAR Scan for Refinement of Local Mapping

Abstract

1. Introduction

2. Related Works

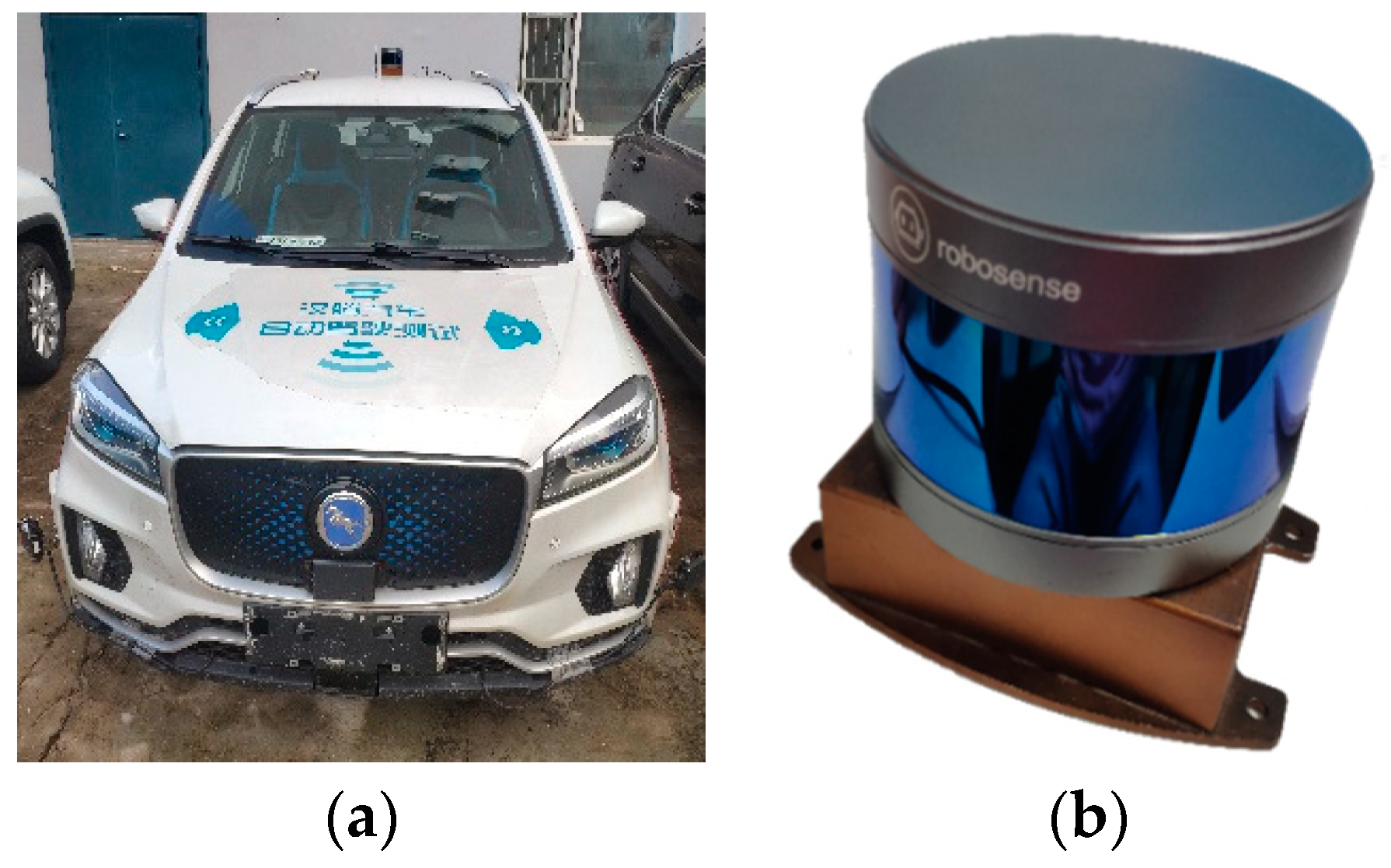

3. Methods

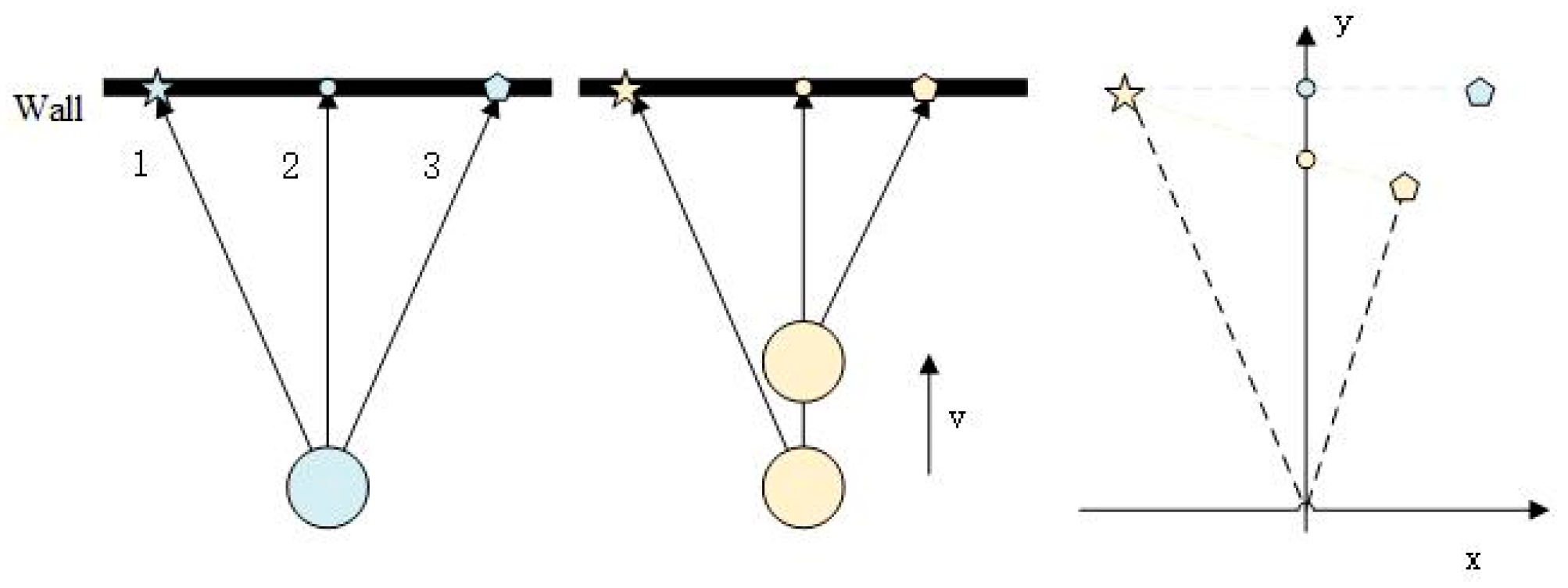

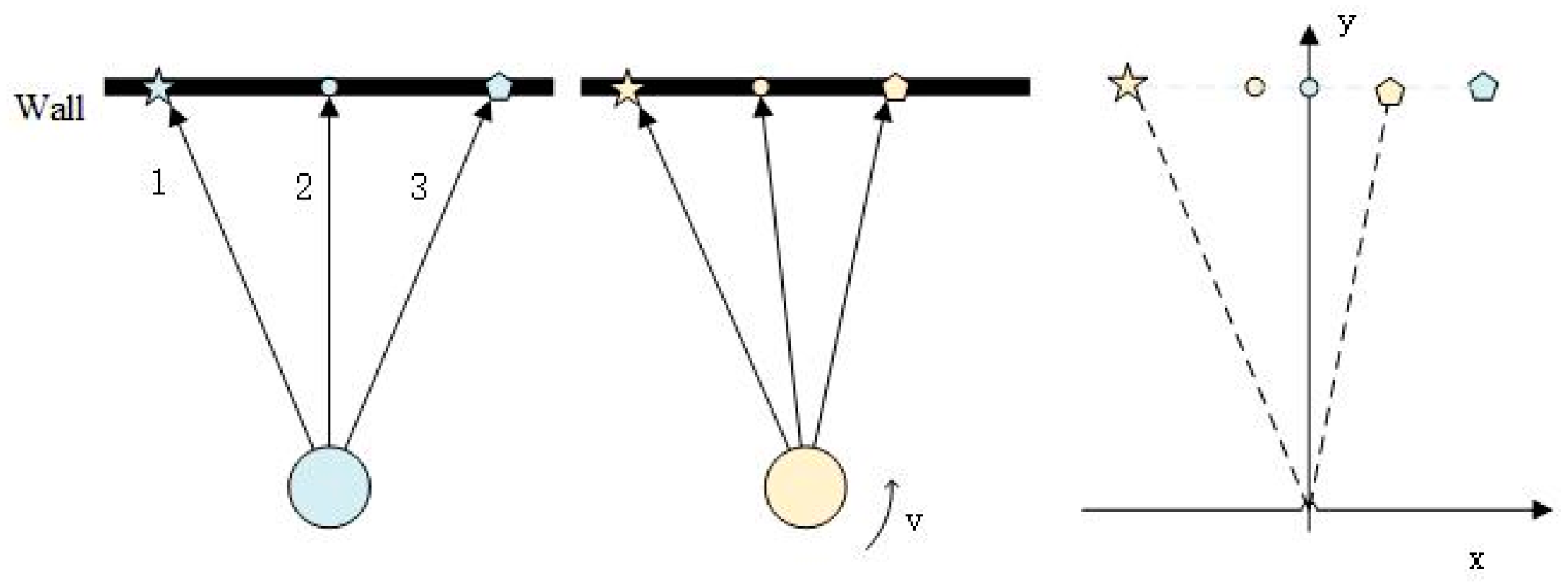

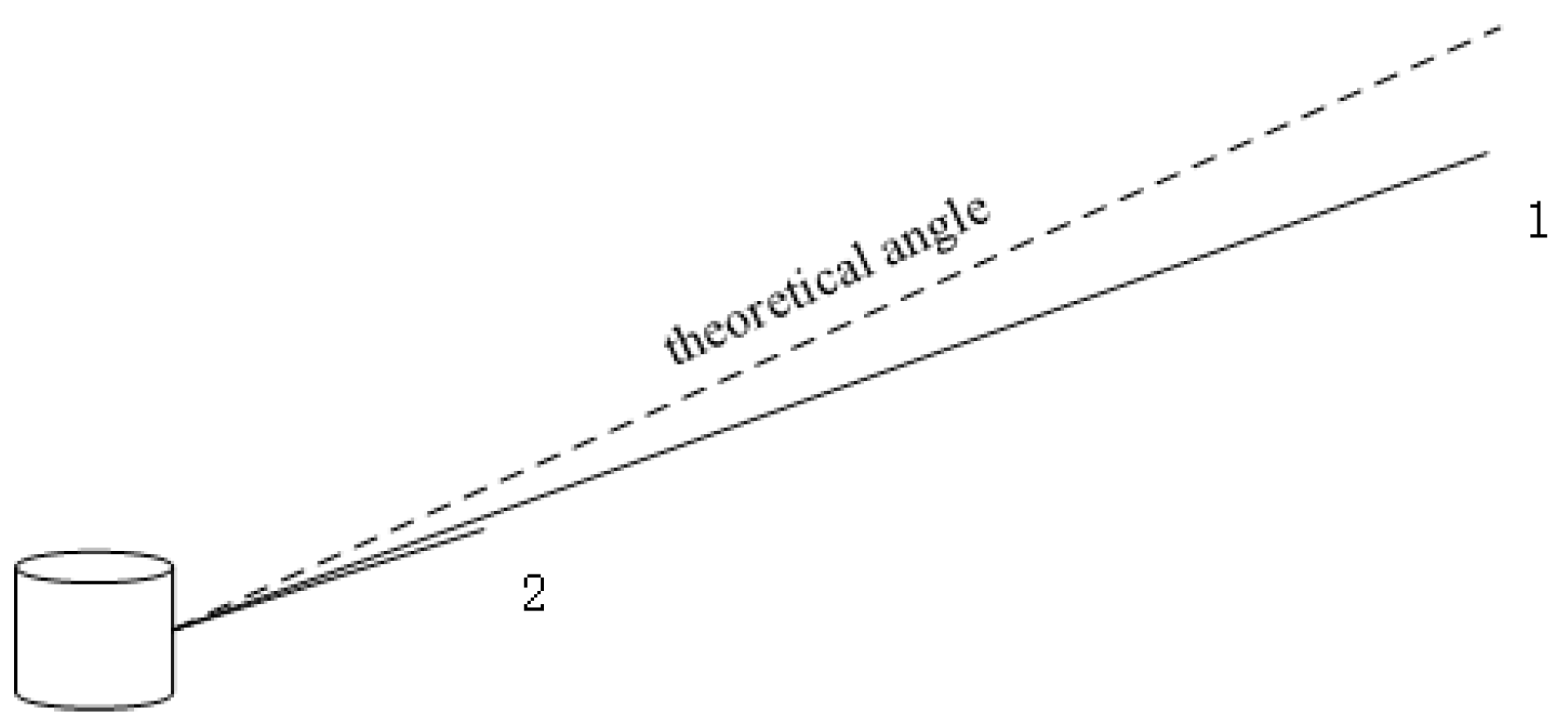

3.1. The Impact of LiDAR Motion on Skewing

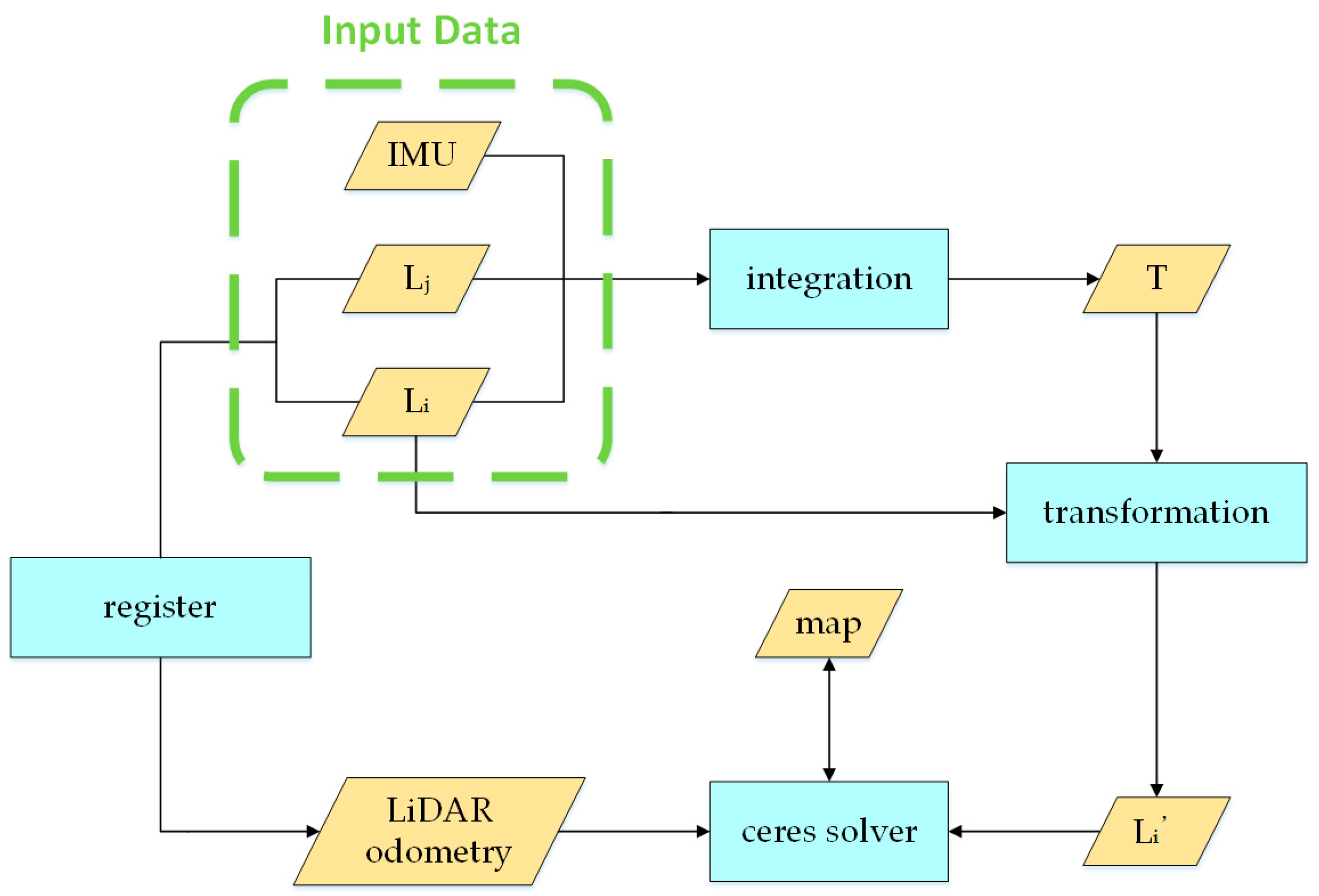

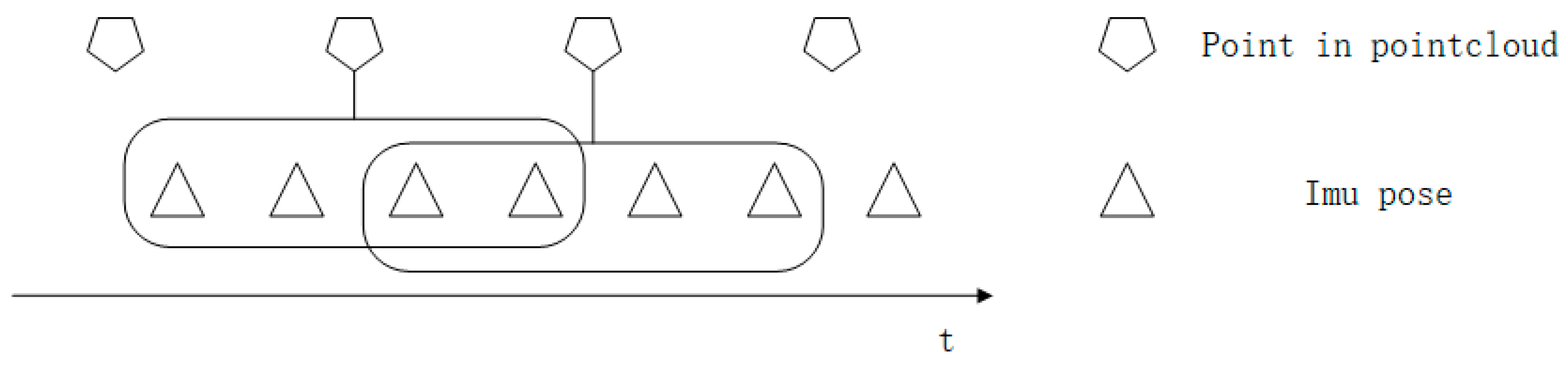

3.2. Overview of De-Skewing System

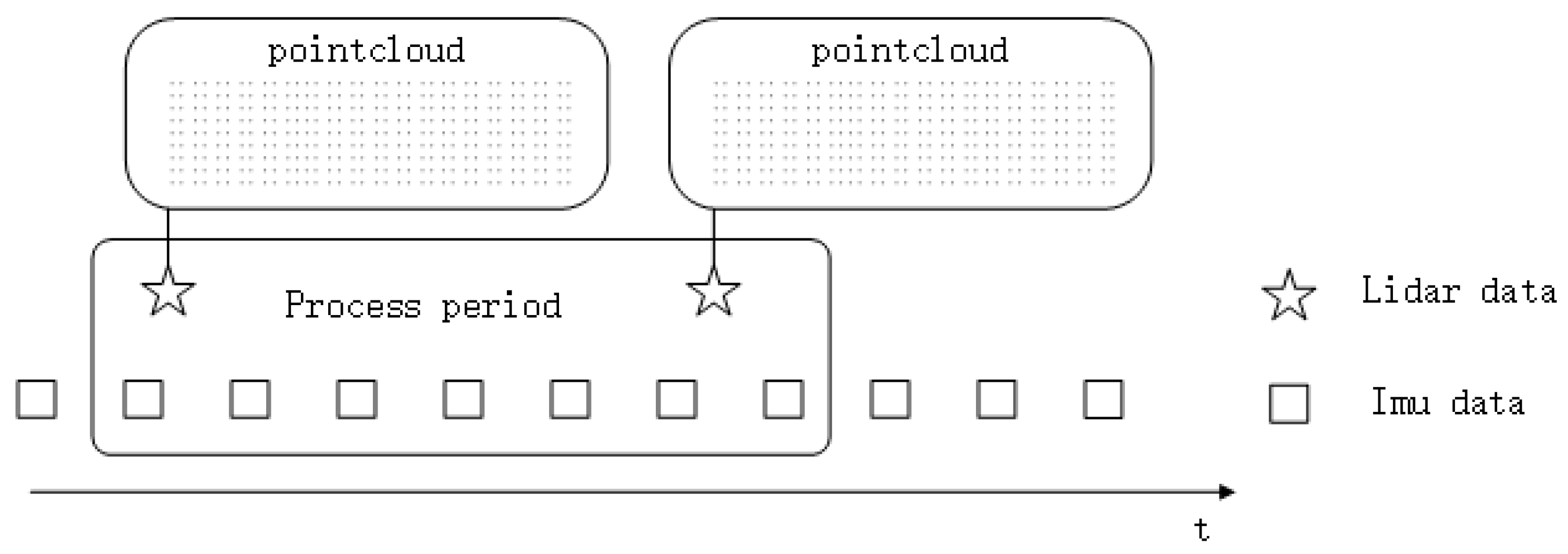

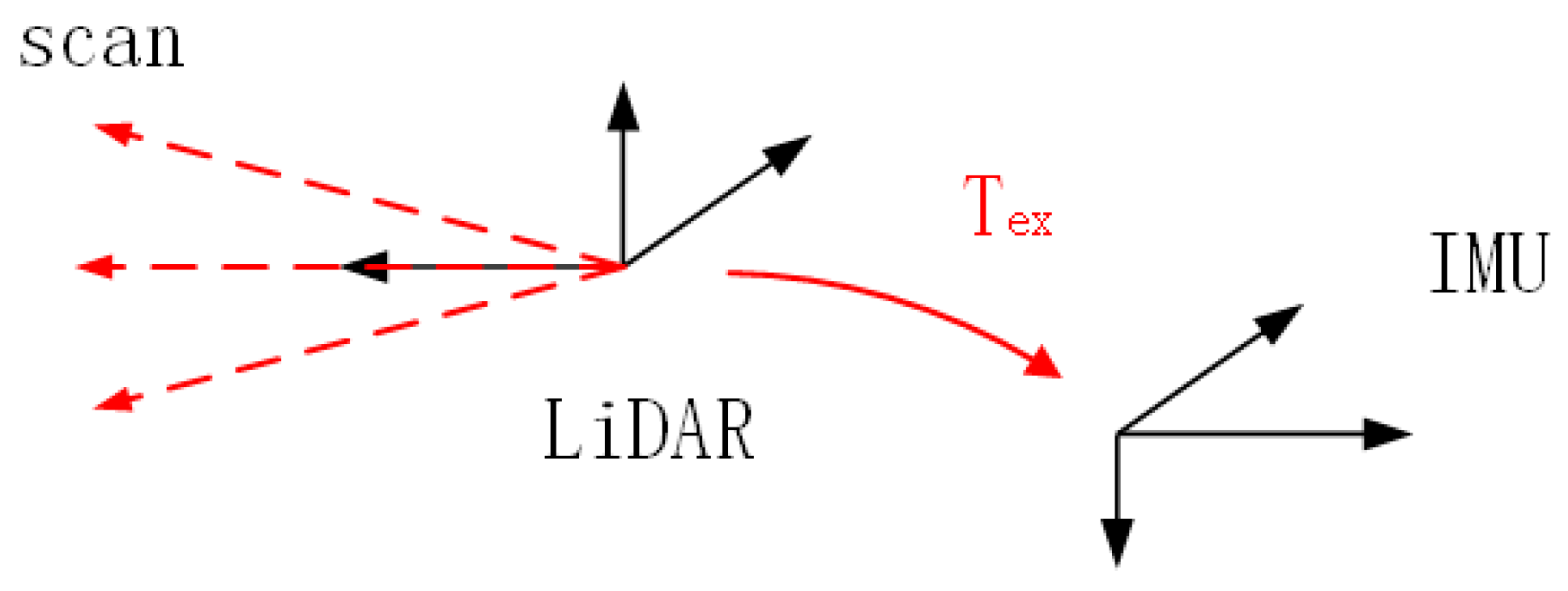

3.3. IMU Odometry and Coordinate Transformation

3.3.1. Location Modeling

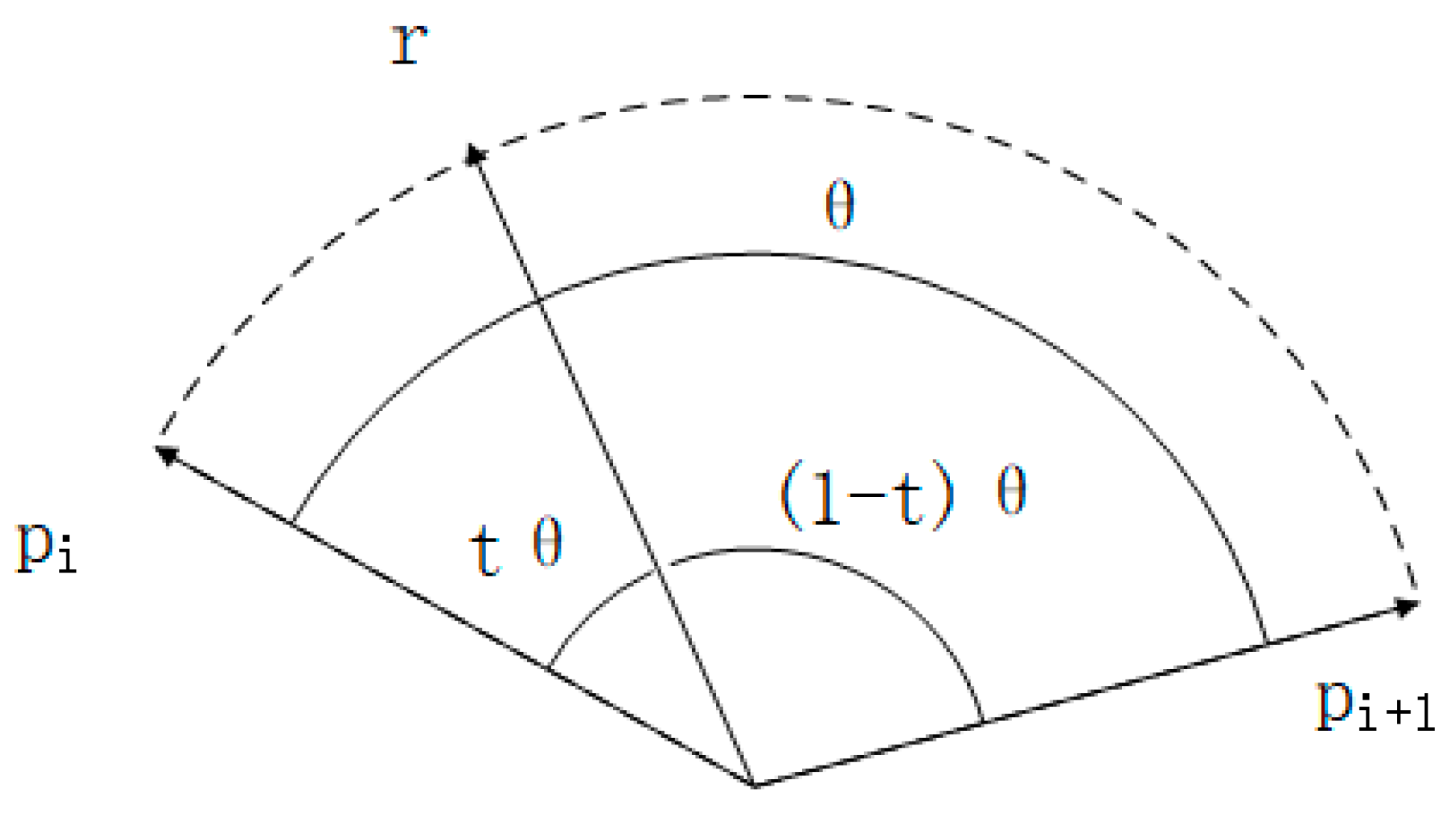

3.3.2. Rotation Modeling

3.3.3. Coordinate Transformation

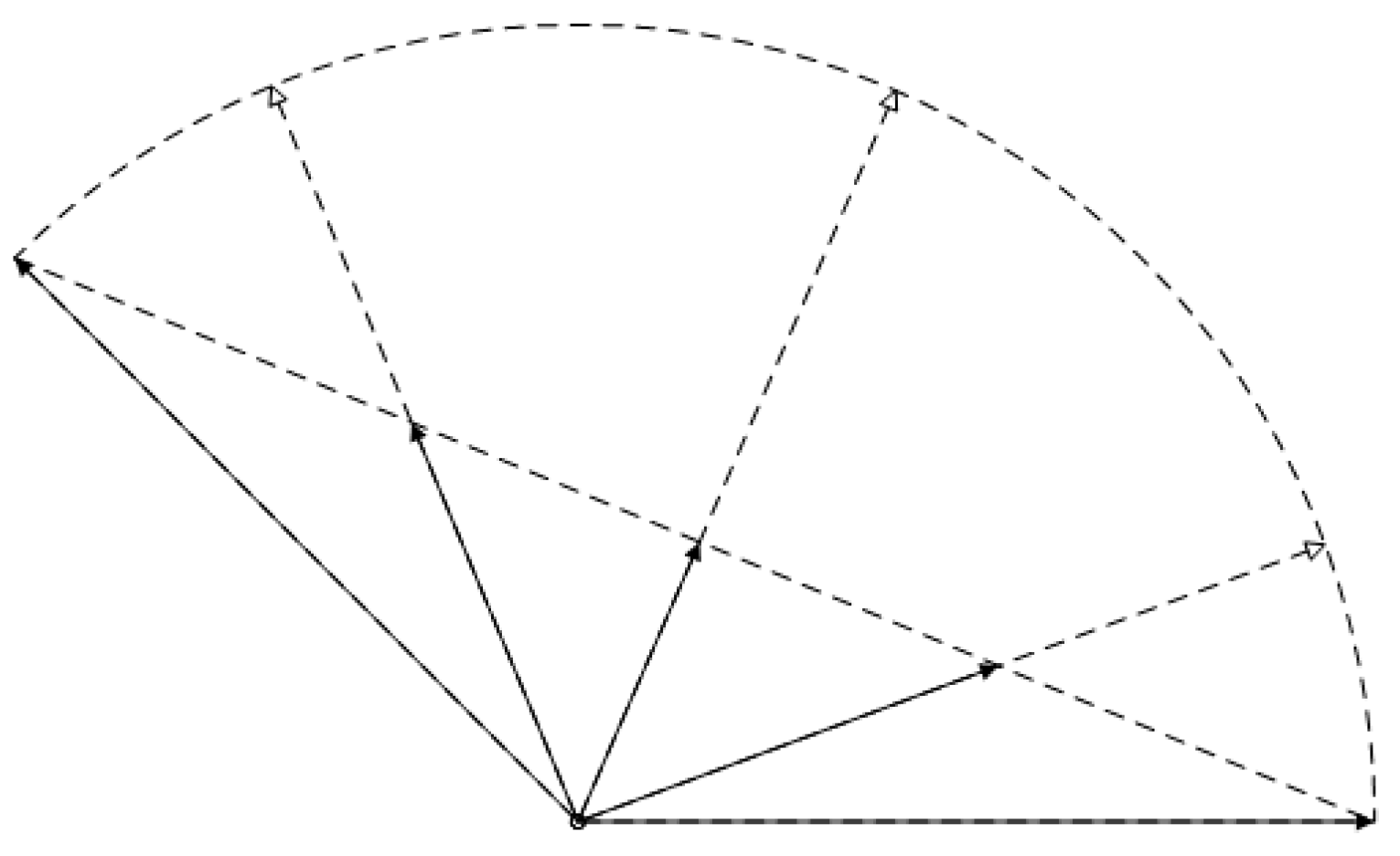

3.4. Point Cloud Registration and LiDAR Odometry

3.5. Ceres Solver Optimization

4. Results and Discussion

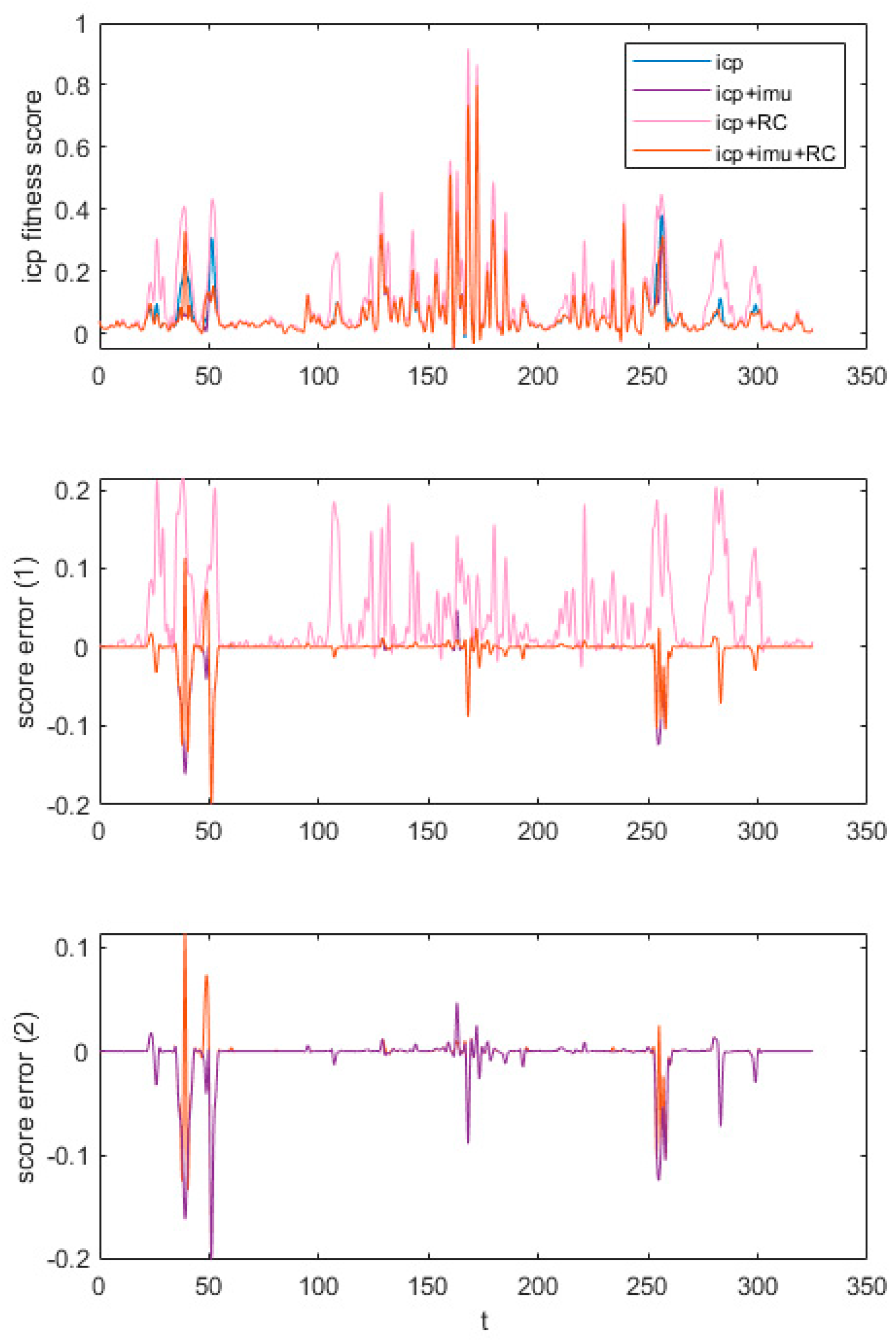

4.1. ICP Registration Comparison

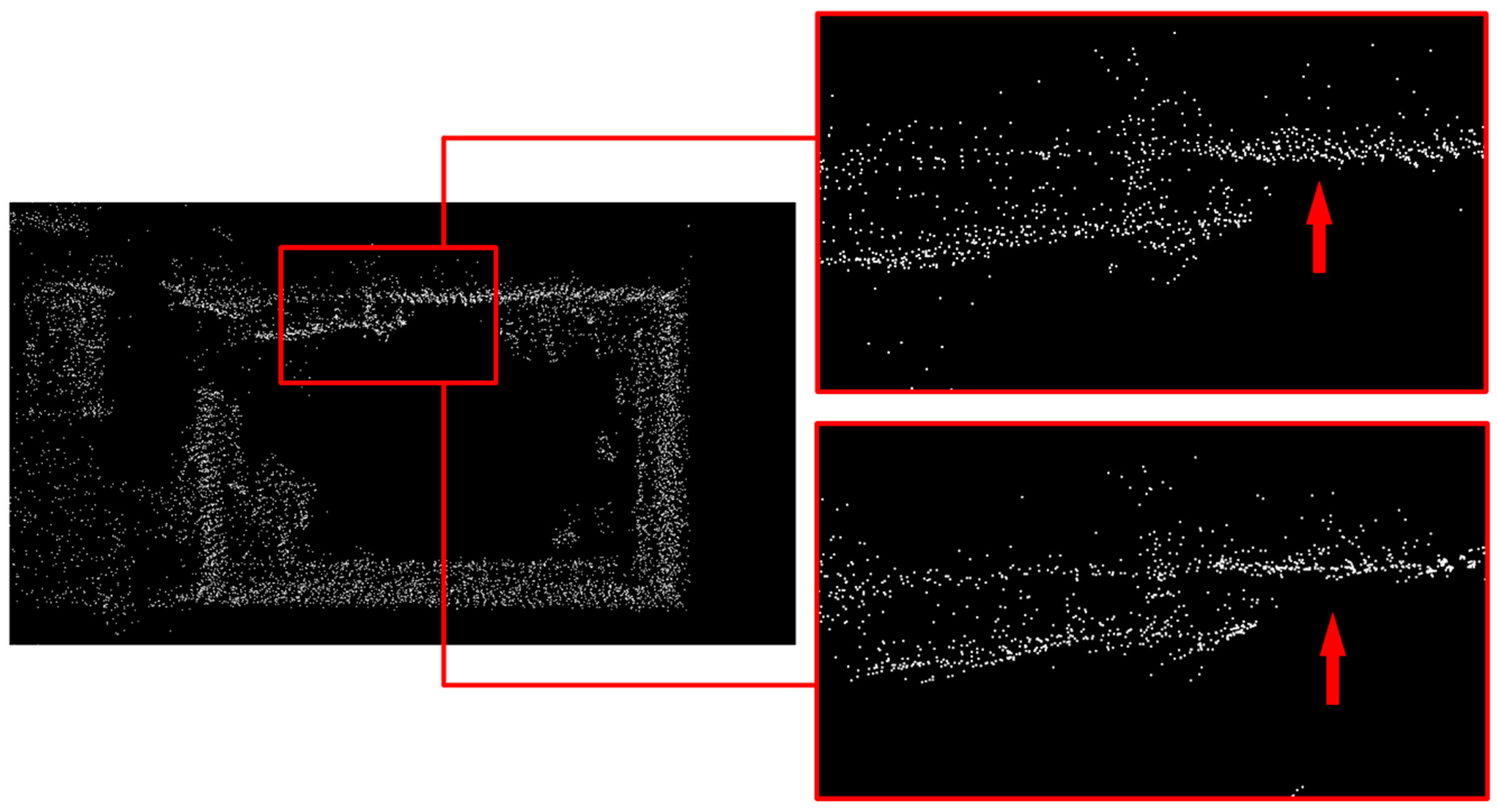

4.2. Indoor Experiments

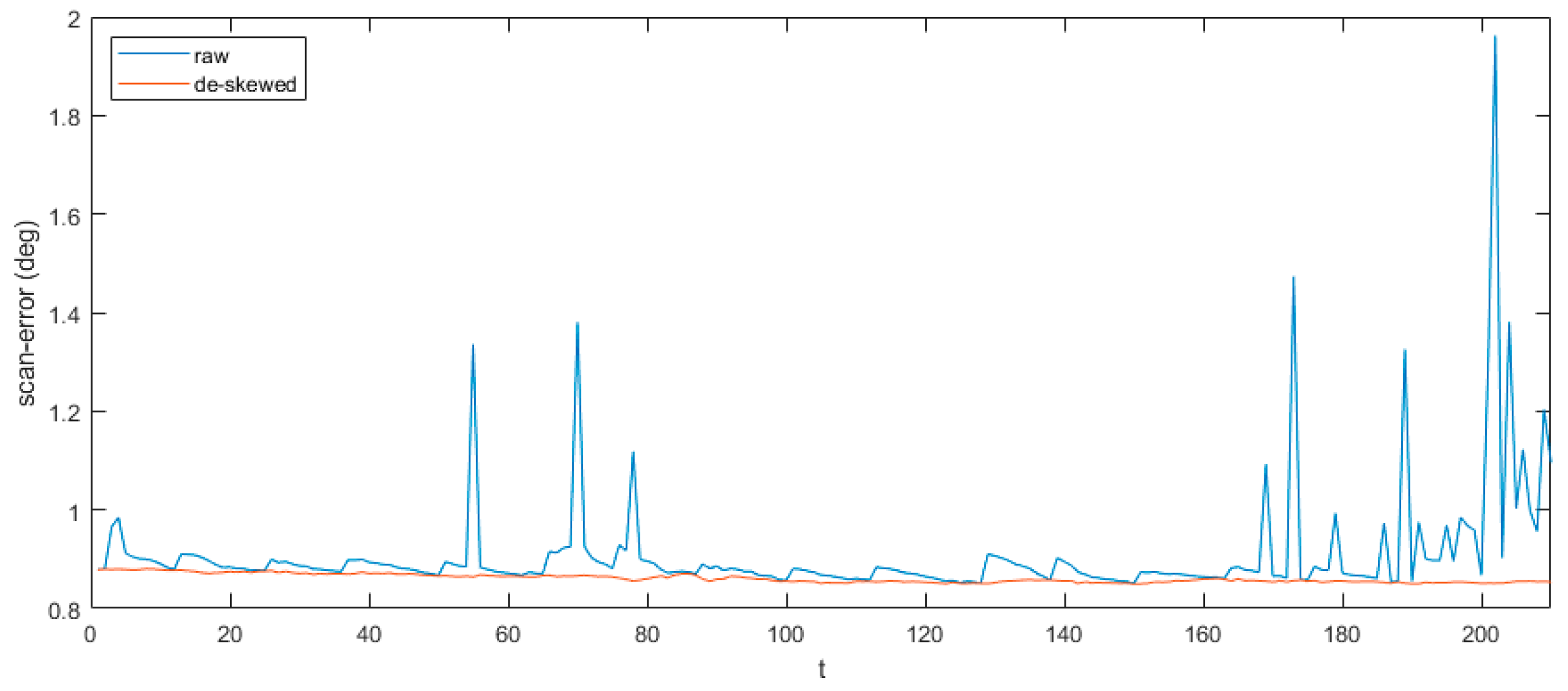

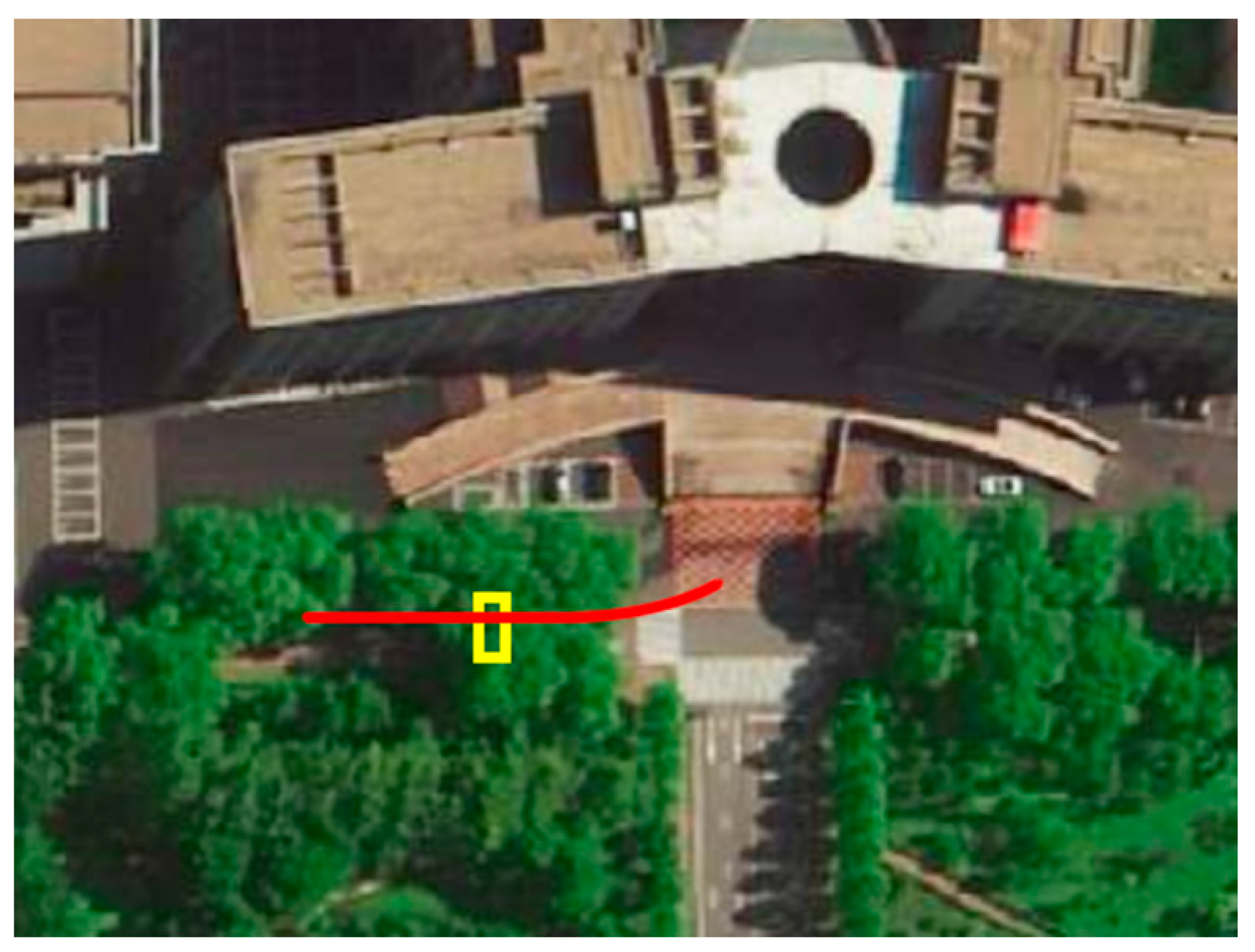

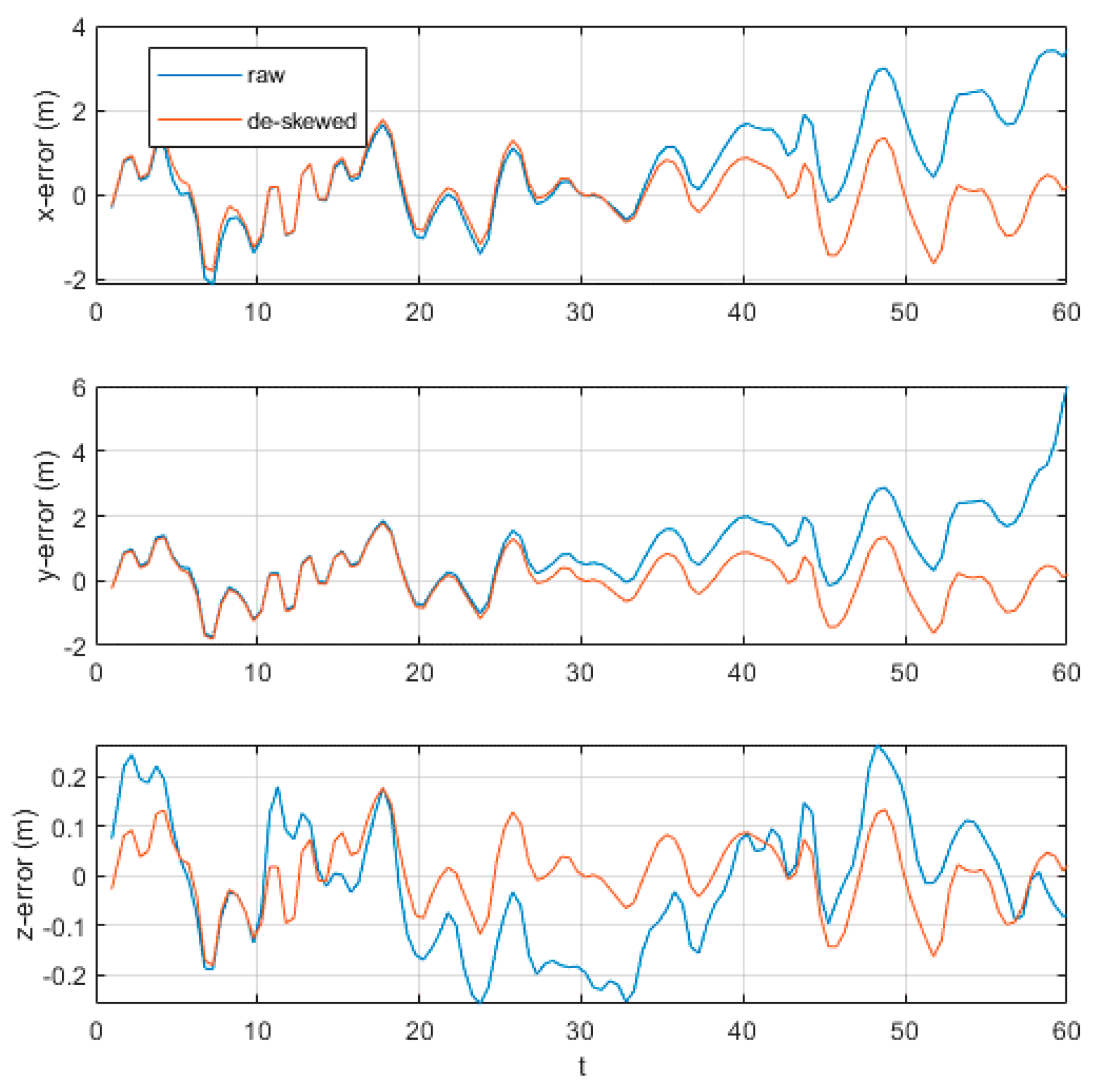

4.3. Outdoor Experiments

4.4. Discussion

5. Conclusion

Author Contributions

Funding

Conflicts of Interest


| Channel | RMSE (No De-Skewing) | RMSE (After De-Skewing) | Channel | RMSE (No De-Skewing) | RMSE (After De-Skewing) |
|---|---|---|---|---|---|
| 0 | 1.7895 | 1.5708 | 8 | 0.1405 | 0.0996 |
| 1 | 1.5165 | 1.3302 | 9 | 0.3232 | 0.2780 |
| 2 | 1.2540 | 1.1161 | 10 | 0.5124 | 0.4482 |
| 3 | 1.0288 | 0.9084 | 11 | 0.7154 | 0.6290 |
| 4 | 0.7912 | 0.6937 | 12 | 0.9312 | 0.8226 |
| 5 | 0.5679 | 0.4946 | 13 | 1.3025 | 1.1915 |
| 6 | 0.3453 | 0.2949 | 14 | 1.5685 | 1.4473 |
| 7 | 0.1411 | 0.1008 | 15 | 2.5504 | 2.3487 |

| Pose | RMSE (No De-Skewing) | RMSE (After De-Skewing) |
|---|---|---|
| X | 1.5750 | 0.9257 |
| Y | 1.9159 | 0.9645 |
| Z | 0.1436 | 0.0741 |
| Roll | 0.0096 | 0.0103 |
| Pitch | 0.1010 | 0.0895 |
| Yaw | 0.0935 | 0.0792 |

| Channel | RMSE (No De-Skewing) | RMSE (After De-Skewing) | MAX in deg (No De-Skewing) | MAX in deg (After De-Skewing) |
|---|---|---|---|---|
| 0 | 0.5201 | 0.3499 | 2.8023 | 0.358 |
| 1 | 0.1748 | 0.1591 | 0.3624 | 0.1643 |
| 2 | 0.1089 | 0.0959 | 0.2811 | 0.0984 |
| 3 | 0.0745 | 0.0658 | 0.1996 | 0.0688 |
| 4 | 0.0448 | 0.0374 | 0.1374 | 0.0395 |
| 5 | 0.0446 | 0.0398 | 0.1092 | 0.0441 |
| 6 | 0.0484 | 0.0458 | 0.089 | 0.0479 |
| 7 | 0.0086 | 0.0073 | 0.0274 | 0.0084 |
| 8 | 0.0099 | 0.0104 | 0.0115 | 0.011 |
| 9 | 0.0174 | 0.0130 | 0.0661 | 0.0145 |
| 10 | 0.0372 | 0.0308 | 0.122 | 0.037 |
| 11 | 0.0587 | 0.0500 | 0.1747 | 0.0573 |
| 12 | 0.0827 | 0.0720 | 0.2288 | 0.0840 |
| 13 | 0.1272 | 0.1146 | 0.2961 | 0.1315 |
| 14 | 0.1322 | 0.1166 | 0.3241 | 0.1391 |
| 15 | 0.1415 | 0.1233 | 0.3637 | 0.1451 |

| Channel | RMSE (No De-Skewing) | RMSE (After De-Skewing) | MAX in deg (No De-Skewing) | MAX in deg (After De-Skewing) |
|---|---|---|---|---|
| 0 | 1.3610 | 0.1255 | 6 | 0.1299 |
| 1 | 1.1805 | 0.1088 | 5.2 | 0.1112 |
| 2 | 0.9990 | 0.0921 | 4.4 | 0.0948 |
| 3 | 0.8176 | 0.0753 | 3.6 | 0.0775 |
| 4 | 0.6377 | 0.0586 | 2.8 | 0.0643 |
| 5 | 0.4590 | 0.0419 | 2 | 0.0513 |
| 6 | 0.2838 | 0.0255 | 1.2 | 0.0299 |
| 7 | 0.1020 | 0.0093 | 0.4324 | 0.0134 |
| 8 | 0.1413 | 0.0112 | 0.8889 | 0.0169 |
| 9 | 0.4551 | 0.0348 | 2.1818 | 0.048 |
| 10 | 0.5982 | 0.0516 | 3.2 | 0.0667 |
| 11 | 0.8019 | 0.0718 | 2.9474 | 0.0913 |
| 12 | 1.0547 | 0.0940 | 4.1143 | 0.1137 |
| 13 | 1.2791 | 0.1179 | 5.5 | 0.147 |
| 14 | 1.5278 | 0.1449 | 6.1176 | 0.2023 |
| 15 | 1.8948 | 0.1764 | 7.5 | 0.2572 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

He, L.; Jin, Z.; Gao, Z. De-Skewing LiDAR Scan for Refinement of Local Mapping. Sensors 2020, 20, 1846. https://doi.org/10.3390/s20071846