Heterogeneous Iris One-to-One Certification with Universal Sensors Based On Quality Fuzzy Inference and Multi-Feature Fusion Lightweight Neural Network

Abstract

1. Introduction

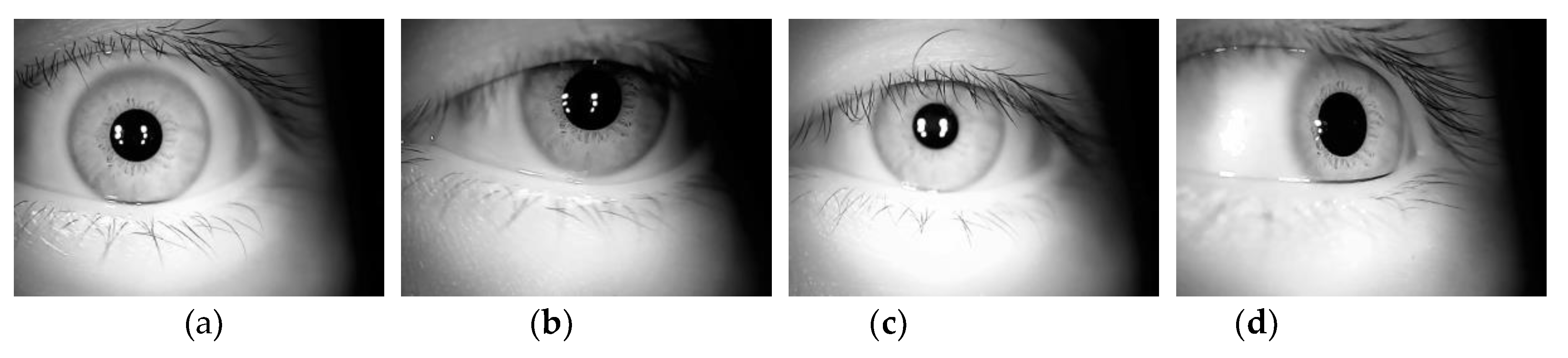

- The iris acquisition status and the acquisition environment change unpredictably, which causes defocus, deflection, shadowing, and similar problems. The captured images are 640 × 480 pixels;

- The iris data are lightweight in scale (each iris library contains dozens of categories, and each category contains only a few thousand images);

- To ensure the accuracy of lightweight certification, test subjects may repeat the acquisition several times, so a moderately higher false rejection rate is acceptable;

- The number of training irises can reach several thousand, but the training irises are not classified by type (degree of defocus, illumination, strabismus effect, etc.); they are mixed directly and form a mixed data set;

- The test subject is a living person.

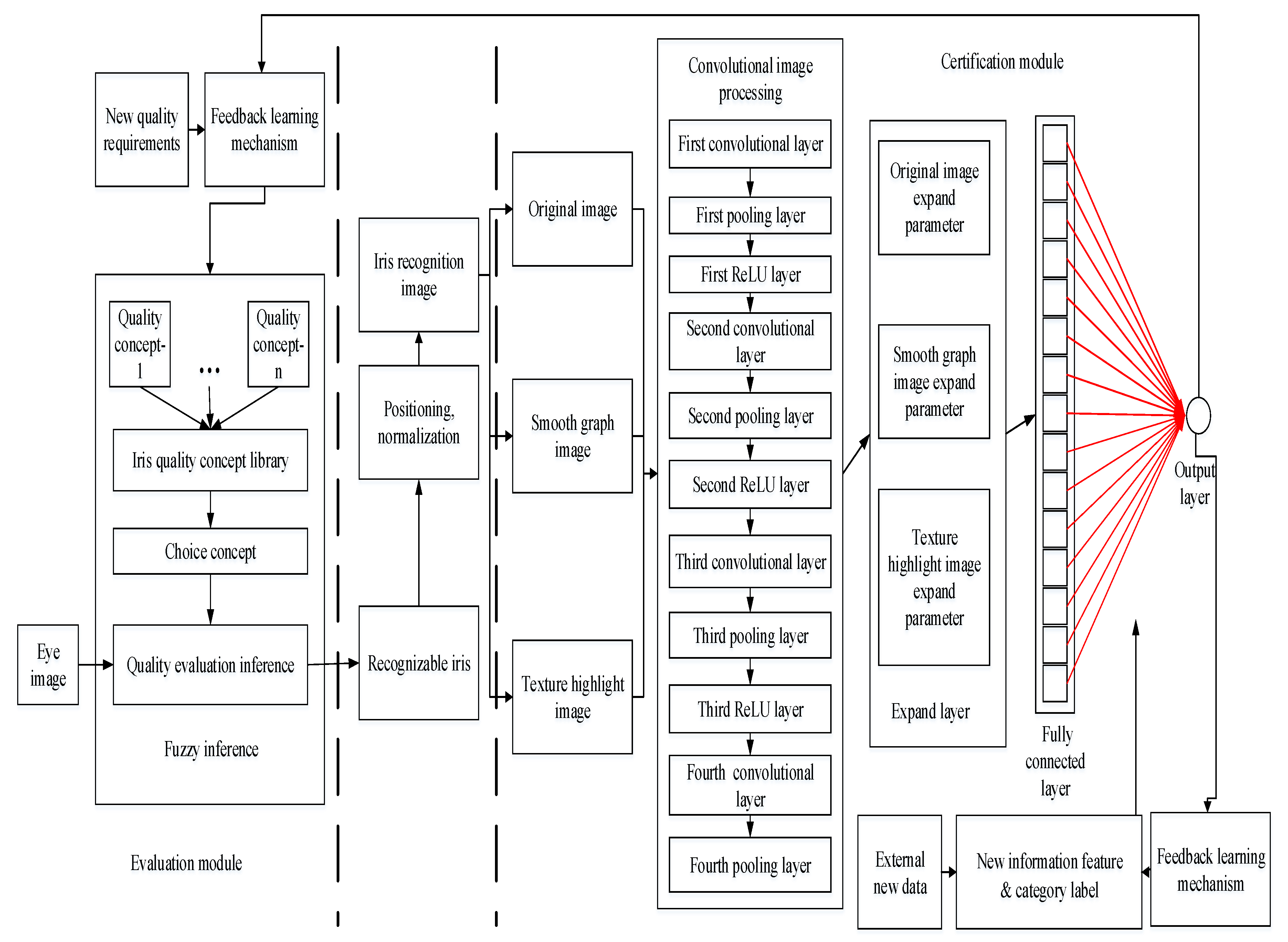

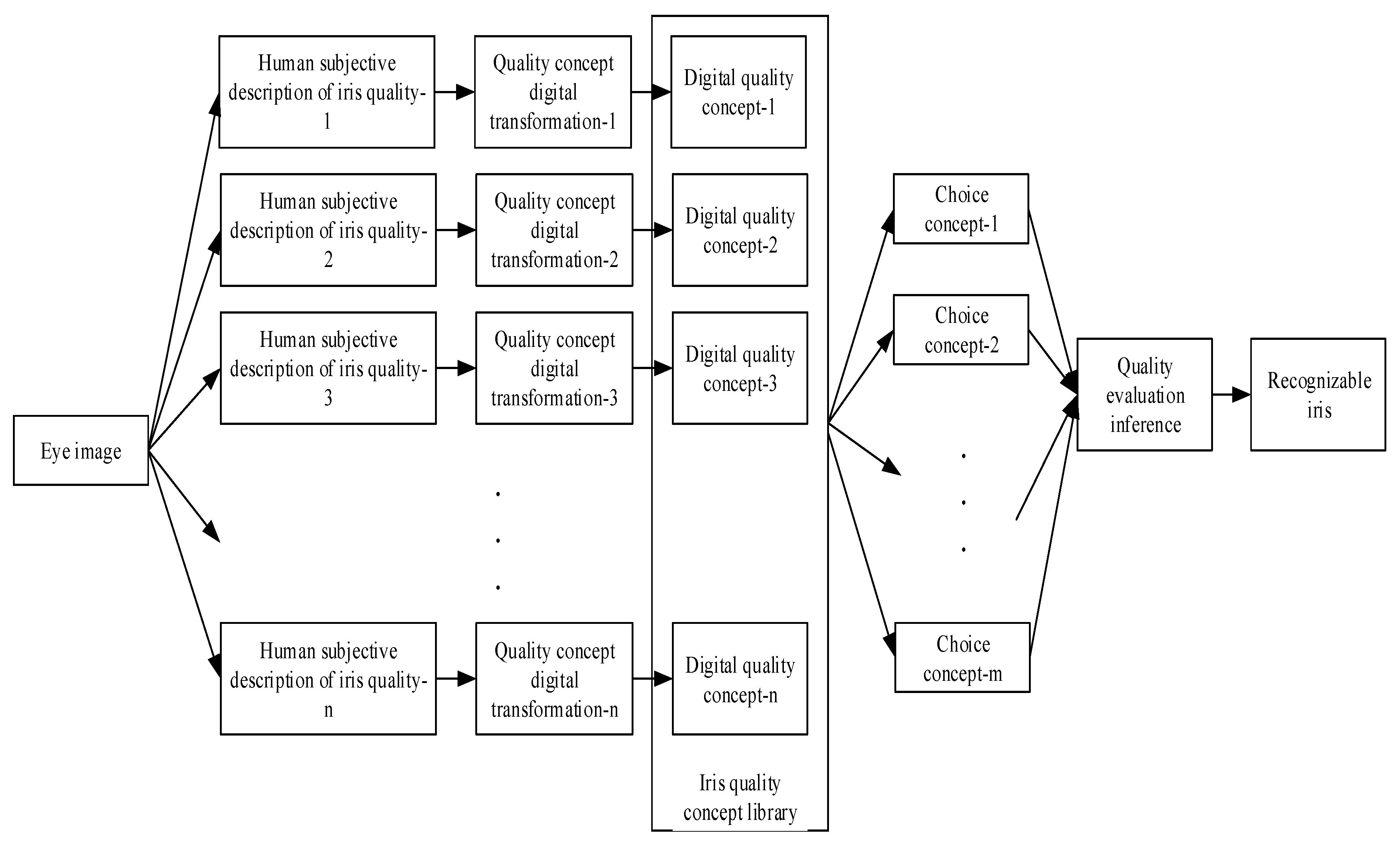

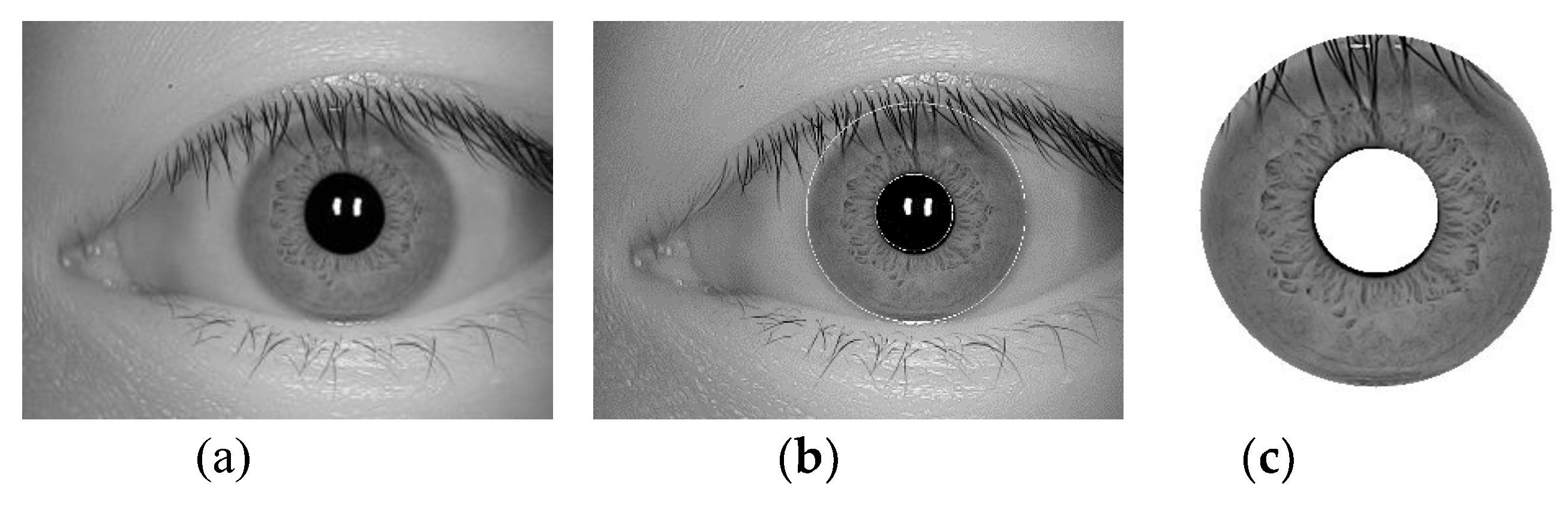

- Evaluation module: The evaluation module is a general process that can be run on irises collected by different acquisition instruments. This paper designs a quality concept fuzzy inference method, based on a pure fuzzy logic system, to select recognizable irises. First, based on the existing training irises, a mechanism for building the eye concept base and the qualified iris image concept base is proposed, through which a person's subjective understanding of the iris is translated into digital logic concepts that the computer system can use. When the quality of a test iris is evaluated, suitable concepts are selected according to the iris recognition requirements, and a quality inference machine determines whether the test iris can be used for iris recognition. A recognizable iris is then processed and input into the recognition model. To account for the analysis of certification errors and the emergence of new quality requirements, a feedback learning mechanism for quality evaluation is designed to update and correct the knowledge: existing concept labels are checked as the number of irises changes, errors in the original labels are corrected, and the labels are expanded as quality requirements change. The recognizable iris image selected by the evaluation module is localized, normalized, and converted into a 180 × 32 iris recognition area.

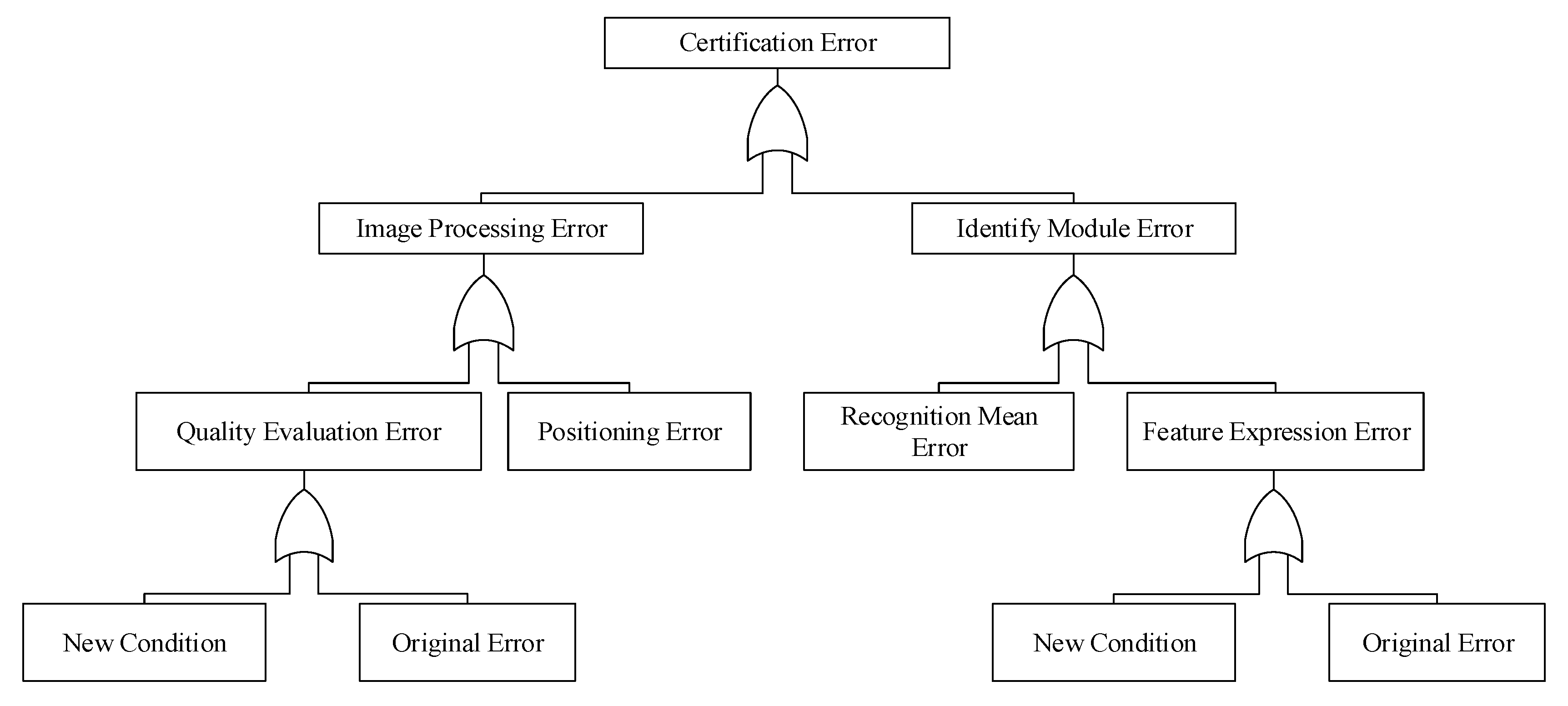

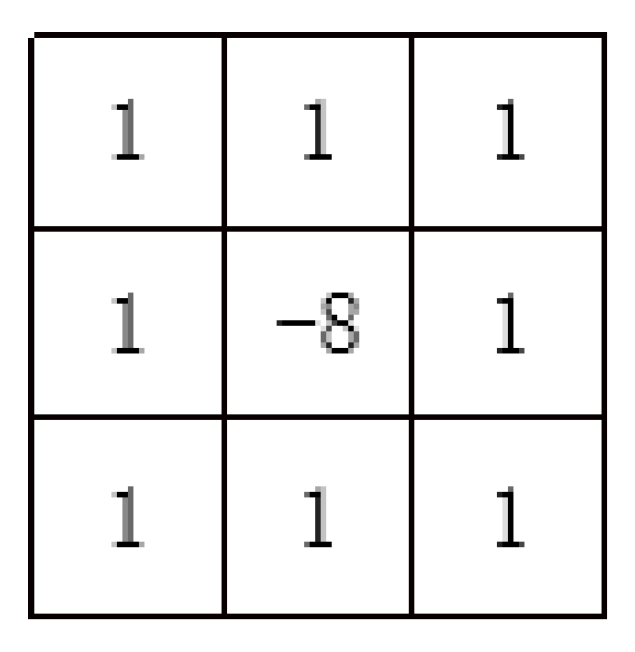

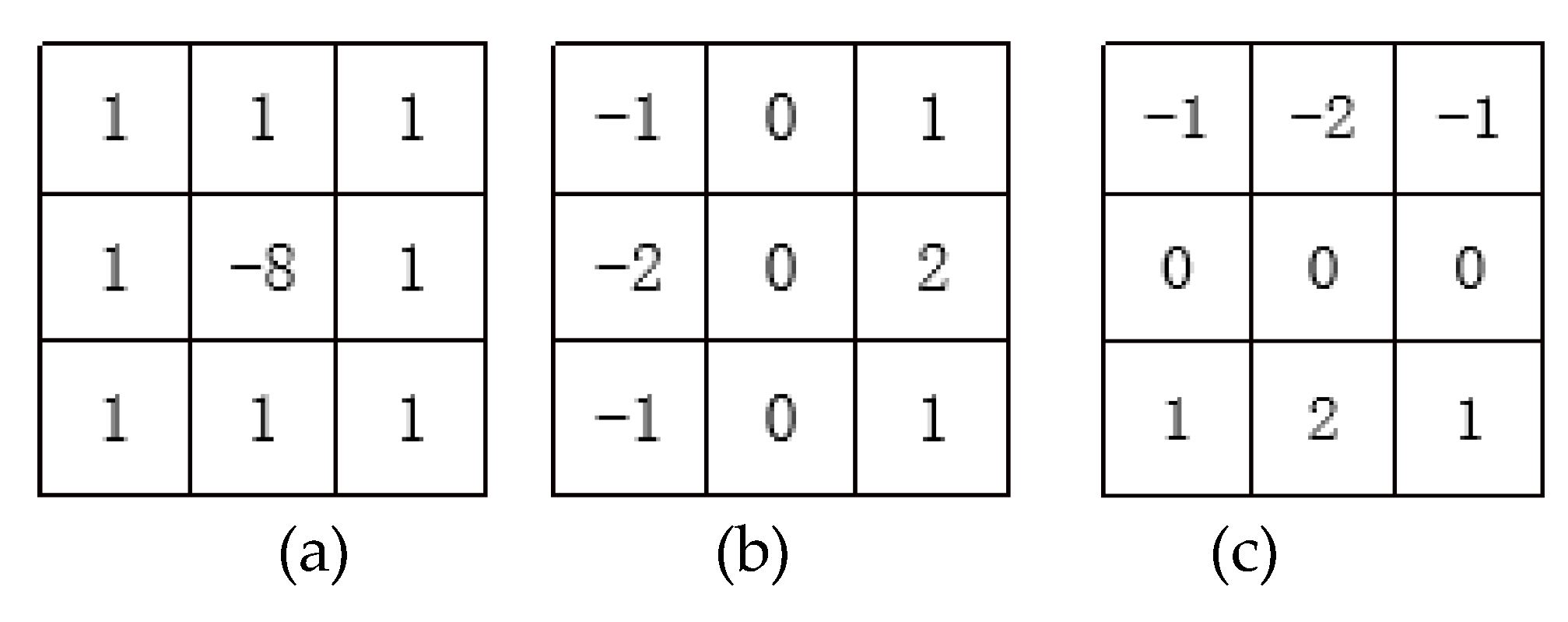

- Certification module: Based on the convolutional neural network structure, a neural network with a small number of layers is designed for iris certification, and a feature representation mechanism based on multi-source features is proposed. The image of the iris certification area is processed by a smoothing algorithm and a texture highlighting algorithm, so that three different iris images are formed as multi-source features. Each iris image passes through a 12-layer image-processing network consisting of convolutional, pooling, ReLU, and expansion layers. In the expansion layer, each iris image yields 15 expanded parameters, for a total of 45 expanded parameters. The expanded parameters of the three images are then combined by average fusion in the feature fusion layer to form 15 recognition parameters. The certification function is designed based on the sigmoid function [1], and statistical information is used to calculate the information entropy of the certification parameters. According to the information entropy, the certification function parameters are set as the category labels. In the fully connected layer, one-to-one certification is performed through the certification function, and the result is output through the output layer. To account for the analysis of certification errors and the emergence of new iris data, a feedback learning mechanism for the certification module is designed to revise the information entropy and the category labels.

- The problem of the heterogeneous unsteady-state iris: In quality evaluation, design of the iris quality knowledge concept mechanism must minimize the impact of fixed threshold decisions and use the objective iris features to set the concept. It must not require a fixed index for mechanical evaluation, but set the concept of quality based on the actual environment and identification needs for conceptual reasoning. In feature expression, a multi-source feature fusion mechanism is designed, feature fusion is performed from a multi-source perspective, and discrimination between different types of features is increased. The information entropy is used to design the recognition function category label parameters, realize the statistical recognition of the multi-state iris in the mixed data set, expand the range of recognizable irises, and improve the accuracy of unsteady heterogeneous iris certification.

- For the correlation between the various links in the whole process: This paper uses the non-template matching mode as the basis for the design of the certification model. It does not focus on a specific algorithm; instead, it focuses on the protocol mechanism and proposes a set of solutions for the whole process of iris certification, from image acquisition to result output. For the construction of the knowledge base, the reasoning process, and the certification process, a series of agreement mechanisms is proposed to connect the links with each other and to fully consider the correlation between the certification steps. Because the scheme addresses the instability of the unsteady-state iris and is designed to be universal, lightweight heterogeneous certification can be realized; that is, iris images collected in different environments by different sensors can be recognized by the scheme.

- Limitations on iris dataset size and situation classification: In addition to improving the forward certification process, this paper designs a feedback learning mechanism for reverse correction of the overall process. Through professional analysis by human operators and data feedback from the computer system, erroneous certification cases and newly possible external conditions are added. Adjusting or correcting the knowledge base concepts and the entropy features of the category labels in response allows the entire certification process to be adjusted dynamically, thus improving both the forward certification and the reverse correction of deep learning frameworks under the data set constraints.
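The average fusion step described for the certification module can be illustrated with a minimal sketch. It assumes that each of the three multi-source iris images has already been reduced to a vector of 15 expanded parameters; the function name `average_fusion`, the list layout, and the sample values are illustrative assumptions and are not taken from the paper.

```python
from statistics import fmean


def average_fusion(expanded):
    """Element-wise mean across the multi-source images.

    expanded: list of 3 lists, each holding 15 expanded parameters
    (hypothetical layout; the text only gives the counts 3 x 15 = 45).
    Returns the 15 fused recognition parameters.
    """
    if len(expanded) != 3 or any(len(v) != 15 for v in expanded):
        raise ValueError("expected 3 images x 15 expanded parameters")
    # zip(*expanded) groups the k-th parameter of every image together.
    return [fmean(column) for column in zip(*expanded)]


# Illustrative values only, not data from the paper.
expanded = [
    [0.1 * k for k in range(15)],
    [0.2 * k for k in range(15)],
    [0.3 * k for k in range(15)],
]
fused = average_fusion(expanded)
```

Averaging keeps the number of recognition parameters at 15 regardless of how many source images are fused, which is consistent with the 45-to-15 reduction stated in the text.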

2. Background and Related Work

- Unconstrained state in the same environment: The acquisition posture of the subject is not restricted, and the external environment remains unchanged for iris acquisition;

- Constrained state in the same environment: The acquisition posture of the subject is restricted, and the external environment remains unchanged for iris acquisition;

- Constrained state with environmental change: The acquisition posture of the subject is restricted, and the external environment changes for iris acquisition;

- Unconstrained state with environmental change: The acquisition posture of the subject is not restricted, and the external environment changes for iris acquisition.

- Template matching: The test iris is encoded and compared with a single stored template iris to reach the final conclusion;

- Non-template matching: The features of the iris are formed into a cognitive concept, which is incorporated into neural network architectures such as deep learning [2] in the form of parameters, etc., to achieve non-coding matching recognition.

- One-to-one certification: Determines whether the test iris and the template iris belong to the same person;

- One-to-many recognition: The iris of the test person is matched with multiple template irises, and the identity of the test person must be accurately identified.

- Research on the whole structure of iris recognition: In current research on iris recognition, template matching methods mostly target a specific scene in image acquisition [3], quality evaluation [4], localization and normalization [5], feature expression [6], or recognition [7], and take one or more of these steps as the core to improve performance. This approach separates the steps mechanically, ignores the agreement between the algorithms, and has a limited effect on the overall accuracy. Non-template matching methods based on neural network structures such as deep learning explore the internal connections of certain steps and nest them together to form a whole, without necessarily completing all steps, thereby improving the accuracy. However, there is no qualitative and quantitative analysis method for the correlation between the steps in the overall structure of iris recognition, which seriously hinders improvement of the performance of the overall recognition system.

- Iris multi-state and single-source feature expression: Most training and learning recognition models in the current iris recognition algorithms are based on publicly known iris training sets or manually set iris labels. However, in the actual shooting process, multi-state heterogeneity is expected to occur between the template image and the collected image. There are many reasons for the multi-state heterogeneity, and they are divided into three main aspects:

- a.

- b. The collection environment is different because the iris status is unstable under different environments (illumination, etc.), which affects the relative relationship between the iris textures.

- c. The acquisition status of the iris is different. Because the acquisition status of the target person varies at different times, disturbances such as defocusing and deflection occur.

- The existing structural framework is designed to process a certain type of data, and the design purpose of the framework is not necessarily aimed at iris recognition. When the existing framework is used in iris recognition, inputting the iris data directly into the framework might not achieve a good recognition effect. The unsteady-state iris in an uncertain state is prone to a situation in which the existing framework does not match the input iris data. When the iris data set is limited in size and the situation classification is limited, altering the existing framework might reduce accuracy, which greatly limits the types of existing frameworks that can be used. The question of how to design the framework to better adapt to the iris data must be addressed.

- In the study of feature expression and universal recognition, most methods use algorithms to normalize the data from different sensors and environments so that the images can be identified under the same standard. However, not every process suits every image; a poor fit causes unnecessary calculation and excludes images that could be identified but are not treated as usable irises because they do not meet the standard, which may leave the multi-state iris model undertrained. Current research lacks a universal process mechanism for the heterogeneous irises generated by a variety of devices and environments. Because a single algorithm is prone to omissions on multi-state features, many algorithms use multi-source feature expressions, an approach that requires an in-depth understanding of the relationship between different types of features in order to avoid repeated calculation of highly correlated iris features and to improve the separation and discrimination of features.

- With the limited size of the iris data set and the limitation of situation classification, it is necessary to judge the situation of recognition errors and establish a reverse correction mechanism for the recognition framework process. This mechanism not only avoids mechanical judgment by relying on a large amount of data to generate fixed indicators in statistical learning but also avoids the situation of cognitive loss caused by the inability to form new concepts when unknown situations occur in cognitive learning.

- In the face of the unsteady situation of heterogeneous irises produced by a variety of acquisition sensors and environments, the traditional processing method of a single-source iris based on statistical learning or cognitive learning is not effective;

- Traditional iris recognition divides the entire process into several statistically guided steps, which cannot solve the problem of correlation among various links;

- The existing iris dataset size and situation classification constraints make it difficult to meet the requirements of learning methods in a single deep learning framework.

3. Evaluation Module

3.1. Iris Quality Knowledge Concept Construction Mechanism

3.2. Example of Iris Quality Concept Fuzzy Inference

3.2.1. Eye Concept Knowledge Base and Eye Inference

- (1)

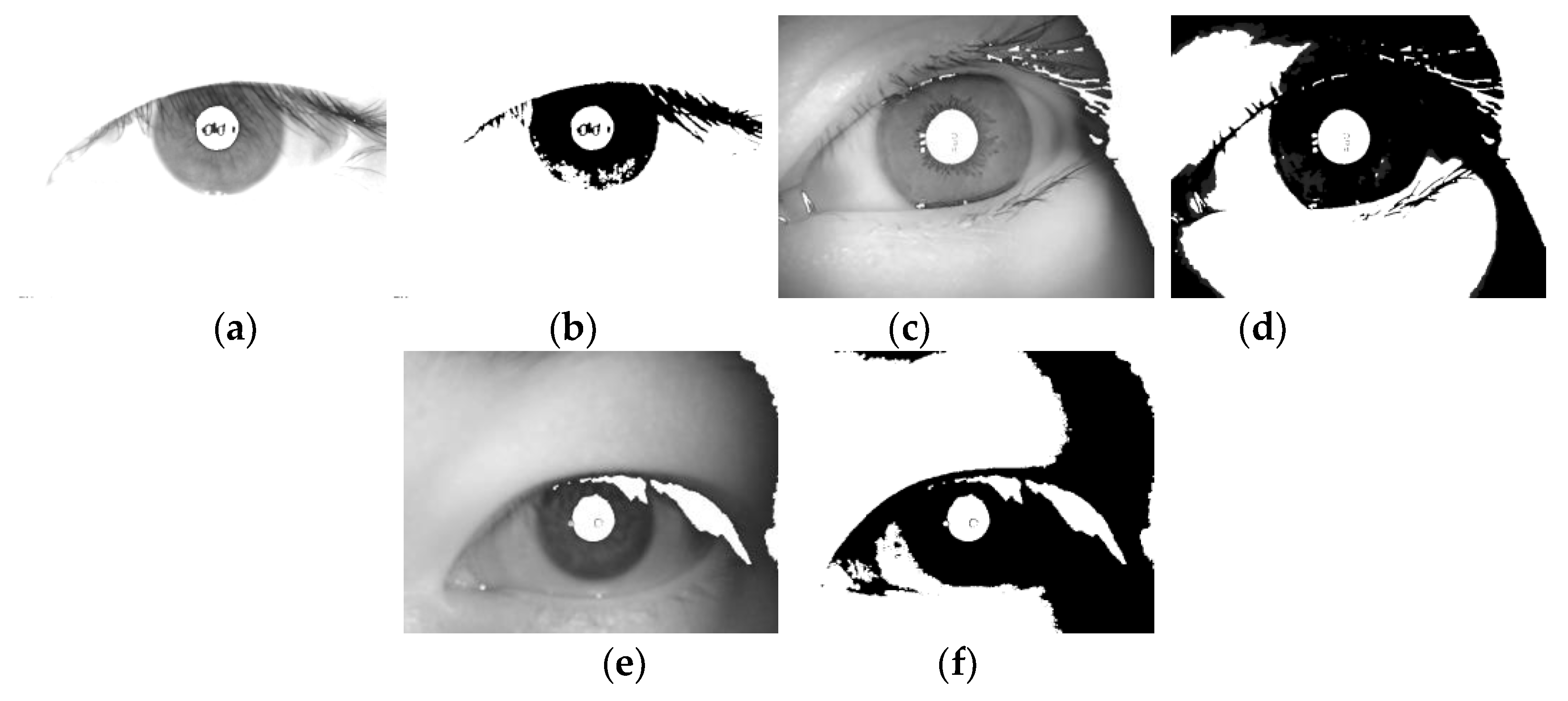

- It can be seen from Figure 5 that although the pupil is identified in the eye discrimination image, it is still incomplete because of lighting and eyelashes. Therefore, to locate the pupil more reliably, the pupil must be represented more accurately, so binarization is required.

- (2) Lowering the overall gray value of the eye image brings the low gray values closer to 0 without changing the relative relationship between the gray values, so the pupil is easier to find. Half of the average gray value is subtracted for the following reason. For an unsteady iris, the gray-value distribution of each image is uncertain, so fixed parameters are not safe, and a non-fixed value related to the nature of the image is needed as the parameter of the function. Because the gray value of the pupil itself is relatively small, it is below the average in most cases; however, the unsteady iris is unpredictable, and the gray values of the non-pupil parts cannot be guaranteed. Subtracting the full average may suppress points that are larger than the average gray value too strongly, which affects the appearance of the pupil. Therefore, only half of the average gray value is subtracted in the function design.

- (3) For points whose gray value is lower than the average, subtracting the minimum gray value ensures that the pupil is separated as far as possible. The pupil can then be distinguished from the eyelashes, and as much of the pupil connected area as possible can be identified.
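The two gray-value adjustments described in steps (2) and (3) can be sketched as follows. The exact functions are not reproduced in this text, so this is only a sketch under stated assumptions: the shift is clamped at 0, the average in step (3) is taken over the already shifted image, and the names `shift_gray` and `separate_dark` are hypothetical.

```python
def shift_gray(image):
    """Subtract half of the mean gray value from every pixel.

    Assumption: results are clamped at 0 and rounded to integers.
    """
    pixels = [p for row in image for p in row]
    half_mean = sum(pixels) / len(pixels) / 2
    return [[max(0, round(p - half_mean)) for p in row] for row in image]


def separate_dark(image):
    """For pixels below the mean, subtract the minimum gray value.

    Assumption: the mean and minimum are computed on the input image
    given to this function (here, the already shifted image).
    """
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    lowest = min(pixels)
    return [[p - lowest if p < mean else p for p in row] for row in image]


# Tiny illustrative image (gray values 0..255), not data from the paper.
image = [[100, 120],
         [200, 220]]
shifted = shift_gray(image)          # mean 160, so 80 is subtracted
separated = separate_dark(shifted)   # mean 80, minimum 20
```

Because both offsets are derived from the image itself, no fixed threshold has to be chosen in advance, which matches the motivation given in step (2).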

- (1)

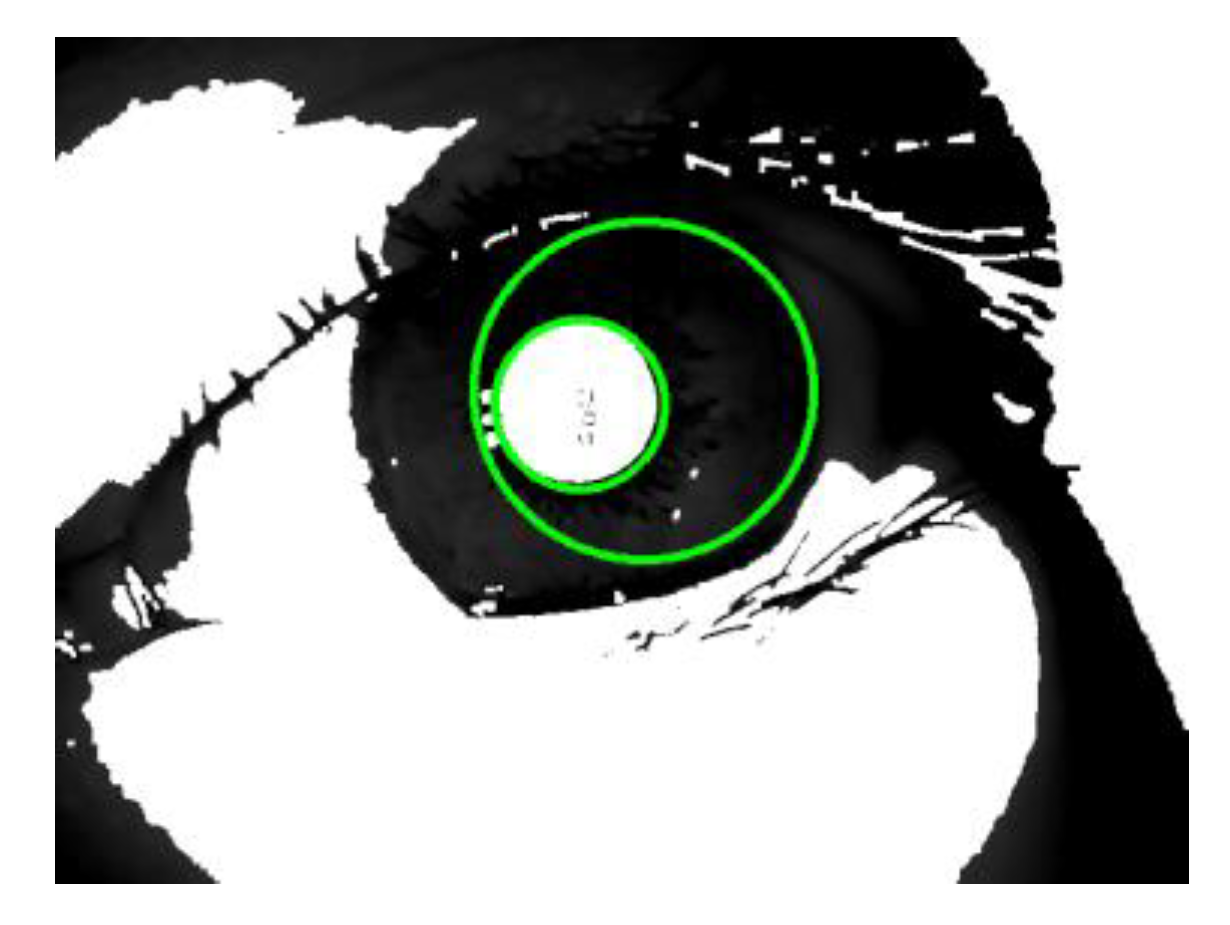

- It can be seen from Figure 8 that although the pupil connection area is not perfectly regular or complete, it can be regarded as a circle. Therefore, to reduce the amount of calculation, an existing circle detection technique is used to fit the pupil connection area.

- (2) During detection, because the unsteady-state iris is unpredictable, the circle radius of the pupil connection area cannot be restricted to a fixed range. The images used in this paper are 640 × 480. To reduce the computational complexity, the image is reduced to 160 × 120 by the majority statistical pooling method to form a reduced-dimensional image.
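The reduction from 640 × 480 to 160 × 120 corresponds to a pooling factor of 4 in each direction. The sketch below assumes that "majority statistical pooling" replaces each 4 × 4 block with its most frequent gray value; that interpretation, the tie-breaking rule, and the name `majority_pool` are assumptions, and plain Python lists are used to keep the sketch dependency-free.

```python
from collections import Counter


def majority_pool(image, factor=4):
    """Replace each factor x factor block with its most frequent value.

    Assumption: ties are broken in favor of the value seen first in the
    block, which is the behavior of Counter.most_common.
    """
    height, width = len(image), len(image[0])
    if height % factor or width % factor:
        raise ValueError("image size must be a multiple of the factor")
    pooled = []
    for top in range(0, height, factor):
        row = []
        for left in range(0, width, factor):
            block = [image[y][x]
                     for y in range(top, top + factor)
                     for x in range(left, left + factor)]
            row.append(Counter(block).most_common(1)[0][0])
        pooled.append(row)
    return pooled


# Synthetic 480-row x 640-column binary image: left half 0, right half 255.
image = [[0] * 320 + [255] * 320 for _ in range(480)]
small = majority_pool(image)  # 120 rows x 160 columns
```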

- (1) In the image of the connected area of the iris, divide the pupil region enclosed by the detected center and radius into four equal parts;

- (2) Calculate the number of points with a gray value of 255 in each of these four parts, and calculate the proportion of such points in each part;

- (3) Calculate the variance T of the proportions of the four parts.
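The three steps above can be sketched as follows. The sketch assumes that the four equal parts are the quadrants around the detected center, that the proportion is the share of 255-valued pixels among all pixels of a quadrant inside the circle, and that T is the population variance; the function name `quadrant_variance` and the synthetic images are illustrative.

```python
from statistics import pvariance


def quadrant_variance(image, cx, cy, radius):
    """Return (T, ratios) for the circle with center (cx, cy).

    ratios[k] is the share of pixels equal to 255 in quadrant k.
    """
    white = [0, 0, 0, 0]   # pixels equal to 255 per quadrant
    total = [0, 0, 0, 0]   # all pixels inside the circle per quadrant
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            dx, dy = x - cx, y - cy
            if dx * dx + dy * dy > radius * radius:
                continue  # outside the detected circle
            quadrant = (0 if dx >= 0 else 1) + (0 if dy >= 0 else 2)
            total[quadrant] += 1
            if value == 255:
                white[quadrant] += 1
    ratios = [w / t if t else 0.0 for w, t in zip(white, total)]
    return pvariance(ratios), ratios


# Synthetic 20 x 20 images; a half-pixel center keeps the quadrants equal.
full = [[255] * 20 for _ in range(20)]
half = [[255 if x >= 10 else 0 for x in range(20)] for _ in range(20)]
t_full, _ = quadrant_variance(full, 9.5, 9.5, 8)
t_half, ratios_half = quadrant_variance(half, 9.5, 9.5, 8)
```

A uniformly filled circle gives T = 0, while a circle that is white on one side only gives a large T, so a small T indicates that the 255-valued points are spread evenly over the four parts.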

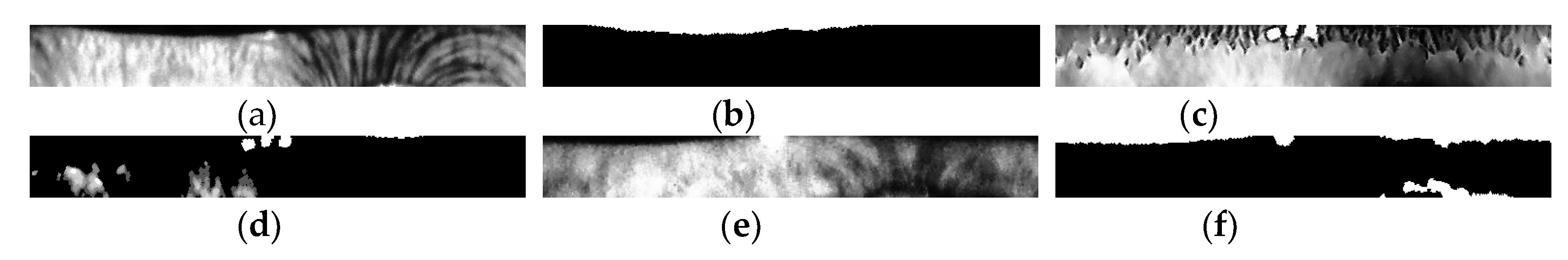

- (1)

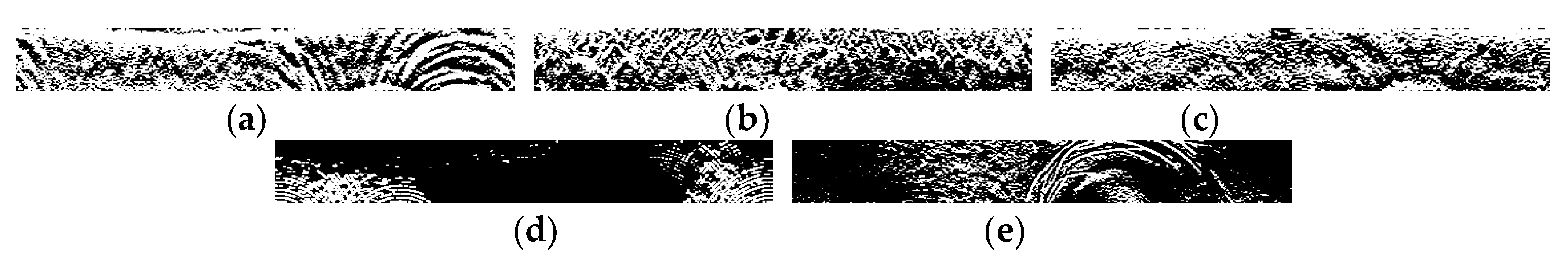

- In order to reduce the amount of calculation, the normalized image of the iris connected area is cropped: the 512 × 64 image is divided evenly into eight 64 × 64 images, and the crop coordinates of the image with the smallest gray value among the eight are recorded;

- (2) The outstanding image is likewise divided evenly into eight 64 × 64 images, and the image at the same position as the crop coordinates recorded in step (1) is selected as the iris detection image. The detection image of the iris part and the detection image of the non-iris part are shown in Figure 14.

- (3)

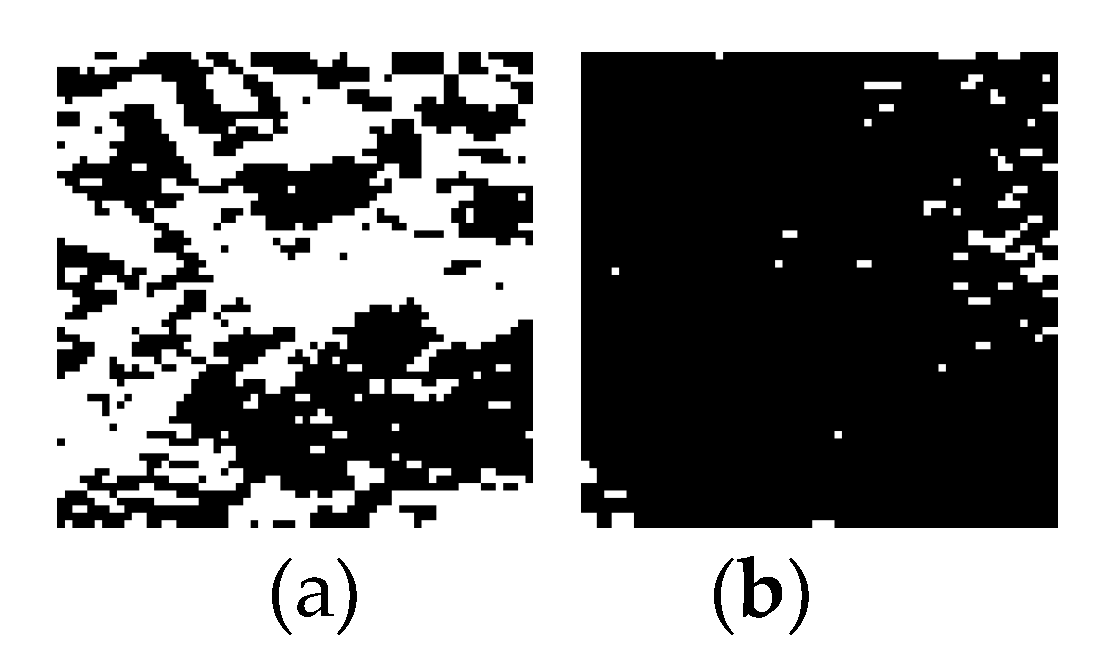

- The minimum pooling method is applied to the detection image over 2 × 2 windows [27] to form a 32 × 32 pooled detection image. To better reflect the irregular black-and-white pattern of the iris, the Hamming distance [28] is used for representation. Points with a gray value of 255 are set to 1 and points with a gray value of 0 are set to 0, forming 32 binary sequences. The Hamming distance between each pair of adjacent sequences is then calculated, giving a total of 31 values, numbered .
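The pooling and Hamming distance step can be sketched as follows. The sketch assumes that the detection image is a 64 × 64 binary image with gray values 0 and 255 and that the 32 binary sequences are the 32 rows of the pooled image; the function names and the synthetic striped image are illustrative assumptions.

```python
def min_pool_2x2(image):
    """Keep the minimum of every non-overlapping 2 x 2 window."""
    return [[min(image[y][x], image[y][x + 1],
                 image[y + 1][x], image[y + 1][x + 1])
             for x in range(0, len(image[0]), 2)]
            for y in range(0, len(image), 2)]


def adjacent_hamming(pooled):
    """Hamming distance between each pair of adjacent rows.

    Gray value 255 maps to bit 1 and every other value to bit 0.
    """
    bits = [[1 if v == 255 else 0 for v in row] for row in pooled]
    return [sum(a != b for a, b in zip(upper, lower))
            for upper, lower in zip(bits, bits[1:])]


# Synthetic 64 x 64 detection image: two white rows, two black rows, ...
image = [[255] * 64 if (y // 2) % 2 == 0 else [0] * 64 for y in range(64)]
pooled = min_pool_2x2(image)           # 32 x 32
distances = adjacent_hamming(pooled)   # 31 values
```

In this synthetic example every pooled row is the complement of its neighbor, so each of the 31 distances equals the row length of 32.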

3.2.2. Quality Concept Knowledge Base and Quality Inference

3.3. Feedback Learning Mechanism of Quality Fuzzy Inference

- The subjective concept of the human eye is limited by the accumulation of human knowledge. The subjective impressions obtained only by visual observation of the data possessed are not necessarily completely accurate.

- The method of transforming the subjective concept into a digital concept can represent the subjective description of the iris only adequately; the chosen means of expression is not necessarily the best or the only one.

- As the number of acquisitions increases and the external conditions of the acquisition and the needs of the iris change, the perception of the subjective concept of the iris may change.

- When it is determined that the quality concept should be adjusted, analyze the existing quality concept labels and the setting process of each label one by one, check whether the process settings are standardized, and record any irregularities.

- Modify the parameter settings in the original process appropriately, observe the modified recognition results, and then analyze the relationship between each quality concept label and the standardization of its settings to discover and record loopholes. Based on the recorded cases, modify the existing quality concept labels (human subjective concepts, digital expression of subjective concepts, etc.), the label representation methods, and the image-processing procedures to correct the quality concept labels.

- When the recognition environment changes and it is necessary to expand the concept library, analyze the new environment and conditions, add new quality concepts, and expand the quality concepts (by creating new concepts and new digital expression methods).

4. Certification Module

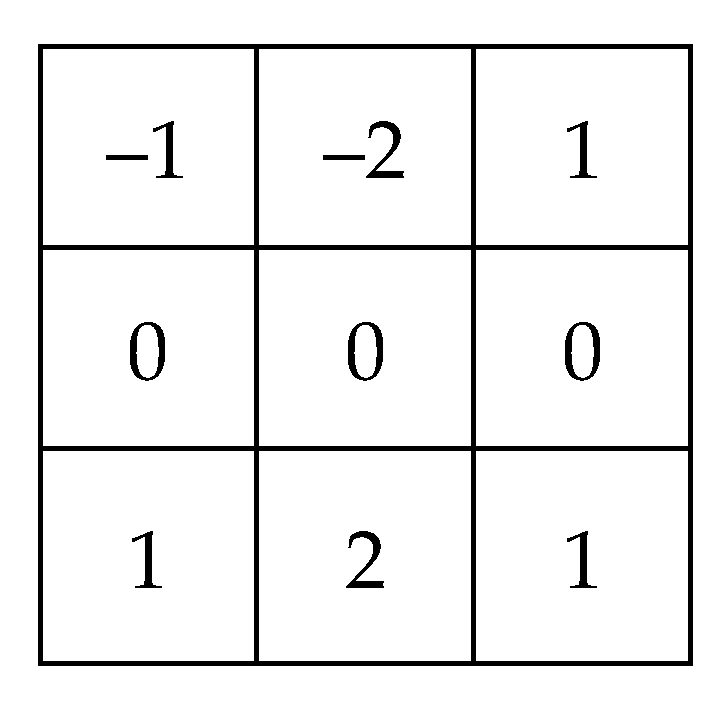

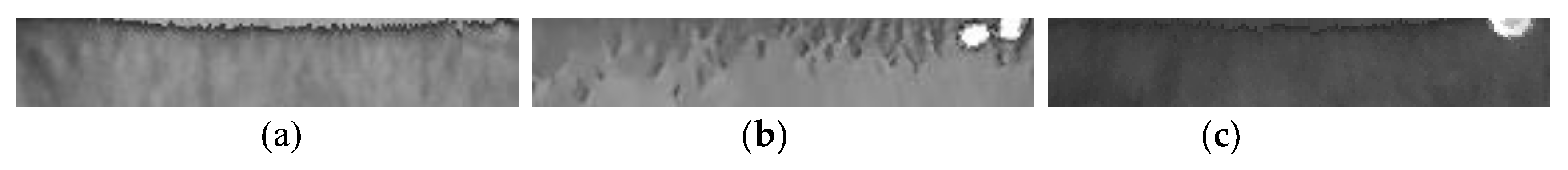

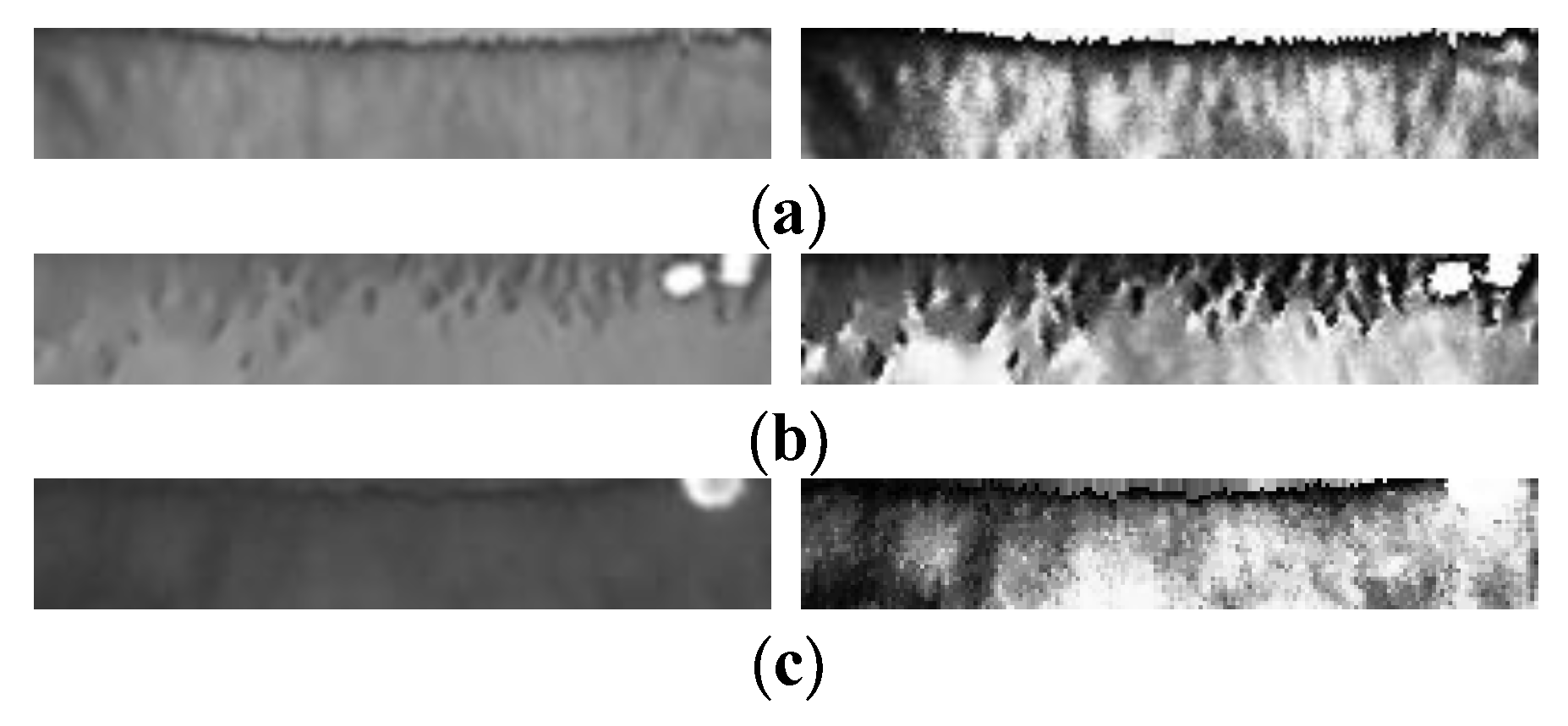

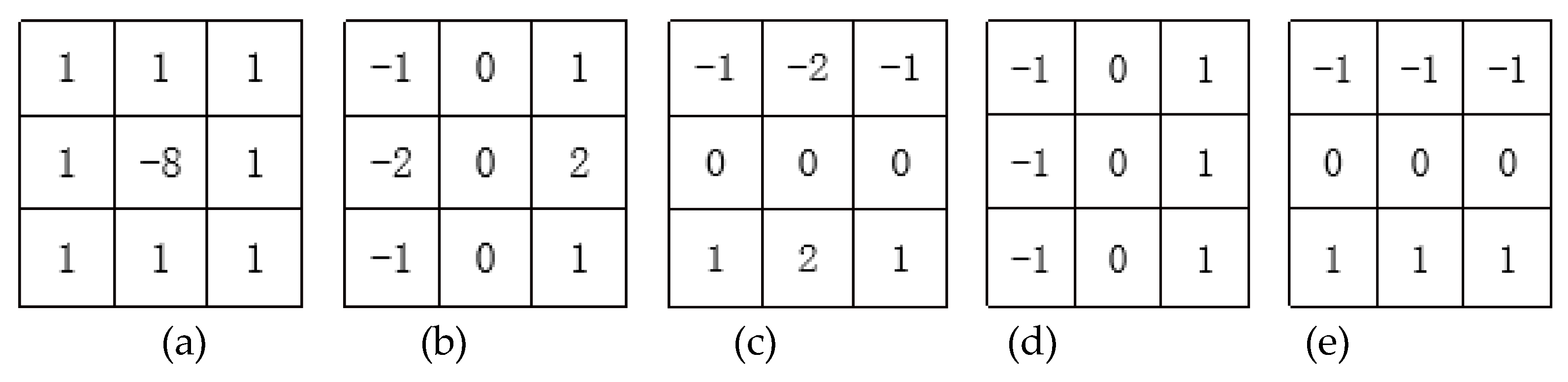

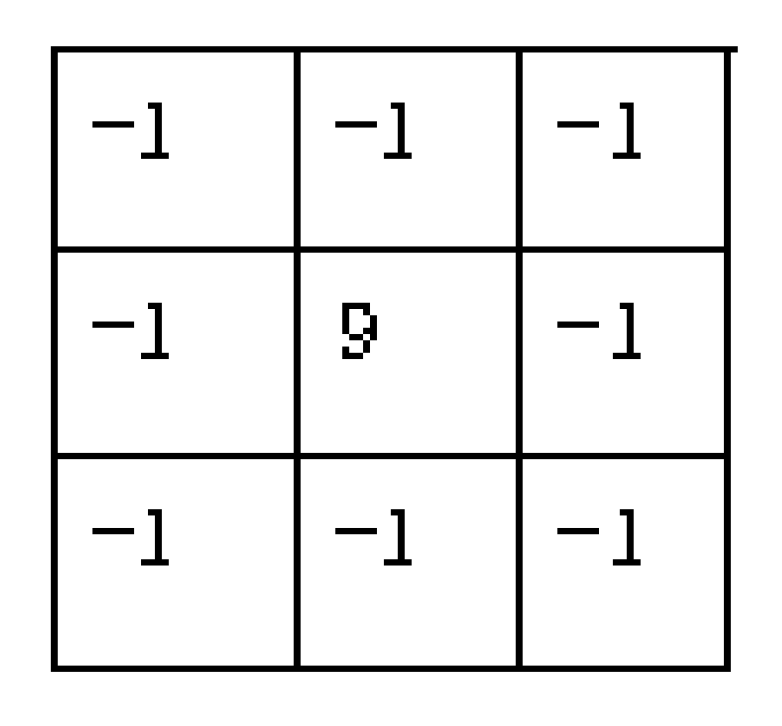

4.1. Iris Processing

4.2. Multi-Source Multi-Feature Fusion Mechanism and Heterogeneous One-to-One Certification

4.2.1. Feature Expression

4.2.2. Iris Certification

4.3. Feedback Learning Mechanism of Iris Certification

- The number of certification errors within a certain number of tests is counted, and whether the parameters need to be adjusted is determined based on the changes in the training data. This article assumes that the certification model needs to be updated if the correct recognition rate is less than 95% or the number of training irises has increased by more than five times (where the new training irises include the previous test irises).

- If it is determined that the update of the certification model is not required, the original set parameters and category label range for certification are used.

- If it is determined that the certification model needs to be updated, new statistical adjustments are made to and based on all existing training data and according to the maximum satisfaction principle (choosing to satisfy the data distribution as much as possible). and are recalculated based on the recognition parameters of all training irises. The certification function group with formulas 1–5 is recalculated according to the new and , according to the new entropy feature , and the distribution of the expanded values, and this is used as a basis to count the new category label range and range interval.

- Steps 1–3 are repeated until the certification model can no longer be optimized (for example, because the training data are exhausted).
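The update condition in step 1 can be written as a small decision function. This is a minimal sketch, assuming that "increased by more than five times" means the current number of training irises exceeds five times the number used when the model was last fitted; the function name `needs_update` and its parameter names are hypothetical.

```python
def needs_update(correct, tested, train_now, train_at_last_fit,
                 min_rate=0.95, growth_factor=5):
    """Decide whether the certification model should be updated.

    correct / tested: certification results within the counted tests.
    train_now / train_at_last_fit: training set sizes (assumed meaning
    of the fivefold criterion, see the lead-in).
    """
    if tested <= 0:
        raise ValueError("at least one test is required")
    rate_too_low = correct / tested < min_rate
    data_grown = train_now > growth_factor * train_at_last_fit
    return rate_too_low or data_grown


# Illustrative calls, not results from the paper.
low_rate = needs_update(94, 100, 1000, 1000)    # rate 0.94 < 0.95
unchanged = needs_update(96, 100, 1000, 1000)   # neither condition holds
grown = needs_update(96, 100, 5001, 1000)       # more than fivefold data
```

If the function returns False, the original parameters and category label range are kept, as in step 2; otherwise the statistics are recalculated as in step 3.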

5. Experiments and Analysis

5.1. Explanation of Structural Meaning

5.1.1. The Relationship Between Iris Features and Iris Requirements

- Fusion method: Iris recognition based on a feature weighted fusion method based on Haar and LBP in [17] and training feature weights through statistical learning;

- Secondary iris recognition: Multicategory secondary iris recognition based on a BP neural network after noise reduction by principal component analysis, as used in [7];

- DPSO-certification function: A certification function optimization algorithm based on a decision particle swarm optimization algorithm and stable feature, as used in [16];

- Statistical cognitive learning: A multistate iris multiclassification recognition method based on statistical cognitive learning, as used in [20];

- Multialgorithm parallel integration: An unsteady state multialgorithm parallel integration decision recognition algorithm in [18];

- Capsule Deep Learning: Iris recognition based on capsule deep learning in [13].

- Fusion method: Clarity selects the secondary inspection method in literature [41]; the effective iris area detection uses the gray histogram distribution detection in literature [42]; the strabismus selects the eccentricity detection in literature [42]; and confirmation of the presence of eyes uses the eye concept cognition in the examples presented in this paper.

- Secondary iris recognition: Clarity selects the secondary inspection method in literature [41]; effective iris area detection uses the method of detecting the appropriate iris area by using the block gray value variance in literature [41]; the strabismus selects the strabismus detection in literature [41]; and confirmation of the presence of eyes uses the eye concept cognition in the examples presented in this paper.

- DPSO-certification function: Clarity selects the image blur detection in the example presented in this paper; the effective iris area detection and the presence of eyes both select the concept awareness of the eye in the example presented in this paper; and the strabismus selects the strabismus detection in literature [41];

- Statistical cognitive learning: Clarity selects the image blur detection in the example presented in this paper; the effective iris area detection and the presence of eyes both select the concept awareness of the eye in the example presented in this paper; the strabismus uses the image direct vision detection in the example presented in this paper.

- Multialgorithm parallel integration: Clarity selects the image blur detection in the example presented in this paper; the effective iris area detection and the presence of eyes both select the concept awareness of the eye in the example presented in this paper; the strabismus uses the image direct vision detection in the example presented in this paper.

- Capsule deep learning: Clarity selects the sharpness test method used in literature [42]; effective iris area detection uses the method of detecting the appropriate iris area using the block gray value variance in literature [41]; the strabismus selects the strabismus detection used in literature [41]; confirmation of the presence of eyes uses the eye concept cognition in the examples presented in this paper.

5.1.2. Reasonable Certification Structural Design Description

- The existing structural framework is designed to process a certain type of data, and the design purpose of the framework is not necessarily aimed at iris recognition. Therefore, when the existing framework is used for iris recognition, inputting iris data directly into the framework may not achieve a good recognition effect;

- At present, there is no detailed definition of iris features. The expression of iris features is usually determined after the iris is converted from an image into a digital form, which has certain requirements for the internal feature transformation of the deep learning architecture. When faced with a multi-sensor iris whose acquisition status is unpredictable, there will be a large number of frameworks that cannot adapt to this kind of situation, which will lead to a decline in the accuracy of identifying irises from different acquisition sensors;

- Deep learning architectures place high demands on data volume and on the classification of data situations. In addition, the computing power available to a given deep learning framework depends heavily on the computing equipment, making it difficult for many low-end devices to run deep learning architectures.

5.1.3. Structural Properties and Algorithm Independence

5.1.4. Significance of Certification Function

5.1.5. Reasonable Setting of the Feedback Learning Mechanism

Reasonable setting of quality concept

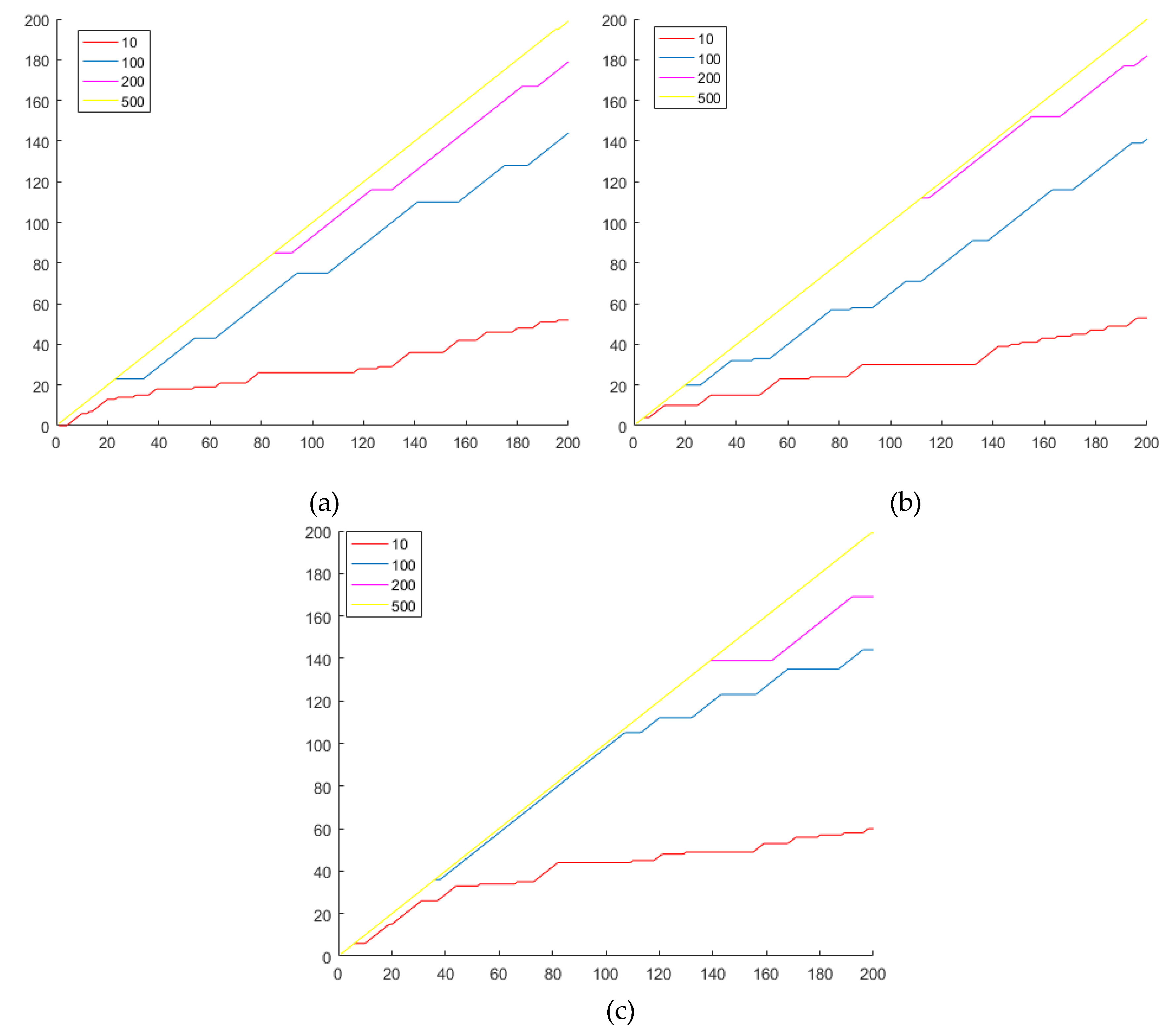

5.2. Certification Method Performance Experiment

5.2.1. Sensor Heterogeneous Versatility Experiment

- The number of iris acquisitions needed for each category of each iris library to reach 100 accurate certifications, divided into the number of repeated acquisitions caused by quality evaluation problems and the number of repeated acquisitions caused by system false rejection (with no problem found in quality detection).

- The number of erroneous certifications recorded by the time each category of each iris library reaches 100 accurate certifications.
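The two counts above can be tallied from a log of acquisition outcomes. The sketch below is only an illustration of the bookkeeping: the outcome labels (`accurate`, `quality_retry`, `false_reject_retry`, `error`), the function name `tally_until_target`, and the sample log are hypothetical and do not come from the experiments.

```python
def tally_until_target(outcomes, target=100):
    """Count acquisitions until `target` accurate certifications occur.

    outcomes: iterable of hypothetical labels, one per acquisition.
    Returns (number of acquisitions, per-label counts).
    """
    counts = {"accurate": 0, "quality_retry": 0,
              "false_reject_retry": 0, "error": 0}
    acquisitions = 0
    for outcome in outcomes:
        counts[outcome] += 1  # raises KeyError for an unknown label
        acquisitions += 1
        if counts["accurate"] == target:
            break
    return acquisitions, counts


# Made-up log for one category, not experimental data.
log = (["quality_retry"] * 3 + ["false_reject_retry"] * 2
       + ["error"] + ["accurate"] * 120)
acquisitions, counts = tally_until_target(log)
```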

5.2.2. Certification Performance Experiment

5.2.3. Time Operation Experiment

5.3. Comprehensive Experiment

- 4.

- 5.

- 6.

- Case 7: Quality evaluation fuzzy reasoning system (example indicators in this paper) and iris feature representation based on the fractal coding method in [55].

- Case 8: Quality evaluation fuzzy reasoning system (example indicators in this paper), with iris features extracted by the histogram of oriented gradients (HOG) and certification by a support vector machine (SVM), as in [56].

- Case 9: Quality evaluation fuzzy reasoning system (example indicators in this paper), with feature extraction based on the scale-invariant feature transform (SIFT) in [57] and recognition based on SVM.

- Case 10: Quality evaluation fuzzy reasoning system (example indicators in this paper) and iris recognition based on the cognitive internet of things (CIoT) identified by multi-algorithm methods in [58].

- Case 11: Quality evaluation fuzzy inference (example indicators in this paper) and the iris recognition method based on the iris-specific Mask R-CNN in [12].

- Case 12: Quality evaluation fuzzy inference (example indicators in this paper) and an iris recognition method based on error correction codes and convolutional neural networks in [22].

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Liu, S.; Liu, Y.-N.; Zhu, X.; Huo, G.; Liu, W.-T.; Feng, J.-K. Iris double recognition based on modified evolutionary neural network. J. Electron. Imaging 2017, 26, 1.

- Chen, B.; Xing, L.; Zheng, N.; Príncipe, J.C. Quantized Minimum Error Entropy Criterion. IEEE Trans. Neural Netw. Learn. Syst. 2019, 5, 1370–1389.

- Nguyen, D.; Pham, T.; Lee, Y.W.; Park, K. Deep Learning-Based Enhanced Presentation Attack Detection for Iris Recognition by Combining Features from Local and Global Regions Based on NIR Camera Sensor. Sensors 2018, 18, 2601.

- Alonso-Fernandez, F.; Farrugia, R.A.; Bigun, J.; Fierrez, J.; Gonzalez-Sosa, E. A Survey of Super-Resolution in Iris Biometrics with Evaluation of Dictionary-Learning. IEEE Access 2018, 7, 6519–6544.

- Jha, R.R.; Jaswal, G.; Gupta, D.; Saini, S.; Nigam, A. PixISegNet: Pixel-level iris segmentation network using convolutional encoder–decoder with stacked hourglass bottleneck. IET Biom. 2020, 9, 11–24.

- Liu, Y.; Liu, S.; Zhu, X.; Chen, Y.H. LOG operator and adaptive optimization Gabor filtering for iris recognition. J. Jilin Univ. (Eng. Technol. Ed.) 2018, 5, 1606–1613.

- Liu, S.; Liu, Y.; Zhu, X.; Lin, Z.; Yang, J. Ant Colony Mutation Particle Swarm Optimization for Secondary Iris Recognition. J. Comput. Des. Comput. Graph. 2018, 30, 1604.

- Lee, Y.W.; Kim, K.W.; Hoang, T.M.; Arsalan, M.; Park, K. Deep Residual CNN-Based Ocular Recognition Based on Rough Pupil Detection in the Images by NIR Camera Sensor. Sensors 2019, 19, 842.

- Bazrafkan, S.; Thavalengal, S.; Corcoran, P. An end to end Deep Neural Network for iris segmentation in unconstrained scenarios. Neural Netw. 2018, 106, 79–95.

- Zhang, M.; He, Z.; Zhang, H.; Tan, T.; Sun, Z. Toward practical remote iris recognition: A boosting based framework. Neurocomputing 2019, 330, 238–252.

- Mowla, N.I.; Doh, I.; Chae, K. Binarized Multi-Factor Cognitive Detection of Bio-Modality Spoofing in Fog Based Medical Cyber-Physical System. In Proceedings of the 2019 International Conference on Information Networking (ICOIN), Kuala Lumpur, Malaysia, 9–11 January 2019; pp. 43–48.

- Zhao, Z.; Kumar, A. A deep learning based unified framework to detect, segment and recognize irises using spatially corresponding features. Pattern Recognit. 2019, 93, 546–557.

- Zhao, T.; Liu, Y.; Huo, G.; Zhu, X. A Deep Learning Iris Recognition Method Based on Capsule Network Architecture. IEEE Access 2019, 7, 49691–49701.

- Liu, N.; Zhang, M.; Li, H.; Sun, Z.; Tan, T. Deepiris: Learning pairwise filter bank for heterogeneous iris verification. Pattern Recognit. Lett. 2016, 82, 154–161.

- Nguyen, K.; Fookes, C.; Ross, A.; Sridharan, S. Iris Recognition with Off-the-Shelf CNN Features: A Deep Learning Perspective. IEEE Access 2017, 6, 18848–18855.

- Liu, Y.; Liu, S.; Zhu, X.; Guang, H.; Tong, D.; Zhang, K.; Jiang, X.; Guo, S.-J.; Zhang, Q.X. Iris secondary recognition based on decision particle swarm optimization and stable texture. J. Jilin Univ. (Eng. Technol. Ed.) 2019, 49, 1329–1338.

- Liu, Y.; Liu, S.; Zhu, X.; Liu, T.-H.; Liu, Y.-N. Iris recognition algorithm based on feature weighted fusion. J. Jilin Univ. (Eng. Technol. Ed.) 2019, 49, 221–229.

- Shuai, L.; Yuanning, L.; Zhu, X.; Xinlong, L.; Chaoqun, W.; Kuo, Z.; Tong, D. Unsteady State Lightweight Iris Certification Based on Multi-Algorithm Parallel Integration. Algorithms 2019, 12, 194.

- Arsalan, M.; Kim, D.S.; Lee, M.B.; Owais, M.; Park, K. FRED-Net: Fully residual encoder–decoder network for accurate iris segmentation. Expert Syst. Appl. 2019, 122, 217–241.

- Shuai, L.; Yuanning, L.; Xiaodong, Z.; Guang, H.; Jingwei, C.; Qixian, Z.; Zukang, W.; Zhiyi, D. Statistical Cognitive Learning and Security Output Protocol for Multi-State Iris Recognition. IEEE Access 2019, 7, 132871–132893.

- Wang, Z.; Li, C.; Shao, H.; Sun, J. Eye Recognition with Mixed Convolutional and Residual Network (MiCoRe-Net). IEEE Access 2018, 6, 17905–17912.

- Cheng, Y.; Liu, Y.; Zhu, X.; Li, S. A Multiclassification Method for Iris Data Based on the Hadamard Error Correction Output Code and a Convolutional Network. IEEE Access 2019, 7, 145235–145245.

- Liu, S.; Liu, Y.; Zhu, X.; Huo, G.; Cui, J.; Zhang, Q.; Dong, Z.; Jiang, X. Current optimal active feedback and stealing response mechanism for low-end device constrained defocused iris certification. J. Electron. Imaging 2020, 29, 013012.

- Gangwar, A.; Joshi, A. DeepIrisNet: Deep iris representation with applications in iris recognition and cross-sensor iris recognition. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 2301–2305.

- Liu, N.; Liu, J.; Sun, Z.; Tan, T. A Code-Level Approach to Heterogeneous Iris Recognition. IEEE Trans. Inf. Forensics Secur. 2017, 12, 2373–2386.

- Garea-Llano, E.; Vázquez, M.S.G.; Colores-Vargas, J.M.; Fuentes, L.M.Z.; Acosta, A.A.R. Optimized robust multi-sensor scheme for simultaneous video and image iris recognition. Pattern Recognit. Lett. 2018, 101, 44–51.

- Subramani, B.; Veluchamy, M. Fuzzy contextual inference system for medical image enhancement. Measurement 2019, 148, 106967.

- Benalcazar, D.; Perez, C.; Bastias, D.; Bowyer, K. Iris Recognition: Comparing Visible-Light Lateral and Frontal Illumination to NIR Frontal Illumination. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa Village, HI, USA, 7–11 January 2019; pp. 867–876.

- Lv, J.; Wang, Y.; Xu, L.; Gu, Y.; Zou, L.; Yang, B.; Ma, Z. A method to obtain the near-large fruit from apple image in orchard for single-arm apple harvesting robot. Sci. Hortic. 2019, 257, 108758.

- Rana, H.K.; Azam, S.; Akhtar, M.R.; Quinn, J.M.; Moni, M.A. A fast iris recognition system through optimum feature extraction. PeerJ Comput. Sci. 2019, 5, e184. [Google Scholar] [CrossRef]

- Yao, L.; Muhammad, S. A novel technique for analysing histogram equalized medical images using superpixels. Comput. Assist. Surg. 2019, 24, 53–61. [Google Scholar] [CrossRef]

- Shi, H.; Zhang, Y.; Zhang, Z.; Ma, N.; Zhao, X.; Gao, Y.; Sun, J. Hypergraph-Induced Convolutional Networks for Visual Classification. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 2963–2972. [Google Scholar] [CrossRef]

- Yu, H.; Zhang, X.J.; Wang, S.; Song, S.M. Alternative framework of the Gaussian filter for non-linear systems with synchronously correlated noises. IET Sci. Meas. Technol. 2016, 10, 306–315. [Google Scholar] [CrossRef]

- Völgyes, D.; Martinsen, A.C.; Stray-Pedersen, A.; Waaler, D.; Pedersen, M. A Weighted Histogram-Based Tone Mapping Algorithm for CT Images. Algorithms 2018, 11, 111. [Google Scholar] [CrossRef]

- Yu, Y.; Chen, Y.; Guo, P.; Chen, P.; Peng, N. Noisy Image Blind Deblurring via Hyper Laplacian Prior and Spectral Properties of Convolution Kernel. Chin. J. Eng. Math. 2018, 35, 648–654. [Google Scholar]

- Lan, G.; Shen, Y.; Chen, T.; Zhu, H. Parallel implementations of structural similarity based no-reference image quality assessment. Adv. Eng. Softw. 2017, 114, 372–379. [Google Scholar] [CrossRef]

- Mohebian, M.R.; Marateb, H.R.; Karimimehr, S.; Mañanas, M.A.; Kranjec, J.; Holobar, A. Non-invasive Decoding of the Motoneurons: A Guided Source Separation Method Based on Convolution Kernel Compensation With Clustered Initial Points. Front. Comput. Neurosci. 2019, 13, 14. [Google Scholar] [CrossRef] [PubMed]

- Ding, H.; Pan, Z.; Cen, Q.; Li, Y.; Chen, S. Multi-scale fully convolutional network for gland segmentation using three-class classification. Neurocomputing 2020, 380, 150–161. [Google Scholar] [CrossRef]

- Gao, L.; Zheng, H. Convolutional neural network based on PReLUs-Softplus nonlinear excitation function. J. Shenyang Univ. Technol. 2018, 40, 54–59. [Google Scholar]

- JLU Iris Image Database. Available online: http://www.jlucomputer.com/index/irislibrary/irislibrary.html (accessed on 22 March 2020).

- Liu, S.; Liu, Y.; Zhu, X. Sequence Iris Quality Evaluation Algorithm Based on Morphology and Gray Distribution. J. Jilin Univ. (Eng. Technol. Ed.) 2018, 56, 1156–1162. [Google Scholar]

- Shuai, L.; Yuanning, L.; Xiaodong, Z.; Hao, Z.; Guang, H.; Guangyu, W.; Jingwei, C.; Xinlong, L.; Zukang, W.; Zhiyi, D. Constrained Sequence Iris Quality Evaluation Based on Causal Relationship Decision Reasoning. In Proceedings of the 14th Chinese Conference on Biometric Recognition, CCBR2019, Zhuzhou, China, 11–12 October 2019; pp. 337–345. [Google Scholar]

- Gao, F.; Huang, T.; Wang, J.; Sun, J.; Hussain, A.; Yang, E. Dual-Branch Deep Convolution Neural Network for Polarimetric SAR Image Classification. Appl. Sci. 2017, 7, 447. [Google Scholar] [CrossRef]

- Gangwar, A.; Joshi, A. An Experimental Study of Deep Convolutional Features for Iris Recognition. In Proceedings of the IEEE Signal Processing in Medicine and Biology Symposium (SPMB), Philadelphia, PA, USA, 3 December 2016.

- Maram, A.; Lamiaa, E. Convolutional Neural Network Based Feature Extraction for IRIS Recognition. Int. J. Comput. Sci. Inf. Technol. 2018, 10, 65–78.

- Gómez-Ríos, A.; Tabik, S.; Luengo, J.; Shihavuddin, A.; Krawczyk, B.; Herrera, F. Towards highly accurate coral texture images classification using deep convolutional neural networks and data augmentation. Expert Syst. Appl. 2019, 118, 315–328.

- Umer, S.; Sardar, A.; Dhara, B.C.; Raout, R.K.; Pandey, H.M. Person identification using fusion of iris and periocular deep features. Neural Netw. 2019, 122, 407–419.

- Wang, K.; Kumar, A. Cross-spectral iris recognition using CNN and supervised discrete hashing. Pattern Recognit. 2019, 86, 85–98.

- Zhao, Z.; Kumar, A. Towards more accurate iris recognition using deeply learned spatially corresponding features. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3809–3818.

- Baqar, M.; Ghani, A.; Aftab, A.; Arbab, S.; Yasin, S. Deep belief networks for iris recognition based on contour detection. In Proceedings of the International Conference on Open Source Systems & Technologies (ICOSST), Lahore, Pakistan, 15–17 December 2016; pp. 72–77.

- Cheng, D.; Kou, K.I. Multichannel interpolation of nonuniform samples with application to image recovery. J. Comput. Appl. Math. 2020, 367, 112502.

- Biagi, S.; Isernia, T. On the solvability of singular boundary value problems on the real line in the critical growth case. Discret. Contin. Dyn. Syst. - A 2020, 40, 1131–1157.

- Chen, W.; Sun, Z.; Han, J. Landslide Susceptibility Modeling Using Integrated Ensemble Weights of Evidence with Logistic Regression and Random Forest Models. Appl. Sci. 2019, 9, 171.

- Kuehlkamp, A.; Pinto, A.; Rocha, A.; Bowyer, K.; Czajka, A. Ensemble of Multi-View Learning Classifiers for Cross-Domain Iris Presentation Attack Detection. IEEE Trans. Inf. Forensics Secur. 2018, 14, 1419–1431.

- Al-Saidi, N.M.; Mohammed, A.J.; Al-Azawi, R.J.; Ali, A.H. Iris Features Via Fractal Functions for Authentication Protocols. Int. J. Innov. Comput. Inf. Control 2019, 14, 1441–1453.

- Patil, C.M.; Gowda, S. An Approach for Secure Identification and Authentication for Biometrics using Iris. In Proceedings of the 2017 International Conference on Current Trends in Computer, Electrical, Electronics and Communication (CTCEEC), Mysore, India, 8–9 September 2017; pp. 421–424.

- Manzo, M. Attributed Relational SIFT-based Regions Graph (ARSRG): Concepts and applications. arXiv 2019, arXiv:1912.09972. (under review).

- Gad, R.; Talha, M.; El-Latif, A.A.A.; Zorkany, M.; El-Sayed, A.; El-Fishawy, N.; Muhammad, G. Iris Recognition Using Multi-Algorithmic Approaches for Cognitive Internet of things (CIoT) Framework. Future Gener. Comput. Syst. 2018, 89, 178–191.

| Parameter | JLU-4.0 | JLU-6.0 | JLU-7.0 |

|---|---|---|---|

| Pixel | 5,000,000 | 2,000,000 | 300,000 |

| Collection distance | 150–200 mm | 100–150 mm | 150–200 mm |

| Resolution | 640 × 480 | 640 × 480 | 640 × 480 |

| Light | Infrared | Infrared | Non-infrared |

| Color | Color | Grayscale | Grayscale |

| Method | Clarity (Degree of Light Effect) | Effective Iris Area | Strabismus | Confirm the Presence of Eyes |

|---|---|---|---|---|

| Fusion method | high | high | high | yes |

| Secondary iris recognition | high | medium | medium | yes |

| DPSO-certification function | low | low | medium | yes |

| Statistical cognitive learning | low | low | low | yes |

| Multialgorithm parallel integration | low | low | low | yes |

| Capsule deep learning | medium | medium | medium | yes |

| Iris Library | Method | Number Considered Qualified | Correct Certifications Among Those Considered Qualified |

|---|---|---|---|

| JLU-4.0 | Fusion method | 156 | 155 |

| JLU-4.0 | Secondary iris recognition | 235 | 230 |

| JLU-4.0 | DPSO-certification function | 435 | 434 |

| JLU-4.0 | Statistical cognitive learning | 943 | 943 |

| JLU-4.0 | Multialgorithm parallel integration | 954 | 952 |

| JLU-4.0 | Capsule deep learning | 513 | 511 |

| JLU-6.0 | Fusion method | 179 | 175 |

| JLU-6.0 | Secondary iris recognition | 258 | 257 |

| JLU-6.0 | DPSO-certification function | 474 | 473 |

| JLU-6.0 | Statistical cognitive learning | 963 | 958 |

| JLU-6.0 | Multialgorithm parallel integration | 974 | 973 |

| JLU-6.0 | Capsule deep learning | 526 | 523 |

| JLU-7.0 | Fusion method | 111 | 110 |

| JLU-7.0 | Secondary iris recognition | 210 | 210 |

| JLU-7.0 | DPSO-certification function | 453 | 453 |

| JLU-7.0 | Statistical cognitive learning | 1007 | 1003 |

| JLU-7.0 | Multialgorithm parallel integration | 987 | 985 |

| JLU-7.0 | Capsule deep learning | 546 | 545 |

| Category Number | Image Number in Each Category | Total | Match Number in the Same Category | Match Number in Different Category | Total |

|---|---|---|---|---|---|

| 50 | 50 | 2500 | 2500 | 7500 | 10,000 |

| Method | JLU-4.0 Correct Certifications | JLU-4.0 CRR | JLU-6.0 Correct Certifications | JLU-6.0 CRR | JLU-7.0 Correct Certifications | JLU-7.0 CRR |

|---|---|---|---|---|---|---|

| CNN-special certification function | 9997 | 99.97% | 10,000 | 100% | 9998 | 99.98% |

| FRIR-V2 | 9135 | 91.35% | 8421 | 84.21% | 8754 | 87.54% |

| VGG-Net | 7369 | 73.69% | 8123 | 81.23% | 7869 | 78.69% |

| DeepIrisNet-A | 7869 | 78.69% | 7498 | 74.98% | 8096 | 80.96% |

| DeepIris | 8456 | 84.56% | 8569 | 85.69% | 8375 | 83.75% |

| DeepIrisNet | 9236 | 92.36% | 9347 | 93.47% | 9147 | 91.47% |

| Alex-Net | 6789 | 67.89% | 5698 | 56.98% | 6845 | 68.45% |

| ResNet | 7125 | 71.25% | 7236 | 72.36% | 7523 | 75.23% |

| Inception-v3 | 7698 | 76.98% | 7748 | 77.48% | 7496 | 74.96% |

| CNN-self-learned | 8963 | 89.63% | 8742 | 87.42% | 8541 | 85.41% |

| FCN | 8546 | 85.46% | 8325 | 83.25% | 8698 | 86.98% |

| DBN-RVLR-NN | 7968 | 79.68% | 7762 | 77.62% | 8023 | 80.23% |

| Iris Library | Structure | Number of Correct Certifications | Correct Certification Rate |

|---|---|---|---|

| JLU-4.0 | Original structure | 998 | 99.8% |

| JLU-4.0 | Unprocessed structure | 875 | 87.5% |

| JLU-4.0 | Structure of replacement algorithm | 998 | 99.8% |

| JLU-6.0 | Original structure | 1000 | 100% |

| JLU-6.0 | Unprocessed structure | 869 | 86.9% |

| JLU-6.0 | Structure of replacement algorithm | 997 | 99.7% |

| JLU-7.0 | Original structure | 999 | 99.9% |

| JLU-7.0 | Unprocessed structure | 892 | 89.2% |

| JLU-7.0 | Structure of replacement algorithm | 1000 | 100% |

| No. (500 Training Irises) | JLU-4.0 | JLU-6.0 | JLU-7.0 | No. (100 Training Irises) | JLU-4.0 | JLU-6.0 | JLU-7.0 |

|---|---|---|---|---|---|---|---|

| 1 | 41.8608 | 15.7176 | 24.374 | 1 | 13.1152 | 18.8164 | 25.5812 |

| | 0.6 | 0.58 | 0.58 | | 0.6 | 0.48 | 0.56 |

| 2 | 38.2424 | 25.0296 | 67.3079 | 2 | 56.6001 | 45.5503 | 54.137 |

| | 0.4 | 0.48 | 0.48 | | 0.4 | 0.48 | 0.5 |

| 3 | 15.127 | 16.8023 | 49.4673 | 3 | 27.1576 | 27.717 | 35.3843 |

| | 0.4 | 0.58 | 0.5 | | 0.6 | 0.58 | 0.52 |

| 4 | 54.5455 | 19.2285 | 32.8769 | 4 | 27.3758 | 27.0261 | 16.1855 |

| | 0.6 | 0.54 | 0.48 | | 0.6 | 0.54 | 0.48 |

| 5 | 25.5758 | 24.157 | 54.8345 | 5 | 36.2848 | 40.5636 | 47.3764 |

| | 0.4 | 0.48 | 0.42 | | 0.4 | 0.56 | 0.48 |

| 6 | 26.0909 | 20.8958 | 55.0618 | 6 | 40.3152 | 33.6909 | 42.1176 |

| | 0.4 | 0.58 | 0.46 | | 0.4 | 0.46 | 0.6 |

| 7 | 38.8545 | 13.9576 | 25.9436 | 7 | 21.1273 | 19.9715 | 22.3182 |

| | 0.4 | 0.56 | 0.58 | | 0.6 | 0.54 | 0.52 |

| 8 | 32.9091 | 21.7139 | 52.7546 | 8 | 42.1394 | 35.9509 | 44.1636 |

| | 0.6 | 0.5 | 0.54 | | 0.6 | 0.5 | 0.54 |

| 9 | 16.9151 | 14.2752 | 38.4412 | 9 | 24.4364 | 21.9733 | 27.5012 |

| | 0.6 | 0.52 | 0.54 | | 0.4 | 0.54 | 0.54 |

| 10 | 30.0727 | 14.7309 | 21.1418 | 10 | 16.3152 | 18.5473 | 19.3721 |

| | 0.2 | 0.52 | 0.52 | | 0.6 | 0.54 | 0.5 |

| 11 | 29.3212 | 18.7345 | 50.537 | 11 | 36.4909 | 36.4655 | 42.06 |

| | 0.6 | 0.64 | 0.44 | | 0.6 | 0.5 | 0.42 |

| 12 | 21.6424 | 13.2697 | 34.1182 | 12 | 17.9817 | 19.143 | 20.5849 |

| | 0.6 | 0.62 | 0.52 | | 0.4 | 0.6 | 0.54 |

| 13 | 39.8727 | 19.3085 | 31.3618 | 13 | 30.406 | 28.7436 | 16.9236 |

| | 0.6 | 0.6 | 0.48 | | 0.6 | 0.52 | 0.52 |

| 14 | 25.503 | 23.3364 | 50.7218 | 14 | 24.9576 | 36.1054 | 39.3079 |

| | 0.6 | 0.56 | 0.48 | | 0.6 | 0.46 | 0.58 |

| 15 | 19.0424 | 22.0957 | 54.723 | 15 | 37.6788 | 33.1055 | 37.7097 |

| | 0.4 | 0.48 | 0.36 | | 0.4 | 0.44 | 0.5 |

| No. (500 Training Irises) | JLU-4.0 | JLU-6.0 | JLU-7.0 | No. (100 Training Irises) | JLU-4.0 | JLU-6.0 | JLU-7.0 |

|---|---|---|---|---|---|---|---|

| 1 | 0.306495 | 0.315942 | 0.315942 | 1 | 0.306495 | 0.352305 | 0.324698 |

| | 0.366516 | 0.36435 | 0.36435 | | 0.366516 | 0.340042 | 0.361231 |

| 2 | 0.366516 | 0.352305 | 0.352305 | 2 | 0.366516 | 0.352305 | 0.346574 |

| | 0.306495 | 0.340042 | 0.340042 | | 0.306495 | 0.340042 | 0.346574 |

| 3 | 0.366516 | 0.315942 | 0.346574 | 3 | 0.306495 | 0.315942 | 0.340042 |

| | 0.306495 | 0.36435 | 0.346574 | | 0.366516 | 0.36435 | 0.352305 |

| 4 | 0.306495 | 0.332741 | 0.352305 | 4 | 0.306495 | 0.332741 | 0.352305 |

| | 0.366516 | 0.357203 | 0.340042 | | 0.366516 | 0.357203 | 0.340042 |

| 5 | 0.366516 | 0.352305 | 0.36435 | 5 | 0.366516 | 0.324698 | 0.352305 |

| | 0.306495 | 0.340042 | 0.315942 | | 0.306495 | 0.361231 | 0.340042 |

| 6 | 0.366516 | 0.315942 | 0.357203 | 6 | 0.366516 | 0.357203 | 0.306495 |

| | 0.306495 | 0.36435 | 0.332741 | | 0.306495 | 0.332741 | 0.366516 |

| 7 | 0.366516 | 0.324698 | 0.315942 | 7 | 0.306495 | 0.332741 | 0.340042 |

| | 0.306495 | 0.361231 | 0.36435 | | 0.366516 | 0.357203 | 0.352305 |

| 8 | 0.306495 | 0.346574 | 0.332741 | 8 | 0.306495 | 0.346574 | 0.332741 |

| | 0.366516 | 0.346574 | 0.357203 | | 0.366516 | 0.346574 | 0.357203 |

| 9 | 0.306495 | 0.340042 | 0.332741 | 9 | 0.366516 | 0.332741 | 0.332741 |

| | 0.366516 | 0.352305 | 0.357203 | | 0.306495 | 0.357203 | 0.357203 |

| 10 | 0.321888 | 0.340042 | 0.340042 | 10 | 0.306495 | 0.332741 | 0.346574 |

| | 0.178515 | 0.352305 | 0.352305 | | 0.366516 | 0.357203 | 0.346574 |

| 11 | 0.306495 | 0.285624 | 0.361231 | 11 | 0.306495 | 0.346574 | 0.36435 |

| | 0.366516 | 0.367794 | 0.324698 | | 0.366516 | 0.346574 | 0.315942 |

| 12 | 0.306495 | 0.296382 | 0.340042 | 12 | 0.366516 | 0.306495 | 0.332741 |

| | 0.366516 | 0.367682 | 0.352305 | | 0.306495 | 0.366516 | 0.357203 |

| 13 | 0.306495 | 0.306495 | 0.352305 | 13 | 0.306495 | 0.340042 | 0.340042 |

| | 0.366516 | 0.366516 | 0.340042 | | 0.366516 | 0.352305 | 0.352305 |

| 14 | 0.306495 | 0.324698 | 0.352305 | 14 | 0.306495 | 0.357203 | 0.315942 |

| | 0.366516 | 0.361231 | 0.340042 | | 0.366516 | 0.332741 | 0.36435 |

| 15 | 0.366516 | 0.352305 | 0.367794 | 15 | 0.366516 | 0.361231 | 0.346574 |

| | 0.306495 | 0.340042 | 0.285624 | | 0.306495 | 0.324698 | 0.346574 |

| No. (500 Training Irises) | JLU-4.0 | JLU-6.0 | JLU-7.0 | No. (100 Training Irises) | JLU-4.0 | JLU-6.0 | JLU-7.0 |

|---|---|---|---|---|---|---|---|

| 1 | 0.330504 | 0.336273 | 0.336273 | 1 | 0.330504 | 0.345928 | 0.340773 |

| 2 | 0.330504 | 0.345928 | 0.345928 | 2 | 0.330504 | 0.345928 | 0.346574 |

| 3 | 0.330504 | 0.336273 | 0.346574 | 3 | 0.330504 | 0.336273 | 0.345928 |

| 4 | 0.330504 | 0.343993 | 0.345928 | 4 | 0.330504 | 0.343993 | 0.345928 |

| 5 | 0.330504 | 0.345928 | 0.336273 | 5 | 0.330504 | 0.340773 | 0.345928 |

| 6 | 0.330504 | 0.336273 | 0.343993 | 6 | 0.330504 | 0.343993 | 0.330504 |

| 7 | 0.330504 | 0.340773 | 0.336273 | 7 | 0.330504 | 0.343993 | 0.345928 |

| 8 | 0.330504 | 0.346574 | 0.343993 | 8 | 0.330504 | 0.346574 | 0.343993 |

| 9 | 0.330504 | 0.345928 | 0.343993 | 9 | 0.330504 | 0.343993 | 0.343993 |

| 10 | 0.207189 | 0.345928 | 0.345928 | 10 | 0.330504 | 0.343993 | 0.346574 |

| 11 | 0.330504 | 0.315205 | 0.340773 | 11 | 0.330504 | 0.346574 | 0.336273 |

| 12 | 0.330504 | 0.323476 | 0.345928 | 12 | 0.330504 | 0.330504 | 0.343993 |

| 13 | 0.330504 | 0.330504 | 0.345928 | 13 | 0.330504 | 0.345928 | 0.345928 |

| 14 | 0.330504 | 0.340773 | 0.345928 | 14 | 0.330504 | 0.343993 | 0.336273 |

| 15 | 0.330504 | 0.345928 | 0.315205 | 15 | 0.330504 | 0.340773 | 0.346574 |
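The three preceding tables appear to be numerically linked, and the link can be checked with a short script. This is an observation drawn from the tabulated values, not a formula stated in this part of the paper. Reading the second line of each entry in the first table (for example 0.6 or 0.58) as a proportion p, the two lines of each entry in the second table match -p ln p and -(1 - p) ln(1 - p), and the single value in the third table matches the sum of those two terms, each weighted by its own proportion. The function names `entropy_term` and `weighted_entropy` are hypothetical and chosen only for this sketch.

```python
import math


def entropy_term(p: float) -> float:
    """Return -p * ln(p), using the convention that the term is 0 when p == 0."""
    return 0.0 if p == 0 else -p * math.log(p)


def weighted_entropy(p: float) -> float:
    """Weight the two entropy terms by their own proportions and add them."""
    q = 1.0 - p
    return p * entropy_term(p) + q * entropy_term(q)


# Proportions read from the first table: No. 1 and No. 10, JLU-4.0, 500 training irises.
for p in (0.6, 0.2):
    print(
        p,
        round(entropy_term(p), 6),      # first line of the entry in the second table
        round(entropy_term(1 - p), 6),  # second line of the entry in the second table
        round(weighted_entropy(p), 6),  # entry in the third table
    )
```

For p = 0.6 the script gives 0.306495, 0.366516, and 0.330504, and for p = 0.2 it gives 0.321888, 0.178515, and 0.207189, which agree with the corresponding JLU-4.0 entries for No. 1 and No. 10.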

| No. | JLU-4.0 | JLU-6.0 | JLU-7.0 | No. | JLU-4.0 | JLU-6.0 | JLU-7.0 |

|---|---|---|---|---|---|---|---|

| 1 | 35.7268 | 12.788 | 12.9091 | 9 | 21.8484 | 24.0909 | 19.1818 |

| 2 | 30.7579 | 24.5452 | 55.6973 | 10 | 8.36367 | 22.2121 | 7.5758 |

| 3 | 24.9394 | 22.3033 | 26.9697 | 11 | 22.3636 | 24.3636 | 47.606 |

| 4 | 28.3939 | 13.1515 | 15.1817 | 12 | 15.2727 | 7.9696 | 23.2121 |

| 5 | 28.0303 | 25.606 | 56.2121 | 13 | 15.7272 | 4.06066 | 10.4243 |

| 6 | 21.7576 | 21.9091 | 51.4849 | 14 | 23.3637 | 13.6667 | 40.4849 |

| 7 | 19.8787 | 11.2424 | 26.9091 | 15 | 5.93933 | 11.1515 | 56.1819 |

| 8 | 22.8181 | 6.99993 | 48.4243 | ||||

| No. (500 Training Irises) | JLU-4.0 | JLU-6.0 | JLU-7.0 | No. (100 Training Irises) | JLU-4.0 | JLU-6.0 | JLU-7.0 |

|---|---|---|---|---|---|---|---|

| 1 | 0.15695 | 0.149091 | 0.0970519 | 1 | 0.399367 | 0.114928 | 0.0917578 |

| 2 | 0.117914 | 0.165834 | 0.139936 | 2 | 0.0796696 | 0.0911246 | 0.178281 |

| 3 | 0.303185 | 0.203128 | 0.0944764 | 3 | 0.168877 | 0.147454 | 0.134772 |

| 4 | 0.0957285 | 0.122894 | 0.078089 | 4 | 0.152059 | 0.0874362 | 0.158619 |

| 5 | 0.201546 | 0.187428 | 0.18785 | 5 | 0.113255 | 0.114782 | 0.209799 |

| 6 | 0.122257 | 0.160448 | 0.153639 | 6 | 0.0791217 | 0.106853 | 0.179213 |

| 7 | 0.0750067 | 0.146459 | 0.158722 | 7 | 0.173029 | 0.101146 | 0.203892 |

| 8 | 0.127508 | 0.0558626 | 0.164931 | 8 | 0.0995787 | 0.0337403 | 0.180166 |

| 9 | 0.189364 | 0.285385 | 0.0896586 | 9 | 0.13108 | 0.180149 | 0.125325 |

| 10 | 0.0179044 | 0.254989 | 0.063361 | 10 | 0.0942713 | 0.196781 | 0.0677668 |

| 11 | 0.14026 | 0.17219 | 0.149724 | 11 | 0.112702 | 0.115778 | 0.207409 |

| 12 | 0.129773 | 0.110362 | 0.1203 | 12 | 0.12452 | 0.07656 | 0.185284 |

| 13 | 0.0725356 | 0.0386744 | 0.056209 | 13 | 0.095119 | 0.0249799 | 0.108916 |

| 14 | 0.168471 | 0.106487 | 0.134977 | 14 | 0.172153 | 0.0621963 | 0.157609 |

| 15 | 0.0457266 | 0.0853465 | 0.187673 | 15 | 0.0231097 | 0.0535391 | 0.258172 |

| No. (500 Training Irises) | JLU-4.0 | JLU-6.0 | JLU-7.0 | No. (100 Training Irises) | JLU-4.0 | JLU-6.0 | JLU-7.0 |

|---|---|---|---|---|---|---|---|

| | 11.9502 | 13.2395 | 11.0544 | | 12.2111 | 8.7975 | 14.2748 |

| | 154,844 | 562,137 | 63,220.3 | | 201,013 | 6617.71 | 1,583,010 |

| Case | Metric | JLU-4.0 | JLU-6.0 | JLU-7.0 |

|---|---|---|---|---|

| 1 | Qualified quantity | 937 | 953 | 964 |

| 1 | Accuracy | 42.39% | 47.53% | 28.75% |

| 2 | Qualified quantity | 975 | 946 | 968 |

| 2 | Accuracy | 44.53% | 42.85% | 32.56% |

| 3 | Qualified quantity | 986 | 986 | 972 |

| 3 | Accuracy | 37.59% | 39.42% | 41.25% |

| 4 | Qualified quantity | 903 | 863 | 893 |

| 4 | Accuracy | 50.67% | 58.25% | 49.53% |

| No. | Training Iris Number | Test Iris Number | Number of Correct Certification | Correct Certification Rate | Whether to Update Judgment |

|---|---|---|---|---|---|

| JLU-4.0 | |||||

| 1 | 10 | 50 | 48 | 96% | No |

| 2 | 10 | 100 | 69 | 69% | Yes |

| 3 | 100 | 200 | 189 | 94.5% | Yes |

| 4 | 500 | 200 | 200 | 100% | No |

| JLU-6.0 | |||||

| 1 | 10 | 50 | 38 | 76% | Yes |

| 2 | 100 | 200 | 193 | 96.5% | No |

| 3 | 100 | 500 | 375 | 75% | Yes |

| 4 | 500 | 500 | 499 | 99.8% | No |

| JLU-7.0 | |||||

| 1 | 10 | 50 | 42 | 84% | Yes |

| 2 | 50 | 100 | 89 | 89% | Yes |

| 3 | 200 | 200 | 198 | 99% | No |

| 4 | 200 | 500 | 423 | 84.6% | Yes |
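The correct certification rate in the table above is the number of correct certifications divided by the number of test irises, and the update column is consistent with a simple cutoff on that rate. The sketch below checks both. The cutoff value is an assumption: the table only implies that it lies above 94.5% (an update is triggered) and at or below 96% (no update), so `ASSUMED_THRESHOLD = 0.95` and the function names are hypothetical.

```python
# Rows copied from the table: (library, training irises, test irises,
# correct certifications, update flag reported in the table).
ROWS = [
    ("JLU-4.0", 10, 50, 48, False),
    ("JLU-4.0", 10, 100, 69, True),
    ("JLU-4.0", 100, 200, 189, True),
    ("JLU-4.0", 500, 200, 200, False),
    ("JLU-6.0", 10, 50, 38, True),
    ("JLU-6.0", 100, 200, 193, False),
    ("JLU-6.0", 100, 500, 375, True),
    ("JLU-6.0", 500, 500, 499, False),
    ("JLU-7.0", 10, 50, 42, True),
    ("JLU-7.0", 50, 100, 89, True),
    ("JLU-7.0", 200, 200, 198, False),
    ("JLU-7.0", 200, 500, 423, True),
]

# Assumption: the paper's actual cutoff is not given in this section.
ASSUMED_THRESHOLD = 0.95


def correct_rate(correct: int, tested: int) -> float:
    """Fraction of test irises that were certified correctly."""
    return correct / tested


def needs_update(correct: int, tested: int, threshold: float = ASSUMED_THRESHOLD) -> bool:
    """Flag an update when the correct certification rate falls below the cutoff."""
    return correct_rate(correct, tested) < threshold


for library, n_train, n_test, n_correct, reported in ROWS:
    rate = correct_rate(n_correct, n_test)
    print(f"{library} train={n_train} test={n_test} rate={rate:.1%} "
          f"update={needs_update(n_correct, n_test)} reported={reported}")
```

With this assumed cutoff, the computed flag agrees with the reported column for all twelve rows.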

| The Number of Training Iris | Category Label Range |

|---|---|

| JLU-4.0 | |

| 10 | [1000, 2000] |

| 100 | [1000, 2000], [1800, 1900] |

| 500 | [1000, 2000], [1800, 1900], [13000, 14000], [8900, 9000] |

| JLU-6.0 | |

| 10 | [890,000, 900,000], [310,000, 320,000] |

| 50 | [890,000, 900,000], [310,000, 320,000], [138,000,000, 139,000,000], [1,820,000, 1,830,000] |

| 100 | [890,000, 900,000], [310,000, 320,000], [138,000,000, 139,000,000], [1,820,000, 1,830,000], [2,000,000, 2,100,000] |

| 500 | [890,000, 900,000], [310,000, 320,000], [138,000,000, 139,000,000], [1,820,000, 1,830,000], [2,000,000, 2,100,000], [1,190,000, 1,200,000], [125,000,000, 126,000,000] |

| JLU-7.0 | |

| 10 | [138,000, 139,000], [1,150,000, 1,160,000] |

| 50 | [138,000, 139,000], [1,150,000, 1,160,000], [3,500,000, 3,800,000] |

| 100 | [138,000, 139,000], [1,150,000, 1,160,000], [3,500,000, 3,800,000], [2,900,000, 3,000,000], [840,000, 850,000] |

| 200 | [138,000, 139,000], [1,150,000, 1,160,000], [3,500,000, 3,800,000], [2,900,000, 3,000,000], [840,000, 850,000], [2,470,000, 2,480,000], [720,000, 730,000] |

| 500 | [138,000, 139,000], [1,150,000, 1,160,000], [3,500,000, 3,800,000], [2,900,000, 3,000,000], [840,000, 850,000], [2,470,000, 2,480,000], [720,000, 730,000] |

| Iris Library | Category | Total Number of Collections | Quality Errors | False Rejections | False Acceptances |

|---|---|---|---|---|---|

| JLU-4.0 | Category 1 | 105 | 3 | 2 | 0 |

| JLU-4.0 | Category 2 | 107 | 5 | 1 | 1 |

| JLU-4.0 | Category 3 | 103 | 0 | 3 | 0 |

| JLU-4.0 | Category 4 | 101 | 1 | 0 | 0 |

| JLU-4.0 | Category 5 | 106 | 2 | 3 | 1 |

| JLU-6.0 | Category 1 | 101 | 0 | 1 | 0 |

| JLU-6.0 | Category 2 | 100 | 0 | 0 | 0 |

| JLU-6.0 | Category 3 | 103 | 1 | 1 | 1 |

| JLU-6.0 | Category 4 | 100 | 0 | 0 | 0 |

| JLU-6.0 | Category 5 | 104 | 1 | 3 | 0 |

| JLU-7.0 | Category 1 | 105 | 1 | 3 | 1 |

| JLU-7.0 | Category 2 | 109 | 5 | 4 | 0 |

| JLU-7.0 | Category 3 | 104 | 1 | 2 | 1 |

| JLU-7.0 | Category 4 | 107 | 0 | 7 | 0 |

| JLU-7.0 | Category 5 | 105 | 2 | 3 | 0 |

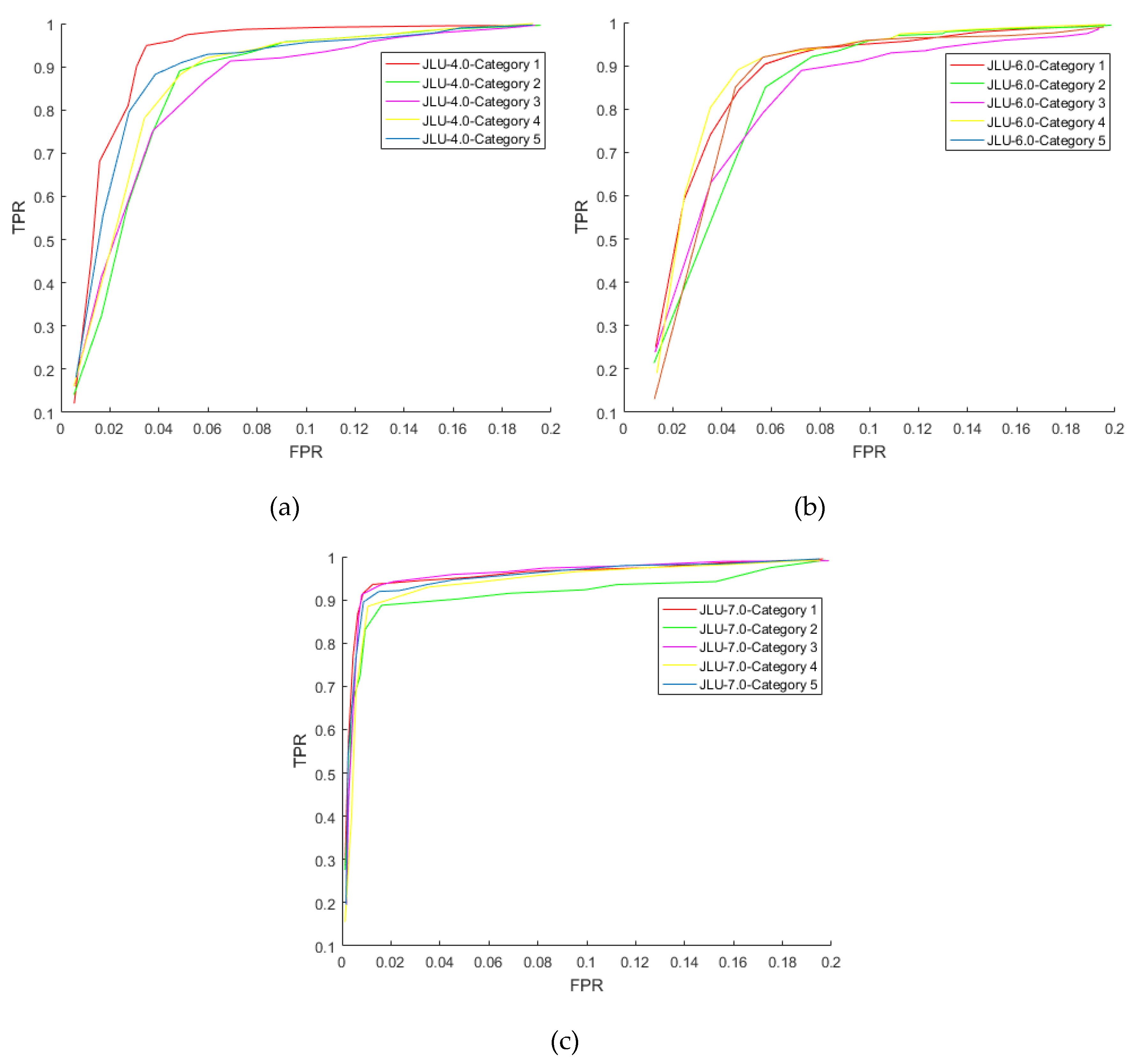

| Iris Library | Category | The Same Category | Different Category |

|---|---|---|---|

| JLU-4.0 | Category 1 | 1756 | 8435 |

| JLU-4.0 | Category 2 | 1657 | 9135 |

| JLU-4.0 | Category 3 | 1568 | 9253 |

| JLU-4.0 | Category 4 | 1456 | 8965 |

| JLU-4.0 | Category 5 | 1536 | 9568 |

| JLU-6.0 | Category 1 | 1478 | 8965 |

| JLU-6.0 | Category 2 | 1569 | 8567 |

| JLU-6.0 | Category 3 | 1745 | 9542 |

| JLU-6.0 | Category 4 | 1869 | 9645 |

| JLU-6.0 | Category 5 | 1756 | 9456 |

| JLU-7.0 | Category 1 | 1689 | 7895 |

| JLU-7.0 | Category 2 | 1756 | 8456 |

| JLU-7.0 | Category 3 | 1896 | 9674 |

| JLU-7.0 | Category 4 | 1598 | 8456 |

| JLU-7.0 | Category 5 | 1796 | 8695 |

| Iris Library | Algorithm Running Time in This Paper | Recognizable Irises Considered by Evaluation | The Correct Number of Recognizable | Unrecognizable Irises Considered by Evaluation | The Correct Number of Unrecognizable |

|---|---|---|---|---|---|

| JLU-4.0 | 6186 ms | 786 | 785 | 214 | 0 |

| JLU-6.0 | 6347 ms | 815 | 815 | 185 | 0 |

| JLU-7.0 | 6412 ms | 868 | 868 | 132 | 0 |

| Category Number | Image Number in Each Category | Total | Match Number in the Same Category | Match Number in Different Category | Total |

|---|---|---|---|---|---|

| 50 | 100 | 5000 | 5000 | 10,000 | 15,000 |

| Method | Recognizable Irises Considered by Evaluation | Certified Ratio | Unrecognizable Irises Considered by Evaluation |

|---|---|---|---|

| JLU-4.0 | |||

| Qualified iris evaluation indicators in literature [41] | 9135 | 60.9% | 5865 |

| Inference engine system in literature [40] | 9635 | 64.233% | 5365 |

| Quality evaluation fuzzy reasoning system (example indicators in this paper) | 13,585 | 90.567% | 1415 |

| JLU-6.0 | |||

| Qualified iris evaluation indicators in literature [41] | 8123 | 54.153% | 6877 |

| Inference engine system in literature [40] | 7658 | 51.053% | 7342 |

| Quality evaluation fuzzy reasoning system (example indicators in this paper) | 14,325 | 95.5% | 675 |

| JLU-7.0 | |||

| Qualified iris evaluation indicators in literature [41] | 6635 | 44.233% | 8365 |

| Inference engine system in literature [40] | 7468 | 49.787% | 7532 |

| Quality evaluation fuzzy reasoning system (example indicators in this paper) | 12,453 | 83.02% | 2547 |
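The certified ratio in the table above is consistent with the recognizable count divided by the sum of the recognizable and unrecognizable counts, which is 15,000 in every row. The sketch below recomputes the ratio for the JLU-4.0 rows as a check. The dictionary keys and the function name `certified_ratio` are shorthand introduced for this example only.

```python
# JLU-4.0 rows from the table: method -> (recognizable, unrecognizable).
JLU_4_ROWS = {
    "literature [41] indicators": (9135, 5865),
    "literature [40] inference engine": (9635, 5365),
    "fuzzy reasoning system (this paper)": (13585, 1415),
}


def certified_ratio(recognizable: int, unrecognizable: int) -> float:
    """Percentage of all evaluated irises that were judged recognizable."""
    return 100.0 * recognizable / (recognizable + unrecognizable)


for method, (recognizable, unrecognizable) in JLU_4_ROWS.items():
    total = recognizable + unrecognizable
    ratio = certified_ratio(recognizable, unrecognizable)
    print(f"{method}: {recognizable}/{total} = {ratio:.3f}%")
```

The recomputed values, 60.900%, 64.233%, and 90.567%, match the Certified Ratio column for JLU-4.0.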

| Case | The Correct Number of Recognizable Irises | CRR of Recognizable | The Correct Number of Unrecognizable Irises | CRR of Unrecognizable |

|---|---|---|---|---|

| JLU-4.0 | ||||

| 0 | 13,585 | 100% | 1 | 0.0707% |

| 1 | 6842 | 74.899% | 1125 | 19.182% |

| 2 | 7368 | 80.657% | 426 | 7.263% |

| 3 | 8568 | 88.928% | 536 | 9.991% |

| 4 | 8356 | 91.472% | 1365 | 23.274% |

| 5 | 6789 | 70.462% | 145 | 2.703% |

| 6 | 8756 | 90.877% | 3658 | 68.183% |

| 7 | 10,685 | 78.653% | 85 | 6.007% |

| 8 | 9756 | 71.815% | 12 | 0.848% |

| 9 | 11,423 | 84.085% | 6 | 0.424% |

| 10 | 13,086 | 96.327% | 10 | 0.707% |

| 11 | 10,985 | 80.861% | 9 | 0.636% |

| 12 | 10,586 | 77.924% | 23 | 1.625% |

| JLU-6.0 | ||||

| 0 | 14,323 | 99.986% | 0 | 0% |

| 1 | 6135 | 75.526% | 1574 | 22.888% |

| 2 | 6585 | 81.066% | 589 | 8.565% |

| 3 | 6987 | 91.237% | 458 | 6.238% |

| 4 | 7162 | 88.169% | 2869 | 41.719% |

| 5 | 5869 | 76.639% | 95 | 1.294% |

| 6 | 7436 | 97.101% | 3658 | 49.823% |

| 7 | 11,468 | 80.056% | 25 | 3.704% |

| 8 | 10,125 | 70.681% | 3 | 0.444% |

| 9 | 11,987 | 83.678% | 5 | 0.741% |

| 10 | 13,845 | 96.649% | 0 | 0% |

| 11 | 12,452 | 86.925% | 0 | 0% |

| 12 | 12,653 | 88.328% | 5 | 0.741% |

| JLU-7.0 | ||||

| 0 | 12,452 | 99.992% | 2 | 0.079% |

| 1 | 4785 | 72.118% | 1023 | 12.230% |

| 2 | 4985 | 75.132% | 523 | 6.252% |

| 3 | 6723 | 90.024% | 354 | 4.700% |

| 4 | 5621 | 84.717% | 1862 | 22.259% |

| 5 | 5862 | 78.495% | 115 | 1.527% |

| 6 | 7256 | 97.161% | 3675 | 48.792% |

| 7 | 9926 | 79.708% | 1 | 0.039% |

| 8 | 9352 | 75.098% | 3 | 0.118% |

| 9 | 9789 | 78.607% | 1 | 0.039% |

| 10 | 11,589 | 93.062% | 1 | 0.039% |

| 11 | 10,985 | 88.212% | 3 | 0.118% |

| 12 | 10,709 | 85.995% | 4 | 0.157% |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Shuai, L.; Yuanning, L.; Xiaodong, Z.; Guang, H.; Zukang, W.; Xinlong, L.; Chaoqun, W.; Jingwei, C. Heterogeneous Iris One-to-One Certification with Universal Sensors Based On Quality Fuzzy Inference and Multi-Feature Fusion Lightweight Neural Network. Sensors 2020, 20, 1785. https://doi.org/10.3390/s20061785
