A Formal and Quantifiable Log Analysis Framework for Test Driving of Autonomous Vehicles

Abstract

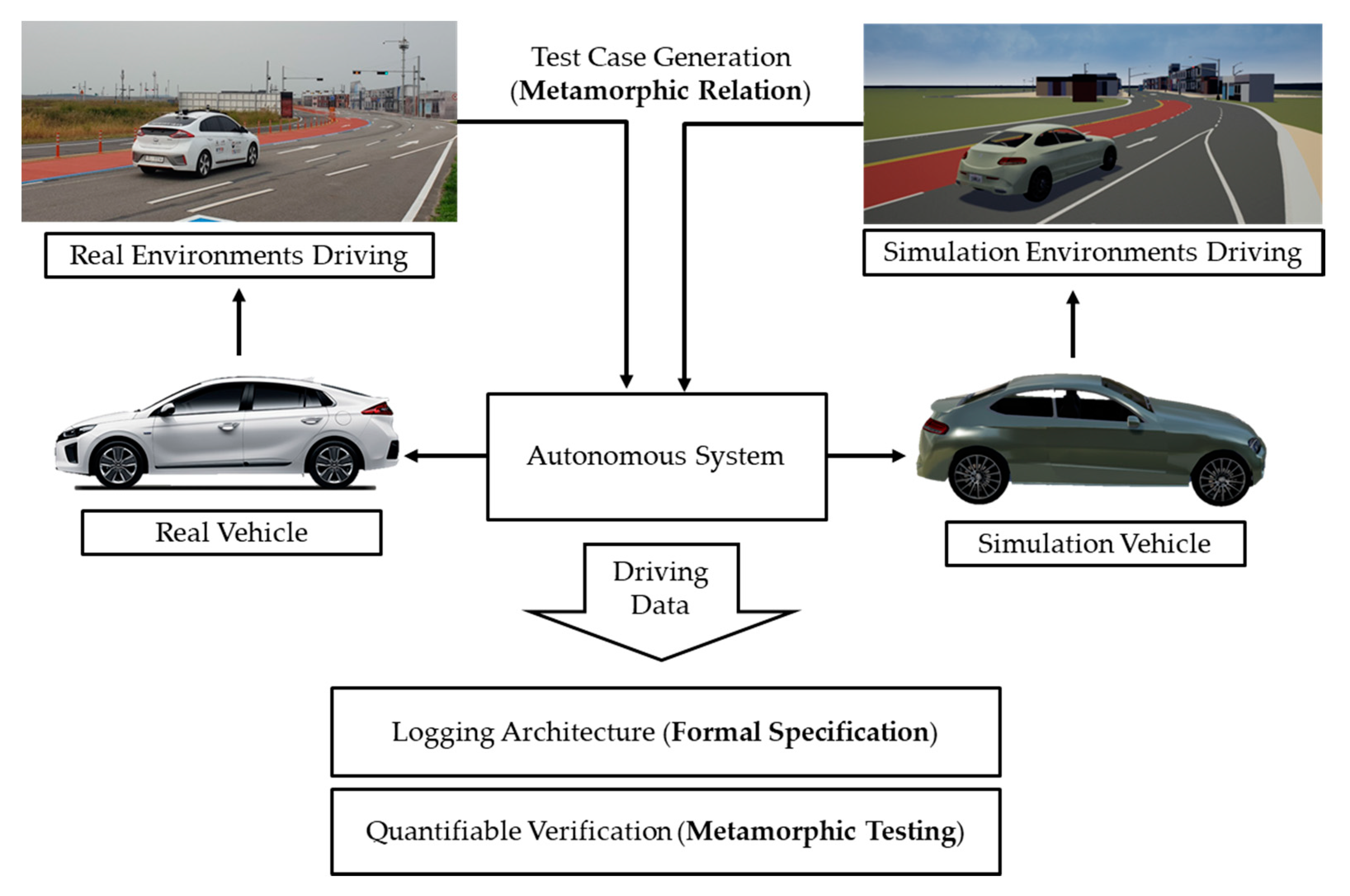

1. Introduction

1.1. Driving Data Recording

1.2. Formal Methods

1.3. Metamorphic Testing

1.4. Problem Statement

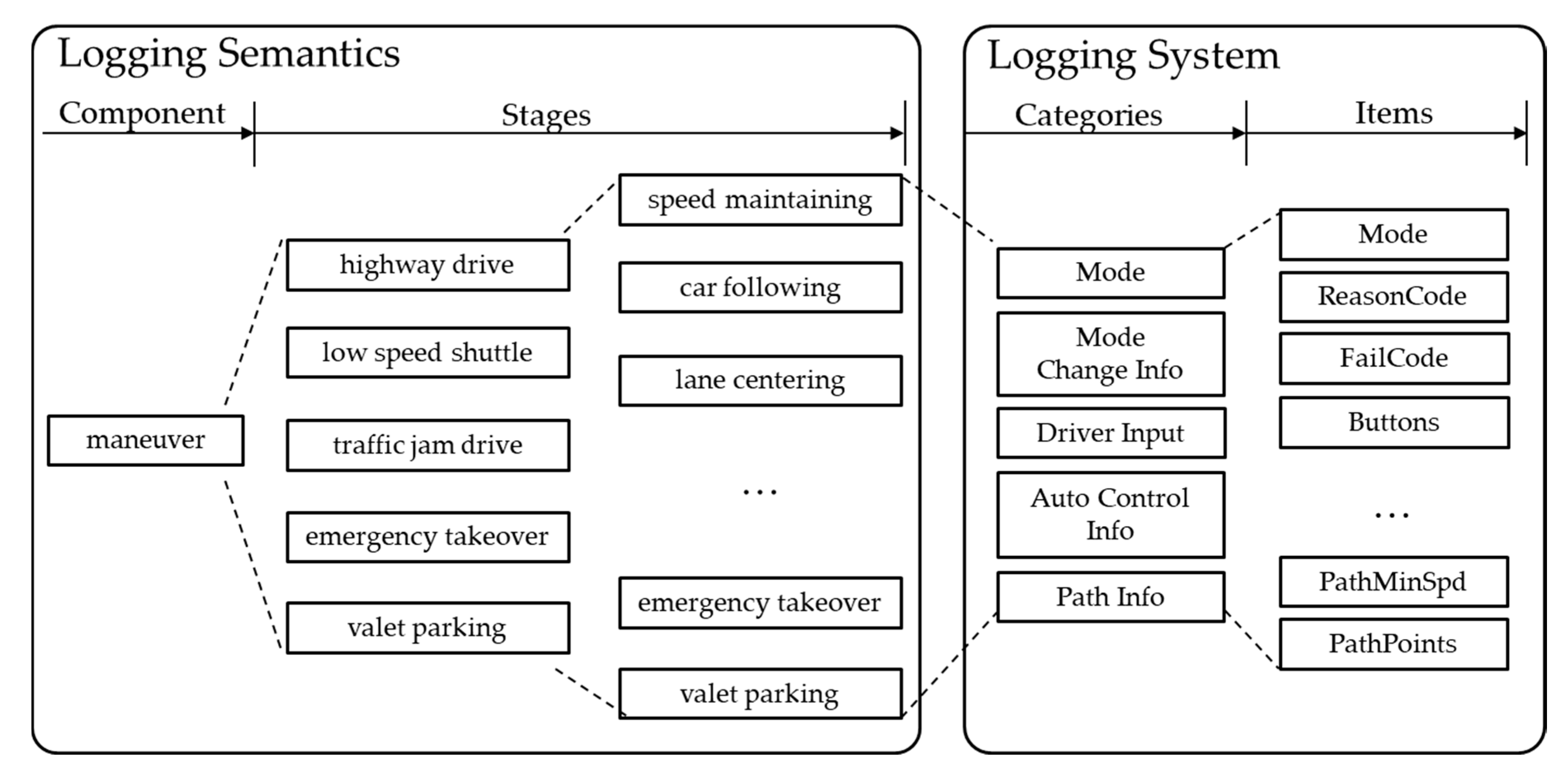

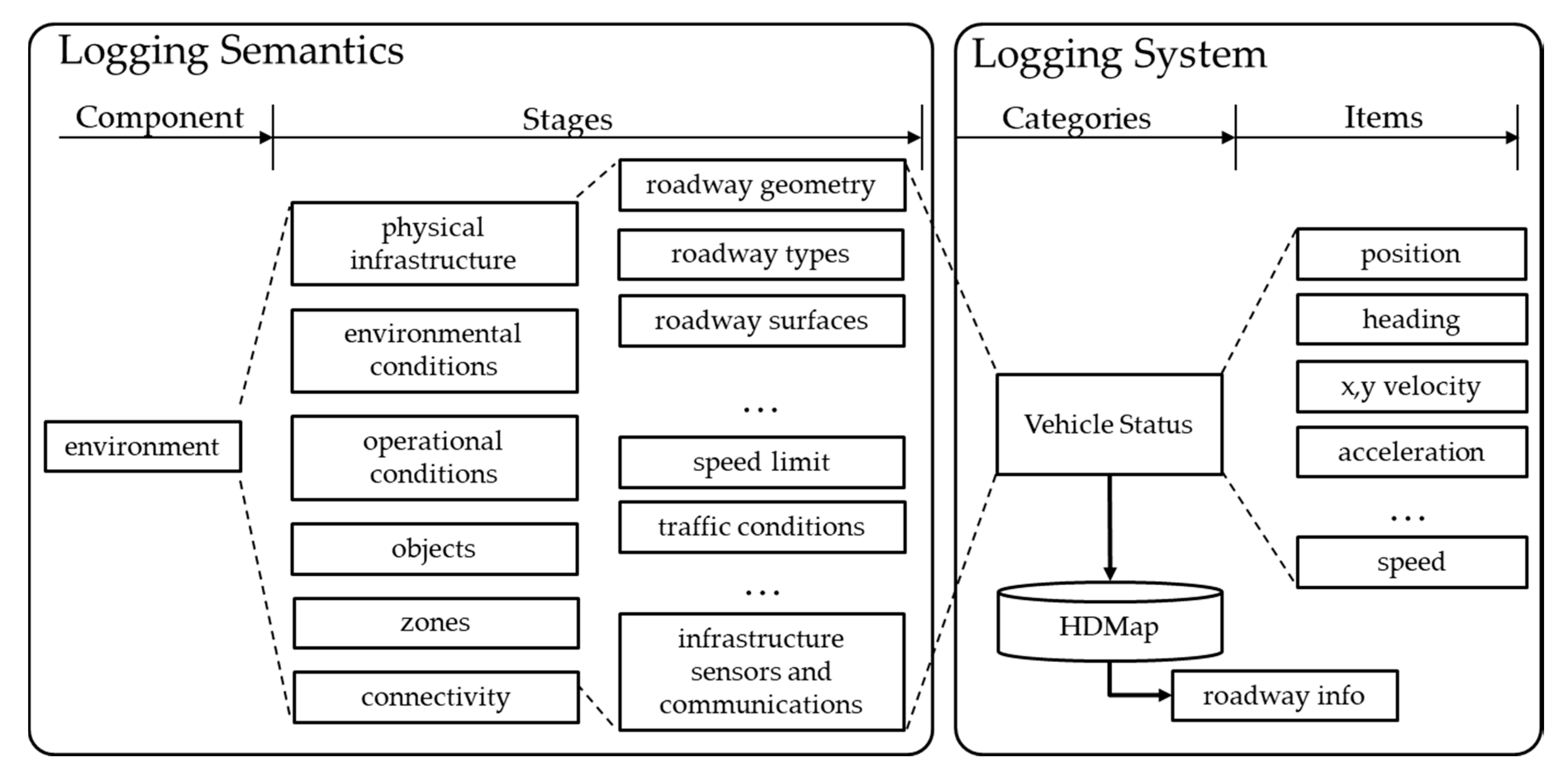

2. The Logging Architecture

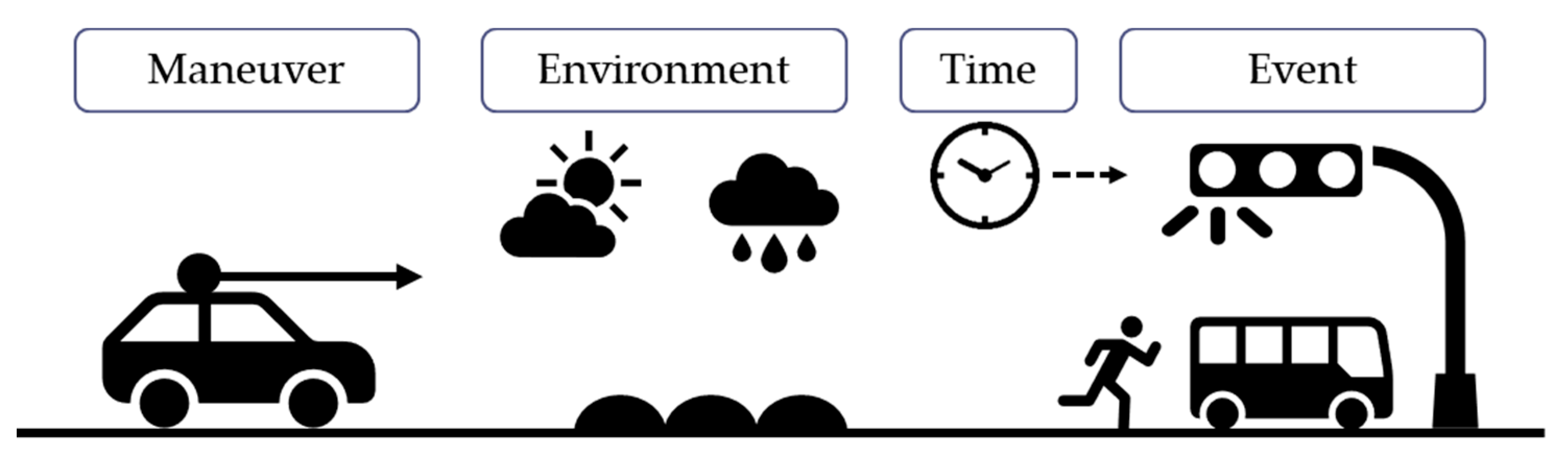

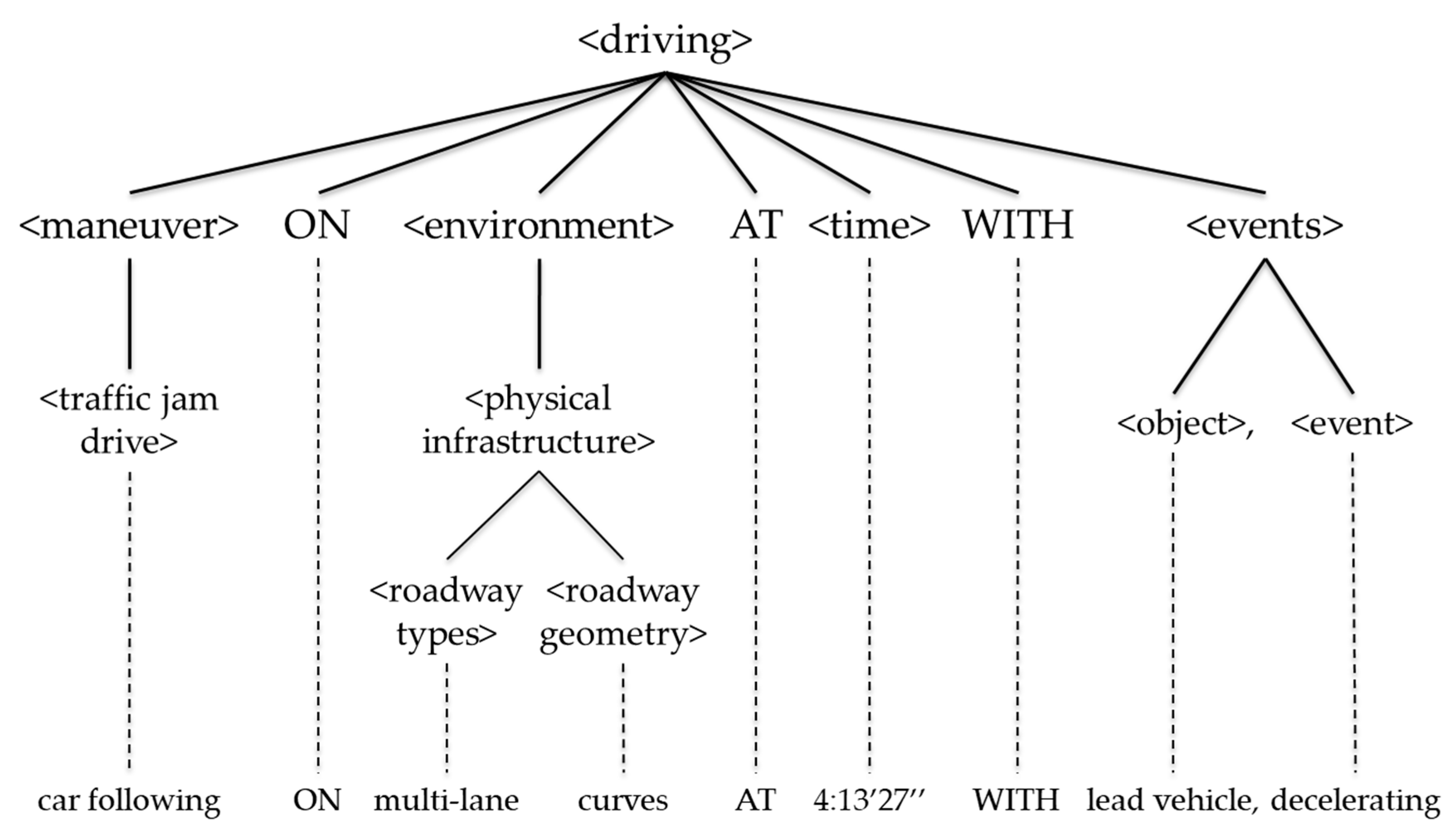

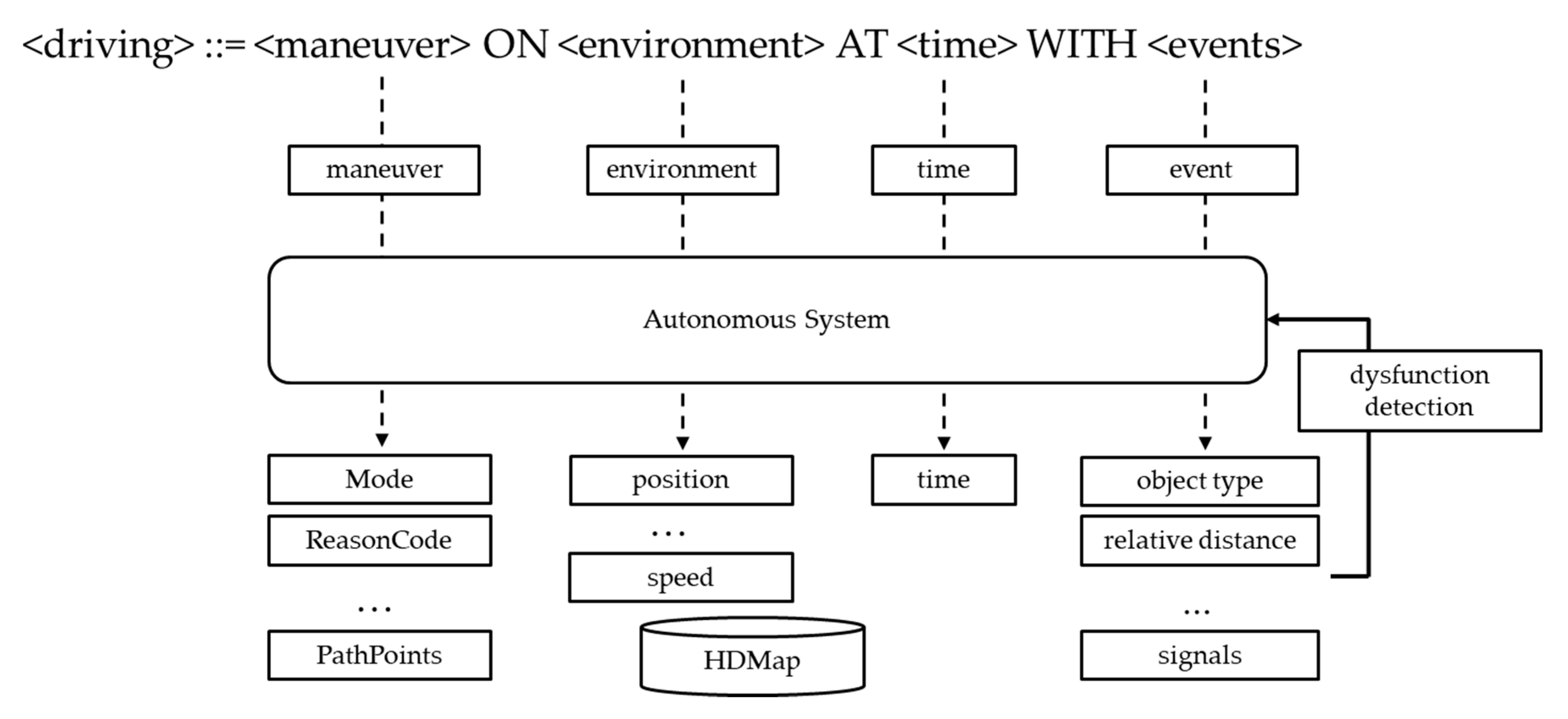

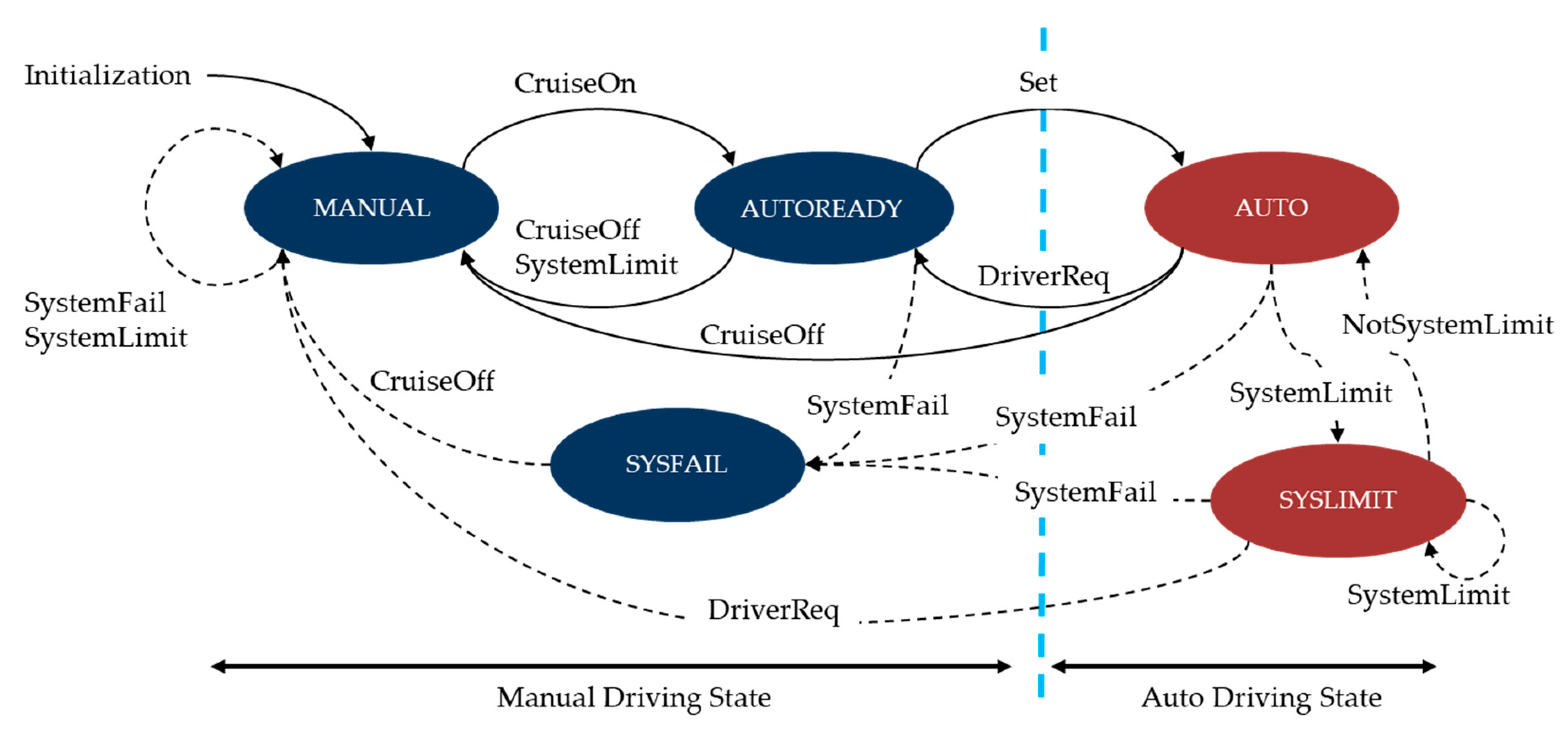

2.1. Formal Specifications of the Driving Situation

<driving> ::= car following ON multi-lane curves AT 4:13′27″ WITH lead vehicle, stopped
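As an illustration, such a specification can be assembled mechanically from its four parts. The Python sketch below is hypothetical: `DrivingSituation` and `render` are illustrative names, not part of the framework. It assumes each part is already available as a terminal string and joins the environment terminals with commas, as the grammar does.

```python
from dataclasses import dataclass, field


@dataclass
class DrivingSituation:
    """One instance of <driving> ::= <maneuver> ON <environment> AT <time> [WITH <events>]."""
    maneuver: str                 # e.g. "car following"
    environment: list[str]        # environment terminals in grammar order
    time: str                     # logging time taken from the Time component
    events: list[tuple[str, str]] = field(default_factory=list)  # (object, object event) pairs

    def render(self) -> str:
        text = f"{self.maneuver} ON {', '.join(self.environment)} AT {self.time}"
        if self.events:  # the WITH clause is optional in the grammar
            text += " WITH " + ", ".join(f"{obj}, {evt}" for obj, evt in self.events)
        return text


spec = DrivingSituation("car following", ["curves", "multi-lane"], "4:13′27″",
                        [("lead vehicle", "stopped")])
print(spec.render())  # car following ON curves, multi-lane AT 4:13′27″ WITH lead vehicle, stopped
```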

2.2. The Logging Data Structure

- Maneuver Component

  - Mode: This category includes the current driving mode of the autonomous vehicle, the reason code for entering the current mode, and a failure code indicating the approximate cause when a system error occurs. The first step in analyzing driving information is to check the current driving mode and the reason for any mode change.

  - Mode Change Info: This category records changes in the autonomous driving mode, including the buttons pressed by the driver. Recording mode changes is important because an autonomous vehicle behaves differently depending on its driving mode. The mode immediately preceding the current one is also recorded; when the mode changes many times during a drive, knowing which mode the vehicle was in before the current stage greatly helps data analysis.

  - Driver Input: This category stores the steering, accelerator pedal, brake pedal, gear position, steering torque, and other driver inputs. With this information, an inspector can see how the driver was operating the vehicle at the time of logging.

  - Control Info: This category stores the vehicle control commands issued by the autonomous system: target steering, target speed, and target acceleration. Depending on the vehicle's speed control type, target accelerator/brake pedal positions can also be stored. Turn-signal commands (left/right) are stored as well. From this information, the intention of the autonomous system can be identified.

  - Path Info: This category stores the driving path generated by the path planning module, on which the autonomous system bases its driving.

- Environment Component

  - Vehicle Status: This category records the running status of the vehicle. By default, the current position and heading are recorded, together with the velocity and acceleration along the vehicle's vertical and horizontal axes. The target speed and current speed during autonomous driving are stored as well.

- Time Component

  - Time: This category represents the time of logging. The logging interval is adjustable; we logged every 10 or 20 ms to minimize the loss of very short-term driving information. In future work, this category could be extended with additional child items to represent the temporal context of other components.

- Event Component

  - Object Info: This category stores information about objects in the vehicle's path, such as object type, relative distance, relative velocity, and size, as well as traffic light signals. It can be used to determine how the vehicle responded to an obstacle ahead.
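To show how these categories support analysis, the Python sketch below models a small, hypothetical subset of one logged row and extracts mode transitions and the logging interval. The field names mirror items of the logging data structure, but the units, the mode names "Manual"/"Auto", and the reason code values are assumptions for illustration only.

```python
from dataclasses import dataclass


@dataclass
class LogRecord:
    """Hypothetical subset of one logged row; field names mirror items in the logging table."""
    logging_time_ms: int  # Time: logging time (milliseconds assumed)
    mode: str             # Mode: current driving mode
    reason_code: int      # ReasonCode: reason for entering the current mode
    vel_curr: float       # VelCurr: current vehicle speed (m/s assumed)


def mode_changes(records):
    """Return (time, previous mode, new mode, reason code) for each mode transition."""
    changes = []
    for prev, curr in zip(records, records[1:]):
        if curr.mode != prev.mode:
            changes.append((curr.logging_time_ms, prev.mode, curr.mode, curr.reason_code))
    return changes


def max_gap_ms(records):
    """Largest interval between consecutive records, e.g. to confirm a 10 or 20 ms period."""
    return max(b.logging_time_ms - a.logging_time_ms for a, b in zip(records, records[1:]))


# Hypothetical four-row log: the vehicle switches from manual to autonomous mode at 20 ms.
log = [
    LogRecord(0, "Manual", 0, 0.0),
    LogRecord(10, "Manual", 0, 0.5),
    LogRecord(20, "Auto", 3, 1.0),
    LogRecord(40, "Auto", 3, 1.4),
]
print(mode_changes(log))  # [(20, 'Manual', 'Auto', 3)]
print(max_gap_ms(log))    # 20
```

A gap larger than the configured period (here 20 ms after a 10 ms cadence) would flag possibly lost short-term driving information.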

3. Quantifiable Verifications

3.1. Metamorphic Relations

3.2. Analysis Methods

| Step | Derivation |
|---|---|
| (step 1) maneuver | <driving> ::= <maneuver> |
| | ::= <traffic jam drive> |
| | ::= “car following” |
| (step 2) environment | ::= “car following” ON <environment> |
| | ::= “car following” ON <physical infrastructure> |
| | ::= “car following” ON <roadway geometry>, <roadway types> |
| | ::= “car following” ON “straightaways”, “urban” |
| (step 3) time | ::= “car following” ON “straightaways”, “urban” AT “time” |
| (step 4) events | ::= “car following” ON “straightaways”, “urban” AT “time” WITH <events> |
| | ::= “car following” ON “straightaways”, “urban” AT “time” WITH <object>, <object event> |
| | ::= “car following” ON “straightaways”, “urban” AT “time” WITH “lead vehicle”, “stopped” |
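The derivation can be checked mechanically: every terminal chosen in steps 1 to 4 must belong to the nonterminal it replaces. The Python sketch below encodes only the productions used here, copied from Appendix A, as sets of terminals; `GRAMMAR`, `DERIVATION`, and `invalid_steps` are illustrative names. Step 3 is omitted because <time> ::= “time” has a single terminal.

```python
# Excerpt of the Appendix A grammar: nonterminal -> allowed terminals.
GRAMMAR = {
    "traffic jam drive": {"maintain speed", "car following", "lane centering",
                          "lane switching/overtaking", "obstacle avoidance",
                          "follow driving law", "low speed merge"},
    "roadway geometry": {"straightaways", "curves", "hills", "lateral crests",
                         "corners", "negative obstacles", "lane width"},
    "roadway types": {"divided highway", "undivided highway", "urban", "rural",
                      "parking", "multi-lane", "single-lane", "4-way/2-way stop",
                      "merge lanes"},
    "object": {"lead vehicle", "side/back vehicle", "pedestrian", "pedalcyclist",
               "animal/debris/dynamic object", "signals/signs"},
    "object event": {"decelerating", "stopped", "accelerating", "turning",
                     "cutting out", "parking"},
}

# Each (nonterminal, terminal) substitution made in the derivation above.
DERIVATION = [
    ("traffic jam drive", "car following"),  # step 1: maneuver
    ("roadway geometry", "straightaways"),    # step 2: environment
    ("roadway types", "urban"),
    ("object", "lead vehicle"),               # step 4: events
    ("object event", "stopped"),
]


def invalid_steps(derivation):
    """Return every substitution whose terminal is not allowed by its nonterminal."""
    return [(nt, t) for nt, t in derivation if t not in GRAMMAR.get(nt, set())]


print(invalid_steps(DERIVATION))  # []
```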

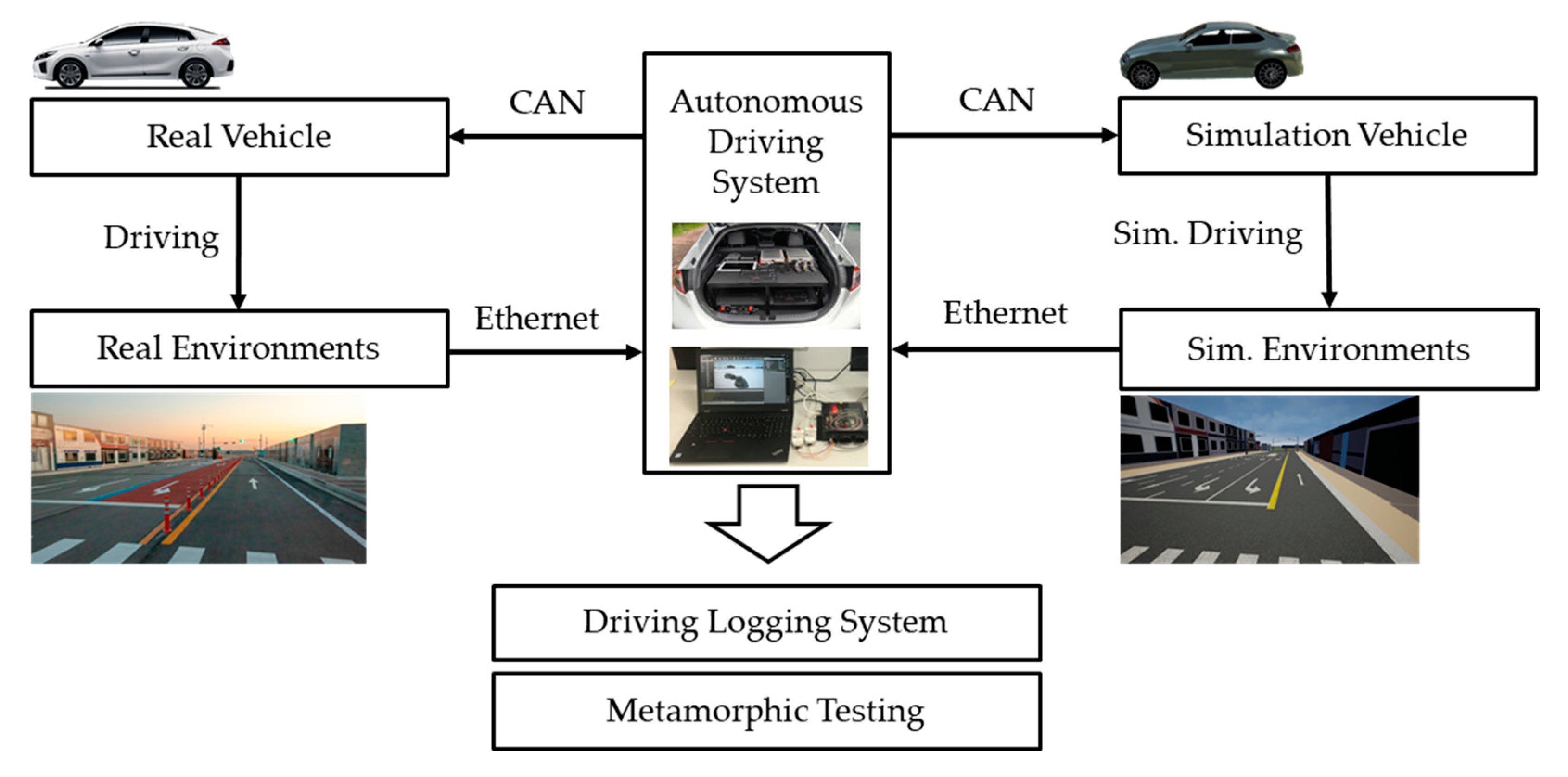

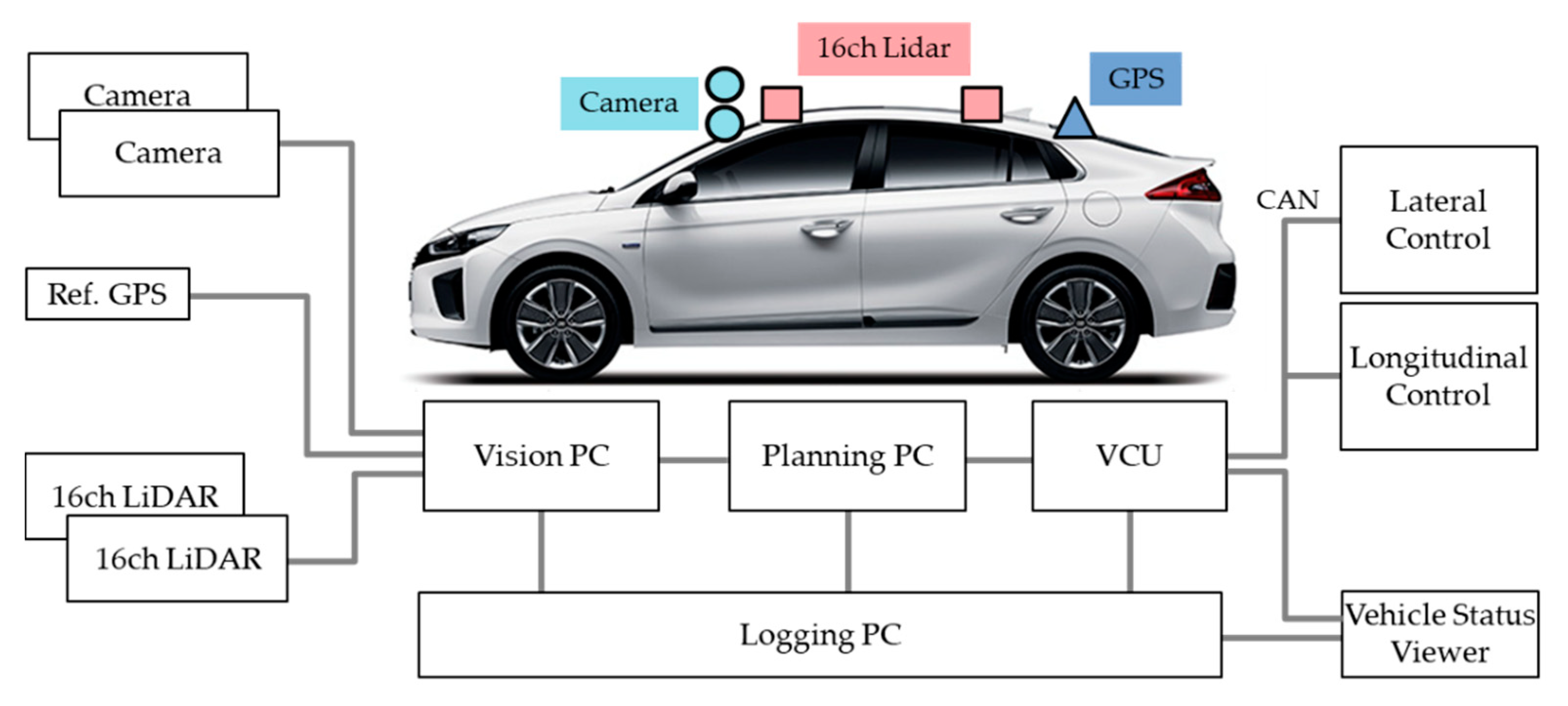

4. Experimental Results

4.1. System Configurations

4.2. Metamorphic Test Examples

4.2.1. MT1: Stop Regardless of the Obstacle Order

- MR1: if D = {x | x is the relative distance of an obstacle}, then the ego-vehicle must stop before min(D).

- Input Do: Do = {the vertical-axis positions of the obstacles}

- Expected output for Do: the ego-vehicle should stop before min(Do).
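A minimal sketch of MT1 in Python is given below, assuming the system under test can be treated as a function from a list of obstacle distances (m) to a stop position (m). The follow-up inputs follow the MT1 input table (shuffle, 2 to 7 m noise, extra obstacles); the range used for the extra obstacles and both planner functions are hypothetical stand-ins, not the vehicle software.

```python
import random


def mr1_holds(system, distances):
    """MR1: the ego-vehicle must stop before min(D), whatever the obstacle order."""
    return system(distances) < min(distances)


def follow_up_inputs(d_o, rng):
    """Derive the follow-up inputs D1-D3 from the original input Do."""
    d1 = d_o[:]
    rng.shuffle(d1)                                        # D1: same obstacles, new order
    d2 = [x + rng.uniform(2.0, 7.0) for x in d_o]          # D2: random noise of roughly 2-7 m
    d3 = d_o + [rng.uniform(5.0, 50.0) for _ in range(3)]  # D3: extra obstacles (range assumed)
    return {"D1": d1, "D2": d2, "D3": d3}


def check_mt1(system, d_o, seed=0):
    """Evaluate MR1 on Do and every follow-up input; returns {input name: passed?}."""
    rng = random.Random(seed)
    inputs = {"Do": d_o, **follow_up_inputs(d_o, rng)}
    return {name: mr1_holds(system, d) for name, d in inputs.items()}


def nearest_first(distances):
    """Hypothetical stand-in planner: stop 2 m short of the nearest obstacle."""
    return min(distances) - 2.0


def list_order(distances):
    """Hypothetical faulty planner: reacts only to the first obstacle in the list."""
    return distances[0] - 2.0


d_o = [35.0, 18.5, 42.0]
print(check_mt1(nearest_first, d_o))  # every relation holds
print(check_mt1(list_order, d_o))     # fails on Do; D1 depends on the shuffle
```

The faulty planner passes or fails D1 depending on the random permutation, which is why several shuffled follow-up inputs are usually worth generating.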

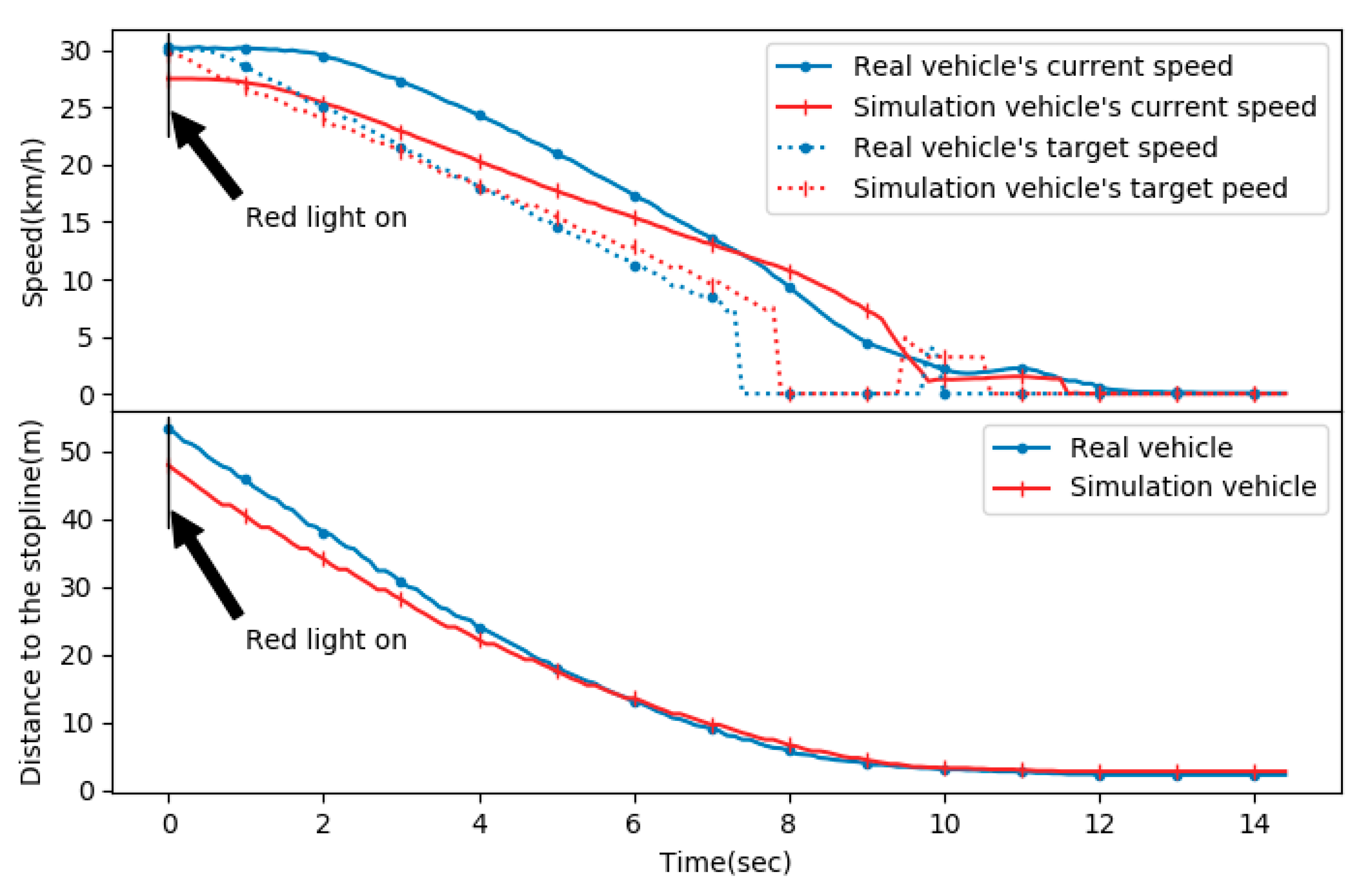

4.2.2. MT2: Stop Before the Stop Line

- Perception: traffic light recognition (red) and ego-vehicle localization;

- Planning: calculation of the remaining distance to the stop line based on map data;

- Control: calculation of the target speed based on the remaining distance, and braking control.

- MR2: given input sets Do and D1, |Ro| ≤ |R1| holds.

- If input set Do = {x, y | x is the ego-vehicle speed, y is the distance to the stop line} is given, the system returns output set Ro = {x, y | x is the ego-vehicle speed, which must be zero, and y is the distance to the stop line, which must be positive}.

- If input set D1 = {x, y + d | x is the ego-vehicle speed, y is the distance to the stop line, d is a positive value} is given, the system returns output set R1 = {x, y | x is the ego-vehicle speed, which must be zero, and y is the distance to the stop line, which must be positive}.

- |Ro| and |R1| denote the number of elements in Ro and R1, respectively.
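The sketch below shows one way |Ro| and |R1| could be counted from logged samples and MR2 checked. It assumes each sample is a (speed in m/s, distance to the stop line in m) pair; the zero-speed tolerance `eps` and the sample values are hypothetical.

```python
def output_size(samples, eps=1e-3):
    """|R|: number of logged (speed, distance-to-stop-line) samples in which the
    ego-vehicle is stopped (|speed| <= eps) and still before the line (distance > 0)."""
    return sum(1 for speed, dist in samples if abs(speed) <= eps and dist > 0.0)


def mr2_holds(samples_o, samples_1):
    """MR2: |Ro| <= |R1|, where the D1 run starts d metres farther from the stop line."""
    return output_size(samples_o) <= output_size(samples_1)


# Hypothetical (speed m/s, distance m) samples from the two runs.
run_o = [(3.0, 6.0), (1.2, 2.1), (0.0, 0.8), (0.0, 0.8)]
run_1 = [(3.0, 11.0), (1.5, 5.0), (0.4, 1.9), (0.0, 1.5), (0.0, 1.5), (0.0, 1.5)]
print(output_size(run_o), output_size(run_1), mr2_holds(run_o, run_1))  # 2 3 True
```

A run that stops past the line (negative distance) contributes nothing to |R|, so it makes MR2 fail.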

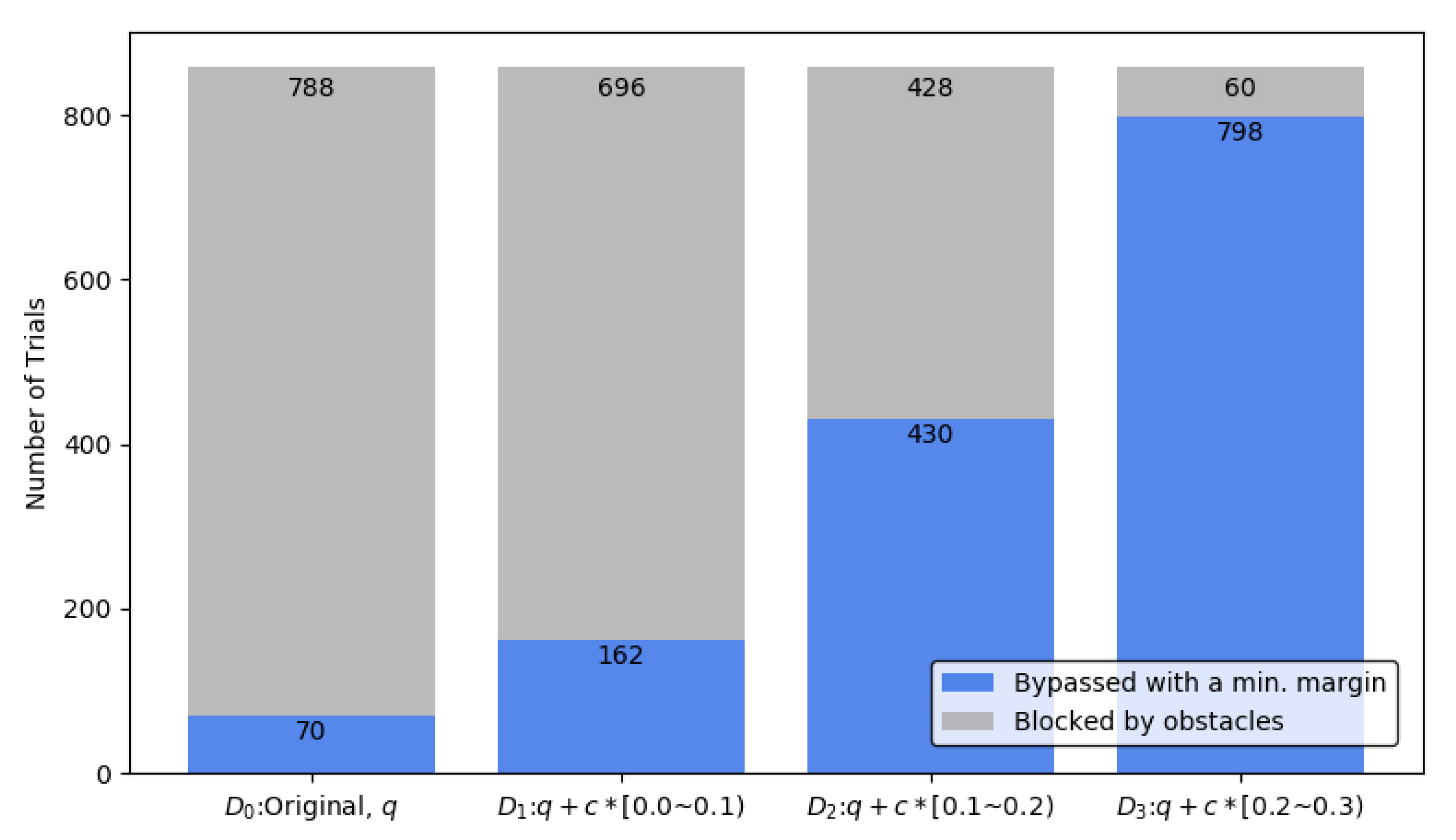

4.2.3. MT3: Avoiding Obstacles with a Minimum Margin

- MR3: given input sets Do and D1, |Ro| ≤ |R1| holds.

- If input set Do = {p, q | p is the ego-vehicle position, q is an obstacle position} is given, the system returns output set Ro = {p | p is an ego-vehicle position whose distance to the final position of the path is less than or equal to a preset Euclidean distance e (e.g., e = 1.0 m)}.

- If input set D1 = {p, q + d | p is the ego-vehicle position, q is an obstacle position, d is a positive random value greater than |do|} is given, the system returns output set R1 = {p | p is an ego-vehicle position whose distance to the final position of the path is less than or equal to the preset Euclidean distance e (e.g., e = 1.0 m)}.

- |Ro| and |R1| denote the number of elements in Ro and R1, respectively.

- Note that p, q, and d are coordinate vectors in two-dimensional space.
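MR3 can be checked in the same way with a Euclidean distance. The sketch below assumes logged ego positions are (x, y) pairs in metres and that both runs share the same path end point; the positions are hypothetical. `mr3_holds` accepts a whole chain of output sizes, matching the |Ro| ≤ |R1| ≤ |R2| ≤ |R3| pattern in the MT3 input table.

```python
import math


def count_near_path_end(positions, path_end, e=1.0):
    """|R|: logged ego positions p whose Euclidean distance to the path's final point is <= e (m)."""
    return sum(1 for p in positions if math.dist(p, path_end) <= e)


def mr3_holds(sizes):
    """MR3 over a chain of runs Do, D1, D2, ...: |Ro| <= |R1| <= |R2| <= ..."""
    return all(a <= b for a, b in zip(sizes, sizes[1:]))


# Hypothetical logged (x, y) positions in metres; both runs share the same path end point.
path_end = (50.0, 0.0)
run_o = [(47.0, 0.2), (49.4, 0.1), (50.3, -0.2)]
run_1 = [(48.0, 0.0), (49.2, 0.3), (49.8, 0.1), (50.1, 0.0)]
sizes = [count_near_path_end(run_o, path_end), count_near_path_end(run_1, path_end)]
print(sizes, mr3_holds(sizes))  # [2, 3] True
```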

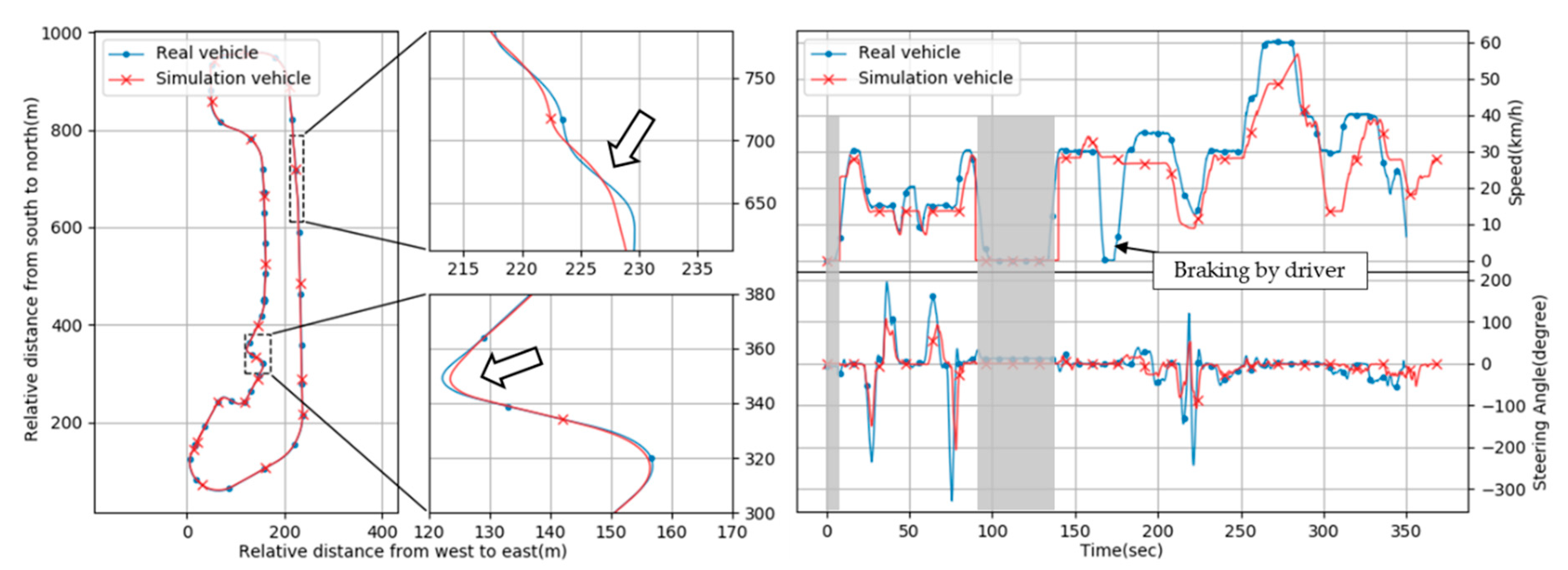

4.3. Logging Data Analysis

5. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

Appendix A

<driving> ::= <maneuver> ON <environment> AT <time> [WITH <events>]

<maneuver> ::= <highway drive> | <low speed shuttle> | <traffic jam drive> | <emergency takeover> | <valet parking>

<highway drive> ::= “maintain speed” | “car following” | “lane centering” | “lane switching/overtaking” | “obstacle avoidance” | “follow driving law” | “low/high speed merge”

<low speed shuttle> ::= “maintain speed” | “car following” | “lane centering” | “lane switching/overtaking” | “obstacle avoidance” | “follow driving law” | “stop at destination” | “n-point turn” | “route planning” | “parking”

<traffic jam drive> ::= “maintain speed” | “car following” | “lane centering” | “lane switching/overtaking” | “obstacle avoidance” | “follow driving law” | “low speed merge”

<emergency takeover> ::= “takeover request” | “takeover response”

<valet parking> ::= “maintain speed” | “car following” | “obstacle avoidance” | “follow driving law” | “parking”

<environment> ::= <physical infrastructure> [, <environmental conditions>] [, <operational constrains>] [, <objects>] [, <zones>] [, <connectivity>]

<physical infrastructure> ::= <roadway geometry> [, <roadway types>] [, <roadway surfaces>] [, <roadway edges>]

<environmental conditions> ::= <weather>, <weather-induced roadway conditions>, <particulate matter>, <illumination>

<operational constrains> ::= <speed limit>, <traffic conditions>

<objects> ::= <signage>, <roadway users>, <non-roadway user obstacles/objects>

<zones> ::= “geo-fencing” | “traffic management zones” | “school zone” | “construction zones” | “regions/states” | “interference zones”

<connectivity> ::= “V2V” | “traffic density information” | “remote fleet management system” | “infrastructure sensors and communications”


<roadway geometry> ::= “straightaways” | “curves” | “hills” | “lateral crests” | “corners” | “negative obstacles” | “lane width”

<roadway types> ::= “divided highway” | “undivided highway” | “urban” | “rural” | “parking” | “multi-lane” | “single-lane” | “4-way/2-way stop” | “merge lanes”

<roadway surfaces> ::= “asphalt” | “concrete” | “mixed” | “brick” | “dirt”

<roadway edges> ::= “line markers” | “temporary line markers” | “shoulder (paved or gravel)” | “shoulder (grass)” | “concrete barriers” | “curb” | “cones”


<weather> ::= “temperature”, ( “snow” | “wind” | <rain> )

<weather-induced roadway conditions> ::= “standing water” | “flooded roadways” | “icy roads” | “snow on road”

<particulate matter> ::= “fog” | “smoke” | “smog” | “dust/dirt” | “mud”

<illumination> ::= “day” | “dawn” | “dusk” | “night” | “street lights” | “headlights” | “oncoming vehicle lights”


<speed limit> ::= “minimum speed limit”, “maximum speed limit”

<traffic conditions> ::= “minimal traffic” | “normal traffic” | “bumper-to-bumper/rush-hour traffic” | “altered (accident, construction, …)”


<signage> ::= <signs> | <traffic signals> | “crosswalk” | “railroad crossing” | “stopped bus” | “construction signage” | “hand signals”

<roadway users> ::= <vehicle types>, “stopped vehicle”, “moving vehicles” | “pedestrians” | “cyclists”

<non-roadway user obstacles/objects> ::= <animals> | “shopping carts” | <debris> | “construction equipment” | “pedestrians” | “cyclist”

<signs> ::= “stop” | “yield” | “pedestrian”

<traffic signals> ::= “flashing” | “school zone” | “fire department zone” | “etc”

<vehicle types> ::= “cars” | “light trucks” | “large trucks” | “motorcycles”

<animals> ::= “dogs” | “cats” | “deer” | “etc”

<debris> ::= “pieces of tire” | “trash” | “ladders” | “etc”

<time> ::= “time”

<events> ::= (<object>, <object event>) {, (<object>, <object event>)}

<object> ::= “lead vehicle” | “side/back vehicle” | “pedestrian” | “pedalcyclist” | “animal/debris/dynamic object” | “signals/signs”

<object event> ::= “decelerating” | “stopped” | “accelerating” | “turning” | “cutting out” | “parking”


<driving> ::= <maneuver> ON <environment> AT <time> [WITH <events>]

<maneuver> ::= <highway drive> | <low speed shuttle> | <traffic jam drive> | <emergency takeover> | <valet parking>

<environment> ::= <physical infrastructure> [, <environmental conditions>] [, <operational constrains>] [, <objects>] [, <zones>] [, <connectivity>]

<time> ::= “time”

<events> ::= <object>, <object event> {<object>, <object event>}

| Component | Category | Item | Description |
|---|---|---|---|
| Maneuver | Mode | Mode | System's current working mode |
| | | ReasonCode | Reason for entering the current mode |
| | | FailCode | Reason for system failure |
| | Mode Change Info | Buttons | Buttons pressed by the driver |
| | | StateIn | Previous system mode |
| | Driver Input | APS | Accelerator pedal position value |
| | | BPS | Brake pedal position value |
| | | SAS | Steering angle sensor value |
| | | GearPos | Transmission gear position (P, R, N, D) |
| | | OBD2Spd | OBD2-based vehicle speed |
| | | PushBrake | Brake pushed (On/Off) |
| | | SteeringTorque | Steering torque value |
| | Auto Control Info | TargetSteer | Target steering command |
| | | TargetSpeed | Target speed command |
| | | TargetAccel | Target acceleration command |
| | | Target Signal | Left/right turn signal command |
| | Path Info | PathLen | Path length |
| | | PathMinSpd | Minimum speed in path |
| | | PathPoints | Start, mid, and last points in path |
| Environment | Vehicle Status | Longitude | GPS longitude |
| | | Latitude | GPS latitude |
| | | Heading | GPS heading |
| | | LongVel | Longitudinal velocity |
| | | LatiVel | Lateral velocity |
| | | LongAccel | Longitudinal acceleration |
| | | LatiAccel | Lateral acceleration |
| | | WheelSpd | Average rear-wheel speed |
| | | VelCurr | Current speed of the vehicle |
| Time | Time | Logging Time | Current logging time |
| Event | Object Info | ObjectType | Object type |
| | | Drel | Relative distance to object |
| | | Vrel | Relative velocity to object |
| | | Size | Object size |
| | | Signals | Traffic light signals |

| Inputs | Description | Expected Result |
|---|---|---|
| Do | Original input set | Stop before min(Do) |
| D1 | Shuffle the elements of Do | Stop before min(D1) |
| D2 | Add random noise (2 m < n < 7 m) to the elements of Do | Stop before min(D2) |
| D3 | Add more elements to Do | Stop before min(D3) |

| Inputs | Description | Expected Result |
|---|---|---|
| Do | Original input set | \|Ro\| |
| D1 | New input set D1 = {x, y + d \| x, y in Do, d is a positive value} | \|R1\| (satisfying \|Ro\| ≤ \|R1\|) |

| Inputs | Description | Expected Result |
|---|---|---|
| Do | Original input set Do = {p, q \| p is the ego-vehicle position, q is an obstacle position, q = c * [0.0~0.4)} | \|Ro\| |
| D1 | Input set D1 = {p, q + d1 \| p, q in Do, d1 is a positive random value, d1 = c * [0.0~0.1)} | \|Ro\| ≤ \|R1\| |
| D2 | Input set D2 = {p, q + d2 \| p, q in Do, d2 is a positive random value, d2 = c * [0.1~0.2)} | \|Ro\| ≤ \|R1\| ≤ \|R2\| |
| D3 | Input set D3 = {p, q + d3 \| p, q in Do, d3 is a positive random value, d3 = c * [0.2~0.3)} | \|Ro\| ≤ \|R1\| ≤ \|R2\| ≤ \|R3\| |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Sung, K.; Min, K.-W.; Choi, J.; Kim, B.-C. A Formal and Quantifiable Log Analysis Framework for Test Driving of Autonomous Vehicles. Sensors 2020, 20, 1356. https://doi.org/10.3390/s20051356
