The Algorithms of Distributed Learning and Distributed Estimation about Intelligent Wireless Sensor Network

Abstract

1. Introduction

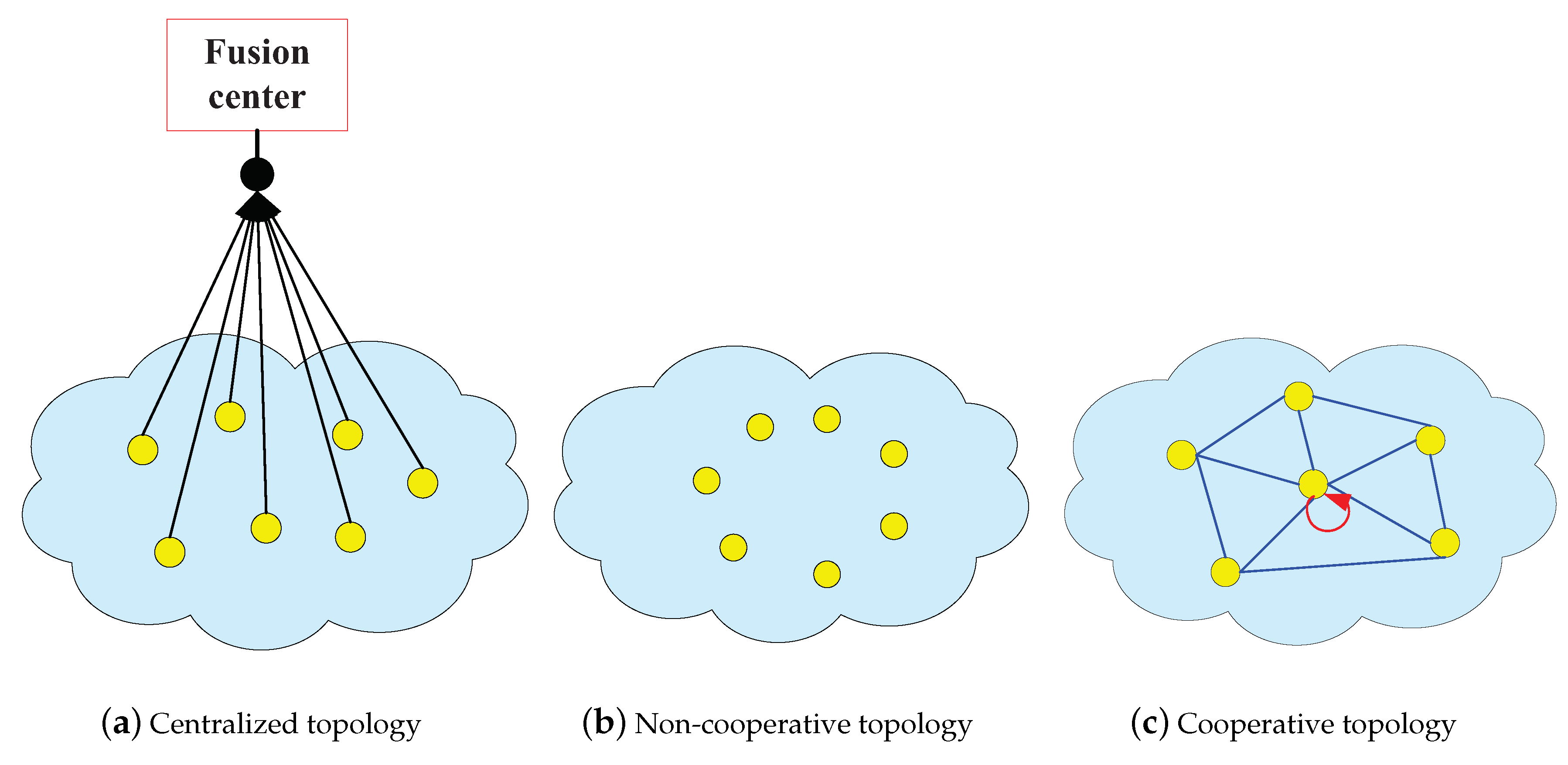

2. Basic Topologies of IWSN

2.1. Centralized Topology of IWSN

2.2. Cooperative Topology and Non-Cooperative Topology

3. Cooperative Distributed Estimation Strategy

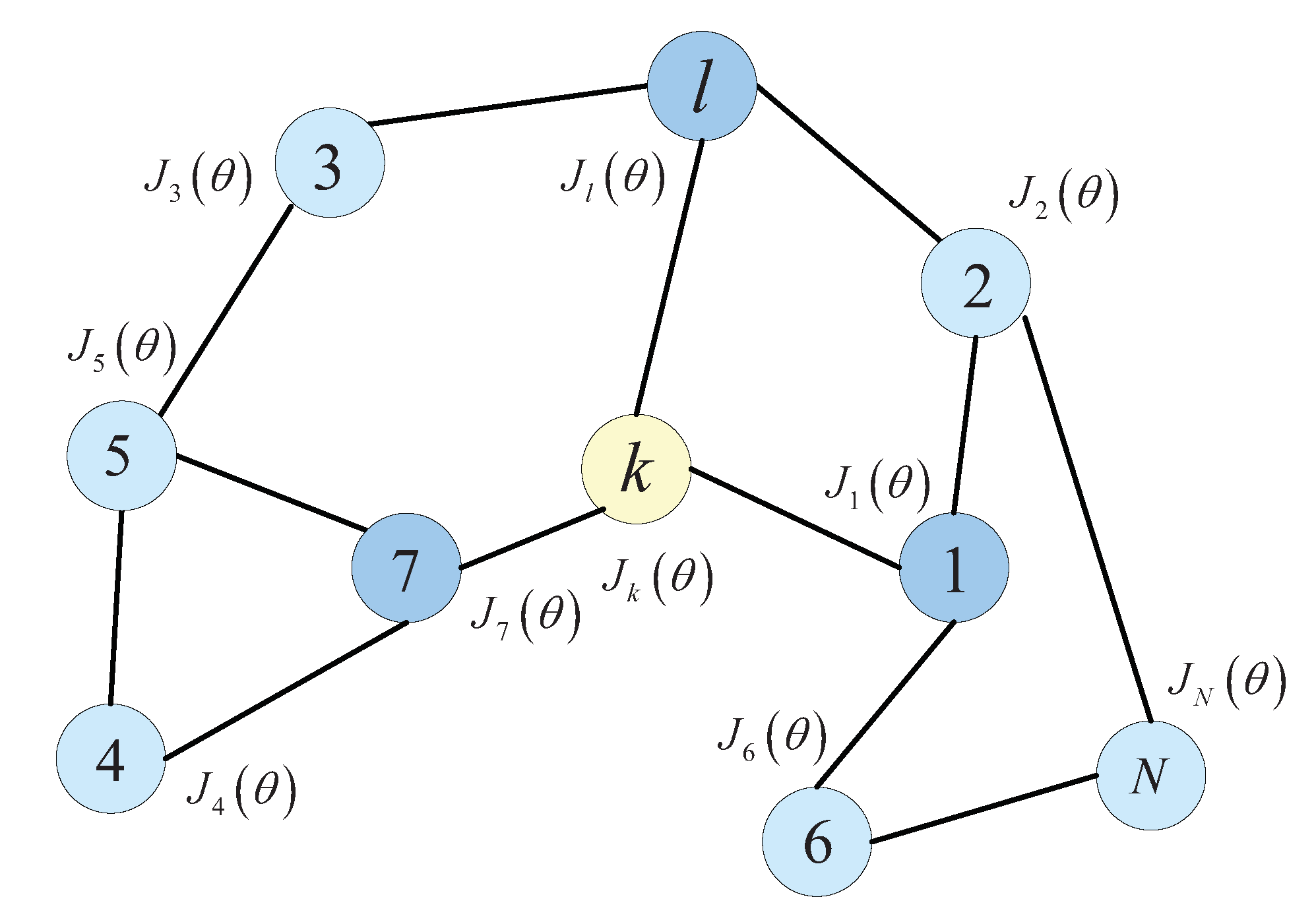

3.1. The Problem of Distributed Estimation
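A common way to pose this problem, assumed here for illustration (the notation is not necessarily the author's), is the linear data model used throughout the adaptive-networks literature. Each node $k$ observes a scalar $d_k(i)$ that depends on an unknown $M \times 1$ vector $w^{o}$ through a $1 \times M$ regressor $u_{k,i}$, and the network seeks the minimizer of the aggregate mean-square error. Here $v_k(i)$ is zero-mean noise independent of the regressors. When the regressor covariances $R_{u,k}$ sum to a positive-definite matrix, $w^{o}$ is the unique minimizer. Replacing the gradient with its instantaneous approximation gives the LMS step that a node can run on its own data (real-valued data assumed):

$$
d_k(i) = u_{k,i}\,w^{o} + v_k(i), \qquad k = 1,\dots,N,
$$

$$
w^{o} = \arg\min_{w} \; J^{\mathrm{glob}}(w), \qquad J^{\mathrm{glob}}(w) = \sum_{k=1}^{N} \mathbb{E}\,\big\lvert d_k(i) - u_{k,i}\,w \big\rvert^{2},
$$

$$
w_{k,i} = w_{k,i-1} + \mu_k\, u_{k,i}^{\mathsf T}\big(d_k(i) - u_{k,i}\,w_{k,i-1}\big).
$$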

3.2. Non-Cooperative Distributed Estimation Strategy
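A minimal sketch of the non-cooperative baseline, assuming the linear model $d_k(i) = u_{k,i} w^{o} + v_k(i)$ with white Gaussian regressors. Every node runs its own LMS filter and never exchanges estimates. The network size `N`, dimension `M`, step size `mu`, noise level and true vector `w_o` are hypothetical values chosen for illustration.

```python
import numpy as np

N, M = 5, 2                      # hypothetical: 5 sensor nodes, 2 unknown parameters
w_o = np.array([1.0, -0.5])      # hypothetical true parameter vector w^o


def noncooperative_lms(num_iter=2000, mu=0.02, noise_std=0.1, seed=0):
    """Every node runs a stand-alone LMS filter on its own data; nothing is exchanged."""
    rng = np.random.default_rng(seed)
    W = np.zeros((N, M))                                  # row k holds w_{k,i-1}
    for _ in range(num_iter):
        U = rng.standard_normal((N, M))                   # regressors u_{k,i}, one row per node
        d = U @ w_o + noise_std * rng.standard_normal(N)  # measurements d_k(i)
        err = d - np.sum(U * W, axis=1)                   # e_k(i) = d_k(i) - u_{k,i} w_{k,i-1}
        W = W + mu * err[:, None] * U                     # local LMS step at every node
    return W


W = noncooperative_lms()
print(np.linalg.norm(W - w_o, axis=1))                    # per-node estimation error
```

Because no node uses its neighbors' data, each estimate carries the full steady-state error of a single LMS filter. The cooperative strategies of Section 3.3 aim to reduce that error.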

3.3. Cooperative Distributed Strategy

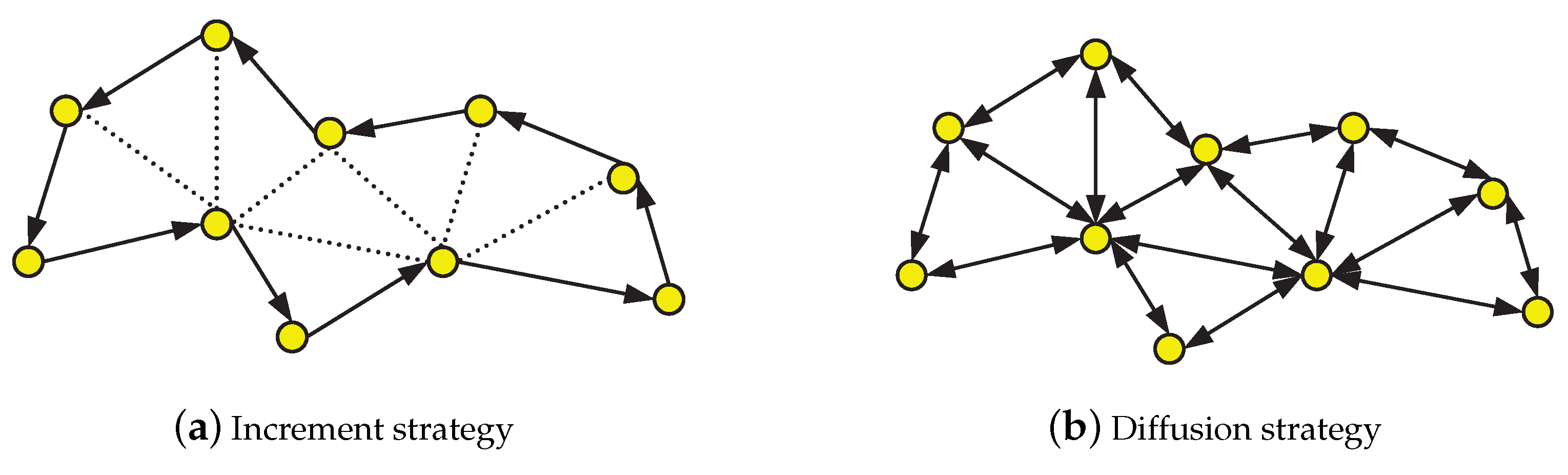

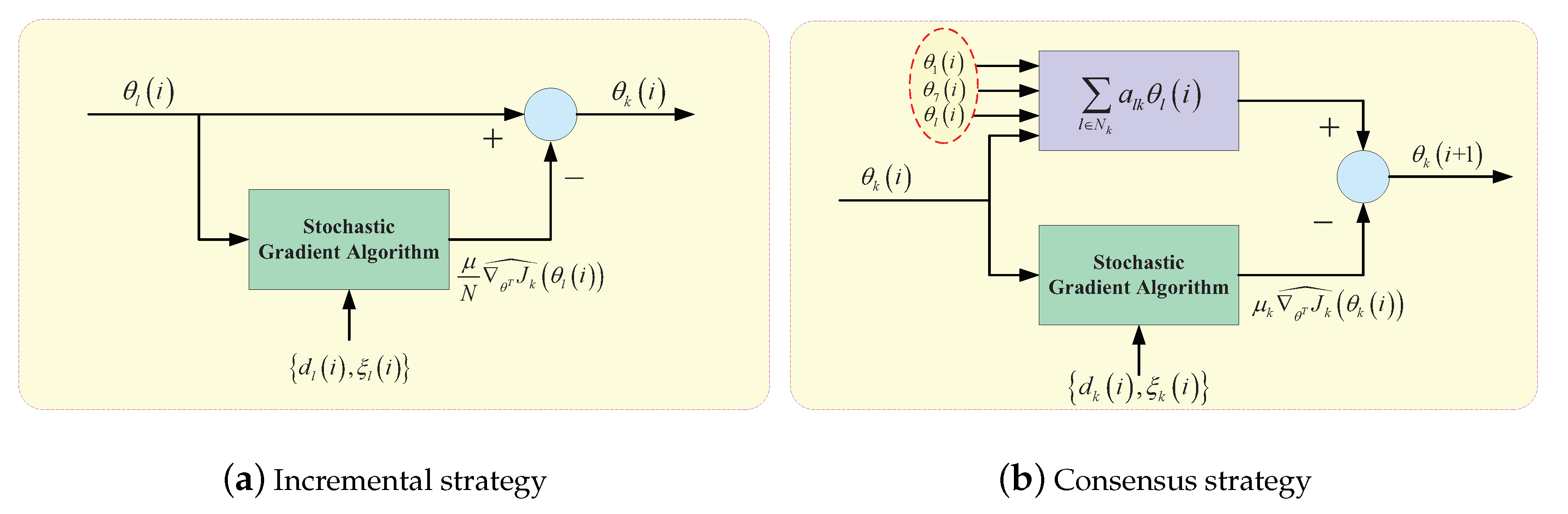

3.3.1. Incremental Strategy

Algorithm 1: Incremental strategy for distributed learning.
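A minimal sketch of the incremental strategy as an incremental LMS recursion. It assumes the nodes can be visited along a cycle $0 \to 1 \to \dots \to N-1$ and that each node has a linear-model measurement. This is the standard textbook form, not necessarily the exact steps of Algorithm 1. The sizes, step size and `w_o` are hypothetical.

```python
import numpy as np

N, M = 5, 2                      # hypothetical network size and parameter dimension
w_o = np.array([1.0, -0.5])      # hypothetical true parameter vector


def incremental_lms(num_iter=1000, mu=0.02, noise_std=0.1, seed=0):
    """Incremental LMS: a single estimate travels around the cycle of nodes."""
    rng = np.random.default_rng(seed)
    w = np.zeros(M)                                   # w_{i-1}, estimate after the last full cycle
    for _ in range(num_iter):
        psi = w.copy()                                # psi_0 = w_{i-1} enters the first node
        for k in range(N):                            # node k refines the estimate it received
            u = rng.standard_normal(M)                # local regressor u_{k,i}
            d = u @ w_o + noise_std * rng.standard_normal()  # local measurement d_k(i)
            psi = psi + mu * (d - u @ psi) * u        # psi_k = psi_{k-1} + mu u^T (d - u psi_{k-1})
            # in a real network, psi would now be transmitted to node k+1
        w = psi                                       # w_i = psi_N after the full cycle
    return w


w = incremental_lms()
print(w)
```

The scheme needs a cycle through all nodes, which is why incremental strategies are fragile when a link or node on that cycle fails.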

3.3.2. Consensus Strategy

Algorithm 2: Consensus strategy for distributed learning.
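A minimal sketch of a consensus-type LMS recursion of the form commonly compared against diffusion in the adaptive-networks literature; it is not necessarily the exact form of Algorithm 2. Each node mixes its neighbors' previous estimates through a combination matrix `A` and adds a gradient correction evaluated at its own previous estimate. The ring topology, uniform weights of 1/3, step size and `w_o` are hypothetical.

```python
import numpy as np

N, M = 5, 2                      # hypothetical network size and parameter dimension
w_o = np.array([1.0, -0.5])      # hypothetical true parameter vector

# Hypothetical ring topology: node k weights itself and its two neighbors by 1/3 each.
A = np.zeros((N, N))
for k in range(N):
    for l in (k - 1, k, k + 1):
        A[l % N, k] = 1.0 / 3.0  # a_{lk}: weight node k assigns to node l


def consensus_lms(A, num_iter=2000, mu=0.02, noise_std=0.1, seed=0):
    rng = np.random.default_rng(seed)
    n = A.shape[0]
    W = np.zeros((n, M))                                  # row k holds w_{k,i-1}
    for _ in range(num_iter):
        U = rng.standard_normal((n, M))
        d = U @ w_o + noise_std * rng.standard_normal(n)
        err = d - np.sum(U * W, axis=1)                   # gradient at the node's OWN old estimate
        # w_{k,i} = sum_l a_{lk} w_{l,i-1} + mu u_{k,i}^T e_k(i)
        W = A.T @ W + mu * err[:, None] * U
    return W


W = consensus_lms(A)
print(np.linalg.norm(W - w_o, axis=1))
```

The combination term starts from the neighbors' $w_{l,i-1}$ while the gradient term starts from the node's own $w_{k,i-1}$. Analyses in the adaptive-networks literature show that this asymmetry can make consensus updates unstable at step sizes for which diffusion updates remain stable.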

3.3.3. Diffusion Strategy

Algorithm 3: Diffusion strategy for distributed learning (adapt-then-combine, ATC).
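A minimal sketch of adapt-then-combine (ATC) diffusion LMS in its standard form, which may differ in detail from Algorithm 3. Each node first takes a local LMS step to obtain an intermediate estimate $\psi_{k,i}$ and then averages its neighbors' intermediate estimates. The ring topology, uniform weights, step size and `w_o` are hypothetical.

```python
import numpy as np

N, M = 5, 2                      # hypothetical network size and parameter dimension
w_o = np.array([1.0, -0.5])      # hypothetical true parameter vector

A = np.zeros((N, N))             # hypothetical ring with uniform weights 1/3 (incl. self-loop)
for k in range(N):
    for l in (k - 1, k, k + 1):
        A[l % N, k] = 1.0 / 3.0


def atc_diffusion_lms(A, num_iter=2000, mu=0.02, noise_std=0.1, seed=0):
    rng = np.random.default_rng(seed)
    n = A.shape[0]
    W = np.zeros((n, M))                                  # row k holds w_{k,i-1}
    for _ in range(num_iter):
        U = rng.standard_normal((n, M))
        d = U @ w_o + noise_std * rng.standard_normal(n)
        err = d - np.sum(U * W, axis=1)                   # e_k(i) evaluated at w_{k,i-1}
        Psi = W + mu * err[:, None] * U                   # adapt: psi_{k,i}
        W = A.T @ Psi                                     # combine: w_{k,i} = sum_l a_{lk} psi_{l,i}
    return W


W = atc_diffusion_lms(A)
print(np.linalg.norm(W - w_o, axis=1))
```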

Algorithm 4: Diffusion strategy for distributed learning (combine-then-adapt, CTA).
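A minimal sketch of combine-then-adapt (CTA) diffusion LMS in its standard form, which may differ in detail from Algorithm 4. The only change from ATC is the order: each node first averages its neighbors' previous estimates and then runs the LMS step from that combined point. The topology, weights, step size and `w_o` are hypothetical.

```python
import numpy as np

N, M = 5, 2                      # hypothetical network size and parameter dimension
w_o = np.array([1.0, -0.5])      # hypothetical true parameter vector

A = np.zeros((N, N))             # hypothetical ring with uniform weights 1/3 (incl. self-loop)
for k in range(N):
    for l in (k - 1, k, k + 1):
        A[l % N, k] = 1.0 / 3.0


def cta_diffusion_lms(A, num_iter=2000, mu=0.02, noise_std=0.1, seed=0):
    rng = np.random.default_rng(seed)
    n = A.shape[0]
    W = np.zeros((n, M))                                  # row k holds w_{k,i-1}
    for _ in range(num_iter):
        U = rng.standard_normal((n, M))
        d = U @ w_o + noise_std * rng.standard_normal(n)
        Phi = A.T @ W                                     # combine: phi_{k,i-1} = sum_l a_{lk} w_{l,i-1}
        err = d - np.sum(U * Phi, axis=1)                 # e_k(i) evaluated at phi_{k,i-1}
        W = Phi + mu * err[:, None] * U                   # adapt starting from phi_{k,i-1}
    return W


W = cta_diffusion_lms(A)
print(np.linalg.norm(W - w_o, axis=1))
```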

3.4. Differences among the Three Distributed Estimation Algorithms

3.5. Extension Analysis of Distributed Estimation Algorithm

3.5.1. Distributed Strategy with Sparsity and Regularization
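One common way to add sparsity regularization to diffusion adaptation, sketched here as an assumption rather than as this section's specific method, is to include the subgradient of an $\ell_1$ penalty $\rho\lVert w \rVert_1$ in the adaptation step of ATC diffusion LMS (a "zero-attracting" term). The sparse `w_o`, the weight `rho` and the ring topology are hypothetical.

```python
import numpy as np

N = 5                                                      # hypothetical number of nodes
w_o = np.array([1.0, 0.0, 0.0, -0.5, 0.0, 0.0, 0.0, 0.0])  # hypothetical sparse parameter vector
M = w_o.size

A = np.zeros((N, N))             # hypothetical ring with uniform weights 1/3 (incl. self-loop)
for k in range(N):
    for l in (k - 1, k, k + 1):
        A[l % N, k] = 1.0 / 3.0


def sparse_atc_lms(A, num_iter=2000, mu=0.02, rho=0.005, noise_std=0.1, seed=0):
    rng = np.random.default_rng(seed)
    n = A.shape[0]
    W = np.zeros((n, M))
    for _ in range(num_iter):
        U = rng.standard_normal((n, M))
        d = U @ w_o + noise_std * rng.standard_normal(n)
        err = d - np.sum(U * W, axis=1)
        # adapt with an extra l1 subgradient term rho * sign(w) that pulls small entries toward zero
        Psi = W + mu * err[:, None] * U - mu * rho * np.sign(W)
        W = A.T @ Psi                                     # combine as in ATC diffusion
    return W


W = sparse_atc_lms(A)
print(np.round(W[0], 3))                                  # node 0's estimate of the sparse vector
```

The penalty trades a small bias on the nonzero entries (of order `rho`) for smaller fluctuations on the entries that are truly zero.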

3.5.2. Gossip Strategy
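Randomized pairwise gossip for average consensus can be sketched as follows: at each clock tick a random node wakes up, picks a random neighbor, and both replace their values with the pair's average. The ring topology and the initial values `x0` are hypothetical, and the sketch shows only the averaging primitive, not a complete gossip-based estimator.

```python
import numpy as np


def randomized_gossip(x0, neighbors, num_ticks=2000, seed=0):
    """At each tick one random node averages its value with one random neighbor."""
    rng = np.random.default_rng(seed)
    x = np.array(x0, dtype=float)
    for _ in range(num_ticks):
        k = rng.integers(len(x))                 # node whose clock ticks
        l = rng.choice(neighbors[k])             # neighbor it contacts
        avg = 0.5 * (x[k] + x[l])
        x[k] = avg                               # both nodes keep the pairwise average,
        x[l] = avg                               # so the network sum is preserved
    return x


n = 5
ring = [[(k - 1) % n, (k + 1) % n] for k in range(n)]   # hypothetical ring topology
x0 = [1.2, 0.8, 1.1, 0.9, 1.0]                          # hypothetical local measurements
x = randomized_gossip(x0, ring)
print(x)                                                # entries approach the average of x0
```

Since every exchange preserves the sum, on a connected graph all values converge to the average of `x0` without any node needing global information.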

3.5.3. Asynchronous Strategy
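A sketch of one simple asynchronous model, assumed for illustration: at every iteration each node is independently awake with probability `q`. An awake node adapts and combines as in ATC diffusion, while a sleeping node keeps its estimate (step size 0 and combination column $e_k$). The probability `q`, the ring and the other parameters are hypothetical.

```python
import numpy as np

N, M = 5, 2                      # hypothetical network size and parameter dimension
w_o = np.array([1.0, -0.5])      # hypothetical true parameter vector

A = np.zeros((N, N))             # hypothetical ring with uniform weights 1/3 (incl. self-loop)
for k in range(N):
    for l in (k - 1, k, k + 1):
        A[l % N, k] = 1.0 / 3.0


def async_atc_lms(A, num_iter=3000, mu=0.02, q=0.6, noise_std=0.1, seed=0):
    rng = np.random.default_rng(seed)
    n = A.shape[0]
    W = np.zeros((n, M))
    for _ in range(num_iter):
        active = rng.random(n) < q                        # each node wakes up with probability q
        U = rng.standard_normal((n, M))
        d = U @ w_o + noise_std * rng.standard_normal(n)
        err = d - np.sum(U * W, axis=1)
        step = np.where(active, mu, 0.0)                  # random step size mu_k(i) in {0, mu}
        Psi = W + (step * err)[:, None] * U               # sleeping nodes keep psi_k = w_{k,i-1}
        A_i = np.where(active[None, :], A, np.eye(n))     # sleeping node k uses column e_k
        W = A_i.T @ Psi                                   # combine with the random matrix A_i
    return W


W = async_atc_lms(A)
print(np.linalg.norm(W - w_o, axis=1))
```

Every realized `A_i` still has columns summing to one, so the random on/off pattern slows adaptation but does not bias the combination.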

3.5.4. Distributed Strategy with Noise
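A sketch of ATC diffusion LMS when the exchanged intermediate estimates are corrupted by additive link noise. It assumes independent zero-mean Gaussian noise with standard deviation `link_std` on every link, while a node's own estimate reaches its combination step noise-free. All parameters and the ring topology are hypothetical.

```python
import numpy as np

N, M = 5, 2                      # hypothetical network size and parameter dimension
w_o = np.array([1.0, -0.5])      # hypothetical true parameter vector

A = np.zeros((N, N))             # hypothetical ring with uniform weights 1/3 (incl. self-loop)
for k in range(N):
    for l in (k - 1, k, k + 1):
        A[l % N, k] = 1.0 / 3.0


def atc_noisy_links(A, num_iter=2000, mu=0.02, noise_std=0.1, link_std=0.005, seed=0):
    rng = np.random.default_rng(seed)
    n = A.shape[0]
    W = np.zeros((n, M))
    for _ in range(num_iter):
        U = rng.standard_normal((n, M))
        d = U @ w_o + noise_std * rng.standard_normal(n)
        err = d - np.sum(U * W, axis=1)
        Psi = W + mu * err[:, None] * U                   # local adaptation (noise-free)
        W_new = np.zeros_like(W)
        for k in range(n):
            for l in np.flatnonzero(A[:, k]):             # neighborhood of node k, including k
                received = Psi[l].copy()
                if l != k:                                # only transmitted estimates are noisy
                    received += link_std * rng.standard_normal(M)
                W_new[k] += A[l, k] * received
        W = W_new
    return W


W = atc_noisy_links(A)
print(np.linalg.norm(W - w_o, axis=1))
```

Increasing `link_std` in this sketch raises the steady-state error floor, because fresh link noise is injected at every combination step.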

3.5.5. Distributed Kalman Filter
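A scalar sketch in the spirit of diffusion Kalman filtering, not necessarily the formulation intended in this section. Each node (1) folds its neighbors' measurements into its own prediction through sequential Kalman updates, (2) takes a convex combination of its neighbors' intermediate estimates, and (3) predicts forward. The random-walk state, the noise variances `Q` and `R`, the ring and the uniform weights are hypothetical, and as a simplification the combination step leaves the error variance unchanged.

```python
import numpy as np

N = 5                      # hypothetical number of nodes on a ring
Q, R = 0.01, 1.0           # hypothetical process and measurement noise variances


def ring_neighbors(n):
    return [[(k - 1) % n, k, (k + 1) % n] for k in range(n)]   # closed neighborhoods


def diffusion_kf(num_steps=600, seed=0):
    rng = np.random.default_rng(seed)
    nbrs = ring_neighbors(N)
    x_true = 0.0
    x_pred = np.zeros(N)                                  # x_{k|i-1}: predicted estimates
    P_pred = np.ones(N)                                   # P_{k|i-1}: predicted error variances
    sq_err = []
    for _ in range(num_steps):
        x_true += np.sqrt(Q) * rng.standard_normal()      # random-walk state, F = 1
        y = x_true + np.sqrt(R) * rng.standard_normal(N)  # y_k = x + v_k at every node
        psi, P_upd = np.empty(N), np.empty(N)
        for k in range(N):
            xk, Pk = x_pred[k], P_pred[k]
            for l in nbrs[k]:                             # step 1: incremental measurement update
                K = Pk / (Pk + R)                         # scalar Kalman gain for y_l
                xk += K * (y[l] - xk)
                Pk *= 1.0 - K
            psi[k], P_upd[k] = xk, Pk
        x_filt = np.array([psi[nbrs[k]].mean() for k in range(N)])  # step 2: diffusion
        sq_err.append(np.mean((x_filt - x_true) ** 2))
        x_pred, P_pred = x_filt, P_upd + Q                # step 3: prediction
    return np.mean(sq_err[100:])                          # average MSE after a burn-in period


mse = diffusion_kf()
print(mse)                                                # well below the measurement variance R
```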

3.5.6. Distributed Bayesian Learning

3.6. Example

4. Main Results of Distributed Estimation

5. Conclusions and Future Perspective

Author Contributions

Funding

Conflicts of Interest

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Tan, F. The Algorithms of Distributed Learning and Distributed Estimation about Intelligent Wireless Sensor Network. Sensors 2020, 20, 1302. https://doi.org/10.3390/s20051302
