Abstract

Deep Learning (DL), a successful and promising approach for discriminative and generative tasks, has recently demonstrated its high potential in 2D medical image analysis; however, physiological data in the form of 1D signals have yet to be fully exploited with this novel approach to fulfil the desired medical tasks. Therefore, in this paper we survey the latest scientific research on deep learning applied to physiological signal data such as the electromyogram (EMG), electrocardiogram (ECG), electroencephalogram (EEG), and electrooculogram (EOG). We found 147 papers published between January 2018 and October 2019 inclusive in various journals and by various publishers. The objective of this paper is to conduct a detailed study to understand, categorize, and compare the key parameters of the deep-learning approaches that have been used in physiological signal analysis for various medical applications. The key parameters we review are the input data type, deep-learning task, deep-learning model, training architecture, and dataset source, since these are the main parameters that affect system performance. We build a taxonomy of research using deep-learning methods in physiological signal analysis from two perspectives: (1) the physiological signal data perspective, such as data modality and medical application; and (2) the deep-learning concept perspective, such as training architecture and dataset source.

1. Introduction

Deep learning has outperformed traditional machine learning in medical image analysis owing to its ability to learn features directly from raw data [1]. Objects of interest in medical images, such as lesions, organs, and tumors, are very complex, and extracting their features manually, as traditional machine learning requires, takes considerable time and effort. Deep learning in medical imaging therefore replaces hand-crafted feature extraction with end-to-end learning: raw input data are fed through several hidden layers, and the result is produced by a model with a huge number of parameters [2]. Motivated by this success, many research works have applied the same approach to physiological data to fulfil medical tasks.

Physiological signal data in the form of 1D signals are time-domain data, in which sample data points are recorded over a period of time [3]. These signals change continuously and indicate the health of the human body. Physiological signals fall into several categories: the electromyogram (EMG), which records changes in skeletal muscle activity; the electrocardiogram (ECG), which records changes in heartbeat or heart rhythm; the electroencephalogram (EEG), which records changes in brain activity measured from the scalp; and the electrooculogram (EOG), which records changes in the corneo-retinal potential between the front and the back of the human eye.

The convolutional neural network (CNN) is the most successful type of deep-learning model for 2D image analysis tasks such as recognition, classification, and prediction. A CNN receives 2D data as input and extracts high-level features through many hidden convolutional layers. Thus, to feed physiological signals into a CNN model, some research works have converted 1D signals into 2D data [4]. In this paper, we survey 147 contributions that report highly accurate and significant results in physiological signal analysis using a deep-learning approach, and we explain these solutions and contributions in more detail in Section 3, Section 4, and Section 5.
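One common way to turn a 1D signal into 2D input for a CNN is a short-time Fourier transform (STFT) magnitude spectrogram. The Python/NumPy sketch below illustrates the idea only; it is not necessarily the conversion used in [4] or in the surveyed works, and the function name `spectrogram_2d`, the Hann window, and the window and hop sizes are illustrative assumptions.

```python
import numpy as np


def spectrogram_2d(signal: np.ndarray, win_len: int = 64, hop: int = 32) -> np.ndarray:
    """Turn a 1D signal into a 2D (frequency x time) magnitude spectrogram.

    win_len and hop are illustrative defaults, not values from the survey.
    """
    signal = np.asarray(signal, dtype=float)
    if signal.ndim != 1 or len(signal) < win_len:
        raise ValueError("expected a 1D signal at least win_len samples long")
    window = np.hanning(win_len)
    n_frames = 1 + (len(signal) - win_len) // hop
    # Stack overlapping, windowed frames: shape (n_frames, win_len)
    frames = np.stack([signal[i * hop : i * hop + win_len] * window
                       for i in range(n_frames)])
    # Magnitude of the one-sided FFT of each frame: shape (n_frames, win_len // 2 + 1)
    mag = np.abs(np.fft.rfft(frames, axis=1))
    # Transpose so rows are frequency bins and columns are time frames,
    # i.e. a single-channel 2D "image" a CNN can consume
    return mag.T


# Example: 2 s of a synthetic 10 Hz sine sampled at 250 Hz
fs = 250
t = np.arange(2 * fs) / fs
img = spectrogram_2d(np.sin(2 * np.pi * 10 * t))
print(img.shape)  # (33, 14)
```

The result has frequency bins as rows and time frames as columns, so it can be treated like a grayscale image; other conversions, such as stacking multi-channel recordings into a matrix, are equally possible.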

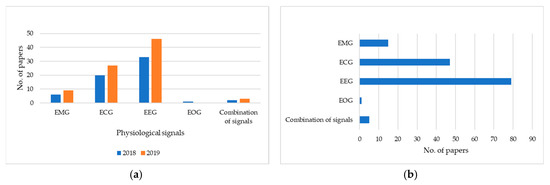

We collected papers via the PubMed search engine using keywords that combine “deep learning” with a type of physiological signal, such as “deep learning electromyogram emg”, “deep learning electrocardiogram ecg”, “deep learning electroencephalogram eeg”, and “deep learning electrooculogram eog” [5]. We found 147 papers published between January 2018 and October 2019 inclusive in various journals and by various publishers. As illustrated in Figure 1a, the number of works applying a deep-learning approach to EMG, ECG, and EEG increased rapidly in 2018 and 2019, while works on EOG and on combinations of these signals remain limited. EEG works increased by 13, from 33 in 2018 to 46 in 2019. As shown in Figure 1b, among the four physiological signal modalities, EEG was used in 79 works covering a variety of applications, ECG in 47 works, EMG in 15 works, EOG in 1 work, and combinations of signals in 5 works.

Figure 1.

Statistics for papers using a deep-learning approach in physiological signal data grouped by: (a) year of publication; and (b) data modality.

Many papers report that the deep-learning approach outperforms traditional machine learning in both implementation and performance. However, this paper does not aim to compare the two. Instead, we review recent deep-learning methods from the last two years that analyze physiological signals. We compare only key parameters within deep-learning methods, namely input data type, deep-learning task, deep-learning model, training architecture, and dataset source, as used for predicting hand motion, heart disease, brain disease, emotion, sleep stages, age, and gender.

2. Related Works

There are two types of scientific survey of deep-learning approaches to physiological signal data for healthcare applications between January 2018 and October 2019 inclusive.

The first type is oriented toward medical fields, with a taxonomy based on medical tasks (e.g., disease detection, computer-aided diagnosis) or on anatomical application areas (e.g., brain, eyes, chest, lung, liver, kidney, heart). Faust et al. [6] collected 53 research papers on physiological signal analysis using deep-learning methods published from 2008 to 2017 inclusive. This work first introduced deep-learning models such as the auto-encoder, deep belief network, restricted Boltzmann machine, generative adversarial network, and recurrent neural network. It then categorized the papers by physiological signal modality, and for each category it reports the medical application, deep-learning algorithm, dataset, and results.

The second type is oriented toward deep-learning techniques, with a taxonomy based on deep-learning architectures (e.g., AE, CNN, RNN, DBN, GAN, U-Net) or on the workflow of deep-learning implementation for medical applications. Ganapathy et al. [3] conducted a taxonomy-based survey of deep learning on 1D biosignal data, collecting 71 papers from 2010 to 2017 inclusive, most of which addressed ECG signals. The survey first reviewed several techniques for biosignal analysis using deep learning, then classified deep-learning models by origin, dimension, type of input biosignal, application goal, dataset size, type of ground-truth data, and learning schedule of the network. Tobore et al. [7] pointed out biomedical domain considerations for applying deep learning to healthcare challenges. They presented the implementation of deep learning in healthcare in four categories (biological systems, electronic health records, medical images, and physiological signals) and concluded with research directions for improving health management with physiological signal applications.

3. Physiological Signal Analysis and Modality

Physiological signal analysis estimates the human health condition from physical phenomena. There are three types of measurement for recording physiological signals: (1) reports; (2) readings; and (3) behavior [8]. A “report” is the evaluation of questionnaire responses in which subjects rate their own physiological states. A “reading” is information captured by a device that reads the state of the human body, such as muscle strength, heartbeat, or brain function. A “behavior” measurement records actions such as eye movements. We do not review “report” measurements, because self-reported responses are more biased and less precise, and question scales vary widely. We focus on “reading” and “behavior” measurements, whose results take the form of an EMG, ECG, EEG, or EOG signal, or a combination of these signals.

Table 1 summarizes the medical applications implemented with each physiological signal modality. Muscle tension patterns in the EMG signal support hand motion and muscle activity recognition. Variations in heartbeat or heart rhythm in the ECG signal support heart disease, sleep-stage, emotion, age, and gender classification. The diverse brain responses captured by the EEG signal support brain disease, emotion, sleep-stage, motion, gender, word, and age classification. Changes in the corneo-retinal potential captured by the EOG signal support sleep-stage classification.

Table 1.

Medical application in physiological signal analysis.

This section categorizes the contributions by physiological signal modality and by the deep-learning models used, highlighting key contributions in terms of medical application and system performance. We group the contributions into deep learning on the electromyogram (EMG), electrocardiogram (ECG), electroencephalogram (EEG), electrooculogram (EOG), and combinations of signals, as shown in Table 2.

Table 2.

Table structure based on signal modality and dataset source.

We compare the deep-learning models both quantitatively and qualitatively. For the quantitative comparison, we illustrate the number of deep-learning models used in each medical application. For the qualitative comparison, since performance criteria are not reported uniformly, we use accuracy as the base criterion for comparing overall performance.

3.1. Deep Learning with Electromyogram (EMG)

The electromyogram (EMG) records changes in skeletal muscle activity using non-invasive electrodes placed on the skin, such as the commercial MYO Armband (MYB). Because different activities produce different patterns of muscle activation, the EMG signal can discriminate motion patterns such as an open or closed hand. To classify such motion patterns from EMG signals, 15 research works used deep-learning methods, as shown in Table 3 and Table 4. These works make two types of key contribution: one focuses on hand motion recognition and the other on general muscle activity recognition.

Table 3.

Medical application in EMG analysis using a public dataset source.

Table 4.

Medical application in EMG analysis using a private dataset source.

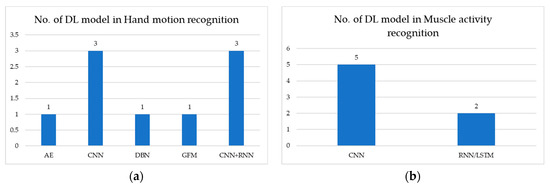

Figure 2 shows the number of deep-learning models used to analyze the EMG signal: (a) illustrates hand motion recognition and (b) illustrates muscle activity recognition. In hand motion recognition, CNN and CNN+RNN models are the most commonly used. In muscle activity recognition, the CNN model is the most commonly used.

Figure 2.

Number of DL models used in EMG signals for: (a) hand motion recognition; (b) muscle activity recognition.

Table 3 describes medical applications of deep-learning methods in EMG signal analysis using public datasets. On public datasets, CNN models achieve overall accuracy >68%, whereas CNN+RNN models achieve higher accuracy, >82%.

Table 4 describes medical applications of deep-learning methods in EMG signal analysis using private (in-house) datasets. For hand motion recognition, the works use their own in-house datasets; the DBN model achieves overall accuracy >88% and therefore performs better than the CNN and CNN+RNN models. For muscle activity recognition, the CNN model achieves a normalized mean squared error (NMSE) of 0.033 ± 0.017, while the RNN/long short-term memory (LSTM) model achieves an NMSE of 0.096 ± 0.013. Since a lower NMSE indicates a better fit, the CNN model performs better than the RNN/LSTM model.
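NMSE is not defined identically across papers. The sketch below assumes one common form, the mean squared error divided by the variance of the target signal, so that 0 means a perfect estimate and 1 means no better than predicting the mean; the helper name `nmse` and the toy values are hypothetical.

```python
import numpy as np


def nmse(y_true, y_pred) -> float:
    """Normalized mean squared error: MSE divided by the variance of y_true.

    Assumed definition; individual papers may normalize differently.
    """
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    mse = np.mean((y_true - y_pred) ** 2)
    var = np.var(y_true)
    if var == 0:
        raise ValueError("y_true has zero variance; NMSE is undefined")
    return float(mse / var)


target = np.array([0.0, 1.0, 2.0, 3.0])
print(nmse(target, target))                     # 0.0 (perfect estimate)
print(nmse(target, np.full(4, target.mean())))  # 1.0 (predicting the mean)
```

If the surveyed works use this definition, the reported values mean that the CNN's residual error is roughly 3% of the signal variance versus roughly 10% for the RNN/LSTM model.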

3.2. Deep Learning with Electrocardiogram (ECG)

The electrocardiogram (ECG) records changes in heartbeat or heart rhythm. Forty-seven research works use deep-learning methods to analyze ECG signals, as shown in Table 5, Table 6, and Table 7. Their key contributions fall into heartbeat signal classification, heart disease classification, sleep-stage classification, emotion detection, and age and gender prediction.

Table 5.

Medical application in ECG analysis using a public dataset source.

Table 6.

Medical application in ECG analysis using a private dataset source.

Table 7.

Medical application in ECG analysis using a hybrid dataset source.

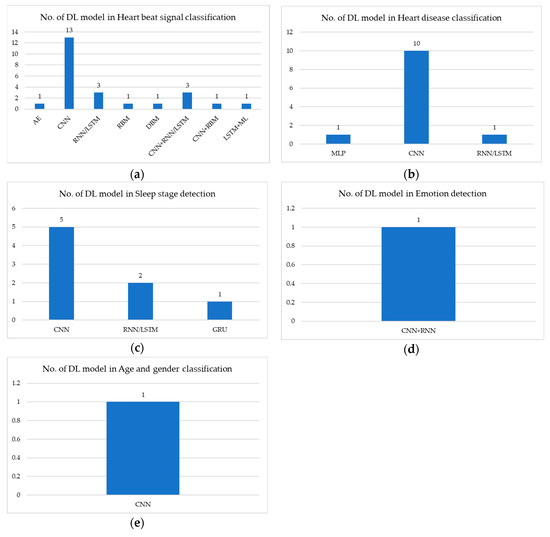

Figure 3 shows the number of deep-learning models used to analyze ECG signal: (a) illustrates heartbeat signal classification in which the CNN model is the most commonly used; (b) illustrates heart disease classification in which CNN is the most commonly used; (c) illustrates sleep-stage detection in which CNN is the most commonly used; (d) illustrates emotion detection in which RNN/LSTM and CNN+RNN are used; and (e) illustrates age and gender classification in which only CNN is used.

Figure 3.

Number of DL models used in ECG signals for: (a) heartbeat signal classification; (b) heart disease classification; (c) sleep stage detection; (d) emotion detection; and (e) age and gender classification.

Table 5 describes medical applications of deep-learning methods in ECG signal analysis using public datasets. In heartbeat signal classification, the CNN model achieves overall accuracy >95%, the RNN/LSTM model >98%, and the CNN+RNN/LSTM model >87%; therefore, the RNN/LSTM model performs best. In heart disease classification, the CNN model achieves overall accuracy >83%, the RNN/LSTM model >90%, and the CNN+RNN/LSTM model >98%; therefore, the CNN+RNN/LSTM model performs best. In sleep-stage classification, only the CNN model is used, with overall accuracy >87%.

Table 6 describes medical applications of deep-learning methods in ECG signal analysis using private datasets. In heartbeat signal classification, only the CNN model is used, with overall accuracy >78%. In heart disease classification, the CNN model achieves overall accuracy >97%, while the CNN+LSTM model achieves >83%; therefore, the CNN model performs better. In sleep-stage classification, the CNN and GRU models achieve an accuracy of 99%. In emotion classification, the CNN+RNN model achieves accuracy >73%. In age and gender prediction, the CNN model achieves accuracy >90%.

Table 7 describes medical applications of deep-learning methods in ECG signal analysis using hybrid datasets. In heartbeat signal classification, the CNN+LSTM model achieves accuracy >99%.

3.3. Deep Learning with Electroencephalogram (EEG)

The electroencephalogram (EEG) records changes in brain activity measured from the scalp. Seventy-nine research works use deep-learning methods to analyze EEG signals, as shown in Table 8, Table 9, and Table 10. Their key contributions fall into brain functionality, brain disease, emotion, sleep-stage, motion, gender, word, and age classification.

Table 8.

Medical application in EEG analysis using a public dataset source.

Table 9.

Medical application in EEG analysis using a private dataset source.

Table 10.

Medical application in EEG analysis using a hybrid dataset source.

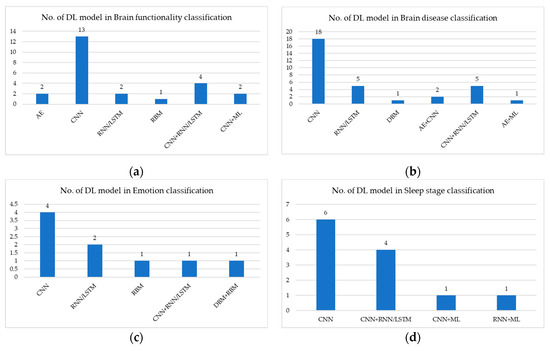

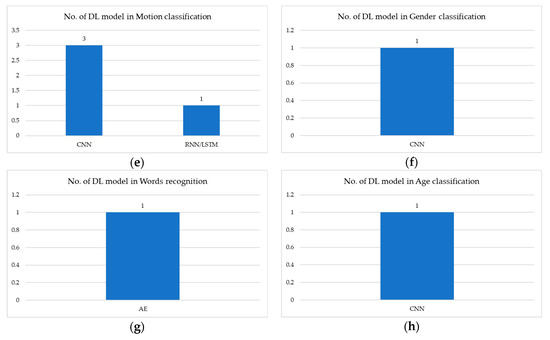

Figure 4 shows the number of deep-learning models used to analyze EEG signal: (a) illustrates brain functionality classification in which the CNN model is the most commonly used; (b) illustrates brain disease classification in which the CNN is the most commonly used; (c) illustrates emotion classification in which the CNN is the most commonly used; (d) illustrates sleep-stage classification in which CNN is the most commonly used; (e) illustrates motion classification in which CNN is the most commonly used; (f) illustrates gender classification in which only CNN is used; (g) illustrates word recognition in which only AE is used; and (h) illustrates age classification in which only CNN is used.

Figure 4.

Number of DL models used in EEG signals for: (a) brain functionality classification; (b) brain disease classification; (c) emotion classification; (d) sleep-stage classification; (e) motion classification; (f) gender classification; (g) word recognition; and (h) age classification.

Table 8 describes medical applications of deep-learning methods in EEG signal analysis using public datasets. In brain functionality classification, the CNN model achieves overall accuracy >66%, the RNN/LSTM model >77%, and the CNN+RNN/LSTM model >74%; therefore, the RNN/LSTM model performs best. In brain disease classification, the CNN model achieves overall accuracy >93%, the RNN/LSTM model >95%, and the CNN+RNN/LSTM model >90%; therefore, the RNN/LSTM model again performs best. In emotion classification, the CNN model achieves overall accuracy >55%, the RNN/LSTM model >74%, and the RBM model >75%; therefore, the RBM model performs best. In sleep-stage classification, the CNN and RNN/LSTM models both achieve overall accuracy >79%, while the CNN+RNN/LSTM model achieves >84% and therefore performs best. In motion classification, only RNN/LSTM is used, with accuracy >68%. In gender classification, only CNN is used, with accuracy >80%. In word classification, only CNN+AE is used, with overall accuracy >95%.

Table 9 describes medical applications of deep-learning methods in EEG signal analysis using private datasets. In brain functionality classification, the CNN model achieves overall accuracy >63%, the CNN+RNN/LSTM model >83%, and the stacked auto-encoder (SAE)+CNN model >88%; therefore, the SAE+CNN model performs best. In brain disease classification, the CNN model achieves overall accuracy >59%, the RNN/LSTM model >73%, and the CNN+RNN/LSTM model >70%; therefore, the RNN/LSTM model performs best. In emotion classification, only the CNN+LSTM model is used, with accuracy >98%. In sleep-stage classification, the CNN model achieves overall accuracy >95%, while the CNN+RNN/LSTM model achieves kappa >0.8. In motion classification, only the CNN model is used, with accuracy >80%.

Table 10 describes medical applications of deep-learning methods in EEG signal analysis using hybrid datasets. In brain disease classification, only the CNN+AE model is used, with kappa >0.564. In sleep-stage classification, only the CNN+LSTM model is used, with kappa >0.72. In age classification, only the CNN model is used, with accuracy >95%.
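Several EEG results above are reported as Cohen's kappa rather than accuracy, so they cannot be ranked directly against the accuracy figures: kappa discounts the agreement expected by chance from the class frequencies. The minimal sketch below computes kappa from a confusion matrix; the helper name `cohen_kappa`, the four-class stage encoding, and the toy labels are hypothetical.

```python
import numpy as np


def cohen_kappa(y_true, y_pred, n_classes: int) -> float:
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    cm = np.zeros((n_classes, n_classes))
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1  # rows = true class, columns = predicted class
    n = cm.sum()
    p_observed = np.trace(cm) / n
    # Chance agreement from the true-label and predicted-label marginals
    p_expected = np.sum(cm.sum(axis=1) * cm.sum(axis=0)) / n ** 2
    if p_expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return float((p_observed - p_expected) / (1.0 - p_expected))


# Hypothetical sleep-stage labels (0 = Wake, 1 = N1, 2 = N2, 3 = REM)
true_stages = [0, 0, 1, 1, 2, 2, 2, 3]
pred_stages = [0, 1, 1, 1, 2, 2, 3, 3]
accuracy = np.mean(np.array(true_stages) == np.array(pred_stages))
kappa = cohen_kappa(true_stages, pred_stages, n_classes=4)
print(f"accuracy={accuracy:.3f}, kappa={kappa:.3f}")  # accuracy=0.750, kappa=0.667
```

The same predictions score 75% accuracy but a kappa of about 0.67, which illustrates why a kappa threshold such as >0.72 is not comparable to an accuracy threshold.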

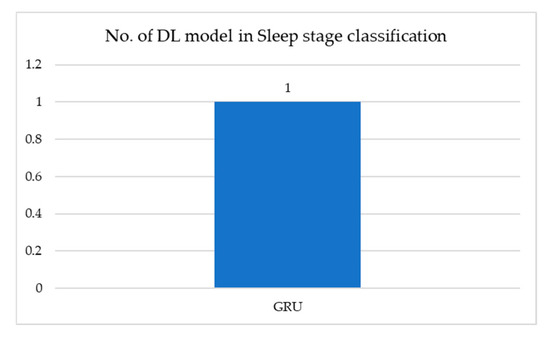

3.4. Deep Learning with Electrooculogram (EOG)

The electrooculogram (EOG) records changes in the corneo-retinal potential between the front and the back of the human eye. Only one research work uses deep-learning methods to analyze EOG signals, as shown in Table 11, and its contribution is limited to sleep-stage classification. Figure 5 shows the number of deep-learning models used to analyze EOG signals for sleep-stage classification; the single work uses a GRU model.

Table 11.

Medical application in EOG analysis using a public dataset source.

Figure 5.

Number of DL models used in EOG signals for sleep-stage classification.

Table 11 describes medical applications of deep-learning methods in EOG signal analysis using a public dataset. In sleep-stage classification, the GRU model achieves an accuracy of 69.25%.

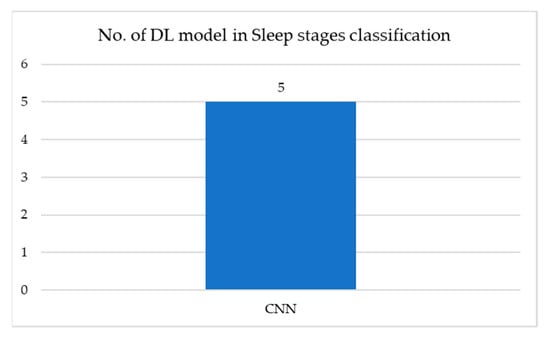

3.5. Deep Learning with a Combination of Signals

Five research works use deep-learning methods to analyze a combination of signals, as shown in Table 12. Sokolovsky et al. [150] combined EEG and EOG signals. Chambon et al. [151] and Andreotti et al. [152] combined polysomnography (PSG) signals such as EEG, EMG, and EOG. Yildirim et al. [153] exploited a combination of EEG and EOG signals. Croce et al. [154] used EEG and magnetoencephalographic (MEG) signals. CNNs are used both for sleep-stage classification and for classifying independent components as brain or artifactual. Figure 6 shows the number of deep-learning models used to analyze a combination of signals for sleep-stage classification.

Table 12.

Medical application in the analysis of signal combinations using a public dataset source.

Figure 6.

Number of DL models used in a combination of signals for sleep-stage classification.

Table 12 describes medical applications of deep-learning methods in the analysis of signal combinations using public datasets. In sleep-stage classification, only the CNN model is used, with overall accuracy >81%.

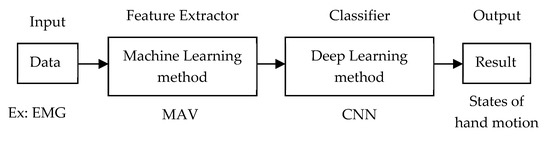

4. Training Architecture

To achieve high accuracy, deep-learning techniques require not only a good algorithm but also a good dataset [155]. Based on our review, the input data are used in two ways: (1) the input data are first converted into features, and the feature data are then fed into the network; some contributions use traditional machine-learning methods as feature extractors, described in Section 4.1, while others use deep-learning methods as feature extractors, described in Section 4.2; and (2) the raw input data are fed directly into the network for end-to-end learning, described in Section 4.3.

4.1. Traditional Machine Learning as Feature Extractor and Deep Learning as Classifier

To help distinguish signal labels, the raw signal data are first transformed into N feature levels. This step, called feature extraction, is performed to improve the accuracy of prediction in the subsequent classification step. Figure 7 illustrates the training architecture that uses traditional machine learning as the feature extractor and deep learning as the classifier. For example, the raw EMG signal is reduced to N levels using the mean absolute value (MAV), and the resulting feature data are fed into a CNN to classify hand motion.

Figure 7.

Training architecture of machine learning as feature extractor and deep learning as classifier.
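The MAV step in the EMG example can be sketched as follows. This assumes, for illustration only, that the N levels are N consecutive, equal-length windows of a single-channel recording; practical systems usually compute MAV per channel over overlapping sliding windows, and the helper name `mav_features` and the sample values are hypothetical.

```python
import numpy as np


def mav_features(emg, n_levels: int) -> np.ndarray:
    """Split a raw single-channel EMG recording into n_levels windows and
    return the mean absolute value (MAV) of each window as a feature vector."""
    emg = np.asarray(emg, dtype=float)
    if n_levels < 1 or n_levels > len(emg):
        raise ValueError("n_levels must be between 1 and len(emg)")
    # np.array_split tolerates lengths that are not divisible by n_levels
    windows = np.array_split(emg, n_levels)
    return np.array([np.mean(np.abs(w)) for w in windows])


raw = np.array([0.5, -0.5, 1.0, -1.0, 2.0, -2.0])
features = mav_features(raw, n_levels=3)
print(features)  # one MAV per window: 0.5, 1.0, 2.0
```

Because MAV ignores sign, opposite-polarity deflections of the same size contribute equally, which is why it is used as a simple estimate of muscle activation intensity.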

Yu et al. [9] designed a feature-level fusion approach to recognize Chinese sign language. Two kinds of features are extracted: hand-crafted features and features learned by a DBN. These two feature sets are concatenated and then fed into a deep belief network and a fully connected network for learning.

For hand-grasping classification, Li et al. [14] used principal component analysis (PCA) for dimensionality reduction, and a DNN consisting of stacked two-layer auto-encoders and a SoftMax classifier to classify force levels.
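PCA-based dimensionality reduction, the first stage of this pipeline, can be sketched with a singular value decomposition of the centered feature matrix. The sketch assumes one row per sample and one column per feature; the helper name `pca_reduce`, the random data, and the choice of four components are hypothetical, and the auto-encoder and SoftMax stages are not shown.

```python
import numpy as np


def pca_reduce(X, n_components: int) -> np.ndarray:
    """Project rows of X (samples x features) onto the top principal components."""
    X = np.asarray(X, dtype=float)
    X_centered = X - X.mean(axis=0)
    # Rows of Vt are principal directions, ordered by decreasing singular value
    _, _, Vt = np.linalg.svd(X_centered, full_matrices=False)
    return X_centered @ Vt[:n_components].T


rng = np.random.default_rng(0)
features = rng.normal(size=(100, 16))   # e.g. 100 windows x 16 hypothetical EMG features
reduced = pca_reduce(features, n_components=4)
print(reduced.shape)  # (100, 4)
```

The reduced matrix, rather than the full feature set, would then be passed to the downstream network.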

Saadatnejad et al. [35] proposed ECG heartbeat classification for continuous monitoring. The work extracted RR-interval features and wavelet features from raw ECG heartbeats, and then fed the extracted features into two RNN-based models for classification.
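RR-interval features are derived from the positions of detected R peaks. The sketch assumes the R-peak sample indices are already available (R-peak detection is out of scope) and uses a simple pre-RR / post-RR / ratio feature set as an illustration; it is not necessarily the feature set of [35], which also uses wavelet features. The helper name `rr_features` and the peak positions are hypothetical; 360 Hz is the sampling rate of the MIT-BIH Arrhythmia Database.

```python
import numpy as np


def rr_features(r_peaks, fs: float) -> np.ndarray:
    """For each interior beat, return [pre-RR, post-RR, pre/post ratio].

    r_peaks: strictly increasing sample indices of detected R peaks.
    fs: sampling rate in Hz, used to convert intervals to seconds.
    """
    peaks = np.asarray(r_peaks, dtype=float)
    rr = np.diff(peaks) / fs  # interval between consecutive beats, in seconds
    feats = [[rr[i - 1], rr[i], rr[i - 1] / rr[i]] for i in range(1, len(peaks) - 1)]
    # reshape keeps a (0, 3) shape when there are fewer than three peaks
    return np.array(feats, dtype=float).reshape(-1, 3)


fs = 360
peaks = [0, 360, 720, 900, 1440]
feats = rr_features(peaks, fs)
print(feats)
```

In this toy example the beat at sample 900 has a short pre-RR interval (0.5 s) followed by a long post-RR interval (1.5 s), the pattern typical of a premature beat followed by a compensatory pause.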

To classify premature ventricular contractions, Jeon et al. [45] extracted features of the QRS pattern from the ECG signal and classified them with a network whose weights and biases are modified by the error-backpropagation algorithm.

Liu et al. [60] presented heart disease classification from ECG signals using symbolic aggregate approximation (SAX) for feature extraction and an LSTM for classification.
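SAX converts a numeric signal into a short string. It z-normalizes the signal, averages it over equal segments (piecewise aggregate approximation, PAA), and maps each average to a symbol using breakpoints that divide the standard normal distribution into equiprobable regions. The sketch below follows that standard recipe; the helper name `sax`, the segment count, and the four-letter alphabet are assumptions, and [60] may configure SAX differently.

```python
from statistics import NormalDist

import numpy as np


def sax(signal, n_segments: int, alphabet: str = "abcd") -> str:
    """Symbolic aggregate approximation of a 1D signal."""
    x = np.asarray(signal, dtype=float)
    std = x.std()
    # 1) z-normalize (a flat signal is only mean-centered)
    x = (x - x.mean()) / std if std > 0 else x - x.mean()
    # 2) Piecewise aggregate approximation: mean of each segment
    paa = np.array([seg.mean() for seg in np.array_split(x, n_segments)])
    # 3) Breakpoints split N(0, 1) into len(alphabet) equiprobable regions
    a = len(alphabet)
    breakpoints = [NormalDist().inv_cdf(k / a) for k in range(1, a)]
    # 4) Map each PAA value to the symbol of the region it falls in
    idx = np.searchsorted(breakpoints, paa)
    return "".join(alphabet[i] for i in idx)


print(sax(np.arange(8), n_segments=4))  # abcd
```

A rising ramp maps to the symbols in increasing order; the resulting symbol sequence (or an encoding of it) would then serve as the LSTM input.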

Majidov et al. [85] proposed motor imagery EEG classification using Riemannian geometry-based feature extraction, and compared two classifier designs: convolutional layers followed by SoftMax layers, and convolutional layers followed by fully connected layers with 100 output units.

Abbas et al. [87] designed a model for multiclass motor imagery classification, in which a fast Fourier transform energy map (FFTEM) is used for feature extraction and a CNN is used for classification.
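The general idea of an FFT-based energy map can be sketched as a channels × bands matrix of spectral energy, which a CNN can then treat as a small 2D input. This is not the exact FFTEM construction of [87]; the helper name `fft_energy_map` is hypothetical, and the band choice (the mu band, 8 to 13 Hz, and the beta band, 13 to 30 Hz, which are commonly examined in motor imagery) is an illustrative assumption.

```python
import numpy as np


def fft_energy_map(eeg: np.ndarray, fs: float, bands) -> np.ndarray:
    """Return a (channels x bands) map of spectral energy.

    eeg: array of shape (n_channels, n_samples).
    bands: list of (low_hz, high_hz) tuples, treated as half-open [low, high).
    """
    power = np.abs(np.fft.rfft(eeg, axis=1)) ** 2        # power per frequency bin
    freqs = np.fft.rfftfreq(eeg.shape[1], d=1.0 / fs)    # bin frequencies in Hz
    energy = np.empty((eeg.shape[0], len(bands)))
    for j, (lo, hi) in enumerate(bands):
        mask = (freqs >= lo) & (freqs < hi)
        energy[:, j] = power[:, mask].sum(axis=1)
    return energy


fs = 250
t = np.arange(2 * fs) / fs
# Two synthetic channels: a 10 Hz (mu-band) and a 20 Hz (beta-band) oscillation
eeg = np.vstack([np.sin(2 * np.pi * 10 * t), np.sin(2 * np.pi * 20 * t)])
emap = fft_energy_map(eeg, fs, bands=[(8, 13), (13, 30)])
print(emap.shape)  # (2, 2)
```

Each channel's energy concentrates in the band that contains its oscillation, so the map summarizes where rhythmic activity occurs across channels.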

To diagnose brain disorders, Golmohammadi et al. [95] used linear frequency cepstral coefficients (LFCC) for feature extraction, and a hybrid of hidden Markov models and a stacked denoising auto-encoder (SDA) for classification.
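LFCCs are computed like the better-known mel-frequency cepstral coefficients, except that the triangular filters are spaced linearly in frequency. The sketch below processes a single frame: power spectrum, linear triangular filterbank, log compression, then an unnormalized DCT-II. The helper name `lfcc`, the number of filters and coefficients, and the Hann window are illustrative assumptions; how [95] configures LFCC (frame size, filter count, any delta features) is not stated here.

```python
import numpy as np


def lfcc(frame: np.ndarray, fs: float, n_filters: int = 20, n_coeffs: int = 12) -> np.ndarray:
    """Linear frequency cepstral coefficients of one signal frame."""
    frame = np.asarray(frame, dtype=float)
    # 1) Power spectrum of the windowed frame
    power = np.abs(np.fft.rfft(frame * np.hanning(len(frame)))) ** 2
    freqs = np.fft.rfftfreq(len(frame), d=1.0 / fs)
    # 2) Triangular filters whose edges are spaced linearly from 0 to fs/2
    edges = np.linspace(0.0, fs / 2, n_filters + 2)
    fbank_energy = np.empty(n_filters)
    for m in range(n_filters):
        left, centre, right = edges[m], edges[m + 1], edges[m + 2]
        rising = (freqs - left) / (centre - left)
        falling = (right - freqs) / (right - centre)
        weights = np.clip(np.minimum(rising, falling), 0.0, None)
        fbank_energy[m] = np.sum(weights * power)
    # 3) Log compression; the small constant avoids log(0)
    log_energy = np.log(fbank_energy + 1e-10)
    # 4) DCT-II decorrelates the log energies; keep the first n_coeffs
    n = np.arange(n_filters)
    dct_basis = np.cos(np.pi / n_filters * (n + 0.5)[None, :] * np.arange(n_coeffs)[:, None])
    return dct_basis @ log_energy


rng = np.random.default_rng(0)
coeffs = lfcc(rng.normal(size=256), fs=256)
print(coeffs.shape)  # (12,)
```

In a full pipeline, this function would be applied to successive overlapping frames, producing one coefficient vector per frame for the downstream classifier.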

4.2. Deep Learning as Feature Extractor and Traditional Machine Learning as Classifier

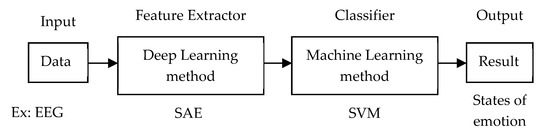

Figure 8 illustrates the training architecture that uses deep learning as the feature extractor and traditional machine learning as the classifier. For example, the raw EEG signal is reduced to N levels using an SAE, and the resulting feature data are fed into a support vector machine (SVM) to classify the emotional state.

Figure 8.

Training architecture of deep learning as feature extractor and machine learning as classifier.

Chauhan et al. [26] proposed an ECG anomaly class identification algorithm in which an LSTM together with error-profile modeling serves as the feature extractor. Several traditional machine-learning classifiers were then evaluated, such as the multilayer perceptron, support vector machine, and logistic regression.

To diagnose arrhythmia, Yang et al. [42] used DL-CCANet and TL-CCANet as feature extractors to learn discriminative features from dual-lead and three-lead ECGs. The extracted features were then fed into a linear support vector machine for classification.

Nguyen et al. [63] proposed an algorithm for detecting sudden cardiac arrest in automated external defibrillators, in which a CNN is used as the feature extractor (CNNE) and a boosting (BS) classifier performs the classification.

Ma et al. [131] designed a model to detect driving fatigue. The model uses PCANet, a deep-learning network that integrates PCA, for feature extraction, and then an SVM or KNN classifier for classification.

4.3. End-to-End Learning

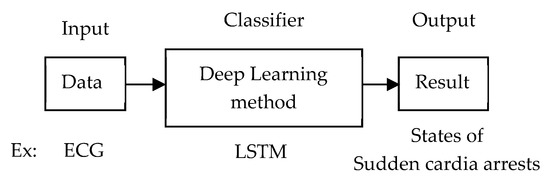

Rather than extracting features from the raw data, this architecture feeds the raw data directly into the network for classification, eliminating the separate feature-extraction step. Figure 9 illustrates the training architecture in which only deep-learning methods are used to take raw input data, perform the classification, and output the result. For example, ECG data are fed into an LSTM network to classify sudden cardiac arrest states.

Figure 9.

Training architecture of end-to-end learning using deep learning.
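The data flow of end-to-end learning can be sketched as a forward pass of a single-layer LSTM that consumes raw samples one at a time and produces class probabilities, with no hand-crafted features in between. This is a minimal NumPy illustration under stated assumptions: the weights are random and untrained (so the output probabilities carry no meaning), training by backpropagation through time, normally done with a deep-learning framework, is omitted, and the names `lstm_classify` and `init_params` and the two-class output are hypothetical.

```python
import numpy as np


def _sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def init_params(hidden: int, n_classes: int, seed: int = 0) -> dict:
    """Random, untrained weights; gates are stacked in the order [i, f, g, o]."""
    rng = np.random.default_rng(seed)
    return {
        "W": rng.normal(scale=0.1, size=4 * hidden),            # input weights (1 input)
        "U": rng.normal(scale=0.1, size=(4 * hidden, hidden)),  # recurrent weights
        "b": np.zeros(4 * hidden),
        "V": rng.normal(scale=0.1, size=(n_classes, hidden)),   # read-out weights
        "d": np.zeros(n_classes),
    }


def lstm_classify(x: np.ndarray, params: dict) -> np.ndarray:
    """Run an LSTM over raw 1D samples and return softmax class probabilities."""
    W, U, b = params["W"], params["U"], params["b"]
    H = U.shape[1]
    h = np.zeros(H)
    c = np.zeros(H)
    for x_t in x:                      # one raw sample per time step
        z = W * x_t + U @ h + b        # shape (4H,)
        i = _sigmoid(z[0:H])           # input gate
        f = _sigmoid(z[H:2 * H])       # forget gate
        g = np.tanh(z[2 * H:3 * H])    # candidate cell state
        o = _sigmoid(z[3 * H:4 * H])   # output gate
        c = f * c + i * g
        h = o * np.tanh(c)
    logits = params["V"] @ h + params["d"]  # classify from the last hidden state
    exp = np.exp(logits - logits.max())     # numerically stable softmax
    return exp / exp.sum()


raw_ecg = np.sin(np.linspace(0, 20, 500))  # stand-in for a raw ECG segment
probs = lstm_classify(raw_ecg, init_params(hidden=16, n_classes=2))
print(probs)
```

The point of the design is that the only preprocessing is the choice of input segment; everything else, including which signal properties matter, is left to the learned weights.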

5. Dataset Sources

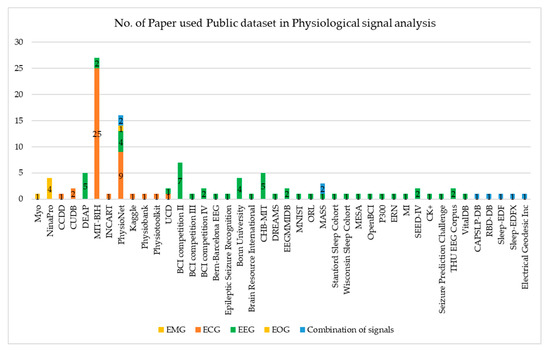

We identify three types of dataset source. (1) Public datasets, shown in Table 3, Table 5, Table 8, Table 11, and Table 12, are available online and freely accessible, and typically contain large numbers of samples. Figure 10 illustrates the number of papers using a public dataset for each physiological data modality. For EMG signal analysis, NinaPro DB is the most commonly used. For ECG signal analysis, MIT-BIH is the most commonly used, followed by PhysioNet. For EEG signal analysis, BCI competition II is the most commonly used, followed by CHB-MIT and DEAP. For EOG signal analysis, only PhysioNet is used. For combined-signal analysis, MASS and PhysioNet are the most commonly used. (2) Private datasets, shown in Table 4, Table 6, and Table 9, are collected by the authors in their own laboratory, hospital, or institution. Such datasets require a specific recording device and the involvement of participants in the experimental process, so they typically contain small numbers of samples. (3) Hybrid datasets, shown in Table 7 and Table 10, combine public and private datasets in the experiment.

Figure 10.

Number of papers using a public dataset in physiological signal analysis.

6. Discussion

We studied contributions based on types of physiological signal data modality and training architecture. The medical application, deep-learning model, and performance of those contributions have been reviewed and illustrated.

6.1. Discussion of the Deep-Learning Task

Across medical applications, we found that most contributions address one of three tasks: classification, feature extraction, or data compression. The classification task, also known as a recognition, detection, or prediction task, determines whether an instance is present. For example, arrhythmia detection [51] analyzes whether a heartbeat signal is normal or arrhythmic. The classification task also groups or grades instances into types. For example, emotion classification [126] assigns emotions to the groups sad, happy, neutral, and fear. The feature-extraction task [43] focuses on enhancing the input data, using unsupervised learning to label the dataset and thereby avoid the heavy burden of manual labeling. The data compression task [33] focuses on reducing data size for storage and transmission while retaining high data quality.

6.2. Discussion of the Deep-Learning Model

Although many deep-learning models exist, we found that CNN, RNN/LSTM, and CNN+RNN/LSTM models are the most commonly used. As established in the literature, RNN/LSTM models predict continuous sequential data well. Nevertheless, many contributions convert physiological signals into 2D data and feed them into a CNN, with good performance.

6.3. Discussion of the Training Architecture

Because data modalities differ in their characteristics, a variety of training architectures has been investigated. The first type of architecture uses a traditional machine-learning model as the feature extractor and a deep-learning model as the classifier. Its goal is to boost classification accuracy by converting raw data into feature data, which are potentially more discriminative than the raw data. The DL classifier is trained on these feature data in a supervised manner.

In contrast, the second type of architecture employs a deep-learning model as the feature extractor and a traditional machine-learning model as the classifier. Its goal is to reduce the heavy burden of labeling the dataset by hand. The DL extractor is trained on the raw data in an unsupervised manner.

The third type of architecture uses only a deep-learning model, trained on raw data, to produce the final output. Rather than relying on engineered input data, it relies on the strength of the deep-learning model itself, on the premise that a more robust DL algorithm yields higher accuracy. This architecture is trained on raw data in a supervised manner and also simplifies the implementation stage.

In our survey, we could not determine which type of architecture is best, because no contribution applies all three types of architecture to the same input dataset for training and testing on the same task.

6.4. Discussion of the Dataset Source

We reviewed the dataset sources used in deep-learning applications of physiological signal analysis. The widely used public datasets are MIT-BIH, PhysioNet, BCI competition II, CHB-MIT, DEAP, Bonn University, and NinaPro. Private datasets were collected by the authors in their own laboratory, hospital, or institution when the data were not publicly available. To compensate for limited data, some contributions, such as Nodera et al. [23], employed data augmentation, in which synthetic samples are generated by duplicating original data and applying transformations such as translation and rotation. Contributions [12,16,23,46,58] employed transfer learning: rather than training from scratch, which requires a huge dataset, they adapted pretrained weights from state-of-the-art models such as AlexNet, VGG, ResNet, Inception, or DenseNet.
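For raw 1D signals, translation can be mimicked by a time shift, while rotation has no direct counterpart; amplitude scaling and small additive noise are often used instead. The sketch below generates perturbed copies of one signal along those lines. These transformations, the helper name `augment`, and all parameter defaults are illustrative assumptions, not the procedure of [23] or of any other surveyed work.

```python
import numpy as np


def augment(signal, n_copies: int, rng: np.random.Generator,
            max_shift: int = 20, scale_range=(0.9, 1.1),
            noise_std: float = 0.01) -> np.ndarray:
    """Create n_copies perturbed versions of a 1D signal.

    Each copy is circularly shifted in time, rescaled in amplitude and
    lightly corrupted with Gaussian noise. Defaults are illustrative only.
    """
    signal = np.asarray(signal, dtype=float)
    copies = []
    for _ in range(n_copies):
        shift = int(rng.integers(-max_shift, max_shift + 1))  # translation in time
        scale = rng.uniform(*scale_range)                     # amplitude scaling
        noise = rng.normal(0.0, noise_std, size=signal.shape)
        copies.append(scale * np.roll(signal, shift) + noise)
    return np.stack(copies)


rng = np.random.default_rng(42)
base = np.sin(np.linspace(0, 2 * np.pi, 200))
aug = augment(base, n_copies=5, rng=rng)
print(aug.shape)  # (5, 200)
```

Whichever transformations are used, they should preserve the label: a shift or rescaling that changes, for example, the apparent heart rhythm would create misleading training samples.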

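Transfer learning in its simplest form, reusing frozen pre-trained weights and training only a new output layer, is sketched below. The weights in `PRETRAINED_W` are hypothetical hand-set values standing in for weights loaded from a published model such as those named above; the logistic-regression head, the helper names, and the target-task waveforms are likewise illustrative assumptions. Fine-tuning some or all backbone layers is a common variant that is not shown.

```python
import copy
import math
import random

random.seed(2)

# HYPOTHETICAL stand-in for pre-trained backbone weights (not taken from any real model).
PRETRAINED_W = [
    [1, -1, 1, -1, 1, -1, 1, -1],
    [1, 1, -1, -1, 1, 1, -1, -1],
    [1, 1, 1, 1, -1, -1, -1, -1],
]


def extract_features(x, weights=PRETRAINED_W):
    """Frozen backbone: ReLU(W x); these weights are never updated below."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]


def train_head(features, labels, epochs=200, lr=0.01):
    """Fit only a new logistic-regression output layer on the frozen features."""
    w, b = [0.0] * len(features[0]), 0.0
    for _ in range(epochs):
        for f, y in zip(features, labels):
            p = 1.0 / (1.0 + math.exp(-(sum(wi * fi for wi, fi in zip(w, f)) + b)))
            w = [wi - lr * (p - y) * fi for wi, fi in zip(w, f)]
            b -= lr * (p - y)
    return w, b


def predict(w, b, f):
    return int(sum(wi * fi for wi, fi in zip(w, f)) + b >= 0.0)


def make_signal(pattern):
    """Scale a waveform by a random amplitude and add Gaussian noise."""
    a = random.uniform(0.5, 1.5)
    return [a * p + random.gauss(0.0, 0.1) for p in pattern]


# Small hypothetical target-task dataset: waveform A -> label 0, waveform B -> label 1.
WAVE_A = [1, -1, 1, -1, 1, -1, 1, -1]
WAVE_B = [1, 1, 1, 1, -1, -1, -1, -1]
signals = [make_signal(WAVE_A) for _ in range(10)] + [make_signal(WAVE_B) for _ in range(10)]
labels = [0] * 10 + [1] * 10

backbone_before = copy.deepcopy(PRETRAINED_W)
features = [extract_features(x) for x in signals]
w, b = train_head(features, labels)
accuracy = sum(predict(w, b, f) == y for f, y in zip(features, labels)) / len(labels)
print(f"target-task training accuracy: {accuracy:.2f}")
```

Only the few head parameters are fitted to the small target dataset, which is why this strategy is attractive when, as noted above, a large training set is unavailable.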
7. Conclusions

In this paper, we reviewed deep-learning approaches and their applications in medical 1D signal analysis over the past two years. We found 147 papers that used deep-learning methods for EMG, ECG, EEG, and EOG signal analysis, and for the analysis of combinations of these signals.

By reviewing these works, we identified the key parameters used to estimate hand motion, heart disease, brain disease, emotion, sleep stages, age, and gender. We also found that the CNN model achieves state-of-the-art performance in physiological signal analysis. However, there is no precise, standardized experimental setting, and these non-uniform parameters and settings make it difficult to compare exact performance. We therefore compared overall performance. This comparison, together with our discussion of the lessons learned, should help other researchers decide which input data type, deep-learning task, deep-learning model, training architecture, and dataset are suitable for their desired medical application and for reaching state-of-the-art performance, since these are the main parameters that affect system performance.

In conclusion, deep learning has proved to be a promising approach, bringing the reviewed contributions to the state of the art in physiological signal analysis for medical applications.

Author Contributions

Conceptualization, project administration, and writing (review and editing) were performed by M.H.; methodology and writing (original draft) by B.R.; writing (review and editing) and validation by S.M.; and data curation and writing (original draft) by N.-J.S. All authors have read and agreed to the published version of the manuscript.

Acknowledgments

This research was supported by the Bio and Medical Technology Development Program of the National Research Foundation (NRF) funded by the Korean government (MSIT) (No. NRF-2019M3E5D1A02069073) and was supported by the Soonchunhyang University Research Fund.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADHD | Attention-deficit/hyperactivity disorder |
| AE | Auto-encoder |
| ANN | Artificial neural network |
| AUC | Area under the curve |
| AUPRC | Area under the precision–recall curve |
| AUROC | Area under the receiver operating characteristic curve |
| BB | Bern-Barcelona EEG database |
| BCI | Brain-computer interface |
| BRNN | Bidirectional recurrent neural network |
| CAM-ICU | Confusion assessment method for the ICU |
| CapsNet | Capsule network |
| CNNE | Convolutional neural network as a feature extractor |
| CP-MixedNet | Channel-projection mixed-scale convolutional neural network |
| CssC DBM | Contractive slab and spike convolutional deep Boltzmann machine |
| DBLSTM-WS | Deep bidirectional LSTM network based on wavelet sequences |
| DBM | Deep Boltzmann machine |
| DBN | Deep belief network |
| DBN-GC | Deep belief networks with glia chains |
| DCNN | Deep convolutional neural network |
| DCssC DBM | Discriminative version of CssC DBM |
| DL-CCANet | Dual-lead ECGs - canonical correlation analysis and cascaded convolutional network |
| DN-AE-NTM | Deep network - auto-encoder - neural Turing machine |
| DNN | Deep neural network |
| EBR | Error backpropagation |
| ED | Emergency department |
| EEG-fNIRs | EEG and functional near-infrared spectroscopy |
| EL-SDAE | Ensemble SDAE classifier with local information preservation |
| ERP | Event-related potential |
| ESR | Epileptic Seizure Recognition dataset |
| ETLE | Extra-temporal lobe epilepsy |
| FPR | False prediction rate |
| GAN | Generative adversarial network |
| GFM | Generative flow model |
| GRU | Gated recurrent unit |
| HGD | High gamma dataset |
| HMM | Hidden Markov model |
| IC | Independent component |
| KFs | Polynomial Kalman filters |
| LSTM | Long short-term memory |
| MEG | Magnetoencephalography |
| MLP | Multilayer perceptron |
| MLR | Multilayer logistic regression |
| MMDPN | Multi-view multi-level deep polynomial network |
| MPCNN | Multi-perspective convolutional neural network |
| MTLE | Mesial temporal lobe epilepsy |
| NIP | Neural interface processor |
| NMSE | Normalised mean square error |
| OCNN | Orthogonal convolutional neural network |
| PCANet | Network integrating principal component analysis (PCA) with a deep-learning model |
| R3DCNN | Recurrent 3D convolutional neural network |
| RA | Region aggregation |
| RASS | Richmond agitation-sedation scale |
| RBM | Restricted Boltzmann machine |
| RCNN | Recurrent convolutional neural network |
| RNN | Recurrent neural network |
| RR | Respiratory rate |
| SAE | Stacked auto-encoder |
| SDAE | Stacked denoising auto-encoder |
| SEED | SJTU emotion EEG dataset |
| SNN | Spiking neural network |
| STFT | Short-time Fourier transform |
| SVEB | Supraventricular ectopic beat |
| SVM | Support vector machine |
| SWT | Stationary wavelet transform |
| TCN | Temporal convolutional network |
| TL-CCANet | Three-lead ECGs - canonical correlation analysis and cascaded convolutional network |
| TLE | Temporal lobe epilepsy |
| VAE | Variational auto-encoder |
| VEB | Ventricular ectopic beat |

References

- Mu, R.; Zeng, X. A Review of Deep Learning Research. KSII Trans. Internet Inf. Syst. 2019, 13, 1738–1764.

- Zhang, L.; Jia, J.; Li, Y.; Gao, W.; Wang, M. Deep Learning based Rapid Diagnosis System for Identifying Tomato Nutrition Disorders. KSII Trans. Internet Inf. Syst. 2019, 13, 2012–2027.

- Ganapathy, N.; Swaminathan, R.; Deserno, T.M. Deep learning on 1-D biosignals: A taxonomy-based survey. Yearbook Med. Inf. 2018, 27, 98–109.

- Zhang, D.; Yao, L.; Chen, K.; Wang, S.; Chang, X.; Liu, Y. Making Sense of Spatio-Temporal Preserving Representations for EEG-Based Human Intention Recognition. IEEE Trans. Cybern. 2019, 1–12.

- PubMed. Available online: https://www.ncbi.nlm.nih.gov/pubmed/ (accessed on 31 October 2019).

- Faust, O.; Hagiwara, Y.; Hong, T.J.; Lih, O.S.; Acharya, U.R. Deep learning for healthcare applications based on physiological signals: A review. Comput. Methods Programs Biomed. 2018, 161, 1–13.

- Tobore, I.; Li, J.; Yuhang, L.; Al-Handarish, Y.; Abhishek, K.; Zedong, N.; Lei, W. Deep Learning Intervention for Health Care Challenges: Some Biomedical Domain Considerations. JMIR mHealth uHealth 2019, 7, e11966.

- Baig, M.Z.; Kavakli, M. A Survey on Psycho-Physiological Analysis & Measurement Methods in Multimodal Systems. Multimodal Technol. Interact. 2019, 3, 37.

- Yu, Y.; Chen, X.; Cao, S.; Zhang, X.; Chen, X. Exploration of Chinese Sign Language Recognition Using Wearable Sensors Based on Deep Belief Net. IEEE J. Biomed. Health Inf. 2019.

- Hu, Y.; Wong, Y.; Wei, W.; Du, Y.; Kankanhalli, M.; Geng, W. A novel attention-based hybrid CNN-RNN architecture for sEMG-based gesture recognition. PLoS ONE 2018, 13, e0206049.

- Wei, W.; Dai, Q.; Wong, Y.; Hu, Y.; Kankanhalli, M.; Geng, W. Surface Electromyography-based Gesture Recognition by Multi-view Deep Learning. IEEE Trans. Biomed. Eng. 2019, 66, 2964–2973.

- Cote-Allard, U.; Fall, C.L.; Drouin, A.; Campeau-Lecours, A.; Gosselin, C.; Glette, K.; Laviolette, F.; Gosselin, B. Deep learning for electromyographic hand gesture signal classification using transfer learning. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 760–771.

- Sun, W.; Liu, H.; Tang, R.; Lang, Y.; He, J.; Huang, Q. sEMG-Based Hand-Gesture Classification Using a Generative Flow Model. Sensors 2019, 19, 1952.

- Li, C.; Ren, J.; Huang, H.; Wang, B.; Zhu, Y.; Hu, H. PCA and deep learning based myoelectric grasping control of a prosthetic hand. Biomed. Eng. Online 2018, 17, 107.

- Rehman, M.Z.; Waris, A.; Gilani, S.O.; Jochumsen, M.; Niazi, I.K.; Jamil, M.; Farina, D.; Kamavuako, E.N. Multiday EMG-based classification of hand motions with deep learning techniques. Sensors 2018, 18, 2497.

- Wang, W.; Chen, B.; Xia, P.; Hu, J.; Peng, Y. Sensor Fusion for Myoelectric Control Based on Deep Learning with Recurrent Convolutional Neural Networks. Artif. Organs 2018, 42, E272–E282.

- Xia, P.; Hu, J.; Peng, Y. EMG-based estimation of limb movement using deep learning with recurrent convolutional neural networks. Artif. Organs 2018, 42, E67–E77.

- Olsson, A.E.; Sager, P.; Andersson, E.; Bjorkman, A.; Malesevic, N.; Antfolk, C. Extraction of Multi-Labelled Movement Information from the Raw HD-sEMG Image with Time-Domain Depth. Sci. Rep. 2019, 9, 7244.

- Khowailed, I.A.; Abotabl, A. Neural muscle activation detection: A deep learning approach using surface electromyography. J. Biomech. 2019, 95, 109322.

- Rance, L.; Ding, Z.; McGregor, A.H.; Bull, A.M.J. Deep learning for musculoskeletal force prediction. Ann. Biomed. Eng. 2019, 47, 778–789.

- Dantas, H.; Warren, D.J.; Wendelken, S.M.; Davis, T.S.; Clark, G.A.; Mathews, V.J. Deep Learning Movement Intent Decoders Trained with Dataset Aggregation for Prosthetic Limb Control. IEEE Trans. Biomed. Eng. 2019, 66, 3192–3203.

- Ameri, A.; Akhaee, M.A.; Scheme, E.; Englehart, K. Real-time, simultaneous myoelectric control using a convolutional neural network. PLoS ONE 2018, 13, e0203835.

- Nodera, H.; Osaki, Y.; Yamazaki, H.; Mori, A.; Izumi, Y.; Kaji, R. Deep learning for waveform identification of resting needle electromyography signals. Clin. Neurophysiol. 2019, 130, 617–623.

- Ribeiro, A.L.P.; Paixao, G.M.M.; Gomes, P.R.; Ribeiro, M.H.; Ribeiro, A.H.; Canazart, J.A.; Oliveira, D.M.; Ferreira, M.P.; Lima, E.M.; Moraes, J.L.; et al. Tele-electrocardiography and bigdata: The CODE (Clinical Outcomes in Digital Electrocardiography) study. J. Electrocardiol. 2019, 57, S75–S78.

- Cano-Espinosa, C.; Gonzalez, G.; Washko, G.R.; Cazorla, M.; Estepar, R.S.J. Automated Agatston score computation in non-ECG gated CT scans using deep learning. In Proceedings of the Medical Imaging 2018: Image Processing, Houston, TX, USA, 2 March 2018.

- Chauhan, S.; Vig, L.; Ahmad, S. ECG anomaly class identification using LSTM and error profile modeling. Comput. Biol. Med. 2019, 109, 14–21.

- Xia, Y.; Wulan, N.; Wang, K.; Zhang, H. Detecting atrial fibrillation by deep convolutional neural networks. Comput. Biol. Med. 2018, 93, 84–92.

- Tan, J.H.; Hagiwara, Y.; Pang, W.; Lim, I.; Oh, S.L.; Adam, M.; Tan, R.S.; Chen, M.; Acharya, U.R. Application of stacked convolutional and long short-term memory network for accurate identification of CAD ECG signals. Comput. Biol. Med. 2018, 94, 19–26.

- Smith, S.W.; Walsh, B.; Grauer, K.; Wang, K.; Rapin, J.; Li, J.; Fennell, W.; Taboulet, P. A deep neural network learning algorithm outperforms a conventional algorithm for emergency department electrocardiogram interpretation. J. Electrocardiol. 2019, 52, 88–95.

- Wang, L.; Zhou, X. Detection of congestive heart failure based on LSTM-based deep network via short-term RR intervals. Sensors 2019, 19, 1502.

- Attia, Z.I.; Sugrue, A.; Asirvatham, S.J.; Ackerman, M.J.; Kapa, S.; Friedman, P.A.; Noseworthy, P.A. Noninvasive assessment of dofetilide plasma concentration using a deep learning (neural network) analysis of the surface electrocardiogram: A proof of concept study. PLoS ONE 2018, 13, e0201059.

- Chen, M.; Wang, G.; Xie, P.; Sang, Z.; Lv, T.; Zhang, P.; Yang, H. Region Aggregation Network: Improving Convolutional Neural Network for ECG Characteristic Detection. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018.

- Wang, F.; Ma, Q.; Liu, W.; Chang, S.; Wang, H.; He, J.; Huang, Q. A novel ECG signal compression method using spindle convolutional auto-encoder. Comput. Methods Programs Biomed. 2019, 175, 139–150.

- Wang, E.K.; Xi, I.; Sun, R.; Wang, F.; Pan, L.; Cheng, C.; Dimitrakopoulou-Strauss, A.; Zhe, N.; Li, Y. A new deep learning model for assisted diagnosis on electrocardiogram. Math. Biosci. Eng. MBE 2019, 16, 2481–2491.

- Saadatnejad, S.; Oveisi, M.; Hashemi, M. LSTM-Based ECG Classification for Continuous Monitoring on Personal Wearable Devices. IEEE J. Biomed. Health Inf. 2019, 24, 515–523.

- Brito, C.; Machado, A.; Sousa, A. Electrocardiogram Beat-Classification Based on a ResNet Network. Stud. Health Technol. Inform. 2019, 264, 55–59.

- Xu, S.S.; Mak, M.W.; Cheung, C.C. Towards end-to-end ECG classification with raw signal extraction and deep neural networks. IEEE J. Biomed. Health Inf. 2018, 23, 1574–1584.

- Yildirim, Ö. A novel wavelet sequence based on deep bidirectional LSTM network model for ECG signal classification. Comput. Biol. Med. 2018, 96, 189–202.

- Zhao, W.; Hu, J.; Jia, D.; Wang, H.; Li, Z.; Yan, C.; You, T. Deep Learning Based Patient-Specific Classification of Arrhythmia on ECG signal. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); IEEE: Berlin, Germany, 2019.

- Niu, J.; Tang, Y.; Sun, Z.; Zhang, W. Inter-Patient ECG Classification with Symbolic Representations and Multi-Perspective Convolutional Neural Networks. IEEE J. Biomed. Health Inf. 2019.

- Attia, Z.I.; Kapa, S.; Yao, X.; Lopez-Jimenez, F.; Mohan, T.L.; Pellikka, P.A.; Carter, R.E.; Shah, N.D.; Friedman, P.A.; Noseworthy, P.A. Prospective validation of a deep learning electrocardiogram algorithm for the detection of left ventricular systolic dysfunction. J. Cardiovasc. Electrophysiol. 2019, 30, 668–674.

- Yang, W.; Si, Y.; Wang, D.; Zhang, G. A Novel Approach for Multi-Lead ECG Classification Using DL-CCANet and TL-CCANet. Sensors 2019, 19, 3214.

- Yoon, D.; Lim, H.; Jung, K.; Kim, T.; Lee, S. Deep Learning-Based Electrocardiogram Signal Noise Detection and Screening Model. Healthcare Inf. Res. 2019, 25, 201–211.

- Ansari, S.; Gryak, J.; Najarian, K. Noise Detection in Electrocardiography Signal for Robust Heart Rate Variability Analysis: A Deep Learning Approach. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018.

- Jeon, E.; Jung, B.; Nam, Y.; Lee, H. Classification of Premature Ventricular Contraction using Error Back-Propagation. KSII Trans. Internet Inf. Syst. 2018, 12, 988–1001.

- Byeon, Y.; Pan, S.; Kwak, K. Intelligent deep models based on scalograms of electrocardiogram signals for biometrics. Sensors 2019, 19, 935.

- Mathews, S.M.; Kambhamettu, C.; Barner, K.E. A novel application of deep learning for single-lead ECG classification. Comput. Biol. Med. 2018, 99, 53–62.

- Picon, A.; Irusta, U.; Alvarez-Gila, A.; Aramendi, E.; Alonso-Atienza, F.; Figuera, C.; Ayala, U.; Garrote, E.; Wik, L.; Kramer-Johansen, J.; et al. Mixed convolutional and long short-term memory network for the detection of lethal ventricular arrhythmia. PLoS ONE 2019, 14, e0216756.

- Yildirim, O.; Baloglu, U.B.; Tan, R.; Ciaccio, E.J.; Acharya, U.R. A new approach for arrhythmia classification using deep coded features and LSTM networks. Comput. Methods Programs Biomed. 2019, 176, 121–133.

- Oh, S.L.; Ng, E.Y.K.; Tan, R.S.; Acharya, U.R. Automated diagnosis of arrhythmia using combination of CNN and LSTM techniques with variable length heart beats. Comput. Biol. Med. 2018, 102, 278–287.

- He, Z.; Zhang, X.; Cao, Y.; Liu, Z.; Zhang, B.; Wang, X. LiteNet: Lightweight neural network for detecting arrhythmias at resource-constrained mobile devices. Sensors 2018, 18, 1229.

- Erdenebayar, U.; Kim, H.; Park, J.; Kang, D.; Lee, K. Automatic Prediction of Atrial Fibrillation Based on Convolutional Neural Network Using a Short-term Normal Electrocardiogram Signal. J. Korean Med. Sci. 2019, 34.

- Oh, S.L.; Ng, E.Y.K.; Tan, R.S.; Acharya, U.R. Automated beat-wise arrhythmia diagnosis using modified U-net on extended electrocardiographic recordings with heterogeneous arrhythmia types. Comput. Biol. Med. 2019, 105, 92–101.

- Savalia, S.; Emamian, V. Cardiac arrhythmia classification by multi-layer perceptron and convolution neural networks. Bioengineering 2018, 5, 35.

- Yıldırım, Ö.; Plawiak, P.; Tan, R.S.; Acharya, U.R. Arrhythmia detection using deep convolutional neural network with long duration ECG signals. Comput. Biol. Med. 2018, 102, 411–420.

- Hannun, A.Y.; Rajpurkar, P.; Haghpanahi, M.; Tison, G.H.; Bourn, C.; Turakhia, M.P.; Ng, A.Y. Cardiologist-level arrhythmia detection and classification in ambulatory electrocardiograms using a deep neural network. Nat. Med. 2019, 25, 65–69.

- Yildirim, O.; Talo, M.; Ay, B.; Baloglu, U.B.; Aydin, G.; Acharya, U.R. Automated detection of diabetic subject using pre-trained 2D-CNN models with frequency spectrum images extracted from heart rate signals. Comput. Biol. Med. 2019, 113, 103387.

- Xiao, R.; Xu, Y.; Pelter, M.M.; Mortara, D.W.; Hu, X. A deep learning approach to examine ischemic ST changes in ambulatory ECG recordings. AMIA Summits Transl. Sci. Proc. 2018, 2018, 256.

- Ji, Y.; Zhang, S.; Xiao, W. Electrocardiogram Classification Based on Faster Regions with Convolutional Neural Network. Sensors 2019, 19, 2558.

- Liu, M.; Kim, Y. Classification of Heart Diseases Based On ECG Signals Using Long Short-Term Memory. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018.

- Sbrollini, A.; Jongh, M.C.; Haar, C.C.T.; Treskes, R.W.; Man, S.; Burattini, L.; Swenne, C.A. Serial electrocardiography to detect newly emerging or aggravating cardiac pathology: A deep-learning approach. Biomed. Eng. Online 2019, 18, 15.

- Seo, W.; Kim, N.; Lee, C.; Park, S. Deep ECG-Respiration Network (DeepER Net) for Recognizing Mental Stress. Sensors 2019, 19, 3021.

- Nguyen, M.T.; Nguyen, B.V.; Kim, K. Deep Feature Learning for Sudden Cardiac Arrest Detection in Automated External Defibrillators. Sci. Rep. 2018, 8, 17196.

- Wang, L.; Lin, Y.; Wang, J. A RR interval based automated apnea detection approach using residual network. Comput. Methods Programs Biomed. 2019, 176, 93–104.

- Dey, D.; Chaudhuri, S.; Munshi, S. Obstructive sleep apnoea detection using convolutional neural network based deep learning framework. Biomed. Eng. Lett. 2018, 8, 95–100.

- Kido, K.; Tamura, T.; Ono, N.; Altaf-Ul-Amin, M.; Sekine, M.; Kanaya, S.; Huang, M. A Novel CNN-Based Framework for Classification of Signal Quality and Sleep Position from a Capacitive ECG Measurement. Sensors 2019, 19, 1731.

- Erdenebayar, U.; Kim, Y.J.; Park, J.; Joo, E.; Lee, K. Deep learning approaches for automatic detection of sleep apnea events from an electrocardiogram. Comput. Methods Programs Biomed. 2019, 180, 105001.

- Wang, T.; Lu, C.; Shen, G.; Hong, F. Sleep apnea detection from a single-lead ECG signal with automatic feature-extraction through a modified LeNet-5 convolutional neural network. PeerJ 2019, 7.

- Hwang, B.; You, J.; Vaessen, T.; Myin-Germeys, I.; Park, C.; Zhang, B.T. Deep ECGNet: An optimal deep learning framework for monitoring mental stress using ultra short-term ECG signals. Telemed. e-Health 2018, 24, 753–772.

- Attia, Z.I.; Friedman, P.A.; Noseworthy, P.A.; Ladewig, D.J.; Satam, G.; Pellikka, P.A.; Munger, T.M.; Asirvatham, S.J.; Scott, C.G.; Carter, R.E.; et al. Age and sex estimation using artificial intelligence from standard 12-lead ECGs. Circ. Arrhythmia Electrophysiol. 2019, 12.

- Toraman, S.; Tuncer, S.A.; Balgetir, F. Is it possible to detect cerebral dominance via EEG signals by using deep learning? Med. Hypotheses 2019, 131.

- Doborjeh, Z.G.; Kasabov, N.; Doborjeh, M.G.; Sumich, A. Modelling peri-perceptual brain processes in a deep learning spiking neural network architecture. Sci. Rep. 2018, 8.

- Kshirsagar, G.B.; Londhe, N.D. Improving Performance of Devanagari Script Input-Based P300 Speller Using Deep Learning. IEEE Trans. Biomed. Eng. 2018, 66, 2992–3005.

- Roy, S.; Kiral-Kornek, I.; Harrer, S. Deep learning enabled automatic abnormal EEG identification. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018.

- Lei, B.; Liu, X.; Liang, S.; Hang, W.; Wang, Q.; Choi, K.; Qin, J. Walking imagery evaluation in brain computer interfaces via a multi-view multi-level deep polynomial network. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 497–506.

- Li, J.; Yu, Z.L.; Gu, Z.; Wu, W.; Li, Y.; Jin, L. A hybrid network for ERP detection and analysis based on restricted Boltzmann machine. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 563–572.

- Vahid, A.; Bluschke, A.; Roessner, V.; Stober, S.; Beste, C. Deep Learning Based on Event-Related EEG Differentiates Children with ADHD from Healthy Controls. J. Clin. Med. 2019, 8, 1055.

- Tjepkema-Cloostermans, M.C.; Carvalho, R.C.V.; Putten, M.J.A.M. Deep learning for detection of focal epileptiform discharges from scalp EEG recordings. Clin. Neurophysiol. 2018, 129, 2191–2196.

- Yang, S.; Yin, Z.; Wang, Y.; Zhang, W.; Wang, Y.; Zhang, J. Assessing cognitive mental workload via EEG signals and an ensemble deep learning classifier based on denoising autoencoders. Comput. Biol. Med. 2019, 109, 159–170.

- Ravindran, A.S.; Mobiny, A.; Cruz-Garza, J.G.; Paek, A.; Kopteva, A.; Vidal, J.L.C. Assaying neural activity of children during video game play in public spaces: A deep learning approach. J. Neural Eng. 2019, 16, 12.

- Zhao, D.; Tang, F.; Si, B.; Feng, X. Learning joint space–time–frequency features for EEG decoding on small labeled data. Neural Networks 2019, 114, 67–77.

- Zhang, P.; Wang, X.; Zhang, W.; Chen, J. Learning Spatial–Spectral–Temporal EEG Features With Recurrent 3D Convolutional Neural Networks for Cross-Task Mental Workload Assessment. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 27, 31–42.

- Sakhavi, S.; Guan, C.; Yan, S. Learning temporal information for brain-computer interface using convolutional neural networks. IEEE Trans. Neural Networks Learn. Syst. 2018, 29, 5619–5629.

- Ha, K.W.; Jeong, J.W. Motor Imagery EEG Classification Using Capsule Networks. Sensors 2019, 19, 2854.

- Majidov, I.; Whangbo, T. Efficient Classification of Motor Imagery Electroencephalography Signals Using Deep Learning Methods. Sensors 2019, 19, 1736.

- Li, Y.; Zhang, X.R.; Zhang, B.; Lei, M.Y.; Cui, W.G.; Guo, Y.Z. A Channel-Projection Mixed-Scale Convolutional Neural Network for Motor Imagery EEG Decoding. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1170–1180.

- Abbas, W.; Khan, N.A. DeepMI: Deep Learning for Multiclass Motor Imagery Classification. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018.

- Tayeb, Z.; Fedjaev, J.; Ghaboosi, N.; Richter, C.; Everding, L.; Qu, X.; Wu, Y.; Cheng, G.; Conradt, J. Validating deep neural networks for online decoding of motor imagery movements from EEG signals. Sensors 2019, 19, 210.

- Lee, H.C.; Ryu, H.G.; Chung, E.J.; Jung, C.W. Prediction of bispectral index during target-controlled infusion of propofol and remifentanil. Anesthesiology 2018, 128, 492–501.

- Zhang, P.; Wang, X.; Chen, J.; You, W.; Zhang, W. Spectral and Temporal Feature Learning With Two-Stream Neural Networks for Mental Workload Assessment. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1149–1159.

- Zhang, X.; Wu, D. On the Vulnerability of CNN Classifiers in EEG-Based BCIs. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 814–825.

- Ahmedt-Aristizabal, D.; Fookes, C.; Denman, S.; Nguyen, K.; Sridharan, S.; Dionisio, S. Aberrant epileptic seizure identification: A computer vision perspective. Seizure 2019, 65, 65–71.

- Mumtaz, W.; Qayyum, A. A deep learning framework for automatic diagnosis of unipolar depression. Int. J. Med. Inform. 2019, 132.

- Kim, S.; Kim, J.; Chun, H.W. Wave2Vec: Vectorizing Electroencephalography Bio-Signal for Prediction of Brain Disease. Int. J. Environ. Res. Public Health 2018, 15, 1750.

- Golmohammadi, M.; Torbati, A.H.H.N.; Diego, S.L.; Obeid, I.; Picone, J. Automatic analysis of EEGs using big data and hybrid deep learning architectures. Front. Hum. Neurosci. 2019, 13, 76.

- Zhou, Y.; Xu, T.; Li, S.; Li, S. Confusion State Induction and EEG-based Detection in Learning. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018.

- Acharya, U.R.; Oh, S.L.; Hagiwara, Y.; Tan, J.H.; Adeli, H.; Subha, D.P. Automated EEG-based screening of depression using deep convolutional neural network. Comput. Methods Programs Biomed. 2018, 161, 103–113.

- Bi, X.; Wang, H. Early Alzheimer’s disease diagnosis based on EEG spectral images using deep learning. Neural Networks 2019, 114, 119–135.

- Wei, X.; Zhou, L.; Zhang, Z.; Chen, Z.; Zhou, Y. Early prediction of epileptic seizures using a long-term recurrent convolutional network. J. Neurosci. Methods 2019, 327, 108395.

- Kim, D.; Kim, K. Detection of Early Stage Alzheimer’s Disease using EEG Relative Power with Deep Neural Network. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018.

- Dai, M.; Zheng, D.; Na, R.; Wang, S.; Zhang, S. EEG Classification of Motor Imagery Using a Novel Deep Learning Framework. Sensors 2019, 19, 551.

- Tian, X.; Deng, Z.; Ying, W.; Choi, K.S.; Wu, D.; Qin, B.; Wan, J.; Shen, H.; Wang, S. Deep multi-view feature learning for EEG-based epileptic seizure detection. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1962–1972.

- Jonas, S.; Rossetti, A.O.; Oddo, M.; Jenni, S.; Favaro, P.; Zubler, F. EEG-based outcome prediction after cardiac arrest with convolutional neural networks: Performance and visualization of discriminative features. Hum. Brain Mapp. 2019, 40, 4606–4617.

- Türk, Ö.; Özerdem, M.S. Epilepsy Detection by Using Scalogram Based Convolutional Neural Network from EEG Signals. Brain Sci. 2019, 9, 115.

- Hao, Y.; Khoo, H.M.; Ellenrieder, N.; Zazubovits, N.; Gotman, J. DeepIED: An epileptic discharge detector for EEG-fMRI based on deep learning. NeuroImage Clin. 2018, 17, 962–975.

- San-Segundo, R.; Gil-Martin, M.; D’Haro-Enriquez, L.F.; Pardo, J.M. Classification of epileptic EEG recordings using signal transforms and convolutional neural networks. Comput. Biol. Med. 2019, 109, 148–158.

- Korshunova, I.; Kindermans, P.J.; Degrave, J.; Verhoeven, T.; Brinkmann, B.H.; Dambre, J. Towards improved design and evaluation of epileptic seizure predictors. IEEE Trans. Biomed. Eng. 2018, 65, 502–510.

- Daoud, H.; Bayoumi, M. Efficient Epileptic Seizure Prediction based on Deep Learning. IEEE Trans. Biomed. Circ. Syst. 2019, 13, 804–813.

- Kiral-Kornek, I.; Roy, S.; Nurse, E.; Mashford, B.; Karoly, P.; Carroll, T.; Payne, D.; Saha, S.; Baldassano, S.; O’Brien, T.; et al. Epileptic seizure prediction using big data and deep learning: Toward a mobile system. EBioMedicine 2018, 27, 103–111.

- Hussein, R.; Palangi, H.; Ward, R.K.; Wang, Z.J. Optimized deep neural network architecture for robust detection of epileptic seizures using EEG signals. Clin. Neurophysiol. 2019, 130, 25–37.

- Tsiouris, K.M.; Pezoulas, V.C.; Zervakis, M.; Konitsiotis, S.; Koutsouris, D.D.; Fotiadis, D.I. A Long Short-Term Memory deep learning network for the prediction of epileptic seizures using EEG signals. Comput. Biol. Med. 2018, 99, 24–37.

- Phang, C.R.; Norman, F.; Hussain, H.; Ting, C.M.; Ombao, H. A Multi-Domain Connectome Convolutional Neural Network for Identifying Schizophrenia from EEG Connectivity Patterns. IEEE J. Biomed. Health Inf. 2019.

- Gleichgerrcht, E.; Munsell, B.; Bhatia, S.; Vandergrift III, W.A.; Rorden, C.; McDonald, C.; Edwards, J.; Kuzniecky, R.; Bonilha, L. Deep learning applied to whole-brain connectome to determine seizure control after epilepsy surgery. Epilepsia 2018, 59, 1643–1654.

- Sirpal, P.; Kassab, A.; Pouliot, P.; Nguyen, D.K.; Lesage, F. fNIRS improves seizure detection in multimodal EEG-fNIRS recordings. J. Biomed. Opt. 2019, 24, 051408.

- Emami, A.; Kunii, N.; Matsuo, T.; Shinozaki, T.; Kawai, K.; Takahashi, H. Seizure detection by convolutional neural network-based analysis of scalp electroencephalography plot images. NeuroImage Clin. 2019, 22, 101684.

- Acharya, U.R.; Oh, S.L.; Hagiwara, Y.; Tan, J.H.; Adeli, H. Deep convolutional neural network for the automated detection and diagnosis of seizure using EEG signals. Comput. Biol. Med. 2018, 100, 270–278.

- Wei, X.; Zhou, L.; Chen, Z.; Zhang, L.; Zhou, Y. Automatic seizure detection using three-dimensional CNN based on multi-channel EEG. BMC Med. Inf. Decis. Making 2018, 18, 111.

- Yuan, Y.; Xun, G.; Jia, K.; Zhang, A. A Multi-View Deep Learning Framework for EEG Seizure Detection. IEEE J. Biomed. Health Inf. 2018, 23, 83–94.

- Ahmedt-Aristizabal, D.; Fookes, C.; Nguyen, K.; Sridharan, S. Deep classification of epileptic signals. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018.

- Jang, H.J.; Cho, K.O. Dual deep neural network-based classifiers to detect experimental seizures. Korean J. Physiol. Pharmacol. 2019, 23, 131–139.

- Sun, H.; Kimchi, E.; Akeju, O.; Nagaraj, S.B.; McClain, L.M.; Zhou, D.W.; Boyle, E.; Zheng, W.L.; Ge, W.; Westover, M.B. Automated tracking of level of consciousness and delirium in critical illness using deep learning. NPJ Digital Med. 2019, 2, 1–8.

- Kwon, Y.H.; Shin, S.B.; Kim, S.D. Electroencephalography based fusion two-dimensional (2D)-convolution neural networks (CNN) model for emotion recognition system. Sensors 2018, 18, 1383.

- Chao, H.; Dong, L.; Liu, Y.; Lu, B. Emotion Recognition from Multiband EEG Signals Using CapsNet. Sensors 2019, 19, 2212.

- Zhang, T.; Zheng, W.; Cui, Z.; Zong, Y.; Li, Y. Spatial–temporal recurrent neural network for emotion recognition. IEEE Trans. Cybern. 2018, 49, 839–847.

- Bălan, O.; Moise, G.; Moldoveanu, A.; Leordeanu, M.; Moldoveanu, F. Fear Level Classification Based on Emotional Dimensions and Machine Learning Techniques. Sensors 2019, 19, 1738.

- Zheng, W.L.; Liu, W.; Lu, Y.; Lu, B.L.; Cichocki, A. EmotionMeter: A multimodal framework for recognizing human emotions. IEEE Trans. Cybern. 2018, 49, 1110–1122.

- Chao, H.; Zhi, H.; Dong, L.; Liu, Y. Recognition of emotions using multichannel EEG data and DBN-GC-based ensemble deep learning framework. Comput. Intell. Neurosci. 2018, 2018, 9750904.

- Yohanandan, S.A.C.; Kiral-Kornek, I.; Tang, J.; Mashford, B.S.; Asif, U.; Harrer, S. A Robust Low-Cost EEG Motor Imagery-Based Brain-Computer Interface. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018.

- Choi, E.J.; Kim, D.K. Arousal and Valence Classification Model Based on Long Short-Term Memory and DEAP Data for Mental Healthcare Management. Healthc. Inform. Res. 2018, 24, 309–316.

- Chambon, S.; Thorey, V.; Arnal, P.J.; Mignot, E.; Gramfort, A. DOSED: A deep learning approach to detect multiple sleep micro-events in EEG signal. J. Neurosci. Methods 2019, 321, 64–78.

- Ma, Y.; Chen, B.; Li, R.; Wang, C.; Wang, J.; She, Q.; Luo, Z.; Zhang, Y. Driving fatigue detection from EEG using a modified PCANet method. Comput. Intell. Neurosci. 2019, 2019, 9.

- Van Leeuwen, K.G.; Sun, H.; Tabaeizadeh, M.; Struck, A.F.; Van Putten, M.J.A.M.; Westover, M.B. Detecting abnormal electroencephalograms using deep convolutional networks. Clin. Neurophysiol. 2019, 130, 77–84.

- Kulkarni, P.M.; Xiao, Z.; Robinson, E.J.; Jami, A.S.; Zhang, J.; Zhou, H.; Henin, S.E.; Liu, A.A.; Osorio, R.S.; Wang, J.; et al. A deep learning approach for real-time detection of sleep spindles. J. Neural Eng. 2019, 16, 036004.

- Bresch, E.; Grossekathofer, U.; Garcia-Molina, G. Recurrent deep neural networks for real-time sleep stage classification from single channel EEG. Front. Comput. Neurosci. 2018, 12, 85.

- Phan, H.; Andreotti, F.; Cooray, N.; Chen, O.Y.; Vos, M.D. DNN filter bank improves 1-max pooling CNN for single-channel EEG automatic sleep stage classification. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018.

- Phan, H.; Andreotti, F.; Cooray, N.; Chen, O.Y.; Vos, M.D. Automatic sleep stage classification using single-channel EEG: Learning sequential features with attention-based recurrent neural networks. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018.

- Zhang, J.; Wu, Y. Complex-valued unsupervised convolutional neural networks for sleep stage classification. Comput. Methods Programs Biomed. 2018, 164, 181–191.

- Mousavi, S.; Afghah, F.; Acharya, U.R. SleepEEGNet: Automated sleep stage scoring with sequence to sequence deep learning approach. PLoS ONE 2019, 14, e0216456.

- Mousavi, Z.; Rezaii, T.Y.; Sheykhivand, S.; Farzamnia, A.; Razavi, S.N. Deep convolutional neural network for classification of sleep stages from single-channel EEG signals. J. Neurosci. Methods 2019, 324, 108312.

- Zhang, J.; Yao, R.; Ge, W.; Gao, J. Orthogonal convolutional neural networks for automatic sleep stage classification based on single-channel EEG. Comput. Methods Programs Biomed. 2019, 183, 105089.

- Malafeev, A.; Laptev, D.; Bauer, S.; Omlin, X.; Wierzbicka, A.; Wichniak, A.; Jernajczyk, W.; Riener, R.; Buhmann, J.; Achermann, P. Automatic human sleep stage scoring using deep neural networks. Front. Neurosci. 2018, 12, 781.

- Goh, S.K.; Abbass, H.A.; Tan, K.C.; Al-Mamun, A.; Thakor, N.; Bezerianos, A.; Li, J. Spatio–Spectral Representation Learning for Electroencephalographic Gait-Pattern Classification. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 1858–1867.

- Ma, X.; Qiu, S.; Du, C.; Xing, J.; He, H. Improving EEG-Based Motor Imagery Classification via Spatial and Temporal Recurrent Neural Networks. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018.

- Villalba-Diez, J.; Zheng, X.; Schmidt, D.; Molina, M. Characterization of Industry 4.0 Lean Management Problem-Solving Behavioral Patterns Using EEG Sensors and Deep Learning. Sensors 2019, 19, 2841.

- Ruffini, G.; Ibanez, D.; Castellano, M.; Dubreuil-Vall, L.; Soria-Frisch, A.; Postuma, R.; Gagnon, J.F.; Montplaisir, J. Deep learning with EEG spectrograms in rapid eye movement behavior disorder. Front. Neurol. 2019, 10, 1–9.

- Van Putten, M.J.A.M.; Olbrich, S.; Arns, M. Predicting sex from brain rhythms with deep learning. Sci. Rep. 2018, 8, 3069.

- Boloukian, B.; Safi-Esfahani, F. Recognition of words from brain-generated signals of speech-impaired people: Application of autoencoders as a neural Turing machine controller in deep neural networks. Neural Netw. 2019, 121, 186–207.

- Kostas, D.; Pang, E.W.; Rudzicz, F. Machine learning for MEG during speech tasks. Sci. Rep. 2019, 9, 1609.

- Lee, W.; Kim, Y. Interactive sleep stage labelling tool for diagnosing sleep disorder using deep learning. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018.

- Sokolovsky, M.; Guerrero, F.; Paisarnsrisomsuk, S.; Ruiz, C.; Alvarez, S.A. Deep learning for automated feature discovery and classification of sleep stages. IEEE/ACM Trans. Comput. Biol. Bioinf. 2019.

- Chambon, S.; Galtier, M.N.; Arnal, P.J.; Wainrib, G.; Gramfort, A. A deep learning architecture for temporal sleep stage classification using multivariate and multimodal time series. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 758–769.

- Andreotti, F.; Phan, H.; Cooray, N.; Lo, C.; Hu, M.T.M.; Vos, M.D. Multichannel sleep stage classification and transfer learning using convolutional neural networks. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018.

- Yildirim, O.; Baloglu, U.B.; Acharya, U.R. A deep learning model for automated sleep stages classification using PSG signals. Int. J. Environ. Res. Publ. Health 2019, 16, 599.

- Croce, P.; Zappasodi, F.; Marzetti, L.; Merla, A.; Pizzella, V.; Chiarelli, A.M. Deep Convolutional Neural Networks for feature-less automatic classification of Independent Components in multi-channel electrophysiological brain recordings. IEEE Trans. Biomed. Eng. 2018, 66, 2372–2380.

- Goodfellow, I.; Bengio, Y.; Courville, A. Practical Methodology. In Deep Learning; MIT Press: Cambridge, MA, USA, 2016; pp. 416–437.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).