Shadow Detection in Still Road Images Using Chrominance Properties of Shadows and Spectral Power Distribution of the Illumination

Abstract

1. Introduction

2. Physics Basis: Reflection Model and SPD of the Illumination

2.1. Reflection Model

2.2. SPD of the Illumination in Outdoor Scenes

2.2.1. SPD of Skylight

2.2.2. SPD of Sunlight

3. New Shadow Features

4. Shadow Edge Detection Method

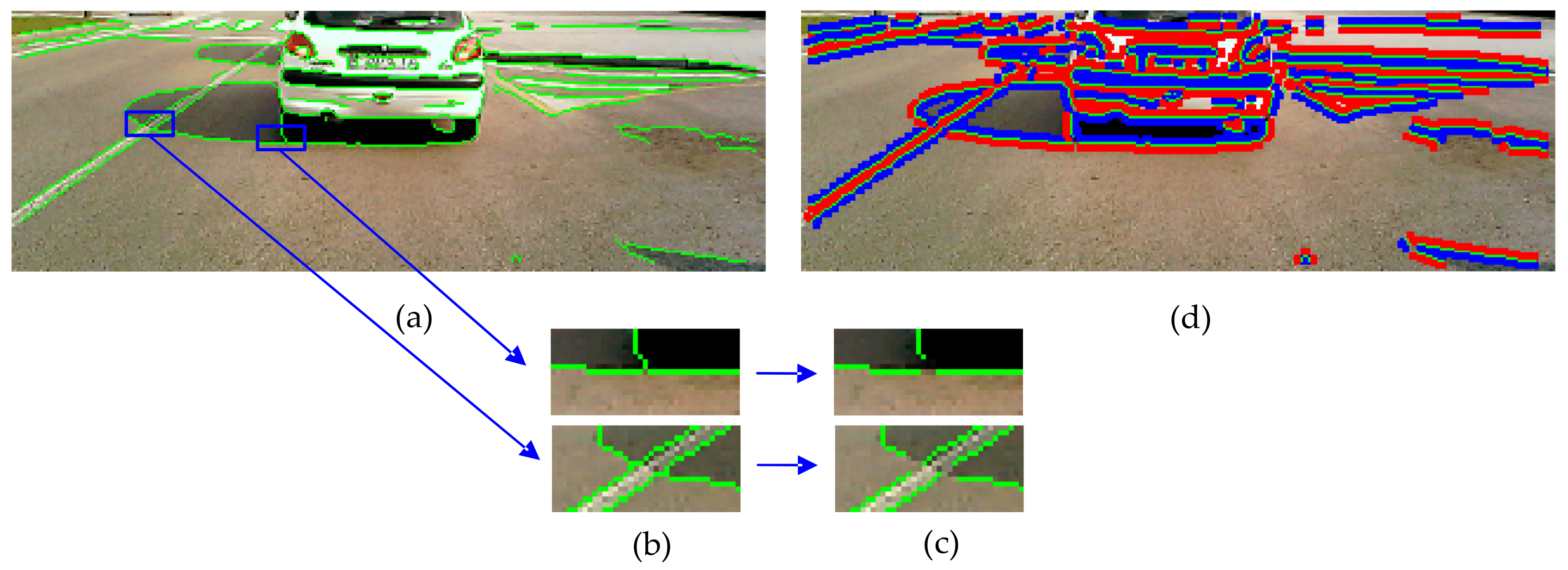

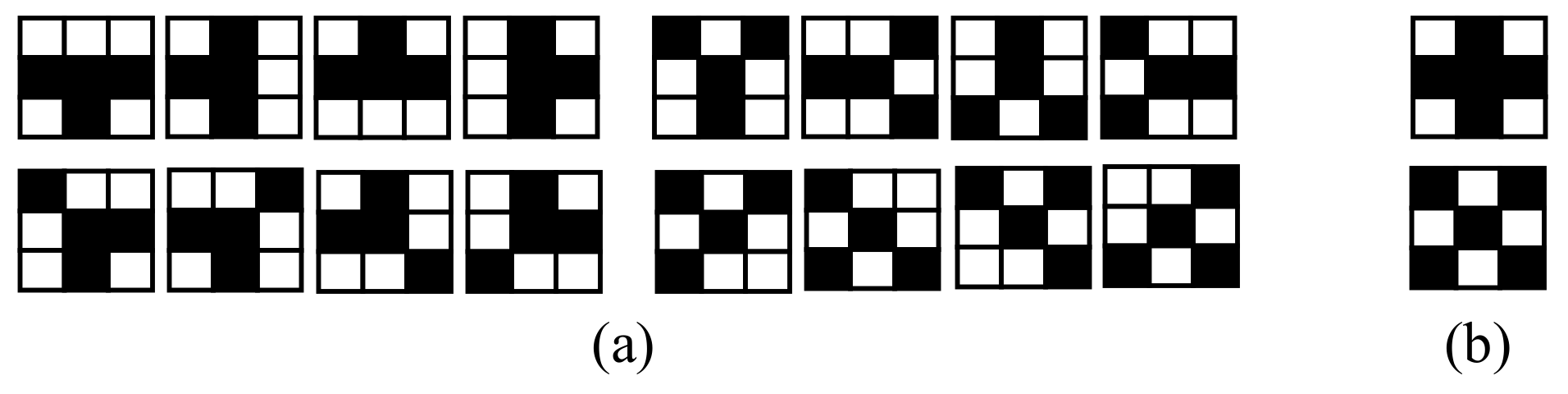

4.1. Extraction of the Image Edges

4.2. Extraction of the Bright and Dark Regions across the Edges

4.3. Extraction of Strong Edges

4.4. Shadow Edge Classification

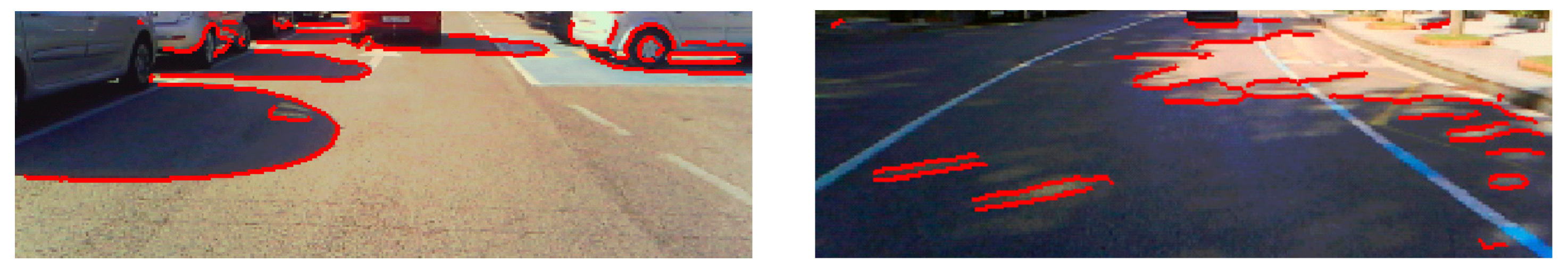

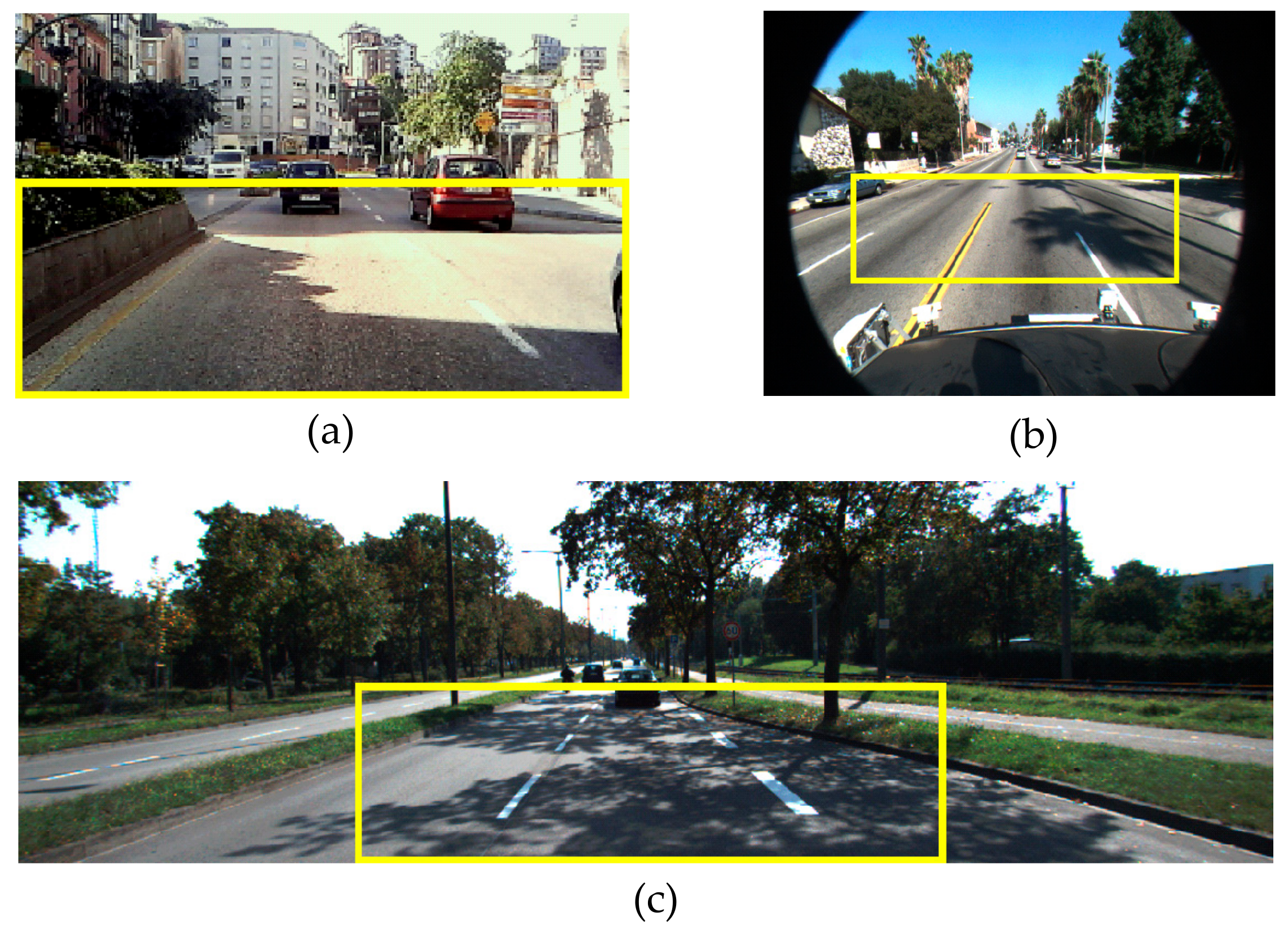

5. Experimental Results

5.1. Individual Performance of the Proposed Shadow Properties

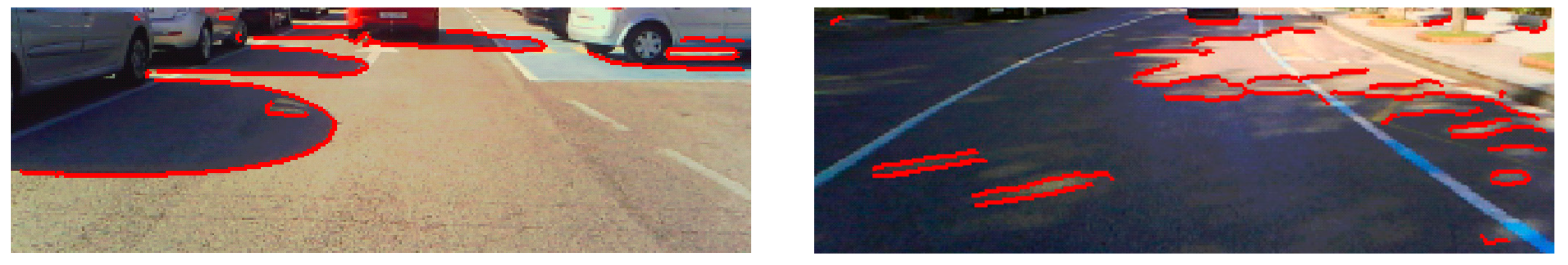

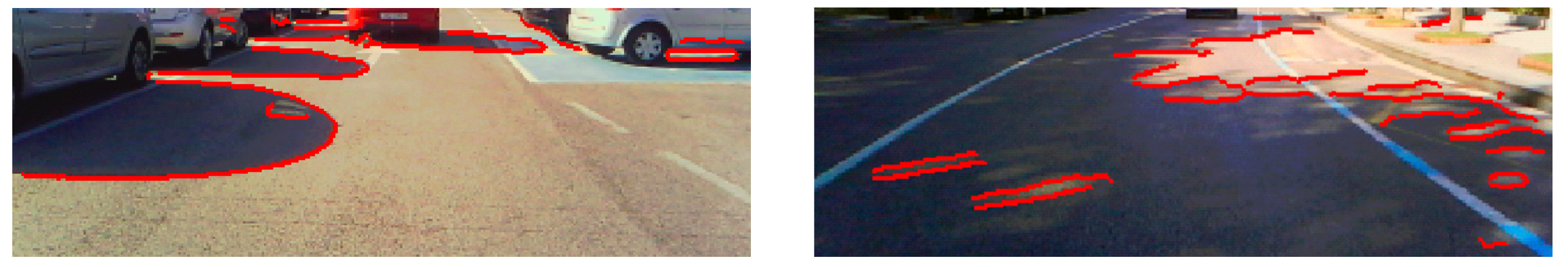

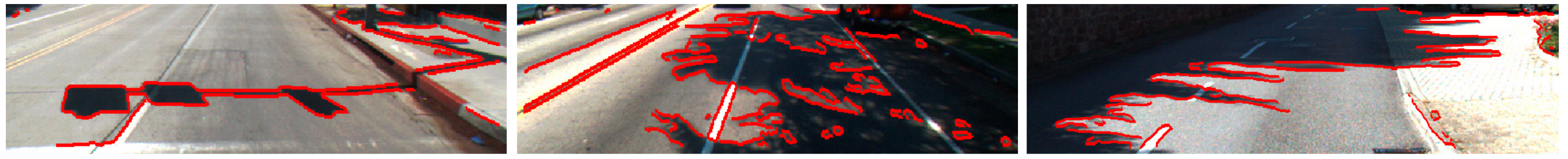

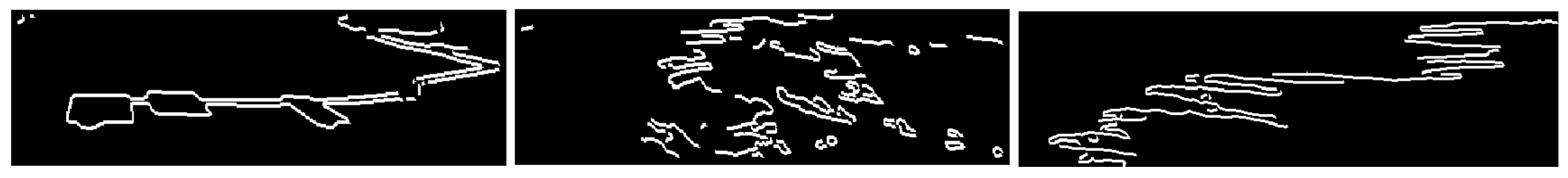

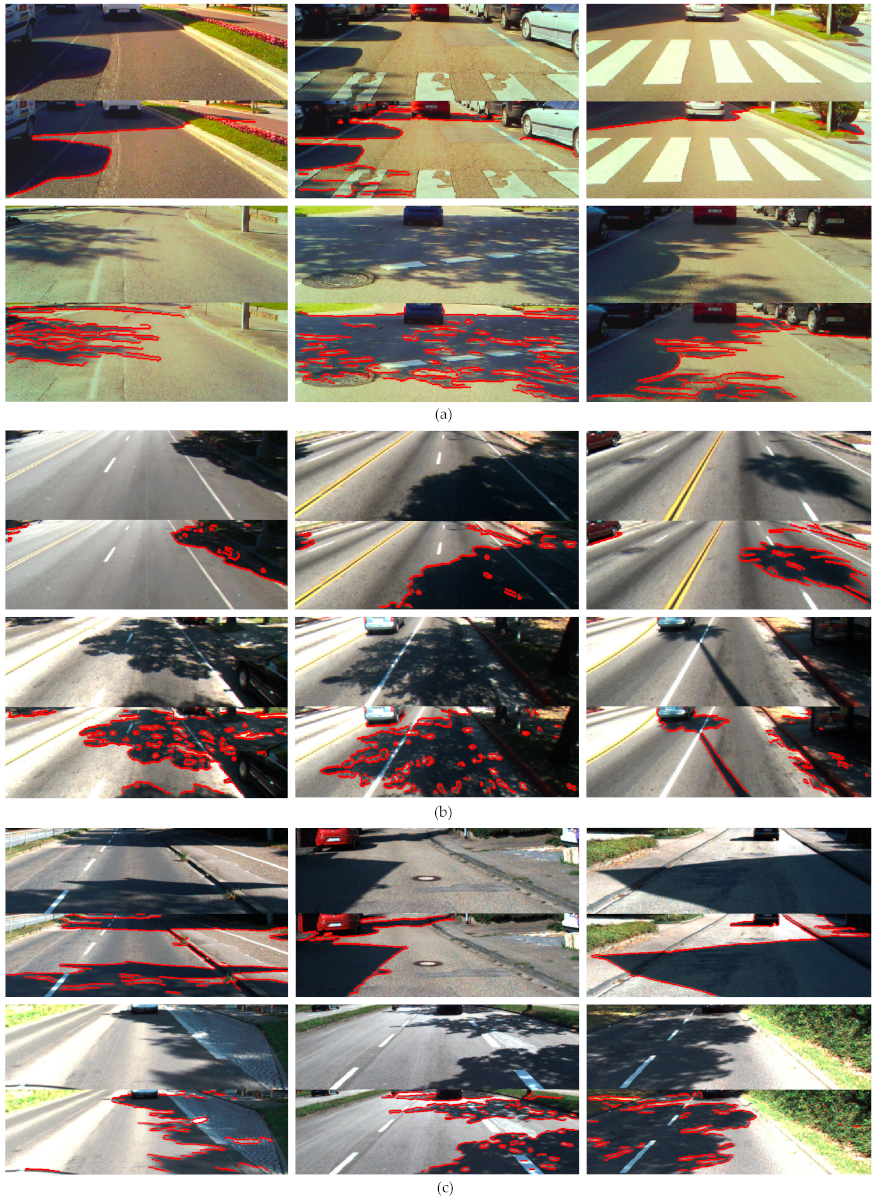

5.1.1. Qualitative Results

5.1.2. Quantitative Results

5.2. Performance of the Proposed Shadow Edge Detection Method

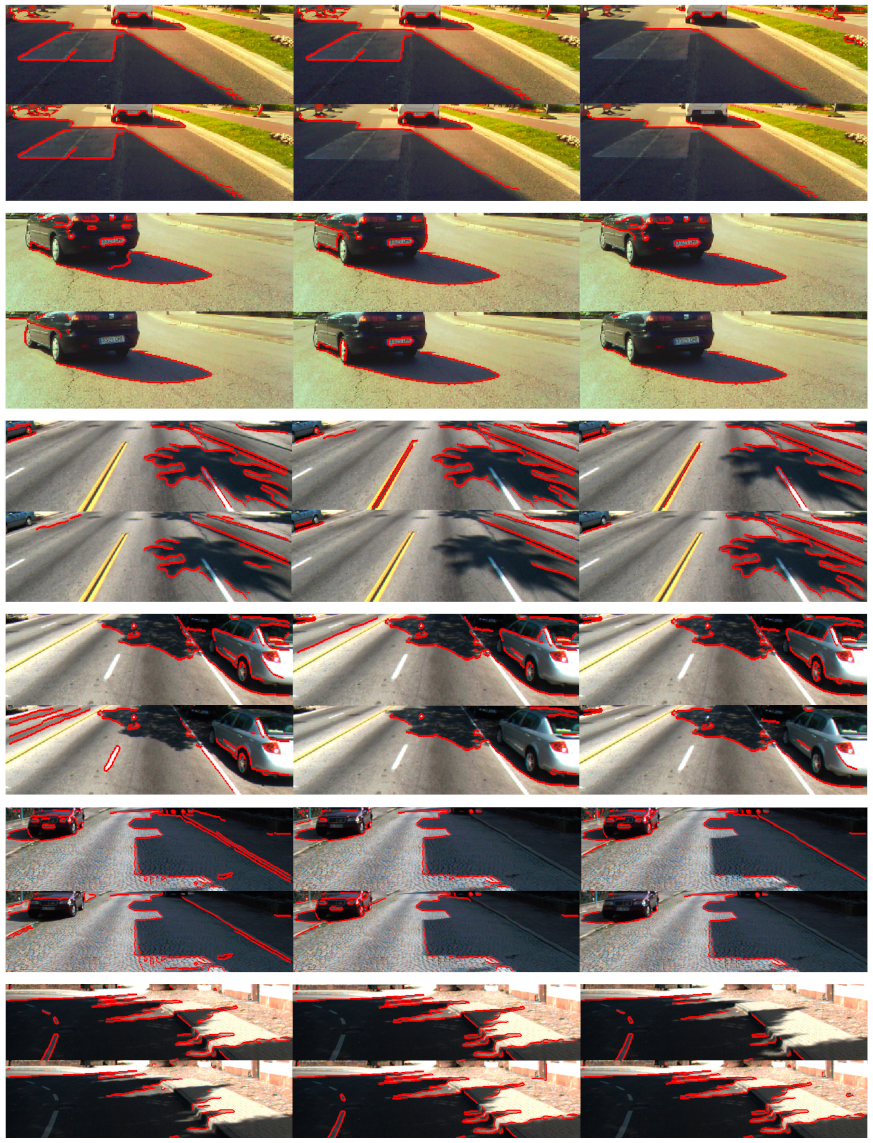

5.2.1. Qualitative Results

5.2.2. Quantitative Results

5.2.3. Results on Images without Shadows

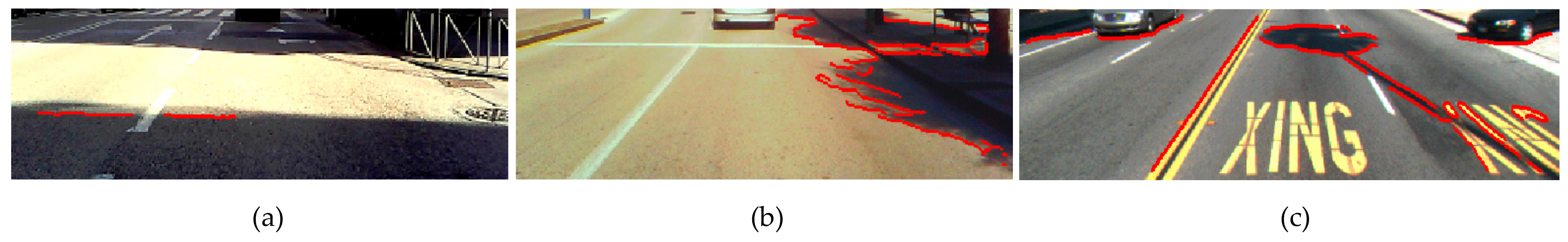

5.2.4. Limitations

5.2.5. Comparison with Previous Works

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


Datasets: Caltech (100 images), KITTI (100 images), our dataset (100 images), and all datasets combined (300 images). Columns give P, R, and F-m for each dataset.

| | Caltech P | Caltech R | Caltech F-m | KITTI P | KITTI R | KITTI F-m | Ours P | Ours R | Ours F-m | All P | All R | All F-m |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Property 1 | 0.964 | 0.791 | 0.869 | 0.960 | 0.727 | 0.827 | 0.913 | 0.672 | 0.774 | 0.950 | 0.730 | 0.826 |
| Property 2 | 0.968 | 0.795 | 0.873 | 0.963 | 0.815 | 0.883 | 0.931 | 0.518 | 0.666 | 0.959 | 0.737 | 0.833 |
| Property 3 | 0.935 | 0.675 | 0.784 | 0.939 | 0.737 | 0.826 | 0.918 | 0.688 | 0.787 | 0.932 | 0.706 | 0.804 |
| Method | 0.901 | 0.869 | 0.884 | 0.926 | 0.895 | 0.910 | 0.888 | 0.750 | 0.813 | 0.905 | 0.884 | 0.894 |
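The F-m values in the table above appear consistent with the usual F-measure, the harmonic mean of precision (P) and recall (R). The page does not state the definition, so the formula below is an assumption, and `f_measure` is a hypothetical helper name. The sketch recomputes F-m for two rows whose P and R are copied from the Caltech columns and prints the result next to the reported figure.

```python
def f_measure(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (assumed definition of F-m)."""
    if precision + recall == 0:
        return 0.0  # avoid division by zero when nothing is detected
    return 2 * precision * recall / (precision + recall)


# (P, R, reported F-m) copied from the Caltech columns of the table above.
caltech_rows = {
    "Property 1": (0.964, 0.791, 0.869),
    "Property 2": (0.968, 0.795, 0.873),
}

for name, (p, r, reported) in caltech_rows.items():
    recomputed = round(f_measure(p, r), 3)
    print(f"{name}: recomputed F-m = {recomputed}, reported F-m = {reported}")
```

The same check can be run on any other row. A mismatch would suggest either a different F-m definition or a transcription error in that row.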

Datasets: Caltech (100 images), KITTI (100 images), our dataset (100 images), and all datasets combined (300 images). Columns give P, R, and F-m for each dataset.

| | Caltech P | Caltech R | Caltech F-m | KITTI P | KITTI R | KITTI F-m | Ours P | Ours R | Ours F-m | All P | All R | All F-m |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Method 1 | 0.827 | 0.492 | 0.617 | 0.870 | 0.468 | 0.609 | 0.822 | 0.575 | 0.677 | 0.839 | 0.511 | 0.634 |
| Method 2 | 0.919 | 0.596 | 0.723 | 0.938 | 0.566 | 0.706 | 0.876 | 0.588 | 0.704 | 0.911 | 0.583 | 0.711 |
| Method 3 | 0.350 | 0.426 | 0.384 | 0.466 | 0.440 | 0.453 | 0.445 | 0.461 | 0.453 | 0.420 | 0.442 | 0.430 |
| Method 4 | 0.646 | 0.447 | 0.528 | 0.724 | 0.578 | 0.643 | 0.541 | 0.405 | 0.418 | 0.637 | 0.476 | 0.544 |
| Method 5 | 0.608 | 0.565 | 0.585 | 0.698 | 0.630 | 0.662 | 0.477 | 0.442 | 0.458 | 0.594 | 0.545 | 0.568 |
| Our Method | 0.901 | 0.869 | 0.884 | 0.926 | 0.895 | 0.910 | 0.888 | 0.750 | 0.813 | 0.905 | 0.884 | 0.894 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ibarra-Arenado, M.J.; Tjahjadi, T.; Pérez-Oria, J. Shadow Detection in Still Road Images Using Chrominance Properties of Shadows and Spectral Power Distribution of the Illumination. Sensors 2020, 20, 1012. https://doi.org/10.3390/s20041012
