A Review on Map-Merging Methods for Typical Map Types in Multiple-Ground-Robot SLAM Solutions

Abstract

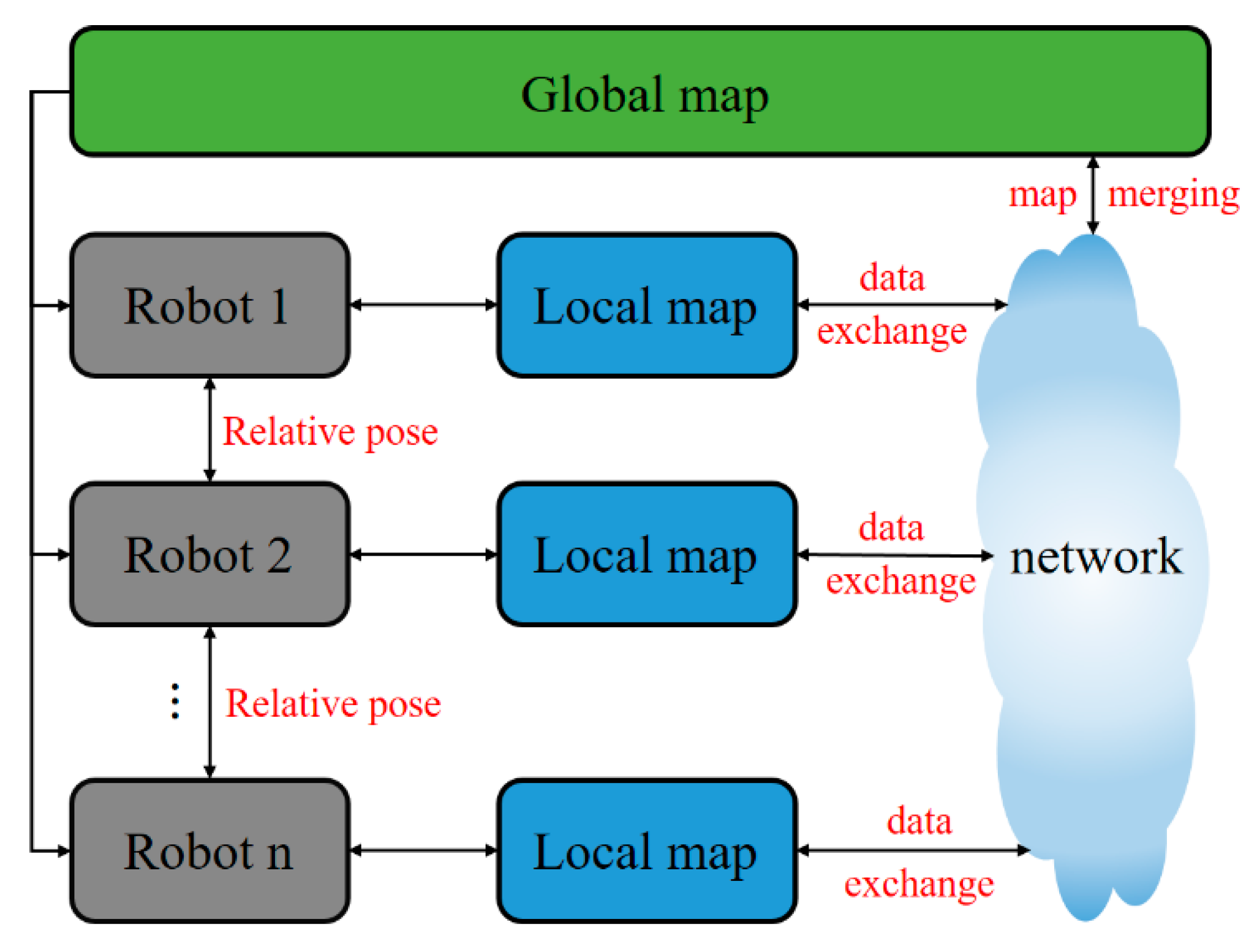

1. Introduction

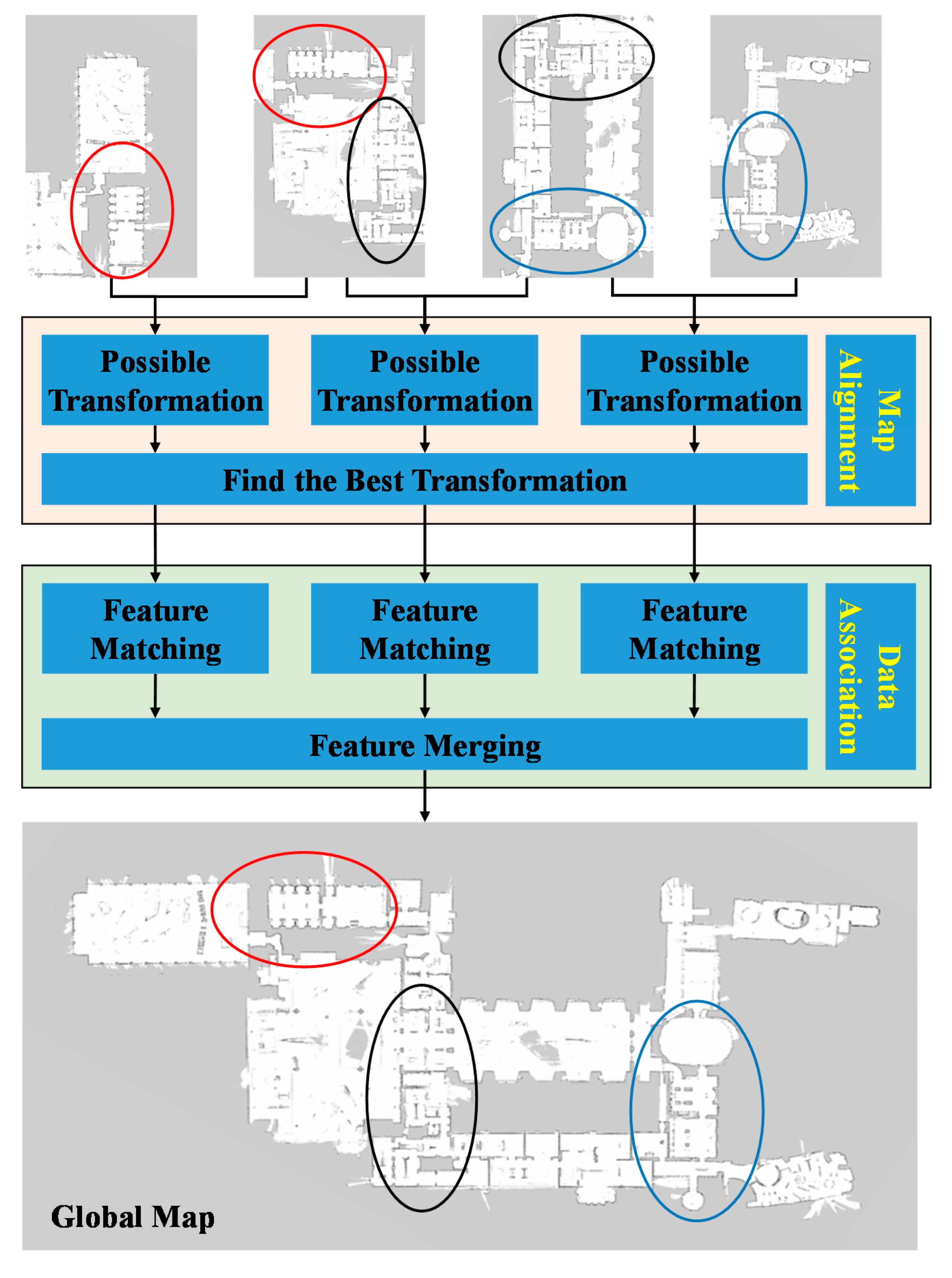

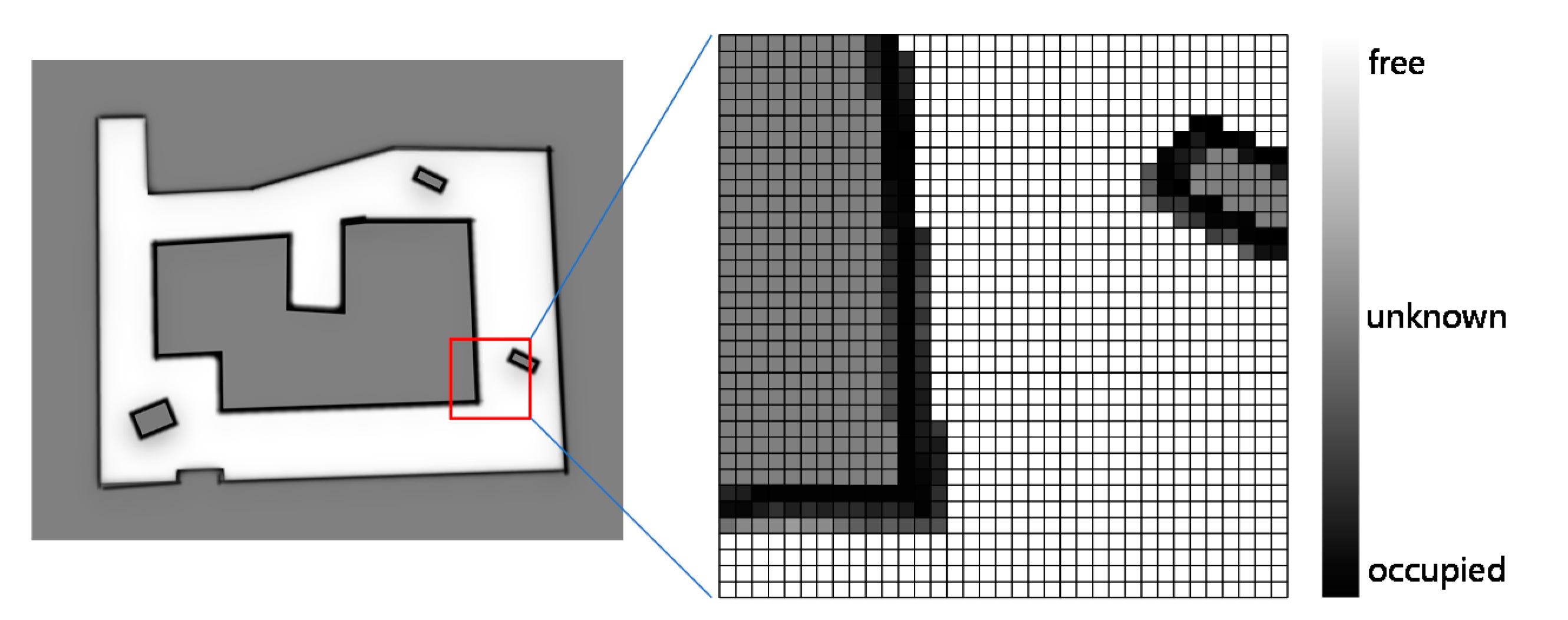

2. Occupancy Grid Map Merging

2.1. Probability Method

2.2. Optimization Method

2.2.1. Objective Function Based on Overlapping

2.2.2. Objective Function Based on Occupancy Likelihood

2.2.3. Objective Function Based on Image Registration

2.3. Feature-Based Method

2.4. Hough-Transform-Based Method

3. Feature-Based Map Merging

3.1. Point-Feature-Based Map Merging

3.2. Line-Feature-Based Map Merging

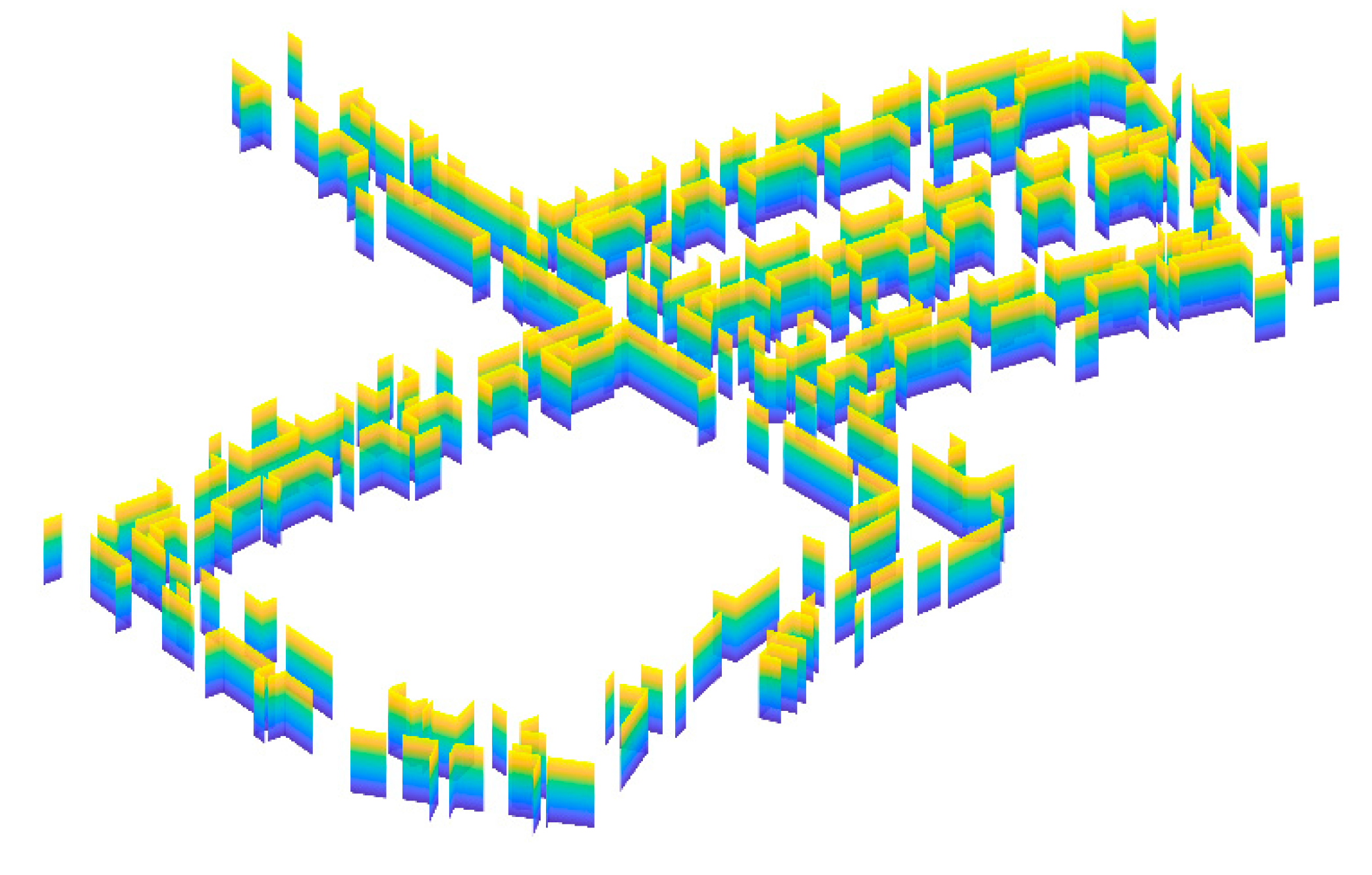

3.3. Plane-Feature-Based Map Merging

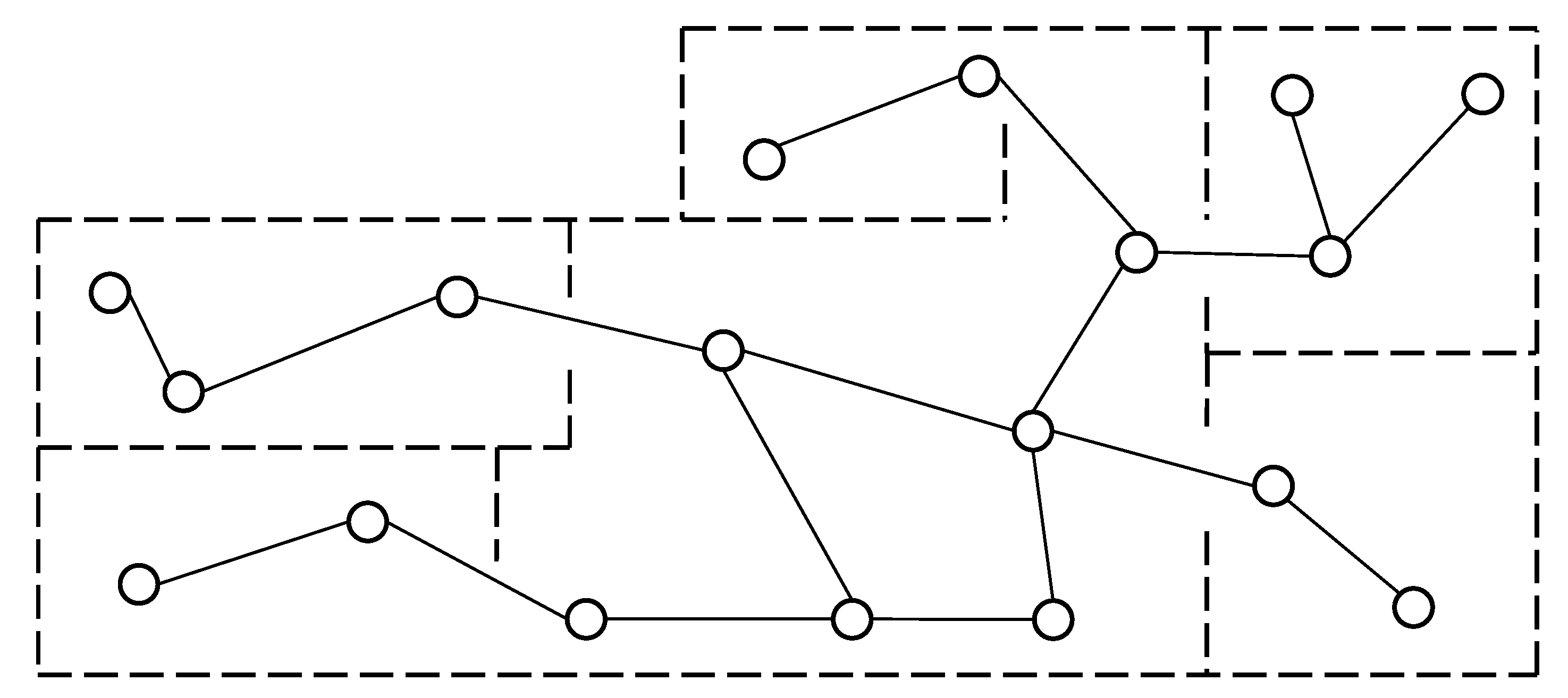

4. Topological Map Merging

5. Summary

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| SLAM | simultaneous localization and mapping |
| ARW | adaptive random walk |
| ICP | iterative closest point |
| TrICP | trimmed ICP |
| SIFT | scale-invariant feature transform |
| SURF | speeded-up robust features |
| KLT | Kanade-Lucas-Tomasi |
| MCS | maximum common subgraph |
| MAFF | map augmentation and feature fusion |
| MER | maximal empty rectangle |
| FAST | features from accelerated segment test |
| ORB | oriented FAST and rotated BRIEF |
| BoW | bag of words |
| RANSAC | random sample consensus |
| EM | expectation–maximization |
| MSSE | modified selective statistical estimator |
| VG | Voronoi graph |
| GVG | generalized Voronoi graph |
| AGVG | annotated generalized Voronoi graph |
| EVG | extended Voronoi graph |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yu, S.; Fu, C.; Gostar, A.K.; Hu, M. A Review on Map-Merging Methods for Typical Map Types in Multiple-Ground-Robot SLAM Solutions. Sensors 2020, 20, 6988. https://doi.org/10.3390/s20236988