A Single Image 3D Reconstruction Method Based on a Novel Monocular Vision System

Abstract

1. Introduction

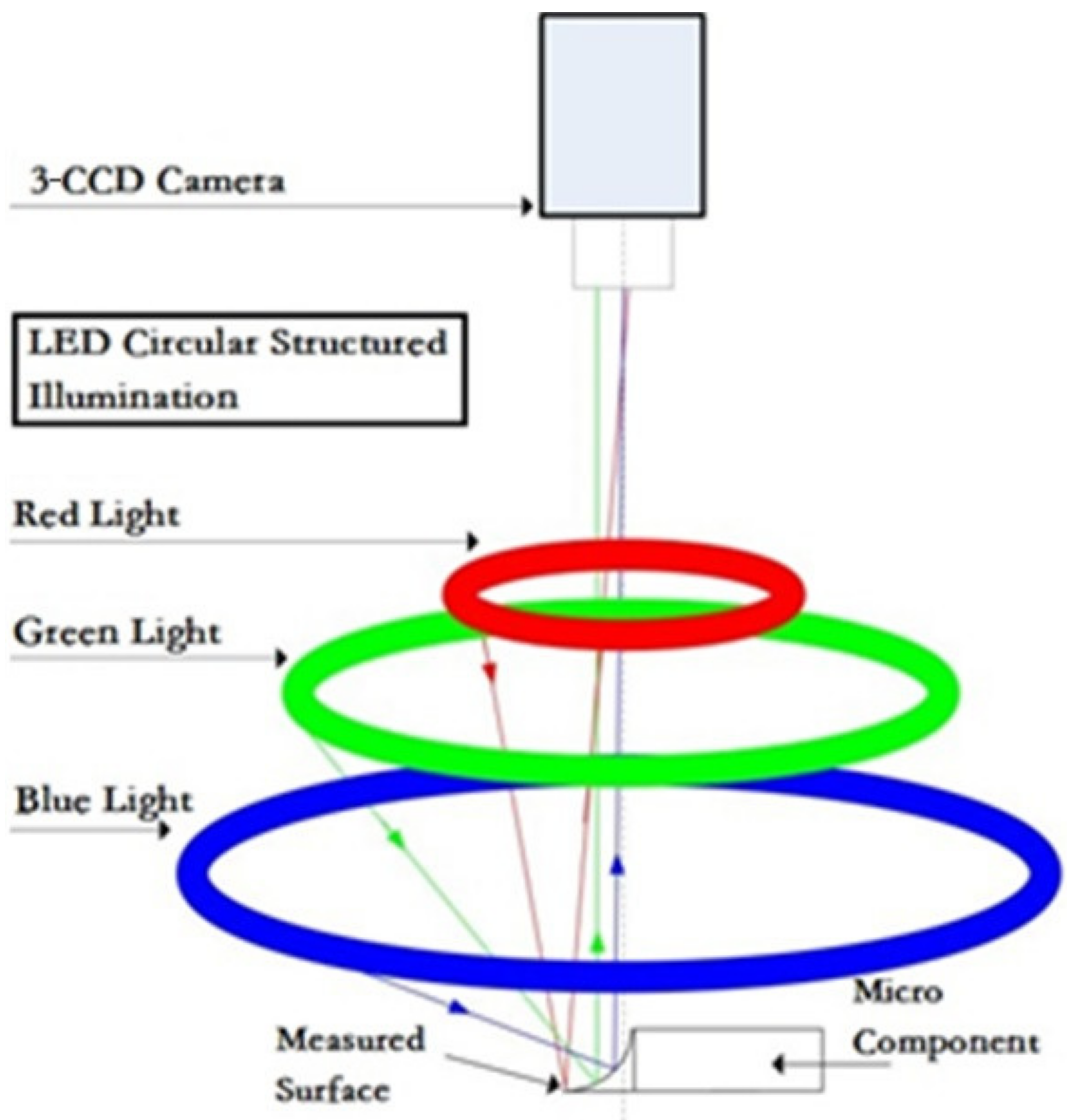

2. Monocular Vision System and Its Image Information

3. Analysis of the Imaging Principle and the 3D Reconstruction Model

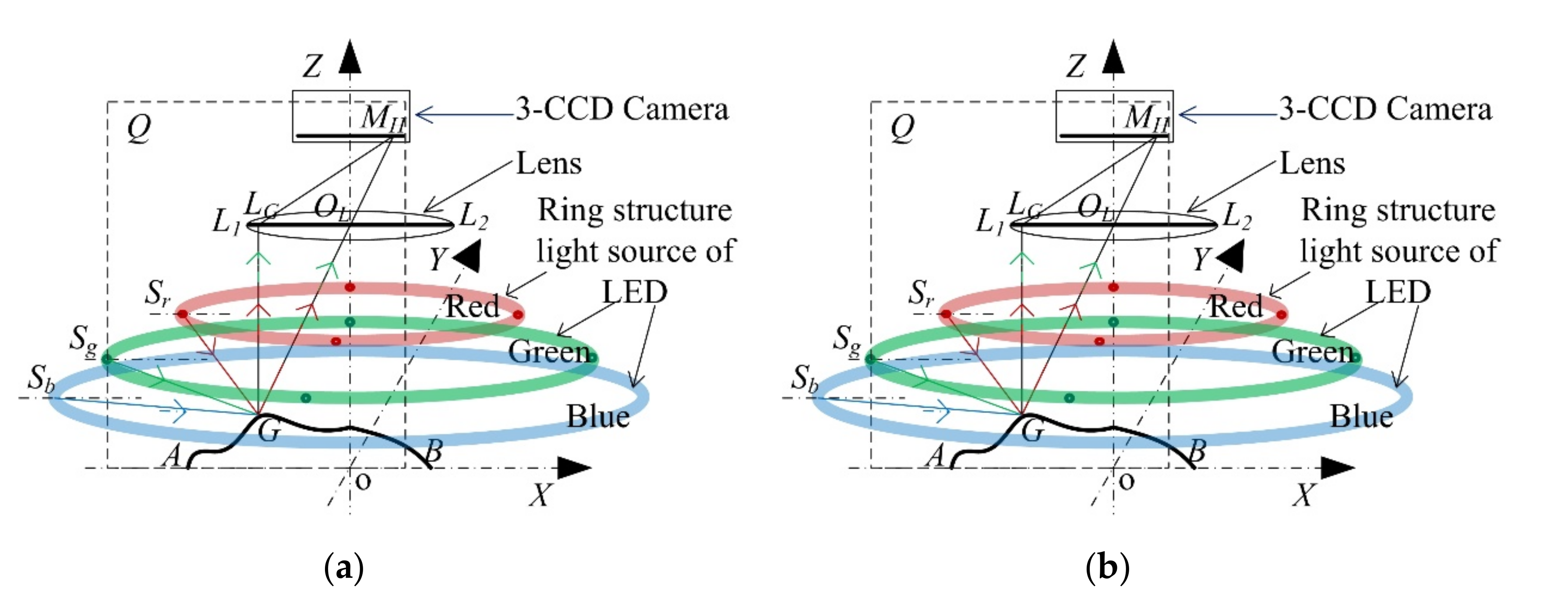

3.1. Imaging Principle Based on the Monocular Vision System and the Ring Structured Light Source

3.2. The 3D Reconstruction Model

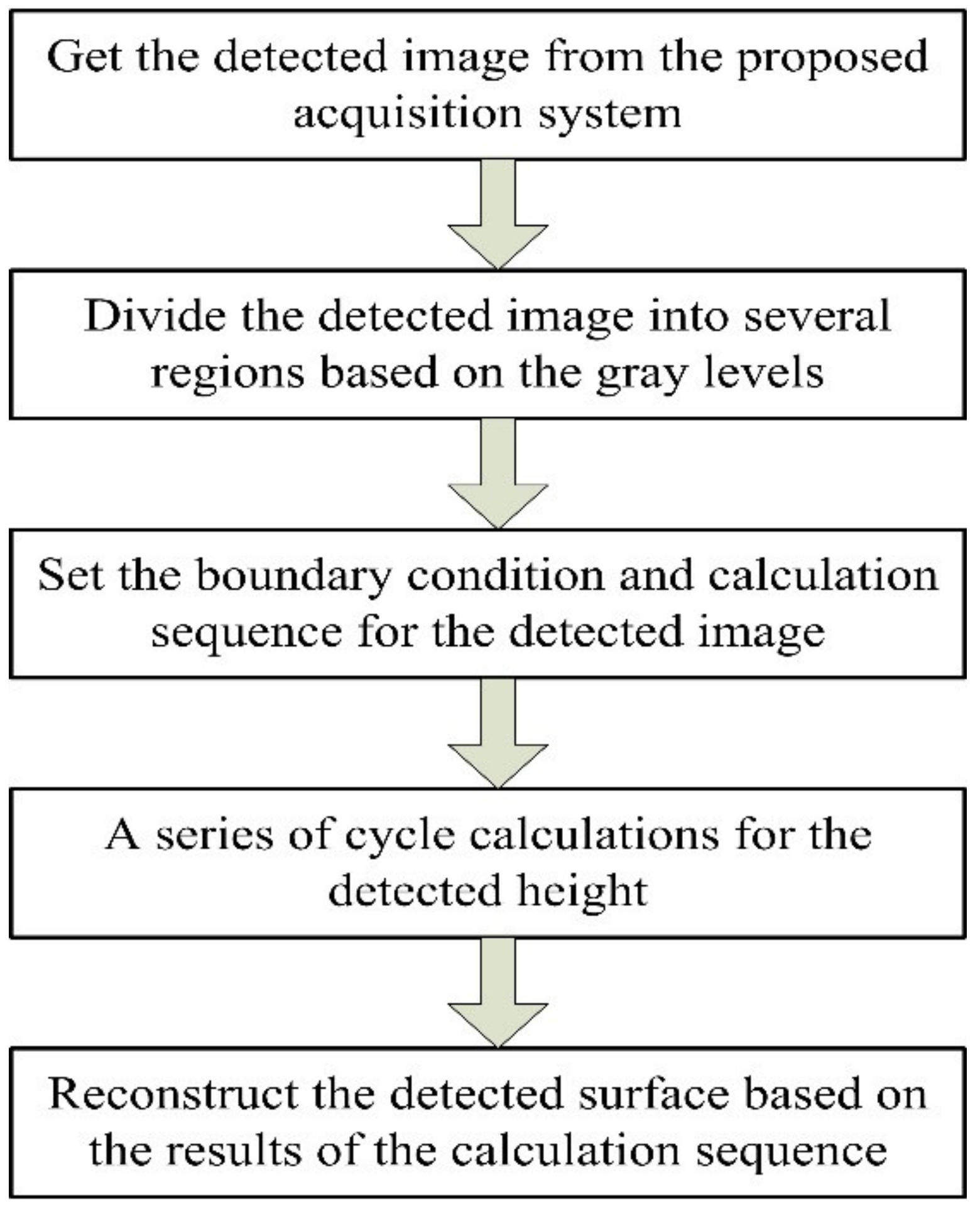

4. Simplified Calculation Method for the Proposed Algorithm

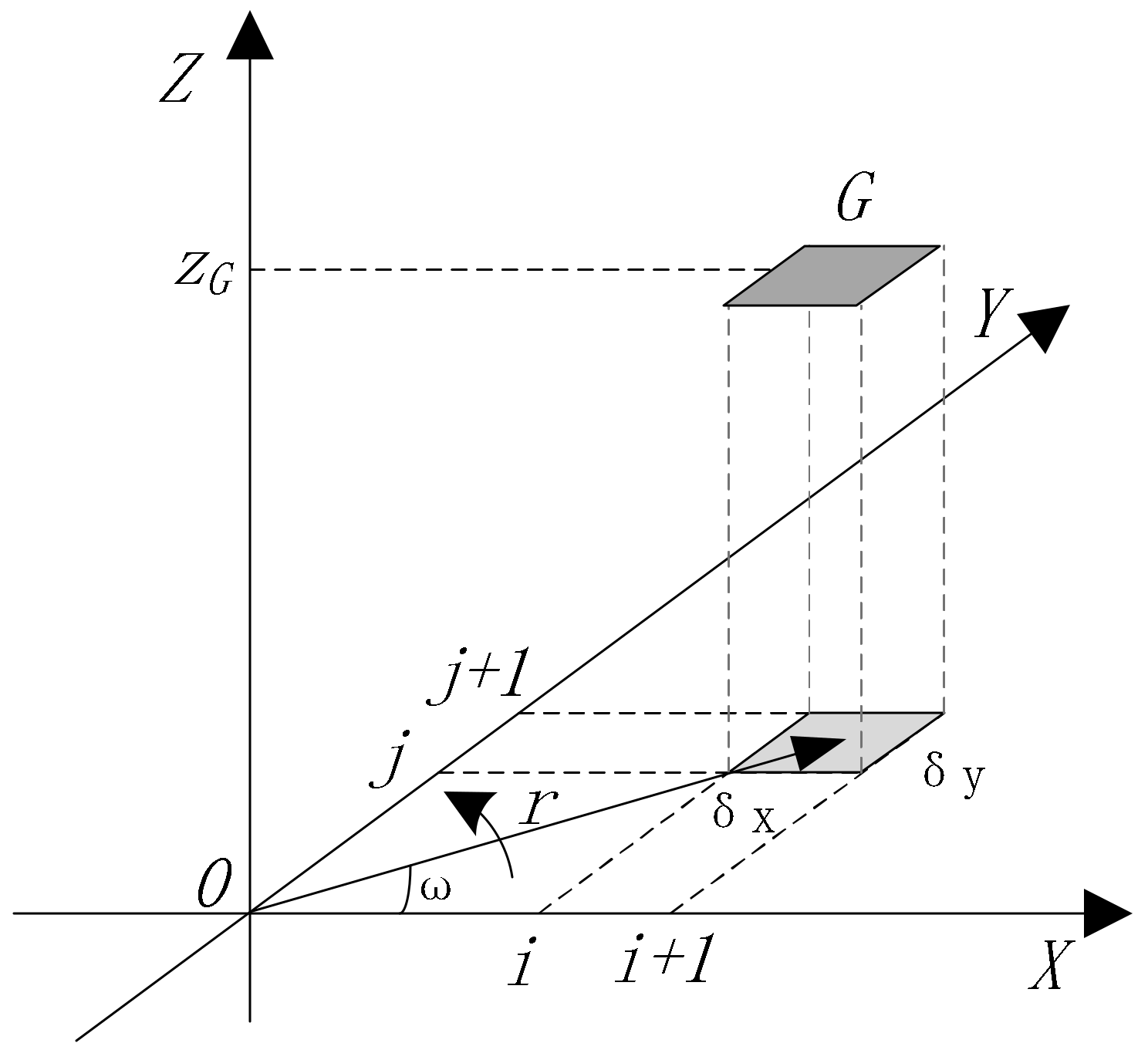

4.1. Algorithm Simplification Based on Regions

4.2. Algorithm Simplification Based on Coordinate Transformation

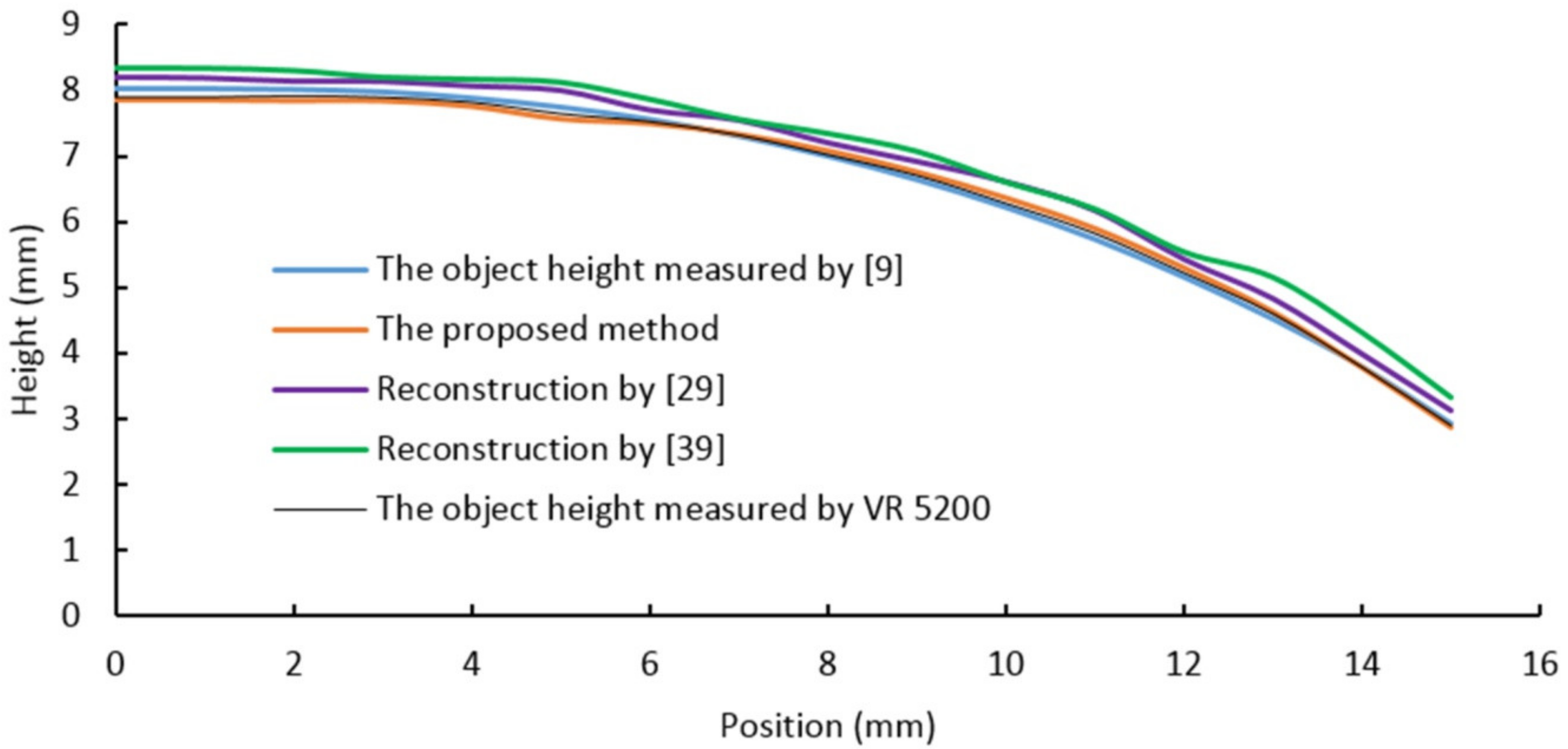

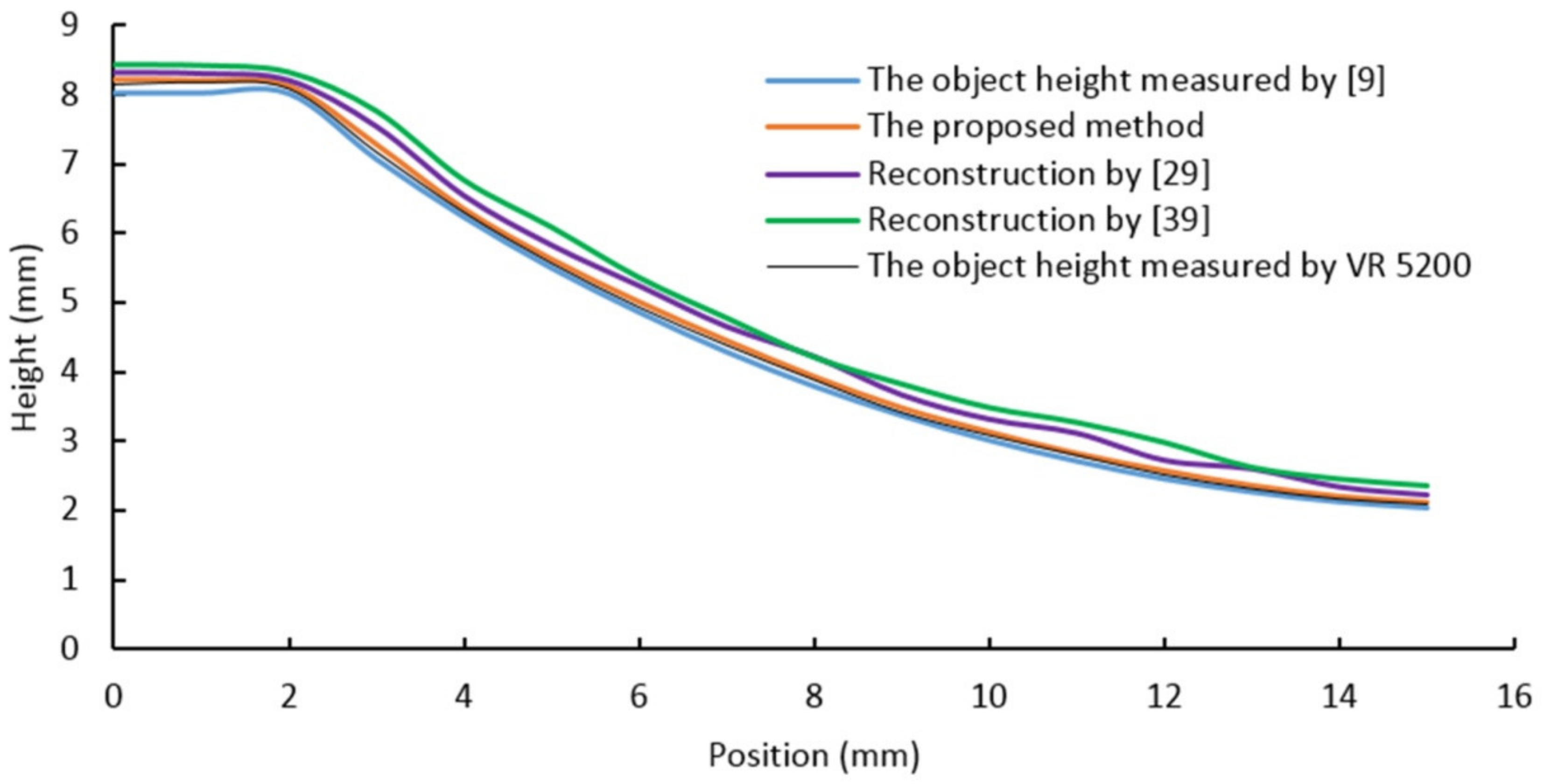

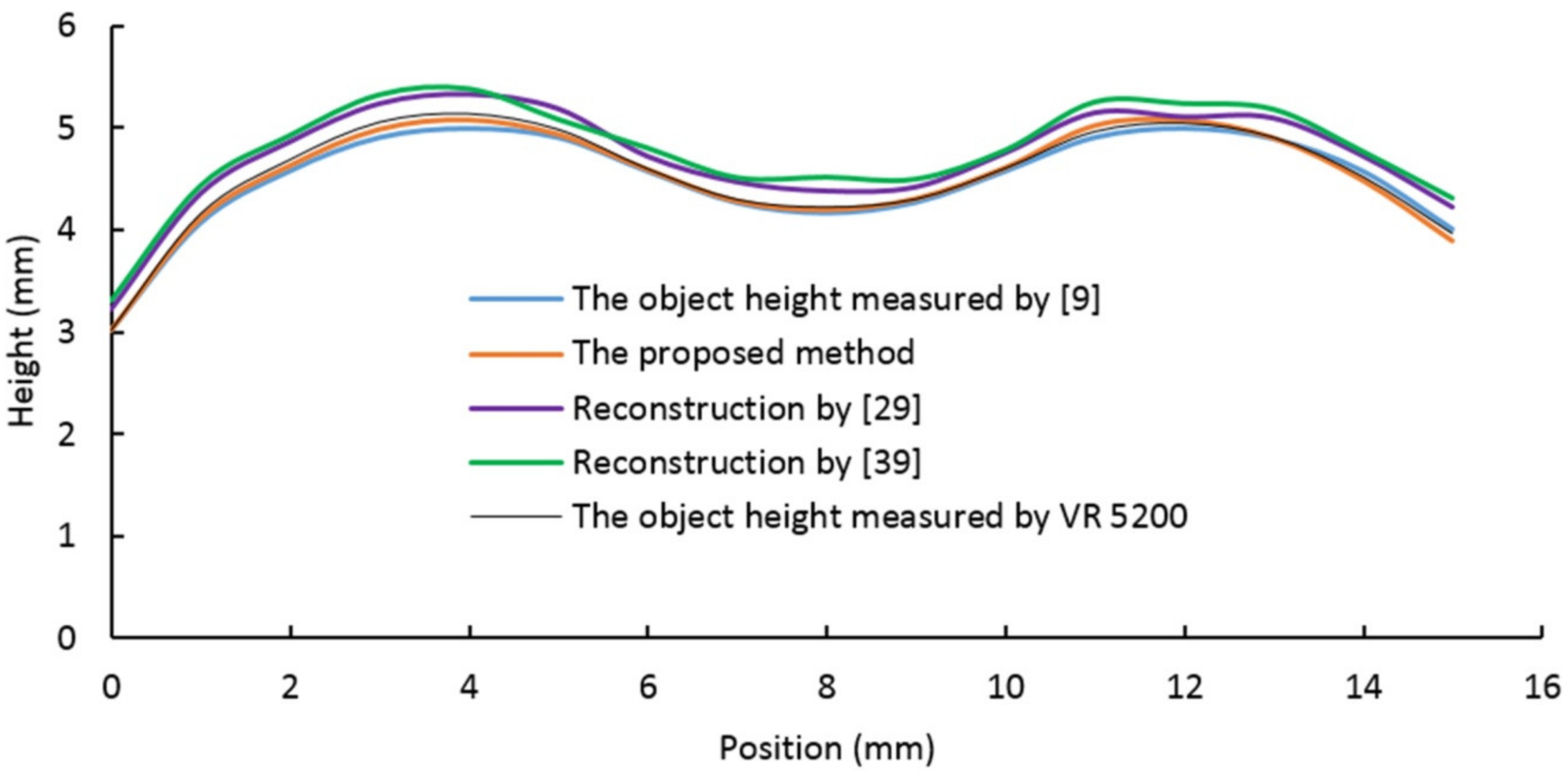

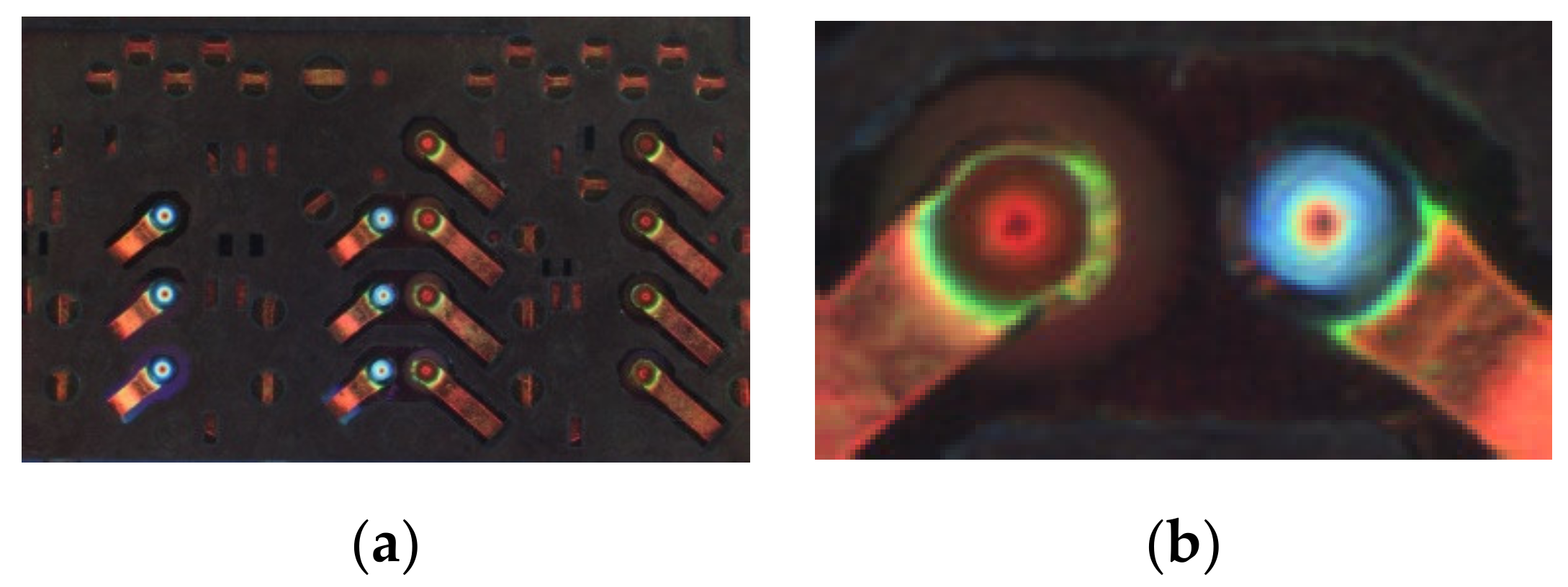

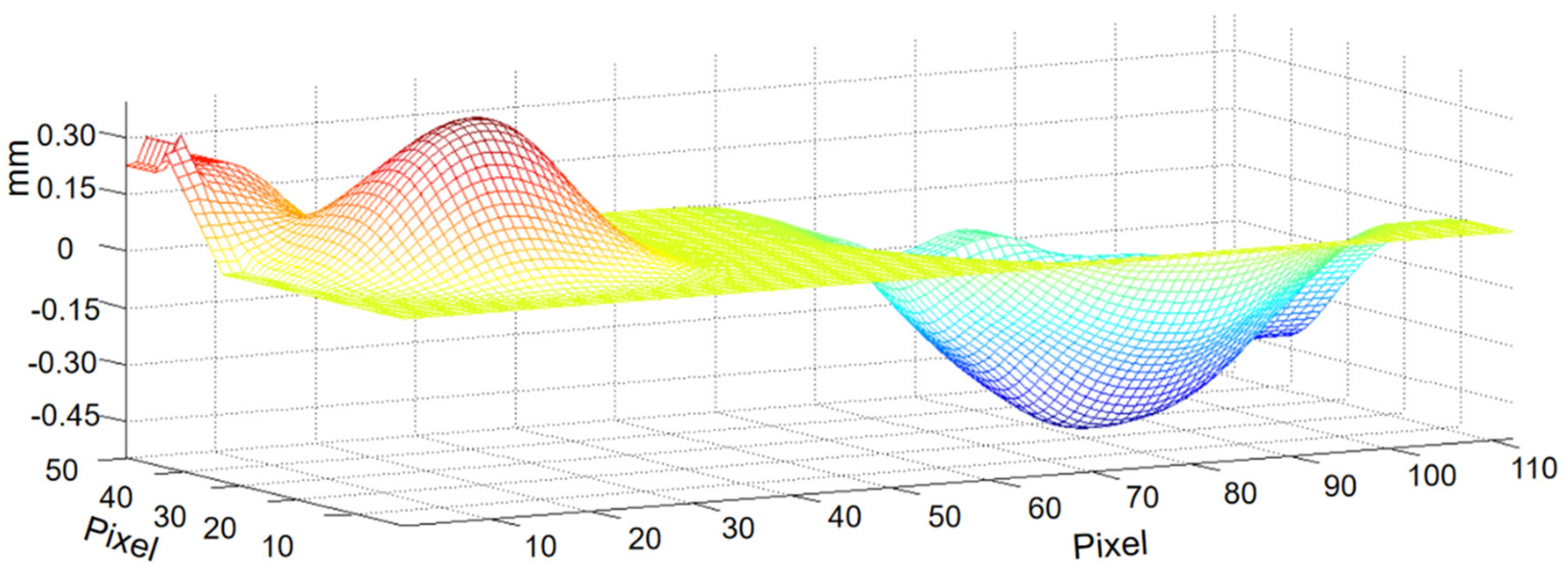

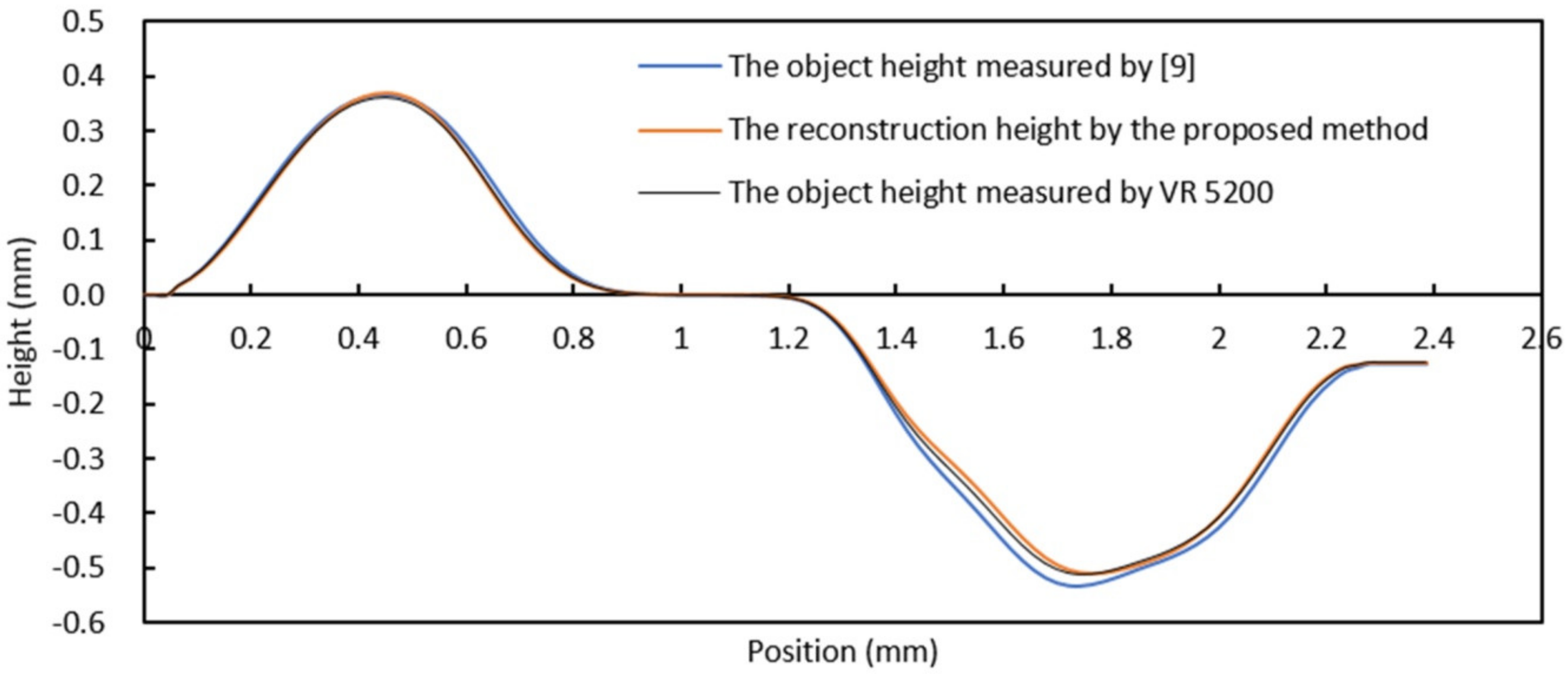

5. Experimental Results and Analysis

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| | Convex Surface Sample | Concave Surface Sample | Angular Surface Sample | Convex and Concave Surface Sample |
|---|---|---|---|---|
| Sample images | | | | |
| Images acquired via the proposed system | | | | |
| Reconstructed images via the proposed method | | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wu, F.; Zhu, S.; Ye, W. A Single Image 3D Reconstruction Method Based on a Novel Monocular Vision System. Sensors 2020, 20, 7045. https://doi.org/10.3390/s20247045
