Bidirectional Attention for Text-Dependent Speaker Verification

Abstract

1. Introduction

- (1)

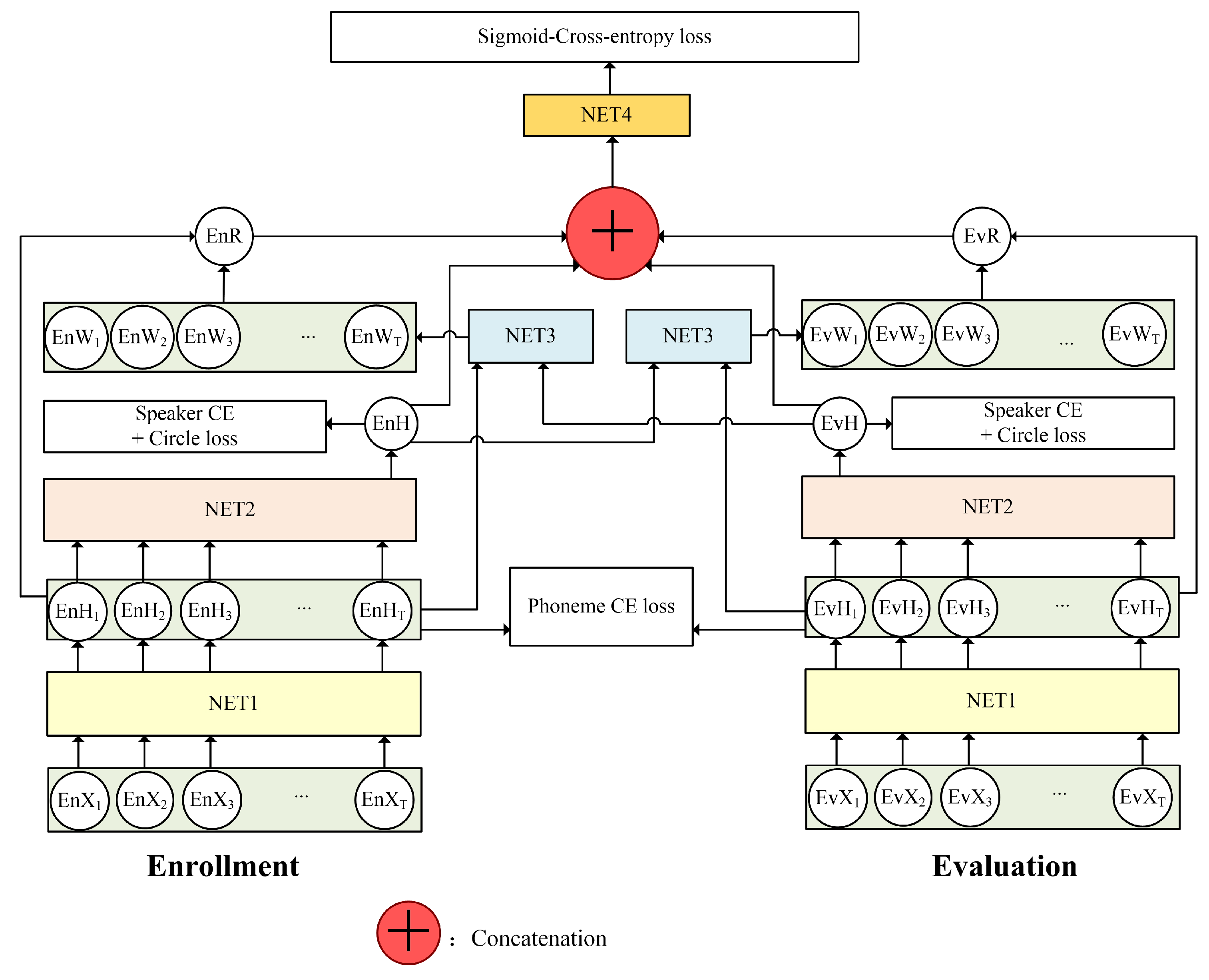

- For each pair of compared utterances (including one for enrollment and another for evaluation), attention scores for frame-level hidden features are calculated via a bidirectional attention model. The input of the model includes the frame-level hidden features of one utterance and the utterance-level hidden features from the other utterance. The interactive features for both utterances are simultaneously obtained in consideration of the joint information shared between them. To the best of the authors’ knowledge, we are the first to employ bidirectional attention in the speaker verification field.

- (2)

- Inspired by the success of circle loss in image analysis, we replace the triplet loss in conventional TDSV models with the recently proposed circle loss. It dynamically adjusts the penalty strength on the within-class similarity and between-class similarity, and provides a flexible optimization. In addition, it tends to converge to a definite status and hence benefits the separability.

- (3)

- We introduce one individual cost function to identify the phonetic contents, which contribute to calculating the attention score more specifically. The attention is then used to perform phonetic-specific pooling. Experimental results demonstrate that the proposed framework achieves the best performance compared with classical i-vector/PLDA, d-vector, and the single-directional attention models.
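The interaction described in contribution (1) can be illustrated with a minimal NumPy sketch. It assumes scaled dot-product scoring between each frame and the other utterance's summary vector, and takes the utterance-level feature to be the mean of the frame-level features. The function names `attend` and `bidirectional_attention`, the feature sizes, and the random inputs are hypothetical stand-ins, not the layers of the proposed network.

```python
import numpy as np


def softmax(x):
    """Numerically stable softmax over a 1-D array."""
    e = np.exp(x - x.max())
    return e / e.sum()


def attend(frames, query):
    """Pool frame-level features (T x D) with weights scored against a D-dim query.

    Assumption: scaled dot-product scoring; the paper's scoring network may differ.
    """
    scores = frames @ query / np.sqrt(frames.shape[1])  # (T,)
    weights = softmax(scores)                            # (T,), sums to 1
    return weights @ frames, weights                     # pooled (D,), weights (T,)


def bidirectional_attention(h_a, h_b):
    """Interactive embeddings for a pair of utterances (T_a x D and T_b x D)."""
    # Utterance-level features; assumption: plain mean over frames.
    u_a, u_b = h_a.mean(axis=0), h_b.mean(axis=0)
    # Each utterance's frames are weighted using the *other* utterance's summary.
    e_a, w_a = attend(h_a, u_b)
    e_b, w_b = attend(h_b, u_a)
    return e_a, e_b, w_a, w_b


def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


rng = np.random.default_rng(0)
h_enroll = rng.standard_normal((120, 64))  # stand-in: 120 frames of 64-dim hidden features
h_test = rng.standard_normal((95, 64))     # stand-in: 95 frames of 64-dim hidden features
e_enroll, e_test, w_enroll, w_test = bidirectional_attention(h_enroll, h_test)
score = cosine(e_enroll, e_test)  # illustrative trial score: enrollment vs. test
```

Because each utterance is pooled with weights that depend on its partner, the same enrollment utterance yields a different embedding for every test utterance it is compared against. The cosine score at the end is likewise an illustrative choice.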
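For contribution (2), a minimal NumPy sketch of the circle loss for a single anchor is shown below, following the formulation of Sun et al. [21]: the within-class similarities s_p and between-class similarities s_n are each weighted by their distance from an optimum (1 + m and −m, respectively), with decision margins 1 − m and m. The margin m = 0.25 and scale γ = 64 are assumed values, not the settings used in this paper, and the function names are hypothetical.

```python
import numpy as np


def logsumexp(x):
    """Stable log(sum(exp(x))) for a 1-D array."""
    top = np.max(x)
    return top + np.log(np.sum(np.exp(x - top)))


def circle_loss(s_p, s_n, m=0.25, gamma=64.0):
    """Circle loss for one anchor (formulation of Sun et al., 2020).

    s_p: similarities to same-speaker samples (within-class).
    s_n: similarities to other-speaker samples (between-class).
    m, gamma: relaxation margin and scale factor (assumed values).
    """
    s_p = np.asarray(s_p, dtype=float)
    s_n = np.asarray(s_n, dtype=float)
    o_p, o_n = 1.0 + m, -m          # optimal values of s_p and s_n
    delta_p, delta_n = 1.0 - m, m   # decision margins
    # Scores farther from their optimum receive larger weights; in a trainable
    # version these weights are treated as constants (no gradient through them).
    alpha_p = np.clip(o_p - s_p, 0.0, None)
    alpha_n = np.clip(s_n - o_n, 0.0, None)
    logit_p = -gamma * alpha_p * (s_p - delta_p)
    logit_n = gamma * alpha_n * (s_n - delta_n)
    # log(1 + sum_j exp(logit_n) * sum_i exp(logit_p)), computed without overflow
    return float(np.logaddexp(0.0, logsumexp(logit_n) + logsumexp(logit_p)))


good = circle_loss(s_p=[0.9, 0.85], s_n=[0.1, -0.2])  # well-separated anchor
bad = circle_loss(s_p=[0.3], s_n=[0.6])              # negative closer than positive
```

A well-separated anchor gives a loss near zero, while an anchor whose negative is more similar than its positive is penalized heavily. Unlike the triplet loss, which weights the two similarities equally, each similarity here is re-weighted by its own distance from the optimum.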
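Contribution (3) can be read in several ways; the NumPy sketch below is one hypothetical interpretation, not the paper's network. A phonetic classifier (whose logits are random stand-ins here) produces frame-level posteriors over K phonetic classes, an auxiliary cross-entropy loss trains it against frame-level phonetic labels, and each class's posteriors, renormalized over time, serve as that class's attention weights for pooling. The class count, the pooling rule, the function names, and the loss weight `lam` are all assumptions.

```python
import numpy as np


def softmax(x, axis):
    """Numerically stable softmax along the given axis."""
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def phonetic_cross_entropy(phone_logits, phone_labels):
    """Auxiliary frame-level phonetic classification loss (mean over frames)."""
    log_post = phone_logits - phone_logits.max(axis=1, keepdims=True)
    log_post = log_post - np.log(np.exp(log_post).sum(axis=1, keepdims=True))
    return float(-log_post[np.arange(len(phone_labels)), phone_labels].mean())


def phonetic_pooling(h, phone_logits):
    """Pool T x D frames into K x D vectors, one per phonetic class.

    Frame-level posteriors p(k | t) are renormalized over time so that each
    class k gets its own attention distribution over the frames.
    """
    post = softmax(phone_logits, axis=1)          # (T, K), rows sum to 1
    attn = post / post.sum(axis=0, keepdims=True)  # (T, K), columns sum to 1
    return attn.T @ h, attn                        # (K, D), (T, K)


rng = np.random.default_rng(1)
T, D, K = 80, 32, 10  # frames, feature dim, phonetic classes (all hypothetical)
h = rng.standard_normal((T, D))
phone_logits = rng.standard_normal((T, K))
phone_labels = rng.integers(0, K, size=T)

pooled, attn = phonetic_pooling(h, phone_logits)
l_phone = phonetic_cross_entropy(phone_logits, phone_labels)
lam = 0.5               # hypothetical weight of the auxiliary loss
aux_term = lam * l_phone  # would be added to the speaker-verification loss
```

Each of the K pooled vectors summarizes the frames the classifier assigns to one phonetic class, so speaker information can be compared class by class rather than averaged over the whole phrase.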

2. State of the Art

2.1. TDSV Based on i-Vector

2.2. TDSV Based on d-Vector

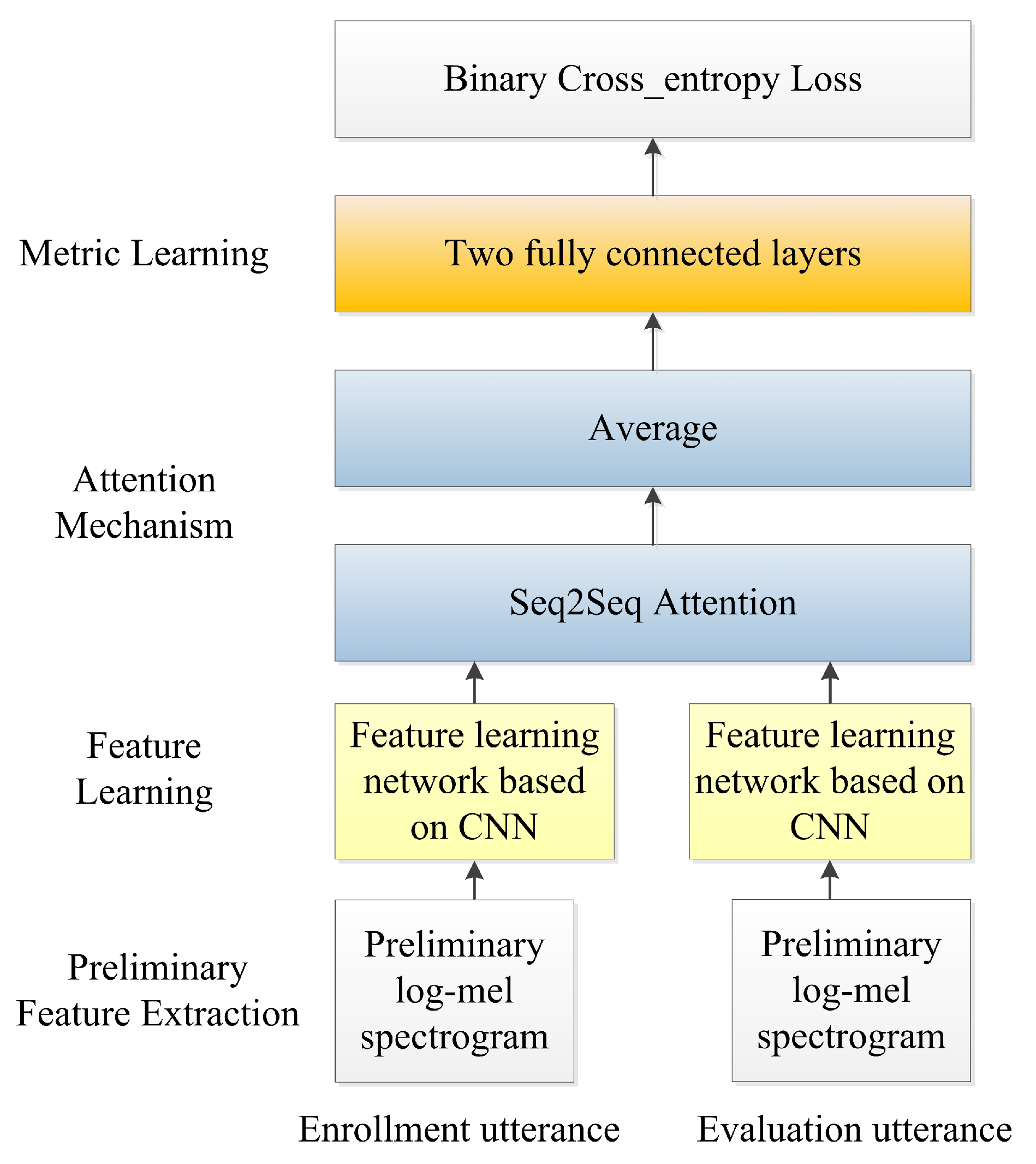

2.3. TDSV Based on Attention Mechanism

3. Methodology

3.1. Data Preprocessing

3.2. Model Structure

3.3. End-to-End Training

4. Experimental Setup

4.1. Experimental Dataset

4.2. Evaluation Metric

5. Results and Discussion

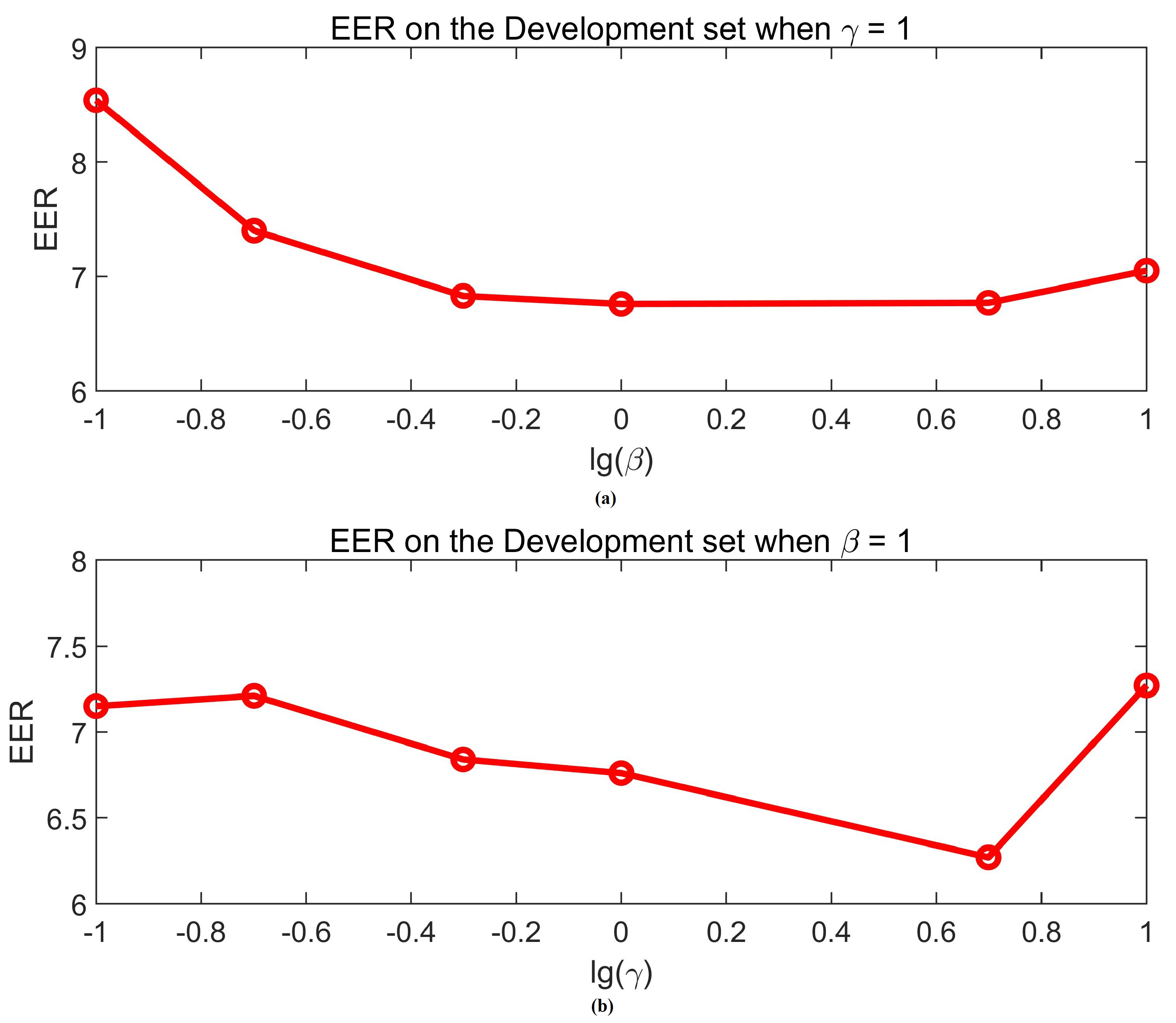

5.1. The Weights of Losses

5.2. Comparison between Triplet Loss and Circle Loss

5.3. Evaluation of Different Deep Feature Combinations

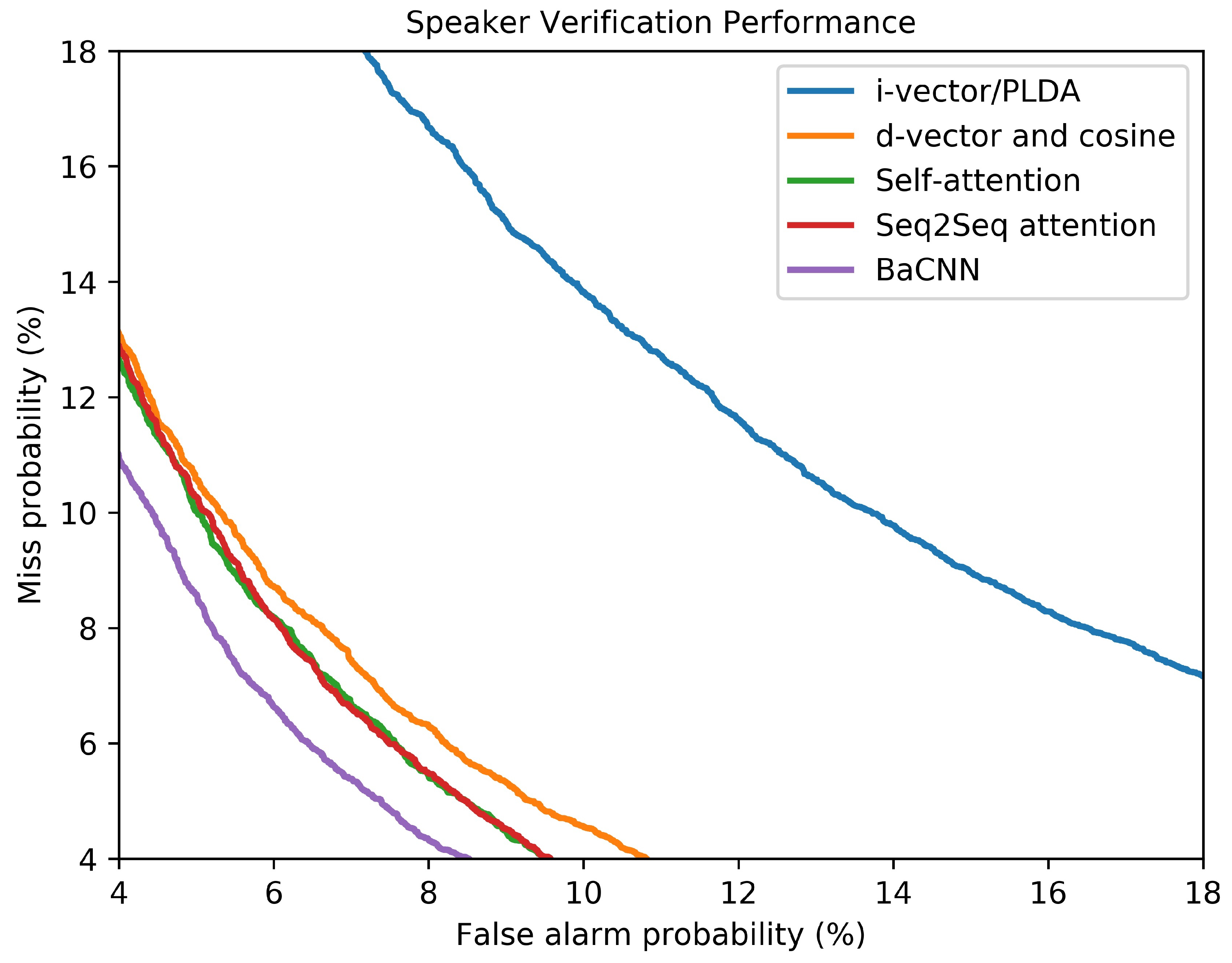

5.4. Comparison with State-of-the-Art TDSV Methods

5.5. Analysis of Interactive Speaker Embeddings

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Zeinali, H.; Sameti, H.; Burget, L. HMM-based phrase-independent i-vector extractor for text-dependent speaker verification. IEEE/ACM Trans. Audio Speech Lang. Process. 2017, 25, 1421–1435.

2. Kang, W.H.; Kim, N.S. Adversarially Learned Total Variability Embedding for Speaker Recognition with Random Digit Strings. Sensors 2019, 19, 4709.

3. Mingote, V.; Miguel, A.; Ortega, A.; Lleida, E. Optimization of the area under the ROC curve using neural network supervectors for text-dependent speaker verification. Comput. Speech Lang. 2020, 63, 101078.

4. Machado, T.J.; Vieira Filho, J.; de Oliveira, M.A. Forensic Speaker Verification Using Ordinary Least Squares. Sensors 2019, 19, 4385.

5. Larcher, A.; Lee, K.A.; Ma, B.; Li, H. Text-dependent speaker verification: Classifiers, databases and RSR2015. Speech Commun. 2014, 60, 56–77.

6. Zhong, J.; Hu, W.; Soong, F.K.; Meng, H. DNN i-Vector Speaker Verification with Short, Text-Constrained Test Utterances. In Proceedings of the 18th Annual Conference of the International Speech Communication Association (Interspeech), Stockholm, Sweden, 20–24 August 2017; pp. 1507–1511.

7. Zhang, Y.; Yu, M.; Li, N.; Yu, C.; Cui, J.; Yu, D. Seq2Seq Attentional Siamese Neural Networks for Text-dependent Speaker Verification. In Proceedings of the 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 6131–6135.

8. Liu, Y.; He, L.; Tian, Y.; Chen, Z.; Liu, J.; Johnson, M.T. Comparison of multiple features and modeling methods for text-dependent speaker verification. In Proceedings of the 2017 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), Okinawa, Japan, 16–20 December 2017; pp. 629–636.

9. Dehak, N.; Kenny, P.J.; Dehak, R.; Dumouchel, P.; Ouellet, P. Front-end factor analysis for speaker verification. IEEE Trans. Audio Speech Lang. Process. 2010, 19, 788–798.

10. Liu, Y.; Qian, Y.; Chen, N.; Fu, T.; Zhang, Y.; Yu, K. Deep feature for text-dependent speaker verification. Speech Commun. 2015, 73, 1–13.

11. Yoon, S.H.; Jeon, J.J.; Yu, H.J. Regularized Within-Class Precision Matrix Based PLDA in Text-Dependent Speaker Verification. Appl. Sci. 2020, 10, 6571.

12. Zeinali, H.; Sameti, H.; Burget, L.; Černocký, J.H. Text-dependent speaker verification based on i-vectors, neural networks and hidden Markov models. Comput. Speech Lang. 2017, 46, 53–71.

13. Yao, Q.; Mak, M.W. SNR-invariant multitask deep neural networks for robust speaker verification. IEEE Signal Process. Lett. 2018, 25, 1670–1674.

14. Zhang, C.; Yu, C.; Hansen, J.H. An investigation of deep-learning frameworks for speaker verification antispoofing. IEEE J. Sel. Top. Signal Process. 2017, 11, 684–694.

15. Garcia-Romero, D.; McCree, A. Insights into deep neural networks for speaker recognition. In Proceedings of the Sixteenth Annual Conference of the International Speech Communication Association (Interspeech), Dresden, Germany, 6–10 September 2015.

16. Dey, S.; Madikeri, S.R.; Motlicek, P. End-to-end Text-dependent Speaker Verification Using Novel Distance Measures. In Proceedings of the 19th Annual Conference of the International Speech Communication Association (Interspeech), Hyderabad, India, 2–6 September 2018; pp. 3598–3602.

17. Zhang, S.X.; Chen, Z.; Zhao, Y.; Li, J.; Gong, Y. End-to-end attention based text-dependent speaker verification. In Proceedings of the 2016 IEEE Spoken Language Technology Workshop (SLT), San Diego, CA, USA, 13–16 December 2016; pp. 171–178.

18. Bian, T.; Chen, F.; Xu, L. Self-attention based speaker recognition using Cluster-Range Loss. Neurocomputing 2019, 368, 59–68.

19. Chowdhury, F.R.R.; Wang, Q.; Moreno, I.L.; Wan, L. Attention-based models for text-dependent speaker verification. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 5359–5363.

20. Wu, Z.; Shen, C.; Van Den Hengel, A. Wider or deeper: Revisiting the ResNet model for visual recognition. Pattern Recognit. 2019, 90, 119–133.

21. Sun, Y.; Cheng, C.; Zhang, Y.; Zhang, C.; Zheng, L.; Wang, Z.; Wei, Y. Circle loss: A unified perspective of pair similarity optimization. arXiv 2020, arXiv:2002.10857.

22. Dehak, N.; Dumouchel, P.; Kenny, P. Modeling prosodic features with joint factor analysis for speaker verification. IEEE Trans. Audio Speech Lang. Process. 2007, 15, 2095–2103.

23. Kenny, P.; Ouellet, P.; Dehak, N.; Gupta, V.; Dumouchel, P. A study of interspeaker variability in speaker verification. IEEE Trans. Audio Speech Lang. Process. 2008, 16, 980–988.

24. Campbell, W.; Sturim, D.; Reynolds, D. Support vector machines using GMM supervectors for speaker verification. IEEE Signal Process. Lett. 2006, 13, 308–311.

25. Heigold, G.; Moreno, I.; Bengio, S.; Shazeer, N. End-to-end text-dependent speaker verification. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 5115–5119.

26. Li, C.; Ma, X.; Jiang, B.; Li, X.; Zhang, X.; Liu, X.; Cao, Y.; Kannan, A.; Zhu, Z. Deep speaker: An end-to-end neural speaker embedding system. arXiv 2017, arXiv:1705.02304.

27. Fang, X.; Zou, L.; Li, J.; Sun, L.; Ling, Z.H. Channel adversarial training for cross-channel text-independent speaker recognition. In Proceedings of the 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 6221–6225.

28. Kaya, E.M.; Elhilali, M. Modelling auditory attention. Philos. Trans. R. Soc. B Biol. Sci. 2017, 372, 20160101.

29. Dai, L.; Best, V.; Shinn-Cunningham, B.G. Sensorineural hearing loss degrades behavioral and physiological measures of human spatial selective auditory attention. Proc. Natl. Acad. Sci. USA 2018, 115, E3286–E3295.

30. Yang, J.; Yang, G. Modified convolutional neural network based on dropout and the stochastic gradient descent optimizer. Algorithms 2018, 11, 28.

31. Nagrani, A.; Chung, J.S.; Xie, W.; Zisserman, A. VoxCeleb: Large-scale speaker verification in the wild. Comput. Speech Lang. 2020, 60, 101027.

32. Kinnunen, T.; Delgado, H.; Evans, N.; Lee, K.A.; Vestman, V.; Nautsch, A.; Todisco, M.; Wang, X.; Sahidullah, M.; Yamagishi, J.; et al. Tandem assessment of spoofing countermeasures and automatic speaker verification: Fundamentals. IEEE/ACM Trans. Audio Speech Lang. Process. 2020, 28, 2195–2210.

33. Kinnunen, T.; Li, H. An overview of text-independent speaker recognition: From features to supervectors. Speech Commun. 2010, 52, 12–40.

| Losses | Development Set EER (%) | Test Set EER (%) |
|---|---|---|
|  | 6.60 | 6.62 |
|  | 6.27 | 6.26 |

| Architecture | Losses | Development Set EER (%) | Test Set EER (%) |
|---|---|---|---|
| d-vector |  | 8.03 | 7.99 |
| d-vector |  | 7.43 | 7.18 |
| BaCNN |  | 6.60 | 6.51 |
| BaCNN |  | 6.27 | 6.26 |

| Inputs of NET4 | Development Set EER (%) | Test Set EER (%) |
|---|---|---|
| and | 6.55 | 6.62 |
| and | 7.55 | 7.18 |
| , , and | 6.25 | 6.41 |
| , , and | 6.33 | 6.58 |
| , , and | 6.27 | 6.26 |

| Method | Development Set | | | Test Set | | |
|---|---|---|---|---|---|---|
| | EER (%) | (%) | | EER (%) | (%) | |
| i-vector/PLDA [12] | 11.61 | 77.51 | 0.5578 | 11.80 | 76.83 | 0.5499 |
| d-vector and cosine [25] | 7.43 | 87.67 | 0.4033 | 7.18 | 89.42 | 0.4017 |
| Self-attention [19] | 6.96 | 90.40 | 0.3795 | 6.87 | 89.98 | 0.4235 |
| Seq2Seq attention [7] | 6.88 | 89.57 | 0.4059 | 6.83 | 89.73 | 0.4236 |
| BaCNN-1step | 7.60 | 88.10 | 0.4373 | 6.91 | 89.18 | 0.4606 |
| BaCNN | 6.27 | 92.00 | 0.3709 | 6.26 | 91.42 | 0.3996 |


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Fang, X.; Gao, T.; Zou, L.; Ling, Z. Bidirectional Attention for Text-Dependent Speaker Verification. Sensors 2020, 20, 6784. https://doi.org/10.3390/s20236784
