Abstract

Overloaded network devices are becoming an increasing problem especially in resource limited networks with the continuous and rapid increase of wireless devices and the huge volume of data generated. Admission and routing control policy at a network device can be used to balance the goals of maximizing throughput and ensuring sufficient resources for high priority flows. In this paper we formulate the admission and routing control problem of two types of flows where one has a higher priority than the other as a Markov decision problem. We characterize the optimal admission and routing policy, and show that it is a state-dependent threshold type policy. Furthermore, we conduct extensive numerical experiments to gain more insight into the behavior of the optimal policy under different systems’ parameters. While dynamic programming can be used to solve such problems, the large size of the state space makes it untractable and too resource intensive to run on wireless devices. Therefore, we propose a fast heuristic that exploits the structure of the optimal policy. We empirically show that the heuristic performs very well with an average reward deviation of 1.4% from the optimal while being orders of magnitude faster than the optimal policy. We further generalize the heuristic for the general case of a system with n () types of flows.

1. Introduction

Efficient resource utilization is a primary problem in resource constrained networks. In wireless sensor networks (WSNs) for instance the issue of energy efficiency is crucial to ensure network connectivity and quality of service. In WSNs, sensor nodes are generally deployed to transmit sensitive information in a timely manner. They rely on neighboring nodes to relay traffic to a given destination while operating on limited battery capacity. Energy is used when a node is listening, receiving, or transmitting. If a node’s battery is depleted, neighboring nodes become incapable of relaying and transmitting urgent traffic through the node. More importantly, if said node belongs to the optimal path, a less efficient path will need to be computed which reduces network throughput and consumes more of the scares resources. It is expected that some sensor networks will be deployed over large and inhospitable areas [1,2,3,4,5,6]. Since these networks may not be accessible following deployment, it is crucial that implemented admission and routing policies are resource efficient (i.e., consume minimal energy).

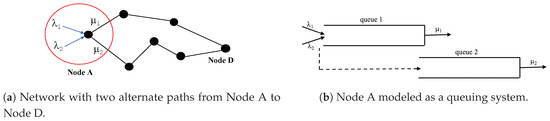

Consider a sensor node A with two available paths to the same destination as shown in Figure 1. We define a task as the transmission of a single flow from a relaying node to the final destination. We say that a task is successful if its corresponding flow is treated to the full extent - that is, all packets that belong to the same flow reach their final destination. Consider the scenario where sensor node A is tasked with relaying two flows. To maximize task success, it may be more efficient to treat one task to a full extent and reject or treat the second task partially than to partially treat both tasks. For instance, suppose a node is sending information about two events to a control center (CC) simultaneously: an attack on a battlefield and a fire in another nearby region. The CC would prefer to receive full information about one flow and act on it, rather than receive partial information about both flows that would be discarded. Hence, sometimes rejecting a flow may be perceived as more beneficial than accepting a flow and partially transmitting it due to lack of resources. This saves energy consumption while transmitting a flow to the full extent.

Figure 1.

The queueing system at node A where type- arrivals join queue 1. Instead of being aborted from the system, packets are routed to queue 2; . Served at queue 1 or at queue 2, packets will reach the same destination D over alternate paths.

In this paper, we model node A (Figure 1) as a queueing system where accepted packets belong to two different flows. We assume that one of the flows has higher priority than the other. Once a packet of a given flow is accepted to the system, it joins queue 1 and it is guaranteed service to the full extent independent of its type. Instead of being rejected or preempted from service, packets have the option to be served at a slower server behind a second queue (queue 2). Hence, the packet is transmitted over a less rewarding path. Transmitted type-i packets are rewarded if served at queue 1, and if served at queue 2. Using the less rewarding path not only minimizes the number of rejected packets from the system, but also maximizes the chance that both flows will be treated to the full extent. Most importantly, allowing packets to be served at queue 2, allows the extension of the life of the path behind queue 1 (the efficient path).

We are interested in finding ways by which node A can, through its local decision policy, accept or reject and decide which path to choose to transmit packets. The objective is to minimize energy consumption and maximize the number of flows served, hence maximizing network throughput.

Such decision control mechanism is fundamental to a variety of other interesting applications. For example, consider the case where a node experiences a flood of traffic as a sign of it being compromised. A node can be subject to a SYN flood where an attacker attempts to fill the backlog queue of a victim machine’s Transmission Control Protocol server (TCP) [7,8]. This results in resource depletion that renders the node unresponsive to legitimate traffic. By recognizing such flood of traffic, a node may either classify it as high priority traffic to identify the attacker and take the appropriate measures, or as low priority traffic and route it to another queue with a slower server.

This problem finds applications not only in computers and communication networks but in various other fields as well. Blockchain-based applications for instance, suffer from high computational and storage expenses, negatively impacting overall performance and scalability [9]. Therefore, work has been done to move computation and data off the chain (Off-chain). Off-chain transactions (i.e., high priority traffic) can be executed instantly and usually have low or no transaction fee. However, on-chain transactions (i.e., low priority traffic) can have a lengthy lag time depending on the network load and on the number of transactions waiting in the queue to be confirmed. Similarly, control of multi-class queueing systems has received significant attention in supply chain management and manufacturing systems ([10,11,12] and references therein). One of the main tools for such control problems is to characterize a performance measure of interest and use optimization methods to find the optimal control policy [13,14,15]. An agorithm for optimal pricing and admission control is proposed in [14,16].

In this paper, we develop a dynamic programming formulation of the Admission and Routing Control (ARC) problem, that maximizes the network throughput by extending the life (the resources) of the efficient path and thus the number of flows serviced to the full extent. We formulate the ARC problem as a Markov decision process (MDP) [17] and characterize the optimal policy under the Poisson traffic model. In particular, we show that the ARC policy that maximizes the expected reward is stationary and is a state-dependent threshold type policy. While dynamic programming can be used to solve such problems, the large size of the state space makes it untractable and too resource intensive to run on network devices and especially on wireless devices. Therefore, we propose a fast heuristic that exploits the structure of the optimal policy. Much of the computation required for our method can be done off-line, and the real-time computation requires no more than a table lookup. Furthermore, computing the parameters of the heuristic control policy is orders of magnitude faster than computing the optimal. We empirically show that the heuristic performs very well with an average reward deviation of 1.4% from the optimal while being orders of magnitude faster than the optimal policy. We further generalize the heuristic for the general case of a system with n (n > 2) types of flows. We believe our heuristic is general enough to be widely applicable and can be implemented in realtime. Although the method we propose applies to general network resource constraints, we consider energy limitations as our motivating application.

The rest of the paper is organized as follows. In Section 2, we provide a literature review. In Section 3, we describe the model and provide its mathematical formulation. In Section 4, we develop properties of the expected reward value function and characterize the optimal ARC policy. In Section 5, we analyze the behavior of the optimal policy through extensive numerical experiments. In Section 6, we propose a heuristic control policy, for the general case of n types of packets, and conduct extensive numerical experiments, for the case of 2 types of flows, in order to assess its performance compared to the optimal policy. In Section 7, we summarize our findings and propose future directions of this work.

2. Literature

In this section, we review the existing literature pertaining to resource constrained environments in WSNs. This is by no means exhaustive; it is only indicative of the interest and the applications.

Extensive work has been done to address the problem of energy saving with respect to WSNs. In [18,19,20,21], controlled mobility is used to extend the network lifetime. Algorithms for self-organization of sensor networks have been proposed [22,23] to minimize the risk of data loss during transmission and to maximize the battery life of individual sensors. Various coverage optimization protocols have been studied [24,25] where a number of sensor nodes are deployed to ensure adequate coverage of a region. Using a coverage optimization protocol, nodes with overlapping sensing areas are turned off to reduce energy consumption. We refer the readers to the following survey [26] of the various other energy efficient coverage techniques. Research in [19] focused on shortest path algorithms to optimize energy consumption. However, using the shortest path may lead to an increase in the ratio of lost packets [27]. In [28], research focused on scheduling sensor nodes to switch on and off, depending on the queue size, to reduce energy consumption. However, switching nodes from an idle to a busy state and vice versa has been a major portion of the power consumption [29].

Numerous works have also treated admission control policies relevant to WSNs. Several algorithms have been used by Internet routers to decide on packets admission and rejection to manage queues and minimize congestion in TCP [30]. In Tail Drop [31], when the queue reaches its maximum capacity, the newly arriving packets are rejected independent of their types. Though traffic may belong to different flows, it is not differentiated, and each packet is treated identically. When segments are lost, the TCP sender enters slow-start, which reduces throughput in that TCP session. A more severe problem occurs when segments from multiple TCP flows are dropped causing global synchronization - that is, all of the involved TCP senders enter slow-start. This problem can be mitigated by routing segments from one flow (the lower priority flow for instance) to a different queue, rather than discarding one segment from each flow. In Weighted Random Early Detection (WRED) [32], flows are differentiated and treated depending on their type. Packets with a higher IP address precedence are less likely to be dropped than packets with a lower precedence. Thus, higher priority packets are delivered with a higher probability than lower priority packets. As in TCP, there is no guarantee that discarded packets belong to the same flow.

Queuing theory has also been widely used in network optimization [33,34,35]. In [36], a queueing network model was used to analyze and study the performance of a mobile WSN. In [37,38], models implementing admission control mechanisms to manage scarce radio resources in WSNs are described in the form of a queueing system with unreliable devices. Work in [39] proposed an energy saving mechanism that controls the ON/OFF state of a sensor node. A sensor node enters an OFF state (multiple fixed duration vacation periods) as soon as its queue is empty and is turned on (changes to an ON state) only when its queue size reaches a threshold value of packets. A M/M/1-type queueing model with a control mechanism is proposed in [40] as a tool to reduce power consumption in WSNs. The tool switches a sensor node from an OFF mode to an ON mode only when its queue size reaches or exceeds a given size.

In order to achieve quality of service in a multi-class two parallel queue system, work in [41,42,43] dedicates a server to the high priority flow. In a resource constrained environment, such a model is not efficient. The dedicated server may be idle for a long time waiting, and consuming resources, for the high priority traffic to arrive while other servers may be congested. A different approach is considered in research that focuses on a single server and two classes of jobs. To minimize the sum of the holding/processing and switching costs, work in [44] switches serving between two classes of traffic. In [45] the authors consider a two-class single server preemptive priority queue where jobs can be denied admission to the system and can be aborted from service. Aborted jobs can rejoin the queue and resume service at a later time. Service does not have to restart but it continues from the step it was aborted from. In [46], an expulsion/scheduling control mechanism is proposed for a single class queueing system with non-identical servers. It was further extended in [47] to a multiple class system where job preemption is allowed.

The concept of termination control, studying a single server one-class workload model is introduced in [48]. In this work, the service of a job may be aborted before the job has received full service, and may be removed at any point in time from the queue. The authors further show that optimal threshold (acceptance and termination) policies exist.

A common characteristic of these methods is that they may find applications in areas such as workflow and assembly lines. However, they are not applicable to communication networks. One key element of communication networks is that once a packet transmission starts, it cannot be aborted and resume its service at a later time. A packet can be either fully transmitted or put back in the queue before its service starts. Moreover, in resource constrained networks, once a packet is queued or starts service, processing and computation resources are consumed. Preempting its service (transmission) and returning it to the queue for its service to restart at a later time, only consumes more of the scarce resources.

A recurrent assumption of existing work related to admission control is that low priority packets are denied admission to the queue when higher priority packets are already present. In the event they are accepted, low priority packets can be at any time rejected from the queue, or aborted from service in favor of a higher priority packet [45]. Another option in multiserver systems is to restrict sending the high priority flow over one of the available paths (generally the optimal one). These approaches may work well in certain applications such as delay tolerant networks and networks with unlimited resources. They are also applicable in general applications such as in workflow and in assembly lines. However, in resource constrained computer networks, once a packet is accepted to the system, resource (energy, computation, memory) consumption starts.

3. Model Description and Formulation

3.1. Model Description

We consider the system given in Figure 1. We model node A as a two-class queueing system and assume that type-i, , packets arrive at node A according to independent Poisson process with arrival rate , respectively. We assume that node A has two paths that lead to the destination D: an energy efficient path, through queue 1, and a less energy efficient path through queue 2. We assume that the service at queue 1 and at queue 2 are exponentially distributed with rates and respectively, where . We assume that type-1 packets have higher priority than type-2 packets hence, they are always accepted to queue 1 upon arrival. However, an admission control mechanism at node A decides to accept or reject type-2 packets. Arrivals (type-1 and accepted type-2 packets) join queue 1. At any event, arrival or service completion, the decision maker can decide to route a packet to queue 2 or to serve it at queue 1. This decision is made to give service advantage to the higher priority packets to be served at queue 1 and to be transmitted over the more energy efficient path. In summary, the system is controlled in 3-ways: (1) all type-1 packets are always accepted to the system, (2) an admission policy at node A decides to accept or reject a newly arriving type-2 packet, (3) a routing policy at node A decides to route type- packet to queue 2 or to serve it at queue 1. However, type-1 packets are always given service priority at queue 1.

We assume that the decision maker has complete state information, i.e., it knows the instantaneous number of packets of type- in queue 1 and the total number of packets in queue 2. Thus, the structure of the system is that of a Markovian decision process [49]. As such, we propose to formulate the admission and routing problem as a MDP and use the value iteration technique to characterize the form of the optimal policy. We formulate the problem by defining the states, the transition structure and the feasible actions.

State. The state of the system is described by the vector where is the number of type-i packets in queue 1 and is the number of packets (type-1 and/or type-2) in queue 2.

Events. We distinguish three possible events: (1) the arrival of a new packet, (2) the service completion at queue 1, and (3) the service completion at queue 2.

Decisions. If the event is an arrival, then if the packet is a type-1, it is automatically accepted to queue 1 and this changes the state into state . If alternatively, it is a type-2 packet, then a decision has to be made to accept or reject the newly arrived packet. If the packet is accepted, then this changes the state to . If it is rejected, the state does not change. Next, the decision is either to serve a packet in queue 1 or to route it to queue 2. The idea here is, if there are type-1 packets in queue 1, they are given higher service priority at queue 1. Hence type-2 packets will be served at queue 1 only if there are no type-1 packets in queue 1.

One may argue, since there are two queues why not dedicate queue 1 to type-1 packets and queue 2 to type-2 packets? The main reason for not using this approach is so that type-2 packets will not be deprived from using the more efficient path when there are no type-1 packets in queue 1. This also allows type-1 packets to be served at queue 2 in the event queue 1 is heavily congested or when the server at queue 2 is idle.

Costs and rewards. Packets earn a reward upon service completion. Packets served at queue 1 receive a type-dependent reward . Since type-1 packets have a higher priority than type-2 packets they receive a higher reward . Packets served at queue 2 receive a reward () independent of their type.

Packets admitted to the system are also subject to a holding and processing cost h, incurred while waiting in the queue or while being served. We assume these costs are linear in the number of packets in the system and are type independent namely, per unit of time when there are packets present in the queues. In addition, each time a packet is admitted to the system, type independent admission cost is incurred. Rejecting packets is free of charge. Moreover, routing type-2 packets to queue 2 is free of charge while routing type-1 has a positive switching cost . Imposing a positive switching cost on type-1 packets is intended to discourage these packets from being routed to queue 2, and use the less efficient path especially, when there are type-2 packets in queue 1.

For a reward to be collected and for the model to make sense, the cost incurred by a type-i packet served at queue i must be smaller than the reward it collects at the queue. When served at queue 1 a packet reward is . When served at queue 2 a packet reward is where the indicator if the packet is type-1 otherwise, it is equal to zero. As our application is related to energy optimization in sensor networks, we assume that all the costs and rewards are in units of energy. The cost h can be interpreted as the energy consumed to process and maintain a packet in the queue. The cost c is the energy consumed to receive a packet and is the energy consumed to switch or move a packet from queue 1 to queue 2. Rewards can be interpreted as the energy saved by successfully transmitting a packet compared to rejecting it.

Criterion. The objective is to maximize the expected discounted reward resulting from accepting, routing and servicing flows to completion over an infinite horizon.

Uniformization. In order to convert the continuous problem into a discrete one, we follow [50]’s uniformization technique. We adjust the transition rates of the embedded Markov chain of the system so that the transition times between decision times is a sequence of independent exponentially distributed random variables with mean , where . Then with probability , a transition concerns the arrival of a type-i packet, with probability concerns a service completion at queue j and with probability , the process terminates. Without loss of generality, we scale the time line so that the rate .

Discounting. We discount future rewards at a rate , (i.e., rewards at time t are multiplied by ). This is equivalent to a process that lasts an exponentially distributed time with mean after which, there will be no more arrivals or service completions.

Note that node A in Figure 1, can be modeled as a single-shared-queue system. In this case, a routing decision can be made only when a packet reaches the head of the queue, leading to the head-of-the-line blocking (HOL) problem [51]. As such, single shared queue devices are perceived to have low performance due to the HOL blocking [52,53]. This is the main reason why network devices generally use separate queues per output port. In this work, modeling node A as a two-queue system mitigates the HOL problem, especially that the routing decision is made not only right before service but also at the arrival of a packet.

3.2. Model Formulation

In the following, we summarize and complete the model in terms of a mathematical formulation. Let be the expected discounted reward of responding to an event given that the system has reached state following n state transitions starting from a randomly chosen initial state (i.e., for all , where is the zero vector of dimension 3 and the inequality is taken component-wise).

Admission: Let denote the expected discounted reward when an arrival of type-i packet event occurs and the system is in state . Let be the k-th unit vector of dimension 3. An arrival of type-2 is accepted to the system only if the difference in reward between accepting the packet and rejecting it is positive i.e., . Recall that type-1 packets are never rejected. Thus, for ,

Service: When the system is in state , a service decision of a packet at queue 1 is made as follows. If it is a type-1 packet, it proceeds with no delay to service at server 1. If it is type-2 packet, it is served at server 1 only if there are no type-1 packets in queue 1. In queue 2, packets are served on a first-come-first-serve independent of their type. We define the service operators at queue as follows:

and

Let denote the expected discounted reward when the current state is and an arrival or a service completion event occurs. Note that , given by Equation (1), represents the expected reward assuming no routing to queue 2 occurred.

Routing: A type-1 packet may be routed to queue 2 only if no type-2 packets are in queue 1 () and it is more rewarding to route the packet to queue 2 than to keep it in queue 1. However, type-2 packets can be routed to queue 2 when and when it is more rewarding to do so. Let denote the expected discounted reward when the current state is and a routing decision to queue 2 is to occur.

The optimal expected discounted reward at state is given by Equation (3). It is implied that at any event, arrival or service completion, the decision maker can decide to route a packet to queue 2 or serve it at queue 1.

4. Characterization of the Optimal Arc Policy

To characterize the optimal policy, we use the value iteration technique introduced in [54,55], by recursively evaluating using Equation (3) for . We prove by induction that if some structural properties of the discounted reward function are satisfied, then these properties are also satisfied for and therefore, they hold for all . As n tends to infinity, the optimal policy converges to the unique optimal policy. This convergence result is ensured by Theorem 8.10.1 in [17]. The convergence to the optimal policy is an important result in the MDP literature. It is based on showing that the iteration from to is a contraction mapping as stated in Theorem 6.2.3 in [17]. This Theorem also proves that the optimal infinite horizon policy is independent of the choice of and this is why one can simply choose .

4.1. Reward Function Properties

Solving the optimality Equation (3) analytically is untractable. Hence, in order to characterize the structure of the optimal policy, we show that the optimal reward function satisfies a set of properties which allow us to infer the structure of the optimal policy. The properties are listed and interpreted below.

Property 1.

.

Property 1 implies that is concave in each of the state variables . In other words, it implies that the marginal reward (i.e., ) of an additional packet of type- in queue 1 is non-increasing in the number of packets for a fixed and fixed number of packets, in queue 2. It also implies that the marginal reward of an additional packet in queue 2 is non-increasing in the number of packets for a fixed number of packets of type- in queue 1.

Property 2.

.

Property 2, for and , states that the marginal reward of an additional type-2 packet in queue 1 is non-increasing in . Therefore, routing a type-2 packet to queue 2 is less rewarding than servicing it at queue 1. Similarly, Property 2, when and , states that the marginal reward of an additional type-1 packet in queue 1 is non-increasing in . Consequently, routing a type-1 packet to queue 2 is less rewarding than servicing it at queue 1. The other cases have similar interpretations.

Property 3.

.

Property 3 states that the marginal reward of an additional type-i packet is non-increasing in and for fixed . Similarly, the marginal value of an additional packet in queue 1 is non-increasing in for fixed queue 2 size. Mathematically, Property 3 indicates that the reward value function is sub-modular.

4.2. Reward Function Bounds

Since all accepted packets are guaranteed to be served at either queue, packets will collect a reward upon service completion as long as and . However, since the reward depends on the packet type and on the queue where the packet resides, in this subsection, we bound the reward collected by packet type. We make use of sample path approach [56] to prove the following propositions.

Proposition 1.

For all and , the difference in reward of serving a type-2 packet at queue 1 does not exceed .

Proof.

Using a sample path analysis, let two instances and of the policy where starts at state and starts at state . will follow the actions of the optimal policy and will copy the actions of . An arrival to both instances changes the rewards equally (every arrival is charged a cost of c). In the event of a departure from state , due to service completion at queue 1, in this case, we must have , otherwise type-1 takes priority in service, immediately afterwards and become identical, so a reward of is generated. The departure can also be a route to queue 2. In this case, since there is no switching cost for type-2 packets, the reward does not change. □

Proposition 2.

For all and , the difference in reward of serving a packet at queue 2 does not exceed .

Proof.

Using a sample path analysis, let two instances and of the policy where starts at state and starts at state . will follow the actions of the optimal policy and will copy the actions of . A departure from both instances changes the rewards equally (every departure is rewarded independent of the packet type). Hence, upon a departure from queue 2 at state , and become identical so the difference in reward is at most . □

Proposition 3.

For all and , the difference in reward to serve a type-1 packet at queue 1 does not exceed .

Proof.

Using a sample path analysis, let two instances of the policy where one () starts at state and the other () starts at state . will follow the actions of the optimal policy and will copy the actions of . An arrival to both instances changes the rewards equally (every arrival is charged a cost of c). In the event of a departure from state , due to service completion at queue 1 (immediately afterwards and become identical), a reward of is generated (since type-1 takes priority over type-2, the departure will be of type-1 unless ). So the difference in reward is at most . The departure can also be a route to queue 2. Note that a routing in both instances changes the reward equally by the switching cost of . □

Proposition 4.

For all and , the difference in reward of serving a packet at queue 1 and at queue 2 is larger than

Proof.

Using a sample path analysis, we first consider the case where and prove . Let two instances and of the policy where starts at state and instance starts at state . Instance will follow the optimal policy and instance will copy the actions of . That is, if routes its packet, then routes its packets, and if takes its packet into service, then takes its packet into service. For both Instances, while packets are still in the system, their costs and rewards are the same. Hence, the difference in reward between the two instances is zero. However, if a packet is served, a reward of in is collected and a reward of in is collected. Hence, the difference in reward between the two instances is .

The proof of the case where , is very similar to the above proof. It suffices to replace by and by . □

Note that in this work, the admission cost is not as relevant as the reward as it is packet type-independent. However, the problem can be easily generalized to assigning type-dependent costs ). On the other hand, the switching cost is important for type-1 packets as they are charged only in the event they are routed to queue 2.

We conclude this section with the main results of the paper as illustrated in the following Theorem.

Theorem 1.

There exists a stationary optimal policy for any initial state such that:

- Admission policy: The optimal admission control policy is a state-dependent threshold-type, with threshold curve , such that a type-2 packet is admitted to queue 1 if and only if where .

- Routing policy: The optimal routing control policy is a state-dependent threshold-type, with threshold curves and , such that:

- Type-1 packet is routed to queue 2 if and only if and , where .

- Type-2 packet is routed to queue 2 if and only if , where .

The results of Theorem 1 also apply to the average reward criterion (see [57]). Hence, we will use the average reward criterion for all our numerical experiments as it has the advantage of not depending on the initial state. To prove the theorem, we will prove Properties 1–3. However, for ease of flow, we defer all mathematical proofs to the Appendix A.

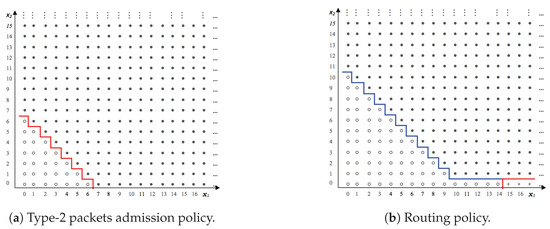

5. Sensitivity Analysis of the Optimal Policy

In this section, we study the optimal control policy depicted in Theorem 1 and its sensitivity to the system parameters. We conduct extensive numerical experiments varying system parameters. As an illustration we consider a base case, where . The optimal policy is computed using the value iteration algorithm of dynamic programming [58]. Convergence is obtained when the expected reward of successive iterations is within an accuracy of . The optimal admission control policy for this system is presented in Figure 2a. The optimal action is to reject type-2 packets in all states above the switching curve (above the red line) and to accept them in all states below the curve. Similarly, the optimal routing policy for the system is presented in Figure 2b. The optimal action is to route type-2 packets to queue 2 only in states above the switching curve (above the blue and red lines). In states below the switch-curve (below the blue line), no routing is allowed. Below the red line are the only states where type-1 packets are routed to queue 2 that is, when We experimented with several system parameters and we obtained the same results in terms of the shape of the switching curves of the admission control policy and routing control policy.

Figure 2.

Optimal control policy.

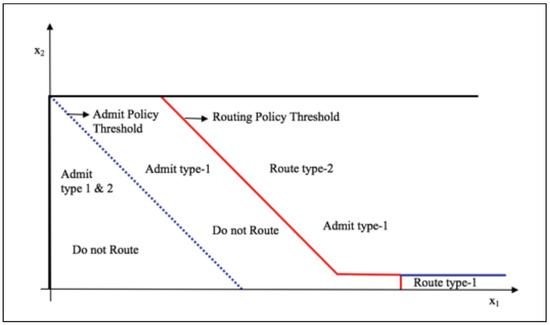

In Figure 3, we superpose both control policy curves where we note that the system gives priority to type-1 packets to be served at queue 1 by routing excess type-2 packets to queue 2. All numerical results gave a straight-line switching curve with slope of in the plane for a given packets in queue 2. However, proving this result analytically is untractable as it amounts to solving the optimality Equation (3) in closed form.

Figure 3.

Admission and routing policy transient states for a given size of queue 2.

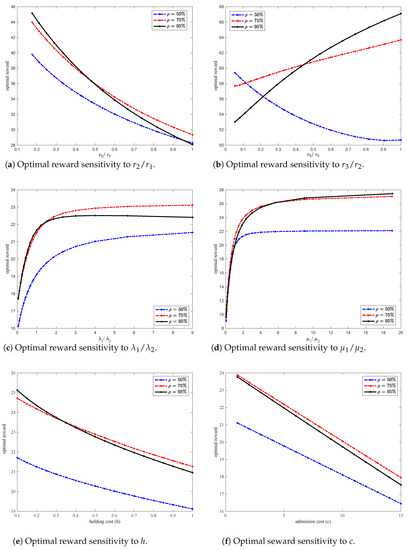

We further study the effect of the system parameters on the optimal average reward for various network load values, . We isolate the effect of a particular system parameter by varying its value while holding the values of other system parameters constant.

We study the effect of increasing reward while maintaining the sum of the rewards of and constant. Figure 4a shows that the optimal average reward decreases nonlinearly as the ratio increases. This behavior can be explained as follows. As increases, the incentive for packets to be routed to queue 2 decreases. Hence, queue 1 becomes overloaded and the overall holding cost eventually becomes high affecting the optimal average reward. Note however, that under heavy network load (), at a certain point, routing to queue 2 becomes inevitable causing lower reward compared to a system with lower network load (). This also explains the crossover of the curves corresponding to the optimal average reward curve for and the one for in the figure.

Figure 4.

Optimal reward sensitivity to rewards , service rates , arrival rates , holding cost h and admission cost c.

We further study the effect of increasing reward on the optimal average reward while maintaining the sum of the rewards of and constant. Figure 4b shows that the optimal average reward increases non-linearly as the ratio increases when the network load is high ( and ). This increase is due to the fact that as increases, packets in queue 1 have more incentive to be routed to queue 2 especially if queue 1 has type-1 packets. As type-2 packets are routed to queue 2, more space opens up in queue 1 for type-1 packets, and more type-2 packets are admitted, hence, the optimal average reward increases. Moreover, as the network load increases, routing admitted packets to queue 2 becomes sometimes necessary. Indeed, for higher network load (), the optimal average reward is increasing at a faster rate compared to the optimal average reward for a network with lower load of . For lower network load () however, as increases the optimal average reward decreases nonlinearly. This can be explained as follows: as increases and if type-1 packets are in queue 1, then type-2 packets have more incentive to be routed to queue 2, and collect a lower reward hence, the optimal average reward decreases. The graphs generated in Figure 4a,b show results when .

Figure 4c shows the sensitivity of the optimal average reward to the arrival rates while maintaining the sum of and constant. The figure shows that the optimal average reward initially increases at a high rate as increases then the rate of increase slows down. The increase continues until queue 1 becomes congested to cause type-1 packets to be routed to queue 2, hence, collect a lower reward , and decrease the optimal average reward. For high network load () however, the optimal average reward eventually starts decreasing as the high load makes it necessary to increase routing packets to queue 2. Eventually, both queues saturate, leading to an increase in the holding cost, and a decrease in the optimal average reward. The graph generated in Figure 4c shows results for the following system parameters: and .

In Figure 4d, we study the effect of the service rates and on the optimal average reward while maintaining the sum of and constant. As the ratio increases, the optimal average reward increases and eventually levels-off for all network loads considered (. This can be explained as follows. As queue 1 service rate increases, queue 1’s length becomes shorter discouraging packets from being routed to queue 2. Hence, the optimal average reward increases. As continues to increase relative to , less and less routing occurs eliminating the need for queue 2 which explains the leveling-off of the optimal average reward (since the arrival rates are held constant). In practice, however, achieving a high service rate to eliminate queue 2 is rather costly. The graph generated in Figure 4d shows results for the following system parameters: and .

Figure 4e shows that the optimal average reward is nonlinearly decreasing in the holding cost h. Figure 4f shows that the optimal average reward is linearly decreasing in the admission cost c. These results are interesting and are worth exploiting in a future work in an attempt to get an analytical expression of the reward. The graphs generated in Figure 4e,f show results for the following system parameters: and various values of h and c respectively.

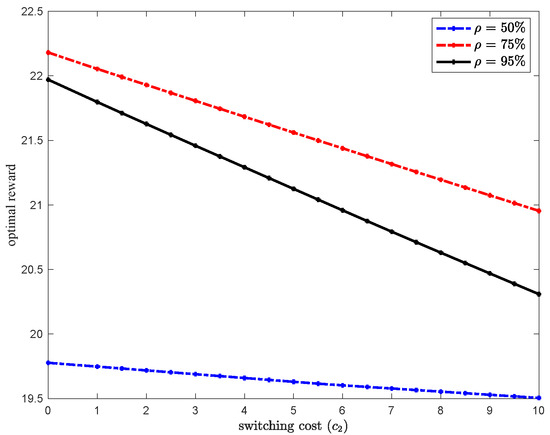

Finally, we would like to note that even though our analysis focused on the case where the switching cost is zero (), similar results are obtained for . As an illustration, Figure 5, shows that the optimal reward linearly decreases in . This result is expected as when the switching cost increases, type-1 packets have no incentive to be routed to queue 2. Moreover, as the network load increases, the optimal reward increases up to a point where both queues become congested. This explains why the optimal reward when is higher than the optimal reward when as increases. This also lead to the conclusion that there is an optimal load where the reward in maximized. The results in Figure 5 are obtained for system parameters: and .

Figure 5.

Optimal reward sensitivity to .

6. Heuristic Control Policy

It is well established that dynamic programming suffers from the curse of dimensionality. For our model in particular, the optimal policy is computationally untractable for systems with more than 2 types of packets (i.e., a state space with dimension greater than 3). Hence, it is too resource intensive to run on resource limited sensor devices. This motivated us to propose an efficient heuristic control policy that imitates the behavior of the optimal policy and is computationally much faster to obtain for the general case of n types of packets (see Algorithm 1). As such, we define the state of the system by the dimensional vector where type-i packets take priority over type-j packets for . The number of packets in queue 1 is represented by where is the number of type-i packets while depicts the number of packets in queue 2. The heuristic control policy is characterized by parameters: parameters control the admission to queue 1, while parameters control the routing to queue 2. Note that type-1 packets are always admitted to queue 1 (i.e, ). Furthermore, we have and . We extend the costs and reward parameters as follows: c is the admission cost; h is the holding cost; is the switching cost for type-i packets () and is the reward of type-i packet served at queue 1 () and is the reward of packets served at queue 2.

At arrival of type-i packet, we use the following control policy where we define the largest packet type such that :

| Algorithm 1. Proposed heuristic control policy. |

|

In order to test the performance of the above proposed heuristic, we compare the reward generated by the heuristic to that of the optimal policy. We use the average reward criterion for this purpose. The average reward under the optimal policy is obtained using the following optimality equation:

where is the optimal average reward per transition (see [58]) and is the optimal differential reward, and as defined in Section 3.2.

The average reward under the heuristic control policy (H) is defined using the following dynamic programming equation:

where is the average reward per transition under the heuristic control policy, is the differential reward under the heuristic policy and and are defined as follows:

In the following, we compare the performance of the proposed heuristic control policy to the optimal control policy for the case of two types of packets. We examine the impact of a certain system variable by varying its value while maintaining all other variables constant. Similar to [59], we use as performance metric the reward Relative Deviation () of the heuristic from the optimal. The , expressed in percentage, is defined as where denotes the average reward rate of the optimal control policy obtained by solving Equation (4), and denotes the average reward rate associated with the heuristic control policy obtained by solving Equation (5). Here, similar to the optimal policy, the expected reward is obtained using the value iteration algorithm with the same accuracy of .

Table 1 shows a sample of 100 randomly generated system parameter values used to compute the performance of the heuristic control policy. Based on these results, it is clear that the heuristic performs very well compared to the optimal policy. For a confidence interval, the average is with a range of .

Table 1.

Heuristic performance compared to the optimal policy. RD is used as a measure of the Heuristic performance compared to the optimal policy.

For a system with more than two types of packets, computing the optimal policy becomes untractable due to the exponential explosion of the number of states. However, computing the thresholds of this heuristic is orders of magnitude faster than computing the optimal policy. In fact, much of the computation required (i.e., computation of the system parameters) for the heuristic can be done off-line, and the real-time computation requires no more than a table lookup. The computation of the system parameters of the heuristic is approximately, and at worst equal to the number of parameters times the size of the square of the cardinality of the state space. Numerical results show that the heuristic performs very well compared to the optimal policy. For a 95% confidence interval, the average computation time is with a range of over a sample of 100 cases.

Given that the computation time of the optimal policy scales exponentially in the state space, computing the optimal policy beyond two priority classes is untractable. For instance, consider a system with three types of packets. Even if we succeed to compute the optimal policy and the associated parameters, it will require a huge state dependent look-up table (five hyper-surfaces representing the state dependent thresholds). For the heuristic however, we will need to store only five static control parameters (i.e., two admission and three routing threshold parameters).

Finally, in practice, traffic flow (i.e., arrival rate ) changes over time. Our model however, assumes a constant traffic flow ( for flow type-i). This is by no means a limitation of our model. In fact, this issue can be approached in one of two ways: either using a transient analysis which is well documented as being untractable especially in the context of an MDP framework; or computing different policy parameters off-line for each traffic flow. These parameters would be used for the particular traffic flow in effect during deployment.

7. Conclusions

In this paper, we considered an admission and routing control problem to address the issue of resource limitation in resource constrained networks (such as WSNs). We formulated the admission and routing control problem of two types of flows where one has a higher priority than the other, as a Markov decision problem. We characterized the optimal policy and showed that it is a state-dependent threshold type policy. Furthermore, we conducted extensive numerical experiments to gain more insight into the behavior of the optimal policy under different system parameters. Due to the computational challenges of the optimal policy (curse of dimensionality) which makes it untractable and too resource intensive to run on wireless devices, we proposed a heuristic that mimics the optimal control policy. Through extensive numerical results, we showed that the heuristic performs very well. It is also orders of magnitude faster than the optimal policy. Much of the required computation can be done off-line, and the real-time computation requires no more than a table lookup. We further generalized the heuristic for the case of a system with n types of flows ().

The results presented in this work provide a first step towards a better understanding of the structure of the optimal policy. There are several avenues for future research. In particular, it would be of interest to generalize the system to multi-server queues with more than two paths leading to the same destination. We expect the problem to become considerably more difficult with each additional feature and it is not clear if the optimal policy would be tractable. A clear extension of this work is to implement and test the proposed admission and routing control policy in real resource constrained network devices.

Funding

This research received no external funding.

Conflicts of Interest

The author declare no conflict of interest.

Appendix A

In order to prove Theorem 1 we first state the following Lemma.

Lemma A1.

Let be the set of functions, v, defined on such that v satisfies Properties 1–3. Then , where is the set of non-negative integers.

Proof.

First, note that by adding Properties 2 and 3 we obtain Property 1. Therefore, it suffices to prove Properties 2 and 3. To prove these properties, we use induction on the remaining number of periods. The following is a sketch of the proof.

- Step 1: we observe that Properties 2 and 3 hold for .

- Step 2: we assume 2 and 3 hold for some .

- Step 3: we prove that 2 and 3 hold for .

We introduce the following notation:

Hence, we can write

We show that and satisfy Properties 2 and 3 and thus satisfy Property 1.

Note that operators and satisfy Properties 2 and 3 by induction as they do not involve any decision. Hence, we only need to show that satisfies Properties 2 and 3. Using the difference operator, we have:

To show that satisfies Property 2, let

Using submodularity property, we infer that: and

We prove the property for each case.

- case 1: assume

- case 2: assume and

- case 3: assume and .

- case 4: assume and

- case 5: assume and

- case 6: assume and

Since the set is closed under addition and multiplication by a scalar, it follows that satisfies Property 2.

To show that satisfies Property 3, let

Using submodularity, we infer that . In the following we prove the property for each case.

- case 1: assume

- case 2: assume

- case 3: assume

- case 4: assume

- case 5: assume

Since the set is closed under addition and multiplication by a scalar, it follows that satisfies Property 3.

To show that satisfies Property 2, we need to prove that

For simplicity we prove the property for and . The rest of the properties follow the same procedure.

- Note that, by Property 2 the following inequalities hold.We prove the property for each case.

- (a)

- case 1: assume

- (b)

- case 2: assume

- (c)

- case 3: assume

- (d)

- case 4: assume

- (e)

- case 5: assume

In order to prove that satisfies Property 3, we need to prove that

For simplicity we prove the property for and . The rest of the properties follow the same procedure.

- Note that, by Property 2 the following inequalities hold. . We prove the property for each case.

- (a)

- case 1: assume

- (b)

- case 2: assume

- (c)

- case 3: assume

- (d)

- case 4: assume

- (e)

- case 5: assume

- ForNote that the expression of given in Equation (A4) is a special case of the one of given in Equation (A3) where and is substituted by , therefore satisfies Property 3. Finally, it is straightforward (using the induction argument) to show that satisfies Properties 2 and 3, hence satisfies Property 1. This completes the proof of Lemma A1.

□

Proof

(Proof of Theorem 1).

For a given n we obtain an optimal policy . It can be shown that there exists an optimal stationary policy such that . This is true because converges to an optimal time-independent discounted reward, w, for any (see [58]).

The admission policy structure is due to Property 1. Indeed the latter indicates that the difference is non increasing in . Thus has a unique maximum at as defined in the theorem.

The routing policy structure for type-2 packets is due to Property 2. The latter indicates that the difference is non decreasing in . Therefore, there exists a unique threshold . Similarly, for type-1 packets, Property 3 implies that there exists a unique threshold (since in this case). and are defined in the theorem.

This completes the proof of the theorem. □

References

- Puccinelli, D.; Haenggi, M. Wireless sensor networks: Applications and challenges of ubiquitous sensing. IEEE Circuits Syst. Mag. 2005, 5, 19–31. [Google Scholar] [CrossRef]

- Rabby, M.K.M.; Alam, M.S. A priority based energy harvesting scheme for charging embedded sensor nodes in wireless body area networks. PLoS ONE 2019, 14, e0214716. [Google Scholar] [CrossRef] [PubMed]

- Anguita, D.; Brizzolara, D.; Parodi, G. Building an Underwater Wireless Sensor Network Based on Optical: Communication: Research Challenges and Current Results. In Proceedings of the 2009 Third International Conference on Sensor Technologies and Applications, Glyfada, Greece, 18–23 June 2009; pp. 476–479. [Google Scholar]

- Hassan, J. Future of Applications In Mobile wireless Sensor Networks. In Proceedings of the 2018 1st International Conference on Computer Applications Information Security (ICCAIS), Riyadh, Kingdom of Saudi Arabia, 4–6 April 2018; pp. 1–6. [Google Scholar]

- Nishikawa, Y.; Sasamura, T.; Ishizuka, Y.; Sugimoto, S.; Iwasaki, S.; Wang, H.; Fujishima, T.; Fujimoto, T.; Yamashita, K.; Suzuki, T.; et al. Design of stable wireless sensor network for slope monitoring. In Proceedings of the 2018 IEEE Topical Conference on Wireless Sensors and Sensor Networks (WiSNet), Anaheim, CA, USA, 14–17 January 2018; pp. 8–11. [Google Scholar]

- Sun, Z.; Akyildiz, I.F. Connectivity in Wireless Underground Sensor Networks. In Proceedings of the 2010 7th Annual IEEE Communications Society Conference on Sensor, Mesh and Ad Hoc Communications and Networks (SECON), Boston, MA, USA, 21–25 June 2010; pp. 1–9. [Google Scholar]

- Bogdanoski, M.; Shuminoski, T.; Risteski, A. Analysis of the SYN flood DoS attack. Int. J. Comput. Netw. Inf. Secur. 2013, 5, 1–11. [Google Scholar] [CrossRef]

- Oncioiu, R.; Simion, E. Approach to Prevent SYN Flood DoS Attacks in Cloud. In Proceedings of the 2018 International Conference on Communications (COMM), Bucharest, Romania, 14–16 June 2018; pp. 447–452. [Google Scholar]

- Eberhardt, J.; Tai, S. On or Off the Blockchain? Insights on Off-Chaining Computation and Data. In Proceedings of the European Conference on Service-Oriented and Cloud Computing, Oslo, Norway, 27–29 September 2017; pp. 3–15. [Google Scholar] [CrossRef]

- Gupta, M.K.; Hemachandra, N.; Venkateswaran, J. Some parametrized dynamic priority policies for 2-class M/G/1 queues: Completeness and applications. arXiv 2018, arXiv:1804.03564. [Google Scholar]

- Dimitris, B.; Niño-Mora, J. Optimization of Multiclass Queueing Networks with Changeover Times via the Achievable Region Approach: Part II, the Multi-Station Case. Math. Oper. Res. 1999, 24, 331–361. [Google Scholar]

- Hassin, R.; Puerto, J.; Fernandez, F.R. The use of relative priorities in optimizing the performance of a queueing system. Eur. J. Oper. Res. 2009, 193, 476–483. [Google Scholar] [CrossRef][Green Version]

- Gupta, M.K.; Hemachandra, N. On 2-moment completeness of non pre-emptive, non anticipative work conserving scheduling policies in some single class queues. In Proceedings of the 2015 13th International Symposium on Modeling and Optimization in Mobile, Ad Hoc, and Wireless Networks (WiOpt), Mumbai, India, 25–29 May 2015; pp. 267–274. [Google Scholar]

- Rawal, A.; Kavitha, V.; Gupta, M.K. Optimal surplus capacity utilization in polling systems via fluid models. In Proceedings of the 2014 12th International Symposium on Modeling and Optimization in Mobile, Ad Hoc, and Wireless Networks (WiOpt), Hammamet, Tunisia, 12–16 May 2014; pp. 381–388. [Google Scholar]

- Li, C.; Neely, M.J. Delay and rate-optimal control in a multi-class priority queue with adjustable service rates. In Proceedings of the 2012 Proceedings IEEE INFOCOM, Orlando, FL, USA, 25–30 March 2012; pp. 2976–2980. [Google Scholar]

- Sinha, S.K.; Rangaraj, N.; Hemachandra, N. Pricing surplus server capacity for mean waiting time sensitive customers. Eur. J. Oper. Res. 2010, 205, 159–171. [Google Scholar] [CrossRef]

- Puterman, M.L. Markov Decision Processes; John Wiley and Sons Inc.: Hoboken, NJ, USA, 2005. [Google Scholar]

- Gouvy, N.; Hamouda, E.; Mitton, N.; Zorbas, D. Energy efficient multi-flow routing in mobile Sensor Networks. In Proceedings of the 2013 IEEE Wireless Communications and Networking Conference (WCNC), Shanghai, China, 7–10 April 2013; pp. 1968–1973. [Google Scholar]

- Zhang, D.; Li, G.; Zheng, K.; Ming, X.; Pan, Z. An Energy-Balanced Routing Method Based on Forward-Aware Factor for Wireless Sensor Networks. IEEE Trans. Ind. Inform. 2014, 10, 766–773. [Google Scholar] [CrossRef]

- Zhang, D.G.; Liu, S.; Zhang, T.; Liang, Z. Novel unequal clustering routing protocol considering energy balancing based on network partition and distance for mobile education. J. Netw. Comput. Appl. 2017, 88, 1–9. [Google Scholar] [CrossRef]

- Zhang, D.; Zheng, K.; Zhang, T.; Wang, X. A novel multicast routing method with minimum transmission for WSN of cloud computing service. Soft Comput. 2014, 19, 1817–1827. [Google Scholar] [CrossRef]

- Dressler, F. Self-Organization in Sensor and Actor Networks; John Wiley and Sons Ltd.: Chichester, UK, 2007. [Google Scholar]

- Pathan, A. Security of Self-Organizing Networks: MANET, WSN, WMN, VANET; CRC Press: Boca Raton, FL, USA, 2011. [Google Scholar]

- Gu, X.; Yu, J.; Yu, D.; Wang, G.; Lv, Y. ECDC: An energy and coverage-aware distributed clustering protocol for wireless sensor networks. Comput. Electr. Eng. 2014, 40, 384–398. [Google Scholar] [CrossRef]

- Le, N.T.; Jang, M. Energy-efficient coverage guarantees scheduling and routing strategy for wireless sensor networks. Int. J. Distrib. Sens. Netw. 2015, 11, 612383. [Google Scholar] [CrossRef]

- More, A.; Raisinghani, V. A survey on energy efficient coverage protocols in wireless sensor networks. J. King Saud Univ. Comput. Inf. Sci. 2017, 29, 428–448. [Google Scholar] [CrossRef]

- Alghamdi, T. Energy efficient protocol in wireless sensor network: Optimized cluster head selection model. Telecommun. Syst. 2020, 74, 331–345. [Google Scholar] [CrossRef]

- Wang, Z.; Chen, Y.; Liu, B. A sensor node scheduling algorithm for heterogeneous wireless sensor networks. Int. J. Distrib. Sens. Netw. 2019, 15. [Google Scholar] [CrossRef]

- Shih, E.; Cho, S.; Lee, F.S.; Calhoun, B.H.; Chandrakasan, A. Design Considerations for Energy-Efficient Radios in Wireless Microsensor Networks. J. VLSI Signal Process. Syst. Signal Image Video Technol. 2004, 37, 77–94. [Google Scholar] [CrossRef]

- Santhi, V.; Natarajan, A.M. Active Queue Management Algorithm for TCP Networks Congestion Control. Eur. J. Sci. Res. 2011, 54, 245–257. [Google Scholar]

- Comer, D.E. Internetworking with TCP/IP, 5th ed.; Prentice Hall: Upper Saddle River, NJ, USA, 2006. [Google Scholar]

- Floyd, S.; Jacobson, V. Random Early Detection (RED) gateways for Congestion Avoidance. IEEE/ACM Trans. Netw. 1993, 1, 397–413. [Google Scholar] [CrossRef]

- Robertazzi, T.G. Computer Networks and Systems: Queueing Theory and Performance Evaluation-Queueing Theorty and Performance Evaluation, 3rd ed.; Springer: Berlin/Heidelberg, Germany, 2012. [Google Scholar]

- Kleinrock, L. Queueing Systems, Volume 2: Computer Applications; Wiley: Hoboken, NJ, USA, 1976. [Google Scholar]

- Kleinrock, L. Queuing Systems, Volume 1: Theory; Wiley: Hoboken, NJ, USA, 1975. [Google Scholar]

- Lenin, R.B.; Ramaswamy, S. Performance analysis of wireless sensor networks using queuing networks. Ann. Oper. Res. 2015, 233, 237–261. [Google Scholar] [CrossRef]

- Adou, Y.; Markova, E.; Gudkova, I. Performance Measures Analysis of Admission Control Scheme Model for Wireless Network, Described by a Queuing System Operating in Random Environment. In Proceedings of the 2018 10th International Congress on Ultra Modern Telecommunications and Control Systems and Workshops (ICUMT), Moscow, Russia, 5–9 November 2018; pp. 1–5. [Google Scholar]

- Borodakiy, V.Y.; Samouylov, K.E.; Gudkova, I.A.; Ostrikova, D.Y.; Ponomarenko-Timofeev, A.A.; Turlikov, A.M.; Andreev, S.D. Modeling unreliable LSA operation in 3GPP LTE cellular networks. In Proceedings of the 2014 6th International Congress on Ultra Modern Telecommunications and Control Systems and Workshops (ICUMT), St. Petersburg, Russia, 6–8 October 2014; pp. 390–396. [Google Scholar]

- Kempa, W.M. Analytical Model of a Wireless Sensor Network (WSN) Node Operation with a Modified Threshold-Type Energy Saving Mechanism. Sensors 2019, 19, 3114. [Google Scholar] [CrossRef]

- Ghosh, S.; Unnikrishnan, S. Reduced power consumption in wireless sensor networks using queue based approach. In Proceedings of the 2017 International Conference on Advances in Computing, Communication and Control (ICAC3), Mumbai, India, 1–2 December 2017. [Google Scholar]

- Moy, J. Open Shortest Path First (OSPF). Available online: https://tools.ietf.org/html/rfc2328 (accessed on 11 November 2020).

- Hedrick, C. Routing Information Protocol (RIP); Rutgers University: Camden, NJ, USA, 1988. [Google Scholar]

- Zhang, X.; Zhou, Z.; Cheng, D. Efficient path routing strategy for flows with multiple priorities on scale-free networks. PLoS ONE 2017, 12, e0172035. [Google Scholar] [CrossRef] [PubMed]

- Groenevelt, R.; Koole, G.; Nain, P. On the bias vector of a two-class preemptive priority queue. Math. Methods Oper. Res. 2002, 55, 107–120. [Google Scholar] [CrossRef]

- Brouns, G.A.; van der Wal, J. Optimal threshold policies in a two-class preemptive priority queue with admission and termination control. Queueing Syst. 2006, 54, 21–33. [Google Scholar] [CrossRef][Green Version]

- Xu, S. A duality approach to admission and scheduling controls of queues. Queueing Syst. Theory Appl. 1994, 16, 272–300. [Google Scholar] [CrossRef]

- Righter, R. Expulsion and scheduling control for multiclass queues with heterogeneous servers. Queueing Syst. Theory Appl. 2000, 34, 289–300. [Google Scholar] [CrossRef]

- Brouns, G.; van der Wal, J. Optimal threshold policies in a workload model with a variable number of service phases per job. Math. Methods Oper. Res. 2003, 58, 483–501. [Google Scholar] [CrossRef][Green Version]

- Puterman, M. Markov Decision Processes: Discrete Stochastic Dynamic Programming; John Wiley and Sons Inc.: Hoboken, NJ, USA, 1994. [Google Scholar]

- Lippman, A.L. Applying a new device in the optimization of exponential queueing systems. Oper. Res. 1975, 23, 687–710. [Google Scholar] [CrossRef]

- Khorov, E.; Kiryanov, A.; Loginov, V.; Lyakhov, A. Head-of-line blocking avoidance in multimedia streaming over wireless networks. In Proceedings of the 2014 IEEE 25th Annual International Symposium on Personal, Indoor, and Mobile Radio Communication (PIMRC), Washington DC, USA, 2–5 September 2014; pp. 1142–1146. [Google Scholar] [CrossRef]

- Elhafsi, E.H.; Molle, M.; Manjunath, D. On the application of forking nodes to product-form queueing networks. Int. J. Commun. Syst. 2008, 21, 135–165. [Google Scholar] [CrossRef]

- Kumar, A.; Manjunath, D.K.J. Communication Networking: An Analytical Approach; Morgan Kaufman: San Francisco, CA, USA, 2004. [Google Scholar]

- Bellman, R. Dynamic Programming; Princeton University Press: Princeton, NJ, USA, 1957. [Google Scholar]

- Howard, R. Dynamic Programming and Markov Processes; Massachusetts Institute of Technology Press: Cambridge, UK, 1960. [Google Scholar]

- Liu, Z.; Nain, P.; Towsley, D. Sample path methods in the control of queues. Queueing Syst. 1995, 21, 293–335. [Google Scholar] [CrossRef]

- Weber, R.R.; Stidham, S. Optimal control of service rates in networks of queues. Adv. Appl. Probab. 1987, 19, 202–218. [Google Scholar] [CrossRef]

- Bertsekas, D.P. Dynamic Programming and Optimal Control, 2nd ed.; Athena scientific: Belmont, MA, USA, 2001; Volume 2. [Google Scholar]

- ElHafsi, M.; Fang, J.; Hamouda, E. Optimal production and inventory control of multi-class mixed backorder and lost sales demand class models. Eur. J. Oper. Res. 2020. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).