EEG Fingerprints under Naturalistic Viewing Using a Portable Device

Abstract

1. Introduction

2. Materials and Methods

2.1. Datasets

2.2. Feature Extraction
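The 1/f offset and exponent analysed in the Results describe the aperiodic (power-law) part of the EEG power spectrum. The reference list includes Haller et al.'s spectral parameterization method. In its fixed (no-knee) mode, that method models the aperiodic component as log10 P(f) = b − χ·log10 f, where b is the offset and χ is the exponent. The sketch below is not the authors' pipeline. It estimates b and χ with an ordinary least-squares line in log-log space. Unlike the parameterization method, it does not model or remove oscillatory peaks. The function name `fit_aperiodic` and the 1–40 Hz range used in the example are hypothetical.

```python
import math


def fit_aperiodic(freqs, powers):
    """Fit log10(P) = offset - exponent * log10(f) by ordinary least squares.

    Simplified stand-in for a fixed-mode (no knee) aperiodic fit: periodic
    peaks are NOT removed before fitting. Frequencies and powers must be > 0.
    Returns (offset, exponent).
    """
    xs = [math.log10(f) for f in freqs]
    ys = [math.log10(p) for p in powers]
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    # Least-squares slope and intercept of the log-log line
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    # Offset is the intercept; exponent is the negated slope
    return intercept, -slope


if __name__ == "__main__":
    # Illustrative noiseless spectrum P(f) = 10**1.5 * f**-2 over 1-40 Hz
    freqs = list(range(1, 41))
    powers = [10 ** 1.5 * f ** -2 for f in freqs]
    print(fit_aperiodic(freqs, powers))
```

On this noiseless synthetic spectrum the fit returns an offset of about 1.5 and an exponent of about 2.0. On real EEG spectra, alpha and beta peaks would bias a plain line fit, which is why peak-aware parameterization is normally preferred.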

2.3. Performance Evaluation and Statistical Analysis

3. Results

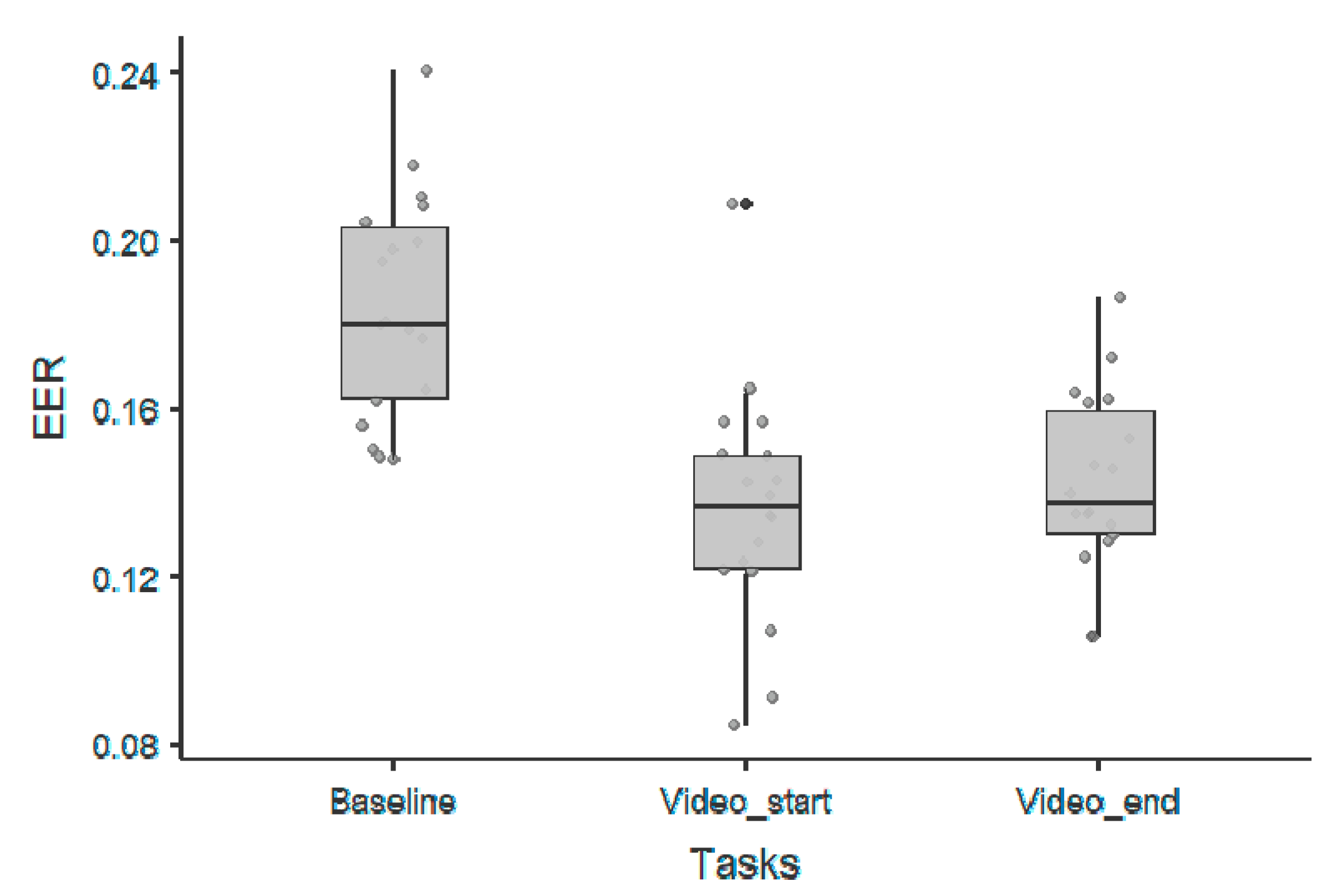

3.1. 1/f Offset Parameter

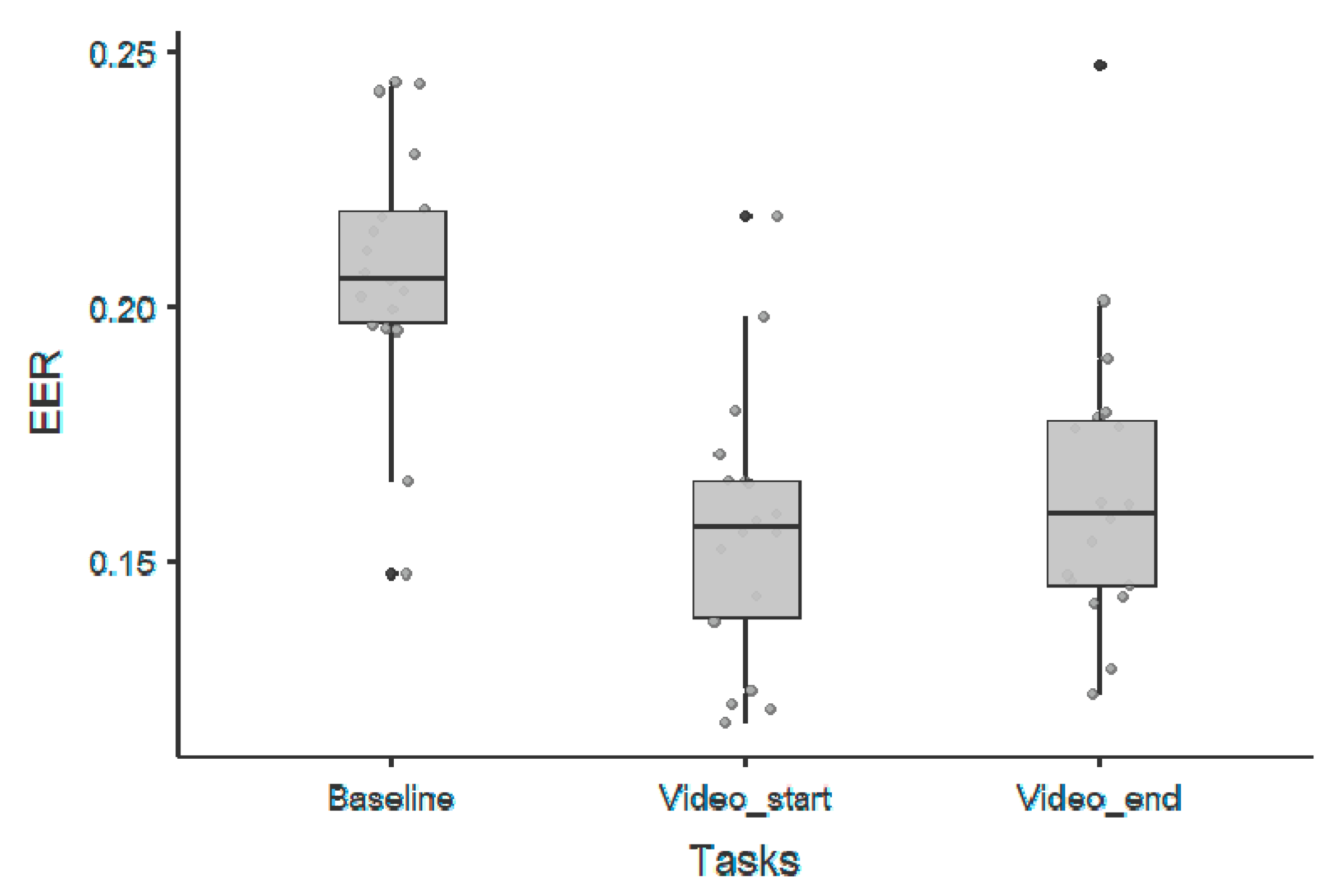

3.2. 1/f Exponent Parameter

3.3. Correlation with Participants’ Self-Assessment

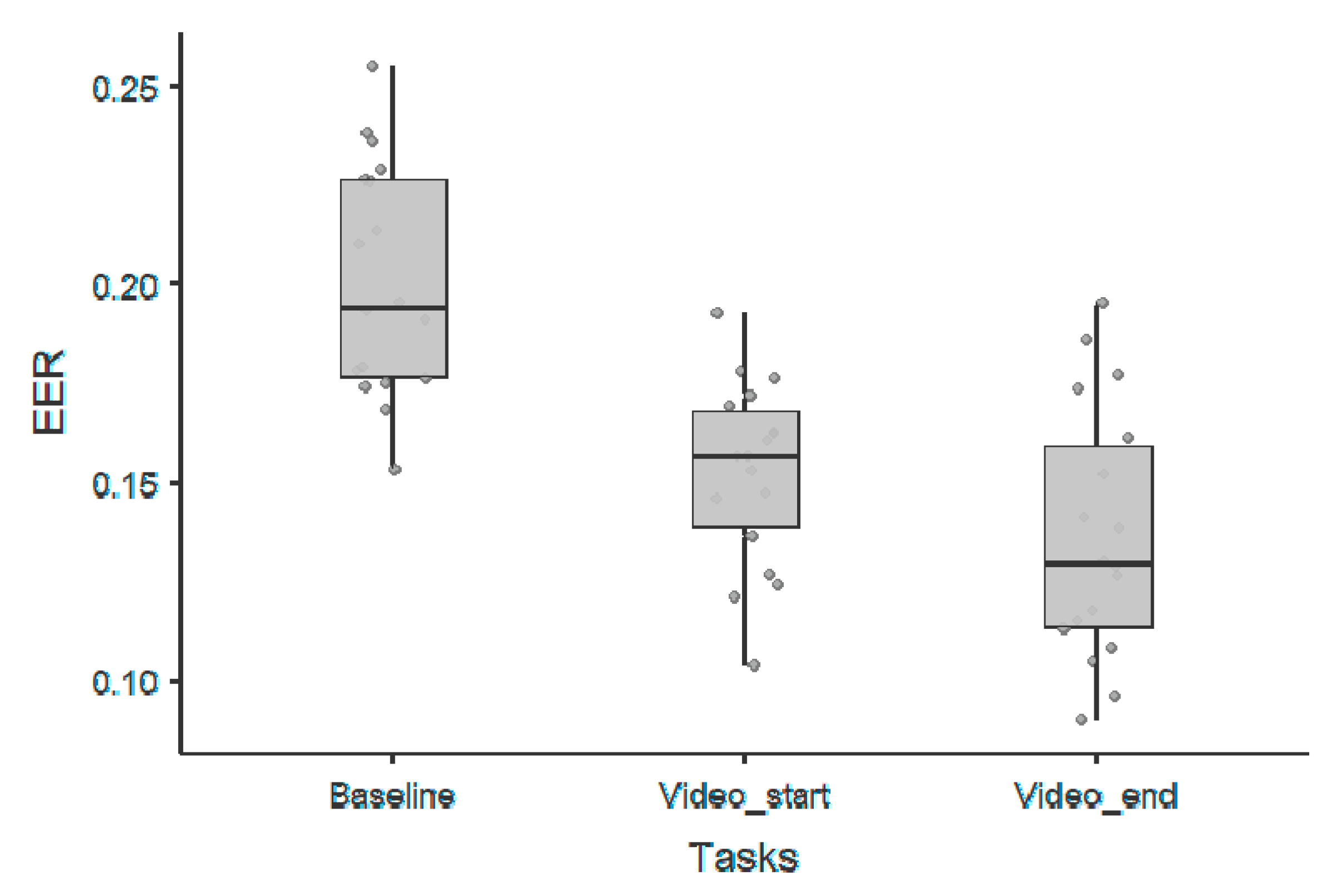

3.4. Periodic Components

3.5. Replication on the DEAP Dataset

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Chan, H.-L.; Kuo, P.-C.; Cheng, C.-Y.; Chen, Y.-S. Challenges and Future Perspectives on Electroencephalogram-Based Biometrics in Person Recognition. Front. Neuroinform. 2018, 12, 66.

- Crobe, A.; Demuru, M.; Didaci, L.; Marcialis, G.L.; Fraschini, M. Minimum spanning tree and k-core decomposition as measure of subject-specific EEG traits. Biomed. Phys. Eng. Express 2016, 2, 017001.

- Fraschini, M.; Hillebrand, A.; Demuru, M.; Didaci, L.; Marcialis, G.L. An EEG-Based Biometric System Using Eigenvector Centrality in Resting State Brain Networks. IEEE Signal Process. Lett. 2015, 22, 666–670.

- La Rocca, D.; Campisi, P.; Vegso, B.; Cserti, P.; Kozmann, G.; Babiloni, F.; Fallani, F.D.V. Human Brain Distinctiveness Based on EEG Spectral Coherence Connectivity. IEEE Trans. Biomed. Eng. 2014, 61, 2406–2412.

- Pani, S.M.; Ciuffi, M.; Demuru, M.; La Cava, S.M.; Bazzano, G.; D’Aloja, E.; Fraschini, M. Subject, session and task effects on power, connectivity and network centrality: A source-based EEG study. Biomed. Signal Process. Control 2020, 59, 101891.

- Ruiz-Blondet, M.V.; Jin, Z.; Laszlo, S. CEREBRE: A Novel Method for Very High Accuracy Event-Related Potential Biometric Identification. IEEE Trans. Inf. Forensics Secur. 2016, 11, 1618–1629.

- Yang, S.; Deravi, F.; Hoque, S. Task sensitivity in EEG biometric recognition. Pattern Anal. Appl. 2018, 21, 105–117.

- Haller, M.; Donoghue, T.; Peterson, E.; Varma, P.; Sebastian, P.; Gao, R.; Noto, T.; Knight, R.T.; Shestyuk, A.Y.; Voytek, B. Parameterizing neural power spectra. bioRxiv 2018, 299859.

- Demuru, M.; Fraschini, M. EEG fingerprinting: Subject-specific signature based on the aperiodic component of power spectrum. Comput. Biol. Med. 2020, 120, 103748.

- Betti, V.; Della Penna, S.; De Pasquale, F.; Corbetta, M. Spontaneous Beta Band Rhythms in the Predictive Coding of Natural Stimuli. Neuroscientist 2020.

- Jenke, R.; Peer, A.; Buss, M. Feature Extraction and Selection for Emotion Recognition from EEG. IEEE Trans. Affect. Comput. 2014, 5, 327–339.

- Lin, Y.-P.; Wang, C.-H.; Jung, T.-P.; Wu, T.-L.; Jeng, S.-K.; Duann, J.-R.; Chen, J.-H. EEG-Based Emotion Recognition in Music Listening. IEEE Trans. Biomed. Eng. 2010, 57, 1798–1806.

- Petrantonakis, P.C.; Hadjileontiadis, L.J. Emotion Recognition from EEG Using Higher Order Crossings. IEEE Trans. Inf. Technol. Biomed. 2010, 14, 186–197.

- Al-Nafjan, A.N.; Hosny, M.; Alohali, Y.A.; Al-Wabil, A. Review and Classification of Emotion Recognition Based on EEG Brain-Computer Interface System Research: A Systematic Review. Appl. Sci. 2017, 7, 1239.

- Arnau-Gonzalez, P.; Arevalillo-Herraez, M.; Katsigiannis, S.; Ramzan, N. On the influence of affect in EEG-based subject identification. IEEE Trans. Affect. Comput. 2018.

- Vahid, A.; Arbabi, E. Human identification with EEG signals in different emotional states. In Proceedings of the 2016 23rd Iranian Conference on Biomedical Engineering and 2016 1st International Iranian Conference on Biomedical Engineering (ICBME), Tehran, Iran, 24–25 November 2016; pp. 242–246.

- Katsigiannis, S.; Ramzan, N. DREAMER: A Database for Emotion Recognition Through EEG and ECG Signals From Wireless Low-cost Off-the-Shelf Devices. IEEE J. Biomed. Health Inform. 2018, 22, 98–107.

- Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.-S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A Database for Emotion Analysis; Using Physiological Signals. IEEE Trans. Affect. Comput. 2012, 3, 18–31.

- Sonkusare, S.; Breakspear, M.; Guo, C. Naturalistic Stimuli in Neuroscience: Critically Acclaimed. Trends Cogn. Sci. 2019, 23, 699–714.

- Ross, A.A.; Nandakumar, K.; Jain, A.K. Handbook of Multibiometrics; Springer: New York, NY, USA, 2006.

- Fraschini, M.; Demuru, M.; Crobe, A.; Marrosu, F.; Stam, C.J.; Hillebrand, A. The effect of epoch length on estimated EEG functional connectivity and brain network organisation. J. Neural Eng. 2016, 13, 036015.

- Delorme, A.; Makeig, S. EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 2004, 134, 9–21.

- Finn, E.S.; Bandettini, P.A. Movie-watching outperforms rest for functional connectivity-based prediction of behavior. Neuroscience 2020.

- Lai, M.; Demuru, M.; Hillebrand, A.; Fraschini, M. A comparison between scalp- and source-reconstructed EEG networks. Sci. Rep. 2018, 8, 12269.

1/f Offset Parameter: Wilcoxon Rank Test

| Condition 1 | Condition 2 | Test | Statistic | p | 95% CI Lower | 95% CI Upper | Cohen’s d |
|---|---|---|---|---|---|---|---|
| Baseline | Videos_start | Wilcoxon W | 171 | <0.00001 | 0.0345 | 0.05941 | 2.022 |
| Baseline | Videos_end | Wilcoxon W | 165 | 0.00011 | 0.0267 | 0.06013 | 1.349 |
| Videos_start | Videos_end | Wilcoxon W | 76 | 0.70188 | −0.0222 | 0.00955 | −0.192 |

1/f Exponent Parameter: Wilcoxon Rank Test

| Condition 1 | Condition 2 | Test | Statistic | p | 95% CI Lower | 95% CI Upper | Cohen’s d |
|---|---|---|---|---|---|---|---|
| Baseline | Videos_start | Wilcoxon W | 171 | <0.00001 | 0.0375 | 0.0636 | 2.174 |
| Baseline | Videos_end | Wilcoxon W | 166 | 0.00008 | 0.027 | 0.0602 | 1.371 |
| Videos_start | Videos_end | Wilcoxon W | 70 | 0.51354 | −0.0240 | 0.0101 | −0.250 |

Beta Band: Wilcoxon Rank Test

| Condition 1 | Condition 2 | Test | Statistic | p | 95% CI Lower | 95% CI Upper | Cohen’s d |
|---|---|---|---|---|---|---|---|
| Baseline | Video_start | Wilcoxon W | 168 | 0.00004 | 0.03658 | 0.0617 | 1.73 |
| Baseline | Video_end | Wilcoxon W | 169 | 0.00002 | 0.04634 | 0.0839 | 1.85 |
| Video_start | Video_end | Wilcoxon W | 129 | 0.05994 | −0.00142 | 0.0357 | 0.479 |
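The tables above report a Wilcoxon W statistic next to a Cohen's d for paired conditions (the same participants at baseline and during the videos). To help readers interpret these two columns, the sketch below computes both quantities for paired samples. W is taken as the sum of the ranks of the positive differences. Zero differences are dropped, and tied absolute differences receive average ranks. Cohen's d is taken as the mean difference divided by the standard deviation of the differences (often written d_z). Both conventions are assumptions, because the section does not state which variants the authors' software reported. The function names are hypothetical.

```python
from statistics import mean, stdev


def wilcoxon_w(x, y):
    """Signed-rank statistic W: sum of ranks of the positive differences x - y.

    Zero differences are discarded; tied absolute differences get average ranks.
    With n non-zero differences, W lies between 0 and n * (n + 1) / 2.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    # Indices sorted by absolute difference, so position i gets rank i + 1
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of equal absolute differences (ties)
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return sum(r for r, d in zip(ranks, diffs) if d > 0)


def cohens_d_paired(x, y):
    """Paired Cohen's d (d_z): mean of the differences over their sample SD."""
    diffs = [a - b for a, b in zip(x, y)]
    return mean(diffs) / stdev(diffs)
```

For example, `wilcoxon_w([1, 2, 3, 4], [0, 0, 0, 0])` returns 10.0, the maximum n(n+1)/2 for four pairs, because every difference is positive. A W close to that upper bound therefore means that almost all participants changed in the same direction.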

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Fraschini, M.; Meli, M.; Demuru, M.; Didaci, L.; Barberini, L. EEG Fingerprints under Naturalistic Viewing Using a Portable Device. Sensors 2020, 20, 6565. https://doi.org/10.3390/s20226565