A Review on Early Forest Fire Detection Systems Using Optical Remote Sensing

Abstract

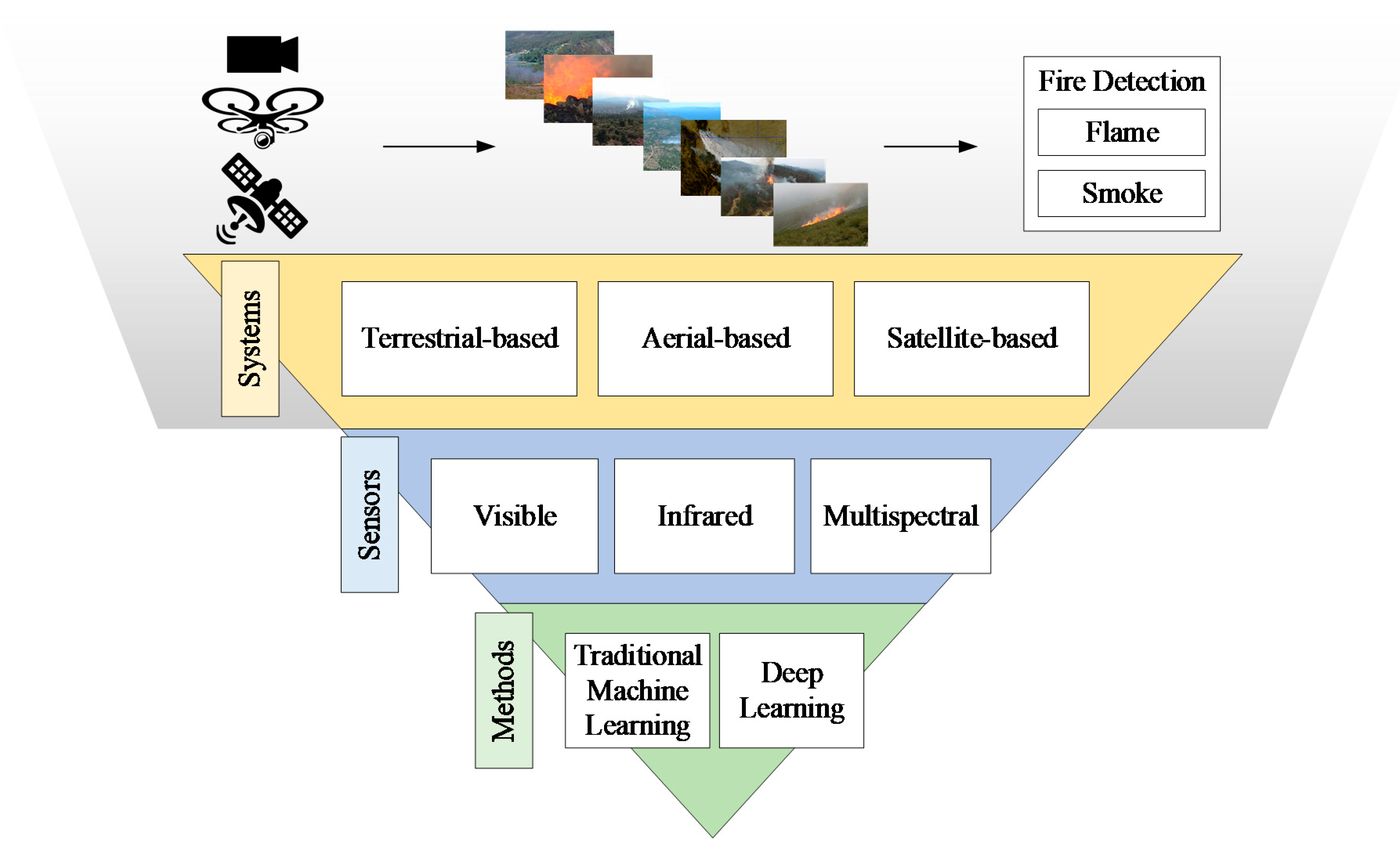

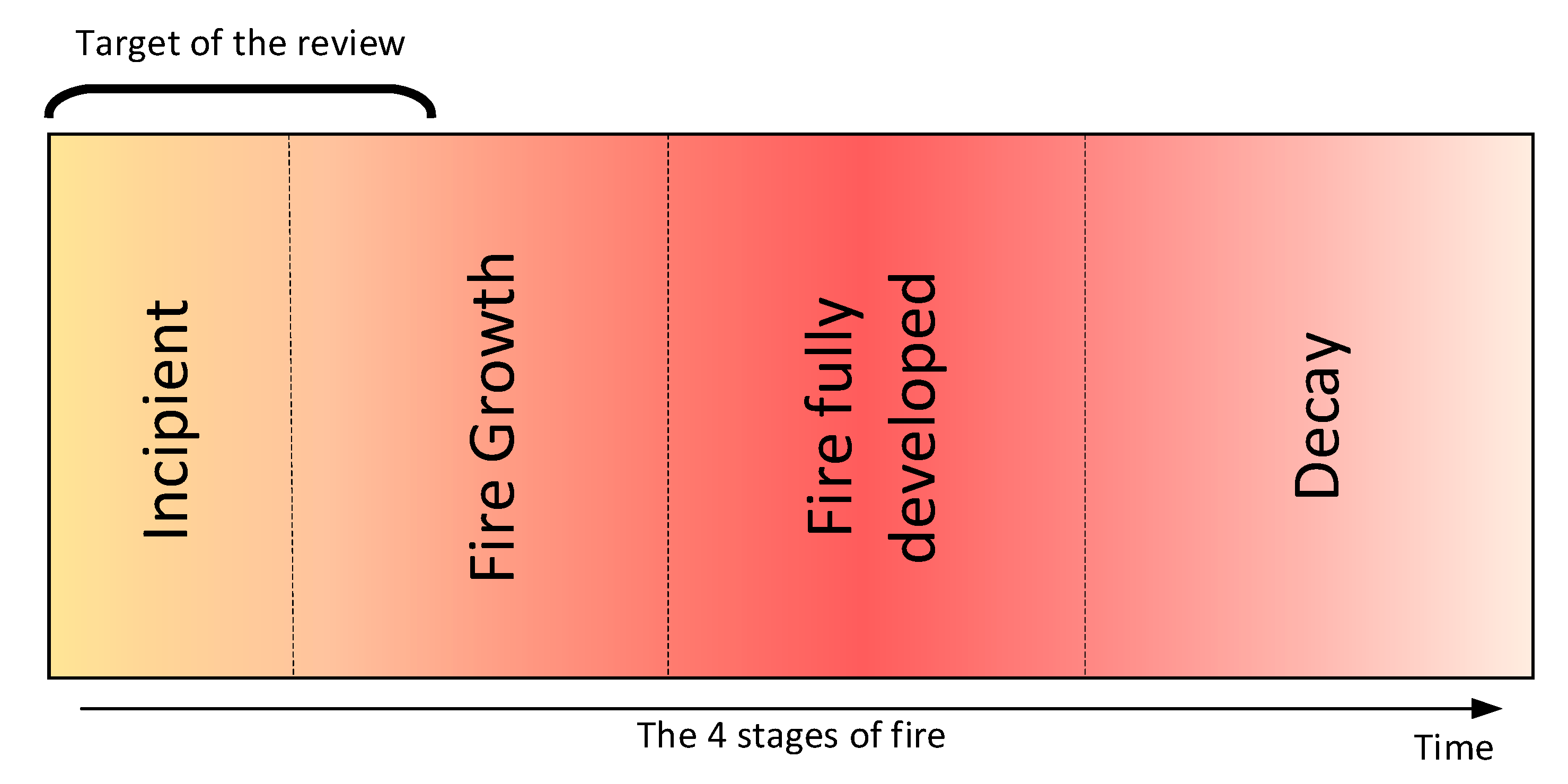

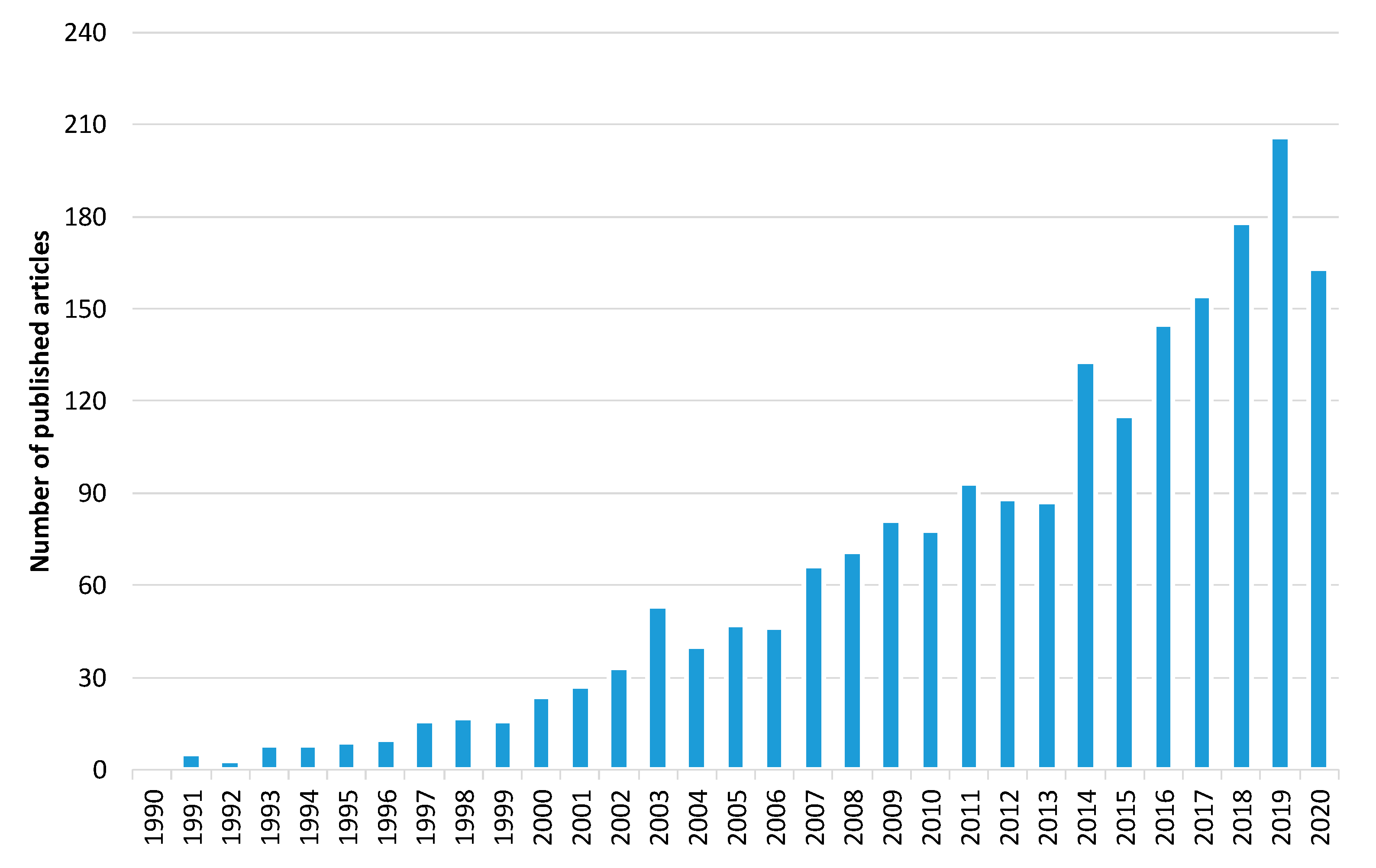

1. Introduction

2. Early Fire Detection Systems

2.1. Terrestrial Systems

2.1.1. Traditional Methods

2.1.2. Deep Learning Methods

2.2. Unmanned Aerial Vehicles

2.2.1. Traditional Methods

2.2.2. Deep Learning Methods

2.3. Spaceborne (Satellite) Systems

2.3.1. Fire and Smoke Detection from Sun-Synchronous Satellites

Traditional Methods

Deep Learning Methods

2.3.2. Fire and Smoke Detection from Geostationary Satellites

Traditional Methods

Deep Learning Methods

2.3.3. Fire and Smoke Detection Using CubeSats

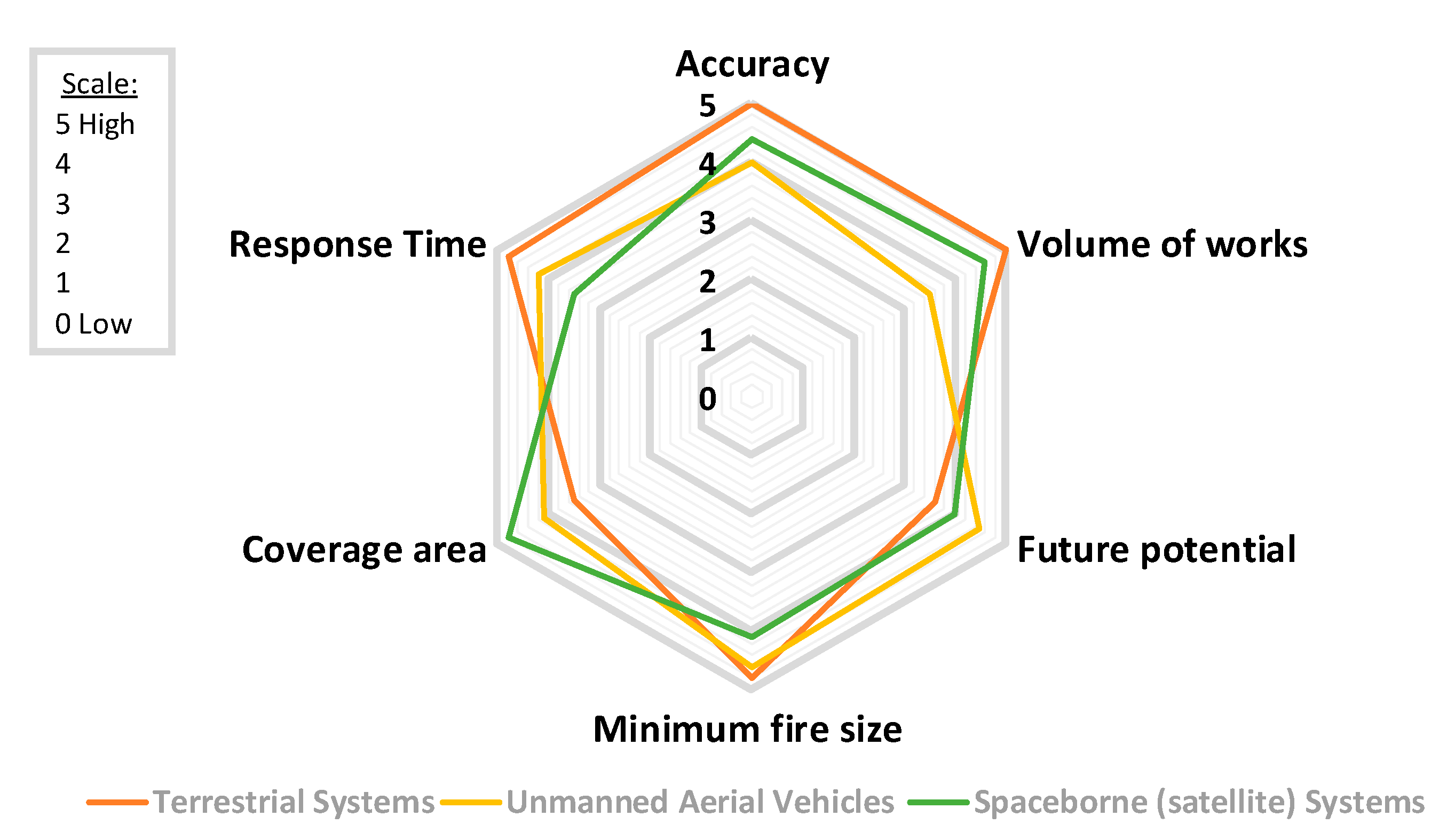

3. Discussion and Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ABI | Advanced Baseline Imager |
| ADF | Adaptive Decision Fusion |
| AHI | Advanced Himawari Imager |
| AVHRR | Advanced Very-High-Resolution Radiometer |
| BAT | Broad Area Training |
| BPNN | Back-Propagation Neural Network |
| BT | Brightness Temperature |
| CCD | Charge-Coupled Device |
| CMOS | Complementary Metal-Oxide-Semiconductor |
| CNN | Convolutional Neural Network |
| DC | Deep Convolutional |
| DL | Deep Learning |
| DTC | Diurnal Temperature Cycles |
| ECEF | Earth-Centered Earth-Fixed |
| EO | Earth Observation |
| ESA | European Space Agency |
| FCN | Fully Convolutional Network |
| GAN | Generative Adversarial Network |
| GEO | Geostationary Orbit |
| GOES | Geostationary Operational Environmental Satellite |
| GPS | Global Positioning System |
| h-LDS | higher-order LDS |
| HMM | Hidden Markov Models |
| HOF | Histograms of Optical Flow |
| HOG | Histograms of Oriented Gradients |
| HoGP | Histograms of Grassmannian Points |
| IMU | Inertial Measurement Unit |
| IoMT | Internet of Multimedia Things |
| IR | InfraRed |
| LDS | Linear Dynamical Systems |
| LEO | Low Earth Orbit |
| LST | Land Surface Temperature |
| LSTM | Long Short-Term Memory |
| LWIR | Long Wavelength InfraRed |
| MetOp | Meteorological Operational |
| MODIS | Moderate Resolution Imaging Spectroradiometer |
| MR | Mixed Reality |
| MSER | Maximally Stable Extremal Regions |
| MSG | Meteosat Second Generation |
| MWIR | Middle Wavelength InfraRed |
| NDVI | Normalized Difference Vegetation Index |
| NED | North-East-Down |
| NIR | Near-InfraRed |
| NOAA | National Oceanic and Atmospheric Administration |
| NPP | National Polar-orbiting Partnership |
| NRT | Near-Real-Time |
| OLI | Operational Land Imager |
| PISA | Pixelwise Image Saliency Aggregating |
| POES | Polar-orbiting Operational Environmental Satellite |
| R-CNN | Region-Based Convolutional Neural Networks |
| ReLU | Rectified Linear Unit |
| RST | Robust Satellite Techniques |
| SEVIRI | Spinning Enhanced Visible and Infrared Imager |
| SFIDE | Satellite Fire Detection |
| sh-LDS | stabilized h-LDS |
| SLAM | Simultaneous Localization and Mapping |
| SroFs | Suspected Regions of Fire |
| SSO | Sun-Synchronous Orbit |
| STCM | Spatio–temporal Contextual Model |
| STM | Spatio–Temporal Model |
| SVD | Singular Value Decomposition |
| SVM | Support Vector Machine |
| UAV | Unmanned Aerial Vehicles |
| VIIRS | Visible Infrared Imaging Radiometer Suite |
| WoS | Web of Science |
| WSN | Wireless Sensor Network |

References

- Tanase, M.A.; Aponte, C.; Mermoz, S.; Bouvet, A.; Le Toan, T.; Heurich, M. Detection of windthrows and insect outbreaks by L-band SAR: A case study in the Bavarian Forest National Park. Remote Sens. Environ. 2018, 209, 700–711.

- Pradhan, B.; Suliman, M.D.H.B.; Awang, M.A.B. Forest fire susceptibility and risk mapping using remote sensing and geographical information systems (GIS). Disaster Prev. Manag. Int. J. 2007, 16.

- Kresek, R. History of the Osborne Firefinder. 2007. Available online: http://nysforestrangers.com/archives/osborne%20firefinder%20by%20kresek.pdf (accessed on 7 September 2020).

- Bouabdellah, K.; Noureddine, H.; Larbi, S. Using wireless sensor networks for reliable forest fires detection. Procedia Comput. Sci. 2013, 19, 794–801.

- Gaur, A.; Singh, A.; Kumar, A.; Kulkarni, K.S.; Lala, S.; Kapoor, K.; Srivastava, V.; Kumar, A.; Mukhopadhyay, S.C. Fire sensing technologies: A review. IEEE Sens. J. 2019, 19, 3191–3202.

- Gaur, A.; Singh, A.; Kumar, A.; Kumar, A.; Kapoor, K. Video Flame and Smoke Based Fire Detection Algorithms: A Literature Review. Fire Technol. 2020, 56, 1943–1980.

- Kaabi, R.; Frizzi, S.; Bouchouicha, M.; Fnaiech, F.; Moreau, E. Video smoke detection review: State of the art of smoke detection in visible and IR range. In Proceedings of the 2017 International Conference on Smart, Monitored and Controlled Cities (SM2C), Kerkennah-Sfax, Tunisia, 17 February 2017; pp. 81–86.

- Garg, S.; Verma, A.A. Review Survey on Smoke Detection. Imp. J. Interdiscip. Res. 2016, 2, 935–939.

- Memane, S.E.; Kulkarni, V.S. A review on flame and smoke detection techniques in video’s. Int. J. Adv. Res. Electr. Electr. Instrum. Eng. 2015, 4, 885–889.

- Bu, F.; Gharajeh, M.S. Intelligent and vision-based fire detection systems: A survey. Image Vis. Comput. 2019, 91, 103803.

- Yuan, C.; Zhang, Y.; Liu, Z. A survey on technologies for automatic forest fire monitoring, detection, and fighting using unmanned aerial vehicles and remote sensing techniques. Can. J. For. Res. 2015, 45, 783–792.

- Allison, R.S.; Johnston, J.M.; Craig, G.; Jennings, S. Airborne optical and thermal remote sensing for wildfire detection and monitoring. Sensors 2016, 16, 1310.

- Nixon, M.; Aguado, A. Feature Extraction and Image Processing for Computer Vision; Academic Press: Cambridge, MA, USA, 2019.

- Mather, P.; Tso, B. Classification Methods for Remotely Sensed Data; CRC Press: Boca Raton, FL, USA, 2016.

- Çetin, A.E.; Dimitropoulos, K.; Gouverneur, B.; Grammalidis, N.; Günay, O.; Habiboǧlu, Y.H.; Töreyin, B.U.; Verstockt, S. Video fire detection–review. Dig. Signal Process. 2013, 23, 1827–1843.

- Töreyin, B.U.; Cinbis, R.G.; Dedeoglu, Y.; Cetin, A.E. Fire detection in infrared video using wavelet analysis. Opt. Eng. 2007, 46, 067204.

- Cappellini, Y.; Mattii, L.; Mecocci, A. An Intelligent System for Automatic Fire Detection in Forests. In Recent Issues in Pattern Analysis and Recognition; Springer: Berlin/Heidelberg, Germany, 1989; pp. 563–570.

- Chen, T.H.; Wu, P.H.; Chiou, Y.C. An early fire-detection method based on image processing. In Proceedings of the 2004 International Conference on Image Processing (ICIP 04), Singapore, 24–27 October 2004; Volume 3, pp. 1707–1710.

- Dimitropoulos, K.; Gunay, O.; Kose, K.; Erden, F.; Chaabene, F.; Tsalakanidou, F.; Grammalidis, N.; Çetin, E. Flame detection for video-based early fire warning for the protection of cultural heritage. In Proceedings of the Euro-Mediterranean Conference, Limassol, Cyprus, 29 October–3 November 2012; Springer: Berlin/Heidelberg, Germany; pp. 378–387.

- Celik, T.; Demirel, H. Fire detection in video sequences using a generic color model. Fire Saf. J. 2009, 44, 147–158.

- Celik, T. Fast and efficient method for fire detection using image processing. ETRI J. 2010, 32, 881–890.

- Marbach, G.; Loepfe, M.; Brupbacher, T. An image processing technique for fire detection in video images. Fire Saf. J. 2006, 41, 285–289.

- Kim, D.; Wang, Y.F. Smoke detection in video. In Proceedings of the 2009 WRI World Congress on Computer Science and Information Engineering, Los Angeles, CA, USA, 31 March–2 April 2009; Volume 5, pp. 759–763.

- Yamagishi, H.; Yamaguchi, J. Fire flame detection algorithm using a color camera. In Proceedings of the MHS’99, 1999 International Symposium on Micromechatronics and Human Science (Cat. No. 99TH8478), Nagoya, Japan, 23–26 November 1999; pp. 255–260.

- Dimitropoulos, K.; Tsalakanidou, F.; Grammalidis, N. Flame detection for video-based early fire warning systems and 3D visualization of fire propagation. In Proceedings of the 13th IASTED International Conference on Computer Graphics and Imaging (CGIM 2012), Crete, Greece, 18–20 June 2012; Available online: https://zenodo.org/record/1218#.X6qSVmj7Sbg (accessed on 10 November 2020).

- Zhang, Z.; Shen, T.; Zou, J. An improved probabilistic approach for fire detection in videos. Fire Technol. 2014, 50, 745–752.

- Avgerinakis, K.; Briassouli, A.; Kompatsiaris, I. Smoke detection using temporal HOGHOF descriptors and energy colour statistics from video. In Proceedings of the International Workshop on Multi-Sensor Systems and Networks for Fire Detection and Management, Antalya, Turkey, 8–9 November 2012.

- Mueller, M.; Karasev, P.; Kolesov, I.; Tannenbaum, A. Optical flow estimation for flame detection in videos. IEEE Trans. Image Process. 2013, 22, 2786–2797.

- Chamberlin, D.S.; Rose, A. The First Symposium (International) on Combustion. Combust. Inst. Pittsburgh 1965, 1965, 27–32.

- Günay, O.; Taşdemir, K.; Töreyin, B.U.; Çetin, A.E. Fire detection in video using LMS based active learning. Fire Technol. 2010, 46, 551–577.

- Teng, Z.; Kim, J.H.; Kang, D.J. Fire detection based on hidden Markov models. Int. J. Control Autom. Syst. 2010, 8, 822–830.

- Töreyin, B.U. Smoke detection in compressed video. In Applications of Digital Image Processing XLI; International Society for Optics and Photonics: Bellingham, WA, USA, 2018; Volume 10752, p. 1075232.

- Savcı, M.M.; Yıldırım, Y.; Saygılı, G.; Töreyin, B.U. Fire detection in H.264 compressed video. In Proceedings of the ICASSP 2019–2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 8310–8314.

- Chen, J.; He, Y.; Wang, J. Multi-feature fusion based fast video flame detection. Build. Environ. 2010, 45, 1113–1122.

- Töreyin, B.U.; Dedeoğlu, Y.; Güdükbay, U.; Cetin, A.E. Computer vision based method for real-time fire and flame detection. Pattern Recognit. Lett. 2006, 27, 49–58.

- Barmpoutis, P.; Dimitropoulos, K.; Grammalidis, N. Real time video fire detection using spatio-temporal consistency energy. In Proceedings of the 10th IEEE International Conference on Advanced Video and Signal Based Surveillance, Krakow, Poland, 27–30 August 2013; pp. 365–370.

- Arrue, B.C.; Ollero, A.; De Dios, J.M. An intelligent system for false alarm reduction in infrared forest-fire detection. IEEE Intell. Syst. Their Appl. 2000, 15, 64–73.

- Grammalidis, N.; Cetin, E.; Dimitropoulos, K.; Tsalakanidou, F.; Kose, K.; Gunay, O.; Gouverneur, B.; Torri, D.; Kuruoglu, E.; Tozzi, S.; et al. A Multi-Sensor Network for the Protection of Cultural Heritage. In Proceedings of the 19th European Signal Processing Conference, Barcelona, Spain, 29 August–2 September 2011.

- Bosch, I.; Serrano, A.; Vergara, L. Multisensor network system for wildfire detection using infrared image processing. Sci. World J. 2013, 2013, 402196.

- Barmpoutis, P.; Dimitropoulos, K.; Grammalidis, N. Smoke detection using spatio-temporal analysis, motion modeling and dynamic texture recognition. In Proceedings of the 22nd European Signal Processing Conference, Lisbon, Portugal, 1–5 September 2014; pp. 1078–1082.

- Dimitropoulos, K.; Barmpoutis, P.; Grammalidis, N. Spatio-temporal flame modeling and dynamic texture analysis for automatic video-based fire detection. IEEE Trans. Circuits Syst. Video Technol. 2015, 25, 339–351.

- Prema, C.E.; Vinsley, S.S.; Suresh, S. Efficient flame detection based on static and dynamic texture analysis in forest fire detection. Fire Technol. 2018, 54, 255–288.

- Dimitropoulos, K.; Barmpoutis, P.; Grammalidis, N. Higher order linear dynamical systems for smoke detection in video surveillance applications. IEEE Trans. Circuits Syst. Video Technol. 2016, 27, 1143–1154.

- Dimitropoulos, K.; Barmpoutis, P.; Kitsikidis, A.; Grammalidis, N. Classification of multidimensional time-evolving data using histograms of grassmannian points. IEEE Trans. Circuits Syst. Video Technol. 2016, 28, 892–905.

- Schmidhuber, J. Deep learning in neural networks: An overview. Neural Netw. 2015, 61, 85–117.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2012; pp. 1097–1105.

- Luo, Y.; Zhao, L.; Liu, P.; Huang, D. Fire smoke detection algorithm based on motion characteristic and convolutional neural networks. Multimed. Tools Appl. 2018, 77, 15075–15092.

- Zhao, L.; Luo, Y.M.; Luo, X.Y. Based on dynamic background update and dark channel prior of fire smoke detection algorithm. Appl. Res. Comput. 2017, 34, 957–960.

- Wu, X.; Lu, X.; Leung, H. An adaptive threshold deep learning method for fire and smoke detection. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 1954–1959.

- Sharma, J.; Granmo, O.C.; Goodwin, M.; Fidje, J.T. Deep convolutional neural networks for fire detection in images. In Proceedings of the International Conference on Engineering Applications of Neural Networks, Athens, Greece, 25–27 August 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 183–193.

- Zhang, Q.; Xu, J.; Xu, L.; Guo, H. Deep convolutional neural networks for forest fire detection. In 2016 International Forum on Management, Education and Information Technology Application; Atlantis Press: Paris, France, 2016.

- Muhammad, K.; Ahmad, J.; Mehmood, I.; Rho, S.; Baik, S.W. Convolutional neural networks based fire detection in surveillance videos. IEEE Access 2018, 6, 18174–18183.

- Shen, D.; Chen, X.; Nguyen, M.; Yan, W.Q. Flame detection using deep learning. In Proceedings of the 2018 4th International Conference on Control, Automation and Robotics (ICCAR), Auckland, New Zealand, 20–23 April 2018; pp. 416–420.

- Frizzi, S.; Kaabi, R.; Bouchouicha, M.; Ginoux, J.M.; Moreau, E.; Fnaiech, F. Convolutional neural network for video fire and smoke detection. In Proceedings of the IECON 2016-42nd Annual Conference of the IEEE Industrial Electronics Society, Florence, Italy, 23–26 October 2016; pp. 877–882.

- Muhammad, K.; Ahmad, J.; Lv, Z.; Bellavista, P.; Yang, P.; Baik, S.W. Efficient deep CNN-based fire detection and localization in video surveillance applications. IEEE Trans. Syst. Man Cybern. Syst. 2018, 49, 1419–1434.

- Muhammad, K.; Ahmad, J.; Baik, S.W. Early fire detection using convolutional neural networks during surveillance for effective disaster management. Neurocomputing 2018, 288, 30–42.

- Dunnings, A.J.; Breckon, T.P. Experimentally defined convolutional neural network architecture variants for non-temporal real-time fire detection. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 1558–1562.

- Sousa, M.J.; Moutinho, A.; Almeida, M. Wildfire detection using transfer learning on augmented datasets. Expert Syst. Appl. 2020, 142, 112975.

- Toulouse, T.; Rossi, L.; Campana, A.; Celik, T.; Akhloufi, M.A. Computer vision for wildfire research: An evolving image dataset for processing and analysis. Fire Saf. J. 2017, 92, 188–194.

- Barmpoutis, P.; Dimitropoulos, K.; Kaza, K.; Grammalidis, N. Fire Detection from Images Using Faster R-CNN and Multidimensional Texture Analysis. In Proceedings of the ICASSP 2019–2019 IEEE International Conference on Acoustics, Speech and Signal Processing, Brighton, UK, 12–17 May 2019; pp. 8301–8305.

- Lin, G.; Zhang, Y.; Xu, G.; Zhang, Q. Smoke detection on video sequences using 3D convolutional neural networks. Fire Technol. 2019, 55, 1827–1847.

- Jadon, A.; Omama, M.; Varshney, A.; Ansari, M.S.; Sharma, R. FireNet: A specialized lightweight fire & smoke detection model for real-time IoT applications. arXiv 2019, arXiv:1905.11922.

- Zhang, Q.X.; Lin, G.H.; Zhang, Y.M.; Xu, G.; Wang, J.J. Wildland forest fire smoke detection based on faster R-CNN using synthetic smoke images. Procedia Eng. 2018, 211, 441–446.

- Kim, B.; Lee, J. A video-based fire detection using deep learning models. Appl. Sci. 2019, 9, 2862.

- Shi, L.; Long, F.; Lin, C.; Zhao, Y. Video-based fire detection with saliency detection and convolutional neural networks. In International Symposium on Neural Networks; Springer: Cham, Switzerland, 2017; pp. 299–309.

- Wang, K.; Lin, L.; Lu, J.; Li, C.; Shi, K. PISA: Pixelwise image saliency by aggregating complementary appearance contrast measures with edge-preserving coherence. IEEE Trans. Image Process. 2015, 9, 2115–2122.

- Yuan, F.; Zhang, L.; Xia, X.; Wan, B.; Huang, Q.; Li, X. Deep smoke segmentation. Neurocomputing 2019, 357, 248–260.

- Cheng, S.; Ma, J.; Zhang, S. Smoke detection and trend prediction method based on Deeplabv3+ and generative adversarial network. J. Electron. Imaging 2019, 28, 033006.

- Aslan, S.; Güdükbay, U.; Töreyin, B.U.; Çetin, A.E. Early wildfire smoke detection based on motion-based geometric image transformation and deep convolutional generative adversarial networks. In Proceedings of the ICASSP 2019–2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 8315–8319.

- Hristov, G.; Raychev, J.; Kinaneva, D.; Zahariev, P. Emerging methods for early detection of forest fires using unmanned aerial vehicles and lorawan sensor networks. In Proceedings of the IEEE 28th EAEEIE Annual Conference, Hafnarfjordur, Iceland, 26–28 September 2018; pp. 1–9.

- Stearns, J.R.; Zahniser, M.S.; Kolb, C.E.; Sandford, B.P. Airborne infrared observations and analyses of a large forest fire. Appl. Opt. 1986, 25, 2554–2562.

- Den Breejen, E.; Breuers, M.; Cremer, F.; Kemp, R.; Roos, M.; Schutte, K.; De Vries, J.S. Autonomous Forest Fire Detection; ADAI-Associacao para o Desenvolvimento da Aerodinamica Industrial: Coimbra, Portugal, 1998; pp. 2003–2012.

- Yuan, C.; Liu, Z.; Zhang, Y. UAV-based forest fire detection and tracking using image processing techniques. In Proceedings of the 2015 International Conference on Unmanned Aircraft Systems (ICUAS), Denver, CO, USA, 9–12 June 2015; pp. 639–643.

- Yuan, C.; Liu, Z.; Zhang, Y. Learning-based smoke detection for unmanned aerial vehicles applied to forest fire surveillance. J. Intell. Robot. Syst. 2019, 93, 337–349.

- Dang-Ngoc, H.; Nguyen-Trung, H. Aerial Forest Fire Surveillance-Evaluation of Forest Fire Detection Model using Aerial Videos. In Proceedings of the 2019 International Conference on Advanced Technologies for Communications (ATC), Hanoi, Vietnam, 17–19 October 2019; pp. 142–148.

- Yuan, C.; Liu, Z.; Zhang, Y. Aerial images-based forest fire detection for firefighting using optical remote sensing techniques and unmanned aerial vehicles. J. Intell. Robot. Syst. 2017, 88, 635–654.

- De Sousa, J.V.R.; Gamboa, P.V. Aerial Forest Fire Detection and Monitoring Using a Small UAV. KnE Eng. 2020, 242–256.

- Esfahlani, S.S. Mixed reality and remote sensing application of unmanned aerial vehicle in fire and smoke detection. J. Ind. Inf. Integr. 2019, 15, 42–49.

- Sudhakar, S.; Vijayakumar, V.; Kumar, C.S.; Priya, V.; Ravi, L.; Subramaniyaswamy, V. Unmanned Aerial Vehicle (UAV) based Forest Fire Detection and monitoring for reducing false alarms in forest-fires. Comput. Commun. 2020, 149, 1–16.

- Kinaneva, D.; Hristov, G.; Raychev, J.; Zahariev, P. Early forest fire detection using drones and artificial intelligence. In Proceedings of the 2019 42nd International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 20–24 May 2019; pp. 1060–1065.

- Chen, Y.; Zhang, Y.; Xin, J.; Yi, Y.; Liu, D.; Liu, H. A UAV-based Forest Fire Detection Algorithm Using Convolutional Neural Network. In Proceedings of the IEEE 37th Chinese Control Conference, Wuhan, China, 25–27 July 2018; pp. 10305–10310.

- Merino, L.; Caballero, F.; Martínez-De-Dios, J.R.; Maza, I.; Ollero, A. An unmanned aircraft system for automatic forest fire monitoring and measurement. J. Intell. Robot. Syst. 2012, 65, 533–548.

- Zhao, Y.; Ma, J.; Li, X.; Zhang, J. Saliency detection and deep learning-based wildfire identification in UAV imagery. Sensors 2018, 18, 712.

- Tang, Z.; Liu, X.; Chen, H.; Hupy, J.; Yang, B. Deep Learning Based Wildfire Event Object Detection from 4K Aerial Images Acquired by UAS. AI 2020, 1, 166–179.

- Jiao, Z.; Zhang, Y.; Mu, L.; Xin, J.; Jiao, S.; Liu, H.; Liu, D. A YOLOv3-based Learning Strategy for Real-time UAV-based Forest Fire Detection. In Proceedings of the 2020 Chinese Control and Decision Conference (CCDC), Hefei, China, 22–24 August 2020; pp. 4963–4967.

- Jiao, Z.; Zhang, Y.; Xin, J.; Mu, L.; Yi, Y.; Liu, H.; Liu, D. A Deep Learning Based Forest Fire Detection Approach Using UAV and YOLOv3. In Proceedings of the 2019 1st International Conference on Industrial Artificial Intelligence (IAI), Shenyang, China, 23–27 July 2019; pp. 1–5.

- Srinivas, K.; Dua, M. Fog Computing and Deep CNN Based Efficient Approach to Early Forest Fire Detection with Unmanned Aerial Vehicles. In Proceedings of the International Conference on Inventive Computation Technologies, Coimbatore, India, 29–30 August 2019; Springer: Cham, Switzerland, 2020; pp. 646–652.

- Barmpoutis, P.; Stathaki, T. A Novel Framework for Early Fire Detection Using Terrestrial and Aerial 360-Degree Images. In Proceedings of the International Conference on Advanced Concepts for Intelligent Vision Systems, Auckland, New Zealand, 10–14 February 2020; Springer: Cham, Switzerland, 2020; pp. 63–74.

- Barmpoutis, P.; Stathaki, T.; Dimitropoulos, K.; Grammalidis, N. Early Fire Detection Based on Aerial 360-Degree Sensors, Deep Convolution Neural Networks and Exploitation of Fire Dynamic Textures. Remote Sens. 2020, 12, 3177.

- He, L.; Li, Z. Enhancement of a fire detection algorithm by eliminating solar reflection in the mid-IR band: Application to AVHRR data. Int. J. Remote Sens. 2012, 33, 7047–7059.

- He, L.; Li, Z. Enhancement of fire detection algorithm by eliminating solar contamination effect and atmospheric path radiance: Application to MODIS data. Int. J. Remote Sens. 2011, 32, 6273–6293.

- Csiszar, I.; Schroeder, W.; Giglio, L.; Ellicott, E.; Vadrevu, K.P.; Justice, C.O.; Wind, B. Active fires from the Suomi NPP Visible Infrared Imaging Radiometer Suite: Product Status and first evaluation results. J. Geophys. Res. Atmos. 2014, 119, 803–816.

- Schroeder, W.; Oliva, P.; Giglio, L.; Csiszar, I.A. The New VIIRS 375m Active Fire Detection Data Product: Algorithm Description and Initial Assessment. Remote Sens. Environ. 2014, 143, 85–96.

- Sayad, Y.O.; Mousannif, H.; Al Moatassime, H. Predictive modeling of wildfires: A new dataset and machine learning approach. Fire Saf. J. 2019, 104, 130–146.

- Shukla, B.P.; Pal, P.K. Automatic smoke detection using satellite imagery: Preparatory to smoke detection from Insat-3D. Int. J. Remote Sens. 2009, 30, 9–22.

- Li, X.; Song, W.; Lian, L.; Wei, X. Forest fire smoke detection using back-propagation neural network based on MODIS data. Remote Sens. 2015, 7, 4473–4498.

- Li, Z.; Khananian, A.; Fraser, R.H.; Cihlar, J. Automatic detection of fire smoke using artificial neural networks and threshold approaches applied to AVHRR imagery. IEEE Trans. Geosci. Remote Sens. 2001, 39, 1859–1870.

- Hally, B.; Wallace, L.; Reinke, K.; Jones, S.; Skidmore, A. Advances in active fire detection using a multi-temporal method for next-generation geostationary satellite data. Int. J. Dig. Earth 2019, 12, 1030–1045.

- Giglio, L.; Schroeder, W.; Justice, C.O. The Collection 6 MODIS Active Fire Detection Algorithm and Fire Products. Remote Sens. Environ. 2016, 178, 31–41.

- Wickramasinghe, C.; Wallace, L.; Reinke, K.; Jones, S. Intercomparison of Himawari-8 AHI-FSA with MODIS and VIIRS active fire products. Int. J. Dig. Earth 2018.

- Lin, L.; Meng, Y.; Yue, A.; Yuan, Y.; Liu, X.; Chen, J.; Zhang, M.; Chen, J. A spatio-temporal model for forest fire detection using HJ-IRS satellite data. Remote Sens. 2016, 8, 403.

- Ba, R.; Chen, C.; Yuan, J.; Song, W.; Lo, S. SmokeNet: Satellite smoke scene detection using convolutional neural network with spatial and channel-wise attention. Remote Sens. 2019, 11, 1702.

- Vani, K. Deep Learning Based Forest Fire Classification and Detection in Satellite Images. In Proceedings of the 2019 11th International Conference on Advanced Computing (ICoAC), Chennai, India, 18–20 December 2019; pp. 61–65.

- Hally, B.; Wallace, L.; Reinke, K.; Jones, S. A Broad-Area Method for the Diurnal Characterisation of Upwelling Medium Wave Infrared Radiation. Remote Sens. 2017, 9, 167.

- Fatkhuroyan, T.W.; Andersen, P. Forest fires detection in Indonesia using satellite Himawari-8 (case study: Sumatera and Kalimantan on august-october 2015). In IOP Conference Series: Earth and Environmental Science; IOP Publishing Ltd.: Bristol, UK, 2017; Volume 54, pp. 1315–1755.

- Xu, G.; Zhong, X. Real-time wildfire detection and tracking in Australia using geostationary satellite: Himawari-8. Remote Sens. Lett. 2017, 8, 1052–1061.

- Xie, Z.; Song, W.; Ba, R.; Li, X.; Xia, L. A spatiotemporal contextual model for forest fire detection using Himawari-8 satellite data. Remote Sens. 2018, 10, 1992.

- Filizzola, C.; Corrado, R.; Marchese, F.; Mazzeo, G.; Paciello, R.; Pergola, N.; Tramutoli, V. RST-FIRES, an exportable algorithm for early-fire detection and monitoring: Description, implementation, and field validation in the case of the MSG-SEVIRI sensor. Remote Sens. Environ. 2017, 192, e2–e25.

- Di Biase, V.; Laneve, G. Geostationary sensor based forest fire detection and monitoring: An improved version of the SFIDE algorithm. Remote Sens. 2018, 10, 741.

- Laneve, G.; Castronuovo, M.M.; Cadau, E.G. Continuous monitoring of forest fires in the Mediterranean area using MSG. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2761–2768.

- Hall, J.V.; Zhang, R.; Schroeder, W.; Huang, C.; Giglio, L. Validation of GOES-16 ABI and MSG SEVIRI active fire products. Int. J. Appl. Earth Obs. Geoinf. 2019, 83, 101928.

- Schroeder, W.; Oliva, P.; Giglio, L.; Quayle, B.; Lorenz, E.; Morelli, F. Active fire detection using Landsat-8/OLI data. Remote Sens. Environ. 2016, 185, 210–220.

- Larsen, A.; Hanigan, I.; Reich, B.J.; Qin, Y.; Cope, M.; Morgan, G.; Rappold, A.G. A deep learning approach to identify smoke plumes in satellite imagery in near-real time for health risk communication. J. Expo. Sci. Environ. Epidemiol. 2020, 1–7.

- Phan, T.C.; Nguyen, T.T. Remote Sensing Meets Deep Learning: Exploiting Spatio-Temporal-Spectral Satellite Images for Early Wildfire Detection. No. REP_WORK. 2019. Available online: https://infoscience.epfl.ch/record/270339 (accessed on 7 September 2020).

- Cal Poly, S.L.O. The CubeSat Program, CubeSat Design Specification Rev. 13. 2014. Available online: http://blogs.esa.int/philab/files/2019/11/RD-02_CubeSat_Design_Specification_Rev._13_The.pdf (accessed on 7 September 2020).

- Barschke, M.F.; Bartholomäus, J.; Gordon, K.; Lehmann, M.; Brieß, K. The TUBIN nanosatellite mission for wildfire detection in thermal infrared. CEAS Space J. 2017, 9, 183–194.

- Kameche, M.; Benzeniar, H.; Benbouzid, A.B.; Amri, R.; Bouanani, N. Disaster monitoring constellation using nanosatellites. J. Aerosp. Technol. Manag. 2014, 6, 93–100.

- MODIS—Moderate Resolution Imaging Spectroradiometer, Specifications. Available online: https://modis.gsfc.nasa.gov/about/specifications.php (accessed on 15 September 2020).

- Himawari-8 and 9, Specifications. Available online: https://earth.esa.int/web/eoportal/satellite-missions/h/himawari-8-9 (accessed on 15 September 2020).

- The SEVIRI Instrument. Available online: https://www.eumetsat.int/website/wcm/idc/groups/ops/documents/document/mday/mde1/~edisp/pdf_ten_msg_seviri_instrument.pdf (accessed on 15 September 2020).

- GOES-16 ABI, Specifications. Available online: https://www.goes-r.gov/spacesegment/abi.html (accessed on 15 September 2020).

- Huan Jing-1: Environmental Protection & Disaster Monitoring Constellation. Available online: https://earth.esa.int/web/eoportal/satellite-missions/h/hj-1 (accessed on 15 September 2020).

- POES Series, Specifications. Available online: https://directory.eoportal.org/web/eoportal/satellite-missions/n/noaa-poes-series-5th-generation (accessed on 15 September 2020).

- VIIRS-375m. Available online: https://earthdata.nasa.gov/earth-observation-data/near-real-time/firms/viirs-i-band-active-fire-data (accessed on 15 September 2020).

- Visible Infrared Imaging Radiometer Suite (VIIRS) 375m Active Fire Detection and Characterization Algorithm Theoretical Basis Document 1.0. Available online: https://viirsland.gsfc.nasa.gov/PDF/VIIRS_activefire_375m_ATBD.pdf (accessed on 15 September 2020).

- Shah, S.B.; Grübler, T.; Krempel, L.; Ernst, S.; Mauracher, F.; Contractor, S. Real-time wildfire detection from space—A trade-off between sensor quality, physical limitations and payload size. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019.

- Pérez-Lissi, F.; Aguado-Agelet, F.; Vázquez, A.; Yañez, P.; Izquierdo, P.; Lacroix, S.; Bailon-Ruiz, R.; Tasso, J.; Guerra, A.; Costa, M. FIRE-RS: Integrating land sensors, cubesat communications, unmanned aerial vehicles and a situation assessment software for wildland fire characterization and mapping. In Proceedings of the 69th International Astronautical Congress, Bremen, Germany, 1–5 October 2018.

- Escrig, A.; Liz, J.L.; Català, J.; Verda, V.; Kanterakis, G.; Carvajal, F.; Pérez, I.; Lewinski, S.; Wozniak, E.; Aleksandrowicz, S.; et al. Advanced Forest Fire Fighting (AF3) European Project, preparedness for and management of large scale forest fires. In Proceedings of the XIV World Forestry Congress 2015, Durban, South Africa, 7–11 September 2015.

- Bielski, C.; O’Brien, V.; Whitmore, C.; Ylinen, K.; Juga, I.; Nurmi, P.; Kilpinen, J.; Porras, I.; Sole, J.M.; Gamez, P.; et al. Coupling early warning services, crowdsourcing, and modelling for improved decision support and wildfire emergency management. In Proceedings of the IEEE International Conference on Big Data (Big Data), Boston, MA, USA, 11–14 December 2017; pp. 3705–3712.

- European Forest Fire Information System (EFFIS). Available online: https://effis.jrc.ec.europa.eu/ (accessed on 2 November 2020).

- NASA Tracks Wildfires From Above to Aid Firefighters Below. Available online: https://www.nasa.gov/feature/goddard/2019/nasa-tracks-wildfires-from-above-to-aid-firefighters-below (accessed on 2 November 2020).

- Govil, K.; Welch, M.L.; Ball, J.T.; Pennypacker, C.R. Preliminary Results from a Wildfire Detection System Using Deep Learning on Remote Camera Images. Remote Sens. 2020, 12, 166.

- MODIS Data Product Non-Technical Description—MOD 14. Available online: https://modis.gsfc.nasa.gov/data/dataprod/nontech/MOD14.php (accessed on 1 November 2020).

- Web of Science. Available online: http://apps.webofknowledge.com/ (accessed on 1 November 2020).

| System | (Satellite)-Sensor | Spectral Bands | Access to the Data | Specs/Advantages/Limitations | Spatial Scale | Spatial Resolution | Data Coverage | Accuracy Range |
|---|---|---|---|---|---|---|---|---|
| Terrestrial | Optical | Visible spectrum | Both web cameras and image and video datasets are available | Easy to operate; limited field of view; must be placed carefully to ensure adequate visibility | Local | Very high spatial resolution (centimeters), depending on camera resolution and distance between the camera and the event | Limited coverage, depending on the specific task of each system | 85%–100% [35,40,58,60] |
| | IR | Infrared spectrum | | | | | | |
| | Multimodal | Multispectral | | | | | | |
| Aerial | Optical | Visible spectrum | Limited number of accessible published data | Broader and more accurate perception of the fire; covers wider areas; flexible; affected by weather conditions; limited flight time | Local to regional | High spatial resolution, depending on flight altitude, camera resolution and distance between the camera and the event | Coverage of hundreds of hectares, depending on battery capacity | 70%–94.6% [75,86,89] |
| | IR | Infrared spectrum | | | | | | |
| | Multimodal | Multispectral | | | | | | |
| Satellite | Terra/Aqua – MODIS [118] | 36 (0.4–14.4 μm) | Registration required (NASA) | Easily accessible; limited spatial resolution; revisit time: 1–2 days | Global | 0.25 km (bands 1–2); 0.5 km (bands 3–7); 1 km (bands 8–36) | Earth | 92.75%–98.32% [94,95,96,99,102] |
| | Himawari-8/9 – AHI-8 [119] | 16 (0.4–13.4 μm) | Registration required (Himawari Cloud) | Imaging sensors with high radiometric, spectral, and temporal resolution; revisit time: 10 min (full disk), 5 min for areas in Japan/Australia | Regional | 0.5 km or 1 km for visible and near-infrared bands; 2 km for infrared bands | East Asia and Western Pacific | 75%–99.5% [98,100,104,105,106,107,113] |
| | MSG – SEVIRI [120] | 12 (0.4–13.4 μm) | Registration required (EUMETSAT) | Low noise in the long-wave IR channels; tracking of dust storms in near-real-time; larger field of view is susceptible to cloud contamination; no dual-view capability; revisit time: 5–15 min | Regional | 1 km for the high-resolution visible channel; 3 km for the infrared and the 3 other visible channels | Atlantic Ocean, Europe and Africa | 71.1%–98% [108,109,110,111] |
| | GOES-16 ABI [121] | 16 (0.4–13.4 μm) | Registration required (NOAA) | Infrared resolution allows detection of much smaller wildland fires; high temporal but relatively low spatial resolution; delays in data delivery; revisit time: 5–15 min | Regional | 0.5 km for the 0.64 μm visible channel; 1 km for other visible/near-IR bands; 2 km for bands > 2 μm | Western Hemisphere (North and South America) | 94%–98% [111,114] |
| | HuanJing (HJ)-1B – WVC (Wide View CCD Camera)/IRMSS (Infrared Multispectral Scanner) [122] | WVC: 4 (0.43–0.9 μm); IRMSS: 4 (0.75–12.5 μm) | Registration required | No onboard calibration system to track the on-orbit behavior of the HJ-1 sensors over the life of the mission; revisit time: 4 days | Regional | WVC: 30 m; IRMSS: 150–300 m | Asian and Pacific Region | 94.45% [101] |
| | POES/MetOp – AVHRR [123] | 6 (0.58–12.5 μm) | Registration required (NOAA) | Coarse spatial resolution; revisit time: 6 h | Global | 1.1 km by 4 km at nadir | Earth | 99.6% [97] |
| | S-NPP/NOAA-20/NOAA – VIIRS-375 m [124,125] | 16 M-bands (0.4–12.5 μm); 5 I-bands (0.6–12.4 μm); 1 DNB (0.5–0.9 μm) | Registration required (NASA) | Increased spatial resolution; improved mapping of large fire perimeters; revisit time: 12 h | Global | 0.75 km (M-bands); 0.375 km (I-bands); 0.75 km (DNB) | Earth | 89%–98.8% [93] |
| | CubeSats (data refer to a specific design from [126]) | 2: MWIR (3–5 μm) and LWIR (8–12 μm) | Commercial access planned | Small physical size; reduced cost; improved temporal resolution/response time; revisit time: less than 1 h | Global | 0.2 km | Wide coverage in orbit | The first satellite is planned for launch in late 2020 |
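The trade-off between spatial resolution and revisit time in the satellite rows above can be explored with a short script. The following is a minimal sketch, not part of the reviewed systems: the `Sensor` record, the `shortlist` helper and the choice to store only the finest listed resolution and the upper bound of the listed revisit time are illustrative assumptions. The numbers are copied from the table. The finest resolution usually belongs to a visible band, so the thermal bands used for fire detection may be coarser, and the CubeSat entry describes a planned design [126].

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sensor:
    """Hypothetical record holding two figures taken from the table."""
    name: str
    finest_resolution_km: float  # finest spatial resolution listed in the table
    revisit_max_min: float       # upper bound of the listed revisit time, in minutes


DAY = 24 * 60  # minutes per day

# Values copied from the satellite rows of the table (upper revisit bounds).
SENSORS = [
    Sensor("Terra/Aqua MODIS", 0.25, 2 * DAY),
    Sensor("Himawari-8/9 AHI", 0.5, 10),
    Sensor("MSG SEVIRI", 1.0, 15),
    Sensor("GOES-16 ABI", 0.5, 15),
    Sensor("HJ-1B WVC/IRMSS", 0.03, 4 * DAY),
    Sensor("POES/MetOp AVHRR", 1.1, 6 * 60),
    Sensor("VIIRS-375 m", 0.375, 12 * 60),
    Sensor("CubeSat design [126]", 0.2, 60),  # "less than 1 h", taken as a bound
]


def shortlist(max_resolution_km: float, max_revisit_min: float) -> list[str]:
    """Return the sensors that meet both requirements, in table order."""
    return [
        s.name
        for s in SENSORS
        if s.finest_resolution_km <= max_resolution_km
        and s.revisit_max_min <= max_revisit_min
    ]


if __name__ == "__main__":
    # Example requirement: 0.5 km or finer, revisited at least once per hour.
    print(shortlist(0.5, 60))
```

Under these assumptions, a requirement of 0.5 km or finer with at most one hour between observations leaves only the two geostationary imagers with 0.5 km visible channels and the planned CubeSat design, which illustrates why the sun-synchronous sensors, despite finer pixels, are limited for early detection.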

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Barmpoutis, P.; Papaioannou, P.; Dimitropoulos, K.; Grammalidis, N. A Review on Early Forest Fire Detection Systems Using Optical Remote Sensing. Sensors 2020, 20, 6442. https://doi.org/10.3390/s20226442
