Biometric Signals Estimation Using Single Photon Camera and Deep Learning

Abstract

1. Introduction

2. Related Work

3. System Overview

3.1. Signal Acquisition

3.2. Signal Extraction

3.2.1. Skin Detection

3.2.2. Signal Preprocessing

3.3. Signal Processing

3.3.1. Filtering

3.3.2. Average Heart Rate Estimation

3.3.3. Tachogram Estimation

3.3.4. LF/HF Estimation

3.3.5. Respiration Rate Estimation

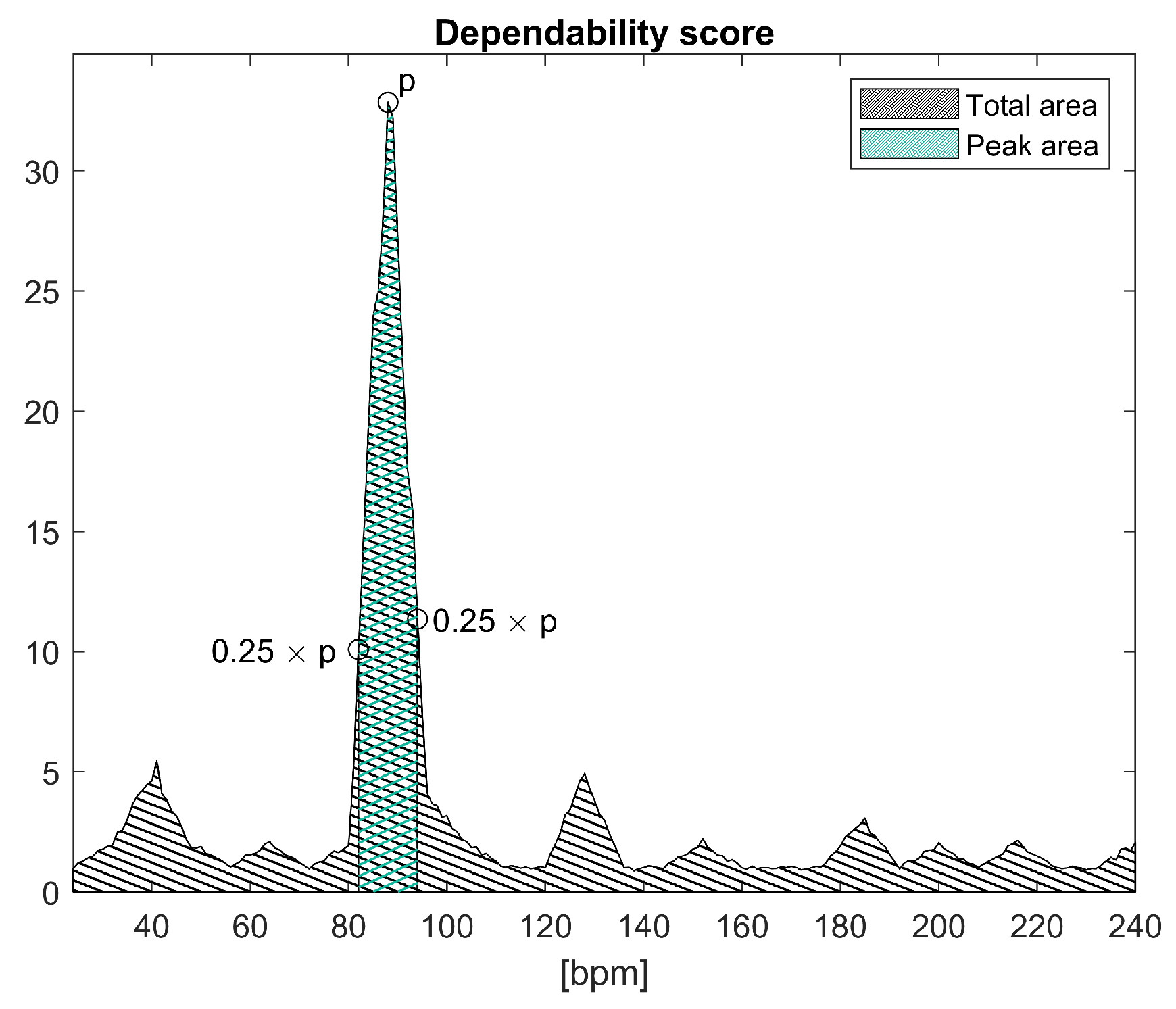

3.4. Dependability Processing

3.4.1. Periodic Head Movements

3.4.2. Pulsating Light

4. Method

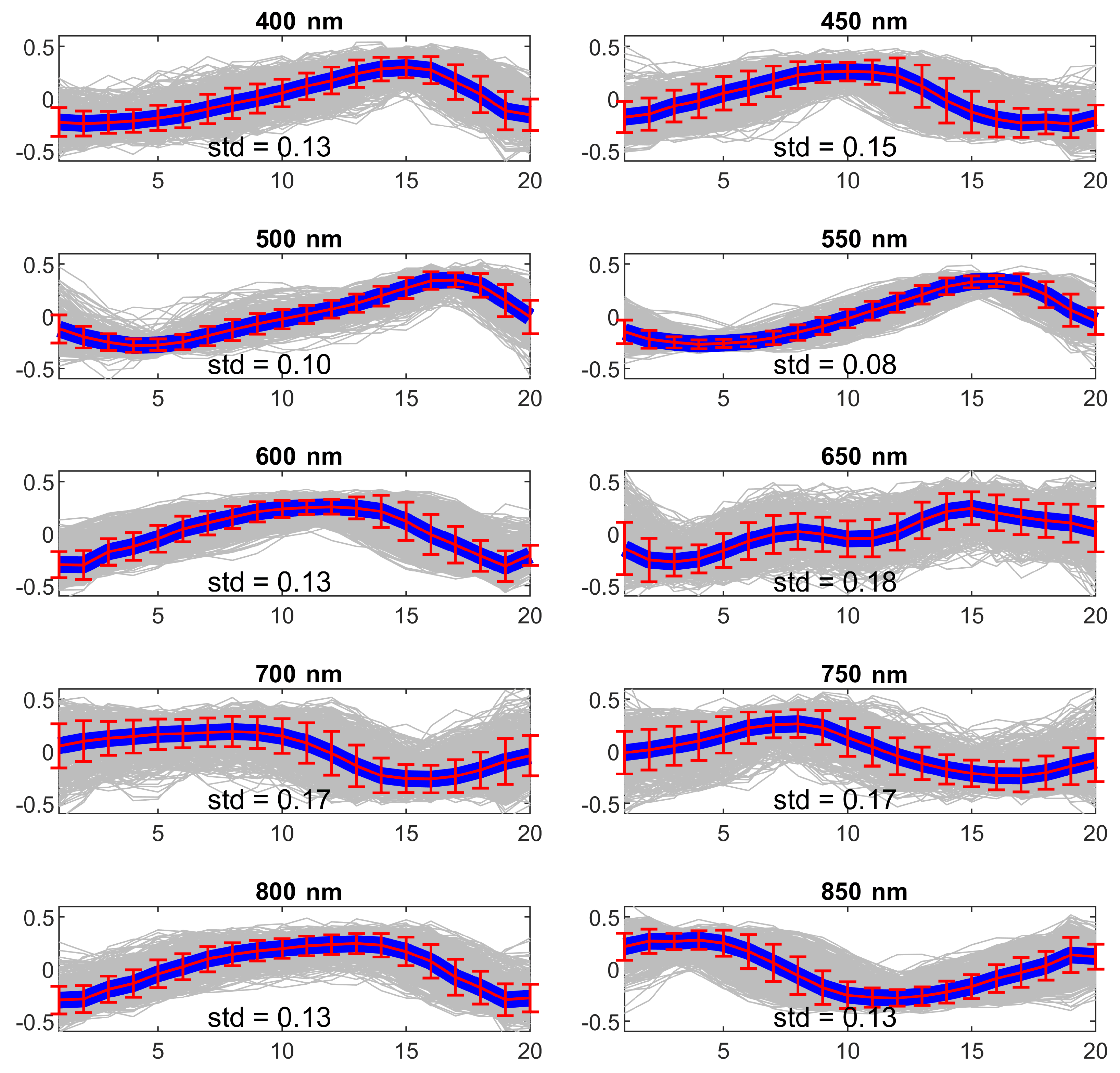

4.1. Experiment 1—Wavelength Selection

4.2. Experiment 2—SPAD and RGB Cameras Comparison

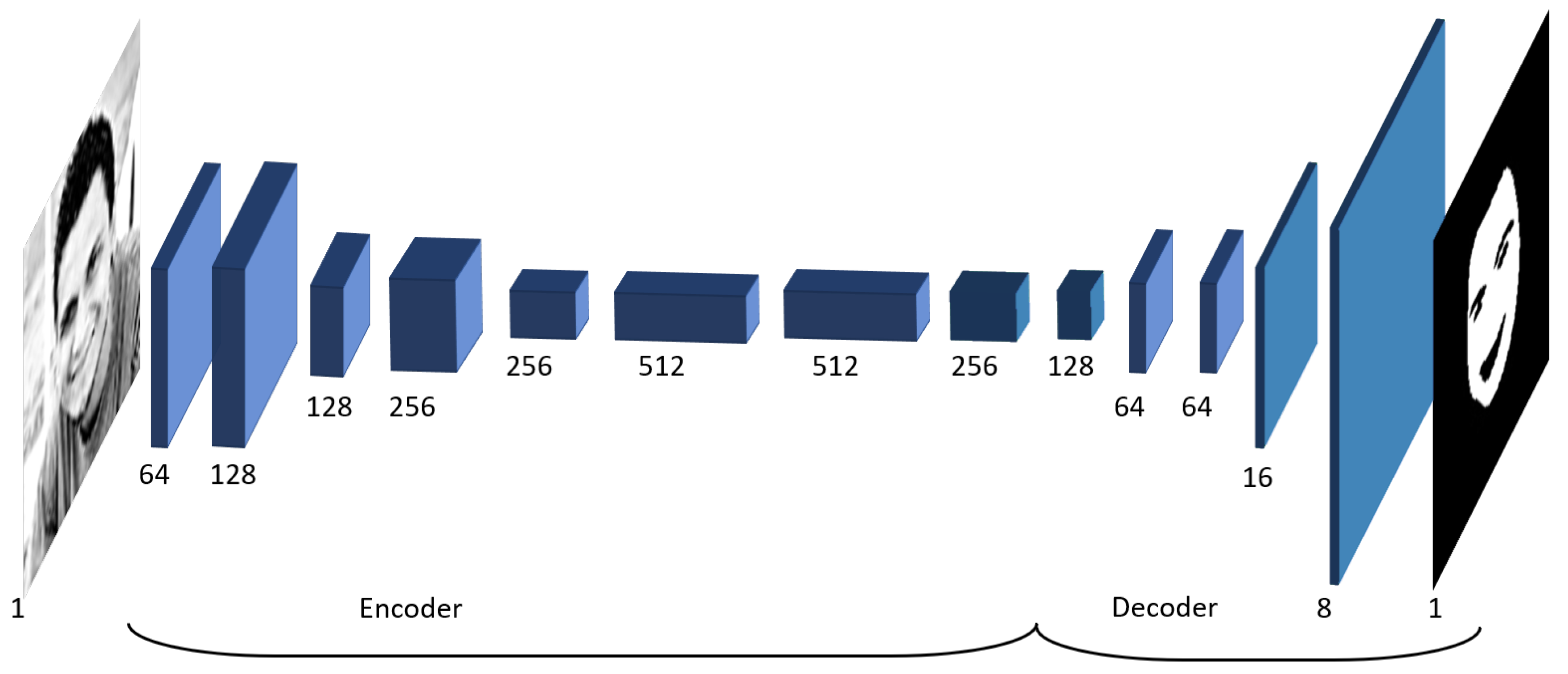

4.3. Experiment 3—Deep Learning-Based Signal Extraction

4.4. Experiment 4—Dependability Checks Evaluation

4.5. Evaluation Metrics

5. Evaluation Results

5.1. Experiment 1—Wavelength Selection

5.2. Experiment 2—SPAD and RGB Cameras Comparison

5.3. Experiment 3—Deep Learning-Based Signal Extraction

5.4. Experiment 4—Dependability Checks

6. Discussion and Conclusions

Author Contributions

Funding

Conflicts of Interest


| Wavelength (nm) | 400 | 450 | 500 | 550 | 600 | 650 | 700 | 750 | 800 | 850 |
|---|---|---|---|---|---|---|---|---|---|---|
| Sbj 1 | 0.14 | 0.15 | 0.10 | 0.08 | 0.13 | 0.18 | 0.17 | 0.17 | 0.13 | 0.13 |
| Sbj 2 | 0.14 | 0.12 | 0.12 | 0.12 | 0.14 | 0.16 | 0.16 | 0.15 | 0.14 | 0.12 |
| Sbj 3 | 0.14 | 0.14 | 0.11 | 0.12 | 0.12 | 0.20 | 0.21 | 0.22 | 0.16 | 0.19 |
| Sbj 4 | 0.15 | 0.12 | 0.13 | 0.12 | 0.15 | 0.21 | 0.20 | 0.20 | 0.14 | 0.13 |
| Sbj 5 | 0.15 | 0.12 | 0.12 | 0.09 | 0.12 | 0.15 | 0.16 | 0.14 | 0.11 | 0.10 |
| Avg. | 0.14 | 0.13 | 0.12 | 0.11 | 0.13 | 0.18 | 0.18 | 0.18 | 0.14 | 0.13 |

| Wavelength (nm); values in [bpm] | 400 | 450 | 500 | 550 | 600 | 650 | 700 | 750 | 800 | 850 |
|---|---|---|---|---|---|---|---|---|---|---|
| Sbj 1 | 0 | 2 | 4 | 1 | 3 | 7 | 4 | 2 | 1 | 0 |
| Sbj 2 | 27 | 41 | 0 | 0 | 0 | 9 | 32 | 22 | 50 | 24 |
| Sbj 3 | 18 | 1 | 3 | 2 | 14 | 15 | 12 | 17 | 9 | 18 |
| Sbj 4 | 0 | 0 | 0 | 1 | 2 | 12 | N.A. | 6 | 1 | 0 |
| Sbj 5 | 4 | 6 | 3 | 0 | 3 | 1 | N.A. | 1 | 0 | 0 |
| Avg. | 9.8 | 10.0 | 2.0 | 0.8 | 4.4 | 8.8 | 16.0 | 9.6 | 12.2 | 8.4 |
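
The Avg. row of the bpm table above is consistent with averaging each wavelength column over only the subjects that have a value, so that N.A. entries are skipped rather than counted as zero. The following is a minimal sketch of that arithmetic, assuming this is how the averages were formed; the names `errors`, `wavelengths`, and `column_averages` are illustrative and do not come from the paper.

```python
from statistics import mean

# Per-subject values in bpm, copied from the table above.
# None marks the "N.A." entries (assumption: they are skipped when averaging).
wavelengths = [400, 450, 500, 550, 600, 650, 700, 750, 800, 850]
errors = {
    "Sbj 1": [0, 2, 4, 1, 3, 7, 4, 2, 1, 0],
    "Sbj 2": [27, 41, 0, 0, 0, 9, 32, 22, 50, 24],
    "Sbj 3": [18, 1, 3, 2, 14, 15, 12, 17, 9, 18],
    "Sbj 4": [0, 0, 0, 1, 2, 12, None, 6, 1, 0],
    "Sbj 5": [4, 6, 3, 0, 3, 1, None, 1, 0, 0],
}


def column_averages(rows):
    """Average each column over the subjects that have a value."""
    averages = []
    for column in zip(*rows.values()):
        valid = [v for v in column if v is not None]
        averages.append(round(mean(valid), 1))
    return averages


# Map each wavelength (nm) to its column average (bpm).
avg = dict(zip(wavelengths, column_averages(errors)))
```

With this convention the 700 nm column is averaged over three subjects instead of five, which matters when comparing it against the other wavelengths.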

| Wavelength (nm); values in [s] | 400 | 450 | 500 | 550 | 600 | 650 | 700 | 750 | 800 | 850 |
|---|---|---|---|---|---|---|---|---|---|---|
| Sbj 1 | 0.10 | 0.04 | 0.08 | 0.03 | 0.07 | 0.11 | 0.10 | 0.07 | 0.06 | 0.03 |
| Sbj 2 | 0.05 | 0.07 | 0.03 | 0.07 | 0.23 | 0.22 | 0.23 | 0.15 | 0.09 | 0.06 |
| Sbj 3 | 0.09 | 0.07 | 0.06 | 0.03 | 0.08 | 0.17 | 0.17 | 0.15 | 0.10 | 0.28 |
| Sbj 4 | 0.07 | 0.05 | 0.05 | 0.03 | 0.07 | 0.21 | N.A. | 0.18 | 0.11 | 0.07 |
| Sbj 5 | 0.12 | 0.10 | 0.14 | 0.02 | 0.08 | 0.30 | N.A. | 0.12 | 0.05 | 0.05 |
| Avg. | 0.09 | 0.07 | 0.07 | 0.04 | 0.11 | 0.20 | 0.17 | 0.13 | 0.08 | 0.10 |

| Wavelength (nm) | 400 | 450 | 500 | 550 | 600 | 650 | 700 | 750 | 800 | 850 |
|---|---|---|---|---|---|---|---|---|---|---|
| Sbj 1 | 1.6 | 1.5 | 1.1 | 1.3 | 2.1 | 1.6 | 1.7 | 1.2 | 1.3 | 1.5 |
| Sbj 2 | 0.3 | 0.4 | 1.2 | 0.5 | 0.9 | 0.9 | 0.5 | 0.6 | 0.3 | 0.3 |
| Sbj 3 | 1.0 | 1.7 | 1.4 | 1.3 | 1.8 | 4.2 | 1.2 | 2.8 | 2.5 | 3.3 |
| Sbj 4 | 1.3 | 1.2 | 1.8 | 0.8 | 1.1 | 1.9 | N.A. | 1.5 | 1.8 | 1.7 |
| Sbj 5 | 1.2 | 0.8 | 2.5 | 0.1 | 0.5 | 1.4 | N.A. | 0.8 | 0.6 | 0.6 |
| Avg. | 1.1 | 1.1 | 1.6 | 0.8 | 1.3 | 2.0 | 1.1 | 1.4 | 1.3 | 1.5 |

| Wavelength (nm); values in [bpm] | 400 | 450 | 500 | 550 | 600 | 650 | 700 | 750 | 800 | 850 |
|---|---|---|---|---|---|---|---|---|---|---|
| Sbj 1 | 0.4 | 0.3 | 0.4 | 0.2 | 0.4 | 0.7 | 0.5 | 0.4 | 0.3 | 0.3 |
| Sbj 2 | 0.4 | 0.2 | 0.4 | 0.0 | 0.2 | 0.3 | 0.4 | 0.1 | 0.3 | 0.1 |
| Sbj 3 | 0.3 | 0.5 | 0.1 | 0.1 | 0.7 | 0.4 | 0.7 | 0.8 | 0.6 | 0.5 |
| Sbj 4 | 0.3 | 0.1 | 0.3 | 0.5 | 0.4 | 0.5 | N.A. | 0.6 | 0.6 | 0.4 |
| Sbj 5 | 0.4 | 0.7 | 0.4 | 0.3 | 0.2 | 0.7 | N.A. | 0.2 | 0.6 | 1.1 |
| Avg. | 0.36 | 0.36 | 0.32 | 0.22 | 0.38 | 0.52 | 0.53 | 0.42 | 0.48 | 0.48 |

| Error [bpm] | SPAD | RGB |
|---|---|---|
| Sbj 1 | 0.0 | 0.0 |
| Sbj 2 | 0.2 | 0.2 |
| Sbj 3 | 0.2 | 0.2 |
| Avg. | 0.1 | 0.1 |

| RMSE (ms) | SPAD | RGB |
|---|---|---|
| Sbj 1 | 2 | 2 |
| Sbj 2 | 0.8 | 0.7 |
| Sbj 3 | 0.6 | 6 |
| Avg. | 1.1 | 2.9 |

| (bpm) | Skin RMSE | Skin std | Foreh. RMSE | Foreh. std | Skin w/o Foreh. RMSE | Skin w/o Foreh. std |
|---|---|---|---|---|---|---|
| Sbj 1 | 2 | 1.4 | 2 | 1.4 | 2 | 1.4 |
| Sbj 2 | 1.4 | 0 | 1.4 | 1.4 | 1.4 | 0 |
| Avg. | 1.7 | 0.7 | 1.7 | 1.4 | 1.7 | 0.7 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Paracchini, M.; Marcon, M.; Villa, F.; Zappa, F.; Tubaro, S. Biometric Signals Estimation Using Single Photon Camera and Deep Learning. Sensors 2020, 20, 6102. https://doi.org/10.3390/s20216102
