Figure 1.

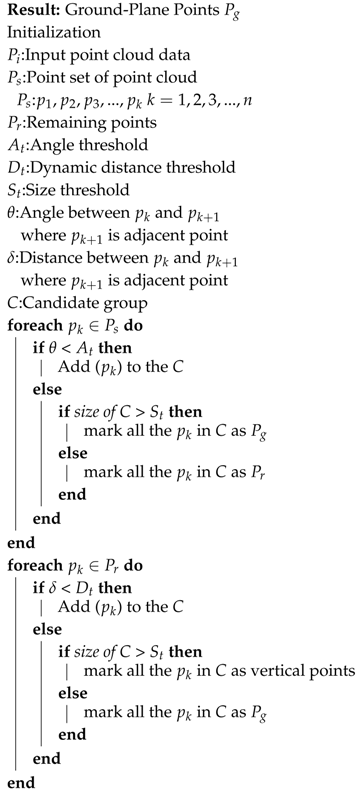

Our KIA-Soul EV is equipped with two FLIR cameras, a 3D 32-laser scanner and a GPS/IMU inertial navigation system [24] (Best viewed in color).


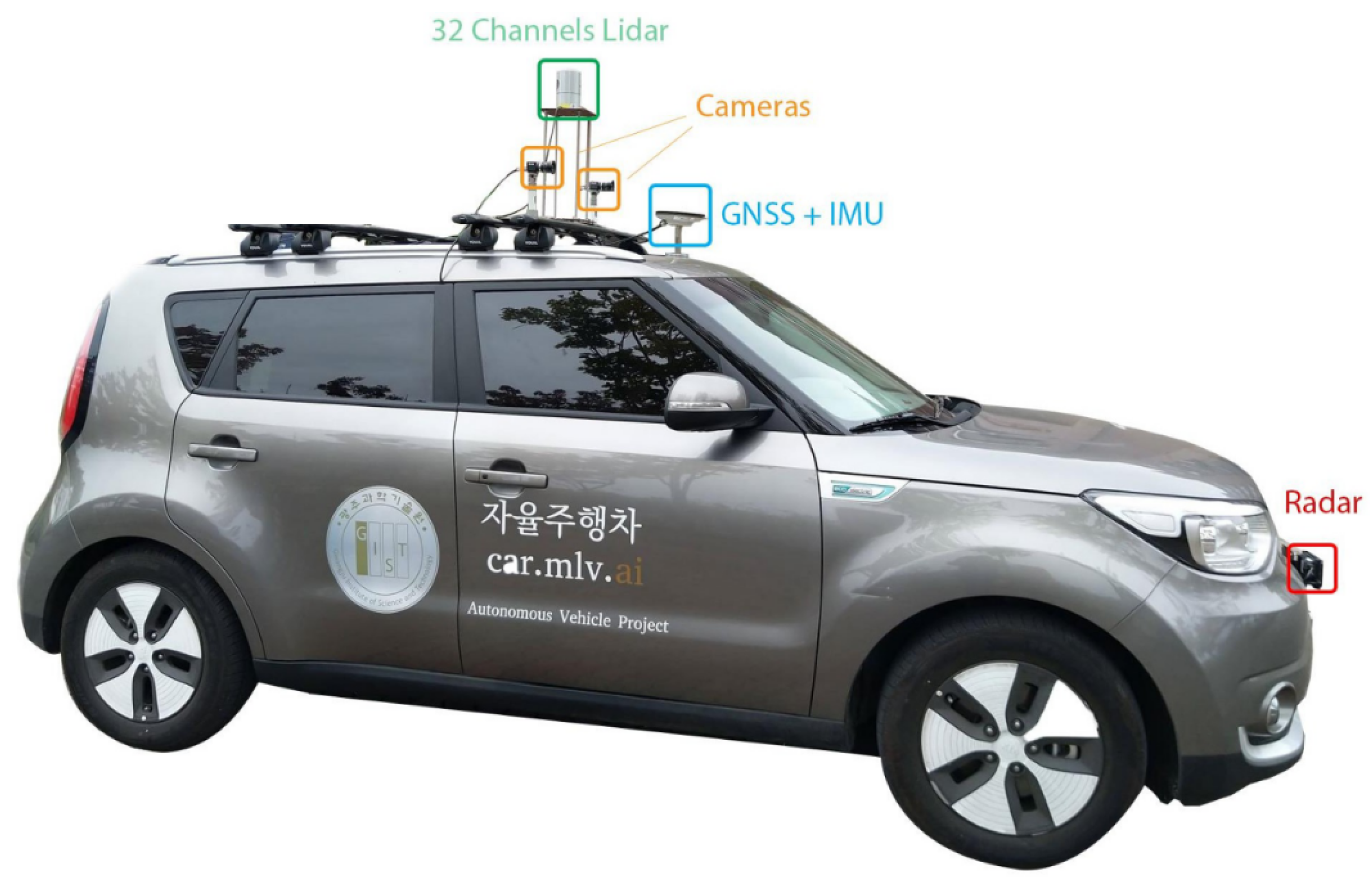

Figure 2.

The communication lines between the CAN Gateway Module and the OBD connector.


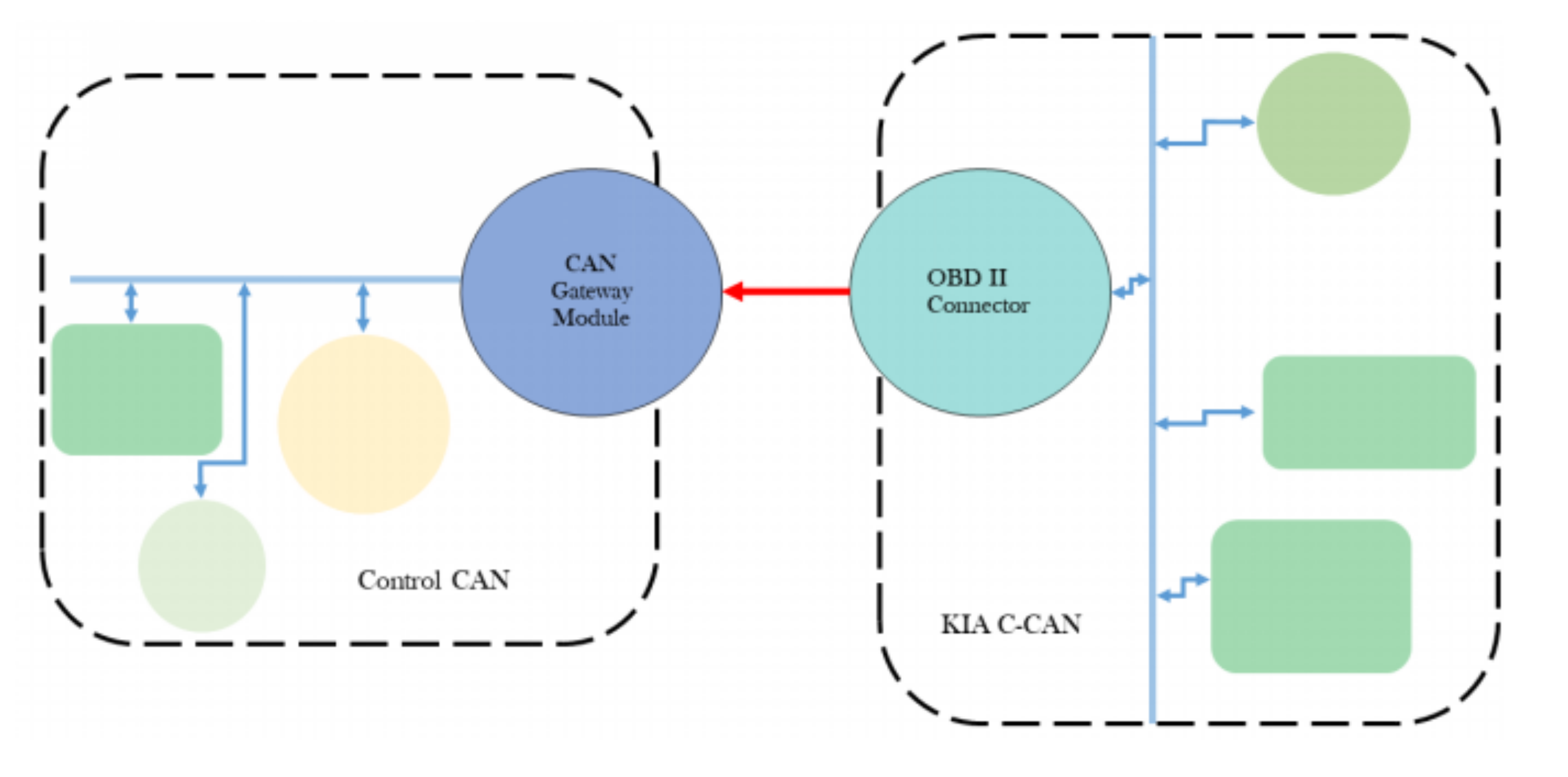

Figure 3.

In the wiring diagram, black denotes the connection between the car's main battery and the inverter. Red wires carry power to the different sensors. Green denotes sensors that are connected to, and powered through, the computer. Violet denotes devices connected through a network protocol. Brown denotes the GPS antenna of the GNSS system. Pink denotes the lines for receiving and sending commands to the car hardware (Best viewed in color).


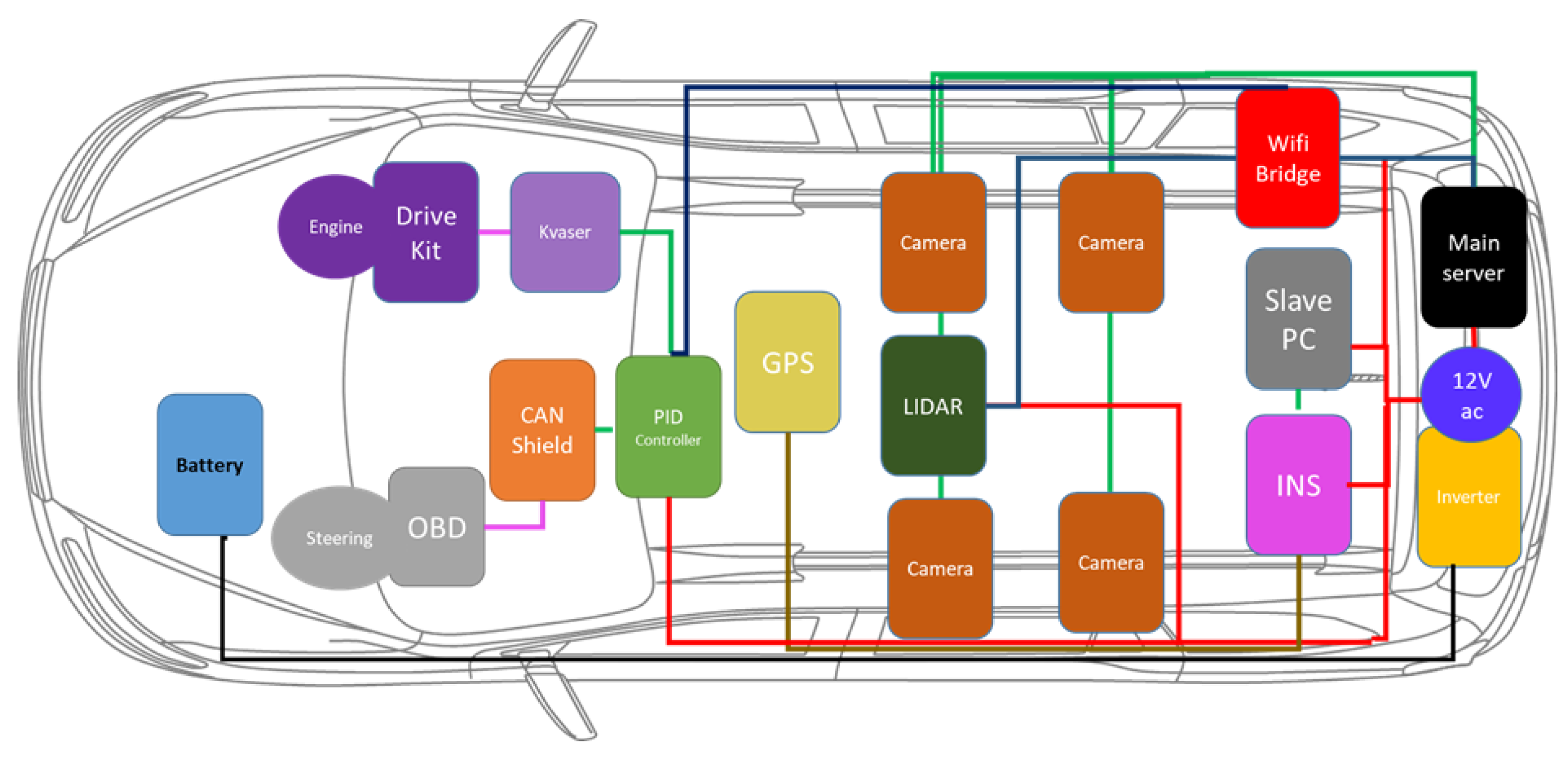

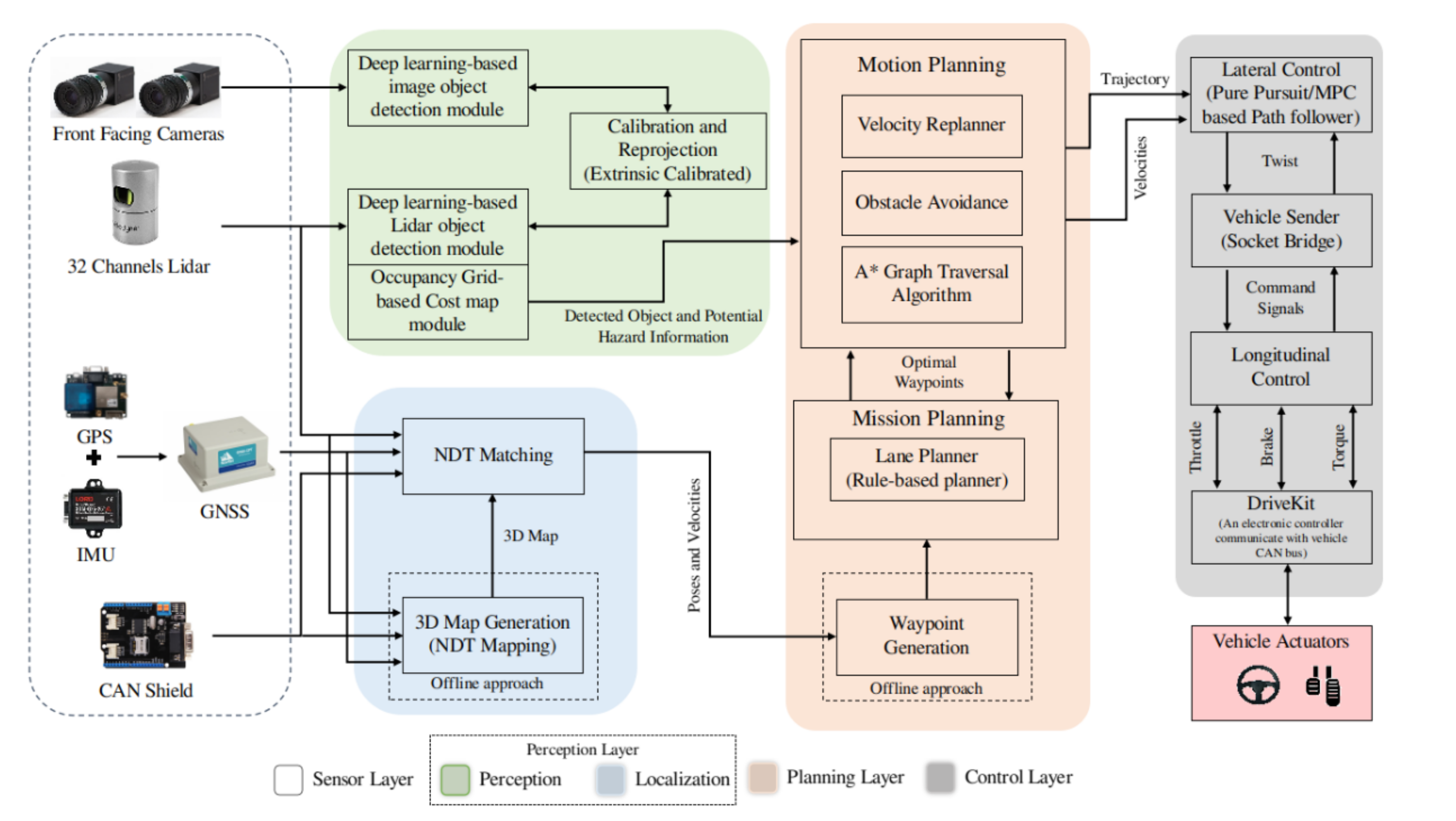

Figure 4.

Overall architecture of our autonomous vehicle system. It includes sensors, perception, planning and control modules (Best viewed in color).


Figure 5.

The flow diagram of the localization process. First, the map is built from the Lidar data using NDT mapping. The point cloud is then downsampled with a voxel grid filter to refine the 3D map. NDT matching takes the filtered Lidar scans, GPS, IMU, odometry and the 3D map as input for pose estimation.
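The voxel grid step can be illustrated with a minimal sketch, assuming points are plain `(x, y, z)` tuples and using a hypothetical `leaf_size` parameter for the voxel edge length. Every voxel that contains points is replaced by the centroid of those points, which is the usual voxel grid behaviour; the actual filter configuration used for the map is not given in the caption.

```python
import math
from collections import defaultdict

def voxel_grid_downsample(points, leaf_size):
    """Replace all points falling in the same cubic voxel by their centroid.

    points: iterable of (x, y, z) tuples (metres assumed).
    leaf_size: voxel edge length (hypothetical parameter name).
    """
    if leaf_size <= 0:
        raise ValueError("leaf_size must be positive")
    buckets = defaultdict(list)
    for p in points:
        # Integer voxel index along each axis.
        key = tuple(math.floor(c / leaf_size) for c in p)
        buckets[key].append(p)
    downsampled = []
    for key in sorted(buckets):
        pts = buckets[key]
        n = len(pts)
        # Centroid of the points inside this voxel.
        downsampled.append(tuple(sum(p[i] for p in pts) / n for i in range(3)))
    return downsampled

if __name__ == "__main__":
    cloud = [(0.1, 0.1, 0.0), (0.3, 0.2, 0.0), (1.2, 0.0, 0.0)]
    print(voxel_grid_downsample(cloud, 1.0))
```

Larger leaf sizes shrink the map more aggressively at the cost of geometric detail, which is the trade-off the refinement step tunes.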


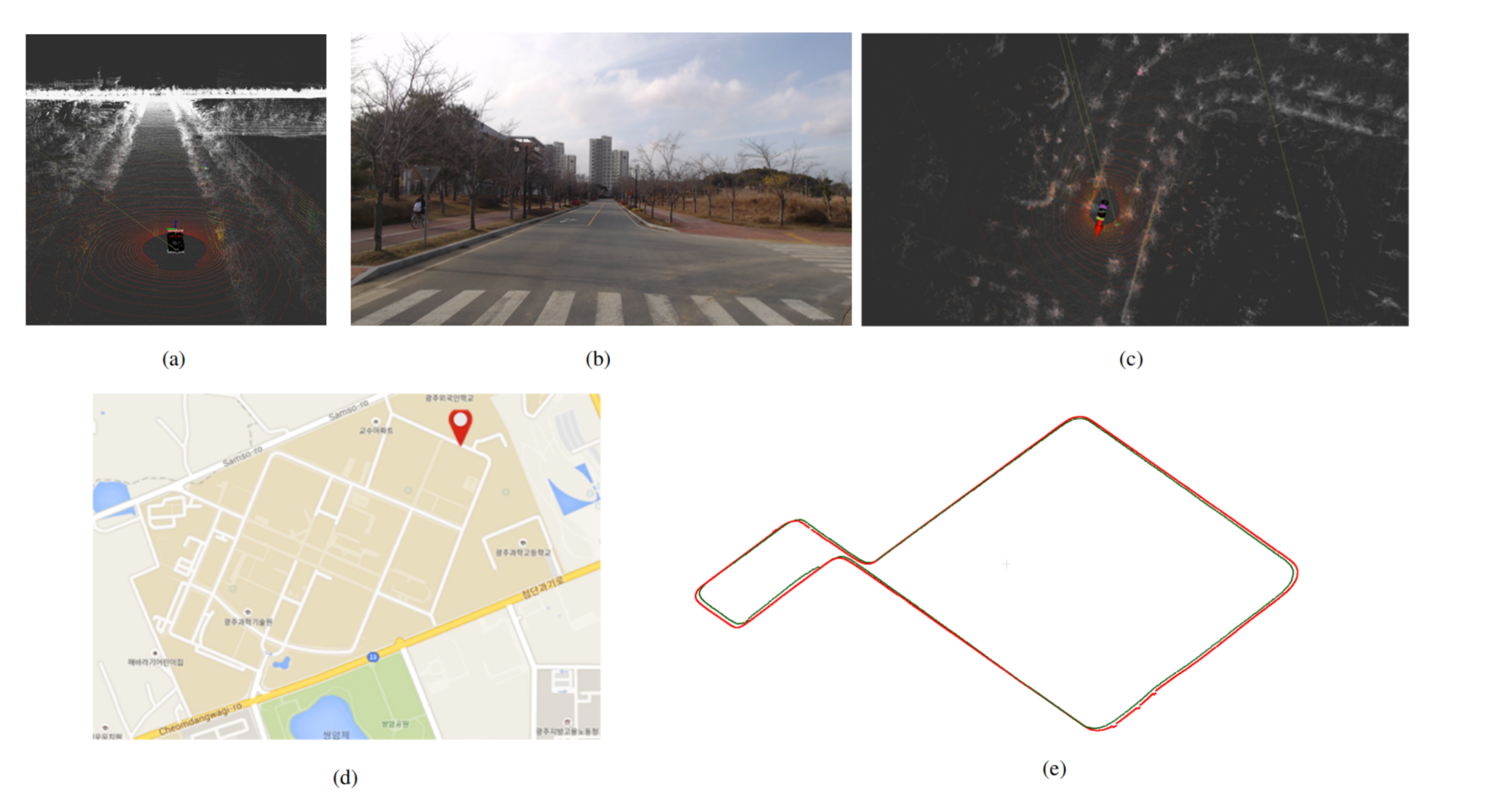

Figure 6.

(a) The 3D map of the environment built using NDT mapping. (b) Localization of the autonomous vehicle in the 3D map using NDT matching between the current Lidar scan and the 3D map. (c) The current view of the environment in the image data. (d) The current location marked on Google Maps. (e) Qualitative comparison between the GNSS pose and the NDT pose; the GNSS pose is shown in red and the NDT pose in green (Best viewed in color).


Figure 7.

The overall architecture of the calibration and re-projection process. The process is divided into four steps: (i) the object detection module produces object detection proposals in the image; (ii) the calibration module computes the calibration parameters; (iii) the mapping module uses the calibration parameters to generate the point image; (iv) the re-projection module performs range fusion, computing distances from the point image and the image detection proposals, and finally computes the bounding boxes of the object labels in the Lidar frame (Best viewed in color).
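The mapping step (iii) projects Lidar points into the camera image using the calibration parameters. The following is a minimal pinhole-model sketch. It assumes an extrinsic rotation `R` and translation `t` that map Lidar coordinates into a camera frame with x right, y down and z forward, plus intrinsics `fx, fy, cx, cy`. All of these names are hypothetical, and lens distortion is ignored. The resulting "point image" stores the nearest depth per pixel.

```python
def project_to_image(points_lidar, R, t, fx, fy, cx, cy, width, height):
    """Project Lidar points into the image plane to build a sparse point image.

    R: 3x3 nested list, t: 3-vector; p_cam = R * p_lidar + t (assumed convention).
    Returns {(u, v): depth}, keeping the nearest point for each pixel.
    """
    point_image = {}
    for p in points_lidar:
        # Rigid transform from the Lidar frame into the camera frame.
        xc, yc, zc = (sum(R[r][k] * p[k] for k in range(3)) + t[r] for r in range(3))
        if zc <= 0:  # point lies behind the camera
            continue
        # Pinhole projection onto integer pixel coordinates.
        u = int(round(fx * xc / zc + cx))
        v = int(round(fy * yc / zc + cy))
        if 0 <= u < width and 0 <= v < height:
            if (u, v) not in point_image or zc < point_image[(u, v)]:
                point_image[(u, v)] = zc
    return point_image

# Lidar frame assumed x forward, y left, z up; camera frame x right, y down, z forward.
R_LIDAR_TO_CAM = [[0, -1, 0], [0, 0, -1], [1, 0, 0]]

if __name__ == "__main__":
    pts = [(10.0, 0.0, 0.0), (-5.0, 0.0, 0.0)]
    print(project_to_image(pts, R_LIDAR_TO_CAM, [0, 0, 0], 500, 500, 320, 240, 640, 480))
```

Keeping only the nearest depth per pixel is a simple way to resolve occlusions when several Lidar points land on the same pixel.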


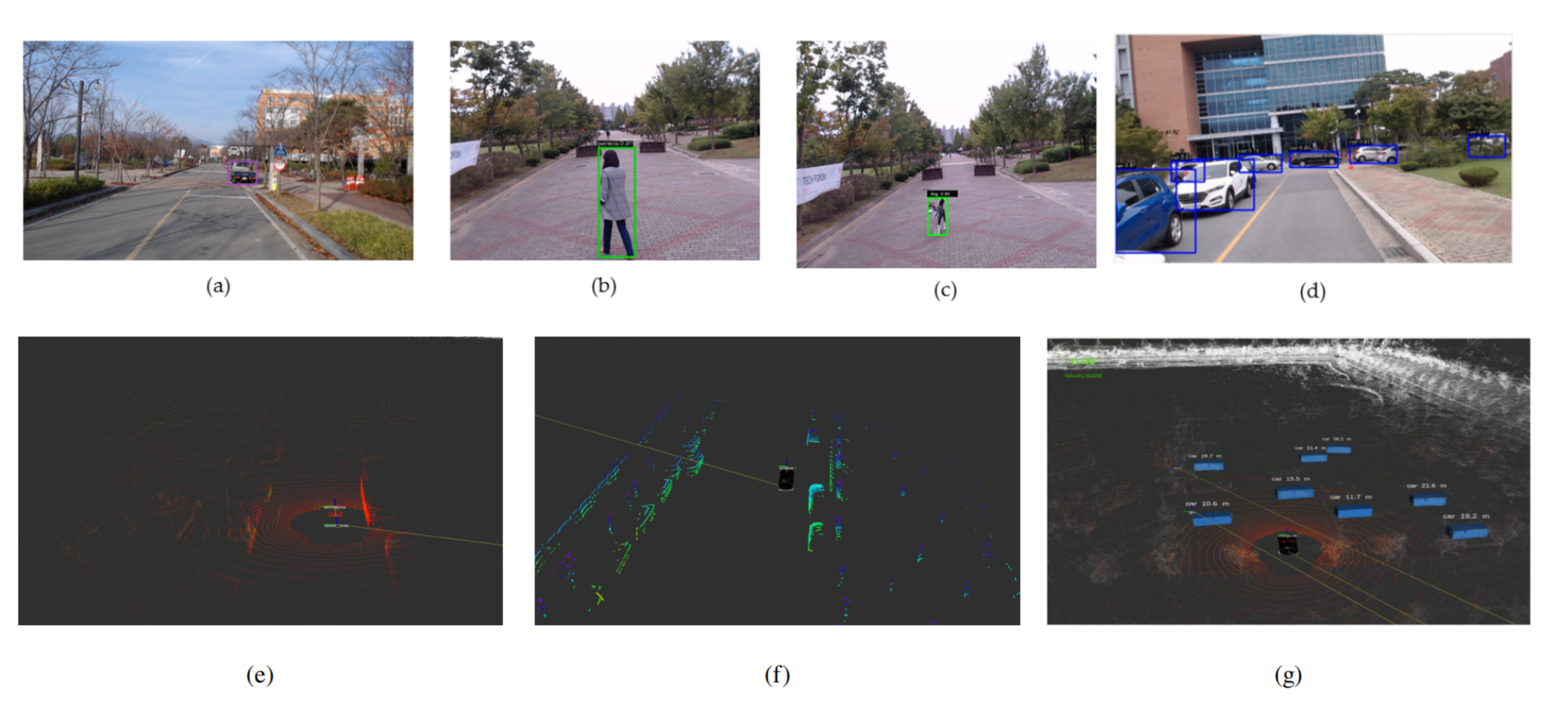

Figure 8.

(a–d) Object detection results using YOLOv3. (e) The Lidar point cloud, (f) the result of ground plane removal and (g) the results of PointPillar (Best viewed in color).


Figure 9.

(a,b) The image data and the Lidar point cloud data; (a) also shows corner detection in the image data. (c) The point image, i.e., the projection of the Lidar data onto the image data. (d) A person detected in the image data and (e) the re-projection of that detection into the Lidar data (Best viewed in color).
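Range fusion is described only at a high level. The sketch below shows one plausible way to compute an object's distance from a point image and a detection box. It assumes the point image is a dictionary mapping pixels to depths and takes the median depth inside the box. The median is a design choice made here, not taken from the document. It tolerates some background points that fall inside the box. The function name and `min_points` are hypothetical.

```python
import statistics

def box_range(point_image, box, min_points=1):
    """Estimate the distance to a detected object from the point image.

    point_image: {(u, v): depth} (hypothetical representation).
    box: (u_min, v_min, u_max, v_max) in pixels, inclusive.
    Returns the median depth of projected points inside the box,
    or None when fewer than min_points fall inside it.
    """
    u0, v0, u1, v1 = box
    depths = [d for (u, v), d in point_image.items()
              if u0 <= u <= u1 and v0 <= v <= v1]
    if len(depths) < min_points:
        return None
    return statistics.median(depths)

if __name__ == "__main__":
    pi = {(10, 10): 5.0, (12, 11): 6.0, (11, 12): 100.0, (50, 50): 3.0}
    print(box_range(pi, (0, 0, 20, 20)))
```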


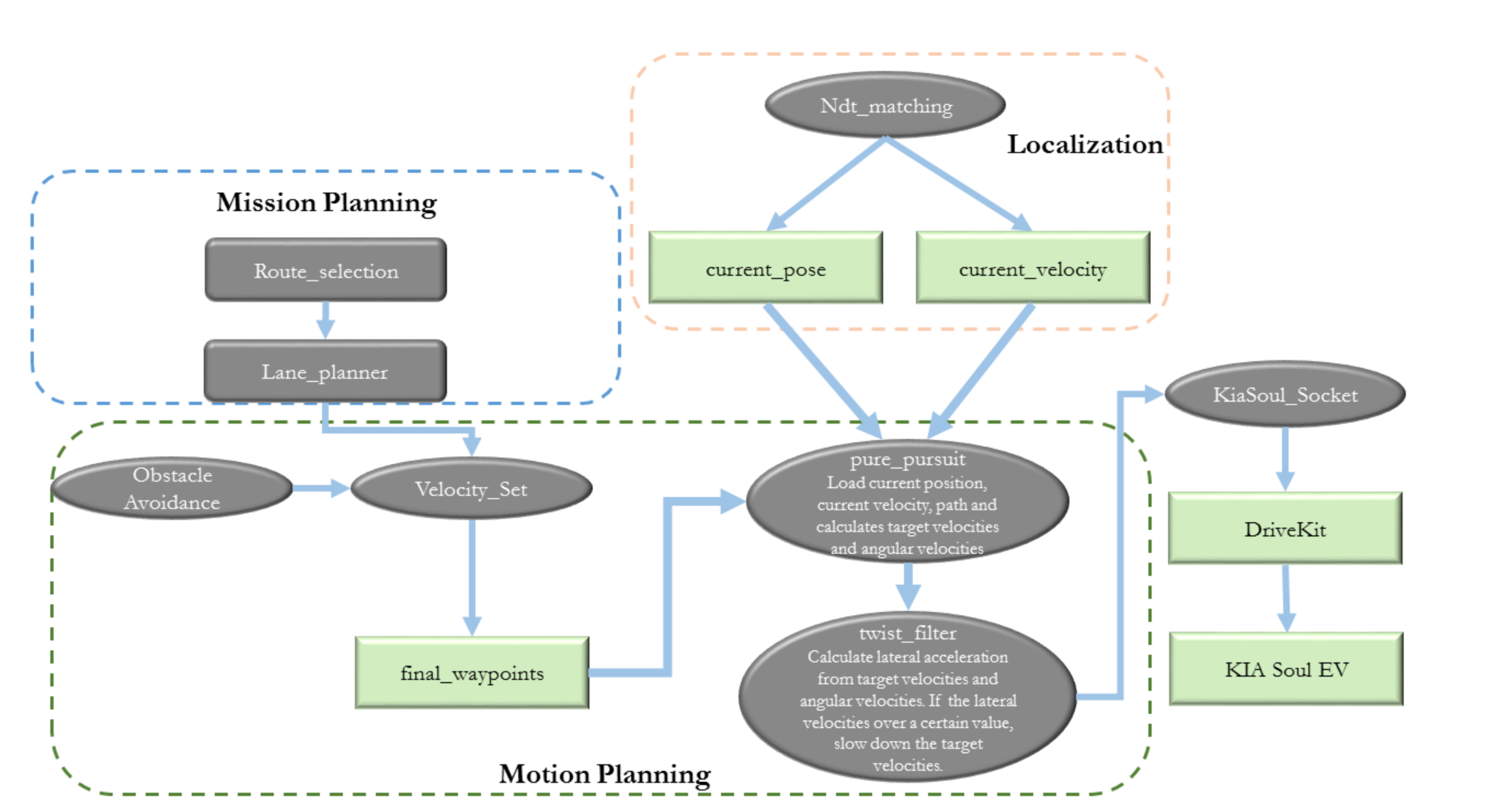

Figure 10.

The architecture of the planning module. Its two most vital components are mission planning and motion planning. Mission planning uses the lane planner to generate the lane-level route for the autonomous vehicle. Motion planning plans the path and keeps the autonomous vehicle on that path. The flow of information is shown above (Best viewed in color).


Figure 11.

(a) The waypoints generated by the motion planning module, which contain the GPS coordinates, velocities and angles. (b) The state-machine implementation for obstacle avoidance and stopping. The vehicle tracks the target speed until an obstacle is encountered. When an obstacle is detected closer than the velocity-replanning threshold, the planner replans the velocity to reduce the speed. The vehicle then has two options. If the detected obstacle is closer than the stop threshold and lane information with a lane-change flag is available, the vehicle avoids the obstacle until the avoidance has been completed safely. Otherwise, if the detected obstacle is closer than the stop threshold and the vehicle velocity approaches zero, the vehicle stops and remains stopped until the obstacle position changes. Once the obstacle has moved and its distance exceeds the stop threshold, the vehicle in the stop state returns to tracking speed; likewise, in the avoidance state, the vehicle returns to tracking speed once the distance to the detected obstacle exceeds the stop threshold (Best viewed in color).
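The transitions described for panel (b) can be written as a small state machine. This is a simplified sketch, not the authors' implementation. The state names, the `speed_eps` tolerance for "velocity approaching zero", and the rule that an obstacle which disappears or moves beyond the velocity-replanning threshold sends the vehicle back to speed tracking are all assumptions.

```python
from enum import Enum, auto

class State(Enum):
    TRACK_SPEED = auto()
    REPLAN_VELOCITY = auto()
    AVOID = auto()
    STOP = auto()

def step(state, obstacle_dist, speed, lane_change_ok, obstacle_moved,
         velocity_threshold, stop_threshold, speed_eps=0.1):
    """One transition of a simplified obstacle stop/avoid state machine.

    obstacle_dist is None when no obstacle is detected.
    """
    far = obstacle_dist is None or obstacle_dist > stop_threshold
    if state is State.TRACK_SPEED:
        if obstacle_dist is not None and obstacle_dist < velocity_threshold:
            return State.REPLAN_VELOCITY  # slow down for the obstacle
        return State.TRACK_SPEED
    if state is State.REPLAN_VELOCITY:
        if obstacle_dist is None or obstacle_dist >= velocity_threshold:
            return State.TRACK_SPEED  # assumption: obstacle cleared
        if obstacle_dist < stop_threshold:
            if lane_change_ok:
                return State.AVOID  # lane-change flag available
            if speed < speed_eps:
                return State.STOP  # velocity has approached zero
        return State.REPLAN_VELOCITY
    if state is State.STOP:
        # Resume only after the obstacle has moved beyond the stop threshold.
        return State.TRACK_SPEED if (obstacle_moved and far) else State.STOP
    if state is State.AVOID:
        return State.TRACK_SPEED if far else State.AVOID
    raise ValueError(f"unknown state {state}")
```

For example, starting in `TRACK_SPEED` with an obstacle at 8 m, a 15 m velocity-replanning threshold and a 10 m stop threshold, the machine moves to `REPLAN_VELOCITY`. It then moves to `STOP` once the vehicle has nearly halted without a lane change available.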



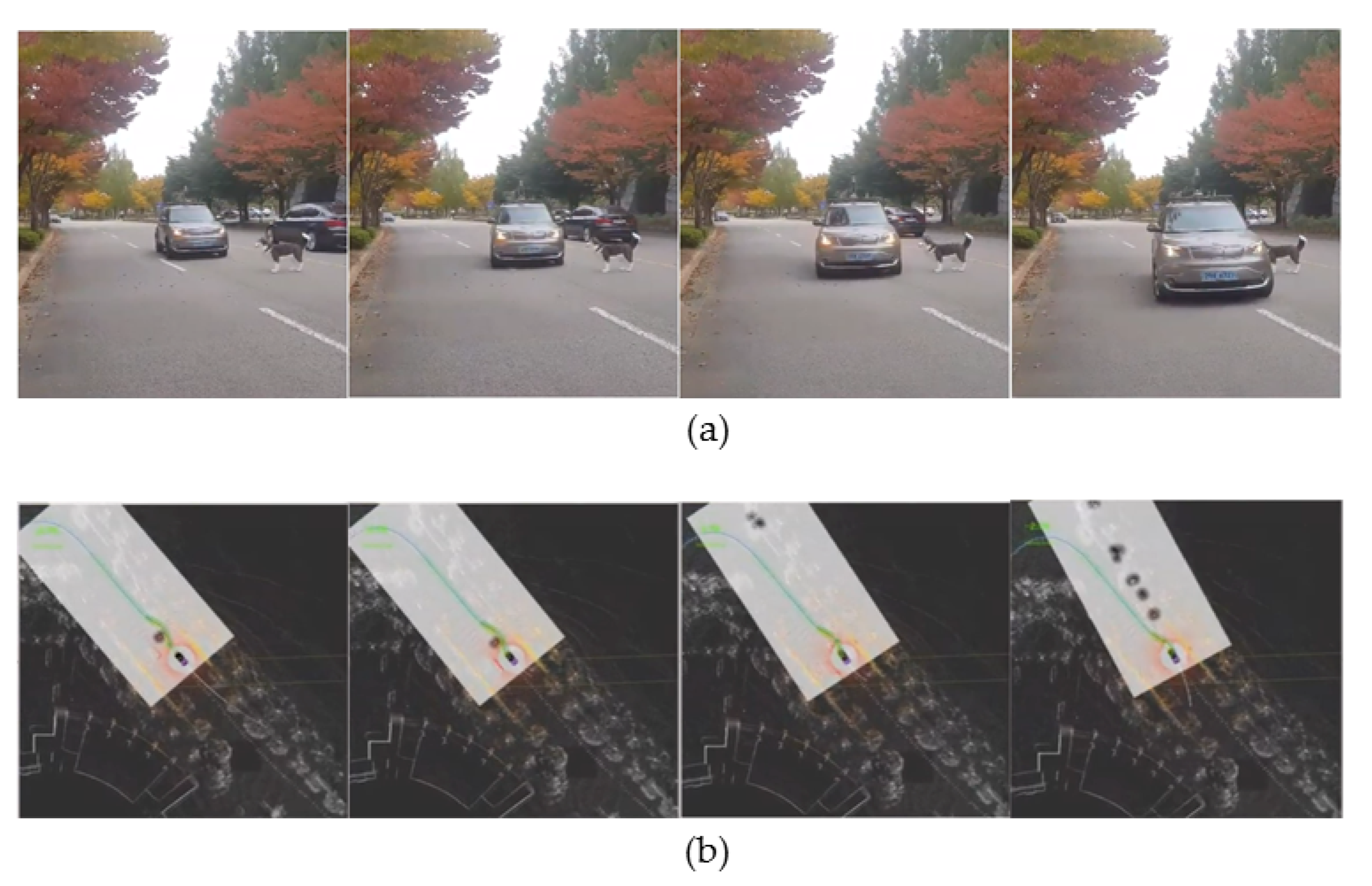

Figure 12.

(a) Frames in which obstacle avoidance is performed. An obstacle (a husky mannequin) is placed in front of the autonomous vehicle. (b) Visualization of pure pursuit and the modified waypoint path (Best viewed in color).


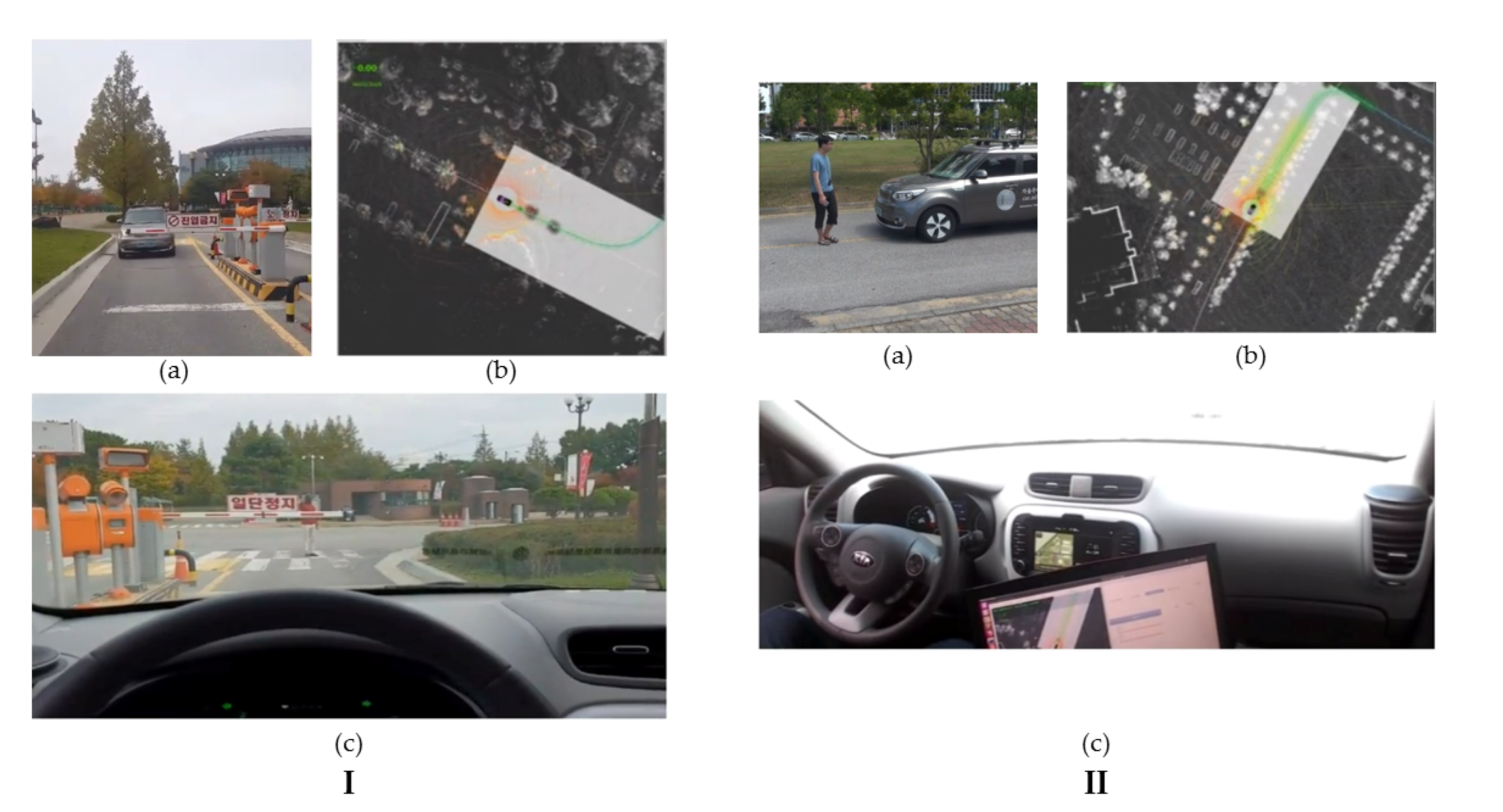

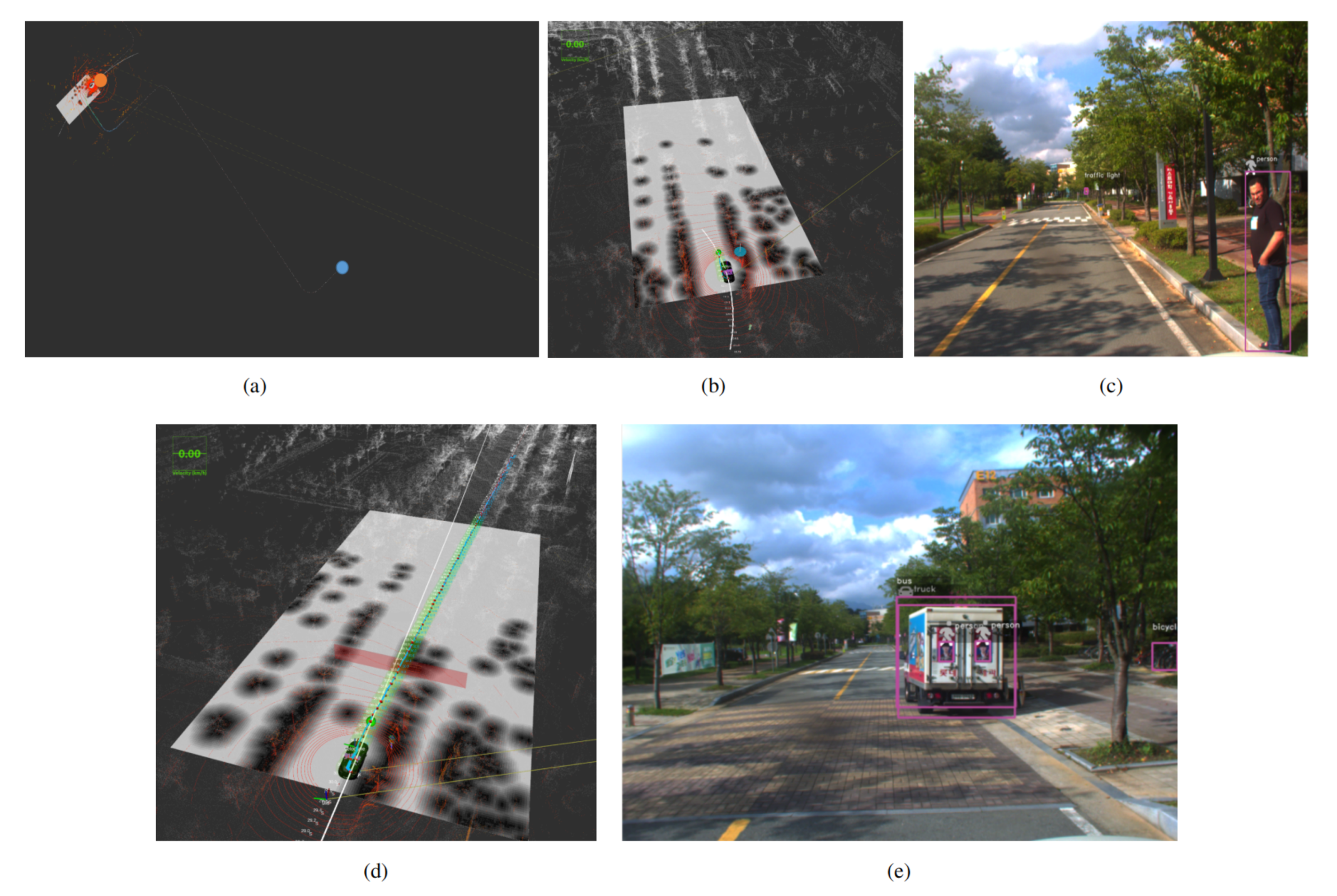

Figure 13.

(I) (a,c) The autonomous vehicle stopped in front of the obstacle. (b) Visualization of the process in RViz showing the obstacle and the car. (II) (a–c) Results of an obstacle stop using a person as the obstacle (Best viewed in color).


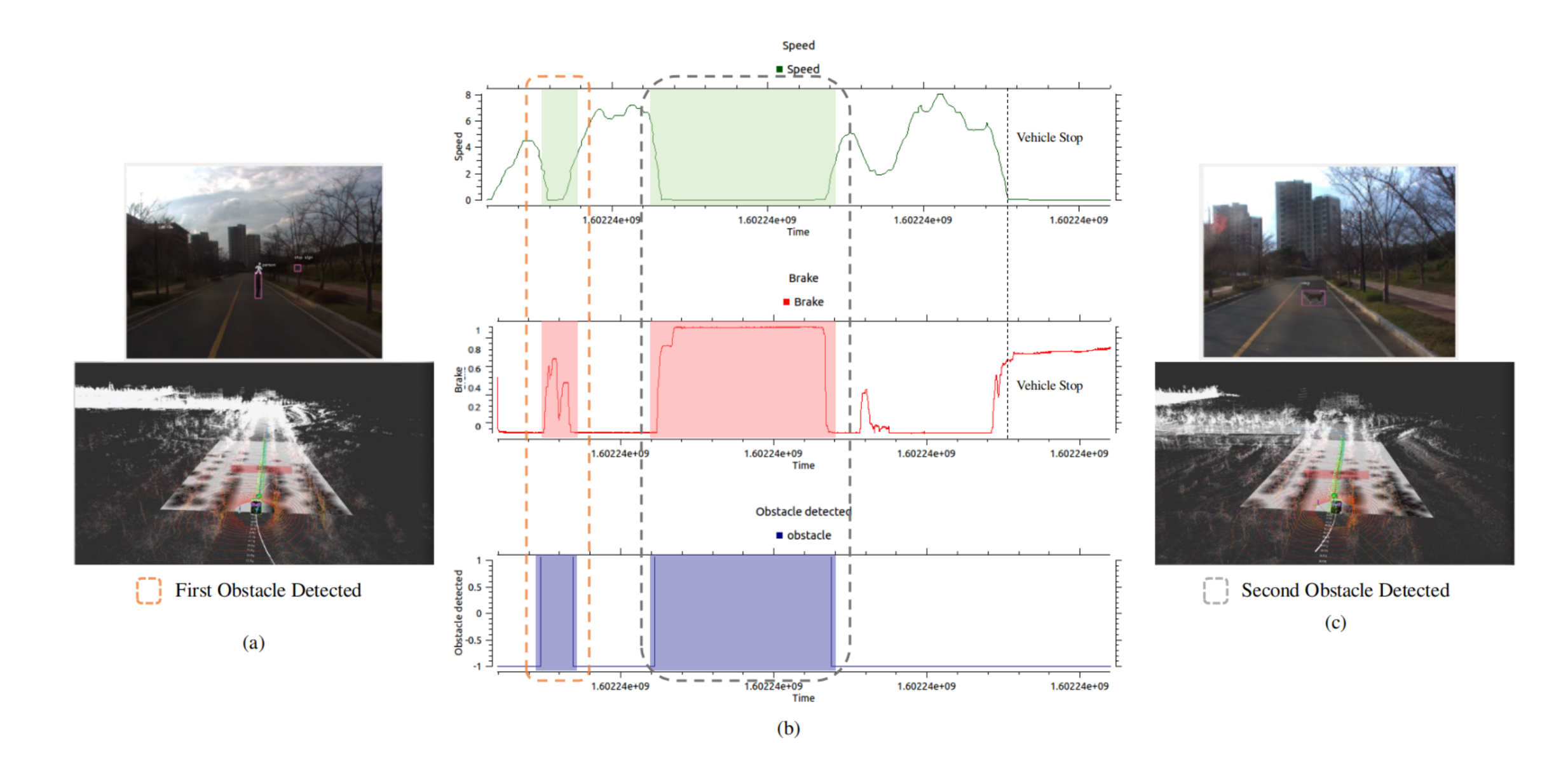

Figure 14.

The quantitative evaluation of obstacle detection with the proposed autonomous vehicle. (a,c) Obstacle detection in the camera and Lidar frames using the YOLOv3 and PointPillar networks, respectively. (b) The latency between the speed, brake and obstacle profiles. The graph shows that when an obstacle is detected, the vehicle slows down and the brake command is promptly activated. The synchronization between the three profiles is clearly visible over the total execution time of 1650 s. In (b), upon detection of the second obstacle, the speed, brake and obstacle profiles remain in their respective states, which indicates that the obstacle stays in the route of the autonomous vehicle and does not move during that period. Speed is in m/s, the brake command is the command sent to the vehicle CAN bus through DriveKit, and obstacle detection is the obstacle flag.


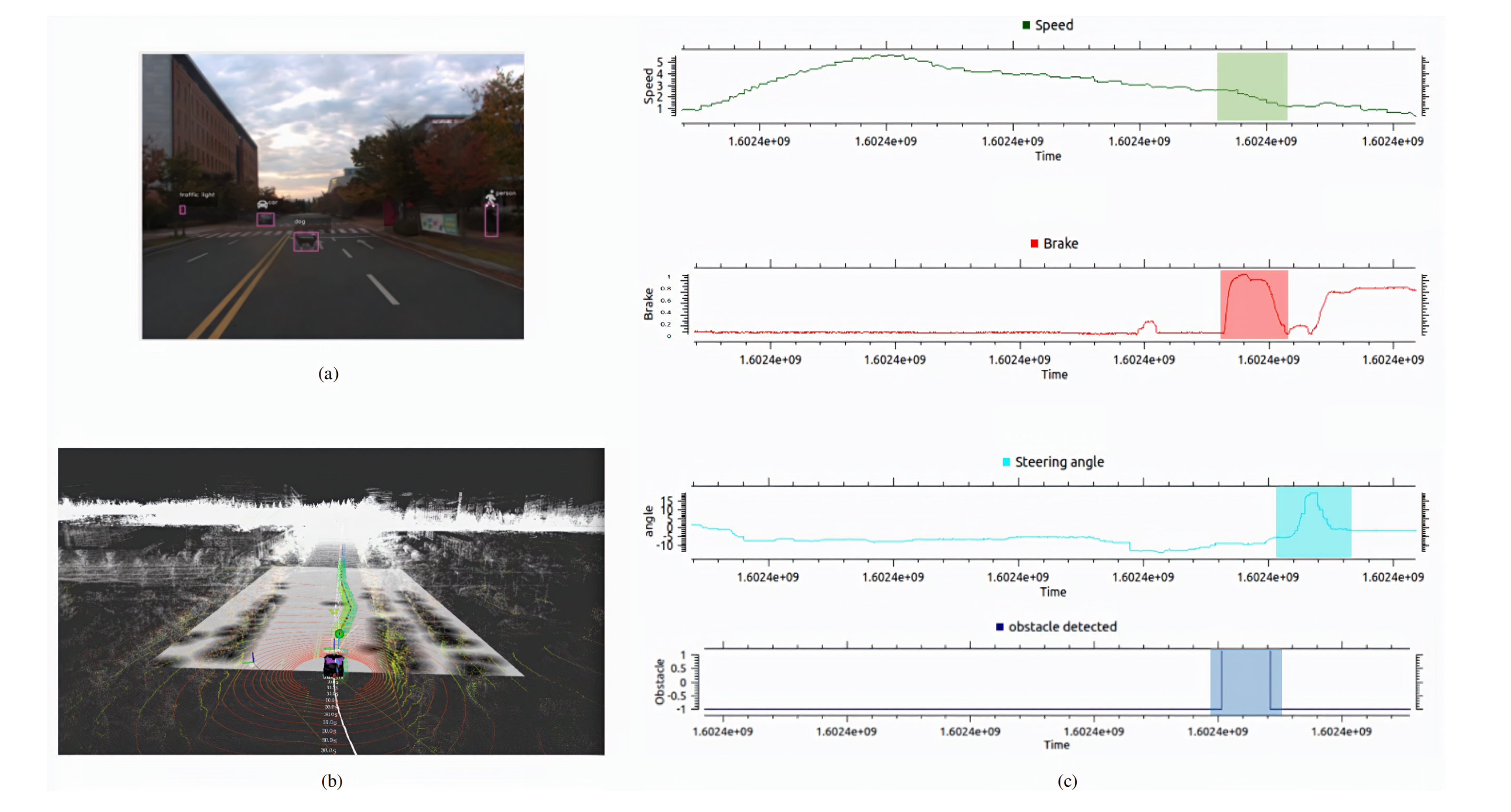

Figure 15.

The quantitative evaluation of obstacle avoidance with the proposed autonomous vehicle. (a) The obstacle is detected in the camera frame using YOLOv3. (b) The obstacle is detected in the Lidar frame using PointPillar; upon detection, the waypoints are changed, the velocity is replanned by the velocity replanning module, and the obstacle is avoided in compliance with traffic rules using the state-machine configuration. (c) Quantitative graphs of obstacle avoidance with the proposed autonomous vehicle. The spike in the steering angle graph shows the change of angle in the positive direction. The graphs show that the obstacle is first detected, then the vehicle speed is reduced and the brake is applied; because in this case the obstacle can be avoided without violating traffic rules, the autonomous vehicle avoids it safely.


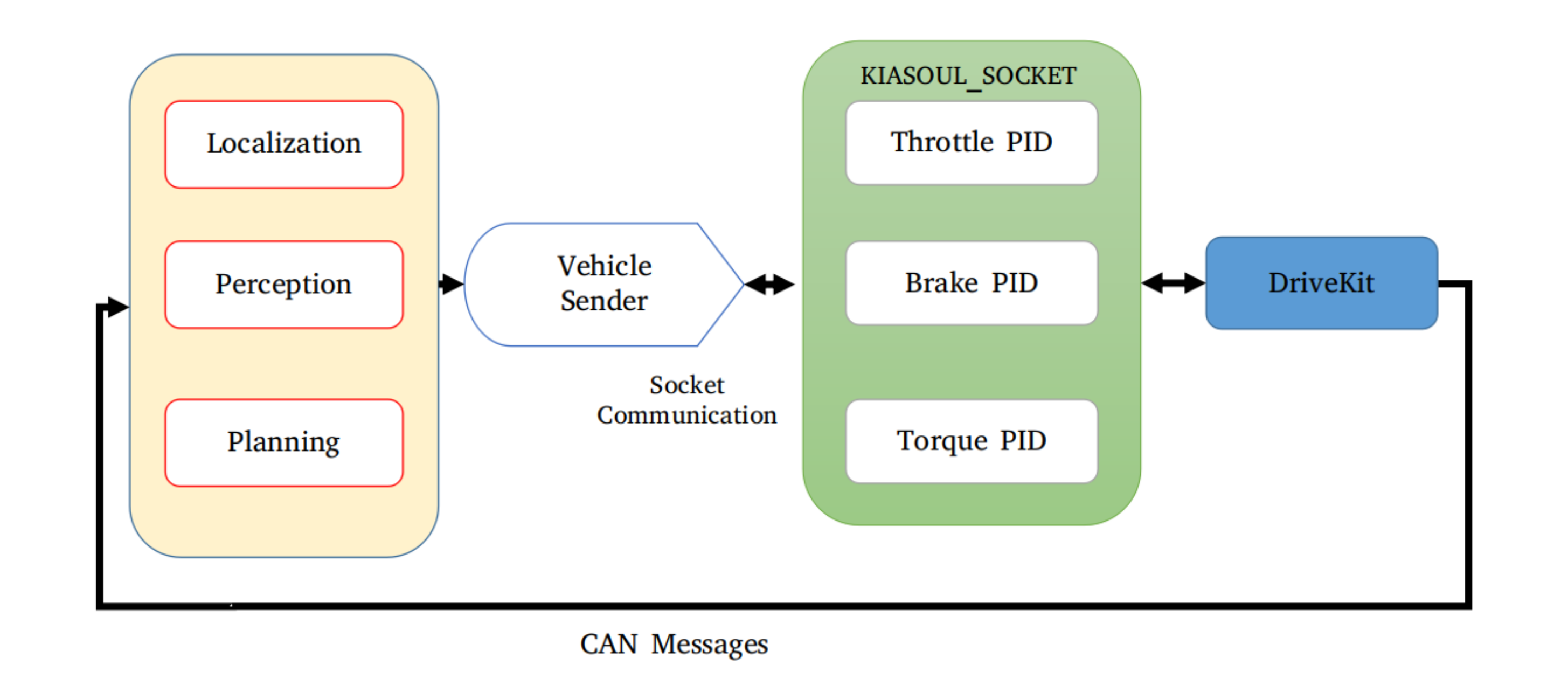

Figure 16.

The vehicle sender receives information from the planning module and sends commands to the PID controller. Kiasoul-socket consists of throttle/brake and torque PIDs that send commands to DriveKit.
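The throttle/brake loop can be sketched as a discrete PID controller. This is a minimal sketch, not the Kiasoul-socket code. It assumes an output clamped to [-1, 1], a range suggested by the PID plot in Figure 18. The hypothetical `split_throttle_brake` helper maps positive effort to throttle and negative effort to brake. No anti-windup is included, for brevity.

```python
class PID:
    """Discrete PID controller with output clamped to [out_min, out_max]."""

    def __init__(self, kp, ki, kd, out_min=-1.0, out_max=1.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measurement, dt):
        """Return the clamped control effort for one time step of length dt."""
        error = setpoint - measurement
        self.integral += error * dt
        # No derivative on the very first sample (no previous error yet).
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        u = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(self.out_min, min(self.out_max, u))

def split_throttle_brake(u):
    """Hypothetical mapping: positive effort -> throttle, negative -> brake."""
    return (u, 0.0) if u >= 0 else (0.0, -u)

if __name__ == "__main__":
    speed_pid = PID(kp=0.1, ki=0.0, kd=0.0)
    effort = speed_pid.update(setpoint=10.0, measurement=8.0, dt=0.1)
    print(split_throttle_brake(effort))
```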


Figure 17.

The geometric representation of pure pursuit algorithm.
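The geometry in Figure 17 leads to the standard pure pursuit steering law, δ = atan(2 L sin α / l_d). Here L is the wheelbase, l_d is the distance to the look-ahead point, and α is the angle between the vehicle heading and that point. With the look-ahead point expressed in a rear-axle frame (x forward, y left), sin α = y / l_d, so the arc curvature is 2y / l_d². The following is a minimal sketch; the function name and frame convention are assumptions.

```python
import math

def pure_pursuit_steering(target_x, target_y, wheelbase):
    """Front-wheel steering angle (rad) for pure pursuit.

    (target_x, target_y): look-ahead point in the vehicle frame,
    origin at the rear axle, x forward, y left (assumed convention).
    """
    ld_sq = target_x ** 2 + target_y ** 2
    if ld_sq == 0.0:
        return 0.0
    curvature = 2.0 * target_y / ld_sq  # kappa = 2 sin(alpha) / l_d
    return math.atan(wheelbase * curvature)  # delta = atan(L * kappa)

if __name__ == "__main__":
    print(pure_pursuit_steering(6.0, 1.0, 2.5))
```

A point straight ahead gives zero steering, and a point to the left (positive y) gives a positive steering angle.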


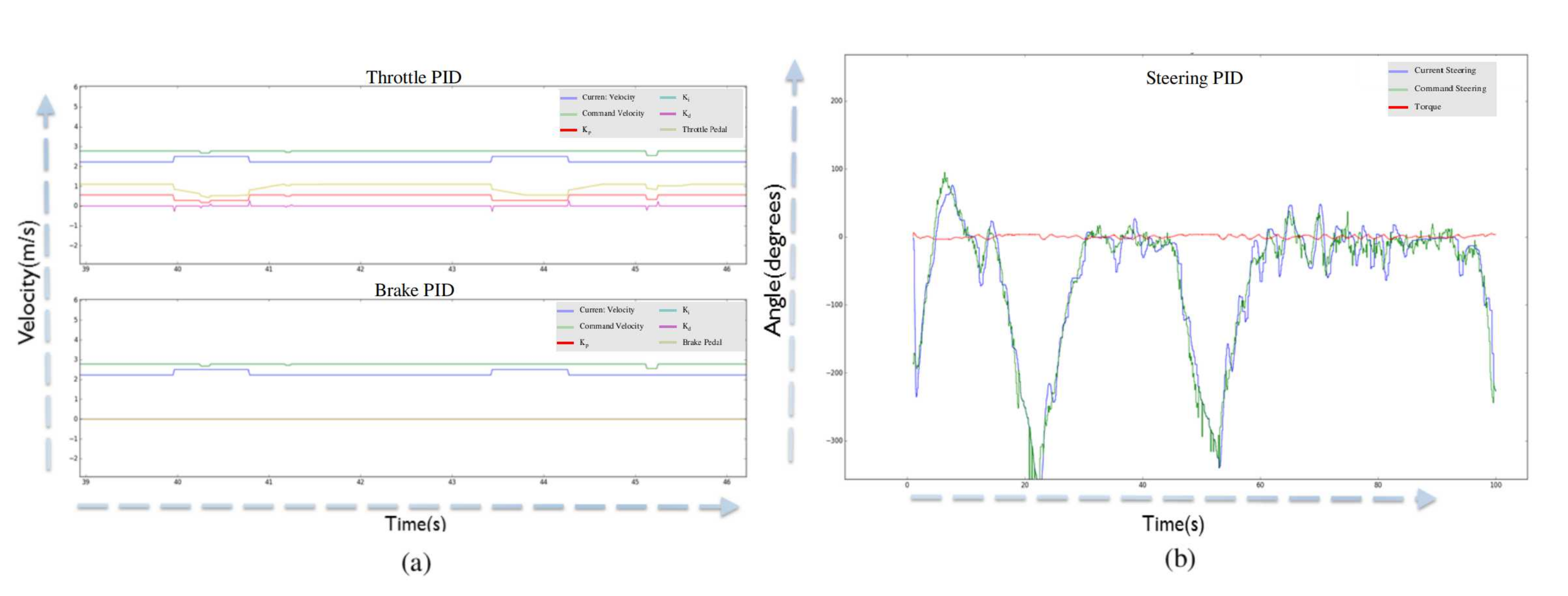

Figure 18.

(a) The current velocity (from the CAN bus) and the command velocity (from the twist filter). It also shows the throttle pedal and the PID parameters (proportional, integral and derivative), in the range between 1 and −1. (b) The current and target steering values along with the torque information [24] (Best viewed in color).


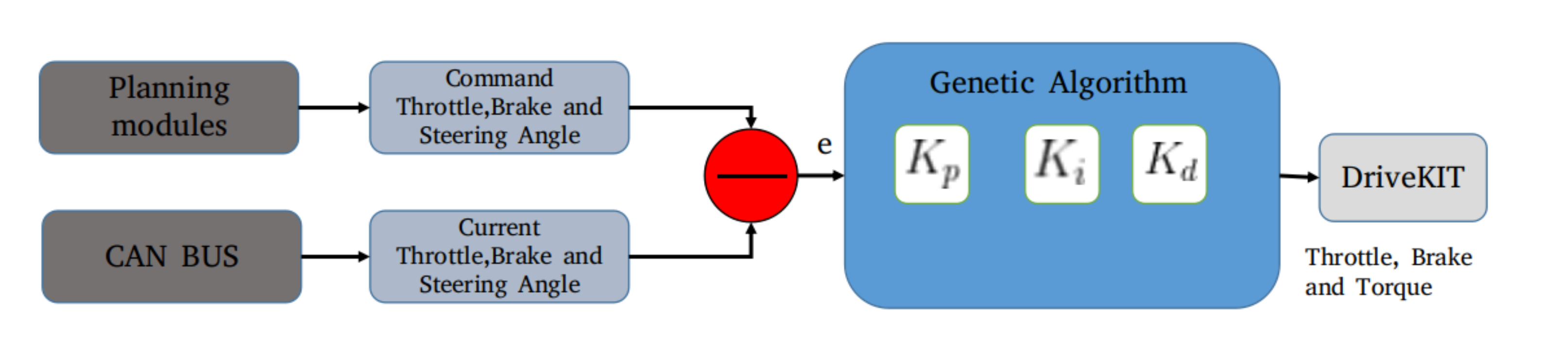

Figure 19.

Framework for tuning the PID parameters using a genetic algorithm. The parameters are tuned separately for throttle, brake and steering.
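The caption does not specify the genetic algorithm's objective or operators. As a hedged illustration only, the sketch below tunes (Kp, Ki, Kd) for a hypothetical first-order plant standing in for the vehicle. It minimizes the integral of squared error (ISE) of a unit step response. It uses tournament selection, blend crossover, Gaussian mutation and elitism. The plant model, gain bounds, population size and operator choices are all assumptions.

```python
import random

def simulate_ise(gains, setpoint=1.0, dt=0.05, steps=200, tau=1.0, gain=1.0):
    """ISE of a PID loop around a hypothetical plant tau*dy/dt = -y + gain*u."""
    kp, ki, kd = gains
    y, integral, prev_err, cost = 0.0, 0.0, setpoint, 0.0
    for _ in range(steps):
        err = setpoint - y
        integral += err * dt
        deriv = (err - prev_err) / dt  # zero on the first step by construction
        prev_err = err
        u = max(-10.0, min(10.0, kp * err + ki * integral + kd * deriv))
        y += dt * (-y + gain * u) / tau  # forward-Euler plant update
        cost += err * err * dt
    return cost

BOUNDS = ((0.0, 10.0), (0.0, 5.0), (0.0, 1.0))  # assumed (Kp, Ki, Kd) ranges

def genetic_tune(pop_size=30, generations=40, bounds=BOUNDS,
                 mutation_sigma=0.1, seed=0):
    """Return (best_gains, best_cost) found by a simple real-coded GA."""
    rng = random.Random(seed)

    def clip(g):
        return tuple(min(max(v, lo), hi) for v, (lo, hi) in zip(g, bounds))

    pop = [tuple(rng.uniform(lo, hi) for lo, hi in bounds) for _ in range(pop_size)]
    for _ in range(generations):
        scored = sorted((simulate_ise(g), g) for g in pop)
        nxt = [g for _, g in scored[:2]]  # elitism: keep the two best
        while len(nxt) < pop_size:
            # Tournament selection (size 3) for each parent.
            p1 = min(rng.sample(scored, 3))[1]
            p2 = min(rng.sample(scored, 3))[1]
            w = rng.random()
            child = tuple(w * a + (1 - w) * b for a, b in zip(p1, p2))  # blend crossover
            child = tuple(v + rng.gauss(0.0, mutation_sigma * (hi - lo))
                          for v, (lo, hi) in zip(child, bounds))  # Gaussian mutation
            nxt.append(clip(child))
        pop = nxt
    best = min(pop, key=simulate_ise)
    return best, simulate_ise(best)

if __name__ == "__main__":
    print(genetic_tune())
```

In practice, the objective would be evaluated on logged or live vehicle responses rather than on a toy plant. Each evaluation is then far more expensive, which favours small populations.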


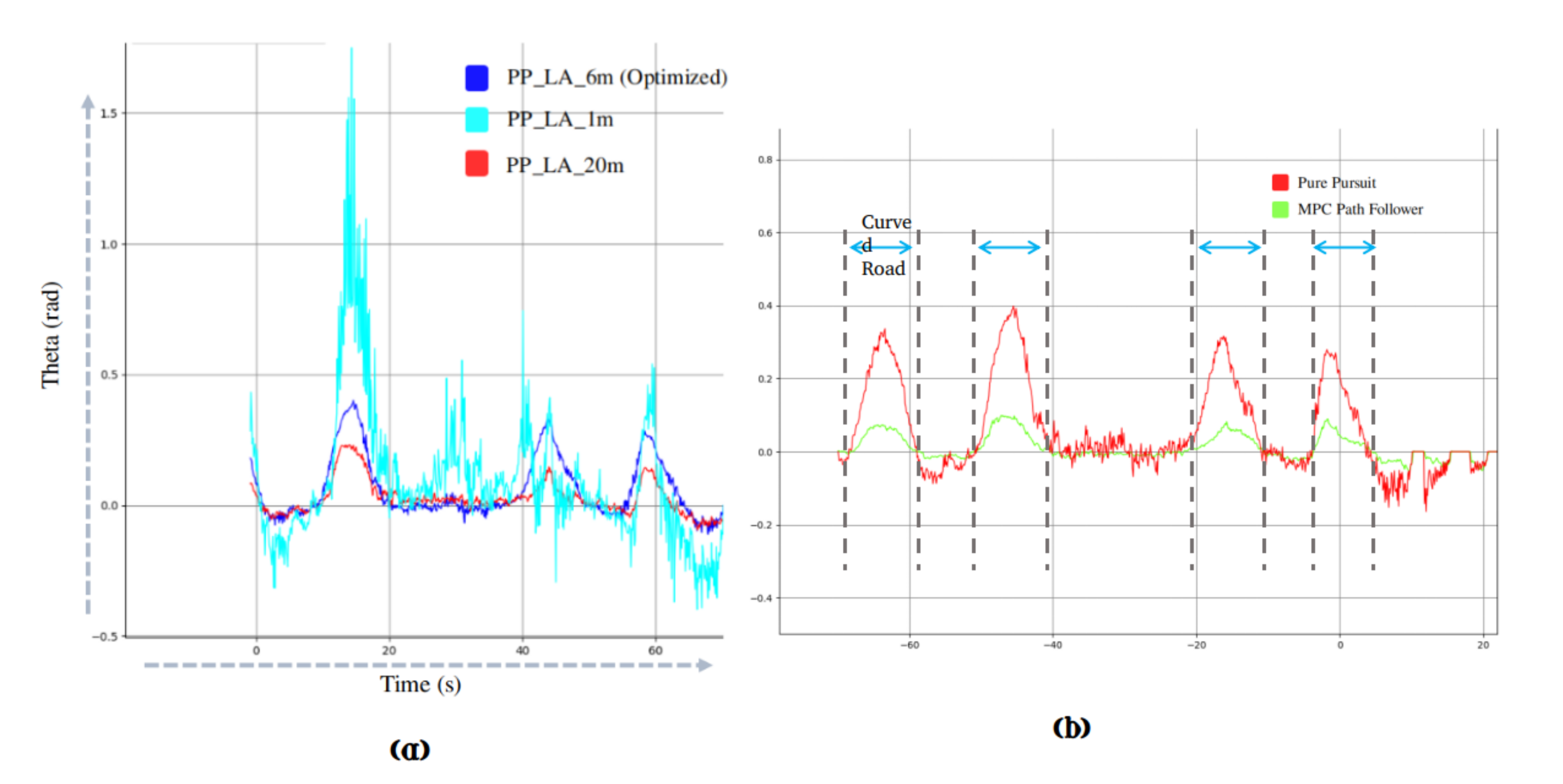

Figure 20.

(a) The effect of the look-ahead distance in pure pursuit. The legend lists the look-ahead configurations; for instance, PP-LA-1m corresponds to a 1 m look-ahead distance in pure pursuit. A 1 m look-ahead distance is more sensitive to noise and produces more oscillation in the lateral control of the vehicle, whereas a 20 m look-ahead distance causes the lateral control to deviate from the original track. The optimized look-ahead distance of 6 m gives the smallest error of the tested configurations. The steering angle for lateral control is shown as Theta (rad). (b) Comparison of the lateral error between MPC and pure pursuit [24] (Best viewed in color).


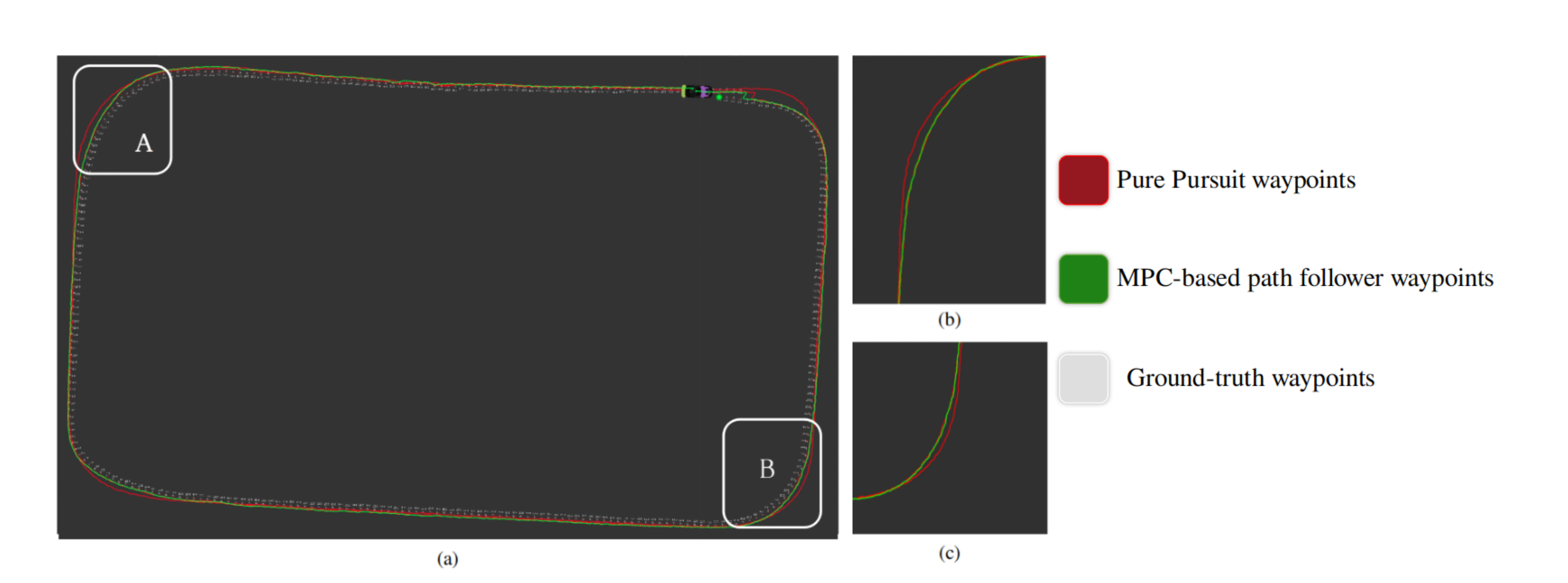

Figure 21.

(a) Qualitative comparison between pure pursuit and the MPC-based path follower. The results of pure pursuit and of the MPC-based path follower are shown in (b) and (c), respectively [24] (Best viewed in color).


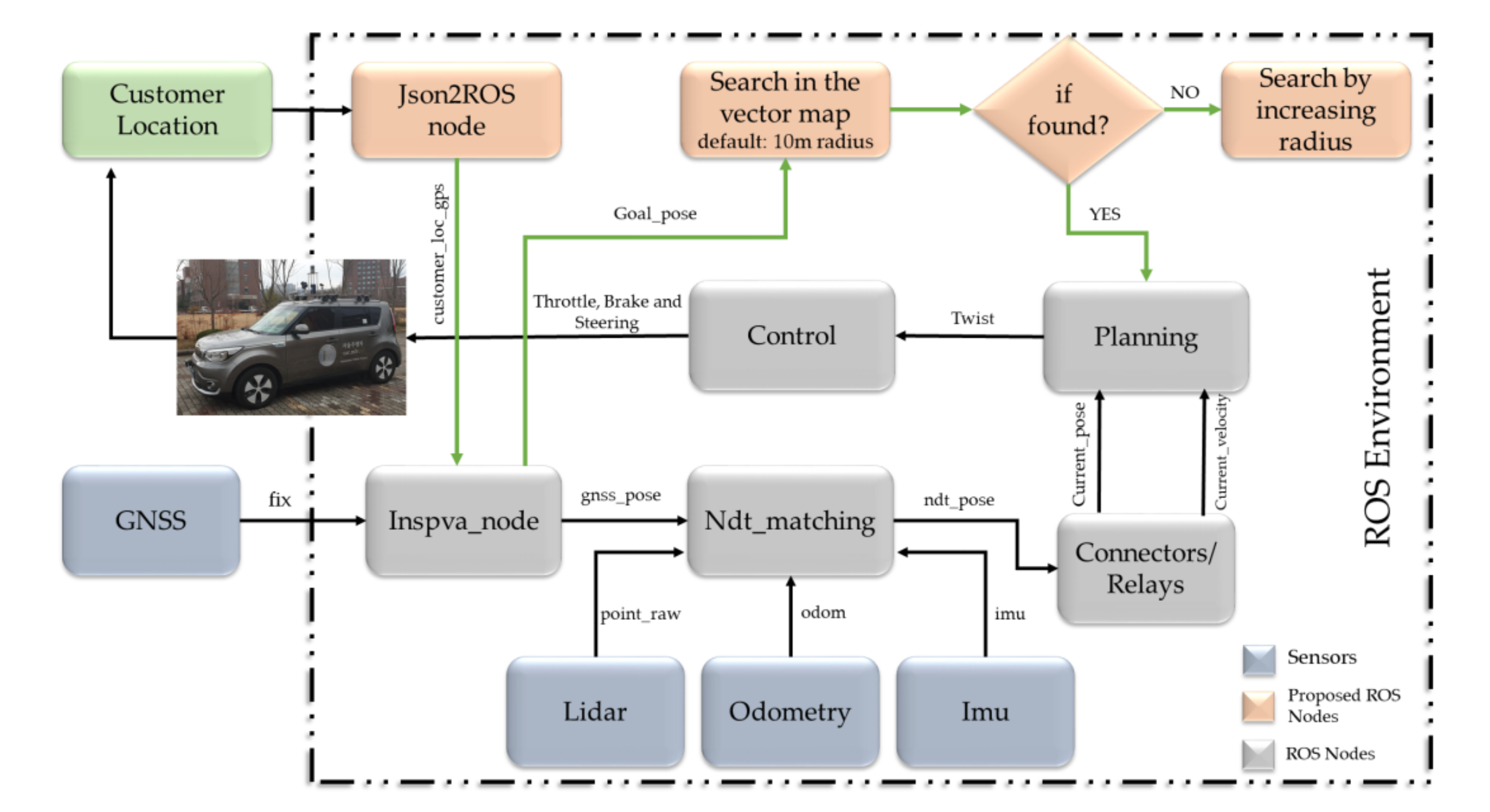

Figure 22.

The framework of cab booking service (Best viewed in color).


Figure 23.

The detailed architecture of converting the autonomous vehicle to a cab service (Best viewed in color).


Figure 24.

Visualization of an autonomous taxi service as an application of our autonomous vehicle. (a) The 3D map showing the start position and the customer's pick-up position upon receiving the request. (b) Image view of the start position. (c) Front-facing camera view from the start position. (d) The customer waiting for the autonomous taxi service. (e) The map of our institute showing the start, customer and destination positions (Best viewed in color).


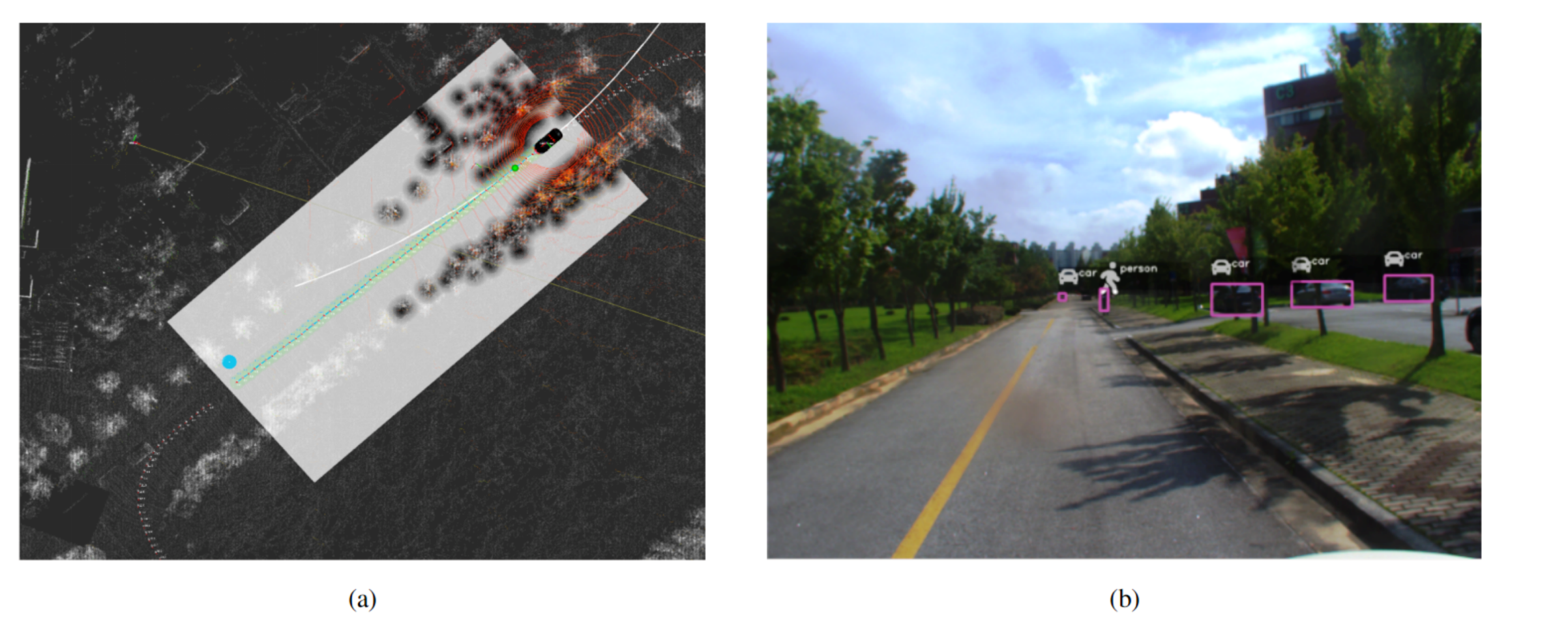

Figure 25.

Visualization results of the autonomous vehicle approaching to pick up the customer. (a) The 3D map of the environment showing the ego vehicle and the customer's position. (b) The view of the customer from the front-facing camera of the autonomous vehicle. (c) The environment, also showing the app the customer uses to request the autonomous taxi service (Best viewed in color).


Figure 26.

The route of the autonomous vehicle from the customer pick-up to the destination is shown in (a–c), along with object detection and the autonomous vehicle stopping at an obstacle in (d,e). In this case, obstacle avoidance is not performed because the road has only one lane in the direction of travel and crossing the yellow line is against traffic rules (Best viewed in color).


Table 1.

Normal Distributions Transform (NDT) Matching Experimental Parameters.


| Parameters | Values |
|---|---|
| Error Threshold | 1 |
| Transformation Epsilon | 0.1 |
| Max Iteration | 50 |
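NDT models the reference map as a grid of Gaussians and scores a candidate pose by how well the transformed scan points fit them. The sketch below is a simplified 2D illustration, whereas the vehicle uses 3D NDT. It builds per-cell means and inverse covariances and evaluates the score for a pose (tx, ty, θ). The iterative optimizer that maximizes this score is omitted. In common NDT implementations, that optimizer stops after a maximum number of iterations or once the pose update falls below the transformation epsilon, which is where the Table 1 parameters would apply. The `eps` regularization and `min_points` are assumptions.

```python
import math
from collections import defaultdict

def build_ndt_cells(points, cell_size, min_points=3, eps=1e-3):
    """Fit a 2D Gaussian (mean, inverse covariance) to the points in each grid cell."""
    buckets = defaultdict(list)
    for x, y in points:
        buckets[(math.floor(x / cell_size), math.floor(y / cell_size))].append((x, y))
    cells = {}
    for key, pts in buckets.items():
        n = len(pts)
        if n < min_points:
            continue  # too few points for a stable covariance
        mx = sum(p[0] for p in pts) / n
        my = sum(p[1] for p in pts) / n
        # Sample covariance, regularized on the diagonal to stay invertible.
        sxx = sum((p[0] - mx) ** 2 for p in pts) / (n - 1) + eps
        syy = sum((p[1] - my) ** 2 for p in pts) / (n - 1) + eps
        sxy = sum((p[0] - mx) * (p[1] - my) for p in pts) / (n - 1)
        det = sxx * syy - sxy * sxy
        inv = (syy / det, -sxy / det, sxx / det)  # entries a, b, c of [[a, b], [b, c]]
        cells[key] = ((mx, my), inv)
    return cells

def ndt_score(scan, pose, cells, cell_size):
    """Sum of Gaussian likelihoods of scan points transformed by pose = (tx, ty, theta)."""
    tx, ty, th = pose
    c, s = math.cos(th), math.sin(th)
    score = 0.0
    for x, y in scan:
        wx, wy = c * x - s * y + tx, s * x + c * y + ty
        key = (math.floor(wx / cell_size), math.floor(wy / cell_size))
        if key not in cells:
            continue
        (mx, my), (a, b, cc) = cells[key]
        dx, dy = wx - mx, wy - my
        score += math.exp(-0.5 * (a * dx * dx + 2 * b * dx * dy + cc * dy * dy))
    return score
```

An optimizer such as Newton's method would adjust the pose to increase `ndt_score`. A correctly aligned scan scores higher than a shifted one.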

Table 2.

Quantitative comparison between the GNSS pose and the NDT pose. RMSE is computed in the x, y and z directions, and the overall RMSE is also computed between the two poses.


| Error between GNSS Pose and NDT Pose | RMSE |
|---|---|
| x-direction | 0.2903 |
| y-direction | 0.0296 |
| z-direction | 0.0361 |
| Overall | 1.7346 |
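The per-axis errors in Table 2 follow the usual RMSE definition over time-aligned pose pairs. A minimal sketch is shown below, assuming the two pose lists are already synchronized. It also computes one common overall metric: the RMSE of the Euclidean position error, which equals the root-sum-square of the per-axis RMSEs. Applied to the per-axis values in Table 2, this combination would give about 0.29. The table's overall figure was therefore presumably aggregated in a different way, which the caption does not specify.

```python
import math

def rmse_per_axis(estimates, references):
    """Per-axis RMSE between two equally long, time-aligned lists of (x, y, z) poses."""
    if len(estimates) != len(references) or not estimates:
        raise ValueError("need two non-empty, equally long pose lists")
    n = len(estimates)
    return tuple(
        math.sqrt(sum((e[i] - r[i]) ** 2 for e, r in zip(estimates, references)) / n)
        for i in range(3)
    )

def rmse_3d(estimates, references):
    """RMSE of the Euclidean position error (root-sum-square of per-axis RMSEs)."""
    return math.sqrt(sum(v * v for v in rmse_per_axis(estimates, references)))
```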

Table 3.

Ground Plane Removal Filter Experimental Parameters.


| Parameters | Values |
|---|---|
| Sensor height | 1.8 |
| Distance Threshold | 1.58 |
| Angle Threshold | 0.08 |
| Size Threshold | 20 |
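The table does not define how the distance, angle and size thresholds enter the filter. The sketch below therefore illustrates only the simplest ingredient, the sensor height. It assumes a flat ground plane at z = -sensor_height in the Lidar frame (z up). Every point at or below that plane plus a hypothetical `height_tolerance` is labeled as ground. Practical filters add slope (angle) and neighbourhood checks to handle uneven roads.

```python
def remove_ground(points, sensor_height, height_tolerance=0.2):
    """Split Lidar points (x, y, z in the sensor frame, z up) into non-ground and ground.

    Assumes flat ground at z = -sensor_height; points lower than that plane
    plus height_tolerance (a hypothetical parameter) are treated as ground.
    """
    ground_limit = -sensor_height + height_tolerance
    non_ground, ground = [], []
    for p in points:
        (ground if p[2] <= ground_limit else non_ground).append(p)
    return non_ground, ground

if __name__ == "__main__":
    pts = [(5, 0, -1.8), (5, 0, -1.7), (5, 0, -0.5), (5, 0, 0.3)]
    print(remove_ground(pts, sensor_height=1.8))
```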

Table 4.

Experimental Parameters for Planning Layer.


| Parameters | Values |
|---|---|
| Stop distance for obstacle Threshold | 10 |
| Velocity replanning distance Threshold | 10 |
| Detection range | 12 |
| Threshold for objects | 20 |
| Deceleration for obstacle | 1.3 |
| Curve Angle | 0.65 |
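One way the stop distance and the deceleration parameters can interact during velocity replanning is to cap the target speed at the largest speed from which the vehicle can still halt under constant deceleration a within the remaining distance s, that is, v = sqrt(2 a s). This sketch is a hedged illustration, not the planner's documented rule. It assumes the stop distance is a buffer kept in front of the obstacle and that units are metres and m/s²; the table does not state units.

```python
import math

def replanned_speed(track_speed, obstacle_dist, stop_distance, decel):
    """Cap the target speed so the vehicle can halt `stop_distance` short of the
    obstacle under constant deceleration `decel` (v = sqrt(2 * a * s))."""
    if obstacle_dist is None:
        return track_speed  # no obstacle: keep tracking the nominal speed
    remaining = max(obstacle_dist - stop_distance, 0.0)
    return min(track_speed, math.sqrt(2.0 * decel * remaining))

if __name__ == "__main__":
    # Table 4 values under the assumed units: stop distance 10, deceleration 1.3.
    for d in (None, 100.0, 15.0, 10.0):
        print(d, replanned_speed(8.33, d, stop_distance=10.0, decel=1.3))
```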

Table 5.

Quantitative comparison of the Cohen-Coon method and a genetic algorithm for tuning the PID parameters. The Cohen-Coon method is a manual tuning method, whereas with the genetic algorithm the PID parameters are obtained by defining an objective function and solving an optimization problem [24].


| Controller Parameters | Throttle (Cohen-Coon Method) | Throttle (Genetic Algorithm) | Steering (Cohen-Coon Method) | Steering (Genetic Algorithm) |
|---|---|---|---|---|
| Kp | 0.085 | 0.003 | 0.0009 | 0.0005 |
| Ki | 0.0045 | 0.0001 | 0.0001 | 0.0002 |
| Kd | 0.01 | 0.09 | 0.0005 | 0.0008 |

Table 6.

Pure pursuit tuned parameters for 30 km/h speed.


| Pure Pursuit Parameters | Values |
|---|---|
| Look-ahead ratio | 2 |
| Minimum Look-ahead Distance | 6 |
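A look-ahead ratio and a minimum look-ahead distance are commonly combined as l_d = max(ratio × speed, minimum distance). The target point is then the first waypoint at least l_d away. The sketch below assumes this formulation and speed in m/s; the document does not spell out the exact rule. The function names are hypothetical.

```python
import math

def lookahead_distance(speed, ratio=2.0, min_distance=6.0):
    """Speed-proportional look-ahead distance with a lower bound (assumed formulation)."""
    return max(ratio * speed, min_distance)

def select_target(waypoints, position, ld):
    """Return the first waypoint at least `ld` from `position`, else the last waypoint."""
    px, py = position
    for wx, wy in waypoints:
        if math.hypot(wx - px, wy - py) >= ld:
            return (wx, wy)
    return waypoints[-1]

if __name__ == "__main__":
    path = [(0.0, 0.0), (3.0, 0.0), (7.0, 0.0), (12.0, 0.0)]
    ld = lookahead_distance(2.0)  # 2 * 2.0 = 4.0 is below the 6.0 minimum
    print(ld, select_target(path, (0.0, 0.0), ld))
```

The lower bound keeps the controller from chasing points too close to the vehicle at low speed, which is the noise-sensitive regime shown in Figure 20.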

Table 7.

Hardware-level stack comparison between state-of-the-art autonomous vehicles and ours in terms of sensor utilization.


| S.No | Autonomous Vehicles | Lidar Units | Camera Units | Supplementary Sensors (GNSS, IMU, Radars, Sonars) |
|---|---|---|---|---|
| 1. | Waymo [14] | 5 | 1 | GNSS+IMU |
| 2. | GM Chevy Bolt Cruise [15] | 5 | 16 | Radars |
| 3. | ZOOX [79] | 8 | 12 | Radar+GNSS+IMU |
| 4. | Uber(ATG) [12] | 7 | 20 | Radars, GNSS+IMU |
| 5. | Ours (Car.Mlv.ai) | 1 | 2 | GNSS+IMU |

Table 8.

Hardware comparison of relevant research-based autonomous vehicles. ● indicates that a component is present, ❍ indicates that it is absent, and ? indicates that the information is not public. For reference, DARPA challenge vehicles and Apollo are included in the comparison.


| Sensors | VaMP [6] | Junior [7] | Boss [17] | Bertha [11] | Race [8] | Halmstad [9] | Bertha [18] | Apollo [10] | Ours (Car.Mlv.ai) |
|---|---|---|---|---|---|---|---|---|---|
| Year | 1994 | 2007 | 2007 | 2013 | 2015 | 2016 | 2016 | 2018 | 2019 |
| Camera | ● front/rear | ❍ | ● front | ● stereo | ● front | ❍ | ● stereo/360 deg | ● front/side | ● front |
| Lidar | ❍ | ● 64 channels | ● | ❍ | ● 4 channels | ❍ | ● 4 channels | ● 64 channels | ● 32 channels |
| Radar | ❍ | ● | ● | ● | ● | ● series | ● | ● | ● |
| GPS | ❍ | ● | ● | ● | ● | ● rtk | ● | ● rtk | ● rtk |
| INS | ● | ● | ● | ? | ● | ● | ● | ● | ● |
| PC | ● | ● | ● | ? | ● | ● | ● | ● | ● |
| GPU | ❍ | ❍ | ❍ | ? | ❍ | ❍ | ● | ● | ● |

Table 9.

Software comparison of relevant research-based autonomous vehicles. ? indicates that the information is not public.


| | VaMP [6] | Junior [7] | Boss [17] | Bertha [11] | Race [8] | Halmstad [9] | Bertha [18] | Apollo [10] | Ours (Car.Mlv.ai) |
|---|---|---|---|---|---|---|---|---|---|
| Year | 1994 | 2007 | 2007 | 2013 | 2015 | 2016 | 2016 | 2018 | 2019 |
| Application | German Highway | DARPA Urban Challenge | DARPA Urban Challenge | German Rural | Parking | Cooperative Driving Challenge | Cooperative Driving Challenge | Various | Various, with a focus on autonomous cab services |
| Middleware | ? | Publish/Subscribe IPC | Publish/Subscribe IPC | ? | RACE RTE | LCM | ROS | Cyber RT | ROS |
| Operating System | ? | Linux | ? | ? | PikeOS | Linux | Linux | Linux | Linux |
| Functional Safety | None | Watchdog module | Error Recovery | ? | Supporting ASIL D | Trust System | ? | System Health Monitor | Supporting ASIL D |
| Controller | ? | PC | ? | ? | RACE DDC | MicroAutoBox | Real-time onboard computer | PC | DriveKit and onboard controller |
| Licensing | Proprietary | Partly open | Proprietary | Proprietary | Proprietary | Proprietary | Proprietary | Open | Open |

Table 10.

The details of sensors operating frequency.


| Sensors | Frequency (Hz) |
|---|---|
| Lidar | 15 |
| Camera | 25 |
| GPS | 20 |
| CAN info | 200 |
| IMU | 20 |
| Odometry | 200 |