ARBIN: Augmented Reality Based Indoor Navigation System

Abstract

1. Introduction

2. Related Work

2.1. Marker-Based Methods

2.2. 2D Image Recognition-Based Methods

2.3. 3D Space Recognition-Based Methods

3. Methodology

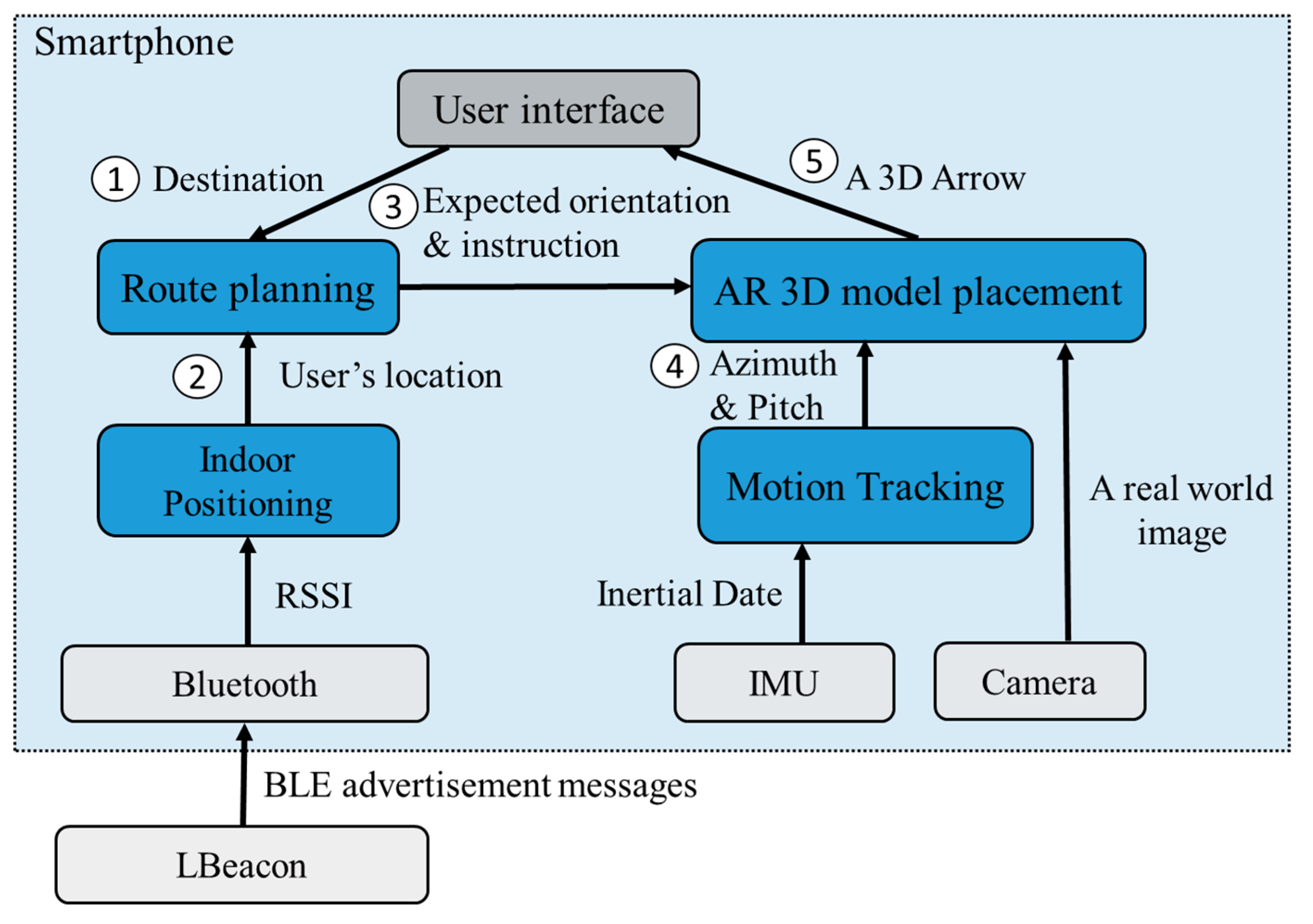

3.1. System Overview

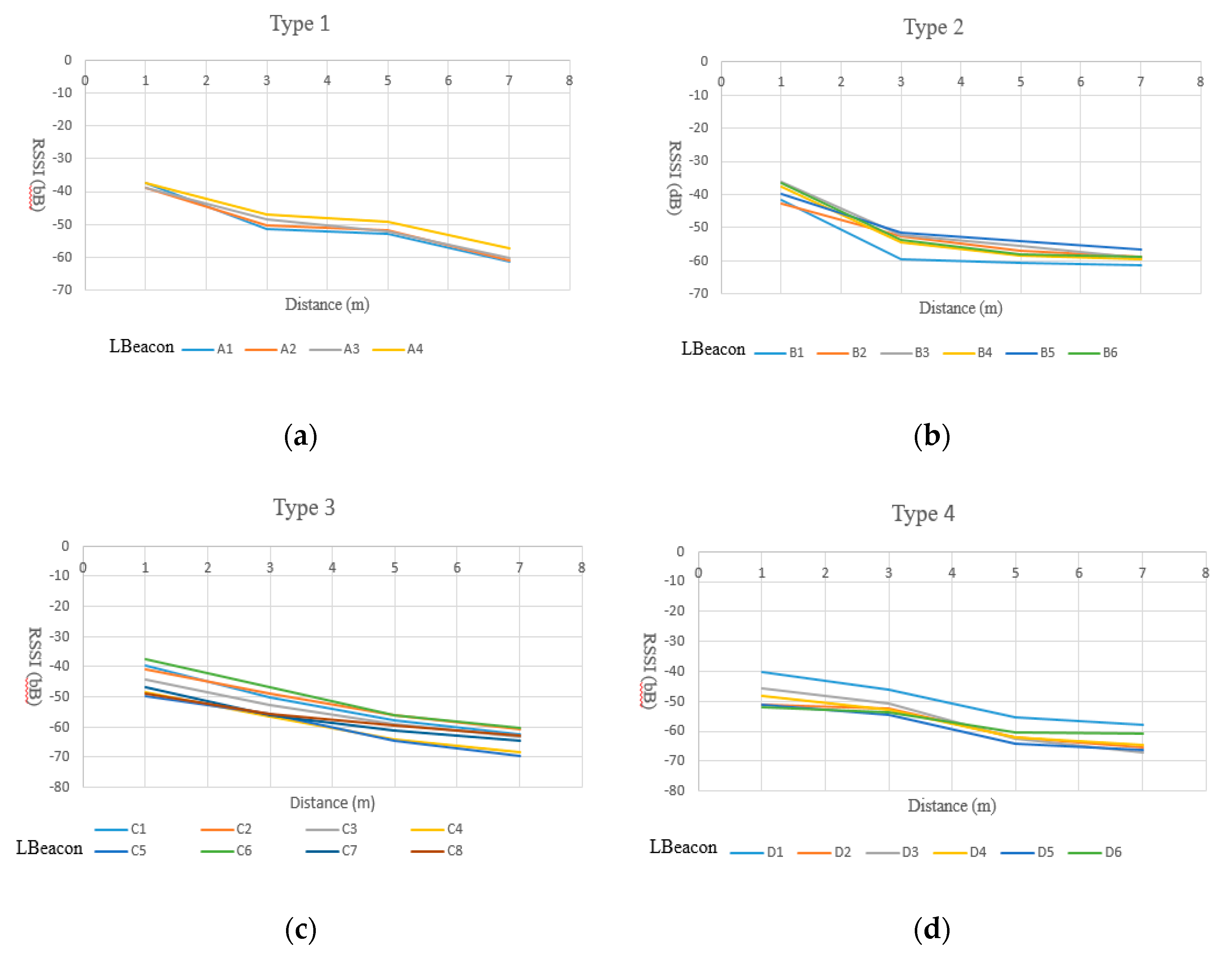

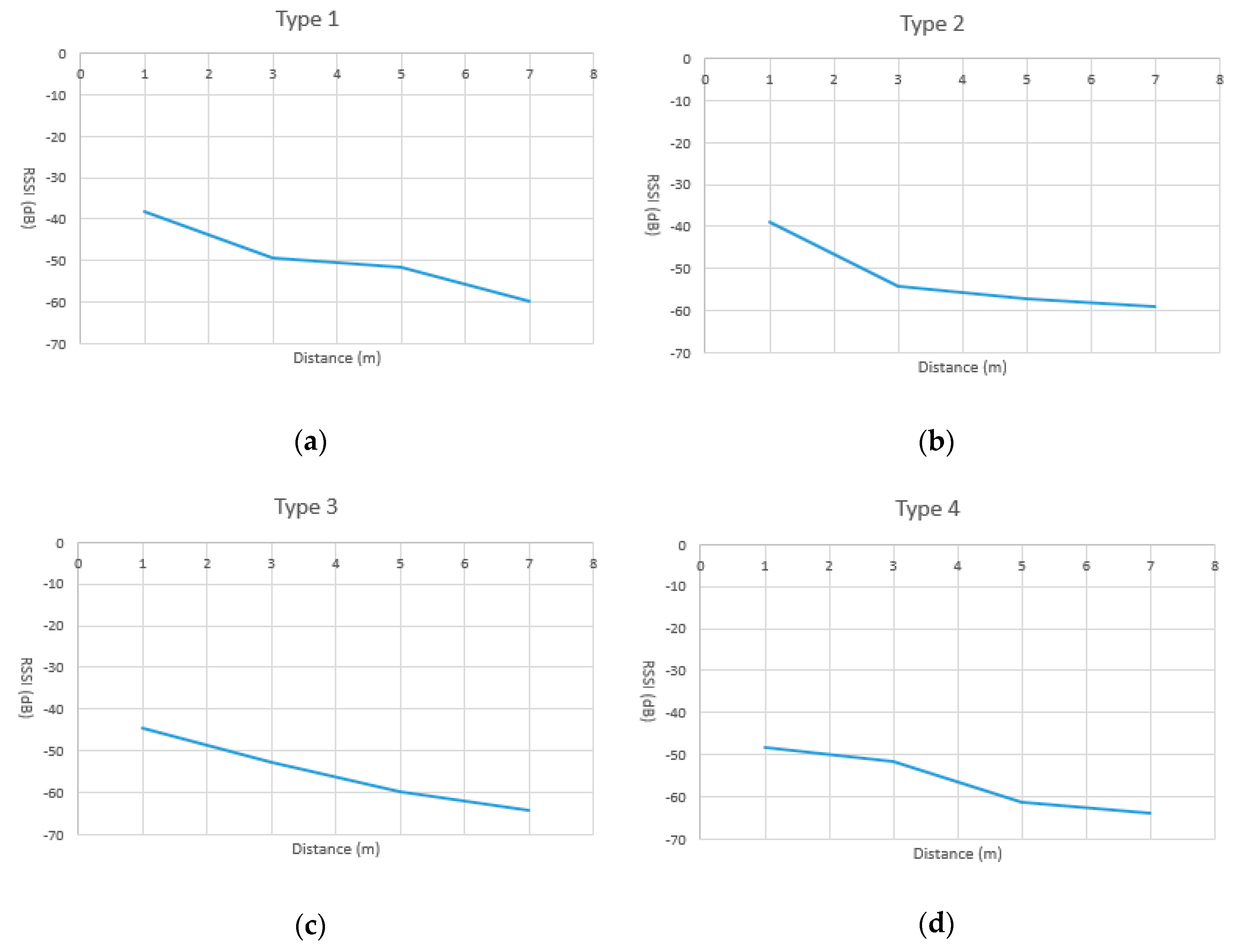

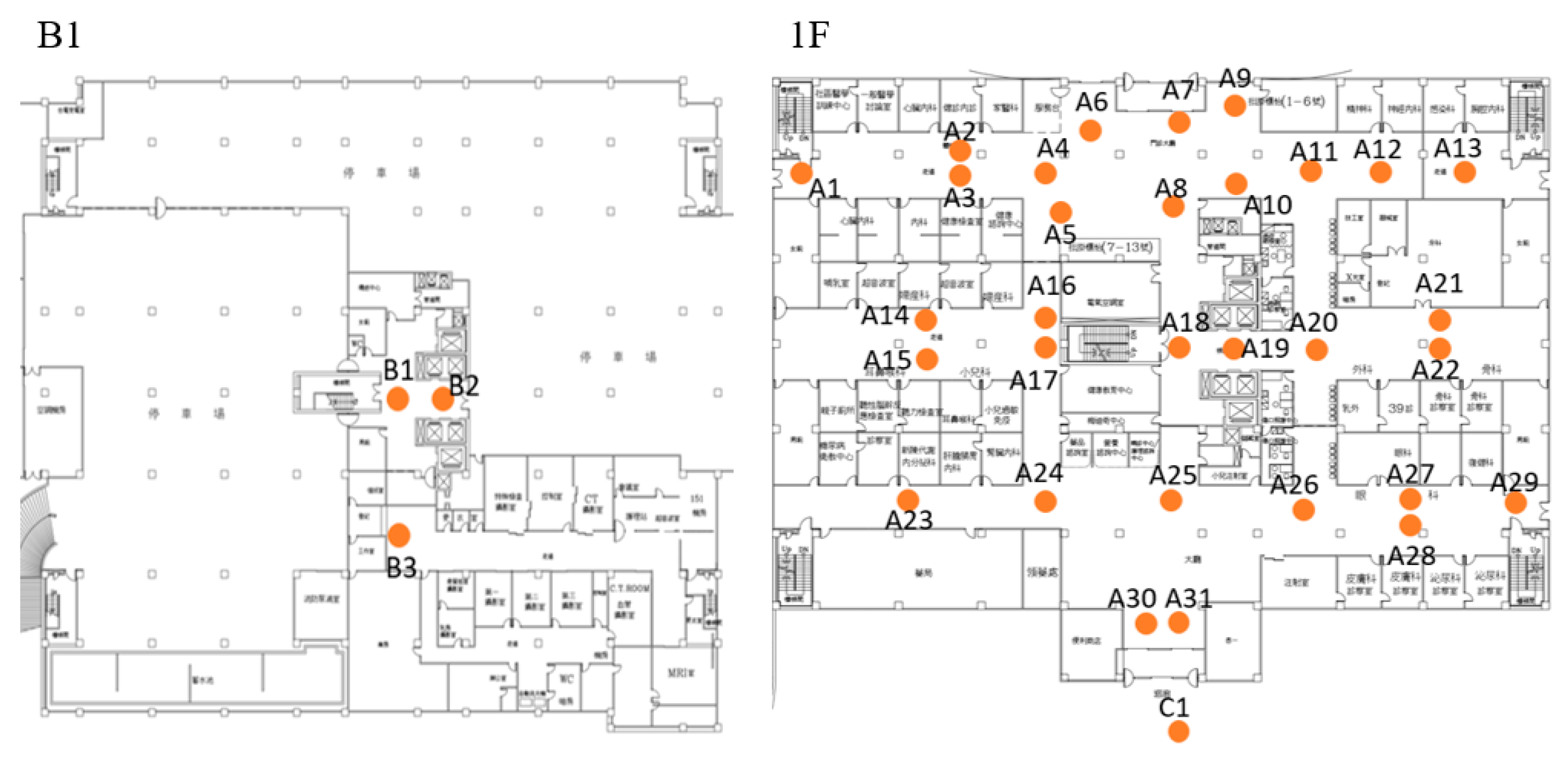

3.2. Indoor Positioning Module

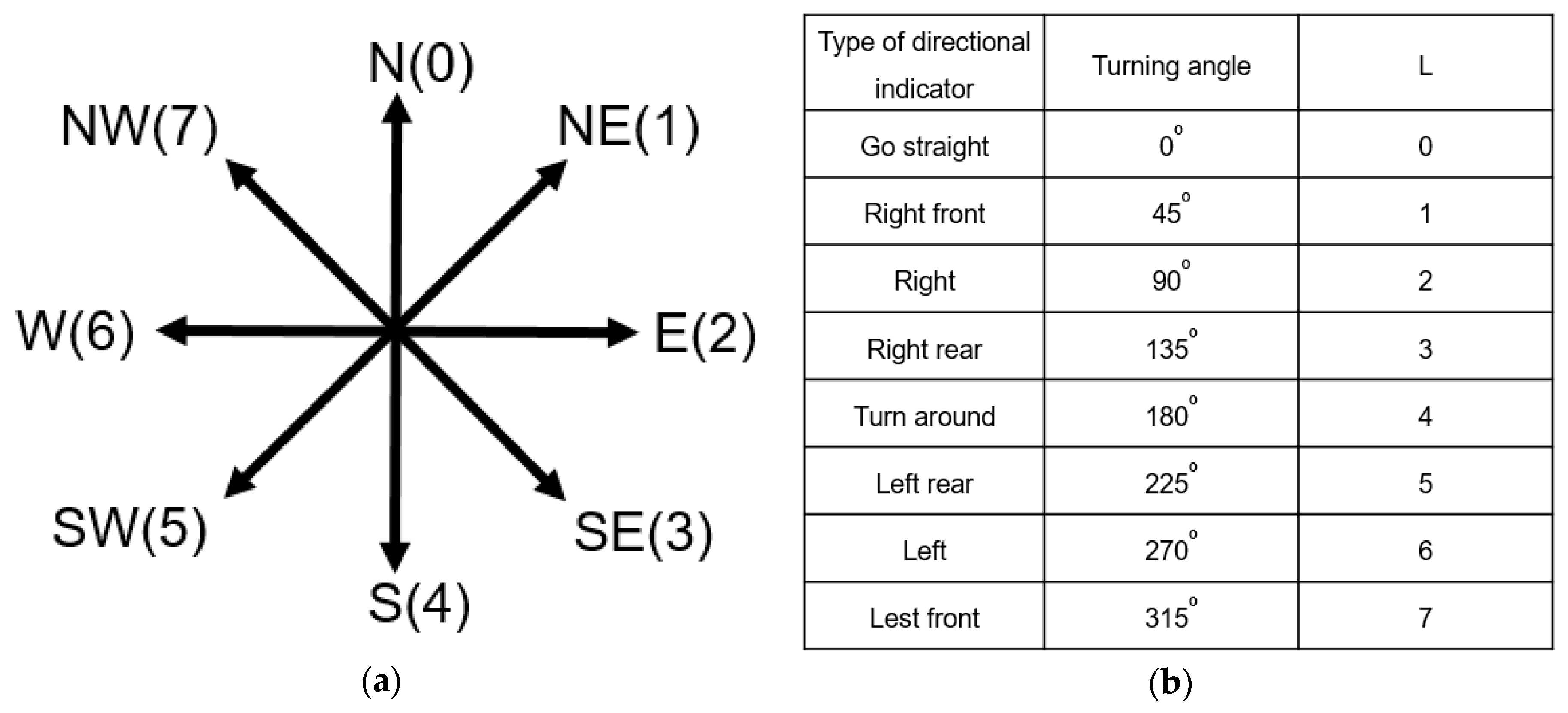

3.3. Route Planning Module

3.4. Motion Tracking Module

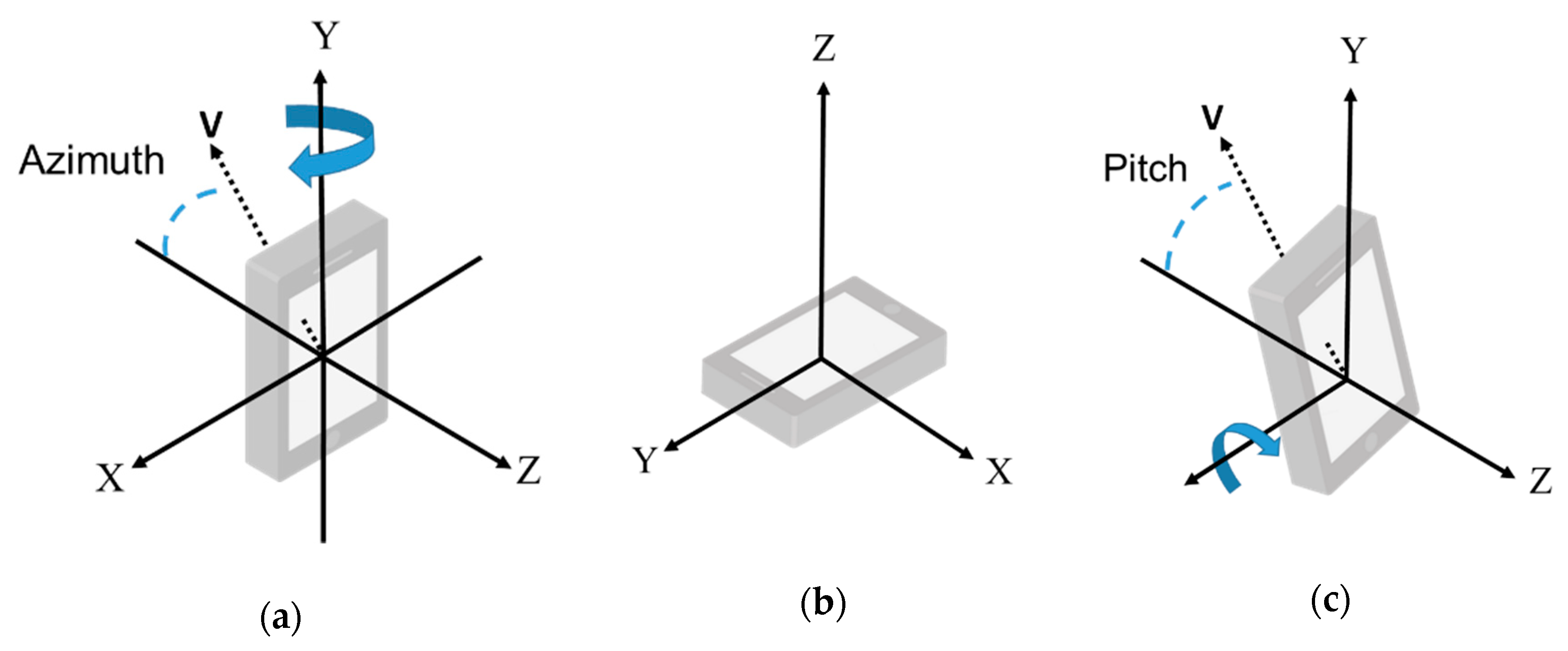

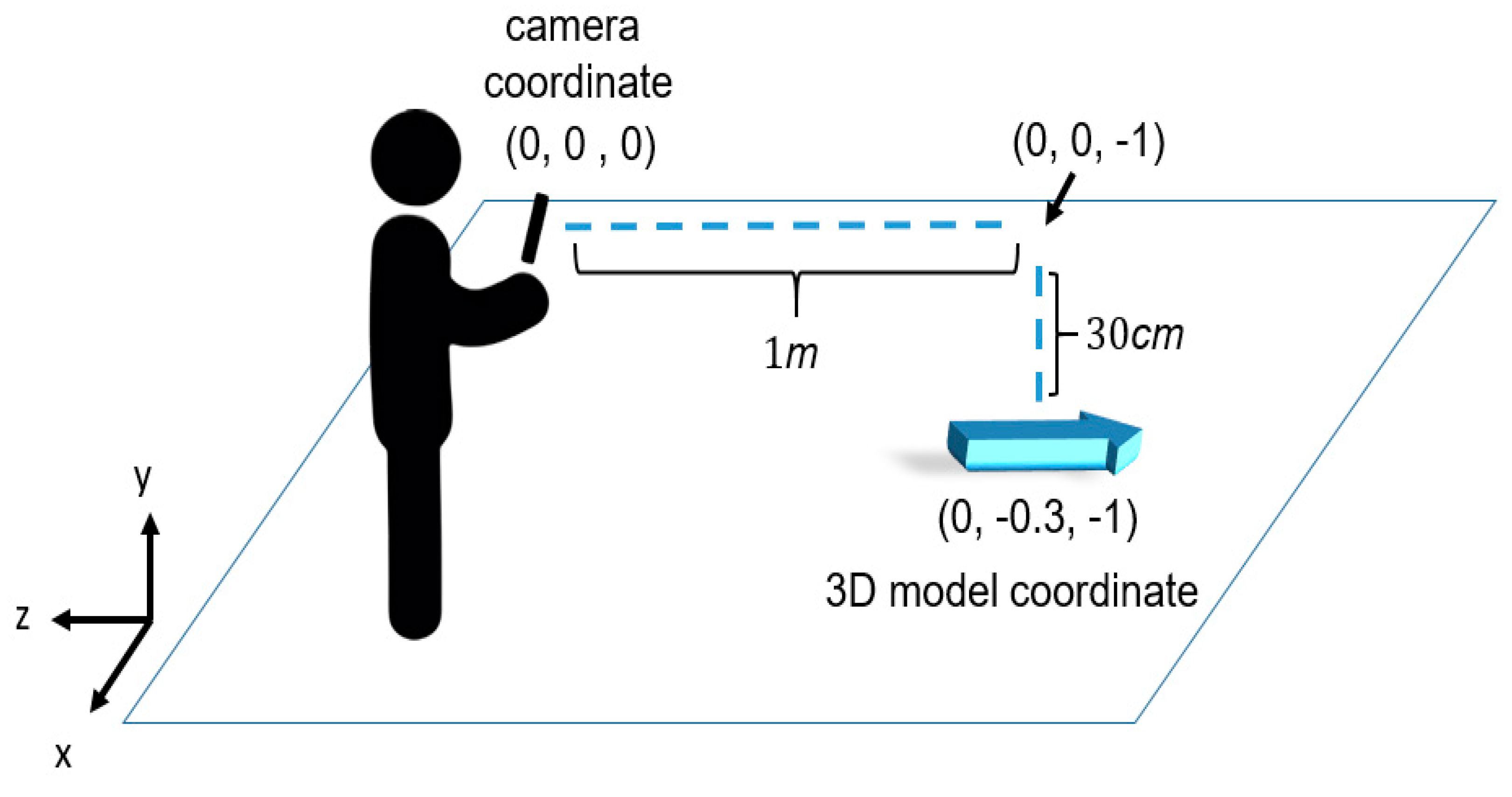

3.5. AR 3D Model Placement Module

4. Experiment

4.1. In-House Experiments

4.1.1. Evaluation of Azimuth of Smartphones

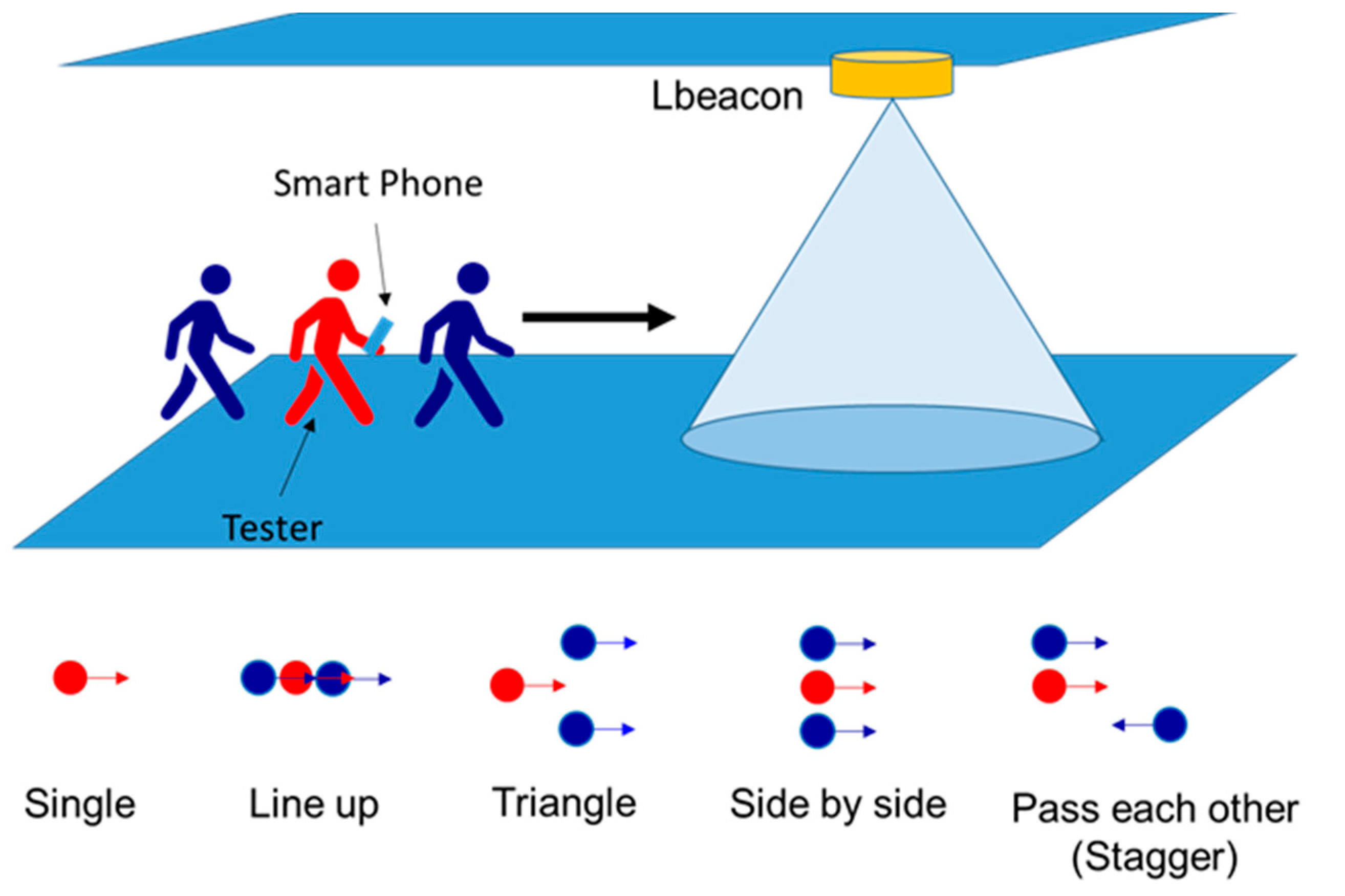

4.1.2. Responsiveness of ARBIN

4.2. Field Trial

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Model | CPU | Memory | OS | Bluetooth |
|---|---|---|---|---|
| Samsung Galaxy S10e | Exynos 9820 | 6 GB | Android 9.0 | 5.0 |
| Sony Xperia XZ Premium | Snapdragon 835 | 4 GB | Android 9.0 | 5.0 |

| Azimuth (Degree) | Samsung S10e, Max (Degree) | Samsung S10e, Min (Degree) | Sony Xperia XZ Premium, Max (Degree) | Sony Xperia XZ Premium, Min (Degree) |
|---|---|---|---|---|
| North (0) | +2.66 | –3.32 | +2.35 | –1.46 |
| Northeast (45) | +4.67 | –1.35 | +0.98 | –2.18 |
| East (90) | +3.26 | –2.54 | +1.23 | –3.35 |
| Southeast (135) | +2.09 | –2.52 | +2.33 | –3.04 |
| South (180/–180) | –0.03 | +0.01 | –0.01 | +0.01 |
| Southwest (–135) | +1.94 | –3.48 | +1.74 | –3.05 |
| West (–90) | +2.28 | –3.77 | +3.44 | –4.31 |
| Northwest (–45) | +2.49 | –3.64 | +1.28 | –1.65 |

| Lbeacon | Samsung Galaxy S10e, L (Forward Direction) | Samsung Galaxy S10e, L (Backward Direction) | Sony Xperia XZ Premium, L (Forward Direction) | Sony Xperia XZ Premium, L (Backward Direction) |
|---|---|---|---|---|
| A1 | 2.0 m | 0.8 m | 0.2 m | 0.4 m |
| A2 | 2.0 m | 1.1 m | 0.3 m | 0.4 m |
| A3 | 3.0 m | 5.3 m | 1.4 m | 0.4 m |
| B1 | 2.1 m | 1.4 m | 1.1 m | 0.4 m |
| B2 | 1.0 m | 3.1 m | 2.2 m | 0.6 m |
| B3 | 0.1 m | 1.8 m | 0.2 m | 0.2 m |
| B4 | 2.2 m | 2.0 m | 1.2 m | 0.2 m |
| C1 | 1.5 m | 1.6 m | 0.5 m | 1.2 m |
| C2 | 1.3 m | 3.1 m | 1.1 m | 1.2 m |
| C3 | 2.7 m | 3.0 m | 1.5 m | 1.9 m |
| Average | 1.7 m | 2.3 m | 1.0 m | 0.7 m |

| Route Information: Actions | Route Information: Lbeacons | Volunteer A (Samsung) | Volunteer B (Asus) | Volunteer C (Oppo) | Volunteer D (Sony) |
|---|---|---|---|---|---|
| Task 1: Go to the registration counter and then the clinic. | | | | | |
| Go to the registration counter | A6–A11 | Moderate | Moderate | Moderate | Moderate |
| Go to the clinic | A11–A20 | Moderate | Moderate | Moderate | Moderate |
| | A20–A22 | Arrive successfully | Arrive successfully | Arrive successfully | Arrive successfully |
| Task 2: Go to the X-ray examination room. | | | | | |
| Go to the stairs | A22–A20 | Moderate | Moderate | Moderate | Moderate |
| | A20–A19 | Moderate | Moderate | Moderate | Moderate |
| | A19–A18 | Moderate | Moderate | Moderate | Moderate |
| Go down the stairs | A18–B1 | Moderate | Moderate | Moderate | Moderate |
| Go to the examination room | B1–B3 | Arrive successfully | Arrive successfully | Arrive successfully | Arrive successfully |
| Task 3: Go to the pharmacy. | | | | | |
| Go to the stairs | B3–B1 | Moderate | Moderate | Moderate | Moderate |
| Go up the stairs | B1–A18 | Moderate | Slow | Moderate | Moderate |
| Go to the pharmacy | A18–A25 | Arrive successfully | Arrive successfully | Arrive successfully | Arrive successfully |
| Task 4: Go to the exit. | | | | | |
| Go to the exit | A25–A31 | Moderate | Moderate | Moderate | Moderate |
| | A31–C1 | Arrive successfully | Arrive successfully | Arrive successfully | Arrive successfully |

| Model | Working Pattern | No Signal | Slow | Moderate | Fast |
|---|---|---|---|---|---|
| Samsung Galaxy S10e | Single | 0 | 0 | 14 | 0 |
| | Line up | 0 | 0 | 14 | 0 |
| | Triangle | 0 | 0 | 14 | 0 |
| | Side by side | 0 | 0 | 14 | 0 |
| | Stagger | 0 | 0 | 14 | 0 |
| Sony Xperia XZ Premium | Single | 0 | 0 | 14 | 0 |
| | Line up | 1 (B3) | 0 | 13 | 0 |
| | Triangle | 0 | 1 (B3) | 13 | 0 |
| | Side by side | 0 | 0 | 14 | 0 |
| | Stagger | 1 (A10) | 0 | 13 | 0 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Huang, B.-C.; Hsu, J.; Chu, E.T.-H.; Wu, H.-M. ARBIN: Augmented Reality Based Indoor Navigation System. Sensors 2020, 20, 5890. https://doi.org/10.3390/s20205890
