INS/CNS Deeply Integrated Navigation Method of Near Space Vehicles

Abstract

1. Introduction

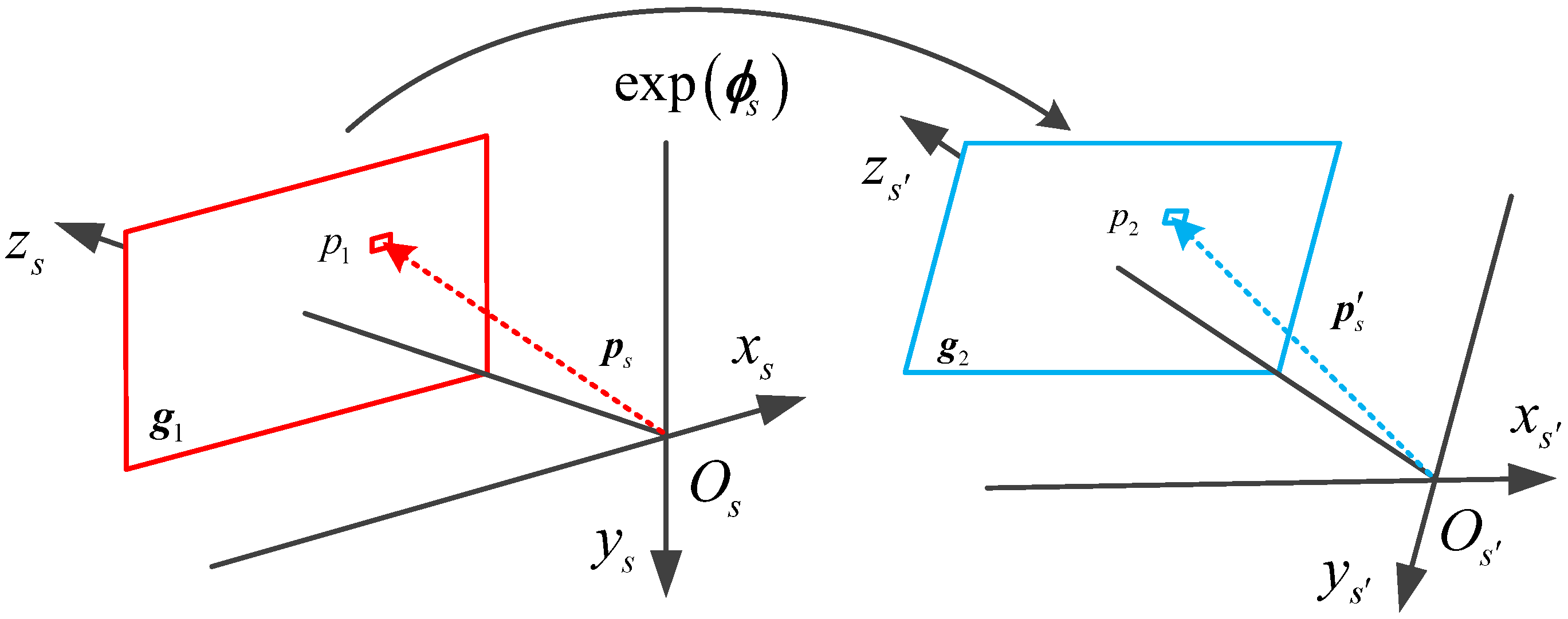

2. INS/CNS Deeply Integrated Model

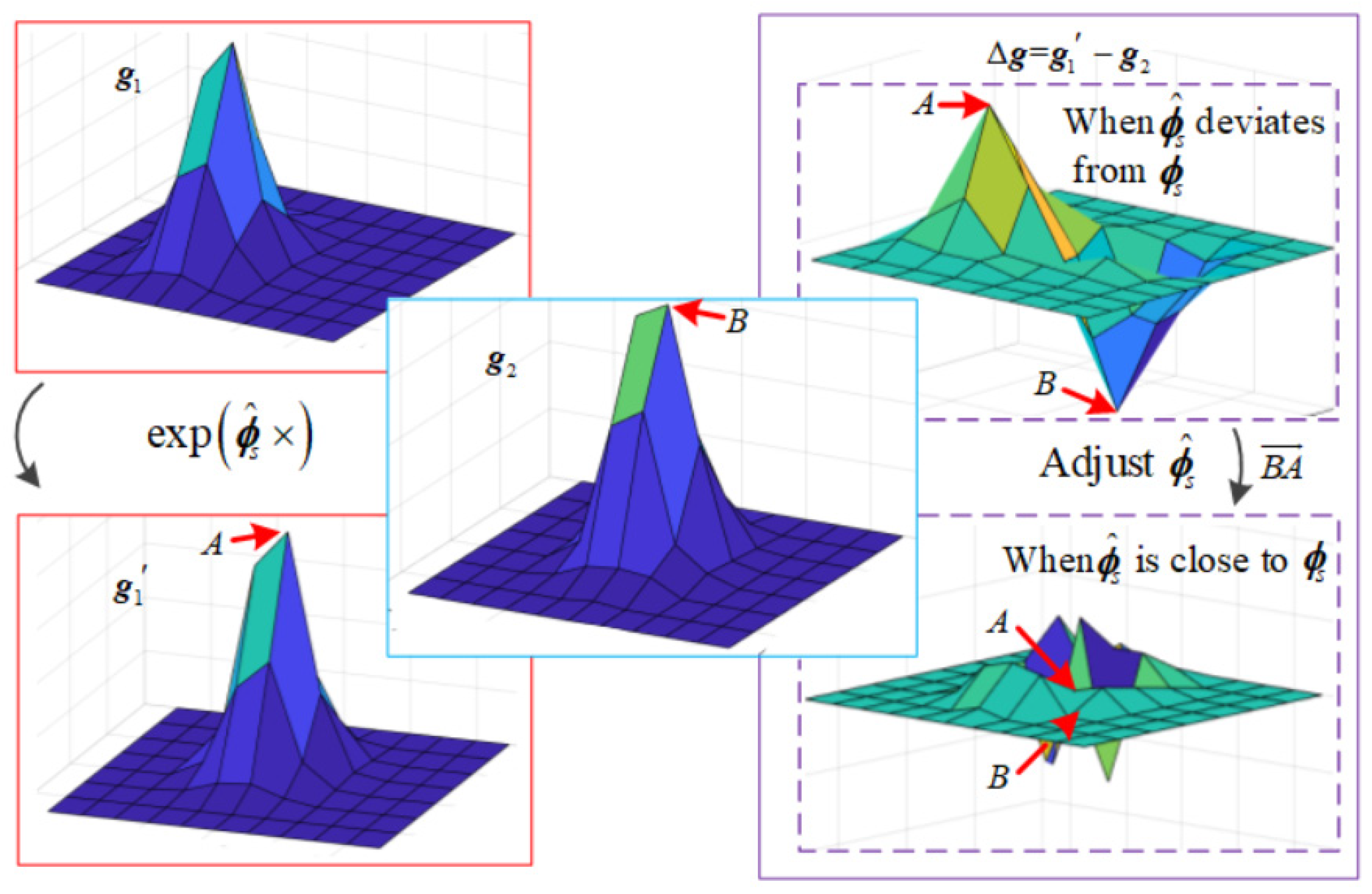

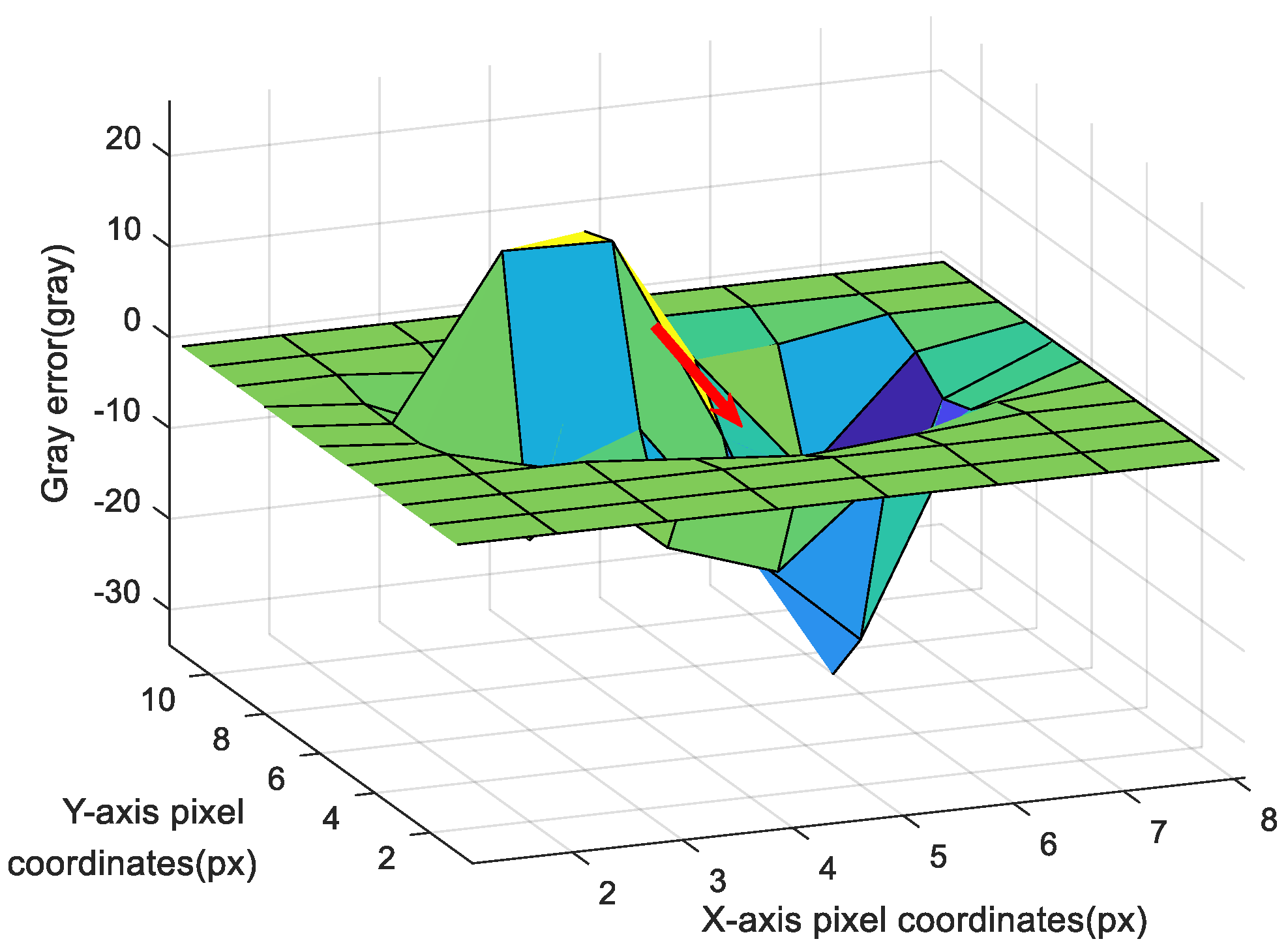

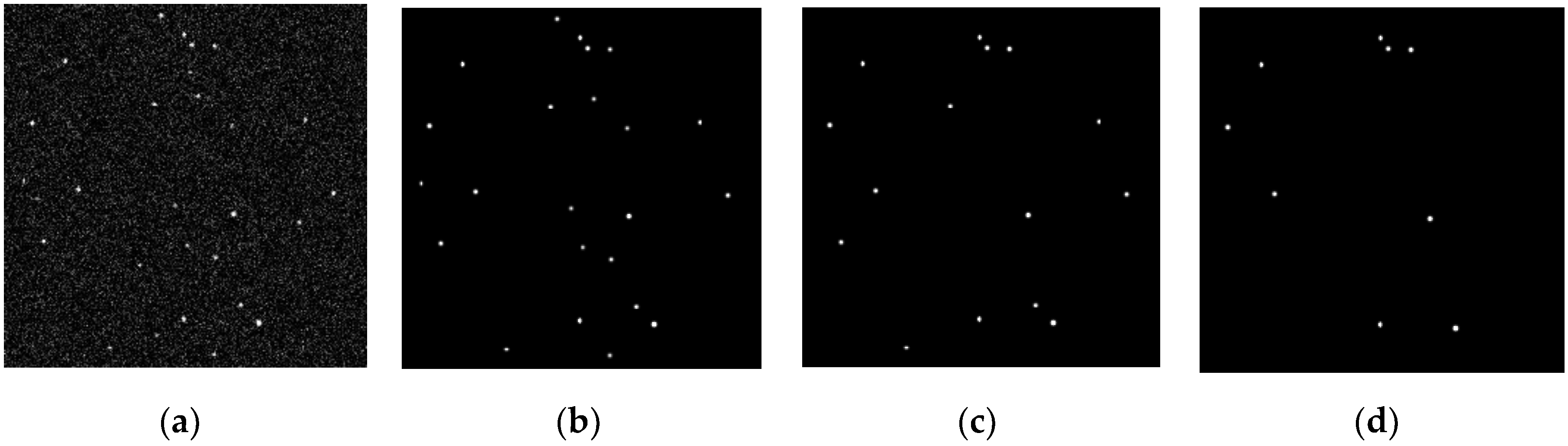

2.1. Gray Image Error Function

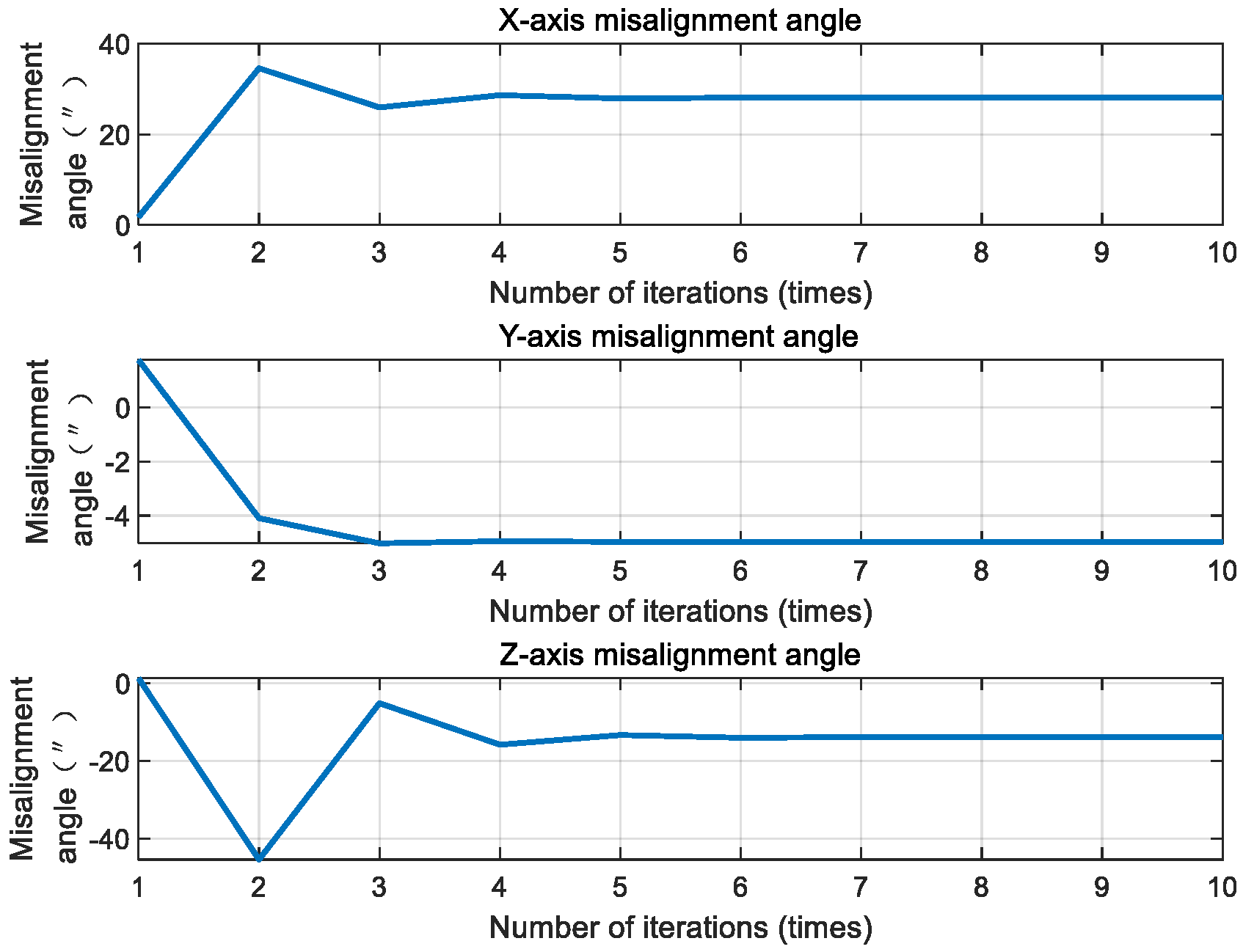
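
A gray image error function compares gray levels in two images, not extracted centroids. Below is a minimal sketch of one plausible form, offered for illustration rather than as the authors' definition. The stars expected at the INS-predicted pixel positions are rendered as Gaussian spots, and the squared gray-level differences against the captured image are summed. The Gaussian point-spread function, its width `sigma`, the star positions, and the helper names `render_star_image` and `gray_image_error` are all assumptions. The 256 × 256 image size follows the simulation parameter table.

```python
import numpy as np

def render_star_image(centroids, shape=(256, 256), sigma=1.0, amplitude=255.0):
    """Hypothetical helper: synthetic gray image with one Gaussian spot per (row, col)."""
    rows, cols = np.indices(shape)
    image = np.zeros(shape)
    for r0, c0 in centroids:
        # Assumed Gaussian point-spread function centred on the star position
        image += amplitude * np.exp(-((rows - r0) ** 2 + (cols - c0) ** 2) / (2.0 * sigma ** 2))
    return image

def gray_image_error(predicted, observed):
    """Sum of squared gray-level differences between two images of equal shape."""
    residual = predicted.astype(float) - observed.astype(float)
    return float(np.sum(residual ** 2))

# "Observed" image: stars at their true pixel positions (illustrative values).
true_stars = [(100.0, 120.0), (40.5, 200.2)]
observed = render_star_image(true_stars)

# Predicted images from an exact attitude and from an attitude that shifts stars by 0.5 pixel.
exact = render_star_image(true_stars)
shifted = render_star_image([(r + 0.5, c) for r, c in true_stars])

print(gray_image_error(exact, observed))          # 0.0
print(gray_image_error(shifted, observed) > 0.0)  # True
```

Because the error is computed on raw gray levels, it can be minimized directly over attitude parameters without a separate centroiding step. That is the general appeal of direct (photometric) image errors.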

2.2. Attitude Optimization Algorithm Based on the Damped Newton Method
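
A damped Newton method uses the Newton direction but scales each step by a factor chosen with a line search, so a poor initial guess does not make the iteration overshoot. The minimal sketch below uses backtracking with the Armijo sufficient-decrease condition. The cost is a hypothetical log-cosh function of a three-component attitude-error vector, not the paper's gray image error, and `target`, `alpha`, and `beta` are illustrative assumptions. Log-cosh is a useful test case because plain (undamped) Newton fails to converge on it once a component starts more than about 1.09 from its minimum. In that regime the damping is what rescues the iteration.

```python
import numpy as np

def damped_newton(f, grad, hess, x0, tol=1e-10, max_iter=100, alpha=1e-4, beta=0.5):
    """Minimize f using Newton directions with a backtracking (Armijo) step length."""
    x = np.asarray(x0, dtype=float)
    for _ in range(max_iter):
        g = grad(x)
        if np.linalg.norm(g) < tol:
            break
        p = -np.linalg.solve(hess(x), g)   # full Newton direction
        t = 1.0                            # damping factor: try the full step first
        fx = f(x)
        # Shrink the step until the cost decreases sufficiently (Armijo condition)
        while f(x + t * p) > fx + alpha * t * (g @ p) and t > 1e-12:
            t *= beta
        x = x + t * p
    return x

# Hypothetical smooth cost over a 3-component attitude-error vector.
target = np.array([1.5, -2.0, 0.8])

def f(x):
    d = x - target
    return float(np.sum(np.logaddexp(d, -d) - np.log(2.0)))  # sum of log(cosh(d)), overflow-safe

def grad(x):
    return np.tanh(x - target)

def hess(x):
    return np.diag(1.0 - np.tanh(x - target) ** 2)

x_hat = damped_newton(f, grad, hess, np.zeros(3))
print(np.round(x_hat, 6))
```

Near the optimum the line search accepts full steps (t = 1), so the method keeps Newton's fast local convergence. The damping only matters far from the solution.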

3. Second-Order State Augmented H-Infinity Filter

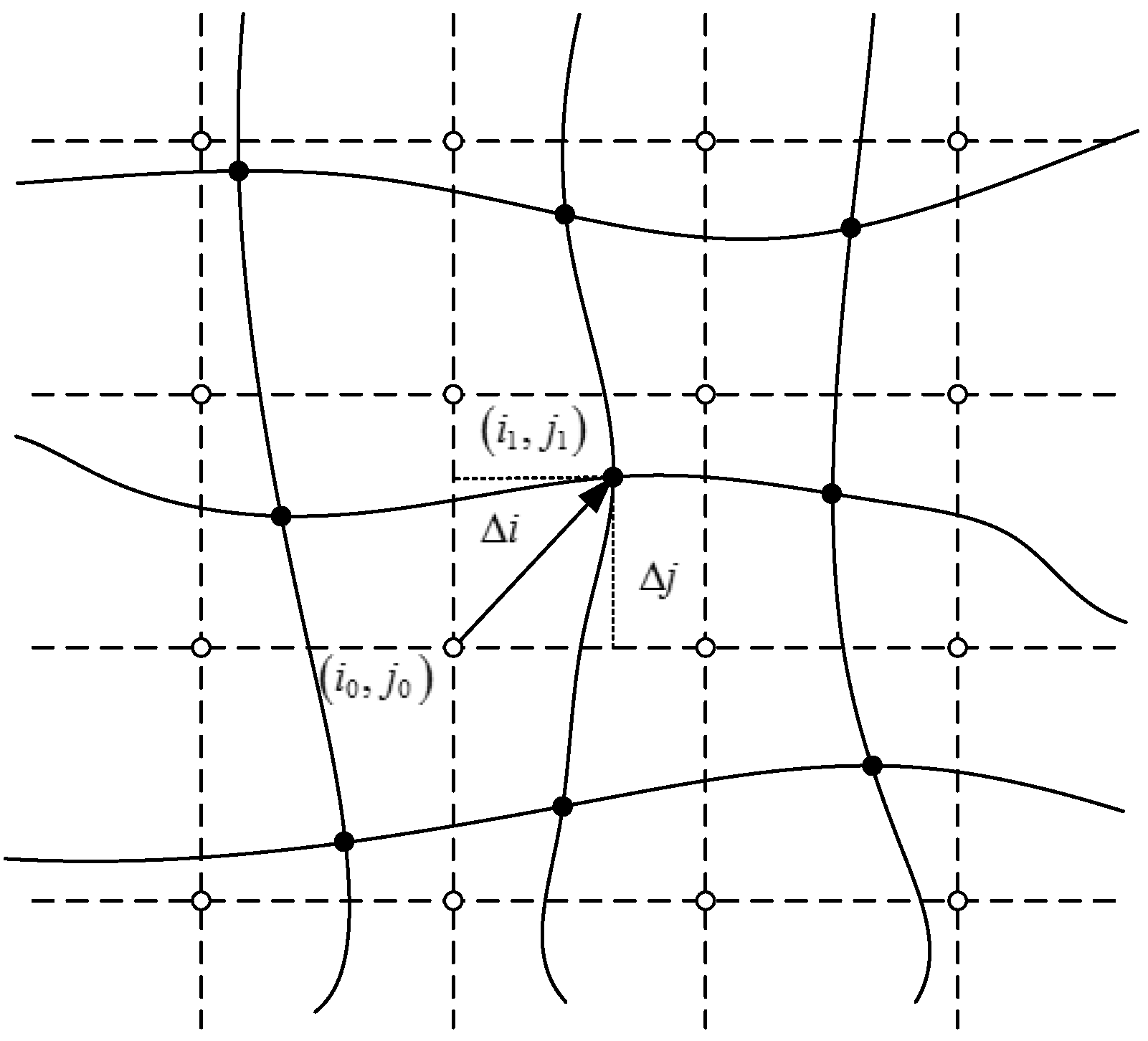
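
As a reference point, below is one common game-theoretic form of a standard discrete-time H-infinity filter. It is not necessarily the exact variant used in this paper. The performance bound θ, the identity error weighting S, and the function name `hinf_step` are assumptions made for illustration. With θ = 0 the recursion reduces to a Kalman filter in one-step predictor form, and larger θ produces a more conservative (larger) bound matrix P.

```python
import numpy as np

def hinf_step(x, P, y, F, H, Q, R, theta, S=None):
    """One a-priori H-infinity filter step; returns next state prediction, P and gain K."""
    n = x.size
    I = np.eye(n)
    S = I if S is None else S                     # error weighting (identity assumed)
    R_inv = np.linalg.inv(R)
    # Existence condition: P^-1 - theta*S + H^T R^-1 H must be positive definite.
    cond = np.linalg.inv(P) - theta * S + H.T @ R_inv @ H
    if np.min(np.linalg.eigvalsh(0.5 * (cond + cond.T))) <= 0.0:
        raise ValueError("theta too large: H-infinity existence condition violated")
    # Shared factor [I - theta*S*P + H^T R^-1 H P]^-1 used in the gain and P update
    M = np.linalg.inv(I - theta * S @ P + H.T @ R_inv @ H @ P)
    K = P @ M @ H.T @ R_inv
    x_next = F @ x + F @ K @ (y - H @ x)
    P_next = F @ P @ M @ F.T + Q
    return x_next, P_next, K

# Usage: constant-velocity model with position measurements (illustrative values).
dt = 0.1
F = np.array([[1.0, dt], [0.0, 1.0]])
H = np.array([[1.0, 0.0]])
Q = 1e-4 * np.eye(2)
R = np.array([[0.01]])
x, P = np.zeros(2), np.eye(2)
for k in range(50):
    y = np.array([1.0 + 0.5 * k * dt])            # noise-free position of a moving target
    x, P, K = hinf_step(x, P, y, F, H, Q, R, theta=0.1)
print(x)  # prediction of [position, velocity] for the next epoch
```

The existence check matters in practice. If θ is pushed too high for the current P, the bound no longer exists, and the sketch raises an error rather than silently producing an indefinite P.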

3.1. Star Sensor Pixel Offset Model in the Near Space Environment

3.2. Second-Order State Augmented H-Infinity Filtering Model

3.2.1. Measurement Noise Whitening
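
Measurement differencing is one common way to whiten first-order Markov (colored) measurement noise v_{k+1} = ψ v_k + ξ_k. It is shown here as a generic technique, and the paper's own whitening step may differ. The derived measurement z*_k = z_{k+1} − ψ z_k = (HF − ψH) x_k + H w_k + ξ_k contains only white noise. The scalar simulation below checks that identity numerically, assuming ψ is known exactly. All numeric values are illustrative. Note that the derived noise H w_k + ξ_k is correlated with the process noise w_k, which is the kind of coupling a decorrelation step is meant to remove.

```python
import numpy as np

rng = np.random.default_rng(0)
F, H, psi = 0.99, 1.0, 0.8        # scalar model; psi = assumed (known) noise correlation
q, r_xi = 1e-4, 1e-2              # variances of process noise w_k and driving noise xi_k
N = 500

w = rng.normal(0.0, np.sqrt(q), N)
xi = rng.normal(0.0, np.sqrt(r_xi), N)
x = np.zeros(N)
v = np.zeros(N)
for k in range(N - 1):
    x[k + 1] = F * x[k] + w[k]      # state propagation
    v[k + 1] = psi * v[k] + xi[k]   # colored (first-order Markov) measurement noise
z = H * x + v                       # measurements corrupted by colored noise

# Measurement differencing: z*_k = z_{k+1} - psi * z_k = H_star x_k + (H w_k + xi_k)
H_star = H * F - psi * H
z_star = z[1:] - psi * z[:-1]
white = z_star - H_star * x[:-1]    # noise remaining in the derived measurement

print(np.allclose(white, H * w[:-1] + xi[:-1]))  # True
```

If the assumed ψ differs from the true coefficient, the residual picks up an extra term proportional to v_k and is no longer white. This is why errors in the colored noise model affect filtering accuracy.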

3.2.2. Decorrelation of System Noise and Measurement Noise in the Second-Order Augmented Model
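
Suppose the process noise and measurement noise are correlated, with E[w_k v_kᵀ] = S. A standard decorrelation adds the zero term J(z_k − H x_k − v_k) to the state equation, with J = S R⁻¹. After this change the transition matrix becomes F − JH, J z_k enters as a known input, and the new process noise w_k − J v_k is uncorrelated with v_k. Its covariance is Q − S R⁻¹ Sᵀ. Below is a minimal sketch with illustrative matrices. `decorrelate` is a hypothetical helper, and the paper's second-order augmented model may arrange these terms differently.

```python
import numpy as np

def decorrelate(F, H, Q, R, S):
    """Rewrite x_{k+1} = F x_k + w_k, z_k = H x_k + v_k with E[w_k v_k^T] = S.

    Adding J (z_k - H x_k - v_k) = 0 with J = S R^-1 gives
    x_{k+1} = (F - J H) x_k + J z_k + (w_k - J v_k),
    where the new process noise w_k - J v_k is uncorrelated with v_k.
    """
    J = S @ np.linalg.inv(R)
    F_star = F - J @ H               # modified transition matrix
    Q_star = Q - J @ S.T             # covariance of w_k - J v_k, i.e. Q - S R^-1 S^T
    return J, F_star, Q_star

F = np.array([[1.0, 0.1], [0.0, 1.0]])
H = np.array([[1.0, 0.0]])
Q = np.diag([0.1, 0.2])
R = np.array([[0.5]])
S = np.array([[0.05], [0.1]])        # illustrative cross-covariance E[w v^T]

J, F_star, Q_star = decorrelate(F, H, Q, R, S)
print(S - J @ R)                     # cross-covariance of (w - J v) with v: all zeros
print(Q_star)
```

A filter built on the rewritten model propagates with F_star and Q_star and adds J z_k as a known input at each step. For a differenced measurement whose noise is H w_k + ξ_k, the cross-covariance takes the form S = Q Hᵀ.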

4. Results and Discussion

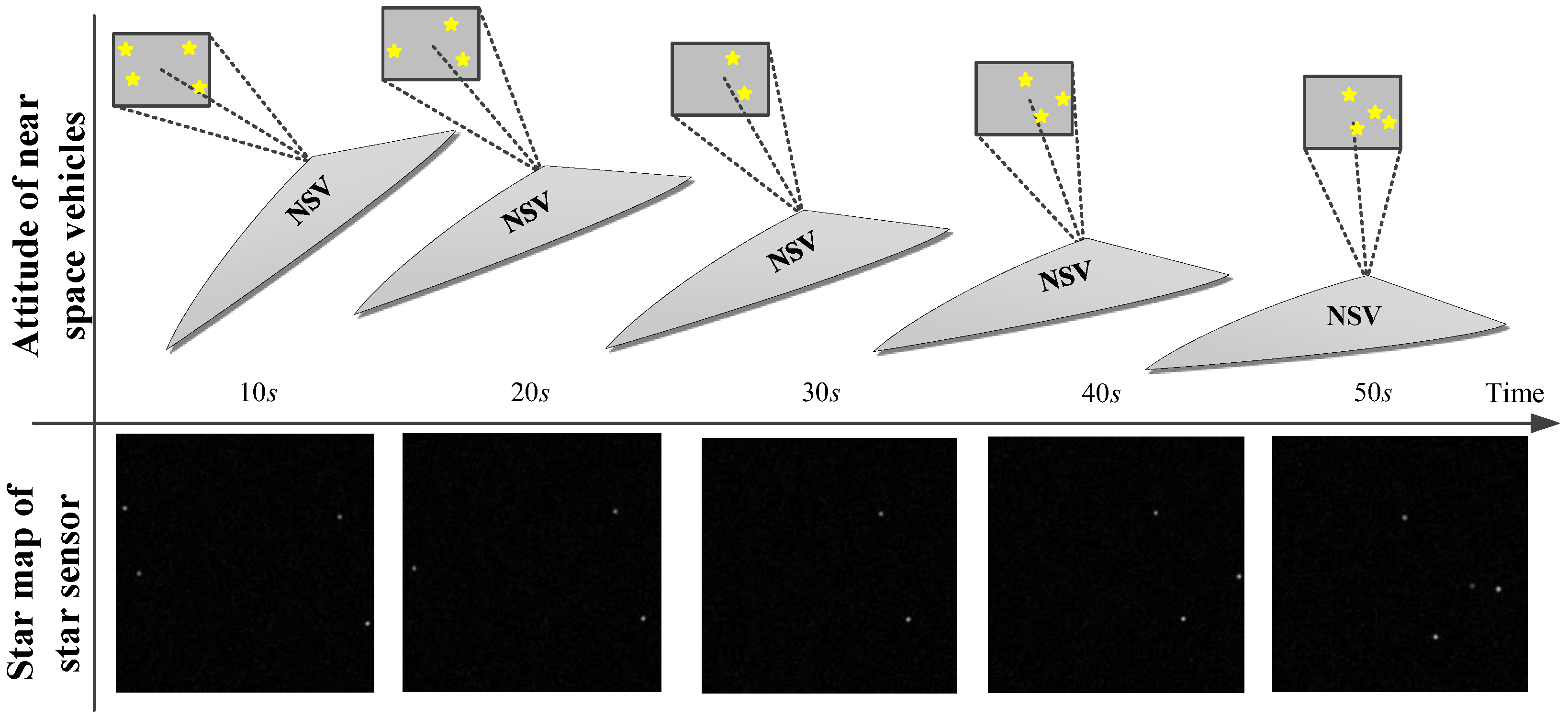

4.1. Simulation Verification of the INS/CNS Deeply Integrated Model

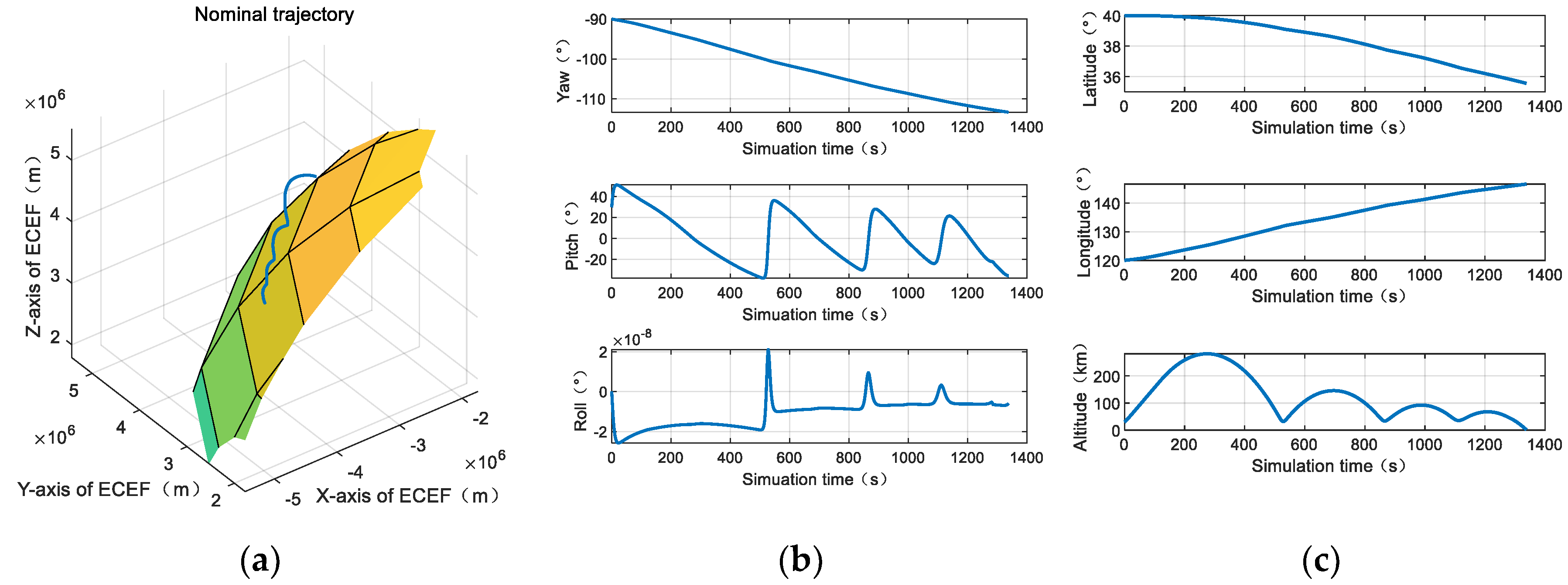

4.1.1. Algorithm Robustness Verification

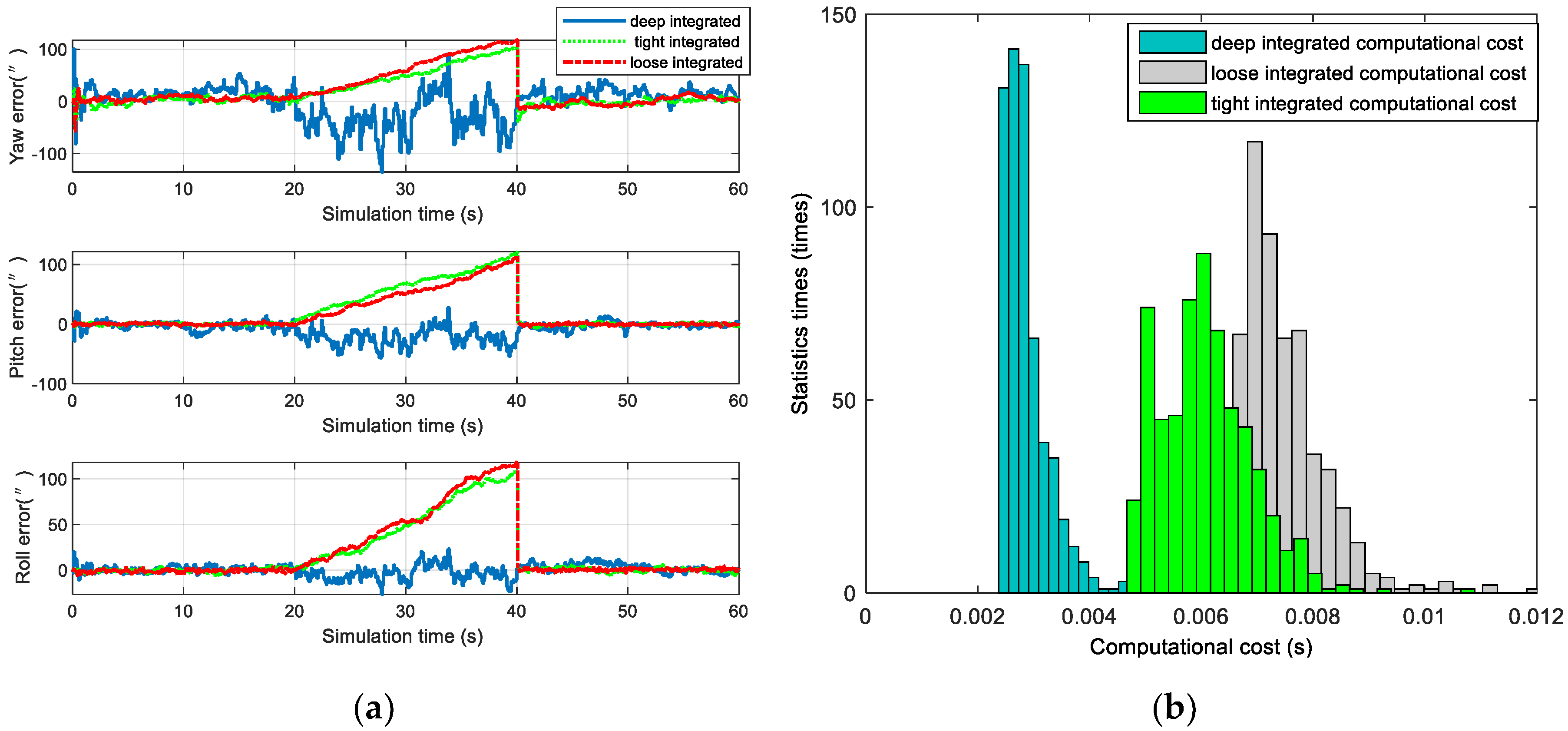

4.1.2. Comparison of Different Integrated Models

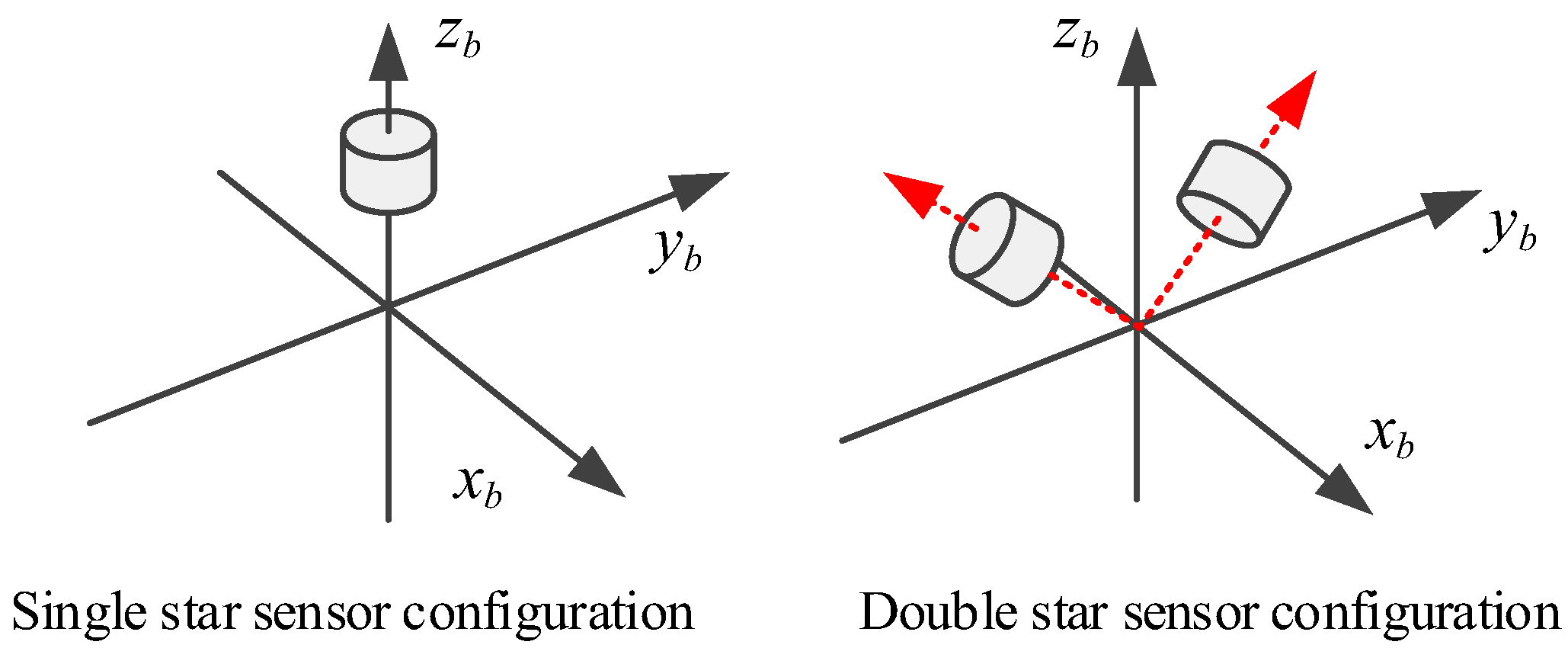

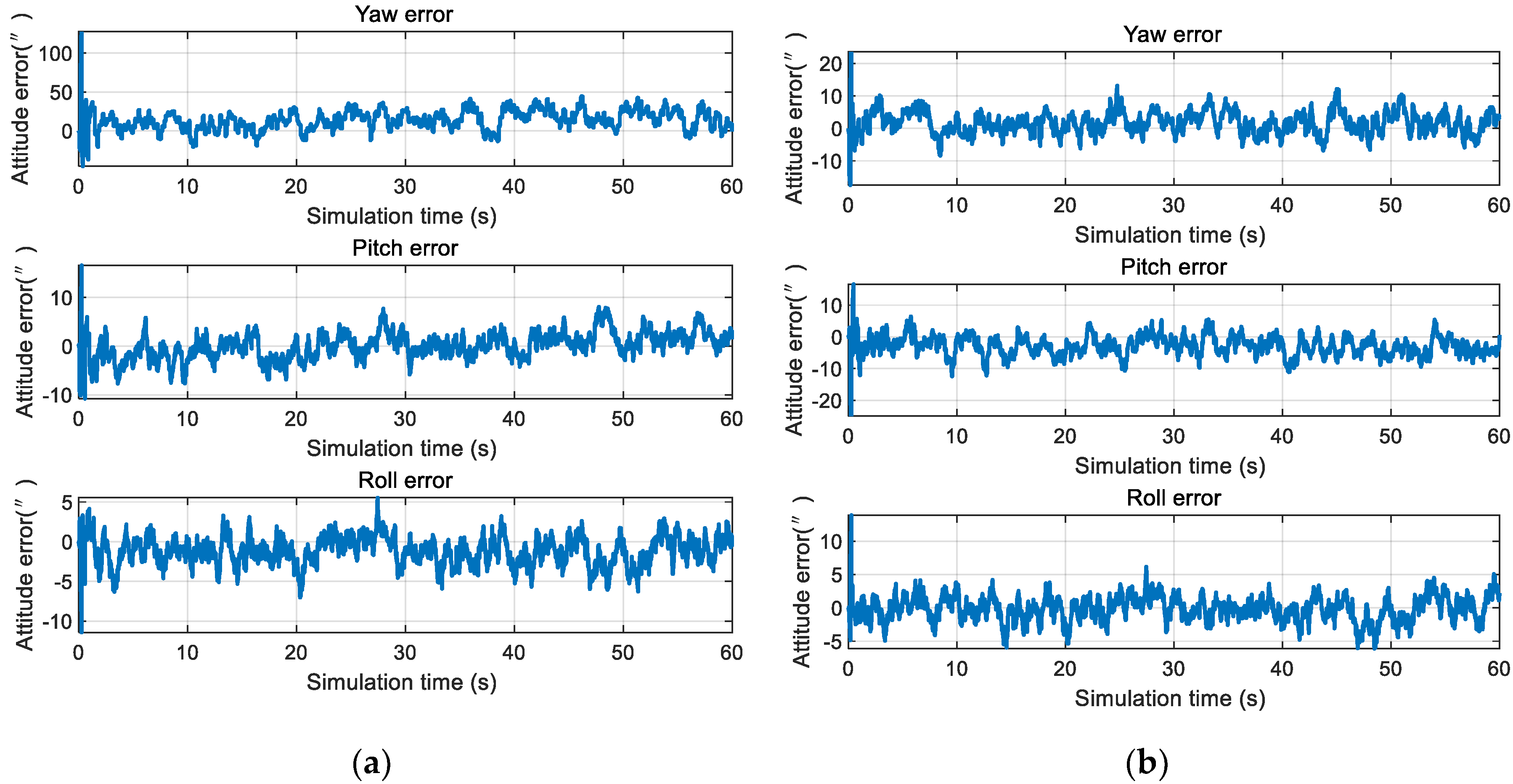

4.1.3. Comparative Simulation of Single- and Double-Star Sensor Configurations

4.2. Simulation Verification of the Second-Order State Augmented H-Infinity Filter

4.2.1. Comparison of the Second-Order State Augmented H-Infinity Filter and the Standard H-Infinity Filter

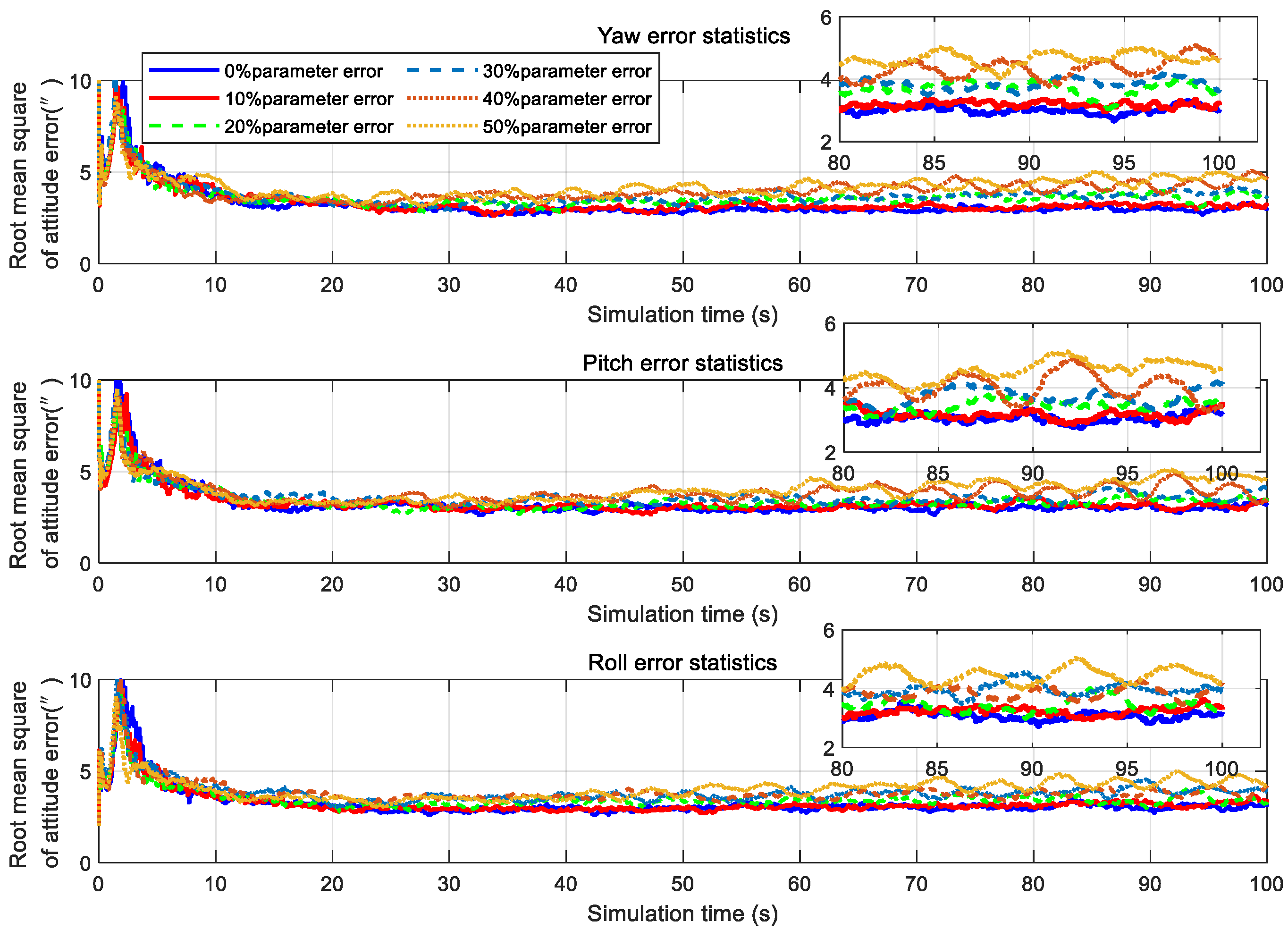

4.2.2. The Influence of Colored Noise Model Error on the Filtering Effect

5. Conclusions

Author Contributions

Funding

Conflicts of Interest



| Parameter | Value |
|---|---|
| Field of view of star sensor | 10° |
| Resolution of star sensor | 256 × 256 pixels |
| Measurement noise of star sensor | 20″ (3σ) |
| Gyro bias | 0.1 |
| Gyro noise | 0.5 (3σ) |
| Initial attitude estimation error | 20″ (3σ) |
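
The star sensor parameters in the table can be related with a quick calculation. This assumes the 10° field of view is spread evenly over 256 pixels (square pixels, no lens distortion) and treats the 20″ measurement noise as a 3σ bound. Under those assumptions, one pixel subtends 140.625″, and the 1σ noise is roughly 0.047 pixel, or about 3.2 × 10⁻⁵ rad.

```python
import math

fov_deg = 10.0                # field of view of the star sensor (from the table)
pixels = 256                  # pixels along one image axis (from the table)
noise_3sigma_arcsec = 20.0    # measurement noise, treated as a 3-sigma bound

arcsec_per_pixel = fov_deg * 3600.0 / pixels          # angular size of one pixel
sigma_arcsec = noise_3sigma_arcsec / 3.0              # 1-sigma noise in arcseconds
sigma_rad = math.radians(sigma_arcsec / 3600.0)       # 1-sigma noise in radians
sigma_pixels = sigma_arcsec / arcsec_per_pixel        # 1-sigma noise in pixels

print(arcsec_per_pixel)          # 140.625
print(round(sigma_pixels, 4))    # 0.0474
print(sigma_rad)                 # roughly 3.23e-05
```

A sub-pixel noise level like this implies that star positions must be resolved to a small fraction of a pixel. This is consistent with working directly on gray-level image data rather than on whole-pixel star locations.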

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Mu, R.; Sun, H.; Li, Y.; Cui, N. INS/CNS Deeply Integrated Navigation Method of Near Space Vehicles. Sensors 2020, 20, 5885. https://doi.org/10.3390/s20205885
