Distributed Artificial Intelligence-as-a-Service (DAIaaS) for Smarter IoE and 6G Environments

Abstract

1. Introduction

2. Background and Related Works

2.1. Artificial Intelligence (AI)

2.2. Internet of Everything (IoE)

2.3. Edge, Fog, and Cloud Computing

2.4. Smart Cities, Societies, and Ecosystems

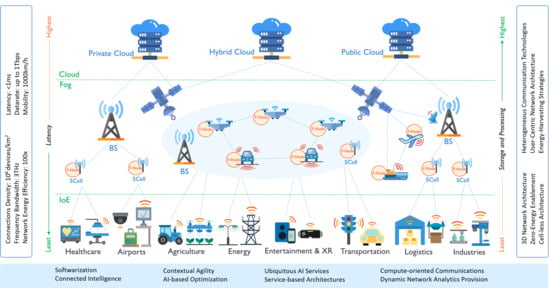

2.5. Sixth Generation Networks (6G)

3. Methodology and Design

3.1. Cloud, Fog, and IoE Layers

3.2. Distributed Applications and AI Delivery Models

3.3. Network Infrastructure

3.4. Performance Metrics

4. Case Study 1: Smart Airport

4.1. IoE in Smart Airports

4.2. Smart Airport: Architectural Overview

4.3. Application: Smart Counter

4.4. Application: Smart Gate Control

4.5. Experiment Configurations

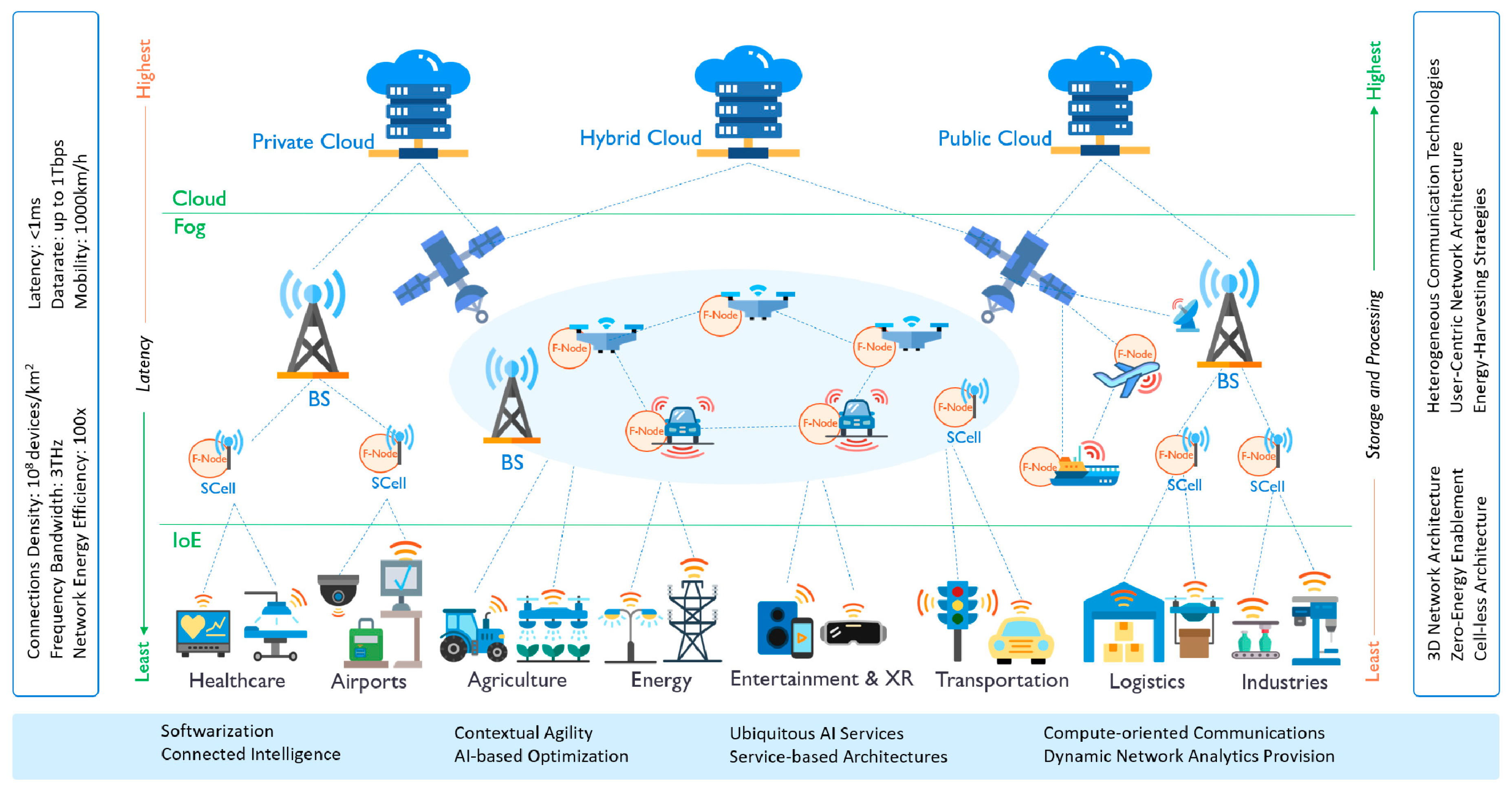

4.6. Results and Analysis

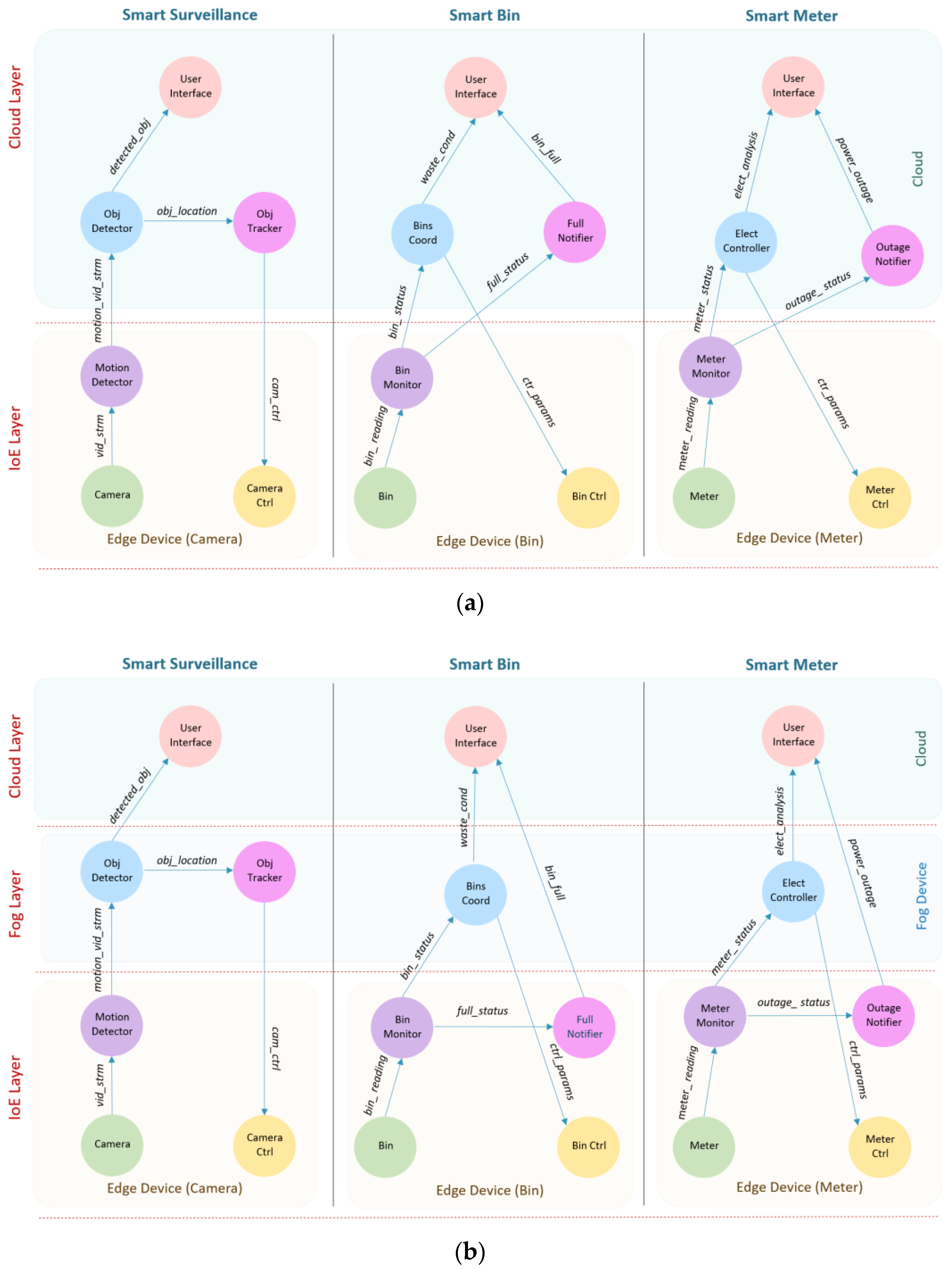

5. Case Study 2: Smart District

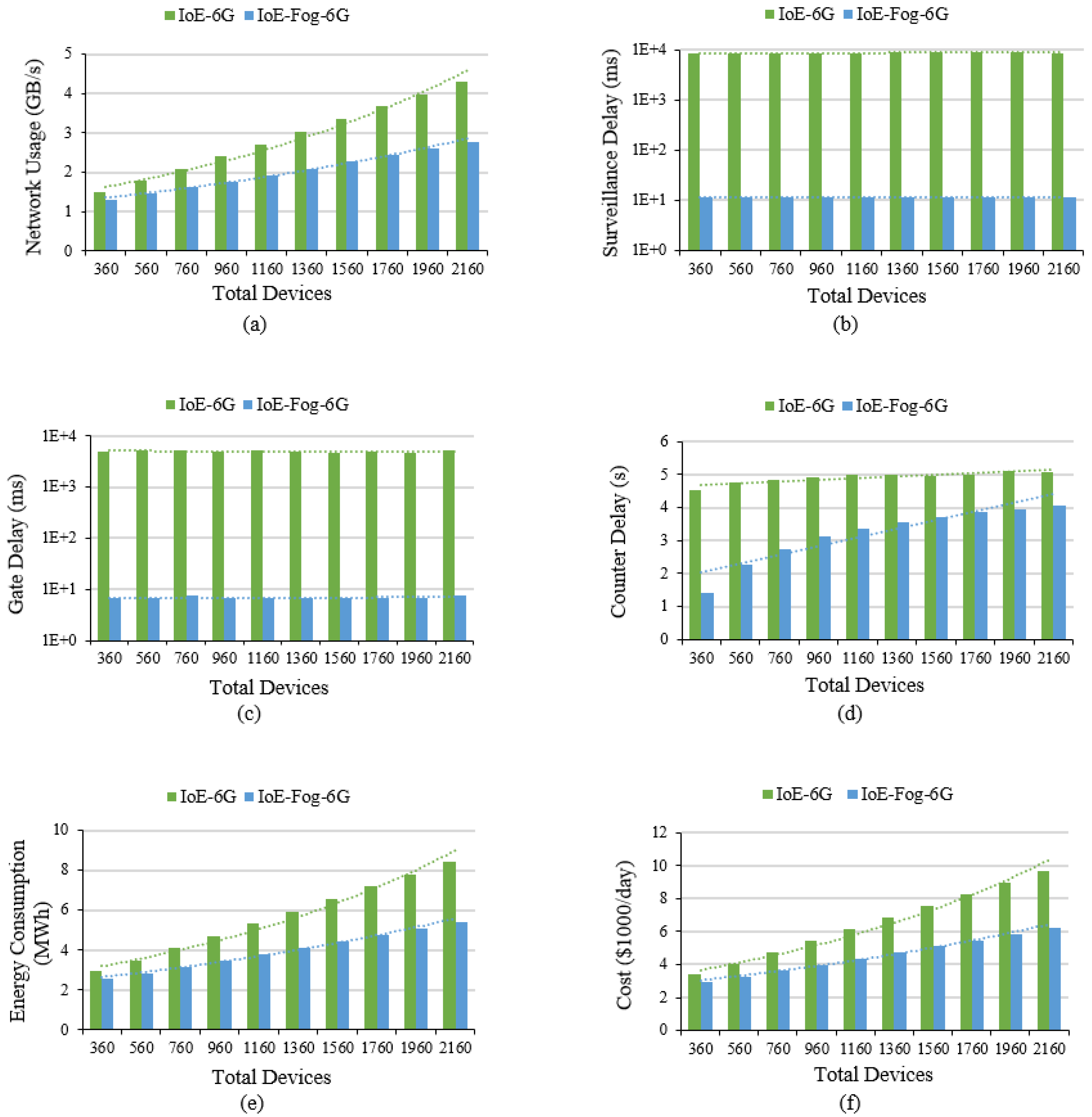

5.1. IoE in Smart District

5.2. Smart District: Architectural Overview

5.3. Application: Smart Meter

5.4. Application: Smart Bin

5.5. Experiment Configurations

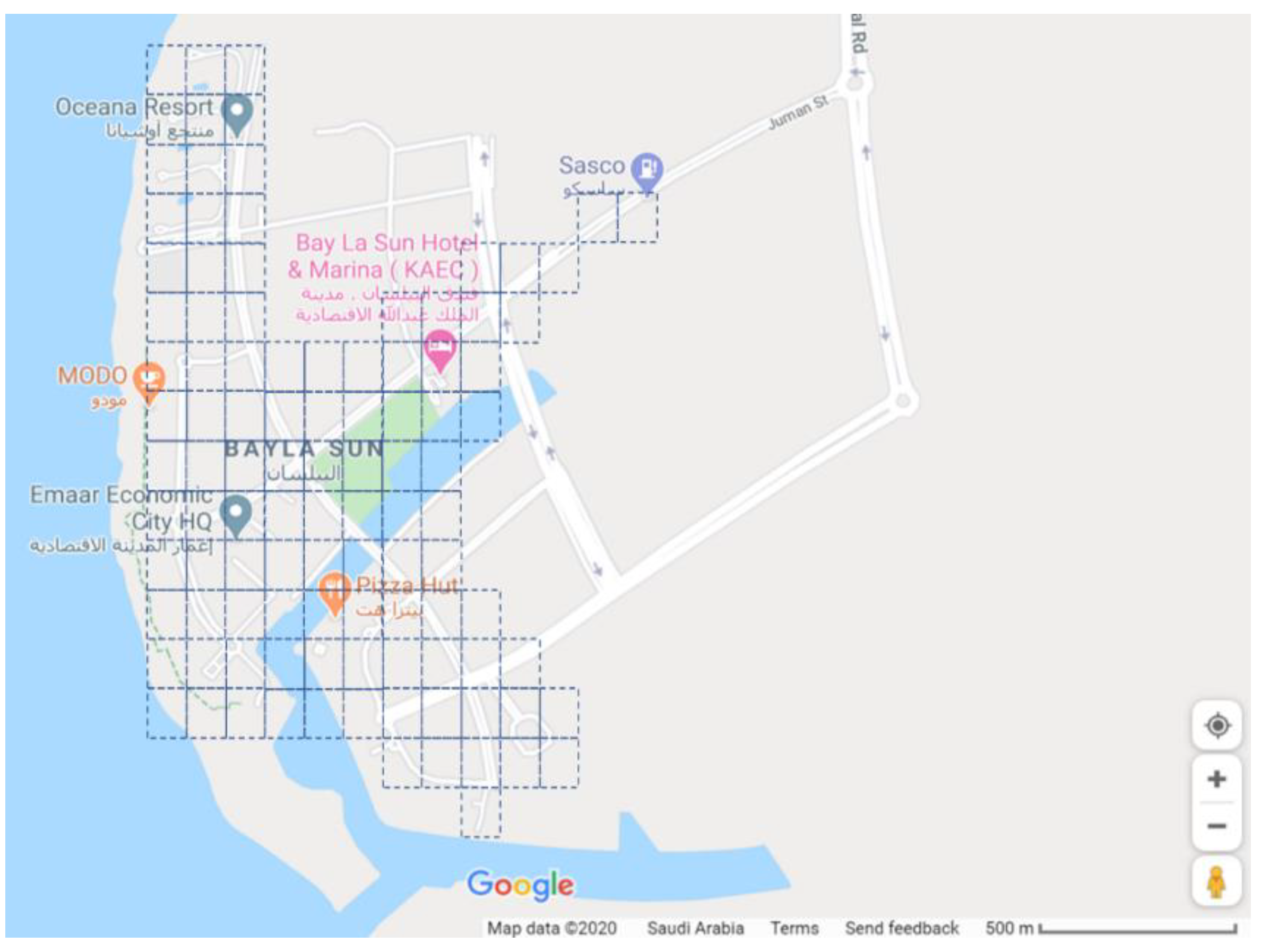

5.6. Results and Analysis

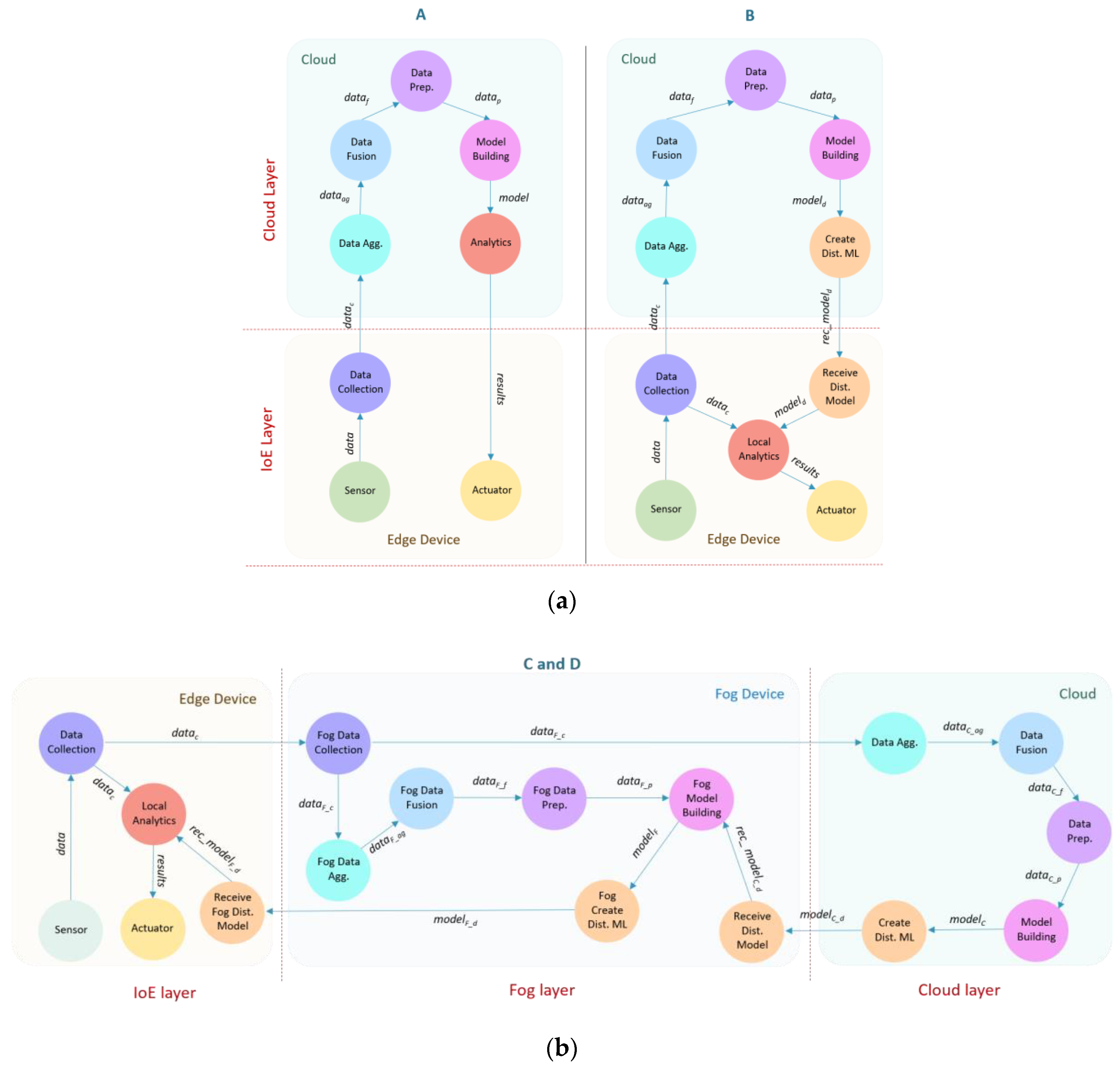

6. Distributed Artificial Intelligence-as-a-Service (DAIaaS)

6.1. DAIaaS: Architectural Overview

6.2. Scenario A: Training/Retraining and Inference at Cloud

6.3. Scenario B: Training/Retraining at Cloud & Inference at Edge

6.4. Scenarios C and D: Training/Retraining at Cloud and Fog and Inference at Edge

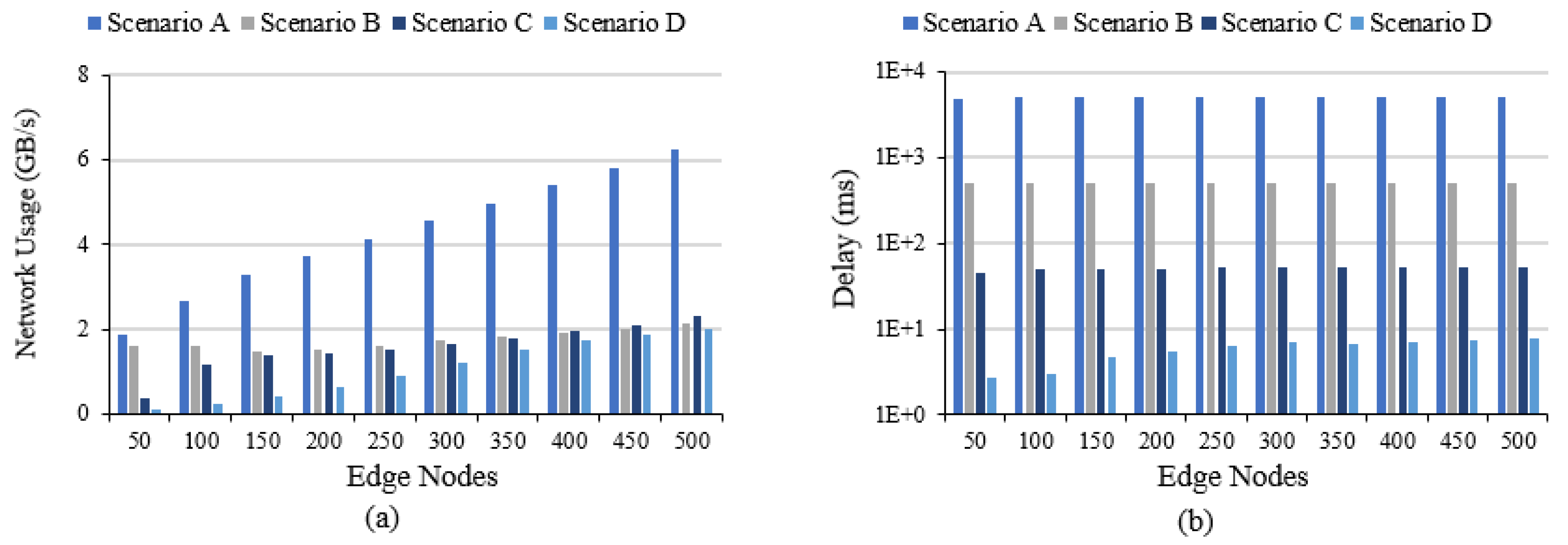

6.5. Results and Analysis

7. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Jespersen, L. Is AI the Answer to True Love? 2021.AI. 2018. Available online: https://2021.ai/ai-answer-true-love/ (accessed on 21 September 2020).

- Yigitcanlar, T.; Butler, L.; Windle, E.; DeSouza, K.C.; Mehmood, R.; Corchado, J.M. Can Building “Artificially Intelligent Cities” Safeguard Humanity from Natural Disasters, Pandemics, and Other Catastrophes? An Urban Scholar’s Perspective. Sensors 2020, 20, 2988.

- Mehmood, R.; See, S.; Katib, I.; Chlamtac, I. Smart Infrastructure and Applications: Foundations for Smarter Cities and Societies. In EAI/Springer Innovations in Communication and Computing; Springer International Publishing: New York, NY, USA; Springer Nature Switzerland AG: Cham, Switzerland, 2020; p. 692.

- Bibri, S.E.; Krogstie, J. The core enabling technologies of big data analytics and context-aware computing for smart sustainable cities: A review and synthesis. J. Big Data 2017, 4, 1–50.

- Statista. Global AI Software Market Size 2018–2025. Tractica. 2020. Available online: https://www.statista.com/statistics/607716/worldwide-artificial-intelligence-market-revenues/ (accessed on 21 September 2020).

- Alotaibi, S.; Mehmood, R.; Katib, I.; Rana, O.; Albeshri, A. Sehaa: A Big Data Analytics Tool for Healthcare Symptoms and Diseases Detection Using Twitter, Apache Spark, and Machine Learning. Appl. Sci. 2020, 10, 1398.

- Vaya, D.; Hadpawat, T. Internet of Everything (IoE): A New Era of IoT. In Lecture Notes in Electrical Engineering; Springer Verlag: Berlin/Heidelberg, Germany, 2020; pp. 1–6.

- Usman, S.; Mehmood, R.; Katib, I. Big Data and HPC Convergence for Smart Infrastructures: A Review and Proposed Architecture. In Smart Infrastructure and Applications Foundations for Smarter Cities and Societies; Springer: Cham, Switzerland, 2020; pp. 561–586.

- Latva-Aho, M.; Leppänen, K. Key Drivers and Research Challenges for 6G Ubiquitous Wireless Intelligence. In 6G Research Visions 1; University of Oulu: Oulu, Finland, 2019.

- Giordani, M.; Polese, M.; Mezzavilla, M.; Rangan, S.; Zorzi, M. Toward 6G Networks: Use Cases and Technologies. IEEE Commun. Mag. 2020, 58, 55–61.

- Khan, L.U.; Yaqoob, I.; Imran, M.; Han, Z.; Hong, C.S. 6G Wireless Systems: A Vision, Architectural Elements, and Future Directions. IEEE Access 2020, 1.

- Muhammed, T.; Albeshri, A.; Katib, I.; Mehmood, R. UbiPriSEQ: Deep Reinforcement Learning to Manage Privacy, Security, Energy, and QoS in 5G IoT HetNets. Appl. Sci. 2020, 10, 7120.

- Letaief, K.B.; Chen, W.; Shi, Y.; Zhang, J.; Zhang, Y.J.A. The Roadmap to 6G: AI Empowered Wireless Networks. IEEE Commun. Mag. 2019, 57, 84–90.

- Gui, G.; Liu, M.; Tang, F.; Kato, N.; Adachi, F. 6G: Opening New Horizons for Integration of Comfort, Security and Intelligence. IEEE Wirel. Commun. 2020.

- NTT Docomo, Inc. White Paper—5G Evolution and 6G; NTT Docomo, Inc.: Tokyo, Japan, 2020. [Google Scholar]

- Taleb, T.; Aguiar, R.; Yahia, I.G.B.; Christensen, G.; Chunduri, U.; Clemm, A.; Costa, X.; Dong, L.; Elmirghani, J.; Yosuf, B.; et al. White Paper on 6G Networking; University of Oulu: Oulu, Finland, 2020.

- Saad, W.; Bennis, M.; Chen, M. A Vision of 6G Wireless Systems: Applications, Trends, Technologies, and Open Research Problems. IEEE Netw. 2020, 34, 134–142.

- Lovén, L.; Leppänen, T.; Peltonen, E.; Partala, J.; Harjula, E.; Porambage, P.; Ylianttila, M.; Riekki, J. EdgeAI: A vision for distributed, edge-native artificial intelligence in future 6G networks. In Proceedings of the 1st 6G Wireless Summit, Levi, Finland, 24–26 March 2019; pp. 1–2.

- Chen, S.; Liang, Y.-C.; Sun, S.; Kang, S.; Cheng, W.; Peng, M. Vision, Requirements, and Technology Trend of 6G: How to Tackle the Challenges of System Coverage, Capacity, User Data-Rate and Movement Speed. IEEE Wirel. Commun. 2020.

- Arfat, Y.; Usman, S.; Mehmood, R.; Katib, I. Big Data Tools, Technologies, and Applications: A Survey. In Smart Infrastructure and Applications; Springer: Cham, Switzerland, 2020; pp. 453–490.

- Arfat, Y.; Usman, S.; Mehmood, R.; Katib, I. Big Data for Smart Infrastructure Design: Opportunities and Challenges. In Smart Infrastructure and Applications; Springer: Cham, Switzerland, 2020; pp. 491–518.

- Alam, F.; Mehmood, R.; Katib, I.; Albogami, N.N.; Albeshri, A. Data Fusion and IoT for Smart Ubiquitous Environments: A Survey. IEEE Access 2017, 5, 9533–9554.

- Alam, F.; Mehmood, R.; Katib, I.; Altowaijri, S.M.; Albeshri, A. TAAWUN: A Decision Fusion and Feature Specific Road Detection Approach for Connected Autonomous Vehicles. Mob. Networks Appl. 2019.

- Alomari, E.; Mehmood, R.; Katib, I. Road Traffic Event Detection Using Twitter Data, Machine Learning, and Apache Spark. In Proceedings of the 2019 IEEE SmartWorld, Ubiquitous Intelligence & Computing, Advanced & Trusted Computing, Scalable Computing & Communications, Cloud & Big Data Computing, Internet of People and Smart City Innovation (SmartWorld/SCALCOM/UIC/ATC/CBDCom/IOP/SCI), Leicester, UK, 19–23 August 2019; pp. 1888–1895.

- Alomari, E.; Katib, I.; Mehmood, R. Iktishaf: A Big Data Road-Traffic Event Detection Tool Using Twitter and Spark Machine Learning. Mob. Networks Appl. 2020.

- Shi, Z. Advanced Artificial Intelligence; World Scientific: Singapore, 2019.

- Wang, S.; Ananthanarayanan, G.; Zeng, Y.; Goel, N.; Pathania, A.; Mitra, T. High-Throughput CNN Inference on Embedded ARM big.LITTLE Multi-Core Processors. IEEE Trans. Comput. Des. Integr. Circuits Syst. 2019, 1.

- Mayer, R.; Jacobsen, H.A. Scalable Deep Learning on Distributed Infrastructures: Challenges, techniques, and tools. ACM Comput. Surv. 2020.

- Tang, Z.; Shi, S.; Chu, X.; Wang, W.; Li, B. Communication-Efficient Distributed Deep Learning: A Comprehensive Survey. Available online: https://arxiv.org/abs/2003.06307 (accessed on 24 September 2020).

- Wang, X.; Han, Y.; Leung, V.C.; Niyato, D.; Yan, X.; Chen, X. Convergence of Edge Computing and Deep Learning: A Comprehensive Survey. IEEE Commun. Surv. Tutor. 2020.

- Zhou, Z.; Chen, X.; Li, E.; Zeng, L.; Luo, K.; Zhang, J. Edge Intelligence: Paving the Last Mile of Artificial Intelligence with Edge Computing. Proc. IEEE 2019, 107, 1738–1762.

- Park, J.; Samarakoon, S.; Bennis, M.; Debbah, M. Wireless Network Intelligence at the Edge. Proc. IEEE 2019, 107, 2204–2239.

- Chen, J.; Ran, X. Deep Learning with Edge Computing: A Review. Proc. IEEE 2019.

- Isakov, M.; Gadepally, V.; Gettings, K.M.; Kinsy, M.A. Survey of Attacks and Defenses on Edge-Deployed Neural Networks. In Proceedings of the 2019 IEEE High Performance Extreme Computing Conference (HPEC), Boston, MA, USA, 24–26 September 2019; pp. 1–8.

- Rausch, T.; Dustdar, S. Edge Intelligence: The Convergence of Humans, Things, and AI. In Proceedings of the 2019 IEEE International Conference on Cloud Engineering (IC2E), Prague, Czech Republic, 24–27 June 2019; pp. 86–96.

- Marchisio, A.; Hanif, M.A.; Khalid, F.; Plastiras, G.; Kyrkou, C.; Theocharides, T.; Shafique, M. Deep Learning for Edge Computing: Current Trends, Cross-Layer Optimizations, and Open Research Challenges. In Proceedings of the 2019 IEEE Computer Society Annual Symposium on VLSI (ISVLSI), Miami, FL, USA, 15–17 July 2019; pp. 553–559.

- Parra, G.D.L.T.; Rad, P.; Choo, K.K.R.; Beebe, N. Detecting Internet of Things attacks using distributed deep learning. J. Netw. Comput. Appl. 2020, 163, 102662.

- Li, T.; Sahu, A.K.; Talwalkar, A.; Smith, V. Federated Learning: Challenges, Methods, and Future Directions. IEEE Signal Process. Mag. 2020, 37, 50–60.

- Yang, Q.; Liu, Y.; Chen, T.; Tong, Y. Federated Machine Learning: Concept and applications. ACM Trans. Intell. Syst. Technol. 2019.

- Smith, A.J.; Hollinger, G.A. Distributed inference-based multi-robot exploration. Auton. Robot. 2018, 42, 1651–1668.

- Pathak, N.; Bhandari, A.; Pathak, N.; Bhandari, A. The Artificial Intelligence 2.0 Revolution. In IoT, AI, and Blockchain for .NET; Apress: Berkeley, CA, USA, 2018; pp. 1–24.

- Casati, F.; Govindarajan, K.; Jayaraman, B.; Thakur, A.; Palapudi, S.; Karakusoglu, F.; Chatterjee, D. Operating Enterprise AI as a Service. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2019; pp. 331–344.

- Milton, R.; Hay, D.; Gray, S.; Buyuklieva, B.; Hudson-Smith, A. Smart IoT and Soft AI. In IET Conference Publications; Institution of Engineering and Technology (IET): London, UK, 2018.

- Dialogflow. 2020. Available online: https://cloud.google.com/dialogflow (accessed on 24 September 2020).

- Yu, J.; Zhang, P.; Chen, L.; Liu, J.; Zhang, R.; Wang, K.; An, J. Stabilizing Frame Slotted Aloha Based IoT Systems: A Geometric Ergodicity Perspective. IEEE J. Sel. Areas Commun. 2020, 8716, 1.

- Shilpa, A.; Muneeswaran, V.; Rathinam, D.D.K.; Santhiya, G.A.; Sherin, J. Exploring the Benefits of Sensors in Internet of Everything (IoE). In Proceedings of the 2019 5th International Conference on Advanced Computing & Communication Systems (ICACCS), Coimbatore, India, 15–16 March 2019; pp. 510–514.

- Markets and Markets Blog. Smart Sensor Market. 2020. Available online: http://www.marketsandmarketsblog.com/smart-sensor-market.html (accessed on 24 September 2020).

- Sirma, M.; Kavak, A.; Inner, B. Cloud Based IoE Connectivity Engines for The Next Generation Networks: Challenges and Architectural Overview. In Proceedings of the 2019 1st International Informatics and Software Engineering Conference (UBMYK), Ankara, Turkey, 6–7 November 2019; pp. 1–6.

- Alsuwaidan, L. Data Management Model for Internet of Everything. In Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2019; pp. 331–341.

- Lv, Z.; Kumar, N. Software defined solutions for sensors in 6G/IoE. Comput. Commun. 2020, 153, 42–47.

- Aiello, G.; Camillo, A.; Del Coco, M.; Giangreco, E.; Pinnella, M.; Pino, S.; Storelli, D. A context agnostic air quality service to exploit data in the IoE era. In Proceedings of the 2019 4th International Conference on Smart and Sustainable Technologies (SpliTech), Split, Croatia, 18–21 June 2019.

- Badr, M.; Aboudina, M.M.; Hussien, F.A.; Mohieldin, A.N. Simultaneous Multi-Source Integrated Energy Harvesting System for IoE Applications. In Proceedings of the 2019 IEEE 62nd International Midwest Symposium on Circuits and Systems (MWSCAS), Dallas, TX, USA, 4–7 August 2019; pp. 271–274.

- Ryoo, J.; Kim, S.; Cho, J.; Kim, H.; Tjoa, S.; DeRobertis, C. IoE Security Threats and You. In Proceedings of the 2017 International Conference on Software Security and Assurance (ICSSA), Altoona, PA, USA, 24–25 July 2017; pp. 13–19.

- Sunyaev, A.; Sunyaev, A. Fog and Edge Computing. In Internet Computing; Springer International Publishing: New York, NY, USA, 2020; pp. 237–264.

- Muhammed, T.; Mehmood, R.; Albeshri, A.; Katib, I. UbeHealth: A Personalized Ubiquitous Cloud and Edge-Enabled Networked Healthcare System for Smart Cities. IEEE Access 2018, 6, 32258–32285.

- Khan, L.U.; Yaqoob, I.; Tran, N.H.; Kazmi, S.M.A.; Dang, T.N.; Hong, C.S. Edge Computing Enabled Smart Cities: A Comprehensive Survey. IEEE Internet Things J. 2020, 1.

- Negash, B.; Rahmani, A.M.; Liljeberg, P.; Jantsch, A. Fog Computing Fundamentals in the Internet-of-Things. In Fog Computing in the Internet of Things; Springer: Berlin/Heidelberg, Germany, 2018; pp. 3–13.

- Yi, S.; Li, C.; Li, Q. A Survey of Fog Computing. In Proceedings of the 2015 Workshop on Mobile Big Data, Association for Computing Machinery (ACM), New York, NY, USA, 22–25 June 2015; pp. 37–42.

- Yousefpour, A.; Fung, C.; Nguyen, T.; Kadiyala, K.; Jalali, F.; Niakanlahiji, A.; Kong, J.; Jue, J.P. All one needs to know about fog computing and related edge computing paradigms: A complete survey. J. Syst. Arch. 2019, 98, 289–330.

- Bonomi, F.; Milito, R.; Zhu, J.; Addepalli, S. Fog computing and its role in the internet of things. In Proceedings of the First Edition of the MCC Workshop on Mobile Cloud Computing, Association for Computing Machinery (ACM), New York, NY, USA, 13–17 August 2012; pp. 13–16.

- Nath, S.; Seal, A.; Banerjee, T.; Sarkar, S.K. Optimization Using Swarm Intelligence and Dynamic Graph Partitioning in IoE Infrastructure: Fog Computing and Cloud Computing. In Communications in Computer and Information Science; Springer: Berlin, Germany, 2017; pp. 440–452.

- Wang, X.; Ning, Z.; Guo, S.; Wang, L. Imitation Learning Enabled Task Scheduling for Online Vehicular Edge Computing. IEEE Trans. Mob. Comput. 2020, 1.

- Badii, C.; Bellini, P.; DiFino, A.; Nesi, P. Sii-Mobility: An IoT/IoE Architecture to Enhance Smart City Mobility and Transportation Services. Sensors 2019, 19, 1.

- Tammemäe, K.; Jantsch, A.; Kuusik, A.; Preden, J.S.; Õunapuu, E. Self-Aware Fog Computing in Private and Secure Spheres. In Fog Computing in the Internet of Things: Intelligence at the Edge; Springer International Publishing: New York, NY, USA, 2017; pp. 71–99.

- Aqib, M.; Mehmood, R.; Alzahrani, A.; Katib, I.; Albeshri, A.; Altowaijri, S.M. Rapid Transit Systems: Smarter Urban Planning Using Big Data, In-Memory Computing, Deep Learning, and GPUs. Sustainability 2019, 11, 2736.

- Mehmood, R.; Meriton, R.; Graham, G.; Hennelly, P.; Kumar, M. Exploring the influence of big data on city transport operations: A Markovian approach. Int. J. Oper. Prod. Manag. 2017, 37, 75–104.

- Aqib, M.; Mehmood, R.; Alzahrani, A.; Katib, I.; Albeshri, A.; Altowaijri, S.M. Smarter Traffic Prediction Using Big Data, In-Memory Computing, Deep Learning and GPUs. Sensors 2019, 19, 2206.

- Mehmood, R.; Alam, F.; Albogami, N.N.; Katib, I.; Albeshri, A.; Altowaijri, S.M. UTiLearn: A Personalised Ubiquitous Teaching and Learning System for Smart Societies. IEEE Access 2017, 5, 2615–2635.

- Suma, S.; Mehmood, R.; Albeshri, A. Automatic Detection and Validation of Smart City Events Using HPC and Apache Spark Platforms. In Smart Infrastructure and Applications: Foundations for Smarter Cities and Societies; Springer: Berlin/Heidelberg, Germany, 2020; pp. 55–78.

- Alomari, E.; Mehmood, R. Analysis of Tweets in Arabic Language for Detection of Road Traffic Conditions. In Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering, LNICST; Springer: Cham, Switzerland, 2018; pp. 98–110.

- Arfat, Y.; Suma, S.; Mehmood, R.; Albeshri, A. Parallel Shortest Path Big Data Graph Computations of US Road Network Using Apache Spark: Survey, Architecture, and Evaluation. In Smart Infrastructure and Applications Foundations for Smarter Cities and Societies; Springer: Cham, Switzerland, 2020; pp. 185–214.

- Bosaeed, S.; Katib, I.; Mehmood, R. A Fog-Augmented Machine Learning based SMS Spam Detection and Classification System; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2020; pp. 325–330.

- Usman, S.; Mehmood, R.; Katib, I.; Albeshri, A. ZAKI+: A Machine Learning Based Process Mapping Tool for SpMV Computations on Distributed Memory Architectures. IEEE Access 2019, 7, 81279–81296.

- Usman, S.; Mehmood, R.; Katib, I.; Albeshri, A.; Altowaijri, S.M. ZAKI: A Smart Method and Tool for Automatic Performance Optimization of Parallel SpMV Computations on Distributed Memory Machines. Mob. Networks Appl. 2019.

- Ahmad, N.; Mehmood, R. Enterprise Systems for Networked Smart Cities. In Smart Infrastructure and Applications: Foundations for Smarter Cities and Societies; Springer: Cham, Switzerland; pp. 1–33.

- Kuchta, R.N.R.; Novotný, R.; Kuchta, R.; Kadlec, J. Smart City Concept, Applications and Services. J. Telecommun. Syst. Manag. 2014, 3, 1–8.

- Ahad, M.A.; Tripathi, G.; Agarwal, P. Learning analytics for IoE based educational model using deep learning techniques: Architecture, challenges and applications. Smart Learn. Environ. 2018, 5, 1–16.

- Al-dhubhani, R.; Al Shehri, W.; Mehmood, R.; Katib, I.; Algarni, A.; Altowaijri, S. Smarter Border Security: A Technology Perspective. In Proceedings of the 1st International Symposium on Land and Maritime Border Security and Safety, Jeddah, Saudi Arabia, 15–19 October 2017; pp. 131–143.

- Queralta, J.P.; Gia, T.N.; Tenhunen, H.; Westerlund, T. Collaborative Mapping with IoE-based Heterogeneous Vehicles for Enhanced Situational Awareness. In SAS 2019 IEEE Sensors Applications Symposium Conference Proceedings, LNCST; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2019; pp. 1–6.

- Alam, F.; Mehmood, R.; Katib, I. D2TFRS: An Object Recognition Method for Autonomous Vehicles Based on RGB and Spatial Values of Pixels. In Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering, LNICST; Springer: Cham, Switzerland, 2018; Volume 224, pp. 155–168.

- Mehmood, R.; Bhaduri, B.; Katib, I.; Chlamtac, I. Smart Societies, Infrastructure, Technologies and Applications. Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering (LNICST); Springer: Berlin, Germany, 2018; p. 367.

- Alomari, E.; Mehmood, R.; Katib, I. Sentiment Analysis of Arabic Tweets for Road Traffic Congestion and Event Detection. In Smart Infrastructure and Applications: Foundations for Smarter Cities and Societies; Springer International Publishing: Cham, Switzerland, 2020; pp. 37–54.

- Aqib, M.; Mehmood, R.; Alzahrani, A.; Katib, I. A Smart Disaster Management System for Future Cities Using Deep Learning, GPUs, and In-Memory Computing. In Smart Infrastructure and Applications. EAI/Springer Innovations in Communication and Computing; Springer: Cham, Switzerland, 2020; pp. 159–184.

- Mehmood, R.; Graham, G. Big Data Logistics: A health-care Transport Capacity Sharing Model. Procedia Comput. Sci. 2015, 64, 1107–1114.

- Zhang, Z.; Xiao, Y.; Ma, Z.; Xiao, M.; Ding, Z.; Lei, X.; Karagiannidis, G.K.; Fan, P. 6G Wireless Networks: Vision, Requirements, Architecture, and Key Technologies. IEEE Veh. Technol. Mag. 2019, 14, 28–41.

- Gupta, H.; Dastjerdi, A.V.; Ghosh, S.K.; Buyya, R. iFogSim: A toolkit for modeling and simulation of resource management techniques in the Internet of Things, Edge and Fog computing environments. Software Pr. Exp. 2017, 47, 1275–1296.

- Andrae, A.S.G.; Edler, T. On Global Electricity Usage of Communication Technology: Trends to 2030. Challenges 2015, 6, 117–157.

- Global Petrol Prices. 2019. Saudi Arabia Electricity Price. Available online: http://www.efficiency-from-germany.info/ENEFF/Redaktion/DE/Downloads/Publikationen/Zielmarktanalysen/marktanalyse_saudi_arabien_2011_gebaeude.pdf?__blob=publicationFile&v=4 (accessed on 24 September 2020).

- International Air Transport Association (IATA). IATA Forecast Predicts 8.2 Billion Air Travelers in 2037. IATA Press Release No. 62. 2018. Available online: https://www.iata.org/pressroom/pr/Pages/2018-10-24-02.aspx (accessed on 24 September 2020).

- Karakus, G.; Karşıgil, E.; Polat, L. The Role of IoT on Production of Services: A Research on Aviation Industry. In Proceedings of the International Symposium for Production Research, Vienna, Austria, 28–30 August 2018; pp. 503–511.

- Lazaroiu, C.; Roscia, M. Smart District through IoT and Blockchain; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2017; pp. 454–461.

- Paidi, V.; Fleyeh, H.; Håkansson, J.; Nyberg, R.G. Smart parking sensors, technologies and applications for open parking lots: A review. IET Intell. Transp. Syst. 2018, 12, 735–741.

- Song, E.Y.; Fitzpatrick, G.J.; Lee, K.B. Smart Sensors and Standard-Based Interoperability in Smart Grids. IEEE Sens. J. 2017, 17, 7723–7730.

| Research | IoE | Edge/Fog | Smart Societies | 6G | Distributed AI (DAI) | as a Service (aaS) | AIaaS | DAIaaS |

|---|---|---|---|---|---|---|---|---|

| Letaief et al. [13] | x | x | x | x | ||||

| Smith and Hollinger [40] | x | x | x | |||||

| AlSuwaidan [49] | x | x | x | |||||

| Lv and Kumar [50] | x | x | x | |||||

| Aiello et al. [51] | x | x | x | |||||

| Nath et al. [61] | x | x | x | x | ||||

| Wang et al. [62] | x | x | x | x | ||||

| Badii et al. [63] | x | x | x | |||||

| Ahad et al. [77] | x | x | ||||||

| Casati et al. [42] | x | x | x | |||||

| Milton et al. [43] | x | x | x | x | ||||

| This work | x | x | x | x | x | x | x | x |

| Device Parameter | Cloud (VM) | Fog Device | Edge Device |
|---|---|---|---|
| MIPS | 220,000 | 50,000 | 5000 |
| RAM (MB) | 40,000 | 4000 | 1000 |
| Uplink Bandwidth (Mbps) | 100 | 10,000 | 10,000 |
| Downlink Bandwidth (Mbps) | 10,000 | 10,000 | 10,000 |
| Busy Power (W) | 16 × 103 | 107.339 | 87.53 |
| Idle Power (W) | 16 × 83.25 | 83.4333 | 82.44 |
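The busy and idle power figures in this table can be turned into a utilization-dependent estimate. The following is only a minimal sketch: it assumes a linear power model (idle power plus a share of the busy-idle gap proportional to CPU utilization), which is a common simulator simplification and is not stated by the table itself. The names `DEVICE_POWER_W` and `estimated_power_w` are hypothetical.

```python
# Busy/idle power in watts, copied from the device-parameter table.
# The cloud entries are kept as the products the table gives (16 x 103, 16 x 83.25).
DEVICE_POWER_W = {
    "cloud": {"busy": 16 * 103, "idle": 16 * 83.25},
    "fog": {"busy": 107.339, "idle": 83.4333},
    "edge": {"busy": 87.53, "idle": 82.44},
}


def estimated_power_w(device: str, utilization: float) -> float:
    """Assumed linear model: idle + (busy - idle) * utilization."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be in [0, 1]")
    power = DEVICE_POWER_W[device]
    return power["idle"] + (power["busy"] - power["idle"]) * utilization
```

Under this assumption an idle edge device draws the tabulated idle power and a fully loaded one draws the busy power; intermediate loads are interpolated between the two.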

| Workload Type | Source Module | Destination Module | CPU Requirement (wc) (MI) | Network Requirement (wn) (Bytes) |
|---|---|---|---|---|
| vid_strm | Camera | Motion Detector | 1000 | 20K |
| motion_vid_strm | Motion Detector | Obj Detector | 2000 | 2000 |
| detected_obj | Obj Detector | User Interface | 500 | 2000 |
| obj_location | Obj Detector | Obj Tracker | 1000 | 100 |
| cam_ctrl | Obj Tracker | Camera Ctrl | 50 | 100 |
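To give a feel for the CPU requirements in this table, the sketch below divides each workload's million-instruction (MI) count by a device's MIPS rating from the device-parameter table. This is a back-of-the-envelope estimate that assumes the device is fully dedicated to the workload; it ignores scheduling, queuing, and resource sharing, and the function name is hypothetical.

```python
# MIPS ratings from the device-parameter table.
MIPS = {"cloud": 220_000, "fog": 50_000, "edge": 5_000}

# CPU requirement (MI) per workload type, from the workload table.
WORKLOAD_MI = {
    "vid_strm": 1000,
    "motion_vid_strm": 2000,
    "detected_obj": 500,
    "obj_location": 1000,
    "cam_ctrl": 50,
}


def ideal_exec_time_s(workload: str, device: str) -> float:
    """Idealized execution time in seconds: MI divided by MIPS."""
    return WORKLOAD_MI[workload] / MIPS[device]
```

For example, `ideal_exec_time_s("motion_vid_strm", "edge")` gives 2000 / 5000 = 0.4 s, while the same workload on a fog device takes 2000 / 50,000 = 0.04 s under these assumptions.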

| Link (L) | Latency (ms) |
|---|---|
| Cloud-Gateway | 100 |
| Gateway-Fog | 2 |
| Fog-Edge | 2 |
| Edge-Sensor/actuator | 1 |
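The link latencies above can be summed along a path to see how far a message has to travel. The sketch below assumes a strictly layered chain (sensor, edge, fog, gateway, cloud) in which each hop uses exactly one of the listed links; it counts link latency only, not processing or queuing time. The names are hypothetical.

```python
# Layers ordered from the device side to the cloud (assumed chain topology).
HIERARCHY = ["sensor", "edge", "fog", "gateway", "cloud"]

# HOP_LATENCY_MS[i] is the latency between HIERARCHY[i] and HIERARCHY[i + 1],
# taken from the link-latency table.
HOP_LATENCY_MS = [1, 2, 2, 100]


def one_way_latency_ms(src: str, dst: str) -> int:
    """Sum of link latencies between two layers, in either direction."""
    i, j = sorted((HIERARCHY.index(src), HIERARCHY.index(dst)))
    return sum(HOP_LATENCY_MS[i:j])
```

Under these assumptions a sensor reading reaches the fog layer after 1 + 2 = 3 ms of link latency but needs 1 + 2 + 2 + 100 = 105 ms to reach the cloud, which illustrates why the Cloud-Gateway link dominates.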

| Estimated Energy Consumption | 2010 (kWh/GB) | 2020 (kWh/GB) | 2030 (kWh/GB) |
|---|---|---|---|
| Best | 5.65 | 0.05 | 0.002 |
| Worst | 14.78 | 1.04 | 0.048 |
| Average | 10.22 | 0.54 | 0.025 |
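The per-gigabyte figures above translate directly into an energy estimate for a given traffic volume. The sketch below is illustrative only: it assumes 1 GB = 10^9 bytes and a simple proportional relation between bytes transferred and energy, and the function name is hypothetical.

```python
# Estimated network energy intensity in kWh per GB, from the table.
KWH_PER_GB = {
    2010: {"best": 5.65, "worst": 14.78, "average": 10.22},
    2020: {"best": 0.05, "worst": 1.04, "average": 0.54},
    2030: {"best": 0.002, "worst": 0.048, "average": 0.025},
}

BYTES_PER_GB = 10**9  # assumption: decimal gigabytes


def transfer_energy_kwh(num_bytes: int, year: int = 2020, case: str = "average") -> float:
    """Energy in kWh to move num_bytes across the network, proportional to volume."""
    return num_bytes / BYTES_PER_GB * KWH_PER_GB[year][case]
```

For instance, moving 1 GB with the 2020 average figure costs 0.54 kWh under these assumptions, while the 2030 average figure reduces that to 0.025 kWh.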

| | Camera | Barcode Reader | Counter Device |
|---|---|---|---|
| Workload type | vid_strm | barcode | info |
| Distribution (ms) | Deterministic Distribution (5) | Uniform Distribution (5,20) | Uniform Distribution (5,20) |

| Workload Type | Source Module | Destination Module | CPU Req. (MI) | Network Req. (Byte) |
|---|---|---|---|---|
| barcode | Barcode Reader | Boarding Processor | 100 | 1000 |
| passenger_info | Boarding Processor | Authenticator (Auth.) | 2000 | 1000 |
| gate_ctrl | Authenticator | Gate Ctrl | 100 | 100 |
| auth_info | Auth. Info Provider | Authenticator | 100 | 100 |
| info | Counter Device | Check Info | 100 | 1000 |
| passenger | Check Info | Passenger Processing | 500 | 1000 |
| passenger_info_req | Passenger Processing | Auth. Info Provider | 1000 | 1000 |
| passenger_info_res | Auth. Info Provider | Passenger Processing | 1000 | 100 |
| counter control | Passenger Processing | Counter Ctrl | 100 | 500 |

| Workload Type | Source Module | Destination Module | CPU Req. (MI) | Network Req. (Byte) |
|---|---|---|---|---|
| meter_reading | Meter | Meter Monitor | 100 | 500 |
| outage_status | Meter Monitor | Outage Notifier | 500 | 2000 |
| meter_status | Meter Monitor | Elect Controller | 1000 | 2000 |
| elect_analysis | Elect Controller | User Interface | 1000 | 500 |
| ctrl_params | Elect Controller | Meter Ctrl | 500 | 50 |
| bin_reading | Bin | Bin Monitor | 100 | 500 |
| full_status | Bin Monitor | Full Notifier | 200 | 2000 |
| bin_status | Bin Monitor | Bins Coord | 800 | 2000 |
| waste_cond | Bins Coord | User Interface | 1000 | 500 |
| ctrl_params | Bins Coord | Bin Ctrl | 500 | 50 |

| Workload Type | Source Module | Destination Module | CPU Req. (MI) | Network Req. (Byte) |
|---|---|---|---|---|
| Scenario A | | | | |
| data | Sensor | Data Collection | 100 | RD = 20 K |
| Collected data (datac) | Data Collection | Data Aggregation | 200 | RD |
| Aggregated data (dataag) | Data Aggregation | Data Fusion | 100 K | DA = RD × E |
| Fused data (dataf) | Data Fusion | Data Prep. | 150 K | DF = DA × 0.80 |
| Preprocessed data (datap) | Data Prep. | Model Build | 150 K | DP = DF × 0.50 |
| model | Model Build | Analytics | 200 K | 1 MB |
| results | Analytics | Actuator | 100 K | 1000 |
| Scenario B | | | | |
| data | Sensor | Data Collection | 100 | RD = 20 K |
| Collected data (datac) | Data Collection | Data Aggregation | 200 | RD |
| Aggregated data (dataag) | Data Aggregation | Data Fusion | 100 K | DA = RD × E |
| Fused data (dataf) | Data Fusion | Data Pre-Processing | 150 K | DF = DA × 0.80 |
| Preprocessed data (datap) | Data Pre-Processing | Model Building | 150 K | DP = DF × 0.50 |
| model | Model Building | Create Dist. ML | 200 K | M = 1 MB |
| Dist. model (modeld) | Create Dist. ML | Receive Dist. Model | 200 K | DM = M/E, 5 K < DM < 50 K |
| Rec dist. model (rec_modeld) | Receive Dist. Model | Local Analytics | 1000 | DM |
| Collected data (datac) | Data Collection | Local Analytics | 200 | RD |
| results | Local Analytics | Actuator | 3000 | 1000 |
| Scenarios C and D | | | | |
| data | Sensor | Data Collection | 100 | RD = 20 K |
| Cloud collected data (dataC_c) | Fog Data Collection | Data Aggregation | 200 | FDC = RD × E |
| Cloud aggregated data (dataC_ag) | Data Aggregation | Data Fusion | 100 K | DA = FDC × F |
| Cloud fused data (dataC_f) | Data Fusion | Data Pre-Processing | 150 K | DF = DA × 0.80 |
| Cloud preprocessed data (dataC_p) | Data Pre-Processing | Model Building | 150 K | DP = DF × 0.50 |
| Cloud model (modelC) | Model Building | Create Dist. ML | 200 K | M = 1 MB |
| Cloud dist. model (modelC_d) | Create Dist. ML | Rec. Dist. Model | 200 K | DM = M/F, 20 K < DM < 200 K |
| Rec cloud model (rec_modelC_d) | Rec. Dist. Model | Fog Model Building | 10 K | DM |
| Collected data (datac) | Data Collection | Fog Data Collection | 200 | RD |
| Fog collected data (dataF_c) | Fog Data Collection | Fog Data Aggregation | 200 | FDC = RD × E |
| Fog aggregated data (dataF_ag) | Fog Data Aggregation | Fog Data Fusion | 20 K | FDA = FDC × E |
| Fog fused data (dataF_f) | Fog Data Fusion | Fog Data Pre-Processing | 30 K | FDF = FDA × 0.80 |
| Fog preprocessed data (dataF_p) | Fog Data Pre-Processing | Fog Model Building | 30 K | FDP = FDF × 0.50 |
| Fog model (modelF) | Fog Model Building | Fog Create Dist. ML | 40 K | FM = M/F, 20 K < FM < 200 K |
| Fog dist. model (modelF_d) | Fog Create Dist. ML | Rec. Fog Dist. Model | 40 K | DFM = FM/E, 5 K < DFM < 50 K |
| Rec fog model (rec_modelF_d) | Rec. Fog Dist. Model | Local Analytics | 1000 | DFM |
| Collected data (datac) | Data Collection | Local Analytics | 200 | RD |
| results | Local Analytics | Actuator | 3000 | 1000 |
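The network-requirement formulas in the Scenario B rows chain together, so a small calculation helps to check how message sizes scale. The sketch below rests on several assumptions that this table does not spell out: `E` is read as the number of edge devices, "K" as 1000 bytes, and "1 MB" as 10^6 bytes. Integer arithmetic is used for the 0.80 and 0.50 factors, and all function names are hypothetical.

```python
K = 1_000          # assumption: "K" means 1000 bytes
MB = 1_000_000     # assumption: "MB" means 10^6 bytes

RD = 20 * K        # raw data per sensor message (RD = 20 K)
M = 1 * MB         # full model size (M = 1 MB)


def scenario_b_sizes(num_edge: int) -> dict:
    """Message sizes in bytes for Scenario B, with E assumed to be num_edge."""
    da = RD * num_edge       # DA = RD x E
    df = da * 80 // 100      # DF = DA x 0.80
    dp = df * 50 // 100      # DP = DF x 0.50
    dm = M // num_edge       # DM = M / E (integer division)
    return {"RD": RD, "DA": da, "DF": df, "DP": dp, "M": M, "DM": dm}


def dm_within_bounds(num_edge: int) -> bool:
    """Check the table's constraint 5 K < DM < 50 K."""
    return 5 * K < M // num_edge < 50 * K
```

With `num_edge = 100`, the aggregated data is 2,000,000 bytes, the fused data 1,600,000 bytes, the preprocessed data 800,000 bytes, and the distributed model 10,000 bytes, which satisfies the stated bound. Under the same reading, the bound 5 K < DM < 50 K corresponds to more than 20 and fewer than 200 edge devices.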

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Janbi, N.; Katib, I.; Albeshri, A.; Mehmood, R. Distributed Artificial Intelligence-as-a-Service (DAIaaS) for Smarter IoE and 6G Environments. Sensors 2020, 20, 5796. https://doi.org/10.3390/s20205796