ISOBlue HD: An Open-Source Platform for Collecting Context-Rich Agricultural Machinery Datasets

Abstract

1. Introduction

2. Related Works

3. Hardware Components

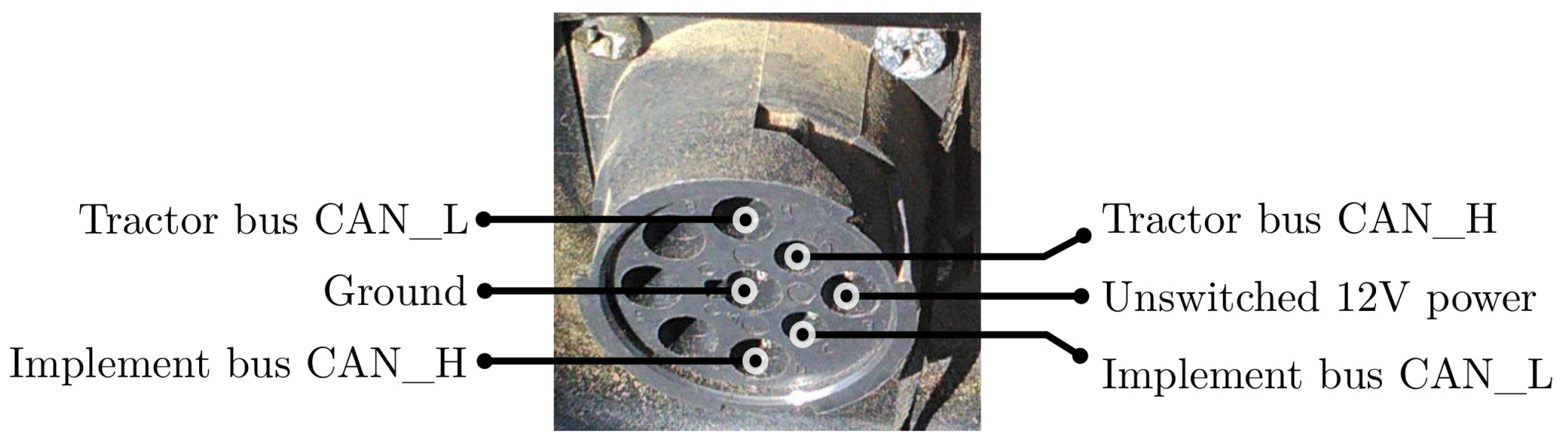

3.1. Data Sources

3.2. Additional Components

3.3. Enclosure

4. Software Development

4.1. System Management

4.2. Power Management

4.3. Kafka Cluster

4.4. Data Loggers

5. Wheat Harvest Experiment

5.1. Contextual Label Generation

5.2. Preliminary Contextual Knowledge

5.3. GPS Track with Contexts

5.4. Extracted CAN Signal with Contexts

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Component | Model | Quantity | Unit Cost (USD) |
|---|---|---|---|
| Single board computer | | | |
| Ixora carrier board | Toradex 01331000 | 1 | $99 |
| Apalis computer module | Toradex 00281101 | 1 | $139 |
| Sensors | | | |
| IP cameras | Ubiquiti Networks UVC-G3-BULLET | 3 | $149 |
| USB GPS module | Navisys GR-701W | 1 | $49 |
| CAN transceiver (on Ixora) | Analog Devices ADM3053BRWZ | 2 | $0 |
| Data storage | | | |
| mSATA solid state drive | Samsung PM851 | 1 | $299 |
| USB hard drive | Western Digital WDBYFT0040BBK | 1 | $81 |
| Cellular connectivity | | | |
| miniPCIe 4G/LTE module | Telit LE910-NAG | 1 | $95 |
| Antennas | Taoglas FXUB65-07-0180C | 2 | $10 |
| Power related | | | |
| Relay PCB | Custom built | 1 | $10 |
| RTC battery | CR1225 | 1 | $1 |
| Network switch | Veracity VCS-8P2-MOB | 1 | $248 |
| Enclosure | | | |
| IP68 enclosure | Integra H12106SCF | 1 | $193 |
| Plexiglass | Custom built | 1 | $10 |
| Ethernet coupler | Tripp Lite N206-BC01-IND | 3 | $14 |
| USB coupler | USBFirewire RR-111200-30 | 1 | $15 |
| ISOBUS coupler | Deutsch HD10-9-1939PE-B022 | 1 | $10 |
| Cabling | Various vendors | N/A | $5 |
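As a quick consistency check on the bill of materials, the line items can be totalled programmatically. The sketch below is not part of the original article; it assumes that the "N/A" quantity for cabling denotes a single $5 lump sum, and it ignores taxes and shipping.

```python
# Line items from the Appendix A table: (component, quantity, unit cost in USD).
# Category rows carry no cost and are omitted.
BOM = [
    ("Ixora carrier board", 1, 99),
    ("Apalis computer module", 1, 139),
    ("IP cameras", 3, 149),
    ("USB GPS module", 1, 49),
    ("CAN transceiver (on Ixora)", 2, 0),
    ("mSATA solid state drive", 1, 299),
    ("USB hard drive", 1, 81),
    ("miniPCIe 4G/LTE module", 1, 95),
    ("Antennas", 2, 10),
    ("Relay PCB", 1, 10),
    ("RTC battery", 1, 1),
    ("Network switch", 1, 248),
    ("IP68 enclosure", 1, 193),
    ("Plexiglass", 1, 10),
    ("Ethernet coupler", 3, 14),
    ("USB coupler", 1, 15),
    ("ISOBUS coupler", 1, 10),
    ("Cabling", 1, 5),  # assumption: "N/A" quantity treated as one lump sum
]


def total_cost(bom):
    """Sum quantity * unit cost over all line items."""
    return sum(qty * unit for _, qty, unit in bom)


if __name__ == "__main__":
    print(f"Total: ${total_cost(BOM)}")  # Total: $1763
```

Under these assumptions the listed components sum to $1763.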

References

1. Oksanen, T.; Visala, A. Coverage path planning algorithms for agricultural field machines. J. Field Robot. 2009, 26, 651–668.

2. Gonzalez-de Soto, M.; Emmi, L.; Garcia, I.; Gonzalez-de Santos, P. Reducing fuel consumption in weed and pest control using robotic tractors. Comput. Electron. Agric. 2015, 114, 96–113.

3. Kortenbruck, D.; Griepentrog, H.W.; Paraforos, D.S. Machine operation profiles generated from ISO 11783 communication data. Comput. Electron. Agric. 2017, 140, 227–236.

4. Marx, S.E.; Luck, J.D.; Hoy, R.M.; Pitla, S.K.; Blankenship, E.E.; Darr, M.J. Validation of machine CAN bus J1939 fuel rate accuracy using Nebraska Tractor Test Laboratory fuel rate data. Comput. Electron. Agric. 2015, 118, 179–185.

5. Gomez-Gil, J.; Ruiz-Gonzalez, R.; Alonso-Garcia, S.; Gomez-Gil, F. A Kalman Filter Implementation for Precision Improvement in Low-Cost GPS Positioning of Tractors. Sensors 2013, 13, 15307–15323.

6. Finner, M.; Straub, R. Farm Machinery Fundamentals; American Publishing Company: Madison, WI, USA, 1985.

7. American Society of Agricultural Engineers. Automated Agriculture for the 21st Century, Proceedings of the 1991 Symposium, Chicago, IL, USA, 16–17 December 1991; American Society of Agricultural Engineers: St. Joseph, MI, USA, 1991.

8. International Organization for Standardization. Tractors and Machinery for Agriculture and Forestry—Serial Control and Communications Data Network—Part 1: General Standard for Mobile Data Communication. 2017. Available online: https://www.iso.org/standard/57556.html (accessed on 11 October 2020).

9. International Organization for Standardization. Road Vehicles—Controller Area Network (CAN)—Part 1: Data Link Layer and Physical Signalling. 2015. Available online: https://www.iso.org/standard/63648.html (accessed on 10 October 2020).

10. Liakos, K.; Busato, P.; Moshou, D.; Pearson, S.; Bochtis, D. Machine Learning in Agriculture: A Review. Sensors 2018, 18, 2674.

11. Torii, T. Research in autonomous agriculture vehicles in Japan. Comput. Electron. Agric. 2000, 25, 133–153.

12. Eaton, R.; Katupitiya, J.; Siew, K.W.; Howarth, B. Autonomous Farming: Modeling and Control of Agricultural Machinery in a Unified Framework. Int. J. Intell. Syst. Technol. Appl. 2010, 8, 444–457.

13. Steen, K.; Christiansen, P.; Karstoft, H.; Jørgensen, R. Using Deep Learning to Challenge Safety Standard for Highly Autonomous Machines in Agriculture. J. Imaging 2016, 2, 6.

14. Duckett, T.; Pearson, S.; Blackmore, S.; Grieve, B.; Chen, W.H.; Cielniak, G.; Cleaversmith, J.; Dai, J.; Davis, S.; Fox, C.; et al. Agricultural Robotics: The Future of Robotic Agriculture. arXiv 2018, arXiv:1806.06762.

15. Fountas, S.; Mylonas, N.; Malounas, I.; Rodias, E.; Hellmann Santos, C.; Pekkeriet, E. Agricultural Robotics for Field Operations. Sensors 2020, 20, 2672.

16. Romans, W.; Poore, B.; Mutziger, J. Advanced instrumentation for agricultural equipment. IEEE Instrum. Meas. Mag. 2000, 3, 26–29.

17. O’Grady, M.; Langton, D.; O’Hare, G. Edge computing: A tractable model for smart agriculture? Artif. Intell. Agric. 2019, 3, 42–51.

18. Perera, C.; Zaslavsky, A.; Christen, P.; Georgakopoulos, D. Context Aware Computing for The Internet of Things: A Survey. IEEE Commun. Surv. Tutorials 2014, 16, 414–454.

19. Conesa-Muñoz, J.; Bengochea-Guevara, J.M.; Andujar, D.; Ribeiro, A. Route planning for agricultural tasks: A general approach for fleets of autonomous vehicles in site-specific herbicide applications. Comput. Electron. Agric. 2016, 127, 204–220.

20. Spekken, M.; de Bruin, S. Optimized routing on agricultural fields by minimizing maneuvering and servicing time. Precis. Agric. 2013, 14, 224–244.

21. Diekhans, N.; Huster, J.; Brunnert, A.; Helligen, L.-P.M.Z. Method for Creating a Route Plan for Agricultural Machine Systems. U.S. Patent 8170785B2, 1 May 2012.

22. Diekhans, N.; Brunnert, A. Route Planning System for Agricultural Working Machines. U.S. Patent 7756624B2, 13 July 2010.

23. Truck Bus Control and Communications Network Committee. Off-Board Diagnostic Connector; SAE International: Warrendale, PA, USA, 2016.

24. Jensen, M. Diagnostic Tool Concepts for ISO11783 (ISOBUS). SAE Trans. 2004, 113, 415–419.

25. Heiß, A.; Paraforos, D.; Griepentrog, H. Determination of Cultivated Area, Field Boundary and Overlapping for A Plowing Operation Using ISO 11783 Communication and D-GNSS Position Data. Agriculture 2019, 9, 38.

26. Rohrer, R.; Pitla, S.; Luck, J. Tractor CAN bus interface tools and application development for real-time data analysis. Comput. Electron. Agric. 2019, 163, 104847.

27. Navarro, E.; Costa, N.; Pereira, A. A Systematic Review of IoT Solutions for Smart Farming. Sensors 2020, 20, 4231.

28. Darr, M.J. CAN Bus Technology Enables Advanced Machinery Management. Resour. Eng. Technol. Sustain. World 2012, 19, 10–11.

29. Layton, A.W.; Balmos, A.D.; Sabpisal, S.; Ault, A.C.; Krogmeier, J.V.; Buckmaster, D.R. ISOBlue: An Open Source Project to Bring Agricultural Machinery Data into the Cloud. In Proceedings of the 2014 ASABE Annual International Meeting, Montreal, QC, Canada, 13–16 July 2014; pp. 1–8.

30. Wang, Y.; Balmos, A.D.; Layton, A.W.; Noel, S.; Krogmeier, J.V.; Buckmaster, D.R.; Ault, A.C. CANdroid: Freeing ISOBUS Data and Enabling Machine Data Analytics. In Proceedings of the 2016 ASABE Annual International Meeting, Orlando, FL, USA, 16–20 July 2016; p. 1.

31. Wang, Y.; Balmos, A.D.; Layton, A.W.; Noel, S.; Ault, A.; Krogmeier, J.V.; Buckmaster, D.R. An Open-Source Infrastructure for Real-Time Automatic Agricultural Machine Data Processing. In Proceedings of the 2017 ASABE Annual International Meeting, Spokane, WA, USA, 16–19 July 2017; p. 1.

32. Kragh, M.; Christiansen, P.; Laursen, M.; Larsen, M.; Steen, K.; Green, O.; Karstoft, H.; Jørgensen, R. FieldSAFE: Dataset for Obstacle Detection in Agriculture. Sensors 2017, 17, 2579.

33. Fite, A.; McGuan, A.; Letz, T. Tractor Hacking. Available online: https://tractorhacking.github.io/ (accessed on 3 August 2020).

34. Backman, J.; Linkolehto, R.; Koistinen, M.; Nikander, J.; Ronkainen, A.; Kaivosoja, J.; Suomi, P.; Pesonen, L. Cropinfra research data collection platform for ISO 11783 compatible and retrofit farm equipment. Comput. Electron. Agric. 2019, 166, 105008.

35. Covington, B.R. Assessment of Utilization and Downtime of a Commercial Level Multi-Pass Corn Stover Harvesting Systems. Master’s Thesis, Iowa State University, Ames, IA, USA, 2013.

36. Askey, J.C. Automated Logistic Processing and Downtime Analysis of Commercial Level Multi-Pass Corn Stover Harvesting Systems. Master’s Thesis, Iowa State University, Ames, IA, USA, 2014.

37. Toradex Inc. NXP® i.MX 6 Computer on Module—Apalis iMX6. Available online: https://www.toradex.com/computer-on-modules/apalis-arm-family/nxp-freescale-imx-6 (accessed on 5 August 2020).

38. Analog Devices. Signal and Power Isolated CAN Transceiver with Integrated Isolated DC-to-DC Converter. Available online: https://www.analog.com/media/en/technical-documentation/data-sheets/ADM3053.pdf (accessed on 5 August 2020).

39. Navisys Technology. Navisys GR-701, u-blox7 Ultra-High Performance GPS Mouse Receiver. Available online: http://www.navisys.com.tw/products/GPS&GNSS_receivers/flyer/GR-701_flyer-150703.pdf (accessed on 5 August 2020).

40. Ubiquiti Inc. Ubiquiti Network UniFi G3 Video Camera. Available online: https://www.ui.com/unifi-video/unifi-video-camera-g3/ (accessed on 5 August 2020).

41. The Internet Society. Real Time Streaming Protocol (RTSP). Available online: https://tools.ietf.org/html/rfc2326 (accessed on 10 October 2020).

42. Veracity. CAMSWITCH 8 Mobile. Available online: https://www.veracityglobal.com/products/networked-video-integration-devices/camswitch-mobile.aspx (accessed on 5 August 2020).

43. IEEE Standard for Information Technology - Telecommunications and Information Exchange between Systems—Local and Metropolitan Area Networks—Specific Requirements—Part 3: Carrier Sense Multiple Access with Collision Detection (CSMA/CD) Access Method and Physical Layer Specifications—Data Terminal Equipment (DTE) Power Via Media Dependent Interface (MDI). Available online: https://standards.ieee.org/standard/802_3cm-2020.html (accessed on 5 August 2020).

44. Telit. LE910 Cat. 1 Series. Available online: https://www.telit.com/le910-cat-1-le910b1/ (accessed on 5 August 2020).

45. International Electrotechnical Commission. Degrees of Protection Provided by Enclosures (IP Code). Available online: https://webstore.iec.ch/publication/2452 (accessed on 5 August 2020).

46. Raj, K. The Ångström Distribution. Available online: https://github.com/Angstrom-distribution (accessed on 5 August 2020).

47. Wang, Y. ISOBlue HD Software GitHub. Available online: https://github.com/ISOBlue/isoblue2/tree/hd (accessed on 5 August 2020).

48. Kroah-Hartman, G.; Sievers, K. udev—Dynamic Device Management. Available online: https://www.freedesktop.org/software/systemd/man/udev.html (accessed on 5 August 2020).

49. Treffkorn, R.; Brashear, D. gpsd—A GPS Service Daemon. Available online: https://gpsd.gitlab.io/gpsd/index.html (accessed on 5 August 2020).

50. Poettering, L.; Sievers, K.; Hoyer, H.; Mack, D.; Gundersen, T.; Herrmann, D. Systemd—System and Service Manager. Available online: https://www.freedesktop.org/wiki/Software/systemd/ (accessed on 5 August 2020).

51. The OpenBSD Project. openssh. Available online: https://www.openssh.com/ (accessed on 5 August 2020).

52. Kelley, S. dnsmasq. Available online: http://www.thekelleys.org.uk/dnsmasq/doc.html (accessed on 5 August 2020).

53. Curnow, R. Chrony. Available online: https://chrony.tuxfamily.org/documentation.html (accessed on 5 August 2020).

54. Kleine-Budde, M.; Hartkopp, O. socketCAN—Linux-CAN / SocketCAN User Space Applications. Available online: https://github.com/linux-can/ (accessed on 6 August 2020).

55. Apache Software Foundation. Kafka—A Distributed Streaming Platform. Available online: https://github.com/apache/kafka (accessed on 6 August 2020).

56. Qian, S.; Wu, G.; Huang, J.; Das, T. Benchmarking modern distributed streaming platforms. In Proceedings of the 2016 IEEE International Conference on Industrial Technology (ICIT), Taipei, Taiwan, 14–17 March 2016; pp. 592–598.

57. Akanbi, A.; Masinde, M. A Distributed Stream Processing Middleware Framework for Real-Time Analysis of Heterogeneous Data on Big Data Platform: Case of Environmental Monitoring. Sensors 2020, 20, 3166.

58. Apache Software Foundation. Kafka Software Archive. Available online: https://archive.apache.org/dist/kafka/0.10.1.0/kafka_2.10-0.10.1.0.tgz (accessed on 5 August 2020).

59. Edenhill, M. librdkafka—The Apache Kafka C/C++ library. Available online: https://github.com/edenhill/librdkafka (accessed on 5 August 2020).

60. Powers, D. kafka-python - A Python client for Apache Kafka. Available online: https://github.com/dpkp/kafka-python (accessed on 5 August 2020).

61. Apache Software Foundation. Avro C. Available online: https://avro.apache.org/docs/current/api/c/index.html (accessed on 5 August 2020).

62. Apache Software Foundation. Apache Avro Getting Started (Python). Available online: https://avro.apache.org/docs/current/gettingstartedpython.html (accessed on 5 August 2020).

63. Moe. gps3—Python 2.7 to 3.5 Interface to gpsd. Available online: https://pypi.org/project/gps3/ (accessed on 5 August 2020).

64. Bellard, F. ffmpeg—A Complete, Cross-Platform Solution to Record, Convert and Stream Audio and Video. Available online: https://www.ffmpeg.org/ (accessed on 5 August 2020).

65. CNH Industrial America LLC. Axial-Flow Combines. Available online: https://www.caseih.com/northamerica/en-us/products/harvesting/axial-flow-combines (accessed on 5 August 2020).

66. Masullo, A.; Dalgarno, L. MUltiple VIdeos LABelling tool. Available online: https://github.com/ale152/muvilab (accessed on 5 August 2020).

67. Pesé, M.D.; Stacer, T.; Campos, C.A.; Newberry, E.; Chen, D.; Shin, K.G. LibreCAN: Automated CAN Message Translator. In Proceedings of the 2019 ACM SIGSAC Conference on Computer and Communications Security, London, UK, 11–15 November 2019; pp. 2283–2300.

68. Zago, M.; Longari, S.; Tricarico, A.; Gil Pérez, M.; Carminati, M.; Martínez Pérez, G.; Zanero, S. ReCAN - Dataset for Reverse engineering of Controller Area Networks. Data Brief 2020, 29, 105149.

69. Markovitz, M.; Wool, A. Field classification, modeling and anomaly detection in unknown CAN bus networks. Veh. Commun. 2017, 9, 43–52.

70. Verma, M.; Bridges, R.; Hollifield, S. ACTT: Automotive CAN Tokenization and Translation. In Proceedings of the 2018 International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, 12–14 December 2018; pp. 278–283.

71. Nolan, B.C.; Graham, S.; Mullins, B.; Kabban, C.S. Unsupervised Time Series Extraction from Controller Area Network Payloads. In Proceedings of the 2018 IEEE 88th Vehicular Technology Conference (VTC-Fall), Chicago, IL, USA, 27–30 August 2018; pp. 1–5.

72. Marchetti, M.; Stabili, D. READ: Reverse Engineering of Automotive Data Frames. IEEE Trans. Inf. Forensics Secur. 2019, 14, 1083–1097.

73. Yaacoub, E.; Alouini, M.S. A Key 6G Challenge and Opportunity—Connecting the Base of the Pyramid: A Survey on Rural Connectivity. Proc. IEEE 2020, 108, 533–582.

74. Liu, H.; Reibman, A.R.; Ault, A.C.; Krogmeier, J.V. Video Classification of Farming Activities with Motion-Adaptive Feature Sampling. In Proceedings of the 2018 IEEE 20th International Workshop on Multimedia Signal Processing (MMSP), Vancouver, BC, Canada, 29–31 August 2018; pp. 1–6.

75. Liu, H.; Reibman, A.R.; Ault, A.C.; Krogmeier, J.V. Video-Based Prediction for Header-Height Control of a Combine Harvester. In Proceedings of the 2019 IEEE Conference on Multimedia Information Processing and Retrieval (MIPR), San Jose, CA, USA, 28–30 March 2019; pp. 310–315.

76. Huybrechts, T.; Vanommeslaeghe, Y.; Blontrock, D.; Van Barel, G.; Hellinckx, P. Automatic Reverse Engineering of CAN Bus Data Using Machine Learning Techniques. In Advances on P2P, Parallel, Grid, Cloud and Internet Computing; Xhafa, F., Caballé, S., Barolli, L., Eds.; Springer International Publishing: Cham, Switzerland, 2018; Volume 13, pp. 751–761.

| Name | Collected Data | Reference |
|---|---|---|
| CyCAN | CAN | Darr [28] |
| ISOBlue | CAN | Layton et al. [29] |
| CANdroid | CAN | Wang et al. [30] |
| ISOBlue 2.0 | CAN, GNSS | Wang et al. [31] |
| FieldSAFE | GNSS, video, IMU, ranging | Kragh et al. [32] |
| PolyCAN | CAN | Fite et al. [33] |
| Cropinfra | CAN, GNSS | Backman et al. [34] |

| Feature | Description |
|---|---|
| Processor | ARM® Cortex-A9, 800 MHz, quad-core |
| RAM | 2 GB DDR3 |
| Flash | 4 GB eMMC flash |
| Interfaces | mSATA, miniPCIe, Ethernet, CAN, USB, GPIO |
| Input power | 7 to 27 V |

| Component | Quantity | Model | Description | Acquisition Rate (Nominal) |
|---|---|---|---|---|
| CAN transceiver | 2 | Analog Devices ADM3053 [38] | Converts CAN bus signals to bitstreams. | 700 frames/second |
| USB GPS module | 1 | Navisys GR-701W [39] | Provides positioning data with 2.5 m accuracy. | 1 message/second |
| IP camera | 3 | Ubiquiti Networks UVC-G3-BULLET [40] | Provides full HD (1080p) video streams. | 30 frames/second |
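To give a sense of the data volume these nominal rates imply, the per-device rates can be scaled by device count and duration. The sketch below assumes that each listed rate applies per device (for example, 700 frames/s on each of the two CAN transceivers) and that every source runs continuously at its nominal rate; real CAN traffic depends on bus load, so these figures are upper-bound estimates rather than measurements.

```python
# Nominal acquisition rates from the sensor table: source -> (device count, items/s per device).
# Assumption: each rate applies per device and every source runs continuously.
SOURCES = {
    "CAN frames": (2, 700),    # two ADM3053 transceivers
    "GPS messages": (1, 1),    # one GR-701W receiver
    "video frames": (3, 30),   # three UVC-G3-BULLET cameras
}


def nominal_count(devices, rate_per_s, seconds):
    """Items produced by `devices` identical sources at `rate_per_s` over `seconds`."""
    return devices * rate_per_s * seconds


def counts_per_hour(sources):
    """Nominal item counts for one hour (3600 s) of continuous logging."""
    return {name: nominal_count(n, rate, 3600) for name, (n, rate) in sources.items()}


if __name__ == "__main__":
    for name, count in counts_per_hour(SOURCES).items():
        print(f"{name}: {count:,}")
```

Under those assumptions, one hour of operation yields about 5.04 million CAN frames, 3,600 GPS messages, and 324,000 video frames.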

| Application | Description |
|---|---|
| udev [48] | System device manager |
| systemd [50] | System service daemon |
| openssh [51] | Networking tools using Secure Shell (SSH) |
| dnsmasq [52] | Multi-purpose networking tool |
| chronyd [53] | Clock synchronization daemon |
| gpsd [49] | GPS service daemon |

| Topic | Description |
|---|---|
| imp | Implement bus data |
| tra | Tractor bus data |
| gps | GPS data |
| remote | GPS and diagnostic data |
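The imp and tra topics carry frames from the implement and tractor buses. As an illustration only, the sketch below unpacks a classic SocketCAN [54] frame using the 16-byte `struct can_frame` layout and selects a Kafka topic from the interface the frame arrived on. The interface-to-topic mapping (`can0` to imp, `can1` to tra) is hypothetical, and the sketch stops before serialization and publishing; the software stack listed in this article also includes Avro serialization libraries, which are not used here.

```python
import struct

# Classic Linux SocketCAN `struct can_frame`: 32-bit can_id, 8-bit DLC,
# 3 padding/reserved bytes, then 8 data bytes (16 bytes in total).
CAN_FRAME_FMT = "=IB3x8s"
CAN_EFF_FLAG = 0x80000000  # set when the frame uses a 29-bit (extended) identifier
CAN_EFF_MASK = 0x1FFFFFFF  # 29-bit identifier mask
CAN_SFF_MASK = 0x000007FF  # 11-bit identifier mask

# Hypothetical mapping from CAN interface name to Kafka topic.
TOPIC_BY_IFACE = {"can0": "imp", "can1": "tra"}


def unpack_can_frame(raw):
    """Return (identifier, is_extended, payload) for one 16-byte SocketCAN frame."""
    can_id, dlc, data = struct.unpack(CAN_FRAME_FMT, raw)
    extended = bool(can_id & CAN_EFF_FLAG)
    ident = can_id & (CAN_EFF_MASK if extended else CAN_SFF_MASK)
    return ident, extended, data[:dlc]


def route(iface, raw):
    """Pick the Kafka topic for a frame read on `iface` and decode the frame."""
    return TOPIC_BY_IFACE[iface], unpack_can_frame(raw)
```

On Linux, `raw` would typically come from `sock.recv(16)` on a socket created with `socket.socket(socket.AF_CAN, socket.SOCK_RAW, socket.CAN_RAW)` and bound with `sock.bind(("can0",))`; keeping the decoding in a pure function lets it be tested without CAN hardware.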

| Name | Description |
|---|---|
| librdkafka [59] | Apache Kafka client C API |
| kafka-python [60] | Apache Kafka client Python API |
| avro-c [61] | Apache Avro serialization C library |
| avro-python [62] | Apache Avro serialization Python library |
| gps3 [63] | Client Python library for gpsd |
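The gps3 library [63] is a client for the JSON reports that gpsd [49] emits. To show what a GPS logger might extract from those reports, the sketch below parses one report line with Python's standard `json` module instead of gps3 and keeps only TPV (time-position-velocity) reports with a 2D or 3D fix (gpsd `mode` 2 or 3). The selected fields are an illustrative choice, not necessarily the fields ISOBlue HD records.

```python
import json


def parse_tpv(line):
    """Return a flat GPS record from one gpsd JSON report, or None if it is not a usable fix."""
    report = json.loads(line)
    if report.get("class") != "TPV":
        return None  # other report classes (e.g. SKY, VERSION) carry no position fix
    if report.get("mode", 0) < 2:
        return None  # mode 0 (unknown) or 1 (no fix)
    return {
        "time": report.get("time"),    # ISO 8601 UTC timestamp
        "lat": report.get("lat"),      # degrees
        "lon": report.get("lon"),      # degrees
        "speed": report.get("speed"),  # meters per second
        "track": report.get("track"),  # course over ground, degrees from true north
    }
```

Returning `None` for non-fix reports keeps the caller's loop simple: it can skip empty results and forward the rest to the gps topic.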

| Label | Description |
|---|---|
| Header position | |
| Header up | Header is in the up position. |
| Transition | Header is moving between positions (up to down or vice versa). |
| Header down | Header is in the down position. |
| Operator actions | |
| None | No operator action observed. |
| Joystick 1 | Header height and tilt. |
| Joystick 2 | Reel height adjustment. |
| Joystick 3 | Unload auger swing out/in. |
| Joystick 4 | Resume header preset or raise header. |
| Joystick 5 | Unload auger on/off. |
| Panel 1 | Header rotation on/off. |
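Per-frame annotations such as the header positions above are easier to align with GPS tracks or CAN signals when consecutive identical labels are merged into time intervals. The sketch below shows one way to do that with `itertools.groupby`; the fixed sampling period and the `to_segments` helper are assumptions for illustration, not the labelling procedure used in the experiment.

```python
from itertools import groupby

# Header-position labels from the table above.
HEADER_LABELS = {"Header up", "Transition", "Header down"}


def to_segments(labels, period=1.0, allowed=HEADER_LABELS):
    """Merge runs of identical per-sample labels into (label, start_s, end_s) tuples.

    `period` is the assumed time between consecutive labelled samples, in seconds.
    """
    segments = []
    index = 0
    for label, run in groupby(labels):
        if label not in allowed:
            raise ValueError(f"unknown label: {label!r}")
        length = sum(1 for _ in run)
        segments.append((label, index * period, (index + length) * period))
        index += length
    return segments
```

For example, `to_segments(["Header up", "Header up", "Transition", "Header down"])` returns `[("Header up", 0.0, 2.0), ("Transition", 2.0, 3.0), ("Header down", 3.0, 4.0)]`. Passing a different `allowed` set would let the same helper segment the operator-action labels.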

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, Y.; Liu, H.; Krogmeier, J.; Reibman, A.; Buckmaster, D. ISOBlue HD: An Open-Source Platform for Collecting Context-Rich Agricultural Machinery Datasets. Sensors 2020, 20, 5768. https://doi.org/10.3390/s20205768

Wang Y, Liu H, Krogmeier J, Reibman A, Buckmaster D. ISOBlue HD: An Open-Source Platform for Collecting Context-Rich Agricultural Machinery Datasets. Sensors. 2020; 20(20):5768. https://doi.org/10.3390/s20205768

Chicago/Turabian Style: Wang, Yang, He Liu, James Krogmeier, Amy Reibman, and Dennis Buckmaster. 2020. "ISOBlue HD: An Open-Source Platform for Collecting Context-Rich Agricultural Machinery Datasets" Sensors 20, no. 20: 5768. https://doi.org/10.3390/s20205768

APA Style: Wang, Y., Liu, H., Krogmeier, J., Reibman, A., & Buckmaster, D. (2020). ISOBlue HD: An Open-Source Platform for Collecting Context-Rich Agricultural Machinery Datasets. Sensors, 20(20), 5768. https://doi.org/10.3390/s20205768