Abstract

Pansharpening is a technique that fuses a low spatial resolution multispectral image and a high spatial resolution panchromatic one to obtain a multispectral image with the spatial resolution of the latter while preserving the spectral information of the multispectral image. In this paper we propose a variational Bayesian methodology for pansharpening. The proposed methodology uses the sensor characteristics to model the observation process and Super-Gaussian sparse image priors on the expected characteristics of the pansharpened image. The pansharpened image, as well as all model and variational parameters, are estimated within the proposed methodology. Using real and synthetic data, the quality of the pansharpened images is assessed both visually and quantitatively and compared with other pansharpening methods. Theoretical and experimental results demonstrate the effectiveness, efficiency, and flexibility of the proposed formulation.

1. Introduction

Remote sensing sensors simultaneously capture a Multispectral (MS) low resolution image along with a single-band high resolution image of the same area, referred to as Panchromatic (PAN) image. However, MS high-resolution images are needed by many applications, such as land use and land cover analyses or change detection. Pansharpening is a technique that fuses the MS and PAN images into an MS high resolution image that has the spatial resolution of the PAN image and the spectral resolution of the MS one.

In this paper we formulate the pansharpening problem following the Bayesian framework. Within this framework, we use the sensor characteristics to model the observation process as a conditional probability distribution. The observation process describes both the relationship between the MS high resolution and MS low resolution images and how the PAN image is obtained from the MS high resolution one. This probability distribution provides fidelity to the observed data in the pansharpened image reconstruction process. In addition to fidelity to the data, Bayesian methods incorporate prior knowledge on the MS high resolution image in the form of prior probability distributions. Crisp images, such as high resolution MS images, are expected to have Super-Gaussian (SG) statistics, while upsampled images suffer from blur that smooths out sharp gradients, making their statistics closer to Gaussian [1]. Our goal is to integrate the sharp edges of the PAN image into the pansharpened image, leading to less Gaussian statistics, which makes SG priors a suitable choice. SG priors have been successfully applied to other image processing tasks, such as compressed sensing [2], blind deconvolution [1,3] and blind color deconvolution [4], and they are therefore also expected to produce good results in pansharpening. However, the form of the SG prior does not allow us to obtain the posterior distribution analytically, making full Bayesian inference intractable. Hence, in this paper, we use variational Bayesian inference to estimate the distribution of the pansharpened image as well as the model parameters from the MS low resolution and PAN images.

The rest of the paper is organized as follows: a categorization and short review of related pansharpening methods is presented in Section 2. In Section 3 the pansharpening problem is mathematically formulated. Following the Bayesian modelling and inference, in Section 4 we propose a fully Bayesian method for the estimation of all the problem unknowns and model parameters. In Section 5, the quality of the pansharpened images is assessed both visually and quantitatively and compared with other classic and state-of-the-art pansharpening methods. In Section 6 we discuss the obtained results and finally, Section 7 concludes the paper.

2. Related Work

Early pansharpening techniques, such as the Brovey method [5], substituted some bands for image visualization or performed simple arithmetic transformations. Other classical methods transformed the MS image and substituted one of its components by the high spatial resolution PAN image. Examples of this strategy are the Principal Component Analysis (PCA) substitution [6], Brovey Transform [7] and Intensity-Hue-Saturation (IHS) [8] methods. A review of those early methods, among others, can be found in [9].

Over the past 20 years, numerous methods have been presented and, in an attempt to bring some order to the diversity of approaches, different reviews, comparisons and classifications have been proposed in the literature (see, for instance, [10,11,12,13,14,15,16,17]), each one with different criteria and, therefore, with a different categorization. Nevertheless, there now seems to be a consensus on three main categories, namely Component Substitution (CS), Multi-Resolution Analysis (MRA) and Variational Optimization (VO) [15,16,17]. Additionally, the increasing number of Deep Learning (DL)-based pansharpening methods proposed in recent years can be regarded as a new category.

The Component Substitution (CS) category includes the most widely used pansharpening methods. CS methods [12] usually upsample the MS image to the size of the PAN image and transform it to another space that separates the spatial and spectral image components. Then, the transformed component containing the spatial information is substituted by the PAN image (possibly after histogram matching). Finally, the backward transform is applied to obtain the pansharpened image. Examples of these methods include the already mentioned PCA substitution [6], IHS methods [8,18,19], the Gram–Schmidt (GS) methods [20] and the Brovey transform [7]. In [21], the transformation is replaced by a weighted average of the MS bands, and it is shown that this approach generalizes any CS image fusion method. The weights have been determined in different ways. For instance, in [22] the weights are optimally estimated to minimize the mean squared error, while in [23] they are set to the correlation coefficient between a single-band low resolution image (obtained from the MS image) and each MS band. A local criterion, based on the membership of each pixel in a fuzzy cluster, was applied in [24] to estimate weights that differ from pixel to pixel. To obtain a crisper MS high-resolution image, in [25] a Wiener deconvolution of the upsampled MS bands was performed before fusion.
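
As an illustration of the CS principle with a weighted-average intensity component, in the spirit of [21], the following minimal Python/NumPy sketch builds the intensity as a weighted average of the upsampled MS bands, matches the mean and standard deviation of the PAN image to it (a simple stand-in for histogram matching), and injects the difference into every band with unit gain. The weights, the unit gains and the function name `cs_fusion` are illustrative assumptions, not the settings of any of the cited methods.

```python
import numpy as np


def cs_fusion(ms_up: np.ndarray, pan: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """Generic component-substitution fusion (illustrative sketch).

    ms_up:   (B, H, W) MS image already upsampled to the PAN grid.
    pan:     (H, W) panchromatic image.
    weights: (B,) weights of the intensity component I = sum_b w_b * MS_b.
    """
    intensity = np.tensordot(weights, ms_up, axes=1)  # (H, W)
    # Mean/std matching of the PAN to the intensity (stand-in for histogram matching).
    pan_matched = (pan - pan.mean()) / (pan.std() + 1e-12) * intensity.std() + intensity.mean()
    detail = pan_matched - intensity
    # Unit injection gains: every band receives the same spatial detail.
    return ms_up + detail[None, :, :]


rng = np.random.default_rng(0)
ms_up = rng.random((4, 16, 16))
pan = rng.random((16, 16))
weights = np.full(4, 0.25)
fused = cs_fusion(ms_up, pan, weights)
```

When the weights sum to one, the intensity component of the fused image equals the matched PAN image, which is precisely the "substitution" that gives the category its name.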

In general, CS-based methods produce spectral distortions due to the different statistics of the PAN image and the transformed component containing the spatial details. To tackle this issue, Multi-Resolution Analysis (MRA) methods decompose the MS and PAN images into different levels, extract spatial details from the decomposed PAN image, and inject them into the finer scales of the MS image. This principle is also known as the ARSIS concept [10]. The High-Pass Filtering (HPF) algorithm in [11,18] can be considered the first approach in this category, where only two levels are considered. Multi-scale decompositions, such as the wavelet transform (WT) [26,27,28], the Generalized Laplacian Pyramid (GLP) [29,30,31] or the Non-Subsampled Contourlet Transform (NSCT) [32,33,34], were used to make the methods more precise. The “à trous” wavelet transform (AWT) was the preferred decomposition technique [26,28] until the publication of [31] showed the advantages of GLP over AWT. This was later corroborated in [14], where a comparison of different methods based on decimated and undecimated WT, AWT, GLP and NSCT concluded that GLP outperforms AWT because it better removes aliasing. The MRA category also includes the Smoothing Filter Based Intensity Modulation (SFIM) method [35,36], which first upsamples the MS image to the size of the PAN one and then uses a simplified solar radiation and land surface reflection model to increase its quality, and the Indusion method [37], in which the upscaling and fusion steps are carried out together.
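
The two-level HPF idea [11,18] can be sketched as follows: the high-frequency component of the PAN image (the PAN minus a low-pass version of itself) is added to each upsampled MS band. The box filter, its size, and the function names are assumptions made for illustration; practical MRA methods use filters matched to the sensor MTF and band-dependent injection gains.

```python
import numpy as np


def box_lowpass(img: np.ndarray, size: int) -> np.ndarray:
    """Separable moving-average filter with edge replication; size must be odd."""
    if size % 2 == 0:
        raise ValueError("size must be odd")
    pad = size // 2
    kernel = np.ones(size) / size
    padded = np.pad(img, pad, mode="edge")
    # Filter rows, then columns; 'valid' convolution removes the padding again.
    rows = np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)


def hpf_fusion(ms_up: np.ndarray, pan: np.ndarray, size: int = 5) -> np.ndarray:
    """HPF-style fusion: inject the PAN high-pass details into every upsampled band."""
    details = pan - box_lowpass(pan, size)
    return ms_up + details[None, :, :]


rng = np.random.default_rng(0)
ms_up = rng.random((3, 16, 12))
pan = rng.random((16, 12))
fused = hpf_fusion(ms_up, pan, size=5)
```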

Deep Learning (DL) techniques have gained prominence in recent years, and several DL methods have been proposed for pansharpening. To the best of our knowledge, Deep Neural Networks (DNN) were first used for pansharpening in [38], where a Modified Sparse Denoising Autoencoder (MSDA) algorithm was proposed. For the same task, a Coupled Sparse Denoising Autoencoder (CSDA) was used in [39]. Convolutional neural networks were introduced in [40] and also used, for instance, in [41]. Instead of facing the difficult task of learning the whole image, residual networks [42,43] learn, from upsampled MS and PAN patches, only the details of the MS high-resolution image that are not already in the upsampled MS image, and add them to it to obtain the pansharpened image. To adjust the size of the MS image to the size of the PAN one in a coarse-to-fine manner, two cascaded residual networks were used in the so-called Progressive Cascade Deep Residual Network (PCDRN) [44]. In [45], a multi-scale approach is followed by learning a DNN to upsample each NSCT directional sub-band from the MS and PAN images. In general, the main weaknesses of DL techniques are the high computational resources needed for training, the need for a huge amount of training data, which, in the case of pansharpening, might not be available, and the poor generalization to satellite images not used during training. The absence of ground-truth MS high-resolution images, needed for training these DL methods, is a problem pointed out in [46], where an unsupervised generative adversarial network (Pan-GAN) was proposed. The GAN aims to generate pansharpened images that are consistent with the spectral information of the MS image while maintaining the spatial information of the PAN image. However, it is not clear how well this technique generalizes to satellite images different from the ones used for training.
The adaptation of general image fusion methods, like the U2Fusion method in [47], to the pansharpening problem is a promising research area.

From a practical perspective, Variational Optimization (VO)-based methods present advantages from both theoretical and computational points of view [48]. VO-based methods mathematically model the relation between the observed images and the original MS high resolution image, building an energy functional based on some desired properties of the original image. The pansharpened image is obtained as the image that minimizes this energy functional [49]. This mathematical formulation allows visually important features to be rigorously introduced into, and processed by, the energy functional. Variational optimization can be considered a particular case of the Bayesian approach [50], where the estimated image is obtained by maximizing the posterior probability distribution of the MS high resolution image. Bayesian methods for pansharpening formulate the relations between the observed images and the original MS high resolution image as probability distributions, model the desired properties as prior distributions, and use Bayes’ theorem to estimate the pansharpened image based on the posterior distribution of the original MS high resolution image.

Following the seminal P+Xs method [51], the PAN image is usually modelled as a combination of the bands of the original high resolution multispectral image. However, in [49] this model was generalized by substituting the intensity images with their gradients. Note that while the P+Xs method [51] preserves spectral information, it produces blurring artifacts. To remove blur while preserving spectral similarity, other restrictions are introduced as reasonable assumptions or prior knowledge about the original image, such as a Laplacian prior [52], total variation [53,54], sparse representations [55], band correlations [56,57] or non-local priors [58,59]. Spectral information is also preserved by enforcing the pansharpened image, when downsampled to the size of the observed MS image, to be close to it [52,60,61]. A special class of VO-based methods are the super-resolution methods, which model pansharpening as the inverse problem of recovering the original high-resolution image by fusing the MS image and the PAN image (see [52,62] for a recent review and [63] for a recent work). Deconvolution methods, such as [64], also try to solve the inverse problem, but the MS image is upsampled to the size of the PAN one before the pansharpening procedure. Registration and fusion are carried out simultaneously in [65].

Note that the variational Bayesian approach, also followed in this paper, is more general than variational optimization. While VO-based methods aim at obtaining a single estimate of the pansharpened image, the variational Bayesian approach estimates the whole posterior distribution of the pansharpened image and the model parameters, given the observations. When a single image is needed, the mode of the distribution is usually selected, but other solutions can be obtained, for instance, by sampling the estimated distribution. Moreover, the proposed approach allows us to estimate the model parameters simultaneously with the pansharpened image within the same framework.

3. Problem Formulation

Let us denote by $\mathbf{y}$ the MS high-resolution image hypothetically captured with an ideal high-resolution sensor with $B$ bands $\mathbf{y}_b$, $b = 1, \ldots, B$, of size $m \times n$ pixels, that is, $\mathbf{y} = [\mathbf{y}_1^{T}, \ldots, \mathbf{y}_B^{T}]^{T}$, where the superscript $T$ denotes the transpose of a vector or matrix. Note that each band of the image is flattened into a column vector containing its pixels in lexicographical order. Unfortunately, this high-resolution image is not available in real applications. Instead, we observe an MS low-resolution image $\mathbf{Y} = [\mathbf{Y}_1^{T}, \ldots, \mathbf{Y}_B^{T}]^{T}$ with $B$ bands of size $M \times N$ pixels, with $M < m$, $N < n$.

The bands in this image are flattened as well to express them as column vectors. The relation between each low-resolution band, $\mathbf{Y}_b$, and its corresponding high-resolution one, $\mathbf{y}_b$, is defined by

$$\mathbf{Y}_b = \mathbf{D}\mathbf{H}\mathbf{y}_b + \mathbf{n}_b, \quad b = 1, \ldots, B, \tag{1}$$

where $\mathbf{D}$ is a decimation operator, $\mathbf{H}$ is a blurring matrix, and the capture noise $\mathbf{n}_b$ is modeled as additive white Gaussian noise with variance $\beta_b^{-1}$.

A single-band high-resolution PAN image covering a wide range of frequencies is also provided by the sensor. This PAN image $\mathbf{x}$, of size $m \times n$, is modelled as a spectral average of the unknown high-resolution bands $\mathbf{y}_b$ as

$$\mathbf{x} = \sum_{b=1}^{B} \lambda_b \mathbf{y}_b + \mathbf{v}, \tag{2}$$

where $\lambda_b$, $b = 1, \ldots, B$, are known quantities that depend on each particular satellite sensor, and the capture noise $\mathbf{v}$ is modeled as additive white Gaussian noise with variance $\gamma^{-1}$.

Once the image formation is formulated, let us use the Bayesian formulation to tackle the problem of recovering $\mathbf{y}$, the MS high resolution image, from the observed $\mathbf{Y}$, its degraded MS low resolution version, and the PAN image $\mathbf{x}$.
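
To make the observation model concrete, the following sketch draws $\mathbf{Y}$ and $\mathbf{x}$ from Equations (1) and (2) for a synthetic image. It assumes that $\mathbf{H}$ is a box blur over `ratio` × `ratio` blocks and $\mathbf{D}$ the matching decimation, so that $\mathbf{DH}$ reduces to block averaging (`ratio = 2` gives four times fewer pixels). Real sensors have different point spread functions, and the function names are hypothetical.

```python
import numpy as np


def degrade_band(y_b, ratio):
    """D H y_b, assuming H is a ratio x ratio box blur and D keeps one sample per block."""
    m, n = y_b.shape
    return y_b.reshape(m // ratio, ratio, n // ratio, ratio).mean(axis=(1, 3))


def simulate_observations(y, lam, ratio, beta, gamma, rng):
    """Draw (Y, x) following Eqs. (1) and (2).

    y: (B, m, n) high-resolution MS image; lam: (B,) PAN weights;
    beta: (B,) noise precisions (variance 1 / beta_b); gamma: PAN noise precision.
    """
    low_shape = (y.shape[1] // ratio, y.shape[2] // ratio)
    Y = np.stack([
        degrade_band(y_b, ratio) + rng.normal(0.0, beta_b ** -0.5, low_shape)
        for y_b, beta_b in zip(y, beta)
    ])
    x = np.tensordot(lam, y, axes=1) + rng.normal(0.0, gamma ** -0.5, y.shape[1:])
    return Y, x


rng = np.random.default_rng(0)
y = rng.random((4, 8, 8))                     # B = 4 bands of 8 x 8 pixels
lam = np.array([0.1, 0.2, 0.3, 0.4])
Y, x = simulate_observations(y, lam, ratio=2, beta=np.full(4, 1e12), gamma=1e12, rng=rng)
```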

4. Bayesian Modelling and Inference

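We model the distribution of each low resolution image $\mathbf{Y}_b$, $b = 1, \ldots, B$, following the degradation model in Equation (1), as a Gaussian distribution with mean $\mathbf{D}\mathbf{H}\mathbf{y}_b$ and covariance matrix $\beta_b^{-1}\mathbf{I}_{MN}$. Then, the distribution of the observed image $\mathbf{Y}$ is modelled by

$$p(\mathbf{Y} \mid \mathbf{y}, \boldsymbol{\beta}) = \prod_{b=1}^{B} \mathcal{N}\left(\mathbf{Y}_b \mid \mathbf{D}\mathbf{H}\mathbf{y}_b,\, \beta_b^{-1}\mathbf{I}_{MN}\right), \tag{3}$$

with $\boldsymbol{\beta} = \{\beta_1, \ldots, \beta_B\}$.

Analogously, using the degradation model in Equation (2), the distribution of the PAN image is given by

$$p(\mathbf{x} \mid \mathbf{y}, \gamma) = \mathcal{N}\left(\mathbf{x} \,\Big|\, \sum_{b=1}^{B} \lambda_b \mathbf{y}_b,\, \gamma^{-1}\mathbf{I}_{mn}\right). \tag{4}$$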

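The starting point for Bayesian methods is to choose a prior distribution for the unknowns. In this paper, we use SG distributions as priors for the MS high resolution image as

$$p(\mathbf{y} \mid \boldsymbol{\alpha}) = \prod_{b=1}^{B}\prod_{j=1}^{J} \frac{1}{Z(\alpha_{bj})} \exp\left\{-\alpha_{bj} \sum_{i=1}^{mn} \rho\left(\nu_{bj}(i)\right)\right\}, \tag{5}$$

with $\boldsymbol{\alpha} = \{\alpha_{bj}\}$, $\alpha_{bj} > 0$, and $Z(\alpha_{bj})$ a partition function. In Equation (5), $\boldsymbol{\nu}_{bj}$ is a filtered version of the $b$-th band, $\boldsymbol{\nu}_{bj} = \mathbf{F}_j \mathbf{y}_b$, where $\{\mathbf{F}_j\}_{j=1}^{J}$ is a set of $J$ high-pass filters, $\nu_{bj}(i)$ is the $i$-th pixel value of $\boldsymbol{\nu}_{bj}$, and $\rho(\cdot)$ is a penalty function. The image priors are placed on the filtered images $\boldsymbol{\nu}_{bj}$. It is well known that applying high-pass filters to natural images returns sparse coefficients: most of the coefficients are zero or close to zero, while only the edge-related coefficients remain large. Sparse priors have SG properties: compared to the Gaussian distribution, they have heavier tails, a sharper peak, and positive excess kurtosis. The distribution mass is located around zero, but large values have a higher probability than under a Gaussian distribution. For $p(\mathbf{y} \mid \boldsymbol{\alpha})$ in Equation (5) to be SG, $\rho(\cdot)$ has to be symmetric around zero and the function $\rho(\sqrt{s})$ increasing and concave for $s \in (0, \infty)$. This condition is equivalent to $\rho'(s)/s$ being decreasing on $(0, \infty)$, and allows $\rho(\cdot)$ to be represented as

$$\rho(s) = \inf_{\xi > 0} \; \frac{1}{2}\xi s^{2} - \rho^{*}\left(\frac{1}{2}\xi\right), \tag{6}$$

where $\inf$ denotes the infimum, $\rho^{*}(\cdot)$ is the concave conjugate of $\rho(\sqrt{\cdot})$, and $\boldsymbol{\xi} = \{\xi_{bji}\}$ is a set of positive parameters. The relationship dual to Equation (6) is given by [66]

$$\rho^{*}\left(\frac{1}{2}\xi\right) = \inf_{s} \; \frac{1}{2}\xi s^{2} - \rho(s). \tag{7}$$

To achieve sparsity, the function $\rho(\cdot)$ should suppress most of the coefficients in $\boldsymbol{\nu}_{bj}$ and preserve a small number of key features. Table 1 shows some penalty functions corresponding to SG distributions (see [1]).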

Table 1.

Some possible penalty functions.
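
The following sketch checks numerically the quadratic bound $\rho(s) \leq \frac{1}{2}\xi s^2 - \rho^*(\frac{1}{2}\xi)$, valid for every $\xi > 0$, that follows from Equations (6) and (7), and that it becomes tight at $\xi = \rho'(s)/s$. Since the entries of Table 1 are not reproduced here, it assumes the $\ell_p$ penalty $\rho(s) = |s|^p/p$ and the log penalty $\rho(s) = \log(\epsilon + |s|)$ in the form used in [1]; the values of $p$ and $\epsilon$ are arbitrary, and the concave conjugate is approximated by a minimum over a grid.

```python
import numpy as np

P_EXP = 1.0   # hypothetical exponent p of the l_p penalty (p = 1 corresponds to the l1 method)
EPS = 1e-2    # hypothetical epsilon of the log penalty


def rho_lp(s, p=P_EXP):
    return np.abs(s) ** p / p


def weight_lp(s, p=P_EXP):
    """rho'(s) / s for the l_p penalty."""
    return np.abs(s) ** (p - 2.0)


def rho_log(s, eps=EPS):
    return np.log(eps + np.abs(s))


def weight_log(s, eps=EPS):
    """rho'(s) / s for the log penalty."""
    return 1.0 / (np.abs(s) * (eps + np.abs(s)))


def concave_conjugate(rho, xi, grid):
    """rho*(xi/2) = inf_s xi/2 * s**2 - rho(s), Eq. (7), approximated by a grid minimum."""
    return np.min(0.5 * xi * grid ** 2 - rho(grid))


def quadratic_bound(rho, s, xi, grid):
    """Upper bound xi/2 * s**2 - rho*(xi/2) on rho(s), valid for every xi > 0."""
    return 0.5 * xi * s ** 2 - concave_conjugate(rho, xi, grid)


grid = np.linspace(-10.0, 10.0, 200_001)
s0 = 0.7
for rho, weight in [(rho_lp, weight_lp), (rho_log, weight_log)]:
    xi_star = weight(s0)  # the bound is tight at xi = rho'(s0) / s0
    print(rho.__name__, rho(s0), quadratic_bound(rho, s0, xi_star, grid))
```

Both penalties also satisfy the SG condition stated above, since $\rho'(s)/s$ decreases on $(0, \infty)$ in both cases.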

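From Equations (3)–(5), the joint probability distribution $p(\boldsymbol{\Theta}, \mathbf{Y}, \mathbf{x})$, with $\boldsymbol{\Theta} = \{\mathbf{y}, \boldsymbol{\alpha}, \boldsymbol{\beta}, \gamma\}$ the set of all unknowns, is given by

$$p(\boldsymbol{\Theta}, \mathbf{Y}, \mathbf{x}) = p(\boldsymbol{\alpha})\, p(\boldsymbol{\beta})\, p(\gamma)\, p(\mathbf{y} \mid \boldsymbol{\alpha})\, p(\mathbf{Y} \mid \mathbf{y}, \boldsymbol{\beta})\, p(\mathbf{x} \mid \mathbf{y}, \gamma), \tag{8}$$

where flat hyperpriors $p(\boldsymbol{\alpha})$, $p(\boldsymbol{\beta})$ and $p(\gamma)$ on the model hyperparameters have been included.

Following the Bayesian paradigm, inference will be based on $p(\boldsymbol{\Theta} \mid \mathbf{Y}, \mathbf{x})$. Since this posterior distribution cannot be calculated analytically due to the form of the SG distribution, in this paper we use the mean-field variational Bayesian model [67] to approximate $p(\boldsymbol{\Theta} \mid \mathbf{Y}, \mathbf{x})$ by a distribution of the form $q(\boldsymbol{\Theta}) = q(\mathbf{y})\, q(\boldsymbol{\alpha})\, q(\boldsymbol{\beta})\, q(\gamma)$ that minimizes the Kullback–Leibler divergence [68], defined as

$$C_{KL}\left(q(\boldsymbol{\Theta}) \,\|\, p(\boldsymbol{\Theta} \mid \mathbf{Y}, \mathbf{x})\right) = \int q(\boldsymbol{\Theta}) \log \frac{q(\boldsymbol{\Theta})}{p(\boldsymbol{\Theta} \mid \mathbf{Y}, \mathbf{x})}\, d\boldsymbol{\Theta}. \tag{9}$$

The Kullback–Leibler divergence is always non-negative, and it is equal to zero if and only if $q(\boldsymbol{\Theta}) = p(\boldsymbol{\Theta} \mid \mathbf{Y}, \mathbf{x})$.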

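Even with this factorization, the SG prior for $\mathbf{y}$ hampers the evaluation of this divergence, but the quadratic bound for $\rho(\cdot)$ in Equation (7), namely $\rho(s) \leq \frac{1}{2}\xi s^{2} - \rho^{*}(\frac{1}{2}\xi)$ for every $\xi > 0$, allows us to bound the prior in Equation (5) with a Gaussian form such that

$$p(\mathbf{y} \mid \boldsymbol{\alpha}) \geq \prod_{b=1}^{B}\prod_{j=1}^{J} \frac{1}{Z(\alpha_{bj})} \exp\left\{-\alpha_{bj} \sum_{i=1}^{mn} \left[\frac{1}{2}\xi_{bji}\,\nu_{bj}^{2}(i) - \rho^{*}\left(\frac{1}{2}\xi_{bji}\right)\right]\right\}. \tag{10}$$

We then define the lower bound of the prior, $\mathsf{M}(\mathbf{y}, \boldsymbol{\alpha}, \boldsymbol{\xi})$, as the right-hand side of Equation (10), and obtain the lower bound of the joint probability distribution

$$\mathsf{F}(\boldsymbol{\Theta}, \boldsymbol{\xi}, \mathbf{Y}, \mathbf{x}) = p(\boldsymbol{\alpha})\, p(\boldsymbol{\beta})\, p(\gamma)\, \mathsf{M}(\mathbf{y}, \boldsymbol{\alpha}, \boldsymbol{\xi})\, p(\mathbf{Y} \mid \mathbf{y}, \boldsymbol{\beta})\, p(\mathbf{x} \mid \mathbf{y}, \gamma),$$

which yields the inequality $\log p(\boldsymbol{\Theta}, \mathbf{Y}, \mathbf{x}) \geq \log \mathsf{F}(\boldsymbol{\Theta}, \boldsymbol{\xi}, \mathbf{Y}, \mathbf{x})$.

Utilizing the lower bound derived from Equation (10), we minimize $C_{KL}(q(\boldsymbol{\Theta}) \,\|\, \mathsf{F}(\boldsymbol{\Theta}, \boldsymbol{\xi}, \mathbf{Y}, \mathbf{x}))$ instead of $C_{KL}(q(\boldsymbol{\Theta}) \,\|\, p(\boldsymbol{\Theta} \mid \mathbf{Y}, \mathbf{x}))$.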

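As shown in [67], for each unknown $\theta \in \boldsymbol{\Theta}$, the estimated $q(\theta)$ will have the form

$$q(\theta) \propto \exp\left\{ \left\langle \log \mathsf{F}(\boldsymbol{\Theta}, \boldsymbol{\xi}, \mathbf{Y}, \mathbf{x}) \right\rangle_{q(\boldsymbol{\Theta}_{\theta})} \right\}, \tag{14}$$

where $\boldsymbol{\Theta}_{\theta}$ represents all the variables in $\boldsymbol{\Theta}$ except $\theta$, and $\langle \cdot \rangle_{q(\boldsymbol{\Theta}_{\theta})}$ denotes the expected value calculated using the distribution $q(\boldsymbol{\Theta}_{\theta})$. When point estimates are required, $\langle \theta \rangle$ is used.

For variables with a degenerate posterior approximation, that is, for $q(\theta) = \delta(\theta - \hat{\theta})$, the value $\hat{\theta}$ where the posterior degenerates is given by [67]

$$\hat{\theta} = \arg\max_{\theta} \left\langle \log \mathsf{F}(\boldsymbol{\Theta}, \boldsymbol{\xi}, \mathbf{Y}, \mathbf{x}) \right\rangle_{q(\boldsymbol{\Theta}_{\theta})}. \tag{15}$$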

Let us now obtain the analytic expressions for each unknown posterior approximation.

4.1. High Resolution Multispectral Image Update

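Using Equation (14), we can show in a straightforward way that the posterior distribution for the high resolution MS image will have the form

$$q(\mathbf{y}) = \mathcal{N}\left(\mathbf{y} \mid \langle \mathbf{y} \rangle, \boldsymbol{\Sigma}_{\mathbf{y}}\right), \tag{16}$$

where the inverse of the covariance matrix is given by

$$\boldsymbol{\Sigma}_{\mathbf{y}}^{-1} = \mathrm{diag}(\boldsymbol{\beta}) \otimes \mathbf{H}^{T}\mathbf{D}^{T}\mathbf{D}\mathbf{H} + \gamma\, \boldsymbol{\lambda}\boldsymbol{\lambda}^{T} \otimes \mathbf{I}_{mn} + \mathrm{blkdiag}_{b}\left(\sum_{j=1}^{J} \alpha_{bj}\, \mathbf{F}_j^{T}\, \mathrm{diag}(\boldsymbol{\xi}_{bj})\, \mathbf{F}_j\right), \tag{17}$$

with $\otimes$ denoting the Kronecker product, $\mathrm{diag}(\mathbf{a})$ the diagonal matrix formed from the elements of a vector $\mathbf{a}$, $\mathrm{blkdiag}_b(\cdot)$ the block-diagonal matrix whose $b$-th diagonal block is its argument, $\boldsymbol{\beta} = [\beta_1, \ldots, \beta_B]^{T}$, $\boldsymbol{\lambda} = [\lambda_1, \ldots, \lambda_B]^{T}$ and $\boldsymbol{\xi}_{bj} = [\xi_{bj1}, \ldots, \xi_{bj(mn)}]^{T}$, and the mean is obtained as

$$\boldsymbol{\Sigma}_{\mathbf{y}}^{-1} \langle \mathbf{y} \rangle = \left(\mathrm{diag}(\boldsymbol{\beta}) \otimes \mathbf{H}^{T}\mathbf{D}^{T}\right)\mathbf{Y} + \gamma \left(\boldsymbol{\lambda} \otimes \mathbf{I}_{mn}\right)\mathbf{x}. \tag{18}$$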
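
The Kronecker structure of the posterior precision and of the right-hand side of the linear system for the mean can be checked on a toy problem. The sketch below builds both densely for a 1-D signal with $B = 2$ bands, assuming a circulant blur, decimation by 2, and a single first-difference filter (all sizes and names are hypothetical), and then verifies that the solution cancels the gradient of the corresponding quadratic energy, computed band by band without Kronecker products. Dense matrices are only viable at this scale.

```python
import numpy as np


def circulant(first_col):
    """Circulant matrix whose k-th column is first_col cyclically shifted down by k."""
    return np.stack([np.roll(first_col, k) for k in range(len(first_col))], axis=1)


def build_precision(H, D, F_list, beta, gamma, lam, alpha, xi):
    """Dense Sigma_y^{-1}; alpha: (B, J), xi: (B, J, mn)."""
    B, mn = len(beta), H.shape[0]
    prec = np.kron(np.diag(beta), H.T @ D.T @ D @ H)
    prec += gamma * np.kron(np.outer(lam, lam), np.eye(mn))
    for b in range(B):
        sl = slice(b * mn, (b + 1) * mn)
        for j, F in enumerate(F_list):
            prec[sl, sl] += alpha[b, j] * F.T @ np.diag(xi[b, j]) @ F
    return prec


def build_rhs(H, D, beta, gamma, lam, Y, x):
    """Right-hand side of the system for <y>; Y stacks the B low-res bands."""
    mn = H.shape[0]
    return np.kron(np.diag(beta), H.T @ D.T) @ Y + gamma * np.kron(lam[:, None], np.eye(mn)) @ x


# Toy 1-D example: B = 2 bands of mn = 6 samples, decimation by 2 (MN = 3), J = 1 filter.
rng = np.random.default_rng(1)
B, mn, J = 2, 6, 1
H = circulant(np.array([0.5, 0.25, 0.0, 0.0, 0.0, 0.25]))
D = np.eye(mn)[::2]
F_list = [circulant(np.array([1.0, -1.0, 0.0, 0.0, 0.0, 0.0]))]
beta, gamma = np.array([50.0, 80.0]), 100.0
lam = np.array([0.6, 0.4])
alpha = rng.uniform(0.5, 2.0, (B, J))
xi = rng.uniform(0.5, 2.0, (B, J, mn))
Y = rng.random(B * 3)
x = rng.random(mn)

prec = build_precision(H, D, F_list, beta, gamma, lam, alpha, xi)
y_mean = np.linalg.solve(prec, build_rhs(H, D, beta, gamma, lam, Y, x))
```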

4.2. Variational Parameters Update

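To estimate the value of the variational parameters $\boldsymbol{\xi}$, introduced in Equation (7), we need to solve, for each band $b$, filter $j$, and pixel $i$, the optimization problem

$$\xi_{bji} = \arg\min_{\xi > 0} \; \frac{1}{2}\xi \left\langle \nu_{bj}^{2}(i) \right\rangle - \rho^{*}\left(\frac{1}{2}\xi\right), \tag{19}$$

where $\langle \nu_{bj}^{2}(i) \rangle = \langle \nu_{bj}(i) \rangle^{2} + [\mathbf{F}_j \boldsymbol{\Sigma}_{\mathbf{y}_b} \mathbf{F}_j^{T}]_{ii}$, with $\boldsymbol{\Sigma}_{\mathbf{y}_b}$ the $b$-th diagonal block of $\boldsymbol{\Sigma}_{\mathbf{y}}$. Since

$$\rho^{*}\left(\frac{1}{2}\xi\right) = \min_{x} \; \frac{1}{2}\xi x^{2} - \rho(x), \tag{20}$$

whose minimum is achieved at the $x$ satisfying $\xi = \rho'(x)/x$, differentiating the right-hand side of (19) with respect to $\xi$ shows that the optimum is reached when $x^{2} = \langle \nu_{bj}^{2}(i) \rangle$, and therefore

$$\xi_{bji} = \frac{\rho'(s)}{s}\bigg|_{s = \sqrt{\langle \nu_{bj}^{2}(i) \rangle}}. \tag{21}$$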
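
The update of the variational parameters is elementwise once the mean and variance of each filtered coefficient are available. The sketch below assumes they are given as arrays (the variance being the diagonal of $\mathbf{F}_j\boldsymbol{\Sigma}_{\mathbf{y}_b}\mathbf{F}_j^T$), reuses the assumed $\ell_p$ and log penalties of [1], and adds a small floor, which is our own numerical safeguard rather than part of the method, to avoid dividing by zero in flat regions.

```python
import numpy as np


def weight_lp(s, p=1.0):
    """rho'(s) / s for the assumed l_p penalty |s|^p / p."""
    return np.abs(s) ** (p - 2.0)


def weight_log(s, eps=1e-2):
    """rho'(s) / s for the assumed log penalty log(eps + |s|)."""
    return 1.0 / (np.abs(s) * (eps + np.abs(s)))


def update_xi(nu_mean, nu_var, weight, floor=1e-8):
    """xi = rho'(s)/s evaluated at s = sqrt(<nu^2>), with <nu^2> = <nu>^2 + Var[nu].

    nu_mean: F_j <y_b>; nu_var: diagonal of F_j Sigma_{y_b} F_j^T.
    `floor` is a hypothetical safeguard for coefficients with <nu^2> = 0.
    """
    expected_sq = nu_mean ** 2 + nu_var
    s = np.sqrt(np.maximum(expected_sq, floor))
    return weight(s)


xi_l1 = update_xi(np.array([0.3, 0.0]), np.array([0.16, 0.0]), weight_lp)
xi_log = update_xi(np.array([0.5]), np.array([0.0]), weight_log)
```

Large coefficients (edges) receive small $\xi$ and are therefore weakly penalized in the next image update, while near-zero coefficients receive large $\xi$ and are strongly smoothed.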

4.3. Model Parameters Update

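The estimates of the noise precisions (inverse variances) in the degradation models in Equations (3) and (4) are obtained using Equation (15) as

$$\beta_b = \frac{MN}{\left\|\mathbf{Y}_b - \mathbf{D}\mathbf{H}\langle\mathbf{y}_b\rangle\right\|^{2} + \mathrm{tr}\left(\mathbf{D}\mathbf{H}\boldsymbol{\Sigma}_{\mathbf{y}_b}\mathbf{H}^{T}\mathbf{D}^{T}\right)}, \quad b = 1, \ldots, B, \tag{22}$$

$$\gamma = \frac{mn}{\left\|\mathbf{x} - \sum_{b=1}^{B}\lambda_b\langle\mathbf{y}_b\rangle\right\|^{2} + \mathrm{tr}\left((\boldsymbol{\lambda}^{T} \otimes \mathbf{I}_{mn})\,\boldsymbol{\Sigma}_{\mathbf{y}}\,(\boldsymbol{\lambda} \otimes \mathbf{I}_{mn})\right)}, \tag{23}$$

where $\mathrm{tr}(\cdot)$ represents the trace of a matrix and $\boldsymbol{\Sigma}_{\mathbf{y}_b}$ is the $b$-th $mn \times mn$ diagonal block of $\boldsymbol{\Sigma}_{\mathbf{y}}$.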
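
A direct transcription of the two noise-precision updates follows. It is a sketch that assumes the trace terms have already been computed (for instance, with approximations such as those of Section 4.4); with a zero trace term, $\beta_b$ reduces to the inverse of the mean squared residual, which the example checks on synthetic noise.

```python
import numpy as np


def update_beta(Y_b, DH_y_mean, trace_term):
    """beta_b = MN / (||Y_b - DH<y_b>||^2 + tr(DH Sigma_{y_b} H^T D^T))."""
    return Y_b.size / (np.sum((Y_b - DH_y_mean) ** 2) + trace_term)


def update_gamma(x, lam, y_mean, trace_term):
    """gamma = mn / (||x - sum_b lam_b <y_b>||^2 + tr(Lambda Sigma_y Lambda^T)).

    x: (m, n) PAN image; lam: (B,) weights; y_mean: (B, m, n) posterior mean.
    """
    resid = x - np.tensordot(lam, y_mean, axes=1)
    return x.size / (np.sum(resid ** 2) + trace_term)


# With a zero covariance term, beta_b is the inverse residual variance.
rng = np.random.default_rng(0)
prediction = rng.random(100_000)
Y_b = prediction + rng.normal(0.0, 0.1, prediction.shape)   # true precision 1 / 0.1**2 = 100
beta_hat = update_beta(Y_b, prediction, trace_term=0.0)
```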

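From Equation (14), we obtain the following distribution for the parameter $\alpha_{bj}$ of the SG prior in Equation (5):

$$q(\alpha_{bj}) \propto \frac{1}{Z(\alpha_{bj})} \exp\left\{-\alpha_{bj} \sum_{i=1}^{mn} \left[\frac{1}{2}\xi_{bji}\left\langle \nu_{bj}^{2}(i)\right\rangle - \rho^{*}\left(\frac{1}{2}\xi_{bji}\right)\right]\right\}. \tag{24}$$

The mode of this distribution can be obtained (see [69]) by solving

$$-\frac{\partial \log Z(\alpha_{bj})}{\partial \alpha_{bj}} = \sum_{i=1}^{mn} \left[\frac{1}{2}\xi_{bji}\left\langle \nu_{bj}^{2}(i)\right\rangle - \rho^{*}\left(\frac{1}{2}\xi_{bji}\right)\right]. \tag{25}$$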
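
For the $\ell_p$ penalty, assuming the form $\rho(s) = |s|^p/p$ used in [1], the partition function of each filtered band is proportional to $\alpha_{bj}^{-N_{pix}/p}$, where $N_{pix}$ is the number of pixels. If $\boldsymbol{\xi}$ has just been updated with Equation (21), each bracket in Equation (25) equals $\rho(s_{bji})$ with $s_{bji} = \sqrt{\langle\nu_{bj}^2(i)\rangle}$, so the mode becomes $\alpha_{bj} = N_{pix} / \sum_i s_{bji}^p$. This closed form is our own derivation under these assumptions, not an equation given in the text; the sketch checks it against samples from a Laplacian, which corresponds to $p = 1$.

```python
import numpy as np


def update_alpha_lp(expected_nu_sq, p=1.0):
    """Mode of q(alpha_bj) for the assumed l_p penalty rho(s) = |s|^p / p.

    expected_nu_sq: array of <nu_bj(i)^2> over all pixels i of one filtered band.
    Returns N_pix / sum_i s_i^p with s_i = sqrt(<nu_bj(i)^2>).
    """
    s = np.sqrt(expected_nu_sq)
    return expected_nu_sq.size / np.sum(s ** p)


# Laplacian coefficients with density proportional to exp(-3 |s|), i.e. alpha = 3 for p = 1.
rng = np.random.default_rng(0)
samples = rng.laplace(0.0, 1.0 / 3.0, 200_000)
alpha_hat = update_alpha_lp(samples ** 2, p=1.0)
```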

The $\ell_p$ penalty function shown in Table 1 produces proper priors, for which the partition function can be evaluated, but the log penalty function produces an improper prior. We tackle this problem by examining, for , the behavior of

and keeping in the term that depends on . This produces for the log prior

4.4. Calculating the Covariance Matrices

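The covariance matrix $\boldsymbol{\Sigma}_{\mathbf{y}}$, whose inverse is given in Equation (17), must be explicitly computed to find its trace and also to calculate $\langle \nu_{bj}^{2}(i) \rangle$. However, since this calculation is computationally very intensive, we propose the following approximation. We first approximate using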

where is calculated as the mean of the values in .
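
As an illustration of why a mean-based approximation helps, the sketch below assumes that $\mathrm{diag}(\boldsymbol{\xi}_{bj})$ is replaced by $\bar{\xi}_{bj}\mathbf{I}$ and additionally, as a hypothetical simplification, that $\mathbf{H}$ and $\mathbf{F}_j$ are circulant (periodic boundaries) and $\mathbf{D}^T\mathbf{D} \approx r\,\mathbf{I}$. Under these assumptions every block of Equation (17) is diagonalized by the DFT, and $\mathrm{tr}(\boldsymbol{\Sigma}_{\mathbf{y}})$ reduces to a sum of traces of $B \times B$ inverses, one per frequency. This is one possible way to exploit such approximations, not necessarily the authors' procedure; the 1-D setting and all names are illustrative.

```python
import numpy as np


def circulant(first_col):
    return np.stack([np.roll(first_col, k) for k in range(len(first_col))], axis=1)


def trace_sigma_fft(h, f_list, beta, gamma, lam, alpha, xi_bar, r):
    """tr(Sigma_y) when every block of the precision is circulant (1-D sketch).

    h, f_list: first columns of the circulant H and F_j; r: scalar replacing D^T D.
    """
    H2 = np.abs(np.fft.fft(h)) ** 2                      # eigenvalues of H^T H
    F2 = [np.abs(np.fft.fft(f)) ** 2 for f in f_list]    # eigenvalues of F_j^T F_j
    total = 0.0
    for k in range(len(h)):
        d = beta * r * H2[k] + sum(alpha[:, j] * xi_bar[:, j] * F2[j][k] for j in range(len(f_list)))
        M = np.diag(d) + gamma * np.outer(lam, lam)      # B x B precision at frequency k
        total += np.trace(np.linalg.inv(M))
    return total


def trace_sigma_dense(h, f_list, beta, gamma, lam, alpha, xi_bar, r):
    """Reference: the same approximated precision built densely and inverted."""
    n, B = len(h), len(beta)
    H, Fs = circulant(h), [circulant(f) for f in f_list]
    prec = np.kron(np.diag(beta * r), H.T @ H) + gamma * np.kron(np.outer(lam, lam), np.eye(n))
    for b in range(B):
        sl = slice(b * n, (b + 1) * n)
        for j, F in enumerate(Fs):
            prec[sl, sl] += alpha[b, j] * xi_bar[b, j] * F.T @ F
    return np.trace(np.linalg.inv(prec))


rng = np.random.default_rng(0)
h = np.array([0.5, 0.25, 0.0, 0.0, 0.0, 0.0, 0.0, 0.25])
f_list = [np.array([1.0, -1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0])]
B = 3
beta, gamma, lam = rng.uniform(10, 100, B), 50.0, rng.uniform(0.1, 0.5, B)
alpha, xi_bar, r = rng.uniform(0.5, 2.0, (B, 1)), rng.uniform(0.5, 2.0, (B, 1)), 0.25
fast = trace_sigma_fft(h, f_list, beta, gamma, lam, alpha, xi_bar, r)
dense = trace_sigma_dense(h, f_list, beta, gamma, lam, alpha, xi_bar, r)
```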

We then use the approximation

with

Finally we have

4.5. Proposed Algorithm

Based on the previous derivations, we propose the Variational Bayesian SG Pansharpening Algorithm in Algorithm 1. The linear equations problem in Equation (18), used in step 4 of Algorithm 1, has been solved using the Conjugate Gradient approach.
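
The linear system for $\langle\mathbf{y}\rangle$ is large, symmetric and positive definite, which is what makes the Conjugate Gradient method appropriate. A textbook CG loop is sketched below. It only needs a function that applies $\boldsymbol{\Sigma}_{\mathbf{y}}^{-1}$ to a vector, so the blur, decimation and filtering terms could be applied with convolutions instead of forming the matrix; the dense test matrix, tolerance and iteration limit are illustrative assumptions.

```python
import numpy as np


def conjugate_gradient(apply_A, b, x0=None, tol=1e-10, max_iter=1000):
    """Solve A x = b for symmetric positive definite A, given only v -> A v."""
    x = np.zeros_like(b, dtype=float) if x0 is None else np.array(x0, dtype=float)
    r = b - apply_A(x)                 # residual
    p = r.copy()                       # search direction
    rs = r @ r
    b_norm = np.linalg.norm(b)
    for _ in range(max_iter):
        if np.sqrt(rs) <= tol * b_norm:
            break
        Ap = apply_A(p)
        step = rs / (p @ Ap)
        x += step * p
        r -= step * Ap
        rs_new = r @ r
        p = r + (rs_new / rs) * p      # A-conjugate update of the direction
        rs = rs_new
    return x


rng = np.random.default_rng(0)
M = rng.standard_normal((20, 20))
A = M @ M.T + 20 * np.eye(20)          # dense SPD stand-in for the posterior precision
b = rng.standard_normal(20)
x = conjugate_gradient(lambda v: A @ v, b)
```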

| Algorithm 1: Variational Bayesian SG pansharpening. |

| Require: Observed multispectral image, , panchromatic image , and parameter. |

| Set and . is obtained by bicubic interpolation of , . |

| while convergence criterion is not met do |

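| 1. Set $k = k + 1$. |

| 2. Obtain $\boldsymbol{\beta}^{(k)}$, $\gamma^{(k)}$ and $\boldsymbol{\alpha}^{(k)}$ from Equations (22), (23) and (25), respectively. |

| 3. Using $\langle\mathbf{y}\rangle^{(k-1)}$ and $\boldsymbol{\Sigma}_{\mathbf{y}}^{(k-1)}$, update the variational parameters $\boldsymbol{\xi}^{(k)}$ from Equation (21). |

| 4. Using $\boldsymbol{\beta}^{(k)}$, $\gamma^{(k)}$, $\boldsymbol{\alpha}^{(k)}$, and $\boldsymbol{\xi}^{(k)}$, update $\boldsymbol{\Sigma}_{\mathbf{y}}^{-1}$ in Equation (17) and solve Equation (18) for $\langle\mathbf{y}\rangle^{(k)}$. |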

| end while |

| Output the high resolution multispectral image $\langle\mathbf{y}\rangle$. |

5. Materials and Methods

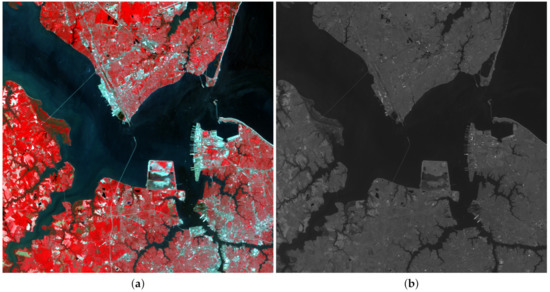

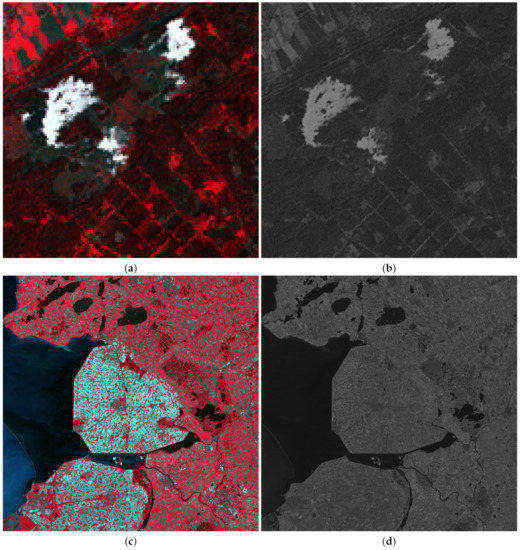

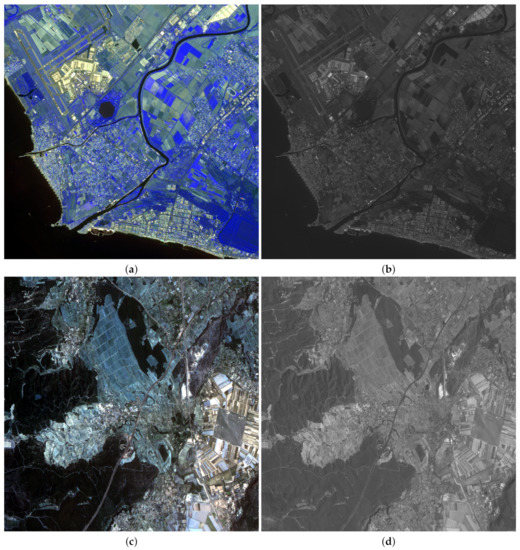

To test the performance of the proposed methodology on different kinds of images, five satellite images were used: three LANDSAT 7-ETM+ [70] images, a SPOT-5 [71] image and a FORMOSAT-2 [72] image. LANDSAT MS images have six bands, and the ratio between the number of pixels of the PAN and MS images is 4. Figure 1 and Figure 2 show RGB color images formed by bands B4, B3 and B2 of the LANDSAT MS images, and their corresponding PAN images. Figure 1 corresponds to an area of Chesapeake Bay (US), while Figure 2 depicts two areas of the Netherlands. SPOT-5 MS images have four bands, and two PAN images, with resolution ratios of 4 and 16, are provided. FORMOSAT-2 MS images also have four bands, and the ratio between the PAN and MS images is 16. Figure 3a,c show the RGB color images formed from bands B3, B2 and B1 of a SPOT-5 image of Roma (IT) and a FORMOSAT-2 MS image of Salon-de-Provence (FR), and Figure 3b,d their corresponding PAN images.

Figure 1.

Observed LANDSAT 7-ETM+ Chesapeake Bay image: (a) multispectral (MS), (b) panchromatic (PAN).

Figure 2.

Observed LANDSAT 7-ETM+ Netherlands images: (a) MS, (b) PAN, (c) MS, (d) PAN.

Figure 3.

Observed SPOT-5 Roma image: (a) MS, (b) PAN. FORMOSAT-2 Salon-de-Provence image: (c) MS, (d) PAN.

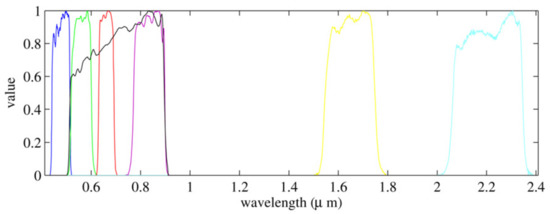

Both the observed $\mathbf{Y}$ and $\mathbf{x}$ images have been normalized to the range [0, 1] before running Algorithm 1. The algorithm stopped when its convergence criterion was met or 50 iterations were reached, whichever occurred first. The relationship between the MS high resolution image and the panchromatic image in Equation (2) is governed by the parameters $\lambda_b$, which need to be set before pansharpening is carried out. If the sensor spectral response characteristics were known, the values of $\lambda_b$ could be estimated from them. For instance, for LANDSAT 7-ETM+, Figure 4 shows the sensor spectral response curves for the MS bands B1-B6, shown in color, and the PAN band, shown in black. For this sensor, the PAN band mainly overlaps the B2-B4 MS bands, and the coefficients $\lambda_b$ could be obtained from this overlap (see [52]). In this paper, however, a more general approach is followed to estimate $\lambda_b$ from the observations. First, we define $\mathbf{x}_{\downarrow}$, a version of the PAN image downsampled to the size of the MS image. Then, since the sensor spectral response is the same at high and low resolution, the parameters can be obtained by solving

Figure 4.

LANDSAT 7-ETM+ band spectral response normalized to one.

Table 2 shows the $\lambda_b$ values associated with the different observed images considered. For the LANDSAT 7-ETM+ images only the first four $\lambda_b$ are positive, and the $\lambda_5$ and $\lambda_6$ values are 0 since we know that bands B5 and B6 are not covered by the panchromatic sensor. For this process, each band is normalized to the interval [0, 1]. Note that, due to the normalization, the estimated $\lambda_b$ values depend not only on the sensor spectral response but also on the characteristics of the observed area. This explains the differences between the values obtained for the images in Figure 2a,c. Although those images are from the same area of the Netherlands, the clouds in Figure 2a modify the estimated values of $\lambda_b$.
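
The exact form of Equation (30) is not reproduced here; the sketch below assumes it is an ordinary least-squares fit of the downsampled PAN image as a weighted sum of the observed MS bands, with bands known not to be covered by the PAN sensor (B5 and B6 for LANDSAT 7-ETM+) forced to zero. The block-average downsampling, the band mask and the function names are assumptions. The synthetic example skips the per-band normalization described above so that the known weights are recovered exactly.

```python
import numpy as np


def block_downsample(img, ratio):
    """Hypothetical PAN downsampling: ratio x ratio block average."""
    h, w = img.shape
    return img.reshape(h // ratio, ratio, w // ratio, ratio).mean(axis=(1, 3))


def estimate_lambda(ms_low, pan, ratio, active=None):
    """Least-squares fit of the downsampled PAN as a weighted sum of the MS bands.

    ms_low: (B, M, N) observed MS image; pan: (M*ratio, N*ratio) PAN image;
    active: boolean mask of bands allowed to contribute (the others are set to 0).
    """
    B = ms_low.shape[0]
    active = np.ones(B, dtype=bool) if active is None else np.asarray(active, dtype=bool)
    pan_low = block_downsample(pan, ratio).ravel()
    A = ms_low[active].reshape(int(active.sum()), -1).T   # (M*N, number of active bands)
    coef, *_ = np.linalg.lstsq(A, pan_low, rcond=None)
    lam = np.zeros(B)
    lam[active] = coef
    return lam


# Synthetic check: a PAN built from known weights on the first four of six bands.
rng = np.random.default_rng(0)
y = rng.random((6, 32, 32))
lam_true = np.array([0.1, 0.3, 0.4, 0.2, 0.0, 0.0])
pan = np.tensordot(lam_true, y, axes=1)
ms_low = np.stack([block_downsample(band, 2) for band in y])
lam_hat = estimate_lambda(ms_low, pan, ratio=2, active=[True, True, True, True, False, False])
```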

Table 2.

Estimated $\lambda_b$ values for the different sensors.

6. Discussion

Within the variational Bayesian methodology, two methods are proposed in this paper: one using the log penalty function (see Table 1), hence named the log method, and another using the $\ell_p$ penalty function with $p = 1$, referred to as the ℓ1 method. The proposed methods have been compared with the following classic and state-of-the-art pansharpening methods: the Principal Component Analysis (PCA) [6], the Intensity–Hue–Saturation (IHS) transform [19], the Brovey transform (Brovey) [7], the Band-Dependent Spatial-Detail (BDSD) method in [22], the Gram-Schmidt (GS) method in [20], the Gram-Schmidt adaptive (GSA) method in [21], the Partial Replacement Adaptive Component Substitution (PRACS) method in [23], the High Pass Filtering (HPF) algorithm in [18], the Smoothing Filter Based Intensity Modulation (SFIM) method [35,36], the Indusion method in [37], the Additive A Trous Wavelet Transform (ATWT) in [26], the Additive Wavelet Luminance Proportional (AWLP) method in [28], the ATWT Model 2 (ATWT-M2) and ATWT Model 3 (ATWT-M3) methods in [10], the Generalized Laplacian Pyramid (GLP)-based methods in [29], namely the modulation transfer function (MTF)-GLP, GLP with High Pass Modulation (MTF-GLP-HPM), and GLP with Context Based Decision (MTF-GLP-CBD) methods, and the pansharpening method using a Total Variation (TV) image model in [53]. We have used the implementation of the methods and measures provided by the Pansharpening Toolbox (https://rscl-grss.org/coderecord.php?id=541) [13]. For the methods not included in the toolbox, we have used the code provided by the authors. The code of the proposed methods will be publicly available at https://github.com/vipgugr. As a reference, we have also included the results of bilinearly interpolating the MS image to the size of the PAN image, marked as EXP. Both quantitative and qualitative comparisons of the different methods have been performed.

6.1. Quantitative Comparison

A common problem in pansharpening is the nonexistence of an MS high resolution ground-truth image to compare with. Hence, we performed two kinds of quantitative comparisons. First, the images obtained using the different methods have been compared following Wald’s protocol [73] as follows: the observed MS image, $\mathbf{Y}$, and the PAN image, $\mathbf{x}$, are downsampled by applying the operator $\mathbf{D}\mathbf{H}$ to generate low resolution versions of them. Then, pansharpening is applied to those low resolution images, and the obtained estimate of the MS image, $\hat{\mathbf{Y}}$, is quantitatively compared with the observed MS image, $\mathbf{Y}$. Second, the different methods have been compared using Quality with No Reference (QNR) measures [13,74]. As previously stated, for the LANDSAT image in Figure 1, the resolution ratio between the MS and PAN images is 4. Since the SPOT-5 satellite provides two PAN images, two experiments were carried out on the image in Figure 3, one with a decimation ratio of 4 and another with a ratio of 16. For the FORMOSAT-2 image the ratio is 16. However, for the sake of completeness, two experiments were also carried out, one assuming a decimation ratio of 4 and another with a ratio of 16.
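
A minimal sketch of the reduced-resolution (Wald) protocol described above follows. The block-average degradation stands in for $\mathbf{DH}$, `ratio` is the per-axis factor (2 for a pixel-count ratio of 4), and the nearest-neighbour "pansharpening" is only a placeholder for any method under test; all of these are assumptions made for illustration.

```python
import numpy as np


def block_degrade(img, ratio):
    """Hypothetical stand-in for DH: ratio x ratio block average with decimation."""
    h, w = img.shape
    return img.reshape(h // ratio, ratio, w // ratio, ratio).mean(axis=(1, 3))


def walds_protocol(ms, pan, ratio, pansharpen, degrade=block_degrade):
    """Degrade MS and PAN, pansharpen the degraded pair, compare with the original MS.

    ms: (B, M, N) observed MS image; pan: (M*ratio, N*ratio) PAN image.
    Returns the estimate and the per-band RMSE against ms.
    """
    ms_low = np.stack([degrade(band, ratio) for band in ms])
    pan_low = degrade(pan, ratio)            # now has the size of the observed MS image
    estimate = pansharpen(ms_low, pan_low)   # must return an array shaped like ms
    rmse = np.sqrt(np.mean((estimate - ms) ** 2, axis=(1, 2)))
    return estimate, rmse


def nearest_upsampling(ms_low, pan_low):
    """Trivial placeholder that ignores the PAN image (illustration only)."""
    r = pan_low.shape[0] // ms_low.shape[1]
    return np.stack([np.kron(band, np.ones((r, r))) for band in ms_low])


rng = np.random.default_rng(0)
base = rng.random((3, 4, 4))
ms = np.stack([np.kron(b, np.ones((2, 2))) for b in base])   # constant on 2 x 2 blocks
pan = rng.random((16, 16))
estimate, rmse = walds_protocol(ms, pan, 2, nearest_upsampling)
```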

Both spatial and spectral quality metrics have been used to compare the results obtained using the different methods. The metrics used are detailed below:

Spatial measures:

- Q: Universal Quality Index (UQI) [75] averaged over all MS bands. Range: [-1, 1]. The higher the better.
- Q4, Q8: instances of the Q$2^n$ index [76] with $n = 2$ and $n = 3$, suitable for measuring the quality of multiband images with an arbitrary number of spectral bands. Q4 is used for the SPOT-5 and FORMOSAT-2 images, which have four bands, and Q8 for the LANDSAT image with six bands. Range: [0, 1]. The higher the better.
- Spatial Correlation Coefficient (SCC) [77]: measures the correlation coefficient between the compared images after the application of a Sobel filter. Range: [0, 1]. The higher the better.
- QNR spatial distortion ($D_s$) [78]: measures the spatial distortion between the MS bands and the PAN image. Range: [0, 1]. The lower the better.

Spectral measures:

- Spectral Angle Mapper (SAM) [79]: measures spectral fidelity as the mean angle between corresponding pixels of the compared images in the space defined by considering each spectral band as a coordinate axis. Range: [0, 180] degrees. The lower the better.
- Erreur Relative Globale Adimensionnelle de Synthèse (ERGAS) [80]: measures the spectral consistency between the compared images. Range: [0, ∞). The lower the ERGAS value, the better the consistency, especially for values lower than the number of image bands B.
- QNR spectral distortion ($D_\lambda$) [78]: derived from the differences between the inter-band Q index values computed for the high resolution and low resolution images. Range: [0, 1]. The lower the better.

Spatial and spectral measures:

- Jointly Spectral and Spatial Quality Index (QNR) [78]: obtained as the product of $(1 - D_\lambda)$ and $(1 - D_s)$. Range: [0, 1]. The higher the better.
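
For reference, the following sketch implements simplified versions of some of the measures listed above: SAM, ERGAS, a global (non-windowed) UQI, and the QNR combination. They follow the textbook definitions; the Pansharpening Toolbox [13] used in the experiments computes Q-type indices on sliding blocks and may differ in other conventions, so these functions are not expected to reproduce the reported figures.

```python
import numpy as np


def sam_degrees(ref, est, eps=1e-12):
    """Mean spectral angle in degrees; ref, est: (B, H, W)."""
    dot = np.sum(ref * est, axis=0)
    norms = np.linalg.norm(ref, axis=0) * np.linalg.norm(est, axis=0)
    cos = np.clip(dot / (norms + eps), -1.0, 1.0)
    return float(np.degrees(np.arccos(cos)).mean())


def ergas(ref, est, pixel_ratio):
    """pixel_ratio: PAN pixel size / MS pixel size (e.g. 15/30 = 0.5 for LANDSAT 7-ETM+)."""
    rmse = np.sqrt(np.mean((ref - est) ** 2, axis=(1, 2)))
    means = ref.mean(axis=(1, 2))
    return float(100.0 * pixel_ratio * np.sqrt(np.mean((rmse / means) ** 2)))


def uqi(a, b):
    """Universal Quality Index computed globally (no sliding window)."""
    a, b = a.ravel(), b.ravel()
    ma, mb = a.mean(), b.mean()
    cov = np.mean((a - ma) * (b - mb))
    return float(4.0 * cov * ma * mb / ((a.var() + b.var()) * (ma ** 2 + mb ** 2)))


def qnr(d_lambda, d_s):
    """Joint index: product of (1 - D_lambda) and (1 - D_s)."""
    return (1.0 - d_lambda) * (1.0 - d_s)


rng = np.random.default_rng(0)
ref = rng.random((4, 32, 32)) + 0.5
est = ref + 0.01 * rng.standard_normal(ref.shape)
print(sam_degrees(ref, est), ergas(ref, est, 0.5), uqi(ref[0], est[0]), qnr(0.05, 0.03))
```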

Table 3 shows the figures of merit obtained using Wald’s protocol for the LANDSAT image in Figure 1. As the table shows, ℓ1 outperforms all the other methods both in spectral fidelity and in the incorporation of spatial details. Note the high SCC value (meaning that the details in the PAN image have been successfully incorporated into the pansharpened image), obtained together with the lowest spectral distortion, as evidenced by the SAM and ERGAS values. The TV method obtains the second best results except for the SAM metric; for this metric, the proposed log method has the second best value. The log method also obtains the third best values for the ERGAS and SCC measures. The GLP-based and PRACS methods also obtain high values for the Q and Q8 indices and low values for SAM; however, their ERGAS and SCC performance is worse. Table 4 shows the QNR quantitative results for the LANDSAT image in Figure 1. In this table, the proposed methods achieve competitive results. Log obtains the best value, and log and ℓ1 obtain the second and third best QNR scores, respectively. Note that EXP obtained the highest QNR score, since the bilinear interpolation of the observed MS low resolution image is used as the MS high resolution estimate to calculate $D_\lambda$ and $D_s$.

Table 3.

Quantitative results on the LANDSAT 7-ETM+ Chesapeake Bay image. Bold values indicate the best score.

Table 4.

QNR Quantitative results on the LANDSAT 7-ETM+ Chesapeake Bay image. Bold values indicate the best score.

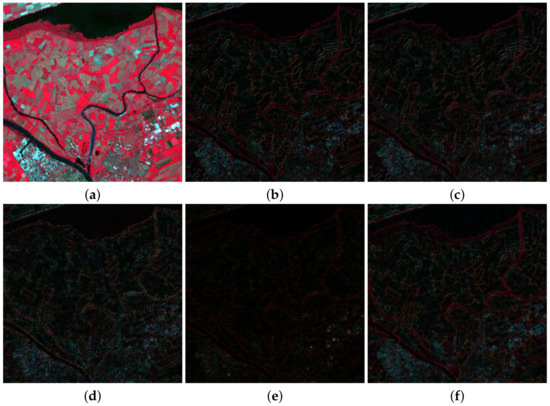

Table 5 and Table 6 show the quantitative results using Wald’s protocol for the LANDSAT images in Figure 2a,c, respectively. PRACS outperforms all other methods on the image in Figure 2a (see Table 5), while the proposed ℓ1 and log methods obtain the best and second best scores on the image in Figure 2c (see Table 6). Table 7 and Table 8 show the QNR figures of merit obtained for those two images. The proposed methods produce good $D_\lambda$, $D_s$ and QNR values for both images, with QNR values above 0.9 in both cases, which supports their good performance. Again, the EXP results are the best in all the measures in Table 8, and EXP provides the best , for the image associated with Table 7. The ℓ1 method obtains the best for this image and BDSD the highest QNR.

Table 5.

Quantitative results using Wald’s protocol on the LANDSAT 7-ETM+ Netherlands image in Figure 2a. Bold values indicate the best score.

Table 6.

Quantitative results using Wald’s protocol on the LANDSAT 7-ETM+ Netherlands image in Figure 2c. Bold values indicate the best score.

Table 7.

QNR quantitative results on the LANDSAT 7-ETM+ Netherlands image in Figure 2a. Bold values indicate the best score.

Table 8.

QNR quantitative results on the LANDSAT 7-ETM+ Netherlands image in Figure 2c. Bold values indicate the best score.

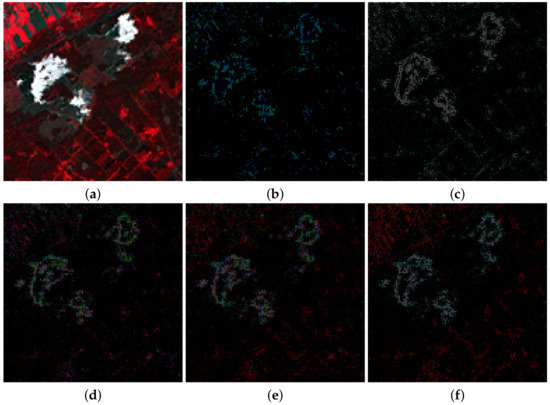

Figure 5 and Figure 6 show a zoomed-in region of the RGB color images formed by bands B4, B3, and B2 of the MS ground-truth images used to apply Wald’s protocol, together with the absolute error images for the methods in Table 7 and Table 8. In those images, the darker the intensity, the lower the absolute error. Figure 5 and Figure 6 are consistent with the quantitative comparison shown in Table 5 and Table 6, respectively. The best results for the image in Figure 2a were obtained using PRACS, while for the image in Figure 2c the best performing method is ℓ1. Note that the brighter areas in Figure 5e,f correspond to the borders of cloudy areas in Figure 2a. We argue that, since clouds alter the weights $\lambda_b$ estimated using Equation (30), the boundaries between clouds and land areas in Figure 2a are not well resolved. This explains the worse behavior of the proposed methods in the cloudy areas of this image.

Figure 5.

(a) Ground truth image from Figure 2a. The normalized maximum absolute error minus the absolute error images for the following methods: (b) Partial Replacement Adaptive Component Substitution (PRACS), (c) modulation transfer functions (MTF)-generalized Laplacian pyramid (GLP)-context based decision (CBD), (d) Total Variation (TV), (e) ℓ1 and (f) log.

Figure 6.

(a) Ground truth image from Figure 2c. The normalized maximum absolute error images for the following methods: (b) PRACS, (c) MTF-GLP-CBD, (d) TV, (e) ℓ1 and (f) log.

Table 9 and Table 10 show, respectively, the quantitative results using Wald’s protocol for the SPOT-5 and FORMOSAT-2 images in Figure 3 for decimation ratios of 4 and 16. With a ratio of 4, the proposed log method obtains the best figures of merit for the SPOT image in Figure 3a, except for the Q and Q4 metrics; the Q values obtained by log and ℓ1 are slightly lower than those obtained by BDSD. Note that BDSD achieves the third-best overall figures, just below the proposed log and ℓ1 algorithms. With a ratio of 16, the proposed log algorithm provides the best results except for the Q, Q4 and SAM values, for which it still obtains competitive values: a slightly lower Q value than PRACS and a slightly higher SAM value than Brovey. In general, PRACS is the second-best performing method for this image with a ratio of 16. For the FORMOSAT-2 image in Figure 3c, the proposed ℓ1 and log algorithms obtain the best numerical results for a magnification factor of 4. Both methods provide similar results, better than those of all the competing methods. For a ratio of 16, there is no clear winner: the proposed methods are competitive on this image, although they do not stand out in any of the measures. Table 11 and Table 12 show, respectively, the QNR quantitative results for the SPOT-5 and FORMOSAT-2 images in Figure 3 for decimation ratios of 4 and 16. In Table 11, EXP achieves the best D_λ, D_S and QNR scores. The proposed methods also obtain good scores in this table; the log method obtains the second-best D_λ and D_S values and very high QNR values for both decimation ratios. Results for the FORMOSAT image, shown in Table 12, are very similar, although in this case BDSD obtains the best and QNR values for and ATWT-M3 for .
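The QNR figures in Tables 7, 8, 11 and 12 follow the no-reference protocol of Alparone et al.: D_λ compares the universal quality index (UQI) between every pair of bands before and after fusion, D_S compares the UQI between each band and the PAN image at the two scales, and QNR = (1 − D_λ)^α (1 − D_S)^β. The sketch below assumes the common defaults α = β = 1 and p = q = 1, computes the UQI over the whole image instead of averaging it over sliding windows as the original protocol does, and uses a toy PAN (the band average) with block-average degradation; all function names are hypothetical. A pixel-replicated upsampling of the MS image stands in for an interpolation-only baseline such as EXP.

```python
import numpy as np

def degrade(img, ratio):
    """Block-average the last two axes by `ratio` (assumed stand-in for MTF filtering + decimation)."""
    h, w = img.shape[-2] // ratio, img.shape[-1] // ratio
    crop = img[..., :h * ratio, :w * ratio]
    return crop.reshape(*img.shape[:-2], h, ratio, w, ratio).mean(axis=(-3, -1))

def uqi(x, y):
    """Universal image quality index, computed globally instead of in sliding windows."""
    mx, my = x.mean(), y.mean()
    cov = np.mean((x - mx) * (y - my))
    return 4.0 * cov * mx * my / ((x.var() + y.var()) * (mx ** 2 + my ** 2))

def qnr(fused, ms_low, pan, pan_low, alpha=1.0, beta=1.0, p=1, q=1):
    """Return (D_lambda, D_S, QNR) for a fused image of shape (L, H, W)."""
    L = fused.shape[0]
    spectral = [abs(uqi(fused[l], fused[r]) - uqi(ms_low[l], ms_low[r])) ** p
                for l in range(L) for r in range(L) if r != l]
    d_lambda = (sum(spectral) / (L * (L - 1))) ** (1.0 / p)
    spatial = [abs(uqi(fused[l], pan) - uqi(ms_low[l], pan_low)) ** q for l in range(L)]
    d_s = (sum(spatial) / L) ** (1.0 / q)
    return d_lambda, d_s, (1.0 - d_lambda) ** alpha * (1.0 - d_s) ** beta

rng = np.random.default_rng(0)
base = rng.uniform(0.2, 1.0, size=(64, 64))              # shared spatial structure
gains = np.array([0.8, 1.0, 1.2, 0.9])[:, None, None]    # per-band brightness
ms_high = gains * base + rng.normal(0.0, 0.01, size=(4, 64, 64))
pan = ms_high.mean(axis=0)                               # toy PAN: band average
ms_low, pan_low = degrade(ms_high, 4), degrade(pan, 4)

exp_img = np.repeat(np.repeat(ms_low, 4, axis=1), 4, axis=2)  # interpolation-only baseline
ideal = qnr(ms_high, ms_low, pan, pan_low)
baseline = qnr(exp_img, ms_low, pan, pan_low)
print("ideal:", ideal)
print("EXP-like:", baseline)
```

Because pixel replication preserves each band’s mean, variance and inter-band covariance, the baseline gets D_λ ≈ 0, while its missing PAN detail shows up as a large D_S. This is one way a method that injects no spatial information can still score well on the spectral index.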

Table 9.

Quantitative results using Wald’s protocol on the SPOT-5 Roma image. Bold values indicate the best score.

Table 10.

Quantitative results using Wald’s protocol on the FORMOSAT-2 Salon-de-Provence image. Bold values indicate the best score.

Table 11.

QNR quantitative results on the SPOT-5 Roma image. Bold values indicate the best score.

Table 12.

QNR quantitative results on the FORMOSAT-2 Salon-de-Provence image. Bold values indicate the best score.

Table 13 shows the CPU time, in seconds, required on a 2.40 GHz Intel® Xeon® CPU to pansharpen an MS image with 4 bands to a 1024 × 1024 size, for ratios of 4 and 16, using the different methods under comparison. Equation (18) has been solved using the Conjugate Gradient method, which required fewer than 30 iterations to converge for the ℓ1 prior and at least 1000 iterations for the log prior. This explains the difference between their CPU times. Note that the proposed methods automatically estimate the model parameters, which increases the running time but makes them parameter-free.
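Equation (18) is not reproduced in this section; the sketch below assumes only that it is a large symmetric positive-definite linear system, which is the setting the Conjugate Gradient method requires. It solves a hypothetical system of the same flavor, A = I + λ Dᵀ W D on a 1D signal (D takes forward differences, W holds positive per-sample weights of the kind produced by quadratic bounds on SG priors), using only matrix-vector products. It illustrates that weights spanning a wider range make the system worse conditioned and raise the iteration count; whether this is exactly what separates the ℓ1 and log timings in Table 13 is our assumption, not a result from the experiments.

```python
import numpy as np

def conjugate_gradient(apply_A, b, tol=1e-8, max_iter=5000):
    """Solve A x = b for a symmetric positive-definite A, given only the
    matrix-vector product x -> A x. Returns (solution, iterations used)."""
    x = np.zeros_like(b)
    b_norm = np.linalg.norm(b)
    if b_norm == 0.0:
        return x, 0
    r = b - apply_A(x)
    p = r.copy()
    rs_old = r @ r
    for k in range(1, max_iter + 1):
        Ap = apply_A(p)
        alpha = rs_old / (p @ Ap)     # step length along the search direction
        x += alpha * p
        r -= alpha * Ap
        rs_new = r @ r
        if np.sqrt(rs_new) <= tol * b_norm:
            return x, k
        p = r + (rs_new / rs_old) * p  # next A-conjugate direction
        rs_old = rs_new
    return x, max_iter

def make_operator(weights, lam):
    """Hypothetical A = I + lam * D^T W D, with D forward differences and W = diag(weights) > 0."""
    def apply_A(x):
        v = weights * np.diff(x)       # W D x, length n - 1
        dtv = np.zeros_like(x)         # D^T v
        dtv[1:] += v
        dtv[:-1] -= v
        return x + lam * dtv
    return apply_A

rng = np.random.default_rng(4)
n, lam = 200, 1.0
b = rng.normal(size=n)
w_uniform = np.ones(n - 1)                          # equal weights: well-conditioned system
w_spread = 10.0 ** rng.uniform(0.0, 4.0, n - 1)     # weights spanning four decades
res_uniform = conjugate_gradient(make_operator(w_uniform, lam), b)
res_spread = conjugate_gradient(make_operator(w_spread, lam), b)
print("iterations:", res_uniform[1], "vs", res_spread[1])
```

Only the operator is ever applied, so the same loop scales to image-sized systems where A is never stored explicitly.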

Table 13.

Elapsed CPU time in seconds for the different pansharpening methods on a 1024 × 1024 image and with different ratios.

6.2. Qualitative Comparison

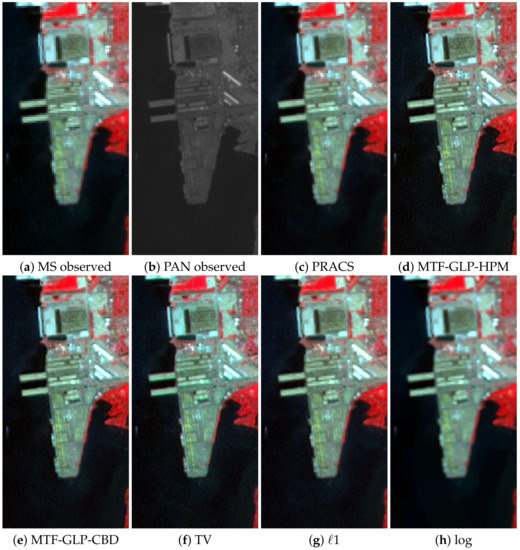

Figure 7 shows a small region of interest of the observed LANDSAT-7 images in Figure 1 and the pansharpening results with obtained by the proposed methods and by the competing methods with the best quantitative performance, that is, PRACS, MTF-GLP-HPM, MTF-GLP-CBD and TV. All color images in this figure are RGB images formed from Landsat bands B4, B3 and B2. Since we are using full resolution images, there is no ground truth to compare with, so a visual analysis of the resulting images is performed. The improved resolution of all the pansharpening results in Figure 7c–h with respect to the observed MS image in Figure 7a is evident. The PRACS, MTF-GLP-HPM and MTF-GLP-CBD images in Figure 7c–e have a lower level of detail than the TV and proposed ℓ1 results (Figure 7f,g, respectively); see, for instance, the staircase effects on some diagonal edges, which are not present in the TV and proposed ℓ1 results. The PRACS, MTF-GLP-HPM and MTF-GLP-CBD methods produce images of similar spectral quality, lower than that of the proposed method, which is consistent with the numerical results in Table 3 and the discussion presented in Section 6.1. The image obtained using the ℓ1 method, Figure 7g, has colors closer to those of the observed MS image than the TV image, Figure 7f, which is also somewhat noisier. The log method is very good at removing noise (see the sea area), but it tends to remove other fine details too.
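The color figures map three MS bands to the red, green and blue display channels (B4, B3 and B2 for LANDSAT-7; B3, B2 and B1 for SPOT-5). The display stretch used for the figures is not stated, so the sketch below assumes a per-channel linear stretch between the 2nd and 98th percentiles, a common choice for visualization. It also assumes, hypothetically, that the bands are stored in order B1, B2, B3, B4 along the first axis, so that B4, B3, B2 are indices 3, 2, 1.

```python
import numpy as np

def rgb_composite(ms, bands=(3, 2, 1), low_pct=2.0, high_pct=98.0):
    """Stack three bands of a (bands, rows, cols) image into a (rows, cols, 3)
    array in [0, 1], linearly stretching each channel between two percentiles."""
    channels = []
    for b in bands:
        band = ms[b].astype(float)
        lo, hi = np.percentile(band, [low_pct, high_pct])
        scale = hi - lo if hi > lo else 1.0   # guard against a constant band
        channels.append(np.clip((band - lo) / scale, 0.0, 1.0))
    return np.stack(channels, axis=-1)

rng = np.random.default_rng(2)
ms = rng.integers(0, 256, size=(4, 32, 32))   # 4-band image, assumed order B1..B4
rgb = rgb_composite(ms)                        # B4, B3, B2 -> R, G, B
print(rgb.shape, float(rgb.min()), float(rgb.max()))
```

Under the same ordering assumption, the SPOT-5 composite would use `bands=(2, 1, 0)`. Applying one fixed stretch to every method’s result keeps color differences between panels attributable to the methods rather than to the display.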

Figure 7.

A region of interest of the LANDSAT 7-ETM+ Chesapeake Bay image in Figure 1a. Observed images: (a) MS, (b) PAN. Pansharpened images by: (c) PRACS, (d) MTF-GLP-High Pass Modulation (HPM), (e) MTF-GLP-CBD, (f) TV, (g) ℓ1 and (h) log methods.

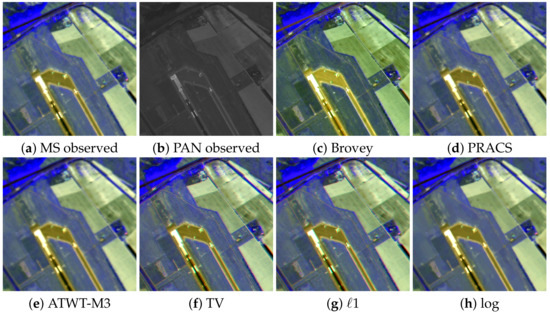

Figure 8 shows a region of interest of the observed SPOT-5 images in Figure 3a,b and the pansharpening results with obtained using the competing methods with the best performance on this image, that is, Brovey, PRACS, ATWT-M3 and TV, and the proposed ℓ1 and log methods. All color images in this figure are RGB images formed from bands B3, B2 and B1 of the SPOT-5 image. The Brovey method (Figure 8c) produces the highest spectral distortion; however, it also recovers more spatial details in the image (see the airport runway and plane). ATWT-M3 (Figure 8e), on the other hand, produces a blurry image. PRACS produces a sharper image (Figure 8d), but the details in the PAN image do not seem to be well integrated. The TV method (Figure 8f) and the proposed ℓ1 and log methods (Figure 8g,h) obtain the most consistent results, with high spatial detail and low spectral distortion. However, TV introduces staircase artifacts on diagonal lines that are not noticeable in the ℓ1 and log images. As with the LANDSAT-7 image, the log image in Figure 8h lacks some small details, which the method removes along with the noise.

Figure 8.

A region of interest of the SPOT-5 Roma image in Figure 3a. Observed images: (a) MS, (b) PAN. Pansharpened images by: (c) Brovey, (d) PRACS, (e) Additive A Trous Wavelet Transform (ATWT)-M3, (f) TV, (g) ℓ1 and (h) log methods.

7. Conclusions

A variational Bayesian methodology for the pansharpening problem has been proposed. In this methodology, we model the relation between the MS high resolution image and the PAN image as a linear combination of the MS bands whose weights are estimated from the available data. The observed MS image is modelled as a downsampled version of the original MS image. The expected characteristics of the pansharpened image are incorporated in the form of SG sparse image priors. Two penalty functions corresponding to SG distributions are used: ℓ1 and log. All the unknowns and model parameters have been automatically estimated within the variational Bayesian modelling and inference, and an efficient algorithm has been obtained.
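The observation model above treats the PAN image as a linear combination of the high resolution MS bands, with weights estimated from the data. Equation (30), which gives the paper’s estimate, is not reproduced in this section, so the sketch below uses ordinary least squares between the degraded PAN and the observed MS bands as an assumed stand-in; block averaging stands in for the sensor degradation, and all names are hypothetical. The second fit adds a saturated bright patch to the PAN that the linear model cannot explain (a crude proxy for cloud pixels) and shows how such a region biases the estimated weights, in the spirit of the cloud discussion of Figure 5.

```python
import numpy as np

def degrade(img, ratio):
    """Block-average the last two axes by `ratio` (assumed stand-in for sensor degradation)."""
    h, w = img.shape[-2] // ratio, img.shape[-1] // ratio
    crop = img[..., :h * ratio, :w * ratio]
    return crop.reshape(*img.shape[:-2], h, ratio, w, ratio).mean(axis=(-3, -1))

def estimate_pan_weights(ms_low, pan_low):
    """Least-squares weights w minimizing ||pan_low - sum_b w_b * ms_low[b]||^2."""
    A = ms_low.reshape(ms_low.shape[0], -1).T    # (pixels, bands) design matrix
    w, *_ = np.linalg.lstsq(A, pan_low.ravel(), rcond=None)
    return w

rng = np.random.default_rng(3)
ms_high = rng.uniform(0.0, 1.0, size=(4, 64, 64))
true_w = np.array([0.1, 0.3, 0.4, 0.2])            # hypothetical spectral weights
pan = np.tensordot(true_w, ms_high, axes=1)        # PAN = sum_b w_b * x_b
ratio = 4
ms_low = degrade(ms_high, ratio)
w_clean = estimate_pan_weights(ms_low, degrade(pan, ratio))

# Crude cloud proxy: a saturated patch in the PAN that the band combination does not explain
pan_cloudy = pan.copy()
pan_cloudy[:16, :16] = 1.0
w_cloudy = estimate_pan_weights(ms_low, degrade(pan_cloudy, ratio))
print(np.round(w_clean, 3), np.round(w_cloudy, 3))
```

When the PAN follows the linear model exactly, the fit recovers the weights; a region where it does not, even one sixteenth of the image here, shifts every weight and therefore the PAN detail injected everywhere.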

The proposed ℓ1 and log methods have been compared to classic and state-of-the-art methods, obtaining very good results both quantitatively and qualitatively. In general, they have obtained the best quantitative results for LANDSAT-7 ETM+, SPOT-5 and FORMOSAT-2 images with a resolution ratio of 4 and for SPOT-5 with a resolution ratio of 16. Competitive results were also obtained for the FORMOSAT-2 image with a resolution ratio of 16. They stand out in terms of spectral consistency while improving the spatial resolution of the pansharpened images. We argue that the superior spectral consistency of SG methods arises from the modelling of the PAN image, which selectively incorporates detailed PAN information into the different MS high resolution bands without changing their spectral properties. Qualitatively, SG methods produce results consistent with the observed PAN and MS images and with the numerical results previously described. The log method is better at removing noise in the images, at the cost of removing some fine details.
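The trade-off noted for the log method can be illustrated with the quadratic upper bounds used in variational SG inference [1]: each penalty ρ(s) on a filter response s is replaced, at the current estimate ξ, by ρ(ξ) + ½ w(ξ)(s² − ξ²) with weight w(ξ) = ρ′(|ξ|)/|ξ|. Assuming ρ(s) = |s| for ℓ1 and ρ(s) = log(ε + |s|) for log (the exact forms and the value of ε used in this paper may differ; `EPS` below is a hypothetical choice), the weights are 1/|ξ| and 1/(|ξ|(ε + |ξ|)), so their ratio is 1/(ε + |ξ|). Small responses, whether noise or faint detail, are therefore penalized far more strongly by log, while large edges are penalized less.

```python
import numpy as np

EPS = 1e-2  # hypothetical value of the log-penalty constant

def rho_l1(s):
    return np.abs(s)

def rho_log(s, eps=EPS):
    return np.log(eps + np.abs(s))

def weight_l1(xi, floor=1e-8):
    """Quadratic-bound weight rho'(|xi|)/|xi| for rho(s) = |s|."""
    a = np.maximum(np.abs(xi), floor)
    return 1.0 / a

def weight_log(xi, eps=EPS, floor=1e-8):
    """Quadratic-bound weight rho'(|xi|)/|xi| for rho(s) = log(eps + |s|)."""
    a = np.maximum(np.abs(xi), floor)
    return 1.0 / (a * (eps + a))

def quadratic_bound(s, xi, rho, weight):
    """Upper bound rho(xi) + 0.5 * w(xi) * (s^2 - xi^2), tight at |s| = |xi|."""
    return rho(xi) + 0.5 * weight(xi) * (s ** 2 - xi ** 2)

responses = np.array([0.01, 0.1, 1.0, 10.0])   # magnitudes |xi| of filter responses
ratio = weight_log(responses) / weight_l1(responses)
print(np.round(ratio, 3))  # equals 1 / (EPS + |xi|): large for small responses
```

The bound is valid because both penalties are concave functions of s², so a tangent line in s² lies above them; minimizing the bounded objective is then a weighted least-squares problem whose weights are refreshed at each iteration.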

Author Contributions

Conceptualization, M.V., R.M.; methodology, M.V.; software, F.P.-B., M.V.; validation, F.P.-B.; formal analysis, J.M., M.V.; investigation, F.P.-B., M.V.; resources, J.M., M.V.; data curation, F.P.-B.; writing—original draft preparation, F.P.-B., M.V.; writing—review and editing, A.K.K., J.M. and R.M.; visualization, F.P.-B., J.M. and M.V.; supervision, A.K.K., R.M.; project administration, J.M., R.M.; funding acquisition, J.M., R.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Spanish Ministerio de Economía y Competitividad under contract DPI2016-77869-C2-2-R, by the Ministerio de Ciencia e Innovación under contract PID2019-105142RB-C22, and the Visiting Scholar Program at the University of Granada.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| MS | multispectral |
| PAN | panchromatic |
| PCA | principal components analysis |
| IHS | intensity-hue-saturation |
| CS | component substitution |
| MRA | multi-resolution analysis |
| VO | variational optimization |
| DL | deep learning |
| GS | Gram-Schmidt |
| HPF | high-pass filtering |
| WT | wavelet transform |
| GLP | generalized Laplacian pyramid |
| NSCT | non-subsampled contourlet transform |
| AWT | “à trous” wavelet transforms |
| SFIM | smoothing filter-based intensity modulation |
| DNN | deep neural networks |
| MSDA | modified sparse denoising autoencoder |
| CSDA | coupled sparse denoising autoencoder |
| PCDRN | progressive cascade deep residual network |
| GAN | generative adversarial network |
| SG | super-Gaussian |
| Brovey | Brovey transform |
| BDSD | band-dependent spatial-detail |
| GSA | Gram-Schmidt adaptive |
| PRACS | partial replacement adaptive component substitution |
| ATWT | additive “à trous” wavelet transform |
| ATLP | additive wavelet luminance proportional |
| MTF | modulation transfer functions |
| HPM | high pass modulation |
| CBD | context based decision |
| TV | total variation |
| UQI | universal quality index |
| SCC | spatial correlation coefficient |
| SAM | spectral angle mapper |
| ERGAS | erreur relative globale adimensionnelle de synthèse |
| QNR | quality with no reference |

References

- Babacan, S.D.; Molina, R.; Do, M.N.; Katsaggelos, A.K. Bayesian blind deconvolution with general sparse image priors. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012.

- Rubio, J.; Vega, M.; Molina, R.; Katsaggelos, A.K. A general sparse image prior combination in Compressed Sensing. In Proceedings of the 21st European Signal Processing Conference (EUSIPCO 2013), Marrakech, Morocco, 9–13 September 2013; pp. 1–5.

- Zhou, X.; Vega, M.; Zhou, F.; Molina, R.; Katsaggelos, A.K. Fast bayesian blind deconvolution with Huber super Gaussian priors. Digit. Signal Process. 2017, 60, 122–133.

- Pérez-Bueno, F.; Vega, M.; Naranjo, V.; Molina, R.; Katsaggelos, A. Fully automatic blind color deconvolution of histological images using super Gaussians. In Proceedings of the 28th European Signal Processing Conference, EUSIPCO 2020, Amsterdam, The Netherlands, 18–22 January 2021.

- Ehlers, M. Multisensor image fusion techniques in remote sensing. ISPRS J. Photogramm. Remote Sens. 1991, 46, 19–30.

- Chavez, P.S.; Kwarteng, A.Y. Extracting spectral contrast in Landsat thematic mapper image data using selective principal component analysis. Photogramm. Eng. Remote Sens. 1989, 55, 339–348.

- Gillespie, A.R.; Kahle, A.B.; Walker, R.E. Color enhancement of highly correlated images. II. Channel ratio and “chromaticity” transformation techniques. Remote Sens. Environ. 1987, 22, 343–365.

- Carper, W.J.; Lillesand, T.M.; Kiefer, R.W. The use of intensity-hue-saturation transformations for merging SPOT panchromatic and multispectral image data. Photogramm. Eng. Remote Sens. 1990, 56, 459–467.

- Pohl, C.; Genderen, J.L.V. Multi-sensor image fusion in remote sensing: Concepts, methods, and applications. Int. J. Remote Sens. 1998, 19, 823–854.

- Ranchin, T.; Wald, L. Fusion of high spatial and spectral resolution images: The ARSIS concept and its implementation. Photogramm. Eng. Remote Sens. 2000, 66, 49–61.

- Schowengerdt, R.A. Remote Sensing: Models and Methods for Image Processing; Elsevier: Amsterdam, The Netherlands, 2006.

- Amro, I.; Mateos, J.; Vega, M.; Molina, R.; Katsaggelos, A. A survey of classical methods and new trends in pansharpening of multispectral images. EURASIP J. Adv. Signal Process. 2011, 2011, 79.

- Vivone, G.; Alparone, L.; Chanussot, J.; Dalla Mura, M.; Garzelli, A.; Licciardi, G.A.; Restaino, R.; Wald, L. A critical comparison among pansharpening algorithms. IEEE Trans. Geosci. Remote Sens. 2015, 53, 2565–2586.

- Alparone, L.; Baronti, S.; Aiazzi, B.; Garzelli, A. Spatial Methods for Multispectral Pansharpening: Multiresolution Analysis Demystified. IEEE Trans. Geosci. Remote Sens. 2016, 54, 2563–2576.

- Duran, J.; Buades, A.; Coll, B.; Sbert, C.; Blanchet, G. A survey of pansharpening methods with a new band-decoupled variational model. ISPRS J. Photogramm. Remote Sens. 2017, 125, 78–105.

- Kahraman, S.; Erturk, A. Review and performance comparison of pansharpening algorithms for RASAT images. Istanb. Univ. J. Electr. Electron. Eng. 2018, 18, 109–120.

- Meng, X.; Shen, H.; Li, H.; Zhang, L.; Fu, R. Review of the pansharpening methods for remote sensing images based on the idea of meta-analysis: Practical discussion and challenges. Inf. Fusion 2019, 46, 102–113.

- Chavez, P.S.; Sides, S.; Anderson, J. Comparison of three different methods to merge multiresolution and multispectral data: Landsat TM and SPOT panchromatic. Photogramm. Eng. Remote Sens. 1991, 57, 295–303.

- Tu, T.M.; Su, S.C.; Shyu, H.C.; Huang, P.S. A new look at IHS-like image fusion methods. Inf. Fusion 2001, 2, 177–186.

- Laben, C.A.; Brower, B.V. Process For Enhancing the Spatial Resolution of Multispectral Imagery Using Pan-Sharpening. U.S. Patent 6,011,875, 4 January 2000.

- Aiazzi, B.; Baronti, S.; Selva, M. Improving component substitution pansharpening through multivariate regression of MS + Pan data. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3230–3239.

- Garzelli, A.; Nencini, F.; Capobianco, L. Optimal MMSE pan sharpening of very high resolution multispectral images. IEEE Trans. Geosci. Remote Sens. 2007, 46, 228–236.

- Choi, J.; Yu, K.; Kim, Y. A new adaptive component-substitution-based satellite image fusion by using partial replacement. IEEE Trans. Geosci. Remote Sens. 2010, 49, 295–309.

- Shahdoosti, H.R.; Javaheri, N. Pansharpening of clustered MS and Pan images considering mixed pixels. IEEE Geosci. Remote Sens. Lett. 2017, 14, 826–830.

- Palsson, F.; Sveinsson, J.R.; Ulfarsson, M.O.; Benediktsson, J.A. MTF-based deblurring using a Wiener filter for CS and MRA pansharpening methods. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 2255–2269.

- Nuñez, J.; Otazu, X.; Fors, O.; Prades, A.; Pala, V.; Arbiol, R. Multiresolution-Based Image fusion with additive wavelet decomposition. IEEE Trans. Geosci. Remote Sens. 1999, 37, 1204–1211.

- King, R.; Wang, J. A wavelet based algorithm for pansharpening Landsat 7 imagery. In Proceedings of the IEEE 2001 International Geoscience and Remote Sensing Symposium, Sydney, Australia, 9–13 July 2001; pp. 849–851.

- Otazu, X.; Gonzalez-Audicana, M.; Fors, O.; Nuñez, J. Introduction of sensor spectral response into image fusion methods. Application to wavelet-based methods. IEEE Trans. Geosci. Remote Sens. 2005, 43, 2376–2385.

- Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A.; Selva, M. MTF-tailored multiscale fusion of high-resolution MS and Pan Imagery. Photogramm. Eng. Remote Sens. 2006, 72, 591–596.

- Aiazzi, B.; Alparone, L.; Baronti, S.; Pippi, I.; Selva, M. Generalised Laplacian pyramid-based fusion of MS + P image data with spectral distortion minimisation. ISPRS Internat. Arch. Photogramm. Remote Sens. 2002, 34, 3–6.

- Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A.; Selva, M. Advantages of Laplacian pyramids over “à trous” wavelet transforms for pansharpening of multispectral images. In Image and Signal Processing for Remote Sensing XVIII; International Society for Optics and Photonics: Edinburgh, UK, 2012; p. 853704.

- Shah, V.P.; Younan, N.H.; King, R.L. An efficient pan-sharpening method via a combined adaptive PCA approach and contourlets. IEEE Trans. Geosci. Remote Sens. 2008, 46, 1323–1335.

- Amro, I.; Mateos, J.; Vega, M. Parameter estimation in Bayesian super-resolution pansharpening using contourlets. In Proceedings of the IEEE International Conference on Image Processing ICIP 2011, Brussels, Belgium, 11–14 September 2011; pp. 1345–1348.

- Upla, K.P.; Gajjar, P.P.; Joshi, M.V. Pan-sharpening based on non-subsampled contourlet transform detail extraction. In Proceedings of the 2013 Fourth National Conference on Computer Vision, Pattern Recognition, Image Processing and Graphics (NCVPRIPG), Jodhpur, India, 18–21 December 2013; pp. 1–4.

- Liu, J. Smoothing filter-based intensity modulation: A spectral preserve image fusion technique for improving spatial details. Int. J. Remote Sens. 2000, 21, 3461–3472.

- Wald, L.; Ranchin, T. Liu ‘Smoothing filter-based intensity modulation: A spectral preserve image fusion technique for improving spatial details’. Int. J. Remote Sens. 2002, 23, 593–597.

- Khan, M.M.; Chanussot, J.; Condat, L.; Montanvert, A. Indusion: Fusion of multispectral and panchromatic images using the induction scaling technique. IEEE Geosci. Remote Sens. Lett. 2008, 5, 98–102.

- Huang, W.; Xiao, L.; Wei, Z.; Liu, H.; Tang, S. A new pan-sharpening method with deep neural networks. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1037–1041.

- Cai, W.; Xu, Y.; Wu, Z.; Liu, H.; Qian, L.; Wei, Z. Pan-sharpening based on multilevel coupled deep network. In Proceedings of the 2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 7046–7049.

- Masi, G.; Cozzolino, D.; Verdoliva, L.; Scarpa, G. Pansharpening by convolutional neural networks. Remote Sens. 2016, 8, 594.

- Eghbalian, S.; Ghassemian, H. Multi spectral image fusion with deep convolutional network. In Proceedings of the 2018 9th International Symposium on Telecommunications, Tehran, Iran, 17–19 December 2018; pp. 173–177.

- Wei, Y.; Yuan, Q.; Shen, H.; Zhang, L. Boosting the Accuracy of Multispectral Image Pansharpening by Learning a Deep Residual Network. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1795–1799.

- Li, N.; Huang, N.; Xiao, L. PAN-Sharpening via residual deep learning. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium, Fort Worth, TX, USA, 23–28 July 2017; pp. 5133–5136.

- Yang, Y.; Tu, W.; Huang, S.; Lu, H. PCDRN: Progressive Cascade Deep Residual Network for Pansharpening. Remote Sens. 2020, 12, 676.

- Huang, W.; Fei, X.; Feng, J.; Wang, H.; Liu, Y.; Huang, Y. Pan-sharpening via multi-scale and multiple deep neural networks. Signal Process. Image Commun. 2020, 85, 115850.

- Ma, J.; Yu, W.; Chen, C.; Liang, P.; Guo, X.; Jiang, J. Pan-GAN: An unsupervised pan-sharpening method for remote sensing image fusion. Inf. Fusion 2020, 62, 110–120.

- Xu, H.; Ma, J.; Jiang, J.; Guo, X.; Ling, H. U2Fusion: A Unified Unsupervised Image Fusion Network. IEEE Trans. Pattern Anal. Mach. Intell. 2020.

- Chan, T.; Shen, J.; Vese, L. Variational PDE models in image processing. Not. AMS 2003, 50, 14–26.

- Fang, F.; Li, F.; Shen, C.; Zhang, G. A variational approach for pan-sharpening. IEEE Trans. Image Process. 2013, 22, 2822–2834.

- Loncan, L.; de Almeida, L.B.; Bioucas-Dias, J.M.; Briottet, X.; Chanussot, J.; Dobigeon, N.; Fabre, S.; Liao, W.; Licciardi, G.A.; Simões, M.; et al. Hyperspectral pansharpening: A review. IEEE Geosci. Remote Sens. Mag. 2015, 3, 27–46.

- Ballester, C.; Caselles, V.; Igual, L.; Verdera, J.; Rougé, B. A variational model for P+XS image fusion. Int. J. Comput. Vis. 2006, 69, 43–58.

- Molina, R.; Vega, M.; Mateos, J.; Katsaggelos, A. Variational posterior distribution approximation in Bayesian super resolution reconstruction of multispectral images. Appl. Comput. Harmon. Anal. 2008, 24, 251–267.

- Vega, M.; Mateos, J.; Molina, R.; Katsaggelos, A. Super resolution of multispectral images using TV image models. In Proceedings of the 12th International Conference on Knowledge-Based and Intelligent Information & Engineering Systems, Zagreb, Croatia, 3–5 September 2008; pp. 408–415.

- Palsson, F.; Sveinsson, J.R.; Ulfarsson, M.O. A new pansharpening algorithm based on total variation. IEEE Geosci. Remote Sens. Lett. 2014, 11, 318–322.

- Yang, X.; Jian, L.; Yan, B.; Liu, K.; Zhang, L.; Liu, Y. A sparse representation based pansharpening method. Future Gener. Comput. Syst. 2018, 88, 385–399.

- Vega, M.; Mateos, J.; Molina, R.; Katsaggelos, A. Super resolution of multispectral images using l1 image models and interband correlations. J. Signal Process. Syst. 2011, 65, 509–523.

- Zhang, M.; Li, S.; Yu, F.; Tian, X. Image fusion employing adaptive spectral-spatial gradient sparse regularization in UAV remote sensing. Signal Process. 2020, 170, 107434.

- Duran, J.; Buades, A.; Coll, B.; Sbert, C. A nonlocal variational model for pansharpening image fusion. SIAM J. Imaging Sci. 2014, 7, 761–796.

- Fu, X.; Lin, Z.; Huang, Y.; Ding, X. A variational pan-sharpening with local gradient constraints. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 10265–10274.

- Li, W.; Hu, Q.; Zhang, L.; Du, J. Pan-sharpening with a spatial-enhanced variational model. J. Appl. Remote Sens. 2018, 12, 035018.

- Chen, Y.; Wang, T.; Fang, F.; Zhang, G. A pan-sharpening method based on the ADMM algorithm. Front. Earth Sci. 2019, 13, 656–667.

- Garzelli, A. A review of image fusion algorithms based on the super-resolution paradigm. Remote Sens. 2016, 8, 797.

- Tian, X.; Chen, Y.; Yang, C.; Gao, X.; Ma, J. A Variational Pansharpening Method Based on Gradient Sparse Representation. IEEE Signal Process. Lett. 2020, 27, 1180–1184.

- Vivone, G.; Simões, M.; Dalla Mura, M.; Restaino, R.; Bioucas-Dias, J.M.; Licciardi, G.A.; Chanussot, J. Pansharpening based on semiblind deconvolution. IEEE Trans. Geosci. Remote Sens. 2015, 53, 1997–2010.

- Chen, C.; Li, Y.; Liu, W.; Huang, J. SIRF: Simultaneous Satellite Image Registration and Fusion in a Unified Framework. IEEE Trans. Image Process. 2015, 24, 4213–4224.

- Rockafellar, R. Convex Analysis; Princeton University Press: Princeton, NJ, USA, 1996.

- Bishop, C. Mixture models and EM. In Pattern Recognition and Machine Learning; Springer: Berlin/Heidelberg, Germany, 2006; pp. 454–455.

- Kullback, S. Information Theory and Statistics; Dover Publications: Mineola, NY, USA, 1959.

- Vega, M.; Molina, R.; Katsaggelos, A. Parameter Estimation in Bayesian Blind Deconvolution with Super Gaussian Image Priors. In Proceedings of the 2014 22nd European Signal Processing Conference (EUSIPCO), Lisbon, Portugal, 1–5 September 2014; pp. 1632–1636.

- U.S. Geological Survey. Landsat Missions. Available online: https://www.usgs.gov/land-resources/nli/landsat (accessed on 16 September 2020).

- Satellite Imaging Corporation. SPOT-5 Satellite Sensor. Available online: https://www.satimagingcorp.com/satellite-sensors/other-satellite-sensors/spot-5/ (accessed on 16 September 2020).

- Satellite Imaging Corporation. FORMOSAT-2 Satellite Sensor. Available online: https://www.satimagingcorp.com/satellite-sensors/other-satellite-sensors/formosat-2/ (accessed on 16 September 2020).

- Wald, L.; Ranchin, T.; Mangolini, M. Fusion of satellite images of different spatial resolutions: Assessing the quality of resulting images. Photogramm. Eng. Remote Sens. 1997, 63, 691–699.

- Alparone, L.; Wald, L.; Chanussot, J.; Thomas, C.; Gamba, P.; Bruce, L. Comparison of pansharpening algorithms: Outcome of the 2006 GRS-S data-fusion contest. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3012–3020.

- Wang, Z.; Bovik, A.C. A universal image quality index. IEEE Signal Process. Lett. 2002, 9, 81–84.

- Garzelli, A.; Nencini, F. Hypercomplex quality assessment of multi/hyperspectral images. IEEE Geosci. Remote Sens. Lett. 2009, 6, 662–665.

- Pratt, W.K. Correlation Techniques of Image Registration. IEEE Trans. Aerosp. Electron. Syst. 1974, AES-10, 353–358.

- Alparone, L.; Aiazzi, B.; Baronti, S.; Garzelli, A.; Nencini, F.; Selva, M. Multispectral and panchromatic data fusion assessment without reference. Photogramm. Eng. Remote Sens. 2008, 74, 193–200.

- Yuhas, R.H.; Goetz, A.F.H.; Boardman, J.W. Discrimination among semi-arid landscape endmembers using the Spectral Angle Mapper (SAM) algorithm. In Proceedings of the Summaries 3rd Annual JPL Airborne Geoscience Workshop, Pasadena, CA, USA, 1–5 June 1992; pp. 147–149.

- Wald, L. Quality of high resolution synthesized images: Is there a simple criterion? Proc. Int. Conf. Fusion Earth Data 2000, 1, 99–105.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).