1. Introduction

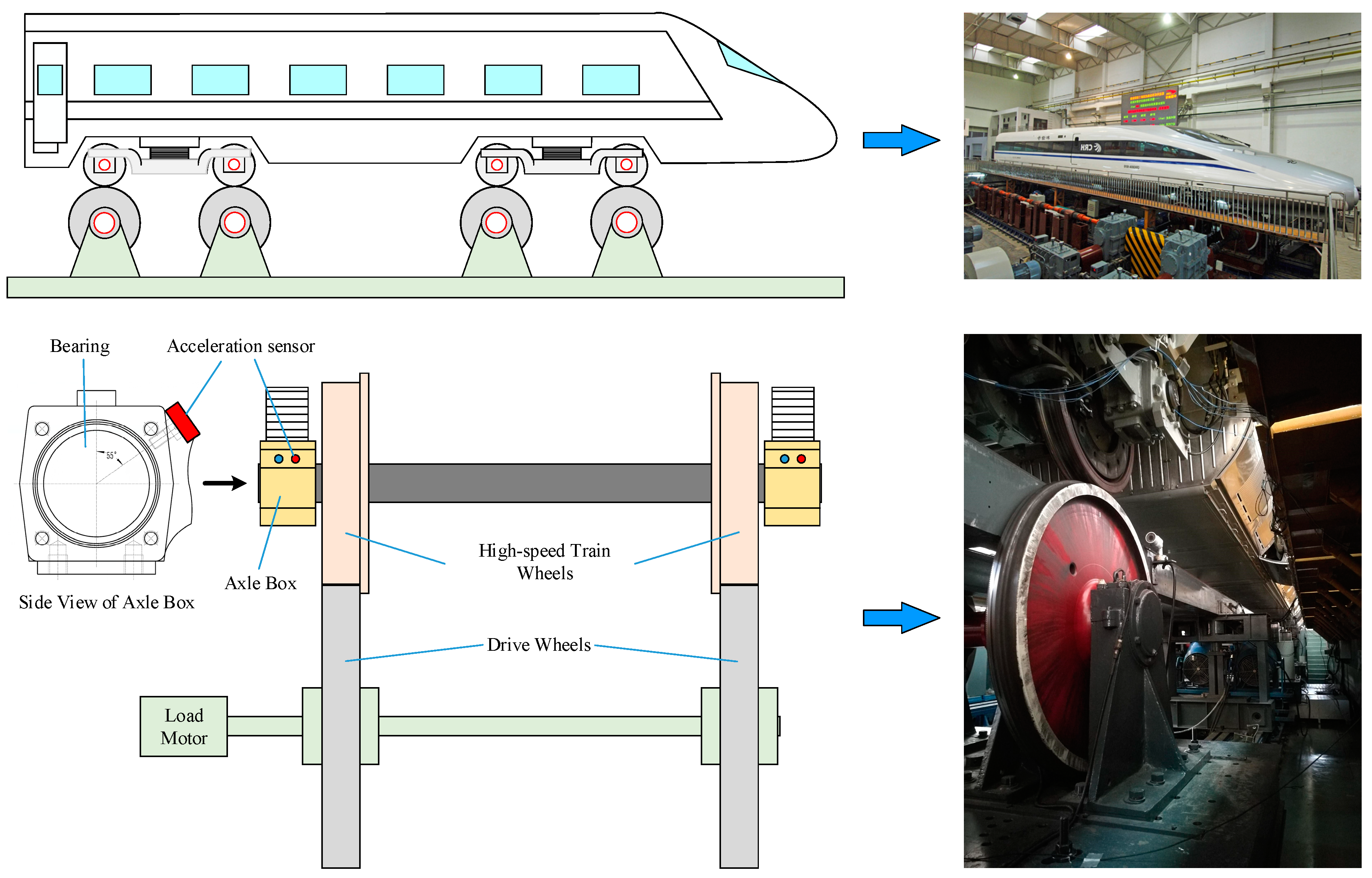

Axle-box bearings, as key components of high-speed trains, have a significant impact on the safety, stability and sustainability of railway vehicles [1]. If an axle-box bearing failure is not detected promptly, it may cause severe delays or even dangerous derailments, endangering human lives and imposing significant costs on railway managers and operators. Therefore, identifying axle-box bearing faults accurately and quickly has become an urgent challenge. Currently, vibration analysis, acoustic analysis and temperature analysis are the three main approaches to axle-box bearing failure detection [2]. However, the temperature does not rise much for an early-stage bearing fault, and noise from wheel/rail contact and the train drive system, as well as aerodynamic forces, may contaminate the signals acquired by acoustic arrays [3]. Owing to the higher reliability of vibration analysis, various fault diagnosis techniques based on vibration signal processing have been applied to keep axle-box bearings operating properly and reliably [4,5,6,7]. However, determining the type of bearing defect with conventional diagnosis methods based on signal-processing techniques is time-consuming and labor-intensive, and these methods yield only qualitative results without quantifying the severity of a bearing fault. Compared with conventional signal-processing-based methods, intelligent diagnosis methods based on machine learning can automatically process bearing data and comprehensively evaluate bearing health status [8,9].

Owing to their advantages in both the qualitative and quantitative analysis of bearing faults, various typical intelligent diagnosis techniques have been proposed to improve bearing fault diagnosis performance. Among them, artificial neural networks (ANN) and support vector machines (SVM) are commonly applied to the detection and diagnosis of machine faults [8,9,10]. Generally, rotating machinery fault diagnosis based on ANN or SVM involves two essential steps: feature extraction using signal-processing techniques and fault classification using pattern recognition techniques. Jamadar et al. [11] adopted a back propagation neural network (BPNN) to classify various bearing faults using 24 dimensionless parameters. Van et al. [12] proposed a wavelet kernel function-based SVM model for bearing fault diagnosis using features extracted from signals decomposed by nonlocal means (NLM) and empirical mode decomposition (EMD). Chen et al. [13] applied an ANN to diagnose bearing fault severity, using simulation data to address the ANN's requirement for adequate training data. Lei et al. [14] employed ensemble empirical mode decomposition (EEMD) for feature extraction and a wavelet neural network (WNN) for diagnosing bearing faults. Tang et al. [15] used an SVM to process Shannon entropy and autoregressive model coefficients for detecting different faults. Batista et al. [16] combined different SVMs to detect bearing failures using 13 statistical parameters in the time and frequency domains. In addition, some improved methods, such as hidden Markov models, the adaptive neuro-fuzzy inference system (ANFIS), the extreme learning machine (ELM) and the support tensor machine (STM), have been proposed for bearing fault diagnosis and classification [17,18,19,20].
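The feature-extraction step of this two-stage pipeline can be illustrated with a few time-domain statistics of the kind fed to BPNN or SVM classifiers. The sketch below is a minimal example under stated assumptions: the feature set (RMS, peak, crest factor, skewness, kurtosis), the function name `time_domain_features` and the synthetic 50 Hz signal with periodic impacts are illustrative choices, not the parameter sets used in [11] or [16].

```python
import math
from typing import Dict, Sequence


def time_domain_features(x: Sequence[float]) -> Dict[str, float]:
    """Common time-domain condition indicators of a vibration signal.

    The feature set is an illustrative assumption, not the exact
    parameter set of any cited study.
    """
    n = len(x)
    mean = sum(x) / n
    rms = math.sqrt(sum(v * v for v in x) / n)
    peak = max(abs(v) for v in x)
    var = sum((v - mean) ** 2 for v in x) / n  # population variance
    std = math.sqrt(var)
    return {
        "rms": rms,
        "peak": peak,
        "crest_factor": peak / rms,  # dimensionless
        "skewness": sum((v - mean) ** 3 for v in x) / (n * std ** 3),
        "kurtosis": sum((v - mean) ** 4 for v in x) / (n * var ** 2),  # 3 for Gaussian data
    }


# Hypothetical signals: a 50 Hz sine sampled at 1 kHz for 1 s, and the same sine
# with a 5.0 impulse every 100 samples mimicking impacts from a local defect.
fs = 1000
healthy = [math.sin(2 * math.pi * 50 * t / fs) for t in range(fs)]
faulty = list(healthy)
for t in range(0, fs, 100):
    faulty[t] += 5.0

h = time_domain_features(healthy)
f = time_domain_features(faulty)
print(round(h["kurtosis"], 3))        # 1.5 for a pure sinusoid
print(f["kurtosis"] > h["kurtosis"])  # True: the impacts make the signal more impulsive
```

Periodic impacts push the kurtosis well above the value of 1.5 obtained for a pure sinusoid, which illustrates why such dimensionless indicators are used as classifier inputs; choosing and tuning them by hand is exactly the expert-dependent step criticized below.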

Although traditional intelligent diagnosis methods have been widely applied in machinery fault diagnosis, they still have three inherent disadvantages [8,21]: (1) signal feature extraction is easily affected by complicated working conditions, and the signal features of compound faults cannot be extracted effectively; (2) the selection of sensitive features depends mainly on the engineering experience of diagnostic experts; and (3) traditional intelligent diagnosis methods, such as ANN and SVM, are shallow learning models that have difficulty learning complex nonlinear relationships effectively.

Deep learning, as a newer machine-learning approach, can automatically learn deep features from raw data for classification, overcoming the inherent disadvantages of traditional intelligent methods [10]. The convolutional neural network (CNN), proposed by LeCun [22,23], is an effective deep-learning method and has been applied to bearing fault diagnosis. Shao et al. [6] proposed a deep-learning model that combines the advantages of the deep belief network (DBN) and the CNN to detect bearing failures. Lo et al. [24] proposed a prognostic method based on a 1D CNN with a clustering loss by classification training to detect bearing and gear wear. Chen et al. [25] proposed a fault diagnosis method integrating CNN and ELM to reduce training complexity and obtain robust features. Wang et al. [26] proposed a modified fault diagnosis method combining CNN and hidden Markov models (HMM) to classify rolling element bearing faults. Janssens et al. [27] proposed a 2D CNN with one convolutional layer that learns useful features from the frequency spectra of signals from two accelerometers for bearing fault detection. Chen et al. [28] proposed a CNN model named the convolutional recurrent neural network, which combines the advantages of the CNN and the recurrent neural network (RNN) to build end-to-end health indicators of bearings adaptively. Mao et al. [21] proposed a method for the online detection of incipient bearing faults using a semi-supervised architecture and deep feature representation. Wang et al. [29] presented a comprehensive survey of deep-learning techniques and their applications in smart manufacturing, in which four typical deep-learning models, namely the CNN, the restricted Boltzmann machine, the autoencoder and the RNN, are discussed in detail.

Although the CNN has achieved some success in bearing fault diagnosis, its diagnostic performance still needs to be improved to meet the requirements of axle-box bearing fault diagnosis. Unlike fault diagnosis for other industrial equipment, fault diagnosis for railway transportation equipment has its own special characteristics. For high-speed trains, safety is the priority, so the fault diagnosis model must process bearing data quickly and accurately to meet stringent reliability and real-time requirements for axle-box bearing failure monitoring. As a CNN becomes deeper, it contains more convolution kernels, and each neuron multiplies its input data by connection weights, so the number of CNN parameters can reach tens or even hundreds of thousands. Such a large number of parameters increases the computational burden and training time, which can degrade the practical performance of the CNN. In addition, each layer of a multilayer CNN provides a different representation of the input data. However, in a traditional CNN framework only the outputs of the last layer are connected to the fully connected layer, and the shallow information in the other layers is neglected. Therefore, it is necessary to reduce the number of model parameters and to make use of shallow information to improve the diagnostic performance of the CNN.
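The parameter growth described above can be made concrete with a quick count. The sketch below assumes a hypothetical 1D CNN (input length 1024, three convolution/pooling stages with 16, 32 and 64 kernels, "same" padding, a pooling factor of 2, a 100-neuron hidden fully connected layer and 10 output classes); the sizes and helper names are illustrative and are not the configuration used in this paper.

```python
from typing import List, Tuple


def conv1d_params(in_ch: int, out_ch: int, kernel: int) -> int:
    """Weights plus one bias per convolution kernel."""
    return in_ch * out_ch * kernel + out_ch


def dense_params(n_in: int, n_out: int) -> int:
    """Weights plus one bias per neuron of a fully connected layer."""
    return n_in * n_out + n_out


def count_params(input_len: int, stages: List[Tuple[int, int]], pool: int,
                 hidden: int, classes: int) -> Tuple[int, int]:
    """Return (convolutional parameters, fully connected parameters).

    Each stage is (number of kernels, kernel size); 'same' padding keeps the
    length and each pooling layer divides it by `pool` (pooling has no weights).
    """
    in_ch, length, conv_total = 1, input_len, 0
    for out_ch, kernel in stages:
        conv_total += conv1d_params(in_ch, out_ch, kernel)
        in_ch, length = out_ch, length // pool
    flat = in_ch * length  # last pooling layer flattened into the FC input
    fc_total = dense_params(flat, hidden) + dense_params(hidden, classes)
    return conv_total, fc_total


conv, fc = count_params(1024, [(16, 64), (32, 3), (64, 3)], pool=2, hidden=100, classes=10)
print(conv, fc, conv + fc)  # 8816 820310 829126
```

In this hypothetical configuration the fully connected part holds over 98% of the 829,126 parameters, because the flattened last pooling layer (64 maps of length 128) is connected densely to the hidden neurons.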

Some research has been done to fill this gap. Fu et al. [30] proposed a multiscale comprehensive feature fusion-CNN (MCFF-CNN) based on residual learning for vehicle color recognition and achieved improved recognition performance. Zhang et al. [31] proposed a compact convolutional neural network augmented with multiscale feature extraction to carry out diagnosis tasks with limited training samples and presented three cases to verify the effectiveness of the proposed method. Meng et al. [32] proposed a CNN-based framework for cerebrovascular segmentation in digital subtraction angiography. Jun et al. [33] proposed a multiscale CNN model for predicting the remaining useful life of bearings, in which the last convolutional layer and pooling layer were combined to form a mixed layer before being connected to the fully connected layer. However, the performance of the methods mentioned above can still be improved.

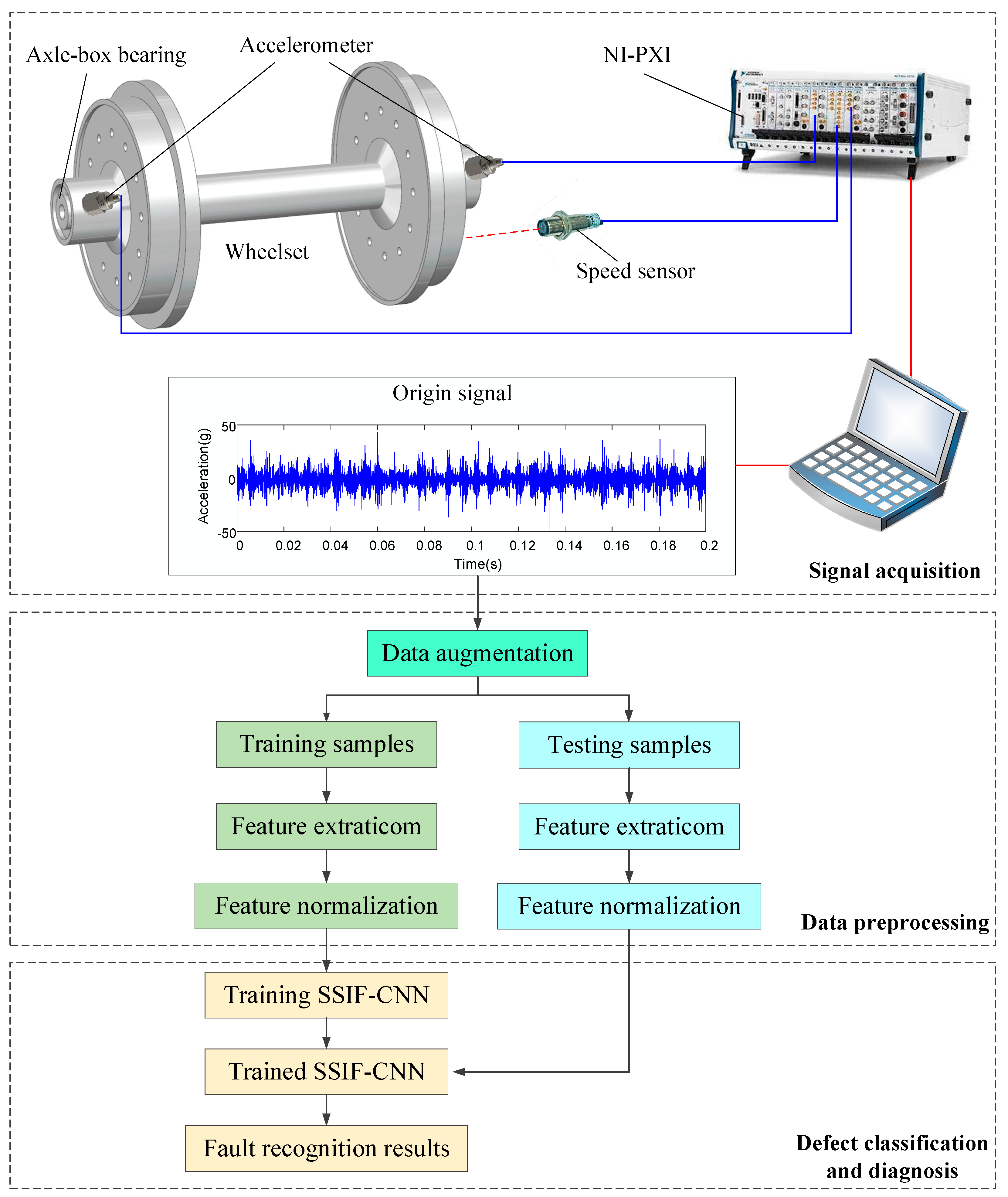

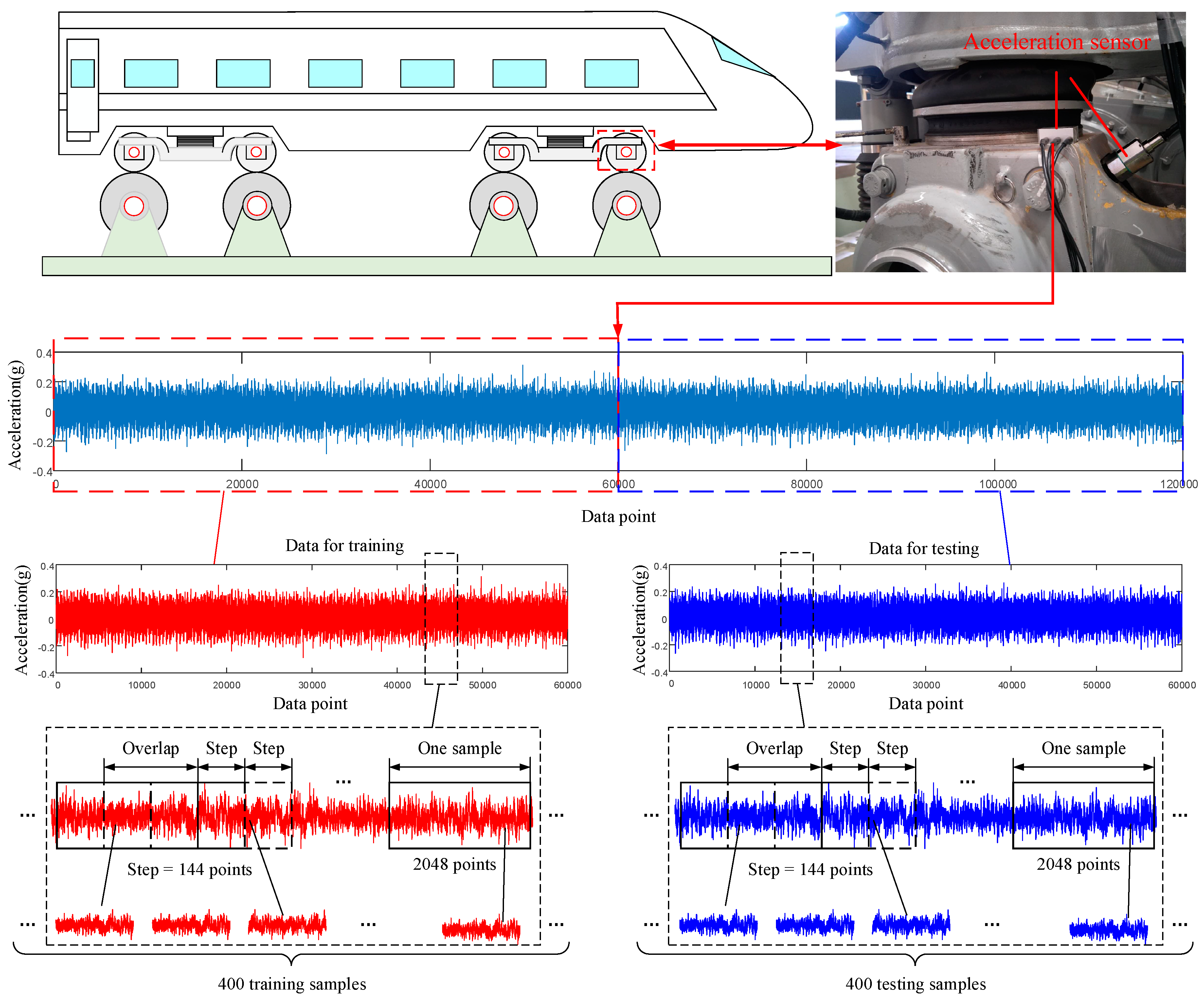

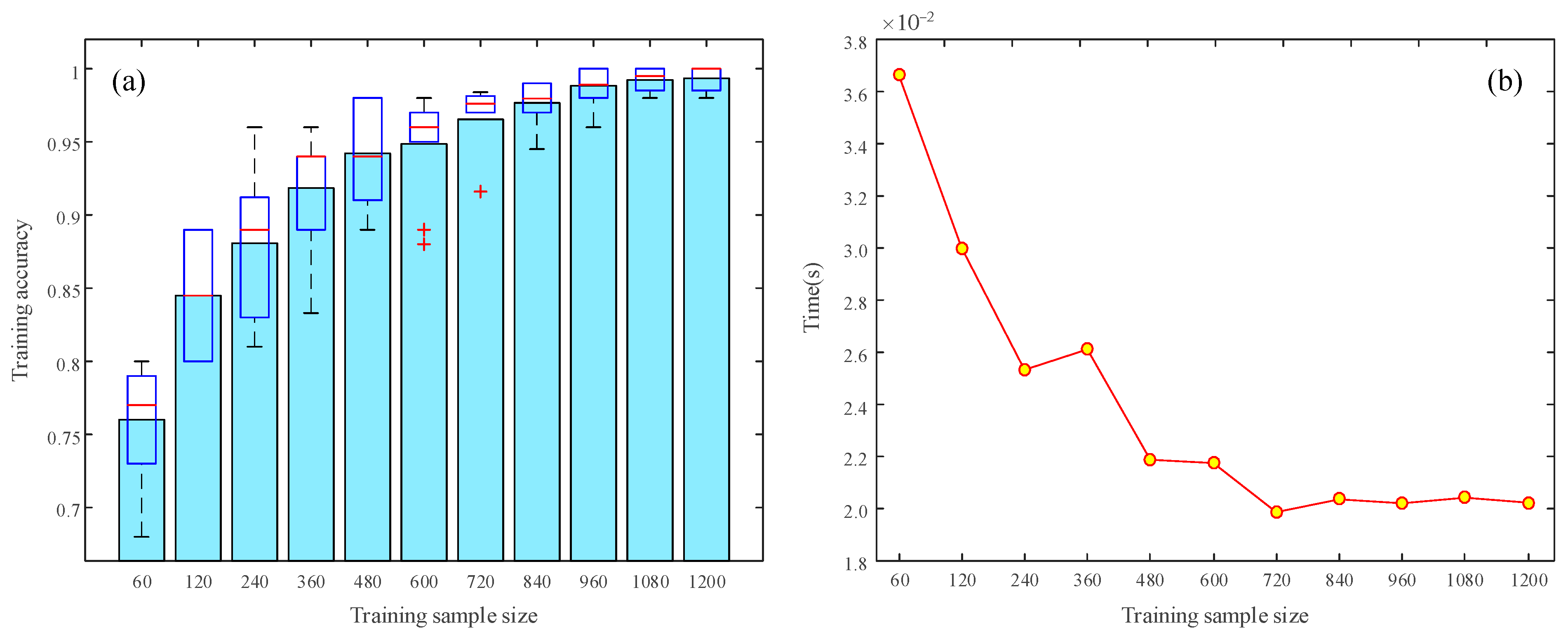

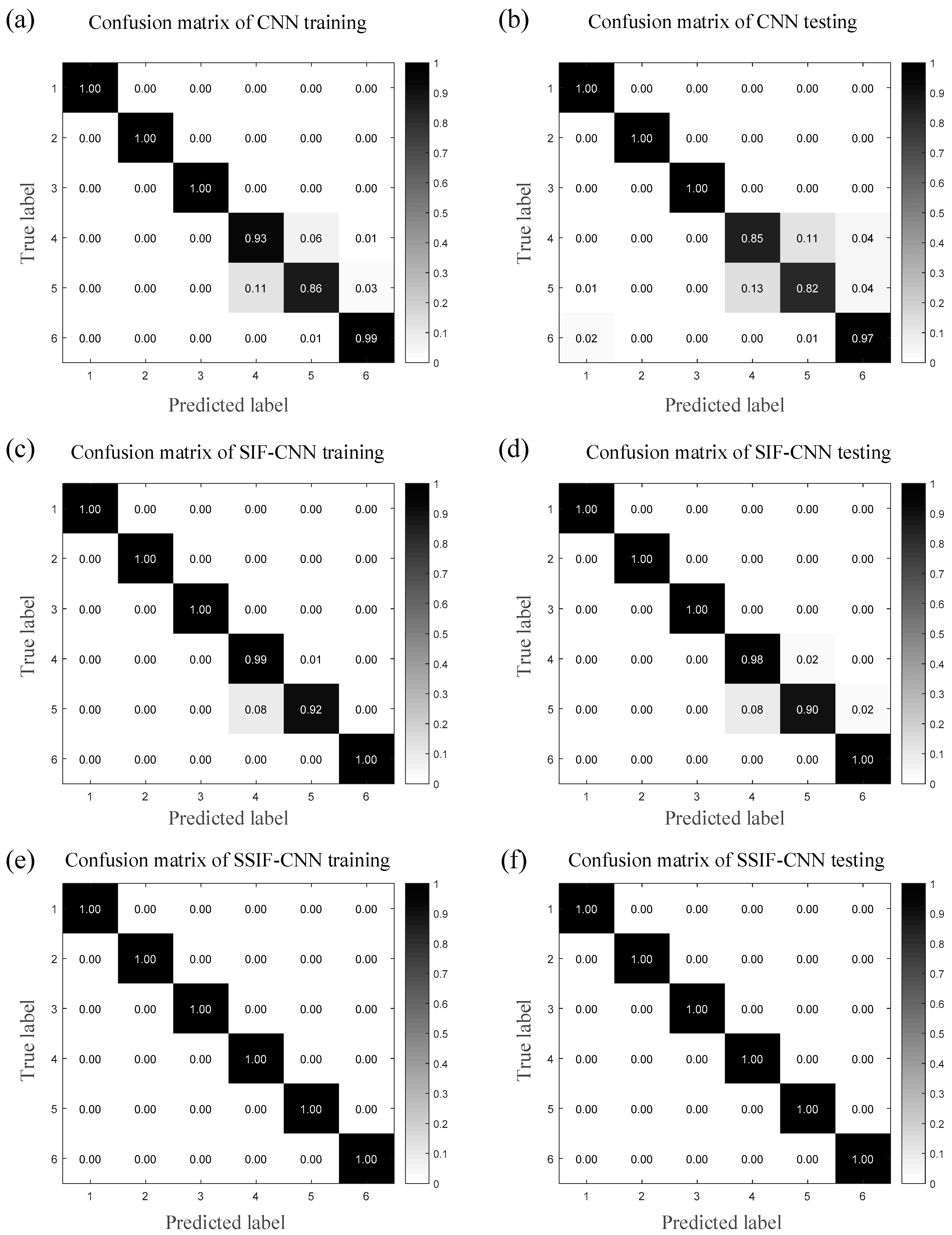

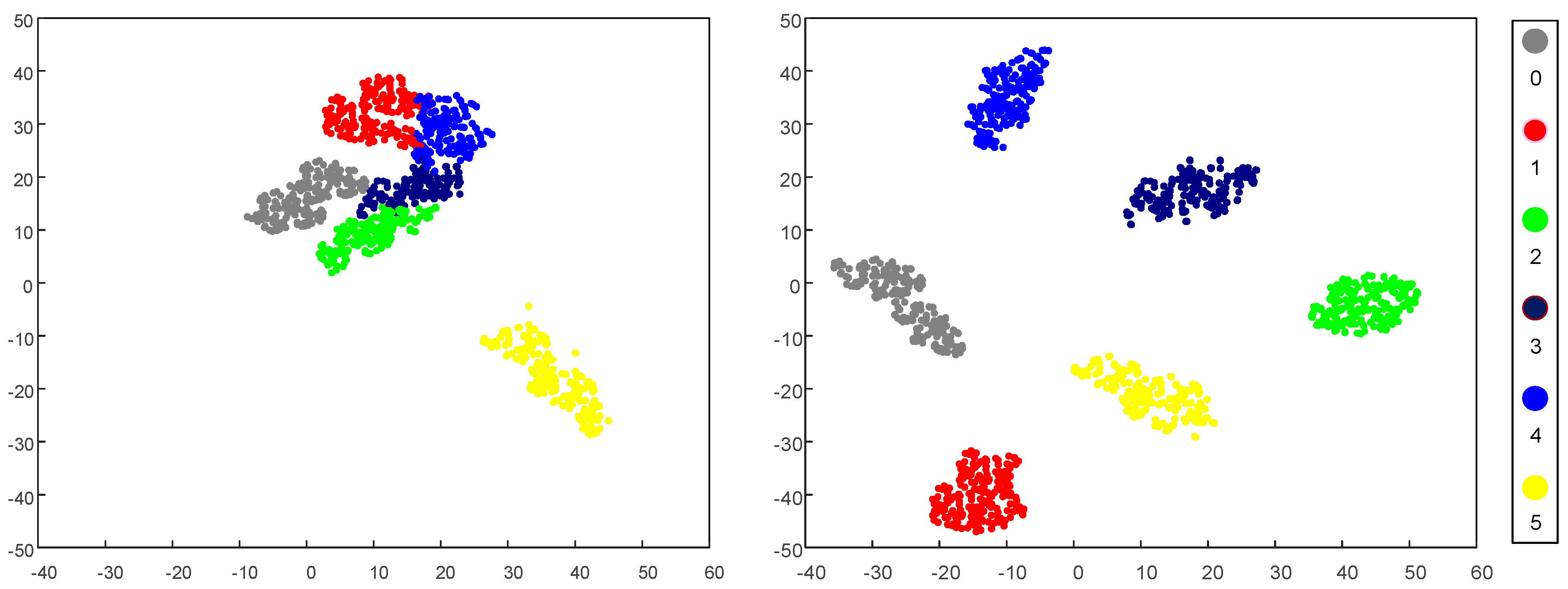

To improve both computational efficiency and diagnostic accuracy, a novel simplified shallow information fusion-convolutional neural network (SSIF-CNN) is proposed for vibration-based axle-box bearing fault diagnosis. The proposed method first converts the feature maps obtained from each pooling layer into a feature sequence by a global convolution operation. The feature sequences obtained from the different pooling layers are then concatenated into a one-dimensional vector before being connected to the classifier through the fully connected layer. The experimental results show that, compared with the traditional CNN, the SSIF-CNN improves computational efficiency while ensuring fault diagnosis accuracy.

The contributions of this paper can be summarized as follows:

- (a) We employ an SSIF-CNN model structure to extract more discriminative features for axle-box bearing fault diagnosis. By integrating simplified shallow information, more informative features are retained, which enhances the network capacity while reducing the number of parameters.

- (b) Because the SSIF-CNN framework has fewer fully connected layer parameters, both the computational efficiency and the fault diagnosis accuracy of the model are improved.

- (c) The proposed systematic approach integrates feature extraction and the SSIF-CNN into a single framework, enabling automatic monitoring of axle-box bearing conditions.

The remainder of the paper is organized as follows. In Section 2, the modified CNN procedure is introduced. In Section 3, the diagnosis procedure based on the modified method is proposed. In Section 4, the benchmark data and experimental data are described and analyzed. Finally, conclusions are presented in Section 5.

2. Simplified Shallow Information Fusion CNN

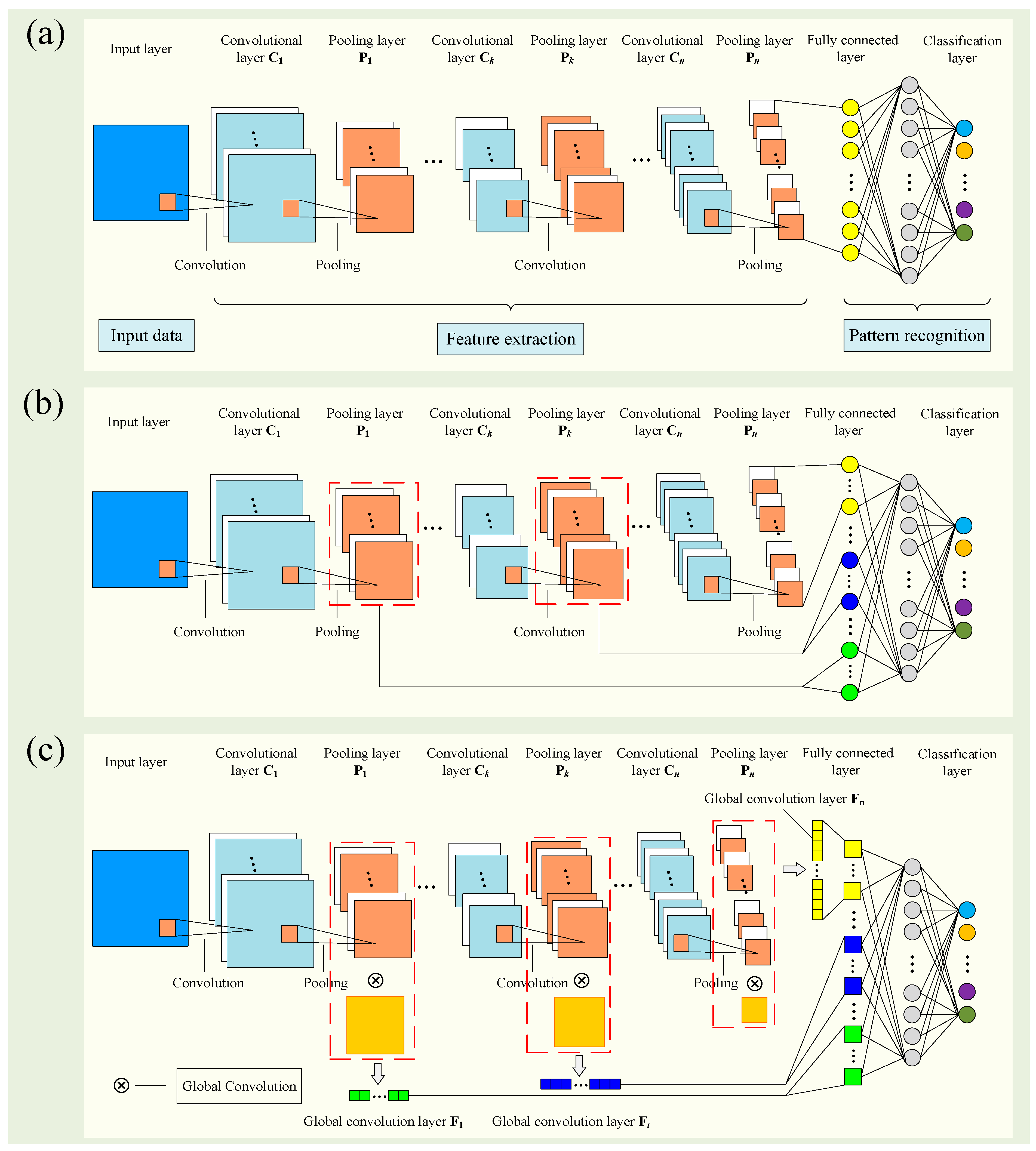

As shown in Figure 1a, the CNN is a multilayer feedforward neural network that mainly consists of three parts: convolutional layers, pooling layers and a fully connected layer. The convolutional layer detects local conjunctions of features in its input through the local convolution operation. The pooling layer merges similar features into one, which reduces the size of the feature maps (and thus the number of parameters in the subsequent layers) and provides an approximately translation-invariant representation. The fully connected layer maps its inputs to an output vector that represents the categories of the given task.
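The three building blocks can be illustrated with a toy forward pass. The following pure-Python sketch applies a 1D convolution with ReLU, non-overlapping max pooling and a fully connected layer followed by softmax; the function names, the kernel [1, 2, 1], the input signal and the fully connected weights are hypothetical. Like most deep-learning libraries, it implements "convolution" as cross-correlation, without flipping the kernel.

```python
import math
from typing import List, Sequence


def conv1d_valid(x: Sequence[float], kernel: Sequence[float], bias: float = 0.0) -> List[float]:
    """Slide the kernel over x ('valid' mode, no kernel flipping)."""
    k = len(kernel)
    return [sum(kernel[j] * x[i + j] for j in range(k)) + bias
            for i in range(len(x) - k + 1)]


def relu(v: Sequence[float]) -> List[float]:
    return [max(0.0, a) for a in v]


def max_pool1d(x: Sequence[float], size: int) -> List[float]:
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(x[i:i + size]) for i in range(0, len(x) - size + 1, size)]


def dense(x: Sequence[float], weights: Sequence[Sequence[float]],
          biases: Sequence[float]) -> List[float]:
    """Fully connected layer: one weight row and one bias per output neuron."""
    return [sum(w * a for w, a in zip(row, x)) + b for row, b in zip(weights, biases)]


def softmax(z: Sequence[float]) -> List[float]:
    m = max(z)  # subtract the maximum for numerical stability
    e = [math.exp(a - m) for a in z]
    s = sum(e)
    return [a / s for a in e]


signal = [0.0, 1.0, 3.0, 1.0, 0.0, 0.0, 0.0, 0.0]
features = relu(conv1d_valid(signal, kernel=[1.0, 2.0, 1.0]))  # [5, 8, 5, 1, 0, 0]
pooled = max_pool1d(features, size=2)                           # [8, 5, 0]
scores = dense(pooled, weights=[[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]], biases=[0.0, 0.0])
probs = softmax(scores)  # class 0 receives almost all of the probability mass
```

Pooling halves the feature length here, so any fully connected layer placed after it needs half as many weights per neuron as it would on the raw convolution output.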

In a general CNN architecture, only the outputs of the last layer are connected to the fully connected layer, and the shallow convolution information is neglected. To make use of the shallow information, the features obtained from the shallow pooling layers are connected to the fully connected layer along with the features of the last layer. As shown in Figure 1b, the yellow circles in the fully connected layer represent the deep information extracted by the last pooling layer, and the blue and green circles represent the shallow information extracted from an intermediate pooling layer and the first pooling layer, respectively. Each line between the circles represents the connection weight between neurons. The calculation performed by each neuron in the new fully connected layer can be expressed as:

$$
y_j = f\left( \sum_{i=1}^{n} \sum_{k=1}^{N_i} \left(\mathbf{w}_{j,k}^{i}\right)^{\top} \mathbf{x}_{k}^{i} + b_j \right), \qquad j = 1, 2, \ldots, m
$$

where $y_j$ is the output of the jth neuron in the new fully connected layer, $\mathbf{x}_{k}^{i}$ is the kth output of the ith pooling layer, $N_i$ is the number of outputs of the ith pooling layer, $\mathbf{w}_{j,k}^{i}$ is the weight vector connecting $\mathbf{x}_{k}^{i}$ to the jth neuron, $b_j$ is the bias value, m is the number of neurons in the new fully connected layer, n is the number of pooling layers and $f(\cdot)$ represents the nonlinear activation function. Because the shallow information is integrated, the new fully connected layer contains more neurons, so the shallow information fusion-CNN model has a larger number of parameters, which can result in a much heavier computational burden and longer training times.
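The fused neuron computation and its cost can be sketched as follows. This is a minimal illustration, assuming each pooling-layer output has already been flattened into a list; the function names, the ReLU activation and the layer sizes (pooled outputs of 16 × 512, 32 × 256 and 64 × 128 values, m = 100) are hypothetical and do not come from this paper.

```python
from typing import Callable, Sequence


def fused_fc_neuron(pooled: Sequence[Sequence[float]],
                    weights: Sequence[Sequence[float]],
                    bias: float,
                    f: Callable[[float], float]) -> float:
    """One neuron of the fused FC layer: y_j = f(sum over layers i of w_j^i . x^i + b_j).

    pooled[i] holds the flattened outputs x^i of pooling layer i and weights[i]
    holds this neuron's weights for them, so every pooling layer contributes.
    """
    total = bias
    for x_i, w_i in zip(pooled, weights):
        if len(x_i) != len(w_i):
            raise ValueError("one weight is needed per pooled output")
        total += sum(w * x for w, x in zip(w_i, x_i))
    return f(total)


def fc_param_count(outputs_per_pool: Sequence[int], m: int) -> int:
    """Weights plus biases of an FC layer with m neurons fed by the given pooling layers."""
    return sum(outputs_per_pool) * m + m


def relu(z: float) -> float:
    return max(0.0, z)


y = fused_fc_neuron([[1.0, 2.0], [3.0]], [[0.5, 0.5], [1.0]], bias=-1.0, f=relu)  # 3.5

sizes = [16 * 512, 32 * 256, 64 * 128]         # hypothetical flattened pooled outputs
last_only = fc_param_count(sizes[-1:], m=100)   # conventional CNN: 819,300
fused = fc_param_count(sizes, m=100)            # shallow information fusion: 2,457,700
```

With these sizes, feeding all three pooling layers to the fully connected layer triples its parameter count relative to using only the last pooling layer, which is the cost the simplification below is meant to remove.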

To reduce the number of model parameters after integrating the shallow information, the feature maps obtained from each pooling layer are transformed into a feature sequence by the global convolution operation before being input into the fully connected layer. As shown in Figure 1c, global convolution kernels with the same dimensions as the feature maps obtained from each pooling layer are used to convolve the corresponding feature maps, and the results extracted from the different pooling layers are further concatenated into a 1D feature vector. This 1D feature vector is then taken as the new fully connected layer to carry out the pattern recognition task. The green, blue and yellow rectangles represent the feature sequences produced by convolving the outputs of two shallow pooling layers and of the last pooling layer, respectively, with the corresponding global convolution kernels. The global convolution feature sequences obtained from the different pooling layers are concatenated as the new fully connected layer before being transmitted to the classification layer. The calculation performed by a neuron in the new fully connected layer can be expressed as:

$$
y_j = f\left( \sum_{i=1}^{n} \sum_{k=1}^{N_i} w_{j,k}^{i} \left( \mathbf{g}_{k}^{i} \circledast \mathbf{x}_{k}^{i} \right) + b_j \right), \qquad j = 1, 2, \ldots, m
$$

where $y_j$ is the output of the jth neuron in the new fully connected layer, $\mathbf{x}_{k}^{i}$ is the kth output of the ith pooling layer, $N_i$ is the number of outputs of the ith pooling layer, $\mathbf{g}_{k}^{i}$ is the corresponding global convolution kernel with the same dimension as $\mathbf{x}_{k}^{i}$, $w_{j,k}^{i}$ are the elements of the weight vector of the jth neuron, $b_j$ is the bias value, m is the number of neurons in the new fully connected layer, n is the number of pooling layers, $f(\cdot)$ represents the nonlinear activation function and $\circledast$ represents the global convolution operator. Because each global kernel has the same size as the feature map it convolves, there is only one valid alignment, so $\mathbf{g}_{k}^{i} \circledast \mathbf{x}_{k}^{i}$ reduces to a single value.
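A minimal sketch of the global convolution step and its effect on the parameter count is given below. It rests on an explicit assumption: one global kernel per feature map, with the same length as that map and no bias, so that each map collapses to a single value. The function names and the layer sizes (hypothetical pooled outputs of 16 maps of length 512, 32 of length 256 and 64 of length 128, with m = 100) are illustrative, not the authors' implementation.

```python
from typing import List, Sequence, Tuple


def global_conv(feature_map: Sequence[float], kernel: Sequence[float]) -> float:
    """'Valid' convolution with a kernel as large as the map: only one
    alignment exists, so the result is a single value (kernel not flipped)."""
    if len(feature_map) != len(kernel):
        raise ValueError("a global kernel must match its feature-map size")
    return sum(g * x for g, x in zip(kernel, feature_map))


def ssif_sequence(pooled_layers: Sequence[Sequence[Sequence[float]]],
                  global_kernels: Sequence[Sequence[Sequence[float]]]) -> List[float]:
    """Collapse each feature map of each pooling layer to one value and
    concatenate the per-layer sequences into the 1D vector fed to the FC layer.

    pooled_layers[i][k] is feature map k of pooling layer i (assumed layout);
    global_kernels has the same nesting.
    """
    sequence: List[float] = []
    for maps, kernels in zip(pooled_layers, global_kernels):
        sequence.extend(global_conv(x, g) for x, g in zip(maps, kernels))
    return sequence


def ssif_param_count(layer_shapes: Sequence[Tuple[int, int]], m: int) -> int:
    """Global-kernel weights plus an m-neuron FC layer on the concatenated sequence.

    layer_shapes[i] = (number of feature maps, map length) of pooling layer i.
    """
    kernel_weights = sum(c * length for c, length in layer_shapes)
    sequence_length = sum(c for c, _ in layer_shapes)
    return kernel_weights + sequence_length * m + m


seq = ssif_sequence(
    pooled_layers=[[[1.0, 2.0], [3.0, 4.0]], [[1.0, 1.0, 1.0]]],
    global_kernels=[[[1.0, 0.0], [0.0, 1.0]], [[1.0, 1.0, 1.0]]],
)  # [1.0, 4.0, 3.0]

shapes = [(16, 512), (32, 256), (64, 128)]  # hypothetical pooled outputs
print(ssif_param_count(shapes, m=100))       # 24,576 kernel weights + 11,300 FC = 35876
```

Under these assumptions the classifier receives a 112-element sequence instead of 24,576 flattened values. For comparison, connecting all 24,576 flattened values directly to 100 neurons would need 24,576 × 100 + 100 = 2,457,700 parameters.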